Abstract

With regard to hearing perception, it remains unclear as to whether, or the extent to which, different conceptual categories of real-world sounds and related categorical knowledge are differentially represented in the brain. Semantic knowledge representations are reported to include the major divisions of living versus non-living things, plus more specific categories including animals, tools, biological motion, faces, and places—categories typically defined by their characteristic visual features. Here, we used functional magnetic resonance imaging (fMRI) to identify brain regions showing preferential activity to four categories of action sounds, which included non-vocal human and animal actions (living), plus mechanical and environmental sound-producing actions (non-living). The results showed a striking antero-posterior division in cortical representations for sounds produced by living versus non-living sources. Additionally, there were several significant differences by category, depending on whether the task was category-specific (e.g. human or not) versus non-specific (detect end-of-sound). In general, (1) human-produced sounds yielded robust activation in the bilateral posterior superior temporal sulci independent of task. Task demands modulated activation of left-lateralized fronto-parietal regions, bilateral insular cortices, and subcortical regions previously implicated in observation-execution matching, consistent with “embodied” and mirror-neuron network representations subserving recognition. (2) Animal action sounds preferentially activated the bilateral posterior insulae. (3) Mechanical sounds activated the anterior superior temporal gyri and parahippocampal cortices. (4) Environmental sounds preferentially activated dorsal occipital and medial parietal cortices. 
Overall, this multi-level dissociation of networks for preferentially representing distinct sound-source categories provides novel support for grounded cognition models that may underlie organizational principles for hearing perception.

Keywords: Auditory system, fMRI, grounded cognition, action recognition, biological motion, category specificity

Introduction

Human listeners learn to readily recognize real-world sounds, typically in the context of producing sounds by their own motor actions and/or viewing the actions of agents associated with the sound production. These skills are honed throughout childhood as one learns to distinguish between different conceptual categories of objects that produce characteristic sounds. Object knowledge representations have received considerable scientific study for over a century. In particular, neuropsychological lesion studies, and more recently neuroimaging studies, have identified brain areas involved in retrieving distinct conceptual categories of knowledge including living versus non-living things (Warrington and Shallice, 1984; Hillis and Caramazza, 1991; Silveri et al., 1997; Lu et al., 2002; Zannino et al., 2006). More specific object categories reported to be differentially represented in cortex include animals, tools, fruits/vegetables, faces, and places (Warrington and Shallice, 1984; Moore and Price, 1999; Kanwisher, 2000; Haxby et al., 2001; Caramazza and Mahon, 2003; Damasio et al., 2004; Martin, 2007). Traditionally, these studies have been heavily visually biased, using photos, diagrams, or actual objects, or alternatively using visual verbal stimuli. However, many aspects of object knowledge are derived from interactions with objects, imparting salient multisensory qualities or properties to objects, such as their characteristic sounds, which are qualities that may significantly contribute to knowledge representations in the brain (Mesulam, 1998; Adams and Janata, 2002; Tyler et al., 2004; Canessa et al., 2007). However, due to the inherently different nature of acoustic versus visual input, some fundamentally different sensory or sensorimotor properties might drive the central nervous system to segment, process, and represent different categories of object or action-source knowledge in the brain. 
This in turn may lead to, or be associated with, distinct network representations that show category-specificity, thereby reflecting a gross level of organization for conceptual systems that may subserve auditory perception.

The production of sound necessarily implies some form of motion or action. In vision, different types of motion, such as “biological motion” (e.g. point light displays depicting human articulated walking) versus rigid body motion (Johansson, 1973), have been shown to lead to activation along different cortical pathways (Shiffrar et al., 1997; Pavlova and Sokolov, 2000; Wheaton et al., 2001; Beauchamp et al., 2002; Grossman and Blake, 2002; Thompson et al., 2005). Results from this visual literature indicate that when one observes biological actions, such as viewing another person walking, neural processing leads to probabilistic matches to our own motor repertoire of actions (e.g. schemas), thereby “embodying” the visual sensory input to provide us with a sense of meaning behind the action (Liberman and Mattingly, 1985; Norman and Shallice, 1986; Corballis, 1992; Nishitani and Hari, 2000; Buccino et al., 2001; Barsalou et al., 2003; Kilner et al., 2004; Aglioti et al., 2008; Cross et al., 2008). Embodied or “grounded” cognition models more generally posit that object concepts are grounded in perception and action, such that modal simulations (e.g. thinking about sensory events) and situated actions, should, at least in part, be represented in the same networks activated during the perception of sensory and sensory-motor events (Broadbent, 1878; Lissauer, 1890/1988; Barsalou, 1999; Gallese and Lakoff, 2005; Beauchamp and Martin, 2007; Canessa et al., 2007; Barsalou, 2008).

In the realm of auditory processing, several studies have shown that the perception of action sounds produced by human conspecifics differentially activates distinct brain networks, notably in motor-related regions such as the left inferior parietal lobule (IPL) and left inferior frontal gyrus (IFG). This includes comparisons of human-performable versus non-performable action sounds (Pizzamiglio et al., 2005), hand-tools versus animal vocalizations (Lewis et al., 2005; Lewis et al., 2006), hand and mouth action sounds relative to environmental and scrambled sounds (Gazzola et al., 2006), expert piano players versus naïve listeners hearing piano playing (Lahav et al., 2007), attending to footsteps versus onset of noise (Bidet-Caulet et al., 2005), and hand, mouth and vocal sounds versus environmental sounds (Galati et al., 2008). Consistent with studies of macaque monkey auditory mirror-neurons (Kohler et al., 2002; Keysers et al., 2003), human neuroimaging studies implicate the left IPL and left IFG as major components of a putative mirror-neuron system, which may serve to represent the goal or intention behind observed actions (Rizzolatti and Craighero, 2004; Pizzamiglio et al., 2005; Gazzola et al., 2006; Galati et al., 2008). Thus, these regions may subserve aspects of auditory perception, in that human-produced (conspecific) sounds may be associated with, or processed along, networks related to motor production.

Other regions involved in sound action processing include the left posterior superior temporal sulcus and middle temporal gyri, here collectively referred to as the pSTS/pMTG complex. These regions have a role in recognizing natural sounds, in contrast to backward-played, unrecognizable control sounds (Lewis et al., 2004). They are generally reported to be more selective for human action sounds (Bidet-Caulet et al., 2005; Gazzola et al., 2006; Doehrmann et al., 2008), including the processing of hand-tool manipulation sounds relative to vocalizations (Lewis et al., 2005; Lewis et al., 2006). The pSTS/pMTG complexes are also implicated in audio-visual interactions, and may play a general perceptual role in transforming the dynamic temporal features of auditory information together with spatially and temporally dynamic visual information into a common reference frame and neural code (Avillac et al., 2005; Taylor et al., 2009; Lewis, under review). However, whether, or the extent to which, such functions may apply to other types or categories of complex real-world action sounds remains unclear.

Although neuroimaging studies of human action sound representations are steadily increasing, to our knowledge none have systematically dissociated activation networks for conceptually distinct categories of living (or “biological”) versus non-living (non-biological) action sounds (Fig. 1A). Nor have there been reports examining different sub-categories of action sounds, using a wide range of acoustically well-matched stimuli, drawing on analogies to category-specific processing studies reported for the visual and conceptual systems (Allison et al., 1994; Kanwisher et al., 1997; Caramazza and Mahon, 2003; Hasson et al., 2003; Martin, 2007). Thus, the objective of the present study, using functional magnetic resonance imaging (fMRI), was to explore category-specificity from the perspective of hearing perception, and further sub-divide conceptual categories of sounds including those produced by humans (conspecifics) versus non-human animals, and mechanical versus environmental sources. We explicitly excluded vocalizations, as they are known to evoke activation along relatively specialized pathways related to speech perception (Belin et al., 2000; Fecteau et al., 2004; Lewis et al., 2009). Our first hypothesis was that human action sounds, in contrast to other conceptually distinct action sounds, would evoke activation in motor-related networks associated with embodiment of the sound-source, but with dependence on the listening task. Our second hypothesis was that other action sound categories would also show preferential activation along distinct networks, revealing high-level auditory processing stages and association cortices that may subserve multisensory or amodal action knowledge representations. Both of these hypotheses, if verified, would provide support for grounded cognition theories for auditory action and object knowledge representations.

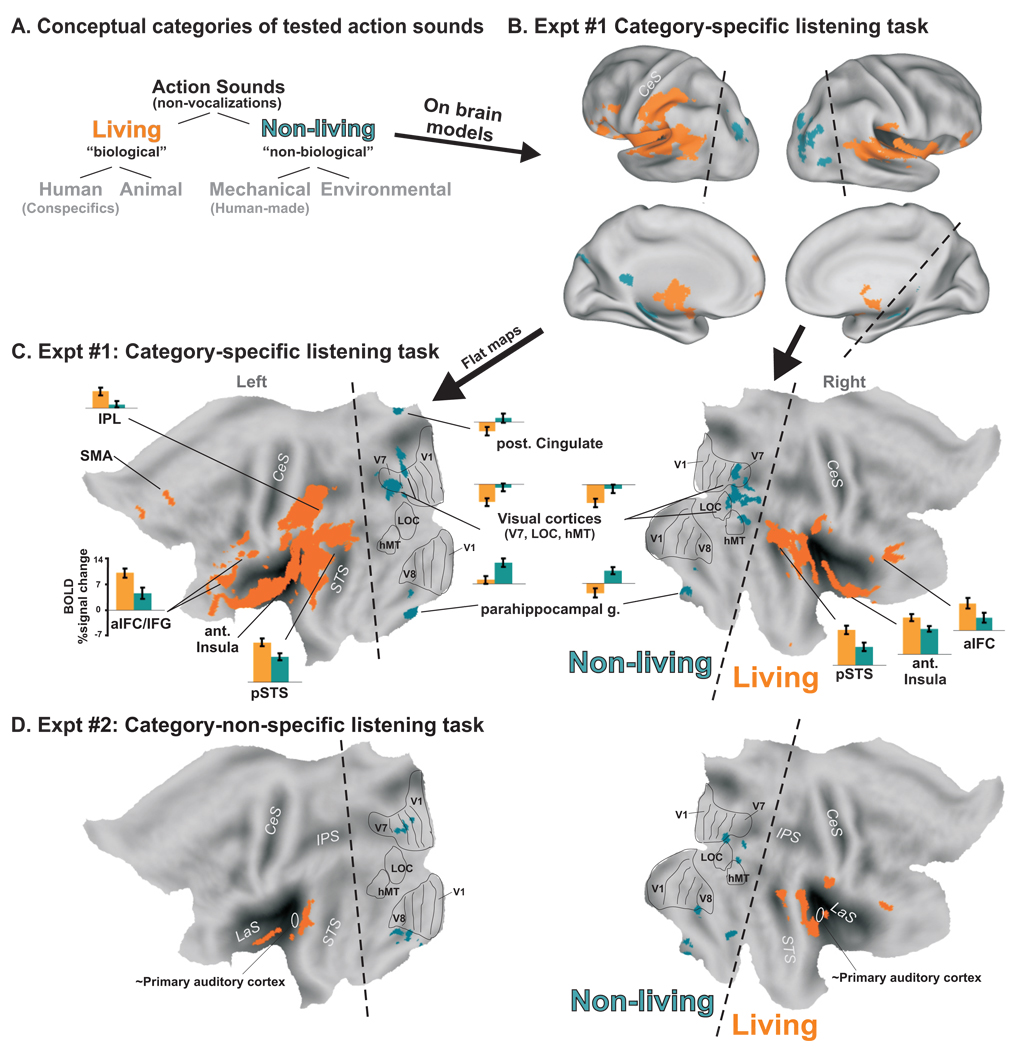

Figure 1.

Activation to living vs. non-living categories of sound-sources. (A) Schematic division of the action sound categories tested. Group-averaged data from Experiment #1 (n=20, α<0.05, corrected) illustrated on (B) inflated and (C) flat map renderings of cortex (standardized PALS atlas) showing preferential activation to living (human plus animal) vs. non-living sounds (mechanical plus environmental). Histograms indicate the BOLD percent signal change from ROIs (at p<0.001, α<0.05) to living or non-living sound categories relative to silent events. (D) Group-averaged data from Experiment #2 (n=12, α<0.05, corrected) illustrated on flat maps. Ovals depict the approximate location of primary auditory cortices, located along the medial two-thirds of Heschl’s gyrus. Note the striking anterior to posterior distinction of activation for living vs. non-living (dashed lines) sound sources bilaterally, independent of listening task. Visual area boundaries are from the PALS atlas database. CeS = central sulcus, LaS = lateral sulcus, IPS = intraparietal sulcus, STS = superior temporal sulcus, V1 = primary visual cortex, LOC = lateral occipital cortex. Refer to text for other details.

Materials and methods

Participants

We tested 32 participants (all right-handed, 19–29 years of age, 17 women). Twenty were tested in the main scanning paradigm (Experiment #1), and 12 were tested in a control paradigm (Experiment #2). All participants were native English speakers with no previous history of neurological or psychiatric disorders or auditory impairment, and had a self-reported normal range of hearing. Informed consent was obtained from all participants following guidelines approved by the West Virginia University Institutional Review Board.

Sound stimulus creation and presentation

The stimulus set consisted of 256 sound stimuli drawn from professional compilations (Sound Ideas, Inc, Richmond Hill, Ontario, Canada), including action sounds from four conceptual categories: human, animal, mechanical, and environmental (HAME; see Appendix 1). All the human and animal sounds analyzed were explicitly devoid of vocalizations or vocal-related content (10 were excluded post hoc) to avoid potentially confounding activation in pathways specialized for vocalizations (Belin et al., 2000; Lewis et al., 2009). Mechanical sounds were selected only if they were judged not to be directly attributable to a human agent instigating the action. Sound stimuli were all edited to 3.0 ± 0.5 second duration, matched for total root mean squared power, and onset/offset ramped 25 msec (Cool Edit Pro, Syntrillium Software Co., owned by Adobe). Sound stimuli were converted to one channel (mono, 44.1 kHz, 16-bit) but presented to both ears, thereby removing any binaural spatial cues.

Appendix 1.

Complete list of sound stimuli

| Human | Animal | Mechanical | Environmental |
|---|---|---|---|
| applause | bat flapping wings 1 | airline fly by | avalanche |
| banging on door | bat flapping wings 2 | airplane engine starting | bubbling mud |
| blowing nose 1 | bee buzzing around | airplane, prop | bubbling water |
| blowing nose 2 | bees buzzing | airplane, prop2 | fire crackling 1 |
| blowing up balloon | butterfly flapping wings | automated metal puncher | fire crackling 2 |
| bongo drums | buzzing insect | bell chimes | fire crackling 3 |
| camera, taking picture | cicada chirp | bells moving | fire crackling 4 |
| clapping hands | cows moving | boat | fire in fire place 5 |
| counting change | dog breathing 1 | cars passing by | fire in fire place 6 |
| cymbal crash | dog breathing 2 | chopper | forest fire |
| deep breathing | dog breathing heavily | church bells ringing | glacier break 1 |
| dialing on touch tone phone | dog eat biscuit | clock | glacier break 2 |
| dialing telephone, rotary | dog footsteps | clock ticking 1 | glacier break 3 |
| door knocker | dog lapping & eating | clock ticking 2 | glacier break 4 |
| doorknob 1 | dog lapping & licking | clock ticking 3 | heavy rain |
| doorknob 2 | dog lapping up water 1 | clocks, multiple | heavy rainstorm |
| dribbling and shooting basketball | dog lapping up water 2 | coin falling | heavyrain |
| dribbling basketball | dog licking 1 | conveyor | lake water wave ashore |
| dribbling basketball, echoey | dog licking 2 | cuckoo clock | large river |
| eating apple | dog panting & sniffing | door creaking closed | mud bubbling |
| eating celery | dog panting 1 | egg timer | ocean waves |
| eating chips 1 | dog panting 2 | exhaust fan | oceanwaves |
| eating chips 2 | dog panting 3 | fax arriving | rain fall 1 |
| footstep on hard surface | dog panting heavily | fax machine | rain fall 2 |
| footsteps 1 | dog panting, heavy breathing | fax machine, paper coming out | rain fall 3 |
| footsteps 2 | dog sniffling | fax or copy machine adjusting | rain running |
| footsteps on wood | dog swimming, shakes collar | fax warming up | rain falling with thunder |
| footstep on rough surface | dog trotting 1 | film projector reel rolling | river |
| human gargling | dog trotting 2 | fireworks going off | river medium |
| jumping rope | dog trotting 3 | flywheel | rock in water |
| knocking on door | dog trotting 4 | garage door opening 1 | rocks falling |
| knocking on wooden door | dog walking | garage door opening 2 | rocks in water |
| money in vending machine | fly buzzing | heavy machine, quiet | rockslide |
| opening bottle of champagne | hen caught, flight & vocalizes | helicopter | small brush fire |
| opening can of beer | hen chased 1 | helicopter passing | small waterfall |
| pouring cereal | hen chased 2 | industrial engine running | thunder |
| pouring juice | hen flap around, vocalizes | industry | water |
| putting coin in slot machine | hen flapping | industry generator (compressor) | water bubbling 1 |
| raking gravel | hoofed animal footsteps | machinery 1 | water bubbling 2 |
| raking something | hoofed animal stampede | machinery 2 | water bubbling 3 |
| ringing doorbell | horse drawing carriage | machinery 3 | water dripping 1 |
| ripping paper up | horse eating | money falling out of slot machine | water dripping 2 |
| scratching | horse gallop 1 | office machine | water dripping 3 |
| setting microwave | horse gallop 2 | office printer, printing | water dripping 4 |
| shaving, electric razor | horse trotting 1 | paint can lid rolling on floor | water dripping in cave |
| shuffling cards | horse trotting 2 | police car passing | water flow |
| starting large power tool | horse trotting 3 | pressbook | water leaking |
| starting up power tool | horse trotting 4 | printer 1 | water running |
| taking picture with polaroid | insect flying | printer 2 | waves |
| tearing paper off pad | insects buzzing 1 | printer warming up | waves, ocean |
| tennis ball rally | insects buzzing 2 | printer, dot matrix | wind |
| turning on television | large herd passing by | printer, feeding paper | wind blowing 1 |
| typing computer keyboard | large mutt | printer, office | wind blowing 2 |
| typing computer keyboard2 | pigeon flutter | refrigerator motor turning on | wind blowing 3 |
| typing on cash register | pigeon flutter fast | scanner adjusting | wind blowing 4 |
| using a table saw | pigeon flight | stopwatch ticking | wind blowing 5 |
| using handtools | pigs feeding | train squeal brakes to a stop | wind blowing 6 |
| vacuuming | rattle snake rattling 1 | train, freight passing | wind blowing 7 |
| writing on chalkboard 1 | rattle snake rattling 2 | train, steam engine driving by | wind blowing 8 |
| writing on chalkboard 2 | rattle snake rattling 3 | washing machine 1 | wind blowing 9 |
| writing on chalkboard 3 | water fowl flapping wings | washing machine 2 | wind blowing 10 |
| writing pencil on paper | woodpecker 1 | water going down drain | wind blowing, cold |
| zippering | woodpecker 2 | water going down toilet | wind gusting |
| zippering up tent | zebra trotting | windshield wiper | wind, fast |

Italicized entries contained subtle vocalizations and were censored out post hoc.

Five additional participants, not included in the fMRI studies, and naïve to the purpose of the experiment, assessed numerous sound stimuli, presented via a personal computer and headphones, to determine that they could reliably be identified as being generated by a human or not: They responded using a Likert scale of 1–5 as being created by a human (5) or by a non-human (1), and stimuli that averaged a score greater than 4 were used for the fMRI scanning paradigm. The other three categories were similarly screened (three participants) such that most could be unambiguously recognized as belonging to one of the four categories, retaining a total of 64 sounds in each category.
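The screening rule described above (retain a stimulus only if its mean rating exceeds 4) can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: the function name `screen_stimuli` and the example ratings are hypothetical.

```python
from statistics import mean


def screen_stimuli(ratings, threshold=4.0):
    """Return the stimuli whose mean Likert rating exceeds the threshold.

    ratings maps a stimulus name to the list of 1-5 scores given by the
    raters (5 = clearly human-produced, 1 = clearly non-human).
    """
    return [name for name, scores in ratings.items() if mean(scores) > threshold]


# Hypothetical ratings from five raters (not data from the study).
ratings = {
    "clapping hands": [5, 5, 4, 5, 5],        # mean 4.8 -> retained
    "door creaking closed": [3, 4, 2, 3, 4],  # mean 3.2 -> rejected
    "footsteps 1": [5, 4, 4, 4, 4],           # mean 4.2 -> retained
}
kept = screen_stimuli(ratings)
```

The strict inequality mirrors the wording "greater than 4"; a stimulus averaging exactly 4 would be rejected under this reading.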

For the fMRI study, high fidelity sound stimuli were delivered using a Windows PC computer, with Presentation software (version 11.1, Neurobehavioral Systems Inc.) via a sound mixer and MR compatible electrostatic ear buds (STAX SRS-005 Earspeaker system; Stax LTD., Gardena, CA), worn under sound attenuating ear muffs. Stimulus loudness was set to a comfortable level for each participant, typically 80–83 dB C-weighted in each ear (Brüel & Kjær 2239a sound meter), as assessed at the time of scanning.

Scanning paradigms

Experiment #1, involving a category-specific listening task, consisted of 8 separate runs, across which the 256 sound stimuli and 64 silent events were presented in pseudorandom order, with no more than two silent events presented in a row. Participants (n=20) were given explicit instructions, just prior to the scanning session, to carefully focus on the sound stimulus and to determine silently whether or not a human was directly involved with the production of the action sound. None of the participants had previously heard the specific stimuli, nor were they aware of the nature of the study or that the other three action sound categories (animal, mechanical, and environmental) were parameters of interest. We elected to have participants not perform any overt response task to avoid activation due to motor output (e.g. pushing a button or overtly naming sounds). However, we wanted to be certain that they were alert and attending to the content of the sound (human or not) to a level where they were “recognizing” the sound, as further assessed by their post-scanning responses (see below).
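One way to build a pseudorandom event order that satisfies the "no more than two silent events in a row" constraint is sketched below. The construction (shuffling the sounds, then dropping silent events into the gaps between them with at most two per gap) is an assumption chosen for illustration, as are the names `make_event_order` and `longest_silent_run` and the equal split of 40 events per run; the source does not state how the order was actually generated.

```python
import random
from collections import Counter


def make_event_order(sounds, n_silent, max_silent_run=2, seed=0):
    """Shuffle sounds and interleave silent events, capping consecutive silents."""
    rng = random.Random(seed)
    order = list(sounds)
    rng.shuffle(order)
    n_gaps = len(order) + 1  # positions before, between, and after the sounds
    # Each gap appears max_silent_run times, so no gap can receive more than that.
    slots = [gap for gap in range(n_gaps) for _ in range(max_silent_run)]
    per_gap = Counter(rng.sample(slots, n_silent))
    events = []
    for gap in range(n_gaps):
        events.extend(["SILENT"] * per_gap[gap])
        if gap < len(order):
            events.append(order[gap])
    return events


def longest_silent_run(events):
    """Length of the longest stretch of consecutive silent events."""
    longest = current = 0
    for event in events:
        current = current + 1 if event == "SILENT" else 0
        longest = max(longest, current)
    return longest


sounds = [f"sound_{i:03d}" for i in range(256)]  # placeholder stimulus names
events = make_event_order(sounds, n_silent=64)
runs = [events[i:i + 40] for i in range(0, len(events), 40)]  # assumed 8 x 40 split
```

Because every silent event sits in a gap bounded by sounds, the constraint holds across run boundaries as well as within runs.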

Experiment #2, involving a category-non-specific task, was conducted under identical scanning conditions and used the same stimuli as Experiment #1. However, these participants (n=12) were instructed to press a response box button immediately at the offset of each sound stimulus—they were unaware that category-specific sound processing was the parameter of interest.

Magnetic resonance imaging and data analysis

Scanning was conducted on a 3 Tesla General Electric Horizon HD MRI scanner using a quadrature bird-cage head coil. We acquired whole-head, spiral in-and-out imaging of blood oxygenation level-dependent (BOLD) signals (Glover and Law, 2001), using a clustered-acquisition fMRI design that allowed stimuli to be presented without scanner noise (Edmister et al., 1999; Hall et al., 1999). A sound or silent event was presented every 9.3 seconds, and 6.8 seconds after event onset BOLD signals were collected as 28 axial brain slices (including the dorsal-most portion of the brain) with 1.875 × 1.875 × 4.00 mm³ spatial resolution (TE = 36 msec, OPTR = 2.3 sec volume acquisition, FOV = 24 cm). The presentation of each stimulus event was triggered by a TTL pulse from the MRI scanner. After the completion of the functional imaging scans, whole brain T1-weighted anatomical MR images were collected using a spoiled GRASS pulse sequence (SPGR, 1.2 mm slices with 0.9375 × 0.9375 mm² in-plane resolution).
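The timing of the clustered-acquisition design can be checked with simple arithmetic. The sketch below uses only the values stated in the text (9.3 s between events, acquisition starting 6.8 s after onset and lasting 2.3 s, sounds of at most 3.5 s, 320 events over 8 runs). Two assumptions are made for illustration: events are split equally across runs, and the volume acquisition is the only source of scanner noise. The function name `timing_summary` is hypothetical.

```python
# All times in milliseconds to avoid floating-point rounding in the sums.
SOA_MS = 9300            # onset-to-onset interval between events
ACQ_DELAY_MS = 6800      # acquisition starts this long after event onset
ACQ_DURATION_MS = 2300   # duration of one volume acquisition
MAX_SOUND_MS = 3500      # longest stimulus (3.0 + 0.5 s)
N_EVENTS = 256 + 64      # sound events plus silent events
N_RUNS = 8


def timing_summary():
    """Derive the quiet interval, slack, and session length from the design."""
    acq_end_ms = ACQ_DELAY_MS + ACQ_DURATION_MS
    events_per_run = N_EVENTS // N_RUNS  # assumes an equal split across runs
    return {
        "quiet_gap_after_sound_ms": ACQ_DELAY_MS - MAX_SOUND_MS,
        "slack_before_next_event_ms": SOA_MS - acq_end_ms,
        "events_per_run": events_per_run,
        "run_duration_s": events_per_run * SOA_MS / 1000,
        "total_duration_min": N_EVENTS * SOA_MS / 60000,
    }


summary = timing_summary()
```

Under these assumptions, even the longest sound ends 3.3 s before the acquisition begins, and the acquisition finishes 0.2 s before the next event onset, so each stimulus is heard without overlapping scanner noise.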

Immediately after the scanning session, each participant listened to all the stimuli again in experimental order outside the scanner, indicating by keyboard button press whether he or she thought the action sound was produced by a (1) human, (2) animal, (3) mechanical, or (4) environmental source when originally heard in the scanner. These data were subsequently used to censor out brain responses to incorrectly categorized sounds (Experiments #1 and #2), and subsequently used for error-trial analyses in Experiment #1.
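The censoring step described above amounts to splitting trials by whether the post-scan categorization matches the intended category. A minimal sketch follows; the trial records, field names, and the function `split_trials` are hypothetical and serve only to illustrate the logic.

```python
def split_trials(trials):
    """Separate correctly categorized trials from error trials.

    Each trial is a dict with the intended 'category' and the participant's
    post-scan 'response', both one of: human, animal, mechanical, environmental.
    """
    correct = [t for t in trials if t["response"] == t["category"]]
    errors = [t for t in trials if t["response"] != t["category"]]
    return correct, errors


# Hypothetical post-scan responses for one participant (not study data).
trials = [
    {"stimulus": "clapping hands", "category": "human", "response": "human"},
    {"stimulus": "dog trotting 1", "category": "animal", "response": "human"},
    {"stimulus": "egg timer", "category": "mechanical", "response": "mechanical"},
    {"stimulus": "wind gusting", "category": "environmental", "response": "environmental"},
]
correct, errors = split_trials(trials)
```

Here the second trial would be excluded from the primary analysis and, because the erroneous response was "human", would be a candidate for the separate error-trial analysis.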

Acquired data were analyzed using AFNI software (http://afni.nimh.nih.gov/) and related plug-ins (Cox, 1996). For each participant’s data, the eight scans were concatenated into a single time series and brain volumes were motion corrected for global head translations and rotations. Multiple linear regression analyses were performed to compare a variety of cross-categorical BOLD brain responses. BOLD signals were converted to percent signal change on a voxel-by-voxel basis relative to responses to silent events. For the primary analyses, only correctly categorized sounds were utilized. The first regression model tested for voxels showing significant differential responses to living (human plus animal) relative to non-living (mechanical plus environmental) sounds. The subsequent analyses entailed pair-wise comparisons and conjunctions across three or four of the categories of sound (e.g. (M>H) ∩ (M>A) ∩ (M>E)) to identify voxels showing preferential activation to any one of the four categories of sound or subsets therein. For both analyses, multiple regression coefficients were first spatially low-pass filtered (4 mm box filter), then subjected to t-tests and thresholded. For whole-brain correction, the functional noise in the BOLD signal across voxels was estimated using AFNI plug-ins 3dDeconvolve and AlphaSim, yielding an estimated 2.4 mm spatial smoothness (full-width half-max Gaussian filter widths) in the x, y, and z dimensions. Applying a minimum cluster size of 12 (or 5) voxels, together with a voxel-wise t-test threshold of p<0.02 (or p<0.001), yielded a whole-brain correction at α<0.05. The mis-categorized sounds were subsequently analyzed separately as error trials, using a multiple linear regression to model responses that corresponded with the erroneously reported perception of human-produced versus non-human-produced sounds.
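The logic of the percent-signal-change conversion and of a conjunction such as (M>H) ∩ (M>A) ∩ (M>E) can be illustrated on a toy set of voxels. This sketch is not the AFNI pipeline: the percent-change formula is the conventional one and is assumed here, the critical t value and the t-maps are made-up numbers, and the spatial filtering and cluster-size steps are omitted. All function names are hypothetical.

```python
def percent_signal_change(signal, baseline):
    """Percent change of a voxel's response relative to its silent-event baseline."""
    return 100.0 * (signal - baseline) / baseline


def conjunction(*masks):
    """Voxel-wise logical AND across boolean masks of equal length."""
    return [all(values) for values in zip(*masks)]


def preferential_voxels(t_maps, t_crit):
    """Voxels whose t value exceeds t_crit in every pair-wise contrast."""
    masks = [[t > t_crit for t in t_map] for t_map in t_maps.values()]
    return conjunction(*masks)


# Hypothetical t values for four voxels in three pair-wise contrasts.
t_maps = {
    "M>H": [4.1, 3.5, 1.2, 5.0],
    "M>A": [3.8, 2.0, 4.4, 4.2],
    "M>E": [3.2, 3.9, 3.6, 0.5],
}
mechanical_preferred = preferential_voxels(t_maps, t_crit=3.0)  # assumed threshold
```

In this toy example only the first voxel survives, because a voxel must pass the threshold in all three contrasts to count as preferential for mechanical sounds.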

Anatomical and functional imaging data were transformed into standardized Talairach coordinate space (Talairach and Tournoux, 1988). Data were then projected onto the PALS atlas cortical surface models (in AFNI-tlrc) using Caret software (http://brainmap.wustl.edu) for illustration purposes (Van Essen et al., 2001; Van Essen, 2003). Portions of these data can be viewed at http://sumsdb.wustl.edu/sums/directory.do?id=6694031&dir_name=LEWIS_NI09, which contains a database of surface-related data from other brain mapping studies.

Post-scanning assessment of sound stimuli

Apart from being “conceptually” distinct, the four action sound categories could have differed along other dimensions that would influence activation in different brain regions. To test for this possibility, a subset of participants from Experiment #1 (n=11) listened to all 256 sounds after scanning and rated each sound on a Likert scale (1–5) first for pleasantness (1=unpleasant, 3=neutral, 5=pleasant), and then listened to them again and rated their overall sense of familiarity with each action event depicted (1=less familiar, 3=moderately familiar, 5=highly familiar).

Results

We first present results regarding the processing of sounds produced by living versus non-living sources, followed by results pertaining to preferential processing for any one of the four categories of sound: human, animal, mechanical, or environmental (HAME). This is followed by a series of control condition analyses, including an error trial analysis of miscategorized sounds, and additional conjunction analyses. Finally, results from a second experiment examine the effects that task demands have on preferentially activated networks subserving real-world sound recognition.

Networks for living vs. non-living sound recognition

For Experiment #1, our first analysis identified regions preferentially activated by living or biologically-produced sounds (Fig. 1A; human and animal) relative to sounds produced by non-living things (mechanical and environmental sources). Only brain responses to correctly categorized sound-sources for each individual were included in this analysis. Strikingly, biologically- versus non-biologically-produced sounds revealed a distinct anterior to posterior division of activation in both hemispheres (Fig. 1B–C, dashed lines). In particular, biologically-produced sounds preferentially activated auditory- and sensorimotor-related regions (orange): This included much of bilateral auditory cortex proper, the bilateral superior temporal gyri (STG) plus posterior superior temporal sulci (pSTS), left lateralized inferior frontal gyrus (IFG) plus bilateral anterior inferior frontal cortex (aIFC), bilateral portions of the insulae, left supplementary motor area (SMA), and the left inferior parietal lobule (IPL). Additionally, several sub-cortical regions showed preferential activation to biologically-produced sounds, including the thalamus, bilateral basal ganglia, and cerebellum (not shown, though see Fig. 2).

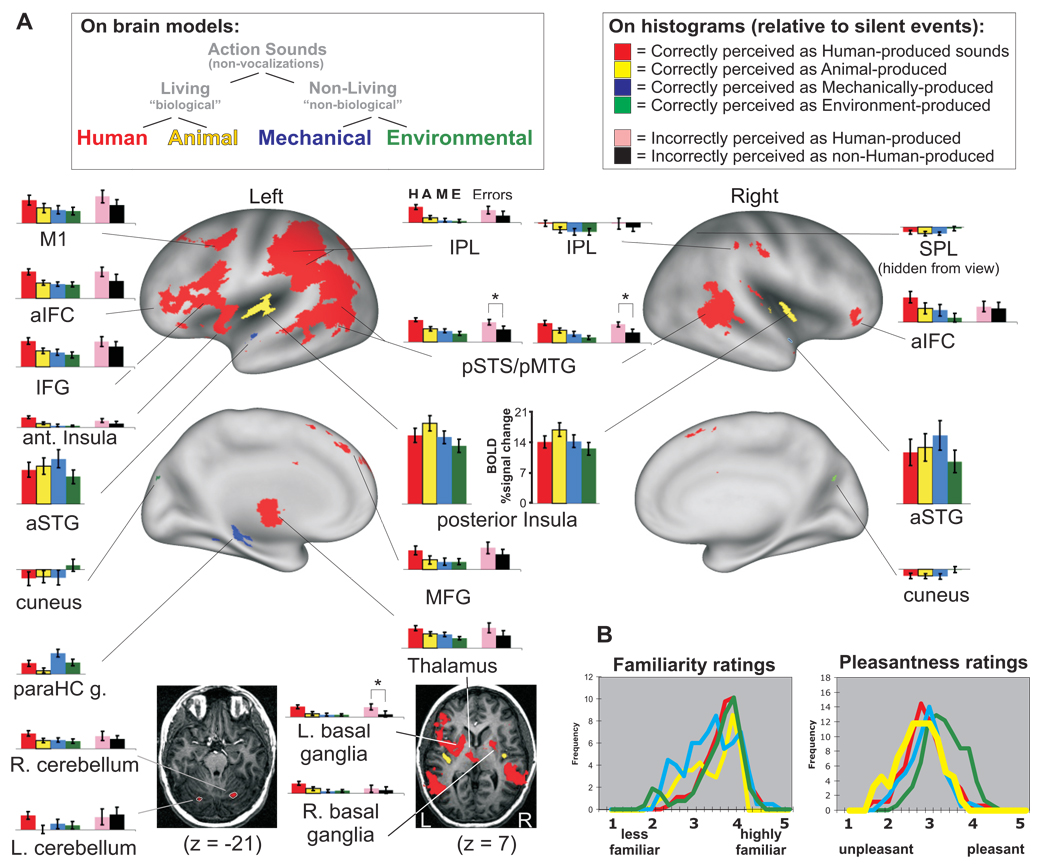

Figure 2.

(A) Group-averaged activations from Experiment #1 (n=20) for action sounds produced by human (red), animal (yellow), mechanical (blue), and environmental (green) sources (p<0.002, α<0.05, corrected). Histograms depict relative degrees of activation to each sound category for each ROI in BOLD percent signal change relative to silent events (mean ± standard error). Error trial data (pink and black histograms) are depicted only for ROIs that showed preferential activation to human-produced sounds (red ROIs). Asterisks on error trial histograms indicate t-test significant differences at p<0.01. paraHC = parahippocampal gyrus; MFG = medial frontal gyrus. (B) Lower right insets illustrate Likert scale ratings (n=11) for familiarity and pleasantness for each category of sound. Refer to text for other details.

Conversely, sounds produced by non-living or non-biological things (Fig. 1B–C, blue-green) resulted in preferential activation in cortex traditionally reported to be visually-sensitive, the bilateral parahippocampal gyrus, and posterior cingulate cortices (left > right). Surface-registered visual areas from the PALS database were superimposed onto these data (Fig. 1C, black outlines on flat maps), indicating that this overlap included high-level visual areas such as area V7, lateral occipital cortex (LOC), visual motion processing area hMT/V5, among other visual-related regions. Interestingly, different regions of interest (ROIs) showing preferential, or at least differential, activation to sounds produced by non-living things showed three different activation profiles relative to responses to silent events (Fig. 1C, histograms). For instance, the left parahippocampal gyrus region showed positive BOLD signal to both biological and non-biological sounds relative to silent events, but with a strong preference for the non-biological sounds. The left posterior cingulate and right parahippocampal gyrus showed positive activation to non-biological sounds, but “negative” responses to biological sounds relative to silent events. Bilateral activity in high-level visual areas showed negative BOLD activation signals to both living and non-living sound sources, but with significantly greater negative response magnitudes for the biological action sounds. Thus, there was a wider range in differential response profiles for activation elicited by the non-living sound-sources.

Experiment #2, which included a category-non-specific task, also revealed a striking anterior versus posterior division of cortical activation (Fig. 1D). This basic pattern was present within individual data sets, and thus the lesser expanse of activation in the group-average data appeared to be partially due to a smaller sample size (n=12 vs 20). In sum, this study is the first, to our knowledge, to demonstrate a double-dissociation in cortical networks for the two neuropsychologically distinct semantic categories, living versus non-living, using real-world sound-sources. Moreover, this double-dissociation was segregated at a gross anatomical level, being largely restricted to anterior (living) versus posterior (non-living) brain regions (Fig. 1, dashed lines).

Networks for sub-categories of action sounds using a category-specific listening task

The second analysis of Experiment #1 tested whether different conceptual subcategories of living and non-living action sound-sources would be represented differentially across cortical networks. One hypothesis was that listening for human-produced (non-vocal) action sounds would differentially activate distinct brain networks, including audio-motor association and mirror-neuron networks, as reported previously. However, in contrast to earlier studies, our control conditions critically included animal action sounds, as a second type of biological action sound, together with two conceptually distinct categories of non-biological action sounds: mechanical and environmental sound-sources. We next explicitly tested for brain regions showing activation preferential for any one of the four categories of sound (human, animal, mechanical, or environmental; HAME) relative to the other three categories after conducting pair-wise contrasts followed by a conjunction analysis (see Methods). Again, only brain responses to correctly categorized sounds for each individual were included in this analysis. As illustrated in Figure 2, all four action-sound categories yielded significant differential patterns of cortical network activation across the group, demonstrating a multi-level dissociation of network representations for these categories of real-world sounds. This included preferentially activated networks for human-produced sounds (Fig. 2A, red), animal-produced sounds (yellow), mechanically-produced sounds (blue), and environment-produced sounds (green).

When making category-specific judgments of the sounds (human versus non-human) the human-produced action sounds (Appendix 1), having no vocalization content, produced robust left-lateralized activation in several regions, including the IPL and IFG, anterior insula, and motor cortex (M1), plus bilateral activation in the pSTS, pMTG and anterior inferior frontal cortex (aIFC) (also see Table 1). Nearly all regions preferential for human-produced sounds did, however, also show significant positive activation to the other three categories of sounds relative to silence (Fig. 2, histograms). Human-produced sounds also evoked preferential activation in the three sub-cortical regions mentioned earlier for biologically-produced action sounds, including the basal ganglia, cerebellum, and thalamus (Fig. 2, axial images). Moreover, each of the other three categories of sound produced unique sets of cortical activation patterns as well.

Table 1.

Group activation centroids for activations in Talairach coordinates for human (H), animal (A), mechanical (M), and environment-produced (E) sounds pairwise relative to other categories for data depicted in Figure 2.

| Contrast | Anatomical location | Talairach x | Talairach y | Talairach z | Volume (mm³) |
|---|---|---|---|---|---|
| Right hemisphere | | | | | |
| H > AME | aIFC | 42 | 25 | −17 | 51 |
| H > AME | IFG | 48 | 33 | −1 | 780 |
| H > AME | IPL | 50 | −32 | 44 | 34 |
| H > AME | pSTS/pMTG | 51 | −31 | −11 | 5919 |
| H > AME | basal ganglia | 22 | 1 | 7 | 424 |
| H > AME | cerebellum | 22 | −65 | −20 | 83 |
| H > AME | thalamus | 7 | −10 | −2 | 205 |
| A > HME | posterior insula | 38 | −18 | 11 | 500 |
| M > HAE | aSTG | 40 | 4 | −3 | 181 |
| E > HAM | post-central lobe | 17 | −41 | 53 | 125 |
| E > HAM | cuneus | 9 | −78 | 25 | 66 |
| E > HAM | precuneus | 22 | −72 | 25 | 184 |
| Left hemisphere | | | | | |
| H > AME | aIFC | −45 | 35 | 9 | 1945 |
| H > AME | anterior insula | −33 | 4 | 7 | 5898 |
| H > AME | IFG | −50 | 12 | 14 | 4663 |
| H > AME | IPL | −50 | −41 | 36 | 23973 |
| H > AME | anterior SFG | −12 | 44 | 39 | 1475 |
| H > AME | midline SFG | −1 | 13 | 57 | 652 |
| H > AME | basal ganglia | −20 | 3 | 9 | 979 |
| H > AME | cerebellum | −49 | −72 | 28 | 31 |
| H > AME | thalamus | −8 | −14 | 6 | 958 |
| A > HME | posterior insula | −37 | −28 | 16 | 3284 |
| A > HME | cuneus | −6 | −87 | 28 | 75 |
| M > HAE | parahippocampal g. | −24 | −36 | −10 | 420 |
| M > HAE | pMTG/pSTS | −53 | −7 | 2 | 124 |
| E > HAM | precuneus | −6 | −87 | 28 | 75 |

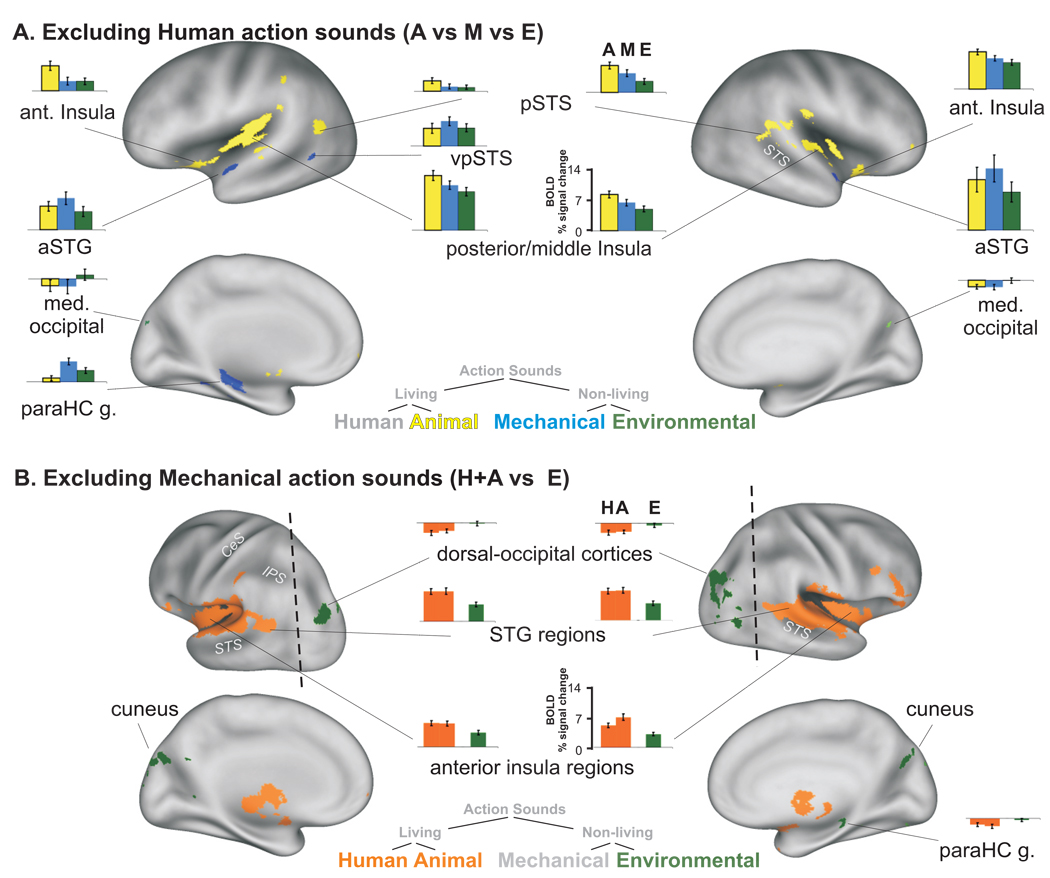

Animal action sounds, also devoid of vocalizations, produced robust preferential activation bilaterally along the posterior insulae (Fig. 2, yellow). As an additional analysis of data from Experiment #1, we excluded responses to human-produced sounds and tested for preferential activation for animal, mechanical or environmental categories only (Fig. 3A). This analysis further showed that animal action sounds, representing a sub-category of biological (animate agent) sound-sources, produced the most extensive network (yellow) of cortical activation of these three categories. In addition to the posterior insulae, animal sound-sources preferentially activated the anterior insulae and portions of the bilateral pSTS/pSTG complex. However, they did not yield significant activation in the fronto-parietal networks, as was the case for the sounds produced by human sources (cf. Fig. 2 red vs. yellow, and Fig. 3 yellow).

Figure 3.

Group-averaged activation from Experiment #1 for (A) animal vs. mechanical vs. environmental-produced sounds, after excluding responses to the human-produced category of sound (p<0.002, α<0.05, corrected), and (B) environmental vs. living (human and animal) sound-source categories, after excluding responses to the mechanically-produced sounds (p<0.002, α<0.05, corrected). Histograms show mean ± standard error as percent signal change relative to response to silence.
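The histogram values (mean ± standard error of percent signal change relative to silence) can be illustrated with a short sketch. The definition used here, 100 × (condition − silence) / silence, is an assumption for illustration only; the exact scaling used in the study is described in the Methods and may differ. All numbers are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev
from typing import List, Tuple


def percent_signal_change(condition: float, silence: float) -> float:
    """Assumed definition: 100 * (condition - silence) / silence."""
    if silence == 0:
        raise ValueError("silence baseline must be non-zero")
    return 100.0 * (condition - silence) / silence


def mean_and_sem(values: List[float]) -> Tuple[float, float]:
    """Return the mean and the standard error of the mean (sample SD / sqrt(n))."""
    if len(values) < 2:
        raise ValueError("need at least two participants")
    return mean(values), stdev(values) / sqrt(len(values))


# Hypothetical ROI signal (arbitrary units) for four participants.
sound = [1010.0, 1005.0, 1020.0, 1015.0]
silent = [1000.0, 1000.0, 1000.0, 1000.0]

psc = [percent_signal_change(s, b) for s, b in zip(sound, silent)]
roi_mean, roi_sem = mean_and_sem(psc)
print(f"{roi_mean:.2f} ± {roi_sem:.2f} %")
```

A negative value under this definition corresponds to a response below the silence baseline, which is how a "negative" activation signal can still contribute to a net differential activation between categories.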

Mechanically-produced sounds, all automated human-made machinery, preferentially activated a few cortical regions, including restricted portions of the left and right anterior STG (Fig. 2 and Fig. 3A, blue). Importantly, these bilateral foci were located immediately anterior and lateral to the foci preferential for animal-produced sounds within most individuals. The left parahippocampal gyrus also showed robust preferential activation for mechanical action sounds, which largely overlapped with the ROI for non-living sounds illustrated previously (cf. Fig. 1B, blue-green).

Interestingly, the foci preferential for animal and mechanical sounds that were located near or in auditory cortex proper showed the greatest relative BOLD percent signal change magnitudes overall (posterior insula and aSTG; Fig. 2, histograms). This was despite the fact that the participants were explicitly listening for human action sounds, and unaware that there were three conceptually distinct “other” categories of sound. These larger signal amplitudes may reflect the processing of acoustic signal attributes along relatively early cortical stages, though the relative timing of processing along these pathways remains to be examined. Additionally, while the human-produced action sounds preferentially activated the widest range in cortical expanse during this listening task, surprisingly, there were few, if any, ROIs preferential for human-produced sounds within auditory cortex proper, even within individual datasets (not shown).

Environmental sounds, including flowing water, rain, wind, and fire (Appendix 1), preferentially activated the cuneus and medial-dorsal occipital cortex bilaterally and the right superior parietal lobe. An additional conjunction analysis of Experiment #1 examined environmental sounds versus the two categories of living sounds (excluding responses to mechanical sounds). This analysis revealed further activation along dorsal-occipital cortices (contiguous with activation foci in the cuneus) and right parahippocampal regions. Most of these activated foci preferential for environmental sounds showed a “negative” activation signal to the human and animal action sounds (relative to silence), leading to a net differential activation (Fig. 3B, histograms).

The sounds were assessed by the fMRI scanning participants (n=11 of 20) for overall ratings of familiarity and pleasantness post-hoc (see Methods). Mean scores calculated from the Likert scales exhibited approximately Normal distributions (Fig. 2B). The environmental sounds (green curve) were rated as being slightly, yet significantly, more pleasant to hear as a category overall (mean 3.20; ANOVA by the method of restricted maximum likelihood (REML) F=10.55, p<0.001), while the human (mean 2.69), animal (2.53), and mechanical (2.70) sounds were slightly less pleasant (with neutral being a rating of 3). For three individuals, we mapped responses to the human, animal plus mechanical sounds that were rated as being more pleasant (4 or 5) relative to those rated as unpleasant (1 or 2). These results did not yield significant activation in dorsal occipital cortices (data not shown), suggesting that these differences in emotional valence were less likely to account for the occipital cortex preferential activation to environmental sounds.

Miscategorized sounds

For all participants, some sounds not produced by humans were incorrectly judged to be produced by a human (7.7%), and conversely, some sounds produced by humans were miscategorized as being produced by non-human sources (17.3%). Thus, as an additional analysis of Experiment #1 we examined responses to these error trials. We could only meaningfully examine human versus non-human produced sound categorization, as this was the judgment that participants made during the fMRI scanning for Experiment #1. Within the ROIs preferential for correctly categorized human-produced sounds (Fig. 2, red) we charted brain responses to sounds incorrectly perceived to be human-produced (Fig. 2, pink histograms) or incorrectly perceived as not being human-produced (black). Virtually all of the cortical regions preferential for correctly perceived human-produced sounds also showed at least a trend for preferential activation to sounds incorrectly perceived to be human-produced (cf. red and pink), with the left basal ganglia plus left and right pSTS/pMTG complex each showing a significant difference (t-test, p<0.01). Thus, most portions of the cortical network preferential for correctly categorized human-produced sounds (red) generally correlated with the perception of those action sounds as having been produced by other humans (conspecifics), whether or not the participants were correct.

Effect of listening task on category-specific sound processing pathways

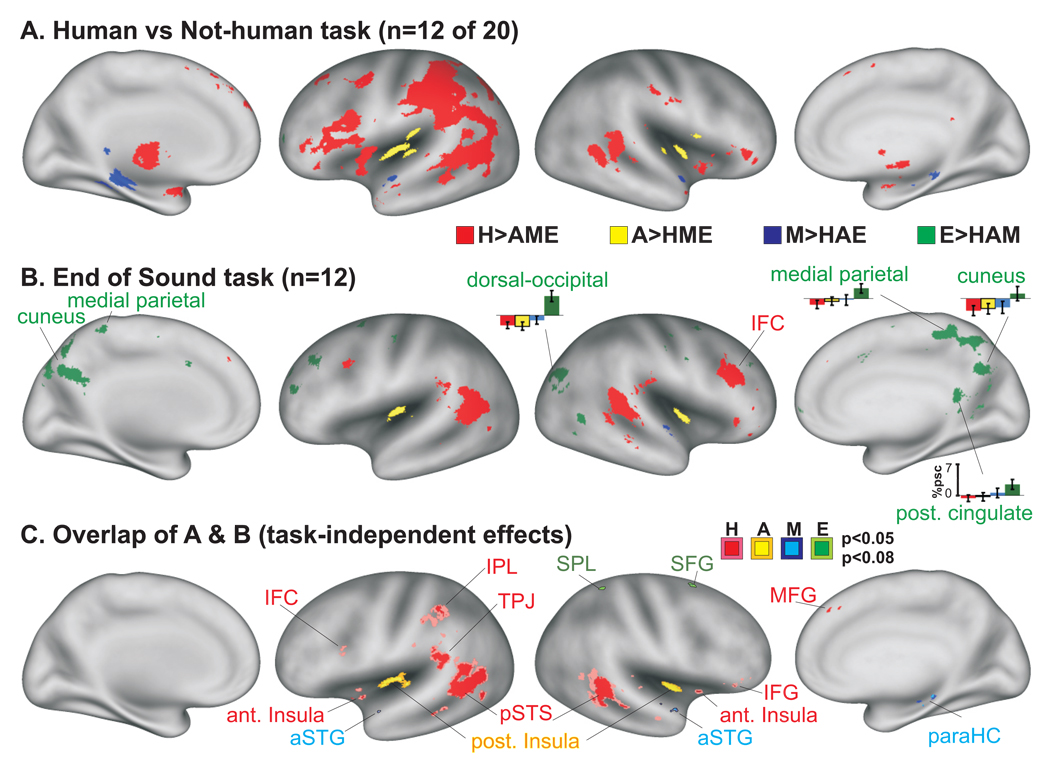

The task demands for Experiment #1 (differentiating a human from a non-human sound source) had the advantage of assessing the participant’s attention to sound content together with a fairly unbiased examination of brain responses to the three non-human categories of sound. However, this task emphasized sounds produced by human sources and hence may have biased activation for the human-produced category. To address this issue, we next assessed whether the dissociation of brain networks for the four different conceptual categories of sound would persist independent of task. Thus, we adopted a category-non-specific task wherein naïve participants (n=12), who were unfamiliar with the aims of the study, were instructed to press a button as quickly as possible to the offset of any sound.

Independent of listening task, Experiments #1 and #2 revealed a quadruple-dissociation for these conceptually distinct categories of sound. However, the task demands did lead to some major differences (Fig. 4A vs 4B). For instance, during the category-non-specific listening task, human-produced sounds did not lead to nearly as robust activation of the left fronto-parietal regions (mirror-neuron systems), insulae, nor to other cortical and sub-cortical motor-related networks. Additionally, subcortical regions such as the basal ganglia, cerebellum, and thalamus were not significantly activated during the category-non-specific task. However, the human-produced sounds did preferentially activate the right IFC region during the category-non-specific listening task. With regard to mechanical action sounds, the parahippocampal regions were much less robustly activated (just below threshold significance) during the category-non-specific listening task. Somewhat surprisingly, the environmental sounds led to substantially greater expanses of preferential activation along cuneus and medial parietal cortices, yielding significant positive BOLD signal differences (Fig. 4B, histograms), as well as activating various frontal regions with the category-non-specific task. Some of these regions overlapped those involved in the putative “default mode” network associated with the resting state (Greicius et al., 2003; Fransson and Marrelec, 2008).

Figure 4.

Group-averaged activation comparisons for sound categorization using different listening tasks. (A) Data from 12 of the 20 participants illustrated in Fig. 2, judging each sound as being produced by a human or not. (B) Data from 12 naïve participants for Experiment #2 performing a category-non-specific task. Several histograms for the E>HAM condition are illustrated (%psc=percent signal change relative to silent events). Data in panels A and B are both at p<0.05, α<0.05 corrected. (C) Common four-fold differential activation patterns across the two groups (p<0.05 and p<0.08, uncorrected) revealing task independent effects. Some colored foci were outlined for clarity.

To further illustrate similarities across the results from both listening tasks, we mapped regions that were commonly activated by each of the four sound categories (Fig. 4C). These results were presented at lower threshold settings due to the greater degree of variability when comparing across separate groups of individuals. Nonetheless, they highlighted a subset of the regions revealed in Experiment #1 (Fig. 2), again illustrating a quadruple-dissociation of network activation by category of sound. In particular, this analysis indicated that independent of task, human-produced action sounds preferentially and robustly activated the bilateral pSTS/pMTG complexes, and also activated the bilateral anterior insulae, the left temporal parietal junction (TPJ), and weakly activated portions of the left IPL and IFC regions. The animal action sounds produced robust activation of the posterior insula bilaterally independent of task, and the mechanical sounds preferentially activated restricted, but significant, portions of the aSTG regions bilaterally. The environmental sounds activated more variable locations across individuals (cf. Fig. 2–Fig. 4, green), but consistently involved dorsal occipital and dorsal fronto-parietal networks. Together, these findings indicate that independent of task demands a multi-level dissociation exists in cortex for differentially processing action sounds derived from human, animal, mechanical, or environmental sources.

On a technical note, there remains some controversy regarding the criteria to use when defining a brain region response that is category “specific”, “selective”, or “preferential” when using neuroimaging techniques such as fMRI (Fodor, 2001; Pernet et al., 2007). Most of the activated brain regions we revealed showed significant activity to all sound categories we tested, and thus may not meet strict physiological criteria for being regarded as category-specific (Calvert et al., 2001). Nonetheless, some regions showed significant quadruple-dissociations across two task conditions, meeting criteria for regarding these activation patterns as specific responses, while other regions were dissociated during only one of the task conditions, thereby meeting criteria of preferential responses, and are referred to as such in the discussion below.
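The working distinction drawn above, between responses dissociated under both task conditions and those dissociated under only one, can be written out as a simple rule. This is only a sketch of the labeling convention as stated in the text; the region names and flags below are hypothetical placeholders, not results from the study.

```python
def response_label(dissociated_task_specific: bool,
                   dissociated_task_nonspecific: bool) -> str:
    """Label a region by whether its category dissociation held across tasks.

    Both tasks -> "specific"; exactly one task -> "preferential";
    neither -> "neither" (this last label is added here for completeness).
    """
    if dissociated_task_specific and dissociated_task_nonspecific:
        return "specific"
    if dissociated_task_specific or dissociated_task_nonspecific:
        return "preferential"
    return "neither"


# Hypothetical regions with illustrative flags for the two listening tasks.
regions = {
    "roi_a": (True, True),
    "roi_b": (True, False),
    "roi_c": (False, False),
}
labels = {name: response_label(*flags) for name, flags in regions.items()}
print(labels)
```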

Discussion

The present study revealed distinct activation patterns associated with the perception of living (non-vocal) versus non-living sound-sources, as well as to more specific conceptual categories, including processing of human-, animal-, mechanically- and environment-produced sounds. Together, these results revealed intermediate- and high-level processing networks that may subserve auditory perception in the human brain. They further supported grounded cognition theories as a means for establishing representations of knowledge associated with different categories of objects and their characteristic sound-producing actions, as discussed below.

Living vs non-living sound-sources

Independent of listening task, we revealed a striking anterior versus posterior division of activation in brain networks preferentially involved in processing biological action sounds (human and animal) versus non-biological (mechanical and environmental) action sounds, respectively. Phenomenologically these results are consistent with classical brain lesion and neuropsychological neuroimaging studies over the last century, demonstrating separable brain systems for representing semantic and word-form knowledge for the categories of living versus non-living things (Warrington and Shallice, 1984; Hillis and Caramazza, 1991; Gainotti et al., 1995; Damasio et al., 1996; Silveri et al., 1997; Gainotti, 2000; Capitani et al., 2003).

In the present study, correct categorization of non-vocal sounds produced by living (biological) sound-sources activated a host of brain regions reported to be involved in motor and audio-motor association systems, presumably being linked to one’s own motor repertoire associated with sound production (Buccino et al., 2001; Bidet-Caulet et al., 2005; Calvo-Merino et al., 2005; Gazzola et al., 2006; Lahav et al., 2007; Mutschler et al., 2007; Galati et al., 2008). In contrast, sounds produced by non-living (non-biological) sources preferentially activated networks affiliated more with intermediate to high-level visual motion processing, plus parahippocampal regions that are involved in visual form, feature and object recognition, which may be linking crossmodal associations (Epstein et al., 1999; Murray et al., 2000; Haxby et al., 2001; Grill-Spector and Malach, 2004; Spiridon et al., 2006). Posterior cingulate cortices were also preferentially activated by non-living sound-sources, which are regions previously reported to be associated with various aspects of visual imagery, visual spatial context, and memory (Vogt et al., 1992; Hassabis et al., 2007). Alternatively, posterior cingulate activity may have been related to changes in intrinsic activity of the so-called “default mode” network (Greicius et al., 2003; Fransson and Marrelec, 2008), which may have been relatively more disengaged when attending to living versus non-living sound-sources. Nonetheless, the overall organization of these two distinct networks, one preferential for processing living sound-sources and the other for non-living sound-sources, was generally consistent with grounded cognition models for sensory and multisensory perceptual organization in the brain (Barsalou, 2008), and is further addressed below in the context of category-preferential processing.

Human- versus animal-produced action sounds

During the category-specific listening task, action sounds (non-vocal) produced by human conspecifics versus non-human animals yielded a much more extensive network of preferential activation including both cortical and sub-cortical regions. Specifically, this included bilateral portions of the basal ganglia, cerebellum, the left thalamus, and left lateralized anterior insulae and medial frontal gyri (MFG), which are all implicated in motor-system networks (Lahav et al., 2007; Mutschler et al., 2007). Human-produced sounds also preferentially activated a large left lateralized swath of IPL and IFG and M1. These left IPL and IFG are involved in associating gestural movements with human-produced sounds: They are thought to play a role in interpreting realized goals of observed prehensile actions (Grèzes et al., 2003; Johnson-Frey et al., 2003; Johnson-Frey et al., 2005; Lewis et al., 2005; Lahav et al., 2007; McNamara et al., 2008), and are also widely implicated as major constituents of a mirror-neuron system (Rizzolatti and Craighero, 2004; Pizzamiglio et al., 2005; Gazzola et al., 2006). Thus, these motor-related networks may subserve action recognition systems in terms of our own motor repertoires, and thus be involved in human-action sound recognition.

The left and right pSTS/pMTG complexes were preferentially activated by human-produced actions independent of listening task, and were preferentially activated (to a lesser extent) by animal-produced actions when responses to human-produced sounds were excluded from the analysis. These regions show activity to a wide variety of sensory action events, including the recognition of non-verbal action sounds (Maeder et al., 2001; Beauchamp et al., 2004; Lewis et al., 2004; Engelien et al., 2005), plus complex visual motion processing together with audio-visual and sensory-motor interactions (Martin et al., 1996; Chao et al., 1999; Beauchamp et al., 2002; Calvert and Lewis, 2004; Lewis et al., 2005; Lewis et al., 2006). However, they do not appear to show modality specificity, but rather associate dynamic action information in an amodal manner, and relatively independent of verbal or semantic content (Tanabe et al., 2005; Hocking and Price, 2008). Thus, they may have a more perceptual than conceptual role in processing sensory information. More specifically, the bilateral pSTS/pMTG complexes appear to have a dominant function in transforming dynamic sensory information (auditory, visual and sensorimotor interactions) into a common neural code, which can serve to facilitate crossmodal integration of those sensory inputs, if or when present (Taylor et al., 2009; Lewis, under review). As such, these regions may serve to establish an experience-based reference frame for comparing the predicted or expected acoustic (plus visual or tactile) dynamic action information based on what has already occurred. Despite the similar familiarity ratings (Fig. 2B), human actions may generally have more extensively learned and deeply encoded complex motion and sensory attributes relative to non-biological and non-conspecific biological actions, thereby accounting for the preferential activation by human sound-sources.

Interestingly, the error trial analysis indicated that the bilateral pSTS regions were activated in accordance with the listener’s perception of human-produced sounds, whether or not they were correctly categorized. Together, activation of the bilateral pSTS/pMTG complexes, the left IPL, left IFG, and motor-related subcortical regions support the idea that sounds perceived to be human-produced evoke some form of mental simulation of these “performable” sound-producing actions. Again, these findings are consistent with a mechanism for action understanding via an observation-execution matching system with reference to one’s own motor repertoire.

A related perspective is that these regions represent sites that may be innately predisposed to perform particular types of functions or process certain types of computations or operations regardless of the sensory input modality. Such regions, termed metamodal operators (Pascual-Leone and Hamilton, 2001), may compete for the ability to perform a sensory processing or cognitive task, and thus may be better thought of in terms of their roles in extracting certain types of information from the senses, if present. Thus, the pSTS/pMTG complexes may represent domain-specific regions (Caramazza and Shelton, 1998; Caramazza and Mahon, 2003) that are predisposed to process dynamic sensory action information, including the arguably more frequently encountered actions produced by ourselves and by other human conspecifics.

The animal sounds we selected represented a category of biologically-produced (non-vocal) action sounds that cannot be fully reproduced by humans. However, many of these sounds could be roughly mimicked by humans (e.g. flapping arms, running on all fours, lapping up water), and hence an “embodiment” mechanism could conceivably represent sounds of this biological action category. This may explain why motor-related regions such as the bilateral anterior and posterior/middle insular cortices were preferentially activated by animal-produced sounds relative to mechanical and environmental sound-sources (Fig. 3A, yellow), wherein portions of the insula have previously been implicated in motor learning (Fink et al., 1997; Mutschler et al., 2007). However, animal-produced action sounds, in contrast to human-produced action sounds, led to significantly less activation in the left IPL and no significant activation in the IFG. These results are consistent with the non-human animal action sounds evoking a lesser degree of audio-motor associations, or lesser degree of embodiment, relative to human-produced action sounds.

Together, the present results lend support to simulation-theories as mechanisms for conceptualizing and providing meaning to acoustic sensory events (Gallese and Goldman, 1998; Barsalou, 2008). In particular, the identification of networks preferentially activated when perceiving human-produced sounds, and to a lesser extent animal-produced action sounds (IFC, IPL, bilateral pSTS/pMTG), supports a Bayesian-like neural network mechanism for making probabilistic matches between input of a complex sound evolving over time and one’s own repertoire of motor actions that may have produced such a sound (Körding and Wolpert, 2004), thereby providing a sense of recognition and meaning to the sound.

Mechanically-produced sounds

Automated mechanical action sounds, which were judged to be independent of a human (or biological agent) instigating the action, represented a category that could not easily be “embodied” into the listener’s motor repertoire. Human-made mechanical sounds (especially motorized things) have arguably been around for less than 200 years. As such, this conceptual category of sound contrasts with the other three categories, for which evolutionary pressures may have driven neural systems to be prone to become specifically or optimally organized for rapid, efficient processing and identification—along the lines of cortical organizational principles based on domain-specific knowledge representations and metamodal operations, as addressed above. Qualitatively, our selection of mechanical sounds tended to have a more regular temporal structure and cadence (e.g. the temporal regularity of motors, clocks, and automated machinery within 20–100 msec temporal windows), and perhaps had relatively more distinctive frequency components, such as those uniquely produced by metallic objects. Thus, perhaps not too surprisingly, mechanical sounds preferentially activated cortical networks different from the motor-related and MNS-related regions addressed earlier. Rather, one pair of foci showing preferential positive activation to mechanically-produced sounds included the aSTG (e.g. Fig. 3, blue), the location of which, near auditory cortex proper, was consistent with representing higher-level stages of acoustic signal processing (Talavage et al., 2004; Lewis et al., 2009). A second focus included the left parahippocampal cortex, which is associated with processing visual scenes, combinations of objects, and identifiable visual features or form of an object (Whatmough et al., 2002; Mahon et al., 2007; Martin, 2007). 
Though the hierarchy of processing pathways for mechanical sounds remains to be examined, these results are consistent with a mechanism whereby the acoustic signal attributes distinctive of machinery are pre-processed along high-level stages of auditory cortices (i.e. the aSTG). This may then be followed by subsequent probabilistic matching to other knowledge representations of objects, including non-living visual object representations and rigid-body motion associations, along ventral pSTS and parahippocampal regions (Fig. 3A, blue), thereby providing a sense of meaning for, or the identity of, the likely sound-source.

Environment-produced sounds

For both listening task conditions, preferential activation for environment-produced sounds included various dorsal occipital and medial parietal cortices bilaterally, among other portions of visual-related areas and bilateral frontal cortices (Fig. 4A–C, green). The parietal and occipital portions of the networks preferential for environmental sound processing overlapped networks reported for audio-visual interactions pertaining to the matching and integration of spatially and/or temporally congruent intermodal features, and independent of semantic content (Bushara et al., 2001; Sestieri et al., 2006; Baumann and Greenlee, 2007; Meyer et al., 2007; Lewis, under review). Thus, these preferential activations for environmental sounds were consistent with abstract audio-visual spatial associations (non-motoric representations) and/or visual imagery mechanisms for representation of these sounds (Murray et al., 2000; Kosslyn et al., 2005; Spiridon et al., 2006; Hassabis et al., 2007). Relative to the other three sound-source categories, environmental sound-sources are generally less concrete or tangible: we cannot easily grasp or interact with rain, wind, or fire as we do with concrete objects such as tools, machinery, animals, and humans. Thus motor-related embodiments may be even less available as a neural mechanism for encoding environmental sound-sources. Rather, other properties, such as spatial scale and audio-visual associations, appear to prevail as organizational mechanisms to represent knowledge of environmental sound-sources.

Alternatively, or additionally, because the environmental sounds were judged by our participants as being more pleasant as a category overall, these networks may reflect activations and de-activations related to affect or emotional valence. Nature sounds are frequently used for relaxation purposes, and thus they may generally be evoking a more relaxed, or less task demanding, state (Richards, 1994) leading to a greater extent of default mode activation. An analysis of the potential effects of affect evoked by our sounds was beyond the scope of the present study, but will merit further study.

Tools and non-living things?

Historically, examples of the semantic categories living versus non-living things have included animals versus tools, respectively (Warrington and Shallice, 1984; Ilmberger et al., 2002; McRae et al., 2005; Lewis, 2006). This fits well from the perspective of vision and touch, where an artefact such as a hammer lying on a table (without a living agent in the scene), would be perceived as an inanimate, non-living thing. However, the characteristic sounds produced by, and associated with, hand tools (such as the hammer producing characteristic pounding sounds) necessarily imply the presence of a human agent using the tool. Cortical networks preferential for processing human-produced sounds in the present study showed a substantial degree of overlap with our earlier study (see Supplementary online Fig. 1) that identified separate cortical pathways for processing hand-manipulated tool sounds relative to animal vocalizations in right-handed individuals (Lewis et al., 2005). Consequently, hand-tool actions (action sounds and dynamic visual actions) appear to be encoded in cortex more in terms of embodied representations and biological motion and action (Beauchamp et al., 2002; Lewis et al., 2005), which includes lateralized cortical coding in the IPL that is partially dependent on handedness (Lewis et al., 2006). In short, tool action sounds appear to be represented in terms of one’s own motor actions. In contrast, the mechanical sound-sources we used, although representing a class of objects/tools made by humans, did not preferentially activate any motor-related networks. Thus, whereas acoustic features and spectro-temporal information may be more prominent for interpreting acoustic qualities of vocalizations (or even music), identifying the goal of, or the motor intentions behind, action sounds appears to be the more relevant or salient processing dimension for purposes of recognizing human-produced action sounds, including sounds produced by hand tools.

Conclusions

In everyday life a listener may need to efficiently process a barrage of unseen acoustic information in order to function in his or her environment. The dissociation of activated cortical networks for human, animal, mechanical, and environmental sound-sources provided strong support for a category-preferential organization for processing real-world sounds. Activation patterns to sounds produced by living things, especially human-produced sounds, when recognized as such, involved cortical and subcortical networks associated with action execution, perhaps representing simulations of motor actions of the agent that could have produced the sound, thereby providing a sense of meaning. Non-living sound-sources, which could not match well to audio-motor related repertoires, predominantly activated networks involved in visual and spatial relation associations, thereby reflecting a possible audio-visual association mechanism for representing knowledge of these action sound-sources in sighted listeners. Thus, in parallel with reports of category-specific visual object representations and biological-motion processing streams, the present data are indicative of category-preferential networks for sound-source and auditory object knowledge representations in the human brain. Thus, consistent with the visual object knowledge literature, sound-source knowledge also appears to be grounded by embodiment and multisensory associations, thereby representing system-level mechanisms for encoding and organizing networks that subserve hearing perception.

Supplementary Material

Comparison of the present results (HAME) with previously reported network activation to sounds produced by hand-tool use (red) versus animal vocalizations (light blue), adapted from Lewis et al., 2005 (with permission).

ACKNOWLEDGMENTS

We thank Dr. David Van Essen, Donna Hanlon, and John Harwell for continual development of software for cortical data analyses and presentation with CARET and the SUMS database, and Dr. Gerry Hobbs for statistical assistance. We thank Ms Mary Pettit for assistance with our participants, and William Talkington and Kate Tallaksen for assistance with data analyses. This work was supported by the NCRR NIH COBRE grant RR10007935 (to West Virginia University), as well as a WVU Summer Undergraduate Research Internship.

REFERENCES

- Adams RB, Janata P. A comparison of neural circuits underlying auditory and visual object categorization. Neuroimage. 2002;16:361–377. doi: 10.1006/nimg.2002.1088.

- Aglioti SM, Cesari P, Romani M, Urgesi C. Action anticipation and motor resonance in elite basketball players. Nat Neurosci. 2008 doi: 10.1038/nn.2182.

- Allison T, McCarthy G, Nobre A, Puce A, Belger A. Human extrastriate visual cortex and the perception of faces, words, numbers, and colors. Cereb Cortex. 1994;4:544–554. doi: 10.1093/cercor/4.5.544.

- Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–609; discussion 610–660. doi: 10.1017/s0140525x99002149.

- Barsalou LW. Grounded cognition. Annu Rev Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Barsalou LW, Kyle Simmons W, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends Cogn Sci. 2003;7:84–91. doi: 10.1016/s1364-6613(02)00029-3.

- Baumann O, Greenlee MW. Neural correlates of coherent audiovisual motion perception. Cereb Cortex. 2007;17:1433–1443. doi: 10.1093/cercor/bhl055.

- Beauchamp M, Lee K, Haxby J, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Beauchamp MS, Martin A. Grounding object concepts in perception and action: evidence from fMRI studies of tools. Cortex. 2007;43:461–468. doi: 10.1016/s0010-9452(08)70470-2.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci. 2004;7:1190–1192. doi: 10.1038/nn1333.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- Bidet-Caulet A, Voisin J, Bertrand O, Fonlupt P. Listening to a walking human activates the temporal biological motion area. Neuroimage. 2005;28:132–139. doi: 10.1016/j.neuroimage.2005.06.018.

- Broadbent WA. A case of peculiar affection of speech with commentary. Brain. 1878;1:484–503.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund H-J. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404.

- Bushara KO, Grafman J, Hallett M. Neural correlates of auditory-visual stimulus onset asynchrony detection. J Neurosci. 2001;21:300–304. doi: 10.1523/JNEUROSCI.21-01-00300.2001.

- Calvert GA, Lewis JW. Hemodynamic studies of audio-visual interactions. In: Calvert GA, Spence C, Stein B, editors. Handbook of multisensory processing. Cambridge, Massachusetts: MIT Press; 2004. pp. 483–502.

- Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14:427–438. doi: 10.1006/nimg.2001.0812.

- Calvo-Merino B, Glaser DE, Grezes J, Passingham RE, Haggard P. Action observation and acquired motor skills: an FMRI study with expert dancers. Cereb Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, Tettamanti M, Shallice T. The Different Neural Correlates of Action and Functional Knowledge in Semantic Memory: An fMRI Study. Cereb Cortex. 2007;18:740–751. doi: 10.1093/cercor/bhm110.

- Capitani E, Laiacona M, Mahon BZ, Caramazza A. What are the facts of semantic category-specific deficits? A critical review of the clinical evidence. Cognitive Neuropsychology. 2003;20:213–261. doi: 10.1080/02643290244000266.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: the animate-inanimate distinction. J Cogn Neurosci. 1998;10:1–34. doi: 10.1162/089892998563752.

- Caramazza A, Mahon BZ. The organization of conceptual knowledge: the evidence from category-specific semantic deficits. Trends Cogn Sci. 2003;7:354–361. doi: 10.1016/s1364-6613(03)00159-1.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Corballis MC. On the evolution of language and generativity. Cognition. 1992;44:197–226. doi: 10.1016/0010-0277(92)90001-x.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cross ES, Kraemer DJ, Hamilton AF, Kelley WM, Grafton ST. Sensitivity of the Action Observation Network to Physical and Observational Learning. Cereb Cortex. 2008 doi: 10.1093/cercor/bhn083.

- Damasio H, Grabowski TJ, Tranel D, Hichwa RD, Damasio AR. A neural basis for lexical retrieval. Nature. 1996;380:499–505. doi: 10.1038/380499a0.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- Doehrmann O, Naumer MJ, Volz S, Kaiser J, Altmann CF. Probing category selectivity for environmental sounds in the human auditory brain. Neuropsychologia. 2008;46:2776–2786. doi: 10.1016/j.neuropsychologia.2008.05.011.

- Edmister WB, Talavage TM, Ledden PJ, Weisskoff RM. Improved auditory cortex imaging using clustered volume acquisitions. Hum Brain Mapp. 1999;7:89–97. doi: 10.1002/(SICI)1097-0193(1999)7:2<89::AID-HBM2>3.0.CO;2-N.

- Engelien A, Tuscher O, Hermans W, Isenberg N, Eidelberg D, Frith C, Stern E, Silbersweig D. Functional neuroanatomy of non-verbal semantic sound processing in humans. J Neural Transm. 2005 doi: 10.1007/s00702-005-0342-0.

- Epstein R, Harris A, Stanley D, Kanwisher N. The parahippocampal place area: recognition, navigation, or encoding? Neuron. 1999;23:115–125. doi: 10.1016/s0896-6273(00)80758-8.

- Fecteau S, Armony JL, Joanette Y, Belin P. Is voice processing species-specific in human auditory cortex? An fMRI study. Neuroimage. 2004;23:840–848. doi: 10.1016/j.neuroimage.2004.09.019.

- Fink GR, Frackowiak RSJ, Pietrzyk U, Passingham RE. Multiple nonprimary motor areas in the human cortex. J Neurophysiol. 1997;77:2164–2174. doi: 10.1152/jn.1997.77.4.2164.

- Fodor J. The mind doesn't work that way: the scope and limits of computational psychology. Cambridge, MA: "A Bradford book" MIT Press; 2001.

- Fransson P, Marrelec G. The precuneus/posterior cingulate cortex plays a pivotal role in the default mode network: Evidence from a partial correlation network analysis. Neuroimage. 2008;42:1178–1184. doi: 10.1016/j.neuroimage.2008.05.059.

- Gainotti G. What the locus of brain lesion tells us about the nature of the cognitive defect underlying category-specific disorders: a review. Cortex. 2000;36:539–559. doi: 10.1016/s0010-9452(08)70537-9.

- Gainotti G, Silveri M, Daniele A, Giustolisi L. Neuroanatomical correlates of category-specific impairments: A critical survey. Memory. 1995;3/4:247–264. doi: 10.1080/09658219508253153.

- Galati G, Committeri G, Spitoni G, Aprile T, Di Russo F, Pitzalis S, Pizzamiglio L. A selective representation of the meaning of actions in the auditory mirror system. Neuroimage. 2008;40:1274–1286. doi: 10.1016/j.neuroimage.2007.12.044.

- Gallese V, Goldman A. Mirror neurons and the simulation theory of mind-reading. Trends Cogn Sci. 1998;2:493–501. doi: 10.1016/s1364-6613(98)01262-5.

- Gallese V, Lakoff G. The brain's concepts: the role of the sensory-motor system in conceptual knowledge. Cognitive Neuropsychology. 2005;22:455–479. doi: 10.1080/02643290442000310.

- Gazzola V, Aziz-Zadeh L, Keysers C. Empathy and the somatotopic auditory mirror system in humans. Curr Biol. 2006;16:1824–1829. doi: 10.1016/j.cub.2006.07.072.

- Glover GH, Law CS. Spiral-in/out BOLD fMRI for increased SNR and reduced susceptibility artifacts. Magn Reson Med. 2001;46:515–522. doi: 10.1002/mrm.1222.

- Greicius MD, Krasnow B, Reiss AL, Menon V. Functional connectivity in the resting brain: a network analysis of the default mode hypothesis. Proc Natl Acad Sci U S A. 2003;100:253–258. doi: 10.1073/pnas.0135058100.

- Grèzes J, Tucker M, Armony J, Ellis R, Passingham RE. Objects automatically potentiate action: an fMRI study of implicit processing. Eur J Neurosci. 2003;17:2735–2740. doi: 10.1046/j.1460-9568.2003.02695.x.

- Grill-Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Grossman ED, Blake R. Brain areas active during visual perception of biological motion. Neuron. 2002;35:1167–1175. doi: 10.1016/s0896-6273(02)00897-8.

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW. "Sparse" temporal sampling in auditory fMRI. Hum Brain Mapp. 1999;7:213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- Hassabis D, Kumaran D, Maguire EA. Using imagination to understand the neural basis of episodic memory. J Neurosci. 2007;27:14365–14374. doi: 10.1523/JNEUROSCI.4549-07.2007.

- Hasson U, Harel M, Levy I, Malach R. Large-scale mirror-symmetry organization of human occipito-temporal object areas. Neuron. 2003;37:1027–1041. doi: 10.1016/s0896-6273(03)00144-2.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Hillis AE, Caramazza A. Category-specific naming and comprehension impairment: a double dissociation. Brain. 1991;114(Pt 5):2081–2094. doi: 10.1093/brain/114.5.2081.

- Hocking J, Price CJ. The role of the posterior superior temporal sulcus in audiovisual processing. Cereb Cortex. 2008;18:2439–2449. doi: 10.1093/cercor/bhn007.

- Ilmberger J, Rau S, Noachtar S, Arnold S, Winkler P. Naming tools and animals: asymmetries observed during direct electrical cortical stimulation. Neuropsychologia. 2002;40:695–700. doi: 10.1016/s0028-3932(01)00194-4.

- Johansson G. Visual perception of biological motion and a model for its analysis. Percept Psychophys. 1973;14:201–211.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Johnson-Frey SH, Maloof FR, Newman-Norlund R, Farrer C, Inati S, Grafton ST. Actions or hand-object interactions? Human inferior frontal cortex and action observation. Neuron. 2003;39:1053–1058. doi: 10.1016/s0896-6273(03)00524-5.

- Kanwisher N. Domain specificity in face perception. Nat Neurosci. 2000;3:759–763. doi: 10.1038/77664.