Abstract

Dorsomedial prefrontal cortex (dmPFC), dorsolateral prefrontal cortex (dlPFC), and inferior frontal gyrus (IFG) have all been implicated in resolving decision conflict whether this conflict is generated by having to select between responses of similar value or by making selections following a reversal in reinforcement contingencies. However, work distinguishing their individual functional contributions remains preliminary. We used functional magnetic resonance imaging to delineate the functional role of these systems with regard to both forms of decision conflict. Within dmPFC and dlPFC, blood oxygen level-dependent responses increased in response to decision conflict regardless of whether the conflict occurred in the context of a reduction in the difference in relative value between objects, or an error following a reversal of reinforcement contingencies. Conjunction analysis confirmed that overlapping regions of dmPFC and dlPFC were activated by both forms of decision conflict. Unlike these regions, however, activity in IFG was not modulated by reductions in the relative value of available options. Moreover, although all three regions of prefrontal cortex showed enhanced activity to reversal errors, only dmPFC and dlPFC were also modulated by the magnitude of value change during the reversal. These data are interpreted with reference to models of dmPFC, dlPFC, and IFG functioning.

Introduction

Optimal decision-making requires selecting the response that yields the greatest value. In many situations, however, specific responses do not elicit constant levels of reward. Instead, the reward associated with a given response may fluctuate over time and across contexts, leading to changes in the level of decision conflict. Decision conflict is defined as the degree of competition between responses initiated by the stimulus (i.e., the relative extent to which a particular response is primed by a given stimulus). Core regions of prefrontal cortex implicated in this form of flexible decision making include dorsomedial prefrontal cortex (dmPFC), dorsolateral prefrontal cortex (dlPFC), and inferior frontal gyrus (IFG) (Ernst et al., 2004; Rogers et al., 2004). Considerable data suggest that at least two of these regions, dmPFC and dlPFC, are involved in resolving conflict in Stroop-like paradigms (Carter et al., 1999; Botvinick et al., 2004). In addition, dmPFC, dlPFC, and IFG have each been implicated in resolving decision conflict whether this conflict is generated by having to select between responses of similar value (Blair et al., 2006; Pochon et al., 2008) or by making selections following a reversal in reinforcement contingencies (Cools et al., 2002; O'Doherty et al., 2003; Remijnse et al., 2005). However, selecting between two options of similar value and reversal learning potentially embody different forms of decision conflict. For example, whereas the former example involves conflict generated by reward differential, the latter would involve overruling a previously learned response. These key differences raise the possibility that regions of prefrontal cortex make distinct contributions to resolving decision conflict. This appears particularly likely given accounts stressing functional specialization within dissociable regions of frontal cortex. Thus, it has been argued that dmPFC is implicated in response conflict detection (cf. Carter et al., 1999; Botvinick et al., 2004), error detection (Holroyd et al., 2004), or action-reinforcement learning (Rushworth et al., 2007). In contrast, dlPFC is implicated in maintaining stimulus information against interference from competing nontarget stimuli (Casey et al., 2001), selecting context-appropriate representations (Liu et al., 2006; Hester et al., 2007), or classifying representations with respect to a criterion (Han et al., 2009). Last, IFG is implicated in the selection of appropriate motor responses (Rushworth et al., 2005; Budhani et al., 2007), processing punishment information (O'Doherty et al., 2001), or the inhibition of prepotent responses (Casey et al., 2001). However, the extent of overlap between neural regions activated by decision conflict during dynamic changes in relative reward value and decision conflict generated by a reversal of value remains unclear.

The current study tests two contrasting hypotheses regarding the functional contribution of dmPFC, dlPFC, and IFG in resolving decision conflict. The first suggests that all three regions are implicated in both forms of decision conflict with dmPFC detecting conflict (cf. Carter et al., 1999; Botvinick et al., 2004), dlPFC enhancing attention to relevant stimulus features (MacDonald et al., 2000), and IFG selecting an appropriate motor response (Rushworth et al., 2005; Budhani et al., 2007). This hypothesis implies significant conjunction of activity across these regions for decision conflict whether it is encountered by changes in reward differential or by reversal learning. A second possibility suggests greater functional specialization with recruitment of dmPFC and dlPFC during both forms of decision conflict, but recruitment of IFG only when a suboptimal response must change. We tested these contrasting hypotheses using a novel instrumental learning task that included both forms of decision conflict: (1) conflict generated by dynamic changes in reward differentials associated with available choices over time (either increasing or decreasing reward differentials); and (2) conflict generated by reversing the reward contingencies.

Materials and Methods

Participants.

Twenty-two subjects participated in the study. Three subjects were excluded due to technical difficulties (user-interface, scanner, or computer failure) and their data were not analyzed, leaving 19 participants in total (10 female and 9 male) aged 21–50 years (mean = 29.6; SD = 8.6). Three subjects did not show evidence of reversal learning (performance was 2 SDs or more below the mean) and were excluded from the functional magnetic resonance imaging (fMRI) analysis leaving 16 participants (8 female, age range 21–50, mean 29.19, SD 8.53). All participants underwent a medical exam performed by a physician, were free of psychotropic medication, and were screened with the Structured Clinical Interview for DSM-IV (First et al., 1997) to exclude those with a history of psychiatric or neurological disorder. Before proceeding to the fMRI scanner, all participants completed an abbreviated practice version of the task consisting of 48 trials to ensure that they understood the objectives of the task and were proficient in their responding.

fMRI data acquisition.

Subjects were scanned in a supine position during task performance using a 1.5 Tesla GE Signa scanner, with a light head restraint to limit head movement. Functional images were acquired with a gradient echo-planar imaging (EPI) sequence (repetition time = 3000 ms, echo time = 40 ms, 64 × 64 matrix, flip angle 90°, field of view 24 cm). Coverage was obtained with 29 axial slices (thickness 4 mm; in-plane resolution, 3.75 × 3.75 mm). A high-resolution anatomical scan (three-dimensional spoiled GRASS; repetition time = 8.1 ms, echo time = 3.2 ms; field of view = 24 cm; flip angle = 20°; 124 axial slices; thickness = 1.0 mm; 256 × 256 matrix) in register with the EPI dataset was obtained covering the whole brain.

Experimental task.

We developed a novel object discrimination task in which participants made operant responses for positive reinforcement (token dollar amounts). The objective of the task was to maximize monetary gains while response options underwent graded changes in reinforcement. On each trial, subjects selected one object (fractal image) within a pair displayed against a white background. Subjects were told that the values associated with the objects would be changing throughout the task and that it may be necessary to alter their responding at any time. Following each selection, subjects received reinforcement (e.g., “you win $55”). The reward differed depending on their accuracy and the preassigned value of the selected image. Each trial lasted 3000 ms and involved the presentation of a choice screen depicting the two objects (1750 ms), a feedback display (1000 ms), and a fixation cross (250 ms). In addition, 32 fixation trials (also of 3000 ms duration) were presented per run to serve as a baseline. Subjects responded during the choice screen by making left/right button presses on keypads held in both hands. Within each pair, object positions were counterbalanced so that they appeared equally on the left and right side of the screen. The task was programmed in E-Studio (Psychology Software Tools, 2002).

Varying the reward differential (blocks 1–3) (see Figure 1).

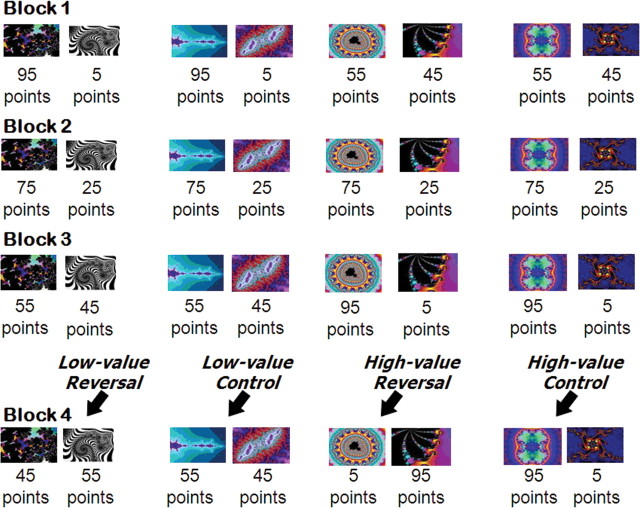

Figure 1.

An example of the stimuli and reinforcement contingencies used to determine the impact of varying reward differential on BOLD responding (blocks 1–3). In a given run, four distinct object pairs were used. Two object pairs corresponded to the decreasing reward differential condition (left); the reward differential between these objects steadily declined across the blocks. Two object pairs correspond to the increasing reward differential condition (right); the reward differential for these objects steadily increases across the blocks. In the fourth row, sample stimuli and reinforcement contingencies used to determine the impact of varying the reward differential of reversals on the BOLD response are depicted (block 4). In a given run, two stimuli underwent a reversal in contingencies (shown in the fourth row of columns 1 and 3), and two objects retained their original values (shown in the fourth row of columns 2 and 4). Of the two stimuli that reversed contingencies, one involved a low-differential reversal (fourth row, column 1), and the second underwent a high-differential reversal (fourth row, column 3). Of the two control pairs that retained their original values, one involved a low reward differential between objects within a pair (fourth row, column 2), and the other involved a high reward differential (fourth row, column 4).

In the first three blocks, we manipulated the reward differential between four pairs of objects across three blocks (each object pair was presented eight times per block). For two of the pairs, the decreasing reward differential pairs, the reward differential between the correct and incorrect object was initially high (e.g., $95 for correct responses vs $5 for incorrect responses). The correct response then steadily decreased in value from block 1 to block 3 (block 1: $95 vs $5; block 2: $75 vs $25; block 3: $55 vs $45); see Figure 1. For the other two pairs, the increasing reward differential pairs, the reward differential between the correct and incorrect object was initially low (e.g., $55 for correct responses vs $45 for incorrect responses). The correct response then steadily increased across blocks 2 and 3 (block 1: $55 vs $45; block 2: $75 vs $25; block 3: $95 vs $5).

Low and high-differential reversals (block 4).

In the fourth block, two of the four object pairs reversed reward values. One of the pairs, the low-differential reversal pair, involved the reversal of two objects of similar values (e.g., the object previously valued at $55 became the “incorrect” object valued at $45; conversely, the object previously valued at $45 became the “correct” object valued at $55). The other reversed pair, the high-differential reversal pair, involved the reversal of two objects with dissimilar values (e.g., the object previously valued at $95 became the “incorrect” object valued at $5, and vice versa). Each reversal pair had a corresponding control pair that retained the same value across blocks 3 and 4. There were therefore a total of four conditions in block 4: low-differential reversal, low-differential control, high-differential reversal, and high-differential control.

Subjects completed four 8 min runs of the task. Each run involved new stimuli with unique reward values to ensure that participants learned new reinforcement values and contingencies each time. As a consequence, participants received a total of 64 trials for each of the variable reward differential conditions (blocks 1–3), and 32 trials for each of the differential reversal conditions (block 4).
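The design arithmetic described above can be checked with a short sketch. It is an illustrative reconstruction rather than the original E-Studio script: all variable names are hypothetical, the dollar values are the example values given in the text, and the trial counts assume that each block 4 condition corresponds to exactly one object pair per run and that the 64-trial figure refers to each pair type within each block.

```python
# Illustrative reconstruction of the task arithmetic; names are hypothetical.
TRIAL_MS = 1750 + 1000 + 250  # choice screen + feedback + fixation cross

# (correct, incorrect) token values per block for the example pairs in the text
SCHEDULE = {
    "decreasing": {1: (95, 5), 2: (75, 25), 3: (55, 45)},
    "increasing": {1: (55, 45), 2: (75, 25), 3: (95, 5)},
}

PAIRS_PER_CONDITION = 2  # blocks 1-3: two pairs per pair type
PAIRS_PER_RUN = 4
PRESENTATIONS = 8        # each pair shown eight times per block
BLOCKS = 4
FIXATION_TRIALS = 32     # baseline trials per run
RUNS = 4


def differential(pair_type, block):
    """Reward differential (correct minus incorrect) for a pair type in a block."""
    correct, incorrect = SCHEDULE[pair_type][block]
    return correct - incorrect


# Trials per pair type per block, summed over runs (blocks 1-3)
trials_per_cell = PAIRS_PER_CONDITION * PRESENTATIONS * RUNS
# Block 4: assumed one pair per condition per run
trials_per_reversal_condition = 1 * PRESENTATIONS * RUNS
# Run duration implied by the trial counts and the 3000 ms trial length
run_seconds = (PAIRS_PER_RUN * PRESENTATIONS * BLOCKS + FIXATION_TRIALS) * TRIAL_MS / 1000
```

Under these assumptions the sketch gives 64 trials per cell, 32 trials per block 4 condition, and a run length of 480 s, which agrees with the 8 min runs reported above.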

fMRI analysis.

Data were analyzed within the framework of the general linear model using the Analysis of Functional Neuroimages program (AFNI) (Cox, 1996). Motion correction was performed by registering all volumes in the EPI dataset to a volume collected shortly before the high-resolution anatomical dataset was acquired. EPI datasets were spatially smoothed (isotropic 6 mm Gaussian kernel) to reduce the effect of anatomical variability among the individual maps in generating group maps. The time series data were then normalized by dividing the signal intensity of a voxel at each time point by the mean signal intensity of that voxel for each run and multiplying the result by 100, so that the resultant regression coefficients represented a percentage signal change from the mean. Regressors depicting each of the trial types were created by convolving the train of stimulus events with a γ-variate hemodynamic response function to account for the slow hemodynamic response. The hemodynamic response function was modeled across the trial. To control for voxelwise correlated drifting, a baseline plus linear drift and quadratic trend were modeled in each voxel's time series. Voxelwise group analyses involved transforming single-subject β coefficients into the standard coordinate space of Talairach and Tournoux, followed by a statistical analysis of the functional data. Separate analyses were conducted for each of the two experimental phases as described below.
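The normalization and regressor construction described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the AFNI pipeline used in the study: the function names are hypothetical, and the γ-variate parameters (p = 8.6, q = 0.547, a commonly used parameterization of this function form) are an assumption, since the text does not report them.

```python
import numpy as np

TR = 3.0  # seconds, from the acquisition parameters


def percent_signal_change(ts):
    """Divide a voxel's run time series by its run mean and multiply by 100."""
    ts = np.asarray(ts, dtype=float)
    return ts / ts.mean() * 100.0


def gamma_variate(t, p=8.6, q=0.547):
    """Gamma-variate response scaled to peak at 1 when t = p * q seconds.

    p and q are assumed values, not taken from the paper.
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / (p * q)) ** p * np.exp(p - t[pos] / q)
    return h


def make_regressor(onsets_s, n_vols, tr=TR, dt=0.1):
    """Convolve a train of event onsets with the response, then sample once per TR."""
    n_fine = int(round(n_vols * tr / dt))
    sticks = np.zeros(n_fine)
    for onset in onsets_s:
        sticks[int(round(onset / dt))] = 1.0
    kernel = gamma_variate(np.arange(0.0, 20.0, dt))
    fine = np.convolve(sticks, kernel)[:n_fine]
    return fine[:: int(round(tr / dt))]


def drift_columns(n_vols):
    """Baseline, linear drift, and quadratic trend columns for the design matrix."""
    x = np.linspace(-1.0, 1.0, n_vols)
    return np.column_stack([np.ones(n_vols), x, x ** 2])
```

A design matrix for one run could then be assembled by stacking one `make_regressor` column per trial type next to `drift_columns(n_vols)` and fitting it to the normalized time series by least squares.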

Two separate regressor models of the data were developed. The first regressor model investigated the blood oxygen level-dependent (BOLD) response associated with correct responding under the impact of varying reward differentials (blocks 1–3). This variable reward differential analysis involved six separate regressors each depicted in Figure 1 (blocks 1–3): (1) high reward differential acquisition (block 1, pair 1 and 2); (2) low reward differential acquisition (block 1, pair 3 and 4); (3) decreasing-value medium reward differential (block 2, pair 1 and 2); (4) increasing-value medium reward differential (block 2, pair 3 and 4); (5) low reward differential (block 3, pair 1 and 2); and (6) high reward differential (block 3, pair 3 and 4). Errors were modeled as regressors of no interest. The regressors of interest were then used to form the 2 (reward differential: increasing or decreasing) × 3 (block: 1, 2, or 3) ANOVA of the BOLD response.
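To make the mapping from the six regressors onto the 2 × 3 design explicit, the sketch below lists each cell and computes a simple interaction contrast across blocks 2 and 3. It is only an illustration of how the crossing pattern can be summarized: the labels and function name are hypothetical, and the contrast is not the F test reported in this study.

```python
# Hypothetical labels: (pair type, block) -> regressor described in the text.
CELLS = {
    ("decreasing", 1): "high reward differential acquisition",
    ("increasing", 1): "low reward differential acquisition",
    ("decreasing", 2): "decreasing-value medium reward differential",
    ("increasing", 2): "increasing-value medium reward differential",
    ("decreasing", 3): "low reward differential",
    ("increasing", 3): "high reward differential",
}


def block2_to_3_interaction(cell_means):
    """(decreasing: block 3 - block 2) minus (increasing: block 3 - block 2).

    cell_means maps the same (pair type, block) keys to a mean response.
    """
    dec = cell_means[("decreasing", 3)] - cell_means[("decreasing", 2)]
    inc = cell_means[("increasing", 3)] - cell_means[("increasing", 2)]
    return dec - inc
```

A positive value indicates that the response rose for the decreasing pairs while falling (or rising less) for the increasing pairs between blocks 2 and 3.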

The second regressor model concentrated on the BOLD response associated with correct responding after varying the reward differential of reversals (block 4). This low- and high-differential reversal analysis involved four separate regressors depicted in Figure 1 (block 4): (1) low-differential reversal (pair 1); (2) low-differential control (nonreversal, pair 2); (3) high-differential reversal (pair 3); and (4) high-differential control (nonreversal, pair 4). Using a similar strategy as that used in previous studies (O'Doherty et al., 2001; Budhani et al., 2007; Mitchell et al., 2008), we contrasted reversal errors with all correct control condition responses to identify regions involved in reversal learning. The percentage signal change relative to the mean within regions of interest (ROIs) was then examined across conditions to determine whether the BOLD response within these regions varied according to the magnitude of change.

Last, a conjunction analysis was conducted to determine the extent to which neural regions sensitive to our reward differential manipulation overlapped with those neural regions that showed a differential BOLD response to reversal errors. We created a mask of the voxels that were active in each of our statistical maps of interest ([reversal errors vs correct control] and [reward differential × block interaction]) using a common threshold value for each map (p < 0.005). Using the 3dcalc program (http://afni.nimh.nih.gov/sscc/gangc/ConjAna.html) in AFNI (Cox, 1996), we identified voxels that were modulated as a function of reward differentials alone, reversal errors alone, or both.
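The logic of this conjunction can be sketched with NumPy arrays standing in for the two statistical maps. This is an assumed illustration of the masking step, not the 3dcalc command used in the study: the function names, the integer coding of the output, and the `voxel_mm3` parameter are hypothetical, and the resampled voxel size is not stated in the text.

```python
import numpy as np


def conjunction_map(p_map_a, p_map_b, alpha=0.005):
    """Code each voxel: 0 = neither map, 1 = map A only, 2 = map B only, 3 = both."""
    a = np.asarray(p_map_a) < alpha
    b = np.asarray(p_map_b) < alpha
    return a.astype(int) + 2 * b.astype(int)


def overlap_volume_mm3(code_map, voxel_mm3):
    """Volume of the voxels that survive the threshold in both maps."""
    return int(np.count_nonzero(code_map == 3)) * voxel_mm3
```

Here map A would hold the p values for [reversal errors vs correct control] and map B those for the [reward differential × block interaction]; voxels coded 3 form the overlap.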

Results

Behavioral results

Impact of varying reward differential

Our first ANOVA examined reward differential changes (i.e., blocks 1–3). A 2 (reward differential pair: increasing or decreasing) × 3 (block: 1, 2, or 3) ANOVA was conducted on the error data. This revealed a significant main effect of reward differential (F(1,18) = 56.43; p < 0.001). Relative to pairs that decreased their reward differential over time, subjects made more errors to pairs that increased in reward differential over time [i.e., object pairs that were initially similar in value (e.g., $55 vs $45), but became dissimilar in value ($95 vs $5)]. There was also a significant main effect of block (F(2,36) = 61.53; p < 0.001). Subjects made more errors in the first than in the second or third block (p < 0.001). A significant reward differential × block interaction also emerged (F(2,36) = 17.91; p < 0.001). This interaction was notable in blocks 2 and 3 (see Fig. 2). Across blocks 2 and 3, error rates for the increasing reward differential pairs (block 2: $75 vs $25; block 3: $95 vs $5) decreased significantly (p < 0.005). Conversely, across blocks 2 and 3, error rates for the decreasing reward differential pairs (block 2: $75 vs $25; block 3: $55 vs $45) actually increased significantly (p < 0.01).

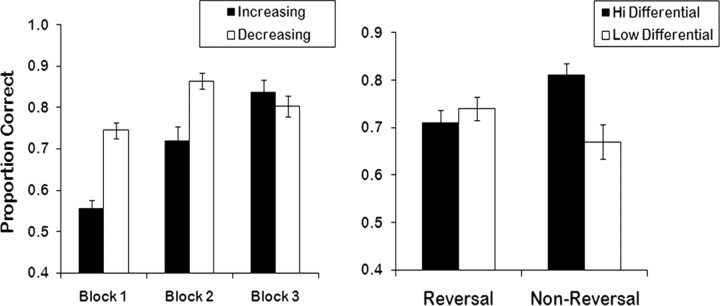

Figure 2.

Behavioral results from the experimental task reveal a significant reward differential × block interaction for error rates (y-axis = proportion correct). Across blocks 2 and 3, error rates decreased as the reward differential between the two options increased. Conversely, error rates increased when the reward differentials between the two pairs decreased (p < 0.01). An ANOVA conducted on the reversal learning phase of the task revealed a significant effect of reward differential; more errors were committed to low relative to high reward differentials. Participants also made significantly more errors to high-differential reversal pairs relative to high-differential control pairs (p < 0.005). Error bars represent the SEM.

Impact of reward differential on reversal

Our second ANOVA examined the reversal of high relative to low reward differential pairs (block 4). A 2 (reward differential: low or high) × 2 (reversal: reversing or nonreversing) ANOVA was conducted on the error data. This revealed a significant main effect of reward differential; subjects made significantly more errors to the low (block 4: $45 vs $55) relative to the high reward differential (block 4: $5 vs $95) pairs (F(1,15) = 18.70; p < 0.005). The main effect of reversal was not significant (F(1,15) = 0.19; ns). However, there was a significant reward differential × reversal interaction (F(1,15) = 16.84; p < 0.005). Subjects made significantly more errors to high-differential reversal stimuli than to high-differential control pairs (those stimuli that had not changed value) (t = 3.84; p < 0.005). In contrast, there was no significant difference between low-differential reversal and low-differential control condition selections (t = 1.65; p > 0.10). Finally, subjects made significantly more errors in the low- versus high-differential control condition (t = 5.71; p < 0.001), but not for the low- versus high-differential reversals (t = 1.22; p > 0.20).

fMRI results

Impact of varying reward differential

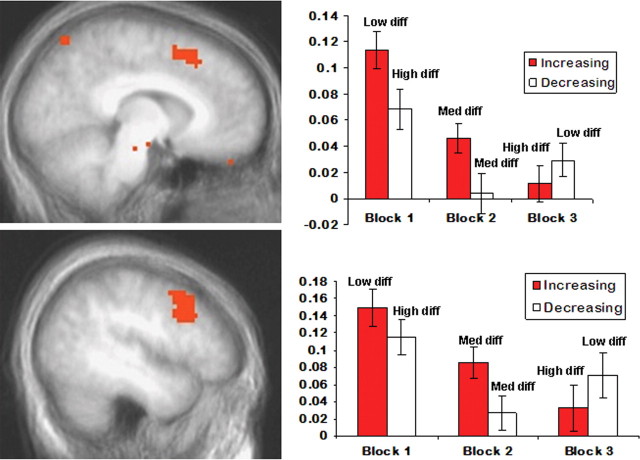

Our initial analysis involved a 2 (reward differential pair: increasing or decreasing) × 3 (block: 1, 2, or 3) ANOVA on the whole-brain BOLD response data (p < 0.005; corrected at p < 0.05). This analysis revealed main effects of reward differential pair and block as well as our result of interest, the reward differential pair-by-block interaction; see Table 1. Importantly, the reward differential pair-by-block interaction was significant within both dmPFC and dlPFC. However, no significant reward differential × block interaction was observed in IFG (Brodmann's areas 47, 44, or 45), even at a more liberal threshold (p < 0.01). Notably, and mirroring the behavioral data, the interaction emerged in blocks 2 and 3 (see Fig. 3). This interaction was characterized by greater activity in dmPFC and dlPFC to smaller reward differentials regardless of block. Thus, across blocks 2 and 3, activity in both dmPFC and dlPFC decreased for the increasing reward differential pairs (block 2: $75 vs $25; block 3: $95 vs $5; p < 0.05). Conversely, across blocks 2 and 3, activity in dmPFC and dlPFC increased for the decreasing reward differential pairs (block 2: $75 vs $25; block 3: $55 vs $45; p < 0.05 and p = 0.065, respectively).

Table 1.

Reward differential × block ANOVA

| Anatomical location | L/R | BA | x | y | z | F | Volume (mm³) |
|---|---|---|---|---|---|---|---|
| Dorsolateral PFC | L | 8/9 | −43 | 12 | 40 | 10.46 | 3294 |
| Medial PFC | L | 10 | −26 | 48 | 19 | 10.72 | 1782 |
| Dorsomedial PFC | R | 6 | 8 | 16 | 52 | 9.97 | 1350 |
| Caudate | L | − | −7 | 13 | 13 | 9.20 | 999 |
| Middle frontal gyrus | L | 6 | −28 | −1 | 65 | 10.96 | 945 |
| Superior parietal | R | 7 | 31 | −66 | 51 | 6.61 | 783 |
| Precuneus/superior parietal | R | 7 | 12 | −72 | 63 | 6.08 | 729 |
| Precuneus/superior parietal | L | 7 | −29 | −68 | 40 | 6.46 | 486 |
| Caudate | R | − | 14 | 9 | 13 | 6.35 | 324 |

Reward differential (increasing vs decreasing) × block (1, 2, 3) interaction (p < 0.005; corrected for multiple comparisons, p < 0.05). BA, Brodmann's area; L, left; R, right.

Figure 3.

A significant interaction between reward differential (diff) and block (p < 0.05 corrected) was observed in dorsomedial prefrontal cortex (top) and dorsolateral prefrontal cortex (bottom). Notably, across blocks 2 and 3, percentage signal change (y-axis) in these regions decreased when the reward differential between two response options increased. Conversely, activity in these regions increased when the reward differential between two response options decreased. Error bars represent the SEM.

Impact of reward differential during reversals

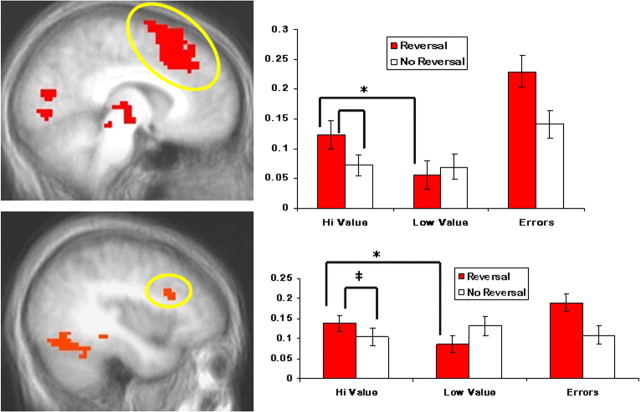

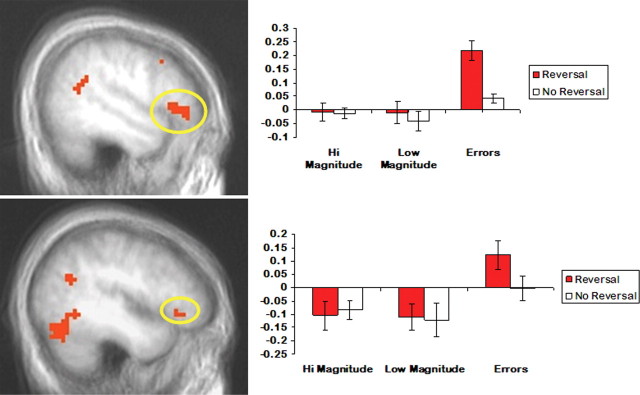

In accordance with strategies used in previous studies (O'Doherty et al., 2001; Kringelbach and Rolls, 2003; Budhani et al., 2007; Mitchell et al., 2008) to identify regions critical for reversal learning, we first contrasted the BOLD response to reversal errors with the BOLD response to correct selections (Table 2). In line with previous work, dmPFC, dlPFC and IFG all showed significantly enhanced activity to reversal errors relative to correct responses (Figs. 4 and 5). These regions formed our ROIs in subsequent analyses to determine the impact of reward differential during reversals. The resulting paired t tests uncovered significantly enhanced activity to large- versus small-differential correct reversals for dmPFC (t = 3.24; p < 0.01) and left dlPFC (t = 3.34; p < 0.005). In contrast, the percentage signal change in left and right IFG did not differ significantly between these two reversal conditions (t < 1; ns).
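For readers unfamiliar with the ROI comparison, the paired t statistic used above can be written out directly. The sketch below is a generic textbook computation, not the authors' analysis code; the function name is hypothetical, and any inputs would be per-participant percentage signal change values for the two conditions being compared.

```python
import math


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean = sum(diffs) / n
    # Unbiased sample variance of the within-participant differences
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n), n - 1
```

With the 16 participants retained for the fMRI analysis, each such comparison has 15 degrees of freedom.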

Table 2.

Reversal errors versus correct control condition selections

| Anatomical location | R/L | BA | x | y | z | t | Volume (mm³) |
|---|---|---|---|---|---|---|---|
| Dorsomedial PFC | R/L | 6/32 | 6 | 15 | 60 | 5.38 | 12,123 |
| Dorsolateral PFC | L | 46/9 | −36 | 16 | 26 | 5.28 | 432 |
| Inferior frontal gyrus | R | 44/47 | 49 | 27 | 2 | 5.24 | 6615 |
| Inferior frontal gyrus | L | 47 | −46 | 27 | −1 | 4.55 | 243 |
| Insula | L | 47/13 | −27 | 16 | 6 | 4.42 | 1701 |
| Superior frontal gyrus | L | 6 | −23 | −10 | 71 | 5.05 | 756 |
| Ventromedial PFC | L | 25 | −7 | 20 | −17 | 6.01 | 297 |
| Anterior cingulate cortex | R | 10 | 18 | 43 | −3 | 4.85 | 297 |
| Middle temporal gyrus | R | 21 | 60 | −21 | −7 | 4.31 | 243 |
| Superior temporal gyrus | R | 22 | 54 | −53 | 17 | 5.68 | 999 |
| Inferior occipital gyrus | R/L | 18 | −3 | −77 | 2 | 5.13 | 6804 |
| Fusiform gyrus | L | 19 | −34 | −65 | −22 | 3.92 | 8262 |
| Thalamus/substantia nigra | R/L | − | 3 | −21 | −4 | 3.78 | 3051 |
| Lingual gyrus | R | 18 | 20 | −76 | −10 | 5.51 | 675 |
| Inferior parietal | L | 39 | −44 | −58 | 23 | 4.67 | 378 |
| Superior parietal | R | 7 | 30 | −76 | 43 | 4.00 | 648 |
| Caudate head | L | − | −6 | 7 | 8 | 4.89 | 594 |
| Caudate head | R | − | 16 | 6 | 10 | 4.75 | 459 |
| Caudate tail | R | − | 32 | −43 | 8 | −4.46 | 297 |
| Cerebellum | R | − | 4 | −61 | −21 | 4.06 | 378 |

p < 0.05 corrected for multiple comparisons. BA, Brodmann's area; L, left; R, right.

Figure 4.

Both dmPFC (top) and dlPFC (bottom) showed significantly enhanced activity to reversal errors relative to correct responses (p < 0.05, corrected). In addition, both regions were sensitive to the magnitude of the reversal differential showing significantly enhanced activity to large versus small changes in value (*p < 0.05; ‡p = 0.06). Error bars represent the SEM.

Figure 5.

Both right (top) and left (bottom) IFG showed significantly enhanced activity to reversal errors relative to correct responses (p < 0.05, corrected). However, unlike dorsal regions of prefrontal cortex, activity in IFG was not significantly modulated by the size of the change in reinforcement. Error bars represent the SEM.

Because our low- and high-differential reversals also differed in reward magnitude, the observed differences in percentage signal change to high- versus low-differential reversals may reflect choice properties specific to reward magnitude independent of whether a reversal in contingencies had occurred. To control for this potential confound, we compared percentage signal change within our ROIs during low- and high-differential reversals relative to their respective control conditions (those trials that were of equal value but had not undergone a reversal in contingencies). Greater signal change was observed to high-differential reversals versus high-differential control conditions in dmPFC (t = 2.52; p < 0.05), with a trend in the same direction in left dlPFC (t = 2.02; p = 0.06). In contrast, signal in left or right IFG did not distinguish between high-differential reversals and high-differential control trials during correct selections (t < 1; ns). These results are consistent with the hypothesis that the BOLD response within dmPFC and dlPFC was modulated by the size of the differential reversal during correct responding rather than by the magnitude of reinforcement alone.

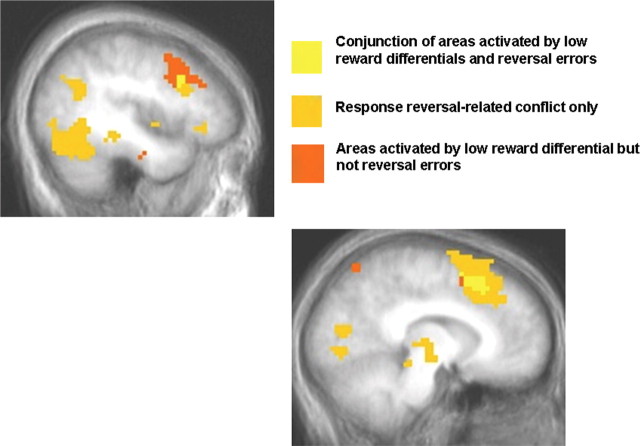

Conjunction analysis

Our analysis showed that there was a differential BOLD response in dmPFC and dlPFC for both reward differential and magnitude of reward reversal. To test the hypothesis that similar regions of dmPFC and dlPFC were involved in decision-making conflict generated by both reward differential and reversal learning, we conducted a conjunction analysis. As shown in Figure 6, the conjunction of [reversal errors vs correct control] and [reward differential × block interaction] (p < 0.005) revealed common areas of activity in both dmPFC (1242 mm³) and dlPFC (270 mm³).

Figure 6.

The results of a conjunction analysis (p < 0.005) demonstrate that overlapping areas of dlPFC (top left) and dmPFC (bottom right) exist that respond to decision conflict whether it is conflict generated by low reward differentials or conflict encountered during reversal learning.

Discussion

The goal of this study was to delineate the function of dmPFC, dlPFC, and IFG in the context of two distinct sources of decision conflict: conflict generated through decreasing reward differentials between available choices and conflict generated by reversing reinforcement contingencies. We observed that activity in dmPFC and dlPFC, but not IFG, was greater when participants selected between two options that were similar in value (had a low reward differential) relative to options that were dissimilar in value (had a high reward differential). Notably, this effect of reward differential was not restricted to the initial learning of stimulus–response associations; activity in these regions changed to reflect the updated levels of decision conflict across trials. Specifically, activity in dmPFC and dlPFC decreased when the reward differential between two options got larger, and increased when the reward differential between two options got smaller. Reversal errors were also associated with activity in dmPFC and dlPFC. Moreover, greater activity in dmPFC and dlPFC was observed to correct reversals in responding when the stimuli underwent larger relative to smaller changes in value. Importantly, a conjunction analysis revealed that the areas of dmPFC and dlPFC activated by decision conflict generated by diminishing reward differentials are highly overlapping with those areas activated by reversal errors. In contrast, while IFG showed clear activation to the decision conflict induced by reversal errors, there was no indication of IFG activity to decision conflict generated by smaller reward differentials. Even the region of IFG identified as responding to reversal errors showed no significant response to decision conflict generated by smaller reward differentials. Finally, IFG did not distinguish between reversals in reward that involved larger relative to smaller changes in value. In summary, whereas dmPFC and dlPFC were responsive to both forms of decision conflict examined, IFG activity was enhanced only when a suboptimal response had been made and an alternative selection was warranted.

According to influential conflict monitoring accounts, dmPFC signals instances of response conflict, triggering compensatory adjustments in cognitive control via activity in dlPFC (Carter et al., 1998, 1999; Botvinick et al., 2004). Subsequent studies have supported the view that cognitive control is achieved by amplifying cortical responses to task-relevant representations (Egner and Hirsch, 2005). There have been suggestions that the functional neuroanatomy of conflict resolution may differ depending on whether the source of conflict is generated by “stimulus-based conflict” or “response-based conflict” (Liu et al., 2006; Egner et al., 2007). While this may be correct, the current data, particularly the conjunction analysis, indicate that the resolution of conflict generated by choosing between options of similar value and the resolution of conflict generated by reversing reinforcement contingencies involve overlapping areas of dmPFC and dlPFC. Moreover, it is notable that the regions of dmPFC and dlPFC are proximal to those identified in previous decision conflict studies (Blair et al., 2006; Marsh et al., 2007; Pochon et al., 2008) as well as those identified in previous work with tasks such as the Stroop (Botvinick et al., 2004).

There are three features of the current results with respect to dmPFC and dlPFC that are worthy of note. First, in line with previous work (Blair et al., 2006; Pochon et al., 2008), both dmPFC and dlPFC showed increased activity when choosing between response options that were similar in value (had a small reward differential) relative to options that were dissimilar in value (had a large reward differential). Strikingly, however, activity within dmPFC and dlPFC was modulated by this reward differential even when the objects to be chosen between remained the same. For objects whose reward differential increased across blocks 2 and 3, activity in dmPFC and dlPFC decreased, whereas for objects whose reward differential decreased across blocks 2 and 3, activity in dmPFC and dlPFC increased. This was true in both cases despite the fact that the correct response had not changed. We interpret these data in terms of response conflict resolution (cf. Carter et al., 1999; Botvinick et al., 2004); as the differentials decreased, there was increased competition between the two response tendencies (respond toward pattern 1 vs pattern 2) because the reward value differentiating these response tendencies was reduced. While dmPFC appears implicated in action-reinforcement learning (Kennerley et al., 2006; Rushworth et al., 2007), we believe the current data support a potentially additional role for this region in decision conflict resolution. Activity in these regions was greatest in the first block, perhaps reflecting action-reinforcement learning. However, activity in these regions was also modulated by reward differential in blocks 2–3, when the correct response had not changed: activity in dmPFC and dlPFC increased for stimuli whose reward differentials decreased across blocks 2 and 3, and decreased for stimuli whose reward differentials increased across blocks 2 and 3. We believe our data are compatible with the view that dmPFC's role in conflict resolution leads to the recruitment of dlPFC during decision making, such that attention to relevant stimulus features is enhanced as a function of the level of conflict (cf. Liu et al., 2006; Hester et al., 2007).
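
As a purely illustrative sketch, and not part of the analyses reported here, the proposed link between reward differential and response competition can be expressed with a toy model. The sketch assumes a softmax (logistic) choice rule and quantifies conflict as the normalized product of the two response probabilities, in the spirit of conflict monitoring accounts. The function names, the temperature parameter, and the reward values are all hypothetical and were chosen only for illustration.

```python
import math


def choice_probability(value_a: float, value_b: float, temperature: float = 20.0) -> float:
    """Probability of responding toward option A under an assumed softmax rule."""
    return 1.0 / (1.0 + math.exp(-(value_a - value_b) / temperature))


def conflict_index(value_a: float, value_b: float, temperature: float = 20.0) -> float:
    """Toy conflict measure: normalized product of the two response probabilities.

    Equals 1.0 when both responses are equally primed and approaches 0.0
    as one response comes to dominate the other.
    """
    p_a = choice_probability(value_a, value_b, temperature)
    return 4.0 * p_a * (1.0 - p_a)


# Hypothetical reward values for two stimulus pairs (illustration only).
pairs = {
    "small reward differential": (55.0, 45.0),
    "large reward differential": (90.0, 10.0),
}

for label, (value_a, value_b) in pairs.items():
    print(f"{label}: conflict index = {conflict_index(value_a, value_b):.2f}")
```

Under these assumptions, the conflict index is higher for the pair with the small reward differential than for the pair with the large one, and it rises as the differential for a given pair shrinks, even though the higher-valued (correct) option stays the same.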

Second, in block 2, it is notable that although the reward differential was equal for the increasing and decreasing pairs, there was significantly greater activity to the increasing relative to the decreasing pairs. This finding is consistent with suggestions that dmPFC plays a key role in integrating information about actions and outcomes over time to guide responding (Kennerley et al., 2006; Walton et al., 2007). However, the data are also compatible with the idea that superior learning associated with the decreasing (high reward differential) pairs in block 1 meant less response conflict for these pairs relative to the increasing (low reward differential) pairs in block 2 (cf. Carter et al., 1999; Botvinick et al., 2004).

Third, while subjects were no more likely to make errors for large reward differential reversals relative to small reward differential reversals, both dmPFC and dlPFC showed greater activity for larger differential reversals. This pattern of activity may reflect the higher volatility associated with a greater discrepancy between past and current reward values in the larger versus smaller differential reversals (Rushworth and Behrens, 2008). In this situation, there should be rapid behavioral adjustment on the basis of the reversed reward outcomes, particularly for the high-differential reversals, where the cost of error is particularly high. During the reversal component of the task, we believe that there is a conflict between the previously optimal response tendency (“respond toward pattern 1”) and the new optimal response tendency (“respond toward pattern 2”). Of course, it is notable that dmPFC has been implicated in responding to errors (Holroyd et al., 2004). Following previous literature, we identified these regions of dmPFC and dlPFC using the contrast of reversal errors versus correct responses (O'Doherty et al., 2001; Budhani et al., 2007; Mitchell et al., 2008). However, activity was significantly greater within both regions for correct responses to high-differential reversal pairs relative to low-differential reversal pairs. Furthermore, whereas these two conditions did not differ significantly in error rate, they did differ in terms of response conflict: the high-differential reversal involves reversing the tendency to “respond toward the previously high pattern 1” and instead “respond toward the previously low pattern 2.”

The current results have implications for attempts to distinguish the functional contribution to decision making of IFG relative to dmPFC and dlPFC. At present, there are two prevailing views about how these regions interact to resolve decision conflict. The first suggests that whereas dmPFC is involved in conflict detection (cf. Carter et al., 1999; Botvinick et al., 2004) and dlPFC in resolving conflict through attentional means (MacDonald et al., 2000), IFG is involved in selecting an appropriate motor response (Rushworth et al., 2005; Budhani et al., 2007). This hypothesis implies significant conjunction of activity across these regions for decision conflict whether it is generated by reward differentials or reversals of reward. A second possibility suggests greater functional specialization with recruitment of dmPFC and dlPFC to both forms of decision conflict, but recruitment of IFG only when a suboptimal response must change. Our results support the latter suggestion; whereas activity in overlapping areas of dmPFC and dlPFC reflected conflict generated by reward differentials or a reversal in contingencies, IFG activity was enhanced only when a suboptimal response had been made and a change must take place on a subsequent trial. To our knowledge, this is the first neuroimaging study to dissociate the functional contribution to reversal learning of IFG from that of dlPFC and dmPFC.

In the current study, expected values were tied to specific stimuli rather than specific motor responses (either left or right button presses could be correct depending on the spatial location of stimuli). As a consequence, we cannot rule out the possibility that IFG may be sensitive to the reinforcement differential between specific actions. In short, if we had manipulated the reward differential between two actions with respect to a single stimulus (e.g., the left vs right button) rather than the reward differential between two stimuli, we might have seen modulation of IFG rather than dlPFC. Future work that varies the reward value associated with specific actions rather than stimuli (cf. Tanaka et al., 2008; Gläscher et al., 2009) is needed to address this unresolved issue. However, the current results do suggest that, at least in the context of the form of object discrimination paradigm used here, the production of a suboptimal response is necessary for IFG activity. Moreover, it is important to note that while IFG showed greater activity to reversal errors relative to correct responses, activity in this region, unlike that in dmPFC and dlPFC, was not influenced by whether the reversal involved a high or low differential, a manipulation that should be related to response competition.

Conclusions

In this study we demonstrated the complementary yet dissociable roles that dmPFC, dlPFC, and IFG play with respect to decision conflict. dmPFC and dlPFC were both responsive to increases in decision conflict regardless of whether it was decision conflict engendered by choosing between two objects of similar value, or conflict encountered during reversal learning. Conjunction analysis showed that overlapping areas within these regions were activated in each form of decision conflict. Moreover, whereas dmPFC and dlPFC were responsive to conflict as a function of the current and past stimulus-response values, IFG showed enhanced responding only when a suboptimal response had been made. In contrast, we found no evidence that activity in IFG was modulated by either current or past reward differentials. These results provide additional insight into the distinct functional contributions made by dorsal versus ventral regions of prefrontal cortex to decision making.

Footnotes

This research was conducted at the National Institutes of Health in Bethesda, Maryland, and was supported by the Intramural Research Program of the National Institutes of Health–National Institute of Mental Health and by a grant to D.G.V.M. from the Natural Sciences and Engineering Research Council of Canada.

References

- Blair K, Marsh AA, Morton J, Vythilingam M, Jones M, Mondillo K, Pine DC, Drevets WC, Blair JR. Choosing the lesser of two evils, the better of two goods: specifying the roles of ventromedial prefrontal cortex and dorsal anterior cingulate in object choice. J Neurosci. 2006;26:11379–11386. doi: 10.1523/JNEUROSCI.1640-06.2006.

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn Sci. 2004;8:539–546. doi: 10.1016/j.tics.2004.10.003.

- Budhani S, Marsh AA, Pine DS, Blair RJ. Neural correlates of response reversal: considering acquisition. Neuroimage. 2007;34:1754–1765. doi: 10.1016/j.neuroimage.2006.08.060.

- Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD. Anterior cingulate cortex, error detection, and the online monitoring of performance. Science. 1998;280:747–749. doi: 10.1126/science.280.5364.747.

- Carter CS, Botvinick MM, Cohen JD. The contribution of the anterior cingulate cortex to executive processes in cognition. Rev Neurosci. 1999;10:49–57. doi: 10.1515/revneuro.1999.10.1.49.

- Casey BJ, Forman SD, Franzen P, Berkowitz A, Braver TS, Nystrom LE, Thomas KM, Noll DC. Sensitivity of prefrontal cortex to changes in target probability: a functional MRI study. Hum Brain Mapp. 2001;13:26–33. doi: 10.1002/hbm.1022.

- Cools R, Clark L, Owen AM, Robbins TW. Defining the neural mechanisms of probabilistic reversal learning using event-related functional magnetic resonance imaging. J Neurosci. 2002;22:4563–4567. doi: 10.1523/JNEUROSCI.22-11-04563.2002.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Egner T, Hirsch J. Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nat Neurosci. 2005;8:1784–1790. doi: 10.1038/nn1594.

- Egner T, Delano M, Hirsch J. Separate conflict-specific cognitive control mechanisms in the human brain. Neuroimage. 2007;35:940–948. doi: 10.1016/j.neuroimage.2006.11.061.

- Ernst M, Nelson EE, McClure EB, Monk CS, Munson S, Eshel N, Zarahn E, Leibenluft E, Zametkin A, Towbin K, Blair J, Charney D, Pine DS. Choice selection and reward anticipation: an fMRI study. Neuropsychologia. 2004;42:1585–1597. doi: 10.1016/j.neuropsychologia.2004.05.011.

- First MB, Spitzer RL, Gibbon M. Structured clinical interview for DSM-IV axis I disorders-clinician version (SCID-CV). Washington, DC: American Psychiatric; 1997.

- Gläscher J, Hampton AN, O'Doherty JP. Determining a role for ventromedial prefrontal cortex in encoding action-based value signals during reward-related decision making. Cereb Cortex. 2009;19:483–495. doi: 10.1093/cercor/bhn098.

- Han S, Huettel SA, Dobbins IG. Rule-dependent prefrontal cortex activity across episodic and perceptual decisions: an fMRI investigation of the criterial classification account. J Cogn Neurosci. 2009;21:922–937. doi: 10.1162/jocn.2009.21060.

- Hester R, D'Esposito M, Cole MW, Garavan H. Neural mechanisms for response selection: comparing selection of responses and items from working memory. Neuroimage. 2007;34:446–454. doi: 10.1016/j.neuroimage.2006.08.001.

- Holroyd CB, Nieuwenhuis S, Yeung N, Nystrom L, Mars RB, Coles MG, Cohen JD. Dorsal anterior cingulate cortex shows fMRI response to internal and external error signals. Nat Neurosci. 2004;7:497–498. doi: 10.1038/nn1238.

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- Kringelbach ML, Rolls ET. Neural correlates of rapid reversal learning in a simple model of human social interaction. Neuroimage. 2003;20:1371–1383. doi: 10.1016/S1053-8119(03)00393-8.

- Liu X, Banich MT, Jacobson BL, Tanabe JL. Functional dissociation of attentional selection within PFC: response and non-response related aspects of attentional selection as ascertained by fMRI. Cereb Cortex. 2006;16:827–834. doi: 10.1093/cercor/bhj026.

- MacDonald AW, 3rd, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288:1835–1838. doi: 10.1126/science.288.5472.1835.

- Marsh AA, Blair KS, Vythilingam M, Busis S, Blair RJR. Response options and expectations of reward in decision-making: The differential roles of dorsal and rostral anterior cingulate cortex. Neuroimage. 2007;35:979–988. doi: 10.1016/j.neuroimage.2006.11.044.

- Mitchell DGV, Rhodes RA, Pine DS, Blair RJR. The role of ventrolateral and dorsolateral prefrontal cortex in response reversal. Behav Brain Res. 2008;187:80–87. doi: 10.1016/j.bbr.2007.08.034.

- O'Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci. 2001;4:95–102. doi: 10.1038/82959.

- O'Doherty J, Critchley H, Deichmann R, Dolan RJ. Dissociating valence of outcome from behavioral control in human orbital and ventral prefrontal cortices. J Neurosci. 2003;23:7931–7939. doi: 10.1523/JNEUROSCI.23-21-07931.2003.

- Pochon JB, Riis J, Sanfey AG, Nystrom LE, Cohen JD. Functional imaging of decision conflict. J Neurosci. 2008;28:3468–3473. doi: 10.1523/JNEUROSCI.4195-07.2008.

- Psychology Software Tools. E-Prime (version 1.1). Pittsburgh: Psychology Software Tools; 2002.

- Remijnse PL, Nielen MM, Uylings HB, Veltman DJ. Neural correlates of a reversal learning task with an affectively neutral baseline: an event-related fMRI study. Neuroimage. 2005;26:609–618. doi: 10.1016/j.neuroimage.2005.02.009.

- Rogers RD, Ramnani N, Mackay C, Wilson JL, Jezzard P, Carter CS, Smith SM. Distinct portions of anterior cingulate cortex and medial prefrontal cortex are activated by reward processing in separable phases of decision-making cognition. Biol Psychiatry. 2004;55:594–602. doi: 10.1016/j.biopsych.2003.11.012.

- Rushworth MF, Behrens TE. Choice, uncertainty and value in prefrontal and cingulate cortex. Nat Neurosci. 2008;11:389–397. doi: 10.1038/nn2066.

- Rushworth MF, Buckley MJ, Gough PM, Alexander IH, Kyriazis D, McDonald KR, Passingham RE. Attentional selection and action selection in the ventral and orbital prefrontal cortex. J Neurosci. 2005;25:11628–11636. doi: 10.1523/JNEUROSCI.2765-05.2005.

- Rushworth MF, Behrens TE, Rudebeck PH, Walton ME. Contrasting roles for cingulate and orbitofrontal cortex in decisions and social behaviour. Trends Cogn Sci. 2007;11:168–176. doi: 10.1016/j.tics.2007.01.004.

- Tanaka SC, Balleine BW, O'Doherty JP. Calculating consequences: brain systems that encode the causal effects of actions. J Neurosci. 2008;28:6750–6755. doi: 10.1523/JNEUROSCI.1808-08.2008.

- Walton ME, Croxson PL, Behrens TE, Kennerley SW, Rushworth MF. Adaptive decision making and value in the anterior cingulate cortex. Neuroimage. 2007;36(Suppl 2):T142–T154. doi: 10.1016/j.neuroimage.2007.03.029.