Abstract

To probe how the brain integrates visual motion signals to guide behavior, we analyzed the smooth pursuit eye movements evoked by target motion with a stochastic component. When each dot of a texture executed an independent random walk such that speed or direction varied across the spatial extent of the target, pursuit variance increased as a function of the variance of visual pattern motion. Noise in either target direction or speed increased the variance of both eye speed and direction, implying a common neural noise source for estimating target speed and direction. Spatial averaging was inefficient for targets with >20 dots. Together these data suggest that pursuit performance is limited by the properties of spatial averaging across a noisy population of sensory neurons rather than across the physical stimulus. When targets executed a spatially uniform random walk in time around a central direction of motion, an optimized linear filter that describes the transformation of target motion into eye motion accounted for ∼50% of the variance in pursuit. Filters had widths of ∼25 ms, much longer than the impulse response of the eye, and filter shape depended on both the range and correlation time of motion signals, suggesting that filters were products of sensory processing. By quantifying the effects of different levels of stimulus noise on pursuit, we have provided rigorous constraints for understanding sensory population decoding. We have shown how temporal and spatial integration of sensory signals converts noisy population responses into precise motor responses.

INTRODUCTION

To perceive and respond appropriately to sensory stimuli, our brains must interpret the activity of large populations of cortical neurons. In visual motion processing, target direction and speed are estimated by pooling across the population response in cortical extrastriate area MT. However, the intrinsic variability of neural responses poses a problem. To improve the reliability of sensory estimates, the brain must integrate stimulus information over time, pool the responses of many neurons, or both.

We have approached the problem of sensory estimation in a noisy neural environment by evaluating the behavioral responses generated by a precise sensory-motor system, smooth pursuit eye movements. In pursuit, the eyes move smoothly to intercept and track a moving target and minimize the slip of the target's image across the retina (Rashbass 1961). To track a moving target, pursuit must detect the onset of motion and form an estimate of the target's direction and speed. Our prior work has suggested that trial-by-trial variation in pursuit arises primarily from errors in estimating the sensory parameters of target motion, while the motor side of the system follows those erroneous estimates loyally (Osborne et al. 2005, 2007). The possible sensory origin of trial-by-trial variation in pursuit adds to other evidence that the properties of visually guided smooth eye movements can be direct probes of the features of sensory processing (e.g., Churchland and Lisberger 2001; Kawano and Miles 1986; Lisberger and Westbrook 1985; Masson et al. 1997; Osborne et al. 2005, 2007).

Even for the most unambiguous target motion, there is a fixed level of internal variation that prevents the visual system from estimating the parameters of visual motion perfectly for either pursuit or perception. As part of sensory-motor decoding, the nervous system reduces the impact of noise on sensory estimation by accumulating sensory evidence, both over time (e.g., Britten et al. 1992, 1996; de Bruyn and Orban 1988; Gold and Shadlen 2003; Perrett et al. 1998; Snowden and Braddick 1991) and across large populations of neurons (Georgopoulos et al. 1986; Lee et al. 1988; Treue et al. 2000). It follows that we might gain insight into the properties of sensory-motor decoding by adding a temporally or spatially stochastic component to visual motion, thereby increasing the neural variation in sensory representations of motion, and then evaluating the effects on variation in pursuit. Given the close relationship between the precision of pursuit eye movements and motion perception (Kowler and McKee 1987; Osborne et al. 2005, 2007; Stone and Krauzlis 2003; Watamaniuk and Heinen 1999) and the role of MT in both behaviors (Born et al. 2000; Britten et al. 1992, 1996; Groh et al. 1997; Liu and Newsome 2005; Newsome et al. 1985), knowing how visual motion signals are integrated to generate pursuit eye movements should yield insight into cortical processing of visual motion signals for perception as well.

To investigate integration across time and space for population decoding and visual motion estimation, we measured pursuit eye movements in response to target motion with carefully contrived stochastic components. We created temporal noise by providing spatially uniform motion that changed direction or speed at regular temporal intervals: analysis of the resulting eye movements revealed that pursuit integrates visual motion signals on a time scale of ∼25 ms. We created spatiotemporal noise by providing patches of dots in which each dot underwent an independent random walk, again changing direction or speed at regular temporal intervals (Watamaniuk and Duchon 1992; Watamaniuk and Heinen 1999; Williams and Sekuler 1984). By quantifying the effects of different levels of stimulus noise, we have provided rigorous constraints for understanding sensory population decoding. Our results show how temporal and spatial integration of sensory signals leads to relatively precise motor behavior.

METHODS

Eye movements were recorded from four male rhesus monkeys that had been implanted with a head holder and scleral search coils (Ramachandran and Lisberger 2005) and trained to fixate and track visual targets. Most experiments were performed on two of the monkeys (P and J), and their data comprise most of our figures. Nonetheless, the basic findings were replicated on all four monkeys. In addition, several of the experiments were performed most completely on monkeys Y and D, and their data are substituted in the figures for less complete analyses on monkey J. These instances are explained in the relevant figure legends. During experiments, monkeys sat in a specialized primate chair for 2–3 h with their heads stabilized through the implanted head holder. They received juice or water rewards for tracking visual targets accurately. All procedures had been approved by UCSF's Institutional Animal Care and Use Committee and were in compliance with National Institutes of Health's Guide for the Care and Use of Laboratory Animals.

Experiments consisted of a series of trials, each delivering a single stimulus form and motion trajectory. Trials began with the monkey fixating a stationary spot target at the center of the screen for a random interval of 700–1,200 ms. Targets then appeared either centrally or eccentrically 2.5–3.7° from the point of fixation and immediately began to provide local stimulus motion toward the fixation position. Formally, the target motions we used comprised a base target velocity plus a stochastic component of speed or direction. Thus they provided a stimulus with a fitful, but inexorable, drift in a given direction at a given speed. While remaining mindful of their formal description, we will use the shorthand throughout the paper of calling them “noise” targets with direction or speed noise. The base target speed was 20°/s in most experiments and 10 or 30°/s in a few. We included several different base target directions in each experiment. The directions were typically horizontal and 9° rotated above and below horizontal (0, 180, 9, 171, 189, and 351°) and included smaller angles (i.e., 0, ±3, ±6, and ±9°) when the number of task conditions was tractable. In a few experiments, we collected a limited amount of data at oblique angles with identical results.

Monkeys were rewarded if they maintained eye position within 2° of the fixation spot for the final 200 ms of its illumination and within 3–5° of target center, depending on the form of the target, in the last 400 ms of pursuit. Rewards were not contingent on the tracking performance during the time window of our analysis. In all experiments, target motions were balanced in the two directions along each motion axis, and trials were presented in random order so that the monkeys could not anticipate the direction of the upcoming target motion. Datasets consisted of eye velocity responses to ≥32 and typically >100 repetitions of each specific target motion.

Signals proportional to vertical and horizontal eye velocity were generated by passing voltages proportional to eye position through an analog circuit that differentiated frequencies <25 Hz and rejected higher frequencies with a roll-off of 20 dB/decade. This circuit responds to a step of position with a twitch of velocity having a full width at half-height of 7.5 ms (i.e., the duration of the impulse response function). To confirm that the temporal filters obtained from our experiments were properties of the eye movements and not of the external signal processing, we used a differentiator with a 100-Hz cutoff frequency and a full width at half-height of 3 ms in a few experiments. Eye position and velocity signals were sampled and stored at 1,000 samples/s on each channel.

Visual stimuli

We presented bright, high-contrast visual targets in a dimly lit room against the dark screen of a high-resolution analog display oscilloscope that subtended horizontal and vertical visual angles of 48 × 38°. The oscilloscope was driven by 16-bit D/A converters on a digital signal processing board. This system allowed a nominal spatial resolution of 2^16 (65,536) pixels along each axis and a temporal resolution of 2 ms. “Patch” targets typically consisted of 50 or 99 bright dots positioned randomly within a 4 × 4 or 7 × 7° square aperture, sized to yield a dot density of ∼2–3 dots/deg². “Spot” targets, including the fixation target, were provided by a cluster of typically 12 oscilloscope pixels subtending a 0.4° square. New image frames were painted every 4 ms in all experiments, but the temporal update interval for changes in target motion was varied systematically. Thus smooth motion was ensured by updating the position of a target every 4 ms, but its direction or speed of motion was altered only every N × 4 ms, where N ranged from 1 to 32 in different experiments. Within each frame, the computer system painted individual pixels as quickly as it could, with average inter-pixel intervals of ∼10 µs.

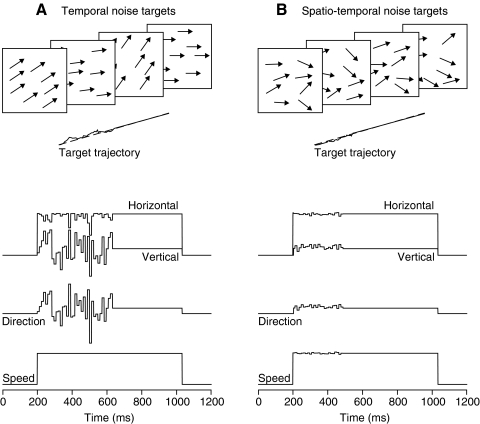

For temporal noise targets, the stimulus appeared eccentric to the fixation point at the end of the fixation interval, and the dots and aperture started to move immediately. Targets moved with a constant-velocity base trajectory to which we added a stochastic perturbation in either direction or speed, so that targets executed a random walk around the base trajectory. The temporal perturbation affected every dot in a patch synchronously for the first 432 ms of target motion and changed the direction or speed of stimulus motion at regular times defined by the update interval (Fig. 1A). Because the dots in temporal noise targets underwent coordinated motion, the trajectory of the pattern was identical to that of the individual dots and varied substantially from segment to segment. Single spot targets were essentially patches of only one dot; patch targets had apertures of 4 × 4° and typically contained 50 dots. The direction or speed of the motion perturbation for each update of a temporal noise target was drawn from a Gaussian distribution and then added to the base horizontal and vertical components of target velocity. For experiments that added a stochastic component to target direction, target speed was held constant, usually at 20°/s, so that the noise appeared only in target direction (Fig. 1A, bottom 2 panels). The situation was reversed for experiments that added a stochastic component to target speed (not pictured).
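The direction-noise case just described can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the stimulus software used in the experiments: the function name, the SD of the Gaussian direction perturbation, and `frames_per_update` (the N of 4-ms frames per update) are hypothetical choices. One Gaussian perturbation is drawn per update interval and held for N frames, speed stays fixed, and velocity is integrated to position at the 4-ms frame rate.

```python
import numpy as np

FRAME_MS = 4  # a new image frame (position update) every 4 ms, as in the text


def temporal_noise_direction_target(base_dir_deg=0.0, speed=20.0, sd_dir_deg=15.0,
                                    frames_per_update=4, duration_ms=432, rng=None):
    """Hypothetical sketch of a temporal direction-noise target.

    All dots share one velocity, so this trajectory is also the pattern
    trajectory. Returns per-frame direction (deg), velocity (deg/s, columns
    H and V), and position (deg) relative to the starting point.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_frames = duration_ms // FRAME_MS
    n_updates = -(-n_frames // frames_per_update)  # ceiling division
    # One Gaussian perturbation per update interval, held for N frames.
    perturb = rng.normal(0.0, sd_dir_deg, size=n_updates)
    direction = base_dir_deg + np.repeat(perturb, frames_per_update)[:n_frames]
    theta = np.deg2rad(direction)
    # Speed is held constant, so the stochastic component appears only in direction.
    vel = speed * np.column_stack([np.cos(theta), np.sin(theta)])
    # Integrate velocity over each 4-ms frame to obtain position.
    pos = np.cumsum(vel, axis=0) * (FRAME_MS / 1000.0)
    return direction, vel, pos
```

With `frames_per_update=4`, the update interval is 16 ms and the 432-ms perturbation period contains 108 frames grouped into 27 segments of constant direction.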

Fig. 1.

Schematic diagrams of the 2 ways that a stochastic component of direction was added to standard target motion. A: in “temporal noise targets,” either a single spot or all the dots within a patch target moved coherently but with direction or speed that changed randomly at regular update intervals. B: in “spatiotemporal noise targets,” each of the dots within a patch target underwent an independent random walk with changes in either direction or speed at regular update intervals. In both cases, the dots were painted on the screen every 2 ms, and the update interval was fixed within a target motion over a range from 4 to 96 ms. From top to bottom in each column, the panels show: schematic of the stochastic motion; target trajectory in 2-dimensional position space; horizontal and vertical velocity of the pattern motion; pattern direction; and pattern speed. “Pattern” motion was defined as the mean direction and speed across all the individual dots in a patch target.

For spatiotemporal noise targets, the patches appeared at the center of the screen when the fixation point was extinguished, and the dots started to move immediately within a stationary aperture. After ∼150 ms, the aperture also began to move. Thus local motion provided the only stimulus for the first 125 ms of pursuit, allowing us to connect our pursuit results to the literature on human psychophysics using similar targets. Whenever a dot moved beyond the edge of the aperture, it was replaced by a fresh dot placed randomly on the opposite edge. Motion included a stochastic component like that used for temporal noise targets except that the perturbations were independent for each dot in the pattern. Our spatiotemporal noise targets are modeled after those introduced by Williams and Sekuler (1984) and used by Watamaniuk and Heinen (1999) and others, rather than after those of Newsome and Paré (1988), in which a fraction of dots moved coherently while others translated randomly. For direction noise, dot directions were chosen randomly with replacement from a uniform distribution about the central direction of motion. The spacing between possible dot directions was always 1°. For speed noise, dot speeds ranged logarithmically rather than linearly about a central value. The speed of each dot, S, was chosen at each update step according to $S = S_0 \cdot 2^{x}/E$, where $S_0$ indicates the mean speed of the target chosen for that trial and x was a random value drawn from a uniform distribution from –N to N with a granularity of 0.05; to obtain different ranges of speed noise, N took on values from 0 (no noise) to 7 in integer steps. E represents the expected value of $2^{x}$, given by $E = \sum_x p(x)\, 2^{x}$, where p(x) is the probability of each value of x. Dividing by E ensured that the average value of the individual dot and pattern speed approached $S_0$ after sufficient time steps.
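The sampling rules for individual dots can be sketched as follows, reading the speed formula literally as $S = S_0 \cdot 2^{x}/E$ with $E = \sum_x p(x)\,2^{x}$. This is a minimal sketch, not the original stimulus code; the function names and argument values are hypothetical. Each call corresponds to one update step, so redrawing every dot independently at every update produces the independent per-dot random walks.

```python
import numpy as np


def draw_dot_directions(n_dots, center_deg, half_range_deg, rng):
    """Directions drawn with replacement from a uniform grid with 1-degree spacing."""
    grid = np.arange(-half_range_deg, half_range_deg + 1, 1.0)
    return center_deg + rng.choice(grid, size=n_dots, replace=True)


def draw_dot_speeds(n_dots, s0, n_range, rng, step=0.05):
    """Speeds S = S0 * 2**x / E, with x uniform on [-N, N] in steps of 0.05."""
    x_grid = np.arange(-n_range, n_range + step / 2, step)
    p = np.full(x_grid.size, 1.0 / x_grid.size)  # uniform p(x)
    expected = np.sum(p * 2.0 ** x_grid)         # E = sum_x p(x) * 2**x
    x = rng.choice(x_grid, size=n_dots, replace=True)
    # Dividing by E makes the expected dot speed equal to S0.
    return s0 * 2.0 ** x / expected
```

Because of the normalization by E, the mean of many drawn speeds approaches $S_0$ even though the distribution of speeds is strongly skewed for large N.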

The variance in the overall pattern direction or speed within one frame of our spatiotemporal noise targets was $\sigma^2_{\text{dots}}/N$, where $\sigma^2_{\text{dots}}$ is the variance of the distribution of directions or speeds of a single dot and N is the number of dots in the pattern. Because there were typically a large number of dots in our patterns, the distribution of pattern directions or speeds was nearly Gaussian. The range of dot directions within a target never exceeded ±90°, so that dots never moved against the central direction of motion. The use of a large number of dots meant that pattern direction had considerably lower variance for the spatiotemporal noise targets (Fig. 1B) than for the temporal noise targets (Fig. 1A). After 432 ms of motion, the stochastic component of motion was eliminated, and the patch of dots moved coherently for the remainder of the trial. For experiments testing the effect of the number of dots or the size of the target, apertures ranged from 3.2 × 3.2 to 7 × 7° with 10–200 dots. With practice, the monkeys pursued spatiotemporal stochastic target motion well. The initial dot motion provided a strong stimulus for pursuit initiation, and the moving dots and aperture sustained excellent pursuit.
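The $\sigma^2_{\text{dots}}/N$ relation can be checked numerically. The sketch below assumes, as a simplification, that pattern direction is the arithmetic mean of the dot direction offsets (reasonable because offsets never exceed ±90°), and uses the fact that a discrete uniform distribution on the integers −R…R has variance R(R + 1)/3. The function name and the 20,000-frame simulation length are hypothetical.

```python
import numpy as np


def pattern_direction_variance(n_dots, half_range_deg, n_frames=20000, seed=0):
    """Return (simulated, predicted) variance of pattern direction in deg^2."""
    rng = np.random.default_rng(seed)
    grid = np.arange(-half_range_deg, half_range_deg + 1, 1.0)
    # Each row is one frame; each column is one dot's direction offset.
    dots = rng.choice(grid, size=(n_frames, n_dots), replace=True)
    simulated = dots.mean(axis=1).var()
    # Variance of a discrete uniform distribution on -R..R is R(R+1)/3.
    sigma2_dots = half_range_deg * (half_range_deg + 1) / 3.0
    return simulated, sigma2_dots / n_dots
```

For a ±90° range, a single dot has $\sigma^2_{\text{dots}} = 2730$ deg² (SD ≈ 52°), whereas a 50-dot pattern has a variance of 54.6 deg² (SD ≈ 7.4°). This is why pattern direction varies far less for spatiotemporal than for temporal noise targets.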

The range of dot directions within a target affects the speed as well as the direction of pattern motion. If the speed of individual dots is held constant, then the projection of each dot's motion along the average trajectory of motion becomes smaller as the range of dot directions increases, and the overall speed of pattern motion falls. At the extreme, a dot moving at 90° relative to the average direction of motion makes no contribution to pattern speed and thus reduces the average speed of the pattern. To assess this directly and verify the absence of other problems in our stimuli, we reconstructed the motion of all dots in each stimulus to compute pattern motion on individual trials. We confirmed that pattern speed is reduced by the cosine of the SD of dot directions and conducted experiments with stimuli that increased dot speed to maintain a constant pattern speed as well as with stimuli that held dot speed constant over all direction ranges. Pattern speed corrections did not turn out to be an important factor in our results.
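The speed loss can be made concrete. For dots of equal speed whose direction offsets are drawn uniformly on a 1° grid, the expected pattern speed is the dot speed multiplied by the mean of cos(offset); the cosine of the SD of dot directions is a close approximation to that factor. This sketch, with a hypothetical function name, computes both values and the dot speed that would keep a 20°/s pattern speed constant (dot speed divided by the factor).

```python
import numpy as np


def pattern_speed_factor(half_range_deg):
    """Exact and approximate fraction of dot speed retained by the pattern."""
    offsets = np.deg2rad(np.arange(-half_range_deg, half_range_deg + 1, 1.0))
    exact = np.cos(offsets).mean()   # mean projection onto the central direction
    approx = np.cos(offsets.std())   # cosine of the SD of dot directions
    return exact, approx


for r in (30, 60, 90):
    exact, approx = pattern_speed_factor(r)
    # Dot speed that would keep pattern speed at 20 deg/s for this range.
    print(r, round(exact, 3), round(approx, 3), round(20.0 / exact, 1))
```

At ±90° the exact factor is ≈0.633 and the cosine-of-SD approximation is ≈0.612, so holding a 20°/s pattern speed would require dots moving at ≈32°/s.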

Data analysis

Eye movements in all trials were inspected prior to analysis. Trials were not analyzed if they contained blinks, saccades during the time window of analysis, or a drift in fixation at a speed in excess of 2°/s. Horizontal and vertical eye position and velocity data were extracted, and target motion was reconstructed for each trial. Trial data were aligned on target motion onset.

To analyze the relationship between eye velocity and the stochastic component of target velocity, we created an array of residuals, or noise vectors, by subtracting the trial-averaged eye velocity from the response on each single trial. The pursuit response can be described as a velocity vector $\vec{v}_E(t) = [v_H(t), v_V(t)]$, where $\vec{v}_E$ indicates an eye velocity vector and the subscripts H and V label horizontal and vertical. The residual for the ith trial is given by $\delta\vec{v}_i(t) = \vec{v}_{E,i}(t) - \langle \vec{v}_E(t) \rangle$, where $\langle \cdots \rangle$ denotes an average across responses to the same target motion. Because the directions of target motion used in our experiments were within 9° of horizontal, we restricted our analysis of eye direction to the vertical component of velocity and our analysis of eye speed to the horizontal component. For all experiments, we also analyzed instantaneous eye direction or speed with essentially identical results.
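The residual computation and the variance measures it supports can be sketched as below. The array layout (trials × time samples, aligned on target-motion onset) and the small-angle conversion of the vertical residual into a direction error, δθ ≈ δv_V / ⟨v_H⟩, are assumptions made for illustration, and the function names are hypothetical. The division by mean horizontal eye velocity is meaningful only after pursuit onset, when that mean is well away from zero.

```python
import numpy as np


def velocity_residuals(v_h, v_v):
    """Subtract the trial-averaged eye velocity from each trial.

    v_h, v_v: (n_trials, n_time) horizontal and vertical eye velocity (deg/s)
    for repetitions of one target motion, aligned on target-motion onset.
    """
    return v_h - v_h.mean(axis=0), v_v - v_v.mean(axis=0)


def direction_and_speed_variance(v_h, v_v):
    """Across-trial variance of eye direction (deg^2) and fractional speed.

    Near-horizontal motion: direction is read from the vertical residual and
    speed from the horizontal residual (small-angle approximation).
    """
    d_h, d_v = velocity_residuals(v_h, v_v)
    mean_h = v_h.mean(axis=0)
    dir_var = np.rad2deg(d_v / mean_h).var(axis=0)
    speed_var = (d_h / mean_h).var(axis=0)
    return dir_var, speed_var
```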

For the temporal noise experiments, we described the temporal relationship between eye and target velocity by computing the linear filter, h(τ), that best predicts the pursuit response to a given target motion (Mulligan 2002; Papoulis 1991; Tavassoli and Ringach 2009; Wiener 1949). The linear filter is defined for continuous signals by:

$$\hat{r}(t) = \int h(\tau)\, s(t-\tau)\, d\tau \qquad (1)$$

where the stimulus s is a fluctuation in target velocity (δvT), the predicted response $\hat{r}$ is a fluctuation in eye velocity (δvE), τ represents the time lag relative to t, and the filter h(τ) is the function with units of 1/time that minimizes the summed squared error between the predicted and actual responses. We determined h(τ) by taking the inverse Fourier transform of the ratio of the cross spectrum between stimulus and response to the power spectrum of the stimulus. We computed the cross spectrum and the power spectrum as the Fourier transforms of, respectively, the cross-correlation function between target and eye velocity and the autocorrelation function of target velocity:

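$$h(\tau) = \frac{1}{2\pi} \int \frac{S_{sr}(\omega)}{S_{ss}(\omega)}\, e^{i\omega\tau}\, d\omega \qquad (2)$$

where $S_{sr}(\omega)$ is the cross spectrum between stimulus and response and $S_{ss}(\omega)$ is the power spectrum of the stimulus.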

The ratio in the integrand, H(ω), is the transfer function: the Fourier transform of the linear filter, which expresses the relationship between eye and target motion as a function of frequency. We computed the temporal filters and transfer functions using a modified version of the Matlab (Natick, MA) function TFE, which is based on Welch's averaged periodogram method. The algorithm divides the input (target velocity fluctuations) and output (eye velocity fluctuations) into overlapping sections that are detrended, multiplied by a Hanning (raised-cosine) window, and padded with zeros to efficiently compute a discrete Fourier transform. The power spectral densities were computed as the squared magnitude of the Fourier transform averaged over the overlapping sections and over trials. Analysis intervals consisted of either the entire duration of the pursuit response, from the onset of target motion to the characteristic time of the first saccade (typically 350–400 ms), or short time windows (125 or 200 ms) starting from each time point in the response. To capture the shape of the filter within a shorter window, we shifted the time window for eye velocity by 50–70 ms relative to the window for target velocity to compensate for the pursuit latency. We avoided over-fitting by testing predictions with data that had not been used to generate the filters: we computed h(τ) from multiple random draws of 70% of the recorded trials and tested each filter against the remaining 30% of the data by comparing the linear prediction to the actual eye velocity fluctuation on each trial. The final estimate of the temporal filter was obtained by averaging across all draws. We also tested the effect of adding a static nonlinearity in the form of a velocity-dependent gain term in front of the integral in Eq. 1 but found that it did not reduce error.
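A minimal Python sketch of this procedure follows. It assumes NumPy and SciPy in place of the modified MATLAB TFE routine used in the study: `scipy.signal.welch` and `scipy.signal.csd` (Hann window, overlapping detrended segments) stand in for Welch's averaged periodogram, spectra are averaged over trials before H(ω) is formed, and the 70/30 split is repeated over random draws. The function names, `nperseg`, and the number of draws are illustrative assumptions. SciPy's `csd` returns conj(X)·Y, so the ratio of the cross spectrum to the stimulus power spectrum is the stimulus-to-response transfer function. Hann-windowed segments slightly attenuate the estimated filter at long lags.

```python
import numpy as np
from scipy.signal import csd, welch


def estimate_filter(stim, resp, fs=1000.0, nperseg=200):
    """Estimate h(tau) such that resp ~ integral of h(tau) * stim(t - tau) dtau.

    stim, resp: (n_trials, n_samples) target- and eye-velocity fluctuations.
    Returns h in FFT lag order (negative lags wrap to the end), units of 1/s.
    """
    kw = dict(fs=fs, window="hann", nperseg=nperseg, detrend="constant", axis=-1)
    _, s_ss = welch(stim, **kw)        # stimulus power spectrum, per trial
    _, s_sr = csd(stim, resp, **kw)    # stimulus-response cross spectrum, per trial
    # Average over trials, then form the transfer function H(f) = S_sr / S_ss.
    transfer = s_sr.mean(axis=0) / s_ss.mean(axis=0)
    # Inverse FFT gives per-sample weights; multiplying by fs gives units of 1/s.
    return np.fft.irfft(transfer, n=nperseg) * fs


def predict(h, stim_trial, fs=1000.0):
    """Discrete version of Eq. 1 using both positive and negative lags of h."""
    half = h.size // 2
    h_centered = np.fft.fftshift(h)    # index j now holds lag j - half
    full = np.convolve(stim_trial, h_centered)
    return full[half:half + stim_trial.size] / fs


def cross_validated_filter(stim, resp, n_draws=20, train_frac=0.7,
                           fs=1000.0, nperseg=200, rng=None):
    """Fit on random 70% draws, score on the held-out 30%, average the filters."""
    rng = np.random.default_rng() if rng is None else rng
    n_train = int(round(train_frac * stim.shape[0]))
    filters, explained = [], []
    for _ in range(n_draws):
        order = rng.permutation(stim.shape[0])
        train, test = order[:n_train], order[n_train:]
        h = estimate_filter(stim[train], resp[train], fs, nperseg)
        pred = np.array([predict(h, s, fs) for s in stim[test]])
        err = resp[test] - pred
        # Fraction of held-out response variance captured by the linear prediction.
        explained.append(1.0 - np.sum(err ** 2) / np.sum(resp[test] ** 2))
        filters.append(h)
    return np.mean(filters, axis=0), float(np.mean(explained))
```

Applied to synthetic data generated by a known smooth kernel plus noise, the averaged filter peaks at the kernel's lag and the held-out fraction of variance explained is high. With real pursuit data, the same score is the quantity that the Abstract reports as ∼50%.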

For spatiotemporal noise experiments, we report mainly the variance of eye direction and speed during the initial ∼125 ms of pursuit, the open-loop interval during which pursuit is driven by feed-forward sensory signals (Lisberger and Westbrook 1985). We also performed two analyses based on work reported in earlier papers (Osborne et al. 2005, 2007).

In one analysis, we used methods described in Osborne et al. (2007) to compute behavioral threshold, the smallest difference in target direction or speed that could be discriminated from pursuit. In brief, we used the covariance of the eye velocity fluctuations, δv(T), from motion onset to time T to compute a signal-to-noise ratio (SNR) at time T. Because SNR scaled with the squared difference in target directions, SNR(T) = K(T)·Δθ², we could define K(T) as SNR(T)/Δθ² averaged across all combinations of target directions. We then defined the behavioral threshold, Δθthresh, as the value of Δθ at which SNR = 1, so that Δθthresh = 1/√K(T); this criterion corresponds to 69% correct at time T. We report the behavioral threshold near the end of the open-loop interval, 100 ms after the onset of pursuit.
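The threshold computation can be sketched under two stated assumptions that go beyond the text here: SNR is taken as the squared Mahalanobis distance between the mean responses to two target directions, using their pooled covariance, and the 69% figure is read as Φ(d′/2) with d′ = √SNR = 1. The function names are hypothetical, and Osborne et al. (2007) should be consulted for the exact estimator.

```python
from itertools import combinations

import numpy as np
from scipy.stats import norm


def snr(resp_a, resp_b):
    """Squared Mahalanobis distance between two response distributions.

    resp_a, resp_b: (n_trials, n_features) eye-velocity samples from motion
    onset to time T for two target directions.
    """
    diff = resp_a.mean(axis=0) - resp_b.mean(axis=0)
    cov = 0.5 * (np.cov(resp_a, rowvar=False) + np.cov(resp_b, rowvar=False))
    return float(diff @ np.linalg.solve(cov, diff))


def direction_threshold(responses, directions_deg):
    """Average K = SNR / dtheta^2 over all direction pairs; threshold = 1/sqrt(K)."""
    k_values = []
    for i, j in combinations(range(len(directions_deg)), 2):
        dtheta = directions_deg[i] - directions_deg[j]
        k_values.append(snr(responses[i], responses[j]) / dtheta ** 2)
    return 1.0 / np.sqrt(np.mean(k_values))


# With d' = sqrt(SNR) = 1 and a criterion midway between the two response
# means, percent correct is Phi(d'/2).
PERCENT_CORRECT_AT_THRESHOLD = norm.cdf(0.5)  # about 0.69
```

If mean responses grow linearly with direction along a fixed gain vector g and the noise is isotropic with unit variance, K = |g|² and the threshold is 1/|g|, which is the behavior the sketch recovers from simulated trials.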

In a second analysis, we used the methods of Osborne et al. (2005) to quantify the trial-by-trial variation in pursuit. To do so, we described each trial's variation from the mean eye velocity vector in terms of three dimensions that accounted for >90% of the trial-by-trial variance. Briefly, we formed a vector model that described each trial's deviation from the mean response in terms of errors in estimating the direction (δθ) and speed (δv) of target motion and plotted the distribution of those errors across trials. We also computed the correlation between direction and speed errors across trials as

$$\rho = \frac{\langle \delta\theta\, \delta v \rangle}{\sqrt{\langle \delta\theta^2 \rangle \langle \delta v^2 \rangle}}$$

where ⟨·⟩ denotes an average across trials.
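The vector model of Osborne et al. (2005) is not spelled out in this section, so the following is only a linearized stand-in under stated assumptions. A small direction error rotates the mean velocity vector, a fractional speed error scales it, and a latency error shifts it in time. Each trial's residual is fit to these three templates by least squares, and ρ is then the normalized product moment of the fitted direction and speed errors across trials. The function names are hypothetical.

```python
import numpy as np


def decompose_errors(v_h, v_v, dt=0.001):
    """Fit each trial's deviation to direction, speed, and latency errors.

    v_h, v_v: (n_trials, n_time) eye velocity over the open-loop interval.
    Returns (d_theta [rad], d_speed [fraction], d_time [s]), one value per trial.
    """
    mean_h, mean_v = v_h.mean(axis=0), v_v.mean(axis=0)
    basis = np.column_stack([
        np.concatenate([-mean_v, mean_h]),          # small rotation by d_theta
        np.concatenate([mean_h, mean_v]),           # fractional gain change d_speed
        -np.concatenate([np.gradient(mean_h, dt),
                         np.gradient(mean_v, dt)]),  # time shift by d_time
    ])
    resid = np.concatenate([v_h - mean_h, v_v - mean_v], axis=1)
    coeffs, *_ = np.linalg.lstsq(basis, resid.T, rcond=None)
    return coeffs[0], coeffs[1], coeffs[2]


def error_correlation(d_theta, d_speed):
    """rho = <d_theta d_speed> / sqrt(<d_theta^2> <d_speed^2>) across trials."""
    return float(np.mean(d_theta * d_speed)
                 / np.sqrt(np.mean(d_theta ** 2) * np.mean(d_speed ** 2)))
```

Because the residuals average to zero across trials and the fit is linear, the fitted errors also average to zero, so this ρ equals the ordinary Pearson correlation.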

RESULTS

To track a moving target with our eyes, the brain must estimate the target's motion direction and speed and convert that estimate to motor commands. Because the responses of individual sensory neurons are variable, pursuit may form its estimate of target motion by integrating or averaging both across time and across neurons that represent the relevant region of the visual field. In the present experiments, we analyze responses to stochastic target motion that introduces fluctuations over time or over space and time, adding noise to the process of sensory estimation. The use of “temporal noise” and “spatiotemporal noise” stimuli allows us to assay temporal and spatial integration of the visual motion signals for pursuit eye movements.

Effect of spatiotemporal stimulus noise on eye motion variance

Monkeys pursued targets produced by patches of dots in which the stimulus varied across space and time because the stochastic component of each dot's motion was selected independently at each update time. For the first 150 ms of stimulus motion, the dots within the patch moved but the aperture remained stationary. Still, the local motion in the pattern provided consistent guidance for pursuit and is a stimulus configuration that generates eye movements nearly identical to those evoked when the aperture also moves (Osborne et al. 2007). The effectiveness of the stimulus with the stationary aperture was expected given the prior demonstration that pursuit is driven by local motion within a patch of dots and not by the motion of the aperture (Priebe et al. 2001).

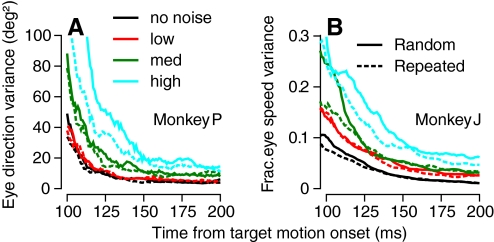

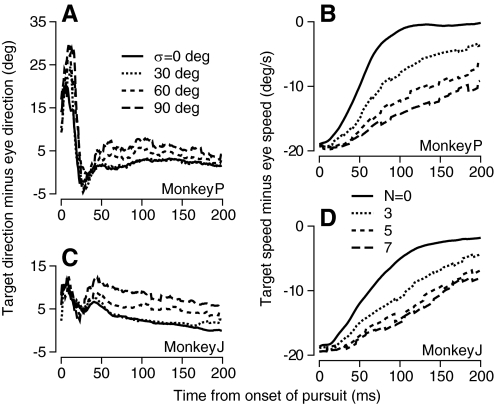

To enable analysis of how and where in the nervous system noise in the visual stimulus affects the command signals for pursuit, we start by confirming that spatiotemporal variation in target motion increased variation in pursuit (Watamaniuk and Heinen 1999). In Fig. 2, we compare the effects of targets that had different levels of spatial noise on the time course of the variance of eye motion during the first 100 ms of pursuit. For both direction (Fig. 2A) and speed (B), the variation in eye movement decreased over the first 150 ms of pursuit for all noise levels. At each time point, the behavioral variance was higher when there were larger amounts of spatiotemporal noise in the stimulus. We quantified the effect of different levels of stimulus noise on pursuit by measuring the variance of eye direction or speed near the end of the “open-loop” interval, 100 ms after the onset of pursuit, and pooling the results across multiple experimental sessions using stimuli with a variety of noise ranges (Fig. 3). We plotted the response variance across trials as a function of the variance of the average pattern motion at any moment, given by $\sigma^2_{\text{dots}}/N$, where $\sigma^2_{\text{dots}}$ is the variance of the uniform distribution from which dot direction or speed values were chosen and N is the number of dots in the pattern. In both monkeys we tested, the variance in pursuit scaled linearly with the added stimulus noise for both direction and speed.

Fig. 2.

Time course of the variance of eye direction for spatiotemporal noise in direction (A), and of the fractional variance of eye speed for speed noise (B), for different levels of spatiotemporal noise in target motion. Each graph plots measurements as a function of time from the onset of target motion. Black, red, green, and cyan traces show results from single experiments for targets without noise and with low, medium, and high levels of noise, corresponding to a ±30, ±60, or ±90° range of noise for direction, and a 22, 32, or 40°/s range of noise (n = 3, 5, 7) for speed. Solid traces show data from trials that used a different random pattern of dot motion in each trial, and dashed traces from trials that repeated the same stochastic noise on each trial.

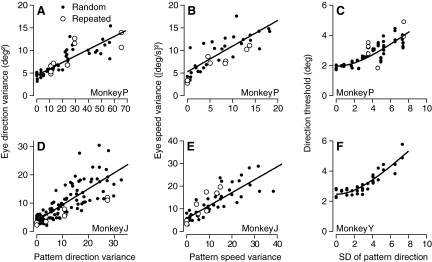

Fig. 3.

Effect of variance of target speed or direction on the variance of pursuit initiation. A, B, D, and E: •, the variance in pursuit eye direction (A and D) or eye speed (B and E) as a function of stimulus variance measured in a 15-ms window 100 ms after the onset of pursuit. ○, results from trials that repeated the same stochastic target motion so that the physical visual stimulus was identical on all trials. Each symbol represents a different data set. —, the regression fit for Eq. 4. Pattern speed and direction variance are defined as the variance of individual dot motion divided by the number of dots in the target. Speed variances are given as a fraction of base target speed, which was 20°/s. C and F: the direction discrimination threshold for pursuit 100 ms after the onset of pursuit is plotted as a function of the SD of pattern direction. • and ○, results of experiments with the same stochastic noise on each trial vs. randomized noise. Data from monkey Y appear in F because its data satisfied the requirements for the analysis of direction threshold that a given experimental day include many repetitions of very few stimuli. Similar data were obtained from monkey J but using a different experimental design. Fewer repetitions of many stimuli were presented on each day, so that the threshold analysis would have required combining data across experimental days for each stimulus noise level.

One feature of the spatiotemporal noise targets is that the pattern direction and speed vary slightly from stimulus to stimulus as a function of the numerical seed used to choose the random component of each dot's motion. As a result, target motions are slightly different on each trial. To determine whether the trial-to-trial difference in pattern motion or the simultaneous presence of different directions or speeds increased variation in pursuit, we interleaved trials that used the same seed repeatedly with trials that used different, randomized seeds. In the “fixed-seed” trials, each dot repeated its individual random walk on every trial so that there was zero variation in pattern motion across trials. The level of behavioral variance was slightly smaller with the repeated stimuli (Fig. 2, dashed lines) than with the random stimuli (solid lines), but the dependence of eye direction and speed variance on target noise level largely remained throughout the first 150 ms of pursuit. In the quantitative analysis of Fig. 3, data for the targets that repeated the same noise sequence (○) lay either within or at the bottom of the distribution of pursuit variances for the targets that used different noise sequences in each trial (•). Although there is no trial-by-trial difference in the pattern trajectory for experiments that repeated the same stochastic dot motions, we plotted those points at $\sigma^2_{\text{dots}}/N$ on the x axis for comparison.

In interpreting our data, one important issue will be the extent to which the conclusions we draw from analysis of pursuit also can be applied to perception. Therefore we have converted our measurements into quantities that are directly related to perceptual judgments. Using the experimental and analytical methods sketched in methods and detailed in Osborne et al. (2007), we estimated the direction discrimination thresholds of pursuit for each level of stimulus noise. As expected given the effects of directional noise on eye velocity variance, the direction discrimination thresholds increased steadily as a function of the magnitude of the directional noise in both monkeys we used for this analysis; Fig. 3, C and F shows thresholds 100 ms after the onset of pursuit. Further, the thresholds obtained by repeating the same dot motions in every trial (Fig. 3C, ○) fell within the distribution for those obtained when the dot motions varied from trial to trial.

Interactions of direction and speed noise

To determine the origin of trial-by-trial variation in pursuit, one important issue will be the extent of generalization of stimulus noise. Does noise in stimulus direction cause increases only in the variance of pursuit direction or does it also affect the variance or amplitude of pursuit speed? We addressed the generalization of variation using methods detailed in Osborne et al. (2005) to express the deviation in the open-loop pursuit response on each trial as sensory errors in estimating the direction, speed, and time of onset of target motion. This analysis operates under the assumption, validated by much of our recent work (Medina and Lisberger 2007; Osborne et al. 2005, 2007; Schoppik et al. 2008), that downstream motor circuits loyally follow sensory errors in estimating target direction and speed. In our earlier work and in the present data, the three axes of direction, speed, and timing errors accounted for >93% of the trial-to-trial variation in the initiation of pursuit.

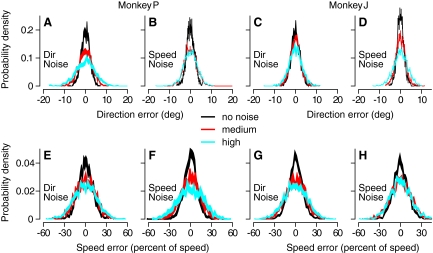

In both monkeys whose data we analyzed, directional noise increased speed errors, and speed noise increased directional errors. Figure 4 shows that the magnitude of the noise in either the speed or direction of target motion affected the distributions of both speed and direction errors for pursuit of spatiotemporal noise targets. In each panel, the distributions broadened as the noise in the visual stimulus was increased from zero (black) to medium (red) to high (cyan), although the effect of speed noise on direction errors was relatively small in monkey P; quantification appears in the legend to Fig. 4. For the direction noise experiments, we increased the speed of dot motion to compensate for a drop in pattern speed with noise level and found no change in the effect of stimulus noise on the distributions of errors. Even though noise in one modality affected sensory estimation errors in both modalities, direction and speed errors were not correlated. We conclude that the sensory estimates of direction and speed that guide the initiation of pursuit eye movements are independent but that they are affected by a common source of noise.

Fig. 4.

Effect of spatiotemporal noise on errors in estimating the direction and speed of target motion in the initiation of pursuit eye movements. Each panel plots a distribution of direction (A–D) or speed (E–H) errors based on analysis of the first 125 ms of pursuit, across a large number of repetitions of the same stimulus. For each monkey, the graphs show the effects of speed and direction noise on both speed and direction errors. Black, red, and cyan curves indicate distributions for no noise, medium noise, and high noise levels in the stochastic component of target motion. The data are shown as ribbons with thicknesses that indicate ±SD from the means. For direction noise with ranges of 0, ±60, and ±80°, δθrms was 1.5 ± 0.05, 2.2 ± 0.08, and 3.5 ± 0.1°, respectively, for monkey P and 1.9 ± 0.03, 2.2 ± 0.04, and 2.6 ± 0.05° for monkey J; δvrms was 9.1 ± 0.2, 13 ± 0.3, and 17 ± 0.3% for monkey P and 10 ± 0.2, 15 ± 0.2, and 15 ± 0.2% for monkey J. For speed noise with ranges of 0, 27, and 40°/s, δvrms was 10 ± 0.2, 13 ± 0.3, and 15 ± 0.3% for monkey P and 10 ± 0.3, 14 ± 0.3, and 16 ± 0.3% for monkey J; δθrms was 1.4 ± 0.1, 1.9 ± 0.1, and 1.7 ± 0.1° for monkey P and 1.5 ± 0.1, 2.1 ± 0.03, and 2.8 ± 0.1° for monkey J. The errors for these numbers represent SDs over random draws of half of the data.

Neither directional nor speed noise had much effect on the mean eye direction (Fig. 5, A and C). The difference between target and eye direction settled close to zero within the first 25 ms of pursuit for all levels of speed or directional noise. In contrast, increases in either directional or speed noise decreased the mean estimates of target speed for pursuit (Fig. 5, B and D). The difference between eye and target speed during the first 150 ms of pursuit decreased quite quickly for noiseless stimuli, but the rate of decrease became more sluggish as the amount of direction or speed noise in the stimulus increased. Unfortunately, we do not know whether the effect of noise level on eye speed during the initiation of pursuit occurs because stimulus-induced neural noise causes a consistent decrease in the estimates of target speed obtained by population decoding or because it decreases the strength of visual-motor transmission (Tanaka and Lisberger 2001).

Fig. 5.

Effect of spatiotemporal noise on the direction and speed accuracy of pursuit. A and C: direction. Numbers in key define the half range of direction values. B and D: speed. Different line types show data for different levels of speed or direction noise. Each graph plots the average difference between the target and eye as a function of time from the initiation of pursuit. Top and bottom panels show data for monkeys P and J.

Spatial integration: effect of number of dots

Knowledge of how local motion signals are pooled across the spatial extent of a target should inform an understanding of how sensory inputs are transformed into motor behavior. We do not anticipate that averaging across space should be perfect because it is unlikely that the nervous system measures each local motion without adding noise or that it pools the measurements perfectly. The goal of the present section was to quantify the failures of spatial averaging as a means of constraining the sources and handling of noise in visual motion processing for pursuit.

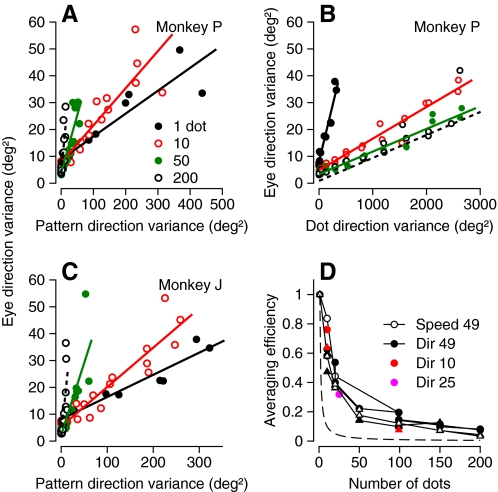

We quantified the extent of spatial integration in the visual motion inputs for pursuit by comparing results from experiments in which spatiotemporal noise targets were composed of different numbers of dots, ranging from 1 (a spot target) to 200. For each number of dots (N), we varied the range of noise in the motion of each individual dot ($\sigma_{dots}^2$) and plotted the variance of eye direction as a function of the variance of the pattern direction, $\sigma_{dots}^2/N$. The slope of the relationship between eye and pattern direction variance depended strongly on the number of dots, increasing as the number of dots increased in very similar ways for the two monkeys (Fig. 6, A and C), and the dependence on dot number persisted throughout pursuit initiation. The results looked quite different if we plotted the variance of eye direction as a function of the variance of single-dot direction (Fig. 6B) instead of as a function of the variance of pattern direction (A and C). Except for the results for a single dot comprising a spot target, the relationships in Fig. 6B were very similar as the number of dots ranged from 10 to 200.
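The distinction between single-dot and pattern variance rests on the fact that the average of N independent directions has 1/N the variance of a single direction. The Monte Carlo sketch below checks that relation numerically. It is illustrative only: it assumes independent Gaussian dot offsets and treats the pattern direction as the arithmetic mean of the dot directions (a simplification of a true vector average); the function name and parameter values are hypothetical.

```python
import numpy as np


def pattern_direction_variance(sigma_dots_deg, n_dots, n_frames=20000, seed=0):
    """Monte Carlo variance of the pattern (mean) direction of n_dots dots.

    Illustrative assumption: each dot's direction offset is an independent
    Gaussian draw with SD sigma_dots_deg, and the pattern direction is the
    arithmetic mean of the dot directions.
    """
    rng = np.random.default_rng(seed)
    # One row per simulated frame, one column per dot.
    offsets = rng.normal(0.0, sigma_dots_deg, size=(n_frames, n_dots))
    pattern = offsets.mean(axis=1)
    return pattern.var()


sigma_dots = 20.0  # hypothetical single-dot SD (deg)
for n in (1, 10, 50, 200):
    simulated = pattern_direction_variance(sigma_dots, n)
    print(f"N={n:3d}  simulated={simulated:7.2f}  sigma_dots^2/N={sigma_dots ** 2 / n:7.2f}")
```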

Fig. 6.

Analysis of spatial averaging for pursuit as a function of the number of moving dots in the targets. A and C: eye direction variance as a function of pattern direction variance. Different symbols/colors indicate results for targets that contain different numbers of dots; lines represent regression fits for Eq. 3. B: eye direction variance plotted as a function of the variance of motion of single dots, using same data as in A. D: effective noise reduction is plotted as a function of the number of dots for the 2 monkeys. Filled and open black symbols show data for direction and speed noise in a 7 × 7° patch, red and purple symbols for direction noise in 3 × 3 and 5 × 5° patches, and triangles and circles for the 2 monkeys. Numbers in the key for B and D indicate the area of the stimulus in deg2. Effective noise reduction is defined in the text.

To understand the significance of the effects illustrated in Fig. 6, A–C, consider expectations if the visual motion input for pursuit were subject to perfect spatial averaging. Then pattern direction variance would predict eye direction variance independent of the number of dots in the target, and the relationships in Fig. 6, A and C, would superpose for different numbers of dots: the slopes should be the same for all numbers of dots. Instead we find that the slopes increase steadily as a function of the number of dots. At the other extreme, if there were no spatial averaging at all, then the variance of dot (rather than pattern) motion would determine behavioral variance: the relationships in Fig. 6, A and C, should separate as a function of the number of dots, but the curves would collapse onto a single relationship when eye direction variance is plotted as a function of the variance of motion of each individual dot (Fig. 6B).
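The two limiting cases can be written down explicitly. The sketch below assumes, for illustration only, that the stimulus-driven part of eye variance equals the variance reaching the motion estimate divided by a hypothetical single-dot constant `s1`; it returns the slope expected against pattern variance (as in Fig. 6, A and C) and against single-dot variance (as in Fig. 6B). Perfect averaging gives the same slope against pattern variance for every N, whereas no averaging gives the same slope against single-dot variance.

```python
def predicted_slopes(n_dots, s1, averaging):
    """Slopes of eye variance vs. (pattern variance, single-dot variance).

    s1 is a hypothetical constant: the inverse slope for a single-dot (spot) target.
    averaging is "perfect" or "none".
    """
    if averaging == "perfect":
        # The estimate sees only the pattern motion, whatever the dot count.
        slope_vs_pattern = 1.0 / s1
    elif averaging == "none":
        # The estimate sees the full single-dot variance, N times the pattern variance.
        slope_vs_pattern = n_dots / s1
    else:
        raise ValueError(f"unknown averaging mode: {averaging!r}")
    # Pattern variance = single-dot variance / N, so the slope against
    # single-dot variance is N times smaller.
    slope_vs_dot = slope_vs_pattern / n_dots
    return slope_vs_pattern, slope_vs_dot


for n in (1, 10, 100):
    print(n, predicted_slopes(n, 2.0, "perfect"), predicted_slopes(n, 2.0, "none"))
```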

Figure 6 shows that there is some, but incomplete, spatial averaging when the patch contains 1–10 dots, but little additional spatial averaging as more dots are added to the stimulus. We quantified the efficiency of spatial averaging by fitting the data for each number of dots with

$$\sigma_{eye}^2 = \sigma_{int}^2 + \frac{N\,\sigma_{pattern}^2}{S_N} \tag{3}$$

where $\sigma_{eye}^2$ represents eye movement variation, $\sigma_{int}^2$ is the fixed level of internal noise in the pursuit system, and $\sigma_{pattern}^2$ is the noise added by the stimulus. $S_N$ quantifies the effective number of samples for stimuli containing N dots and is given by N divided by the slope of the linear fits to data like those shown in Fig. 6, A and C. If averaging were perfect, then $S_N$ should equal N times $S_1$. We defined the “averaging efficiency” as $S_N/(S_1 \cdot N)$, which would be 1 for perfect averaging and 1/N for no averaging. In both monkeys, for both speed and direction (Fig. 6D), averaging efficiency is fair for small numbers of dots, but the data approach 1/N (dashed curve) for textures that contain >20 dots, indicating that averaging efficiency is quite poor. We attribute the reduction in the quality of spatial averaging to the number of dots rather than the dot density because the colored points showing data for smaller patches (and therefore higher dot densities) plot along the trajectories defined by the data for 7 × 7° patches (black symbols). We take the poor spatial averaging as evidence that the variation of pursuit is determined by the noise properties of the central sensory neurons and the limitations of pooling across those neurons. We do not think that the relationship between averaging efficiency and number of dots can be attributed to peculiarities in our visual stimulus, because recording experiments have found rational responses in MT and V1 using the same stimulus system (e.g., Churchland et al. 2004).
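As a sketch of how the fits and the efficiency measure relate, the code below assumes that Eq. 3 has the form $\sigma_{eye}^2 = \sigma_{int}^2 + N\sigma_{pattern}^2/S_N$ (so that $S_N$ = N/slope when eye variance is regressed on pattern variance) and uses ordinary least squares via `numpy.polyfit`. The authors' exact fitting procedure may differ, and the numbers in the usage example are synthetic, not data from this study.

```python
import numpy as np


def fit_eq3(pattern_var, eye_var, n_dots):
    """Fit sigma_eye^2 = sigma_int^2 + N * sigma_pattern^2 / S_N by least squares.

    Returns (sigma_int^2, S_N).
    """
    slope, intercept = np.polyfit(pattern_var, eye_var, 1)
    return intercept, n_dots / slope


def averaging_efficiency(s_n, s_1, n_dots):
    """S_N / (S_1 * N): 1 for perfect averaging, 1/N for no averaging."""
    return s_n / (s_1 * n_dots)


# Synthetic, noise-free example (hypothetical values): internal variance 3 deg^2,
# S_1 = 4 for a spot target, and S_N = 20 for a 10-dot texture.
pattern_var = np.array([0.0, 5.0, 10.0, 20.0, 40.0])
_, s_1 = fit_eq3(pattern_var, 3.0 + 1 * pattern_var / 4.0, n_dots=1)
var_int, s_10 = fit_eq3(pattern_var, 3.0 + 10 * pattern_var / 20.0, n_dots=10)
print(var_int, s_1, s_10, averaging_efficiency(s_10, s_1, 10))
```

With these made-up inputs the 10-dot texture comes out at 50% efficiency: it behaves like 5 independently averaged dots rather than 10.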

Temporal integration with temporal noise

The preceding section showed that spatial averaging in the visual input for pursuit is unexpectedly poor. We next asked if temporal averaging could be an effective mechanism for noise reduction and whether the locus of temporal averaging is more likely to be in the sensory or motor arm of the pursuit circuit.

Monkeys pursued visual targets that had a base direction and speed plus a temporally stochastic element of target motion either in direction or speed. In temporal noise targets, all dots moved uniformly and synchronously with a trajectory that comprised a base direction and speed plus a stochastic element that was drawn at each update interval (typically 12 ms) from a zero-mean Gaussian distribution. We present a complete sequence of results for temporal noise in target direction, obtained by analyzing vertical eye velocity when the base direction of target motion was close to horizontal. We obtained very similar results for temporal noise in target speed and present them only in summary figures.
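The temporal noise stimulus can be sketched as a base direction plus a Gaussian draw that is held constant for one update interval and then redrawn. The code below is a hypothetical reconstruction for illustration, assuming 1-ms sampling and a constant target speed; the function names are ours, not from the authors' stimulus software. It also shows why vertical velocity isolates the stochastic component when the base direction is near horizontal.

```python
import numpy as np


def temporal_noise_direction(duration_ms, base_dir_deg, sd_deg, update_ms=12, dt_ms=1, seed=None):
    """Target direction (deg) sampled every dt_ms: base direction plus zero-mean
    Gaussian noise redrawn once per update interval and held in between."""
    rng = np.random.default_rng(seed)
    n_samples = int(round(duration_ms / dt_ms))
    per_update = int(round(update_ms / dt_ms))
    n_updates = -(-n_samples // per_update)  # ceiling division
    draws = rng.normal(0.0, sd_deg, size=n_updates)
    held = np.repeat(draws, per_update)[:n_samples]
    return base_dir_deg + held


def velocity_components(speed, direction_deg):
    """Horizontal and vertical target velocity for a given speed and direction."""
    theta = np.deg2rad(direction_deg)
    return speed * np.cos(theta), speed * np.sin(theta)


# Hypothetical trial: 20 deg/s rightward motion with 20-deg SD direction noise.
direction = temporal_noise_direction(600, base_dir_deg=0.0, sd_deg=20.0, seed=1)
horizontal, vertical = velocity_components(20.0, direction)
```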

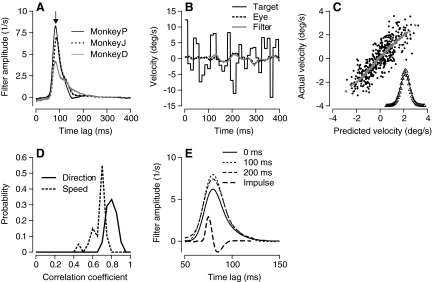

We analyzed the data by computing the linear temporal filter that best captured the relationship between target and eye motion, yielding a description of how target motion at all times t − Δt is weighted to drive the eye movement at time t. For the three monkeys for which results are illustrated in Fig. 7A, the temporal filters had similar shapes, but different amplitudes. In 68 experiments across three monkeys, the filters peaked at lags of 84 ± 4 (SD) ms, corresponding roughly to the latency of pursuit in our monkeys. The width of the filters, defined as the full width at half the maximum amplitude, ranged from 17 to 41 ms across all target conditions with a mean and SD of 25 ± 5 ms. The filters were much longer than the impulse response of the eye velocity differentiator, which was 7.5 ms. The temporal properties of the filters we obtained in monkeys were somewhat narrower than those reported by Tavassoli and Ringach (2009) in humans.
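One standard way to obtain such a filter is least-squares regression of eye velocity on time-lagged copies of target velocity. The sketch below assumes that approach, 1-ms sampling, and a single-peaked filter; it is not necessarily the authors' exact estimator, which may have included regularization or other refinements. The usage example recovers a synthetic Gaussian filter with an 84-ms peak lag and a 25-ms full width at half maximum from a white-noise input and then reads off both quantities.

```python
import numpy as np


def estimate_linear_filter(stim, resp, n_lags):
    """Least-squares FIR filter h with resp[t] ~ sum_k h[k] * stim[t - k]."""
    T = len(stim)
    X = np.zeros((T, n_lags))
    for k in range(n_lags):
        X[k:, k] = stim[: T - k]  # column k holds the stimulus delayed by k samples
    # Discard early rows where the lag window extends before the first sample.
    X, y = X[n_lags - 1 :], resp[n_lags - 1 :]
    h, *_ = np.linalg.lstsq(X, y, rcond=None)
    return h


def peak_lag_and_fwhm(h, dt_ms=1.0):
    """Lag of the filter peak and its full width at half maximum (to within one sample)."""
    i_peak = int(np.argmax(h))
    above = np.flatnonzero(h >= h[i_peak] / 2.0)  # assumes one contiguous lobe
    return i_peak * dt_ms, (above[-1] - above[0] + 1) * dt_ms


# Synthetic check with hypothetical parameters (1-ms bins, white-noise input).
rng = np.random.default_rng(0)
lags = np.arange(150)
sigma = 25.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # Gaussian SD for a 25-ms FWHM
h_true = np.exp(-0.5 * ((lags - 84) / sigma) ** 2)
stim = rng.normal(size=5000)
resp = np.convolve(stim, h_true)[: len(stim)] + rng.normal(scale=0.02, size=len(stim))
h_est = estimate_linear_filter(stim, resp, n_lags=150)
print(peak_lag_and_fwhm(h_est))
```

The real stimulus is piecewise constant over each update interval rather than white, which makes adjacent lags correlated and the estimate noisier; more data or regularization would typically be needed in that case.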

Fig. 7.

Linear filters used to define the temporal integration window of visual motion processing for pursuit. A: examples of filters that described the transformation from target to eye direction for 3 monkeys. The stimulus comprised smooth motion at 20°/s plus a stochastic component of directions drawn from a Gaussian distribution with an SD of 20° and an update interval of 12 ms. Downward arrow indicates a time lag of 84 ms. B: vertical target velocity, actual eye velocity, and eye velocity predicted from the filter plotted as a function of time from the onset of target motion for a single trial. Meaning of the 3 line types is given in the key. C: correlation between actual and predicted eye velocity across all trials and times for a single day's experiment. Black dots plot the data at each time point and gray symbols represent the average of 1,000 adjacent points. In the inset, the triangles show the distribution of residuals defined as actual minus predicted velocity, and the curve shows a Gaussian fit. D: the distribution of linear correlation coefficients (R) between actual and predicted eye velocity across all experiments. Solid and dashed curves show data for temporal noise in direction and speed. E: different line types show filters measured from 125 ms of data starting 0, 100, or 200 ms after the onset of target motion for temporal direction noise. Data from monkey D. The trace defined by the longest dashes plots the eye velocity impulse response for single shock stimulation of the vestibular apparatus (from Brontë-Stewart and Lisberger 1994, Fig. 3A).

We next showed that the linear filter accounted for a high percentage of the variance of smooth pursuit eye velocity and therefore provides a good description of temporal filtering in the visual motion inputs for pursuit. To provide cross-validation, we derived filters repeatedly from a randomly chosen fraction of each dataset and used the filters to predict the average eye velocity on the remaining trials (e.g., Fig. 7B). The correlation between the predicted and actual averages of eye velocity at each time point was excellent (Fig. 7C) with a near-Gaussian distribution of residual errors (Fig. 7C, inset). Across all experiments, the correlations formed tight distributions (Fig. 7D) around means of 0.80 ± 0.05 (SD, n = 48) for directional noise and 0.66 ± 0.07 (n = 20) for speed noise. On average, the linear model accounted for 64% of the variance in pursuit for directional temporal noise and 44% for speed temporal noise. The general features of Fig. 7 did not depend strongly on the spatial form of the target; filters had similar shapes and amplitudes for spot targets versus patches containing 50 dots, although the temporal filters were ∼12 ms broader for spot targets and accounted for ∼10% less variance than did the filters for patch targets (n = 13).
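The percentages of variance follow from squaring the correlation between predicted and actual eye velocity. The snippet below shows that step together with a generic Pearson-correlation helper (the helper name is ours). It assumes the reported percentages were obtained as R²; note that squaring a mean R only approximates the mean of R² across experiments.

```python
import numpy as np


def correlation_and_r2(actual, predicted):
    """Pearson R between actual and predicted eye velocity, and R**2."""
    r = np.corrcoef(actual, predicted)[0, 1]
    return r, r ** 2


# Mean correlations reported in the text, converted to fraction of variance explained.
for label, r in (("direction noise", 0.80), ("speed noise", 0.66)):
    print(f"{label}: R = {r:.2f}, R^2 = {r ** 2:.2f}")
# prints R^2 = 0.64 for direction noise and R^2 = 0.44 for speed noise
```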

Three features of our data argue that the width and amplitude of the temporal filters are consequences of visual processing rather than of the dynamics of the motor plant or the properties of the motor system. First, the shape of the temporal filters was relatively constant over the first several hundred milliseconds of the pursuit response, showing similar shapes and amplitudes when based on data in 125-ms time windows that started at the onset of pursuit and 100 or 200 ms later (Fig. 7E). Because of the frequent stochastic changes in target direction, data from all intervals are effectively open loop. Still, eye velocity consistently increased through the three consecutive analysis intervals; extraretinal signals that contribute to pursuit (e.g., Newsome et al. 1985; Tanaka and Lisberger 2001) could have altered the temporal properties of the filter, but did not. The larger amplitudes of the filters in the second and third intervals may reflect the fact that the motor system was engaged at a higher level in the later analysis windows (Schwartz and Lisberger 1994). Second, the temporal filters obtained from analyzing pursuit eye movements are much broader than the impulse response of the eyeball, estimated as the eye velocity response produced when single shocks are applied in the vestibular apparatus [Fig. 7E, small-amplitude, long-dashed curve (replotted from Brontë-Stewart and Lisberger 1994)]. We verified that the use of a faster differentiator in their study did not create the large difference in the time courses illustrated in Fig. 7E: in a few temporal noise experiments, when we applied the faster differentiator used in the vestibular studies, the temporal filters were only a few milliseconds narrower than those obtained with the slower differentiator.

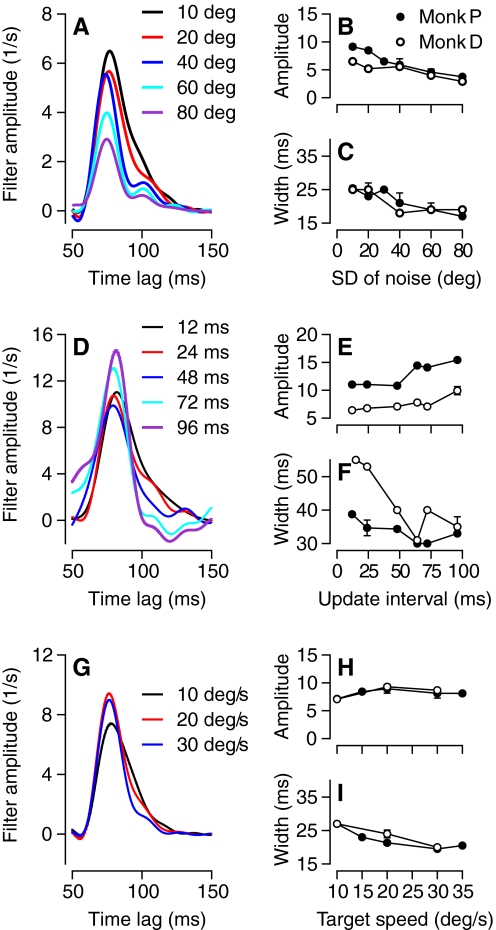

Figure 8 illustrates the third feature of the temporal filters suggesting that their dynamics arise in sensory processing for pursuit: their amplitude and width depended on a number of properties of the stochastic component of the visual stimulus, but only weakly on the mean target (and eye) speed. For example, when the SD of the direction noise increased from 10 to 80° (Fig. 8A), the amplitude of the temporal filter decreased to half of its maximal level (Fig. 8B) and its width shortened by ∼8 ms (Fig. 8C). We observed a similar effect on amplitude when we increased the SD of speed noise, with a smaller effect on the width of the filter. Altering the update interval for the temporal noise also affected the properties of the optimal filter (Fig. 8D), but differently. Increases in update interval caused modest increases in the amplitude of the filter (Fig. 8E) along with decreases in width (Fig. 8F). Thus amplitude and width decreased together as target noise increased (Fig. 8, A–C) but changed in opposite directions as the update interval increased (D–F); these opposite relationships add to the evidence that the filter properties arise in sensory processing rather than being a fixed feature of the motor system. In support of a sensory origin for the temporal filters, we also observed little effect of eye speed on the filter properties: decreases in eye speed caused by decreases in base target speed produced small increases in filter width without consistent changes in filter amplitude (Fig. 8, G–I). The latter observation also provides a control showing that increases in the stochastic component of target motion caused decreases in filter amplitude and width directly rather than indirectly through an undesired decrease in the mean speed of the monkey's eye movements in the analysis interval.

Fig. 8.

Effects of experimental conditions on the shape of temporal filters for pursuit. A: temporal filters for temporal noise drawn from different distributions of directions given by the numbers in the key. Data from monkey D. B and C: the peak amplitude of the filters (B) and the full width at half-amplitude (C) as a function of the SD of the directional noise. D: temporal filters for temporal noise with different update intervals given by the numbers in the key. Data from monkey P. E and F: the peak amplitude of the filters (E) and the full width at half-amplitude (F) as a function of the update interval. G: temporal filters for temporal noise in direction with different base target speeds given by the numbers in the key. Data from monkey P. H and I: the peak amplitude of the filters (H) and the full width at half-amplitude (I) as functions of the base target speed. Different symbols in the graphs show data for different monkeys. Error bars represent SD and are shown only when they were available and larger than the symbols. Data from monkey D appear in the graphs, because he and monkey P participated in the most complete set of parametric variations for computing pursuit filters.

Temporal integration with spatiotemporal noise

The analysis of responses to temporal-noise targets implied that the visual motion input is sampled with a filter having a duration of ∼25 ms. We now obtain independent verification of the duration of the sampling interval by returning to the eye movements evoked by spatiotemporal noise targets and varying both the variance of stimulus speed or direction and the temporal interval between updates of the stochastic motion.

When the update interval was short, the pattern direction variance was effectively averaged away, and the effect of pattern direction variance on eye direction variance was weak (Fig. 9A, ○). As the update interval increased, so did the magnitude of the effect of pattern direction variance on eye direction variance. Figure 9B shows a smaller effect of update interval in the same direction for speed noise. To move from the measurements in Fig. 9 to estimates of the duration of the temporal sampling interval in the visual motion inputs for pursuit, we return to a minor variant of Eq. 3 in which noise within the physical stimulus adds to the system's internal noise level

$$\sigma_{eye}^2 = \sigma_{int}^2 + \frac{\sigma_{pattern}^2}{S} \tag{4}$$

where $\sigma_{eye}^2$ represents eye movement variation, $\sigma_{int}^2$ is the fixed level of internal noise in the pursuit system, and $\sigma_{pattern}^2$ is the noise added by the stimulus. In Eq. 4, S is the inverse of the slope of the regression line and characterizes the number of independent samples taken across time in visual motion processing. Lower slopes indicate that more samples were taken, resulting in greater noise reduction through temporal averaging.

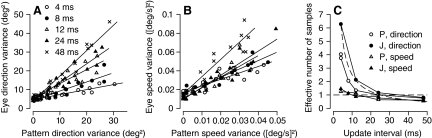

Fig. 9.

Analysis of temporal averaging for pursuit based on varying the update interval for the stochastic component of spatiotemporal noise targets. A: effect of variance of pattern direction on eye direction variance. B: effect of pattern speed variance on eye speed variance. Different symbols show results for different update intervals and lines are regression fits of Eq. 4 to the relationship between eye and pattern variance for each individual update interval. C: effective number of samples, defined as the inverse of the slope of the regression lines from Eq. 4, plotted as a function of update interval. Circles (• and ○) and triangles (▴ and ▵) show data for direction and speed, respectively; open symbols (○ and ▵) and filled symbols (• and ▴) show data for monkeys P and J, respectively. Dashed curve: the prediction of Eq. 4 under the assumption that the temporal integration time is 25 ms, meaning that a single sample would be taken when the update interval was 25 ms.

When we applied Eq. 4 to the data from Fig. 3, A, B, D, and E, we obtained values of $\sigma_{int}$ of 2 and 1.9° for internal noise in pursuit direction in monkeys P and J, respectively, and 2.2 and 2.4°/s (0.11 and 0.12 times target speed) for internal speed noise. We estimated the temporal integration window as the product of the effective number of samples (S) in Eq. 4 and the duration of the update interval between changes in the stochastic component of target motion. The direction noise data (Fig. 3, A and D) imply temporal integration windows of 29 and 20 ms for the two monkeys, in good agreement with the widths of the temporal filters derived in Fig. 7. The temporal integration windows for speed noise (Fig. 3, B and E) were 21 ms for both monkeys, again in reasonable agreement with the widths of the temporal filters derived for temporal noise stimuli.
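The two-step calculation in this paragraph, fitting Eq. 4 and then multiplying S by the update interval, can be sketched as follows. The sketch assumes that Eq. 4 has the form $\sigma_{eye}^2 = \sigma_{int}^2 + \sigma_{pattern}^2/S$ and uses ordinary least squares; the input values are synthetic and chosen only to illustrate the arithmetic, not taken from Fig. 3.

```python
import numpy as np


def fit_eq4(pattern_var, eye_var):
    """Fit sigma_eye^2 = sigma_int^2 + sigma_pattern^2 / S; return (sigma_int, S)."""
    slope, intercept = np.polyfit(pattern_var, eye_var, 1)
    # A negative intercept (possible with noisy data) would leave sigma_int undefined.
    sigma_int = np.sqrt(intercept) if intercept >= 0 else float("nan")
    return sigma_int, 1.0 / slope


def integration_window_ms(s, update_ms):
    """Temporal integration window = effective number of samples x update interval."""
    return s * update_ms


# Synthetic example (hypothetical): sigma_int = 2 deg, S = 2.5, update interval 12 ms.
pattern_var = np.array([0.0, 25.0, 50.0, 100.0])
eye_var = 2.0 ** 2 + pattern_var / 2.5
sigma_int, s = fit_eq4(pattern_var, eye_var)
print(sigma_int, s, integration_window_ms(s, 12.0))
```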

Finally, we returned to the effects of varying the temporal update interval of the stimulus (Fig. 9, A and B) and plotted the effective number of samples (S) as a function of the update interval (Fig. 9C). Pursuit behaved as if only one sample was taken when the update interval was ∼25 ms for both direction and speed noise in both monkeys tested, in agreement with the width of the temporal filters for direction perturbations presented in Figs. 7 and 8 and the analysis in the prior paragraph for the data in Fig. 3. The data for direction noise at all update intervals agreed well with interpreting S in Eq. 4 as the number of independent samples in time, formalized by the dashed curve in Fig. 9C, which plots the temporal integration window of pursuit divided by the update interval, $\Delta t_{int}/\Delta u$, as a function of update interval, under the assumption that $\Delta t_{int}$ = 25 ms. However, the data for speed noise consistently indicated that pursuit used small numbers of samples at all update intervals and in that sense disagreed with the predictions of Eq. 4. We do not have any explanation for these minor differences in the responses to directional and speed noise, but we note that differences between effects of speed and directional noise also appear in the data of Watamaniuk and Duchon (1992). They found very little impact of spatiotemporal speed noise on performance in perceptual discrimination experiments; we were able to obtain consistent effects in our monkey subjects by using a wider range of speeds.
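The dashed-curve prediction amounts to one line of arithmetic: if pursuit integrates over a fixed window $\Delta t_{int}$, the number of independent stimulus samples it sees is $\Delta t_{int}/\Delta u$. The sketch assumes $\Delta t_{int}$ = 25 ms, as in the text; the update intervals listed are illustrative.

```python
import numpy as np


def predicted_samples(update_ms, t_int_ms=25.0):
    """Independent samples per integration window: Delta t_int / Delta u."""
    return t_int_ms / np.asarray(update_ms, dtype=float)


update_intervals = np.array([6.0, 12.0, 25.0, 50.0])  # ms, illustrative values
for du, s in zip(update_intervals, predicted_samples(update_intervals)):
    print(f"update interval {du:4.0f} ms -> {s:.2f} samples")
```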

DISCUSSION

The smooth pursuit system transforms visual motion signals into commands for eye movement with the goal of eliminating retinal image motion. It does so quite effectively even though noise-free stimuli evoke responses with considerable trial-to-trial variation in visual motion pathways. The eye velocity of smooth pursuit also varies from trial to trial, but the magnitude of the variation is relatively small. Importantly, little of the variation in pursuit is “noise”; instead, most of it can be understood in terms of sensory errors in estimating the direction, speed, or time of onset of target motion (Osborne et al. 2005). To produce a behavior that has relatively small variation in the face of neural responses that have considerable variation, pursuit must integrate the noisy responses of visual motion neurons, pooling visual signals across both space and time.

Temporal averaging for pursuit

Temporal integration of visual information is well known for perceptual decisions, where discriminability of motion signals improves over viewing times of 100–200 ms, longer if the signal-to-noise ratio of the stimulus is low (e.g., de Bruyn and Orban 1988; Gold and Shadlen 2003; Morgan et al. 1983; Snowden and Braddick 1991; Watamaniuk and Sekuler 1992; Watamaniuk et al. 1989). For pursuit, only 100 ms of visual motion occurs before the onset of pursuit, and the response to that brief motion tracks the target motion accurately on average and quite precisely. Further, directional and speed precision evolve almost to their asymptotic limits within the first 100 ms of pursuit (Osborne et al. 2007). Therefore pursuit, like perception, must extract the parameters of object motion from a noisy neural background over a short time frame.

Two features of the eye velocity evoked by temporal noise in target motion imply that pursuit integrates across a time window of ∼25 ms. First, temporal filters that were ∼25 ms wide at half-amplitude provided the best description of the transformation from temporal noise target motion to eye velocity. Second, pursuit behaved as if taking a single sample of the visual stimulus when the update interval between changes in the stochastic component of motion was ∼25 ms; shorter update intervals allowed more samples. Converting the temporal filters to the frequency domain implies that pursuit reduces noise by suppressing visual inputs at frequencies >10 Hz. This seems like a well-planned compromise: pursuit integrates across a long enough temporal window to lend some noise immunity, but its frequency response still is well-matched to the temporal-frequency spectrum of natural moving images (Dong and Atick 1995).
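To see how a ∼25-ms filter translates into a frequency cutoff, the sketch below idealizes the filter as a Gaussian with a 25-ms full width at half maximum (an assumption; the measured filters are not claimed to be Gaussian) and computes its amplitude response both in closed form and with `numpy.fft.rfft`. Under this idealization the gain is about 0.8 at 10 Hz and falls to one half near 18 Hz.

```python
import numpy as np

FWHM_S = 0.025  # assumed filter width at half maximum (25 ms)
SIGMA_S = FWHM_S / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # equivalent Gaussian SD (s)


def gaussian_gain(freq_hz):
    """Amplitude response of a unit-area Gaussian filter with SD SIGMA_S."""
    return np.exp(-2.0 * (np.pi * SIGMA_S * np.asarray(freq_hz)) ** 2)


# Frequency at which the amplitude response falls to one half (closed form).
half_gain_hz = np.sqrt(np.log(2.0) / 2.0) / (np.pi * SIGMA_S)

# Numerical check: sample the kernel at 1 ms and take its discrete Fourier transform.
dt = 0.001
t = np.arange(-100, 101) * dt
kernel = np.exp(-0.5 * (t / SIGMA_S) ** 2)
kernel /= kernel.sum()  # unit gain at 0 Hz
freqs = np.fft.rfftfreq(1000, d=dt)  # 1-Hz resolution with 1000-point padding
numeric_gain = np.abs(np.fft.rfft(kernel, n=1000))

print(f"half-gain frequency: {half_gain_hz:.1f} Hz")
print(f"gain at 10 Hz: analytic {gaussian_gain(10.0):.2f}, numeric {numeric_gain[10]:.2f}")
```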

Several lines of evidence suggest that temporal averaging for pursuit occurs during sensory processing and that the duration and shape of the temporal filters for pursuit arise in the sensory system. First, the duration of the filters that transformed visual motion into eye motion is much longer than the impulse response of the oculomotor plant as assessed by single shock stimulation of the vestibular apparatus. Therefore we suggest that the shape of the temporal filters for pursuit arises in neural rather than mechanical processing. Second, the amplitude and width of the filters depended on the visual properties of the stimulus rather than on the eye movement. Decreases in filter amplitude with increased direction or speed range in the visual stimulus could be caused by a broadly tuned divisive normalization mechanism (e.g., Heeger 1993) that is derived from an increasingly broad population of neurons. Degrading the visual stimulus also could decrease filter amplitude by decreasing the gain of visual-motor transmission downstream from MT (Goldreich et al. 1992; Tanaka and Lisberger 2001). However, altering the gain of visual-motor transmission directly by changing target speed did not have consistent effects on filter amplitude.

If, as we propose, the temporal features of pursuit filters reside in sensory processing, then our data imply that all subsequent filtering has faster temporal properties than does sensory processing. Therefore pursuit is driven by samples of visual motion signals taken in windows of duration ∼25 ms. Given that the average MT neuron emits ∼11.5 spikes in the first 150 ms of a preferred visual motion stimulus (Huang and Lisberger 2009; Osborne et al. 2004), integration across 25 ms means that pursuit is sampling on average 1 to 2 spikes at a time from each neuron. Thus motion integration must operate on the basis of rather sparse spike trains from each individual neuron. Combining across many neurons would allow the system to count a substantial number of spikes within the integration interval, but the small number of spikes for each neuron in a given integration interval would allow alternate codes based on combinations of spikes and silence across neurons with similar tuning properties (Osborne et al. 2008; Reich et al. 2001).
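The spike-count arithmetic is easy to check. The sketch below scales the mean count of ∼11.5 spikes in 150 ms to a 25-ms window, assuming a constant firing rate over that period. The Poisson probabilities that follow are an added illustrative assumption, not a claim about MT spike statistics made in this paper; they simply show how often a single neuron would contribute zero, one, or a few spikes to one window.

```python
import math

SPIKES_IN_150_MS = 11.5  # mean MT count in the first 150 ms, from the text
WINDOW_MS = 25.0         # temporal integration window for pursuit

# Assumes a constant rate over the first 150 ms.
expected_per_window = SPIKES_IN_150_MS * WINDOW_MS / 150.0  # about 1.9 spikes


def poisson_pmf(k, mean):
    """Probability of exactly k spikes under a hypothetical Poisson count model."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


for k in range(5):
    print(k, round(poisson_pmf(k, expected_per_window), 3))
```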

Changes in the response characteristics of neurons in the retina, LGN, and V1 have been shown to depend on the variance and temporal correlation structure of stimuli. Therefore processing in these structures may provide a neural basis for the behavioral filters we observed (e.g., Chander and Chichilnisky 2001; Clifford et al. 2007; Hamamoto et al. 1994; Kim and Rieke 2001; Lesica et al. 2007; Schwartz and Simoncelli 2001; Sharpee et al. 2006; Smirnakis et al. 1997; Victor 1987; Wark et al. 2009). Finally, the width of the filters we obtained for pursuit agrees well with the narrowest filters obtained in MT neurons by Borghuis et al. (2003) using stimuli quite similar to ours, and is slightly shorter than that obtained by Bair and Movshon (2004) by triggering averages of a stochastic stimulus on the spikes of MT neurons. We conclude that a 25-ms filter width likely is already evident in the responses of MT neurons and sets the duration of temporal integration for pursuit.

Spatial averaging for pursuit

We think of spatial averaging as a way to combine stimuli that appear in different places in the visual field, but our data imply that spatial averaging of the visual input for pursuit eye movements is quite inefficient. Eye direction and speed variances decrease as a function of the number of moving elements in the stimulus, but less than expected from perfect averaging of the dot motions. For stimuli that contain only 10 dots, the efficiency of spatial averaging is half of the theoretical prediction that eye motion variance should decrease in inverse proportion to the number of dots. For stimuli with ≥50 dots, the efficiency of spatial averaging is only 10–20% of the theoretical prediction.
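Another way to read these efficiencies is as an effective number of independently averaged dots, efficiency × N (equivalently $S_N/S_1$ in the notation of Eq. 3). The arithmetic below uses the approximate efficiencies quoted in this paragraph; the helper name is ours. On this reading, a 10-dot texture and a 50-dot texture both behave like roughly 5 to 10 independent dots, so adding dots beyond about 10 buys little additional noise reduction.

```python
def effective_dots(efficiency, n_dots):
    """Number of independent dots an ideal averager would need to match the observed
    reduction in stimulus-driven variance: efficiency * N (= S_N / S_1)."""
    return efficiency * n_dots


print(effective_dots(0.5, 10))                           # 10 dots at ~50% efficiency
print(effective_dots(0.1, 50), effective_dots(0.2, 50))  # 50 dots at 10-20% efficiency
```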

At some level, we must think of noise reduction in sensory processing as a consequence of integrating across a population of noisy neural responses rather than across the physical stimulus itself. A number of our central observations underscore the advantages of thinking in terms of noise reduction as part of population decoding, where the noise across the population responses varies as a function of, but not in proportion to, the noise present in the stimulus. First, pursuit variance is nonzero even for nominally noise-free stimuli (Osborne et al. 2005, 2007), a fact that can be explained only by neural noise (Huang and Lisberger 2009). Second, spatiotemporal noise in either speed or direction affects sensory estimation in both. Separable effects would be expected if pursuit were averaging across the physical stimulus, whereas shared effects would be expected if target speed and direction were estimated from a neural population response that acts as a shared noise source, for example MT. Third, pursuit variation increases with the range of dot motions in the target even when each repetition of a given level of stimulus noise contains the exact same dot motions. If pursuit were estimating the direction and speed of each dot and averaging across the many dots in the stimulus, then we would not expect any increment above the baseline level of pursuit variation. Instead, it seems that the mere presence of noise in the stimulus increases the noise in the neural population response. Finally, the variance of pursuit does not scale in inverse proportion to the number of dots in the stimulus as expected if pursuit were averaging across the dot motions in the stimulus.

Correlated noise in the responses of MT neurons (Bair et al. 2001; Cohen and Newsome 2008; Zohary et al. 1994) can explain the magnitude of variation in pursuit direction and speed (Huang and Lisberger 2009). We also know from preliminary studies (Yang and Lisberger 2009) that spatiotemporal noise alters the direction tuning of individual MT neurons. We suspect that spatiotemporal noise has its biggest effect on the neuron-by-neuron variation in firing across the MT population rather than on the trial-by-trial variation of the individual neurons. To provide more quantitative interpretations, we will need to document a large sample of MT responses (and neuron-neuron correlations) for both noise-free and spatiotemporal noise targets. Only then will we be able to create and decode a realistic model MT population response. Neural data also will allow us to determine whether the properties of spatial integration expressed in the pursuit response are related to the saturation of the responses of MT neurons at very low numbers of dots (Snowden et al. 1992).

Common spatial integration mechanisms for pursuit and perception

Watamaniuk and Heinen (1999) used a spatiotemporal noise stimulus like ours to show parallel degradation of direction precision for pursuit and perception, according to the model described by Eq. 3. Their work implies that noise in the sensory stimulus creates a source of neural variation that is shared by perception and behavior. Our research uses measures of pursuit eye movements only. However, the advantages of pursuit as a behavioral endpoint have allowed us to go further in our understanding of temporal and spatial integration for motion estimation. We have been able to define the temporal window of motion integration for pursuit and to strengthen the case that temporal integration for pursuit is localized in sensory processing. Further, we have focused on explaining pursuit variation in terms of noise in the sensory representation of motion rather than noise arising in the motor system or in the physical properties of the sensory stimulus itself. We think that our conclusions from measures of pursuit variation also will apply to visual motion integration for perception. Because direction and speed thresholds are similar for pursuit and perception, for both noise-free and noisy stimuli, we expect that explanations of the neural basis of sensory estimation for perception will draw heavily on our ultimate understanding of the motor effects revealed here.

Grants

This research was supported by National Eye Institute Grant EY-03878 and the Howard Hughes Medical Institute.

ACKNOWLEDGMENTS

We thank S. Tokiyama, E. Montgomery, and K. MacLeod for assistance with animal monitoring and maintenance, S. Ruffner for computer programming, and the late B. Wright for helpful conversations about numerical methods.

REFERENCES

- Bair W, Movshon JA. Adaptive temporal integration of motion in direction-selective neurons in macaque visual cortex. J Neurosci 24: 7305–7323, 2004

- Bair W, Zohary E, Newsome WT. Correlated firing in macaque visual area MT: time scales and relationship to behavior. J Neurosci 21: 1676–1697, 2001

- Borghuis BG, Perge JA, Vajda I, van Wezel RJ, van de Grind WA, Lankheet MJ. The motion reverse correlation (MRC) method: a linear systems approach in the motion domain. J Neurosci Methods 123: 153–166, 2003

- Born RT, Groh JM, Zhao R, Lukasewycz SJ. Segregation of object and background motion in visual area MT: effects of microstimulation on eye movements. Neuron 26: 725–734, 2000

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual response of neurons in macaque MT. Vis Neurosci 13: 87–100, 1996

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci 12: 4745–4765, 1992

- Brontë-Stewart HM, Lisberger SG. Physiological properties of vestibular primary afferents that mediate motor learning and normal performance of the vestibulo-ocular reflex in monkeys. J Neurosci 14: 1290–1308, 1994

- Chander D, Chichilnisky EJ. Adaptation to temporal contrast in primate and salamander retina. J Neurosci 21: 9904–9916, 2001

- Churchland MM, Lisberger SG. Shifts in the population response in the middle temporal visual area parallel perceptual and motor illusions produced by apparent motion. J Neurosci 21: 9387–9402, 2001

- Churchland MM, Priebe NJ, Lisberger SG. Comparison of the spatial limits on direction selectivity in visual areas MT and V1. J Neurophysiol 93: 1235–1245, 2005

- Clifford CW, Webster MA, Stanley GB, Stocker AA, Kohn A, Sharpee TO, Schwartz O. Visual adaptation: neural, psychological and computational aspects. Vision Res 47: 3125–3131, 2007

- Cohen MR, Newsome WT. Context-dependent changes in functional circuitry in visual area MT. Neuron 60: 162–173, 2008

- de Bruyn B, Orban GA. Human velocity and direction discrimination measured with random dot patterns. Vision Res 28: 1323–1335, 1988

- Dong DW, Atick JJ. The statistics of time-varying images. Network: Comput Neural Syst 6: 345–358, 1995

- Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science 233: 1416–1419, 1986

- Gold JI, Shadlen MN. The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. J Neurosci 23: 632–651, 2003

- Goldreich D, Krauzlis RJ, Lisberger SG. Effect of changing feedback delay on spontaneous oscillations in smooth pursuit eye movements of monkeys. J Neurophysiol 67: 625–638, 1992

- Groh JM, Born RT, Newsome WT. How is a sensory map read out? Effects of microstimulation in visual area MT on saccades and smooth pursuit eye movements. J Neurosci 17: 4312–4330, 1997

- Hamamoto J, Cheng H, Yoshida K, Smith EL, 3rd, Chino YM. Transfer characteristics of lateral geniculate nucleus X-neurons in the cat: effects of temporal frequency. Exp Brain Res 98: 191–199, 1994

- Heeger DJ. Modeling simple-cell direction selectivity with normalized, half-squared, linear operators. J Neurophysiol 70: 1885–1898, 1993

- Huang X, Lisberger SG. Noise correlations in cortical area MT and their potential impact on trial-by-trial variation in the direction and speed of smooth pursuit eye movements. J Neurophysiol 101: 3012–3030, 2009

- Kawano K, Miles FA. Short-latency ocular following responses of monkey. II. Dependence on a prior saccadic eye movement. J Neurophysiol 56: 1355–1380, 1986

- Kim KJ, Rieke F. Temporal contrast adaptation in the input and output signals of salamander retinal ganglion cells. J Neurosci 21: 287–299, 2001

- Kowler E, McKee SP. Sensitivity of smooth eye movement to small differences in target velocity. Vision Res 27: 993–1015, 1987

- Lee C, Rohrer WH, Sparks DL. Population coding of saccadic eye movements by neurons in the superior colliculus. Nature 332: 357–360, 1988

- Lesica NA, Jin J, Weng C, Yeh CI, Butts DA, Stanley GB, Alonso JM. Adaptation to stimulus contrast and correlations during natural visual stimulation. Neuron 55: 479–491, 2007

- Lisberger SG, Movshon JA. Visual motion analysis for pursuit eye movements in area MT of macaque monkeys. J Neurosci 19: 2224–2246, 1999

- Lisberger SG, Westbrook LE. Properties of visual inputs that initiate horizontal smooth pursuit eye movements in monkeys. J Neurosci 5: 1662–1673, 1985

- Liu J, Newsome WT. Correlation between visual perception and neural activity in the middle temporal visual area. J Neurosci 25: 711–722, 2005

- Masson GS, Busettini C, Miles FA. Vergence eye movements in response to binocular disparity without depth perception. Nature 389: 283–286, 1997

- Medina JF, Lisberger SG. Variation, signal and noise in cerebellar sensory-motor processing for smooth pursuit eye movements. J Neurosci 27: 6832–6842, 2007

- Medina JF, Lisberger SG. Links from complex spikes to local plasticity and motor learning in the cerebellum of awake-behaving monkeys. Nat Neurosci 11: 1185–1192, 2008

- Morgan MJ, Watt RJ, McKee SP. Exposure duration affects the sensitivity of vernier acuity to target motion. Vision Res 23: 541–546, 1983

- Mulligan JB. Sensory processing delays measured with the eye-movement correlogram. Ann NY Acad Sci 956: 476–478, 2002

- Newsome WT, Paré EB. A selective impairment of motion perception following lesions of the middle temporal visual area (MT). J Neurosci 8: 2201–2211, 1988

- Newsome WT, Wurtz RH, Dürsteler MR, Mikami A. Deficits in visual motion processing following ibotenic acid lesions of the middle temporal visual area of the macaque monkey. J Neurosci 5: 825–840, 1985

- Osborne LC, Bialek W, Lisberger SG. Time course of information about motion direction in visual area MT of macaque monkeys. J Neurosci 24: 3210–3222, 2004

- Osborne LC, Hohl S, Bialek W, Lisberger SG. Time course of precision in smooth-pursuit eye movements of monkeys. J Neurosci 27: 2987–2998, 2007

- Osborne LC, Lisberger SG, Bialek W. A sensory source for motor variation. Nature 437: 412–416, 2005

- Osborne LC, Palmer SE, Lisberger SG, Bialek W. The neural basis for combinatorial coding in a cortical population response. J Neurosci 28: 13522–13531, 2008

- Papoulis A. Probability, Random Variables and Stochastic Processes (3rd ed.). New York: McGraw-Hill, 1991

- Perrett DI, Oram MW, Ashbridge E. Evidence accumulation in cell populations responsive to faces: an account of generalization of recognition without mental transformations. Cognition 67: 111–145, 1998

- Priebe NJ, Churchland MM, Lisberger SG. Reconstruction of target speed for the guidance of pursuit eye movements. J Neurosci 21: 3196–3206, 2001

- Ramachandran R, Lisberger SG. Normal performance and expression of learning in the vestibulo-ocular reflex (VOR) at high frequencies. J Neurophysiol 93: 2028–2038, 2005

- Rashbass C. The relationship between saccadic and smooth tracking eye movements. J Physiol 159: 326–338, 1961

- Reich DS, Mechler F, Victor JD. Independent and redundant information in nearby cortical neurons. Science 294: 2566–2568, 2001

- Schoppik D, Nagel KI, Lisberger SG. Cortical mechanisms of smooth eye movements revealed by dynamic covariations of neural and behavioral responses. Neuron 58: 248–260, 2008

- Schwartz JD, Lisberger SG. Initial tracking conditions modulate the gain of visuo-motor transmission for smooth pursuit eye movements in monkeys. Vis Neurosci 11: 411–424, 1994

- Schwartz O, Simoncelli EP. Natural signal statistics and sensory gain control. Nat Neurosci 4: 819–825, 2001

- Sharpee TO, Sugihara H, Kurgansky AV, Rebrik SP, Stryker MP, Miller KD. Adaptive filtering enhances information transmission in visual cortex. Nature 439: 936–942, 2006

- Smirnakis SM, Berry MJ, Warland DK, Bialek W, Meister M. Adaptation of retinal processing to image contrast and spatial scale. Nature 386: 69–73, 1997

- Snowden RJ, Braddick OJ. The temporal integration and resolution of velocity signals. Vision Res 31: 907–914, 1991

- Snowden RJ, Treue S, Andersen RA. The responses of neurons in areas V1 and MT of the alert rhesus monkey to moving random dot patterns. Exp Brain Res 88: 389–400, 1992

- Stone LS, Krauzlis RJ. Shared motion signals for human perceptual decisions and oculomotor actions. J Vis 3: 725–736, 2003

- Tanaka M, Lisberger SG. Regulation of the gain of visually guided smooth-pursuit eye movements by frontal cortex. Nature 409: 191–194, 2001

- Tavassoli A, Ringach DL. Dynamics of smooth pursuit maintenance. J Neurophysiol 102: 110–118, 2009

- Treue S, Hol K, Rauber HJ. Seeing multiple directions of motion-physiology and psychophysics. Nat Neurosci 3: 270–276, 2000

- Victor JD. The dynamics of the cat retinal X cell center. J Physiol 386: 219–246, 1987

- Wark B, Fairhall A, Rieke F. Timescales of inference in visual adaptation. Neuron 61: 750–761, 2009

- Watamaniuk SNJ, Duchon A. The human visual system averages speed information. Vision Res 32: 931–941, 1992

- Watamaniuk SNJ, Sekuler R. Temporal and spatial integration in dynamic random-dot stimuli. Vision Res 32: 2341–2347, 1992

- Watamaniuk SNJ, Sekuler R, Williams DW. Direction perception in complex dynamic displays: the integration of direction information. Vision Res 29: 47–59, 1989

- Watamaniuk SNJ, Heinen SJ. Human smooth pursuit direction discrimination. Vision Res 39: 59–70, 1999

- Wiener N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series. New York: Wiley, 1949

- Williams DW, Sekuler R. Coherent global motion percepts from stochastic local motion. Vision Res 24: 55–62, 1984