Abstract

Theories of instrumental learning aim to elucidate the mechanisms that integrate success and failure to improve future decisions. One computational solution consists of updating the value of choices in proportion to reward prediction errors, which are potentially encoded in dopamine signals. Accordingly, drugs that modulate dopamine transmission have been shown to affect instrumental learning performance. However, whether these drugs act on conscious or subconscious learning processes remains unclear. To address this issue, we examined the effects of dopamine-related medications in a subliminal instrumental learning paradigm. To assess the generality of dopamine involvement, we tested both dopamine enhancers in Parkinson's disease (PD) and dopamine blockers in Tourette's syndrome (TS). During the task, patients had to learn from monetary outcomes the expected value of a risky choice. The different outcomes (rewards and punishments) were announced by visual cues, which were masked such that patients could not consciously perceive them. Boosting dopamine transmission in PD patients improved reward learning but worsened punishment avoidance. Conversely, blocking dopamine transmission in TS patients favored punishment avoidance but impaired reward seeking. These results thus extend previous findings in PD to subliminal situations and to another pathological condition, TS. More generally, they suggest that pharmacological manipulation of dopamine transmission can subconsciously drive us to either get more rewards or avoid more punishments.

Keywords: dopamine, instrumental learning, subliminal perception, reward, punishment

How we learn from success and failure is a long-standing question in neuroscience. Instrumental learning theories explain how outcomes can be used to modify the value of choices, such that better decisions are made in the future. A basic learning mechanism consists of updating the value of the chosen option according to a reward prediction error, which is the difference between the actual and the expected reward (1, 2). This learning rule, using prediction error as a teaching signal, has provided a good account of instrumental learning in a variety of species including both human and nonhuman primates (3, 4). Single-cell recordings in monkeys suggest that reward prediction errors are encoded by the phasic discharge of dopamine neurons (5, 6). In humans, dopamine-related drugs have been shown to bias prediction error encoding in the striatum to modulate reward-based learning (7). One of these drugs, levodopa (a metabolic precursor of dopamine), is used to alleviate motor symptoms in idiopathic Parkinson's disease (PD), which is primarily caused by degeneration of nigral dopamine neurons. PD patients have been shown to learn better from positive feedback when on levodopa and from negative feedback when off levodopa (8, 9). This double dissociation led Frank and colleagues to propose a computational model of fronto-striatal circuits in which dopamine bursts (encoding positive prediction errors) reinforce approach pathways, while dopamine dips (encoding negative prediction errors) reinforce avoidance pathways (10).
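The learning rule described above can be illustrated with a minimal sketch of a delta (Rescorla-Wagner-style) update. It assumes a single value per option and a fixed learning rate `alpha`; the function names and the value `alpha = 0.3` are hypothetical choices for illustration, not parameters from the cited studies.

```python
def update_value(value, reward, alpha=0.3):
    """One delta-rule step: move the value toward the obtained reward in
    proportion to the reward prediction error (reward - value)."""
    prediction_error = reward - value
    return value + alpha * prediction_error


def learn(rewards, alpha=0.3, initial_value=0.0):
    """Return the value estimate after each outcome in `rewards`."""
    values = []
    value = initial_value
    for reward in rewards:
        value = update_value(value, reward, alpha)
        values.append(value)
    return values


# Twenty consecutive +1 outcomes: the value climbs toward 1 while the
# prediction error shrinks; after n trials it equals 1 - (1 - alpha) ** n.
trace = learn([1.0] * 20)
```

Because each update is proportional to the error, learning is fast at first and slows down as outcomes become predicted.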

Instrumental learning may involve both conscious and subconscious processes. We recently demonstrated that healthy subjects can learn associations between cues and choice outcomes, even if the cues are masked and hence not consciously perceived (11). During performance of this subliminal conditioning task, prediction errors generated with a standard reinforcement learning algorithm were reflected in striatal activity, possibly due to dopaminergic inputs. However, the assumption that subconscious learning is actually driven by dopamine release in the striatum remains to be tested. It is noteworthy that learning is dramatically reduced in the subliminal compared to the unmasked condition, where the associations can be trivially acquired in one trial. Thus, conscious processes, notably the ability to keep in mind the cues and outcomes seen previously, seem important for a good learning performance, but are not necessary for a more limited acquisition of instrumental responses.

To our knowledge, the question of whether dopamine-related drugs affect conscious or subconscious learning-related processes has not been addressed so far. Here, we examined this issue by administering our subliminal conditioning paradigm to PD patients. The hypothesis was that the above-mentioned double dissociation, between reinforcement valence (reward or punishment) and medication status (off or on levodopa), could be replicated in subliminal conditions. To strengthen the demonstration, we also tested whether a reverse double dissociation could be observed in patients with Gilles de la Tourette's syndrome (TS), which can be opposed to PD in terms of both symptoms and treatments. TS is characterized by hyperkinetic symptoms (motor and vocal tics) alleviated by neuroleptics (dopamine receptor antagonists), whereas PD is a hypokinetic syndrome alleviated by levodopa and dopamine receptor agonists. Medication effects were assessed between two groups of 12 TS patients on the one hand and within one group of 12 PD patients on the other. Matched healthy controls (24 young and 12 older subjects) were also tested with the same experimental paradigm. Disease effects were assessed by comparing each group of patients off medication with their matched control group. Subjects' demographic and clinical features are displayed in Tables 1 and 2, respectively.

Table 1.

Demographic data

| Demographic features | PD (n = 12) | Seniors (n = 12) | TS Off (n = 12) | TS On (n = 12) | Juniors (n = 24) |
|---|---|---|---|---|---|
| Age (years) | 57.0 ± 3.1 | 60.7 ± 2.7 | 21.3 ± 2.6 | 19.8 ± 2.6 | 22.3 ± 0.9 |
| Sex (female/male) | 1/11 | 5/7 | 3/9 | 2/10 | 12/12 |
| Education (years) | 10.3 ± 1.3 | 16.4 ± 1.0 | 11.3 ± 1.4 | 10.0 ± 0.9 | 15.1 ± 0.5 |

Table 2.

Clinical data

| Clinical features (PD) | PD (n = 12) | Clinical features (TS) | TS Off (n = 12) | TS On (n = 12) |
|---|---|---|---|---|
| Disease duration (years) | 10.7 ± 1.2 | Disease duration (years) | 13.7 ± 2.9 | 12.3 ± 2.8 |
| UPDRSIII score Off | 28.7 ± 4.5 | YGTSS/50 score | 15.9 ± 1.6 | 18.3 ± 2.1 |
| UPDRSIII score On | 6.9 ± 1.6 | YGTSS/100 score | 33.4 ± 3.8 | 42.4 ± 4.0 |
| Treatment | Levodopa* | Treatment | None | Risperidone (n = 8); pimozide (n = 4) |
| Daily dose (mg/day) | 850 ± 116 | Daily dose (mg/day) | n/a | 2.3 ± 0.7 (risperidone); 3.3 ± 2.3 (pimozide) |

*Dose is expressed as dopa-equivalent, taking into account both levodopa (all patients) and dopamine agonists (seven patients).

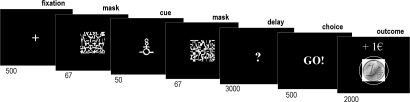

The subliminal conditioning task used three abstract cues that were paired with different monetary outcomes (−1€, 0€, +1€). The cues were briefly flashed between two mask images, after which subjects had to choose between safe and risky options (Fig. 1). The safe choice always yields a null outcome: no gain, no loss. A risky choice may result in a gain (+1€), a loss (−1€), or a neutral outcome (0€), depending on the cue. Because they could not see the cues, subjects were encouraged to follow their intuition: to make a risky choice if they had the feeling they were in a winning trial or a safe choice if they felt it was a losing trial. For half of the subjects, the risky response was a “Go” (key press), and for the other half it was a “Nogo” (no key press). The experimental design thus allowed us to measure dependent variables along three orthogonal dimensions: the rate of Go responses (motor impulsivity), risky choices (cognitive impulsivity), and monetary payoff (reinforcement learning). Note that if subjects always made the same response, or if they performed at chance, their final payoff would be zero on average. Hence a positive payoff indicates that some representation of cue–outcome contingencies had been acquired through conditioning. A separate visual discrimination task was subsequently conducted to assess the subjects' sensitivity to differences between cues, presented with the same masking procedure as during conditioning. The rationale is that if subjects are unable to discriminate between cues, then a fortiori they are unable to build conscious representations of cue–outcome associations.
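The zero-payoff baseline mentioned above can be checked with a small simulation. This is a sketch under stated assumptions: each session is modeled as 30 presentations of each cue (90 trials divided by three cues), and the policy names are hypothetical. A policy that always picks the same option, or picks at random, earns nothing on average, whereas an idealized policy that takes the risk only after the gain cue earns money.

```python
import random

# Outcome of the risky option after each cue; the safe option always pays 0.
RISKY_OUTCOME = {"gain": 1, "neutral": 0, "loss": -1}


def session_payoff(choose_risky, n_per_cue=30, seed=0):
    """Total payoff (in euros) of one session under a choice policy.

    `choose_risky(cue, rng)` returns True when the risky option is chosen.
    The equal number of trials per cue is an assumption for illustration.
    """
    rng = random.Random(seed)
    cues = [cue for cue in RISKY_OUTCOME for _ in range(n_per_cue)]
    rng.shuffle(cues)
    return sum(RISKY_OUTCOME[cue] for cue in cues if choose_risky(cue, rng))


def always_risky(cue, rng):
    return True


def always_safe(cue, rng):
    return False


def coin_flip(cue, rng):
    return rng.random() < 0.5


def ideal_learner(cue, rng):
    # Takes the risk only when the cue predicts a gain.
    return cue == "gain"
```

Here `session_payoff(always_risky)` and `session_payoff(always_safe)` both return 0, `session_payoff(ideal_learner)` returns 30, and the mean of `session_payoff(coin_flip, seed=s)` over many seeds stays close to 0.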

Fig. 1.

Subliminal learning task. Successive screenshots displayed during a given trial are shown from Left to Right, with durations in milliseconds. After seeing a masked contextual cue flashed on a computer screen, subjects choose to press or not to press a response key and subsequently observe the outcome. In this example, “Go” appears on the screen because the subject has pressed the key, following the cue associated with reward (winning 1€).

Results

All dependent measures in the different groups are summarized in Table 3. We first tested motor and cognitive impulsivity measures (Go responses and risky choices). There was no significant difference between PD and TS groups (all P > 0.1, two-tailed t tests) and no significant effect of medication, either in PD or TS (all P > 0.05, two-tailed t tests). These null results were not a foregone conclusion given the motor and cognitive signs associated with the diseases and treatments, but they suggest that performance was not driven by a difficulty in pressing keys or a propensity to take risks.

Table 3.

Experimental data

| Behavioral measures | PD Off (n = 12) | PD On (n = 12) | Seniors (n = 12) | TS Off (n = 12) | TS On (n = 12) | Juniors (n = 24) |
|---|---|---|---|---|---|---|
| Monetary payoff (€) | 1.0 ± 0.8 | 1.3 ± 0.6 | 0.6 ± 0.6 | 1.9 ± 0.6 | 1.8 ± 0.8 | 2.8 ± 0.9 |
| Visual discrimination (d′) | 0.14 ± 0.25† | 0.33 ± 0.15 | 0.43 ± 0.14 | 0.37 ± 0.11 | 0.07 ± 0.14 | 0.05 ± 0.12 |
| Payoff/d′ correlation (r) | 0.22 | 0.17 | −0.29 | −0.13 | 0.29 | 0.22 |
| Go responses (%) | 50.9 ± 6.4 | 47.5 ± 6.2 | 48.1 ± 2.5 | 51.2 ± 5.1 | 49.6 ± 3.8 | 46.7 ± 3.8 |
| Risky choices (%) | 70.1 ± 2.4 | 55.6 ± 6.0 | 67.7 ± 2.2 | 65.7 ± 1.9 | 58.8 ± 2.8 | 63.4 ± 2.6 |
| Reward obtained (€) | −0.3 ± 0.7 | 1.5 ± 0.5 | 0.9 ± 0.4 | 1.9 ± 1.0 | 0.1 ± 0.4 | 1.5 ± 0.8 |
| Punishment avoided (€) | 1.3 ± 0.5* | −0.3 ± 0.5 | −0.2 ± 0.4 | 0.0 ± 0.5 | 1.6 ± 0.5 | 1.3 ± 0.7 |

*P < 0.05, significant difference with the control group (two-tailed t test)

†Data were collected in 11 patients only.

Then we examined learning performance (monetary payoff) and discrimination sensitivity (d′). Monetary payoffs were significantly above zero, indicating a conditioning effect, in both PD and TS patients (PD, 1.1 ± 0.5€, t11 = 2.1, P < 0.05; TS, 1.8 ± 0.5€, t23 = 3.7, P < 0.001, one-tailed t test). In contrast, performance did not improve in the visual discrimination test, where subjects remained at chance level throughout the entire series of trials [see Fig. S1]. Like the impulsivity measures, payoffs and d′ were not affected by dopamine enhancers in PD or by dopamine blockers in TS (all P > 0.1, two-tailed t test). Note, however, that d′ values were numerically above zero in all situations, suggesting that learning effects may have been driven by occasional conscious perception. To address this issue, we calculated correlations between d′ and payoffs: Pearson's coefficients were close to zero and nonsignificant (PD Off, r = 0.22, P > 0.5; PD On, r = 0.17, P > 0.5; TS Off, r = −0.13, P > 0.1; TS On, r = −0.29, P > 0.5), suggesting that learning effects were not driven by patients with above-chance discrimination performance.

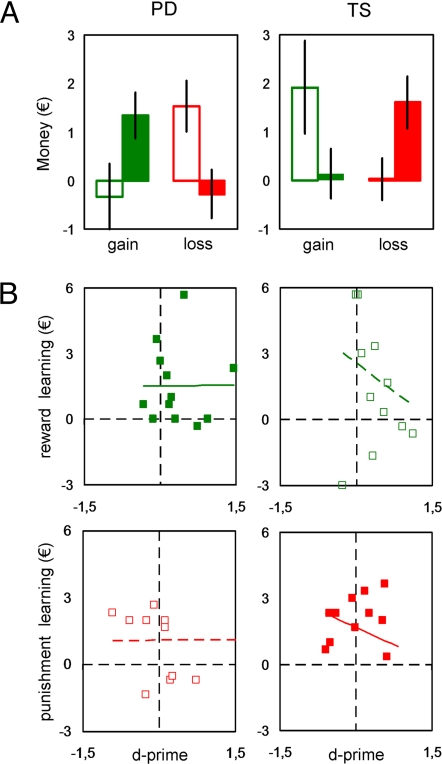

After controlling for these potential confounding effects, we next examined the hypothesized double dissociation between reinforcement valence and medication status. We distinguished between reward and punishment learning in the calculation of monetary payoffs. Relative to the neutral condition, additional correct choices were considered as an index of reward learning in the gain condition and as an index of punishment learning in the loss condition. Note that subtracting the neutral condition removes the potential effects of motor and cognitive impulsivity. The number of correct choices was expressed as euros that subjects won for reward learning or avoided losing for punishment learning (Fig. 2A).
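One reading of this scoring, sketched below, counts risky choices after the gain cue and safe choices after the loss cue, each minus the same choice after the neutral cue. The exact formula is not given in the text, so this is an assumption, and the function name is hypothetical. It has the useful property that, with equal numbers of trials per cue, the two indices add up to the overall monetary payoff, which matches Table 3 up to rounding (e.g., PD Off: -0.3 + 1.3 = 1.0).

```python
def learning_indices(risky_counts, n_per_cue):
    """Reward and punishment learning (in euros) relative to the neutral cue.

    `risky_counts` maps "gain", "neutral" and "loss" to the number of risky
    choices made after each cue, each cue being shown `n_per_cue` times.
    A correct choice is risky after the gain cue (1 euro won) and safe after
    the loss cue (1 euro not lost). Subtracting the neutral cue removes a
    general tendency to choose one option (impulsivity).
    """
    safe_counts = {cue: n_per_cue - k for cue, k in risky_counts.items()}
    reward = risky_counts["gain"] - risky_counts["neutral"]
    punishment = safe_counts["loss"] - safe_counts["neutral"]
    return reward, punishment


counts = {"gain": 22, "neutral": 18, "loss": 15}
reward, punishment = learning_indices(counts, n_per_cue=30)
payoff = counts["gain"] - counts["loss"]  # risky gains minus risky losses
```

Adding the same number of risky choices after every cue (a more impulsive subject) leaves both indices unchanged.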

Fig. 2.

Monetary payoffs. (Left) Idiopathic Parkinson's disease (PD) patients. (Right) Gilles de la Tourette's syndrome (TS) patients. (A) Reward bias. Histograms in each graph show additional correct choices (in euros) in the gain (Left) and loss (Right) condition relative to the neutral condition. Solid histograms represent medicated patients (on dopamine enhancers or blockers) whereas open histograms represent unmedicated patients. Error bars are plus or minus between-subjects standard errors of the mean. (B) Learning vs. discrimination performance. Graphs represent for each individual the euros won (reward learning) or not lost (punishment learning) as a function of discrimination sensitivity (d′). Medicated patients (on dopamine enhancers or blockers) are represented by solid squares and solid regression lines and unmedicated patients by open squares and dashed lines. Only situations where learning was significant are shown: On PD and Off TS patients for rewards, Off PD and On TS patients for punishments.

As expected, we observed that off-medication PD patients significantly learned to avoid punishments (1.3 ± 0.5€, t11 = 2.8, P < 0.01, one-tailed t test) but not to get rewards (−0.3 ± 0.7€, t11 = −0.5, P > 0.1, one-tailed t test). On-medication PD patients exhibited the opposite pattern: no punishment learning (−0.3 ± 0.5€, t11 = −0.6, P > 0.1, one-tailed t test) but significant reward learning (1.5 ± 0.5€, t11 = 2.9, P < 0.01, one-tailed t test). The reverse double dissociation was observed in TS patients: When off medication, they learned to obtain rewards (1.9 ± 1.0€, t11 = 2.0, P < 0.05, one-tailed t test) but not to avoid punishments (0.0 ± 0.5€, t11 = 0.1, P > 0.5, one-tailed t test) and when on medication, they failed to obtain rewards (0.1 ± 0.4€, t11 = 0.3, P > 0.1, one-tailed t test) but successfully avoided punishments (1.6 ± 0.5€, t11 = 3.0, P < 0.01, one-tailed t test). Having identified the combinations of medication status and reinforcement valence where patients did learn, we checked the correlations between d′ and learning in these situations (Fig. 2B). They were again close to zero and not significant in both PD patients (Off/punishment, r = 0.01, P > 0.5; On/reward, r = 0.01, P > 0.5) and TS patients (Off/reward, r = −0.20, P > 0.5; On/punishment, r = −0.29, P > 0.5). Moreover, regression lines crossed the y axis (d′ = 0) for positive payoffs in all situations, demonstrating the presence of conditioning effects in the absence of visual discrimination.

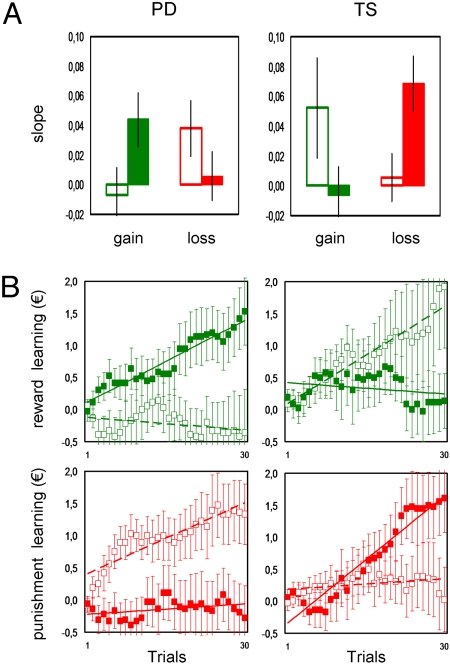

To verify that the double dissociations were due to differences in learning rates, we plotted the cumulative money won (for reward learning) and not lost (for punishment learning) as a function of trials (Fig. 3B). Linear regression coefficients (slopes) of these learning curves were extracted and tested for the different groups and medications (Fig. 3A). These slopes exhibited a profile very similar to that obtained with payoffs (compare with Fig. 2A). They were significantly positive (P < 0.05, one-tailed t test) only in Off PD with punishments, in On PD with rewards, and in On TS with punishments.

Fig. 3.

Learning rates. (Left) Idiopathic Parkinson's disease (PD) patients. (Right) Gilles de la Tourette's syndrome (TS) patients. (A) Accumulation rates. Histograms in each graph show linear regression coefficients of corresponding learning curves below. Solid histograms represent medicated patients (on dopamine enhancers or blockers) whereas open histograms show unmedicated patients. Error bars are plus or minus between-subjects standard errors of the mean. (B) Accumulation curves. Graphs represent for each individual the cumulative sum of euros won (reward learning) or not lost (punishment learning) as a function of trials. The curves have been averaged across sessions and subjects. Medicated patients (on dopamine enhancers or blockers) are represented by solid squares and solid regression lines and unmedicated patients by open squares and dashed lines.

We then tested the effects of medication on the reward bias, defined as the difference between the money won (correct choices following reward cues) and the money not lost (correct choices following punishment cues). This measure can hence be considered an index of the difference between reward and punishment learning performance. We found that the reward bias was significantly increased by dopamine enhancers in PD patients (Off, −1.7 ± 0.9€; On, 1.8 ± 0.8€; t11 = 3.0, P < 0.05, two-tailed t test) and significantly decreased by dopamine blockers in TS patients (Off, 1.9 ± 1.0€; On, −1.5 ± 0.4€; t22 = 2.2, P < 0.05, two-tailed t test). Thus, the reward bias was the only dependent variable sensitive to medication, with reciprocal effects in PD and TS showing that dopamine enhancers favored reward learning, whereas dopamine blockers favored punishment avoidance.
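The two medication comparisons differ in design, which explains the different degrees of freedom (t11 vs. t22): PD patients were tested twice, so On and Off reward biases can be compared within patients with a paired t test, whereas TS patients were tested once, so On and Off groups are compared with a two-sample t test. The sketch below computes both statistics in plain Python. It assumes a pooled-variance (Student) two-sample test, which the text does not name but which is consistent with the reported t22; the function names are hypothetical, and the p-value lookup is omitted.

```python
from math import sqrt
from statistics import mean, stdev


def reward_bias(money_won, money_not_lost):
    """Reward bias: euros won after reward cues minus euros not lost after
    punishment cues (positive values mean reward learning dominates)."""
    return money_won - money_not_lost


def paired_t(off, on):
    """Within-subject t statistic (PD design) on per-patient On - Off
    differences; returns (t, degrees of freedom)."""
    diffs = [b - a for a, b in zip(off, on)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def pooled_t(group_a, group_b):
    """Between-subject Student t statistic with pooled variance (TS design);
    returns (t, degrees of freedom)."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * stdev(group_a) ** 2
                  + (nb - 1) * stdev(group_b) ** 2) / (na + nb - 2)
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2
```

With 12 PD patients, `paired_t` returns 11 degrees of freedom; with two groups of 12 TS patients, `pooled_t` returns 22.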

Finally, all experimental data were systematically compared between patients and controls. Note that controls were matched with patients in terms of age but not sex or education. However, across all 36 healthy subjects, we found no significant effect of sex on monetary payoff or reward bias (both P > 0.5, two-tailed t tests) and no significant correlation between education level and monetary payoff or reward bias (r = 0.21 and r = 0.04, both P > 0.1). Thus reinforcement learning performance did not depend on sex or education. In both control groups, our crucial measure, the reward bias, fell between the values obtained in the on and off medication states of the corresponding patient group. In other words, the trend was that, relative to healthy older subjects, PD patients had a lower reward bias when off medication and a higher one when on medication, and, relative to healthy young subjects, TS patients had a higher reward bias when off medication and a lower one when on medication. However, because these differences were smaller than those between on and off states, the comparison with control subjects was significant only for Off PD patients (t22 = 2.6, P < 0.05; all other P > 0.1; two-tailed t test). Medication effects on the reward bias therefore appear much more reliable than disease effects.

Discussion

To summarize, we extended the double dissociation between reinforcement valence and dopamine medication status, originally demonstrated in PD patients by Frank and colleagues (8), to the subliminal case and to TS patients. In short, reinforcement learning was biased toward reward seeking when dopamine transmission was boosted and toward punishment avoidance when it was blocked. The effects were independent of factors such as discrimination sensitivity and motor or cognitive impulsivity, which were orthogonal to reinforcement valence in our design. Moreover, these factors were not significantly affected by medication, suggesting that patients did not perceive the cues, press the button, or choose the risky response more often in the on- than in the off-medication state.

Despite the short cue duration and backward masking, we cannot formally ensure that all cues remained subliminal in all trials, as there is no direct window to the conscious mind. We nonetheless provide standard criteria that are generally considered indirect evidence for nonconscious perception (12, 13). Verbal reports were recorded to assess the subjective criterion: When shown the unmasked cues, all subjects reported not having seen them previously. Discrimination performance was measured to assess the objective criterion: Learning effects were obtained even for a null d′, which indicates that subjects were unable to correctly decide whether two consecutive cues were the same or different. We therefore conclude that the learning processes affected by medications were largely subconscious. Masking was likely helped by the fact that subjects had no prior representation to guide visual search since, contrary to most subliminal perception studies, the cues were never shown until the debriefing at the end of the experiment. Although they did not provide the above criteria for absence of awareness, some previous studies in PD reported deficits in implicit learning (8, 9, 14, 15). In these paradigms the cues are consciously perceived, but subjects fail to report the cue–outcome contingencies explicitly at debriefing, even if they previously expressed some knowledge of these contingencies in their motor responses. Debriefing tests have, however, been criticized as confounded by memory decay (16–18), so masking cues is a more stringent approach to limit conscious associations between cues and outcomes. Compared with implicit learning paradigms such as probabilistic classification or transitive inference tasks, the Go/Nogo mode of response used here makes reinforcement learning more direct, with no need to build high-level representations of cue–outcome contingencies.

Our findings are in line with a growing body of evidence that reinforcement learning can operate subconsciously (19–23). More specifically, they extend a previous functional neuroimaging study using the same subliminal conditioning paradigm (11), which showed that reward prediction errors were reflected in the ventral striatum. A parsimonious explanation may be that dopamine enhancers and blockers, because they interfere with dopamine transmission, modulate the magnitude of prediction error signals, as was previously demonstrated during conscious instrumental learning (7). This would be compatible with Frank's model (10) if we assume that dopamine enhancers and blockers have opposite effects both on positive prediction errors following rewards and on negative prediction errors following punishments. The drugs may affect the reinforcement of fronto-striatal synapses, which is thought to underlie the formal process of using prediction error as a teaching signal to update the value of the current cue, according to Rescorla and Wagner's rule (1). At a lower level, however, the underlying mechanisms remain speculative, as it is unclear which dopamine receptors (D1, D2, or others) and which component of dopamine release (tonic, phasic, or a combination of both) are affected by medications. Although we argue that the reinforcement process modulated by medications was subconscious, we do not imply that conscious feelings, when seeing the masks or the outcomes, remained unaffected. It remains possible, for instance, that subjects, even if not perceiving the cue itself, had a conscious positive feeling following a reward-predicting cue or a negative one after a punishment-predicting cue. Further experiments are needed to determine whether we can develop conscious access to the value of cues that we do not consciously perceive.
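The assumption that dopamine enhancers and blockers act in opposite ways on positive and negative prediction errors can be illustrated with a delta rule that uses a separate learning rate for each sign of the error, a common modeling device. The sketch below is a toy illustration, not the authors' model: the two parameter profiles ("enhanced" and "blocked"), their values, and the function names are hypothetical.

```python
def update_asymmetric(value, outcome, alpha_pos, alpha_neg):
    """Delta-rule update with one learning rate for positive prediction
    errors (bursts) and another for negative ones (dips)."""
    prediction_error = outcome - value
    alpha = alpha_pos if prediction_error > 0 else alpha_neg
    return value + alpha * prediction_error


def learned_value(outcome, n_trials, alpha_pos, alpha_neg):
    """Value of a cue after `n_trials` identical outcomes, starting from 0."""
    value = 0.0
    for _ in range(n_trials):
        value = update_asymmetric(value, outcome, alpha_pos, alpha_neg)
    return value


# Hypothetical profiles: enhanced transmission favors positive errors,
# blocked transmission favors negative ones.
ENHANCED = {"alpha_pos": 0.3, "alpha_neg": 0.05}
BLOCKED = {"alpha_pos": 0.05, "alpha_neg": 0.3}

for name, params in [("enhanced", ENHANCED), ("blocked", BLOCKED)]:
    reward_cue = learned_value(+1.0, 10, **params)
    punishment_cue = learned_value(-1.0, 10, **params)
    print(f"{name}: reward cue {reward_cue:.2f}, punishment cue {punishment_cue:.2f}")
```

With these values, the enhanced profile learns the reward cue almost fully after 10 trials (about 0.97) but the punishment cue only partially (about -0.40), and the blocked profile shows the reverse, mirroring the double dissociation in a purely schematic way.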

The replication of the double dissociation in a second pathological condition (TS) suggests that our manipulation tapped into general dopamine-related mechanisms and not into a peculiar dysfunction restricted to PD. Our findings may help in understanding not only dopamine-related drug effects but also dopamine-related disorders. The case for dopamine neuron degeneration in PD is well established (24), so from Frank's model (10) it could be predicted that off-medication PD patients would be impaired in reward learning but not in punishment avoidance. A lack of positive reinforcement following rewards might explain action selection deficits that are frequently reported in PD (14, 15, 25). Indeed, if an action is not reinforced when rewarded, selection of that action will not be facilitated in the future. A deficit in movement selection could also account for some motor symptoms, such as akinesia and rigidity, that are the hallmarks of PD. The double dissociation evidenced in PD may also provide insight into compulsive behaviors, such as pathological gambling, induced in these patients by dopamine agonists (26, 27). The explanation would be that, under dopamine agonists, repetitive behaviors are reinforced more by rewarding outcomes than they are impeded by punishing consequences.

In contrast, the case for overactive dopamine transmission in TS has not reached general agreement (28–30), despite supporting evidence from both genetic and neuroimaging studies (31–34). The finding that TS patients mirrored PD patients would further support the idea of underlying dopaminergic hyperactivity. Of course, this does not necessarily imply that dopaminergic hyperactivity is causal in the pathology of TS. It is nonetheless tempting to speculate that tics may come from excessive reinforcement of certain cortico-striatal pathways. We must remain cautious, however, because we observed only a trend and not a significant difference between off-medication TS patients and matched healthy controls.

More generally, because they eliminate conscious strategies that could confound potential deficits, subliminal stimulations may allow more specific cognitive processes to be targeted, as was done here for reinforcement learning, and hence provide insight into a variety of neurological or psychiatric conditions. For the same reasons, subliminal conditions might also prove useful in identifying specific effects of drugs, other than those of dopamine enhancers and blockers on reinforcement learning. To our knowledge, pharmacological studies have not so far attempted to distinguish between drug effects on conscious and subconscious processes. Indeed, a huge literature is devoted to understanding how drugs modify conscious experience, but little is known about how drugs act on processes occurring outside conscious awareness. We believe that the present study opens the door to research on the pharmacology of subconscious processing.

Experimental Procedures

Subjects.

The study was approved by the Ethics Committee for Biomedical Research of the Pitié-Salpêtrière Hospital, where the study was conducted. A total of 72 subjects, 36 patients and 36 controls, were included in the study. All subjects gave written informed consent before their participation. They were not paid for their voluntary participation and were told that the money won in the task was purely virtual. Previous studies have shown that real money is not necessary to obtain robust motivational or conditioning effects (8, 35). In our case, using real money would have been unethical, since it would have meant paying patients according to their handicap or treatment. In total, 12 patients with idiopathic PD and 24 patients with TS were included in the study. We also tested 12 older (seniors) and 24 young (juniors) healthy controls, who were screened to exclude any history of neurological or psychiatric conditions and selected to match the age of either the PD or the TS patients. We checked that age was not significantly different between older subjects and PD patients (t22 = 0.9, P > 0.1, two-tailed t test) or between young subjects and TS patients (t46 = −0.9, P > 0.1, two-tailed t test).

PD patients were consecutive candidates for deep brain stimulation, hospitalized for a clinical preoperative examination. Inclusion criteria were a diagnosis of idiopathic PD with a good response to levodopa [>50% improvement on part III of the Unified Parkinson's Disease Rating Scale (UPDRSIII)], in the absence of dementia [Mini Mental State (MMS) score >25] and depression [Montgomery and Asberg Depression Rating Scale (MADRS) score <20]. Average MMS score was 27.7 ± 0.3, average MADRS score was 4.3 ± 0.8, and average Hoehn and Yahr stage was 2.46 ± 0.10 in the “off” state and 2.17 ± 0.15 in the “on” state. Among the 12 patients, 5 were on levodopa alone and 7 were also taking dopamine receptor agonists. For the sake of simplicity, we converted all medications to levodopa equivalents (Table 2) and used the term dopamine enhancers to designate both levodopa and receptor agonists. Every patient was assessed twice, on the mornings of 2 different days: once in the off state, after overnight (>12 h) withdrawal of levodopa and a full day (24 h) withdrawal of dopamine agonists, and once in the on state, 1 h after intake of the habitual medication dose (levodopa in all patients + dopamine agonists in 7 of them). One patient included in the study could not complete the visual discrimination task in the off state due to excessive motor fatigue. Three additional patients were unable to perform the conditioning task in the off state and were therefore not included in the study.

TS patients were consecutive candidates screened at the French Reference Center for Gilles de la Tourette's syndrome. Patients were at least 10 years old and did not present relevant comorbid conditions (depression, obsessive-compulsive disorder, and/or attention deficit hyperactivity disorder). Treatment usually cannot be stopped in these patients for ethical reasons: It would leave patients in discomfort for too long during washout. However, some patients diagnosed with TS remain unmedicated because their tics do not represent a major handicap. We included medicated and unmedicated patients in equal numbers, such that we could make on–off comparisons with the same number of data points (n = 24) as in PD. The difference was that comparisons were made within patients in PD and between patients in TS. All On TS patients were treated with neuroleptics only: eight with risperidone and four with pimozide. For the sake of simplicity, we refer to neuroleptics as dopamine blockers. There was no significant difference between on-medication (treated) and off-medication (untreated) TS patients regarding age (t22 = 0.4, P > 0.5, two-tailed t test), sex (χ2 = 0.25; P > 0.5, chi-square test), disease duration (t22 = 0.4, P > 0.5, two-tailed t test), or education (t22 = 0.7, P > 0.1, two-tailed t test). The Yale Global Tic Severity Scale (YGTSS) showed no significant difference between On and Off patients, either for the total tic severity score (out of 50) or for the global score including impairment (out of 100) (respectively t22 = 1.6, P > 0.1; t22 = 0.9, P > 0.1, two-tailed t test).

Experimental Task and Design.

The behavioral tasks used in our previous study (11) were slightly shortened and translated into French, with outcomes in euros. Subjects first read the instructions (see SI Text), which were then explained again step by step. They were first trained on a 16-trial practice version of the conditioning task. Then they performed three sessions of the conditioning task, each containing 90 trials and lasting 10 min, and one session of the perception task, containing 60 trials and lasting ≈5 min. The abstract cues were letters taken from the Agathodaimon font. The same two masking patterns, one displayed before and the other after the cue, were used in all task sessions (Fig. 1). The assignment of cues to task sessions, and the association of cues with outcomes, were fixed so that all subjects underwent exactly the same experimental procedure. For the same reason, cue display duration was fixed at 50 ms rather than adapted to each individual, such that subliminal stimulations were identical for all subjects.

As 50 ms is near the threshold for conscious perception, however, some subjects (three TS patients, three junior controls, and one senior control) could not be included because they managed to discriminate parts of the cues. They reported having spotted discriminative parts (both during task performance and at debriefing), had abnormally high discrimination sensitivity (d′ > 1.5), and won unusually large amounts of money (payoff >10€). Note that without excluding the TS patients who saw the cues, the double dissociation reported in this condition would fail to reach significance. Indeed, these TS patients were on medication and nonetheless learned to obtain rewards, consistent with the intuitive idea that the task becomes trivial as soon as subjects can discriminate the cues.

The instrumental conditioning task involved choosing between pressing and not pressing a key in response to masked cues. After the fixation cross and the masked cue had been displayed, a question mark on the computer screen indicated the response interval. The interval was fixed at 3 s and the response was recorded at its end: Go if the key was being pressed and Nogo if the key was released. The response was displayed on the screen as soon as the interval had elapsed. Subjects were told that one response was safe (you do not win or lose anything) while the other was risky (you can win 1€, lose 1€, or get nothing). Subjects were also told that the outcome of the risky response would depend on the cue displayed between the mask images. In fact, three cues were used: one was rewarding (+1€), one was punishing (−1€), and the last was neutral (0€). Because subjects were not informed about these associations, they could learn them only by observing the outcome displayed at the end of each trial: a circled coin image (+1€), a barred coin image (−1€), or a gray square (0€).
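The trial logic described above can be summarized in a few lines of Python. This is a minimal sketch, not the task software used in the study: the cue labels (`reward`, `punishment`, `neutral`) and the helper names `is_risky`, `trial_outcome`, and `session_payoff` are hypothetical, and only the outcome rule (the safe response pays 0€, the risky response pays the amount associated with the cue) is taken from the text.

```python
# Outcome (in euros) associated with each masked cue when the risky
# response is chosen. The cue labels are hypothetical; the real cues
# were Agathodaimon letters.
CUE_OUTCOMES = {"reward": 1, "punishment": -1, "neutral": 0}


def is_risky(key_pressed_at_end: bool, risky_is_go: bool) -> bool:
    """Classify the response read at the end of the 3 s interval.

    Go means the key was held down when the interval elapsed, Nogo that it
    was released. In the Go version the risky response is Go; in the Nogo
    version it is Nogo.
    """
    return key_pressed_at_end == risky_is_go


def trial_outcome(cue: str, risky: bool) -> int:
    """Monetary outcome of one trial, in euros."""
    if cue not in CUE_OUTCOMES:
        raise ValueError(f"unknown cue: {cue!r}")
    # The safe response never wins or loses anything.
    return CUE_OUTCOMES[cue] if risky else 0


def session_payoff(trials) -> int:
    """Total euros for a sequence of (cue, risky) trials."""
    return sum(trial_outcome(cue, risky) for cue, risky in trials)


# An ideal learner takes the risk on the rewarding cue only;
# 90 trials, 30 per cue.
ideal = [("reward", True), ("punishment", False), ("neutral", False)] * 30
print(session_payoff(ideal))
```

Because each of the three cues appears 30 times in a 90-trial session, an ideal learner in this sketch would earn 30€ per session, whereas choosing at random yields an expected payoff of zero, which is the chance level used in the statistical analysis.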

The risky response was assigned to Go in half of the task completions and to Nogo in the other half, so that motor aspects were counterbalanced between reward and punishment conditions. TS patients and junior controls were assessed only once and hence performed either the Go or the Nogo version of the task. Half of the junior controls were randomly assigned to the Go version and the other half to the Nogo version. In TS, the task version was balanced with respect to medication status, such that each of the four combinations (Off/Nogo, Off/Go, On/Nogo, and On/Go) was administered to the same number of patients (n = 6). PD patients and senior controls were assessed twice, once on the Go version and once on the Nogo version. For senior controls, the order of the Go and Nogo versions was simply alternated. In PD, the order was balanced with respect to medication status, such that each of the four combinations (Off/Nogo–On/Go, Off/Go–On/Nogo, On/Nogo–Off/Go, and On/Go–Off/Nogo) was administered to the same number of patients (n = 3).
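One way to generate schedules satisfying these balancing constraints is sketched below. It assumes 12 TS patients per medication group and 12 PD patients, consistent with the reported cell sizes (n = 6 and n = 3). The function name `balanced_schedule` and the random shuffling are our own illustrative choices: the text states only that the combinations were balanced, not how patients were allocated to them.

```python
import random
from collections import Counter


def balanced_schedule(options, n_each, seed=None):
    """Return a shuffled list in which each option appears exactly n_each times."""
    schedule = [opt for opt in options for _ in range(n_each)]
    random.Random(seed).shuffle(schedule)
    return schedule


# TS: medication status is given (treated or untreated), so only the task
# version is balanced within each group of 12 patients: 6 Go and 6 Nogo.
ts_versions = {
    status: balanced_schedule(["Go", "Nogo"], 6, seed=k)
    for k, status in enumerate(["Off", "On"])
}

# PD: each of 12 patients is tested twice; the second session switches both
# the medication state and the task version. Four orders, 3 patients each.
PD_ORDERS = [
    ("Off/Nogo", "On/Go"),
    ("Off/Go", "On/Nogo"),
    ("On/Nogo", "Off/Go"),
    ("On/Go", "Off/Nogo"),
]
pd_orders = balanced_schedule(PD_ORDERS, 3, seed=0)

print(Counter(ts_versions["On"]))
print(Counter(pd_orders))
```

Shuffling a list that already contains the exact number of repeats of each combination guarantees the balance by construction, whereas drawing each patient's combination independently at random would not.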

The perceptual discrimination task was used at the end of the conditioning sessions as a control for awareness. Hence it was administered once in TS patients and junior controls and twice in PD patients and senior controls. On each trial, two masked cues were flashed 3 s apart at the center of the computer screen, each following a fixation cross. Because there were 60 trials with two cues each, each of the three cues was presented 40 times, which is more than in a conditioning session (30 times). Subjects had to report whether or not they perceived any difference between the two visual stimulations by pressing one of two keys assigned to “same” and “different” responses. Importantly, subjects had no opportunity to see the cues unmasked, so they could not obtain any prior information about what the cues looked like. Note that the three cues used in the perceptual discrimination control were different from those used in the instrumental learning sessions, to prevent subjects from distinguishing cues on the basis of their learned values. At the end of the experiment, subjects were debriefed about whether or not they had perceived any part of the cues. They were also shown the cues unmasked, one by one, and asked whether or not they had seen them before. No included subject reported having seen any cue.
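The sensitivity index d′ computed from this task (defined under Statistical Analysis as the difference between the normalized hit and false-alarm rates) can be sketched with Python's standard library. The function name and the log-linear correction for rates of 0 or 1 are assumptions, not details reported in the text, and the trial counts in the usage lines are invented for illustration.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections, correction=0.5):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    hits: "different" responses when the two cues differed
    false_alarms: "different" responses when the two cues were identical
    The log-linear correction (adding `correction` to each count) is an
    assumption made here to keep rates strictly between 0 and 1, where the
    inverse normal CDF is finite.
    """
    z = NormalDist().inv_cdf
    hit_rate = (hits + correction) / (hits + misses + 2 * correction)
    fa_rate = (false_alarms + correction) / (
        false_alarms + correct_rejections + 2 * correction
    )
    return z(hit_rate) - z(fa_rate)


# Chance performance: "different" given equally often on both trial types.
print(round(d_prime(15, 15, 15, 15), 3))
# Good discrimination: 25/30 hits, 5/30 false alarms.
print(round(d_prime(25, 5, 5, 25), 2))
```

A chance-level subject yields d′ close to zero, the value against which individual d′ was tested. The second example exceeds the 1.5 threshold mentioned above, although in the study exclusion also relied on verbal reports and payoff.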

Statistical Analysis.

From the conditioning task we extracted the percentages of Go and risky responses, which can be taken as indirect measures of motor and cognitive impulsivity, respectively. We also extracted the number of correct choices, which is equivalent to the monetary payoff. The payoff can then be split into euros won in the reward condition and euros not lost in the punishment condition. To correct for motor and cognitive biases, we subtracted the correct choices made in the neutral condition, which captures the propensity to make a Go response and a risky choice. To display learning progression, we plotted the cumulative money won (reward learning) or not lost (punishment learning) across trials. A linear regression was fitted to these learning curves, and the slope coefficients (betas) were taken as an index of learning rate. From the visual discrimination task we calculated a sensitivity index (d′) as the difference between the z-transformed (normalized) rates of hits (correct “different” responses) and false alarms (incorrect “different” responses).
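The payoff split, the neutral-condition correction, and the learning-rate index can be sketched as follows. This is an illustrative reconstruction under stated assumptions: trials are represented as hypothetical `(cue, risky)` pairs, and we read "subtracting the correct choices made in the neutral condition" as subtracting risky choices on the neutral cue from the reward score and safe choices on the neutral cue from the punishment score. The learning curve is the cumulative count of correct choices for one cue, and its ordinary least-squares slope stands in for the regression beta.

```python
from itertools import accumulate

CUES = ("reward", "punishment", "neutral")


def choice_counts(trials):
    """Count risky and safe choices per cue; trials are (cue, risky) pairs."""
    counts = {cue: {"risky": 0, "safe": 0} for cue in CUES}
    for cue, risky in trials:
        counts[cue]["risky" if risky else "safe"] += 1
    return counts


def corrected_scores(trials):
    """Euros won (reward cue) and euros not lost (punishment cue), each minus
    the same type of choice on the neutral cue (assumed bias correction)."""
    c = choice_counts(trials)
    reward = c["reward"]["risky"] - c["neutral"]["risky"]
    punishment = c["punishment"]["safe"] - c["neutral"]["safe"]
    return reward, punishment


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def learning_slope(trials, cue, correct_is_risky):
    """Slope of the cumulative curve of correct choices for one cue: money won
    (reward cue, correct = risky) or not lost (punishment cue, correct = safe)."""
    correct = [int(risky == correct_is_risky) for c, risky in trials if c == cue]
    curve = list(accumulate(correct))
    return ols_slope(list(range(1, len(curve) + 1)), curve)


trials = [("reward", True)] * 3 + [("punishment", False)] * 3 + [
    ("neutral", True),
    ("neutral", False),
]
print(corrected_scores(trials))
print(learning_slope(trials, "reward", True))
```

A subject who is correct on every trial produces a cumulative curve with slope 1; a subject who starts at chance and improves produces a shallower but still positive slope, so the beta summarizes how quickly money accumulates.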

All data (demographic, clinical, or experimental) are reported as mean ± between-subjects standard error of the mean (SEM). To assess instrumental conditioning, we used one-tailed one-sample t tests comparing individual performances with chance level (which corresponds to a zero payoff). Similarly, to assess visual discrimination, we compared individual d′ with chance level (which is also zero) using one-tailed one-sample t tests. Within each pathological condition (PD or TS), we assessed medication effects by comparing dependent variables between On and Off states. We used within-group comparisons (paired two-tailed t tests) for PD patients, who were tested in both medication states, and between-group comparisons (unpaired two-tailed t tests) for TS patients, who were either medicated or not. To assess disease effects relative to controls, we performed between-group comparisons (unpaired two-tailed t tests). Finally, to assess the significance of the linear correlation between learning (payoff) and discrimination (d′) measures, we calculated Pearson's correlation coefficients. For all statistical tests, the threshold for significance was set at P < 0.05.
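The test statistics named above can be computed with the standard library alone, as in the sketch below (all helper names are hypothetical). It returns t values and degrees of freedom. P values would then come from Student's t distribution, for example `scipy.stats.t.sf(t, df)` for a one-tailed test in the positive direction, or twice `scipy.stats.t.sf(abs(t), df)` for a two-tailed test. The unpaired test uses the pooled-variance (Student) form, which matches the 22 degrees of freedom reported for the 24 TS patients; that the study used this form rather than Welch's correction is an inference from those degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev


def sem(xs):
    """Between-subjects standard error of the mean."""
    return stdev(xs) / sqrt(len(xs))


def one_sample_t(xs, chance=0.0):
    """Compare individual scores (payoff or d') with chance level."""
    return (mean(xs) - chance) / sem(xs), len(xs) - 1


def paired_t(on, off):
    """Within-subject On vs Off comparison (PD): t test on the differences."""
    if len(on) != len(off):
        raise ValueError("paired samples must have equal length")
    return one_sample_t([a - b for a, b in zip(on, off)])


def unpaired_t(a, b):
    """Between-group comparison (TS On vs Off, patients vs controls)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


def pearson_r(xs, ys):
    """Pearson correlation, e.g. between payoff and d'."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)
```

Treating the paired comparison as a one-sample test on On minus Off differences is the standard identity behind the paired t test, which is why PD comparisons use n − 1 degrees of freedom while TS comparisons use n1 + n2 − 2.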

Acknowledgments.

We are grateful to Helen Bates for helping with behavioral task administration and to Virginie Czernecki and Priscilla Van Meerbeeck for providing clinical data. We also thank Arlette Welaratne and all of the staff of the Centre d'Investigation Clinique for taking care of patients. Aman Saleem, Shadia Kawa, and Beth Pavlicek checked the English. S.P. received a Ph.D. fellowship from the Neuropôle de Recherche Francilien. The study was funded by the Ecole de Neurosciences de Paris.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0904035106/DCSupplemental.

References

- 1. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- 2. Sutton RS, Barto AG. Reinforcement Learning. Cambridge, MA: MIT Press; 1998.

- 3. Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol. 2006;16(2):199–204. doi: 10.1016/j.conb.2006.03.006.

- 4. O'Doherty JP, Hampton A, Kim H. Model-based fMRI and its application to reward learning and decision making. Ann N Y Acad Sci. 2007;1104:35–53. doi: 10.1196/annals.1390.022.

- 5. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

- 6. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412(6842):43–48. doi: 10.1038/35083500.

- 7. Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442(7106):1042–1045. doi: 10.1038/nature05051.

- 8. Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: Cognitive reinforcement learning in parkinsonism. Science. 2004;306(5703):1940–1943. doi: 10.1126/science.1102941.

- 9. Cools R, Altamirano L, D'Esposito M. Reversal learning in Parkinson's disease depends on medication status and outcome valence. Neuropsychologia. 2006;44(10):1663–1673. doi: 10.1016/j.neuropsychologia.2006.03.030.

- 10. Frank MJ. Dynamic dopamine modulation in the basal ganglia: A neurocomputational account of cognitive deficits in medicated and nonmedicated Parkinsonism. J Cogn Neurosci. 2005;17(1):51–72. doi: 10.1162/0898929052880093.

- 11. Pessiglione M, et al. Subliminal instrumental conditioning demonstrated in the human brain. Neuron. 2008;59(4):561–567. doi: 10.1016/j.neuron.2008.07.005.

- 12. Kouider S, Dehaene S. Levels of processing during non-conscious perception: A critical review of visual masking. Philos Trans R Soc Lond B Biol Sci. 2007;362(1481):857–875. doi: 10.1098/rstb.2007.2093.

- 13. Dehaene S, Changeux JP, Naccache L, Sackur J, Sergent C. Conscious, preconscious, and subliminal processing: A testable taxonomy. Trends Cogn Sci. 2006;10(5):204–211. doi: 10.1016/j.tics.2006.03.007.

- 14. Knowlton BJ, Mangels JA, Squire LR. A neostriatal habit learning system in humans. Science. 1996;273(5280):1399–1402. doi: 10.1126/science.273.5280.1399.

- 15. Shohamy D, et al. Cortico-striatal contributions to feedback-based learning: Converging data from neuroimaging and neuropsychology. Brain. 2004;127(Pt 4):851–859. doi: 10.1093/brain/awh100.

- 16. Lagnado DA, Newell BR, Kahan S, Shanks DR. Insight and strategy in multiple-cue learning. J Exp Psychol Gen. 2006;135(2):162–183. doi: 10.1037/0096-3445.135.2.162.

- 17. Lovibond PF, Shanks DR. The role of awareness in Pavlovian conditioning: Empirical evidence and theoretical implications. J Exp Psychol Anim Behav Process. 2002;28(1):3–26.

- 18. Wilkinson L, Shanks DR. Intentional control and implicit sequence learning. J Exp Psychol Learn Mem Cogn. 2004;30(2):354–369. doi: 10.1037/0278-7393.30.2.354.

- 19. Morris JS, Öhman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393(6684):467–470. doi: 10.1038/30976.

- 20. Olsson A, Phelps EA. Learned fear of “unseen” faces after Pavlovian, observational, and instructed fear. Psychol Sci. 2004;15(12):822–828. doi: 10.1111/j.0956-7976.2004.00762.x.

- 21. Knight DC, Nguyen HT, Bandettini PA. Expression of conditional fear with and without awareness. Proc Natl Acad Sci USA. 2003;100(25):15280–15283. doi: 10.1073/pnas.2535780100.

- 22. Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61(5):700–707. doi: 10.1016/j.neuron.2009.01.016.

- 23. Li W, Howard JD, Parrish TB, Gottfried JA. Aversive learning enhances perceptual and cortical discrimination of indiscriminable odor cues. Science. 2008;319(5871):1842–1845. doi: 10.1126/science.1152837.

- 24. Braak H, Del Tredici K. Invited article: Nervous system pathology in sporadic Parkinson disease. Neurology. 2008;70(20):1916–1925. doi: 10.1212/01.wnl.0000312279.49272.9f.

- 25. Pessiglione M, et al. An effect of dopamine depletion on decision-making: The temporal coupling of deliberation and execution. J Cogn Neurosci. 2005;17(12):1886–1896. doi: 10.1162/089892905775008661.

- 26. Voon V, Potenza MN, Thomsen T. Medication-related impulse control and repetitive behaviors in Parkinson's disease. Curr Opin Neurol. 2007;20(4):484–492. doi: 10.1097/WCO.0b013e32826fbc8f.

- 27. Lawrence AD, Evans AH, Lees AJ. Compulsive use of dopamine replacement therapy in Parkinson's disease: Reward systems gone awry? Lancet Neurol. 2003;2(10):595–604. doi: 10.1016/s1474-4422(03)00529-5.

- 28. Singer HS. Tourette's syndrome: From behaviour to biology. Lancet Neurol. 2005;4(3):149–159. doi: 10.1016/S1474-4422(05)01012-4.

- 29. Albin RL, Mink JW. Recent advances in Tourette syndrome research. Trends Neurosci. 2006;29(3):175–182. doi: 10.1016/j.tins.2006.01.001.

- 30. Leckman JF. Tourette's syndrome. Lancet. 2002;360(9345):1577–1586. doi: 10.1016/S0140-6736(02)11526-1.

- 31. Wong DF, et al. Mechanisms of dopaminergic and serotonergic neurotransmission in Tourette syndrome: Clues from an in vivo neurochemistry study with PET. Neuropsychopharmacology. 2008;33(6):1239–1251. doi: 10.1038/sj.npp.1301528.

- 32. Tarnok Z, et al. Dopaminergic candidate genes in Tourette syndrome: Association between tic severity and 3′ UTR polymorphism of the dopamine transporter gene. Am J Med Genet B Neuropsychiatr Genet. 2007;144B(7):900–905. doi: 10.1002/ajmg.b.30517.

- 33. Gilbert DL, et al. Altered mesolimbocortical and thalamic dopamine in Tourette syndrome. Neurology. 2006;67(9):1695–1697. doi: 10.1212/01.wnl.0000242733.18534.2c.

- 34. Yoon DY, et al. Dopaminergic polymorphisms in Tourette syndrome: Association with the DAT gene (SLC6A3). Am J Med Genet B Neuropsychiatr Genet. 2007;144B(5):605–610. doi: 10.1002/ajmg.b.30466.

- 35. Schmidt L, et al. Disconnecting force from money: Effects of basal ganglia damage on incentive motivation. Brain. 2008;131(Pt 5):1303–1310. doi: 10.1093/brain/awn045.
