Abstract

This review examines the neural systems underlying auditory word recognition using evidence from both lesion and functional neuroimaging studies. The focus is on how the sound properties of language (its phonetic as well as its phonological properties) are used in the service of identifying a particular word or the concept or meaning associated with that word. Results indicate that auditory word recognition recruits a neural system in which information passes through the network in what appear to be functionally distinct stages: acoustic-phonetic analysis in temporal areas, mapping of sound structure to the lexicon, accessing a lexical candidate and its associated lexical-semantic network in temporo-parietal areas, and lexical selection in frontal areas. Information cascades throughout the network, as shown by the modulation of activation by the ‘goodness’ of fit of the input and by phonological/lexical competition, both in posterior areas, including the superior temporal gyrus and supramarginal gyrus, and in frontal areas, including the inferior frontal gyrus.

Introduction

Recognizing a word requires processing the sound properties of language, mapping these sound structure representations on to lexical form, and ultimately selecting the appropriate word from the thousands of words in the lexicon. Much past research has studied these contributing processes separately. For example, the processes involved in perceiving the sounds of language and delineating the acoustic cues that shape phonetic category structure are typically studied independently of words and their meanings. Conversely, the processes involved in selecting a word and accessing its meaning are typically studied independently of the phonetic details of the input and the acoustic cues giving rise to phonetic categories. Yet word recognition ultimately serves one goal, understanding what the speaker is saying. Explaining it therefore requires delineating the processes that map what the speaker has said phonetically on to the sound shape associated with a word, and from there on to its meaning.

It is the goal of this review to examine the neural systems underlying word recognition processes using both lesion studies and results from functional neuroimaging (fMRI). Together, they provide insight into how the various processes leading to word recognition intersect and, as we will see, interact. And more generally, they provide a window into the functional architecture of the language system. Specifically, we will examine auditory word recognition as it is influenced by the sound properties of language. The sound properties of language include the acoustic-phonetic detail of the sound shape of language which the listener receives as input from a speaker (its phonetic properties) and the more abstract phonological categories of sound used in a particular language system (its phonological properties).

Preliminaries

Understanding the neural systems underlying language processing, and word recognition in particular, draws on a rich literature in both aphasia and neuroimaging. Each research domain provides something that the other does not. Aphasia provides a rich tapestry of abilities and disabilities in language as a function of the underlying pathology. Moreover, as we shall see, different patterns of deficit may emerge under the same task conditions as a result of lesions to different areas, allowing inferences about the functional roles those regions play. However, the lesions of aphasic patients tend to be large, making it difficult to pinpoint precisely the neural area giving rise to a deficit, so strong claims about the neural underpinnings of language processes are difficult to make. In contrast, functional neuroimaging studies provide excellent localization: activation patterns can be delineated on a voxel-by-voxel basis. However, it is not always clear whether activation in an area means that the area is necessary for a specific function (Price et al. 1999). And when several areas are activated within a task (as is often the case), it is impossible to know from the activation alone whether the functions of those areas differ. Together, however, the results of lesion studies and neuroimaging experiments provide converging evidence about the neural systems underlying language and their functional roles. If, for example, an fMRI study shows activation in several areas, the consequences of lesions to those particular areas can be examined to determine whether they are individually or jointly involved and what functional role each may play.

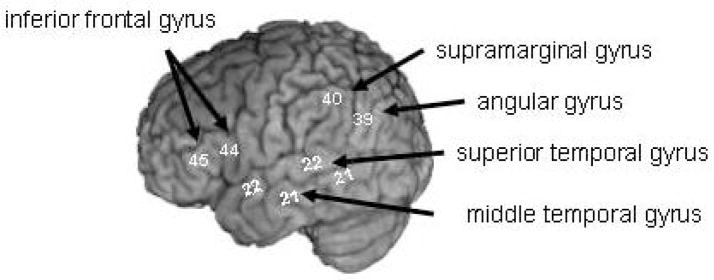

To presage the findings, there is no one area or ‘module’ where word recognition occurs. Rather, word recognition recruits a neural system involving temporal, parietal, and frontal structures (see Figure 1). It is no surprise that the auditory processing of words recruits temporal lobe areas, since the primary auditory areas are located in the temporal lobe. However, frontal areas are also recruited, and the extent of their activation is modulated by the sound structure properties of words (Burton 2001; for reviews see Scott and Johnsrude 2003; Scott and Wise 2004; Hickok and Poeppel 2000). As we will discuss in detail below, these findings suggest that word recognition involves a processing stream engaging multiple neural areas and that, although there are multiple stages of processing, information flow at one stage modulates processing at other stages.

Figure 1.

Neural areas associated with word recognition processes. Lateral view of the left hemisphere. Numbers correspond to Brodmann’s areas.

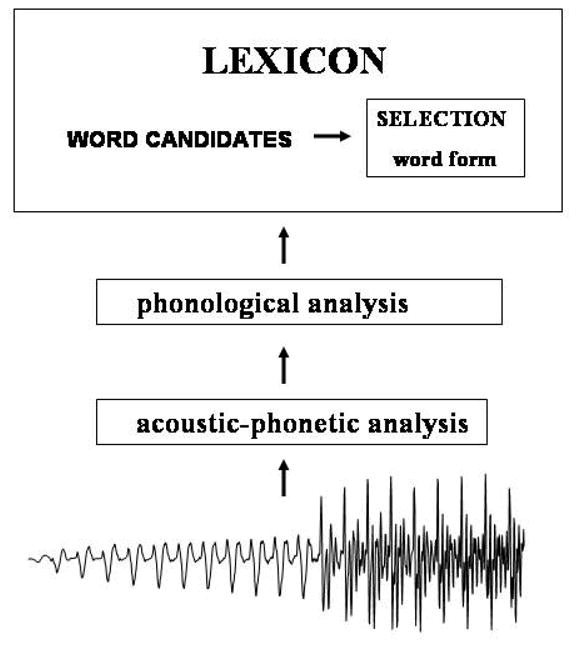

Such modulation of activation across the processing stream has implications for the functional architecture of the language system. It supports models of word recognition with multiple stages of processing organized in a network-like architecture, in which information flow at one stage of processing influences processing at other stages (Gaskell and Marslen-Wilson 1999; McClelland and Elman 1986; McClelland and Rumelhart 1986; Marslen-Wilson 1987; Marslen-Wilson and Welsh 1978). Most models of word recognition propose a series of stages. As shown in Figure 2, the auditory input goes through successive stages that progressively map the acoustic input on to the acoustic-phonetic properties of speech and ultimately on to the more abstract phonological properties of speech. These phonological properties are then mapped on to lexical representations (i.e. words in the lexicon), where the particular lexical entry is selected from the set of potential word candidates. At each stage of processing, representational units are coded in terms of patterns of activation and inhibition, and the extent to which a representational unit is activated is a function of the ‘goodness’ of the input. As a consequence, activation patterns at a particular level of representation are not all-or-none but graded or probabilistic.

Figure 2.

Model of processing stages in word recognition

Where current models of word recognition differ is in the extent to which processing stages influence each other. As we will show, the results of behavioral, lesion, and neuroimaging studies are consistent with models in which information cascades through the system, such that the degree to which a word is recognized is influenced by the stages of processing that precede it. In this way, the ‘goodness’ of the sound structure input at either the phonetic or the phonological level not only affects the activation of its own representational units but also affects the activation level of the lexical form of a word, and this in turn has a cascading effect on the activation of its lexical-semantic network.

The network properties of the functional architecture also influence the extent to which a representational unit may be activated. Because representational units are themselves part of a network, the degree to which a unit is activated depends on whether other units share properties with it and hence compete with it. Units that share many properties compete more strongly than those that share few. Competition is thus an inherent part of the word recognition system, and selecting a word candidate requires resolving it. The extent of competition influences the time course and patterns of activation at each level of representation and, ultimately, the performance of the network: the greater the competition, the more difficult it is to select the word candidate. As we will show, competition derived from both acoustic-phonetic properties and phonological structure modulates word recognition processes. Hence, earlier processing stages generate multiple competing representations, which are transmitted to later stages of processing (Dell and O’Seaghdha 1991; Dell et al. 1997; Gaskell and Marslen-Wilson 1999; Rapp and Goldrick 2000; McClelland 1979; McClelland and Rumelhart 1986).

Influence of Acoustic Properties and Phonetic Category Structure on Word Recognition

The speech perception literature has shown that within a phonetic category, some members are better exemplars than others. In particular, when exemplars of a phonetic category vary in equal steps along an acoustic dimension such as voice-onset time (VOT), their category goodness is graded (Blumstein et al. 2005; Myers 2007). (Voice-onset time is the timing relation between the release of a stop consonant and the onset of vocal cord vibration.) Given a [ta] to [da] VOT continuum, subjects show slower reaction-time (RT) latencies and poorer goodness ratings for the phonetic category [t] (or for [d]) as the stimuli approach the acoustic-phonetic boundary between [t] and [d], even though categorization performance for those stimuli may be the same. The questions are, first, how phonetic category structure affects access to words and, second, what neural areas are engaged in such processing.

One way to explore these questions is to investigate the extent to which a poor exemplar of a phonetic category influences access to the lexical representation of a word and, in turn, access to its lexical-semantic network. The semantic priming paradigm provides a means of addressing this question. In this paradigm, subjects are presented with a prime followed by a target and are asked to make a lexical decision on the target, i.e. to decide whether or not it is a word, by pressing one of two buttons. Accuracy and RT for correct responses are measured. Subjects respond faster to word pairs that are semantically related than to pairs that are not: participants are faster in making lexical decisions to a word such as dog when it is preceded by a semantically related word like cat than when it is preceded by a semantically unrelated word such as ring.
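In this paradigm the priming effect is usually expressed as a difference score: mean RT to targets after unrelated primes minus mean RT after related primes. The minimal Python sketch below computes that score. The function name `priming_magnitude` and the RT values are hypothetical, chosen only for illustration, and the inputs are assumed to be RTs in milliseconds from correct trials.

```python
from statistics import mean

def priming_magnitude(related_rts, unrelated_rts):
    """Return the semantic priming effect in ms.

    Computed as mean RT after unrelated primes minus mean RT after
    related primes, so a positive value means related primes speeded
    the lexical decision. Inputs are assumed to be RTs (ms) from
    correct trials only.
    """
    if not related_rts or not unrelated_rts:
        raise ValueError("both conditions need at least one RT")
    return mean(unrelated_rts) - mean(related_rts)

# Hypothetical RTs (ms), for illustration only; not data from any study.
related = [610, 625, 598]      # e.g. cat -> dog
unrelated = [660, 671, 649]    # e.g. ring -> dog
print(priming_magnitude(related, unrelated))
```

On this measure, a reduction in priming simply means a smaller positive score in one prime condition than in another.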

Changes in the ‘goodness’ of the phonetic input of the prime affect the magnitude of semantic priming. Thus, if the VOT of the initial voiceless stop of a prime such as cat is shortened, so that it is a poorer exemplar of the voiceless phonetic category, the magnitude of semantic priming is reduced (Andruski et al. 1994; Utman et al. 2001). Under the functional architecture described above, this effect emerges because the mapping of the acoustic-phonetic input on to phonetic category structure, and ultimately on to the lexical representation of the word, is influenced by the goodness of the auditory input. In this case, the initial voiceless stop is not only a poorer exemplar of the voiceless phonetic category [k] but is also closer in acoustic-phonetic space to the voiced phonetic category [g]. The auditory input is therefore a poorer match to its lexical representation (‘cat’), and this affects information flow throughout the system, ultimately influencing the degree to which the lexical-semantic network associated with cat is activated. Thus, the ‘goodness’ of the auditory input has a cascading effect on the processes involved in mapping sound structure on to lexical form and ultimately on to meaning, which suggests that access to the lexicon is not ‘all or none’ but graded (see also McMurray 2002, who showed graded access to words using an eyetracking paradigm).

Broca’s aphasics with left hemisphere damage including the inferior frontal gyrus (IFG) (see Figure 1) are sensitive to the goodness of the auditory input. Like normal participants, they show a reduction in the magnitude of semantic priming when the sound shape of a word is a poorer match to its lexical representation than when it is a good match (Utman et al. 2001; Misiurski et al. 2005). Thus, Broca’s aphasics show priming for ‘cat’-‘dog’ and reduced priming for acoustically modified ‘c*at’-‘dog’. These findings are critically important because they indicate that damage to frontal areas does not affect the early stages of mapping sound structure on to lexical form and accessing the meaning of a word. In this sense, information flow is normal: Broca’s aphasics show graded activation of the lexical-semantic network as a function of phonetic category goodness.

Nonetheless, the goodness of the auditory input is not the whole story. Another crucial factor is the extent to which the auditory input is perceptually similar to a word in the lexicon other than the target, in other words, whether access to the word is influenced by the presence of a lexical competitor. Here, the presence of a lexical competitor has severe consequences for patients with lesions involving the left IFG: whereas normal subjects show a reduction in the magnitude of priming under these conditions, Broca’s aphasics lose semantic priming altogether. Broca’s aphasics show normal semantic priming for the prime-target pair pear-fruit when the prime is a good exemplar and matches its phonological representation. However, pear has a voiced competitor, bear. If the VOT of the initial [p] of pear is shortened, the token is not only a poorer exemplar of [p] but is also nearer in acoustic-phonetic space to the sound shape representation for [b]. As a result, the stimulus is a poorer representation of the lexical entry ‘pear’, and its acoustic-phonetic representation is also closer to the lexical representation of bear, enhancing the extent to which pear and bear compete with each other. The poor exemplar of pear thus reduces the degree of match between the auditory input and the lexical representation of pear while increasing the degree to which the input matches the lexical representation of the competitor bear. Under these circumstances, Broca’s aphasics lose semantic priming for fruit (Utman et al. 2001).
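The competition account in this paragraph can be illustrated with a toy calculation. In the sketch below, which is an assumption made for illustration and not a model from the cited studies, each word's match to the input is a Gaussian function of the distance between the token's VOT and a hypothetical VOT prototype for its initial consonant, and each candidate's share of activation is computed with the Luce choice rule (its match divided by the summed match of all candidates). The prototype values (60 ms for [p], 5 ms for [b]) and the 15 ms width are hypothetical.

```python
import math

# Hypothetical VOT prototypes (ms) and tuning width; illustrative only.
PROTOTYPE_MS = {"pear": 60.0, "bear": 5.0}  # initial [p] vs. initial [b]
WIDTH_MS = 15.0

def match(vot_ms, prototype_ms, width_ms=WIDTH_MS):
    """Gaussian goodness of fit (0..1] between a token's VOT and a prototype."""
    z = (vot_ms - prototype_ms) / width_ms
    return math.exp(-0.5 * z * z)

def activations(vot_ms):
    """Return each candidate's raw match and its Luce-choice share.

    The share divides a candidate's match by the summed match of all
    candidates, so a token closer to [b] both lowers 'pear' and
    raises its competitor 'bear'.
    """
    raw = {word: match(vot_ms, proto) for word, proto in PROTOTYPE_MS.items()}
    total = sum(raw.values())
    return {word: (m, m / total) for word, m in raw.items()}

for vot in (60, 45, 35):  # good [p] exemplar, then shortened VOTs
    acts = activations(vot)
    print(vot, {w: f"match={m:.2f}, share={s:.2f}" for w, (m, s) in acts.items()})
```

In this toy version, shortening the VOT lowers the raw match for pear and shifts part of the relative activation to bear. On the account given in the text, it is this increased competition, rather than the poorer match alone, that Broca’s aphasics have difficulty resolving.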

Consistent with these results is the presence of phonetically driven mediated semantic priming. In this type of priming, a target word shows faster RT latencies when it is preceded by a prime that is phonologically related to a word that is a semantic associate of the target. For example, an acoustically manipulated time primes penny via the phonological competitor dime (Misiurski et al. 2005). Presenting time with a shortened VOT of the initial stop consonant not only decreases its match to the representation of time but also increases its closeness to the voiced competitor dime, and with it the activation of the lexical-semantic network associated with dime, i.e. penny. Given that Broca’s aphasics show deficits under conditions of lexical competition, they should fail to show priming under these conditions, and this is the case. Although Broca’s aphasics show normal priming for the prime-target pairs time-clock and dime-penny, they fail to show mediated priming when the acoustic input of the prime has been modified to be a poor exemplar of [t] and closer in acoustic-phonetic space to [d], i.e. the modified time fails to prime penny (Misiurski et al. 2005; see also Baum 1997). Thus, the role of frontal areas in word recognition appears to be ultimately to select the lexical candidate, and the degree to which these areas are recruited is modulated by the presence of lexical competitors driven by the nature of the acoustic-phonetic input.

Previous work by Thompson-Schill and colleagues (Thompson-Schill et al. 1997, 1999; Snyder et al. 2007) suggests that frontal areas, and in particular the IFG, are involved in resolving competition among semantic alternatives. The results of the studies with aphasic patients suggest that frontal areas are recruited not only in selecting among competing semantic alternatives but also when the competition arises at acoustic-phonetic stages of processing. However, because the lesions of Broca’s aphasics typically extend well beyond Broca’s area (BA44 and 45), it is impossible to delineate further the neural areas contributing to these selection processes. Nonetheless, as discussed below, evidence from the neuroimaging literature suggests that the IFG as well as temporo-parietal areas are recruited under conditions of phonological-lexical competition.

What role do temporal and parietal areas play in word recognition when the acoustic-phonetic input is varied? We know from both lesion and functional neuroimaging studies that temporal areas, including the superior temporal gyrus (STG), and parietal areas, including the supramarginal (SMG) and angular gyri (AG) (see Figure 1), are recruited in processing the acoustic properties of speech (Blumstein et al. 1977b, 1984; Caplan et al. 1995; Carpenter and Rutherford 1973; Gow and Caplan 1996; Blumstein et al. 2005; Binder et al. 1996, 2000, 2004; Zatorre et al. 1992, 1996) and more generally in speech perception (for reviews see Hickok and Poeppel 2000; Scott and Johnsrude 2003; Scott and Wise 2004). These areas play a crucial role in mapping the phonological properties of speech on to lexical form. It is to this issue that we now turn.

Influence of Phonological Structure on Word Recognition

As described above, most models of speech processing assume that the acoustic-phonetic input is transformed into more abstract phonological representations. These representations appear to correspond to the phonetic features that underlie the sound segments of language. Thus, the sound segment [b] is composed of a set of features or attributes corresponding to the facts that it is a consonant [+consonant], it is made with a closure [+stop], the closure is at the lips [+labial], and the vocal folds vibrate either prior to or shortly after the consonant release [+voice]. In general, participants make more errors discriminating pairs of stimuli that share phonetic features than pairs that do not, e.g. [pa – ta] vs. [pa – la], and more errors discriminating pairs distinguished by one phonetic feature than pairs distinguished by two, e.g. [pa – ba] vs. [pa – da].
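Under this view, the similarity of two segments can be approximated by counting the features on which they differ. The sketch below uses a deliberately reduced, hypothetical feature set (manner as stop or not, place, and voicing; [+consonant] is omitted because all of these segments share it) and is not a full phonological analysis. It reproduces the ordering described above: [pa – ba] and [pa – ta] differ by one feature, [pa – da] by two, and [pa – la] by three.

```python
# Simplified, hypothetical feature specification for a few English onsets.
FEATURES = {
    "p": {"stop": True,  "place": "labial",   "voice": False},
    "b": {"stop": True,  "place": "labial",   "voice": True},
    "t": {"stop": True,  "place": "alveolar", "voice": False},
    "d": {"stop": True,  "place": "alveolar", "voice": True},
    "l": {"stop": False, "place": "alveolar", "voice": True},
}

def feature_distance(a, b):
    """Count the features on which segments a and b differ."""
    fa, fb = FEATURES[a], FEATURES[b]
    return sum(fa[name] != fb[name] for name in fa)

for pair in [("p", "b"), ("p", "t"), ("p", "d"), ("p", "l")]:
    print(pair, feature_distance(*pair))
```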

Posterior aphasics with lesions involving temporo-parietal areas and specifically the STG, SMG, and bordering parietal operculum show impairments in discriminating words distinguished by minimal phonological contrasts (Caplan et al. 1995). There is no question that their performance is enhanced as a function of the lexical status of the stimuli, i.e. they do better discriminating words than nonwords (Baker et al. 1981). Nonetheless, they still show pathological performance even when the stimuli are word pairs and not nonsense syllables. Even patients with IFG damage show mild impairments in discriminating pairs of phonologically similar words or nonwords (Baker et al. 1981; Blumstein et al. 1977a; Miceli et al. 1980).

Thus, damage to temporal, parietal, and frontal areas affects the processing of the sound structure of words, with the greatest impairments occurring after damage to temporo-parietal areas. Neuroimaging findings are consistent with these results (Indefrey and Levelt 2004; Hickok and Poeppel 2007; Paulesu et al. 1993). Word perception tasks reveal activation in temporal areas, including the STG and the middle temporal gyrus (MTG), in posterior temporo-parietal areas (the SMG and AG), and in frontal areas (the IFG) (see Figure 1). It has been proposed that the temporal areas are involved in acoustic-phonetic analysis, the MTG and temporo-parietal areas in mapping the sound structure on to lexical form (lexical code retrieval and phonological working memory), and the frontal areas in lexical selection. These findings are consistent with the view that the mapping of sound structure to lexical form recruits a distributed neural system involving temporal, parietal, and frontal structures, with the temporo-parietal areas particularly involved in mapping sound structure on to lexical form (Hickok and Poeppel 2000).

Even though both frontal and temporo-parietal areas are involved in mapping sound structure on to lexical form, a study by Tyler (1992) using a gating paradigm showed that patients with posterior or anterior lesions behave similarly to normal participants in identifying potential word candidates as they are presented with increasingly larger portions of the auditory input of words. This paradigm has shown that normal participants report a number of word candidates when they have heard little of the auditory input. As the amount of auditory input increases, the number of possible word candidates that match the sound structure input decreases until, at some point, only one word is uniquely identified (Grosjean 1980). The pattern of results for brain-injured patients is similar to that of age-matched controls (Tyler 1992): they identify a similar set of candidate words for a particular auditory input, they recognize the word at approximately the same point as controls, and this ‘uniqueness point’ (the point where the word is completely disambiguated) occurs before all of the auditory input is presented, i.e. prior to the word’s offset. Despite these spared abilities, examination of word recognition processes with other paradigms suggests that word recognition is modulated by phonological structure and by the number of potential word candidates that share it, and that both anterior and posterior areas are recruited under such conditions.
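The narrowing of the candidate set in the gating paradigm, and the resulting uniqueness point, can be sketched with a toy lexicon. This is an illustration under stated assumptions: letters stand in for phonemes, the five-word lexicon is hypothetical, and real gating studies present acoustic fragments of increasing duration rather than symbol strings.

```python
# Hypothetical toy lexicon; letters stand in for phonemes.
LEXICON = ["hammer", "hammock", "hamster", "candle", "candy"]

def cohort(prefix, lexicon=LEXICON):
    """Return the words still consistent with the input heard so far."""
    return [word for word in lexicon if word.startswith(prefix)]

def uniqueness_point(word, lexicon=LEXICON):
    """Return the shortest prefix length at which `word` is the only candidate.

    Returns None if the word never becomes unique, e.g. when it is itself
    the beginning of a longer word in the lexicon.
    """
    for n in range(1, len(word) + 1):
        if cohort(word[:n], lexicon) == [word]:
            return n
    return None

for n in range(1, len("hammock") + 1):
    gate = "hammock"[:n]
    print(gate, cohort(gate))
print("uniqueness point:", uniqueness_point("hammock"), "of", len("hammock"))
```

For hammock, the cohort shrinks from three candidates to two at 'hamm' and to one at 'hammo', i.e. before the word's offset, paralleling the behavior described above.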

It has been shown that the ease or difficulty with which a spoken word is accessed is influenced by the number of words that are phonologically similar to it (Luce and Pisoni 1998; Luce and Large 2001; Vitevitch and Luce 1999). Words that have many phonologically similar ‘neighbors’ are harder to access than words that have few. In particular, lexical decision and naming latencies are slower for words from dense neighborhoods than for words from sparse neighborhoods (Luce and Pisoni 1998). These findings are consistent with the view that all potential word candidates that share sound structure properties with the target word are partially activated and that the candidate that best matches the input is eventually selected. The greater the number of competitors, the harder it is to select the target.
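The neighborhood count can be made concrete. A common operationalization, following Luce and Pisoni (1998), treats two words as neighbors if they differ by a single phoneme substitution, addition, or deletion. The minimal sketch below implements that definition; the transcriptions (loosely modeled on ARPAbet symbols) and the seven-word lexicon are hypothetical, whereas real density counts are usually computed over a large lexical database.

```python
# Hypothetical toy lexicon with ARPAbet-like transcriptions (illustration only).
LEXICON = {
    "cat": "k ae t", "bat": "b ae t", "cap": "k ae p", "cut": "k ah t",
    "at": "ae t", "scat": "s k ae t", "dog": "d ao g",
}
PRON = {word: tuple(p.split()) for word, p in LEXICON.items()}

def is_neighbor(a, b):
    """True if b differs from a by one phoneme substitution, addition, or deletion."""
    if a == b:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Deleting one phoneme from the longer form must yield the shorter one.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))

def neighborhood_density(word):
    """Count the lexicon entries that are one-phoneme neighbors of `word`."""
    target = PRON[word]
    return sum(is_neighbor(target, other) for w, other in PRON.items() if w != word)

for word in ("cat", "dog"):
    print(word, neighborhood_density(word))
```

Here cat, with five neighbors (bat, cap, cut, at, scat), would count as a denser-neighborhood word than dog, which has none in this toy lexicon.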

Broca’s aphasics with damage including the IFG show normal lexical density effects in a lexical decision task: like normal control subjects, they show slower RT latencies to words from high-density neighborhoods than to words from low-density neighborhoods (Rissman and Blumstein, in preparation). And functional neuroimaging with normal subjects shows increased activation in the SMG as a function of lexical density, with greater activation for high-density than for low-density words (Prabhakaran et al. 2006). Taken together, these results suggest that the SMG is critically involved in mapping sound structure to the lexicon and that greater processing resources are required to access words from dense phonological neighborhoods. Surprisingly, the IFG did not appear to be involved in resolving phonological-lexical competition. Frontal areas including the IFG are recruited under conditions of semantic competition, as discussed earlier (see also Swaab et al. 1998; Bilenko et al. 2008), but they do not appear to be recruited under conditions of phonological competition in this task. Had they been, patients with lesions including the IFG should have shown deficits in selecting words from high-density compared to low-density neighborhoods, and the neuroimaging findings of Prabhakaran et al. (2006) should have shown increased IFG activation for high-density compared to low-density words.

Taken together, these findings raise the possibility that there is a functional division in those neural areas recruited in the resolution of competition with the SMG resolving phonological-lexical competition and the IFG resolving lexical-semantic competition. However, it is important to consider the task demands used to investigate the neighborhood density effects. In particular, recruitment of the SMG occurred when subjects were required to determine the lexical status of the stimulus. In this case, they only needed to determine whether there was a match between the auditory input and a lexical entry. Thus, this task tapped only the mapping of sound structure on to lexical form and not the mapping of the lexical target on to a conceptual representation of the auditory input. Critically, as we will show below, when subjects have to access the conceptual as well as the phonological representation of a word by pointing to a picture from a stimulus array corresponding to an auditorily presented word, evidence from both lesion studies and functional neuroimaging indicates that both the IFG and posterior areas including the posterior STG and SMG are modulated by the presence of phonological/lexical competition (cf. also Celsis et al. 1999; Binder and Price 2001; Gelfand and Bookheimer 2003; Hickok and Poeppel 2000; Gold and Buckner 2002). Let us turn to the evidence.

Behavioral findings with normal participants using an eye-tracking paradigm have shown that the presence of an onset competitor influences the time course of selecting the picture of an auditory target (Tanenhaus et al. 1995). In particular, when subjects are asked to point to the picture corresponding to an auditorily presented word in a four-picture display containing the target, e.g. hammock, a word that shares the onset of the target, e.g. hammer, and two semantically and phonologically unrelated distractors, e.g. chocolate and monkey, they are initially more likely to look at the competitor than at the unrelated distractors (Allopenna et al. 1998).

Results of a neuroimaging study using the eyetracking paradigm to explore the neural systems underlying this effect showed increased activation in two regions when competitor trials were compared with no-competitor trials (Righi et al. 2009). One cluster emerged in the posterior STG, extending into the SMG, and two clusters emerged in the IFG, one in Brodmann’s area (BA) 44 and the other in BA45 (see Figure 1). Thus, both the SMG and the IFG are recruited in the processes of lexical selection, and their activation is modulated by phonological competition.

Consistent with these findings are the results of a series of experiments with Wernicke’s aphasics with lesions involving posterior areas (including the posterior STG and SMG) and Broca’s aphasics with lesions involving anterior areas (including the IFG) (Yee et al. 2008). One eyetracking experiment was patterned after the Allopenna et al. (1998) and Righi et al. (2009) studies discussed above and examined phonological onset competition. The second investigated whether phonological competitor effects would emerge even when the onset competitor was not present in the display. Similar to the lexical decision studies described earlier, this study investigated phonologically mediated semantic priming effects. That is, given the target word hammock, normal subjects preferentially look at the picture of a nail (semantically related to the onset competitor of the target, i.e. hammer) compared to two unrelated distractors (Yee and Sedivy 2006; Yee et al. 2008).

Results of the two experiments revealed pathological patterns in both groups of patients (Yee et al. 2008). Although both Broca’s and Wernicke’s aphasics successfully looked at the picture of the auditorily presented target, patients with posterior damage looked increasingly more at the competitor stimuli than at the distractors over time. In contrast, patients with anterior damage lost the competitor effect and were as likely to look at the distractors as at the competitor. These different patterns suggest separate functional roles for posterior and anterior areas in the resolution of competition. Thus, damage to either posterior or anterior areas has consequences for word recognition; both lesion groups show deficits as a function of phonological competition, suggesting that both areas are part of a common network underlying the processes that ultimately allow a listener to recognize and access words (cf. also Janse 2006).

Functional Architecture and Neural Systems Underlying Word Recognition

The results of the studies reviewed in this paper are consistent with models of word recognition in which information flow from one stage of processing has a cascading effect on later stages (Gaskell and Marslen-Wilson 1999; McClelland and Elman 1986; McClelland and Rumelhart 1986; Marslen-Wilson 1987; Marslen-Wilson and Welsh 1978). They challenge modular, feed-forward models in which gradations in activation affect only the current level of processing and processing stages have limited influence on each other (Levelt 1992; Levelt et al. 1999). In such models, a processing stage generates a single representation once its computations are complete, and only this single output representation is transmitted to subsequent stages. Thus, these models do not allow for graded activation in subsequent processing stages.

The results from lesion studies and functional neuroimaging experiments support the principles on which the functional architecture of the word recognition system appears to be based. Namely, word recognition recruits a neural system involving anterior, parietal, and temporal areas. Information passes through these areas in what appears to be functionally distinct stages – acoustic-phonetic analysis in temporal areas (STG), mapping of sound structure to the lexicon and accessing a lexical candidate and its associated lexical-semantic network in temporo-parietal areas (MTG, SMG, AG), and lexical selection in frontal areas (IFG). These areas are interconnected and information cascades throughout the network as shown by graded activation during word recognition in these areas as a function of the nature of the acoustic-phonetic input and the extent of lexical competition. Such graded activation affecting this neural network has been shown not only in word recognition processes but also in the perception of the phonetic categories of speech (Blumstein et al. 2005; Myers 2007), in the influence of the lexical status of a word on phonetic perception (Myers and Blumstein 2008), and in semantic priming (Rissman et al. 2003; Cardillo et al. 2004).

Acknowledgments

This research was supported in part by NIH Grants R01 DC006220 and R01 DC00314 from the National Institute on Deafness and Other Communication Disorders. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Deafness and Other Communication Disorders or the National Institutes of Health.

Works Cited

- Allopenna Paul D, Magnuson James S, Tanenhaus Michael K. Tracking the time course of spoken word recognition using eye movements: evidence for continuous mapping models. Journal of Memory and Language. 1998;38:419–439.

- Andruski Jean E, Blumstein Sheila E, Burton Martha. The effect of subphonetic differences on lexical access. Cognition. 1994;52:163–187. doi: 10.1016/0010-0277(94)90042-6.

- Baker Errol, Blumstein Sheila E, Goodglass Harold. Interaction between phonological and semantic factors in auditory comprehension. Neuropsychologia. 1981;19:1–16. doi: 10.1016/0028-3932(81)90039-7.

- Baum Shari R. Phonological, semantic, and mediated priming in aphasia. Brain and Language. 1997;60:347–359. doi: 10.1006/brln.1997.1829.

- Bilenko Natalia, Grindrod Christopher, Blumstein Sheila E. Neural correlates of semantic competition during processing of ambiguous words. Journal of Cognitive Neuroscience. 2008 Aug 14; epub ahead of print. doi: 10.1162/jocn.2009.21073.

- Binder Jeffrey R, Price Cathy J. Functional neuroimaging of language. In: Cabeza Roberto, Kingstone Alan, editors. Handbook of functional neuroimaging. Cambridge, MA: MIT Press; 2001. pp. 187–254.

- Binder JR, Frost JA, Hammeke TA, Rao SM, Cox RW. Function of the left planum temporale in auditory and linguistic processing. Brain. 1996;119:1239–1247. doi: 10.1093/brain/119.4.1239.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cerebral Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Binder Jeffrey R, Liebenthal Einat, Possing Edward T, Medler David A, Ward B Douglas. Neural correlates of sensory and decision processes in auditory object identification. Nature Neuroscience. 2004;7:295–301. doi: 10.1038/nn1198.

- Blumstein Sheila E, Baker Errol, Goodglass Harold. Phonological factors in auditory comprehension in aphasia. Neuropsychologia. 1977a;15:19–30. doi: 10.1016/0028-3932(77)90111-7.

- Blumstein Sheila E, Cooper William E, Zurif Edgar B, Caramazza Alfonso. The perception and production of voice-onset time in aphasia. Neuropsychologia. 1977b;15:371–383. doi: 10.1016/0028-3932(77)90089-6.

- Blumstein Sheila E, Milberg William, Shrier Robin. Semantic processing in aphasia: Evidence from an auditory lexical decision task. Brain and Language. 1982;17:301–315. doi: 10.1016/0093-934x(82)90023-2.

- Blumstein Sheila E, Myers Emily B, Rissman Jesse. The perception of voice onset time: An fMRI investigation of phonetic category structure. Journal of Cognitive Neuroscience. 2005;17:1353–1366. doi: 10.1162/0898929054985473.

- Blumstein Sheila E, Tartter Vicky C, Nigro Georgia, Statlender Sheila. Acoustic cues for the perception of place of articulation in aphasia. Brain and Language. 1984;22:128–149. doi: 10.1016/0093-934x(84)90083-x.

- Burton Martha W. The role of inferior frontal cortex in phonological processing. Cognitive Science. 2001;25:695–709.

- Caplan David, Gow David, Makris Nicholas. Analysis of lesions by MRI in stroke patients with acoustic-phonetic processing deficits. Neurology. 1995;45:293–298. doi: 10.1212/wnl.45.2.293.

- Cardillo Eileen R, Aydelott Jennifer, Matthews Paul M, Devlin Joseph T. Left inferior prefrontal cortex activity reflects inhibitory rather than facilitatory priming. Journal of Cognitive Neuroscience. 2004;16:1552–1561. doi: 10.1162/0898929042568523.

- Carpenter Robert L, Rutherford David R. Acoustic cue discrimination in adult aphasia. Journal of Speech and Hearing Research. 1973;16:534–544. doi: 10.1044/jshr.1603.534.

- Celsis P, Boulanouar K, Doyon B, Ranjeva JP, Berry I, Nespoulous JL, Chollet F. Differential fMRI responses in the left posterior superior temporal gyrus and left supramarginal gyrus to habituation and change detection in syllables and tones. Neuroimage. 1999;9:135–144. doi: 10.1006/nimg.1998.0389.

- Connine Cynthia M, Titone Debra, Dellman Thomas, Blasko Dawn. Similarity mapping in spoken word recognition. Journal of Memory and Language. 1997;37:463–480.

- Dell Gary S, O’Seaghdha Padraig G. Mediated and convergent lexical priming in language production: A comment on Levelt et al. 1991. Psychological Review. 1991;98:604–614. doi: 10.1037/0033-295x.98.4.604.

- Dell Gary S, Schwartz Myrna F, Martin Nadine, Saffran Eleanor M, Gagnon Deborah A. Lexical access in aphasic and nonaphasic speakers. Psychological Review. 1997;104:801–838. doi: 10.1037/0033-295x.104.4.801.

- Gaskell M Gareth, Marslen-Wilson William D. Ambiguity, competition, and blending in spoken word recognition. Cognitive Science. 1999;23:439–462.

- Gelfand Jenna R, Bookheimer Susan Y. Dissociating neural mechanisms of temporal sequencing and processing phonemes. Neuron. 2003;38:831–842. doi: 10.1016/s0896-6273(03)00285-x.

- Gold Brian T, Buckner Randy L. Common prefrontal regions coactivate with dissociable posterior regions during controlled semantic and phonological tasks. Neuron. 2002;35:803–812. doi: 10.1016/s0896-6273(02)00800-0.

- Gow David W, Caplan David. An examination of impaired acoustic-phonetic processing in aphasia. Brain and Language. 1996;52:386–407. doi: 10.1006/brln.1996.0019.

- Grosjean François. Spoken word recognition processes and the gating paradigm. Perception and Psychophysics. 1980;28:267–283. doi: 10.3758/bf03204386.

- Hickok Gregory, Poeppel David. Towards a functional neuroanatomy of speech perception. Trends in Cognitive Sciences. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- Hickok Gregory, Poeppel David. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8:393–402. doi: 10.1038/nrn2113.

- Indefrey Peter, Levelt Willem JM. The spatial and temporal signatures of word production components. Cognition. 2004;92:101–144. doi: 10.1016/j.cognition.2002.06.001.

- Janse Esther. Lexical competition effects in aphasia: Deactivation of lexical candidates in spoken word processing. Brain and Language. 2006;97:1–11. doi: 10.1016/j.bandl.2005.06.011.

- Levelt Willem. Accessing words in speech production: Stages, processes and representations. Cognition. 1992;42:1–22. doi: 10.1016/0010-0277(92)90038-j.

- Levelt Willem JM, Roelofs Ardi, Meyer Antje S. A theory of lexical access in speech production. Behavioral and Brain Sciences. 1999;22:1–75. doi: 10.1017/s0140525x99001776.

- Luce Paul A, Large Nathan R. Phonotactics, density, and entropy in spoken word recognition. Language and Cognitive Processes. 2001;16:565–581.

- Luce Paul A, Pisoni David B. Recognizing spoken words: The neighborhood activation model. Ear and Hearing. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Marslen-Wilson William. Functional parallelism in spoken word-recognition. Cognition. 1987;25:71–102. doi: 10.1016/0010-0277(87)90005-9.

- Marslen-Wilson William, Welsh Alan. Processing interactions and lexical access during word-recognition in continuous speech. Cognitive Psychology. 1978;10:29–63.

- McClelland James L. On the time relations of mental processes: An examination of systems of processes in cascade. Psychological Review. 1979;86:287–330.

- McClelland James L, Elman Jeffrey. The TRACE model of speech perception. Cognitive Psychology. 1986;18:1–86. doi: 10.1016/0010-0285(86)90015-0.

- McClelland James L, Rumelhart David. Parallel distributed processing. Vol. 2: Psychological and biological models. Cambridge, MA: MIT Press; 1986.

- McMurray Bob, Tanenhaus Michael K, Aslin Richard N. Gradient effects of within-category phonetic variation on lexical access. Cognition. 2002;86:B33–B42. doi: 10.1016/s0010-0277(02)00157-9.

- Miceli Gabriele, Gainotti Guido, Caltagirone Carlo, Masullo Carlo. Some aspects of phonological impairment in aphasia. Brain and Language. 1980;11:159–169. doi: 10.1016/0093-934x(80)90117-0.

- Milberg William, Blumstein Sheila E. Lexical decision and aphasia: Evidence for semantic processing. Brain and Language. 1981;14:371–385. doi: 10.1016/0093-934x(81)90086-9.

- Misiurski Cara, Blumstein Sheila E, Rissman Jesse, Berman Daniel. The role of lexical competition and acoustic-phonetic structure in lexical processing: Evidence from normal subjects and aphasic patients. Brain and Language. 2005;93:64–78. doi: 10.1016/j.bandl.2004.08.001.

- Myers Emily B. Dissociable effects of phonetic competition and category typicality in a phonetic categorization task: An fMRI investigation. Neuropsychologia. 2007;45:1463–1473. doi: 10.1016/j.neuropsychologia.2006.11.005.

- Myers Emily B, Blumstein Sheila E. The neural bases of the lexical effect: An fMRI investigation. Cerebral Cortex. 2008;18:278–288. doi: 10.1093/cercor/bhm053.

- Paulesu E, Frith CD, Frackowiak RSJ. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- Prabhakaran Ranjani, Blumstein Sheila E, Myers Emily B, Hutchinson Emmette, Britton Brendan. An event-related investigation of phonological-lexical competition. Neuropsychologia. 2006;44:2209–2221. doi: 10.1016/j.neuropsychologia.2006.05.025.

- Price CJ, Mummery C, Moore CJ, Frackowiak RSJ, Friston KJ. Delineating necessary and sufficient neural systems with functional imaging studies of neuropsychological patients. Journal of Cognitive Neuroscience. 1999;11:371–382. doi: 10.1162/089892999563481.

- Rapp Brenda, Goldrick Matthew. Discreteness and interactivity in spoken word production. Psychological Review. 2000;107:460–499. doi: 10.1037/0033-295x.107.3.460.

- Righi Giulia, Blumstein Sheila E, Mertus John, Worden Michael S. Neural systems underlying lexical competition: An eyetracking and fMRI study. Journal of Cognitive Neuroscience. 2009; in press. doi: 10.1162/jocn.2009.21200.

- Rissman Jesse, Eliassen James, Blumstein Sheila E. An event-related fMRI investigation of implicit semantic priming. Journal of Cognitive Neuroscience. 2003;15:1160–1175. doi: 10.1162/089892903322598120.

- Scott Sophie K, Johnsrude Ingrid S. The neuroanatomical and functional organization of speech perception. Trends in Neurosciences. 2003;26:100–107. doi: 10.1016/S0166-2236(02)00037-1.

- Scott Sophie K, Wise Richard JS. The functional neuroanatomy of prelexical processing in speech perception. Cognition. 2004;92:13–45. doi: 10.1016/j.cognition.2002.12.002.

- Snyder Hannah R, Feigenson Keith, Thompson-Schill Sharon L. Prefrontal cortical response to conflict during semantic and phonological tasks. Journal of Cognitive Neuroscience. 2007;19:761–775. doi: 10.1162/jocn.2007.19.5.761.

- Swaab Tamara, Brown Colin, Hagoort Peter. Understanding ambiguous words in sentence contexts: electrophysiological evidence for delayed contextual selection in Broca’s aphasia. Neuropsychologia. 1998;36:737–761. doi: 10.1016/s0028-3932(97)00174-7.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, Sedivy JC. Integration of visual and linguistic information in spoken language comprehension. Science. 1995;268:632–634. doi: 10.1126/science.7777863.

- Thompson-Schill Sharon L, D’Esposito Mark, Aguirre Geoffrey K, Farah Martha J. Role of the left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proceedings of the National Academy of Sciences. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792.

- Thompson-Schill Sharon L, D’Esposito Mark, Kan Irene P. Effects of repetition and competition on activation in left prefrontal cortex during word generation. Neuron. 1999;23:513–522. doi: 10.1016/s0896-6273(00)80804-1.

- Tyler Lorraine K. Spoken language comprehension. Cambridge, MA: MIT Press; 1992.

- Utman Jennifer A, Blumstein Sheila E, Sullivan Kelly. Mapping from sound to meaning: Reduced lexical activation in Broca’s aphasics. Brain and Language. 2001;79:444–472. doi: 10.1006/brln.2001.2500.

- Vitevitch Michael S, Luce Paul A. Probabilistic phonotactics and neighborhood activation in spoken word recognition. Journal of Memory and Language. 1999;40:374–408.

- Yee Eiling, Blumstein Sheila E, Sedivy Julie. Lexical-semantic activation in Broca’s and Wernicke’s aphasia: Evidence from eye movements. Journal of Cognitive Neuroscience. 2008;20:592–612. doi: 10.1162/jocn.2008.20056.

- Yee Eiling, Sedivy Julie. Eye movements to pictures reveal transient semantic activation during spoken word recognition. Journal of Experimental Psychology: Learning, Memory and Cognition. 2006;32:1–14. doi: 10.1037/0278-7393.32.1.1.

- Zatorre RJ, Evans AC, Meyer E, Gjedde A. Lateralization of phonetic and pitch discrimination in speech processing. Science. 1992;256:846–849. doi: 10.1126/science.1589767.

- Zatorre Robert, Meyer Ernst, Gjedde Albert, Evans Alan. PET studies of phonetic processing of speech: Review, replication, and reanalysis. Cerebral Cortex. 1996;6:21–30. doi: 10.1093/cercor/6.1.21.