Abstract

For over 100 years, clinicians have noted that patients with nonfluent aphasia are capable of singing words that they cannot speak. Thus, the use of melody and rhythm has long been recommended for improving aphasic patients’ fluency, but it was not until 1973 that a music-based treatment, Melodic Intonation Therapy (MIT), was developed. Our ongoing investigation of MIT’s efficacy has provided valuable insight into this therapy’s effect on language recovery. Here we share those observations, our additions to the protocol that aim to enhance MIT’s benefit, and the rationale that supports them.

Keywords: Melodic Intonation Therapy, nonfluent aphasia, language recovery, brain plasticity, music therapy

Introduction

According to the National Institutes of Health (NINDS Aphasia Information Page: NINDS, 2008), approximately 1 in 272 Americans suffer from aphasia, a disorder characterized by the loss of ability to produce and/or comprehend language. Despite its prevalence, the neural processes that underlie recovery remain largely unknown and thus have not been specifically targeted by aphasia therapies. One of the few accepted treatments for severe, nonfluent aphasia is Melodic Intonation Therapy (MIT),1-6 a treatment that uses the musical elements of speech (melody and rhythm) to improve expressive language by capitalizing on preserved function (singing) and engaging language-capable regions in the undamaged right hemisphere. In this chapter, we describe how to administer MIT (based on Helm-Estabrooks’ descriptions3,4), share our additions to the protocol, and explain how it may exert its therapeutic effect.

What, exactly, is MIT?

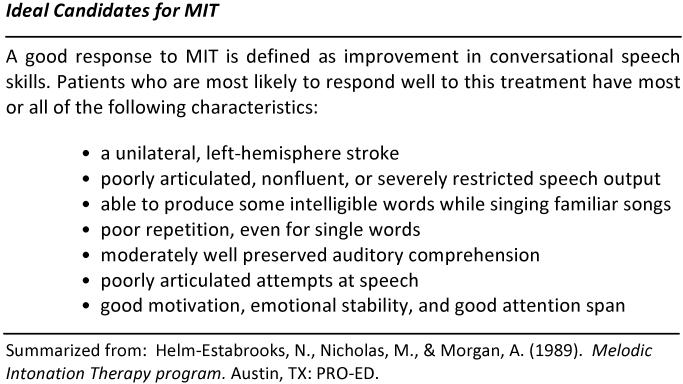

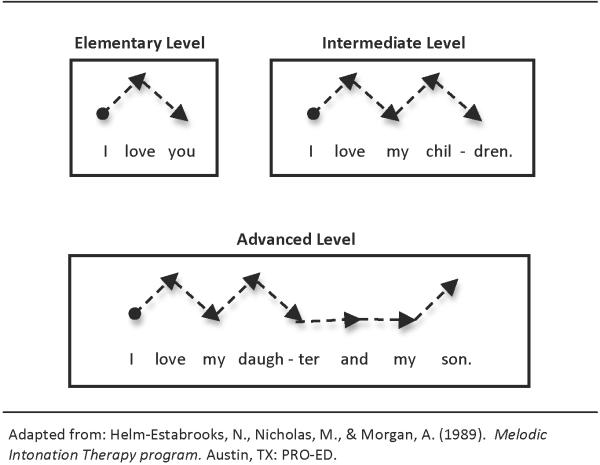

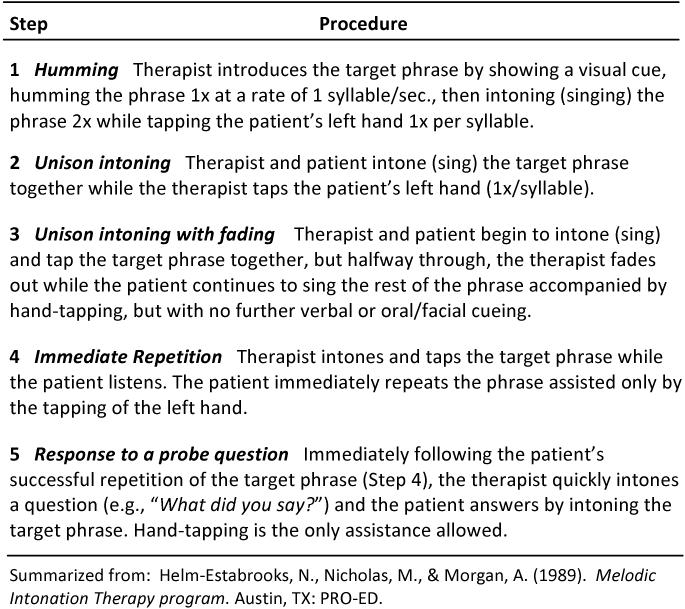

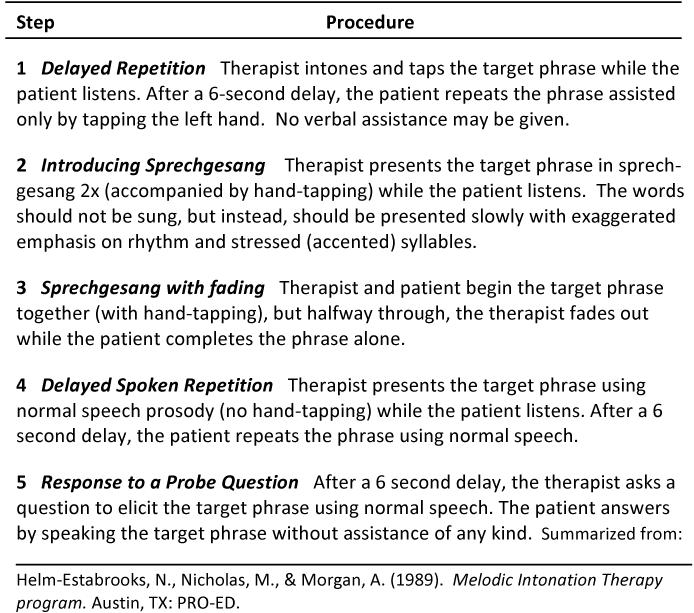

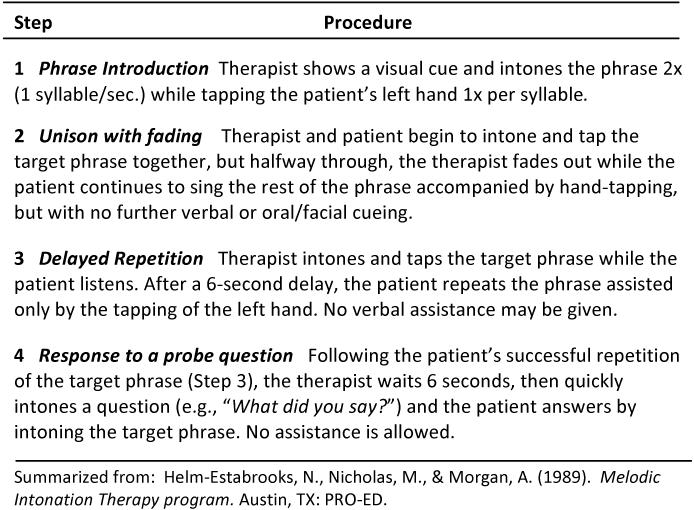

The original program is designed to lead nonfluent aphasic patients (Fig.1) from intoning (singing) simple, 2–3 syllable phrases to speaking phrases of 5 or more syllables1,3,4 across 3 levels of treatment. Each level consists of 20 high-probability words (e.g., “Water”) or social phrases (e.g., “I love you.”) presented with visual cues. Phrases are intoned on just 2 pitches, “melodies” are determined by the phrases’ natural prosody (e.g., stressed syllables are sung on the higher of the 2 pitches, unaccented syllables on the lower pitch (Fig.2)), and the patient’s left hand is tapped once per syllable. Although it may appear that the primary difference between the levels is phrase length, the more important distinctions are the administration of the treatment and the degree of support provided by the therapist (Fig.3-5).
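The phrase-construction rule described above (stressed syllables on the higher pitch, unaccented syllables on the lower, one tap per syllable) can be sketched in a few lines of Python. This is only an illustration: the function names `intone` and `tap_count` are hypothetical, and the stress markings are assumed to be supplied by hand by the therapist rather than detected automatically.

```python
from typing import List, Tuple

# Labels for the two pitches used in a phrase (hypothetical names).
HIGH, LOW = "high", "low"


def intone(syllables: List[Tuple[str, bool]]) -> List[Tuple[str, str]]:
    """Map each (syllable, is_stressed) pair to the higher or lower pitch.

    Stressed syllables go on the higher pitch, unaccented ones on the lower.
    The stress flags are an assumption: they are marked by hand here.
    """
    return [(text, HIGH if stressed else LOW) for text, stressed in syllables]


def tap_count(syllables: List[Tuple[str, bool]]) -> int:
    """Number of left-hand taps for a phrase: one tap per syllable."""
    return len(syllables)


# Example phrase with hand-marked stress on the first syllable.
water = [("Wa", True), ("ter", False)]
for syllable, pitch in intone(water):
    print(f"{syllable}: {pitch}")
print("taps:", tap_count(water))
```

Keeping the contour as plain high/low labels, rather than absolute notes, reflects the point that the melody follows the prosody of the phrase and that the actual pitches are chosen to suit the patient’s voice.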

Figure 1. Melodic Intonation Therapy.

Figure 2. MIT: Melodic Phrase Construction.

Figure 3. MIT: Elementary Level.

Figure 5. MIT: Advanced Level.

Interestingly, there appear to be almost as many interpretations of the original protocol as there are people using it. Early reports6,7 depict phrases using 3 pitches rather than the originally specified 2, and anecdotal evidence (DVDs from prospective patients across the US) shows a number of therapists using the technique, with no two sessions alike. Some use 2 pitches separated by a perfect 4th or 5th, while others write a new tune for each phrase using as many as 7–8 pitches in a specified key. Still others accompany their patients on the piano, use familiar song melodies, or rapidly “play” 4–5 notes up and down the patient’s arm as they sing words or phrases. While all such variations might have the potential to engage right-hemisphere regions capable of supporting speech, it may be just such complex interpretations of the protocol that prevent therapists with little or no musical background from using the treatment. Thus, we aim to simplify the process so that any therapist can administer it, and well-trained patients and caregivers can learn to apply the method when intensive treatment ends. Because the focus is not on performance, one does not need to be a musician or even a good singer to administer or participate in this treatment. The goal is to uncover the inherent melody in speech to gain fluency and increase expressive output.

Getting Started

Seated across a table from the patient, the therapist shows a visual cue and introduces a word/phrase (e.g., “Thank you”). The accented/stressed syllable(s) will be sung on the higher of the 2 pitches, unaccented on the lower (Fig.2). The starting pitch should rest comfortably in the patient’s voice range, and the other pitch should be a minor 3rd (3 semitones) above or below (middle C and the A just below it work well for most people). For those unfamiliar with this terminology, think of the children’s taunt, “Naa-naa - Naa-naa”. These 2 pitches create the interval of a minor 3rd, which is universally familiar, requires no special singing skill, and provides a good approximation of the prosody of speech that still falls into the category of singing.
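For readers who want to check the interval numerically, the following Python sketch computes the two pitches of a minor 3rd from a chosen starting note. It rests on assumptions that are not part of the protocol: 12-tone equal temperament, the common A4 = 440 Hz tuning reference, and MIDI note numbers (middle C = 60) as a convenient way to name notes. The helper names `midi_to_hz` and `pitch_pair` are hypothetical.

```python
# Assumptions (not from the protocol): equal temperament, A4 = 440 Hz,
# MIDI note numbering in which middle C is 60 and the A below it is 57.
A4_HZ = 440.0
A4_MIDI = 69
MINOR_THIRD_SEMITONES = 3


def midi_to_hz(note: int) -> float:
    """Frequency of a MIDI note number in equal temperament."""
    return A4_HZ * 2 ** ((note - A4_MIDI) / 12)


def pitch_pair(start_note: int, below: bool = True) -> tuple:
    """Return (lower_hz, higher_hz) for a minor 3rd built on start_note.

    With below=True the second pitch lies 3 semitones under the start,
    otherwise 3 semitones above it.
    """
    step = -MINOR_THIRD_SEMITONES if below else MINOR_THIRD_SEMITONES
    low, high = sorted((start_note, start_note + step))
    return midi_to_hz(low), midi_to_hz(high)


# Middle C with the A just below it, as suggested in the text.
low_hz, high_hz = pitch_pair(60, below=True)
print(f"lower pitch:  {low_hz:.2f} Hz")
print(f"higher pitch: {high_hz:.2f} Hz")
print(f"frequency ratio: {high_hz / low_hz:.4f}")
```

In practice the starting note would simply be moved up or down until it sits comfortably in the patient’s range; the 3-semitone distance between the two pitches is what stays fixed.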

What Else is New?

While it has been shown that MIT in its original form leads to greater fluency in small case series5, sustaining treatment effects can be a challenge for any intervention. Thus, we have instituted the use of Inner Rehearsal and Auditory-motor Feedback Training to help patients gain maintainable independence as they improve expressive speech.

Inner Rehearsal

So that patients learn to establish their own “target” phrases, the therapist models the process of inner rehearsal by slowly tapping the patient’s hand (1 syllable/sec) while humming the melody, then softly singing the words, explaining that s/he is “hearing” the phrase sung “inside”. If the patient has trouble understanding how to do this, s/he is asked to imagine hearing someone sing “Happy Birthday” or a parent’s voice saying, “Do your homework”. Once the concept is understood, the therapist taps while softly singing the phrase and indicating that the patient should hear his/her own voice singing the phrase “inside”. This inner rehearsal (covert production) of the phrase creates an auditory “target” with which the overtly produced phrase can be compared. Those who master this technique can eventually transfer the skill from practiced MIT phrases to expressive speech initiated with little or no assistance.

Auditory-motor Feedback Training

Because re-learning to identify and produce individual speech sounds is essential to patients’ success, training them to hear the difference between the target phrase and their own speech is a key aspect of the recovery process. In the early phases of treatment, patients listen as the therapist sings the target, and learn to compare their own output as they repeat the words/phrases. Sounds identified as incorrect become the focus of remediation. Once a problem is corrected, the process of singing, listening, and repeating begins again. As patients learn to create their own target through inner rehearsal, auditory-motor feedback training allows them to self-monitor as thoughts are sung aloud. Over time, they learn to use the auditory-motor feedback “loop” to hear their own speech objectively, identify problems and adjust to correct them as they speak, and thereby decrease dependence on the therapist.

How does MIT work?

Preliminary data comparing MIT to an equally intense control therapy that uses no intoning or left-hand tapping indicate that those 2 elements add greatly to MIT’s effectiveness.5 While its developers suggested that tapping and intoning could engage homologous language regions in the right hemisphere, they did not explain how this would occur.2 Additionally, since ideal candidates for MIT2 (Fig.1) are patients with Broca’s aphasia, a population with both linguistic and motor speech impairments, the extent to which MIT addresses aphasia, apraxia, or both is not yet clear. Below is a brief discussion of MIT’s critical elements and how they may contribute to its therapeutic effect.

Intonation

The intonation at the heart of MIT was originally intended to engage the right hemisphere, given its dominant role in processing spectral information, global features of music, and prosody.2,8,9 The right hemisphere may be better suited for processing slowly-modulated signals, while the left hemisphere may be more sensitive to rapidly-modulated signals.10 Therefore, it is possible that the slower rate of articulation and the continuous voicing that increases connectedness between syllables and words in singing may reduce dependence on the left hemisphere.

Left-hand tapping

Tapping the left hand may engage a right-hemisphere sensorimotor network that controls both hand and mouth movements.11 It may also facilitate sound-motor mapping, which is a critical component of meaningful vocal communication.12 Furthermore, tapping, like a metronome, may pace the speaker and provide continuous cueing for syllable production.

Inner Rehearsal

Inner rehearsal may be particularly effective for addressing apraxia (impaired ability to sequence and implement serial and higher order motor commands). Silently intoning the target phrase may reinitiate a cascade of activation from a higher level in the cognitive-linguistic architecture (e.g., from the level of a prosodic or phonological representation), thus giving the speaker another attempt to correctly sequence the motor commands.

Auditory-motor Feedback Training

In speech, phonemes occur so quickly that it is difficult for severely aphasic and/or apraxic patients to process auditory feedback in time to self-correct. However, when words are sung, phonemes are isolated and thus can be heard distinctly while still connected to the word. In addition, sustained vowel sounds provide time to “think ahead” about the next sound, make internal comparisons to the target, and self-correct when sounds produced begin to go awry.

Whether learning to sing, play an instrument, recover language after stroke, or acquire any new skill, the key to mastery is in the process. Previous small, open-label studies examining patients with moderately to severely nonfluent aphasia have found MIT to be a promising path to fluency, and we have outlined and discussed its critical elements and our enhancements to the protocol here. However, the efficacy of this technique and its potential application to other disorders will require further exploration, and more research will be necessary to fully understand the neural processes that underlie MIT’s effect.

Figure 4. MIT: Intermediate Level.

References

- 1. Albert ML, Sparks RW, Helm NA. Melodic intonation therapy for aphasia. Arch. Neurol. 1973;29:130–131. doi: 10.1001/archneur.1973.00490260074018.

- 2. Sparks R, Helm N, Albert M. Aphasia rehabilitation resulting from melodic intonation therapy. Cortex. 1974;10:303–316. doi: 10.1016/s0010-9452(74)80024-9.

- 3. Helm-Estabrooks N, Nicholas M, Morgan A. Melodic intonation therapy. Pro-Ed., Inc.; Austin, TX: 1989.

- 4. Helm-Estabrooks N, Albert ML. Manual of aphasia and aphasia therapy, Chapter 16. 2nd ed. Pro-Ed; Austin, TX: 2004. Melodic intonation therapy; pp. 221–233.

- 5. Schlaug G, Marchina S, Norton A. From singing to speaking: why singing may lead to recovery of expressive language function in patients with Broca’s aphasia. Music Perception. 2008;25:315–323. doi: 10.1525/MP.2008.25.4.315.

- 6. Marshall N, Holtzapple P. Melodic Intonation Therapy: Variations on a Theme. In: Brookshire RH, editor. 6th Clinical Aphasiology Conference. BRK Publishers; Minneapolis, MN: 1976. pp. 115–141.

- 7. Sparks RW, Holland AL. Method: Melodic Intonation Therapy for Aphasia. J. Speech Hear. Disord. 1976;41:287–297. doi: 10.1044/jshd.4103.287.

- 8. Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb. Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.

- 9. Schuppert M, Münte TF, Wieringa BM, Altenmüller E. Receptive amusia: evidence for cross-hemispheric neural networks underlying music processing strategies. Brain. 2000;123:546–559. doi: 10.1093/brain/123.3.546.

- 10. Poeppel D, Idsardi WJ, van Wassenhove V. Speech perception at the interface of neurobiology and linguistics. Philos. Trans. R. Soc. Lond. Ser. B. 2008;363:1071–1086. doi: 10.1098/rstb.2007.2160.

- 11. Gentilucci M, Dalla Volta R. Spoken language and arm gestures are controlled by the same motor control system. Q. J. Exp. Psychol. (Colchester) 2008;61:944–957. doi: 10.1080/17470210701625683.

- 12. Lahav A, Saltzman E, Schlaug G. Action representation of sound: audiomotor recognition network while listening to newly acquired actions. J. Neurosci. 2007;27:308–314. doi: 10.1523/JNEUROSCI.4822-06.2007.