Abstract

People on the autism spectrum often experience states of emotional or cognitive overload that pose challenges to their interests in learning and communicating. Measurements taken from home and school environments show that extreme overload experienced internally, measured as autonomic nervous system (ANS) activation, may not be visible externally: a person can have a resting heart rate twice the level of non-autistic peers, while outwardly appearing calm and relaxed. The chasm between what is happening on the inside and what is seen on the outside, coupled with challenges in speaking and being pushed to perform, is a recipe for a meltdown that may seem to come ‘out of the blue’, but in fact may have been steadily building. Because ANS activation both influences and is influenced by efforts to process sensory information, interact socially, initiate motor activity, produce meaningful speech and more, deciphering the dynamics of ANS states is important for understanding and helping people on the autism spectrum. This paper highlights advances in technology that can comfortably sense and communicate ANS arousal in daily life, allowing new kinds of investigations to inform the science of autism while also providing personalized feedback to help individuals who participate in the research.

Keywords: affective computing, autism, autonomic nervous system, wearable sensors, electrodermal activity, arousal

1. Introduction

The motivation for this research begins with a scenario that has replayed many times as part of a longer, sometimes heartbreaking, story of why a family felt compelled to move their son or daughter out of environments focused on learning and social opportunities and into a place where the child's behaviour could be better controlled:

David is a mostly non-verbal autistic1 teenager interacting with his teacher during a lesson. He appears calm and attentive. When it is time for him to respond, the teacher encourages him to try harder as he is not doing what she is asking. She knows he is capable from past experiences, and he appears to be feeling fine; he just needs a nudge. All of a sudden—it appears to come out of nowhere—David has a meltdown, engaging in injurious behaviour to himself and perhaps to others. Afraid for him and others around him, the teacher calls for help to restrain him. What was intended to be a positive, productive learning episode turns into a harmful major setback, with discouragement and possibly despair ensuing.

Many versions of this story exist with a variety of causal explanations. Consider this one possibility: David was experiencing extreme pain with an undiagnosed tooth abscess. While most of us who experience excruciating pain reveal it in our faces, speak about it and do nothing else until we first address the pain, David could not communicate through his speech or facial expressions, and the more pain he felt, the more his motor system did not respond in the usual way. While sometimes his face capably did express emotion, at this time it did not, nor could he initiate the movement to cooperate with the task the teacher requested of him.

The autism spectrum refers to a broad set of diagnoses given to individuals who show certain combinations of atypical communication, social interaction and restricted repetitive patterns of behaviour, interests and activities (American Psychiatric Association (APA) 2000). While not part of the official diagnostic criteria at this time, autism frequently includes mild-to-major affective swings that may arise from sensory and other challenges, and mild-to-major motor disturbances that can affect gait, posture and the ability to type, write, speak and produce facial expressions (Leary & Hill 1996; Wing & Shah 2000). Complicating matters, movement disturbances can come and go, giving the appearance of a wilful lack of cooperation when a person does not move as desired or expected, even if he or she is trying to cooperate (Ming et al. 2004). Sensory and movement problems are a source of increased stress for the afflicted person, and the increased stress may precipitate other problematic behaviours, including facial expressions and other movements that contrast with what the person intends (Donnellan et al. 2006). David's unsuccessful efforts to communicate or get his body to move appropriately may have increased his internal frustration and anger to the point where they erupted as self-injury. Several autistic people who self-injure have explained that these behaviours do not come out of the blue, but may arise from mounting frustration, stress and failed attempts to be understood.

Observations that include measurements of physiology in autism have shown that a person can appear differently on the outside than what is measured on the inside. For example, an autistic person can appear perfectly calm to those who know him or her, while having an unusually high resting heart rate, 120 beats per minute (b.p.m.) or more, instead of the usual 60–80 b.p.m. (Goodwin et al. 2006). Similarly, an autistic person's electrodermal activity (EDA), a measure of sympathetic arousal, can swing very high (Hirstein et al. 2001), as if engaged in a physical work-out, but without any visible signs of sweating, heavy breathing or outward stress.

How is a teacher, parent or carer supposed to know what is truly going on when all they can observe is what is shown on the outside, and what is shown is misleading? The best-intentioned helpers who deeply care for and try to understand their autistic companions may inadvertently cause quite a bit of trouble and even danger when the outward signals indicate the opposite of the inward experience.

The emotion communication problem in autism can be viewed as an amplification of a problem that exists across Homo sapiens. Anyone who has lived with a person in marriage or in a long-term relationship knows that misunderstandings occur, no matter how well you know, love and care for a person. Furthermore, there are important rational reasons as to why there is ambiguity in how humans display emotion: one man might choose to hide his feelings of arousal for a woman because he does not want to threaten her marriage, or his, with the possibility of broken vows and divorce. There may also be important survival reasons: the woman is married to a tall irascible man who happens to be a professional fighter. For whatever reasons, rational, biological or other, emotion communication mechanisms do not accurately and reliably display outwardly what is felt inwardly.

Technology has increasing potential to bridge the chasm between what is felt inwardly and displayed outwardly. However, because there are also good reasons to keep feelings private, it is important to consider how such technology can be designed so that it respects human needs for control over the display of feelings. This paper presents new technology that is being developed to give people expanded tools to bridge the chasm between internal feeling and external display, while maintaining important control over what is communicated and to whom. Here is a vision of one such technology, providing an alternative outcome to the scenario above:

David is looking forward to his lesson with a teacher whom he likes and trusts. He chooses to communicate his autonomic data to her, and pulls on a wrist band with a logo of his favorite superhero. His teacher chooses to wear one too, and he touches his to hers in a way that indicates ‘share.’ He appears calm and attentive during instruction. When it is time for him to respond, the teacher notices that his physiology is soaring unusually high and steadily escalating despite his outwardly calm appearance. He is not responding to the lesson in a way she believes him capable. Instead of pushing him to respond, she stays out of his face, and brings him tools that the two of them have previously chosen for him to use to self-regulate and calm himself. He looks toward the sound-modifying headphones, which she helps him put on. Suddenly, she becomes aware of a sound in the distance that she hadn't noticed before, which might be bothering him. After some time, she sees his physiological state returning to a calm and attentive state, where what is signalled from the inside matches what she sees on the outside. She gently recommences the lesson and he participates eagerly, surpassing his previous achievement level.

The MIT Media Laboratory is working to realize new technologies that people with communication challenges can use to improve their abilities to communicate emotion; however, the problem is not an easy one to solve.

2. The challenge of emotion communication

To communicate a phenomenon like emotion, you need to first know what it is, which suggests starting with a definition. Unfortunately, emotion theorists do not agree on a definition of emotion, even though nearly a hundred definitions were suggested decades ago (Kleinginna & Kleinginna 1981) and new ones continue to appear. Most theorists agree that the two dominant dimensions of emotion can be described as valence (pleasant versus unpleasant or positive versus negative) and arousal (activated versus deactivated or excited versus calm). These two dimensions have been in use since at least Schlosberg (1954). Several third dimensions have also been proposed, but there is less agreement about those. While the valence and arousal dimensions do not fully capture the space of emotion, they can be considered a second-order approximation to the emotion space. Consequently, measures of arousal and valence are useful for describing emotion, even though they do not perfectly represent it.

A popular way of measuring and communicating emotion, in part because it is easily done with pencil and paper, is to ask people to rate their feelings along valence–arousal dimensions, e.g. using the self-assessment mannequin of Lang et al. (1993). However, self-reported ratings are of questionable reliability even among typical people, and given the high rate of alexithymia in autism (Hill et al. 2004), self-reports can be expected to be inaccurate for many autistic individuals. When a personal digital assistant for manually logging self-reported feelings (SymTrend 2009) was used to compare autistic teens' self-reports with teachers' reports of their feelings, there were huge discrepancies, and no way of knowing which was most accurate (Levine & Mesibov 2007). Furthermore, many autistic people have difficulty in producing speech and getting it to mean what they want it to mean; they may find that their typed speech more accurately reflects their thoughts than their spoken speech. People who rely upon voice-output devices to help them communicate their feelings do need and use verbal expressions of feelings such as ‘I'm frustrated’ or ‘I'm in pain’. Such expressions, even if a person can only push a button, may provide life-saving communication, and are critical to provide. However, at the moment of mounting pain and overload, a person may be physically unable to move in a way that operates their communication device, much less navigate to the correctly worded choice.

What is the best physical measure for capturing the two dimensions of arousal and valence: face? voice? gestures? physiology? context? other? Over the years researchers have found that the face is a good indicator of valence, especially with corrugator and zygomatic activity separating states such as pleasure/displeasure, liking/disliking and joy/sadness. EDA is a good indicator of arousal, going up with sympathetic nervous system activation—the ‘fight or flight’ response. However, measuring emotion is not as simple as measuring a few bodily parameters. When people infer an emotion such as ‘exuberance’, they combine multiple channels of information in a complex way: smiles, shrieks, upward bodily bouncing or arm gestures, and perhaps even tears of joy. No algorithms yet exist that describe how to precisely combine the many contributing channels into a full space of emotions. A different complex combination may need to be characterized for each emotion. Furthermore, it is not sufficient to map the combinations of signals emanating from the person whose emotion is being assessed; it is also necessary to observe the context, to see, for example, that the person is simply trying to act exuberant at the request of their drama teacher.

A scientific illustration of the importance of context and how it exploits the similarity of different expressions appears in a study by Aviezer et al. (2008). In this study, Ekman–Friesen's basic faces of emotion are paired with different contexts and found to be interpreted in strongly different ways based on the pairing. For example, a disgust face is paired in one case with a man holding up his fist, and in another case with the same man holding up soiled underwear. When asked to ‘label the facial expression’, 87 per cent of participants labelled the disgust face in the first image as expressing anger, while 91 per cent of participants labelled the disgust face in the second image as expressing disgust. The surprise in this result is that the face was identical in both images, containing the prototypical facial action units for disgust, chosen from the Ekman & Friesen (1976) illustrations for basic emotions. Also, participants were clearly asked to label the facial expression. Thus, an automated system to ‘label facial expressions’ will probably require more than facial information, at least if you want it to label expressions like people do.

The behaviour of a group of people labelling an identical ‘basic’ facial expression differently, depending on context, points to greater complexity required in developing a system for automatically understanding facial expressions. Whether to help people with non-verbal learning challenges or to build software that a computer or robot could utilize, there is a need to develop systematic mappings between what is viewed and what emotion is interpreted—and not just what is obtained from the face. Interpreting facial movements alone is an extraordinarily complex problem, with an estimated 10 000 different combinations of facial expressions capable of being produced and with new expressions able to appear in tens of milliseconds. Autistic poet Tito Mukhopadhyay captured the visual complexity of this space beautifully when he wrote, ‘Faces are like waves, different every moment. Could you remember a particular wave you saw in the ocean?’ (Iversen 2007). While the space of facial expressions is oceanic, the space formed by crossing all possible expressions with all possible contexts, and the demand to compute their meaning in real time, is computationally intractable. Autistic people who attempt to perform such a feat in a precise and rigorous systematic way are setting themselves up for likely overload and failure.

Mysteriously, people often succeed at reading facial expressions, or at least most people think they do this task fine, and they do so in real time, even if far from perfectly. While we do not know how people actually compute the labels that are commonly agreed upon (and many labels are not agreed upon), our challenge at the MIT Media Laboratory Autism Communication Technology Initiative is to build tools that imitate what typical people do, providing labels that most people would agree on, where there is such agreement. Our challenge is also to construct these tools in a way that can be easily appropriated by people who have trouble interpreting emotion, if they wish to use them. The tools will not be perfect, but neither is human emotional communication. Our approach is also not to force the typical social way of operating on people who do not easily operate this way, or who may choose to not operate this way; people should not be required to communicate emotion if they do not want to. Instead we are interested in providing people with expanded means for communicating, in ways they can freely choose to use or ignore.

3. Expanding emotion communication possibilities in autism: autonomic nervous system

The autonomic nervous system (ANS) has two widely recognized subdivisions: sympathetic and parasympathetic, which work together to regulate physiological arousal. While the parasympathetic nervous system promotes restoration and conservation of bodily energy, ‘rest and digest’, the sympathetic nervous system stimulates increased metabolic output to deal with external challenges, the so-called ‘fight or flight’ response. Increased sympathetic activity (sympathetic arousal) elevates heart rate, blood pressure and sweating, and redirects blood from the intestinal reservoir towards the skeletal muscles, lungs, heart and brain in preparation for motor action. Since sweat is a weak electrolyte and a good conductor, the filling of sweat ducts increases the conductance of an applied current. Changes in skin conductance at the surface, known as EDA, thus provide a sensitive and convenient means of assessing sympathetic arousal changes associated with emotion, cognition and attention (Boucsein 1992; Critchley 2002). Thus, EDA provides a measure of the arousal dimension of emotion, although it can change for non-emotional reasons as well, such as when a mental task increases cognitive load or when ambient heat and humidity are suddenly increased.
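Because EDA is usually reported as skin conductance, the reciprocal of the resistance the skin presents to a small applied current, the conversion is worth making concrete. The following is a minimal Python sketch, assuming either a known skin resistance or a hypothetical constant-voltage reading (applied voltage and measured current); the function names are illustrative and are not taken from any particular device.

```python
def conductance_microsiemens(resistance_ohms: float) -> float:
    """Convert a skin resistance in ohms to conductance in microsiemens (uS)."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    # G = 1 / R gives siemens; multiplying by 1e6 gives microsiemens.
    return 1e6 / resistance_ohms


def conductance_from_ohms_law(applied_volts: float, measured_amps: float) -> float:
    """Hypothetical constant-voltage reading: G = I / V, returned in microsiemens."""
    if applied_volts <= 0:
        raise ValueError("applied voltage must be positive")
    return 1e6 * measured_amps / applied_volts
```

With these helpers, a skin resistance of 1 MΩ corresponds to 1 µS and 100 kΩ to 10 µS, so as sweat ducts fill and resistance falls, the reported conductance rises.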

Connections between the ANS and emotion have been debated for years. Historically, William James was the major proponent of emotion as an experience of bodily changes, such as your heart pounding or your hands perspiring (James 1890). This view was challenged by Cannon (1927) and later by Schachter and Singer, who argued that the experience of physiological changes was not sufficient to discriminate emotions, but also required cognitive appraisal of the current situation (Schachter 1964). Since Schachter and Singer, there has been a debate about whether emotions are accompanied by specific physiological changes other than simply arousal level. Ekman et al. (1983) and Winton et al. (1984) provided some of the first findings showing significant differences in ANS signals according to a small number of emotional categories or dimensions, but they did not develop systematic means of recognizing or communicating emotion from these signals.

Could emotion be recognized by a computer with which you chose to share your physiological signals? Fridlund & Izard (1983) appear to have been the first to apply automated pattern recognition (linear discriminants) to classification of emotion from physiological features, attaining rates of 38–51 per cent accuracy (via cross-validation) on the subject-dependent classification of four different facial expressions (happy, sad, anger and fear) given four facial electromyogram signals. Later, using pattern analysis techniques and combining four modalities (skin conductance, heart rate, respiration and facial electromyogram), eight emotions (including neutral) were automatically discriminated in an individual, over six weeks of measures, with 81 per cent accuracy (Picard et al. 2001). The latter also showed that nonlinear combinations of physiological features could discriminate emotions of different valence as well as emotions of different arousal. More recently, Liu et al. (2008a,b) applied pattern analysis to dozens of physiological indices to discriminate liking, anxiety and engagement in autistic children while they interacted with a robot. While all these studies restricted measurement to laboratories and small sets of emotions, they demonstrated that there is significant emotion-related information that can be recognized through physiological activity.
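The subject-dependent, cross-validated accuracies cited above come from a train-on-some, test-on-the-rest procedure. As a hedged illustration of that procedure only, the sketch below scores a leave-one-out loop in Python; it swaps in a nearest-centroid classifier, which is simpler than the linear discriminants Fridlund & Izard used, and the feature vectors and labels in `EXAMPLE` are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, emotion label)


def centroids(samples: List[Sample]) -> Dict[str, List[float]]:
    """Mean feature vector for each label in the training set."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(features: Sequence[float], cents: Dict[str, List[float]]) -> str:
    """Assign the label whose centroid is nearest in Euclidean distance."""
    return min(cents, key=lambda label: math.dist(features, cents[label]))


def leave_one_out_accuracy(samples: List[Sample]) -> float:
    """Hold out each sample in turn, train on the rest, and score the held-out one."""
    correct = 0
    for k, (features, label) in enumerate(samples):
        training = samples[:k] + samples[k + 1:]
        if predict(features, centroids(training)) == label:
            correct += 1
    return correct / len(samples)


# Hypothetical two-feature samples from one person, already scaled to similar ranges.
EXAMPLE: List[Sample] = [
    ([0.1, 0.2], "calm"), ([0.2, 0.1], "calm"), ([0.15, 0.15], "calm"),
    ([2.0, 2.1], "anger"), ([2.2, 1.9], "anger"), ([2.1, 2.0], "anger"),
]
```

On this well-separated toy data every held-out sample is assigned its correct label; real physiological features overlap far more, which is why the reported accuracies sit well below 100 per cent.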

The Media Lab's initiative to advance autism communication technologies currently has four active research areas: (i) understanding and communicating ANS activity and behaviours that co-occur with its changes, (ii) providing tools for learning to read facial–head expressions (el Kaliouby & Robinson 2005; el Kaliouby et al. 2006; Madsen et al. 2008), (iii) enabling low-cost robust communication aids for people who do not speak or whose speech does not carry the intended meaning (R. W. Picard, J. Smith & A. Baggs 2008, unpublished manuscript), and (iv) developing games to help improve vocal expression and prosody production. These four areas are connected because processes of communication both influence and are influenced by the dynamics of the ANS. For example, the social challenges faced by many autistic individuals, such as discomfort looking at faces and making eye contact, are associated with increased ANS activation and hyper-arousal of associated brain regions (Joseph et al. 2008; Kleinhans et al. 2009). This was expressively communicated by autistic blogger, video artist and speaker, Baggs (1996), who uses an augmentative communication device to talk:

It's been a long time since someone's really been insistent with the eye contact while I'm squirming and trying to get away. I noticed today exactly how much I react to that.

So I'm sitting there in a doctor's office, and he's leaning towards me and sticking his face up to mine.

And I'm sitting there trying to think in a way that, were it in words, would go something like this:

Okay … he's got to … . EYEBALLS EYEBALLS EYEBALLS he's got to be unaware … EYEBALLS!!!! … he's … uh … eyeballs … uh … EYEBALLS!!!!!!!!! people like him think this is EYEBALLS EYEBALLS EYEBALLS EYEBALLS EYEBALLS … some people think … EYEBALLS!! … some people think this is friendly … EYEBALLS!!!! EYEBALLS!!!! EYEBALLS!!!! he really doesn't mean anything EYEBALLS EYEBALLS EYEBALLS he doesn't mean anything EYEBALLS EYEBALLS he eyeballs doesn't eyeballs mean eyeballs anything eyeballs bad EYEBALLS EYEBALLS he doesn't understand why I'm turning EYEBALLS EYEBALLS EYEBALLS why I'm not coming up EYEBALLS why I'm not coming up with words EYEBALLS EYEBALLS EYEBALLS oh crap hand going banging head EYEBALLS EYEBALLS oh crap not right thing to do EYEBALLS EYEBALLS EYEBALLS EYEBALLS EYEBALLS stop hand now

[…]

Until I finally reached some point of shutdown. And where every EYEBALLS is not just the picture of eyeballs but of something very threatening about to eat me or something. I unfortunately in all that couldn't figure out how to tell him that it was his eyeballs that were unnerving me, and I'm not sure I did a very good job of convincing him that I'm not that freaked out all the time. It wasn't just eyeballs either, it was leaning at me with eyeballs. I tried briefly to remember that people like him consider eyeballs to be friendliness, but it got drowned out in the swamp of eyeballs, and all thinking got drowned out in the end in a sea of fight/flight.

Note to anyone who interacts with me: Eyeballs do not help, unless by ‘help’ you mean ‘extinguish everything but eyeballs and fear’.

Many social behaviours can activate the sympathetic flight/fight response in autistic people: yes, eyeballs, and also difficulties that increase cognitive load, such as having to integrate speech with perception of non-verbal cues (e.g. speech prosody and facial expressions) in real-time interactions (Iarocci & McDonald 2006). Furthermore, the motor function required to speak or to accurately operate an augmentative communication device can be impaired when autonomic functioning enters a state of overload or shutdown (Donnellan et al. 2006). Inability to get one's body to move as desired is a recipe for further increasing stress, fuelling a cycle that makes it harder to communicate and get the needed help.

Harking back to the opening scenario in this paper, accurate communication of David's ANS activation may or may not have prevented the meltdown and the injury that ensued, but it could have at least alerted a trusted person to take a second look at what might be causing the ANS activation, making it more likely that they could help debug what was causing David trouble. Timely communication might have led to addressing the problematic state, enabling subsequent continuation of productive learning and increased understanding of David's sensitivities and differences in functioning. Moreover, David might be given tools to help him learn how to better understand and regulate his own ANS activation without having to share his internal changes with others, allowing him more control and autonomy, which is also very important in autism. In any case, we aim to design technology that people can use, on their terms and under their control, to better understand and, if they wish, communicate their internal emotional states.

4. Personalized measurement for learning and communication

Earlier research at MIT showed that ANS data related to emotion varied significantly from day to day in the same person, even when the person intended to express the same emotion, the same way, at the same time of day (Picard et al. 2001). The findings indicated that simply averaging the patterns across the person's different days reduced the accuracy with which they could be characterized. What was needed was recognition of how each pattern related to an ever-changing ‘baseline’ of underlying physiological activity, but how can such a baseline be obtained?
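One simple way to relate each sample to a moving rather than fixed baseline is to subtract a trailing statistic of the recent past. The Python sketch below assumes the baseline is the minimum of the previous `window` samples; that choice, the function name and the window length are illustrative assumptions, not the method used by Picard et al. (2001).

```python
from collections import deque
from typing import Deque, List, Sequence


def relative_to_trailing_baseline(signal: Sequence[float], window: int) -> List[float]:
    """Subtract a moving baseline: the minimum of the previous `window` samples.

    The first sample has no history, so it is compared with itself.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    history: Deque[float] = deque(maxlen=window)  # only the most recent samples
    out: List[float] = []
    for value in signal:
        baseline = min(history) if history else value
        out.append(value - baseline)
        history.append(value)
    return out
```

Because the baseline is recomputed at every step, the same absolute reading can count as elevated on one day and unremarkable on another; a low percentile or a slow low-pass filter would be equally plausible choices.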

When measurement is taken over a short period of time in a laboratory, it causes several problems in characterizing a person's responses. Typically, a researcher constructs a baseline by asking the participant to relax quietly, holding still for 15 min. The researcher waits for signals to settle, and then averages the signals at their lowest and most stable level. However, this process is an artefact of having a small amount of time to characterize a person, usually because the measurement apparatus is not suited for home wear. What can be found when measuring a person 24/7 is that these ‘low stable’ periods occur naturally during portions of sleep and at other times of day, and an hour in a strange laboratory may be entirely non-representative of this.
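With round-the-clock data, the ‘low stable’ periods that a laboratory tries to manufacture can instead be searched for. A minimal Python sketch, assuming a regularly sampled signal, a fixed window length and a hypothetical stability threshold `max_stdev`, picks the lowest-mean window that is also steady:

```python
import statistics
from typing import Optional, Sequence, Tuple


def low_stable_baseline(
    signal: Sequence[float], window: int, max_stdev: float
) -> Optional[Tuple[int, float]]:
    """Return (start index, mean) of the lowest-mean window whose stdev <= max_stdev.

    Returns None if no window is stable enough.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    best: Optional[Tuple[int, float]] = None
    for start in range(0, len(signal) - window + 1):
        segment = list(signal[start:start + window])
        if statistics.pstdev(segment) > max_stdev:
            continue  # too variable to count as a resting level
        mean = statistics.fmean(segment)
        if best is None or mean < best[1]:
            best = (start, mean)
    return best
```

Requiring stability as well as a low level keeps a single brief dip from being mistaken for a resting baseline; with real 24/7 recordings the window would presumably span many minutes of samples.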

Here is a common, modern-day scenario that illustrates not only the baseline problem but also a larger problem: a person goes to an unfamiliar clinic or laboratory and is asked to take a test in front of a stranger while wearing possibly uncomfortable electrodes, perhaps with sticky fasteners and wires taped to the skin. His or her personal information streams to a computer where the data are read by researchers, normalized against their 15-min ‘baseline’, averaged over a dozen or more people, compared in aggregate to a control group and then published in a research article. The participant (and/or family) may later read the article and learn, for example, that ‘the autism group has higher average levels’ or something that supposedly holds for that study. However, these findings may not actually apply to any one individual who was in the group, whose data may fall into distinct clusters away from the numerical centre, who may be a statistical outlier or whose ‘momentary baseline’ may have resulted in a characterization not at all representative of daily life.
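A toy calculation shows how a group average can describe none of its members. The per-participant means in `HYPOTHETICAL_MEANS` are invented numbers for illustration only, not data from any study; the Python helper measures how far each person sits from the pooled mean.

```python
import statistics
from typing import Dict


def distance_from_group_mean(individual_means: Dict[str, float]) -> Dict[str, float]:
    """How far each participant's own average sits from the pooled group average."""
    group_mean = statistics.fmean(individual_means.values())
    return {pid: abs(m - group_mean) for pid, m in individual_means.items()}


# Invented per-participant averages forming two clusters (arbitrary units).
HYPOTHETICAL_MEANS = {"P1": 2.0, "P2": 2.2, "P3": 9.8, "P4": 10.0}
```

Here the pooled mean is 6.0, yet every participant is roughly 3.8 units or more away from it, because the individuals fall into two clusters on either side.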

Scientists know that they are trading off conclusions about the individual for conclusions about the group2; however, this represents only one concern with today's approach. A bigger problem is that the very nature of going into an unfamiliar clinic or laboratory for assessment means that only a tiny sample of the participant's behavioural repertoire is used to characterize him or her. This methodology can be likened to someone listening to a dozen bars randomly played from the middle of Beethoven's Ninth, averaging them and offering the result as a description of the symphony. The results should not be trusted as a scientifically accurate characterization, even if the practice is standard in science.

The need for monitoring people accurately over extensive periods of time has stimulated interest in comfortable wearable technologies—unobtrusive devices that can be worn during normal daily activity to gather physiological data over periods of several weeks or months (Picard & Healey 1997; Bonato 2003). Researchers at MIT have led several of these efforts, envisioning and developing devices that can be worn comfortably during everyday activities (Picard & Scheirer 2001; Strauss et al. 2005), including a new wireless EDA and motion sensor packaged comfortably on the wrist (figure 1), freeing up the hand for everyday use.

Figure 1.

Wearable EDA sensor. (a) Sensor is inside stretchy breathable wristband. (b) Disposable Ag/AgCl electrodes attached to the underside of the wristband. (c) The EDA sensor can be worn comfortably for long periods of time.

A wireless EDA sensor was one of eight technologies we took on a first visit to meet an autistic adult, Baggs (2007), who was generously willing to try out and give us critical feedback on our prototypes. After our visit, she blogged about the EDA sensor:

…they had one that was just electrodes that attached to a thing that transmits to a computer, which then shows it on a graph. Because there were so many people in the room, my arousal level was really high, it turned out (I wouldn't be surprised, being around lots of strangers stresses me out). But if I sat and rocked and didn't look at the people, it slowly went down. The moment one of them turned her head to look at me, though, it suddenly jumped up again. And this was before the point of eye contact, even, and certainly before I could feel more than a small difference in my stress levels. …

At the end of this post she described how she wished she had had the device during a CNN interview with a medical doctor, as well as other interesting possibilities:

I wish I'd had that on during the interview with Sanjay Gupta so I could show him that I was even reacting in a measurable physiological way to his attempts at making eye contact (he asked me, in a part that didn't get aired, why I didn't just look at him, and he was, I think in an attempt at friendliness, leaning into me the whole time in a way that was making me very stressy indeed, too stressed out to fully explain to him the effect he was having on me). I also wish I had one of those devices to play with for longer. It sounds as if they could be really useful in learning what stresses me out before it reaches the point that I notice it. I also wonder if showing readings like that to the sort of professionals who are heavily invested in forcing eye contact and other invasively direct forms of interaction on autistic children would make them think twice about it.

While the experiences above belong to one person, it could be fruitful to give other people the option of learning how their social interactions affect their physiological state, especially when such effects may include harmful overload. Such information might suggest that we change our understanding of how to best interact with them in ways that are helpful. As such information is truly personalized for each individual, it might inform better practices for fostering individualized learning and development. Instead of believing that an approach that was published in a scientific journal as ‘best for a group of autistics’ must be best for every autistic person, this method would allow a closer look at individualized patterns, and how a technique interacts with those. Scientists can still look for common patterns across individuals, clusters of similarity and phenotypes and ways of responding to these clusters that foster helpful outcomes in general; in short, the ‘group analysis’ can still be done. Thus, an individualized measurement approach, scaled to include anybody who wants to participate, can contribute to deepening the understanding of the science of autism.

The science of individualized measurements—the idiographic approach—is not a new idea, and Molenaar and others have elsewhere argued for the benefits of this methodology (Molenaar 2004). The idea is to measure and characterize patterns over time (not just minutes, but also days and weeks) for an individual, and then repeat this methodology for many individuals. The immense scientific value of this approach has long been known, yet it has been impractical. Today's technological advances make the idiographic science newly practical. Most of all, with the individualized data-intensive approach based on measurement in a person's natural environment, it is not just the science that benefits: each participant can now benefit with information specific to his or her needs and situation. Participants need not wait a year or more to see if a publication shows a finding, and wonder if their data were an outlier or a core part of that finding. Instead, if you participate in a study, you see your own data, and you can see if your patterns connect to those that the scientists find across the group of individuals.
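An idiographic analysis first characterizes each person against his or her own history and only then looks across people. As a minimal sketch of that ordering, assuming each participant contributes a list of readings, the Python helper below standardizes every series within person (z-scores from that person's own mean and standard deviation) before any pooling; the function name and data layout are hypothetical.

```python
import statistics
from typing import Dict, List


def within_person_zscores(records: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Standardize each participant's series against that participant's own mean and SD."""
    out: Dict[str, List[float]] = {}
    for pid, series in records.items():
        mean = statistics.fmean(series)
        sd = statistics.pstdev(series)
        # A perfectly flat series has no spread, so every reading maps to 0.
        out[pid] = [(v - mean) / sd if sd > 0 else 0.0 for v in series]
    return out
```

The standardized series can then be compared or clustered across participants without one person's higher overall level dominating the comparison.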

5. Design principles and challenges: comfort, clarity and control

The challenge is to design a device that can accurately and comfortably measure data outside the laboratory as well as it does inside the laboratory. As shown in figure 1, researchers at MIT have developed an EDA device that can be worn comfortably around the clock, privately logging or wirelessly transmitting data. The wrist sensor is also easy to put on or take off, requiring little dexterity to slip over the hand. Comfort is substantially improved over previous sensors, which required application of electrodes to fingers, securing of wires and attaching of a recording instrument to the body.

Researchers at MIT have tested the quality of the new device's signal by comparing it both to a commercial device used on the inner wrist and to traditional EDA sensing on the fingertips (Poh et al. submitted). These tests considered stimuli from three kinds of stressors: emotional, physical and cognitive. Measurements of EDA by the wrist-worn sensor showed very strong correlation (median correlation coefficient, 0.93 ≤ |r| ≤ 0.99) with a widely used commercial system (Thought Technology Flexcomp Infinity). A range of resistors from 0.1 to 4.0 MΩ was applied to the device, and the mean error in conductance was measured as small (0.68 ± 0.64%). Correlation between EDA on the distal forearm and ipsilateral palmar sites was strong (0.57 ≤ |r| ≤ 0.78) during all three stressor tasks, showing that the wrist is a feasible EDA recording site.
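Two pieces of arithmetic behind these validation figures can be made concrete. Conductance is the reciprocal of resistance, so the 0.1 to 4.0 MΩ test resistors correspond to 10 µS down to 0.25 µS, and agreement between two EDA recordings can be summarized with Pearson's r. The sketch below assumes the two signals are already time-aligned and sampled at the same rate; it is not the validation code used by Poh et al., and the function names are illustrative.

```python
import math


def conductance_us(resistance_mohm: float) -> float:
    """Conductance in microsiemens for a resistance in megaohms (G = 1/R)."""
    # 1 / (1 MΩ) = 1 µS, so no extra scale factor is needed.
    return 1.0 / resistance_mohm


def percent_error(measured: float, expected: float) -> float:
    """Absolute error as a percentage of the expected value."""
    return abs(measured - expected) / expected * 100.0


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length, time-aligned signals."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("signals must have equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant signal has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


# The endpoints of the resistor range used in the bench test.
print(conductance_us(0.1), conductance_us(4.0))  # 10.0 0.25
```

Because |r| only measures how well the two traces co-vary, it says nothing about an offset or gain difference between sites, which is why a separate resistor test of absolute conductance error is also informative.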

Attaining clarity with EDA measurement is a two-sided coin. On the one hand, scientists would like a precise measure of the individual emotion or state being experienced; on the other hand, many people feel uneasy with that possibility, and would not like detailed emotional state information to be obtained through analysing EDA. EDA represents generalized arousal—from emotional, cognitive or physical stimulation. If humidity, physical motion or other variables introduce changes in the moisture barrier between the skin and electrodes, these artefacts may masquerade as sympathetic responses; however, the wrist sensor also detects motion and usually these artefacts can be identified by their patterns (e.g. a sudden drop in level is usually due to the slippage of electrodes and not due to a reduction in sympathetic activation). Once identified, such artefacts can be discounted and/or removed. Also, humidity and temperature can be measured in the device and their effects removed to some extent. Importantly, EDA does not communicate valence: it can go up with liking or disliking, with truth or lies, or with joy or anger. While negative events tend to be more emotionally arousing than positive, one cannot reliably read valence from EDA alone.
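The rule of thumb in this paragraph (a sudden drop in level usually reflects electrode slippage rather than reduced sympathetic activation, and motion sensed by the same device helps identify artefacts) can be sketched as a simple flagging pass. The thresholds, a 20% fall within one sample step (`drop_fraction`) and a motion magnitude above 1.5 g (`accel_limit`), and the choice to mark flagged samples as missing are hypothetical placeholders, not values from the MIT sensor; a real pipeline would tune them to the device and its sampling rate.

```python
from typing import Optional


def flag_artifacts(
    eda: list[float],
    accel_g: list[float],
    drop_fraction: float = 0.2,  # hypothetical: >20% fall in one sample step
    accel_limit: float = 1.5,  # hypothetical: motion magnitude in g
) -> list[int]:
    """Return indices of samples that look like artefacts rather than arousal."""
    if len(eda) != len(accel_g):
        raise ValueError("EDA and accelerometer series must be aligned")
    flagged = []
    for i, value in enumerate(eda):
        prev = eda[i - 1] if i > 0 else None
        # A sudden fall in conductance is more likely electrode slippage
        # than a drop in sympathetic activation.
        sudden_drop = prev is not None and prev > 0 and (prev - value) / prev > drop_fraction
        # Vigorous movement can disturb the skin-electrode contact.
        high_motion = accel_g[i] > accel_limit
        if sudden_drop or high_motion:
            flagged.append(i)
    return flagged


def mask_artifacts(eda: list[float], flagged: list[int]) -> list[Optional[float]]:
    """Replace flagged samples with None so later analysis can discount them."""
    bad = set(flagged)
    return [None if i in bad else x for i, x in enumerate(eda)]
```

This sketch flags only the onset of a drop; deciding how long the signal stays unreliable after slippage, or correcting for humidity and temperature, would need additional rules.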

Ambiguity around valence is protective, but can also cause misunderstandings. If you see arousal go up, how do you know if the person is feeling good or bad? One mother of an autistic child told me that she recognizes her daughter is over-aroused when she sees her tapping her fingers in a certain way. However, she cannot tell from the tapping if the child is in a negatively aroused state or in a positively aroused state, until she tries the following: mother taps in the same way, and then slightly varies it. When her daughter adapts her tapping to follow her mother's variation, she is usually in a positive state; if, however, the daughter does not adapt, then it is probably a negative state. While this works for this person, such patterns will be different for different people, and may need to be discovered. In general, arousal must be combined with other information (such as facial expressions, provided these are accurate and working properly) to get a more complete understanding of emotion.

Control is a major factor in arousal regulation. A survey of the most stressful jobs concluded that bus drivers had the most stressful job because keeping to their schedule depended largely on traffic and other people, factors entirely outside their control. If you build a tool to help somebody regulate negative arousal, and they have trouble controlling the tool, or if they feel controlled by the tool or by others when they use the tool, then its effectiveness will be compromised. In our research we do several things to enhance control, including practising an ‘opt-in’ policy where nothing is sensed from a person without their fully informed consent, which can be withdrawn at any time without penalty. We also emphasize creating devices that a person can easily put on, take off or deactivate, and with which they can even fake their participation. We have been collaborating with autistic people to co-design toolkits that customize the control and output of their devices, so that we can understand their perspectives, needs and wants. We do not expect that there will be one best solution; instead, our approach is to develop a garden of devices, hoping that one or more can be adapted to meet individual needs and preferences.
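The opt-in policy can also be enforced in software, so that a device discards readings unless the wearer has agreed, and stops recording as soon as consent is withdrawn. The class here is a hypothetical sketch of such a gate; `ConsentGatedLogger` and its methods are illustrative names, not part of any MIT toolkit, and real consent handling would also involve the informed-consent process itself, not just a flag.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentGatedLogger:
    """Hypothetical logger that records samples only while the wearer opts in."""

    opted_in: bool = False  # nothing is sensed by default
    samples: list[tuple[float, float]] = field(default_factory=list)

    def opt_in(self) -> None:
        self.opted_in = True

    def withdraw(self) -> None:
        # Withdrawal takes effect immediately and carries no penalty:
        # the logger simply stops recording.
        self.opted_in = False

    def record(self, timestamp: float, value: float) -> bool:
        """Store a reading if consent is active; return whether it was kept."""
        if not self.opted_in:
            return False
        self.samples.append((timestamp, value))
        return True
```

Defaulting `opted_in` to `False` is the point of the design: the safe state is "not sensing", and every recorded sample implies an explicit prior choice by the wearer.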

6. Out of the laboratory and into daily life

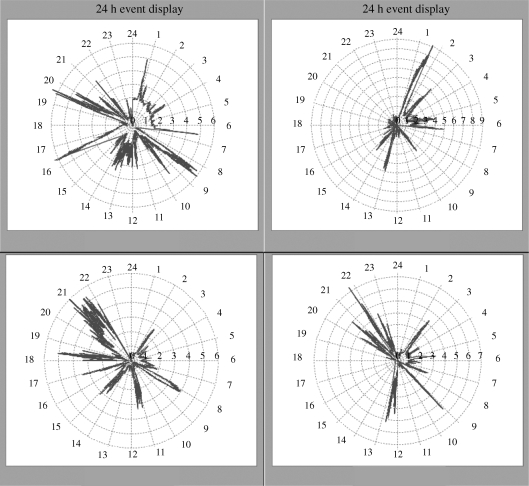

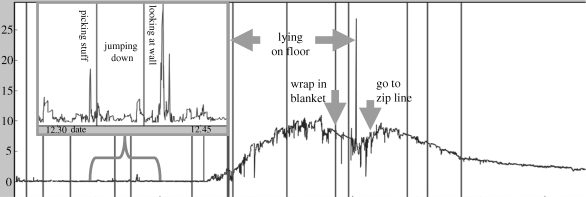

Emotional measures from daily life have the advantage of representing real-world experiences in familiar and unfamiliar environments, under naturally varying conditions (say, after a good night's sleep versus a bad one), and in encounters that really matter to people. Most laboratory encounters, by contrast, involve a requested activity that a person may or may not feel motivated to perform. Figures 2 and 3 show samples of data collected during daily life using the MIT EDA wrist sensor. Figure 2 shows 24 h changes in a neurotypical adult over four days, while figure 3 shows data from a child during a 45 min occupational therapy session, a sample from a much larger set that we are beginning to collect in a series of clinical studies that have just got underway. Both data sets show large variation in response level; in figure 3, this variation occurs within only 45 min.

Figure 2.

Radial 24 h plots of EDA data from four days of wearing the wireless wrist sensor. Arousal level is proportional to distance from the centre. On three of the days, the biggest peaks occur during waking hours, whereas in the upper-right plot the biggest peak occurs during sleep. Black lines, skin conductance.
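The radial layout in figure 2 (time of day as angle, arousal as distance from the centre) amounts to a polar-to-Cartesian mapping. This is a minimal sketch, assuming midnight at the top and time running clockwise like a clock face; the orientation actually used in the figure is not stated, and `radial_points` is a hypothetical helper.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def radial_points(
    seconds_since_midnight: list[float], eda_us: list[float], scale: float = 1.0
) -> list[tuple[float, float]]:
    """Map (time of day, EDA) samples to x, y for a 24 h radial plot."""
    points = []
    for t, value in zip(seconds_since_midnight, eda_us):
        # One full turn per day; wrap times past midnight back into [0, 24 h).
        angle = 2 * math.pi * (t % SECONDS_PER_DAY) / SECONDS_PER_DAY
        radius = scale * value  # distance from the centre is proportional to EDA
        # Using sin for x and cos for y puts 00:00 at the top and runs clockwise.
        points.append((radius * math.sin(angle), radius * math.cos(angle)))
    return points
```

Plotting one such curve per day makes it easy to see, as the caption notes, whether the largest peaks fall during waking hours or during sleep.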

Figure 3.

EDA over a 45 min occupational therapy session, as measured by the wireless wrist EDA sensor, for a child with sensory processing disorder. Arousal escalates when the child is lying quietly on the floor, appearing outwardly calm.

Published results of ANS activity in autism show at least two groups of responders during short laboratory measurements: one group with high ANS arousal and one group with low arousal (Schoen et al. 2008). However, we see in figure 3 an example of one child showing both very low and very high responses, with the largest escalation happening for no apparent reason. Specifically, at the point where the child lies on the ground, the level goes up by a factor of five, even though the child remains quiet and calm in appearance. Does this child have a high or a low baseline? Here it would depend completely on whether he is measured before the escalation, or later while he is lying calmly on the floor. Just because he looks calm lying on the floor does not mean it is a good time to capture an ANS ‘low baseline’. This child could easily be classified as ‘too high’ or ‘too low’ simply depending on when you measure him, and judging from the outside is not an accurate measure. A comfortable wearable sensor that can be worn long term, during daily activity, provides new opportunities for greater scientific understanding of the ANS in autism, and in many other conditions as well.
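The point about baselines can be made concrete: the same trace yields a ‘low’ or a ‘high’ baseline depending on which window is averaged. In this sketch the cut-offs (2.0 and 4.0) are hypothetical, and the trace in the usage lines is a made-up toy series that rises fivefold, loosely echoing the escalation described for figure 3; it is not the child's recorded data.

```python
from statistics import mean


def window_baseline(eda: list[float], start: int, end: int) -> float:
    """Mean EDA over samples [start, end), used as a 'baseline' estimate."""
    segment = eda[start:end]
    if not segment:
        raise ValueError("baseline window is empty")
    return mean(segment)


def classify_baseline(baseline: float, low_cutoff: float, high_cutoff: float) -> str:
    """Label a baseline against hypothetical group cut-offs."""
    if baseline < low_cutoff:
        return "low"
    if baseline > high_cutoff:
        return "high"
    return "typical"


# Toy trace (not real data): flat, then five times higher while the
# wearer would look equally calm from the outside.
trace = [1.0] * 10 + [5.0] * 10
early = window_baseline(trace, 0, 10)
late = window_baseline(trace, 10, 20)
print(classify_baseline(early, 2.0, 4.0), classify_baseline(late, 2.0, 4.0))  # low high
print(late / early)  # 5.0
```

Nothing in the classification step is wrong; the label flips purely because of when the window was taken, which is the measurement problem that continuous wearable recording helps expose.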

Emotion changes most readily with what is real and what really matters in a person's life. Laboratory visits allow researchers to observe some outward influences on a person's emotional state, and to usefully control some of those influences, but they also run the risk of entirely missing the patterns that characterize the symphony of real life. In autism, where ANS variations abound, it is all the more imperative that comfortable, personally controllable solutions be developed to better understand ANS changes and interactions, both for the sake of individuals who may not know what their own ANS is doing and for the sake of enhancing scientific knowledge. If scientific findings are to apply to daily life, it is important that daily life data be represented in the scientific data collection process. New technology, if properly developed, can make this advancement both possible and practical.

7. Conclusions

Those who have worked with many people on the autism spectrum know the following adage: ‘If you've met one person with autism, then you've met one person with autism’. In an age when the computational power of the first lunar mission fits into a trouser pocket, when wireless technology is pervasive and when kids upload home videos for the entertainment of people around the planet, there is no reason to restrict research to the old paradigm of laboratory observations that use snapshot measurement technology and average the findings across a group. While there are important conclusions to draw about groups, the technology is ready to support a richer understanding of individuals, especially by shedding light on the everyday challenges faced by people on the autism spectrum and on the role of emotion and ANS changes in these challenges. While difficulties bedevil the researcher who wishes to conduct rigorous science in the uncontrolled real-world measurement environment, these difficulties can be overcome with very large, dense sets of individualized data and with dynamic pattern analysis tools that characterize spaces of changing variables. New emotion communication technology can remedy many of the old measurement problems and enable personalized opportunities for learning, especially for people with autism diagnoses and for those who aim to better understand and serve them and their needs.

Acknowledgements

This work has benefited from discussions and collaborations with Matthew Goodwin, Amanda Baggs, Rich Fletcher, Oliver Wilder-Smith, Michelle Dawson, Joel Smith, Jonathan Bishop, Kathy Roberts, Dinah Murray, Lucy Jane Miller, Rana el Kaliouby and the whole Affective Computing group, especially Jackie Lee, Ming-Zher Poh, Elliott Hedman and Daniel Bender. I am especially grateful to Leo Burd at Microsoft, the National Science Foundation, the Nancy Lurie Marks Family Foundation and the Things That Think Consortium for helping support our autism technology research. The opinions, findings, conclusions and recommendations expressed in this article are those of the author and do not necessarily represent the views of Microsoft, the National Science Foundation or the Nancy Lurie Marks Family Foundation.

Endnotes

One contribution of 17 to a Discussion Meeting Issue ‘Computation of emotions in man and machines’.

Many people diagnosed on the autism spectrum wish to avoid ‘person with autism’ language in favour of being called ‘autistic’ (Sinclair 1999), while others prefer ‘classified autistic’ (Biklen 2005). American Psychological Association style prescribes that authors ‘respect people's preferences; call people what they prefer to be called’ (APA 1994, p. 48). A Google search conducted on 14 March 2007 revealed that 99 per cent of the first 100 Google hits for the term ‘autistics’ pointed to organizations run by autistic persons, whereas all of the first 100 Google hits for the terms ‘person/s with autism’ or ‘child/ren with autism’ led to organizations run by non-autistic individuals (Gernsbacher et al. 2008).

Study of the individual is referred to as idiographic, while study of group data is referred to as nomothetic. Nomothetic comes from the Greek nomos, meaning ‘custom’ or ‘law’, while idiographic comes from the Greek idios, meaning ‘proper to one’. Several arguments have been put forth for bringing back idiographic methods (Molenaar 2004); new technologies and analysis techniques, such as described here, make this approach increasingly favourable.

References

- American Psychiatric Association 2000 Diagnostic and statistical manual of mental disorders, 4th edn, text revision. Washington, DC: American Psychiatric Association.

- Aviezer H., et al. 2008 Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19, 724–732 (doi:10.1111/j.1467-9280.2008.02148.x)

- Baggs A. 2007 Sorry I'm late with the blog carnival. My home was invaded by interesting geeks. Cited 2009 April 18. Available from http://ballastexistenz.autistics.org/?p=367

- Baggs A. 1996 Eyeballs eyeballs eyeballs. Cited 2009 April 18. Available from http://ballastexistenz.autistics.org/?p=110

- Biklen D., et al. 2005 Autism and the myth of the person alone. New York, NY: New York University Press.

- Bonato P. 2003 Wearable sensors/systems and their impact on biomedical engineering. IEEE Eng. Med. Biol. Mag. 22, 18–20 (doi:10.1109/MEMB.2003.1213622)

- Boucsein W. 1992 Electrodermal activity. The Plenum series in behavioral psychophysiology and medicine. New York, NY: Plenum Press.

- Cannon W. B. 1927 The James–Lange theory of emotions: a critical examination and an alternative theory. Am. J. Psychol. 39, 106–124 (doi:10.2307/1415404)

- Critchley H. D. 2002 Electrodermal responses: what happens in the brain. Neuroscientist 8, 132–142 (doi:10.1177/107385840200800209)

- Donnellan A. M., Leary M. R., Patterson Robledo J. 2006 I can't get started: stress and the role of movement differences in people with autism. In Stress and coping in autism (eds Baron M. G., Groden J., Groden G., Lipsitt L. P.), pp. 205–245. New York, NY: Oxford University Press.

- Ekman P., Friesen W. V. 1976 Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

- Ekman P., Levenson R. W., Friesen W. V. 1983 Autonomic nervous system activity distinguishes among emotions. Science 221, 1208–1210.

- el Kaliouby R., Robinson P. 2005 The emotional hearing aid: an assistive tool for children with Asperger syndrome. Universal Access Inf. Soc. 4, 121–134.

- el Kaliouby R., Teeters A., Picard R. 2006 An exploratory social–emotional prosthesis for autism spectrum disorders. International Workshop on Wearable and Implantable Body Sensor Networks 2006, pp. 4–30. Cambridge, MA: MIT Media Lab.

- Fridlund A. J., Izard C. E. 1983 Electromyographic studies of facial expressions of emotions and patterns of emotions. In Social psychophysiology: a sourcebook (eds Cacioppo J. T., Petty R. E.), pp. 243–286. New York, NY: The Guilford Press.

- Gernsbacher M. A., et al. 2008 Why does joint attention look atypical in autism? Child Dev. Perspect. 2, 38–45.

- Goodwin M. S., et al. 2006 Cardiovascular arousal in individuals with autism. Focus Autism Other Dev. Disabil. 21, 100–123.

- Hill E., Berthoz S., Frith U. 2004 Brief report: cognitive processing of own emotions in individuals with autistic spectrum disorder and in their relatives. J. Autism Dev. Disord. 34, 229–235 (doi:10.1023/B:JADD.0000022613.41399.14)

- Hirstein W., Iversen P., Ramachandran V. S. 2001 Autonomic responses of autistic children to people and objects. Proc. R. Soc. Lond. B 268, 1883–1888 (doi:10.1098/rspb.2001.1724)

- Iarocci G., McDonald J. 2006 Sensory integration and the perceptual experience of persons with autism. J. Autism Dev. Disord. 36, 77–90.

- Iversen P. 2007 Strange son. New York, NY: Riverhead Trade.

- James W. 1890 The principles of psychology. Cambridge, MA: Harvard University.

- Joseph R. M., Ehrman K., McNally R., Keehn B. 2008 Affective response to eye contact and face recognition ability in children with ASD. J. Int. Neuropsychol. Soc. 14, 947–955 (doi:10.1017/S1355617708081344)

- Kleinginna P. R., Jr, Kleinginna A. M. 1981 A categorized list of emotion definitions, with suggestions from theory to practice. Motiv. Emotion 5, 345–379 (doi:10.1007/BF00992553)

- Kleinhans N. M., et al. 2009 Reduced neural habituation in the amygdala and social impairments in autism spectrum disorders. AJP Adv. 1–9.

- Lang P. J., Greenwald M. K., Bradley M. M., Hamm A. O. 1993 Looking at pictures: affective, facial, visceral, and behavioral reactions. Psychophysiology 261–273 (doi:10.1111/j.1469-8986.1993.tb03352.x)

- Leary M. R., Hill D. A. 1996 Moving on: autism and movement disturbance. Ment. Retard. 34, 39–53.

- Levine M., Mesibov G. 2007 Personal guidance system (PGS) for school and vocational functioning. Paper presented at the 6th Annual International Meeting for Autism Research (IMFAR), Seattle, WA.

- Liu C., et al. 2008a Online affect detection and robot behavior adaptation for intervention of children with autism. IEEE Trans. Robot. 24, 883–896 (doi:10.1109/TRO.2008.2001362)

- Liu C., Conn K., Sarkar N., Stone W. 2008b Physiology-based affect recognition for computer-assisted intervention of children with autism spectrum disorder. Int. J. Hum.–Comput. Stud. 66, 662–677 (doi:10.1016/j.ijhsc.2008.04.003)

- Madsen M., el Kaliouby R., Goodwin M., Picard R. W. 2008 Technology for just-in-time in-situ learning of facial affect for persons diagnosed with an autism spectrum disorder. Tenth ACM Conf. on Computers and Accessibility (ASSETS), Halifax, Canada.

- Ming X., Julu P. O. O., Wark J., Apartopoulos F., Hansen S. 2004 Discordant mental and physical efforts in an autistic patient. Brain Dev. 26, 519–524.

- Molenaar P. D. M. 2004 A manifesto on psychology as idiographic science: bringing the person back into scientific psychology, this time forever. Measurement 2, 201–218.

- Picard R. W., Healey J. 1997 Affective wearables. Pers. Technol. 1, 231–240 (doi:10.1007/BF01682026)

- Picard R. W., Scheirer J. 2001 The Galvactivator: a glove that senses and communicates skin conductivity. Proc. Ninth Int. Conf. on Human–Computer Interaction, New Orleans, LA.

- Picard R. W., Vyzas E., Healey J. 2001 Toward machine emotional intelligence: analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 23, 1175–1191 (doi:10.1109/34.954607)

- Poh M.-Z., Swenson N. C., Picard R. W. Submitted. A wearable sensor for comfortable, long-term assessment of electrodermal activity.

- Schachter S. 1964 The interaction of cognitive and physiological determinants of emotional state. Adv. Exp. Soc. Psychol. 1, 49–80.

- Schlosberg H. 1954 Three dimensions of emotion. Psychol. Rev. 61, 81–88 (doi:10.1037/h0054570)

- Schoen S. A., Miller L. J., Brett-Green B., Hepburn S. L. 2008 Psychophysiology of children with autism spectrum disorder. Res. Autism Spectr. Disord. 2, 417–429 (doi:10.1016/j.rasd.2007.09.002)

- Sinclair J. 1999 Why I dislike ‘person first’ language. Available from author.

- Strauss M., Reynold C., Hughes S., Park K., McDarby G., Picard R. W. 2005 The HandWave Bluetooth Skin Conductance Sensor. The First Int. Conf. on Affective Computing and Intelligent Interaction, Beijing, China.

- SymTrend 2009 Electronic diaries and graphic tools for health care and special education. Cited 2009 April 3. Available from http://www.symtrend.com

- Wing L., Shah A. 2000 Catatonia in autistic spectrum disorders. Br. J. Psychiatry 176, 357–362 (doi:10.1192/bjp.176.4.357)

- Winton W. M., Putnam L. E., Krauss R. M. 1984 Facial and autonomic manifestations of the dimensional structure of emotion. J. Exp. Soc. Psychol. 20, 195–216.