Abstract

Knowledge of the stage composition and the temporal dynamics of human cognitive operations is critical for building theories of higher mental activity. This information has been difficult to acquire, even with different combinations of techniques such as refined behavioral testing, electrical recording/interference, and metabolic imaging studies. Verbal object comprehension was studied herein in a single subject in whom indwelling subdural electrode arrays had been placed for clinical purposes, by using three tasks (object naming, auditory word comprehension, and visual word comprehension), two languages (English and Farsi), and four techniques (stimulus manipulation, direct cortical electrical interference, electrocorticography, and a variation of direct cortical electrical interference that produces time-delimited effects, called timeslicing). Electrical interference at a pair of electrodes on the left lateral occipitotemporal gyrus interfered with naming in both languages and with comprehension in the language tested (English). The naming and comprehension deficit resulted from interference with processing of verbal object meaning. Electrocorticographic indices of cortical activation at this site during naming started 250–300 msec after visual stimulus presentation. By using the timeslicing technique, which varies the onset of electrical interference relative to the behavioral task, we found that completion of processing for verbal object meaning varied from 450 to 750 msec after stimulus onset. This variability was a function of the subject’s familiarity with the objects.

Determining the functional-anatomic correlates and temporal dynamics of verbal object comprehension has been difficult with current investigative techniques. Previous investigations have inferred brain–language associations from impairments after brain injury (1), activation studies during language tasks (2–6), cortical electrical interference studies (7, 8), or electroencephalogram/event-related potentials (ERP) (9, 10). To date, several studies have integrated these methods to maximize their spatial and temporal resolution for functional brain mapping [e.g., positron-emission tomography and ERP (10)]. However, few studies have clearly delineated the functional stages involved in object processing, their temporal dynamics, or the specific brain regions that may be involved. One reason has been the difficulty in bringing on-line measures to bear on this problem in humans.

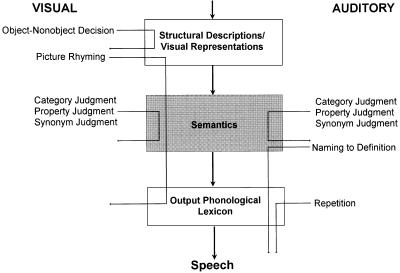

To embark on such studies, it is necessary first to adopt a model of language processing as a framework for investigation. A widely accepted model proposes three levels of object meaning (category, object, and property levels) in a single amodal semantic system (Fig. 1) (1, 11–14, 17). Within this framework, verbal object meaning processing may be examined with object naming and comprehension tasks. In addition, verbal object processing in this schema can be integrally linked with the visual object recognition stage described in nonhuman primates (18) and, more recently, in humans (19) (Fig. 1).

Figure 1.

Stages and stage connections generally thought to underlie object processing in visual confrontation naming are illustrated in Fig. 1: structural descriptions/visual representations, semantics, semantics-to-phonology transfer, and the output phonological lexicon (1, 11–15). To assess the integrity of these stages, the following tasks were used: for structural descriptions/visual representations, visual object/nonobject decision (1, 11–14); for semantics, category judgment, synonym judgment, and property judgment tasks (1, 11–15); for semantics-to-phonology transfer, picture rhyming and naming to definition; and for the output phonological lexicon, auditory repetition of the words used for naming (16).

This outline has been well established; however, the details of the anatomic location and temporal dynamics remain undetermined. For example, one unresolved issue is the location of the region(s) responsible for amodal object meaning processing in language (11, 15, 20). Moreover, if such a region exists, is its processing engaged immediately upon seeing an object or does it follow modality-specific object recognition in the visual system? What is the time course of object meaning processing in this region? What factors (e.g., sensory or linguistic) influence object meaning processing there?

To address these questions about object meaning, we used multiple investigative measures of on-line processing, including electrical “lesion” and activation techniques, focusing on object naming and comprehension. These were investigated in a single clinical subject who underwent implantation of subdural electrodes for the treatment of epilepsy. The techniques included electrocorticography (ECoG) to assess the temporal onset of neural activation at a given brain site and a variation of direct electrical cortical interference (timeslicing) that allows assessment of the time course and dynamics of object processing at that brain site.

METHODS

Subject.

The subject was a 22-year-old right-handed male with a history of medically intractable complex partial seizures since age 8. He was fluent in Farsi, English, and French. The subject’s language development was normal. He first learned Farsi at home at an appropriate age (1–2 years). At school, he began formal training in English at approximately age 11, and from ages 13 to 15 he studied English at the National Institute for Linguistics in Iran. At approximately age 15, he moved to the United States, where English became his primary language and he spoke Farsi with family and friends. He began learning French when he moved to the United States and at the time of testing was in his third year of college as a French major.

Neurological examination was normal. MRI scan was normal except for a venous angioma in the left precuneus. Baseline neuropsychological function was considered normal in view of his cultural background.

Procedures.

Full informed consent was obtained for all clinical and research procedures, as approved by our institution’s Human Subjects Committee. We used cortical electrical interference to delineate the stage of object processing being studied, direct cortical electrographic recording to investigate onset of cerebral processing during object processing, and variation of the onset of direct electrical cortical interference (timeslicing) to determine the time course of processing.

Direct electrical cortical interference.

Direct cortical electrical interference is a functional mapping technique that appears to induce a temporary focal area of cortical dysfunction subjacent to an electrode pair mainly through the massive depolarization of neurons beneath that pair (21–23). Models of the electrical interference used have suggested that its effects are limited to cortical deactivation, with minimal distant effects (24, 25).

To aid anticipated surgical resection of his seizure focus, indwelling subdural electrode arrays were surgically implanted, with a total of 174 platinum/iridium electrodes (2.3-mm-diameter exposed surface; 1 cm apart) covering both frontal lobes, the anterior parietal lobes, and the lateral and inferior aspects of the temporal lobes (Fig. 2). Electrodes remained in place for 12 days for clinical seizure localization and cortical function mapping (21, 22).

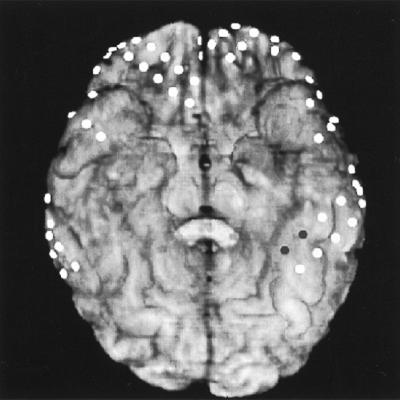

Figure 2.

Coregistration of a computed tomographic scan of the subdural electrodes with the subject’s three-dimensional reconstructed brain MRI. The perspective is a basal brain view with electrode locations represented by the circles. The pair of dark circles in the left lateral occipitotemporal gyrus (anterior aspect) represents the electrode pair (LD17–18).

Electrode location was determined by coregistration of a computed tomographic scan of the electrodes with a three-dimensional reconstruction of the subject’s brain MRI, using the automated image registration program (Roger P. Woods, University of California, Los Angeles, 1993 version). Electrode positions were also independently verified by plain skull x-rays; both methods gave similar localizations of the electrodes.

Electrical interference testing was performed by using established protocols (21). Testing was initiated after the subject had fully recovered from surgery. He was awake and fully responsive during testing and was tested individually in a private room. Cortical interference testing of cognitive function and the electroencephalogram from the tested region were recorded onto videotape, permitting later analysis of regional electrocortical activity in relation to the onset/offset of the electrical current and scoring of the subject’s responses. Clinical screening for language function mapping was conducted first, followed by the research testing of ECoG and timeslicing.

Electrical interference was produced by delivering 0.3-msec square-wave pulses of alternating polarity at a rate of 50 pulses per sec, in trains of up to 5 sec, between adjacent electrode pairs. Current thresholds, measured in mA, were established for each electrode pair before testing by increasing current intensity in 0.5- to 1.0-mA steps until the maximal level of 15 mA was reached. If afterdischarges occurred, testing was conducted at the next lower current level that did not produce significant afterdischarges.
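At 50 pulses per second, pulse onsets are 20 msec apart, so each pulse has to last well under 20 msec for the train to be deliverable. The sketch below therefore assumes 0.3-msec pulses of alternating polarity and checks that the train is physically realizable (a duty cycle of 1.5%). It also sketches the threshold procedure as a loop that raises current until 15 mA is reached or an afterdischarge appears, then falls back to the last afterdischarge-free level. The 1-mA starting level, the fixed 1-mA step, and the `causes_afterdischarge` callback (a hypothetical stand-in for the clinician's reading of the recording) are illustrative assumptions, not the authors' protocol software.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class PulseTrain:
    """Alternating-polarity square-pulse train (illustrative parameters)."""
    pulse_width_s: float = 0.3e-3   # ASSUMED 0.3-msec pulse width
    rate_hz: float = 50.0           # 50 pulses per second (from the text)
    max_duration_s: float = 5.0     # trains of up to 5 sec (from the text)

    @property
    def period_s(self) -> float:
        return 1.0 / self.rate_hz

    def duty_cycle(self) -> float:
        # Fraction of each period during which current flows.
        return self.pulse_width_s / self.period_s

    def is_feasible(self) -> bool:
        # Each pulse must end before the next one begins.
        return self.pulse_width_s < self.period_s

    def pulses(self) -> List[Tuple[float, int]]:
        """(onset time in sec, polarity) for each pulse; polarity alternates +1/-1."""
        n = int(round(self.max_duration_s * self.rate_hz))
        return [(i * self.period_s, 1 if i % 2 == 0 else -1) for i in range(n)]


def titrate(causes_afterdischarge: Callable[[float], bool],
            start_ma: float = 1.0, step_ma: float = 1.0,
            max_ma: float = 15.0) -> Optional[float]:
    """Raise current stepwise up to max_ma; on an afterdischarge, return the
    last level that did not produce one (None if even start_ma did)."""
    last_ok: Optional[float] = None
    current = start_ma
    while current <= max_ma + 1e-9:
        if causes_afterdischarge(current):
            return last_ok
        last_ok = current
        current += step_ma
    return last_ok
```

For example, if afterdischarges first appear at 9 mA, `titrate(lambda ma: ma >= 9.0)` returns 8.0; with no afterdischarges it stops at the 15-mA ceiling.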

To screen for language functions related to object comprehension, we used visual confrontation naming, auditory comprehension, spontaneous speech, and word repetition. At sites where naming and comprehension were impaired, we tested semantic comprehension with visual confrontation naming, auditory word/object comprehension, and visual word/object comprehension tasks. Two input routes, auditory-word and visual-word, were used to ensure that results were independent of input modality. The tasks probed the integrity of the processing stages generally accepted as responsible for object naming and comprehension (Fig. 1) (1, 11–14): visual object recognition/structural descriptions, verbal object semantics, semantics-to-phonology transfer, and the output phonological lexicon (1, 11–15). To assess the integrity of these stages, the following tasks were used:

For screening of object processing, visual confrontation naming was used. Black-and-white line drawings of 84 objects from the Boston Naming Test, Experimental Edition (16), were presented on a computer monitor, with the verbal response timed by a voice-onset-activated microphone. Responses were hand scored by the experimenter. The stimuli were prescreened, and only those named correctly without hesitation were used in this and the timeslicing experiments.

For visual object recognition/structural description testing, the visual object decision task was used. Line drawings of objects and nonobjects were presented to the subject for a yes or no verbal/gesture response to the question “Does the line drawing represent a familiar object shape?” (1, 11–14, 19).

For verbal object semantic comprehension, three tasks were used: category, synonym, and property judgment. In the category judgment task, questions of the type “Is an [object] a [category label]?” (e.g., “Is a bird an animal?”) were presented. In the synonym judgment task, questions probed whether pairs of words mean the same thing (e.g., “Do car and automobile mean the same thing?”); stimuli consisted of abstract and concrete word pairs. In the property judgment task, we used questions of the type “Does an [object] have [feature]?” (e.g., “Does a bird have wings?”). Stimuli for the tasks testing the semantic stage were presented both in writing and, in a separate administration, auditorily. Responses were yes or no, by verbal response or gesture (1, 11–15).

For semantics-to-phonology transfer, the picture rhyming and naming to definition tasks were used. In the picture rhyming task, the subject decided whether the names of pairs of pictures of high-frequency objects (26) rhymed; a yes or no verbal/gesture response was required. The names of the rhyming pairs were orthographically similar. For naming to definition, definitions of high-frequency objects were presented orally, and the subject provided the object’s name (1, 11–14).

The output phonological lexicon was tested by auditory repetition: the names of the pictures from the Boston Naming Test (16) were presented auditorily for the subject to repeat aloud.

Direct electrical cortical recording.

To assess onset of cortical activation, we analyzed changes in the power spectrum of ECoG recordings from the subdural electrodes during performance of a visual confrontation naming task (similar to the task described for direct electrical cortical interference). Suppression of rhythmic activity in the alpha (8–13 Hz) and beta (15–25 Hz) bands has been correlated with cortical activation during sensorimotor and language tasks using scalp electroencephalogram (28) and subdural ECoG recordings (29) and in basal temporal cortices during the picture naming task used herein (30–32).

The 84 object pictures were presented individually on a video monitor for a verbal response of the object name. ECoG was recorded continuously from the subdural electrode arrays from 1 sec before to 2 sec after each object presentation. ECoG signals were recorded for this task on 2 days (sampling rate, 1 kHz; passband, 1–100 Hz).
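As an illustration of the recording windows described above, the sketch below cuts a continuous multichannel recording into trials spanning 1 sec before to 2 sec after each picture onset, which at 1 kHz gives 3,000 samples per channel per trial. It assumes NumPy, a (channels × samples) array, and known onset sample indices; the function `epoch` and its arguments are hypothetical, not the authors' software.

```python
import numpy as np

FS_HZ = 1000               # sampling rate from the text
PRE_S, POST_S = 1.0, 2.0   # window around picture onset from the text


def epoch(continuous: np.ndarray, onset_samples, fs: int = FS_HZ,
          pre_s: float = PRE_S, post_s: float = POST_S) -> np.ndarray:
    """Cut (n_channels, n_samples) ECoG into (n_trials, n_channels, n_window).

    onset_samples: sample index of each picture onset (hypothetical input).
    Trials whose window would run past either end of the recording are skipped.
    """
    pre = int(round(pre_s * fs))
    post = int(round(post_s * fs))
    n_samples = continuous.shape[1]
    trials = [continuous[:, o - pre:o + post]
              for o in onset_samples
              if o - pre >= 0 and o + post <= n_samples]
    return np.stack(trials)
```

Sample index `k` within an epoch then corresponds to `k - 1000` msec relative to picture onset.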

Timeslicing of direct electrical cortical interference.

The onset latency of the electrical interference was varied relative to the presentation of the behavioral stimulus. In this manner, processing could be interfered with at different time points during the on-line performance of the cognitive task.

The task used was visual confrontation naming, performed as for direct electrical cortical interference. The picture stimulus was presented at time 0 and electrical interference was started at some point relative to picture onset (e.g., 500 msec after stimulus). Electrical interference continued until a naming response was initiated, at which time the picture was withdrawn, or until the trial timed out.

Initial screening on this subject for the interference paradigm was done at −100, 0, +400, and +1,000 msec after picture onset (screening intervals based on previous pilot studies with other subjects). Picture naming was completely disrupted by interference beginning at or before 400 msec after picture presentation but was unaffected by interference beginning 1,000 msec after picture onset. This interval was therefore explored further in 50-msec increments (from 450 to 900 msec). At each delay, 21 stimuli that had been consistently named correctly on prior testing were presented. All items and delays were randomized, and this testing was done in one block. On the basis of these data, the +400- and +450-msec intervals were tested further, with 21 pictures at each, again in randomized order.
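The block design above amounts to crossing the stimulus set with the list of interference delays and shuffling the result. A minimal sketch, assuming the same 21 pictures were used at every delay from 450 to 900 msec (10 delays, hence 210 trials in the block); the item labels and the `timeslice_schedule` helper are hypothetical.

```python
import itertools
import random

DELAYS_MS = list(range(450, 901, 50))   # 450, 500, ..., 900 msec (10 delays)


def timeslice_schedule(items, delays_ms=DELAYS_MS, seed=None):
    """Pair every item with every interference-onset delay, in random order.

    Returns one block as a list of (item, delay_ms) trials. On each trial the
    picture appears at time 0 and interference starts delay_ms later.
    """
    trials = list(itertools.product(items, delays_ms))
    random.Random(seed).shuffle(trials)   # seeded so a schedule can be reproduced
    return trials
```

For instance, `timeslice_schedule([f"item{i:02d}" for i in range(1, 22)], seed=0)` yields 210 trials, 21 at each delay.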

To determine whether object familiarity was a critical factor in determining which objects completed semantic processing earlier or later, we obtained post hoc subjective familiarity ratings. Pictures of 85 objects, including the 21 in question, were presented to the subject for rating of visual familiarity on a scale from 1 to 10 (33).

RESULTS

Cortical Interference: Screen for Language Function.

Sites in both left and right temporal and frontal regions were screened clinically for language function by cortical electrical interference (Table 1), at times through a bilingual interpreter. At one electrode pair (LD17–18) (Fig. 2) in the lateral occipitotemporal gyrus of the left basal temporal lobe, cortical interference caused a deficit in naming, spontaneous speech, and comprehension, while repetition remained intact (Table 1), suggesting that the deficit occurred in semantic processing (Fig. 1). Notably, visual confrontation naming was equally impaired in both English and Farsi.

Table 1.

Experiments on basic language functions and the stages involved in naming at electrode pair LD17–18

| Task | Response | No. without interference | No. with interference |
|---|---|---|---|
| **Basic language screening** | | | |
| Visual confrontation naming in English | Correct | 7 | 0 |
| | Incorrect | 0 (0.76, 1) | 5 (0, 0.32) |
| Visual confrontation naming in Farsi | Correct | 6 | 0 |
| | Incorrect | 0 (0.73, 1) | 6 (0, 0.27) |
| Auditory comprehension in English (Token Test) | Correct | 6 | 0 |
| | Incorrect | 0 (0.73, 1) | 4 (0, 0.38) |
| Spontaneous speech in English | Correct | 4 | 1 |
| | Incorrect | 0 (0.62, 1) | 2 (0.02, 0.84) |
| Oral repetition of single words in English | Correct | 10 | 5 |
| | Incorrect | 0 (0.83, 1) | 0 (0.68, 1) |
| **Stages of visual confrontation naming** | | | |
| *Structural descriptions/visual representations* | | | |
| Visual object/nonobject decision | Correct | 49 | 33 |
| | Incorrect | 0 (0.96, 1) | 1 (0.88, 1.00) |
| *Semantic processing* | | | |
| Auditory-word presentation | | | |
| Category judgment | Correct | 18 | 0 |
| | Incorrect | 1 (0.79, 1.00) | 9 (0, 0.19) |
| Synonym judgment | Correct | 9 | 0 |
| | Incorrect | 1 (0.63, 0.99) | 9 (0, 0.19) |
| Property judgment | Correct | 15 | 0 |
| | Incorrect | 0 (0.88, 1) | 10 (0, 0.17) |
| Visual-word presentation | | | |
| Category judgment | Correct | 15 | 0 |
| | Incorrect | 0 (0.88, 1) | 11 (0, 0.16) |
| Synonym judgment | Correct | 17 | 0 |
| | Incorrect | 1 (0.78, 1.00) | 16 (0, 0.11) |
| Property judgment | Correct | 13 | 0 |
| | Incorrect | 0 (0.86, 1) | 10 (0, 0.17) |
| *Semantics-to-phonology* | | | |
| Picture rhyming | Correct | 10 | 0 |
| | Incorrect | 1 (0.66, 0.99) | 8 (0, 0.21) |
| Naming to definition | Correct | 15 | 1 |
| | Incorrect | 3 (0.62, 0.96) | 13 (0, 0.28) |
| *Output phonological lexicon* | | | |
| Oral word repetition from Boston Naming Test | Correct | 19 | 18 |
| | Incorrect | 0 (0.90, 1) | 0 (0.90, 1) |

Results of language screening tests and specific tests of naming stages through direct electrical cortical interference. Numbers of correct and incorrect responses (incorrect includes no response) are listed for each task, with and without electrical cortical interference. Likelihood support intervals for the probability of a correct answer, equivalent to large-sample 95% confidence intervals (34), are given in parentheses.
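The tabulated intervals appear to match a binomial likelihood-ratio (support) interval: the set of probabilities p whose likelihood is at least exp(-3.84/2), about 0.147, times its maximum, where 3.84 is the 95% point of the chi-square distribution with 1 df. That cutoff is our reading of "equivalent to large sample 95% confidence intervals", not a statement from the source. Under it, 7 correct of 7 gives a lower bound of exp(-1.92/7), about 0.76, and 0 correct of 5 gives an upper bound of 1 - exp(-1.92/5), about 0.32, as in Table 1. The sketch below computes such intervals by bisection; the function names are hypothetical.

```python
import math

# Half the 95% chi-square (1 df) critical value: 3.8415 / 2.
HALF_CHI2_95 = 1.920729


def log_lik(p, k, n):
    """Binomial log-likelihood (constant dropped) of k successes in n trials.
    Terms with zero count are skipped, so p = 0 or p = 1 is allowed there."""
    ll = 0.0
    if k > 0:
        ll += k * math.log(p)
    if n - k > 0:
        ll += (n - k) * math.log(1.0 - p)
    return ll


def bisect(f, lo, hi, iters=100):
    """Root of f on [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    flo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        fmid = f(mid)
        if (fmid > 0) == (flo > 0):
            lo, flo = mid, fmid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def support_interval(correct, incorrect, cutoff=HALF_CHI2_95):
    """Interval of p whose log-likelihood lies within `cutoff` of the maximum."""
    n = correct + incorrect
    p_hat = correct / n
    ll_max = log_lik(p_hat, correct, n)
    # g(p) > 0 inside the interval and < 0 outside it.
    g = lambda p: log_lik(p, correct, n) - ll_max + cutoff
    lower = 0.0 if correct == 0 else bisect(g, 1e-12, p_hat)
    upper = 1.0 if incorrect == 0 else bisect(g, p_hat, 1.0 - 1e-12)
    return lower, upper
```

For example, the spontaneous-speech cell with interference (1 correct, 2 incorrect) gives approximately (0.02, 0.84) after rounding, matching the table.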

Cortical Interference: Verbal Semantic Object Processing.

The subject’s pattern of errors during direct cortical interference implied a deficit in semantic processing. During testing of spontaneous speech, he had word-finding difficulties, empty speech, paraphasias, and speech interruption. During visual confrontation naming, errors included failures to respond, circumlocutions, self-cuing, and paraphasias. During auditory comprehension (assessed by the Token Test), he misinterpreted the verb, color, and/or shape, leading to incorrect responses.

These data are most plausibly interpreted as arising from a deficit in semantic object processing, subject to several assumptions. One is that the entire pattern of data is caused by a single processing deficit. It is conceivable, although not parsimonious, that several separate processing deficits (e.g., in semantic processing and in semantic-to-phonologic translation) could also account for these data. Another assumption is that direct visual-to-phonologic connections in naming can be discounted, since there is little to no evidence for them under these circumstances (35).

Given the model and these explicit assumptions, as the data in Table 1 show, the intact performance on visual object/nonobject decision can be most easily interpreted as evidence that access to visual structural descriptions and visual object representations was intact. The intact auditory word repetition indicates intact output phonologic processing. The deficits apparent in Table 1—in category, synonym, and property judgment—then indicate that cortical electrical interference caused a deficit localized to the semantic level of object processing.

ECoG: Onset of Verbal Object Semantic Processing.

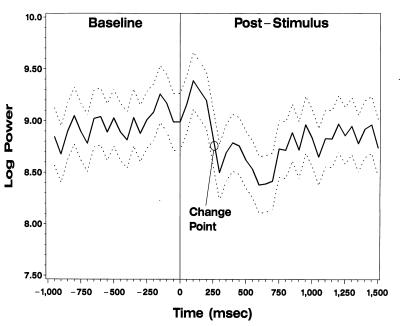

For the ECoG obtained during the picture naming task, alpha and beta bands were analyzed to estimate the time at which processing began in cortex underlying electrode LD17. The ECoG signals were referenced to an average reference, and power in the alpha/beta bands was computed for overlapping 100-msec time segments. Power measurements were logarithmically transformed for statistical analysis. For each testing day, an ANOVA was used to compare alpha/beta power in each poststimulus time segment with the average prestimulus power. To account for the dependence among repeated observations within a trial and the heteroscedasticity between trials, a first-order autoregressive covariance structure with heterogeneous variances was assumed.
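A rough sketch of the power computation just described, assuming NumPy: subtract the across-channel mean at each sample (average reference), then compute log power between 8 and 25 Hz in Hann-tapered 100-msec windows. The 50-msec window step, the Hann taper, and the single combined 8 to 25 Hz band are assumptions. A 100-msec window at 1 kHz resolves frequency only in 10-Hz bins, so the band is represented here by the 10- and 20-Hz bins. The mixed-model ANOVA with autoregressive covariance is not reproduced.

```python
import numpy as np

FS_HZ = 1000   # sampling rate from the text
WIN = 100      # samples per window = 100 msec at 1 kHz
STEP = 50      # ASSUMPTION: 50-msec step (amount of overlap not stated)


def average_reference(ecog: np.ndarray) -> np.ndarray:
    """Re-reference (n_channels, n_samples) ECoG to the mean of all channels."""
    return ecog - ecog.mean(axis=0, keepdims=True)


def log_band_power(x: np.ndarray, band=(8.0, 25.0), fs: int = FS_HZ,
                   win: int = WIN, step: int = STEP):
    """Log power of a 1-D signal within `band` (Hz) in overlapping windows.

    Returns (window centers in samples from the start of x, log power).
    """
    taper = np.hanning(win)
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)          # 10-Hz bins for 100 samples
    in_band = (freqs >= band[0]) & (freqs <= band[1])  # here: the 10- and 20-Hz bins
    starts = np.arange(0, len(x) - win + 1, step)
    out = np.empty(len(starts))
    for i, s in enumerate(starts):
        seg = x[s:s + win] - x[s:s + win].mean()       # remove the window mean
        spectrum = np.abs(np.fft.rfft(seg * taper)) ** 2
        out[i] = np.log(spectrum[in_band].sum())
    return starts + win // 2, out
```

For a 3,000-sample epoch that starts 1 sec before the picture, subtracting 1,000 from the returned centers gives times in msec relative to stimulus onset.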

A significant overall difference was detected at the 0.001 level on both days, both when all trials were included and when only trials with correct responses were analyzed. To determine the most likely onset time of alpha/beta power suppression, a change-point analysis was performed by fitting a series of ANOVA models assuming two constant means, one before and one after the change point, with the change point varied in 50-msec steps from 0 to 600 msec after stimulus onset. The same covariance structure described above was assumed. A consistent change point, estimated as the point at which the profile likelihood is maximized (36), was found between 250 and 300 msec on both days when all trials were included, and there was a significant (P < 0.001) decrease in alpha/beta power at this time on both days. When only the correct trials were analyzed, the same results were found for day 2 (Fig. 3, change point circled).
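The change-point search can be sketched as a profile-likelihood scan: for each candidate time from 0 to 600 msec in 50-msec steps, fit one mean before and one mean after, and keep the candidate with the highest maximized likelihood. For simplicity this sketch assumes independent Gaussian errors with a common variance instead of the first-order autoregressive, heterogeneous-variance structure used in the study, so it illustrates the logic rather than reproducing the analysis. The function name is hypothetical.

```python
import numpy as np


def change_point(times_ms, log_power, candidates_ms=range(0, 601, 50)):
    """Profile-likelihood change point for a two-mean model.

    times_ms: (n_times,) segment times relative to stimulus onset.
    log_power: (n_trials, n_times) log alpha/beta power.
    Simplification: i.i.d. Gaussian errors with one common variance.
    Returns (best_change_point_ms, {candidate: profile log-likelihood}).
    """
    t = np.asarray(times_ms, dtype=float)
    y = np.asarray(log_power, dtype=float)
    n = y.size
    profile = {}
    for tau in candidates_ms:
        before = t < tau
        if before.all() or not before.any():
            continue  # both segments need at least one time point
        # Fitted value: one mean before tau, another from tau onward.
        mu = np.where(before, y[:, before].mean(), y[:, ~before].mean())
        rss = ((y - mu) ** 2).sum()
        sigma2 = rss / n  # maximum-likelihood variance given tau
        profile[tau] = -0.5 * n * (np.log(2 * np.pi * sigma2) + 1.0)
    best = max(profile, key=profile.get)
    return best, profile
```

Because the number of fitted parameters is the same for every candidate, the candidates can be compared directly on their maximized log-likelihoods.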

Figure 3.

ECoG spectral analysis of onset of activation at LD17–18. Solid line designates the mean logarithmic power of the ECoG signal over time. Dashed lines represent the 95% confidence interval of these means. A consistent change point of alpha/beta power suppression is illustrated by the circle between 250 and 300 msec.

Timeslicing: Verbal Object Semantic Processing.

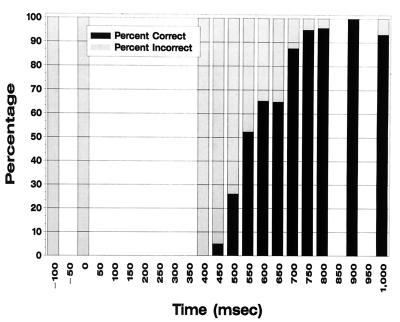

Given the multimodal semantic deficit at LD17–18 during naming and comprehension, we next investigated the time course of semantic processing at this site by using the visual confrontation naming task. In varying the onset of electrical interference, we expected that (i) semantic processing already completed before interference began would not be affected, so naming would proceed; (ii) processing that had not yet begun would be disrupted, so naming would not occur; and (iii) processing already in progress would be variably affected. This pattern was observed (Fig. 4).

Figure 4.

Object naming performance as a function of electrical interference onset time in the timeslicing experiment. The x axis designates the delay of onset of electrical interference relative to object presentation. Bars represent the percentage of objects named correctly and incorrectly at each delay.

Cortical interference beginning up to 400 msec after stimulus presentation disrupted semantic processing for all stimuli, implying that semantic processing was still under way at that time for every stimulus. For some stimuli, processing for object meaning became resistant to interference at LD17–18 as early as 450 msec after picture presentation, but for others it remained vulnerable up to 750 msec after picture onset (Fig. 4).

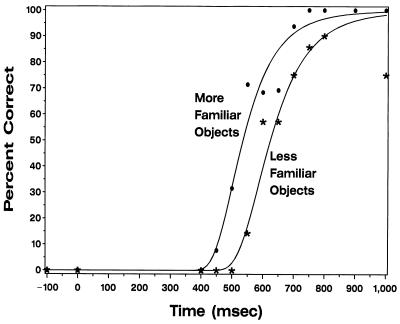

To investigate the basis for this difference, the stimuli were separated into two groups, corresponding to pictures of high and low subjective familiarity, as rated by the subject. Relationships between naming probability and onset time of cortical electrical interference were closely fit by a Weibull proportional hazards model (37). When familiarity was coded as a dichotomous variable (scores: 4–6, more familiar; 7–10, less familiar), model fit improved significantly (likelihood ratio = 12.8; df = 1; P < 0.001). Semantic processing was completed significantly earlier (about 90 msec, P < 0.001) for objects with high subjective familiarity, and naming of these objects was not impaired for cortical interference delays greater than 650 msec (Fig. 5). The model with a factor allowing the processing time for each object to be estimated separately did not significantly improve fit compared with the model with familiarity score (likelihood ratio = 28.3; df = 19; P = 0.0771). This suggests that the majority of the variability in processing times can be accounted for by the subject’s visual familiarity with the objects.
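To make the model and tests concrete, the sketch below writes a Weibull proportional-hazards curve for the probability that processing has finished by interference onset t, with familiarity multiplying the hazard by exp(beta), and computes chi-square p-values for likelihood-ratio statistics. The offset `t0_ms`, all parameter values, and the function names are hypothetical, and we assume the reported likelihood ratios are the usual statistic, 2 × the difference in log-likelihood. Under that assumption, 12.8 on 1 df gives P of roughly 0.0003 and 28.3 on 19 df gives P near 0.077, consistent with the text. The 19 df would correspond to replacing the single familiarity parameter with 20 per-object parameters for the 21 pictures (our inference, not stated in the source).

```python
import math


def prob_completed(t_ms, scale_ms, shape, beta=0.0, familiar=0, t0_ms=0.0):
    """Weibull proportional-hazards probability that processing is done by t_ms.

    Cumulative hazard H = ((t - t0) / scale) ** shape * exp(beta * familiar);
    P(completed by t) = 1 - exp(-H). t0_ms is a hypothetical offset before
    which completion is impossible; all parameter values are illustrative.
    """
    if t_ms <= t0_ms:
        return 0.0
    h = ((t_ms - t0_ms) / scale_ms) ** shape * math.exp(beta * familiar)
    return 1.0 - math.exp(-h)


def _gammq(a, x, eps=1e-14, itmax=500):
    """Regularized upper incomplete gamma Q(a, x): series or continued fraction."""
    if x <= 0:
        return 1.0
    gln = math.lgamma(a)
    if x < a + 1.0:
        # Series for the lower function P(a, x); return 1 - P.
        ap, term = a, 1.0 / a
        total = term
        for _ in range(itmax):
            ap += 1.0
            term *= x / ap
            total += term
            if abs(term) < abs(total) * eps:
                break
        return 1.0 - total * math.exp(-x + a * math.log(x) - gln)
    # Modified Lentz continued fraction for Q(a, x).
    tiny = 1e-300
    b = x + 1.0 - a
    c = 1.0 / tiny
    d = 1.0 / b
    h = d
    for i in range(1, itmax + 1):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        if abs(d) < tiny:
            d = tiny
        c = b + an / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return math.exp(-x + a * math.log(x) - gln) * h


def lr_test_p(lr_stat, df):
    """Upper-tail chi-square probability for a likelihood-ratio statistic."""
    return _gammq(df / 2.0, lr_stat / 2.0)
```

A positive `beta` for the more familiar group raises the hazard, which corresponds to the earlier completion reported for high-familiarity objects.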

Figure 5.

Percentage of objects named correctly as a function of electrical interference onset time at LD17–18, for objects rated more or less familiar. Semantic processing was completed significantly earlier for high-familiarity than for low-familiarity objects.

DISCUSSION

To summarize, in this single subject, aspects of semantic object processing could be related to a single site in the left lateral occipitotemporal gyrus. The processing appeared to begin 250–300 msec after visual presentation of the object picture and was completed between 450 and 750 msec after presentation. The time of completion of processing appeared to be related to the subjective familiarity of the object; those with high subjective familiarity had processing that completed earlier than those with lower subjective familiarity. The deficit caused by direct electrical cortical interference at this site affected several levels of object processing at the semantic level (category, item/object, and property), across different input modalities (auditory-word, visual-word, and visual-picture), and across at least two relatively distinct languages (English and Farsi).

These results have both methodological and theoretical implications.

Methodologic Issues.

It must be emphasized that these results were obtained in the context of several distinct, and perhaps unique, opportunities, as well as limitations. This is only a single case; the subject was trilingual; he also had epilepsy and a venous angioma. Neither the angioma nor the epilepsy would have been expected to alter his cerebral organization (38), but they are potentially confounding factors. It has been debated whether multilingualism is associated with altered cerebral organization for aspects of language (8), although the results from this case suggest that at least some aspects of semantic processing can have a common localization across languages. Single-case studies such as this one have both advantages and drawbacks for theoretical interpretation (see, for example, refs. 39 and 40). The very uniqueness of this subject permitted studies that could not have been attempted in a monolingual normal subject who was not being considered for cerebral resection.

Architecture of Semantic Systems.

It has frequently been debated whether there are multiple semantic systems or a single semantic system, functionally, anatomically, or both. Our findings argue that, in the sequence of processing, there exists at least one point (and possibly more) at which there is a single semantic processing stage, regardless of whether prior or subsequent processing stages involve a single amodal semantic system or multiple modality-specific semantic systems (15, 20). Furthermore, this amodal semantic stage depends on at least one relatively specific anatomic site in this subject.

It was of course not possible to rigorously exclude all other possible explanations of these data, adjudicate among all possible theories of the tasks used, or address all possible theories of semantics. Several general possibilities need to be considered to explain the deficit obtained with cortical interference. An attentional deficit could be invoked to explain these findings. However, it could not have been a generalized attentional deficit, or even a modality-specific attentional deficit, because the subject had intact performance on auditory repetition and on visual object/nonobject decision. Any proposed attentional deficit would have to account for the specific pattern of his deficits and for the specific pattern of his errors on each task. A deficit in priming might also be entertained as an account of these deficits. Yet priming effects would not have been expected to be pronounced in these experiments. The intervals between trials were irregular, somewhat self-paced (but also dependent upon on-line scoring), and fairly long (typically greater than 8 sec and variable). These factors would have worked against both automatic and conscious priming effects. A deficit in recall might be invoked, instead of a deficit in the actual processing. However, the subject’s tasks were procedural, on-line tasks, for which recall deficits are not usually invoked to explain impairments (41). Alternatively, it might be argued that it was not semantics that was affected, but some other function common to all of the tasks. However, within standard models of the functions underlying these tasks, no other deficit appears to be able to parsimoniously account for the data. For example, an orthographic deficit would not explain his pattern of performance including deficits in spontaneous speech, auditory word comprehension, or in picture naming.

The composition of the tasks used may be more complex than, or different from, what we assumed, and this could affect interpretation of the results. For example, some models of naming and spontaneous speech add a stage of lemma selection between semantics and output phonology (for a discussion, see ref. 14). Because one of the putative markers of the lemma stage is syntactic information, such as grammatical gender, and our stimuli were typically objects tested in English, it was not possible to explore a possible lemma stage. Nevertheless, his failure both to comprehend (e.g., on the Token Test) and to produce speech during electrical interference at the same site is most parsimoniously explained by a semantic deficit, which would affect both tasks, rather than by a lemma deficit, which would affect only production.

Neither could this study test all possible theories of semantics or of semantic phenomena. In the model of semantic processing we considered, processing modules are independent enough to allow assignment of errors to specific stages. However, some distributed models of naming and semantics make it more difficult to assign errors to failure of a particular stage. There are also models of semantic organization that do not necessarily separate out explicit category, object, and property levels (42).

Another limitation of this study was anatomical. Lack of extensive electrode coverage precluded delineating the full extent of cortex in this area associated with semantic functions. The left occipitotemporal region has been implicated in semantic functions other than semantic object processing. Semantic priming, for example, was studied in an event-related potential (ERP) study using indwelling electrodes at locations similar to the ones we explored; that study recorded priming-related changes that began around 250 msec after stimulus presentation and peaked at 400 msec (9, 43). Because of stimulus, task, and measurement differences, these data are not necessarily congruent with our own.

The general conclusion nonetheless remains relatively secure: semantic processing has at least one common amodal stage, and this stage may have specific anatomic correlates. This conclusion is consistent with positron-emission tomography studies suggesting that amodal semantic processing is associated with activation of the left lateral occipitotemporal gyrus (among other structures) (2, 4), and it confirms and extends previous studies suggesting a network of cortical regions involved in semantic representation, access, and/or processing. It also extends the number of language-related functions associated with the basal temporal lobe (5, 6, 44).

Time to Access Single Object Meaning.

In keeping with the expectation that visual object processing must precede access to meaning, our data show no evidence of regional activation in the left basal temporal lobe until 250–300 msec after visual stimulus presentation. Thus, although processing for meaning may or may not temporally overlap visual object recognition (our data cannot determine which), any such overlap appears to be incomplete, so some degree of seriality apparently remains at this level of human cognitive processing for naming.

This result agrees with several previous estimates of the onset of semantic processing derived from scalp and intracranial ERP techniques (e.g., 150–300 msec from ERP and 230 msec in the left inferior frontal region) (10, 45). Others have suggested that cognitive processing during naming occurs in the lateral posterior temporal lobe at 700–1,200 msec, as assessed by changes in the spectral density of the ECoG (46). However, these investigators did not test whether the cognitive processing was semantic and did not assess the occipitotemporal sites we investigated; they explored only lateral temporal lobe regions thought to be involved later in the naming stream than the basal temporooccipital region, and they used only 500-msec epochs, which limited their temporal resolution.

Dynamics of Processing.

The temporal dynamics of information processing within a stage, for example whether it is all-or-none or more gradual, are of considerable interest in neuroscience. Data from the two techniques used in this study (ECoG and varying the onset of cortical electrical interference) imply several essential points about the time course of semantic object processing at this site. The neural activity that underlies this cognitive function does not begin until 250–300 msec after stimulus onset (as shown by ECoG). The next 200 msec of processing (250–450 msec) is necessary for semantic operations on all objects. Whether additional processing is necessary (from 450 to 750 msec after stimulus onset) depends on item familiarity. By 750 msec after stimulus onset, semantic processing has been essentially completed for all items at this site (as shown by the lack of effect of electrical interference). These data, obtained within a single stage at a single neuroanatomic site, are further evidence that information accumulates gradually rather than in a strictly all-or-none fashion. Furthermore, once the bulk of its processing is past, a stage becomes progressively less susceptible to disruption. Of course, this account does not exclude the possibility that there are processing thresholds or that there is all-or-none processing in the nervous system. It does suggest, however, that at the more macroscopic cognitive level studied herein, the change in information can be described as continuous and gradual rather than abrupt.
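The timeline in the preceding paragraph can be restated as a small decision rule. The Python sketch here is a simplification for illustration, not the authors' analysis: the function and constant names are hypothetical, the 250 msec boundary takes the early end of the reported 250–300 msec ECoG onset, and it assumes that interference, once started, persists until the item's processing at this site would otherwise have completed, so any onset earlier than an item's completion time is predicted to disrupt that item.

```python
# Boundaries in msec after stimulus onset, taken from the text.
ACTIVATION_ONSET_MS = 250   # ECoG activation first seen at 250-300 msec (early bound used)
OBLIGATORY_END_MS = 450     # all items still require processing up to here
COMPLETION_MAX_MS = 750     # processing essentially complete for all items by here


def processing_phase(t_ms: float) -> str:
    """Label a time point (msec after stimulus onset) with the phase described in the text."""
    if t_ms < ACTIVATION_ONSET_MS:
        return "pre-activation"
    if t_ms < OBLIGATORY_END_MS:
        return "obligatory"
    if t_ms < COMPLETION_MAX_MS:
        return "familiarity-dependent"
    return "complete"


def predicts_disruption(onset_ms: float, completion_ms: float) -> bool:
    """Hypothetical rule: interference beginning at onset_ms disrupts an item whose
    semantic processing at this site completes at completion_ms iff onset_ms < completion_ms.

    completion_ms must lie in the 450-750 msec range reported in the text.
    """
    if not OBLIGATORY_END_MS <= completion_ms <= COMPLETION_MAX_MS:
        raise ValueError("completion time outside the reported 450-750 msec range")
    return onset_ms < completion_ms
```

Under these assumptions, an item whose processing completes at 600 msec would be disrupted by interference beginning at 500 msec but not by interference beginning at 650 msec; the range check simply encodes the bounds reported in the text.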

Effects of Familiarity on Dynamics of Processing.

Familiarity is well known to influence the speed of picture/object naming (47). An appreciable familiarity effect (∼90 msec) was seen even with the relatively restricted range of familiarity in the current experiment: the greater the subjective familiarity of an item, the earlier it loses susceptibility to interference (Fig. 5).
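One simple way to quantify "the earlier an item loses susceptibility" from timeslicing trials is to bracket, for each item, the latest interference onset that still disrupted naming and the earliest onset that no longer did, then compare bracket midpoints between familiarity groups. This Python sketch is illustrative only and is not the statistical analysis used in this study; the function names are hypothetical, it assumes susceptibility is monotonic in onset time, and any trial values fed to it here are invented for demonstration.

```python
from statistics import mean
from typing import List, Sequence, Tuple

# One timeslicing trial: (interference onset in msec after stimulus, naming disrupted?)
Trial = Tuple[float, bool]


def completion_bracket(trials: Sequence[Trial]) -> Tuple[float, float]:
    """Bound the time at which an item stops being susceptible to interference.

    Returns (lower, upper): the latest onset that still disrupted naming and the
    earliest onset that no longer did. Assumes susceptibility is monotonic in onset.
    """
    disrupted = [onset for onset, hit in trials if hit]
    spared = [onset for onset, hit in trials if not hit]
    if not disrupted or not spared:
        raise ValueError("need at least one disrupted and one spared trial")
    lower, upper = max(disrupted), min(spared)
    if lower >= upper:
        raise ValueError("trials are not monotonic in onset time")
    return lower, upper


def completion_estimate(trials: Sequence[Trial]) -> float:
    """Point estimate of an item's completion time: the midpoint of its bracket."""
    lower, upper = completion_bracket(trials)
    return (lower + upper) / 2


def familiarity_effect(low_items: List[Sequence[Trial]],
                       high_items: List[Sequence[Trial]]) -> float:
    """Mean completion estimate of low-familiarity items minus that of high-familiarity
    items; a positive value means familiar items lose susceptibility earlier."""
    return (mean(completion_estimate(t) for t in low_items)
            - mean(completion_estimate(t) for t in high_items))
```

The midpoint is a deliberately crude estimator; with denser onset sampling, a fitted dose-response curve per item would give a less coarse estimate of when susceptibility is lost.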

The magnitude of the familiarity effect found herein was comparable to those reported in the literature, but its locus was not. The familiarity effect in picture/object naming is usually attributed to postsemantic processes such as lexical access (47), but in the current experiment the visual object recognition and/or semantic stage was clearly influenced. Thus, more than one stage in the naming sequence may be responsible for familiarity effects. There may even be strategic influences on which stage contributes most in a given situation; the conditions of the current experiment may have emphasized the semantic stage.

Acknowledgments

We thank M. Tesoro, S. Kremen, Dr. J. Zinreich, M. Wilson, S. Nathan, and Dr. P. Talalay. This investigation was supported in part by the National Institutes of Health (Grants R01 NS26553 and NS29973 and K08 NS01821 from the National Institute of Neurological Disorders and Stroke and K08 DC00099 from the National Institute on Deafness and Other Communication Disorders) and the Dana Foundation.

Footnotes

This paper was submitted directly (Track II) to the Proceedings Office.

Abbreviations: ECoG, electrocorticography; ERP, event-related potentials.

Pictures chosen for presentation included objects from several categories (e.g., animals, food, plants, personal items, furniture, tools, household objects, sports equipment, school supplies, transportation, etc.), without a selection bias.

Whole-word repetition is thought to depend primarily on whole-word output phonology and, to a lesser degree, on output syllabic, phonemic, or even articulatory processes as noted by Berndt (27).

References

1. Hart J, Gordon B. Ann Neurol. 1990;27:226–231. doi: 10.1002/ana.410270303.

2. Vandenberghe R, Price C, Wise R, Josephs O, Frackowiak R S. Nature (London) 1996;383:254–256. doi: 10.1038/383254a0.

3. Wise R, Chollet F, Hadar U, Friston K, Hoffner E, Frackowiak R. Brain. 1991;114:1803–1817. doi: 10.1093/brain/114.4.1803.

4. Damasio H, Grabowski T J, Tranel D, Hichwa R D, Damasio A R. Nature (London) 1996;380:499–505. doi: 10.1038/380499a0.

5. Martin A, Haxby J V, Lalonde F M, Wiggs C L, Ungerleider L G. Science. 1995;270:102–105. doi: 10.1126/science.270.5233.102.

6. Martin A, Wiggs C L, Ungerleider L G, Haxby J V. Nature (London) 1996;379:649–652. doi: 10.1038/379649a0.

7. Ojemann G. J Neurosurg. 1979;50:164–169. doi: 10.3171/jns.1979.50.2.0164.

8. Ojemann G, Whitaker H. Arch Neurol. 1978;35:409–412. doi: 10.1001/archneur.1978.00500310011002.

9. Nobre A C, Allison T, McCarthy G. Nature (London) 1994;372:260–263. doi: 10.1038/372260a0.

10. Snyder A Z, Abdullaev Y G, Posner M I, Raichle M E. Proc Natl Acad Sci USA. 1995;92:1689–1693. doi: 10.1073/pnas.92.5.1689.

11. Hart J, Gordon B. Nature (London) 1992;359:60–64. doi: 10.1038/359060a0.

12. Riddoch M, Humphreys G. Cogn Neuropsychol. 1987;4:131–185.

13. Hillis A, Caramazza A. Brain Lang. 1991;40:106–144. doi: 10.1016/0093-934x(91)90119-l.

14. Starreveld P, La Heij W. J Exp Psychol Learn Mem Cogn. 1996;22:896–918.

15. Humphreys G, Riddoch M, Quinlan P. Cogn Neuropsychol. 1988;5:67–104.

16. Goodglass H, Kaplan E, Weintraub S. Boston Naming Test, Experimental Edition. Boston: Boston Univ.; 1976.

17. Miller G A, Johnson-Laird P N. Language and Perception. Cambridge, MA: Harvard Univ. Press; 1976.

18. Mishkin M, Ungerleider L, Macko K. Trends Neurosci. 1983;6:414–417.

19. Kraut M, Hart J, Jr, Soher B J, Gordon B. Neurology. 1997;48:1416–1420. doi: 10.1212/wnl.48.5.1416.

20. Shallice T. Cogn Neuropsychol. 1988;5:133–142.

21. Lesser R P, Lueders H, Klem G, Dinner D, Morris H, Hahn J, Wyllie E. J Clin Neurophysiol. 1987;4:27–53. doi: 10.1097/00004691-198701000-00003.

22. Hart J, Lesser R P, Gordon B. J Cogn Neurosci. 1992;4:337–343. doi: 10.1162/jocn.1992.4.4.337.

23. Li C-H, Chou S N. J Cell Comp Physiol. 1962;60:1–16. doi: 10.1002/jcp.1030600102.

24. Nathan S S, Sinha S R, Gordon B, Lesser R P, Thakor N V. EEG Clin Neurophysiol. 1993;86:183–192. doi: 10.1016/0013-4694(93)90006-h.

25. Agarwal V S. Master’s thesis. Baltimore, MD: Johns Hopkins Univ.; 1994.

26. Kucera H, Francis W N. Computational Analysis of Present-Day American English. Providence, RI: Brown Univ. Press; 1967.

27. Berndt R S. In: Handbook of Neuropsychology. Boller F, Grafman J, editors. Vol. 1. Amsterdam: Elsevier; 1988. p. 329.

28. Toro C, Cox C, Friehs G, Ojakangas C, Maxwell R, Gates J R, Gumnit R J, Ebner T J. EEG Clin Neurophysiol. 1994;93:380–389. doi: 10.1016/0168-5597(94)90127-9.

29. Crone N E, Hart J, Lesser R P, Boatman D, Gordon B. Epilepsia. 1994;35:103 (abstr.).

30. Pfurtscheller G, Aranibar A. EEG Clin Neurophysiol. 1977;42:817–826. doi: 10.1016/0013-4694(77)90235-8.

31. Pfurtscheller G, Klimesch W. EEG Clin Neurophysiol. 1991;90:58–65.

32. Crone N, Hart J, Boatman D, Lesser R, Gordon B. Brain Lang. 1994;47:466–468.

33. Gordon B. J Mem Lang. 1985;24:631–645.

34. Edwards A W F. Likelihood. Cambridge, U.K.: Cambridge Univ. Press; 1972.

35. Gordon B. In: Anomia. Goodglass H, Wingfield A, editors. San Diego: Academic; 1997. pp. 31–64.

36. Carlin B P, Gelfand A E, Smith A F M. Appl Stat. 1992;41:389–405.

37. McCullagh P, Nelder J. Generalized Linear Models. 2nd Ed. London: Chapman & Hall; 1989.

38. Rasmussen T, Milner B. Ann NY Acad Sci. 1977;299:355–369. doi: 10.1111/j.1749-6632.1977.tb41921.x.

39. Newcombe F, Marshall J C. Cogn Neuropsychol. 1988;5:549–564.

40. McCloskey M, Caramazza A. Cogn Neuropsychol. 1988;5:583–623.

41. Squire L R, Zola-Morgan S. Proc Natl Acad Sci USA. 1996;93:13515–13522. doi: 10.1073/pnas.93.24.13515.

42. Small S L, Hart J, Nguyen T, Gordon B. Brain. 1995;118:441–453. doi: 10.1093/brain/118.2.441.

43. Nobre A, McCarthy G. J Neurosci. 1995;15:1090–1098. doi: 10.1523/JNEUROSCI.15-02-01090.1995.

44. Lueders H, Lesser R P, Hahn J, Dinner D S, Morris H H, Wyllie E, Godoy J. Brain. 1991;114:743–754. doi: 10.1093/brain/114.2.743.

45. Kounios J. Psychon Bull Rev. 1996;3:265–286. doi: 10.3758/BF03210752.

46. Ojemann G, Fried I, Lettich E. EEG Clin Neurophysiol. 1989;73:453–463. doi: 10.1016/0013-4694(89)90095-3.

47. Levelt W J M. Speaking: From Intention to Articulation. Cambridge, MA: MIT Press; 1989.