Abstract

Over a decade ago it was proposed that the primate auditory cortex is organized in a serial and parallel manner in which there is a dorsal stream processing spatial information and a ventral stream processing non-spatial information. This organization is similar to the “what”/“where” processing of the primate visual cortex. This review will examine several key studies, primarily electrophysiological, that have tested this hypothesis. We also review several human imaging studies that have attempted to define these processing streams in the human auditory cortex. While there is good evidence that spatial information is processed along a particular series of cortical areas, the support for a non-spatial processing stream is not as strong. Why this should be the case and how to better test this hypothesis are also discussed.

Keywords: Primate Auditory Cortex, Dual-Stream Processing, Macaque Serial Parallel Processing

Introduction

The mammalian auditory cortex is a critical link between auditory stimuli and perception and is crucial in the comprehension of speech, which is arguably the most significant social stimulus to humans. In spite of this clear importance, there are relatively few focused studies on the functional organization of the primate auditory cortex, especially with respect to neural correlates of perception, compared to the visual system. Consequently, relatively little was understood about this structure until the end of the last century. Experiments investigating the functional organization of the auditory cortex were limited in the 1970s [e.g. 9, 51, 84] and received similarly little attention throughout the 1980s. Real interest was generated in the mid to late 1990s by several studies, primarily anatomical in nature, that began to tease apart the relationship between multiple auditory cortical fields and the hierarchical nature of processing between these fields.

In the late 1990s, a hypothesis was put forth suggesting that auditory-cortical processing might follow the general functional organization of the visual cortex. Based on a series of anatomical studies by Romanski, Rauschecker, Kaas, and Hackett, a functional organization of the primate auditory cortex was proposed [37, 69, 70, 78] that was analogous to information flow in the visual system [91, 92]. That is, information is processed in two parallel and hierarchical ‘streams’: a dorsal spatial-processing (i.e., a ‘where’) stream and a ventral non-spatial-processing (i.e., a ‘what’) stream. Over the past decade, anatomical, physiological and functional imaging studies have tested and extended this hypothesis, although they have also led to mixed results in many respects. While the functional-imaging studies tend to support the two-stream hypothesis, the physiological studies in non-human primates are much fewer in number. More importantly, these physiological data are equivocal with respect to the two-stream hypothesis. Indeed, studies both consistent and inconsistent with this hypothesis have been produced in the same laboratory. This review will examine many of these recent studies, concentrating on non-human primates but also including human functional imaging studies as well as studies on other animal models that address the two-stream hypothesis.

The anatomical organization of the primate auditory cortex

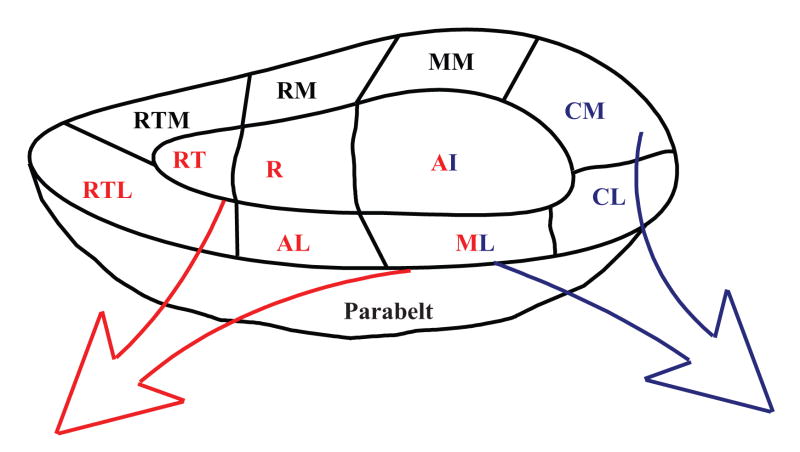

Early studies that used cytoarchitecture as the primary investigatory tool defined several auditory fields [e.g. 11, 22]. However, each laboratory used different nomenclatures for these fields making cross-study comparisons difficult. More recently, studies that used modern anatomical techniques have reconciled these older studies into a unified framework: the auditory cortex is organized into a core – belt – parabelt network. The core comprises three distinct tonotopic fields (Figure 1) and is surrounded by a series of cortical fields that make up the ‘belt’ auditory cortex. Lateral to the belt is a region of cortex denoted as the ‘parabelt’ [see 37].

Figure 1.

Schematic illustration of the primate auditory cortex. The core area is made up of the primary auditory cortex (AI), the rostral field (R) and the rostrotemporal field (RT). The belt area is composed of the caudolateral (CL), caudomedial (CM), middle medial (MM), rostromedial (RM), rostrotemporal medial (RTM), rostrotemporal lateral (RTL), anterolateral (AL) and middle lateral (ML) fields. Lateral to the lateral belt areas is the parabelt. The dual stream hypothesis posits that spatial processing will occur in a dorsal, or caudal stream (blue) and non-spatial processing will occur in the ventral, or rostral stream (red).

Connectivity studies have shown that these regions of auditory cortex (core, belt, and parabelt) are serially interconnected with their nearest neighbors but are not interconnected with regions that are further removed. Consequently, whereas the core fields are interconnected with belt fields and the belt is interconnected with the parabelt, there is not a direct projection from the core to the parabelt [e.g. 37 see also 17, 18].

The projections from the parabelt out of the auditory cortex to higher order cortical structures define the auditory dorsal (spatial) processing stream and the ventral (non-spatial) processing stream. Specifically, the projection from the core to the parabelt to the parietal lobe defines the dorsal stream, whereas the projection through the temporal lobe and to the prefrontal cortex defines the ventral stream. Importantly, these parietal and prefrontal areas overlap with, and include, part of the visual dorsal and ventral processing streams, making a strong case that the functional organization of auditory cortical processing is segregated in the same way as for visual cortical functions.

This serial and parallel processing principle has been defined in other primate species besides macaque monkeys. These species include chimpanzees [28], marmosets [17, 18] and humans using both functional imaging [e.g. 34, 95] and anatomical techniques [e.g. 12, 20]. Thus, much like the visual and somatosensory systems, there is clear anatomical evidence for serial and parallel processing of information from the core to the belt, then parabelt and higher order cortical areas.

Physiological-response properties

The data involving physiological responses come in two primary forms. The first is functional-imaging studies, predominantly in the form of functional magnetic resonance imaging (fMRI). This technique measures a change in the oxygenation of blood within the brain and is believed to reflect changes in the synaptic activity and, to a slightly lesser extent, action potentials in the adjacent neural tissue [46]. Recent studies using optical imaging in animals, which has spatial resolution to the level of individual cells, raise the intriguing possibility that glial cells (astrocytes) could also contribute to such a signal [83]. Regardless of the cellular mechanism, however, it is generally accepted that the changes in blood oxygenation measured using fMRI are strongly correlated with stimulus-driven neuronal activity. The second form of data is the direct recording of action potentials from single neurons or from small clusters of neurons across the different cortical fields in non-human primates, primarily the macaque monkey.

In the auditory cortex, both fMRI and recording studies produce similar results with respect to the basic response properties of the auditory cortex. Namely, the core fields respond well to tones and noise, whereas the belt and parabelt respond best to more complex stimuli. For example, studies in the alert and anesthetized macaque [67, 71, 74, 90], as well as the marmoset [6], have shown that neurons in core areas have narrow tuning functions and respond best to tone stimuli. In contrast, neurons in the belt areas have broader frequency tuning functions and respond better to band-passed noise. Similarly, imaging studies in both macaques and humans have shown that responsivity to complex stimuli, such as vocalizations and environmental noise, is greater in non-core areas [61–64]. In humans, non-core regions of the auditory cortex also respond best to more complex stimuli such as moving auditory stimuli [41, 42] and vocalizations or speech [e.g. 7, 45].

The basis of this progression from simple to more complex response properties lies in the unique pattern of connectivity between the auditory thalamus and the network of areas/fields in the auditory cortex. Different areas in the belt cortex are innervated independently by different core fields; these belt areas also have direct projections from the thalamus that are not shared with the core [e.g. 11, 36, 56]. Consequently, lesions of the core fields eliminate the responses of neurons in the belt to tones but not to noise stimuli [68]. These findings are consistent with those in the visual and somatosensory cortex, where the receptive fields of cortical neurons appear to become more complex as one goes from the core to the belt areas.

Studies that have attempted to determine which stimulus features these areas, or any areas responsive to complex auditory stimuli, are preferentially processing have not been wholly successful [e.g. 67, 71, 74, 89, 97]. This lack of success may be due, in part, to the fact that at the level of the auditory cortex, neurons do not code auditory-stimulus features but instead are coding auditory objects [see 39]. As such, it may be more profitable to test the mechanisms underlying components of auditory-object processing such as object invariance.

While the studies described above show good evidence of serial processing in the auditory cortex, they do not provide a direct test of the parallel-stream hypothesis. The first and perhaps most compelling evidence for parallel pathways processing spatial and non-spatial information was an early study by Rauschecker and colleagues [90]. In this experiment, neuronal activity was recorded from the lateral belt cortex (areas CL, ML and AL) while anesthetized macaques were stimulated with conspecific vocalizations presented at different locations. It was found that the selectivity for the vocalization, independent of its spatial location, was greater in neurons in the more rostral belt fields. In contrast, the selectivity of neurons in the caudal belt fields was greater for the spatial location of the stimulus, independent of vocalization type. This experiment was the first to provide direct evidence in favor of the dual processing-stream hypothesis. The subsequent studies described below further tested this idea.

Spatial processing in non-human primates

Historically, there has been great interest in how acoustic space is represented in the mammalian cerebral cortex. Multiple studies in carnivores and primates indicate that a topographic map of acoustic space does not exist in the primary or secondary auditory cortex [e.g. 8, 33, 38, 52, 66, 75, 86, 97]. This observation led to the hypothesis that acoustic space must be encoded by the responses of populations of neurons [e.g. 19, 21, 55, 87]. More recently, several single-unit studies conducted in the alert macaque indicated that the response properties are similar to those seen in the cat: both cat and macaque auditory-cortex neurons have receptive fields that are very broad and are not topographically organized [75, 94, 97]. These receptive fields have a graded response to different spatial locations, with responses to ipsilateral space generally weaker than those to contralateral space. Consequently, neural activity is a monotonic function of location if testing is constrained to frontal space [94], but is more Gaussian in shape if 360° of space is tested [97].

The relationship between the shape/size of the receptive fields in the auditory cortex and the macaque’s psychophysical sensitivity has been tested by Recanzone and colleagues [55, 75, 97]. An important contribution from these studies was that the recordings from different auditory-cortical areas were obtained from the same monkeys whose psychophysical sensitivity was also measured. The first study demonstrated that spatial tuning was sharper in neurons of the caudal field CM than in A1 neurons for both azimuth and elevation [75]. Further, by using stimuli with different spectral bandwidths, it was demonstrated that the spatial tuning of populations of CM neurons, but not A1 neurons, was better able to account for the sound-localization ability of the monkeys.

In a subsequent study, the resolution of neurons in the auditory cortex was more thoroughly tested by assessing their spatial selectivity, not only at different elevations of the interaural axis but across 360° of azimuthal space and at four different stimulus intensities [97]. Spatial sensitivity to sound-source location was, once again, better for CM neurons than for A1 neurons, but CL neurons had the greatest spatial selectivity; ML neurons had slightly better spatial selectivity than A1 neurons, whereas neurons in R and MM had the poorest spatial selectivity. Also, unlike previous studies in carnivores [cited above, see also 57, 65, 73], many neurons had their sharpest tuning at the highest stimulus intensities. However, across the population, spatial tuning did not substantially increase with increasing intensity. This result is consistent with behavioral studies that demonstrated that sound localization is relatively stable across a wide range of intensities, once above detection threshold [2, 15, 76, 82, 88].

Finally, this relationship between neural sensitivity and sound-localization sensitivity was formally tested by Miller and Recanzone [55]. In this study, they used the neural data from Woods et al. (2006) to create a population-based maximum-likelihood model [35]. They demonstrated that the firing rates of CL neurons carried enough information to account for sound-localization performance as a function of both location and intensity. A different caudal belt area (CM) was less accurate than CL. However, the populations of neurons in both areas were better than the core areas (A1 and R) and the belt areas ML and MM in predicting localization accuracy.
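The logic of a population-based maximum-likelihood readout can be illustrated with a minimal sketch. The review does not specify the form of the model, so everything below is an assumption made for illustration: broad Gaussian spatial tuning, independent Poisson spiking, and hypothetical names and parameter values (`tuning_rate`, `decode_azimuth`, the 60° tuning width, the 0.2 s window). It is not the model used in [55].

```python
import math

def tuning_rate(azimuth_deg, preferred_deg, peak_rate=40.0, base_rate=2.0, width_deg=60.0):
    """Hypothetical broad Gaussian spatial tuning curve (spikes/s)."""
    # Wrap the angular difference into [-180, 180) so tuning is circular.
    diff = (azimuth_deg - preferred_deg + 180.0) % 360.0 - 180.0
    return base_rate + peak_rate * math.exp(-0.5 * (diff / width_deg) ** 2)

def log_likelihood(counts, azimuth_deg, preferred, duration_s=0.2):
    """Poisson log-likelihood of the observed counts for one candidate azimuth."""
    total = 0.0
    for count, pref in zip(counts, preferred):
        expected = tuning_rate(azimuth_deg, pref) * duration_s
        # Poisson log-probability; lgamma(count + 1) equals log(count!).
        total += count * math.log(expected) - expected - math.lgamma(count + 1)
    return total

def decode_azimuth(counts, preferred, candidates):
    """Return the candidate azimuth that maximizes the population likelihood."""
    return max(candidates, key=lambda az: log_likelihood(counts, az, preferred))

# Assumed population: 24 model neurons with preferred azimuths every 15 degrees.
preferred = list(range(-180, 180, 15))
candidates = list(range(-180, 180, 5))
true_azimuth = 45

# Noise-free "observation": each neuron contributes exactly its expected count.
counts = [tuning_rate(true_azimuth, p) * 0.2 for p in preferred]
estimate = decode_azimuth(counts, preferred, candidates)
print(estimate)
```

In this noise-free case the decoder recovers the true azimuth. With noisy counts, the spread of the estimates across repeated trials would play the role of the localization accuracy that is compared with behavior.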

In summary, these studies clearly support the hypothesis of a caudal spatial processing stream in the primate auditory cortex. As predicted, neurons in the caudal belt fields show much greater spatial selectivity compared to neurons in the more rostral areas. It should be noted that studies in other mammals are converging on the same conclusion. For example, in the cat, neurons in the dorsal zone have sharper spatial tuning, similar to that seen in the lateral belt of primates [86, however see 8], and cats show sound-localization deficits when this region is deactivated [47–50]. Thus, a spatial-processing stream may be a general cortical mechanism for representing acoustic space.

Non-spatial processing in non-human primates

Several studies have tested the hypothesis that non-spatial attributes are selectively processed by the more rostral belt and parabelt cortical areas. As discussed above, much of the evidence in support of this hypothesis originates with the initial studies by Rauschecker and colleagues: neurons in the rostral belt and parabelt cortical areas are more selective for vocalizations than those in the caudal pathway [90] and are relatively less sensitive to spatial location. These findings were the initial, and strongest, evidence for a selective non-spatial processing stream in rostral auditory cortical areas. They are also consistent with the very early reports of vocalization responses in auditory cortex of squirrel monkeys in the 1970s [see 26, 59, 96]. Vocalizations are spectrally- and temporally-complex stimuli that are behaviorally relevant [see 24] and it is known that auditory cortex is necessary for their perception in rhesus monkeys [29, 30].

One confounding aspect in interpreting this series of studies is that it is not possible to distinguish between responses to specific vocalizations, to generally complex stimuli, or even to specific spectral or temporal features of the stimuli. Indeed, subsequent studies that have manipulated the vocalizations, included other complex non-vocalization stimuli in the test battery, or instantiated more rigorous data-analysis techniques have suggested that responses in rostral cortical areas are not particularly sensitive to specific vocalizations. For example, in a study that used 2-deoxyglucose to assess auditory-induced activity, Poremba and Mishkin [62] found that vocalizations did not selectively activate the core and belt regions. Instead, the only region of the temporal lobe to demonstrate any selectivity for a particular stimulus class was the rostral pole, a region of cortex that is well beyond the regions of cortex that comprise the belt and parabelt of the auditory cortex [64]. In a similar approach, Petkov et al. [61] used fMRI to reveal multiple auditory cortical fields, and once again, vocalization specificity was not noted until the more rostral regions of the temporal lobe. Moreover, complex stimuli such as environmental sounds were as effective as vocalizations in driving core and belt cortical neurons. Thus, it may be that these regions are not vocalization selective, but rather simply respond well to complex stimuli. Functional imaging, however, cannot address the responses of single neurons, so while such selectivity could exist in a subset of neurons, this signal could be lost because other non-selective cells would have similar metabolic demands regardless of the stimulus.

This issue can be addressed, in part, by recording single-neuron responses to vocalizations as well as time-reversed vocalizations in the core and belt cortical areas of alert monkeys [77]. In this study, when selectivity was quantified using traditional spike-rate based metrics, there was no selectivity for forward vocalizations compared to time-reversed vocalizations in any of the core or belt areas (A1, R, CM, CL and ML). Moreover, the same analysis showed that there was also no difference between the different cortical areas.
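The review does not state which spike-rate metrics were used in [77]. As a purely illustrative sketch, one common kind of rate-based measure is a contrast index between the responses to the forward and the time-reversed version of a call. The function names and the firing rates below are hypothetical and are not data from any study cited here.

```python
def preference_index(forward_rate, reversed_rate):
    """Contrast index in [-1, 1]; positive values mean a stronger forward response."""
    total = forward_rate + reversed_rate
    if total == 0:
        return 0.0  # no spikes to either stimulus: treat as no preference
    return (forward_rate - reversed_rate) / total

def mean_index(rate_pairs):
    """Average preference index over (forward, reversed) rate pairs, one pair per neuron."""
    return sum(preference_index(f, r) for f, r in rate_pairs) / len(rate_pairs)

# Made-up firing rates (spikes/s) for a few neurons in two hypothetical areas.
rostral_pairs = [(20.0, 18.0), (12.0, 14.0), (30.0, 30.0)]
caudal_pairs = [(15.0, 15.0), (22.0, 20.0), (9.0, 10.0)]

print(mean_index(rostral_pairs), mean_index(caudal_pairs))
```

An index near zero in every area, as in these made-up numbers, corresponds to the pattern described above: no preference for forward over time-reversed vocalizations, and no difference between areas.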

This pattern of results is in contrast to the aforementioned vocalization-selective studies, which could be due to several key differences between them. For example, Recanzone [77] used alert animals (vs. anesthetized), time reversed stimuli (vs. forward-only stimuli), and a smaller set of vocalizations (four vs. seven) compared to Tian and Rauschecker [90]. However, even if there was not any selectivity for vocalizations, one would have expected from the dual-stream hypothesis that neurons in the more rostral cortical areas (such as R and ML) would be more selective or responsive to these complex stimuli than neurons in the caudal areas (CM and CL). The argument could be made, of course, that forward and time-reversed vocalizations are not the appropriate ‘non-spatial’ stimuli to dissociate these differences, a topic which we will deal with in some detail at the end of this review.

The dual-stream hypothesis beyond the auditory cortex

The extent to which this processing scheme holds beyond the auditory cortex has begun to be elucidated. When monkeys listen passively to auditory stimuli, Cohen and colleagues [13, 14, 25] found that neurons in the lateral intraparietal cortex (which is part of the dorsal stream) and the ventral prefrontal cortex (vPFC; which is part of the ventral stream) responded to both the spatial and non-spatial features of an auditory stimulus. More importantly, the degree to which vPFC and parietal neurons are modulated by the spatial and non-spatial features of an auditory stimulus is comparable.

One possible explanation for the results described above is that the passive-listening tasks utilized in these vPFC and parietal studies [14, 25] do not appropriately engage the neurons in these cortical areas. Indeed, the well-known role of the PFC in executive function [10, 23, 53, 54] and the parietal cortex in spatial cognition [43, 85] can only be demonstrated when subjects are actively engaged in a task. Thus, a true test of how the neurons in these areas code the spatial and non-spatial attributes of an auditory stimulus requires that activity be tested while monkeys are in the process of selectively attending to these attributes.

To test this hypothesis, Cohen et al. (unpublished observations) recorded from vPFC neurons while monkeys engaged in a task in which they reported when the spatial or non-spatial features of an auditory stimulus changed. vPFC neurons were reliably modulated when the monkeys reported changes in the non-spatial features of an auditory stimulus, but they were not modulated when the monkeys reported changes in the spatial features. Moreover, Cohen et al. found that when the monkeys were reporting changes in the non-spatial features, the degree of neural modulation was positively correlated with the monkeys’ behavioral performance. Thus, when monkeys are actively engaged in a task, the vPFC appears to be preferentially responsive to non-spatial features, a result consistent with the dual-stream hypothesis.

Another issue for the viability of the two-stream hypothesis beyond the auditory cortex is the degree to which areas beyond the auditory cortex remain functionally specialized. A reasonable hypothesis is that between the auditory cortex and the vPFC, neurons should become sensitive to more complex features. When traditional metrics [14, 90] and different decoding algorithms [80] are applied, vPFC neurons are less selective for different vocalizations than those in the superior temporal gyrus, a region of the auditory cortex that includes the anterolateral belt. However, more sophisticated analyses indicate that the pairwise responses of vPFC neurons have the capacity to code more complex properties of a vocalization than do the pairwise responses of neurons in the superior temporal gyrus (Miller and Cohen, In Press). Additionally, an analysis of the output of the decoding-algorithms suggested that vPFC neurons code more semantic properties of a vocalization than those in the superior temporal gyrus (Miller and Cohen, In Press). Overall then, these sets of studies are consistent with the hypotheses that the vPFC is part of a circuit involved in non-spatial auditory processing and that the vPFC has a functional role in non-spatial auditory cognition [80, Lee et al., In Press].

Comparison with human-imaging studies

Human-imaging studies appear to be more consistent overall with the dual-stream hypothesis than single-unit studies, although a clear differentiation between spatial and non-spatial processing is not always revealed [e.g. see 3]. For example, sometimes only the ventral stream shows differentiation, sometimes only the dorsal stream shows differentiation, and sometimes both streams selectively process spatial or non-spatial stimuli as in Rauschecker and Tian [90]. Such examples can be found whether subjects passively listened [e.g. 5, 44, 60], listened or looked for a target stimulus [4, 7, 40, 93], or selectively attended to spatial or non-spatial aspects of the stimulus [e.g. 1, 31, 45]. Whereas it is difficult to directly compare the human-imaging and the monkey-electrophysiological studies, the data are converging on a spatially selective pathway in the dorsal regions of auditory cortex that extends beyond the belt and parabelt and into the parietal lobe. The evidence for a non-spatial processing stream is not as clear, although this may be due more to technical reasons, such as the choice of stimuli, than to actual differences in processing between the two species [e.g. see 39, 58, 72].

Can these two different techniques in different species be compared to converge on a common answer? The first issue to note is that fMRI studies are not directly measuring neuronal spiking activity, as is the case with the single neuron studies described above. This is an important issue, as the fMRI technique is by necessity measuring population responses, not the responses of single neurons. Thus, it can be that small, statistically non-significant differences at the single neuron level are combined into a statistically significant difference at the population level. A second issue is that the ‘what’ stimuli used in the fMRI and single-neuron experiments are largely different, and it may be that the categorization of different sounds in human fMRI experiments is more appropriate to reveal these differences compared to the rather arbitrarily chosen stimuli used in animal studies. For example, several studies have used vocalizations that were recorded from monkeys that the subject monkeys had never seen [e.g. 79, 81, 90]. In contrast, Recanzone [77] used vocalizations that were recorded from the animal’s vivarium and were thus quite familiar to the animals. However, caution is warranted as it could be equally argued that these stimuli were presented in a behaviorally non-contextual manner on daily sessions over the course of several years. These stimuli may then have very little if any relevance to the animals. How the behavioral relevance of these stimuli compares to the behavioral relevance of consonant-vowel complexes, FM sweeps, stop consonants, ripple stimuli or synthetic vowels, which have been used in human studies, is also worthy of debate. Finally, differences between behavioral states may play a role. Many of the primate studies were done in anesthetized or passively-listening monkeys, whereas most of the fMRI studies were conducted with humans who were actively participating in an auditory task. Even if only the behaving-monkey studies [55, 77, 79, 80, 97] are compared with the human studies, there might still be substantial differences in behavioral state. The monkeys are highly trained and working on tasks that are, for them, highly demanding, whereas the humans are barely trained and participate in relatively easy tasks.
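The pooling argument above can be made concrete with a small calculation. The sketch below assumes, purely for illustration, that every neuron shows the same small standardized difference between two conditions and that neurons are statistically independent. Neither assumption comes from the studies reviewed here, and the function name and numbers are hypothetical.

```python
import math

def population_z(effect_size, n_neurons):
    """z-score of the mean difference across n independent neurons.

    effect_size is the per-neuron mean difference divided by its standard
    deviation, so the standard error of the pooled mean shrinks as 1/sqrt(n).
    """
    return effect_size * math.sqrt(n_neurons)

CRITERION = 1.96  # conventional two-sided 5% criterion

single = population_z(0.2, 1)    # one neuron: well below criterion
pooled = population_z(0.2, 400)  # 400 such neurons: well above criterion

print(single, single > CRITERION)
print(pooled, pooled > CRITERION)
```

Under these assumptions an effect that is undetectable in any one neuron becomes clearly detectable in the pooled signal, which is one reason a population measure and a single-unit measure need not agree.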

Conclusion

As we have discussed, the evidence for a ‘where’ pathway in the primate auditory cortex is mounting and appears to be on fairly solid ground based on anatomical, physiological [55, 75, 90, 97] and functional-imaging data [e.g. 16, 44]. However, there is less reliable evidence for a ‘what’ pathway based on physiological, functional-imaging and lesion data. This may be for several, non-mutually exclusive reasons. For example, ‘what’ is very difficult to define in any sensory system, and this is certainly true for the auditory system as well. ‘What’ can be thought to be something more complex than a simple tone, for example a frequency or amplitude modulated tone, a ‘ripple’ stimulus, a harmonic complex, or a vocalization or environmental sound. Which level of stimulus complexity is appropriate for study, and for which level of the auditory cortex, remains unclear. The lack of electrophysiological evidence for the selective processing of an auditory ‘what’ stimulus therefore does not necessarily torpedo the dual stream hypothesis; it may simply be that our understanding of stimulus complexity processing in auditory cortex in general is too limited to effectively ask the question.

A second possibility, which was discussed in different sections above, is that the behavioral tasks performed by either monkeys or humans do not tap into the appropriate resources. That is, the tasks are too easy and therefore selectivity is not necessary and consequently unseen. For example, it is cognitively straightforward for subjects to perform a stimulus-localization task. This type of task comes in basically two flavors: where is it or is this at the same place as something else? This discrimination is based on well-defined interaural and spectral cues of the sound and the subject’s spatial resolution, but the general task properties remain the same. Task difficulty is then based on the physical cues provided and has little to do with the nuances of the task.

In contrast, there are a myriad of potential cues that can be used to discriminate between complex stimuli. These cues can be based on spectral, temporal, or intensity features of the stimulus or some combination. Higher-order processing (e.g., memory or semantic processes) is also likely to occur, particularly when discriminating complex stimuli such as speech or vocalizations. Thus, task difficulty may be a key element in uncovering selective processing, and many studies to date have done little more than verify that the subjects have ‘listened to’ the stimulus, and in other cases have even discouraged the subjects from attending to the stimuli (e.g. subjects watched silent movies or were studied under anesthesia).

One potential way to overcome this issue is to devise a task that is equally difficult for both the ‘where’ discrimination and the ‘what’ discrimination. However, even if the subject may perform at the same level (e.g. 75% correct), equating a non-spatial discrimination with a spatial discrimination is quite difficult. This equating is difficult because even when discriminating a non-spatial feature, such as the rate of amplitude modulation, significant spatial information is also perceived. Thus, it may not be possible to entirely isolate spatial and non-spatial information, which may in fact be the way that the auditory cortex processes information.

An alternative possibility is that it is inappropriate to classify the two processing streams as what/where or even what/how as is viewed in the visual system, which can generally be defined in two-dimensional space. Perhaps the temporal domain must be more intimately considered in this processing. Similarly, ‘where’ in the auditory system is quite different than in the visual system and is intimately related to what the stimulus is. For example, the spatial location of a stimulus necessarily has to be computed by the nervous system and is not carried forward in a ‘labeled-line’ as can be done in the visual system given the two-dimensional organization of the retina. This computation is potentially similar to the computation necessary for feature extraction and combination to integrate and segregate different acoustic signals into their appropriate sources and auditory objects [see 27, 39]. Thus, there may not be a clear selectivity for non-spatial processing in the auditory cortex because the ‘where’ pathway has to also know what the object is in order to act on it. In this view, one would not expect to see clear differences in response properties between the different areas.

A final possibility to consider is that the primate auditory cortex actually codes acoustic stimuli using a what-how dichotomy [e.g. 72, see also 32]. In this scenario, both the what and the how pathways need to identify and represent the different objects. The how pathway needs to do so in order to know the manner in which the auditory object will be dealt with (reached toward, avoided, etc.), whereas the what pathway needs to identify the object so that it can be represented and compared to similar and different objects in memory or put into the context of the particular object and other events. For example, the same phrase can have very different meanings depending on the context and intonation of the speaker. The phrase “come here please” can mean that the person is about to give you something desirable (reach toward), that they are upset (console) or that they are angry (avoid). In each case, it is equally important to know where the speaker is as well as what they are saying. Under these circumstances one would not expect to find selective processing under the conditions that laboratory experiments generally provide.

Instead, differential activations would be expected primarily based on the task demands. In cases where no action is required, the where/how pathway would presumably be working at the basal level and the ‘what’ pathway could be differentially activated. This is certainly the case for an anesthetized monkey, where differences in selective processing were noted [90]. In alert animals, this has not been observed. Recanzone [77] had monkeys depress a lever to initiate a trial and release that lever on an acoustic cue; thus there was a behavioral response associated with the sound and the location of the stimulus. However, even under this condition a clear dissociation between the where/how and what pathways was not observed. Differentiating between these possibilities will be necessary to better understand the functional roles of these different anatomically based processing streams.

Literature Cited

- 1. Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- 2. Altshuler MW, Comalli PE. Effect of stimulus intensity and frequency on median horizontal plane sound localization. J Auditory Res. 1975;15:262–265.

- 3. Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. NeuroImage. 2004;22:401–408. doi: 10.1016/j.neuroimage.2004.01.014.

- 4. Barrett DJK, Hall DA. Response preferences for “what” and “where” in human non-primary auditory cortex. NeuroImage. 2006;32:968–977. doi: 10.1016/j.neuroimage.2006.03.050.

- 5. Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- 6. Bendor D, Wang X. Neural response properties of primary, rostral, and rostrotemporal core fields in the auditory cortex of marmoset monkeys. J Neurophysiol. 2008;100:888–906. doi: 10.1152/jn.00884.2007.

- 7. Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- 8. Bizley JK, Walker KMM, Silverman BW, King AJ, Schnupp JWH. Interdependent encoding of pitch, timbre, and spatial location in auditory cortex. J Neurosci. 2009;29:2064–2075. doi: 10.1523/JNEUROSCI.4755-08.2009.

- 9. Brugge JF, Merzenich MM. Responses of neurons in auditory cortex of the macaque monkey to monaural and binaural stimulation. J Neurophysiol. 1973;36:1138–58. doi: 10.1152/jn.1973.36.6.1138.

- 10. Bunge SA, Kahn I, Wallis JD, Miller EK, Wagner AD. Neural circuits subserving the retrieval and maintenance of abstract rules. J Neurophysiol. 2003;90:3419–3428. doi: 10.1152/jn.00910.2002.

- 11. Burton H, Jones EG. The posterior thalamic region and its cortical projection in New World and Old World monkeys. J Comp Neurol. 1976;168:249–301. doi: 10.1002/cne.901680204.

- 12. Chiry O, Tardif E, Magistretti PJ, Clarke S. Patterns of calcium-binding proteins support parallel and hierarchical organization of human auditory areas. Eur J Neurosci. 2003;17:397–410. doi: 10.1046/j.1460-9568.2003.02430.x.

- 13. Cohen YE, Theunissen F, Russ BE, Gill P. Acoustic features of rhesus vocalization and their representation in the ventrolateral prefrontal cortex. J Neurophysiol. 2007;97:1470–1484. doi: 10.1152/jn.00769.2006.

- 14. Cohen YE, Russ BE, Gifford GW, III, Kiringoda R, MacLean KA. Selectivity for the spatial and nonspatial attributes of auditory stimuli in the ventrolateral prefrontal cortex. J Neurosci. 2004;24:11307–11316. doi: 10.1523/JNEUROSCI.3935-04.2004.

- 15. Comalli PE, Altshuler MW. Effect of stimulus intensity, frequency, and unilateral hearing loss on sound localization. J Auditory Res. 1976;16:275–279.

- 16. Degerman A, Rinne T, Salmi J, Salonen O, Alho K. Selective attention to sound location or pitch studied with fMRI. Brain Res. 2006;1077:123–134. doi: 10.1016/j.brainres.2006.01.025.

- 17. De La Mothe LA, Blumell S, Kajikawa Y, Hackett TA. Cortical connections of the auditory cortex in marmoset monkeys: core and medial belt regions. J Comp Neurol. 2006a;496:27–72. doi: 10.1002/cne.20923.

- 18. De La Mothe LA, Blumell S, Kajikawa Y, Hackett TA. Thalamic connections of the auditory cortex in marmoset monkeys: core and medial belt regions. J Comp Neurol. 2006b;496:72–96. doi: 10.1002/cne.20924.

- 19. Eisenman LM. Neural encoding of sound location: An electrophysiological study in auditory cortex (AI) of the cat using free field stimuli. Brain Res. 1974;75:203–214. doi: 10.1016/0006-8993(74)90742-2.

- 20. Fullerton BC, Pandya DN. Architectonic analysis of the auditory-related areas of the superior temporal region in human brain. J Comp Neurol. 2007;504:470–498. doi: 10.1002/cne.21432.

- 21. Furukawa S, Xu L, Middlebrooks JC. Coding of sound-source location by ensembles of cortical neurons. J Neurosci. 2000;20:1216–1228. doi: 10.1523/JNEUROSCI.20-03-01216.2000.

- 22. Galaburda AM, Pandya DN. The intrinsic architectonic and connectional organization of the superior temporal region of the rhesus monkey. J Comp Neurol. 1983;221:169–184. doi: 10.1002/cne.902210206.

- 23. Gabrieli JD, Poldrack RA, Desmond JE. The role of left prefrontal cortex in language and memory. Proc Natl Acad Sci USA. 1998;95:906–913. doi: 10.1073/pnas.95.3.906.

- 24. Ghazanfar AA, Hauser MD. The neuroethology of primate vocal communication: substrates for the evolution of speech. Trends Cogn Sci. 1999;3:377–384. doi: 10.1016/s1364-6613(99)01379-0.

- 25. Gifford GW, III, Cohen YE. Spatial and non-spatial auditory processing in the lateral intraparietal area. Exp Brain Res. 2005;162:509–512. doi: 10.1007/s00221-005-2220-2.

- 26. Glass I, Wollberg Z. Lability in the responses of cells in the auditory cortex of squirrel monkeys to species-specific vocalizations. Exp Brain Res. 1979;34:489–498. doi: 10.1007/BF00239144.

- 27. Griffiths TD, Warren JD, Scott SK, Nelken I, King AJ. Cortical processing of complex sound: a way forward? Trends Neurosci. 2004;27:181–185. doi: 10.1016/j.tins.2004.02.005.

- 28. Hackett TA, Preuss TM, Kaas JH. Architectonic identification of the core region in auditory cortex of macaques, chimpanzees, and humans. J Comp Neurol. 2001;441:197–222. doi: 10.1002/cne.1407.

- 29. Harrington IA, Heffner RS, Heffner HE. An investigation of sensory deficits underlying the aphasia-like behavior of macaques with auditory cortex lesions. Neuroreport. 2001;12:1217–21. doi: 10.1097/00001756-200105080-00032.

- 30. Heffner HE, Heffner RS. Effect of restricted cortical lesions on absolute thresholds and aphasia-like deficits in Japanese macaques. Behav Neurosci. 1989;103:158–69. doi: 10.1037//0735-7044.103.1.158.

- 31. Herrmann CS, Senkowski D, Maess B, Friederici AD. Spatial versus object feature processing in human auditory cortex: a magnetoencephalographic study. Neurosci Lett. 2002;334:37–40. doi: 10.1016/s0304-3940(02)01063-7.

- 32. Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- 33. Imig TJ, Irons WA, Samson FR. Single-unit selectivity to azimuthal direction and sound pressure level of noise bursts in cat high-frequency primary auditory cortex. J Neurophysiol. 1990;63:1448–66. doi: 10.1152/jn.1990.63.6.1448.

- 34. Inui K, Okamoto H, Miki K, Gunji A, Kakigi R. Serial and parallel processing in the human auditory cortex: A magnetoencephalographic study. Cereb Cortex. 2006;16:18–30. doi: 10.1093/cercor/bhi080.

- 35. Jazayeri M, Movshon JA. Optimal representation of sensory information by neuronal populations. Nat Neurosci. 2006;9:690–696. doi: 10.1038/nn1691.

- 36. Jones EG. Chemically defined parallel pathways in the monkey auditory system. Ann N Y Acad Sci. 2003;999:218–233. doi: 10.1196/annals.1284.033.

- 37. Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci USA. 2000;97:11793–9. doi: 10.1073/pnas.97.22.11793.

- 38. King AJ, Bajo VM, Bizley JK, Campbell RA, Nodal FR, Schultz AL, Schnupp JW. Physiological and behavioral studies of spatial coding in the auditory cortex. Hear Res. 2007;229:106–115. doi: 10.1016/j.heares.2007.01.001.

- 39. King AJ, Nelken I. Unraveling the principles of auditory cortical processing: can we learn from the visual system? Nat Neurosci. 2009;12:698–701. doi: 10.1038/nn.2308.

- 40. Krumbholz K, Eickhoff SB, Fink GR. Feature- and object-based attentional modulation in the human auditory ‘where’ pathway. J Cog Neurosci. 2007;19:1721–1733. doi: 10.1162/jocn.2007.19.10.1721.

- 41. Krumbholz K, Schonwiesner M, Rubsamen R, Zilles K, Fink GR, von Cramon DY. Hierarchical processing of sound location and motion in the human brainstem and planum temporale. Eur J Neurosci. 2005;21:230–238. doi: 10.1111/j.1460-9568.2004.03836.x.

- 42. Krumbholz K, Schonwiesner M, von Cramon DY, Rubsamen R, Shah NJ, Zilles K, Fink GR. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb Cortex. 2005;15:317–324. doi: 10.1093/cercor/bhh133.

- 43. Kusunoki M, Gottlieb J, Goldberg ME. The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vision Res. 2000;40:1459–1468. doi: 10.1016/s0042-6989(99)00212-6.

- 44. Lewald J, Riederer KAJ, Lentz T, Meister IG. Processing of sound location in human cortex. Eur J Neurosci. 2008;27:1261–1270. doi: 10.1111/j.1460-9568.2008.06094.x.

- 45. Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA. Neural substrates of phonemic perception. Cereb Cortex. 2005;15:1621–1631. doi: 10.1093/cercor/bhi040.

- 46. Logothetis NK, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- 47. Lomber SG, Malhotra S. Double dissociation of ‘what’ and ‘where’ processing in auditory cortex. Nat Neurosci. 2008;11:609–616. doi: 10.1038/nn.2108.

- 48. Malhotra S, Hall AJ, Lomber SG. Cortical control of sound localization in the cat: Unilateral cooling deactivation of 19 cerebral areas. J Neurophysiol. 2004;92:1625–1643. doi: 10.1152/jn.01205.2003.

- 49. Malhotra S, Stecker GC, Middlebrooks JC, Lomber SG. Sound localization deficits during reversible deactivation of primary auditory cortex and/or the dorsal zone. J Neurophysiol. 2008;99:1628–1642. doi: 10.1152/jn.01228.2007.

- 50. Malhotra S, Lomber SG. Sound localization during homotopic and heterotopic bilateral cooling deactivation of primary and nonprimary auditory cortical areas in the cat. J Neurophysiol. 2007;97:26–43. doi: 10.1152/jn.00720.2006.

- 51. Merzenich MM, Brugge JF. Representation of the cochlear partition of the superior temporal plane of the macaque monkey. Brain Res. 1973;50:275–296. doi: 10.1016/0006-8993(73)90731-2.

- 52. Middlebrooks JC, Pettigrew JD. Functional classes of neurons in primary auditory cortex of the cat distinguished by sensitivity to sound location. J Neurosci. 1981;1:107–20. doi: 10.1523/JNEUROSCI.01-01-00107.1981.

- 53. Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Ann Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- 54. Miller EK, Freedman DJ, Wallis JD. The prefrontal cortex: categories, concepts and cognition. Philos Trans R Soc Lond B Biol Sci. 2002;357:1123–1136. doi: 10.1098/rstb.2002.1099.

- 55. Miller LM, Recanzone GH. Populations of auditory cortical neurons can accurately encode acoustic space across stimulus intensity. Proc Natl Acad Sci USA. 2009;106:5931–5. doi: 10.1073/pnas.0901023106.

- 56. Morel A, Garraghty PE, Kaas JH. Tonotopic organization, architectonic fields, and connections of auditory cortex in macaque monkeys. J Comp Neurol. 1993;335:437–59. doi: 10.1002/cne.903350312.

- 57. Mrsic-Flogel TD, King AJ, Schnupp JWH. Encoding of virtual acoustic space stimuli by neurons in ferret primary auditory cortex. J Neurophysiol. 2005;93:3489–3503. doi: 10.1152/jn.00748.2004.

- 58. Nelken I. Processing of complex stimuli and natural scenes in the auditory cortex. Curr Opin Neurobiol. 2004;14:474–480. doi: 10.1016/j.conb.2004.06.005.

- 59. Newman JD. Perception of sounds used in species-specific communication: the auditory cortex and beyond. J Med Primatol. 1978;7:98–105. doi: 10.1159/000459792.

- 60. Obleser J, Zimmermann J, Van Meter J, Rauschecker JP. Multiple stages of auditory speech perception reflected in event-related fMRI. Cereb Cortex. 2007;17:2251–2257. doi: 10.1093/cercor/bhl133.

- 61. Petkov CI, Kayser C, Augath M, Logothetis NK. Functional imaging reveals numerous fields in the monkey auditory cortex. PLoS Biol. 2006;4:e215. doi: 10.1371/journal.pbio.0040215.

- 62. Poremba A, Mishkin M. Exploring the extent and function of higher-order auditory cortex in rhesus monkeys. Hear Res. 2007;229:14–23. doi: 10.1016/j.heares.2007.01.003.

- 63. Poremba A, Malloy M, Saunders RC, Carson RE, Herscovitch P, Mishkin M. Species-specific calls evoke asymmetric activity in the monkey’s temporal poles. Nature. 2004;427:448–51. doi: 10.1038/nature02268.

- 64. Poremba A, Saunders RC, Crane AM, Cook M, Sokoloff, Mishkin M. Functional mapping of the primate auditory system. Science. 2003;299:568–572. doi: 10.1126/science.1078900.

- 65. Rajan R, Aitkin LM, Irvine DRF, McKay J. Azimuthal sensitivity of neurons in primary auditory cortex of cats. I. Types of sensitivity and the effects of variations in stimulus parameters. J Neurophysiol. 1990a;64:872–887. doi: 10.1152/jn.1990.64.3.872.

- 66. Rajan R, Aitkin LM, Irvine DR. Azimuthal sensitivity of neurons in primary auditory cortex of cats. II. Organization along frequency-band strips. J Neurophysiol. 1990b;64:888–902. doi: 10.1152/jn.1990.64.3.888.

- 67. Rauschecker JP, Tian B, Hauser M. Processing of complex sounds in the macaque nonprimary auditory cortex. Science. 1995;268:111–4. doi: 10.1126/science.7701330.

- 68. Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing in rhesus monkey auditory cortex. J Comp Neurol. 1997;382:89–103.

- 69. Rauschecker JP. Parallel processing in the auditory cortex of primates. Audiol Neurootol. 1998;3:86–103. doi: 10.1159/000013784.

- 70. Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–6. doi: 10.1073/pnas.97.22.11800.

- 71. Rauschecker JP, Tian B. Processing of band-passed noise in the lateral auditory belt cortex of the rhesus monkey. J Neurophysiol. 2004;91:2578–2589. doi: 10.1152/jn.00834.2003.

- 72. Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- 73. Reale RA, Jenison RL, Brugge JF. Directional sensitivity of neurons in the primary auditory (AI) cortex: effects of sound-source intensity level. J Neurophysiol. 2003;89:1024–38. doi: 10.1152/jn.00563.2002.

- 74. Recanzone GH, Guard DC, Phan ML. Frequency and intensity response properties of single neurons in the auditory cortex of the behaving macaque monkey. J Neurophysiol. 2000a;83:2315–2331. doi: 10.1152/jn.2000.83.4.2315.

- 75. Recanzone GH, Guard DC, Phan ML, Su TK. Correlation between the activity of single auditory cortical neurons and sound localization behavior in the macaque monkey. J Neurophysiol. 2000b;83:2723–2739. doi: 10.1152/jn.2000.83.5.2723.

- 76. Recanzone GH, Beckerman NS. Effects of intensity and location on sound location discrimination in macaque monkeys. Hear Res. 2004;198:116–124. doi: 10.1016/j.heares.2004.07.017.

- 77. Recanzone GH. Representation of con-specific vocalizations in the core and belt areas of the auditory cortex in the alert macaque monkey. J Neurosci. 2008;28:13184–13193. doi: 10.1523/JNEUROSCI.3619-08.2008.

- 78. Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–6. doi: 10.1038/16056.

- 79. Romanski LM, Averbeck BB, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J Neurophysiol. 2005;93:734–747. doi: 10.1152/jn.00675.2004.

- 80. Russ BE, Ackelson AL, Baker AE, Cohen YE. Coding of auditory-stimulus identity in the auditory non-spatial processing stream. J Neurophysiol. 2008;99:87–95. doi: 10.1152/jn.01069.2007.

- 81. Russ BE, Lee YS, Cohen YE. Neural and behavioral correlates of auditory categorization. Hear Res. 2007;229:204–212. doi: 10.1016/j.heares.2006.10.010.

- 82. Sabin AT, Macpherson EA, Middlebrooks JC. Human sound localization at near-threshold levels. Hear Res. 2005;199:124–134. doi: 10.1016/j.heares.2004.08.001.

- 83. Schummers J, Yu H, Sur M. Tuned responses of astrocytes and their influence on hemodynamic signals in the visual cortex. Science. 2008;320:1638–43. doi: 10.1126/science.1156120.

- 84. Seltzer B, Pandya DN. Afferent cortical connections and architectonics of the superior temporal sulcus and surrounding cortex in the rhesus monkey. Brain Res. 1978;149:1–24. doi: 10.1016/0006-8993(78)90584-x.

- 85. Snyder LH, Batista AP, Andersen RA. Intention-related activity in the posterior parietal cortex: a review. Vision Res. 2000;40:1433–1441. doi: 10.1016/s0042-6989(00)00052-3.

- 86. Stecker GC, Harrington IA, Macpherson EA, Middlebrooks JC. Spatial sensitivity in the dorsal zone (area DZ) of cat auditory cortex. J Neurophysiol. 2005a;94:1267–1280. doi: 10.1152/jn.00104.2005.

- 87. Stecker GC, Harrington IA, Middlebrooks JC. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 2005b;3:520–528. doi: 10.1371/journal.pbio.0030078.

- 88. Su TK, Recanzone GH. Differential effect of near-threshold stimulus intensities on sound localization performance in azimuth and elevation in normal human subjects. J Assoc Research Otolaryngol. 2001;2:246–256. doi: 10.1007/s101620010073.

- 89. Tian B, Rauschecker JP. Processing of frequency-modulated sounds in the lateral auditory belt cortex of the rhesus monkey. J Neurophysiol. 2004;92:2993–3013. doi: 10.1152/jn.00472.2003.

- 90. Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–3. doi: 10.1126/science.1058911.

- 91. Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behavior. MIT Press; Boston: 1982. pp. 549–586.

- 92. Ungerleider LG, Haxby JV. ‘What’ and ‘where’ in the human brain. Curr Opin Neurobiol. 1994;4:157–165. doi: 10.1016/0959-4388(94)90066-3.

- 93. Uppenkamp S, Johnsrude IS, Norris D, Marslen-Wilson W, Patterson RD. Locating the initial stages of speech-sound processing in human temporal cortex. NeuroImage. 2006;31:1284–1296. doi: 10.1016/j.neuroimage.2006.01.004.

- 94. Werner-Reiss U, Groh JM. A rate code for sound azimuth in monkey auditory cortex: implications for human neuroimaging studies. J Neurosci. 2008;28:3747–3758. doi: 10.1523/JNEUROSCI.5044-07.2008.

- 95. Wessinger CM, VanMeter J, Tian B, Van Lare J, Pekar J, Rauschecker JP. Hierarchical organization of the human auditory cortex revealed by functional magnetic resonance imaging. J Cog Neurosci. 2001;13:1–7. doi: 10.1162/089892901564108.

- 96. Winter P, Funkenstein HH. The effect of species-specific vocalization on the discharge of auditory cortical cells in the awake squirrel monkey. Exp Brain Res. 1973;18:489–504. doi: 10.1007/BF00234133.

- 97. Woods TM, Lopez SE, Long JH, Rahman JE, Recanzone GH. Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. J Neurophysiol. 2006;96:3323–37. doi: 10.1152/jn.00392.2006.