Abstract

Recent improvements in cortically controlled brain-machine interfaces (BMIs) have raised hopes that such technologies may improve the quality of life of severely motor-disabled patients. However, current-generation BMIs do not perform up to their potential because their training and control strategies neglect the full range of sensory feedback. Here we confirm that neurons in primary motor cortex (MI) encode sensory information, and we demonstrate significant heterogeneity in their responses depending on the sensory modality available to the subject during a reaching task. Using mutual information and directional tuning analyses, we further show that the presence of multi-sensory feedback (i.e., vision and proprioception) during replay of movements evokes neural responses in MI that are almost indistinguishable from those measured during overt movement. Finally, we suggest how these playback-evoked responses may be used to improve BMI performance.

Keywords: Motor cortex; observation; mirror-neuron; brain-machine interface; sensory feedback; proprioception

INTRODUCTION

The field of brain-machine interfaces (BMIs) has seen rapid and substantial growth over the past decade. BMIs that record signals from the cortex offer the possibility of deciphering motor intentions in order to control devices. This capability could allow severely motor-disabled people to interact with the outside world, thereby improving their quality of life. In principle, BMIs could help people with such central or peripheral nerve injuries and disease states as spinal cord injury, amyotrophic lateral sclerosis (ALS), stroke, muscular dystrophy, amputation, and cerebral palsy. The principal assumption for successful operation of cortically-controlled BMIs is that cortical activity is still available and can be decoded despite the injury or disease. Early-stage clinical testing of BMIs has indicated that, in fact, cortical activity can be voluntarily modulated to control simple devices [10, 12, 28].

Despite these initial clinical successes, next-generation BMIs will need to take advantage of different forms of sensory information, both to reliably build or ‘train’ decoding algorithms and to augment closed-loop BMI control in patients who cannot move. Experimental evidence has shown greater diversity in the responses of neurons in primary motor cortex (MI) than is typically assumed. In addition to driving overt movement, neurons in MI discharge in response to passive visual observation of action [26], visual-motor imagery [2, 20], kinesthetic perception [17], and passive joint motion [6]. Recently, some have even proposed using the movement-related activity in MI triggered by passive observation of an action to build a decoder [26, 27, 30]. To date, however, no one has demonstrated the utility of proprioceptive sensory information within the context of a BMI application.

The proprioceptive sense is critical for normal motor control. Experimental evidence indicates that on-line control and error correction normally depend heavily on the proprioceptive system, which in turn is mediated by the fastest-conducting nerves in the body. In humans, alterations to movement trajectory have been detected as early as 70 ms after a proprioceptive cue [3]. Furthermore, patients with large-fiber neuropathy affecting proprioceptive afferents exhibit uncoordinated and slowed movements [8, 21]. Although proprioceptive feedback is vital for accurate and naturalistic movements, almost all current BMIs rely solely on visual feedback to correct errors during on-line control of a device. As a result, such systems generate movements that tend to be erratic and difficult to control [10]. A BMI that incorporates proprioceptive as well as visual feedback would likely show significantly improved device control.

In this paper we describe the results of an experiment designed to test the hypothesis that proprioceptive feedback together with vision can trigger more informative motor commands from MI during passive stimulation than during observation of movement alone. Using mutual information (between spiking activity and cursor/hand direction) and directional tuning metrics, we compare the neural responses in MI elicited by visual and proprioceptive sensory feedback during passive playback of movement. The data suggest that proprioceptive feedback alone has a greater effect on neural activity than visual feedback alone. More importantly, when these two sensory modalities are combined, the resultant neural activity is nearly indistinguishable from that activity observed during active movement of the arm. Finally, we suggest how these responses could be used to improve training and control in BMI applications.

MATERIALS AND METHODS

Behavioral Task

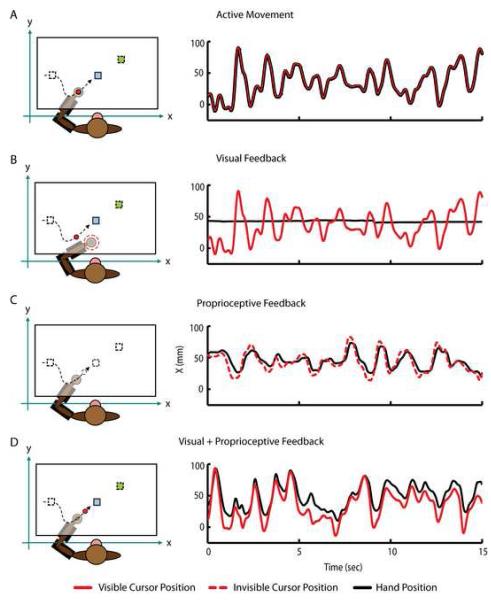

Two adult male rhesus macaques (Macaca mulatta) were operantly trained to control a cursor in two dimensions using a two-link robotic exoskeleton [22]. The animals sat in a primate chair and placed their arm in the exoskeleton. The shoulder was abducted 90 degrees and the arm was supported by the exoskeleton such that all movements were made in the horizontal plane. Direct vision of the limb was precluded by a horizontal projection screen above the monkey’s arm. A visual cursor aligned with the location of the monkey’s hand was projected onto the screen and served as a surrogate for the location of the hand (Figure 1, red circle). Shoulder and elbow angles and angular velocities were digitized at 500 Hz and transformed into visual cursor position (mm) using the forward kinematics equations of the exoskeleton [22].
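The joint-to-cursor transformation can be illustrated with the standard forward kinematics of a planar two-link arm. This is a minimal sketch, not the exoskeleton's actual equations [22]: the segment lengths `UPPER_ARM_MM` and `FOREARM_MM` are hypothetical, the elbow angle is assumed to be measured relative to the upper arm, and the function names are illustrative only.

```python
import numpy as np

# Hypothetical segment lengths in mm; the real values depend on the
# exoskeleton and the animal's limb [22] and are not given in the text.
UPPER_ARM_MM = 140.0
FOREARM_MM = 200.0


def hand_position(shoulder, elbow, l1=UPPER_ARM_MM, l2=FOREARM_MM):
    """Planar two-link forward kinematics.

    shoulder: shoulder angle (rad) measured from the x-axis.
    elbow: elbow angle (rad) measured relative to the upper arm (assumption).
    Returns hand (cursor) x, y in mm in a shoulder-centred frame.
    """
    shoulder = np.asarray(shoulder, dtype=float)
    elbow = np.asarray(elbow, dtype=float)
    x = l1 * np.cos(shoulder) + l2 * np.cos(shoulder + elbow)
    y = l1 * np.sin(shoulder) + l2 * np.sin(shoulder + elbow)
    return x, y


def hand_velocity(shoulder, elbow, d_shoulder, d_elbow,
                  l1=UPPER_ARM_MM, l2=FOREARM_MM):
    """Hand velocity (mm/s) from joint angular velocities (rad/s) via the Jacobian."""
    s1, c1 = np.sin(shoulder), np.cos(shoulder)
    s12, c12 = np.sin(shoulder + elbow), np.cos(shoulder + elbow)
    vx = -l1 * s1 * d_shoulder - l2 * s12 * (d_shoulder + d_elbow)
    vy = l1 * c1 * d_shoulder + l2 * c12 * (d_shoulder + d_elbow)
    return vx, vy
```

With these hypothetical lengths, a fully extended arm (both angles zero) places the hand 340 mm along x; both functions accept whole 500 Hz sample arrays at once.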

Figure 1.

Experimental apparatus and trajectories during the active movement, visual, proprioceptive and visual+proprioceptive playback phases. The monkey performs the random target pursuit (RTP) task in the horizontal plane using a two-link exoskeletal robot. Direct vision of the arm is precluded. During the RTP task the monkey moves a visual cursor (red circle) to a target (blue square). The target appeared at a random location within the workspace (10 by 6 cm), and each time the monkey hit it, a new target appeared immediately at a new, randomly selected location (green square). To complete a successful trial and receive a juice reward, the monkey was required to acquire seven targets in sequence. (A) During Active Movement, the position of the visual cursor (red trace) was controlled by the movements of the monkey’s hand (black trace). (B) During Visual Playback, target positions (squares) and cursor trajectories (red circle) recorded during the Active Movement condition are replayed while the animal voluntarily maintains a static posture in the robotic exoskeleton. If the monkey moves his hand outside of the hold region (dashed red circle), the current trial is aborted and the cursor and target are extinguished until the hand is returned. The right panel shows the X dimension of the visual cursor (red trace) and hand movement (black trace). (C) During the Proprioceptive Playback condition, the monkey’s hand is moved through the cursor trajectories recorded during Active Movement. Here, the visual cursor and target are invisible (dashed black circle and squares). The right panel shows how the hand (black trace) is driven through the same trajectory as the invisible visual cursor (dashed red trace). Notice how the hand trajectory lags behind the cursor trajectory due to the dynamics of the position controller/exoskeleton. (D) During the Visual+Proprioceptive Playback condition, target positions (squares) and cursor trajectories (red circle) recorded during the Active Movement phase are replayed and the monkey’s hand is moved through the cursor trajectories by the exoskeleton. The right panel shows how the visual cursor (red trace) and hand (black trace) move through the same trajectory in the X dimension. Again, hand movement lags slightly behind the movement of the visual cursor due to position controller/exoskeleton dynamics. In all conditions, the same trends were observed in the Y dimension.

The random target pursuit (RTP) task required the monkeys to repeatedly move a cursor (6 mm diameter circle) to a square target (2.25 cm²). The target appeared at a random location within the workspace (10 cm by 6 cm), and each time it was hit, a new target appeared immediately at a new, randomly selected location (Figure 1). To complete a successful trial and receive a juice reward, the monkeys were required to acquire seven targets in sequence. Because each trial completion was followed by the immediate presentation of another target, the monkeys typically did not pause between trials, but rather generated continuous movement trajectories. A trial was aborted if any movement between targets took longer than 5000 ms or if the monkeys removed their arm from the apparatus.
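The trial logic of the RTP task can be sketched as follows, using the dimensions given above (10 cm by 6 cm workspace, 6 mm cursor, 2.25 cm² square target, i.e. 15 mm per side, seven targets, 5000 ms timeout). Two details are assumptions not stated in the text: a "hit" is taken to mean that the cursor circle overlaps the target square, and targets are placed so that the whole square lies inside the workspace. All names are hypothetical.

```python
import numpy as np

WORKSPACE_MM = (100.0, 60.0)   # 10 cm x 6 cm
TARGET_SIDE_MM = 15.0          # 2.25 cm^2 square -> 15 mm per side
CURSOR_RADIUS_MM = 3.0         # 6 mm diameter cursor
TARGETS_PER_TRIAL = 7
TIMEOUT_MS = 5000.0


def new_target(rng):
    """Random target centre; keeps the whole square inside the workspace (assumption)."""
    half = TARGET_SIDE_MM / 2
    return np.array([rng.uniform(half, WORKSPACE_MM[0] - half),
                     rng.uniform(half, WORKSPACE_MM[1] - half)])


def cursor_hits_target(cursor, target):
    """Hypothetical hit rule: the cursor circle overlaps the target square."""
    half = TARGET_SIDE_MM / 2
    nearest = np.clip(cursor, target - half, target + half)  # closest point of square
    return np.linalg.norm(cursor - nearest) <= CURSOR_RADIUS_MM


def run_trial(cursor_xy, dt_ms, rng):
    """True if seven targets are acquired in sequence; False if any
    inter-target interval exceeds the timeout or the samples run out."""
    target = new_target(rng)
    acquired, elapsed = 0, 0.0
    for pos in cursor_xy:
        elapsed += dt_ms
        if elapsed > TIMEOUT_MS:
            return False
        if cursor_hits_target(pos, target):
            acquired += 1
            if acquired == TARGETS_PER_TRIAL:
                return True
            target = new_target(rng)  # next target appears immediately
            elapsed = 0.0
    return False
```

Because the timeout clock restarts at every hit, continuous pursuit across many targets is never penalised; only a single slow inter-target movement aborts the trial.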

Experimental Design

This experiment consisted of four experimental conditions: 1) Active Movement, 2) Visual Playback, 3) Proprioceptive Playback and 4) Visual+Proprioceptive Playback. In the Active Movement condition, the animals performed the standard RTP task and controlled the cursor via the exoskeleton (Figure 1A). During the playback conditions, target positions and cursor movements generated during the Active Movement phase were replayed to the monkeys through different sensory modalities. The playback conditions were designed to dissociate the effects of vision and proprioception on the spiking activity of MI. In the Visual Playback condition (Figure 1B) both the cursor and the target were visible to the monkeys, just as during the Active Movement condition, while the monkeys maintained a static, relaxed posture in the exoskeleton (Figure 1B, black line). If the monkeys moved the handle of the exoskeleton outside of a “hold” region (Figure 1B, dotted black circle) or removed their arm from the exoskeleton, the game was “turned off” until the monkeys returned their arm to the appropriate position. In the Proprioceptive Playback condition (Figure 1C), both the cursor and target were invisible and the monkeys’ arms (Figure 1C, black line) were moved through the replayed trajectory of the invisible cursor (Figure 1C, dashed red line) by the robotic exoskeleton. The final condition (Visual+Proprioceptive Playback, Figure 1D) combined both the visual and proprioceptive sensory feedback modalities. Here, the monkeys visually observed playback of the cursor trajectories and target positions recorded during the Active Movement condition while their arm was moved through the replayed cursor trajectory by the exoskeleton. During all passive playback conditions, the monkeys received juice at the completion of every successful trial just as during performance of the RTP task, even when the cursor and target were not visible.

In the Proprioceptive and Visual+Proprioceptive Playback conditions, a proportional-derivative (PD) controller was used to drive the robot’s end-effector (i.e., the monkey’s hand) along the trajectory of the cursor. To assess the accuracy of the PD controller, we performed a separate control experiment in which we measured the dynamics and average error between the commanded (i.e., cursor) and actual positions of the robot (and hand) during replayed trajectories in anesthetized monkeys. The monkeys were anesthetized (Ketamine, 2 mg/kg; Dexmedetomidine, 75 mcg/kg; Atropine, 0.04 mg/kg) and then placed in the primate chair with their arm secured in the exoskeleton. Cursor position was digitized (500 Hz) and recorded independently while the monkeys’ relaxed arm was moved through replayed cursor trajectories for approximately 5 minutes. Playback of each trajectory was repeated three times for a total exposure time of 15 minutes. We computed the cross-correlation between cursor and hand positions, separately in X and Y, during passive arm movement to measure the time delay between movement of the cursor and movement of the hand. As expected, cursor and hand positions were strongly correlated (> 0.95) at time delays averaging 98 ms and 52 ms in the X and Y directions, respectively. To compute the error between cursor and hand positions, we first corrected for the temporal delay of the position controller/exoskeleton by shifting the hand position data in time by the appropriate delay, and then computed the Euclidean distance (error) between the cursor and hand positions on a sample-by-sample basis. In this control experiment, the error between cursor and hand position averaged 5.40 ± 4.03 (SD) mm for monkey MK and 8.99 ± 4.46 mm for monkey B.
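The delay estimate and the delay-corrected tracking error described above can be sketched as below. This is an illustration under assumptions: the delay is taken as the non-negative lag (in samples at 500 Hz) that maximises the Pearson correlation between commanded and actual position, each axis is shifted by its own delay, and the function names are hypothetical. At 500 Hz, the reported 98 ms and 52 ms delays correspond to 49 and 26 samples.

```python
import numpy as np

FS_HZ = 500.0  # position sampling rate


def estimate_delay(commanded, actual, max_lag):
    """Return (lag, r): the lag in samples (0..max_lag) that maximises the
    Pearson correlation between commanded[t] and actual[t + lag]."""
    n = len(commanded)
    best_lag, best_r = 0, -np.inf
    for lag in range(max_lag + 1):
        r = np.corrcoef(commanded[:n - lag], actual[lag:])[0, 1]
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


def tracking_error(cursor_xy, hand_xy, lag_x, lag_y):
    """Sample-by-sample Euclidean error (mm) after shifting each hand axis
    back in time by its own delay (in samples)."""
    n = len(cursor_xy) - max(lag_x, lag_y)
    dx = cursor_xy[:n, 0] - hand_xy[lag_x:lag_x + n, 0]
    dy = cursor_xy[:n, 1] - hand_xy[lag_y:lag_y + n, 1]
    return np.hypot(dx, dy)
```

Only non-negative lags are searched because the hand is expected to trail the commanded cursor; the mean and SD of `tracking_error` over a session give statistics of the kind reported for the control experiment.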

Electrophysiology

Each monkey was chronically implanted with a 100-electrode (400 μm interelectrode separation) microelectrode array (Blackrock Microsystems, Inc., Salt Lake City, UT) in primary motor cortex contralateral to the arm used for the task [14]. The electrodes on each array were 1.5 mm in length, and their tips were coated with iridium oxide. During a recording session, signals from up to 96 electrodes were amplified (gain of 5000), band-pass filtered between 0.3 kHz and 7.5 kHz, and recorded digitally (14-bit) at 30 kHz per channel using a Cerebus acquisition system (Blackrock Microsystems, Inc., Salt Lake City, UT). Only waveforms (1.6 ms in duration; 48 sample time points per waveform) that crossed a threshold were stored and spike-sorted using Offline Sorter (Plexon, Inc., Dallas, TX). Signal-to-noise ratios were defined as the difference in mean peak-to-trough voltage divided by twice the mean standard deviation. The mean standard deviation was computed by measuring the standard deviation of the spike waveform over all acquired spikes at each of the 48 sample time points of the waveform and then averaging. All isolated single units used in this study had signal-to-noise ratios of three or higher. A total of seven data sets (four for animal MK and three for animal B) were analyzed in this experiment. A data set was defined as the neural activity recorded simultaneously during a single recording session; each data set contained between 300 and 800 individual trials.
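The signal-to-noise criterion can be sketched as follows. The numerator is one reading of "mean peak-to-trough voltage" (peak minus trough of the mean waveform); the denominator follows the text: the standard deviation across spikes at each of the 48 sample points, averaged, then doubled. Function names are hypothetical.

```python
import numpy as np


def waveform_snr(waveforms):
    """SNR of one sorted unit.

    waveforms: (n_spikes, 48) array of threshold-crossing snippets.
    Numerator: peak-to-trough amplitude of the mean waveform (assumed reading).
    Denominator: twice the per-sample standard deviation averaged over the
    48 sample points.
    """
    w = np.asarray(waveforms, dtype=float)
    mean_wf = w.mean(axis=0)
    peak_to_trough = mean_wf.max() - mean_wf.min()
    mean_sd = w.std(axis=0).mean()
    return peak_to_trough / (2.0 * mean_sd)


def keep_unit(waveforms, min_snr=3.0):
    """Inclusion rule used in the study: SNR of three or higher."""
    return waveform_snr(waveforms) >= min_snr
```

For example, a unit whose mean waveform spans 100 µV peak-to-trough with a per-sample SD of 10 µV has an SNR of 100 / (2 × 10) = 5 and would be retained.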

ANALYSIS

Kinematics

Kinematic parameters (position and direction) of hand and cursor movement in each condition were binned in 50 ms bins and, for most analyses, boxcar-smoothed using a 150 ms sliding window. In this experiment, the monkeys were trained to relax their arm voluntarily while either maintaining a static posture (Visual Playback condition) or having it moved by the exoskeleton (Proprioceptive and Visual+Proprioceptive Playback conditions). To exclude trials in which the monkeys may have drifted or voluntarily moved their arm away from the desired position, we defined a relaxation metric to filter the data. After correcting for the time delay of the position controller using the values obtained from the control experiment, we computed the error (Euclidean distance) between the cursor and hand positions on a sample-by-sample basis. Trials whose average error exceeded the mean error plus two standard deviations (as obtained from the control experiment described above) were excluded from further analysis. This threshold was 13.46 mm for monkey MK and 17.91 mm for monkey B.
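The binning, smoothing and trial-exclusion steps can be sketched as below. Assumptions: raw samples are averaged into bins, the 150 ms boxcar is applied to the binned series (three 50 ms bins), direction is computed from successive binned positions, and all function names are hypothetical.

```python
import numpy as np

FS_HZ = 500
BIN_MS = 50
SMOOTH_BINS = 3  # 150 ms boxcar at 50 ms bins


def bin_samples(x, fs_hz=FS_HZ, bin_ms=BIN_MS):
    """Average raw samples (first axis) into non-overlapping bins; drops the remainder."""
    x = np.asarray(x, dtype=float)
    per_bin = int(round(fs_hz * bin_ms / 1000))  # 25 samples per 50 ms bin
    n_bins = len(x) // per_bin
    return x[:n_bins * per_bin].reshape(n_bins, per_bin, *x.shape[1:]).mean(axis=1)


def boxcar(x, width=SMOOTH_BINS):
    """Centred moving average; edge bins are attenuated by the zero padding of mode='same'."""
    return np.convolve(x, np.ones(width) / width, mode="same")


def movement_direction(x, y):
    """Instantaneous movement direction (rad) from successive binned positions."""
    return np.arctan2(np.diff(y), np.diff(x))


def relaxation_threshold(ctrl_mean_mm, ctrl_sd_mm):
    """Exclusion threshold: control-experiment mean error plus two SDs."""
    return ctrl_mean_mm + 2.0 * ctrl_sd_mm
```

With the control-experiment statistics quoted earlier, 5.40 + 2(4.03) = 13.46 mm for monkey MK and 8.99 + 2(4.46) = 17.91 mm for monkey B, matching the thresholds above; a trial is then kept when its mean delay-corrected error is at or below the threshold.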

Mutual information

Mutual information between binned neural data and kinematics (50 ms bins) was calculated at multiple time leads and lags as in Paninski et al. [18]. This analysis captures nonlinear relationships between the two variables by means of signal entropy reduction. The computation yields a measure of the strength of the relationship between the two variables when they are shifted with respect to each other by different time lags. By examining the relative timing of the peak mutual information, we were able to determine at what time lag a neuron’s modulation was most related to the cursor movement.

The kinematic probability distributions (one-dimensional distribution of instantaneous movement direction) conditioned on the number of observed spikes were estimated by histograms of the empirical data. To account for biases in this estimation, the information calculated from shuffled kinematic bins (mean of one hundred shuffles) was subtracted from the values obtained from the actual data for each cell. Furthermore, statistical significance of the peaks in mutual information profiles was determined by comparing the magnitude of the resulting peak against the distribution of peak magnitudes at that lag resulting from the one hundred shuffles. If the magnitude of the peak mutual information was greater than ninety-nine of the values at that specific lag resulting from the one hundred computations on shuffled data, then the peak was deemed to be significant at the p < 0.01 level. Lastly, the significant lead/lag mutual information profiles were boxcar-smoothed with a 3-bin window (150 ms).
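The lagged, shuffle-corrected mutual information procedure can be sketched with a plug-in (histogram) estimator. This is an illustration under assumptions, not the exact estimator of [18]: direction is assumed to be pre-discretised into `n_dir` integer bins, spike counts are used directly as the conditioning variable, and the final 3-bin boxcar smoothing of significant profiles is omitted. Function names are hypothetical.

```python
import numpy as np


def plugin_mi(spikes, dir_bin, n_dir):
    """Histogram estimate of mutual information (bits) between spike counts
    and discretised movement direction (integer bins 0..n_dir-1)."""
    _, s_idx = np.unique(spikes, return_inverse=True)
    joint = np.zeros((s_idx.max() + 1, n_dir))
    np.add.at(joint, (s_idx, dir_bin), 1.0)
    p = joint / joint.sum()
    expected = p.sum(axis=1, keepdims=True) * p.sum(axis=0, keepdims=True)
    nz = p > 0
    return float(np.sum(p[nz] * np.log2(p[nz] / expected[nz])))


def lagged_pair(spikes, dir_bin, lag):
    """Positive lag: spikes at bin t paired with direction at t + lag
    (neural activity precedes movement). Negative lag: the reverse."""
    n = len(spikes)
    if lag >= 0:
        return spikes[:n - lag], dir_bin[lag:]
    return spikes[-lag:], dir_bin[:n + lag]


def mi_profile(spikes, dir_bin, n_dir, lags, n_shuffles=100, seed=0):
    """Shuffle-corrected MI at each lag, the lag of the peak, and whether the
    raw peak exceeds at least 99% of the shuffled values at that lag."""
    rng = np.random.default_rng(seed)
    raw = np.empty(len(lags))
    shuffled = np.empty((n_shuffles, len(lags)))
    for j, lag in enumerate(lags):
        s, d = lagged_pair(spikes, dir_bin, lag)
        raw[j] = plugin_mi(s, d, n_dir)
        for k in range(n_shuffles):
            shuffled[k, j] = plugin_mi(s, rng.permutation(d), n_dir)
    corrected = raw - shuffled.mean(axis=0)  # subtract estimation bias
    j_peak = int(np.argmax(corrected))
    n_beaten = np.sum(raw[j_peak] > shuffled[:, j_peak])
    significant = n_beaten >= int(0.99 * n_shuffles)  # p < 0.01 with 100 shuffles
    return corrected, lags[j_peak], bool(significant)
```

With 50 ms bins, a peak at lag 3 corresponds to neural activity leading movement direction by 150 ms, which is the kind of "driving" relationship discussed in the Results.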

Directional tuning

Preferred directions (PDs) were determined for each experimental condition by calculating the mean binned spike count (50 ms bins) as a function of instantaneous movement direction (at π/8 radian resolution). The lag between neural activity and movement direction for each cell was chosen based on the lag of the peak mutual information (see “Mutual information”). The mean spike counts per direction were fit with a cosine function [7]. Cells were considered to be cosine-tuned if the correlation between the empirical mean spike counts and the best-fit cosine function was greater than 0.5. Only cells that were cosine-tuned in both conditions were used to assess the difference in preferred directions between the Active Movement and individual playback conditions.
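The tuning analysis can be sketched as below, assuming the cosine model is fit by linear least squares in the form b0 + b1 cos θ + b2 sin θ (one common parameterisation of cosine tuning), so the preferred direction is atan2(b2, b1). The 16 direction bins follow from the π/8 resolution; the function names and the handling of empty bins are assumptions.

```python
import numpy as np

N_DIR_BINS = 16  # pi/8 rad resolution


def cosine_tuning(counts, direction, n_bins=N_DIR_BINS, r_min=0.5):
    """Fit mean binned spike count vs direction with b0 + b1*cos + b2*sin.

    counts: spike counts per 50 ms bin, already aligned to direction at the
    lag of peak mutual information. direction: radians in [-pi, pi).
    Returns (preferred direction in rad, r, is_cosine_tuned).
    """
    counts = np.asarray(counts, dtype=float)
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    centres = 0.5 * (edges[:-1] + edges[1:])
    idx = np.clip(np.digitize(direction, edges) - 1, 0, n_bins - 1)
    n_per_bin = np.bincount(idx, minlength=n_bins)
    sums = np.bincount(idx, weights=counts, minlength=n_bins)
    ok = n_per_bin > 0  # skip empty direction bins
    means = sums[ok] / n_per_bin[ok]
    theta = centres[ok]
    X = np.column_stack([np.ones_like(theta), np.cos(theta), np.sin(theta)])
    beta, *_ = np.linalg.lstsq(X, means, rcond=None)
    r = np.corrcoef(means, X @ beta)[0, 1]
    pd = np.arctan2(beta[2], beta[1])
    return pd, r, bool(r > r_min)


def pd_difference(pd_a, pd_b):
    """Signed angular difference between two PDs, wrapped to [-pi, pi]."""
    return float(np.angle(np.exp(1j * (pd_a - pd_b))))
```

Wrapping the difference matters when comparing conditions: PDs of 3.0 and -3.0 rad are only about 0.28 rad apart, not 6 rad.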

RESULTS

Context Dependent Modulation of Spiking Activity

We have previously demonstrated that neurons in motor cortex demonstrate congruent activity during visual observation of action when compared to active movement [26]. Here, we designed an experiment to test the hypothesis that proprioceptive as well as visual feedback during observation of action would elicit responses in motor cortex similar to those seen during active movement of the arm. We utilized four experimental conditions to test this hypothesis: Active Movement, Visual Playback, Proprioceptive Playback, and Visual+Proprioceptive Playback.

We first examined the spiking response of each neuron to changes in experimental condition by computing the instantaneous binned firing rate (50 ms bin size). Over the time scale of the entire experiment, we found significant heterogeneity in the responses of individual neurons. A one-sample t-test revealed that the firing rate of 97.4% (452/464) of the neurons we recorded was modulated with respect to its mean firing rate over the duration of the experiment in at least one experimental condition. We compared the conditional firing rate to the mean firing rate over the duration of the experiment (baseline) because the experimental design did not include a period of quiet rest in which to measure a true baseline firing rate for each neuron. Most neurons responded (with either an increase or a decrease in firing rate) to more than one condition: 82.1% (371), 73.4% (332), 72.4% (327) and 65.7% (297) of the 452 modulated neurons responded to the Active Movement, Visual Playback, Proprioceptive Playback, and Visual+Proprioceptive Playback conditions, respectively. Among the responsive cells in each condition, the majority decreased their firing rate relative to baseline [52% (193), 59.4% (199), 59.6% (195) and 64.3% (191) in the Active Movement, Visual Playback, Proprioceptive Playback, and Visual+Proprioceptive Playback conditions, respectively].
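A minimal sketch of the per-neuron modulation test, assuming SciPy's `scipy.stats.ttest_1samp`, hypothetical condition labels, and a significance level of 0.05 (the text does not state the level used). The session-wide mean serves as the baseline, as described above.

```python
import numpy as np
from scipy import stats

# Hypothetical labels for the four experimental conditions.
CONDITIONS = ("active", "visual", "proprio", "vis_prop")


def modulation_by_condition(rates, condition, alpha=0.05):
    """rates: 1-D binned firing rate (50 ms bins) of one neuron over the session.
    condition: same-length array of condition labels.
    Returns {label: "increase" | "decrease" | None} relative to the session mean."""
    baseline = rates.mean()  # no quiet-rest period, so the session mean is the baseline
    out = {}
    for c in CONDITIONS:
        res = stats.ttest_1samp(rates[condition == c], popmean=baseline)
        if res.pvalue < alpha:
            out[c] = "increase" if res.statistic > 0 else "decrease"
        else:
            out[c] = None
    return out
```

A neuron counts as modulated when at least one entry is not `None`; tallying the "decrease" entries per condition gives the kind of proportions reported above.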

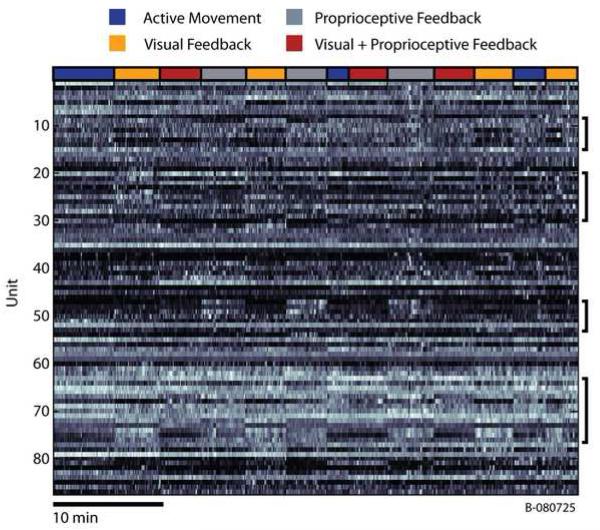

Some neurons seemed to prefer Active Movement, while others preferred individual sensory modalities or some complex combination of movement and sensory feedback. This diversity, as well as the structure in the neural activity, is illustrated in Figure 2, which shows the normalized binned firing rate as a function of time for each of the 87 neurons recorded during a single session. Changes in experimental condition coincide with substantial changes in the firing rates of individual neurons, which appear as vertical striations in Figure 2 (note in particular the neurons marked by black brackets).

Figure 2.

Time series of binned firing rates for all units recorded during a single session (B080725). Firing rates from each individual neuron were binned (50 ms bin size) and normalized to their maximum firing rate. The resulting time series were then smoothed using a zero-phase, 4th-order Butterworth lowpass filter with a cutoff frequency of 0.1 Hz for display purposes. Bins shown in white represent the highest firing rates for each cell, while those shown in black correspond to times when the firing rate was very low. Notice the substantial changes in the firing rates of some cells at the transitions between experimental conditions (especially those cells denoted by the black brackets). The colored bar at the top of the figure shows the transitions between the 4 experimental conditions.
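The display smoothing described in this caption can be sketched with SciPy, assuming that 50 ms bins give a 20 Hz sampling rate for the rate series and that "4th order" refers to the filter design order (forward-backward filtering doubles the effective attenuation). Second-order sections are used for numerical robustness at this very low normalised cutoff; that is a choice of the sketch, not something stated in the text.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

BIN_S = 0.05  # 50 ms bins -> 20 Hz sampling of the rate time series


def display_smooth(rate, cutoff_hz=0.1, order=4):
    """Normalise a binned firing-rate series to its maximum, then low-pass it
    forwards and backwards (zero phase). For display only."""
    rate = np.asarray(rate, dtype=float)
    norm = rate / rate.max()
    sos = butter(order, cutoff_hz, btype="low", fs=1.0 / BIN_S, output="sos")
    return sosfiltfilt(sos, norm)
```

The same function with `cutoff_hz=0.05` reproduces the heavier smoothing quoted for Figure 3.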

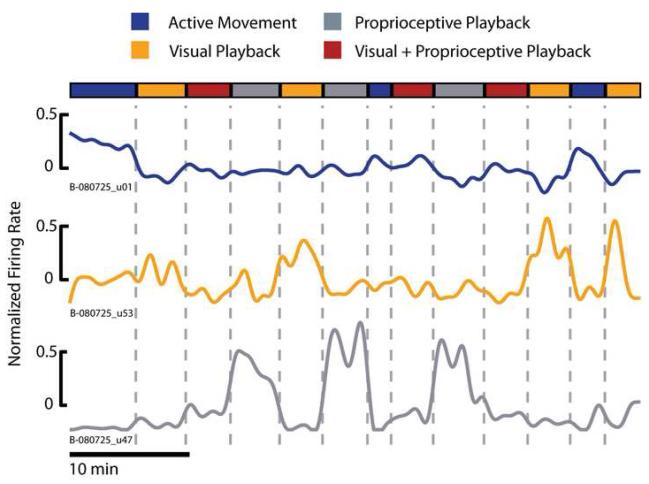

To formalize this diversity of neuronal responses, we first subtracted each neuron’s mean firing rate over the duration of the experiment and computed its average firing rate in each experimental condition. Next, we used a one-way ANOVA to identify differences in neuronal firing rates across experimental conditions. The ANOVA revealed a main effect of experimental condition in 96% (446/452) of the neurons that were modulated in any condition. We then used post hoc t-tests to sort each neuron into one of four groups based on its conditional firing rate. We first identified those cells that increased their firing rate only during the Active Movement condition (Figure 3, blue trace). Only 3.6% of cells (16/446) were placed in this group, indicating that the activity of neurons in MI is related to more than just overt movement. We further classified each neuron based on its responses during the Visual and Proprioceptive Playback conditions. Cells that increased their firing rate in the Visual Playback condition relative to the Proprioceptive Playback condition were placed in the Prefers Vision group (Figure 3, orange trace). Conversely, cells that increased their firing rate in the Proprioceptive Playback condition relative to the Visual Playback condition were placed in the Prefers Proprioception group (Figure 3, gray trace). This is not to say that cells classified as Prefers Vision or Prefers Proprioception responded only to the unimodal playback conditions (these cells likely modulate during multiple experimental conditions, including Active Movement), but rather that they responded more strongly to one sensory modality than to the other during playback. The remaining cells were modulated similarly in the Visual and Proprioceptive Playback conditions and were categorized as Multi-Sensory.
The majority of cells were placed in one of the unimodal sensory feedback categories with 39.4% (176/446) and 34.8% (155/446) of cells categorized as Prefers Vision or Prefers Proprioception, respectively. The Multi-Sensory group contained 22.2% (99/446) of the neurons.
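The classification procedure can be sketched as follows. The text specifies a one-way ANOVA followed by post hoc t-tests but not the exact decision rules, so the rules below are hypothetical: "Active Movement only" means a significant increase (above the session mean) in Active Movement and in no playback condition, and the Vision/Proprioception split uses an independent-samples t-test between the two unimodal playback conditions. SciPy's `f_oneway`, `ttest_1samp` and `ttest_ind` are used; labels and α = 0.05 are assumptions.

```python
import numpy as np
from scipy import stats

PLAYBACK = ("visual", "proprio", "vis_prop")  # hypothetical labels


def classify_neuron(r, alpha=0.05):
    """r: dict mapping condition label -> 1-D array of binned rates with the
    session mean already subtracted. Decision rules are hypothetical."""
    groups = [r["active"], *(r[c] for c in PLAYBACK)]
    if stats.f_oneway(*groups).pvalue >= alpha:
        return "no condition effect"

    def increased(x):
        res = stats.ttest_1samp(x, 0.0)
        return res.pvalue < alpha and res.statistic > 0

    if increased(r["active"]) and not any(increased(r[c]) for c in PLAYBACK):
        return "Active Movement only"
    vp = stats.ttest_ind(r["visual"], r["proprio"])
    if vp.pvalue < alpha:
        return "Prefers Vision" if vp.statistic > 0 else "Prefers Proprioception"
    return "Multi-Sensory"
```

Under these rules, every neuron with a condition effect lands in exactly one of the four groups, consistent with the group counts above summing to 446.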

Figure 3.

Diverse patterns of activity in single units during observation of action with different sensory modalities. Individual cells from the population of neurons we recorded were sorted into 4 categories based on their average firing rate in each experimental condition. Some cells only responded to Active Movement (B-080725_u01, blue trace). The Prefers Vision group of cells responded most strongly to the Visual Playback condition (B-080725_u53, orange trace), while the Prefers Proprioception population responded most strongly to the Proprioceptive Playback condition (B-080725_u47, gray trace). The final group (Multi-Sensory, not shown) consisted of those cells that responded similarly to both the Visual and Proprioceptive Playback conditions. It is important to note that cells classified as Prefers Vision or Prefers Proprioception did not respond only during the unimodal playback conditions; rather, the firing rate of these cells was modulated during multiple experimental conditions (see the increased firing rate during the Visual+Proprioceptive Playback condition in the example cell classified as Prefers Proprioception). The colored bar at the top of the figure shows the transitions between the 4 experimental conditions. Here the binned firing rate time series were smoothed using a zero-phase, 4th-order Butterworth lowpass filter with a cutoff frequency of 0.05 Hz for display purposes.

Information in Neural Spiking Activity

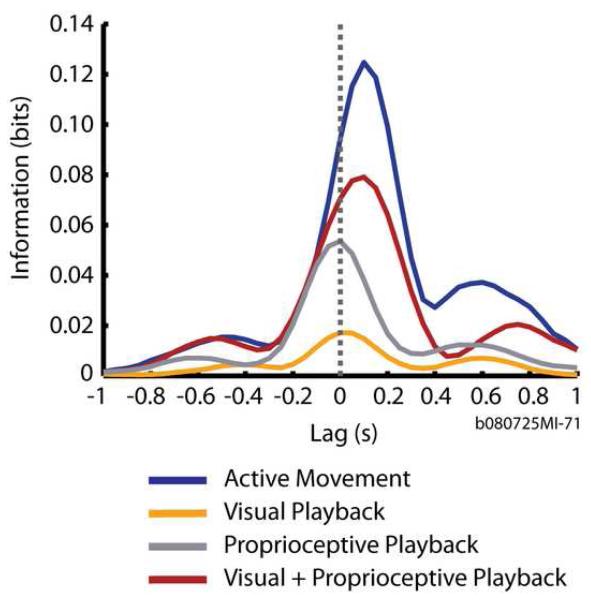

To assess the effect of sensory playback modality on the neural activity, we computed the mutual information between the instantaneous binned firing rate of each cell and the binned direction of either the cursor or the monkey’s hand, depending on the experimental condition. The mutual information about the direction of cursor or hand movement is computed at a range of temporal lags with respect to the instantaneous binned firing rate. Values at negative lags represent the amount of information in the current neural activity about the direction of movement that has already taken place. Conversely, values at positive lags represent the amount of information in the current neural activity about the direction of movement that has yet to occur. By considering the relative timing of the peak in mutual information together with its magnitude, we were able to determine at what time lag a neuron’s modulation is most related to the direction of movement.

We computed the mutual information measure using the direction of visual cursor movement when analyzing the data from the Active Movement and Visual Playback conditions. In the Active Movement condition, the visual cursor position was identical to the position of the monkey’s hand. In the Visual Playback condition, the monkey’s arm was motionless and the visual cursor and targets were the only relevant sensory stimuli. Since in the Proprioceptive Playback condition the only relevant sensory stimulus was the movement of the monkey’s hand along the trajectory of the invisible cursor, we computed the mutual information measure using the direction of hand movement to analyze the data from this condition. In the Visual+Proprioceptive Playback condition, both visual and proprioceptive stimuli were present, since the monkey’s arm was driven along the trajectory of the visual cursor. For this condition, we computed the mutual information twice: once on the direction of cursor movement and once on the direction of hand movement.

Consider the mutual information profiles for one cell, shown in Figure 4. The activity of this particular cell carried the greatest information (0.127 bits) about the direction of cursor movement during the Active Movement condition (Figure 4, blue trace). Furthermore, the neural activity of this cell carried the greatest amount of information about the direction of cursor movement occurring ∼160 ms in the future. This temporal relationship may be interpreted as a feed-forward signal, “driving” the cursor movement and is typical of motor cortical neurons that causally “drive” movement [15, 18]. Note that the amount of peak information and the timing at which it occurred differed for this neuron under the four experimental conditions. The trend in the difference indicated that the Visual+Proprioceptive Playback condition (Figure 4, red trace) generated a more “motor” response and was most similar to the Active Movement condition, while the Visual Playback and Proprioceptive Playback conditions (Figure 4, orange and gray traces, respectively) elicited a more “sensory” response.

Figure 4.

Example mutual information temporal profiles (in bits) for a single unit during Active Movement and playback conditions. Each trace plots the mutual information between the firing rate of a single neuron and the movement direction over different relative times between the two. A positive lag time denotes that the neural activity was measured before the movement direction whereas a negative lag time denotes that the neural activity was measured after the movement direction. A zero lag time denotes that the neural activity and movement direction were measured simultaneously. Mutual information profiles are plotted during Active Movement (blue trace), Visual Playback (orange trace), Proprioceptive Playback (gray trace) and Visual+Proprioceptive Playback conditions (red trace).

Using the mean firing rate-based cell classifications described earlier, we compared the magnitude of peak mutual information in the three playback conditions across the population of recorded neurons. A one-way ANOVA on peak mutual information found a significant effect of playback condition in cells classified as Prefers Proprioception or Multi-Sensory (p < 0.05; F(2,462) = 8.73 and F(2,294) = 3.78, respectively). We then used post hoc t-tests to determine pairwise differences in information magnitude for these groups of neurons.
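The reported degrees of freedom can be checked against the group sizes given earlier (155 Prefers Proprioception and 99 Multi-Sensory cells), assuming each cell contributes one peak value per playback condition and the k = 3 playback conditions are treated as the groups of a one-way ANOVA with n values each:

```latex
\begin{aligned}
df_{\text{between}} &= k - 1 = 3 - 1 = 2,\\
df_{\text{within}} &= kn - k,\\
\text{Prefers Proprioception } (n = 155):\quad df_{\text{within}} &= 3(155) - 3 = 462,\\
\text{Multi-Sensory } (n = 99):\quad df_{\text{within}} &= 3(99) - 3 = 294.
\end{aligned}
```

Both values match F(2,462) and F(2,294), so the statistics are consistent with a between-groups layout over all cells in each class.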

In neurons classified as Prefers Proprioception, the magnitude of peak mutual information was greater in the conditions in which the monkeys received proprioceptive feedback (Proprioceptive Playback and Visual+Proprioceptive Playback) than in the Visual Playback condition (p < 0.05). In these neurons, peak mutual information did not differ between the Proprioceptive and Visual+Proprioceptive Playback conditions. Similarly, in neurons categorized as Multi-Sensory, peak information magnitude was greater during Visual+Proprioceptive Playback than during Visual Playback (p < 0.05). In these neurons, however, peak information magnitude in the Proprioceptive Playback condition did not differ from that in either the Visual+Proprioceptive or the Visual Playback condition. We found no significant effect of playback condition in neurons classified as Prefers Vision.

We were specifically interested in understanding how the strength and temporal relationship of mutual information were modulated by sensory feedback modality in cells whose activity contained significant information about direction in each of the experimental conditions. Therefore, to be included in the following analyses, cells had to exhibit significant peak mutual information computed on the direction of cursor movement in the Active Movement and Visual Playback conditions, as well as on the direction of hand movement in the Proprioceptive and Visual+Proprioceptive Playback conditions. Approximately 27% (125/464) of all recorded cells from both monkeys passed this criterion. To make comparisons relevant to the Visual+Proprioceptive Playback condition, cells had to meet the above criterion and also exhibit significant mutual information peaks in the Visual+Proprioceptive Playback condition when computed on the direction of cursor movement. After imposing this extra criterion, we were left with 124 of the original 125 accepted neurons. Based on the mean conditional firing rate classification, 57 (45.9%), 29 (23.3%) and 35 (28.2%) of these 124 neurons were classified as Prefers Vision, Prefers Proprioception and Multi-Sensory, respectively. Only two neurons (1.6%) responded solely to the Active Movement condition, and one neuron was unclassified.

We examined the mutual information content by pooling, across the two monkeys, the peak mutual information values of each cell (Table 1) and the lags at which those peaks occurred (Table 2) within each experimental condition (Figures 5 and 6, respectively). We then used paired t-tests to test for differences in peak information magnitude and lag between experimental conditions, with a significance threshold of α = 0.05/4 = 0.0125 (Bonferroni correction for multiple comparisons).
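A sketch of the pairwise comparison procedure, assuming per-cell peak values (or peak lags) are stored in one array per condition with cells in the same order, which a paired test requires. It uses `scipy.stats.ttest_rel` and the α = 0.05/4 threshold stated in the text; condition labels and the function name are hypothetical.

```python
from itertools import combinations

import numpy as np
from scipy import stats

ALPHA = 0.05 / 4  # 0.0125, the Bonferroni-corrected threshold stated in the text


def pairwise_paired_tests(values, alpha=ALPHA):
    """values: dict label -> 1-D array of per-cell peak MI (or peak lag),
    with cells in the same order in every array.
    Returns {(a, b): (t, p, significant)} for every pair of conditions."""
    out = {}
    for a, b in combinations(values, 2):
        res = stats.ttest_rel(values[a], values[b])
        out[(a, b)] = (float(res.statistic), float(res.pvalue), bool(res.pvalue < alpha))
    return out
```

A positive t for a pair (a, b) means condition a had the larger mean, which is how the sign convention in the tables below can be read.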

Table 1. MI Values - Statistics Summary.

Statistical comparison of mean peak mutual information values with respect to direction of movement among the four different experimental conditions. All tests were paired t-tests; significance was assessed at the Bonferroni-corrected threshold α = 0.0125.

| Comparison | Act | V+P (Hand) | V+P (Cursor) | Prop | Vis |
|---|---|---|---|---|---|
| Act: t | | 6.49 | 8.0672 | 5.1476 | 7.859 |
| Act: p | | <0.005 | <0.005 | <0.005 | <0.005 |
| Vis: t | | -5.26 | -4.922 | -4.422 | |
| Vis: p | | <0.005 | <0.005 | <0.005 | |
| Prop: t | | -1.884 | -0.882 | | |
| Prop: p | | 0.0619 | 0.3797 | | |
| Mean (bits) | 0.0769 | 0.0563 | 0.0504 | 0.0461 | 0.0257 |
| ± SE (bits) | ± 0.0074 | ± 0.0067 | ± 0.006 | ± 0.0045 | ± 0.0026 |

Columns are ordered left to right from the most to the least peak mutual information (bits).

Table 2. MI Lags - Statistics Summary.

Statistical comparison of mean peak mutual information lags with respect to direction of movement among the four different experimental conditions. All tests were paired t-tests; significance was assessed at the Bonferroni-corrected threshold α = 0.0125.

| Comparison | Act | V+P (Hand) | V+P (Cursor) | Vis | Prop |
|---|---|---|---|---|---|
| Act: t | | 2.0125 | 2.7066 | 3.6713 | 7.2184 |
| Act: p | | 0.0464 | 0.0078 | <0.005 | <0.005 |
| Vis: t | | -2.5621 | -1.7328 | | 1.301 |
| Vis: p | | 0.0116 | 0.0856 | | 0.196 |
| Prop: t | | -5.9049 | -3.7318 | | |
| Prop: p | | <0.005 | <0.005 | | |
| Mean (ms) | 118 | 92 | 75 | 41 | 14 |
| ± SE (ms) | ± 11 | ± 11 | ± 15 | ± 18 | ± 10 |

Columns are ordered left to right from the most to the least ‘driving’ (longest to shortest positive) mean peak lag, in ms.

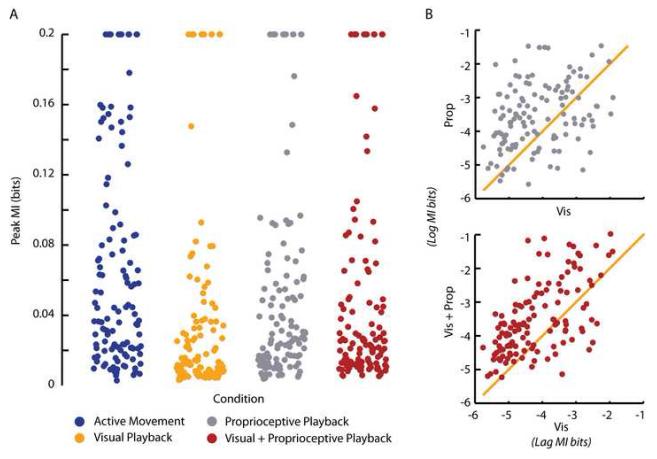

Figure 5.

Graded changes in peak mutual information about movement direction. (A) Peak mutual information values (bits) with respect to direction of movement for the 125 cells used in our analyses during the Active Movement (blue), Visual Playback (orange), Proprioceptive Playback (gray) and Visual+Proprioceptive Playback (red) conditions. Values exceeding 0.2 bits are plotted at 0.2 bits. (B) The top panel (gray dots) compares the peak mutual information about direction of movement conveyed by each neuron during the Proprioceptive Playback condition with that conveyed during the Visual Playback condition. The bottom panel (red dots) compares the peak mutual information conveyed during the Visual+Proprioceptive Playback condition with that conveyed during the Visual Playback condition. Data in both panels are plotted as the natural log of the mutual information values in bits.

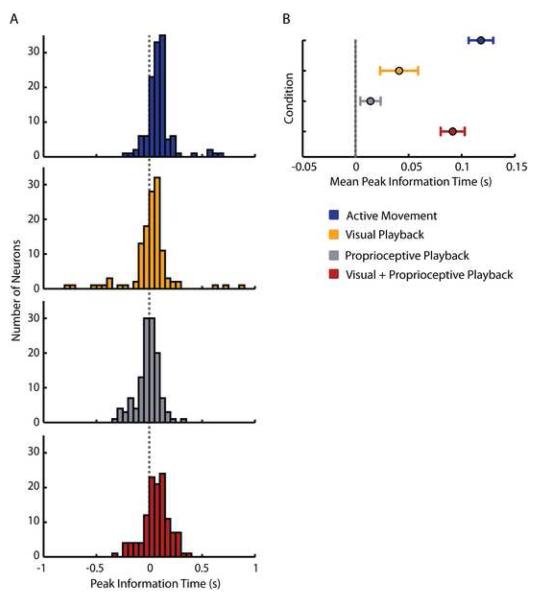

Figure 6.

Graded changes in the time lag of peak mutual information about movement direction. (A) Distributions of the lags at which mutual information with respect to direction of movement peaks for the 125 cells used in our analysis during the Active Movement (blue histogram), Visual Playback (orange histogram), Proprioceptive Playback (gray histogram) and Visual+Proprioceptive Playback (red histogram) conditions. The dotted vertical line intersecting the four histograms marks zero time lag. (B) Summary of panel A, showing the average (± standard error) peak mutual information lag across all cells during the Active Movement (blue circle), Visual Playback (orange circle), Proprioceptive Playback (gray circle) and Visual+Proprioceptive Playback (red circle) conditions.

When comparing the two unimodal sensory conditions (Visual Playback and Proprioceptive Playback; Figure 5A, data shown in orange and gray, respectively), we found that the peak information provided by most cells about the direction of movement was significantly higher in the Proprioceptive than in the Visual Playback condition (Figure 5B, top panel; Table 1). Neural activity sampled during both the Visual and the Proprioceptive Playback conditions provided significantly less information about the direction of movement than activity in the Active Movement condition (Table 1). Similarly, the Visual Playback condition yielded significantly less information about the direction of movement than the Visual+Proprioceptive Playback condition (Figure 5B, bottom panel; Table 1). We found no difference, however, between the magnitudes of peak mutual information in the Proprioceptive and Visual+Proprioceptive Playback conditions.

We found that the peak information provided by most cells about the direction of movement was highest in the two experimental conditions that gave the monkeys both visual and proprioceptive feedback about the direction of movement, that is, the Active Movement and Visual+Proprioceptive Playback conditions (Figure 5A, data shown in blue and red, respectively). The amount of information was highest during the Active Movement condition and second highest during the Visual+Proprioceptive Playback condition, both when computed on the direction of hand movement and when computed on the direction of cursor movement (Table 1). Although small, the difference between the distributions of peak mutual information magnitudes for the Visual+Proprioceptive Playback condition computed on the direction of the hand and on the direction of the cursor was statistically significant (t(123) = 6.4; p < 0.005; paired t-test).

We also considered the timing of the mutual information peak in each experimental condition (Figure 6). Timing results from the Active Movement condition served as a control, representing the timing of the neural activity in MI expected to ‘drive’ behavior (Table 2). In the Visual Playback condition, the timing of peak mutual information shifted closer to zero lag. While the difference in peak lags between the Visual Playback and Active Movement conditions was significant, the difference in peak information timing between the Visual Playback and Visual+Proprioceptive Playback conditions was not (Table 2). The timing of peak mutual information was closest to zero lag during the Proprioceptive Playback condition (Figure 6A, data shown in gray). Here, the average lag at peak mutual information was not significantly different from the average lag in the other unimodal sensory condition, Visual Playback (Table 2). However, there was a significant difference in the timing of neural responses when comparing peak mutual information lags during the Proprioceptive Playback condition with those during the Visual+Proprioceptive Playback condition (Table 2).

The timing of peak mutual information during the Visual+Proprioceptive Playback condition most closely resembled the timing in the Active Movement condition (Figure 6A). In fact, there was no difference between the mean peak mutual information lag in the Active Movement condition and that in the Visual+Proprioceptive Playback condition computed on the direction of hand movement (Table 2). However, there was a marginally significant difference in average peak mutual information lag between the Active Movement condition and the Visual+Proprioceptive Playback condition computed on the direction of cursor movement (Table 2). We found no difference between the average peak mutual information lags during the Visual+Proprioceptive Playback condition derived from the direction of cursor movement and from the direction of hand movement (t(123) = 1.4; p = 0.15; paired t-test). Therefore, for the remainder of this analysis we report only the peak mutual information lags computed with respect to the direction of hand movement.

Our mutual information analysis showed that neural responses most closely resembled movement-like activity when both visual and proprioceptive sensory modalities were present during playback. Proprioceptive Playback alone yielded more mutual information about the direction of movement than Visual Playback alone. However, the timing of neural activity during Visual Playback resembled that during Active Movement more closely than did the timing during Proprioceptive Playback (Figure 6B).
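How a peak mutual information value and its lag might be obtained can be sketched with a plug-in (histogram) estimator. This is a minimal sketch under stated assumptions: spike counts and movement directions are pre-binned at a common bin width, direction is discretized into a hypothetical number of sectors (8 by default), and no bias correction or significance test is applied, so the estimator used in the study may differ. The sign convention follows the text: a positive lag means neural activity precedes movement (‘driving’), a negative lag means movement precedes neural activity (‘sensory’).

```python
import math
from collections import Counter


def direction_sector(angle_rad, n_sectors=8):
    """Discretize a movement direction (radians) into one of n_sectors bins."""
    width = 2 * math.pi / n_sectors
    return int((angle_rad % (2 * math.pi)) // width) % n_sectors


def plugin_mi(xs, ys):
    """Plug-in mutual information, in bits, between two discrete sequences."""
    n = len(xs)
    joint, px, py = Counter(zip(xs, ys)), Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y])) for (x, y), c in joint.items()
    )


def mi_at_lag(counts, sectors, lag):
    """MI between the spike count in bin t and the direction sector in bin t + lag.

    counts and sectors must have the same length.
    """
    if lag >= 0:
        xs, ys = counts[: len(counts) - lag], sectors[lag:]
    else:
        xs, ys = counts[-lag:], sectors[: len(sectors) + lag]
    return plugin_mi(xs, ys)


def peak_mi(counts, sectors, lags):
    """Return (peak MI in bits, lag in bins at which the peak occurs)."""
    return max((mi_at_lag(counts, sectors, lag), lag) for lag in lags)
```

Multiplying the returned lag by the bin width gives the lag in ms. The plug-in estimate is biased upward for short recordings, which is one reason a shuffle-based significance criterion would normally accompany it.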

Directional Tuning

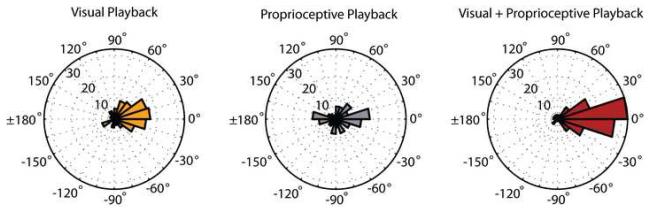

To further characterize the relationship between neural modulation and direction of movement, we computed the preferred direction of each cell during each condition and compared the preferred directions of neurons during the Active Movement condition with those during each of the playback conditions (Figure 7). Of the 124 neurons that passed our mutual information significance criterion, only those that exhibited stable and significant cosine tuning during both of the compared conditions were considered for this analysis. We found no difference in the distributions of preferred directions during the Active Movement and Visual Playback conditions (N = 116 cells, p = 0.81, Kuiper test). The mean (± SE) difference in preferred directions between these two conditions was 16.85° ± 28.07°. In contrast, the distributions of preferred directions during the Active Movement and Proprioceptive Playback conditions were statistically different (N = 122 cells, p < 0.05, Kuiper test). We found no difference between the distributions of preferred directions during the Active Movement and Visual+Proprioceptive Playback conditions (N = 121 cells, p = 0.52, Kuiper test). The mean (± SE) difference in preferred direction between these two conditions was 3.39° ± 15.65°.

Figure 7.

Similarity in preferred directions of cells during Active Movement and the three playback conditions, shown as distributions of differences in preferred direction between Active Movement and Visual Playback (orange), Active Movement and Proprioceptive Playback (gray), and Active Movement and Visual+Proprioceptive Playback (red).
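The preferred-direction analysis described above can be sketched as follows. This is a minimal illustration, assuming a cosine tuning model rate = b0 + b1·cos θ + b2·sin θ fit by ordinary least squares, with the preferred direction taken as atan2(b2, b1); it omits the stability and significance checks applied in the study. The Kuiper p-value uses the asymptotic series popularized by Numerical Recipes, which is an assumption about how the test was evaluated rather than a description of the authors' software. Kuiper's statistic does not depend on where the circle is "cut", which is why it suits angular data such as preferred directions.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_cosine_tuning(directions_rad, rates):
    """Least-squares fit of rate = b0 + b1*cos(theta) + b2*sin(theta).

    Returns (baseline b0, modulation depth, preferred direction in degrees).
    """
    rows = [(1.0, math.cos(t), math.sin(t)) for t in directions_rad]
    a = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    c = [sum(r[i] * y for r, y in zip(rows, rates)) for i in range(3)]
    det = _det3(a)
    coef = []
    for k in range(3):  # Cramer's rule on the normal equations
        ak = [[c[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        coef.append(_det3(ak) / det)
    b0, b1, b2 = coef
    return b0, math.hypot(b1, b2), math.degrees(math.atan2(b2, b1)) % 360.0


def kuiper_two_sample(a, b):
    """Two-sample Kuiper statistic V and its asymptotic p-value."""
    a, b = sorted(a), sorted(b)
    n1, n2 = len(a), len(b)
    i = j = 0
    d_plus = d_minus = 0.0
    while i < n1 and j < n2:
        x = min(a[i], b[j])
        while i < n1 and a[i] <= x:  # advance both empirical CDFs past x (ties)
            i += 1
        while j < n2 and b[j] <= x:
            j += 1
        diff = i / n1 - j / n2
        d_plus, d_minus = max(d_plus, diff), max(d_minus, -diff)
    v = d_plus + d_minus
    ne = n1 * n2 / (n1 + n2)
    lam = (math.sqrt(ne) + 0.155 + 0.24 / math.sqrt(ne)) * v
    if lam < 0.4:
        return v, 1.0
    p = sum(2 * (4 * k * k * lam * lam - 1) * math.exp(-2 * k * k * lam * lam)
            for k in range(1, 101))
    return v, min(1.0, max(0.0, p))
```

For the comparisons in the text, `a` and `b` would hold the preferred directions (in degrees) of the tuned cells in the two conditions being compared.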

Consistent with our previous report [26], the analysis of differences in preferred directions shows that neurons had similar directional tuning properties during the Active Movement condition and the playback conditions in which a visual target was present (i.e., Visual Playback and Visual+Proprioceptive Playback). In contrast, a bimodal distribution of differences in preferred direction was observed when comparing tuning in the Proprioceptive Playback and Active Movement conditions (Figure 7, gray histogram). This bimodal distribution suggested the existence of two populations of neurons: one whose preferred directions were congruent in both conditions and another whose preferred directions were opposite in the two conditions.

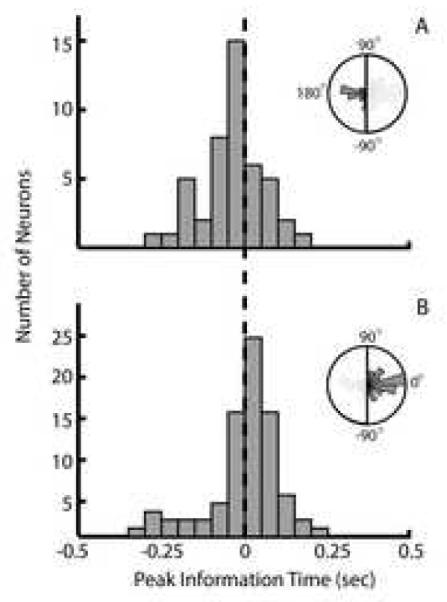

We further examined this bimodal distribution of preferred direction differences by sorting each significantly tuned neuron into one of two categories. The ‘Oppositely Tuned’ category contained those neurons whose preferred directions during the Proprioceptive Playback and Active Movement conditions differed by more than 90° or less than -90° (Figure 8A, inset). Conversely, the ‘Similarly Tuned’ category contained those neurons whose preferred direction differences fell between -90° and 90° (Figure 8B, inset). Next, we computed the mean peak mutual information lag of the neurons in each group. A one-sample t-test showed that the mean peak mutual information lag of ‘Oppositely Tuned’ neurons (-10.9 ms; Figure 8A) was significantly less than that of ‘Similarly Tuned’ neurons (25.7 ms; Figure 8B). This suggests that the ‘Oppositely Tuned’ neurons show more of a sensory response during the Proprioceptive Playback condition. Paradoxically, these same cells exhibit a mean peak mutual information lag of 129 ms during the Active Movement condition, which we interpret as a ‘driving’ lag, in contrast to the sensory lag seen in the Proprioceptive Playback condition.

Figure 8.

Separable sensory and motor responses during Proprioceptive Playback. (A) Distribution of peak mutual information lags of cells that show a 180° shift in preferred direction between the Proprioceptive Playback and Active Movement conditions. The mean lag of this distribution lies to the left of zero lag and thus reflects ‘sensory’ activity. (B) Distribution of peak mutual information lags of cells that show no shift in preferred direction between the Proprioceptive Playback and Active Movement conditions. The mean lag of this distribution lies to the right of zero lag, reflecting activity that ‘drives’ movement. In both panels, the insets show histograms of preferred direction differences for the respective group of cells.
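The split into ‘Oppositely Tuned’ and ‘Similarly Tuned’ groups can be sketched as below. This assumes each cell is summarized by its preferred direction in the Proprioceptive Playback and Active Movement conditions (degrees) and its peak mutual information lag (ms) in Proprioceptive Playback; the tuple layout is hypothetical, and assigning a difference of exactly ±90° to ‘Similarly Tuned’ is an assumption, since the text does not say how boundary cases were handled.

```python
from statistics import mean


def pd_difference(pd_a, pd_b):
    """Signed circular difference pd_a - pd_b in degrees, wrapped to [-180, 180)."""
    return (pd_a - pd_b + 180.0) % 360.0 - 180.0


def split_by_tuning(cells):
    """Group peak-MI lags by the shift in preferred direction.

    cells: iterable of (pd_proprio_deg, pd_active_deg, peak_lag_ms).
    Returns (lags of 'Oppositely Tuned' cells, lags of 'Similarly Tuned' cells).
    """
    opposite, similar = [], []
    for pd_prop, pd_act, lag in cells:
        shift = pd_difference(pd_prop, pd_act)
        (opposite if abs(shift) > 90.0 else similar).append(lag)
    return opposite, similar


def group_mean_lags(cells):
    """Mean peak-MI lag of each group (raises StatisticsError if a group is empty)."""
    opposite, similar = split_by_tuning(cells)
    return mean(opposite), mean(similar)
```

Wrapping the difference before thresholding matters: preferred directions of 350° and 10° differ by 20°, not 340°, and should fall in the ‘Similarly Tuned’ group.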

DISCUSSION

Over the past ten years, considerable progress has been made in improving three of the four fundamental components of a cortically-controlled brain-machine interface: 1) multi-electrode recording sensor arrays, 2) decoding algorithms, and 3) output interfaces to be controlled by the cortically-derived signals. Much less attention has been paid to the fourth component: sensory feedback [9]. In this work, we used a task involving the visual and proprioceptive replay of movements to dissociate the effect of each modality on the spiking activity of neurons in MI. We tested the hypothesis that task-relevant proprioceptive sensory information is present in the activity of neurons in MI and that the addition of veridical proprioceptive feedback about the observed action enhances congruence in neural activity compared to visual observation alone. Consistent with our hypotheses, we found that the activity of neurons in MI was strongly modulated by the direction of arm movement in conditions where monkeys had access to proprioceptive feedback. Furthermore, the combination of visual and proprioceptive feedback during action observation elicited responses in motor cortex that were very similar to those recorded during overt arm movements.

Although perhaps not surprising, one of the striking results of this work is the heterogeneity of neural activity evoked by the experimental manipulations. Specifically, the firing rate of the majority of neurons we sampled was modulated by multiple experimental conditions (both Active Movement and Passive Playback). Additionally, some cells demonstrated clear preferences for distinct feedback modalities during playback (Figure 3, orange and gray traces). In fact, only 3.6% of the population responded during the Active Movement condition alone. This richness in response characteristics is consistent with the notion that the activity in MI is related to more than simply a single variable explicitly controlling motor output (see [23] for a review). There is, in fact, significant experimental evidence demonstrating that neural activity in MI is related to many different types of movement information including spatial goals [11, 26], hand motion [7, 15], sensory feedback [6], force output [4], and muscle activity [16].

Enhanced Congruence with Multisensory Feedback

The combination of sensory feedback modalities in the Visual+Proprioceptive Playback condition resulted in peak mutual information values that were significantly greater than those in Visual Playback alone. Furthermore, these peaks occurred at time lags very close to those observed in the Active Movement condition. We interpret these findings as evidence that when both visual and proprioceptive sensory information about the observed action are veridical, activity in MI more faithfully represents the motor commands that would be present if the animal were actually moving the cursor with his arm. The results of our directional tuning analysis further support this interpretation. Just as during the Visual Playback condition, neurons tend to maintain directional tuning properties during Visual+Proprioceptive Playback that are very similar to those observed during the Active Movement condition (Figure 7, red histogram).

We attribute this enhanced congruence in the Visual+Proprioceptive Playback condition to the addition of proprioceptive feedback, which allows the monkey to better estimate the state of his hand. During the Visual Playback condition, monkeys are given veridical visual information about the goal of the desired movement, but they lack complete information about the starting location of the would-be movement. When the arm is unseen, planning a movement requires the visual sense to establish a movement goal and the proprioceptive sense to establish the starting position of the movement [19, 24, 29]. When the two modalities are combined, the information necessary for a more accurate movement plan is available, and we therefore observe the greatest peak mutual information at the most ‘motor’ lags in the condition where veridical feedback from both sensory modalities is present (i.e., Visual+Proprioceptive Playback).

An alternative explanation is that the monkeys might actively move their arm with assistance from the exoskeleton. This is unlikely because movements generated by the animal would not necessarily follow the same trajectory as the visual cursor, thereby increasing the error between the visual cursor and the hand. Such an increase in error would cause the trial to be removed from our analyses for violating the empirically established error threshold.
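The trial-rejection rule can be sketched as follows. The text does not state how the hand-cursor error was measured or what threshold value was used, so this sketch assumes the error is the largest Euclidean distance between hand and cursor positions sampled at the same times within a trial; the threshold and the trial dictionary layout are hypothetical.

```python
import math


def max_tracking_error(hand_xy, cursor_xy):
    """Largest Euclidean distance between paired hand and cursor samples."""
    if len(hand_xy) != len(cursor_xy):
        raise ValueError("hand and cursor traces must have the same length")
    return max(math.dist(h, c) for h, c in zip(hand_xy, cursor_xy))


def keep_trials(trials, threshold):
    """Keep trials whose hand-cursor error never exceeds the threshold.

    trials: list of dicts with 'hand' and 'cursor' lists of (x, y) samples
    (hypothetical layout); threshold is in the same units as the positions.
    """
    return [t for t in trials if max_tracking_error(t["hand"], t["cursor"]) <= threshold]
```

Under this rule, an animal pushing the exoskeleton off its playback path would produce a larger hand-cursor distance, and the trial would be discarded.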

Consistent with the expectation that proprioceptive feedback enhances the congruence of neural responses, we found that Proprioceptive Playback generates strong state information about the arm. The magnitude of peak mutual information about hand movement in the Proprioceptive Playback condition did not differ from that in Visual+Proprioceptive Playback, and it was greater than in Visual Playback. In contrast, we found that the tuning of a subset of neurons was shifted by approximately 180 degrees (‘Oppositely Tuned’) during Proprioceptive Playback. When examining the responses of these cells more closely, we found that they had a sensory peak information lag of -10.9 ms (i.e., movement precedes neural activity), whereas neurons with congruent tuning had a peak information lag of 25.7 ms.

Proprioceptive Information Induces Rapid Motor Responses to Sensory Stimuli

In a study examining the motor and sensory responses of neurons in MI, Fetz and colleagues observed a population of neurons that increased their spiking activity in response to active and passive elbow movements made in the same direction [6]. We also found that some cells maintained their tuning relationship during Proprioceptive Playback and discharged at lags consistent with a ‘driving’ response (Figure 8B). The motor-like response of these cells is unexpected because the visual target is invisible during Proprioceptive Playback, and thus the stimulus required to plan a movement is absent. During playback, however, the monkeys are trained to comply with the movements of the exoskeleton. Continuous movement of the exoskeleton signals where the arm is moving, and because the hand’s future movement direction is related to its current direction, the monkeys can probably predict the goal of the movement accurately over a short time horizon (on the order of the driving response, about 25 ms). Thus, a weak motor command that facilitates the current movement direction is likely generated, although it is not executed.

Responses similar to the opposing responses we described have been observed previously in experiments exploring neural responses to load compensation [5] and to active and passive movements [6]. Evarts and Tanji described this type of neural response in MI pyramidal tract neurons. In that study, the monkeys were trained to maintain a static posture while holding a joystick. During a hold phase, a light instructed the direction of movement that the animals had to generate when a torque perturbation was applied to the joystick. The instructed direction of movement either coincided with or opposed the direction of the torque perturbation. They found that a large number of neurons would discharge to generate a movement in a particular direction, and that the same neurons would discharge reflexively when an external stimulus was applied in the opposing direction [5]. Similarly, Fetz and colleagues found a population of neurons in MI that responded to active and passive elbow movements made in opposite directions [6]. They speculated that cells with these responses could function as a component of a ‘transcortical reflex’ loop.

Our paradigm is similar to that of Evarts and Tanji in that an external stimulus is being applied to the handle that the monkeys hold. In our experiment, however, the stimulus is continuous and the monkeys are taught to comply with the perturbation and allow their arm to follow the motion of the exoskeleton. We speculate that when the movement of the exoskeleton changed direction, a reflexive response was evoked in some cells. We observed this response in the ‘Oppositely Tuned’ cells as a 180 degree shift in the neurons’ preferred directions, as well as a shift in the mean peak mutual information lags to reflect a sensory relationship (Figure 8A).

These cells did not demonstrate an opposing response during the Visual+Proprioceptive Playback condition. Instead, this population of cells had tuning properties similar to those observed when the monkeys actively moved their arm. During playback with a visual target, the motor command does not result in a movement because the monkeys are trained to relax. It is, however, likely that the sensory consequences of this covert motor command (i.e., a motor command that is generated but whose execution is suppressed) are available by way of the combination of efference copy and a forward model (for a review see [31]). Because the relaxed arm is driven to the target by the exoskeleton and the visual target is present in the Visual+Proprioceptive Playback condition, the sensory prediction closely approximates the actual state of the limb, resulting in a down-regulation of reflex responses via alpha-gamma motor neuron coactivation [1]. This process results in the congruent tuning relationship observed in the Visual+Proprioceptive Playback condition (Figure 7, red histogram). In contrast, during Proprioceptive Playback the target is invisible, and a weak motor command is generated due to the monkeys’ implicit understanding that they must comply with movements of the exoskeleton. Therefore, the neuromotor controller is not able to appropriately adjust the activity of the gamma motor neuron pool, resulting in a neural response to arm motion with a tuning relationship opposite to that during active movement.

BMI Application of Visually Triggered Responses in MI

Consistent with our previous report, we demonstrated that the activity of neurons in MI during visual observation of action contains a significant amount of information related to movement direction at time lags consistent with the generation of motor commands [19, 26]. The tuning properties of these neurons are also congruent with preferred directions measured during the Active Movement condition (Figure 7, orange histogram). These congruent (‘mirror-like’) responses during Visual Playback appear to be triggered involuntarily (i.e., in the absence of any volitional motor intention), as the monkeys are required to keep their arm still.

These responses have utility in BMI applications. Previous strategies have used motor imagery to elicit activity in MI in patient populations in order to build a decoder [10]. The ability of patients to imagine movements, however, was not consistently present (Maryam Saleh, unpublished data). Moreover, there is some evidence that “first-person”, kinetic motor imagery elicits stronger activation in primary motor cortex than “third-person”, visual motor imagery [13], yet it is more difficult to instruct a patient to generate kinetic imagery [25]. It is likely that the automatic, visually triggered responses we observed during Visual Playback can be used to build a mapping between neural modulation and cursor motion, which could then guide a BMI in real time in patients who are unable to generate movement.
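The text does not specify the form of such a mapping. As one hypothetical illustration, a population-vector decoder could use cosine tuning parameters (baseline, modulation depth, preferred direction) estimated while the subject watches Visual Playback, and then decode movement direction from new firing rates. The tuning tuple layout, decoding a single time bin, and decoding direction only are all assumptions of this sketch; it is not the decoder used in the cited clinical work.

```python
import math


def population_vector(rates, tuning):
    """Decode a 2-D movement direction (degrees) from one bin of firing rates.

    tuning: per-neuron (baseline, depth, preferred_direction_deg), e.g. from
    cosine fits made during Visual Playback (hypothetical training data).
    """
    vx = vy = 0.0
    for rate, (baseline, depth, pd_deg) in zip(rates, tuning):
        weight = (rate - baseline) / depth  # normalized modulation of this cell
        vx += weight * math.cos(math.radians(pd_deg))
        vy += weight * math.sin(math.radians(pd_deg))
    return math.degrees(math.atan2(vy, vx)) % 360.0
```

Normalizing by each cell's modulation depth keeps strongly and weakly tuned cells on comparable scales; a linear regression decoder fit to the same observation data would be an equally plausible alternative.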

Conclusion

The finding that passive, task-like movements enhance congruent responses in the motor cortex is important in the context of augmenting BMIs with additional sensory modalities. Given this finding, two possible strategies for such an augmentation arise. One strategy is to rely on both visual and proprioceptive feedback to build or ‘train’ a decoder. One can imagine a paralyzed subject with residual proprioceptive sense (a patient with ALS, for instance) passively observing a repertoire of prerecorded movements while a temporary exoskeleton moves the subject’s limbs to mimic the observed movements. Since proprioceptive feedback enhances the mirror-like responses of MI neurons, a decoder trained in this way could outperform one trained with visual feedback alone.

The second strategy is to rely on proprioceptive feedback during real-time decoding, that is, to use proprioceptive feedback to enhance the accuracy of a decoder that was trained using only passive observation. This approach could again be implemented either with a portable exoskeleton or through functional electrical stimulation (FES). The exoskeleton would be controlled by the output of the decoder to provide the subject with proprioceptive feedback about the decoded action, whereas FES could be used to stimulate the muscles of the limb so that the residual proprioceptive sense would inform the patient about the movements of their own limb [9]. Whichever strategy is ultimately chosen, the results of this study suggest that BMI performance can be improved by adding other forms of sensory feedback, such as proprioception, during the training stage, the decoding stage, or both.

ACKNOWLEDGMENTS

The first two authors of this paper contributed equally to this project. The authors wish to thank Josh Coles for his assistance with the experiments. The work is supported by funding from NIH NINDS R01 NS45853-01 to NG and the PVA Research Foundation 114338 to AS.

Footnotes

STATEMENT OF COMMERCIAL INTEREST

N.G. Hatsopoulos has stock ownership in a company, Cyberkinetics Neurotechnology Systems, Inc., that fabricated and sold the multi-electrode arrays and acquisition system used in this study.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- 1.Bullock D, Cisek P, Grossberg S. Cortical networks for control of voluntary arm movements under variable force conditions. Cereb Cortex. 1998;8(1):48–62. doi: 10.1093/cercor/8.1.48. [DOI] [PubMed] [Google Scholar]

- 2.Carrillo-de-la-Pena MT, Galdo-Alvarez S, Lastra-Barreira C. Equivalent is not equal: Primary motor cortex (MI) activation during motor imagery and execution of sequential movements. Brain Res. 2008 doi: 10.1016/j.brainres.2008.05.089. [DOI] [PubMed] [Google Scholar]

- 3.Crago PE, Houk JC, Hasan Z. Regulatory actions of human stretch reflex. J Neurophysiol. 1976;39(5):925–35. doi: 10.1152/jn.1976.39.5.925. [DOI] [PubMed] [Google Scholar]

- 4.Evarts EV. Relation of pyramidal tract activity to force exerted during voluntary movement. Journal of Neurophysiology. 1968;31:14–27. doi: 10.1152/jn.1968.31.1.14. [DOI] [PubMed] [Google Scholar]

- 5.Evarts EV, Tanji J. Reflex and intended responses in motor cortex pyramidal tract neurons of monkey. J Neurophysiol. 1976;39(5):1069–80. doi: 10.1152/jn.1976.39.5.1069. [DOI] [PubMed] [Google Scholar]

- 6.Fetz EE, Finocchio DV, Baker MA, Soso MJ. Sensory and motor responses of precentral cortex cells during comparable passive and active joint movements. J Neurophysiol. 1980;43(4):1070–89. doi: 10.1152/jn.1980.43.4.1070. [DOI] [PubMed] [Google Scholar]

- 7.Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. 1982. pp. 1527–1537. [DOI] [PMC free article] [PubMed]

- 8.Ghez C, Gordon J, Ghilardi MF. Impairments of reaching movements in patients without proprioception. II. Effects of visual information on accuracy. J Neurophysiol. 1995;73(1):361–72. doi: 10.1152/jn.1995.73.1.361. [DOI] [PubMed] [Google Scholar]

- 9.Hatsopoulos NG, Donoghue JP. The Science of Neural Interface Systems. Annual Review of Neuroscience. 2009;32(1) doi: 10.1146/annurev.neuro.051508.135241. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442(7099):164–71. doi: 10.1038/nature04970. [DOI] [PubMed] [Google Scholar]

- 11.Kakei S, Hoffman DS, Strick PL. Muscle and Movement Representations in the Primary Motor Cortex. Science. 1999;285:2136–2139. doi: 10.1126/science.285.5436.2136. [DOI] [PubMed] [Google Scholar]

- 12.Kennedy PR, Bakay RA. Restoration of neural output from a paralyzed patient by a direct brain connection. Neuroreport. 1998;9(8):1707–11. doi: 10.1097/00001756-199806010-00007. [DOI] [PubMed] [Google Scholar]

- 13.Lotze M, Halsband U. Motor imagery. J Physiol Paris. 2006;99(46):386–95. doi: 10.1016/j.jphysparis.2006.03.012. [DOI] [PubMed] [Google Scholar]

- 14.Maynard EM, Hatsopoulos NG, Ojakangas CL, Acuna BD, Sanes JN, Normann RA, Donoghue JP. Neuronal interactions improve cortical population coding of movement direction. Journal of Neuroscience. 1999;19:8083–8093. doi: 10.1523/JNEUROSCI.19-18-08083.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Moran DW, Schwartz AB. Motor cortical representation of speed and direction during reaching. J Neurophysiol. 1999;82(5):2676–92. doi: 10.1152/jn.1999.82.5.2676. [DOI] [PubMed] [Google Scholar]

- 16.Morrow MM, Miller LE. Prediction of muscle activity by populations of sequentially recorded primary motor cortex neurons. J Neurophysiol. 2003;89(4):2279–88. doi: 10.1152/jn.00632.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Naito E, Roland PE, Ehrsson HH. I feel my hand moving: a new role of the primary motor cortex in somatic perception of limb movement. Neuron. 2002;36(5):979–88. doi: 10.1016/s0896-6273(02)00980-7. [DOI] [PubMed] [Google Scholar]

- 18.Paninski L, Fellows MR, Hatsopoulos NG, Donoghue JP. Spatiotemporal tuning of motor cortical neurons for hand position and velocity. Journal of Neurophysiology. 2004;91:515–532. doi: 10.1152/jn.00587.2002. [DOI] [PubMed] [Google Scholar]

- 19.Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol. 1995;74(1):457–63. doi: 10.1152/jn.1995.74.1.457. [DOI] [PubMed] [Google Scholar]

- 20.Roth M, Decety J, Raybaudi M, Massarelli R, Delon-Martin C, Segebarth C, Morand S, Gemignani A, Decorps M, Jeannerod M. Possible involvement of primary motor cortex in mentally simulated movement: a functional magnetic resonance imaging study. Neuroreport. 1996;7(7):1280–4. doi: 10.1097/00001756-199605170-00012. [DOI] [PubMed] [Google Scholar]

- 21.Sainburg RL, Ghilardi MF, Poizner H, Ghez C. Control of limb dynamics in normal subjects and patients without proprioception. J Neurophysiol. 1995;73(2):820–35. doi: 10.1152/jn.1995.73.2.820. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Scott SH. Apparatus for measuring and perturbing shoulder and elbow joint positions and torques during reaching. J Neurosci Methods. 1999;89(2):119–27. doi: 10.1016/s0165-0270(99)00053-9. [DOI] [PubMed] [Google Scholar]

- 23. Scott SH. The role of primary motor cortex in goal-directed movements: insights from neurophysiological studies on non-human primates. Curr Opin Neurobiol. 2003;13(6):671–7. doi: 10.1016/j.conb.2003.10.012.

- 24. Sober SJ, Sabes PN. Multisensory integration during motor planning. J Neurosci. 2003;23(18):6982–92. doi: 10.1523/JNEUROSCI.23-18-06982.2003.

- 25. Solodkin A, Hlustik P, Chen EE, Small SL. Fine modulation in network activation during motor execution and motor imagery. Cereb Cortex. 2004;14(11):1246–55. doi: 10.1093/cercor/bhh086.

- 26. Tkach D, Reimer J, Hatsopoulos NG. Congruent activity during action and action observation in motor cortex. J Neurosci. 2007;27(48):13241–50. doi: 10.1523/JNEUROSCI.2895-07.2007.

- 27. Tkach D, Reimer J, Hatsopoulos NG. Observation-based learning for brain-machine interfaces. Curr Opin Neurobiol. 2008. doi: 10.1016/j.conb.2008.09.016.

- 28. Truccolo W, Friehs GM, Donoghue JP, Hochberg LR. Primary motor cortex tuning to intended movement kinematics in humans with tetraplegia. J Neurosci. 2008;28(5):1163–78. doi: 10.1523/JNEUROSCI.4415-07.2008.

- 29. van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol. 1999;81(3):1355–64. doi: 10.1152/jn.1999.81.3.1355.

- 30. Wahnoun R, He J, Helms Tillery SI. Selection and parameterization of cortical neurons for neuroprosthetic control. J Neural Eng. 2006;3(2):162–71. doi: 10.1088/1741-2560/3/2/010.

- 31. Wolpert DM, Ghahramani Z. Computational principles of movement neuroscience. Nat Neurosci. 2000;3(Suppl):1212–7. doi: 10.1038/81497.