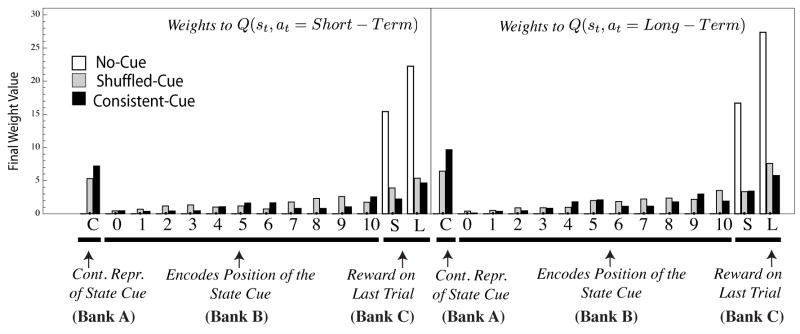

Figure 7.

The final setting of the learning weights in the Q-learning network model in Experiment 2. For each subject, in each condition, the model predicted the participant's choices on a trial-by-trial basis and the weights were updated. The weight settings following the last trial of the experiment were recorded and averaged within each condition. The horizontal axis of each panel displays the 14 input units to the model shown in Figure 5. The input labeled C represents the continuous representation of the state cue provided in the shuffled-cue and consistent-cue conditions. The inputs labeled 0–10 reflect the discrete encoding of the same cue. Finally, the inputs labeled S and L encode the magnitude of the reward signal on the previous trial as a result of selecting either the Short-term (S) or Long-term (L) action. The panels are divided in half, with the left side showing the weights from the input units to the output unit predicting the value of $Q(s_t, a_t = \text{Short-term})$ (i.e., the Short-term action) and the right side showing the weights to the $Q(s_t, a_t = \text{Long-term})$ (i.e., the Long-term action) unit.
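As a rough illustration of the input layout and the averaging step described in this caption, the following Python sketch builds a 14-element input vector and averages final weights across subjects within one condition. It is a minimal sketch under stated assumptions, not the model code used in the experiments: the function names, the scaling of the continuous cue to [0, 1], the one-hot form of the discrete encoding, the purely linear read-out without a bias term, and the data layout are all hypothetical.

```python
# Illustrative sketch only: names, scaling, and data layout are assumptions,
# not taken from the original model code.

INPUT_LABELS = ["C"] + [str(i) for i in range(11)] + ["S", "L"]  # 14 input units
ACTIONS = ["Short-term", "Long-term"]  # one output (Q-value) unit per action


def encode_inputs(cue, prev_action, prev_reward):
    """Build the 14-element input vector for one trial.

    cue: integer state cue in 0..10 (assumed range, matching labels 0-10).
    prev_action: "Short-term" or "Long-term", the action chosen on the previous trial.
    prev_reward: reward magnitude received on the previous trial.
    """
    continuous = [cue / 10.0]  # unit C; scaling to [0, 1] is an assumption
    discrete = [1.0 if i == cue else 0.0 for i in range(11)]  # units 0-10 (assumed one-hot)
    s_unit = prev_reward if prev_action == "Short-term" else 0.0  # unit S
    l_unit = prev_reward if prev_action == "Long-term" else 0.0   # unit L
    return continuous + discrete + [s_unit, l_unit]


def q_values(weights, inputs):
    """Linear read-out: weights[a][i] connects input i to the Q unit for action a."""
    return {a: sum(w * x for w, x in zip(weights[a], inputs)) for a in ACTIONS}


def average_final_weights(final_weights_by_subject):
    """Average each subject's final weights element-wise within one condition."""
    n = len(final_weights_by_subject)
    return {
        a: [sum(subj[a][i] for subj in final_weights_by_subject) / n
            for i in range(len(INPUT_LABELS))]
        for a in ACTIONS
    }
```

Under these assumptions, each panel of the figure would correspond to the output of `average_final_weights` for one condition, with the `"Short-term"` list plotted on the left half and the `"Long-term"` list on the right half.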