Abstract

Reports of qualitative studies typically do not offer much information on the numbers of respondents linked to any one finding. This information may be especially useful in reports of basic, or minimally interpretive, qualitative descriptive studies focused on surveying a range of experiences in a target domain, and its lack may limit the ability to synthesize the results of such studies with quantitative results in systematic reviews. Accordingly, the authors illustrate strategies for deriving plausible ranges for the number of respondents expressing a finding in a set of reports of basic qualitative descriptive studies on antiretroviral adherence and suggest how the results might be used. These strategies have limitations and are never appropriate for use with findings from interpretive qualitative studies. Yet they offer a temporary workaround for preserving and maximizing the value of information from basic qualitative descriptive studies for systematic reviews. They also show why quantitizing is never simply quantitative.

Keywords: mixed-methods research, qualitative, quantitizing, research synthesis

The ability to integrate qualitative and quantitative findings in systematic reviews of research sometimes depends on the extent to which qualitative findings can be converted to a form compatible with quantitative findings or the extent to which quantitative findings can be converted to a form compatible with qualitative findings. In the mixed-methods research literature, references are routinely made to what Teddlie and Tashakkori (2006, p. 17) called “conversion designs” that consist of analytic strategies in which qualitative and quantitative data are transformed to allow either statistical or textual treatment of both data sets (e.g., Greene, 2007; Onwuegbuzie & Teddlie, 2003). The direction of data conversion (qualitative → quantitative or quantitative → qualitative) depends on which direction better accommodates the research purpose and the nature of the data to be combined.

In the course of an ongoing study directed toward developing methods to synthesize qualitative and quantitative research findings, we were experimenting with ways to “quantitize” (Tashakkori & Teddlie, 1998, p. 126) a set of qualitative findings or to convert them into a form that would make them combinable with a set of quantitative findings on the same topic. This required knowing the number of respondents linked to any one finding, but the reports of the qualitative studies we reviewed offered little information on this number. Accordingly, we describe here practical ways we developed to derive this information from qualitative reports that may make their findings combinable with those in quantitative reports. We illustrate these strategies using a set of 11 reports of qualitative studies on antiretroviral adherence (marked with an asterisk in the reference list).

Differences in Sampling Imperatives

That reports of qualitative studies frequently do not allow readers to discern the number of participants linked to a finding is not a deficiency in qualitative reports per se but rather a reflection of the differences between the purposeful sampling and analytic imperatives associated with qualitative research and the probability sampling and analytic imperatives associated with quantitative research. Purposeful sampling is directed toward the selection of information-rich cases to draw “illustrative inferences” regarding “possibility” (Wood & Christy, 1999, p. 185). Illustrative inferences are drawn about what is possible, in contrast to statistical inferences drawn in quantitative research about the prevalence of specified possibilities. Validity in qualitative research depends on samples that are informationally representative and “deliberately biased . . . in favor of interesting possibilities” (Wood & Christy, p. 189), and sample sizes and compositions that support claims to informational redundancy, or theoretical, scene, or case saturation, and that enable idiographic, case-bound, or analytic generalizations (Onwuegbuzie & Leech, 2007; Sandelowski, 1995). The analytic imperative in qualitative research is to illuminate the complex particularities of the case; data analysis in qualitative studies is, therefore, ideally case oriented.

In contrast, probability sampling is directed toward the selection of statistically representative cases to draw statistical inferences regarding probability. Validity in quantitative research depends on samples of sufficient size and power to minimize bias, support the use of inferential statistics, and allow nomothetic or formal generalizations from samples to populations. The analytic imperative in quantitative research is to ascertain differences between specified groups on a selected and relatively small number of specified features; data analysis in quantitative studies is, therefore, typically variable oriented.

Sample size is also less relevant (albeit not irrelevant) as a means of determining whether something should be counted as a finding, or how important it is relative to other findings, because sampling in qualitative research is not necessarily of people per se but rather of any source of information about the target experience or event under study. There is no a priori size mandate (e.g., to have so many men and so many women or to have equal numbers of them) before a valid interpretation can be made. Numbers do not provide the “power” in purposeful sampling the way they do in probability sampling. Instead, the mandate is to show sufficient engagement with sources of information about the target phenomenon, for example, enough time spent in the field (which may include persons, documents, and artifacts) or enough frequency and duration of contact with participants via interviews and observations to support an interpretation.

Accordingly, it is often less informative to count the numbers of persons expressing a theme than to interpret the thematic lines themselves and to ensure that they are distinguished from each other. For example, in a report of a grounded theory study it is more pertinent to describe the connections among the temporal patterns of adherence (e.g., constant, episodic), the circumstances in which these patterns were observed (e.g., when disease symptoms were most and least pronounced, when medication side effects were most and least manageable), and the confluence of social factors characterizing the persons who showed these patterns (e.g., felt or enacted stigma, gender, race) than to enumerate the numbers of persons showing constant versus episodic adherence, more versus less disease symptomatology, and the like. Indeed, any one or a combination of temporal patterns, circumstances, and social factors may have been discerned in just one person. Although counting persons or themes, or persons in relation to themes, will have been necessary to achieve the interpretive goal of a study and to ensure that all data are accounted for (Sandelowski, 2001), emphasizing this count in reporting the study would distract from the main point. What would be central in such a report is to distinguish clearly between types of adherence and to relate each type to the others, to the circumstances of its occurrence, and to social factors. Even if a pattern or configuration of circumstances was discernible in only one person, it would still “count,” that is, it would still be reportable as a pattern or configuration. In contrast, statistical inference depends on the prevalence or frequency of observations that occur more than once. Indeed, one-time observations in quantitative research may be considered outliers and discarded.

Yet there is a genre of qualitative research—that is, the qualitative survey, or the basic, minimally interpretive qualitative descriptive study (Sandelowski & Barroso, 2007)—reports of which may acquire more value were they to contain information on the numbers of persons linked to a finding. The qualitative survey was the dominant type of qualitative study conducted in the domain of our review (antiretroviral adherence). The defining features of qualitative surveys are the (a) reduction of qualitative data in ways that remain data-near, or relatively close to the way information was given to the researcher; (b) nominal use of concepts to organize data; and (c) nominal use of quotations to illustrate them. Such surveys feature lists and inventories of topics or themes covered by research participants. The usual format of the survey is to name a topic or theme, define it, and then illustrate it with examples or quotations (Sandelowski & Barroso, 2007). As they are the least interpretive form of qualitative research, qualitative survey findings are countable, but what is often missing is the number of persons tied to any one item, or any hint that the order in which these items are presented is an indicator of their prevalence in the study sample. Yet their methodological similarity to quantitative surveys and descriptive studies makes them potentially amenable to quantitative transformation (Sandelowski, Barroso, & Voils, 2007). The remainder of this article is focused on how we sought to offset the lack of specific frequency counts of findings in reports of qualitative surveys.

Methods and Results

For the purpose of illustration here, we focused our efforts on factors related to medication regimens (as opposed to other factors, such as beliefs or social support) identified in the set of 11 reports of qualitative studies we reviewed as facilitating or hindering antiretroviral adherence. In systematic reviews, it is usually necessary to combine factors seen to be similar and, therefore, treatable as the same; otherwise, there would be few findings available to synthesize, whatever the method of synthesis. Such categorizations are credible to the extent that readers of systematic reviews (here, clinicians and researchers in the domain of antiretroviral adherence) see them as encompassing factors that could reasonably be seen as alike. Thus, we included in the category medication regimen any finding addressing dosing frequency, size of pills, timing of medications, availability of medication refills, medication side effects, ease or difficulty of incorporating pill-taking into daily routine, dietary requirements of drugs, and regimen changes.

We dichotomized medication regimen into more complex regimens favoring nonadherence and less complex regimens favoring adherence, as there was no factor linking more complex regimens with adherence or less complex regimens with nonadherence. Examples of more complex medication regimens included frequent dosing schedules, too many pills to take, larger or poor-tasting pills, difficulty fitting pill-taking into routine or nonroutine daily schedules, side effects, strict eating or dietary requirements, and frequent changes of medication regimens. Examples of less complex medication regimens were once- or twice-a-day dosing, smaller pills, simpler timing of medications, few or no side effects, and availability of medication refills. Table 1 shows the factors from the 11 qualitative reports reviewed that were included in the more complex and less complex regimen categories.

Table 1.

Medication Regimen Factors Affecting Adherence in 11 Reports of Qualitative Studies

Having created these regimen categories, we then sought to determine the number of respondents reporting that any factor related to more or less complex medication regimens hindered or facilitated adherence (with a view toward combining these numbers with results pertaining to medication regimen and its influence on adherence from quantitative observational studies in this domain). Although exact numbers are always preferable, the reports of the qualitative studies did not contain this information. Systematic reviews often require information that is not available in the published reports and must be requested from the authors, but such information frequently remains unavailable even after efforts to contact them. Reviewers must then decide whether they should exclude reports with missing information or whether they can work around it. We sought to work around it, as a prevailing criticism of systematic reviews is that they too often end up based on a minority of the eligible reports available, the majority having been excluded for a host of reasons (MacLure, 2005; Sandelowski, 2008). In this review, that would have meant excluding all reports of qualitative studies, which is contrary to the goals of conducting a mixed research synthesis and of preserving the information value of studies.

Verbal Counting Survey

Lacking specific information on the number of respondents linked to any one finding, we worked to use the information provided in each report to infer a range for the number of respondents expressing the finding. In 9 of the 11 reports of qualitative studies we reviewed, authors used “verbal counting” (Sandelowski, 2001, p. 236), for example, few, several, many, most, to convey the numbers of respondents linked to a finding (i.e., a topic raised, a theme discerned). Deriving the number of respondents directly from these terms is not straightforward as these words are not well-defined in common English language usage. Indeed, a quick perusal of any dictionary or thesaurus or synonym compendium will show that words such as many or several are often defined by other words such as most or few. The total sample size in a study may also influence the meaning of verbal counts, as when an author reports that “several” or “few” women expressed a finding in a study that included only five women. Five may be seen as already constituting “several” or “few.” Accordingly, the decision rule for the numerical conversion of words such as several or few must be accommodated to—that is, make sense in the context of—the total study N.

To ascertain what specific numbers or ranges of numbers researchers might have had in mind when using these terms, we conducted a brief online survey of graduate faculty on a listserv in a school of nursing. This survey was approved by an institutional review board. After responding “yes” to an e-mail message inviting them to participate, individual faculty were sent one of four surveys reflecting four sample sizes common in qualitative research: 5, 10, 20, and 50. For each of these sample sizes, participants were asked to provide a specific number or range of numbers they had in mind for each of the following seven words: couple, few, majority, many, most, several, and some. Forty-one participants completed surveys. After excluding surveys with missing or unusable information (e.g., answering that few meant “less than some” rather than giving a number, or stating that the numbers given depended on sample size even though the sample size was clearly indicated), 35 complete surveys remained: 8 for a sample size of 5, and 9 each for the sample sizes of 10, 20, and 50.

Participants provided one number, a range of numbers, or a percentage. Our analytic goal was to derive a plausible range represented by each word for each sample size. For each sample size, we constructed a range for each of the seven words using the smallest and largest numbers reported. To avoid the influence of outliers on these ranges, if the smallest or largest number for a word was reported by only one participant, we discarded that number and used the second-smallest or the second-largest reported value. Table 2 presents the ranges per word for each sample size derived from the survey results.
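The range rule can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the code used in the study: each answer is assumed to have been coded beforehand as a single count, a (low, high) tuple, or a percentage string such as "30%"; percentages are converted to counts of the stated sample size and rounded; "second-smallest" and "second-largest" are read as the next distinct reported value; and trimming is skipped when fewer than three distinct values were reported. The names `to_counts` and `plausible_range` and the sample answers are hypothetical.

```python
from collections import Counter
from typing import Sequence, Set, Tuple, Union

# One participant's coded answer: a single count, a (low, high) range,
# or a percentage string such as "30%" (assumed coding scheme).
Answer = Union[int, Tuple[int, int], str]


def to_counts(answer: Answer, n: int) -> Set[int]:
    """Return the set of numbers a single participant reported."""
    if isinstance(answer, str) and answer.endswith("%"):
        # Assumption: a percentage is converted to a count of n and rounded.
        return {round(float(answer[:-1]) / 100 * n)}
    if isinstance(answer, tuple):
        return set(answer)  # both ends of a stated range
    return {int(answer)}


def plausible_range(answers: Sequence[Answer], n: int) -> Tuple[int, int]:
    """Smallest and largest reported numbers, dropping a lone extreme."""
    # support[v] = number of participants who reported the value v
    support: Counter = Counter()
    for answer in answers:
        support.update(to_counts(answer, n))
    values = sorted(support)
    low, high = values[0], values[-1]
    if len(values) > 2:  # assumption: trim only when a middle value remains
        if support[low] == 1:
            low = values[1]  # next distinct value upward
        if support[high] == 1:
            high = values[-2]  # next distinct value downward
    return low, high


# Hypothetical answers for "few" at a sample size of 10.
answers = [(1, 3), 2, (2, 4), 3, "30%", (1, 4), 8]
print(plausible_range(answers, n=10))  # (1, 4): the lone "8" is dropped
```

Counting how many participants reported each value, rather than simply sorting all numbers, is what lets the sketch tell a lone extreme apart from one that several participants shared.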

Table 2.

Results From Verbal Counting Survey

| Word | Limit | N = 5 | N = 10 | N = 20 | N = 50 |
|---|---|---|---|---|---|
| Couple | Lower | 2 | 2 | 2 | 2 |
| Couple | Upper | 2 | 2 | 2 | 4 |
| Few | Lower | 2 | 1 | 2 | 1 |
| Few | Upper | 3 | 4 | 8 | 9 |
| Some | Lower | 2 | 2 | 2 | 1 |
| Some | Upper | 3 | 5 | 10 | 15 |
| Many | Lower | 3 | 4 | 7 | 11 |
| Many | Upper | 5 | 9 | 20 | 50 |
| Several | Lower | 2 | 2 | 3 | 3 |
| Several | Upper | 4 | 5 | 10 | 30 |
| Majority | Lower | 3 | 6 | 11 | 26 |
| Majority | Upper | 5 | 10 | 20 | 50 |
| Most | Lower | 4 | 6 | 11 | 26 |
| Most | Upper | 5 | 10 | 20 | 50 |

Note: N = sample size specified in the survey version.

Application of the Survey Results

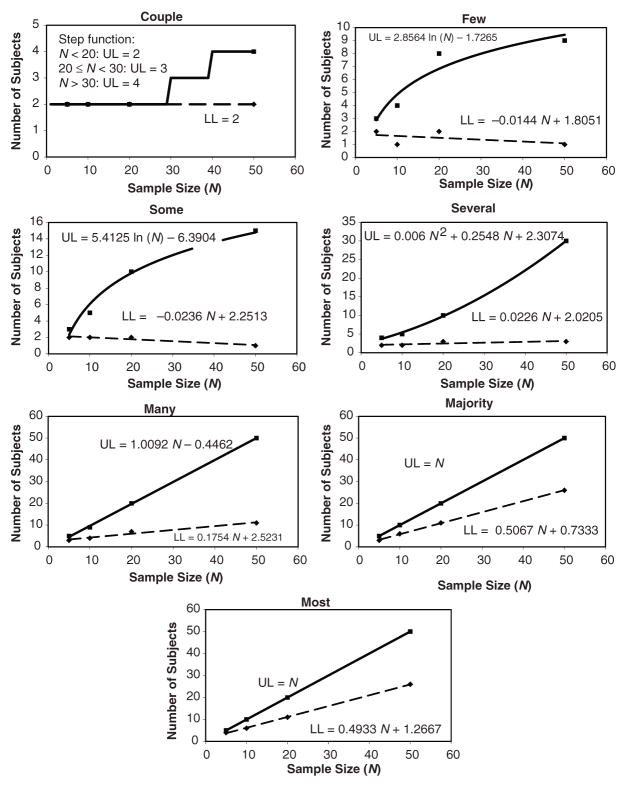

To apply the results of the survey to the actual sample sizes in the reports of the 11 qualitative studies we reviewed, we estimated the mathematical relationship between sample size and the number represented by each word. This was done by fitting a regression equation for the lower and upper limit of the range for each of the seven words. Linear regression was used when appropriate; step functions, polynomials, and logarithmic transformations were used otherwise. Equations were chosen that had good asymptotic properties (i.e., did not change direction or increase unrealistically quickly for larger sample sizes). The plots and regression equations are shown in Figure 1. Each of the seven plots corresponds to a single verbal counting word and shows the regression lines and equations for the upper and lower limits of the number of respondents indicated by that word, as a function of sample size.

Figure 1. Results of Verbal Counting Survey, With Fitted Regression Lines.

Note: The number of respondents expressing a finding for the desired sample size can be estimated using the regression equations given. The solid line indicates the upper limit, the dashed line the lower limit (UL = upper limit; LL = lower limit).
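A minimal sketch of the curve-fitting step, assuming ordinary least squares on either N or a transformed N (here a natural log). It fits only the "several" upper limits from Table 2 and compares a linear and a logarithmic fit by R²; the helper names are hypothetical, and the step functions and polynomials mentioned above are not shown. A straight line through these four points predicts about 17.5 at N = 30, not the upper limit of 15 the authors report for Gant and Welch (2004), so this sketch does not reproduce their equations, which appear only in Figure 1.

```python
import math
from typing import Callable, Sequence, Tuple

# Survey sample sizes and the "several" upper limits from Table 2.
SAMPLE_SIZES = [5, 10, 20, 50]
SEVERAL_UPPER = [4, 5, 10, 30]


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fit_limit(
    sizes: Sequence[int],
    limits: Sequence[int],
    transform: Callable[[float], float] = lambda n: n,
) -> Callable[[float], float]:
    """Fit limit = a + b * transform(N) and return it as a function of N."""
    a, b = fit_line([transform(n) for n in sizes], limits)
    return lambda n: a + b * transform(n)


def r_squared(model: Callable[[float], float], sizes, limits) -> float:
    """Share of the variance in the limits explained by the fitted curve."""
    mean = sum(limits) / len(limits)
    ss_tot = sum((y - mean) ** 2 for y in limits)
    ss_res = sum((y - model(n)) ** 2 for n, y in zip(sizes, limits))
    return 1 - ss_res / ss_tot


linear = fit_limit(SAMPLE_SIZES, SEVERAL_UPPER)
logarithmic = fit_limit(SAMPLE_SIZES, SEVERAL_UPPER, transform=math.log)
print(round(linear(30), 2))  # 17.48
print(r_squared(linear, SAMPLE_SIZES, SEVERAL_UPPER)
      > r_squared(logarithmic, SAMPLE_SIZES, SEVERAL_UPPER))  # True
```

R² alone says nothing about how a curve behaves beyond N = 50; the authors also weighed whether each equation kept rising realistically for larger samples, a judgment made by inspecting the curves rather than by a single statistic.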

When a verbal count was given in a research report, the regression equations were then used to estimate a plausible range of respondents for that finding. For example, Gant and Welch (2004) reported that “several” women described side effects as factors that weakened their medication adherence (categorized as an indicator of a more complex regimen). Inserting the sample size of this study (N = 30) into the “several” upper and lower limit regression equations shown in Figure 1, we derived 3 to 15 as a plausible range for this finding. In the same report, the authors noted that “some” people found that simpler timing of medications, the availability of medication refills, and voucher payments made it easy to adhere (categorized as indicators of a less complex regimen); the “some” equations yielded a range of 2 to 12 for this finding.
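Applying fitted equations to a report's sample size can be sketched as below. The equations passed in are hypothetical stand-ins, not the Figure 1 equations. Rounding half up, a floor of 1 respondent, and a ceiling of the study N are our assumptions; the ceiling reflects the point that a verbal count must make sense relative to the total study N.

```python
import math
from typing import Callable, Tuple

# A fitted limit, expressed as a function of the study sample size N.
Equation = Callable[[float], float]


def round_half_up(x: float) -> int:
    """Round to the nearest integer, with .5 rounded up (assumed convention)."""
    return math.floor(x + 0.5)


def range_for_report(lower_eq: Equation, upper_eq: Equation, n: int) -> Tuple[int, int]:
    """Plausible (lower, upper) number of respondents for a study of size n."""
    lower = max(1, round_half_up(lower_eq(n)))  # a reported finding has >= 1 respondent
    upper = min(n, round_half_up(upper_eq(n)))  # cannot exceed the study N
    return min(lower, upper), upper  # keep the range ordered


# Hypothetical equations for one verbal count, for illustration only.
def hypothetical_lower(n: float) -> float:
    return 1 + 0.1 * n


def hypothetical_upper(n: float) -> float:
    return 0.4 * n + 2


print(range_for_report(hypothetical_lower, hypothetical_upper, 30))  # (4, 14)
```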

Because we combined findings designated with different verbal counts into the categories more complex regimen and less complex regimen, we had to decide which word to use to represent each category. We calculated the lower limit for each word using its lower limit regression equation and then chose the word associated with the greater lower limit. For example, Misener and Sowell (1998) used many and some to report findings falling into the category of more complex regimen. Many yielded a lower limit of 6, whereas some yielded a lower limit of 2. We selected 6 as the more plausible lower limit because we were combining findings on multiple aspects of the regimen (i.e., 2 is too small to account both for some people reporting that side effects during pregnancy were particularly problematic and for many people reporting that the physical side effects they experienced made them stop taking antiretroviral drugs). Table 3 shows plausible ranges for all 11 reports derived from the regression models.
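The word-selection rule can be expressed as an argmax over the lower-limit equations. The coefficients below are invented so that the example mirrors the Misener and Sowell (1998) case (N = 22); they are not the authors' equations, and breaking ties in favor of the first listed word is our assumption.

```python
from typing import Callable, Dict, Sequence, Tuple

Equation = Callable[[float], float]


def dominant_word(
    words: Sequence[str], lower_eqs: Dict[str, Equation], n: int
) -> Tuple[str, float]:
    """Return the verbal count with the largest lower limit at sample size n."""
    # max() keeps the first word listed when two lower limits tie.
    best = max(words, key=lambda w: lower_eqs[w](n))
    return best, lower_eqs[best](n)


# Hypothetical lower-limit equations (not the Figure 1 equations).
def many_lower(n: float) -> float:
    return 0.25 * n + 0.5


def some_lower(n: float) -> float:
    return 2.0


LOWER_LIMITS = {"many": many_lower, "some": some_lower}

# A report using "many" and "some" for one category, N = 22.
print(dominant_word(["many", "some"], LOWER_LIMITS, 22))  # ('many', 6.0)
```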

Table 3.

Plausible Ranges of Findings Derived From 11 Reports of Qualitative Studies

| Report | Total Study N | Pattern(s) of Reporting Used to Calculate Frequencies | Calculated Range of Findings for Nonadherence/More Complex Regimen | Calculated Range of Findings for Adherence/Less Complex Regimen |
|---|---|---|---|---|
| Abel and Painter (2003) | 6 | L, Q | 2–6 | 1–6 |
| Gant and Welch (2004) | 30 | V | 3–15 | 2–12 |
| Misener and Sowell (1998) | 22 | V | 6–22 | 3–11 |
| Powell-Cope et al. (2003)ᵃ | 24 | V, L | 13–24 | 1–24 |
| Remien et al. (2003) | 110 | V | 56–110 | 5–103 |
| Richter et al. (2002) | 33 | Q, V, Q | 2–33 | 2–13 |
| Roberts and Mann (2000) | 20 | V | 15–20 | NF |
| Schrimshaw et al. (2005) | 158 | V | 100–142 | 16–79 |
| Siegel and Gorey (1997) | 71 | V | 15–71 | NF |
| Siegel et al. (2001) | 51 | V | 11–51 | NF |
| Wood et al. (2004) | 36 | L | 2–36 | NF |

Note: L = lists; NF = no finding; Q = quotations; V = verbal counting.

ᵃ Finding extracted from the qualitative component of a mixed-methods study.

Patterns of Reporting Findings Other Than Verbal Counting

Verbal counting was the object of study in our survey because it was the dominant mode of presenting findings, appearing in 9 of the 11 reports of qualitative studies. Other patterns included the use of quotations and unenumerated lists. Researchers also used more than one pattern. For example, Schrimshaw et al. (2005) reported that the “majority” of women reported too many pills, bad-tasting pills, or large pills as a reason for nonadherence (indicating a more complex regimen), followed by supporting quotations.

Whenever verbal counting was used, whether it was the only pattern of reporting or used with another pattern, we considered it as the dominant pattern and calculated the range using the regression equations. In reports in which there was no verbal counting, and quotations were the only information source for deriving a frequency count, we first sought to determine whether each quotation was drawn from different respondents and, only if that was clearly the case, used the number of such quotations per finding to represent the lower limit of the number of respondents expressing the finding. In the report by Richter et al. (2002), for example, it was clear that the quotations came from different respondents whenever the authors indicated that one woman said something and a second woman agreed. The upper limit in this case could have been anything between the number of quotes +1 and the total sample size of 33. To be confident that our range captured the true count, we used the sample size of 33 as the upper limit.

Another secondary pattern of reporting was the list, whereby findings were simply listed without any verbal counts or quotations and without any indication that the order in which findings were listed was meant to communicate prevalence. When such lists were used, we assumed that each finding was expressed by at least one respondent. For example, Powell-Cope et al. (2003) found that once- or twice-a-day dosing favored taking antiretroviral medication (an indicator of a less complex regimen). Because at least one respondent must have described this factor, we conservatively used 1 to represent the lower limit and the total sample size of 24 to represent the upper limit. A range this wide conveys little about how often respondents expressed a finding, but it accurately reflects how little information the report itself provides.
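The two nonverbal patterns reduce to simple rules, sketched here under assumptions: each quotation is tagged with a speaker label only when the report makes clear which respondent it came from (None otherwise), and a finding supported only by unattributable quotations falls back to the list rule's lower limit of 1. Function names and labels are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def range_from_quotations(speakers: Sequence[Optional[str]], n: int) -> Tuple[int, int]:
    """Range when quotations are the only source (no verbal count).

    speakers has one entry per quotation: a label for a respondent the report
    clearly distinguishes, or None when attribution is unclear.
    """
    distinct = {s for s in speakers if s is not None}
    # Lower limit: clearly different quoted respondents (at least 1).
    # Upper limit: the whole sample, so the true count is surely covered.
    return max(1, len(distinct)), n


def range_from_list(n: int) -> Tuple[int, int]:
    """Unenumerated list item: at least one respondent, at most the sample."""
    return 1, n


print(range_from_quotations(["first woman", "second woman"], n=33))  # (2, 33)
print(range_from_list(n=24))  # (1, 24)
```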

In studies where verbal counts were available, the regression equations were used to calculate the ranges, and any quotations or lists were used to further refine the calculated ranges. For example, for the Richter et al. (2002) report, the regression equation yielded a lower limit of 1, which was deemed too conservative because the authors presented two quotations from two obviously different respondents. We therefore used 2 instead of 1 as the lower limit in this report.
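Refining a regression-based range with quotation evidence amounts to raising the lower limit, as in this minimal sketch. The text does not say what was done when quoted respondents outnumbered the regression upper limit, so raising the upper limit to match (capped at N) is our assumption; the function name is hypothetical.

```python
from typing import Tuple


def refine_with_quotations(
    verbal_range: Tuple[int, int], distinct_quoted: int, n: int
) -> Tuple[int, int]:
    """Raise a regression-based lower limit to the number of clearly
    different respondents quoted for the same finding."""
    lower, upper = verbal_range
    lower = max(lower, distinct_quoted)
    # Assumption: keep the range ordered and within the sample.
    upper = min(n, max(upper, lower))
    return lower, upper


# Regression gave 1 to 13; two clearly different respondents were quoted.
print(refine_with_quotations((1, 13), distinct_quoted=2, n=33))  # (2, 13)
```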

Discussion

As illustrated, these strategies can be used to generate a plausible range for the number of respondents expressing a given finding in a report of a qualitative study that contains no specific numbers (and about which no additional information is available from the authors). The lower and upper limits of this range can each be divided by the sample size to estimate a range for the frequency effect size, or the proportion of respondents expressing the finding (Onwuegbuzie, 2003). For example, for the relationship between nonadherence and more complex regimen in Abel and Painter (2003), the lower proportion would be 33.3% (2/6), and the upper would be 100% (6/6). It is more desirable to obtain a single estimate of the proportion of respondents expressing the finding than a range. This can be accomplished with likelihood-based methods, in which maximum likelihood or Bayesian estimation is used to estimate the proportion of participants expressing the finding. Although the focus here was only on the relationship between a single factor, medication regimen complexity, and adherence, if several factors were examined simultaneously within a study, the proportions could be used to rank the relative importance of each barrier to or facilitator of adherence. A further benefit of this quantitizing effort is that the resulting proportions could also be combined with proportions calculated from quantitative research findings to yield a pooled effect size, in either frequentist or Bayesian meta-analysis. We used this method to synthesize qualitative and quantitative research findings concerning the relationship between regimen complexity and adherence using Bayesian meta-analysis (Voils et al., 2009).
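The conversion to proportions, and one way a likelihood could be set up for ranges rather than exact counts, are sketched below. Assumptions: counts are independent binomial draws sharing a single proportion across studies (a fixed-effect simplification), the estimate is found by a coarse grid search, and ordinary floating-point arithmetic is adequate for samples of the size in Table 3 (much larger N would call for log-space sums). This is not the Bayesian meta-analysis reported in Voils et al. (2009), and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

# One finding in one report: (lower limit, upper limit, study N).
Study = Tuple[int, int, int]


def proportion_range(lower: int, upper: int, n: int) -> Tuple[float, float]:
    """Divide both limits by the sample size."""
    return lower / n, upper / n


def interval_log_likelihood(p: float, studies: Sequence[Study]) -> float:
    """Log-likelihood of p when each count is known only to lie in [lower, upper]."""
    total = 0.0
    for lower, upper, n in studies:
        # Binomial probability that the count falls anywhere in the range.
        prob = sum(
            math.comb(n, k) * p**k * (1 - p) ** (n - k)
            for k in range(lower, upper + 1)
        )
        if prob <= 0.0:
            return -math.inf
        total += math.log(prob)
    return total


def pooled_mle(studies: Sequence[Study], steps: int = 100) -> float:
    """Grid-search maximum likelihood estimate of a common proportion."""
    grid = [i / steps for i in range(1, steps)]  # excludes 0 and 1
    return max(grid, key=lambda p: interval_log_likelihood(p, studies))


# Abel and Painter (2003), nonadherence/more complex regimen: about (0.333, 1.0)
print(proportion_range(2, 6, 6))
# Nonadherence ranges for Gant and Welch, Misener and Sowell, Remien et al. (Table 3)
print(pooled_mle([(3, 15, 30), (6, 22, 22), (56, 110, 110)]))
```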

These strategies also offer another way to quantitatively synthesize the findings from descriptive qualitative studies alone. In contrast to the metasummary method Sandelowski and Barroso (2007) described, which is a quantitative method of synthesizing qualitative survey findings at the study level (i.e., based on frequency counts of individual findings across studies, regardless of how many participants showed those findings in any one study), the strategies proposed here constitute a quantitative method for synthesizing such findings at the level of the participant (i.e., based on sample size). These strategies may add a bit more numerical precision in systematic review projects where researchers deem this to be desirable.

The strategies we describe here are bound to generate controversy, if not outright antipathy, as they challenge deeply held beliefs about the value and even appropriateness of quantitizing qualitative data, the requirements for drawing inferences in quantitative research, and the relationship between qualitative and quantitative data. There is no question that the calculated ranges are imprecise compared with the exact counts given in quantitative reports. Moreover, the range of quantitative statistical analyses that could be conducted with such proportions is limited. The 2 × 2 contingency table, which is the basis of many forms of quantitative analyses, cannot be created because information will likely be missing in qualitative reports for 2 of the 4 cells. Specifically, the qualitative reports reviewed do not contain information on how many participants indicated that a less complex regimen was associated with nonadherence or, conversely, how many indicated that a more complex regimen was associated with adherence. Researchers might, therefore, assume that these values are zero. Yet it is possible that at least one person had that particular experience but did not mention it. Without information for each cell, inferential statistics, such as chi-square or odds ratios, cannot be calculated.
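The 2 × 2 problem can be made concrete with a small odds-ratio function; the cell counts in the example calls are hypothetical. The second call shows why filling the two unreported cells with zeros does not rescue the analysis: the odds ratio becomes infinite.

```python
import math
from typing import Optional


def odds_ratio(
    a: Optional[int], b: Optional[int], c: Optional[int], d: Optional[int]
) -> Optional[float]:
    """Odds ratio for a 2 x 2 table of regimen complexity by adherence.

    a = more complex & nonadherent    b = more complex & adherent
    c = less complex & nonadherent    d = less complex & adherent
    """
    if None in (a, b, c, d):
        return None  # an unreported cell: the ratio cannot be formed
    if b * c == 0:
        # A zero in the denominator: infinite if a*d > 0, undefined otherwise.
        return math.inf if a * d > 0 else None
    return (a * d) / (b * c)


print(odds_ratio(6, None, None, 3))  # None: b and c are not reported
print(odds_ratio(6, 0, 0, 3))        # inf: assuming zeros gives no usable estimate
print(odds_ratio(4, 2, 1, 3))        # 6.0 when all four cells are known
```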

Another limitation of the method proposed here is the small sample on which calculations were based: 8 to 9 respondents for each of the four sample sizes studied. Even with this limitation, however, several trends were discernible in Table 2, including the apparent consensus on the numerical meaning of couple (i.e., 2) and on lower limits for majority and most that exceed 50% of the sample. Respondents also consistently assigned larger numbers to many than to several. Researchers may want to repeat such a study with a larger sample and more sample size categories to see whether these trends hold and others become discernible.

Despite these limitations, the strategies we describe here are practical ways to offset the limited information on frequency counts in reports of minimally interpretive qualitative descriptive studies, when researchers are unable to obtain this information from authors and when the syntheses of qualitative and quantitative findings they want to conduct call for more numerical precision at the participant level. These strategies offer researchers conducting systematic reviews another potentially workable option for combining qualitative findings with each other or with quantitative findings. They also signal the need for authors of reports of minimally interpretive qualitative studies to include frequency counts in their reports in lieu of verbal counts and unenumerated lists. Such information can be presented in a table, which lets authors avoid the inelegant 2-women-said-this and 10-women-said-that style of reporting. Indeed, with such a table in place, authors could still use words such as few and most in the text, because readers would know what the authors meant by them. Accordingly, we see these strategies as constituting a temporary workaround, designed to be used with judgment and great caution, until such time as the reporting of qualitative surveys makes it no longer necessary. We also see them as one more way to enhance the information yield of such studies.

The strategies we illustrate here are wholly inappropriate for findings from fully interpretive qualitative (e.g., grounded theory, phenomenologic, narrative, ethnographic) studies as they do not lend themselves to such quantitative conversion, do not fit the reporting imperatives of such findings, and constitute misguided attempts to count the uncountable. Yet these strategies show how much interpretive work goes into quantitative conversion, making less distinct the line between quantitizing and qualitizing (Voils et al., 2009). The “interpretive gesture” is always present in quantitizing of any kind (Love, Pritchard, Maguire, McCarthy, & Paddock, 2005, p. 283), which is why quantitizing is never simply quantitative.

Acknowledgments

The method study referred to here, “Integrating Qualitative & Quantitative Research Findings,” is funded by the National Institute of Nursing Research, National Institutes of Health (5R01NR004907). This material is the result of work also supported with resources and facilities at the Veterans Affairs Medical Center in Durham, North Carolina. Views expressed in this article are those of the authors and do not necessarily represent the Department of Veterans Affairs.

Footnotes

For reprints and permissions queries, please visit SAGE’s Web site at http://www.sagepub.com/journalsPermissions.nav.

Contributor Information

YunKyung Chang, University of North Carolina at Chapel Hill School of Nursing.

Corrine I. Voils, Durham Veterans Affairs Medical Center; Duke University Medical Center.

Margarete Sandelowski, University of North Carolina at Chapel Hill School of Nursing.

Vic Hasselblad, Duke University Medical Center.

Jamie L. Crandell, University of North Carolina at Chapel Hill School of Nursing.

References

- *Abel E, Painter L. Factors that influence adherence to HIV medications: Perceptions of women and health care providers. Journal of the Association of Nurses in AIDS Care. 2003;14:61–69. doi: 10.1177/1055329003252879.

- *Gant LM, Welch LA. Voices less heard: HIV-positive African American women, medication adherence, sexual abuse, and self-care. Journal of HIV/AIDS and Social Services. 2004;3:67–91.

- Greene JC. Mixed methods in social inquiry. San Francisco: Jossey-Bass; 2007.

- Love K, Pritchard C, Maguire K, McCarthy A, Paddock P. Qualitative and quantitative approaches to health impact assessment: An analysis of the political and philosophical milieu of the multi-method approach. Critical Public Health. 2005;15:275–289.

- MacLure M. “Clarity bordering on stupidity”: Where’s the quality in systematic review? Journal of Education Policy. 2005;20:393–416.

- *Misener TR, Sowell RL. HIV infected women’s decisions to take antiretrovirals. Western Journal of Nursing Research. 1998;20:431–447. doi: 10.1177/019394599802000403.

- Onwuegbuzie AJ. Effect sizes in qualitative research: A prolegomenon. Quality & Quantity. 2003;37:393–409.

- Onwuegbuzie AJ, Leech NL. A call for qualitative power analyses. Quality & Quantity. 2007;41:105–121.

- Onwuegbuzie AJ, Teddlie C. A framework for analyzing data in mixed methods research. In: Tashakkori A, Teddlie C, editors. Handbook of mixed methods in social and behavioral research. Thousand Oaks, CA: Sage; 2003. pp. 351–383.

- *Powell-Cope GM, White J, Henkelman EJ, Turner BJ. Qualitative and quantitative assessments of HAART adherence of substance-abusing women. AIDS Care. 2003;15:239–249. doi: 10.1080/0954012031000068380.

- *Remien RH, Hirky AE, Johnson MO, Weinhardt LS, Whittier D, Le GM. Adherence to medication treatment: A qualitative study of facilitators and barriers among a diverse sample of HIV+ men and women in four U.S. cities. AIDS and Behavior. 2003;7:61–72. doi: 10.1023/a:1022513507669.

- *Richter DL, Sowell RL, Pluto DM. Attitudes toward antiretroviral therapy among African American women. American Journal of Health Behavior. 2002;26:25–33. doi: 10.5993/ajhb.26.1.3.

- *Roberts KJ, Mann T. Barriers to antiretroviral medication adherence in HIV-infected women. AIDS Care. 2000;12:377–386. doi: 10.1080/09540120050123774.

- Sandelowski M. Sample size in qualitative research. Research in Nursing & Health. 1995;18:179–183. doi: 10.1002/nur.4770180211.

- Sandelowski M. Real qualitative researchers do not count: The use of numbers in qualitative research. Research in Nursing & Health. 2001;24:230–240. doi: 10.1002/nur.1025.

- Sandelowski M. Reading, writing and systematic review. Journal of Advanced Nursing. 2008;64:104–110. doi: 10.1111/j.1365-2648.2008.04813.x.

- Sandelowski M, Barroso J. Handbook for synthesizing qualitative research. New York: Springer; 2007.

- Sandelowski M, Barroso J, Voils CI. Using qualitative metasummary to synthesize qualitative and quantitative descriptive findings. Research in Nursing & Health. 2007;30:99–111. doi: 10.1002/nur.20176.

- *Schrimshaw EW, Siegel K, Lekas HM. Changes in attitudes toward antiviral medication: A comparison of women living with HIV/AIDS in the pre-HAART and HAART eras. AIDS and Behavior. 2005;9:267–279. doi: 10.1007/s10461-005-9001-6.

- *Siegel K, Gorey E. HIV-infected women: Barriers to AZT use. Social Science & Medicine. 1997;45:15–22. doi: 10.1016/s0277-9536(96)00303-6.

- *Siegel K, Lekas HM, Schrimshaw EW, Johnson JK. Factors associated with HIV-infected women’s use or intention to use AZT during pregnancy. AIDS Education and Prevention. 2001;13:189–206. doi: 10.1521/aeap.13.3.189.19747.

- Tashakkori A, Teddlie C. Mixed methodology: Combining qualitative and quantitative approaches. Thousand Oaks, CA: Sage; 1998.

- Teddlie C, Tashakkori A. A general typology of research designs featuring mixed methods. Research in the Schools. 2006;13:12–28.

- Voils CI, Hasselblad V, Crandell J, Chang Y, Lee E, Sandelowski M. A Bayesian method for the synthesis of evidence from qualitative and quantitative reports: An example from the literature on antiretroviral medication adherence. Manuscript submitted for publication; 2009. doi: 10.1258/jhsrp.2009.008186.

- Wood M, Christy R. Sampling for possibilities. Quality & Quantity. 1999;33:185–202.

- *Wood SA, Tobias C, McCree J. Medication adherence for HIV-positive women caring for children: In their own words. AIDS Care. 2004;16:909–913. doi: 10.1080/09540120412331290158.