Abstract

Visual short-term memory (VSTM) has received intensive study over the past decade, with research focused on VSTM capacity and representational format. Yet, the function of VSTM in human cognition is not well understood. Here we demonstrate that VSTM plays an important role in the control of saccadic eye movements. Intelligent human behavior depends on directing the eyes to goal-relevant objects in the world, yet saccades are very often inaccurate and require correction. We hypothesized that VSTM is used to remember the features of the current saccade target so that it can be rapidly reacquired after an errant saccade, a fundamental task faced by the visual system thousands of times each day. In four experiments, memory-based gaze correction was found to be accurate, fast, automatic, and largely unconscious. In addition, a concurrent VSTM load was found to interfere with memory-based gaze correction, but a verbal short-term memory load did not. These findings demonstrate that VSTM plays a direct role in a fundamentally important aspect of visually guided behavior, and they suggest the existence of previously unknown links between VSTM representations and the oculomotor system.

Human vision is active and selective. In the course of viewing a natural scene, the eyes are reoriented approximately three times each second to bring the projection of individual objects onto the high-resolution, foveal region of the retina (for reviews, see Henderson & Hollingworth, 1998; Rayner, 1998). Periods of eye fixation, during which visual information is acquired, are separated by brief saccadic eye movements, during which vision is suppressed and we are virtually blind (Matin, 1974). The input for vision is therefore divided into a series of discrete episodes. To span the perceptual gap between individual fixations, a transsaccadic memory for the visual properties of the scene must be maintained across each eye movement.

Transsaccadic Memory and Visual Short-Term Memory (VSTM)

Early theories proposed that transsaccadic memory integrates low-level sensory representations (i.e., iconic memory) across saccades to construct a global image of the external world (McConkie & Rayner, 1976). However, a large body of research demonstrates conclusively that this is false; participants cannot integrate sensory information presented on separate fixations (Irwin, Yantis, & Jonides, 1983; O'Regan & Lévy-Schoen, 1983; Rayner & Pollatsek, 1983). Recent work using naturalistic scene stimuli has arrived at a similar conclusion. Relatively large changes to a natural scene can go undetected if the change occurs during a saccadic eye movement or other visual disruption (Grimes, 1996; Henderson & Hollingworth, 1999, 2003b; Rensink, O'Regan, & Clark, 1997; Simons & Levin, 1998), an effect that has been termed change blindness. For example, Henderson and Hollingworth (2003b) had participants view scene images that were partially occluded by a set of vertical gray bars (as if viewing the scene from behind a picket fence). During eye movements, the bars were shifted so that the occluded portions of the scene became visible and the visible portions became occluded. Despite the fact that every pixel in the image changed, subjects were almost entirely insensitive to these changes, demonstrating that low-level sensory information is not preserved from one fixation to the next.

Although transsaccadic memory does not support low-level sensory integration, visual representations are nonetheless retained across eye movements. In transsaccadic object identification studies, participants are faster to identify an object when a preview of that object has been available in the periphery before the saccade (Henderson, Pollatsek, & Rayner, 1987), and this benefit is reduced when the object undergoes a visual change during the saccade, such as substitution of one object with another from the same basic-level category (Henderson & Siefert, 2001; Pollatsek, Rayner, & Collins, 1984) and mirror reflection (Henderson & Siefert, 1999). In addition, object priming across saccades is governed primarily by visual similarity rather than by conceptual similarity (Pollatsek et al., 1984). Finally, structural descriptions of simple visual stimuli can be retained across eye movements (Carlson-Radvansky, 1999; Carlson-Radvansky & Irwin, 1995). Thus, memory across saccades appears to be limited to higher level visual codes, abstracted away from precise sensory representation but detailed enough to specify individual object tokens and viewpoint.

Multiple lines of converging evidence indicate that visual memory across saccades depends on the VSTM system originally identified by Phillips (1974) and investigated extensively over the last decade (see Luck, in press, for a review).1 On all dimensions tested, transsaccadic memory exhibits properties similar to those found for VSTM. Both VSTM and transsaccadic memory have a capacity of 3–4 objects (Irwin, 1992a; Luck & Vogel, 1997; Pashler, 1988) and lower spatial precision than sensory memory (Irwin, 1991; Phillips, 1974). Both VSTM and transsaccadic memory maintain object-based representations, with capacity determined primarily by the number of objects to remember and not by the number of visual features to remember (Irwin & Andrews, 1996; Luck & Vogel, 1997). Finally, both VSTM and transsaccadic memory are sensitive to higher-order pattern structure and grouping (Hollingworth, Hyun, & Zhang, 2005; Irwin, 1991; Jiang, Olson, & Chun, 2000). The logical inference is that VSTM is the memory system comprising transsaccadic memory.

A final strand of evidence about VSTM and transsaccadic memory is particularly germane to the present study. Before a saccade, visual attention is automatically and exclusively directed to the target of that saccade (Deubel & Schneider, 1996; Hoffman & Subramaniam, 1995). Attention also supports the selection of perceptual objects for consolidation into VSTM (Averbach & Coriell, 1961; Hollingworth & Henderson, 2002; Irwin, 1992a; Schmidt, Vogel, Woodman, & Luck, 2002; Sperling, 1960). Thus, the saccade target object is preferentially encoded into VSTM and stored across the saccade. Indeed, memory performance is higher for objects at or near the target position of an impending or just-completed saccade (Henderson & Hollingworth, 1999, 2003a; Irwin, 1992a; Irwin & Gordon, 1998).

The Function of VSTM across Saccades

Although VSTM can be used to store object information across saccades, it is not exactly clear what purpose that storage serves. More generally, the functional role of VSTM in real-world cognition is not well understood. VSTM research has typically investigated the capacity (Alvarez & Cavanagh, 2004; Luck & Vogel, 1997) and representational format (Hollingworth et al., 2005; Irwin & Andrews, 1996; Jiang et al., 2000; Luck & Vogel, 1997; Phillips, 1974) of VSTM, with the question of VSTM function relatively neglected.

One plausible function for VSTM is to establish correspondence between objects visible on separate fixations. With each saccade, the retinal positions of objects change, generating a correspondence problem: How does the visual system determine that an object projecting to one retinal location on fixation N is the same object as the one projecting to a different retinal location on fixation N + 1? This is a fundamental problem the visual system must solve. Researchers have proposed that transsaccadic VSTM may be used to compute object correspondence across saccades. Memory for the visual properties of a few objects—particularly the saccade target—is stored across the saccade and compared with visual information available after the saccade to determine which post-saccade objects correspond with the remembered pre-saccade objects (Currie, McConkie, Carlson-Radvansky, & Irwin, 2000; Henderson & Hollingworth, 1999, 2003a; Irwin, McConkie, Carlson-Radvansky, & Currie, 1994; McConkie & Currie, 1996).

The issue of object correspondence across saccades has often been framed as a problem of visual stability: How do we consciously perceive the world to be stable when the image projected to the retina shifts with each saccade? Irwin, McConkie, and colleagues (Currie et al., 2000; Irwin et al., 1994; McConkie & Currie, 1996; see also Deubel & Schneider, 1994) proposed a saccade target theory to explain visual stability. In this view, an object is selected as the saccade target before each saccade. Perceptual features of the target are encoded into VSTM and maintained across the saccade. After completion of the saccade, the visual system searches for an object that matches the target information in VSTM, with search limited to a spatial region around the landing position. If the saccade target is located within the search region, the experience of visual stability is maintained. If the saccade target is not found, the observer becomes consciously aware of a discrepancy between pre- and post-saccade visual experience. Saccade target theory was proposed to account for the phenomenology of visual stability, but the evidence reviewed above demonstrating preferential encoding of the saccade target into VSTM raises the possibility that VSTM supports the more general function of establishing correspondence between the saccade target object visible before and after the saccade. Such correspondence might produce the experience of visual stability, but that need not be its only purpose. We will argue that an important function of VSTM across saccades is to support the correction of gaze when the eyes fail to land on the target of a saccade. Such gaze corrections are required thousands of times each day. A VSTM representation of the saccade target allows the target to be found after an inaccurate eye movement, and a corrective saccade can be generated to that object.

Gaze Correction and VSTM

Real-world tasks require orienting the eyes to goal-relevant objects in the world (Hayhoe, 2000; Henderson & Hollingworth, 1998; Land & Hayhoe, 2001; Land, Mennie, & Rusted, 1999), but eye movements are prone to error, with the eyes often failing to land on the target of the saccade. Even under highly simplified conditions in which participants generate saccades to single targets on blank backgrounds (Frost & Pöppel, 1976; Kapoula, 1985), saccade errors are very common, occurring on at least 30–40% of trials (for a review, see Becker, 1991). Saccade errors presumably occur thousands of times each day as people go through their normal activities. During natural scene viewing, inter-object saccades occur about once per second, on average. Thus, in a 16-hour day, assuming that 30–40% of saccades fail to land on the target object, gaze correction could be required as many as 17,000 times. When the eyes fail to land on the saccade target object, gaze must be corrected to bring that object to the fovea. Accurate, rapid gaze corrections are therefore critical to ensure that the eyes are efficiently directed to goal-relevant objects.
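The daily estimate above follows from simple arithmetic, which the following sketch makes explicit. The inputs (one inter-object saccade per second, a 16-hour waking day, and an error rate of 30–40%) are taken from the text; the function name and its parameters are illustrative only. Under these assumptions, the 30% rate yields 17,280 corrections per day, and the 40% rate would yield 23,040.

```python
# Back-of-envelope estimate of how often gaze correction is needed per day.
# Inputs come from the text; the helper itself is only an illustration.

def daily_corrections(hours_awake, saccades_per_second, error_percent):
    """Expected number of errant saccades in one waking day."""
    total_saccades = hours_awake * 3600 * saccades_per_second
    return total_saccades * error_percent / 100

total_per_day = 16 * 3600 * 1.0          # inter-object saccades in a 16-hour day
low = daily_corrections(16, 1.0, 30)     # 30% of saccades miss the target
high = daily_corrections(16, 1.0, 40)    # 40% of saccades miss the target

print(f"saccades per day: {total_per_day:.0f}")
print(f"corrections per day: {low:.0f} to {high:.0f}")
```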

Unlike other motor actions, such as visually guided reaching, saccadic eye movements are ballistic; the short duration of the saccade and visual suppression prevent any correction based on visual input during the eye movement itself. Thus, correction of gaze must depend on perceptual information available after the eyes have landed and target information retained in memory across the saccade. When the eyes miss the target object, there is typically a short fixation followed by a corrective saccade to the target (Becker, 1972; Deubel, Wolf, & Hauske, 1982). The duration of the fixation before the corrective saccade (correction latency) varies inversely with correction distance, with small corrections (< 2°) requiring 150–200 ms to initiate and longer corrections requiring shorter latencies (Becker, 1972; Deubel et al., 1982; Kapoula & Robinson, 1986). Previous research on corrective saccades has used single targets displayed in isolation. When the eyes miss the target in these experiments, finding the target is trivial, because there is only one visible object near the saccade landing position. The complexity of real-world environments makes this problem much more interesting and challenging. If the eyes miss the saccade target, there will likely be multiple objects near fixation. For example, if a saccade to a phone on a cluttered desk misses the phone, other objects (e.g., pen, scissors, notepad) will lie near the landing position of the eye movement. To correct gaze to the appropriate object (and efficiently execute a phone call), correspondence must be established between the remembered pre-saccade target object and visual object information available after the saccade.

There are two ways in which pre-saccade information could be used to correct gaze after an errant saccade. First, the general goals that led to the initial saccade could be used to find the target. In the example of making a saccade to a telephone, the general goal of making a telephone call or the specific goal of looking at the telephone might allow an observer to re-acquire the original target (or another object that is worth fixating). A second possibility is that the specific visual details of the target, which may have been irrelevant to the observer’s goal, could be stored in VSTM and used to re-acquire the target. For example, although the color of the telephone might be irrelevant to the observer’s task, this information may be automatically stored in VSTM prior to the saccade and used to re-acquire the telephone if the eyes fail to land on it. It is certainly possible that both of these mechanisms operate in natural vision, but memory for the exact features of the target may allow a more rapid re-acquisition than general task goals. Indeed, Wolfe, Horowitz, Kenner, Hyle, and Vasan (2004) found that visual search was substantially more efficient when the observers were shown a picture of the target (e.g., a black vertical bar) than when they were given a verbal description of the target (e.g., the words “black vertical”). In the present study, we therefore focus on whether memory for an incidental feature of a saccade target can be used to guide gaze corrections.

There is one study in the existing literature suggesting that memory across saccades might support gaze correction. Deubel, Wolf, and Hauske (1984) presented a stimulus composed of densely packed vertical bars of different widths. During a saccade, the entire array was shifted either in the direction of the saccade or in the reverse direction. The final position of the eyes was systematically related to the shift direction and distance, with a secondary saccade generated to correct for the array displacement. This evidence of memory-guided gaze correction must be considered preliminary, however, because Deubel et al. did not report many of the details of the experiment (stimulus parameters, number of participants, number of corrective saccades on a trial, latency of the corrections, and so on), making it difficult to assess the results of the experiment. Thus, the question of whether memory can drive gaze correction is largely open. The present study focused on whether memory for visual objects in VSTM can guide gaze correction to the appropriate saccade target object.

In summary, we hypothesize that VSTM maintains target information across the saccade, so that when the eyes fail to land on the target, the target can be discriminated from other, nearby objects and gaze efficiently corrected. The use of VSTM to correct gaze is likely to be an important factor governing the efficiency of visual perception and behavior. If VSTM were not available across eye movements, saccade errors might not be successfully corrected, leading to significant delays in the perceptual processing of goal-relevant objects and slowing performance of the complex visual tasks (e.g., making tea, driving, air traffic control, to name just a few) that comprise much of waking life. Considering that we make approximately three saccades each second and many of these fail to land on the target object, establishing object correspondence and supporting gaze correction is likely to be a central function of the VSTM system.

The Gaze Correction Paradigm

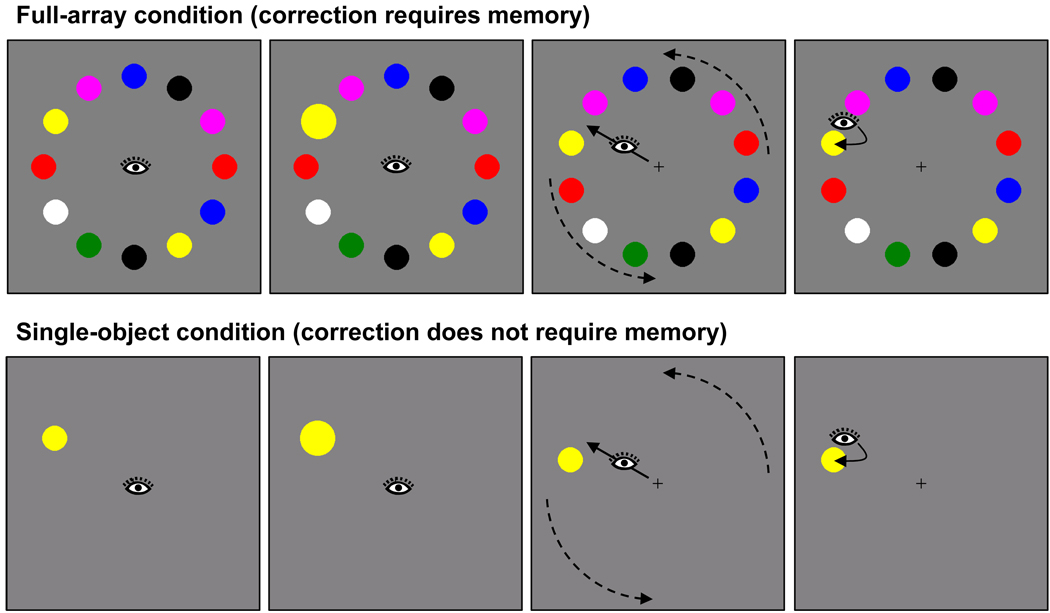

To examine the role of VSTM in object correspondence and gaze correction, we developed a new procedure that simulates target ambiguity after an inaccurate eye movement, illustrated in Figure 1. An array of objects was presented in a circular configuration so that each object was equally distant from fixation. After a brief delay, one object was cued by rapid expansion and contraction. The participant’s task simply was to generate a saccade to the cued object and fixate it. On a critical subset of trials (⅓ of the total), the array rotated ½ object position during the saccade to the cued object, when vision was suppressed. The eyes typically landed between two objects, the target object and a distractor object adjacent to the target. On average, this landing position was equidistant from the two objects. By artificially inducing saccade errors, we could precisely control the sensory input that followed the saccade.

Figure 1.

Sequence of events in a rotation trial of Experiment 1. The top row shows the full-array condition and the bottom row the single-object condition.

When the array rotated, the sensory input once the eyes landed was not by itself sufficient to determine which of the two nearby objects was the original saccade target. In addition, subjects could not correct gaze based on direct perception of the rotation, as the rotation occurred during the period of saccadic suppression. The only means to determine which object was the original target—and to make a corrective saccade—was to remember properties of the array from before the saccade and compare this memory trace to the new perceptual input after the eyes landed. Consider, for example, the case in which the saccade target was a yellow disk, and the ½ position rotation of the array caused the eyes to land midway between the yellow disk and a violet disk (Figure 1). To make a corrective saccade to the yellow disk and not to the violet disk, the visual system must retain some information about the pre-saccade array (e.g., the color of the saccade target) and compare this information with the post-saccade array.

Two measures of gaze correction efficiency were examined. Gaze correction accuracy was the percentage of trials on which the target was fixated first after landing between target and distractor. Gaze correction latency was the duration of the fixation before the corrective saccade when a single corrective saccade took the eyes to the target object. This latter measure reflects the speed with which the target object was identified and a corrective saccade computed and initiated. Gaze correction accuracy and latency under conditions of target ambiguity were compared with a single-object control condition in which only a single object was presented before and after the saccade (Figure 1). This condition is analogous to the conditions of previous experiments on saccade correction (Becker, 1972; Deubel et al., 1982), and it allowed us to assess the efficiency of saccade correction when correction did not require memory.

Experiments 1 and 2 tested whether participants could reliably and efficiently correct gaze to the target object when correction required memory. Experiment 3 provided a direct test of the role of VSTM in gaze correction, adding a concurrent VSTM load that should interfere with gaze correction if gaze correction depends on VSTM. Experiment 4 examined the automaticity of gaze correction by placing gaze correction and task instructions at odds. Together, these experiments demonstrated that visual memory can support rapid and accurate gaze correction, that this ability depends specifically on the VSTM system, and that VSTM-based gaze correction is largely automatic.

In addition to illuminating the role of VSTM in gaze correction, the gaze correction paradigm addressed two additional issues central to understanding the function of VSTM in human cognition. First, the gaze correction task is a variant of a visual search task: After the saccade, the target object must be found among a set of distractors. Thus, the present study provided direct evidence regarding the role of VSTM in visual search (Chelazzi, Miller, Duncan, & Desimone, 1993; Desimone & Duncan, 1995; Duncan & Humphreys, 1989; Woodman & Luck, 2004; Woodman, Vogel, & Luck, 2001). Second, general theories of object correspondence (Kahneman, Treisman, & Gibbs, 1992) have held that only spatiotemporal properties of an object (i.e., object position and trajectories) are used to establish object correspondence. In the gaze correction paradigm used in the present study, spatial information is not informative, and successful gaze correction requires that object correspondence be computed on the basis of a surface feature match (e.g., finding the object that matches the target color). Accurate and rapid gaze correction in the present study indicates that memory for surface features can be used to establish object correspondence, and therefore that object correspondence operations are not limited to spatiotemporal information. These two topics are addressed in the General Discussion.

Experiment 1

In Experiment 1, we examined whether memory for a simple visual feature—color—can be used to establish object correspondence across saccades and support efficient gaze correction. Color patches are commonly used in VSTM studies (e.g., Luck & Vogel, 1997), allowing us to draw inferences about the relationship between VSTM and transsaccadic memory.

In addition, we tested an alternative, nonmemorial hypothesis regarding the information used to correct gaze in complex environments. When multiple objects are present after an inaccurate saccade, the saccade target object is quite likely to be the object nearest to the saccade landing position. Thus, the object nearest the landing position might be preferentially selected as the target of the corrective saccade, and this means of selection need not consult memory at all. To test this hypothesis, we exploited natural variability in the saccade landing position in the rotation trials of Experiment 1. We examined the relationship between correction accuracy and the distance of the initial saccade landing position from the target. In addition, we tested whether saccades that landed closer to the target than to the distractor were more likely to be corrected to the target than saccades that landed closer to the distractor than to the target. Although nonmemorial distance effects and memory-based effects are not mutually exclusive possibilities, it is possible that distance can trump memory, with memory-based gaze correction occurring only when the target and a distractor are approximately equidistant from the initial saccade landing position. Alternatively, it is possible that memory can trump distance, allowing accurate gaze correction to the remembered item even when it is substantially farther from the saccade landing position than a distractor.

Methods

Participants

Twelve University of Iowa students participated for course credit. Each reported normal, uncorrected vision.

Stimuli and Apparatus

For the full-array condition, object arrays consisted of 12 color disks (Figure 1, top panel) displayed on a gray background. Two initial array configurations were possible, one with the objects at each of the 12 clock positions and another rotated by 15°. The color of each disk was randomly chosen from a set of seven (red, green, blue, yellow, violet, black, and white), with the constraint that color repetitions be separated by at least two objects. The x, y, and luminance values for each color were measured with a Tektronix model J17 colorimeter using the 1931 CIE color coordinate system, and were as follows: red (x = .64, y = .33; 14.79 cd/m²), green (x = .31, y = .57; 9.08 cd/m²), blue (x = .15, y = .06; 9.20 cd/m²), yellow (x = .42, y = .49; 69.58 cd/m²), violet (x = .27, y = .12; 25.77 cd/m²), black (< .001 cd/m²), and white (75.5 cd/m²). Disks subtended 1.6° and were centered 5.9° from central fixation. The distance between the centers of adjacent disks was 3.0°. The target object was equally likely to appear at each of the 12 possible locations. When the target was cued, it expanded to 140% of its original size and contracted back to the original size over 50 ms of animation. The angular difference between adjacent patches was 30°. For rotation trials, the array was rotated 15° clockwise on half the trials and 15° counterclockwise on the other half. The single-object control condition was identical to the full-array condition, except only the target object was displayed (Figure 1, bottom panel).
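The array geometry described above can be checked with a short sketch. It places 12 disk centers on a circle of radius 5.9° and confirms two properties stated in the text: adjacent centers are about 3.0° apart, and after a 15° rotation the pre-saccade target location is equidistant from the target and the adjacent distractor. Treating degrees of visual angle as planar coordinates is a simplifying assumption, and all function and variable names are hypothetical rather than taken from the experiment software.

```python
import math

ECCENTRICITY_DEG = 5.9        # distance of disk centers from fixation (from the text)
N_OBJECTS = 12                # number of disks in the full array (from the text)
STEP_DEG = 360 / N_OBJECTS    # 30 deg of arc between adjacent disks

def disk_centers(offset_deg=0.0):
    """Disk centers as (x, y) in degrees of visual angle, fixation at (0, 0)."""
    centers = []
    for i in range(N_OBJECTS):
        theta = math.radians(offset_deg + i * STEP_DEG)
        centers.append((ECCENTRICITY_DEG * math.cos(theta),
                        ECCENTRICITY_DEG * math.sin(theta)))
    return centers

def dist(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

before = disk_centers(0.0)    # array before the saccade
after = disk_centers(15.0)    # same array rotated by half an object position

# Spacing between neighboring disk centers (chord length for a 30 deg step).
adjacent_spacing = dist(before[0], before[1])

# The saccade is aimed at the old location of disk 0. After rotation, disk 0
# (the target) and disk 11 (now a neighbor) flank that location.
aim_point = before[0]
d_target = dist(aim_point, after[0])
d_distractor = dist(aim_point, after[N_OBJECTS - 1])

print(f"adjacent spacing: {adjacent_spacing:.2f} deg")
print(f"aim point to target: {d_target:.2f} deg, to distractor: {d_distractor:.2f} deg")
```

Because the two distances are equal by construction, position alone cannot identify the target after the rotation, which is the ambiguity the paradigm is designed to create.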

Stimuli were displayed on a 17-in CRT monitor with a 120 Hz refresh rate. Eye position was monitored by a video-based, ISCAN ETL-400 eyetracker sampling at 240 Hz. A chin and forehead rest (with clamps resting against the temples) was used to maintain a constant viewing distance of 70 cm and to minimize head movement. The experiment was controlled by E-prime software. Gaze position samples were streamed in real time from the eyetracker to the computer running E-prime. E-prime then used gaze position data to control trial events (such as transsaccadic rotation) and saved the raw position data to a file that mapped eye events and stimulus events.

Array rotation during the saccade to the target was accomplished using a boundary technique. Participants initially fixated the center of the array. An invisible, circular boundary was defined with a radius of 1.3° from central fixation. After the cue, E-prime monitored for an eye position sample beyond the circular boundary, and on array rotation trials, the rotated array was then written to the screen (on no-rotation trials, a new image was also written to the screen during the saccade, but it was the same as the preview image). It is important to ensure that rotation changes were made quickly enough to be completed during the eye movement, so they were not directly visible. With the present apparatus, the maximum total delay between boundary crossing and completion of screen change was 19 ms. In pilot work, the mean actual delay between boundary crossing and the first fixation after the change was 29 ms, and the shortest actual delays were 22 ms. Thus, the rotation change occurred during the period of saccadic suppression and was completed before the eyes landed.
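The logic of the boundary technique can be illustrated with a minimal sketch. This is not the E-prime implementation used in the experiment; it only assumes that gaze samples arrive as (time, x, y) tuples relative to central fixation, and the sample values below are invented for illustration. The 1.3° boundary radius and the 19 ms maximum change delay are taken from the text.

```python
import math

BOUNDARY_RADIUS_DEG = 1.3   # invisible circular boundary (from the text)
MAX_CHANGE_DELAY_MS = 19    # worst-case delay to finish the screen change (from the text)

def first_boundary_crossing(samples, radius=BOUNDARY_RADIUS_DEG):
    """Return the time (ms) of the first gaze sample beyond the boundary, or None.

    samples: iterable of (t_ms, x_deg, y_deg), with fixation at (0, 0).
    """
    for t_ms, x_deg, y_deg in samples:
        if math.hypot(x_deg, y_deg) > radius:
            return t_ms
    return None

def change_completed_in_flight(crossing_ms, landing_ms,
                               max_delay_ms=MAX_CHANGE_DELAY_MS):
    """True if the display change is finished before the eyes land."""
    return crossing_ms + max_delay_ms < landing_ms

# Hypothetical gaze trace sampled at roughly 240 Hz (values are made up).
trace = [(0.0, 0.0, 0.0), (4.2, 0.1, 0.0), (8.3, 0.6, 0.1),
         (12.5, 1.6, 0.2), (16.7, 3.2, 0.4)]

crossing = first_boundary_crossing(trace)
# Use the shortest crossing-to-landing interval reported in pilot work (22 ms).
landing = crossing + 22
hidden = change_completed_in_flight(crossing, landing)
print(f"boundary crossed at {crossing} ms; change hidden by saccade: {hidden}")
```

Even in the least favorable case reported (19 ms to complete the change, 22 ms until landing), the check passes, which is the basis for concluding that the rotation was not directly visible.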

Procedure

Each trial was initiated by the experimenter after eyetracker calibration was checked. Following a delay of 500 ms, the preview array was presented for 1000 ms as participants maintained central fixation. Next, the target cue animation was presented for 50 ms. Participants were instructed to generate an eye movement to the target as quickly as possible. The array was rotated during this saccade on 1/3 of trials, typically causing the eyes to land between the target and an adjacent distractor. The rotation was accomplished by replacing the original array (within a single refresh cycle) with a copy that was rotated 15° clockwise or counterclockwise. Once the target had been fixated, it was outlined by a box for 500 ms to indicate successful completion of the trial.

The full-array and single-object conditions were blocked, and block order was counterbalanced across participants. In each block, participants first completed 12 practice trials, followed by 144 experimental trials, 48 of which were rotation trials. Trial order within a block was determined randomly. Each participant completed a total of 288 experimental trials. The entire session lasted approximately 45 min.

Data Analysis

Eye tracking data analysis was conducted offline using dedicated software. A velocity criterion (eye rotation > 31°/s) was used to define saccades. During a fixation, the eyes are not perfectly still. Fixation position was calculated as the mean position during a fixation period weighted by the proportion of time at each sub-location within the fixation. These data were then analyzed with respect to critical regions in the image, such as the target and distractor regions, allowing us to determine whether the eyes were directed first to the target region or first to the distractor region and the latency of the correction. Object scoring regions were circular and had a diameter of 1.9°, 20% larger than the color disks themselves.
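The analysis steps described above can be sketched as follows. This is an illustrative reconstruction, not the dedicated software used in the study: it assumes uniformly sampled gaze positions at 240 Hz, labels a sample as part of a saccade when sample-to-sample velocity exceeds 31°/s, and averages the remaining runs of samples into fixations. With uniform sampling, a plain mean over samples is equivalent to weighting each sub-location by the proportion of time spent there. All names and the example trace are hypothetical.

```python
import math

VELOCITY_THRESHOLD_DEG_S = 31.0   # saccade criterion (from the text)
SAMPLE_RATE_HZ = 240              # eyetracker sampling rate (from the text)
SCORING_DIAMETER_DEG = 1.9        # object scoring region diameter (from the text)

def label_saccade_samples(xs, ys, rate_hz=SAMPLE_RATE_HZ,
                          threshold=VELOCITY_THRESHOLD_DEG_S):
    """Flag each sample whose velocity from the previous sample exceeds threshold."""
    dt = 1.0 / rate_hz
    flags = [False]  # the first sample has no predecessor
    for i in range(1, len(xs)):
        speed = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) / dt
        flags.append(speed > threshold)
    return flags

def _mean_position(run):
    """Mean (x, y) of a run of samples, plus the number of samples."""
    n = len(run)
    return (sum(p[0] for p in run) / n, sum(p[1] for p in run) / n, n)

def extract_fixations(xs, ys, flags):
    """Group consecutive non-saccade samples into fixations."""
    fixations, run = [], []
    for x, y, is_saccade in zip(xs, ys, flags):
        if is_saccade:
            if run:
                fixations.append(_mean_position(run))
                run = []
        else:
            run.append((x, y))
    if run:
        fixations.append(_mean_position(run))
    return fixations

def in_scoring_region(fixation, center, diameter=SCORING_DIAMETER_DEG):
    """True if the fixation position falls inside a circular scoring region."""
    return math.hypot(fixation[0] - center[0], fixation[1] - center[1]) <= diameter / 2

# Hypothetical trace: a fixation near (0, 0), a saccade, then a fixation near (5, 0).
xs = [0.00, 0.01, 0.00, 1.50, 3.50, 5.00, 5.01, 5.00, 5.02]
ys = [0.0] * len(xs)

flags = label_saccade_samples(xs, ys)
fixations = extract_fixations(xs, ys, flags)
on_object = in_scoring_region(fixations[-1], center=(5.0, 0.0))
print(f"{len(fixations)} fixations; last one on object: {on_object}")
```

Correction latency in this scheme would be the duration of the fixation that precedes the corrective saccade, that is, its sample count divided by the sampling rate.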

Rotation trials were eliminated from analysis if the eyes initially landed on an object rather than between objects, if more than one saccade was required to bring the eyes from central fixation to the general region of the object array, or if the eyetracker lost track of the eye. The large majority of eliminated trials were those in which the eyes landed on an object, reflecting the fact that saccades are often inaccurate, a basic assumption of this study. To equate the proportion of eliminated trials in the full-array and single-object conditions, trials were eliminated in the single-object condition if the eyes would have landed on an object had there been a full array of objects. A total of 28% of the trials was eliminated across the experiment.

Results

Rotation trials

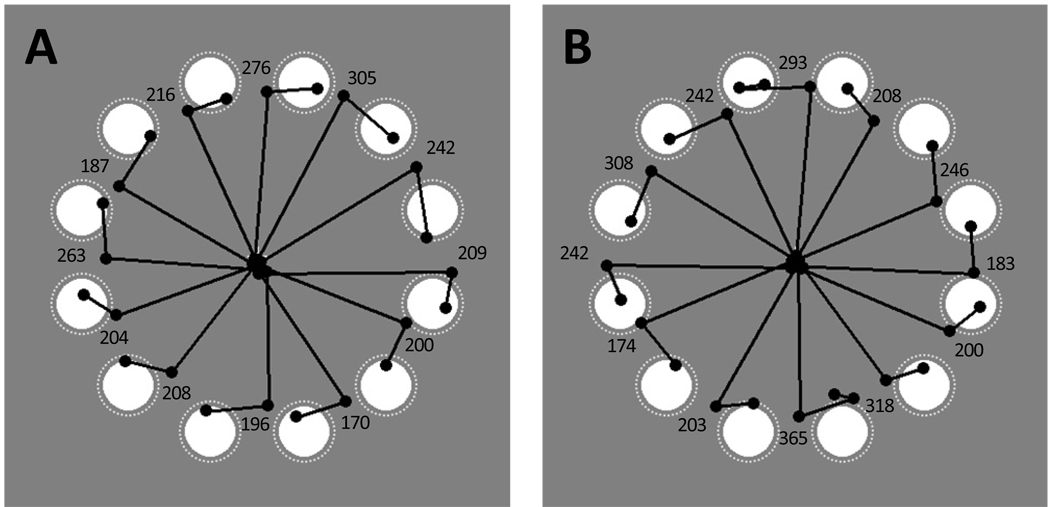

The rotation trials were of central interest for examining memory-based gaze correction. Figure 2 shows the eye movement scan paths for a single, representative participant in the full-array rotation condition.

Figure 2.

Eye movement scan paths for the full-array rotation trials of a single participant in Experiment 1. Panel A shows all 12 trials on which the array started at the clock positions and rotated clockwise during the saccade. Panel B shows all 12 trials on which the array started at the clock positions and rotated counterclockwise during the saccade. The white disks indicate object positions after the rotation. White dotted circles indicate the scoring regions used for data analysis. Black lines represent saccades and small black dots fixations. The initial saccade directed from central fixation to the array typically landed between target and distractor, as expected given the rotation of the array during the saccade. Then, gaze was corrected. Numerical values indicate correction latency (duration of the fixation before the corrective saccade) in ms for each depicted trial. Note that corrective saccades were directed to the appropriate clockwise (Panel A) or counterclockwise (Panel B) target object.

We first examined the basic question of whether visual memory can support accurate gaze correction. In the full-array condition, which required memory to discriminate target from distractor, mean gaze correction accuracy was 98.1%. That is, after landing between target and distractor, the eyes were directed first to the target on 98.1% of trials. Unsurprisingly, gaze correction accuracy in the single-object condition, which did not require memory, was 100%. The accuracy difference between the full-array and single-object conditions was statistically reliable, t(11) = 2.31, p < .05.

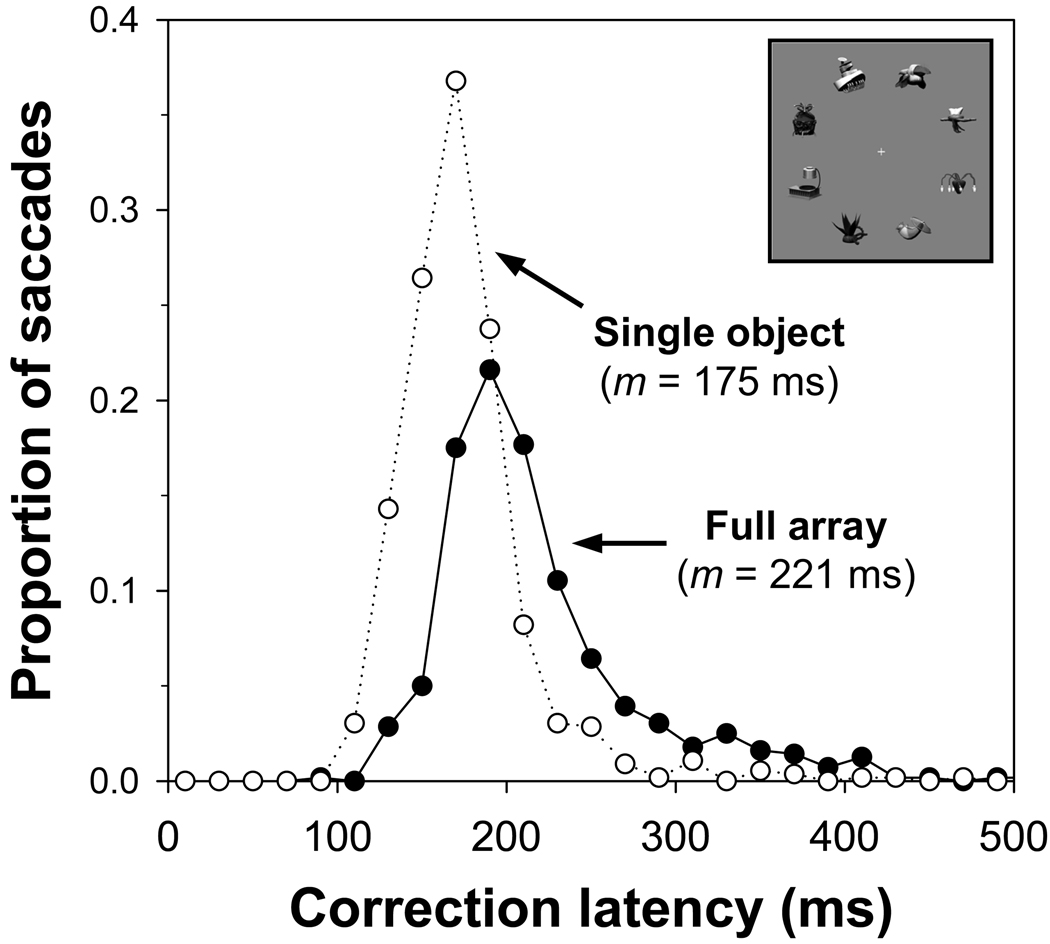

To assess the speed of memory-based gaze correction, we examined gaze correction latency (the duration of the fixation before an accurate corrective saccade) in the full-array and single-object conditions. When comparing gaze correction latencies, it is important to ensure that the distance of the correction was the same in the two conditions. Indeed, mean corrective saccade distance did not differ between the single-object (1.38°) and full-array conditions (1.38°). Mean gaze correction latency was 240 ms in the full-array condition and 201 ms in the single-object condition, t(11) = 4.1, p < .005. The memory requirements in the full-array condition added only 39 ms, on average, to gaze correction latency. Figure 3 shows the distributions of correction latencies for the single-object and full-array conditions. The use of memory to correct gaze was observed as a shift in the peak of the distribution of latencies and an increase in variability. In summary, memory-based gaze correction was highly accurate and introduced only a relatively small increase in gaze correction latency.

Figure 3.

Distributions of correction latencies for the full-array and single-object conditions in Experiment 1.

Correction latencies in this experiment might appear fairly long given existing evidence that corrective saccade latencies can be as short as 110–150 ms in studies presenting a single object (Becker, 1991). However, the longer latencies in the present experiment are likely to reflect the fact that the eyes landed relatively close to the contours of the array objects (less than 1° from the nearest object contour, on average). When the eyes land near an object, gaze correction latency is similar to latencies observed for primary saccades, in the range of 150–205 ms (Becker, 1991). In the present experiment, baseline correction latency in the single-object control condition (201 ms) fell within the range of correction latency observed in prior studies. Thus, we can be confident that the present experiment examined typical corrective saccades (in the single-object condition) and the effect of memory demands on the latency of typical corrective saccades (in the full-array condition). Note also that the peaks of the distributions of saccade latency for the single-object and full-array conditions diverged in the range of 100–200 ms (see Figure 3).

In addition to examining memory-based gaze correction, we tested the hypothesis that the relative distance of objects from the saccade landing position influences gaze correction. Given that correction accuracy was 98.1% in the full-array condition, distance could not have exerted a major influence on which object was selected as the goal of the corrective saccade. There was no relationship between correction accuracy and the distance of the initial saccade landing position from the target object (rpb = −.05, p = .35).2 In addition, gaze correction was no more accurate for saccades that landed closer to the target than to the distractor (98.8%) than for saccades that landed closer to the distractor than to the target (97.8%), t(11) = .58, p = .57. Thus, relative distance appears to play little role in gaze correction in the present paradigm, which was dominated by memory for color.
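The rpb statistic reported above is a point-biserial correlation, which is numerically the Pearson correlation between a binary variable (here, correct versus incorrect correction) and a continuous one (landing distance from the target). A minimal sketch follows; the function name and the trial values are hypothetical and are not data from the experiment.

```python
import math


def point_biserial(binary, continuous):
    """Pearson correlation between a 0/1 variable and a continuous variable."""
    if len(binary) != len(continuous):
        raise ValueError("Inputs must have the same length.")
    n = len(binary)
    mean_b = sum(binary) / n
    mean_c = sum(continuous) / n
    cov = sum((b - mean_b) * (c - mean_c) for b, c in zip(binary, continuous))
    ss_b = sum((b - mean_b) ** 2 for b in binary)
    ss_c = sum((c - mean_c) ** 2 for c in continuous)
    # Raises ZeroDivisionError if either variable has no variance.
    return cov / math.sqrt(ss_b * ss_c)


# Hypothetical trials: 1 = corrected to target, 0 = corrected to distractor.
correct = [1, 1, 1, 0, 1, 0, 1, 1]
landing_distance_deg = [1.1, 1.3, 1.2, 1.9, 1.4, 1.7, 1.0, 1.5]

r_pb = point_biserial(correct, landing_distance_deg)
print(f"rpb = {r_pb:.2f}")
```

A negative value indicates that accurate corrections become less likely as the landing position falls farther from the target.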

No-rotation trials

We also assessed naturally occurring saccade errors on the no-rotation trials of Experiment 1. A significant proportion of saccades directed from central fixation to the target failed to land on the target, with undershoots the most common error. On 35.9% of trials, the eyes did not land on the 1.6° target object. On 16.8% of trials, the eyes did not land within the 1.9° target scoring region. We can be confident that, in the latter trials, the saccade failed to land on the target. Considering these trials, gaze correction accuracy was similar to the results for experimentally induced errors on the rotation trials. Mean gaze correction accuracy for naturally occurring errors was 99.2% in the full-array condition and 99.4% in the single-object condition, F < 1.

We were also able to examine correction latency for naturally occurring saccade errors, but these data must be treated with caution. The analysis could be performed only over the subset of natural error trials on which a single corrective saccade took the eyes to the target object (a total of only 298 trials across 12 participants), and there was significant variability in the number of natural error trials per subject, leaving some subjects with very few observations. The numerical pattern of correction latency data was similar to the data from the rotation condition. Mean gaze correction latency was 295 ms in the full-array condition and 271 ms in the single-object condition, F < 1. The longer overall latencies observed for the correction of natural errors were driven by two participants who had very few observations and very high mean correction latencies, greater than 400 ms. In addition, the eyes tended to land closer to the target object for naturally occurring saccade errors than for experimentally induced errors. Longer latencies for natural error correction reflected the typical inverse relationship between correction distance and correction latency (Deubel et al., 1982; Kapoula & Robinson, 1986).

As a whole, the data for the correction of natural errors produced a pattern of results similar to that for experimentally induced errors in the rotation condition, validating the use of array rotation during the saccade as a means to examine gaze correction.

Discussion

The results from Experiment 1 demonstrated that visual memory can guide saccade correction in a manner that is highly accurate and efficient. In the full-array condition (which required pre-saccade memory encoding, transsaccadic retention, and post-saccade comparison of memory with objects lying near the landing position), correction accuracy was nearly perfect, and correction latency increased by only 39 ms, on average, compared with a single-object control condition in which correction did not require memory. This remarkably efficient use of visual memory for oculomotor control supports the general hypothesis that VSTM is used to establish object correspondence across saccades (Currie, McConkie, Carlson-Radvansky, & Irwin, 2000; Henderson & Hollingworth, 1999, 2003a; Irwin, McConkie, Carlson-Radvansky, & Currie, 1994; McConkie & Currie, 1996) and supports our specific proposal that VSTM across saccades is used to correct gaze in the common circumstance that the eyes fail to land on the target object.

Experiment 2

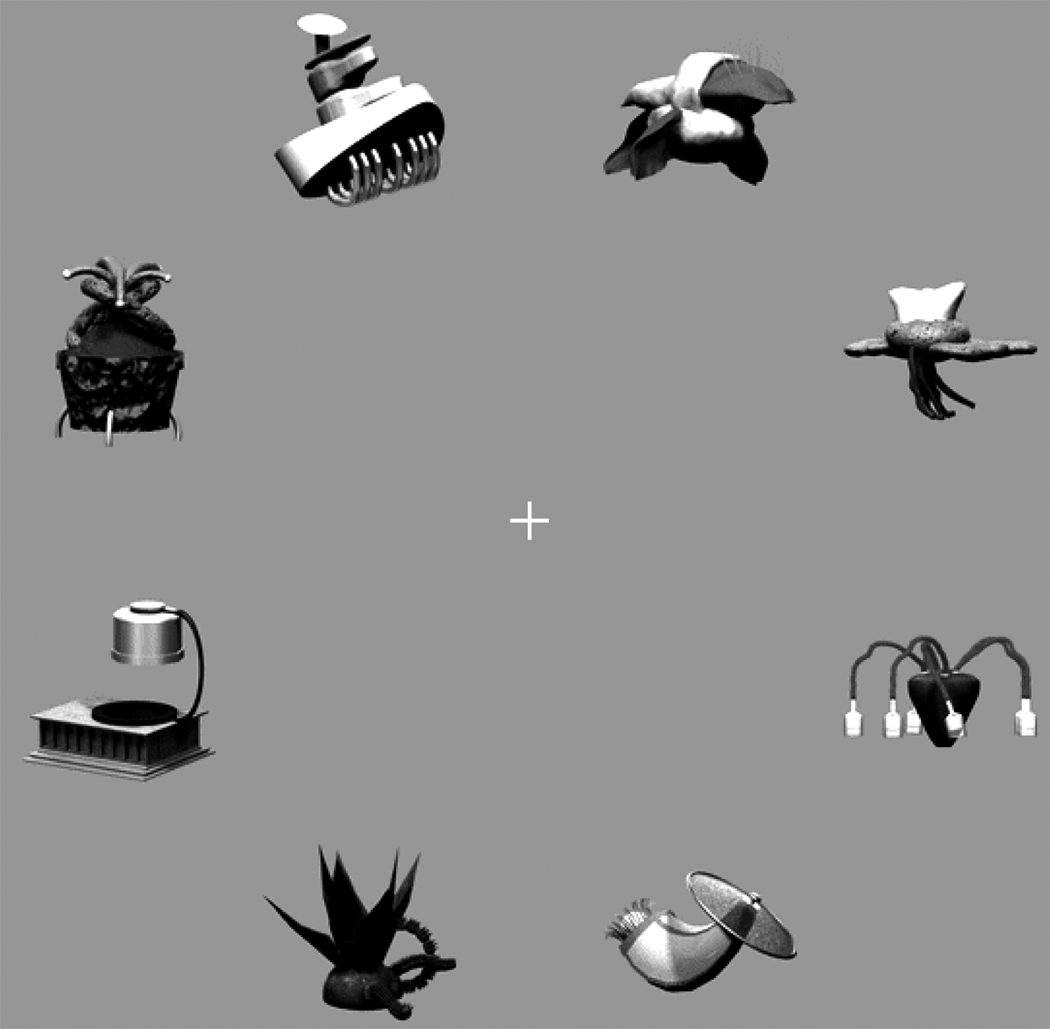

The goal of the present study was to understand the functional role of VSTM in real-world perception and behavior. Experiment 1 displayed relatively simple objects that are commonly used in VSTM experiments, but objects in the world typically are much more complex. In Experiment 2, we sought to demonstrate that the oculomotor system can solve the correspondence problem for objects similar in complexity to those visible in the real world. However, we wanted to avoid using familiar, real-world objects as targets and distractors, because accurate correction for familiar objects could be due to non-visual representations such as conceptual or verbal codes. We therefore created a set of complex novel objects, which simulated the complexity of natural objects without activating conceptual representations or names. As illustrated in Figure 4, object arrays consisted of eight novel objects selected from a set of 48 objects. The basic method and logic of the experiment were the same as in Experiment 1.

Figure 4.

Sample full array of novel objects in Experiment 2.

Methods

Participants

A new group of 12 University of Iowa students participated for course credit. Each reported normal, uncorrected vision.

Stimuli, Apparatus, and Procedure

Forty-eight complex novel objects were created using 3D-modeling and rendering software. Each object was designed to approximate the complexity of natural objects without closely resembling any common object type. Novel objects were presented in grayscale. Grayscale objects were used in anticipation of Experiment 3, in which novel-object gaze correction was combined with a concurrent color memory load.

Object arrays consisted of 8 complex novel objects drawn randomly without replacement from the set of 48 (see Figure 4). The target object was equally likely to appear at each of the 8 possible locations. Two initial configurations were possible, offset by 22.5°. Objects subtended 3.1° on average and were centered 7.5° from fixation. The distance between the centers of adjacent objects was 5.8°. As in Experiment 1, the saccade cue was the expansion of the target to 140% of its original size and contraction to the original size over 50 ms of animation. For rotation trials, the array was rotated 22.5° clockwise on half the trials and 22.5° counterclockwise on the other half. The apparatus was the same as in Experiment 1.
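The array geometry described above can be sketched numerically to show why a saccade aimed at the target's original location lands midway between the target and one neighboring distractor after a 22.5° rotation. The sketch assumes eight objects evenly spaced on a circle of radius 7.5°, a y-up coordinate frame in which positive angles are counterclockwise, and a perfectly accurate saccade to the pre-rotation target location; the function names are hypothetical.

```python
import math

ECCENTRICITY_DEG = 7.5   # distance of object centers from fixation
N_OBJECTS = 8            # objects per array
ROTATION_DEG = 22.5      # rotation applied during the saccade


def object_positions(offset_deg, eccentricity=ECCENTRICITY_DEG, n=N_OBJECTS):
    """Return (x, y) centers of n evenly spaced objects, in degrees of visual angle."""
    step = 360.0 / n
    positions = []
    for i in range(n):
        angle = math.radians(offset_deg + i * step)
        positions.append((eccentricity * math.cos(angle),
                          eccentricity * math.sin(angle)))
    return positions


def distance(p, q):
    """Euclidean distance between two points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


before = object_positions(0.0)           # array before the saccade
after = object_positions(ROTATION_DEG)   # array after the rotation

target_index = 0
aim_point = before[target_index]         # assumed landing position

d_target = distance(aim_point, after[target_index])
d_distractor = distance(aim_point, after[target_index - 1])
print(f"to target: {d_target:.2f} deg, to distractor: {d_distractor:.2f} deg")
```

Because the rotation is half the angular spacing between objects, the two distances are equal under these assumptions, so landing position alone cannot identify the target.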

The sequence of events in a trial was the same as in Experiment 1. The full-array and single-object conditions were blocked, and block order was counterbalanced across participants. In each block, participants first completed 12 practice trials, followed by 192 experimental trials, 64 of which were rotation trials. Trial order within a block was determined randomly. Each participant completed a total of 384 experimental trials. The entire session lasted approximately 55 min.

Data Analysis

The eyetracking data were coded relative to scoring regions surrounding each object. Scoring regions were square, 3.3° × 3.3°, so as to encompass the variable shapes of the novel objects. A total of 7.9% of the rotation trials was eliminated from the analysis for the reasons discussed in Experiment 1.

Results and Discussion

The analyses below focused on gaze correction in the rotation trials. Due to variation in object shape, an analysis of naturally occurring saccade errors in the no-rotation trials was not feasible. Gaze correction on rotation trials was again accurate, both in the full-array condition (87.0%) and in the single-object control condition (100%), t(11) = 5.2, p < .001. The lower accuracy in the full-array condition in Experiment 2 compared with Experiment 1 could have been caused by VSTM limitations on the encoding, maintenance, or comparison of complex object representations. However, it could also have been caused simply by the difficulty of perceiving complex shape-defined objects in the periphery prior to the saccade. In any case, gaze correction was quite accurate, though it was less than perfect.

Memory-based gaze correction was also quite efficient. Mean gaze correction latency in the full-array condition (221 ms) was only 46 ms longer than mean correction latency in the single-object condition (175 ms), t(11) = 5.1, p < .001. Figure 5 shows the distributions of correction latencies for the single-object and full-array conditions. As in Experiment 1, the memory requirements in the full-array condition led to a shift in the peak of the distribution of latencies and an increase in variability. Correction latencies in the single-object condition again fell within the range of correction latencies observed in previous research.

Figure 5.

Distributions of correction latencies for the full-array and single-object conditions in Experiment 2.

Unlike Experiment 1, there was a reliable difference in the mean distance of the corrective saccade in the full-array (2.40°) and single-object (2.48°) conditions, t(11) = 2.65, p < .05. The source of this difference is not clear, and the numerical magnitude of the effect was very small. However, the effect should, if anything, have led to overestimation of the latency difference between the single-object and full-array conditions. With longer corrections requiring less time to initiate, the longer corrections in the single-object condition would have led to slightly faster correction latencies based solely on the distance of the correction.

It is tempting to compare the correction latencies for color patches and complex objects across Experiments 1 and 2, especially as corrections were faster for complex objects (221 ms) than for color patches (240 ms). However, differences in the distance of the correction (1.38° in Experiment 1; 2.40° in Experiment 2) make any cross-experiment comparison difficult. Nevertheless, memory-based correction for complex objects was highly efficient, especially considering the computational and memorial demands of encoding, maintaining, and comparing complex novel object representations (Alvarez & Cavanagh, 2004).

As in Experiment 1, we examined whether the distance of the initial saccade landing position from the target object influenced the selection of that object as the goal of the corrective saccade. Unlike Experiment 1, there was a reliable correlation between distance and correction accuracy (rpb = −.17, p < .001), with the probability of correction to the target decreasing with increasing distance of the saccade landing position from the target. In addition, gaze correction was more accurate for saccades that landed closer to the target than to the distractor (91.6%) than for saccades that landed closer to the distractor than to the target (82.4%), t(11) = 4.14, p < .005. Previous research has shown that distance influences correction latency to single targets (Deubel et al., 1982; Kapoula & Robinson, 1986). The present finding is the first demonstration that distance influences which of several objects is chosen as the target of the correction. However, even when the eyes landed closer to the distractor than to the target, the rate of accurate correction to the target (82.4%) was reliably higher than chance correction of 50%, t(11) = 10.6, p < .001, indicating that memory was still the primary factor determining which object was selected as the goal of the corrective saccade.

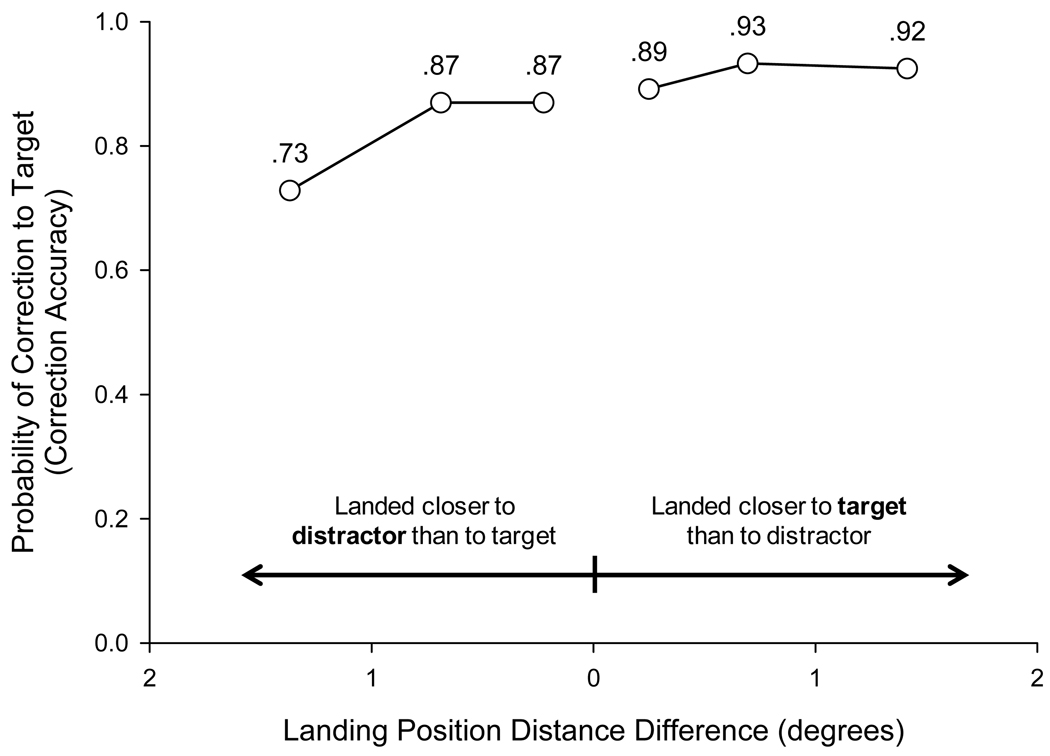

To investigate more thoroughly the effect of relative distance from the initial saccade landing position, a difference score was computed for each trial by subtracting the distance of the closer of the two objects (target or distractor) from the distance of the farther of the two objects. For example, if on a particular trial the initial saccade landed 3.1° from the center of the target object and 2.3° from the center of the distractor, the difference score would be 0.8°. Larger values represent cases in which the initial saccade landed much closer to one object than to the other. Smaller values represent cases in which the initial saccade landed approximately midway between the two objects. Figure 6 plots correction accuracy as a function of this difference score, with trials on which the eyes landed closer to distractor than to target plotted on the left and trials on which the eyes landed closer to target than to distractor plotted on the right. Two features of the data are notable. The probability of correcting to the target fell (i.e., the probability of correcting to the distractor rose) when the distance of the distractor from the landing position was relatively small compared with the distance of the target from the landing position. However, even for trials on which the eyes landed much closer to the distractor than to the target (far left data point of Figure 6), gaze was still more likely to be corrected to the target object (73% of trials) than to the distractor (27% of trials); 73% accurate correction to the target was reliably higher than chance correction of 50%, t(11) = 5.52, p < .001.

Figure 6.

Gaze correction accuracy for full-array, rotation trials in Experiment 2 as a function of the difference in distance of the target and distractor from the landing position of the initial saccade. Small differences in distance (center of the figure) represent trials on which the eyes landed near the midpoint between target and distractor. Large differences in distance (far right and left of figure) represent trials on which the eyes landed much closer to one object than to the other. Trials on which the eyes landed closer to the distractor than to the target are plotted on the left. Trials on which the eyes landed closer to the target than to the distractor are plotted on the right. For each type of trial, the distance difference data were split into thirds. Mean distance difference in each third is plotted against mean gaze correction accuracy in that third.
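The difference-score analysis can be sketched as follows. The worked example (3.1° from the target, 2.3° from the distractor) is taken from the text; the function names, the trial values, and the choice to pool trials before splitting them into thirds are assumptions made for illustration, as the text does not specify how the split was carried out.

```python
def difference_score(dist_to_target, dist_to_distractor):
    """Return (score, closer_object) for one trial.

    The score is the farther distance minus the closer distance, so it is
    never negative. Ties are labeled "distractor" here by convention.
    """
    score = abs(dist_to_target - dist_to_distractor)
    closer = "target" if dist_to_target < dist_to_distractor else "distractor"
    return score, closer


def binned_accuracy(trials, n_bins=3):
    """Split (score, correct) trials into n_bins by score.

    Returns one (mean score, proportion correct) pair per non-empty bin.
    """
    ordered = sorted(trials, key=lambda trial: trial[0])
    n = len(ordered)
    bins = []
    for b in range(n_bins):
        chunk = ordered[b * n // n_bins:(b + 1) * n // n_bins]
        if not chunk:
            continue
        mean_score = sum(score for score, _ in chunk) / len(chunk)
        accuracy = sum(correct for _, correct in chunk) / len(chunk)
        bins.append((mean_score, accuracy))
    return bins


# Worked example from the text: the difference score is 0.8 degrees.
example_score, example_closer = difference_score(3.1, 2.3)

# Hypothetical trials (score in degrees, 1 = corrected to target).
hypothetical_trials = [(0.1, 1), (0.2, 1), (0.3, 0), (0.4, 1), (0.5, 0), (0.6, 0)]
example_bins = binned_accuracy(hypothetical_trials)
print(example_score, example_closer, example_bins)
```

In an analysis like that of Figure 6, the binning would be applied separately to trials on which the eyes landed closer to the distractor and trials on which they landed closer to the target.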

In summary, memory-based gaze correction for complex novel objects was both accurate and efficient, demonstrating that transsaccadic memory is capable of controlling gaze correction for objects of similar complexity to those found in the real world. In addition, the most plausible nonmemorial cue to correction, relative distance of objects from the saccade landing position, also influenced gaze correction accuracy, but this effect was small compared with the effect of target memory. Even when the eyes landed closer to the distractor than to the target, gaze was still corrected to the remembered target object on the large majority of trials.

Experiment 3

The timing parameters used in Experiments 1 and 2 were designed to induce the use of VSTM rather than other memory systems in computing object correspondence and gaze correction. However, it is possible that some other memory system was responsible for the accurate and efficient gaze correction performance observed in these experiments. Consequently, Experiment 3 directly tested whether gaze correction depends on the VSTM system that has been studied extensively over the last decade (Alvarez & Cavanagh, 2004; Hollingworth, 2004; Irwin & Andrews, 1996; Vogel, Woodman, & Luck, 2001; Wheeler & Treisman, 2002; Xu & Chun, 2006). We combined the gaze-correction paradigm used in Experiment 2 with a secondary task—color change detection—that is known to require VSTM (Luck & Vogel, 1997). If VSTM plays a central role in controlling corrective saccades, then a secondary VSTM task should interfere with gaze correction (and vice versa), as the two tasks will compete for limited VSTM resources. Similar methods have been used to examine the role of VSTM in visual search (Woodman & Luck, 2004; Woodman, Luck, & Schall, in press; Woodman et al., 2001) and in mental rotation (Hyun & Luck, in press).

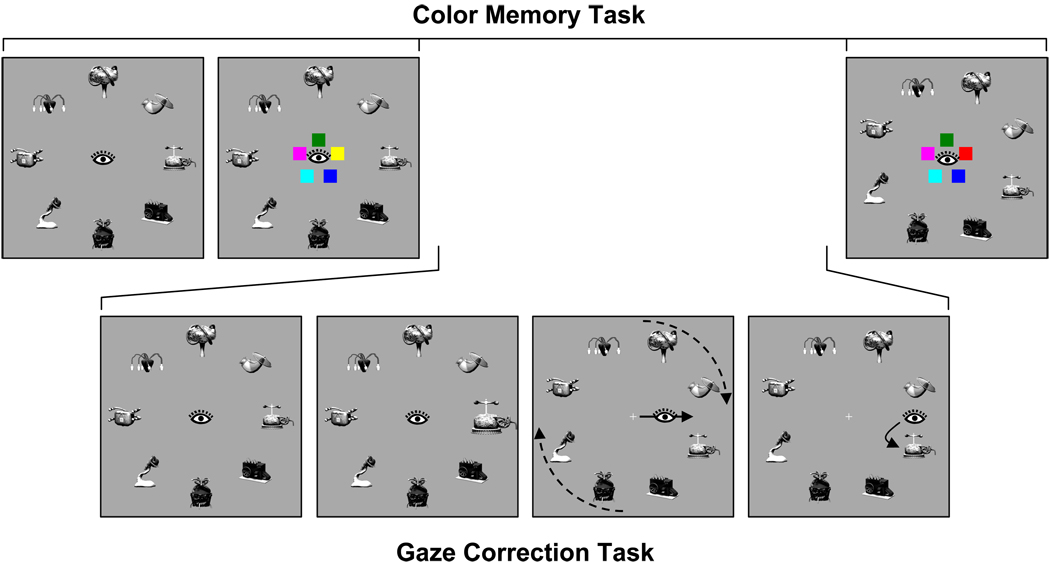

Arrays consisted of eight grayscale novel objects (the single-object condition was eliminated). In the dual-task condition (Figure 7), participants saw a to-be-remembered array of five color patches before the gaze correction target was cued. After the participants made an eye movement to this target (and possibly a corrective saccade), a test array of colors was presented, with all colors remaining the same or one changed. Participants responded “same” or “changed” by manual button press. Thus, memory was required both to perform the color change-detection task and to make a memory-guided gaze correction. Performance in the dual-task condition was compared with performance in two single-task conditions. In the gaze-correction-only condition, participants were instructed to ignore the initial set of colors, and color memory was not tested at the end of the trial. In the color-memory-only condition, participants were instructed to ignore the novel objects and performed only the color-memory task.

Figure 7.

Sequence of events in a rotation trial of the dual-task condition of Experiment 3.

If the same memory system is used both to make memory-guided gaze corrections and to perform the color change-detection task, then it should be more difficult to perform these two tasks together than to perform each task alone. If different memory systems are used, however, then the two tasks should not interfere and performance should be equivalent in the dual-task and single-task conditions. Although one might expect some dual-task interference even if different memory systems are used, previous research shows that interference in this type of procedure is highly specific. For example, a spatial change-detection task interferes with visual search whereas an object change-detection task often does not (Woodman & Luck, 2004; Woodman et al., 2001), and an object change-detection task interferes with mental rotation whereas a spatial change-detection task does not (Hyun & Luck, in press). Thus, the presence or absence of interference in this paradigm can provide specific information about the use of shared cognitive resources. A follow-up experiment will be described later to provide more direct evidence about the specificity of the interference.

Methods

Participants

A new group of 12 University of Iowa students participated for course credit. Each reported normal, uncorrected vision.

Stimuli and Apparatus

The gaze correction stimuli were the same grayscale novel objects used in Experiment 2. The stimuli for the color memory task were square patches, 1.3° × 1.3°. Five color patches were presented on each trial. The center of each patch was 1.8° from central fixation, and patches were evenly spaced around central fixation. Colors were chosen randomly without replacement from a set of nine: red, green, blue, yellow, violet, light-green, light-blue, brown, and pink. Red, green, blue, yellow, and violet were the same as in Experiment 1. The x, y, and luminance values for the new colors were as follows: light-green (x = .30, y = .56; 52.61 cd/m2), light-blue (x = .21, y = .25; 62.96 cd/m2), brown (x = .57, y = .38; 3.79 cd/m2), and pink (x = .32, y = .27; 48.98 cd/m2).

The apparatus was the same as in the previous experiments. Button responses for the color change detection task were collected using a serial button box.

Procedure

The three tasks were blocked (96 trials per block) and block order counterbalanced across participants. Each block was preceded by 12 practice trials. Trial order within a block was determined randomly.

On each rotation trial of the dual-task block, the sequence of events was as follows (Figure 7). The novel object array was presented for 1000 ms as the participant maintained central fixation. Then, the color memory array was added to the display for 200 ms. The colors were removed for 500 ms. The target object in the novel object array was cued for 50 ms by expansion and contraction. The array was rotated as the participant generated a saccade to the cued object. After landing, gaze was corrected to the target. Once the target was fixated, it was outlined by a box for 400 ms. This signaled successful fixation of the target and also cued the participant to return gaze to the central fixation point. The test array of color patches was presented until the participant registered the same/changed button response. Trials were evenly divided between same and changed. When a color changed, it was replaced by a new color, randomly selected from the set of 4 colors that had not appeared in the memory array. The time required to complete the gaze correction task varied from trial to trial, and thus so did the delay between the color memory and test arrays. Mean delay between the offset of the color memory array and the onset of the color test array was 1882 ms.

The sequence of events in the gaze-correction-only condition was identical to that in the dual-task condition, except the test array of colors was not presented.

In the color-memory-only condition, the sequence of events was the same as in the preceding conditions through the presentation of the color memory array. The color memory array was followed by an ISI in which only the novel-object array was presented (no object was cued, as this would likely have led to a reflexive saccade). The color test array was then presented until response.

To ensure that the color memory demands were equivalent in the color-memory-only and dual-task conditions, the duration of the ISI in the color-memory-only condition was yoked to the actual delays between color arrays observed in the dual-task condition. For half of the participants, the dual-task block preceded the color-memory-only block. For these participants, the ISI between color memory and test arrays in the color-memory-only block was yoked, trial by trial, to the actual delay observed on the corresponding trial of the participant’s own dual-task block. This method could not be used for participants who completed the color-memory-only block before the dual-task block. For these participants, the ISI duration was randomly selected on each trial from the set of actual delays observed across all preceding participants’ dual-task trials. Thus, we equated, to the closest possible approximation, not only the mean delay between color arrays in the two conditions but also the variability in that delay. The results did not differ between the two yoking methods, and the data were combined for analysis.
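The two yoking methods can be summarized in a short sketch. The function and parameter names are hypothetical, the delay values are invented for illustration, and sampling with replacement from the pooled delays is an assumption; the text states only that the ISI was randomly selected on each trial.

```python
import random


def color_only_isis(n_trials, own_delays=None, pooled_delays=None, rng=None):
    """Return one ISI (ms) per trial of the color-memory-only block.

    own_delays: delays from the participant's own dual-task block, used
        trial by trial when that block was completed first.
    pooled_delays: delays from all preceding participants' dual-task
        trials, sampled at random otherwise (with replacement, assumed).
    """
    if own_delays is not None:
        if len(own_delays) < n_trials:
            raise ValueError("Need one observed delay per trial.")
        return list(own_delays[:n_trials])
    if not pooled_delays:
        raise ValueError("No delays available to sample from.")
    rng = rng or random.Random()
    return [rng.choice(pooled_delays) for _ in range(n_trials)]


# Hypothetical delays in ms.
own_example = [1800, 1950, 1900]
pool_example = [1700, 1882, 2100, 1990]

yoked_example = color_only_isis(3, own_delays=own_example)
sampled_example = color_only_isis(5, pooled_delays=pool_example,
                                  rng=random.Random(0))
print(yoked_example, sampled_example)
```

Either branch draws the ISIs from delays actually observed in the dual-task condition, so the mean and the variability of the retention interval are approximately matched across conditions.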

Data Analysis

Eyetracking data were analyzed with respect to the scoring regions described in Experiment 2. A total of 19.5% of trials was eliminated for the reasons described in Experiment 1.

Results

Performance in the gaze correction and color memory tasks is reported in Table 1.

Table 1.

Experiment 3: Gaze correction and color memory performance under dual-task and single-task conditions.

| Measure / Condition | Dual Task | Gaze Correction Only | Color Memory Only |
|---|---|---|---|
| Gaze Correction | | | |
| Accuracy (% correct)* | 80.8 | 90.2 | -- |
| Latency (ms)* | 228 | 203 | -- |
| Color Memory | | | |
| Accuracy (% correct)** | 70.7 | -- | 77.2 |

Note. * = single-task/dual-task contrast p < .05; ** = single-task/dual-task contrast p < .005.

Gaze correction performance

The central question was whether gaze correction performance on rotation trials would be impaired in the dual-task condition compared with the gaze-correction-only condition. Gaze correction performance was significantly impaired by the color memory task. Gaze correction accuracy was reliably lower in the dual-task condition (80.8%) than in the gaze-correction-only condition (90.2%), t(11) = 2.74, p < .05. And gaze correction latency was reliably longer in the dual-task condition (228 ms) than in the gaze-correction-only condition (203 ms), t(11) = 2.33, p < .05. Thus, we can infer that gaze correction depends directly on the VSTM system.

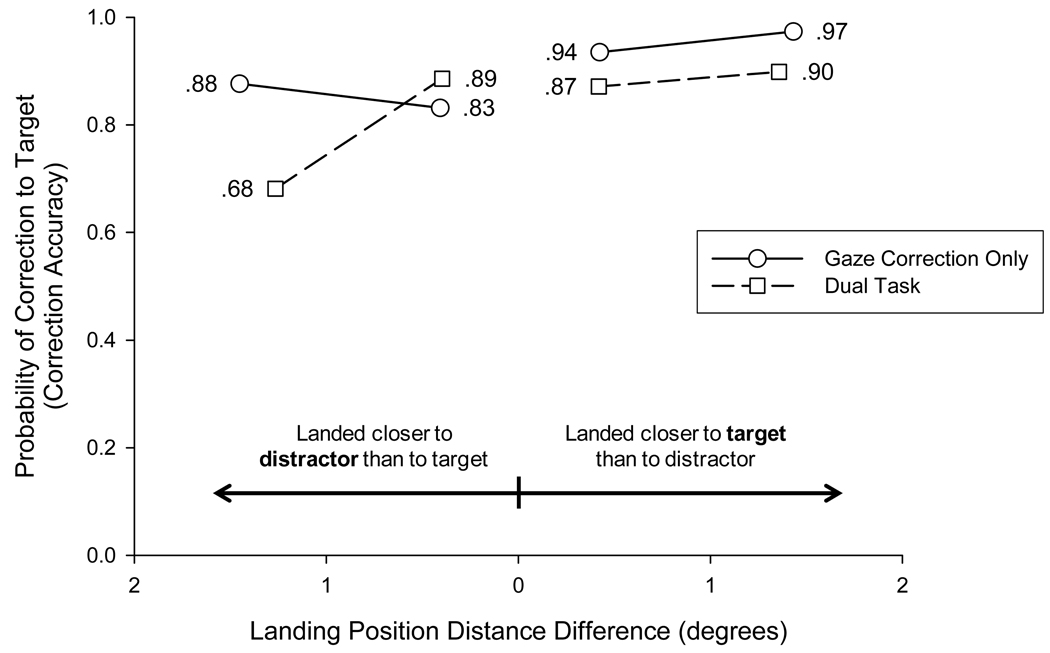

In addition to examining the effect of a VSTM load on gaze correction, we again examined the effect of target distance from the landing position of the initial saccade. For the gaze-correction-only condition, the probability of accurate correction decreased with increasing distance (rpb = −.12, p < .05), and saccades that landed closer to the target than to the distractor were more likely to be corrected to the target (95.4%) than saccades that landed closer to the distractor than to the target (85.8%), t(11) = 5.35, p < .001. Similar results were obtained in the dual-task condition, though the effects did not reach statistical significance. The correlation between distance and correction accuracy approached reliability (rpb = −.11, p = .07), and saccades that landed closer to the target than to the distractor were more likely to be corrected to the target (85.4%) than saccades that landed closer to the distractor than to the target (75.5%), t(11) = 2.07, p = .06. Note, however, that even when the eyes landed closer to the distractor than to the target, the rate of accurate correction to the target was still reliably higher than chance correction of 50% in both the gaze-correction-only condition [85.8%, t(11) = 13.8, p < .001] and the dual-task condition [75.5%, t(11) = 5.97, p < .001], indicating that memory was the primary factor determining selection of the goal of the corrective saccade.

For each trial, we computed the distance difference score by subtracting the distance of the landing position to the closer of the two objects from the distance of the landing position to the farther of the two objects. Figure 8 shows gaze correction accuracy as a function of the magnitude of this difference, with trials on which the eyes landed closer to distractor than to target plotted on the left and trials on which the eyes landed closer to target than to distractor plotted on the right. The overall pattern of data was similar to that found in Experiment 2 (see Figure 6). In addition, distance had a larger effect in the dual-task condition than in the gaze-correction-only condition, with correction accuracy falling considerably (to 68% correct) in the dual-task condition when the eyes landed relatively close to the distractor and far from the target. [Even in this case, the rate of accurate correction to the target (68%) was marginally higher than chance, t(11) = 2.20, p = .05.] These data suggest that nonmemorial distance information might play a larger role in the selection of the goal of the corrective saccade when VSTM information is degraded due to the dual-task interference.

Figure 8.

Gaze correction accuracy for full-array, rotation trials in Experiment 3 as a function of the difference in distance of the target and distractor from the landing position of the initial saccade. For each type of trial, the distance difference data were split into halves. Mean distance difference in each half is plotted against mean gaze correction accuracy in that half. Data are plotted separately for the gaze-correction-only and dual-task conditions.

Color memory performance

The gaze correction task produced reciprocal interference with the color memory task. When including both rotation and no-rotation trials of the dual-task condition, color change detection accuracy for the dual-task condition (70.7%) was reliably lower than for the color-memory-only condition (77.2%), t(11) = 3.58, p < .005.

A comparison of color change detection in the rotation and no-rotation trials of the dual-task condition is potentially informative of the locus of VSTM interference. Both rotation and no-rotation trials would have led to the encoding of saccade target information into VSTM prior to the saccade (because the need for correction became evident only after the saccade was completed). However, only in the rotation condition did participants actually need to use VSTM to correct gaze on most trials. There was no accuracy difference for color change detection between the rotation (70.6%) and no-rotation (70.8%) trials of the dual-task condition, t(11) = .04, p = .97. And both were reliably worse than change detection in the color-memory-only condition (rotation versus color-memory-only, t(11) = 2.27, p < .05; no-rotation versus color-memory-only, t(11) = 2.54, p < .05). Although preliminary, this result suggests that interference is first introduced at the stage of encoding saccade target information into VSTM.

Overall magnitude of interference

Interference in the dual-task condition was observed on both the color memory task and the gaze correction task. Given that the gaze correction task required, at minimum, the encoding and maintenance of one object across the saccade (the saccade target), it is important to demonstrate that there was at least one object’s worth of interference in the dual-task condition. For color memory, we calculated Cowan’s K [(hit rate – false alarm rate)×set size], which provides an estimate of the number of objects retained in memory (Cowan et al., 2005). In the color-memory-only condition, K was 2.73. In the dual-task condition, K dropped to 1.96. Thus, there was a mean decrement of .77 object for color memory in the dual-task condition. This effect constitutes only one component of interference, however, because gaze correction accuracy also was impaired in the dual-task condition. It is difficult to estimate the latter interference in terms of the number of objects retained. However, the numerical magnitude of gaze correction interference (9.4%) was larger than the magnitude of color memory interference (6.5%). Thus, we can conclude, conservatively, that the dual-task condition introduced approximately one object’s worth of interference, consistent with the need to encode the saccade target into VSTM for accurate gaze correction.
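The capacity estimate and the interference magnitudes above can be reproduced with a few lines of arithmetic. The formula and the reported values (K of 2.73 and 1.96, set size of five, and the accuracy percentages) come from the text; the function name and the hit and false alarm rates in the usage example are hypothetical, because the text does not report those rates.

```python
def cowan_k(hit_rate, false_alarm_rate, set_size):
    """Cowan's K: estimated number of items held in memory."""
    return (hit_rate - false_alarm_rate) * set_size


SET_SIZE = 5  # five color patches per memory array

# Hypothetical rates, for illustration only.
k_example = cowan_k(hit_rate=0.8, false_alarm_rate=0.2, set_size=SET_SIZE)

# Values reported in the text.
k_color_only = 2.73
k_dual_task = 1.96
k_decrement = k_color_only - k_dual_task          # about .77 object

gaze_interference = 90.2 - 80.8                   # percentage points
color_interference = 77.2 - 70.7                  # percentage points

print(f"K (hypothetical rates) = {k_example:.2f}")
print(f"K decrement = {k_decrement:.2f}")
print(f"gaze: {gaze_interference:.1f}, color: {color_interference:.1f}")
```

Dividing a reported K by the set size recovers the corresponding difference between hit rate and false alarm rate (for example, 2.73 / 5 = .546 in the color-memory-only condition).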

Discussion

Performance on both the gaze-correction and color-memory tasks was impaired when they were performed concurrently. This is the strongest evidence to date that memory across saccades depends on the same memory system engaged by conventional VSTM tasks. Whereas prior studies have shown that VSTM and transsaccadic memory have similar representational properties (for a review, see Irwin, 1992b), the present experiment is the first to demonstrate that VSTM actually plays a functional role in gaze control.

We conducted an additional dual-task experiment (N = 12) to ensure that the interference observed in Experiment 3 was the result of competition for VSTM resources rather than a general consequence of performing two tasks concurrently. The color-memory task was replaced by a verbal short-term memory task. In the dual-task condition, participants heard five consonants before the gaze correction task. (Letters were selected randomly without replacement from the set of English consonants; stimuli were digitized recordings of a female voice speaking each letter; letters were presented at a rate of 700 ms/letter.) After gaze was corrected, a single consonant was played, and participants reported whether it had or had not been present in the original set (mean accuracy was 87.9%). The gaze-correction-only condition was the same, except participants were instructed to ignore the initial set of letters, and verbal memory was not tested at the end of the trial. We did not conduct a verbal-memory-only condition, because we were specifically interested in gaze correction interference. In all other respects, however, the method was identical to that in Experiment 3.

The verbal task did not require VSTM resources, and therefore it should not have interfered with gaze correction. Indeed, the addition of the verbal memory task did not significantly impair gaze correction accuracy or latency. Mean correction accuracy was 87.4% in the dual-task condition and 84.3% in the gaze-correction-only condition, t(11) = 1.67, p = .12. Mean gaze correction latency was 204 ms in the dual-task condition and 208 ms in the gaze-correction-only condition, t(11) = .41, p = .69.

Thus, interference with gaze correction was specific to a secondary task (color change detection) that competed with gaze correction for VSTM resources.

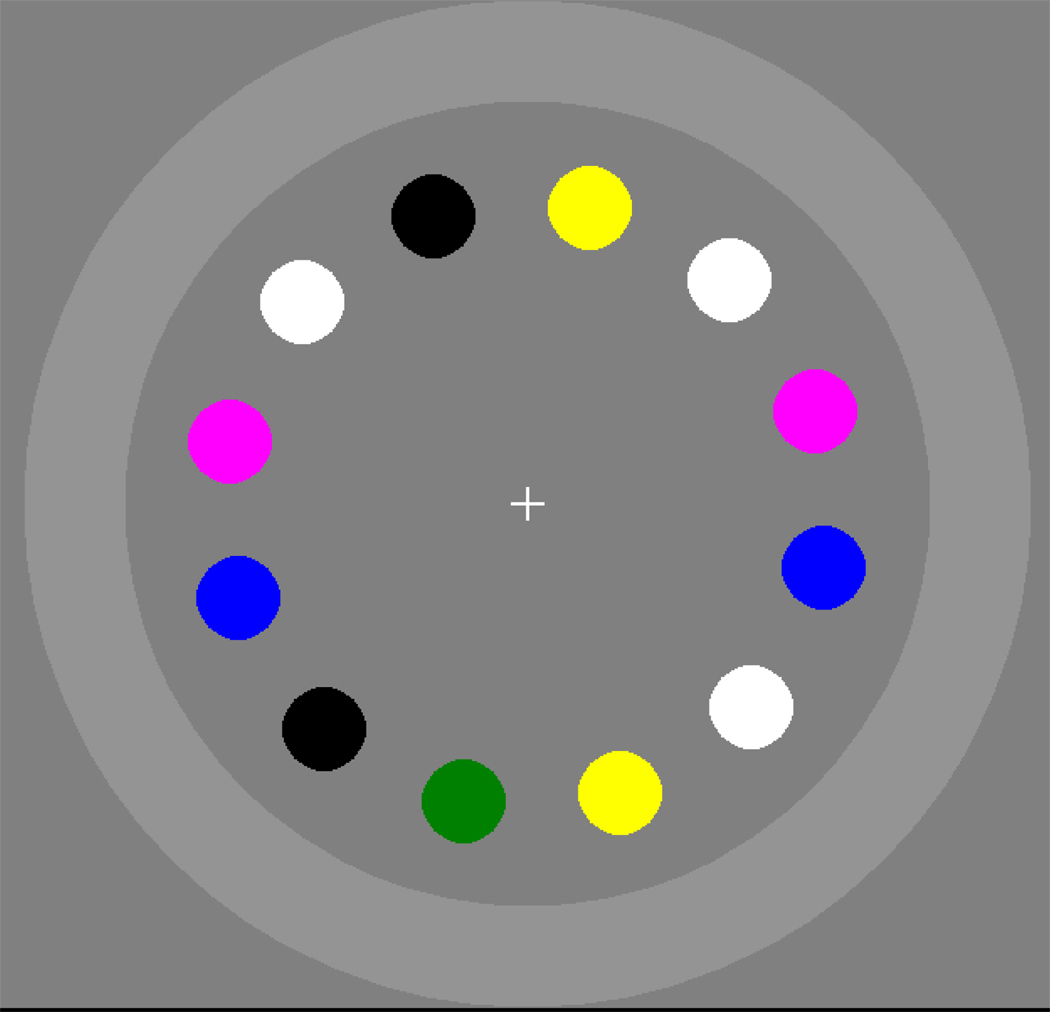

Experiment 4

The speed of VSTM-based correction suggests that correction to the remembered saccade target might be a largely automatized skill, made efficient by the use of VSTM-based correction thousands of times each day. Experiment 4 examined whether gaze correction requires awareness of rotation and whether participants can voluntarily control VSTM-based corrective saccades. The basic method of Experiment 1 was used, except that an outer ring was added to the array (Figure 9). Participants were instructed that if they noticed that the array rotated, they should immediately shift their eyes to the outer ring without looking at any of the objects in the array. We predicted that gaze corrections would occur whether or not the participants were aware that the array had rotated, and that the participants would be unable to suppress corrections even when they were aware of the rotation.

Figure 9.

Object array illustrating the outer ring in Experiment 4.

Method

Participants

A new group of 12 University of Iowa students participated for course credit. Each reported normal, uncorrected vision.

Stimuli, Apparatus, and Procedure

The stimuli, apparatus, and procedure were identical to those of Experiment 1, with the following exceptions.

A continuously visible, light-gray ring was added to the stimulus images (Figure 9). The width of the ring was 1.9°, and the inner contour of the ring was 7.7° from central fixation. In addition, the initial object array was offset randomly (between 0 and 29°) from the clock positions to minimize the possible strategy of detecting rotations by encoding the array configuration categorically. Participants were told that the array would rotate during their eye movement to the target object on some trials. They were instructed that if they noticed that the array rotated, they should direct gaze immediately to the outer ring without fixating any of the objects. If they did not notice that the array rotated, they should simply direct gaze to the target object. Half of the 12 participants were given an additional instruction. (It became evident that this additional task would be informative only after having analyzed the data from the first six participants.) After the completion of each trial on which the outer ring was fixated, the participants were asked to report, by making a button press, whether they had or had not fixated the target object. This allowed us to gain preliminary evidence about whether participants were aware of their corrective saccades to the target object. Gaze correction performance did not differ between the two subgroups of participants.

The single-object control condition was eliminated. Participants completed 288 full-array trials (96 rotation trials) in a single session. Trial order was determined randomly. The entire session lasted approximately 45 minutes.

Data Analysis

A total of 15.7% of the data was eliminated for the reasons described in Experiment 1.

Results and Discussion

The critical trials were rotation trials on which the eyes landed between target and distractor. Participants fixated the outer ring, indicating correct detection of rotation, on 77% of the rotation trials. On no-rotation trials, participants fixated the outer ring on only 2% of trials, demonstrating that they were moderately sensitive to the rotations in the rotation condition. Because the rotation occurred during the saccade, when vision is suppressed, detection of rotation could not have been based on direct perception of displacement. Thus, these data indicate participants can remember information about the pre-saccade array (such as the locations of array objects) sufficient to detect that the array has rotated after the saccade.

We first examined the 23% of rotation trials on which the outer ring was not fixated; on these trials, we assume that the participant did not detect the rotation. Gaze was corrected to the target object first on 96% of these trials, and mean correction latency was 228 ms (SE = 18 ms). These corrections were just as fast as the memory-guided gaze corrections in Experiment 1. Thus, efficient gaze correction does not require explicit awareness of the rotation.

On the other 77% of rotation trials, participants detected the rotation and fixated the outer ring. Despite the instruction to avoid fixating any of the objects, participants made a corrective saccade to the target before fixating the ring on 56% of these trials. The distractor was fixated before the ring on only 4% of the detected rotation trials. The mean latency of corrections to the target was 225 ms (SE = 25 ms), which was just as efficient as the corrections observed in Experiment 1. Thus, gaze was corrected to the target object efficiently on a substantial proportion of trials despite the task instruction to shift gaze immediately to the outer ring. On the remaining 40% of these trials, participants fixated the outer ring without fixating either the target or distractor, as instructed, but these saccades were quite inefficient. Mean saccade latency to the ring on these trials was 483 ms (SE = 51 ms), which was more than twice as long as the mean latency for corrective saccades. A plausible explanation for these long saccade latencies is that participants programmed a corrective saccade to the target but inhibited it before programming a saccade to the outer ring.

Some caution is always necessary when trying to determine whether an observer was aware of a stimulus or behavior, and proving a lack of awareness is at best an uphill battle (see Greenwald & Draine, 1998), especially because awareness is likely to be variable rather than categorical in most cases (for an exception, see Sergent & Dehaene, 2004). In the present study, it is likely that the failure of the participants to make a saccade to the outer ring on approximately ¼ of the rotation trials occurred because the perceptual evidence for a rotation failed to exceed some threshold on these trials and not because they were completely unaware of the rotation on these trials (and fully aware of the rotation when they shifted to the outer ring). Thus, the present results do not indicate that corrective saccades occur in the complete absence of awareness of rotation. Rather, they demonstrate that corrective saccades can occur even when the level of awareness is so low that an observer reports being unaware of the rotation.

To assess awareness of gaze correction, six of the participants in this experiment were required to report whether they had or had not fixated the target object after each trial on which they fixated the outer ring. For each participant, trials were divided by whether the participant had or had not actually fixated the target object. When the target was fixated, participants correctly reported target fixation on 87% of trials. When the target was not fixated, participants correctly reported that they did not fixate the target on only 29% of trials. Thus, participants were biased to report that they fixated the target, and the most common error was reporting target fixation when the target had not been fixated. This effect could derive from the same source as the long saccade latencies to the ring discussed above. If, upon landing, attention was covertly shifted to the target object and a saccade to the target inhibited, participants would have been quite likely to confuse attending to the target with fixating the target (Deubel, Irwin, & Schneider, 1999), generating a false report of target fixation. Collapsing across trial type, overall awareness of correction was quite poor, with a mean accuracy of 65% correct (chance = 50%). Accounting for guessing, participants had information sufficient to correctly report target fixation on approximately 30% of trials.
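The text does not state how the guessing adjustment was computed. As an assumption, the sketch below applies the standard high-threshold correction for guessing, (accuracy − chance) / (1 − chance), to the reported 65% accuracy and 50% chance level. The function name is hypothetical.

```python
def correct_for_guessing(accuracy, chance):
    """Proportion of trials answered from real information, assuming the
    remaining trials are pure guesses that succeed at the chance rate.
    (Assumed formula; the source does not specify its method.)"""
    return (accuracy - chance) / (1.0 - chance)


# Values reported in the text: 65% overall accuracy, 50% chance.
informed = correct_for_guessing(accuracy=0.65, chance=0.50)
print(f"{informed:.0%}")  # prints "30%"
```

Under this assumption, the result matches the approximately 30% of trials reported above.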

In summary, VSTM-based corrective saccades did not depend on having explicitly detected the rotation; corrections were automatic in the sense that they were often generated despite the instruction to shift gaze immediately to the outer ring; and participants often could not report whether they had or had not made a correction to the target. These results, together with evidence that the use of VSTM to correct gaze is highly efficient, suggest that VSTM-based gaze correction is a largely automatized skill. In addition, these results constitute some of the first evidence that VSTM, typically equated with conscious cognition, can operate implicitly to support automated visual behavior.

General Discussion

In the present study, we sought to understand the functional role of VSTM in real-world cognition and behavior. We tested the hypothesis that VSTM plays a central role in memory across saccades, in the computation of object correspondence, and in successful gaze correction following an inaccurate eye movement. The results of four experiments demonstrate that (a) memory can be used to correct saccade errors; (b) these memory-guided corrections are nearly as fast and accurate as stimulus-guided corrections; (c) VSTM is the memory system that underlies these corrections; (d) memory-guided gaze corrections are automatic rather than being a strategic response to task demands; and (e) these corrections typically occur with minimal awareness. The accuracy, speed, and automaticity of VSTM-based gaze correction are characteristics one would expect of a system that has been optimized, through extensive experience, to ensure that the eyes are efficiently directed to goal-relevant objects in the world.

The Function of VSTM

If gaze errors could not be corrected quickly, visually guided behavior would be significantly slowed. This can be observed directly on the occasional trials in Experiment 2 when the corrective saccade was made first to a distractor instead of to the target. On these trials, fixation of the target object was delayed by an average of 482 ms compared with trials on which gaze was first corrected to the target. Such a delay would significantly impair performance of real-world tasks that require rapid acquisition of perceptual information. For example, in a basketball game in which one’s own team wears yellow and the other red, if gaze was inaccurately corrected to a red-shirted player when attempting to make a pass to a yellow-shirted player, a delay of half a second could be the difference between a successful pass and a turnover. In driving, the delay introduced by inaccurate correction to a blue car rather than to a red car could be disastrous if the red car was the one being driven erratically and dangerously. It is also important to note that many everyday tasks—such as making a meal or giving directions from a map—unfold over the course of minutes and require literally hundreds of saccades to goal-relevant objects (Hayhoe, 2000; Land et al., 1999). Given that saccade errors occur on approximately 30–40% of trials under the simplest conditions, the accumulated effect of inaccurate gaze correction could be very large in day-to-day human activities. Thus, VSTM-based gaze correction is likely to be an important factor governing efficient visual behavior.

Although the present study demonstrates that VSTM is used to perform gaze correction, VSTM across saccades may have additional functions. Irwin, McConkie and colleagues have argued that VSTM for the saccade target object supports the phenomenon of visual stability across saccades (i.e., that the world does not appear to change across an eye movement despite changes in retinal projection) (Currie et al., 2000; McConkie & Currie, 1996). In this view, features of the saccade target object are stored across the saccade. If an object matching the remembered target is found close to the landing position, stability is maintained. If not, one becomes consciously aware of a discrepancy between pre- and post-saccade visual experience. Although transsaccadic VSTM might certainly support perceptual stability, we observed efficient gaze correction independently of whether participants perceived stability or change. In Experiment 4, gaze was corrected quickly to the target object whether or not the participant was aware of the change introduced by rotation. This suggests a more fundamental role for VSTM across saccades: establishing correspondence between the saccade target object visible before and after the saccade.