Abstract

A variety of studies have demonstrated that organizing stimuli into categories can affect the way the stimuli are perceived. We explore the influence of categories on perception through one such phenomenon, the perceptual magnet effect, in which discriminability between vowels is reduced near prototypical vowel sounds. We present a Bayesian model to explain why this reduced discriminability might occur: it arises as a consequence of optimally solving the statistical problem of perception in noise. In the optimal solution to this problem, listeners’ perception is biased toward phonetic category means because they use knowledge of these categories to guide their inferences about speakers’ target productions. Simulations show that model predictions closely correspond to previously published human data, and novel experimental results provide evidence for the predicted link between perceptual warping and noise. The model unifies several previous accounts of the perceptual magnet effect and provides a framework for exploring categorical effects in other domains.

Keywords: perceptual magnet effect, categorical perception, speech perception, Bayesian inference, rational analysis

Introduction

The influence of categories on perception is well known in domains ranging from speech sounds to artificial categories of objects. Liberman, Harris, Hoffman, and Griffith (1957) first described categorical perception of speech sounds, noting that listeners’ perception conforms to relatively sharp identification boundaries between categories of stop consonants and that whereas between-category discrimination of these sounds is nearly perfect, within-category discrimination is little better than chance. Similar patterns have been observed in the perception of colors (Davidoff, Davies, & Roberson, 1999), facial expressions (Etcoff & Magee, 1992), and familiar faces (Beale & Keil, 1995), as well as the representation of objects belonging to artificial categories that are learned over the course of an experiment (Goldstone, 1994; Goldstone, Lippa, & Shiffrin, 2001). All of these categorical effects are characterized by better discrimination of between-category contrasts than within-category contrasts, though the magnitude of the effect varies between domains.

In this paper, we develop a computational model of the influence of categories on perception through a detailed investigation of one such phenomenon, the perceptual magnet effect (Kuhl, 1991), which has been described primarily in vowels. The perceptual magnet effect involves reduced discriminability of speech sounds near phonetic category prototypes. For several reasons, speech sounds, particularly vowels, provide an excellent starting point for assessing a model of the influence of categories on perception. Vowels are naturally occurring, highly familiar stimuli that all listeners have categorized. As will be discussed later, a precise two-dimensional psychophysical map of vowel space can be provided, and using well-established techniques, discrimination of pairs of speech sounds can be systematically investigated under well-defined conditions so that perceptual maps of vowel space can be constructed. By comparing perceptual and psychophysical maps, we can measure the extent and nature of perceptual warping and assess such warping with respect to known categories. In addition, the perceptual magnet effect shows several qualitative similarities to categorical effects in perceptual domains outside of language, as vowel perception is continuous rather than sharply categorical (Fry, Abramson, Eimas, & Liberman, 1962) and the degree of category influence can vary substantially across testing conditions (Gerrits & Schouten, 2004). Finally, the perceptual magnet effect has been the object of extensive empirical and computational research (e.g. Grieser & Kuhl, 1989; Kuhl, 1991; Iverson & Kuhl, 1995; Lacerda, 1995; Guenther & Gjaja, 1996). This previous research has produced a large body of data that can be used to provide a quantitative evaluation of our approach, as well as several alternative explanations against which it can be compared.

We take a novel approach to modeling the perceptual magnet effect, complementary to previous models that have explored how the effect might be algorithmically and neurally implemented. In the tradition of rational analysis proposed by Marr (1982) and J. R. Anderson (1990), we consider the abstract computational problem posed by speech perception and show that the perceptual magnet effect emerges as part of the optimal solution to this problem. Specifically, we assume that listeners are optimally solving the problem of perceiving speech sounds in the presence of noise. In this analysis, the listener’s goal is not only to ascertain category membership but also to extract phonetic detail in order to reconstruct coarticulatory and non-linguistic information. This is a difficult problem for listeners because they cannot hear the speaker’s target production directly. Instead, they hear speech sounds that are similar to the speaker’s target production but that have been altered through articulatory, acoustic, and perceptual noise. We formalize this problem using Bayesian statistics and show that the optimal solution to this problem produces the perceptual magnet effect.

The resulting rational model formalizes ideas that have been proposed in previous explanations of the perceptual magnet effect but goes beyond these previous proposals to explain why the effect should result from optimal behavior. It also serves as a basis for further empirical research, making predictions about the types of variability that should be seen in the perceptual magnet effect and in other categorical effects more generally. Several of these predictions are in line with previous literature, and one additional prediction is borne out in our own experimental data. Our model parallels models that have been used to describe categorical effects in other areas of cognition (Huttenlocher, Hedges, & Vevea, 2000; Körding & Wolpert, 2004; Roberson, Damjanovic, & Pilling, 2007), suggesting that its principles are broadly applicable to these areas as well.

The paper is organized as follows. We begin with an overview of categorical effects across several domains, then focus more closely on evidence for the perceptual magnet effect and explanations that have been proposed to account for this evidence. The ensuing section gives an intuitive overview of our model, followed by a more formal introduction to its mathematics. We present simulations comparing the model to published empirical data and generating novel empirical predictions. An experiment is presented to test the predicted effects of speech signal noise. Finally, we discuss this model in relation to previous models, revisit its assumptions, and suggest directions for future research.

Categorical Effects

Categorical effects are widespread in cognition and perception (Harnad, 1987), and these effects show qualitative similarities across domains. This section provides an overview of basic findings and key issues concerning categorical effects in the perception of speech sounds, colors, faces, and artificial laboratory stimuli.

Speech Sounds

The classic demonstration of categorical perception comes from a study by Liberman et al. (1957), who measured subjects’ perception of a synthetic speech sound continuum that ranged from /b/ to /d/ to /g/, spanning three phonetic categories. Results showed sharp transitions between the three categories in an identification task and corresponding peaks in discrimination at category boundaries, indicating that subjects were discriminating stimuli primarily based on their category membership. The authors compared the data to a model in which listeners extracted only category information, and no acoustic information, when perceiving a speech sound. Subject performance exceeded that of the model consistently but only by a small percentage: discrimination was little better than could be obtained through identification alone. These results were later replicated using the voicing dimension in stop consonant perception, with both word-initial and word-medial cues causing discrimination peaks at the identification boundaries (Liberman, Harris, Kinney, & Lane, 1961; Liberman, Harris, Eimas, Lisker, & Bastian, 1961). Other classes of consonants such as fricatives (Fujisaki & Kawashima, 1969), liquids (Miyawaki et al., 1975), and nasals (J. L. Miller & Eimas, 1977) show evidence of categorical perception as well. In all these studies, listeners show some discrimination of within-category contrasts, and this within-category discrimination is especially evident when more sensitive measures such as reaction times are used (e.g. Pisoni & Tash, 1974). Nevertheless, within-category discrimination is consistently poorer than between-category discrimination across a wide variety of consonant contrasts.
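To make the label-only baseline concrete, the following sketch computes the discrimination accuracy expected from a listener who retains nothing but category labels. It is a two-category simplification written for illustration, not the three-category computation reported in the original study. The ABX trial format, the decision rule (guess whenever A and B receive the same label), and the function name `label_only_abx_accuracy` are assumptions of this sketch.

```python
from itertools import product


def label_only_abx_accuracy(p_a, p_b):
    """Predicted ABX accuracy for a listener who keeps only category labels.

    p_a and p_b are the probabilities that stimuli A and B are labeled as
    category 1 (hypothetical identification data). The listener labels A, B,
    and X independently, answers "A" if X's label matches A's label, answers
    "B" if it matches B's label, and guesses if A and B share a label.
    """
    correct = 0.0
    for x_is_a in (True, False):          # X is A or B with equal probability
        p_x = p_a if x_is_a else p_b
        for la, lb, lx in product((1, 2), repeat=3):
            prob = 0.5                     # probability of this choice of X
            prob *= p_a if la == 1 else 1.0 - p_a
            prob *= p_b if lb == 1 else 1.0 - p_b
            prob *= p_x if lx == 1 else 1.0 - p_x
            if la == lb:
                correct += 0.5 * prob      # labels uninformative: guess
            elif (lx == la) == x_is_a:
                correct += prob            # label-based response is correct
    return correct


def closed_form(p_a, p_b):
    """Closed form of the same prediction: 0.5 * (1 + (p_a - p_b)^2)."""
    return 0.5 * (1.0 + (p_a - p_b) ** 2)


if __name__ == "__main__":
    # Illustrative identification probabilities, not data from any study.
    for p_a, p_b in [(0.95, 0.90), (0.90, 0.20), (0.20, 0.05)]:
        print(p_a, p_b, round(label_only_abx_accuracy(p_a, p_b), 3))
```

Under these assumptions, predicted accuracy depends only on how differently the two stimuli are labeled, so a pair that is identified identically is discriminated at chance. Human performance that reliably exceeds this baseline indicates that some acoustic detail is retained beyond the label.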

A good deal of research has investigated the degree to which categorical perception of consonants results from innate biases or arises through category learning. Evidence supports a role for both factors. Studies with young infants show that discrimination peaks are already present in the first few months of life (Eimas, Siqueland, Jusczyk, & Vigorito, 1971; Eimas, 1974, 1975), suggesting a role for innate biases. These early patterns may be tied to general patterns of auditory sensitivity, as non-human animals show discrimination peaks at category boundaries along the dimensions of voicing (Kuhl, 1981; Kuhl & Padden, 1982) and place (Morse & Snowdon, 1975; Kuhl & Padden, 1983), and humans show similar boundaries in some non-speech stimuli (J. D. Miller, Wier, Pastore, Kelly, & Dooling, 1976; Pisoni, 1977). Studies have also shown cross-linguistic differences in perception, which indicate that perceptual patterns are influenced by phonetic category learning (Abramson & Lisker, 1970; Miyawaki et al., 1975). The interaction between these two factors remains a subject of current investigation (e.g. Holt, Lotto, & Diehl, 2004).

The role of phonetic categories in vowel perception is more controversial: vowel perception is continuous rather than strictly categorical, without obvious discrimination peaks near category boundaries (Fry et al., 1962). However, there has been some evidence for category boundary effects (Beddor & Strange, 1982) as well as reduced discriminability of vowels specifically near the centers of phonetic categories (Kuhl, 1991), and we will return to this debate in more detail in the next section.

Colors

It has been argued that color categories are organized around universal focal colors (Berlin & Kay, 1969; Rosch Heider, 1972; Rosch Heider & Oliver, 1972), and these universal tendencies have been supported through more recent statistical modeling results (Kay & Regier, 2007; Regier, Kay, & Khetarpal, 2007). However, color terms show substantial cross-linguistic variation (Berlin & Kay, 1969), and this has led researchers to question whether color categories influence color perception. Experiments have revealed discrimination peaks corresponding to language-specific category boundaries for speakers of English, Russian, Berinmo, and Himba, and perceivers whose native language does not contain a corresponding category boundary have failed to show these discrimination peaks (Winawer et al., 2007; Davidoff et al., 1999; Roberson, Davies, & Davidoff, 2000; Roberson, Davidoff, Davies, & Shapiro, 2005). These results indicate that color categories do influence performance in color discrimination tasks.

More recent research in this domain has asked whether these categorical effects are purely perceptual or whether they are mediated by the active use of linguistic codes in perceptual tasks. Roberson and Davidoff (2000) demonstrated that linguistic interference tasks can eliminate categorical effects in color perception (see also Kay & Kempton, 1984). Investigations have shown activation of the same neural areas in naming tasks as in discrimination tasks (Tan et al., 2008) as well as left-lateralization of categorical color perception in adults (Gilbert, Regier, Kay, & Ivry, 2006). These results suggest a direct role for linguistic codes in discrimination performance, indicating that categorical effects in color perception are mediated largely by language. Nevertheless, categorical effects may play a large role in everyday color perception. Linguistic codes appear to be used in a wide variety of perceptual tasks, including those that do not require memory encoding (Witthoft et al., 2003), and verbal interference tasks fail to completely wipe out verbal coding when the type of interference is unpredictable (Pilling, Wiggett, Özgen, & Davies, 2003).

Faces

Categorical effects in face perception were first shown for facial expressions of emotion in stimuli constructed from line drawings (Etcoff & Magee, 1992) and photograph-quality stimuli (Calder, Young, Perrett, Etcoff, & Rowland, 1996; Young et al., 1997; Gelder, Teunisse, & Benson, 1997). Stimuli for these experiments were drawn from morphed continua in which the endpoints were prototypical facial expressions (e.g. happiness, fear, anger). With few exceptions, results showed discrimination peaks at the same locations as identification boundaries between these prototypical expressions. Evidence for categorical effects has been found in seven-month-old infants (Kotsoni, Haan, & Johnson, 2001), nine-year-old children (Gelder et al., 1997), and older individuals (Kiffel, Campanella, & Bruyer, 2005), indicating that category structure is similar across different age ranges. However, these categories can be affected by early experience as well. Pollak and Kistler (2002) presented data from abused children showing that their category boundaries in continua ranging from fearful to angry and from sad to angry were shifted such that they interpreted a large portion of these continua as angry; discrimination peaks were shifted together with these identification boundaries.

In addition to categorical perception of facial expressions, discrimination patterns show evidence of categorical perception of facial identity, where each category corresponds to a different identity. Beale and Keil (1995) found discrimination peaks along morphed continua between faces of famous individuals, and these results have been replicated with several different stimulus continua constructed from familiar faces (Stevenage, 1998; Campanella, Hanoteau, Seron, Joassin, & Bruyer, 2003; Rotshtein, Henson, Treves, Driver, & Dolan, 2005; Angeli, Davidoff, & Valentine, 2008). The categorical effects are stronger for familiar faces than for unfamiliar faces (Beale & Keil, 1995; Angeli et al., 2008), but categorical effects have been demonstrated for continua involving previously unfamiliar faces as well (Stevenage, 1998; Levin & Beale, 2000). The strength of these effects for unfamiliar faces may derive from a combination of learning during the course of the experiment (Viviani, Binda, & Borsato, 2007), the use of labels during training (Kikutani, Roberson, & Hanley, 2008), and the inherent distinctiveness of endpoint stimuli in the continua (Campanella et al., 2003; Angeli et al., 2008).

Learning Artificial Categories

Several studies have demonstrated categorical effects that derive from categories learned in the laboratory, implying that the formation of novel categories can affect perception in laboratory settings. As proposed by Liberman et al. (1957), this learning component might take two forms: acquired distinctiveness involves enhanced between-category discriminability, whereas acquired equivalence involves reduced within-category discriminability. Evidence for one or both of these processes has been found through categorization training in color perception (Özgen & Davies, 2002) and auditory perception of both speech sounds (Pisoni, Aslin, Perey, & Hennessy, 1982) and white noise (Guenther, Husain, Cohen, & Shinn-Cunningham, 1999). These results extend to stimuli that vary along multiple dimensions as well. Categorizing stimuli along two dimensions can lead to acquired distinctiveness (Goldstone, 1994), and similarity ratings for drawings that differ along several dimensions have shown acquired equivalence in response to categorization training (Livingston, Andrews, & Harnad, 1998). Such effects may arise partly from task-specific strategies but likely involve changes in underlying stimulus representations as well (Goldstone et al., 2001).

Additionally, several studies have demonstrated that categories for experimental stimuli are learned quickly over the course of an experiment even without explicit training. Goldstone (1995) found that implicit shape-based categories influenced subjects’ perception of hues and that these implicit categories changed depending on the set of stimuli presented in the experiment. A similar explanation has been proposed to account for subjects’ categorical treatment of unfamiliar face continua (Levin & Beale, 2000), where learned categories seem to correspond to continuum endpoints. Gureckis and Goldstone (2008) demonstrated that subjects are sensitive to the presence of distinct clusters of stimuli, showing increased discriminability between clusters even when those clusters receive the same label. Furthermore, implicit categories have been used to explain why subjects often bias their perception toward the mean value of a set of stimuli in an experiment. Huttenlocher et al. (2000) argued that subjects form an implicit category that includes the range of stimuli they have seen over the course of an experiment and that they use this implicit category to correct for memory uncertainty when asked to reproduce a stimulus. Under their assumptions, the optimal way to correct for memory uncertainty using this implicit category is to bias all responses toward the mean value of the category, which in this case is the mean value of the set of stimuli. The authors presented a Bayesian analysis to account for bias in visual stimulus reproduction that is nearly identical to the one-category model derived here in the context of speech perception, reflecting the similar structure of the two problems and the generality of the approach.

Summary

The categorical effects in all of these domains are qualitatively similar, with enhanced between-category discriminability and reduced within-category discriminability. Though there is some evidence that innate biases contribute to these perceptual patterns, the patterns can be influenced by learned categories as well, even by implicit categories that arise from specific distributions of exemplars. Despite widespread interest in these phenomena, the reasons and mechanisms behind the connection between categories and perception remain unclear. In the remainder of this paper we address this issue through a detailed exploration of the perceptual magnet effect, which shares many qualitative features with the categorical effects discussed above.

The Perceptual Magnet Effect

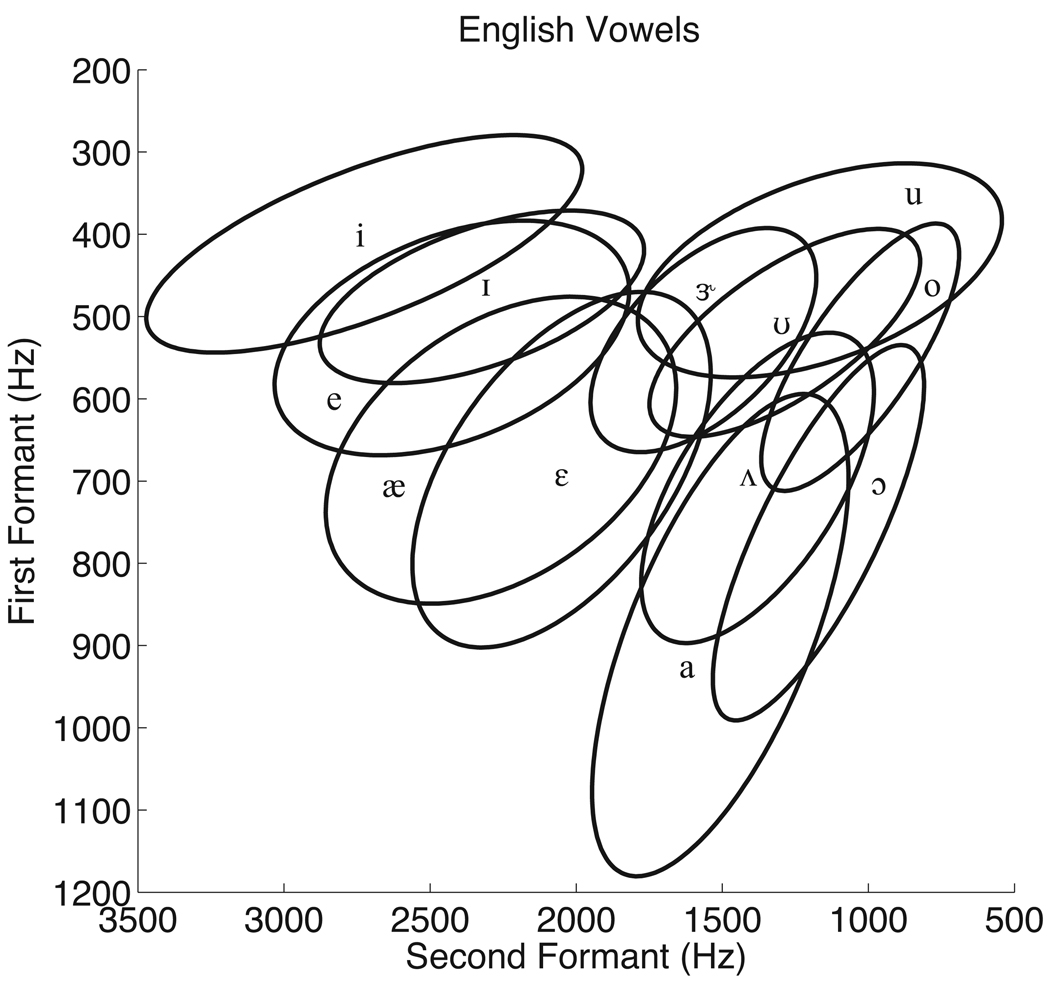

The phenomenon of categorical perception is robust in consonants, but the role of phonetic categories in the perception of vowels has been more controversial. Acoustically, vowels are specified primarily by their first and second formants, F1 and F2. Formants are bands of frequencies in which acoustic energy is concentrated – peaks in the frequency spectrum – as a result of resonances in the vocal tract. F1 is inversely correlated with tongue height, whereas F2 is correlated with the proximity of the most raised portion of the tongue to the front of the mouth. Thus, a front high vowel such as /i/ (as in beet) spoken by a male talker typically has center formant frequencies around 270 Hz (F1) and 2290 Hz (F2), and a back low vowel such as /a/ (as in father) spoken by a male typically has center formant frequencies around 730 Hz and 1090 Hz (Peterson & Barney, 1952). Tokens of vowels are distributed around these central values. A map of vowel space based on data from Hillenbrand, Getty, Clark, and Wheeler (1995) is shown in Figure 1. Though frequencies are typically reported in Hertz, most research on the perceptual magnet effect has used the mel scale to represent psychophysical distance (e.g. Kuhl, 1991). The mel scale can be used to equate distances in psychophysical space because difference limens, the smallest detectable pitch differences, correspond to constant distances along this scale (S. S. Stevens, Volkmann, & Newman, 1937).

Figure 1.

Map of vowel space from Hillenbrand et al.’s (1995) production experiment. Ellipses delimit regions corresponding to approximately 90% of tokens from each vowel category.
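As a rough illustration of the Hertz-to-mel conversion, the sketch below applies one widely used analytic approximation of the mel scale to the formant values quoted above. The particular formula and the function name `hz_to_mel` are assumptions made here for illustration; the studies cited may have used a different conversion.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels.

    Uses a common analytic approximation, 2595 * log10(1 + f / 700).
    This choice of formula is an assumption of this sketch.
    """
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


# Center formant frequencies (F1, F2) in Hz for a male talker, as quoted in
# the text from Peterson and Barney (1952).
formants_hz = {"/i/": (270.0, 2290.0), "/a/": (730.0, 1090.0)}

if __name__ == "__main__":
    for vowel, (f1, f2) in formants_hz.items():
        print(vowel, round(hz_to_mel(f1), 1), round(hz_to_mel(f2), 1))
```

Because the mapping is compressive at high frequencies, a fixed difference in Hertz corresponds to a smaller distance in mels in the F2 range than in the F1 range, which is why distances between vowel stimuli are usually equated in mels rather than in Hertz.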

Early work suggested that vowel discrimination was not affected by native language categories (K. N. Stevens, Liberman, Studdert-Kennedy, & Öhman, 1969). However, later findings have revealed a relationship between phonetic categories and vowel perception. Although within-category discrimination for vowels is better than for consonants, clear peaks in discrimination functions have been found at vowel category boundaries, especially in tasks that place a high memory load on subjects or that interfere with auditory memory (Pisoni, 1975; Repp, Healy, & Crowder, 1979; Beddor & Strange, 1982; Repp & Crowder, 1990). In addition, between-category differences yield larger neural responses as measured by event-related potentials (Näätänen et al., 1997; Winkler et al., 1999). Viewing phonetic discrimination in spatial terms, Kuhl and colleagues have found evidence of shrunken perceptual space specifically near category prototypes, a phenomenon they have called the perceptual magnet effect (Grieser & Kuhl, 1989; Kuhl, 1991; Kuhl, Williams, Lacerda, Stevens, & Lindblom, 1992; Iverson & Kuhl, 1995).

Empirical Evidence

The first evidence for the perceptual magnet effect came from experiments with English-speaking six-month-old infants (Grieser & Kuhl, 1989). Using the conditioned headturn procedure to assess within-category generalization of speech sounds, the authors found that a prototypical /i/ vowel based on mean formant values in Peterson and Barney’s production data was more likely to be generalized to sounds surrounding it than was a non-prototypical /i/ vowel. In addition, they found that infants’ rate of generalization correlated with adult goodness ratings of the stimuli, so stimuli that were judged as the best exemplars of the /i/ category were generalized most often to neighboring stimuli. Kuhl (1991) showed that adults, like infants, can discriminate stimuli near a non-prototype of the /i/ category better than stimuli near the prototype. Kuhl et al. (1992) tested English- and Swedish-learning infants on discrimination near prototypical English /i/ (high, front, unrounded) and Swedish /y/ (high, front, rounded) sounds, again using the conditioned headturn procedure; they found that while English-learning infants generalized the /i/ sounds more than the /y/ sounds, Swedish-learning infants showed the reverse pattern. Based on this evidence, the authors described the perceptual magnet effect as a language-specific shrinking of perceptual space near native language phonetic category prototypes, with prototypes acting as perceptual magnets to exert a pull on neighboring speech sounds (see also Kuhl, 1993). They concluded that these language-specific prototypes are in place in infants as young as six months.

Iverson and Kuhl (1995) used signal detection theory and multidimensional scaling to produce a detailed perceptual map of acoustic space near the prototypical and non-prototypical /i/ vowels used in previous experiments. They tested adults’ discrimination of 13 stimuli along a single vector in F1−F2 space, ranging from F1 of 197 Hz and F2 of 2489 Hz (classified as /i/) to F1 of 429 Hz and F2 of 1925 Hz (classified as /e/, as in bait). In both analyses, they found shrinkage of perceptual space near the ends of the continuum, especially near the /i/ end. They found a peak in discrimination near the center of the continuum between stimulus 6 and stimulus 9. This supported previous analyses, suggesting that perceptual space was shrunk near category centers and expanded near category edges. The effect has since been replicated in the English /i/ category (Sussman & Lauckner-Morano, 1995), and evidence for poor discrimination near category prototypes has been found for the German /i/ category (Diesch, Iverson, Kettermann, & Siebert, 1999). In addition, the effect has been found in the /r/ and /l/ categories in English but not Japanese speakers (Iverson & Kuhl, 1996; Iverson et al., 2003), lending support to the idea of language-specific phonetic category prototypes.

Several studies have found large individual differences between subjects in stimulus goodness ratings and category identification, suggesting that it may be difficult to find vowel tokens that are prototypical across listeners and thus raising methodological questions about experiments that examine the perceptual magnet effect (Lively & Pisoni, 1997; Frieda, Walley, Flege, & Sloane, 1999; Lotto, Kluender, & Holt, 1998). However, data collected by Aaltonen, Eerola, Hellström, Uusipaikka, and Lang (1997) on the /i/−/y/ contrast in Finnish adults showed that discrimination performance was less variable than identification performance, and the authors argued based on these results that discrimination operates at a lower level than overt identification tasks. A more serious challenge has come from studies that question the robustness of the perceptual magnet effect. Lively and Pisoni (1997) found no evidence of a perceptual magnet effect in the English /i/ category, suggesting that listeners’ discrimination patterns are sensitive to methodological details or dialect differences, though the authors could not identify the specific factors responsible for these differences. The effect has also been difficult to isolate in vowels other than /i/: Sussman and Gekas (1997) failed to find an effect in the English /ɪ/ (as in bit) category, and Thyer, Hickson, and Dodd (2000) found the effect in the /i/ category but found the reverse effect in the /ɔ/ (as in bought) category and failed to find any effect in other vowels. While there has been evidence linking changes in vowel perception to differences in interstimulus interval (Pisoni, 1973) and task demands (Gerrits & Schouten, 2004), much of the variability found in vowel perception has not been accounted for.

In summary, vowel perception has been shown to be continuous rather than categorical: listeners can discriminate two vowels that receive the same category label. However, studies have suggested that even in vowels, perceptual space is shrunk near phonetic category centers and expanded near category edges. In addition, studies have shown substantial variability in the perceptual magnet effect. This variability seems to depend on the phonetic category being tested and also on methodological details. Based on the predictions of our rational model, we will argue that some of this variability is attributable to differences in category variance between different phonetic categories and to differences in the amount of noise through which stimuli are heard.

Previous Models

Grieser and Kuhl (1989) originally described the perceptual magnet effect in terms of category prototypes, arguing that phonetic category prototypes exert a pull on nearby speech sounds and thus create an inverse correlation between goodness ratings and discriminability. While this inverse correlation has been examined more closely and used to argue that categorical perception and the perceptual magnet effect are separate phenomena (Iverson & Kuhl, 2000), most computational models of the perceptual magnet effect have assumed that it is a categorical effect, parallel to categorical perception.

Lacerda (1995) began by assuming that the warping of perceptual space emerges as a side-effect of a classification problem: the goal of listeners is to classify speech sounds into phonetic categories. His model assumes that perception has been trained with labeled exemplars or that labels have been learned using other information in the speech signal. In perceiving a new speech sound, listeners retrieve only the information from the speech signal that is helpful in determining the sound’s category, or label, and they categorize and discriminate speech sounds based on this information. Listeners can perceive a contrast only if the two sounds differ in category membership. Implementing this idea in neural models, Damper and Harnad (2000) showed that when trained on two endpoint stimuli, neural networks will treat a voice onset time (VOT) continuum categorically. One limitation of the models proposed by Lacerda (1995) and Damper and Harnad (2000) is that they do not include a mechanism by which listeners can perceive within-category contrasts. As demonstrated by Lotto et al. (1998), this assumption cannot capture the data on the perceptual magnet effect because within-category discriminability is higher than this account would predict.

Other neural network models have argued that the perceptual magnet effect results not from category labels but instead from specific patterns in the distribution of speech sounds. Guenther and Gjaja (1996) suggested that neural firing preferences in a neural map reflect Gaussian distributions of speech sounds in the input and that because more central sounds have stronger neural representations than more peripheral sounds, the population vector representing a speech sound that is halfway between the center and the periphery of its phonetic category will appear closer to the center of the category than to its periphery. This model implements the idea that the perceptual magnet effect is a direct result of uneven distributions of speech sounds in the input. Similarly, Vallabha and McClelland (2007) have shown that Hebbian learning can produce attractors at the locations of Gaussian input categories and that the resulting neural representation fits human data accurately. The idea that distributions of speech sounds in the input can influence perception is supported by experimental evidence showing that adults and infants show better discrimination of a contrast embedded in a bimodal distribution of speech sounds than of the same contrast embedded in a unimodal distribution (Maye & Gerken, 2000; Maye, Werker, & Gerken, 2002).

These previous models have provided process-level accounts of how the perceptual magnet effect might be implemented algorithmically and neurally, but they leave several questions unanswered. The prototype model does not give independent justification for the assumption that prototypes should exert a pull on neighboring speech sounds; several models cannot account for better-than-chance within-category discriminability of vowels. Other models give explanations of how the effect might occur but do not address the question of why it should occur. Our rational model fills these gaps by providing a mathematical formalization of the perceptual magnet effect at Marr’s (1982) computational level, considering the goals of the computation and the logic by which these goals can be achieved. It gives independent justification for the optimality of a perceptual bias toward category centers and simultaneously predicts a baseline level of within-category discrimination. Furthermore, it goes beyond these previous models to make novel predictions about the types of variability that should be seen in the perceptual magnet effect.

Theoretical Overview of the Model

Our model of the perceptual magnet effect focuses on the idea that we can analyze speech perception as a kind of optimal statistical inference. The goal of listeners, in perceiving a speech sound, is to recover the phonetic detail of a speaker’s target production. They infer this target production using the information that is available to them from the speech signal and their prior knowledge of phonetic categories. Here we give an intuitive overview of our model in the context of speech perception, followed by a more general mathematical account in the next section.

Phonetic categories are defined in the model as distributions of speech sounds. When speakers produce a speech sound, they choose a phonetic category and then articulate a speech sound from that category. They can use their specific choice of speech sounds within the phonetic category to convey coarticulatory information, affect, and other relevant information. Because there are several factors that speakers might intend to convey, and each factor can cause small fluctuations in acoustics, we assume that the combination of these factors approximates a Gaussian, or normal, distribution. Phonetic categories in the model are thus Gaussian distributions of target speech sounds. Categories may differ in the location of their means, or prototypes, and in the amount of variability they allow. In addition, categories may differ in frequency, so that some phonetic categories are used more frequently in a language than others. The use of Gaussian phonetic categories in this model does not reflect a belief that speech sounds actually fall into parametric distributions. Rather, the mathematics of the model are easiest to derive in the case of Gaussian categories. As will be discussed later, the general effects that are predicted in the case of Gaussian categories are similar to those predicted for other types of unimodal distributions.

In the speech sound heard by listeners, the information about the target production is masked by various types of articulatory, acoustic, and perceptual noise. The combination of these noise factors is approximated through Gaussian noise, so that the speech sound heard is normally distributed around the speaker’s target production.

Formulated in this way, speech perception becomes a statistical inference problem. When listeners perceive a speech sound, they can assume it was generated by selecting a target production from a phonetic category and then generating a noisy speech sound based on the target production. Listeners hear the speech sound and know the structure and location of phonetic categories in their native language. Given this information, they need to infer the speaker’s target production. They infer phonetic detail in addition to category information in order to recover the gradient coarticulatory and non-linguistic information that the speaker intended.

With no prior information about phonetic categories, listeners’ perception should be unbiased, since under Gaussian noise, speech sounds are equally likely to be shifted in either direction. In this case, listeners’ safest strategy is to guess that the speech sound they heard was the same as the target production. However, experienced listeners know that they are more likely to hear speech sounds near the centers of phonetic categories than speech sounds farther from category centers. The optimal way to use this knowledge of phonetic categories to compensate for a noisy speech signal is to bias perception toward the center of a category, toward the most likely target productions.

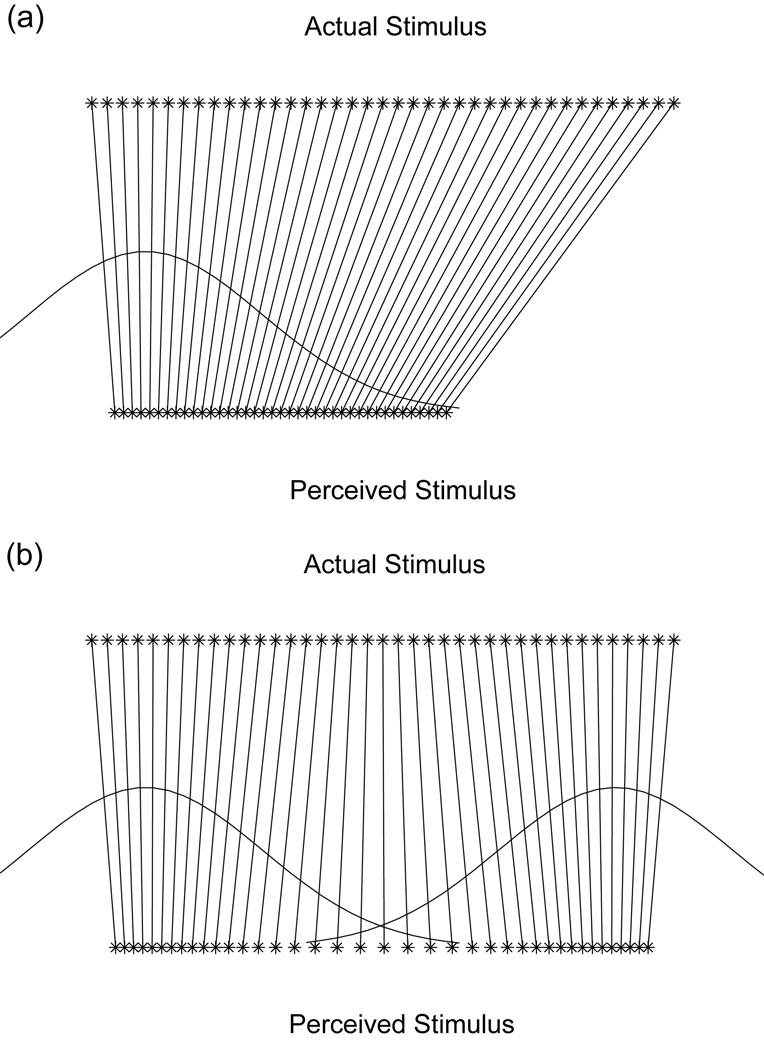

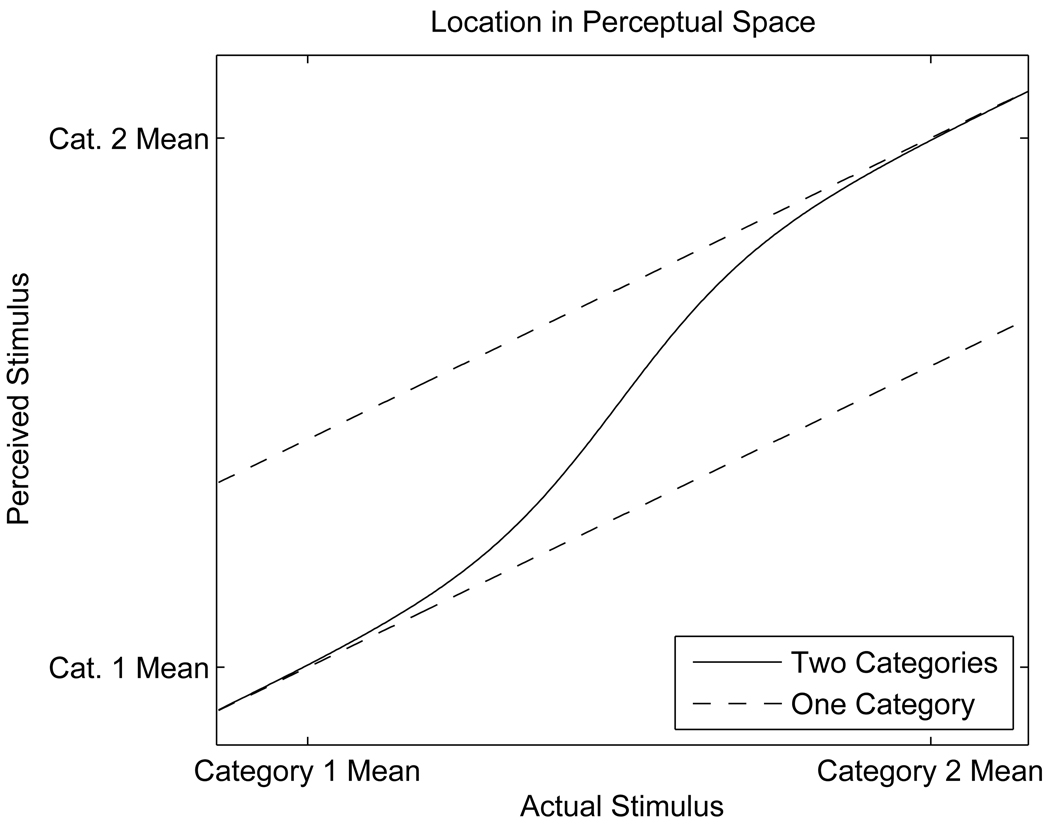

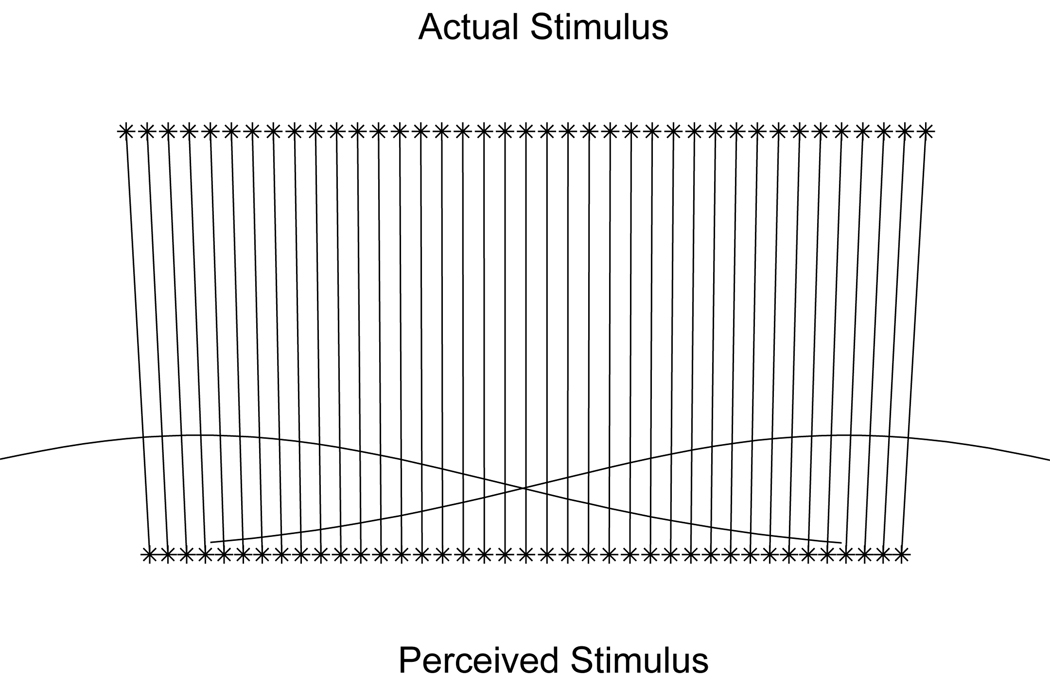

In a hypothetical language with a single phonetic category, where listeners are certain that all sounds belong to that category, this perceptual bias toward the category mean causes all of perceptual space to shrink toward the center of the category. The resulting perceptual pattern is shown in Figure 2 (a). If there is no uncertainty about category membership, perception of distant speech sounds is more biased than perception of proximal speech sounds so that all of perceptual space is shrunk to the same degree.

Figure 2.

Predicted relationship between acoustic and perceptual space in the case of (a) one category and (b) two categories.

In order to optimally infer a speaker’s target production in the context of multiple phonetic categories, listeners must determine which categories are likely to have generated a speech sound. They can then predict the speaker’s target production based on the structure of these categories. If they are certain of a speech sound’s category membership, their perception of the speech sound should be biased toward the mean of that category, as was the case in a language with one phonetic category. This shrinks perceptual space in areas of unambiguous categorization. If listeners are uncertain about category membership, they should take into account all the categories that could have generated the speech sound they heard, but they should weight the influence of each category by the probability that the speech sound came from that category. This ensures that under assumptions of equal frequency and variance, nearby categories are weighted more heavily than those farther away. Perception of speech sounds precisely on the border between two categories is pulled simultaneously toward both category means, each cancelling out the other’s effect. Perception of speech sounds that are near the border between categories is biased toward the most likely category, but the competing category dampens the bias. The resulting pattern for the two-category case is shown in Figure 2 (b).

The interaction between the categories produces a pattern of perceptual warping that is qualitatively similar to descriptions of the perceptual magnet effect and other categorical effects that have been reported in the literature. Speech sounds near category centers are extremely close together in perceptual space, whereas speech sounds near the edges of a category are much farther apart. This perceptual pattern results from a combination of two factors, both of which were proposed by Liberman et al. (1957) in reference to categorical perception. The first is acquired equivalence within categories due to perceptual bias toward category means; the second is acquired distinctiveness between categories due to the presence of multiple categories. Consistent with these predictions, infants acquiring language have shown both acquired distinctiveness for phonemically distinct sounds and acquired equivalence for members of a single phonemic category over the course of the first year of life (Kuhl et al., 2006).

Mathematical Presentation of the Model

This section formalizes the rational model within the framework of Bayesian inference. The model is potentially applicable to any perceptual problem in which a perceiver needs to recover a target from a noisy stimulus, using knowledge that the target has been sampled from a Gaussian category. We therefore present the mathematics in general terms, referring to a generic stimulus S, target T, category c, category variance $\sigma_c^2$, and noise variance $\sigma_S^2$. In the specific case of speech perception, S corresponds to the speech sound heard by the listener, T to the phonetic detail of a speaker’s intended target production, and c to the language’s phonetic categories; the category variance represents meaningful within-category variability, and the noise variance represents articulatory, acoustic, and perceptual noise in the speech signal.

The formalization is based on a generative model in which a target T is produced by sampling from a Gaussian category c with mean μc and variance $\sigma_c^2$. The target T is distributed as

$$T \mid c \sim N(\mu_c, \sigma_c^2) \tag{1}$$

Perceivers cannot recover T directly, but instead perceive a noisy stimulus S that is normally distributed around the target production with noise variance $\sigma_S^2$ such that

$$S \mid T \sim N(T, \sigma_S^2) \tag{2}$$

Note that integrating over T yields

$$S \mid c \sim N(\mu_c, \sigma_c^2 + \sigma_S^2) \tag{3}$$

indicating that under these assumptions, the stimuli that perceivers observe are normally distributed around a category mean μc with a variance that is a sum of the category variance and the noise variance.
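As an informal check on Equation 3, the generative process can be simulated directly. The sketch below is illustrative only: the parameter values and the helper name `sample_stimulus` are assumptions chosen for the example, not values from the model fits reported later.

```python
import random
import statistics

def sample_stimulus(mu_c, var_c, var_s, rng):
    """Draw one (target, stimulus) pair from the generative model."""
    target = rng.gauss(mu_c, var_c ** 0.5)      # Equation 1: T | c
    stimulus = rng.gauss(target, var_s ** 0.5)  # Equation 2: S | T
    return target, stimulus

rng = random.Random(0)
mu_c, var_c, var_s = 0.0, 4.0, 1.0  # illustrative values (assumptions)
stimuli = [sample_stimulus(mu_c, var_c, var_s, rng)[1] for _ in range(50_000)]

empirical_mean = statistics.fmean(stimuli)
empirical_var = statistics.pvariance(stimuli)
# Both should be close to mu_c and var_c + var_s, respectively.
print(empirical_mean, empirical_var)
```

With enough samples, the empirical variance of the stimuli approaches the sum of the category variance and the noise variance.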

Given this generative model, perceivers can use Bayesian inference to reconstruct the target from the noisy stimulus. According to Bayes’ rule, given a set of hypotheses H and observed data d, the posterior probability of any given hypothesis h is

$$p(h \mid d) = \frac{p(d \mid h)\, p(h)}{\sum_{h' \in H} p(d \mid h')\, p(h')} \tag{4}$$

indicating that it is proportional to both the likelihood p(d|h), which is a measure of how well the hypothesis fits the data, and the prior p(h), which gives the probability assigned to the hypothesis before any data were observed. Here, the stimulus S serves as data d; the hypotheses under consideration are all the possible targets T; and the prior p(h), which gives the probability that any particular target will occur, is specified by category structure. In laying out the solution to this statistical problem, we begin with the case in which there is a single category and then move to the more complex case of multiple categories.

One Category

Perceivers are trying to infer the target T given stimulus S and category c, so they must calculate p(T|S, c). They can use Bayes’ rule:

$$p(T \mid S, c) \propto p(S \mid T)\, p(T \mid c) \tag{5}$$

The likelihood p(S|T), given by the noise process (Equation 2), assigns highest probability to targets that coincide with the stimulus S, and the prior p(T|c), given by category structure (Equation 1), assigns highest probability to the category mean. As described in Appendix A, the right-hand side of this equation can be simplified to yield a Gaussian distribution

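$$T \mid S, c \sim N\!\left(\frac{\sigma_c^2 S + \sigma_S^2 \mu_c}{\sigma_c^2 + \sigma_S^2},\; \frac{\sigma_c^2 \sigma_S^2}{\sigma_c^2 + \sigma_S^2}\right) \tag{6}$$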

whose mean falls between the stimulus S and the category mean μc.

This posterior probability distribution can be summarized by its mean (the expectation of T given S and c),

$$E[T \mid S, c] = \frac{\sigma_c^2 S + \sigma_S^2 \mu_c}{\sigma_c^2 + \sigma_S^2} \tag{7}$$

The optimal guess at the target, then, is a weighted average of the observed stimulus and the mean of the category that generated the stimulus, where the weighting is determined by the ratio of category variance to noise variance.1 This equation formalizes the idea of a perceptual magnet: the term μc pulls the perception of stimuli toward the category center, effectively shrinking perceptual space around the category.
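The weighted average described here can be written as a one-line function. This is a minimal sketch: the function name and the numerical values are assumptions for illustration.

```python
def posterior_mean_one_category(s, mu_c, var_c, var_s):
    """E[T | S, c]: a variance-weighted average of the stimulus and the category mean."""
    return (var_c * s + var_s * mu_c) / (var_c + var_s)

# Category mean at 0, stimulus heard at 10 (illustrative values).
low_noise = posterior_mean_one_category(10.0, 0.0, var_c=3.0, var_s=1.0)
high_noise = posterior_mean_one_category(10.0, 0.0, var_c=1.0, var_s=3.0)
print(low_noise, high_noise)  # 7.5 2.5
```

When the noise variance is small relative to the category variance, the percept stays near the stimulus; when it is large, the percept moves most of the way to the category mean.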

Multiple Categories

The one-category case, while appropriate to explain performance on some perceptual tasks (e.g. Huttenlocher et al., 2000), is inappropriate for describing natural language. In a language with multiple phonetic categories, listeners must consider many possible source categories for a speech sound. We therefore extend the model so that it applies to the case of multiple categories.

Upon observing a stimulus, perceivers can compute the probability that it came from any particular category using Bayes’ rule

$$p(c \mid S) = \frac{p(S \mid c)\, p(c)}{\sum_{c'} p(S \mid c')\, p(c')} \tag{8}$$

where p(S|c) is given by Equation 3 and p(c) reflects the prior probability assigned to category c.

To compute the posterior on targets p(T|S), perceivers need to marginalize, or sum, over categories,

$$p(T \mid S) = \sum_c p(T \mid S, c)\, p(c \mid S) \tag{9}$$

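The first term on the right-hand side is given by Equation 6 and the second term can be calculated using Bayes’ rule as given by Equation 8. The posterior has the form of a mixture of Gaussians, where each Gaussian represents the solution for a single category. Restricting our analysis to the case of categories with equal category variance $\sigma_c^2$, the mean of this posterior probability distribution is

$$E[T \mid S] = \sum_c p(c \mid S)\, \frac{\sigma_c^2 S + \sigma_S^2 \mu_c}{\sigma_c^2 + \sigma_S^2} \tag{10}$$

which can be rewritten as

$$E[T \mid S] = \frac{\sigma_c^2}{\sigma_c^2 + \sigma_S^2}\, S + \frac{\sigma_S^2}{\sigma_c^2 + \sigma_S^2} \sum_c p(c \mid S)\, \mu_c \tag{11}$$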

A full derivation of this expectation is given in Appendix A.

Equation 11 gives the optimal guess for recovering a target in the case of multiple categories. This guess is a weighted average of the stimulus S and the means μc of all the categories that might have produced S. When perceivers are certain of a stimulus’ category, this equation reduces to Equation 7, and perception of a stimulus S is biased toward the mean of its category. However, when a stimulus is on a border between two categories, the optimal guess at the target is influenced by both category means, and each category weakens the other’s effect (Figure 2 (b)). Shrinkage of perceptual space is thus strongest in areas of unambiguous categorization – the centers of categories – and weakest at category boundaries.
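The multiple-category estimate can be sketched in a few lines. The code below assumes equal category variances, as in the text, and writes the expectation as a weighted average of the stimulus and the posterior-weighted category means; the function names and example values are illustrative assumptions.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def category_posteriors(s, means, priors, var_c, var_s):
    """p(c | S) by Bayes' rule, with p(S | c) Gaussian with variance var_c + var_s."""
    joint = [p * gaussian_pdf(s, m, var_c + var_s) for m, p in zip(means, priors)]
    total = sum(joint)
    return [j / total for j in joint]

def expected_target(s, means, priors, var_c, var_s):
    """E[T | S] for categories that share the category variance var_c."""
    post = category_posteriors(s, means, priors, var_c, var_s)
    weighted_mean = sum(p * m for p, m in zip(post, means))
    total = var_c + var_s
    return (var_c / total) * s + (var_s / total) * weighted_mean

means, priors = [-2.0, 2.0], [0.5, 0.5]  # illustrative two-category language
for s in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(s, expected_target(s, means, priors, var_c=1.0, var_s=1.0))
```

A stimulus exactly on the boundary (here, 0) is left in place because the two category means pull equally in opposite directions, whereas stimuli on either side are pulled toward the nearer mean.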

This analysis demonstrates that warping of perceptual space that is qualitatively consistent with the perceptual magnet effect emerges as the result of optimal perception of noisy stimuli. In the next two sections, we provide a quantitative investigation of the model’s predictions in the context of speech perception. The next section focuses on comparing the predictions of the model to empirical data on the perceptual magnet effect, estimating the parameters describing category means and variability from human data. In the subsequent section, we examine the consequences of manipulating these parameters, relating the model’s behavior to further results from the literature.

Quantitative Evaluation

In this section, we test the model’s predictions quantitatively against the multidimensional scaling results from Experiment 3 in Iverson and Kuhl (1995). These data were selected as a modeling target because they give a clean, precise spatial representation of the warping associated with the perceptual magnet effect, mapping 13 /i/ and /e/ stimuli that are separated by equal psychoacoustic distance onto their corresponding locations in perceptual space. Because these multidimensional scaling data constitute the basis for both this simulation and the experiment reported below, we describe the experimental setup and results in some detail here.

Iverson and Kuhl’s multidimensional scaling experiment was conducted with thirteen vowel stimuli along a single continuum in F1−F2 space ranging from /i/ to /e/, whose exact formant values are shown in Table 1. The stimuli were designed to be equally spaced when measured along the mel scale, which equates distances based on difference limens (S. S. Stevens et al., 1937). Subjects performed an AX discrimination task in which they pressed and held a button to begin a trial, releasing the button as quickly as possible if they believed the two stimuli to be different or holding the button for the remainder of the trial (2000 ms) if they heard no difference between the two stimuli. Subjects heard 156 “different” trials, consisting of all possible ordered pairs of non-identical stimuli, and 52 “same” trials, four with each of the 13 stimuli.

Table 1.

Formant values for stimuli used in the multidimensional scaling experiment, reported in Iverson and Kuhl (2000).

| Stimulus Number | F1 (Hz) | F2 (Hz) |
|---|---|---|
| 1 | 197 | 2489 |
| 2 | 215 | 2438 |
| 3 | 233 | 2388 |
| 4 | 251 | 2339 |
| 5 | 270 | 2290 |
| 6 | 289 | 2242 |
| 7 | 308 | 2195 |
| 8 | 327 | 2148 |
| 9 | 347 | 2102 |
| 10 | 367 | 2057 |
| 11 | 387 | 2012 |
| 12 | 408 | 1968 |
| 13 | 429 | 1925 |

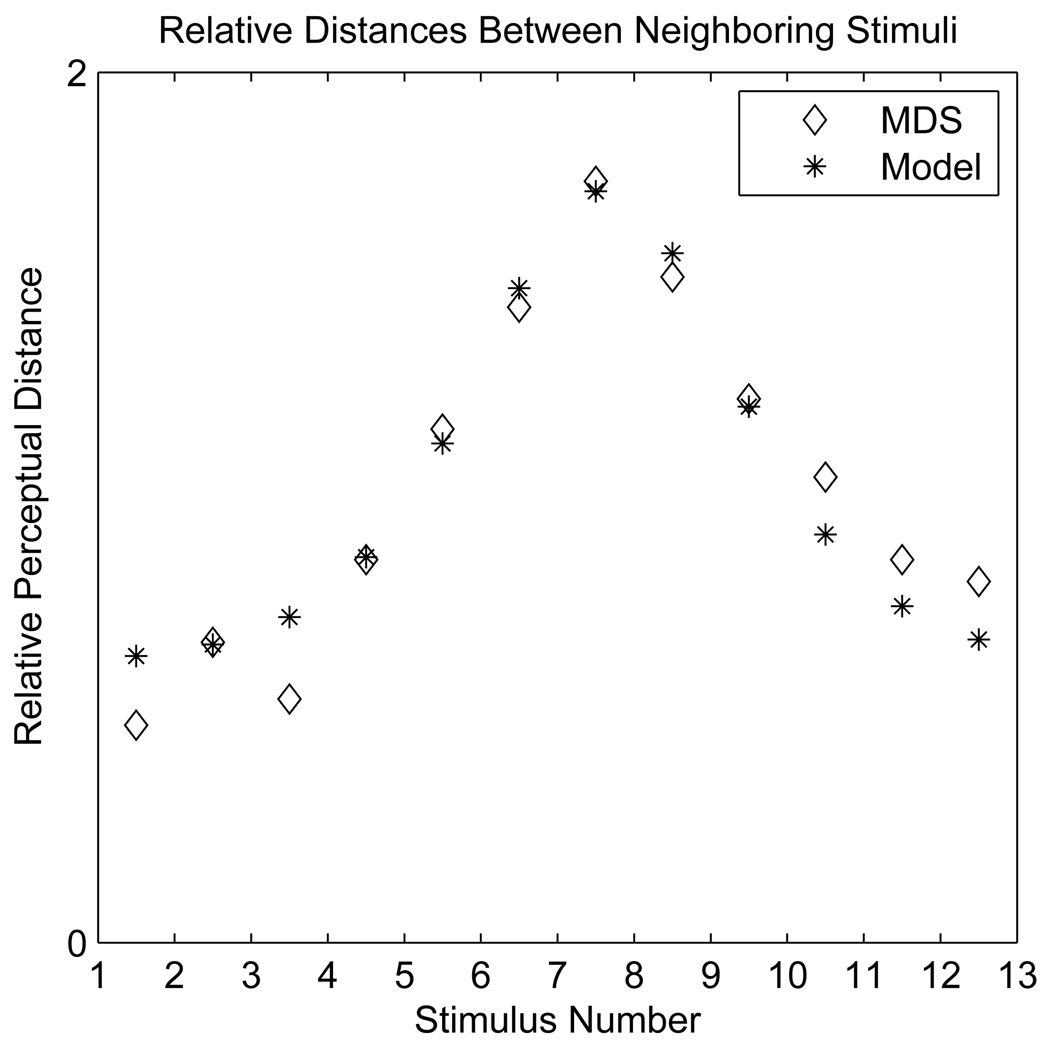

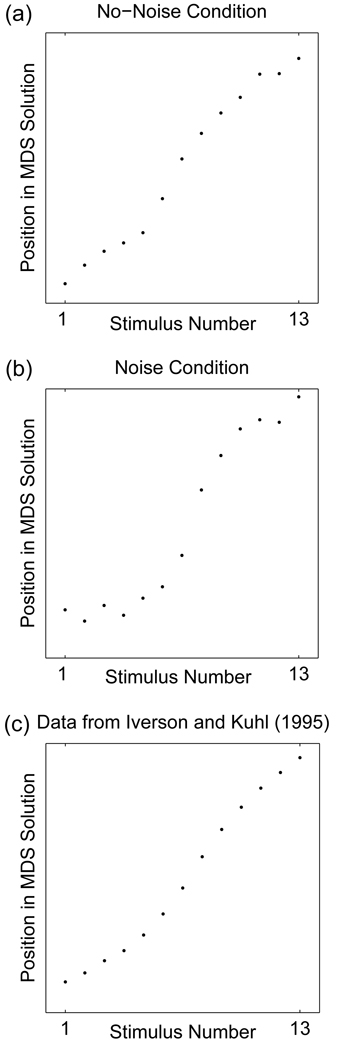

Iverson and Kuhl reported a total accuracy rate of 77% on “different” trials and a false alarm rate of 31% on “same” trials, but they did not further explore direct accuracy measures. Instead, they created a full similarity matrix consisting of log reaction times of “different” responses for each pair of stimuli. To avoid sparse data in the cells where most participants incorrectly responded that two stimuli were identical, the authors replaced all “same” responses with the trial length, 2000 ms, effectively making them into “different” responses with long reaction times. This similarity matrix was used for multidimensional scaling, which finds a perceptual map that is most consistent with a given similarity matrix. In this case, the authors constrained the solution to be in one dimension and assumed a linear relation between similarity values and distance in perceptual space. The interstimulus distances obtained from this analysis are shown in Figure 3. The perceptual map obtained through multidimensional scaling showed that neighboring stimuli near the ends of the stimulus vector were separated by less perceptual distance than neighboring stimuli near the center of the vector. These results agreed qualitatively with data obtained in Experiment 2 of the same paper, which used d′ as an unbiased estimate of perceptual distance. We chose the multidimensional scaling data as our modeling target because they are more extensive than the d′ data, encompassing the entire range of stimuli.

Figure 3.

Relative distances between neighboring stimuli in Iverson and Kuhl’s (1995) multidimensional scaling analysis and in the model.

We tested a two-category version of the rational model to determine whether parameters could be found that would reproduce these empirical data. Equal variance was assumed for the two categories and parameters in the model were based as much as possible on empirical measures in order to reduce the number of free parameters. The simulation was constrained to a single dimension along the direction of the stimulus vector. The parameters that needed to be specified were the two category means, μ/i/ and μ/e/, the category variance, and the speech signal noise variance; the paragraphs below describe how each was set.

Subject goodness ratings from Iverson and Kuhl (1995) were first used to specify the mean of the /i/ category, μ/i/. These goodness ratings indicated that the best exemplars of the /i/ category were stimuli 2 and 3, so the mean of the /i/ category was set halfway between these two stimuli.2

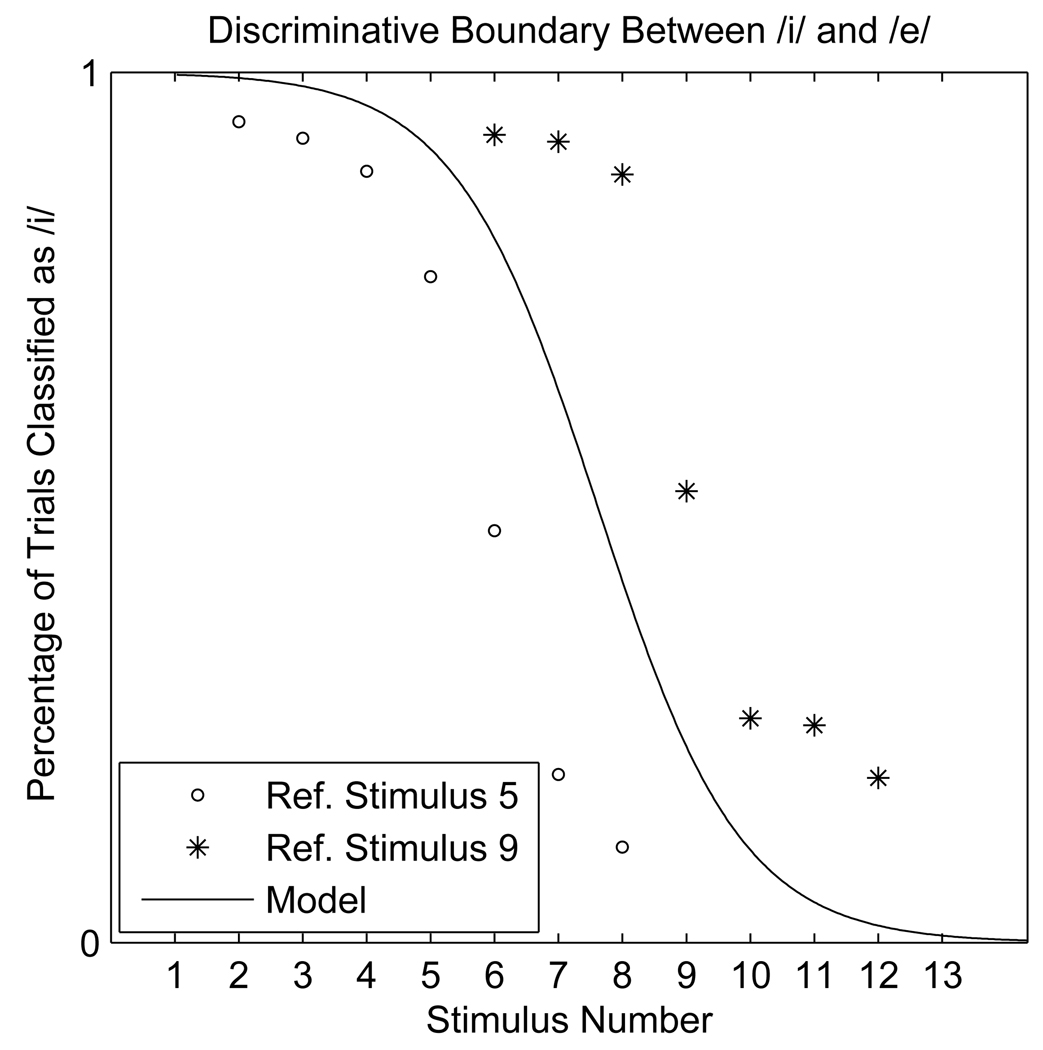

The mean of the /e/ category, μ/e/, and the sum of the variances, $\sigma_c^2 + \sigma_S^2$, were calculated as described in Appendix B based on phoneme identification curves from Lotto et al. (1998). These identification curves were produced through an experiment in which subjects were played pairs of stimuli from the 13-stimulus vector and asked to identify either the first or the second stimulus in the pair as /i/ or /e/. The other stimulus in the pair was one of two reference stimuli, either stimulus 5 or stimulus 9. The authors obtained two distinct curves in these two conditions, showing that the phoneme boundary shifted based on the identity of the reference stimulus. Because the task used for multidimensional scaling involved presentation of all possible pairings of the 13 stimuli, the phoneme boundary in the model was assumed to be halfway between the boundaries that appeared in these two referent conditions. In order to identify this boundary, two logistic curves were fit to the prototype and non-prototype identification curves. The two curves were constrained to have the same gain, and the biases of the two curves were averaged to obtain a single bias term. Based on Equation 34, these values indicated that μ/e/ should be placed just to the left of stimulus 13; Equation 35 yielded a value of 10,316 for $\sigma_c^2 + \sigma_S^2$. The resulting discriminative boundary is shown together with the data from Lotto et al. (1998) in Figure 4.

Figure 4.

Identification percentages obtained by Lotto et al. (1998) with reference stimuli 5 and 9 were averaged to produce a single intermediate identification curve in the model (solid line).

The ratio between the category variance and the speech signal noise was the only remaining free parameter, and its value was chosen in order to maximize the fit to Iverson and Kuhl’s multidimensional scaling data. This direct comparison was made by calculating the expectation E[T|S] for each of the 13 stimuli according to Equation 11 and then determining the distance in mels between the expected values of neighboring stimuli. These distances were compared with the distances between stimuli in the multidimensional scaling solution. Since multidimensional scaling gives relative, and not absolute, distances between stimuli, this comparison was evaluated based on whether mel distances in the model were proportional to distances found through multidimensional scaling. As shown in Figure 3, the model yielded an extremely close fit to the empirical data, yielding interstimulus distances that were proportional to those found in multidimensional scaling (r = 0.97). This simulation used the following parameters:

The fit obtained between the simulation and the empirical data is extremely close; however, the model parameters derived in this simulation are meant to serve only as a first approximation of the actual parameters in vowel perception. Because of the variability that has been found in subjects’ goodness ratings of speech stimuli, it is likely that these parameters deviate somewhat from their actual values, and it is also possible that the parameters vary between subjects. The simulation should instead be read as a concrete demonstration that the model can reproduce empirical data on the perceptual magnet effect quantitatively as well as qualitatively using a reasonable set of parameters, supporting the viability of this rational account.
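The comparison procedure (compute E[T|S] for each of the 13 stimuli, then take distances between neighbors) can be sketched as follows. This is not the simulation reported above. The stimulus spacing, the position of μ/e/, the split of the summed variance, and the equal category priors are all placeholder assumptions. Only the summed variance of 10,316 and the placement of μ/i/ halfway between stimuli 2 and 3 come from the text.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def expected_target(s, mu1, mu2, var_c, var_s):
    """E[T | S] for two equally frequent categories with shared variance."""
    total = var_c + var_s
    like1 = gaussian_pdf(s, mu1, total)
    like2 = gaussian_pdf(s, mu2, total)
    post1 = like1 / (like1 + like2)
    weighted_mean = post1 * mu1 + (1 - post1) * mu2
    return (var_c / total) * s + (var_s / total) * weighted_mean

SPACING = 30.0                  # assumed spacing between stimuli (mels)
stimuli = [i * SPACING for i in range(13)]
mu_i = 1.5 * SPACING            # halfway between stimuli 2 and 3
mu_e = 355.0                    # assumed: just left of stimulus 13
var_c, var_s = 6000.0, 4316.0   # assumed split; sums to 10,316

percepts = [expected_target(s, mu_i, mu_e, var_c, var_s) for s in stimuli]
distances = [b - a for a, b in zip(percepts, percepts[1:])]
for k, d in enumerate(distances, start=1):
    print(f"stimuli {k}-{k + 1}: {d:.1f}")
```

Under these placeholder values, neighboring stimuli are closest together near the ends of the continuum and farthest apart around the category boundary. Distances of this kind would then be compared with the multidimensional scaling distances for proportionality.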

Effects of Frequency, Variability, and Noise

The previous section has shown a direct quantitative correspondence between model predictions and empirical data. In this section we explore the behavior of the rational model under various parameter combinations, using the parameters derived in the previous section as a baseline for comparison. These simulations serve a dual purpose: they establish the robustness of the qualitative behavior of the model under a range of parameters, and they make predictions about the types of variability that should occur when category frequency, category variance, and speech signal noise are varied. We first introduce several quantitative measures that can be used to visualize the extent of perceptual warping, and these measures are subsequently used to visualize the effects of parameter manipulations.

Characterizing Perceptual Warping

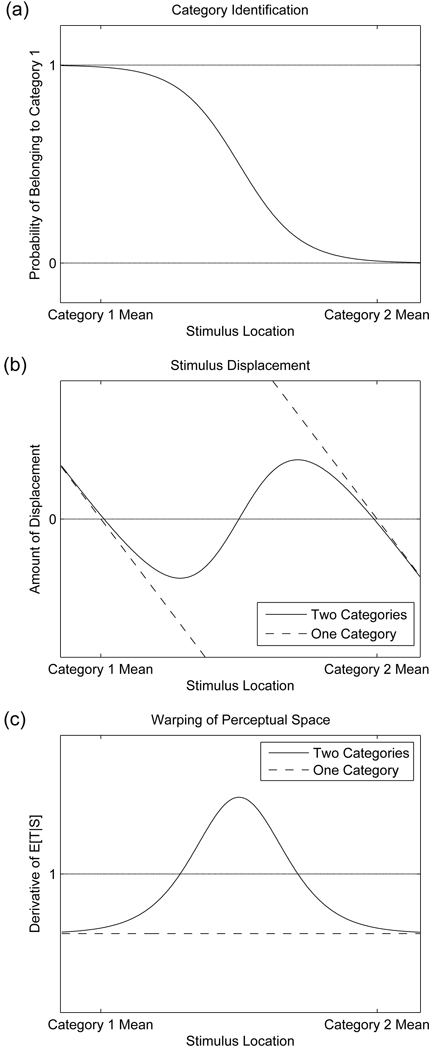

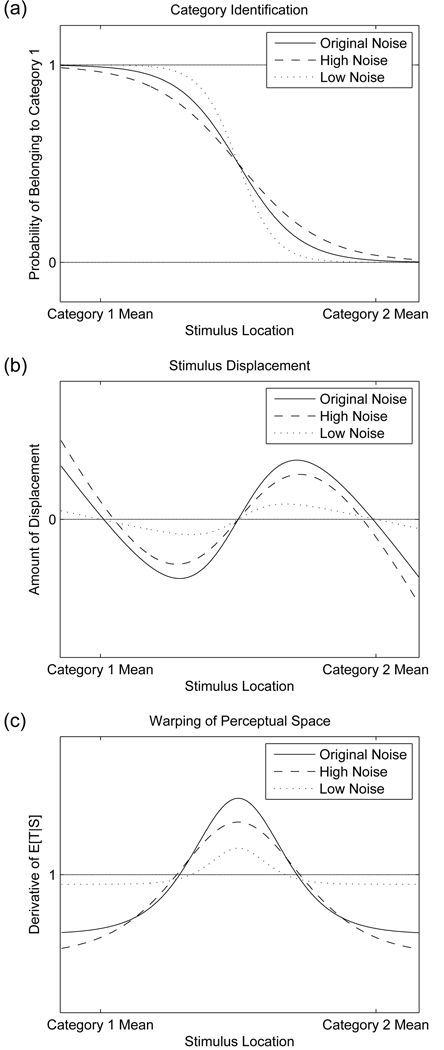

Our statistical analysis establishes a simple function mapping a stimulus, S, to a percept of the intended target, given by E[T|S]. This is a linear mapping in the one-category case (Equation 7), but it becomes non-linear in the case of multiple categories (Equation 11). Figure 5 illustrates the form of this mapping in the cases of one category and two categories with equal variance. Note that this function is not an identification function: the vertical axis represents the exact location of a stimulus in a continuous perceptual space, E[T|S], not the probability with which that stimulus receives a particular label. Slopes that are more horizontal indicate that stimuli are closer in perceptual space than in acoustic space. In the two-category case, stimuli that are equally spaced in acoustic space are nevertheless clumped near category centers in perceptual space, as shown by the two nearly horizontal portions of the curve near the category means. In order to analyze this behavior more closely, we examine the relationship between three measures: identification, the posterior probability of category membership; displacement, the difference between the actual and perceived stimulus; and warping, the degree of shrinkage or expansion of perceptual space.

Figure 5.

Model predictions for location of stimuli in perceptual space relative to acoustic space. Dashed lines indicate patterns corresponding to a single category; solid lines indicate patterns corresponding to two categories of equal variance.

The identification function p(c|S) gives the probability of a stimulus having been generated by a particular category, as calculated in Equation 8. This function is then used to compute the posterior on targets, summing over categories. In the case of two categories with equal variance, the identification function takes the form of a logistic function. Specifically, the posterior probability of category membership can be written as

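$$p(c_1 \mid S) = \frac{1}{1 + e^{-gS + b}} \tag{12}$$

where the gain and bias of the logistic are given by $g = \frac{\mu_1 - \mu_2}{\sigma_c^2 + \sigma_S^2}$ and $b = \log\frac{p(c_2)}{p(c_1)} + \frac{\mu_1^2 - \mu_2^2}{2(\sigma_c^2 + \sigma_S^2)}$, respectively. An identification function of this form is illustrated in Figure 6 (a). In areas of certain categorization, the identification function is at either 1 or 0; a value of 0.5 indicates maximum uncertainty about category membership.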
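The logistic form can be checked numerically against a direct application of Bayes' rule. In this sketch, the gain is taken to be (μ1 − μ2) divided by the summed variance, and the bias to be log(p(c2)/p(c1)) plus (μ1² − μ2²) divided by twice the summed variance. These expressions follow from expanding the ratio of two equal-variance Gaussians, and the numerical values are illustrative assumptions.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def posterior_c1_bayes(s, mu1, mu2, p1, var_total):
    """p(c1 | S) computed directly from Bayes' rule."""
    num = p1 * gaussian_pdf(s, mu1, var_total)
    den = num + (1 - p1) * gaussian_pdf(s, mu2, var_total)
    return num / den

def posterior_c1_logistic(s, mu1, mu2, p1, var_total):
    """The same posterior written as a logistic function of S."""
    gain = (mu1 - mu2) / var_total
    bias = math.log((1 - p1) / p1) + (mu1 ** 2 - mu2 ** 2) / (2 * var_total)
    return 1.0 / (1.0 + math.exp(-gain * s + bias))

# Illustrative values: var_total stands for category variance plus noise variance.
for s in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(s, posterior_c1_bayes(s, -2.0, 2.0, 0.6, 2.0),
          posterior_c1_logistic(s, -2.0, 2.0, 0.6, 2.0))
```

The two columns agree to floating-point precision, which confirms that the identification function depends on the stimulus only through a linear term in S.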

Figure 6.

Model predictions for (a) identification, (b) displacement, and (c) warping. Dashed lines indicate patterns corresponding to a single category; solid lines indicate patterns corresponding to two categories of equal variance.

Displacement involves a comparison between the location of a stimulus in perceptual space E[T|S] and its location in acoustic space S. It corresponds to the amount of bias in perceiving a stimulus. We can calculate this quantity as

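$$E[T \mid S] - S = \frac{\sigma_S^2}{\sigma_c^2 + \sigma_S^2} \left( \sum_c p(c \mid S)\, \mu_c - S \right) \tag{13}$$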

In the one-category case, this means the amount of displacement is proportional to the distance between the stimulus S and the mean μc of the category. As stimuli get farther away from the category mean, they are pulled proportionately farther toward the center of the category. The dashed lines in Figure 6 (b) show two cases of this. In the case of multiple categories, the amount of displacement is proportional to the distance between S and a weighted average of the means μc of more than one category. This is shown in the solid line, where ambiguous stimuli are displaced less than would be predicted in the one-category case because of the competing influence of a second category mean.
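A short sketch makes the contrast between the one-category and two-category cases concrete. Displacement is computed here as the noise-variance weight times the gap between the posterior-weighted category mean and the stimulus; the function names and values are illustrative assumptions.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def displacement(s, means, priors, var_c, var_s):
    """E[T | S] - S for categories sharing the category variance var_c."""
    total = var_c + var_s
    joint = [p * gaussian_pdf(s, m, total) for m, p in zip(means, priors)]
    norm = sum(joint)
    weighted_mean = sum(j / norm * m for j, m in zip(joint, means))
    return (var_s / total) * (weighted_mean - s)

s = -0.5  # an ambiguous stimulus between category means at -2 and 2
one_cat = displacement(s, [-2.0], [1.0], var_c=1.0, var_s=1.0)
two_cat = displacement(s, [-2.0, 2.0], [0.5, 0.5], var_c=1.0, var_s=1.0)
print(one_cat, two_cat)
```

Both displacements point toward the nearer category mean, but the two-category value is smaller in magnitude because the second category pulls in the opposite direction.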

Finally, perceptual warping can be characterized based on the distance between two neighboring points in perceptual space that are separated by a fixed step ΔS in acoustic space. This quantity is reflected in the distance between neighboring points on the bottom layer of each diagram in Figure 2. By the standard definition of the derivative as a limit, as ΔS approaches zero this measure of perceptual warping corresponds to the derivative of E[T|S] with respect to S. This derivative is

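$$\frac{dE[T \mid S]}{dS} = \frac{\sigma_c^2}{\sigma_c^2 + \sigma_S^2} + \frac{\sigma_S^2}{\sigma_c^2 + \sigma_S^2} \sum_c \mu_c\, \frac{dp(c \mid S)}{dS} \tag{14}$$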

where the last term is the derivative of the logistic function given in Equation 12. This equation demonstrates that distance between two neighboring points in perceptual space is a linear function of the rate of change of p(c|S), which measures category membership of stimulus S. Probabilities of category assignments are changing most rapidly near category boundaries, resulting in greater perceptual distances between neighboring stimuli near the edges of categories. This is shown in Figure 6 (c), and the form of the derivative is described in more detail in Appendix C.
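The derivative can be checked against a finite-difference approximation. The sketch below assumes two categories, for which the sum over categories reduces to (μ1 − μ2) times the derivative of p(c1|S), and uses the logistic derivative g·p·(1 − p) with gain g = (μ1 − μ2)/(σc² + σS²). Names and values are illustrative assumptions.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def posterior_c1(s, mu1, mu2, p1, var_total):
    num = p1 * gaussian_pdf(s, mu1, var_total)
    return num / (num + (1 - p1) * gaussian_pdf(s, mu2, var_total))

def expected_target(s, mu1, mu2, p1, var_c, var_s):
    total = var_c + var_s
    post1 = posterior_c1(s, mu1, mu2, p1, total)
    return (var_c / total) * s + (var_s / total) * (post1 * mu1 + (1 - post1) * mu2)

def warping(s, mu1, mu2, p1, var_c, var_s):
    """Analytic dE[T|S]/dS for two categories with shared variance."""
    total = var_c + var_s
    post1 = posterior_c1(s, mu1, mu2, p1, total)
    gain = (mu1 - mu2) / total
    dpost1 = gain * post1 * (1 - post1)  # derivative of the logistic
    return var_c / total + (var_s / total) * (mu1 - mu2) * dpost1

args = (-2.0, 2.0, 0.5, 1.0, 1.0)  # mu1, mu2, p(c1), var_c, var_s
h = 1e-5
for s in (-2.0, -0.5, 0.0, 1.0):
    numeric = (expected_target(s + h, *args) - expected_target(s - h, *args)) / (2 * h)
    print(s, warping(s, *args), numeric)
```

With these values the warping measure exceeds one at the boundary (expansion) and falls below one at the category mean (shrinkage).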

In summary, the identification function (Equation 12) shows a sharp decrease at the location of the category boundary, going from a value near one (assignment to category 1) to a value near zero (assignment to category 2). Perceptual bias, or displacement (Equation 13), is a linear function of distance from the mean in the one-category case but is more complex in the two-category case; it is positive when stimuli are displaced in a positive direction and negative when stimuli are displaced in a negative direction. Finally, warping of perceptual space (Equation 14), which has a value greater than one in areas where perceptual space is expanded and a value less than one in areas where perceptual space is shrunk, shows that all of perceptual space is shrunk in the one-category case but that there is an area of expanded perceptual space between categories in the two-category case. Qualitatively, note that displacement is always in the direction of the most probable category mean and that the highest perceptual distance between stimuli occurs near category boundaries. This is compatible with the idea that categories function like perceptual magnets and also with the observation that perceptual space is shrunk most in the centers of phonetic categories. The remainder of this section uses these measures to explore the model’s behavior under various parameter manipulations that simulate changes in phonetic category frequency, within-category variability, and speech signal noise.

Frequency

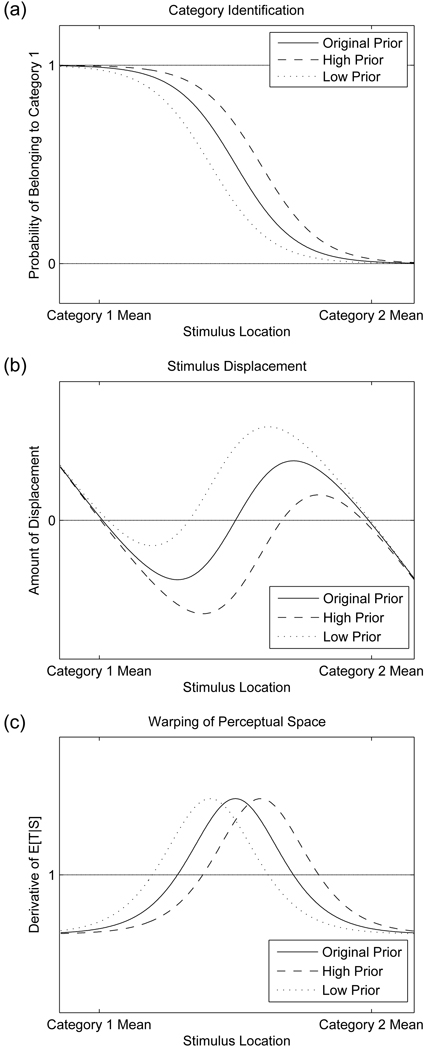

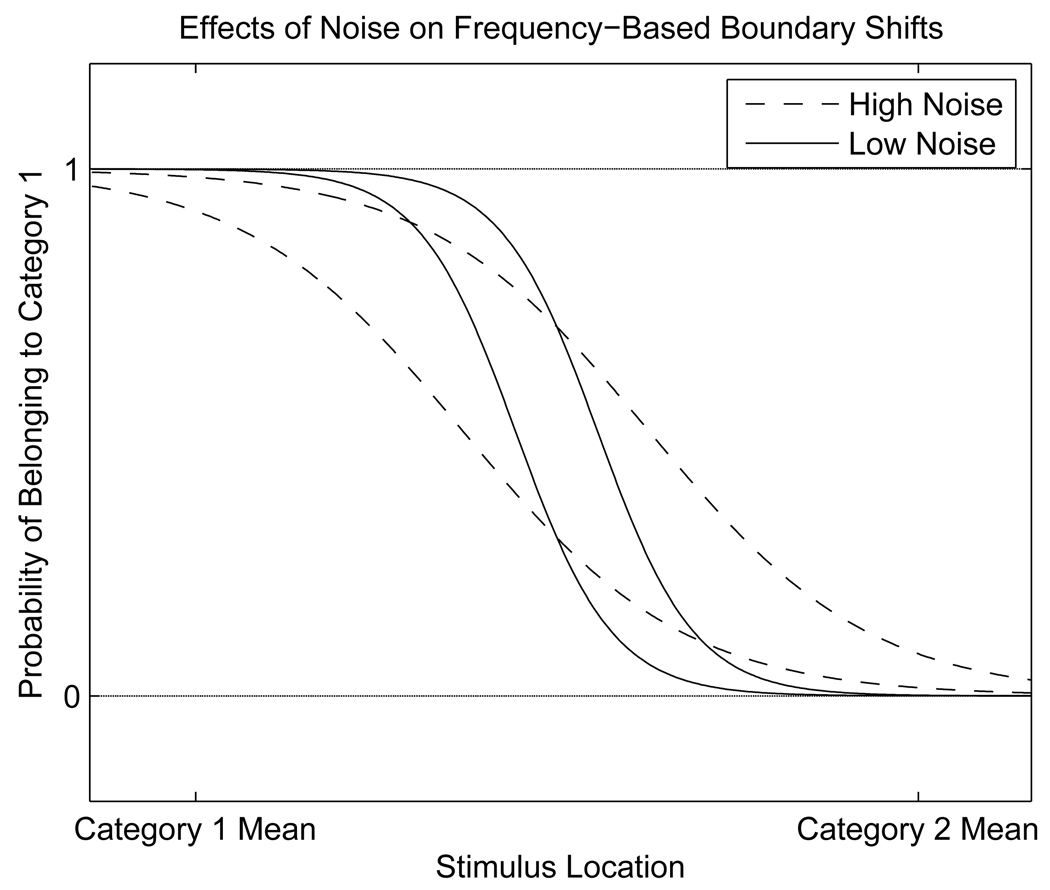

Manipulating the frequency of phonetic categories corresponds in our model to manipulating their prior probability. This manipulation causes a shift in the discriminative boundary between two categories, as described in Appendix B. In Figure 7 (a), the boundary is shifted toward the category with lower prior probability so that a larger region of acoustic space between the two categories is classified as belonging to the category with higher prior probability. Figure 7 (b) shows that when the prior probability of category 1 is increased, most stimuli between the two categories are shifted in the negative direction toward the mean of that category. This occurs because more sounds are classified as being part of category 1. Decreasing the prior probability of category 1 yields a similar shift in the opposite direction. Figure 7 (c) shows that the location of the expansion of perceptual space follows the shift in the category boundary.
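The boundary shift can be illustrated by solving for the stimulus at which the two categories are equally probable. Writing the identification function as a logistic with gain g = (μ1 − μ2)/(σc² + σS²) and bias b = log(p(c2)/p(c1)) + (μ1² − μ2²)/(2(σc² + σS²)), the boundary lies at S = b/g. The function name and values below are illustrative assumptions.

```python
import math

def category_boundary(mu1, mu2, p1, var_total):
    """Stimulus value at which p(c1 | S) = 0.5 for two equal-variance categories."""
    gain = (mu1 - mu2) / var_total
    bias = math.log((1 - p1) / p1) + (mu1 ** 2 - mu2 ** 2) / (2 * var_total)
    return bias / gain

# Category 1 has its mean at -2 and category 2 at 2 (illustrative values).
for p1 in (0.2, 0.5, 0.8):
    print(p1, category_boundary(-2.0, 2.0, p1, var_total=2.0))
```

With equal priors the boundary sits midway between the means. Raising the prior of category 1 moves the boundary toward category 2, so that more of the space between the categories is assigned to category 1.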

Figure 7.

Effects of prior probability manipulation on (a) identification, (b) displacement, and (c) warping. The prior probability of category 1, p(c1), was either increased or decreased while all other model parameters were held constant.

This shift qualitatively resembles the boundary shift that has been documented based on lexical context (Ganong, 1980). In contexts where one phoneme would form a lexical item and the other would not, phoneme boundaries are shifted toward the phoneme that makes the non-word, so that more of the sounds between categories are classified as the phoneme that would yield a word. Similar effects have also been found for lexical frequency (Connine, Titone, & Wang, 1993) and phonotactic probability (Massaro & Cohen, 1983; Pitt & McQueen, 1998). To model such a shift using the rational model, information about a specific lexical or phonological context needs to be encoded in the prior p(c). The prior would thus reflect the information about the frequency of occurrence of a phonetic category in a specific context. The rational model then predicts that the boundary shift can be modeled by a bias term of magnitude $\log\frac{p(c_1)}{p(c_2)}$ and that the peak in discrimination should shift together with the category boundary.

Variability

The category variance parameter indicates the amount of meaningful variability that is allowed within a phonetic category. One correlate of this might be the amount of coarticulation that a category allows: categories that undergo strong coarticulatory effects have high variance, whereas categories that are resistant to coarticulation have lower variance.3 In the model, categories with high variability should differ from categories with low variability in two ways. First, the discriminative boundary between the categories should be either shallow, in the case of high variability, or sharp, in the case of low variability (Figure 8 (a)). This means that listeners should be nearly deterministic in inferring which category produced a sound in the case of low variability, whereas they should be more willing to consider both categories if the categories have high variability. This pattern has been demonstrated empirically by Clayards, Tanenhaus, Aslin, and Jacobs (2008), who showed that the steepness of subjects’ identification functions along a /p/−/b/ continuum depends on the amount of category variability in the experimental stimuli.

Figure 8.

Effects of category variance on (a) identification, (b) displacement, and (c) warping. The category variance parameter was either increased or decreased while all other model parameters were held constant.

In addition to this change in boundary shape, the rational model predicts that the amount of variability should affect the weight given to the category means relative to the stimulus S when perceiving acoustic detail. Less variability within a category implies a stronger constraint on the sounds that the listener expects to hear, and this gives more weight to the category means. This should cause more extreme shrinkage of perceptual space in categories with low variance.

These two factors should combine to yield extremely categorical perception in categories with low variability and perception that is less categorical in categories with high variability. Figure 8 (b) shows that displacement has a higher magnitude than baseline for stimuli both within and between categories when category variance is decreased. Displacement is reduced with higher category variance. Figure 8 (c) shows the increased expansion of perceptual space between categories and the increased shrinkage within categories that result from low category variance. In contrast, categories with high variance yield more veridical perception.

Differences in category variance might explain why it is easier to find perceptual magnet effects in some phonetic categories than in others. According to vowel production data from Hillenbrand et al. (1995), reproduced here in Figure 1, the /i/ category has low variance along the dimension tested by Iverson and Kuhl (1995). The difficulty in reproducing the effect in other vowel categories might be partly attributable to the fact that listeners have weaker prior expectations about which vowel sounds speakers might produce within these categories.

This parameter manipulation can also be used to explore the limits on category variance: the rational model places an implicit upper limit on category variance if one is to observe enhanced discrimination between categories. This limit occurs when categories are separated by less than two standard deviations, that is, when the standard deviation increases to half the distance to the neighboring category. When the category variance reaches this point, the distribution of speech sounds in the two categories becomes unimodal and the acquired distinctiveness between categories disappears. Instead of causing enhanced discrimination at the category boundary, noise now causes all speech sounds to be pulled inward toward the space between the two category means, as illustrated in Figure 9. Shrinkage of perceptual space may be slightly less between categories than within categories, but all of perceptual space is pulled toward the center of the distribution. This perceptual pattern resembles the pattern that would be predicted if these speech sounds all derived from a single category, indicating that it is the distribution of speech sounds in the input, rather than the explicit category structure, that produces perceptual warping in the model.
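The two-standard-deviation limit can be checked numerically by counting the modes of an equal mixture of two Gaussians. This is a sketch under the assumption that both categories have the same standard deviation `sd` and equal prior probability; the function names and the specific values are hypothetical.

```python
import math


def mixture_density(x, mu1, mu2, sd):
    """Density of an equal-weight mixture of two Gaussians with a shared sd."""
    def normal(value, mu):
        z = (value - mu) / sd
        return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))
    return 0.5 * normal(x, mu1) + 0.5 * normal(x, mu2)


def count_modes(mu1, mu2, sd, n=2001):
    """Count strict local maxima of the mixture density on an evenly spaced grid."""
    lo = min(mu1, mu2) - 4.0 * sd
    hi = max(mu1, mu2) + 4.0 * sd
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    ys = [mixture_density(x, mu1, mu2, sd) for x in xs]
    return sum(1 for i in range(1, n - 1) if ys[i - 1] < ys[i] > ys[i + 1])


# Means 4 units apart: sd = 1.0 leaves them 4 sd apart, sd = 2.5 only 1.6 sd apart.
print(count_modes(-2.0, 2.0, 1.0), count_modes(-2.0, 2.0, 2.5))
```

When the means are more than two standard deviations apart the density has two peaks; once the separation falls below that, only one peak remains, midway between the category means.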

Figure 9.

Categories that overlap to form a single unimodal distribution act perceptually like a single category: speech sounds are pulled toward a point between the two categories.

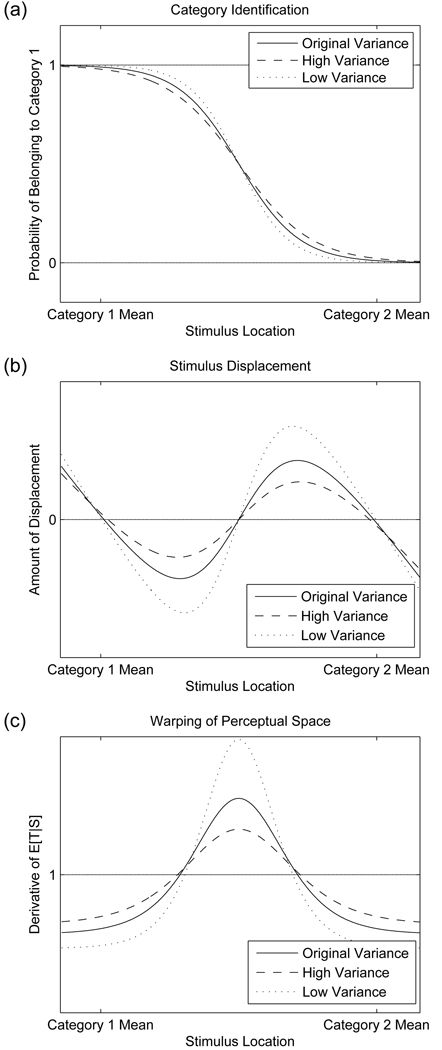

Noise

Manipulating the speech signal noise also affects the optimal solution in two different ways. More noise means that listeners should rely more on prior category information and less on the speech sound they hear, yielding more extreme shrinkage of perceptual space within categories. However, adding noise to the speech signal also makes the boundary between categories less sharp, so that in high-noise environments listeners are uncertain of speech sounds’ category membership (Figure 10 (a)). This combination of factors produces a complex effect: whereas adding low levels of noise makes perception more categorical, there comes a point where noise is too high to determine which category produced a speech sound, blurring the boundary between categories.

Figure 10.

Effects of speech signal noise on (a) identification, (b) displacement, and (c) warping. The speech signal noise parameter was either increased or decreased while all other model parameters were held constant.

With very low levels of speech signal noise, perception is only slightly biased (Figure 10 (b)) and there is a very low degree of shrinkage and expansion of perceptual space (Figure 10 (c)). This occurs because the model relies primarily on the speech sound in low-noise conditions, with only a small influence from category information. As noise levels increase to those used in the simulation in the previous section, the amount of perceptual bias and warping both increase. With further increases in speech signal noise, however, the shallow identification function begins to interfere with the availability of category information. For unambiguous speech sounds, displacement and shrinkage are both increased, as shown at the edges of the graphs in Figure 10. However, this does not simultaneously expand perceptual space between the categories. Instead, the high uncertainty about category membership causes reduced expansion at points between categories, dampening the difference between between-category and within-category discriminability.
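The non-monotonic effect of noise can be illustrated by tracking the local slope of the expected target, used here as a stand-in for warping (a slope above 1 indicates expansion, below 1 shrinkage). The sketch assumes two equal-variance Gaussian categories with equal priors; the means, variances, noise levels, and function names are illustrative assumptions, not the values from the simulations.

```python
import math


def expected_target(s, var_s, var_c=1.0, mu1=-2.0, mu2=2.0):
    """Expected target production given stimulus s under the assumed two-category model."""
    v = var_c + var_s
    log_odds = (mu1 - mu2) * s / v - (mu1 ** 2 - mu2 ** 2) / (2.0 * v)
    p1 = 1.0 / (1.0 + math.exp(-log_odds))
    w = var_c / v  # weight on the stimulus; the rest goes to the category means
    return w * s + (1.0 - w) * (p1 * mu1 + (1.0 - p1) * mu2)


def local_slope(s, var_s, h=1e-4):
    """Central-difference slope of the expected target at s."""
    return (expected_target(s + h, var_s) - expected_target(s - h, var_s)) / (2.0 * h)


# Slope at the category boundary (s = 0) and at a category mean (s = -2).
for var_s in [0.1, 1.0, 100.0]:
    print(var_s, round(local_slope(0.0, var_s), 3), round(local_slope(-2.0, var_s), 3))
```

With these values, expansion at the boundary grows from low to moderate noise and then collapses at very high noise, while shrinkage at the category mean increases steadily.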

The complex interaction between perceptual warping and speech signal noise suggests that there is some level of noise for which one would measure between-category discriminability as much higher than within-category discriminability. However, for very low levels of noise and for very high levels of noise, this difference would be much less noticeable. This suggests a possible explanation for variability that has been found in perceptual warping even among studies that have examined the English /i/ category (e.g. Lively & Pisoni, 1997). Extremely low levels of ambient noise should dampen the perceptual magnet effect, whereas the effect should be more prominent at higher levels of ambient noise.

A further prediction regarding speech signal noise concerns its effect on boundary shifts. As discussed above, the rational model predicts that when prior probabilities p(c) are different between two categories, there should be a boundary shift caused by a bias term that depends on the log ratio of the two prior probabilities. This bias term produces the largest boundary shift for small values of the gain parameter, which correspond to a shallow category boundary (see Appendix B). High noise variance produces this type of shallow category boundary, giving the bias term a large effect. This is illustrated in Figure 11, where for constant changes in prior probability, larger boundary shifts occur at higher noise levels. This prediction qualitatively resembles data on lexically driven boundary shifts: larger shifts occur when stimuli are low-pass filtered (McQueen, 1991) or presented in white noise (Burton & Blumstein, 1995).
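The dependence of the boundary shift on noise can be sketched by solving for the stimulus value at which the two categories are equally probable. The closed form below follows from Bayes' rule for two equal-variance Gaussian categories; the means, variances, and function name are illustrative assumptions, and only the prior values 0.3 and 0.7 are taken from the text.

```python
import math


def boundary(prior_c1, var_s, var_c=1.0, mu1=-2.0, mu2=2.0):
    """Stimulus value at which p(c1 | S) = 0.5 under the assumed model."""
    v = var_c + var_s
    log_prior_ratio = math.log(prior_c1 / (1.0 - prior_c1))
    # Setting the log posterior odds to zero and solving for the stimulus:
    return (mu1 + mu2) / 2.0 - v * log_prior_ratio / (mu1 - mu2)


# Same change in prior probability at two hypothetical noise levels.
for var_s in [1.0, 4.0]:
    print(var_s, round(boundary(0.3, var_s), 3), round(boundary(0.7, var_s), 3))
```

Because the shift scales with the summed variance, the same change in prior probability moves the boundary further when noise is higher.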

Figure 11.

Effects of speech signal noise on the magnitude of a boundary shift. Simulations at both noise levels used prior probability values for c1 of 0.3 (left boundary) and 0.7 (right boundary). The boundary shift is nevertheless larger for higher levels of speech signal noise.

Summary

Simulations in this section have shown that the qualitative perceptual patterns predicted by the rational model are the same under nearly all parameter combinations. The exceptions to this are the case of no noise, in which perception should be veridical, and the case of extremely high category variance or extremely high noise, in which listeners cannot distinguish between the two categories and effectively treat them as a single, larger category. In addition, these simulations have examined three types of variability in perceptual patterns. Shifts in boundary location occur in the model due to changes in the prior probability of a phonetic category, and these shifts mirror lexical effects that have been found empirically (Ganong, 1980). Differences in the degree of categorical perception in the model depend on the amount of meaningful variability in a category, and these predictions are consistent with the observation that the /i/ category has low variance along the relevant dimension. Finally, the model predicts effects of ambient noise on the degree of perceptual warping, a methodological detail that might explain the variability of perceptual patterns under different experimental conditions.

Testing the Predicted Effects of Noise

Simulations in the previous section suggested that ambient noise levels might be partially responsible for the contradictory evidence that has been found in previous empirical studies of the perceptual magnet effect. In this section, we present an experiment to test the model’s predictions with respect to changes in speech signal noise. The rational model makes two predictions about the effects of noise. The first prediction is that noise should yield a shallower category boundary, making it difficult at high noise levels to determine which category produced a speech sound. This effect should lower the discrimination peak between categories at very high levels of noise and is predicted by any model in which noise increases the variance of speech sounds from a phonetic category. The second prediction is that listeners should weight acoustic and category information differentially depending on the amount of speech signal noise. As noise levels increase, they should rely more on category information, and perception should become more categorical. This effect is predicted by the rational model but not by other models of the perceptual magnet effect, as will be discussed in detail later in the paper. While this effect is overshadowed by the shallow category boundary at very high noise levels, examining low and intermediate levels of noise should allow us to test this second prediction.

Previous research into effects of uncertainty on speech perception has focused on the role of memory uncertainty. Pisoni (1973) found evidence that within-category discrimination shows a larger decrease in accuracy with longer interstimulus intervals than between-category discrimination. He interpreted these results as evidence that within-category discrimination relies on acoustic (rather than phonetic) memory more than between-category discrimination and that acoustic memory traces decay with longer interstimulus intervals. Iverson and Kuhl (1995) also investigated the perceptual magnet effect at three different interstimulus intervals; though they did not explicitly discuss changes in warping related to interstimulus interval, within-category clusters appear to be tighter in their 2500 ms condition than in their 250 ms condition. These results are consistent with the idea that memory uncertainty increases with increased interstimulus intervals.

Several studies have also examined asymmetries in discrimination, under the assumption that memory decay will have a greater effect on the stimulus that is presented first. However, many of these studies have produced contradictory results, making the effects of memory uncertainty difficult to interpret (see Polka & Bohn, 2003, for a review). Furthermore, data from Pisoni (1973) indicate that longer interstimulus intervals do not necessarily increase uncertainty: discrimination performance was worse with a 0 ms interstimulus interval than with a 250 ms interstimulus interval.

Adding white noise is a more direct method of introducing speech signal uncertainty, and its addition to speech stimuli has consistently been shown to decrease subjects’ ability to identify stimuli accurately. Subjects make more identification errors (G. A. Miller & Nicely, 1955) and display a shallower identification function (Formby, Childers, & Lalwani, 1996) with increased noise, consistent with the rational model’s predictions. While it is known that subjects rely to some extent on both temporal and spectral cues in noisy conditions (Xu & Zheng, 2007), it is not known how reliance on these acoustic cues compares to reliance on prior information about category structure. To test whether reliance on category information is greater in higher noise conditions than in lower noise conditions, we replicated Experiment 3 of Iverson and Kuhl (1995), their multidimensional scaling experiment, with and without the presence of background white noise.

The rational model predicts that perceptual space should be distorted to different degrees in the noise and no-noise conditions. At moderate levels of noise, we should observe more perceptual warping than with no noise due to higher reliance on category information. At very high noise levels, however, if subjects are unable to make reliable category assignments, warping should decrease; as noted, this decrease is predicted by any model in which subjects are using category membership to guide their judgments. Thus, while the model is compatible with changes in both directions for different noise levels, our aim is to find levels of noise for which warping is higher with increased speech signal noise. Moreover, manipulating the noise parameter in the rational model should account for behavioral differences due to changing noise levels.

Methods

Subjects

Forty adult participants were recruited from the Brown University community. All were native English speakers with no known hearing impairments. Participants were compensated at a rate of $8 per hour. Data from two additional participants were excluded, one because of equipment failure and one because of failure to understand the task instructions.

Apparatus

Stimuli were presented through noise cancellation headphones, Bose Aviation Headset model AHX-02, from a computer at comfortable listening levels. Participants’ responses were entered and recorded using the computer that presented the stimuli. The presentation of the stimuli was controlled using Bliss software (Mertus, 2004), developed at Brown University for use in speech perception research.

Stimuli

Thirteen /i/ and /e/ stimuli, modeled after the stimuli in Iverson and Kuhl (1995), were created using the KlattWorks software (McMurray, in preparation). Stimuli varied along a single F1−F2 vector that ranged from an F1 of 197 Hz and an F2 of 2489 Hz to an F1 of 429 Hz and an F2 of 1925 Hz. The stimuli were spaced at equal intervals of 30 mels; exact formant values are shown in Table 1. F3 was set at 3010 Hz, F4 at 3300 Hz, and F5 at 3850 Hz for all stimuli. The bandwidths for the five formants were 53, 77, 111, 175, and 281 Hz. Each stimulus was 435 ms long. Pitch rose from 112 to 130 Hz over the first 100 ms and dropped to 92 Hz over the remainder of the stimulus. Stimuli were normalized in Praat (Boersma, 2001) to have a mean intensity of 70 dB.
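The 30-mel spacing can be checked against the endpoint formant values. The text does not state which Hz-to-mel conversion was used, so the sketch below assumes the formula mel = (1000 / ln 2) ln(1 + f / 1000); this choice, like the function names, is an assumption made for illustration.

```python
import math


def hz_to_mel(f_hz):
    """Hz-to-mel conversion (assumed formula; not specified in the text)."""
    return (1000.0 / math.log(2.0)) * math.log(1.0 + f_hz / 1000.0)


def mel_distance(f1_a, f2_a, f1_b, f2_b):
    """Euclidean distance between two (F1, F2) points in mel space."""
    d1 = hz_to_mel(f1_a) - hz_to_mel(f1_b)
    d2 = hz_to_mel(f2_a) - hz_to_mel(f2_b)
    return math.hypot(d1, d2)


# Endpoints of the continuum as given in the text; 13 stimuli make 12 steps.
total = mel_distance(197.0, 2489.0, 429.0, 1925.0)
step = total / 12
print(round(total, 1), round(step, 2))
```

Under this assumed conversion, the step size comes out close to 30 mels, consistent with thirteen equally spaced stimuli along the vector.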

For stimuli in the noise condition, 435 ms of white noise was created using Praat by sampling randomly from a uniform [−0.5, 0.5] distribution at a sampling rate of 11,025 Hz. The mean intensity of this waveform was then scaled to 70 dB. The white noise was added to each of the 13 stimuli, creating a set of stimuli with a signal-to-noise ratio of 0 dB.
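The noise manipulation can be approximated outside Praat as follows. This is a sketch only: a sine tone stands in for a vowel stimulus, intensity is matched by equating root-mean-square amplitude, and all function names are hypothetical. It does not reproduce Praat's intensity scaling.

```python
import math
import random

SAMPLE_RATE = 11025   # Hz, as stated in the text
DURATION_S = 0.435    # seconds, as stated in the text


def rms(samples):
    """Root-mean-square amplitude of a waveform."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def scale_to_rms(samples, target_rms):
    """Rescale a waveform so that its RMS amplitude equals target_rms."""
    factor = target_rms / rms(samples)
    return [v * factor for v in samples]


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels."""
    return 20.0 * math.log10(rms(signal) / rms(noise))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
n_samples = round(SAMPLE_RATE * DURATION_S)
# Placeholder stimulus: a 220 Hz tone, NOT one of the synthesized vowels.
tone = [math.sin(2.0 * math.pi * 220.0 * t / SAMPLE_RATE) for t in range(n_samples)]
noise = [rng.uniform(-0.5, 0.5) for _ in range(n_samples)]
noise = scale_to_rms(noise, rms(tone))  # equal intensity gives 0 dB SNR
mixed = [a + b for a, b in zip(tone, noise)]
print(n_samples, round(snr_db(tone, noise), 6))
```

Matching the intensity of the noise to that of the stimulus is what yields a signal-to-noise ratio of 0 dB.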

Procedure

Participants were assigned to either the no-noise or the noise condition. After reading and signing a consent form, they completed ten practice trials designed to familiarize them with the task and stimuli and subsequently completed a single block of 208 trials. This block included 52 “same” trials, four trials for each of 13 stimuli, and 156 “different” trials in which all possible ordered pairs of non-identical stimuli were presented once each. In each trial, participants heard two stimuli sequentially with a 250 ms interstimulus interval. They were instructed to respond as quickly as possible, pressing one button if the two stimuli were identical and another button if they could hear a difference between the two stimuli. Responses and reaction times were recorded.
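The trial counts follow directly from the design and can be reproduced by enumerating the trials. The stimulus labels 1 to 13 and the variable names are illustrative; the sketch does not model trial order or randomization, which the text does not describe.

```python
from itertools import permutations

stimuli = list(range(1, 14))  # 13 stimuli, labeled 1..13 for illustration

# Four "same" trials per stimulus.
same_trials = [(s, s) for s in stimuli for _ in range(4)]

# Every ordered pair of non-identical stimuli, presented once.
different_trials = list(permutations(stimuli, 2))

trials = same_trials + different_trials
print(len(same_trials), len(different_trials), len(trials))
```

Using ordered pairs means that each contrast is heard in both presentation orders.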

This procedure was nearly identical to that used by Iverson and Kuhl (1995), though the response method differed slightly in order to provide reaction times for “same” responses in addition to “different” responses. We also eliminated the response deadline of 2000 ms and instead recorded subjects’ full reaction times for each contrast, up to 10,000 ms.

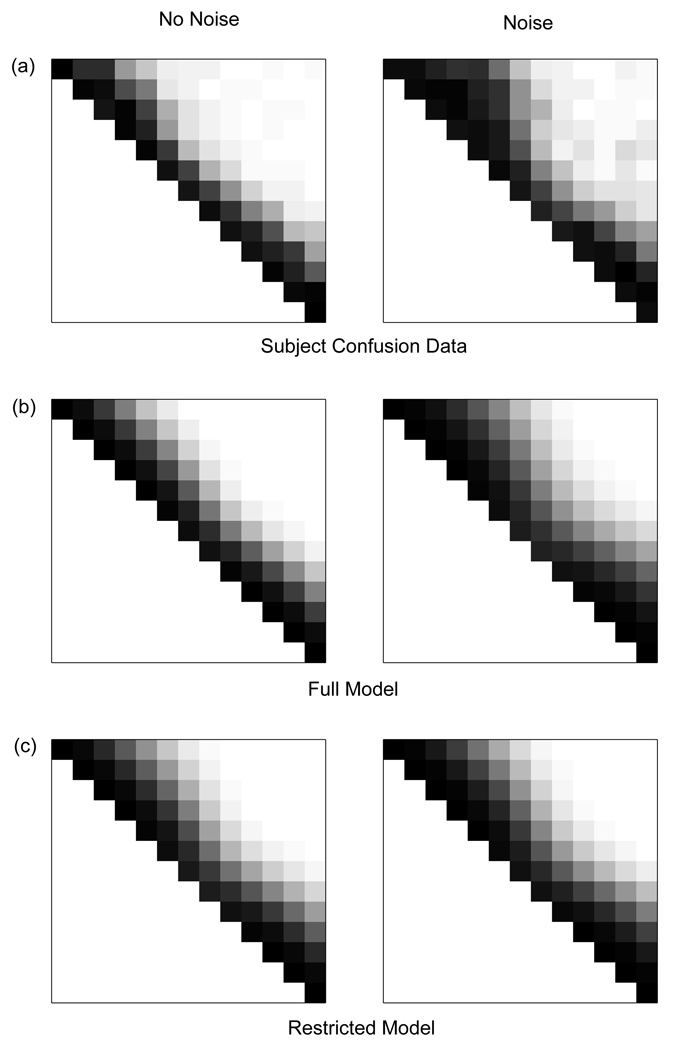

Results and Discussion