Abstract

The vestibular system helps maintain equilibrium and clear vision through reflexes, but it also contributes to spatial perception. In recent years, research in the vestibular field has expanded to higher level processing involving the cortex. Vestibular contributions to spatial cognition have been difficult to study because the circuits involved are inherently multisensory. Computational methods and the application of Bayes' theorem are used to form hypotheses about how information from different sensory modalities is combined with expectations based on past experience in order to obtain optimal estimates of cognitive variables like current spatial orientation. To test these hypotheses, neuronal populations are being recorded during active tasks in which subjects make decisions based on vestibular and visual or somatosensory information. This review highlights what is currently known about the role of vestibular information in these processes, the computations necessary to obtain the appropriate signals, and the benefits that have emerged thus far.

Introduction

Aristotle's five senses provide us with a conscious awareness of the world around us. Whether seeing a beloved face, hearing a familiar song, smelling a fragrant flower, tasting a favorite food or touching a soft fur coat, each sense gives rise to distinct sensations and perceptions. There is also a stealth sixth sensory system which often flies below our conscious radar. The vestibular end organs in the inner ear, adjacent to the cochlea, provide a sense of balance and orientation. Specifically, three semicircular canals on each side of the body measure how the head rotates in three-dimensional space (i.e., yaw, pitch and roll), and two otolith organs (the utricle and saccule) measure how the body translates in space and how it is positioned relative to gravity. Signals from the canals and otoliths are transmitted to the central nervous system via afferents in the vestibular (8th cranial) nerve.

In general, the vestibular system serves many different functions, some of which have been well-studied. For example, vestibular signals are vital for generating reflexive eye movements that keep vision clear during head motion, known as the vestibulo-ocular reflex (for recent reviews see Angelaki, 2004; Angelaki and Hess, 2005; Cullen and Roy, 2004; Raphan and Cohen, 2002). The vestibular system is also critical for a number of autonomic and limbic system functions (Balaban, 1999; Yates and Bronstein, 2005; Yates and Stocker, 1998). Interestingly, there is no distinct, conscious vestibular sense or percept (Angelaki and Cullen, 2008), and even with one's eyes closed, most conditions that activate the vestibular system also activate other sensors, mostly body proprioceptors and/or tactile receptors. Thus, while other senses are often stimulated in isolation, vestibular stimulation is seldom discrete. In fact, proprioceptive-vestibular interactions occur as early as the first synapse in the brain, as signals from muscles, joints, skin and eyes are continuously integrated with vestibular inflow.

Perhaps related to the lack of a distinct vestibular sensation is the fact that, unlike other senses, there is no primary vestibular cortex. That is, there is no discrete, circumscribed cortical field where either all cells respond to vestibular stimulation or those that do respond do so primarily for vestibular stimuli. Rather, vestibular responsiveness is found in many cortical areas, typically with convergent visual, somatosensory or motor-related signals, and there is little or no evidence that these areas are hierarchically organized, as is the case for primary visual cortex and the tiers of extrastriate areas (Felleman and Van Essen, 1991; Lewis and Van Essen, 2000). Thus, our current understanding of cortical vestibular functions is still fragmented, and the functional roles of vestibular signals in these areas remain unclear.

Vestibular-related research in the 70's, 80's and 90's focused on low-level (brainstem-mediated) automatic responses, like the vestibulo-ocular and vestibulo-colic reflexes. This focus displaced the study of the sensory functions of the vestibular system, including its role in spatial perception, which had been very influential in the 50's and 60's. The ability to isolate and study central processing during reflexive motor behavior that is purely vestibular in origin, the simplicity of these circuits, as well as the ability to measure and quantify motor output, all contributed to the impressive focus on reflexes. In recent years, however, interest in vestibular-mediated sensation has re-surfaced in the context of multisensory integration, cortical processing and spatial perception, and this work constitutes the focus of the current review. The studies we will review utilize quantitative measures based on signal detection theory that were developed for the purpose of linking neuronal activity with perception in other sensory systems, as well as probabilistic theories, such as Bayesian inference, which have been recently applied successfully to vestibular multisensory perception.

More specifically, we bring to light recent studies that have applied novel quantitative analysis to the vestibular system, focusing exclusively on its role in spatial perception. We have chosen three spatial orientation functions to describe in detail: (1) the perception of self-motion, (2) the perception of tilt, and (3) the role of vestibular signals in visuospatial updating and the maintenance of spatial constancy. Since many, if not all, of these behaviors likely find a neural correlate in the cortex, we first summarize the main cortical areas that process vestibular information.

Representation of vestibular information in cortex

A number of cortical areas receiving short latency vestibular signals either alone or more commonly in concert with proprioceptive and tactile inputs have been well explored (see reviews by Guldin and Grüsser 1998; Fukushima 1997). Other efforts have focused on vestibular signals in cortical oculomotor areas like frontal and supplementary eye fields (e.g., Ebata et al. 2004; Fukushima et al. 2000; 2004). More recently, strong vestibular modulation has been reported in extrastriate visual cortex, particularly in the dorsal subdivision of the medial superior temporal area (MSTd), which is thought to mediate the perception of heading from optic flow (Britten and Van Wezel, 1998; Duffy and Wurtz, 1991; 1995). Approximately half of MSTd neurons are selective for motion in darkness (Bremmer et al., 1999; Duffy, 1998; Gu et al., 2006; 2007; Page and Duffy, 2003; Takahashi et al., 2007). Convergence of vestibular and optic flow responses is also found in the ventral intraparietal area (VIP; Bremmer et al., 2002a,b; Klam and Graf, 2003; Schaafsma and Duysens, 1996; Schlack et al., 2002). Unlike MSTd neurons, many VIP cells respond to somatosensory stimulation with tactile receptive fields on the monkey's face and head (Avillac et al., 2005; Duhamel et al., 1998). Indeed, one might expect most vestibular-related areas to exhibit somatosensory/proprioceptive responses since these two signals are highly convergent, even as early as the level of the vestibular nucleus (Cullen and Roy, 2004; Gdowski and McCrea, 2000).

Imaging studies reveal an even larger cortical involvement in representing vestibular information, including the temporo-parietal cortex and the insula, the superior parietal lobe, the pre- and post-central gyri, anterior cingulate and posterior middle temporal gyri, premotor and frontal cortices, inferior parietal lobule, putamen and hippocampal regions (Bottini et al., 1994; Fasold et al., 2002; Friberg et al., 1985; Lobel et al., 1998; Suzuki et al., 2001; Vitte et al., 1996). Using electrical stimulation of the vestibular nerve in patients, the prefrontal lobe and anterior portion of the supplementary motor area have also been activated at relatively short latencies (De Waele et al., 2001). However, imaging, and to a lesser extent single unit recording studies, may overstate the range of vestibular representations. In particular, vestibular stimuli often co-activate the somatosensory/proprioceptive systems, as well as evoke postural and oculomotor responses, which might in turn result in increased cortical activations.

The complex and multimodal nature of cortical vestibular representations may explain why progress has been slow in understanding the neural basis of spatial orientation. Even more important, however, is the necessity of using active tasks. Most neurophysiological studies of the vestibular system to date have measured neuronal responses during passive vestibular stimulation. In contrast, work on the neural basis of other sensory functions, for example, visual motion and somatosensory frequency discrimination, has recorded or manipulated neural activity while subjects perform a relevant and demanding perceptual task (Brody et al., 2002; Cohen and Newsome, 2004; Parker and Newsome, 1998; Romo and Salinas, 2001; 2003; Sugrue et al., 2005). Active tasks have now begun to be used to investigate the neuronal basis of heading perception. We next describe a framework for this work.

Bayesian framework for multisensory perception

Because high level vestibular signals are so often combined with information from other sensory systems, an approach that explicitly addresses multimodal cue combination is essential. One such approach, particularly well-suited to the vestibular system, derives from Bayesian probability theory (Kersten et al., 2004; Knill and Pouget, 2004; Wilcox et al., 2004; MacNeilage et al. 2008). The basic concept is that all sensory signals contain some degree of uncertainty, and that one way to maximize performance is to take into account both signal reliability (sensory likelihoods) and the chance of encountering any given signal (prior probabilities) (Clark and Yuille, 1990; Knill and Pouget, 2004). The approach relies on probability distributions, which can be multiplied together in a sort of chain rule based on Bayes' theorem. For example, we can compute the probability of an environmental variable having a particular value X (e.g., heading direction) given two sensory cues, s1 (e.g., vestibular) and s2 (e.g., optic flow) [note that s1 and s2 can be thought of as sets of firing rates from populations of neurons selective for cues 1 and 2]:

$$P(X \mid s_1, s_2) = \frac{P(s_1 \mid X)\, P(s_2 \mid X)\, P(X)}{P(s_1)\, P(s_2)} \qquad [1]$$

In this equation, P(X|s1,s2) is called the `posterior' probability, which can be thought of as containing both an estimate of X and the uncertainty associated with that estimate. Note that `uncertainty' in this framework translates into the width of the underlying probability distributions. That is, a narrow distribution (low uncertainty) corresponds to an estimate with low variance; a broad distribution (high uncertainty) corresponds to an estimate with high variance.

To estimate the posterior density function, important terms in equation [1] are P(s1|X) and P(s2|X), which represent the sensory `likelihood' functions for cues 1 and 2, respectively. The likelihood distributions quantify the probability of acquiring the observed sensory evidence (from cue 1 or cue 2), given each possible value of the stimulus. The equation also includes the distribution of likely stimuli, called the `prior', P(X). For example, P(X) can include knowledge of the statistical properties of experimentally or naturally occurring stimuli (e.g., for head/body orientation, upright orientations are a priori more likely than tilted orientations; see later section on tilt perception). Finally, P(s1) and P(s2), the probability distributions of the sensory signals, are stimulus-independent and behave like constants in the equation. The Bayesian approach defines an optimal solution as one that maximizes the left side of the equation, i.e., the posterior. This estimate is called the `maximum a posteriori' or `MAP' estimate. Note that, when the prior distribution is broad relative to the likelihoods, its influence on the posterior is vanishingly small, in which case the MAP estimate is effectively identical to the `maximum-likelihood' (ML) estimate.
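The MAP and ML estimates described above can be illustrated with a small numerical sketch. It evaluates the numerator of equation [1] on a grid of candidate headings and picks the peak. The Gaussian shapes, the grid, and all numerical values (cue readings of 4 and 6 deg, standard deviations of 2 deg) are assumptions chosen for illustration, not values from the studies reviewed here.

```python
import math

def gaussian(x, mu, sigma):
    """Unnormalized Gaussian; the normalization does not affect the location of the peak."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)

def map_estimate(grid, s1, sigma1, s2, sigma2, prior_mu, prior_sigma):
    """Return the grid value X that maximizes P(s1|X) * P(s2|X) * P(X)."""
    def posterior(x):
        likelihood_1 = gaussian(s1, x, sigma1)   # P(s1|X)
        likelihood_2 = gaussian(s2, x, sigma2)   # P(s2|X)
        prior = gaussian(x, prior_mu, prior_sigma)  # P(X)
        return likelihood_1 * likelihood_2 * prior
    return max(grid, key=posterior)

# Hypothetical heading grid from -20 to +20 deg in 0.01 deg steps
grid = [i / 100.0 for i in range(-2000, 2001)]

# Very broad prior: the MAP estimate coincides with the ML estimate
ml_like = map_estimate(grid, 4.0, 2.0, 6.0, 2.0, prior_mu=0.0, prior_sigma=1e6)

# Narrow prior centered on 0 deg: the estimate is pulled toward the prior
map_narrow = map_estimate(grid, 4.0, 2.0, 6.0, 2.0, prior_mu=0.0, prior_sigma=2.0)

print(ml_like, map_narrow)
```

With the broad prior the peak sits midway between the two cue readings; with the narrow prior it moves toward zero, which is the behavior the text attributes to an informative prior.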

This framework makes specific predictions about cue integration that can be tested behaviorally. Although comprehensive, the full Bayesian framework remains computationally demanding, as it involves predictions based on multiplication of sensory likelihoods and prior distributions. Two assumptions greatly simplify the computations. The first assumption is a broad (uniform) prior that is essentially flat when compared to the individual cue likelihoods (e.g., for heading perception a uniform prior means that all directions of motion are equally probable). As stated earlier, this assumption removes the prior from the computation, so that the posterior estimate (here referred to as S_BIMODAL) can be approximated by the product of the sensory likelihoods. The second assumption is Gaussian likelihoods, each described by two parameters: the mean and the uncertainty, or variance, σ². In this case, the peak of the product of the likelihoods (i.e., the ML estimate) is simply a weighted sum of the two single cue estimates. This weighting is in proportion to reliability, where reliability is described as the inverse variance, 1/σ² (Hillis et al., 2004; Knill and Saunders, 2003):

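$$S_{\mathrm{BIMODAL}} = w_1 S_1 + w_2 S_2, \qquad w_1 = \frac{1/\sigma_1^2}{1/\sigma_1^2 + 1/\sigma_2^2}, \qquad w_2 = \frac{1/\sigma_2^2}{1/\sigma_1^2 + 1/\sigma_2^2} \qquad [2]$$

where $S_1$ and $S_2$ denote the two single cue estimates.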

As a result, stronger cues have a higher weighting and weaker cues have a lower weighting on the posterior estimate. In addition, the variance of the bimodal estimate (posterior) is lower than that of the unimodal estimates:

$$\sigma_{\mathrm{BIMODAL}}^2 = \frac{\sigma_1^2\, \sigma_2^2}{\sigma_1^2 + \sigma_2^2} \qquad [3]$$

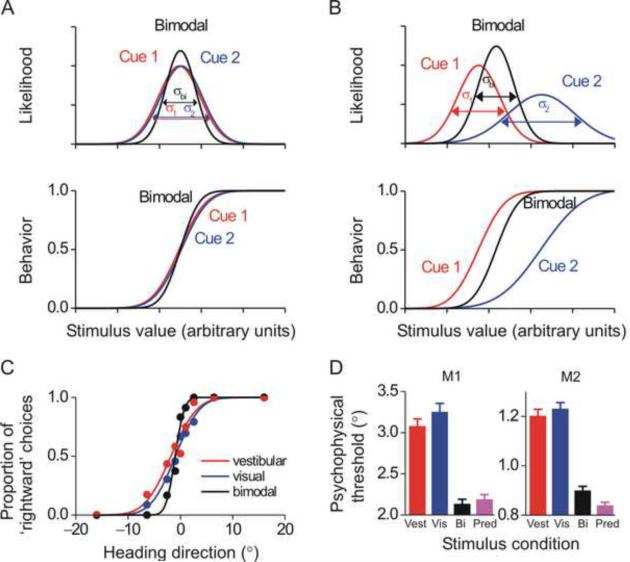

According to equation [3], the largest improvement in performance, relative to the performance obtained by using the more reliable single cue, occurs when the two cues are of equal reliability (i.e., σ1 = σ2). This condition is shown schematically in Fig. 1A (top). Experimentally, the decrease in the variance of the bimodal estimate would be manifest as a steepening of the psychophysical curve and a decrease in the discrimination threshold by a factor of the square root of 2, as illustrated in Fig. 1A (bottom, black versus red/blue lines). Thus, to experimentally test for the predicted improvement in sensitivity (threshold), approximately matching the reliability of the two cues is best (Fig. 1A, equation [3]).
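The reliability-weighted combination and the square-root-of-2 improvement can be checked numerically. The following is a minimal sketch assuming Gaussian likelihoods and a flat prior, as in the text; the function name `combine_cues` and the example standard deviations are hypothetical.

```python
import math

def combine_cues(s1, sigma1, s2, sigma2):
    """ML combination of two Gaussian cues: weights proportional to inverse variance."""
    r1 = 1.0 / sigma1 ** 2          # reliability of cue 1
    r2 = 1.0 / sigma2 ** 2          # reliability of cue 2
    w1 = r1 / (r1 + r2)
    w2 = r2 / (r1 + r2)
    s_bimodal = w1 * s1 + w2 * s2   # weighted sum of single cue estimates
    sigma_bimodal = math.sqrt(1.0 / (r1 + r2))  # bimodal standard deviation
    return s_bimodal, sigma_bimodal, (w1, w2)

# Equal reliability: both cues have sigma = 2 (arbitrary units)
_, sigma_eq, weights_eq = combine_cues(0.0, 2.0, 0.0, 2.0)
ratio_eq = 2.0 / sigma_eq           # improvement relative to the better single cue

# Unequal reliability: cue 2 is three times noisier
_, sigma_uneq, _ = combine_cues(0.0, 2.0, 0.0, 6.0)
ratio_uneq = 2.0 / sigma_uneq

print(weights_eq, ratio_eq, ratio_uneq)
```

In this sketch the improvement factor equals the square root of 2 for matched cues and shrinks toward 1 as the cues become mismatched, which is why matching reliabilities gives the most sensitive test of the predicted threshold reduction.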

Fig. 1. Visual/vestibular cue integration: theory and behavioral data.

(A) According to the Bayesian framework, if the reliability of two cues, cue 1 (red) and cue 2 (blue), is equal, then when both cues are present, as in the bimodal condition (black), the posterior distribution should be narrower and the psychometric function steeper. (B) If the reliability of two cues, cue 1 (red) and cue 2 (blue) is not equal, the resultant bimodal (posterior) probability is shifted towards the most reliable cue. Similarly, the bimodal psychometric function in a cue-conflict experiment will be shifted towards the most reliable cue. (C) This theory was tested using a heading discrimination task with vestibular cues (red), visual cues (blue) and a bimodal condition (black). (D) Average behavioral thresholds in two monkeys; the bimodal threshold (black) was lower than both single cue thresholds (red=vestibular; blue=visual) and similar to the prediction from equation [3] (purple). Error bars represent standard errors. C and D are reprinted with permission from Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 11: 1201–1210, 2008.

In Fig. 1A, when the two cues are of equal reliability (same variance), the posterior is equally influenced by both cues. In Fig. 1B, cue 2 is degraded (e.g., by lowering the coherence for visual motion stimuli, see below), resulting in a Gaussian with higher variance (lower reliability). In this case, the stronger cue would have the largest influence on the posterior. To illustrate the larger influence of the most reliable cue on the posterior, a small conflict is presented, such that the means of the two likelihoods differ (Fig. 1B, top; Ernst and Banks 2002). As expected from equations [2], the mean of the bimodal distribution lies closer to the cue with the higher reliability. This experimental manipulation, i.e., varying cue reliability and putting the two cues in conflict, allows the weights with which cues 1 and 2 are combined in the bimodal estimate to be experimentally measured (Ernst and Banks 2002; Fetsch et al. 2009a).
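One way to picture the cue-conflict logic is sketched below, under the assumption that the bimodal estimate is a linear mixture of the two single cue headings with weights that sum to 1. The predicted visual weight follows from single cue variability, and the observed weight is recovered from how far the bimodal estimate shifts toward the visual heading. Function names and all numbers are hypothetical.

```python
def predicted_visual_weight(sigma_vestibular, sigma_visual):
    """Visual weight predicted from single cue reliabilities (inverse variances)."""
    r_vest = 1.0 / sigma_vestibular ** 2
    r_vis = 1.0 / sigma_visual ** 2
    return r_vis / (r_vest + r_vis)

def observed_visual_weight(bimodal_estimate, vestibular_heading, visual_heading):
    """Solve bimodal = (1 - w) * vestibular + w * visual for the visual weight w."""
    if visual_heading == vestibular_heading:
        raise ValueError("weights cannot be recovered without a cue conflict")
    return (bimodal_estimate - vestibular_heading) / (visual_heading - vestibular_heading)

# Hypothetical 4 deg conflict: vestibular heading -2 deg, visual heading +2 deg
vest_heading, vis_heading = -2.0, 2.0

# High visual coherence (visual cue more reliable) versus low coherence
w_high = predicted_visual_weight(sigma_vestibular=3.0, sigma_visual=1.5)
w_low = predicted_visual_weight(sigma_vestibular=3.0, sigma_visual=6.0)

# Bimodal estimate an ideal observer would report at high coherence
bimodal_high = (1.0 - w_high) * vest_heading + w_high * vis_heading
w_recovered = observed_visual_weight(bimodal_high, vest_heading, vis_heading)

print(w_high, w_low, bimodal_high, w_recovered)
```

Comparing the recovered weight with the predicted weight across coherence levels is the kind of test that the conflict manipulation makes possible.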

Here we use these concepts and predictions to tackle two perceptual functions of the vestibular system: the perception of self-motion (heading) and tilt perception. Note that the assumption of a broad prior is only valid under some conditions. We will see that many aspects of spatial orientation perception (e.g., tilt illusions during low frequency linear acceleration and systematic errors in the perception of visual vertical for tilted subjects) can be explained by a biased prior that dominates perception when the sensory cues are known to be unreliable. We return to this topic later in this review. Next we summarize an application of the concepts outlined above, and the simplified expressions in equations [2] and [3], in experiments aimed to understand the neural basis of multisensory integration for heading perception.

Visual/vestibular cue integration for heading perception

The predictions illustrated schematically in Fig. 1A and 1B have recently been tested for visual/vestibular heading perception in both humans and macaques using a two-alternative-forced-choice (2AFC) task (Fetsch et al. 2009a,b; Gu et al. 2008). In a first experiment, the reliability of the individual cues was roughly equated by reducing the coherence of the visual motion stimulus, thus testing the predictions of equation [3] (Gu et al., 2008). As illustrated in Fig. 1C, the monkey's behavior followed the predictions of Fig. 1A: the psychometric function was steeper for bimodal compared to unimodal stimulation (black vs. red/blue). On average, bimodal thresholds were reduced by ~30% under cue combination (Fig. 1D, black vs. red/blue) and were similar to the predictions (Fig. 1D, purple). Thus, macaques combine vestibular and visual sensory self-motion cues nearly optimally to improve perceptual performance.

In a follow-up study, visual and vestibular cues were put in conflict while varying visual motion coherence, thus testing the predictions of equations [2] (Fetsch et al. 2009a,b). Both macaque and human behavior showed trial-by-trial re-weighting of visual and vestibular information, such that bimodal perception was biased towards the most reliable cue, according to the predictions. At the two extremes, perception was dominated by visual cues at high visual coherences (`visual capture') and by vestibular cues at low visual coherences (`vestibular capture') (Fetsch et al. 2009a,b). These observations, illustrating optimal or near optimal visual/vestibular cue integration for self-motion perception and the switching between visual and vestibular capture according to cue reliability, help resolve previous conflicting reports in the literature. For example, real motion was reported to dominate the perceived direction of self-motion in some experiments (Ohmi, 1996; Wright et al., 2005), but vision dominated in other studies (Telford et al., 1995). To account for these disparate findings, it had been assumed that visual/vestibular convergence involves complex, non-linear interactions or frequency-dependent channels and that the weightings are determined by the degree of conflict (Berthoz et al., 1975; Ohmi, 1996; Probst et al., 1985; Wright et al., 2005; Zacharias and Young, 1981). Optimal cue integration provides a robust and quantitative alternative that likely explains these multiple experimental outcomes.

By showing, for the first time, cue integration behavior in monkeys, these experiments offered a unique opportunity to tackle the neural correlate of multisensory ML estimation and Bayesian inference at the neuronal level. By contrast, previous neurophysiological work on multisensory integration was done in anesthetized or passively-fixating animals. Next we summarize how single neurons combine visual and vestibular cues in the context of a perceptual task around psychophysical threshold.

Vestibular responses in dorsal visual stream and their role in heading perception

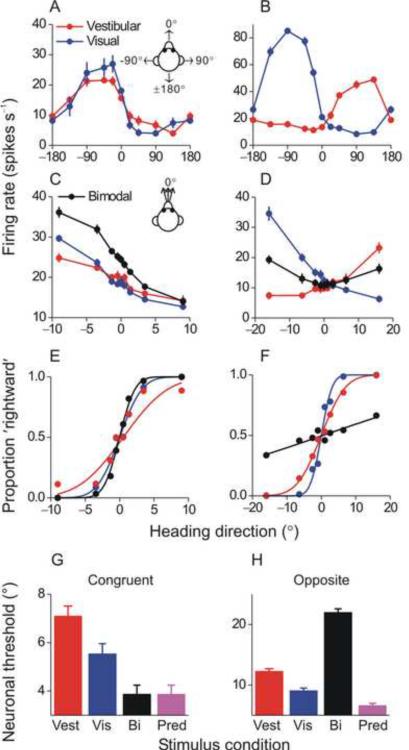

By taking advantage of macaques trained in the unimodal and bimodal heading discrimination tasks, a recent series of experiments (Fetsch et al. 2009a,b; Gu et al. 2007; 2008) used the ML framework to identify a potential neural correlate of heading perception in the dorsal medial superior temporal area (MSTd) of the dorsal visual stream. More than half of MSTd neurons are multimodal and are tuned both to optic flow and translational motion in darkness (Bremmer et al., 1999; Duffy, 1998; Gu et al., 2006; Page and Duffy 2003). That neuronal responses in darkness are driven by vestibular signals was shown by recording before and after bilateral lesions of the vestibular end organs (Gu et al., 2007; Takahashi et al., 2007). Multimodal MSTd cells fall into one of two groups: (1) `Congruent' neurons have similar visual/vestibular preferred directions and thus signal the same motion direction in three-dimensional space under both unimodal (visual or vestibular) stimulus conditions (Fig. 2A) and (2) `Opposite' neurons prefer nearly opposite directions of heading under visual and vestibular stimulus conditions (Fig. 2B).

Fig. 2. Optimal multisensory integration in multimodal MSTd cells.

(A) `Congruent' cells have similar tuning for vestibular (red) and visual (blue) motion cues. (B) `Opposite' cells respond best to vestibular cues in one direction and visual cues in the opposite direction. (C), (D) Mean firing rates of the congruent and opposite MSTd cell during the heading discrimination task based on vestibular (red), visual (blue) or bimodal (black) cues. (E), (F) Neurometric functions for the congruent and opposite MSTd cell (same data as in C and D). (G), (H) Average neuronal thresholds for congruent cells and opposite cells in the bimodal condition (black) are compared with the single cue thresholds (red and blue) and with the prediction (equation [3], purple). Reprinted with permission from Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 11: 1201–1210, 2008.

By recording neural activity while the animal performed the 2AFC heading discrimination task (Fig. 2C, D), Gu et al. (2008) showed that congruent and opposite MSTd neurons may have different, but complementary, roles in heading perception. When the cues were combined (bimodal condition), tuning became steeper for congruent cells but shallower for opposite cells. The effect on tuning steepness is reflected in the ability of an ideal observer to use a congruent or opposite neuron's firing rate to accurately discriminate heading under visual, vestibular and combined conditions. This can be seen by ROC analysis (Britten et al., 1992), in which firing rates are converted into `neurometric' functions (Fig. 2E and F). A cumulative Gaussian can be fit to these data, so that neuronal thresholds can be computed by analogy to behavioral thresholds. The smaller the threshold, the steeper the neurometric function and the more sensitive the neuron is to subtle variations in heading. For congruent neurons, the neurometric function was steepest in the combined condition (Fig. 2E, black), indicating that they became more sensitive, i.e., could discriminate smaller variations in heading when both cues were provided. In contrast, the reverse was true for opposite neurons (Fig. 2F), which became less sensitive during bimodal stimulation.
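As a rough illustration of how firing rates can be turned into points on a neurometric function, the sketch below computes the area under the ROC curve as the probability that a response drawn from one heading exceeds a response drawn from a comparison distribution. The choice of comparison distribution (here a single hypothetical reference set), the function name `roc_area`, and all firing rates are assumptions for illustration and are not taken from the cited experiments.

```python
def roc_area(rates_test, rates_reference):
    """Area under the ROC curve: P(test > reference), counting ties as one half."""
    wins = 0.0
    for a in rates_test:
        for b in rates_reference:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(rates_test) * len(rates_reference))

# Hypothetical firing rates (spikes/s) over repeated trials
reference = [10, 12, 11, 9, 10]
responses = {          # keyed by heading in degrees
    -4.0: [6, 7, 8, 7, 6],
    -1.0: [9, 10, 11, 9, 10],
    1.0: [11, 12, 10, 12, 11],
    4.0: [14, 15, 13, 16, 14],
}

# One ROC value per heading; plotted against heading this forms a neurometric function
neurometric = {h: roc_area(r, reference) for h, r in sorted(responses.items())}

for heading, p in neurometric.items():
    print(f"heading {heading:+.1f} deg -> proportion 'rightward' {p:.2f}")
```

A cumulative Gaussian fitted to such points would then yield a neuronal threshold; the fitting step is omitted here to keep the sketch short.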

Indeed, the average neuronal thresholds for congruent MSTd cells followed a pattern similar to the monkeys' behavior, i.e., thresholds for bimodal stimulation were lower than those in either single cue condition and similar to the maximum likelihood prediction (derived from unimodal vestibular and visual neuronal thresholds, which were the inputs to equation [3]) (Fig. 2G). In contrast, bimodal neuronal thresholds for opposite cells were higher than either threshold in the single-cue conditions or the predictions (Fig. 2H), indicating that these neurons became less sensitive during cue combination (Gu et al., 2008). MSTd responses in the vestibular condition were significantly correlated with perceptual decisions, with correlations being strongest for the most sensitive neurons (Gu et al. 2007). Notably, only congruent cells were significantly correlated with the monkey's heading judgments in the bimodal stimulus condition, consistent with the hypothesis that congruent cells might be monitored selectively by the monkey to achieve near-optimal performance under cue combination (Gu et al., 2008).

Two questions follow: how does cue reliability (manipulated by changing visual coherence) affect the weights by which neurons combine their visual and vestibular inputs, and do the predictions of equations [2] hold at the single neuron level? These questions were tested first in passively-fixating animals (Morgan et al. 2008) and more recently in trained animals performing the heading discrimination task, in which visual and vestibular cues were put in conflict while visual motion coherence was varied (Fetsch et al. 2009b). Bimodal neural responses were well fit by a weighted linear sum of vestibular and visual unimodal responses, with weights being dependent on visual coherence (Morgan et al. 2008). Thus, MSTd neurons appear to give more weight to the strongest cue and less weight to the weakest cue, a property that might contribute to similar findings in behavior.
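A weighted linear sum of this kind can be fitted by ordinary least squares. The sketch below is a simplified illustration, not the fitting procedure of the cited studies: it assumes a model with two weights and no constant offset, and the tuning values are synthetic.

```python
def fit_weights(r_vestibular, r_visual, r_bimodal):
    """Least-squares weights for r_bimodal ~ w_vest * r_vestibular + w_vis * r_visual."""
    svv = sum(a * a for a in r_vestibular)
    sii = sum(b * b for b in r_visual)
    svi = sum(a * b for a, b in zip(r_vestibular, r_visual))
    svy = sum(a * y for a, y in zip(r_vestibular, r_bimodal))
    siy = sum(b * y for b, y in zip(r_visual, r_bimodal))
    det = svv * sii - svi * svi          # determinant of the 2x2 normal equations
    if det == 0:
        raise ValueError("unimodal responses are collinear; weights are not identifiable")
    w_vest = (svy * sii - siy * svi) / det
    w_vis = (siy * svv - svy * svi) / det
    return w_vest, w_vis

# Synthetic unimodal tuning (spikes/s) at four headings
r_vest = [10.0, 20.0, 30.0, 20.0]
r_vis = [5.0, 25.0, 40.0, 15.0]

# Synthetic bimodal responses built with known weights, to check the fit recovers them
r_bi = [0.3 * a + 0.9 * b for a, b in zip(r_vest, r_vis)]

w_vest, w_vis = fit_weights(r_vest, r_vis, r_bi)
print(w_vest, w_vis)
```

Repeating such a fit at each visual coherence level would show how the fitted weights change with cue reliability.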

In summary, these experiments in MSTd have pioneered two general approaches that have proven valuable in studying the neural basis of vestibular multisensory self-motion perception: (1) Cortical vestibular responses were quantified in the context of a perceptual task performed around psychophysical threshold and (2) Neuronal visual/vestibular cue integration was studied in a behaviorally-relevant way, while simultaneously measuring behavior. But is MSTd the only area with links to multisensory integration for heading perception? What about other areas with vestibular/optic flow multimodal responses, like VIP? Interestingly, a recent human neuroimaging study suggested that area VIP and a newly-described visual motion area in the depths of the cingulate sulcus at the boundary of the medial frontal cortex and the limbic lobe may have a more central role than MST in extracting visual cues to self-motion (Wall and Smith, 2008). This observation can be directly investigated with single unit recording in monkeys. It is thus important that approaches like the one described here, where the readout of cue integration involves an active perceptual decision, continue to be used for studying the detailed mechanisms by which neurons mediate visual/vestibular integration and for examining how these signals are dynamically re-weighted to optimize performance as cue reliability varies (Knill and Pouget, 2004). Such approaches will continue to be important for understanding both higher (i.e., perceptual) functions of vestibular signals and their functional integration with extra-labyrinthine information (e.g., visual, somatosensory).

Contribution of vestibular signals to body tilt perception and spatial orientation

Vestibular information is also critical for spatial orientation (i.e., the perception of how our head and body are positioned relative to the outside world). Under most circumstances, human and non-human primates orient themselves using gravity, which provides a global, external reference. Thus, spatial orientation typically refers to our orientation and change in orientation relative to gravity, collectively referred to as `tilt'. Gravity produces a linear acceleration, which is measured by the otolith organs. Subjects with defects in their vestibular system have severe spatial orientation deficits (for reviews see Brandt and Dieterich, 1999; Bronstein, 1999; 2004; Karnath and Dieterich, 2006).

The function of the vestibular system to detect head and body tilt is complicated by a sensory ambiguity that arises from a physical law known as `Einstein's equivalence principle': the inertial accelerations experienced during self-motion are physically indistinguishable from accelerations due to gravity. Because the otolith organs function as linear acceleration sensors, they only detect net acceleration and cannot distinguish its source (for example, forward motion versus backward tilt) (Angelaki et al., 2004; Fernandez and Goldberg, 1976). At high frequencies, this sensory ambiguity is resolved by combining otolith signals with cues from the semicircular canals (Angelaki et al., 1999; Green and Angelaki, 2003; 2004; Green et al., 2005; Shaikh et al. 2005; Zupan et al., 2002; Yakusheva et al. 2007).
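The ambiguity can be made concrete with a short calculation. Assuming a single head-horizontal axis and a static tilt, a linear acceleration a along that axis produces the same component of gravito-inertial acceleration as a tilt of angle θ satisfying g·sin(θ) = a. The function name and the example acceleration are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

def equivalent_tilt_deg(linear_acceleration):
    """Static tilt (deg) whose gravity component along one head axis equals the acceleration."""
    if abs(linear_acceleration) > G:
        raise ValueError("no static tilt can mimic an acceleration larger than g")
    return math.degrees(math.asin(linear_acceleration / G))

# A 1 m/s^2 translation is matched by a tilt of a little under 6 deg
tilt_for_1ms2 = equivalent_tilt_deg(1.0)
print(f"{tilt_for_1ms2:.2f} deg")
```

Along that axis an accelerometer-like sensor would register the same value in both situations, which is why an additional signal is needed to tell them apart.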

However, because of the dynamics of semicircular canal afferents, the vestibular system (unassisted by visual information) cannot disambiguate tilts relative to gravity and inertial accelerations at low frequencies. This is due to the fact that canal dynamics are frequency-dependent, such that they reliably encode rotational velocity only above ~0.05 Hz (Goldberg and Fernandez 1971). As a result, the brain often chooses a default solution when experiencing low frequency accelerations: in the absence of extra-vestibular information (e.g., from vision), low frequency linear accelerations are typically, and often erroneously (i.e., even when generated by translational motion), interpreted as tilt (Glasauer, 1995; Kaptein and Van Gisbergen, 2006; Paige and Seidman, 1999; Park et al., 2006; Seidman et al., 1998; Stockwell and Guedry, 1970).
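To give a feel for this frequency dependence, the sketch below treats the canal signal as a first-order high-pass filter with a corner frequency of 0.05 Hz. This is a deliberate simplification: the first-order form is an assumption made here for illustration, and only the approximate 0.05 Hz figure comes from the text.

```python
import math

CORNER_HZ = 0.05  # approximate lower limit quoted in the text

def canal_gain(freq_hz, corner_hz=CORNER_HZ):
    """Relative gain of a first-order high-pass filter (assumed stand-in for canal dynamics)."""
    ratio = freq_hz / corner_hz
    return ratio / math.sqrt(1.0 + ratio ** 2)

for f in (0.005, 0.05, 0.5):
    print(f"{f:5.3f} Hz -> relative gain {canal_gain(f):.2f}")
```

Under this assumption the rotational velocity signal is nearly intact a decade above the corner frequency and strongly attenuated a decade below it, so little canal information is available to separate tilt from translation at low frequencies.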

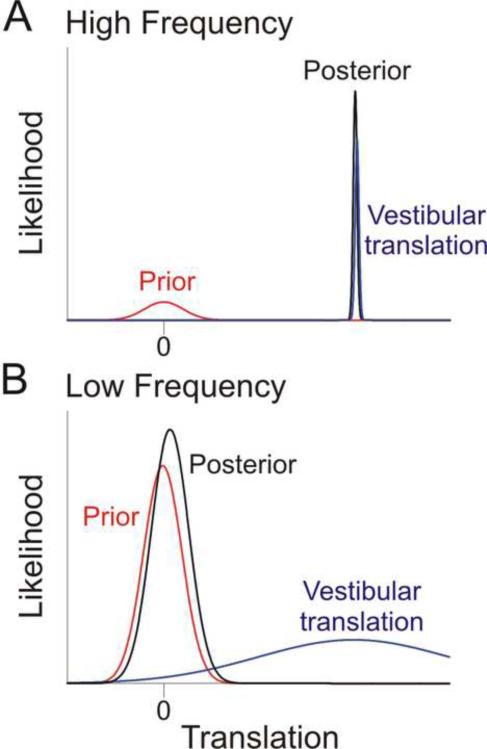

In the Bayesian framework (equation [1]), the prevalence of tilt perception during low frequency linear accelerations can be explained by a zero inertial acceleration prior. This prior essentially says that, before any other information is taken into account, it is more likely that we are stationary rather than moving (Fig. 3; Laurens and Droulez, 2007; MacNeilage et al., 2007; 2008). The effect of this prior on the posterior depends on the frequency of motion. At high frequencies, the system `knows' that it can reliably distinguish translation from dynamic tilt (see previous paragraphs). This results in a vestibular sensory likelihood function that is relatively narrow (i.e., has a small variance; Fig. 3A). In this case, the sensory evidence dominates, pulling the posterior estimate towards itself. In contrast, at low frequencies, the system `knows' that it cannot reliably distinguish translation from tilt, and so the vestibular sensory likelihood function is relatively broad (Fig. 3B). In this case, the prior dominates, pulling the posterior estimate towards the `no self-motion' conclusion and interpreting the low frequency linear acceleration as tilt. In each case, the interaction between the sensory likelihood and the prior is similar to the interaction described earlier (equation [2]) for multisensory integration. In the earlier description, the two distributions were the likelihoods for cue 1 and cue 2; here, the two distributions are the prior and the vestibular translation likelihood. Note that likelihoods and priors have the same mathematical influence on the posterior (equation [1]).

Fig. 3. Schematic illustrating the influence of a zero inertial acceleration prior at low and high frequency linear accelerations.

(A) During high frequency linear accelerations, canal cues can resolve the ambiguous otolith signal and distinguish when a translation has occurred. Thus, the vestibular translation likelihood (blue) is much narrower (more reliable) than the zero inertial acceleration prior (red), resulting in a posterior density function (black) that is little affected by the prior. (B) During low frequency linear accelerations, canal cues cannot disambiguate whether the otolith activation is signaling a tilt or a translation, resulting in a vestibular translation likelihood that is much broader (larger variance) than the prior. As a result, the posterior (black trace) is pulled towards zero by the prior and otolith activation is interpreted as a tilt relative to gravity.

Thus, in summary, although the posterior probability of actually translating is robust and unbiased at high frequency accelerations, the posterior probability of translating at low frequency accelerations is reduced (i.e., pulled towards zero translation by the prior), and thus the brain misinterprets low frequency otolith stimulation as a change in body tilt. Note that we refer to the sensory likelihood as `vestibular translation', rather than `otolith' likelihood, to emphasize in this simplified schematic that the likelihood estimate also relies on canal cues (Laurens and Droulez, 2007; MacNeilage et al., 2007; 2008). Again, the Bayesian framework provides an elegant solution to a long-debated problem in the vestibular field: how vestibular signals are processed to segregate tilt from translation. The concepts of `frequency segregation' (Mayne 1974; Paige and Tomko 1991; Paige and Seidman, 1999; Park et al., 2006; Seidman et al., 1998) and `canal/otolith convergence' (Angelaki 1998; Angelaki et al. 1999; Merfeld et al. 1999) appeared for many years to be in conflict. The schematic of Fig. 3 provides a framework in which both the frequency segregation and the canal/otolith convergence hypotheses are correct.
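The frequency dependence summarized above can be illustrated with a minimal numerical sketch. It assumes that both the zero inertial acceleration prior and the vestibular translation likelihood are Gaussian, in which case their product has a closed form. The function name, the variances and the sensed acceleration are hypothetical values chosen only for illustration; they are not taken from the cited studies.

```python
def gaussian_posterior(prior_mean, prior_var, like_mean, like_var):
    """Posterior mean and variance for a Gaussian prior times a Gaussian likelihood."""
    # Weight given to the sensory evidence; grows as the likelihood gets narrower.
    w_like = prior_var / (prior_var + like_var)
    post_mean = w_like * like_mean + (1.0 - w_like) * prior_mean
    post_var = prior_var * like_var / (prior_var + like_var)
    return post_mean, post_var


# Hypothetical values (arbitrary units), for illustration only.
PRIOR_MEAN, PRIOR_VAR = 0.0, 1.0   # zero inertial acceleration prior
SENSED_ACCEL = 1.0                 # acceleration signaled by the otoliths

# High frequency: canal cues disambiguate, so the likelihood is narrow.
high_freq = gaussian_posterior(PRIOR_MEAN, PRIOR_VAR, SENSED_ACCEL, like_var=0.05)
# Low frequency: tilt and translation are confounded, so the likelihood is broad.
low_freq = gaussian_posterior(PRIOR_MEAN, PRIOR_VAR, SENSED_ACCEL, like_var=20.0)

print("high frequency posterior (mean, var):", high_freq)
print("low frequency posterior (mean, var):", low_freq)
```

With a narrow likelihood the posterior mean stays close to the sensed acceleration, whereas with a broad likelihood it is pulled towards zero, leaving most of the otolith signal to be attributed to tilt.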

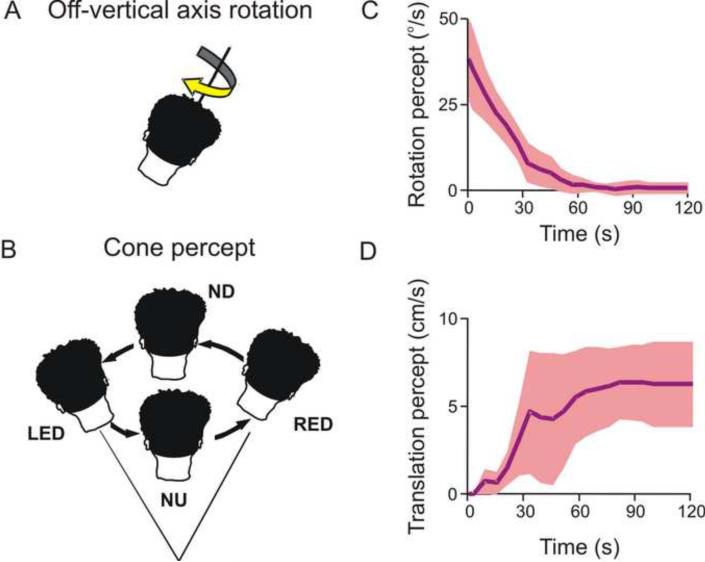

The otolith sensory ambiguity has previously been shown to cause tilt illusions (Dichgans et al., 1972; 1974; Lewis et al., 2008). A paradigm where the reverse is true, i.e., where a change in orientation relative to gravity (tilt) is incorrectly perceived as self-motion (translation), is illustrated in Fig. 4 (Vingerhoets et al., 2007). The paradigm, known as `off-vertical axis rotation' (OVAR), involves constant velocity horizontal (yaw) rotation about an axis that is tilted relative to the direction of gravity (Fig. 4A). Because of the tilted axis, head orientation changes continuously with respect to gravity. When this occurs in darkness, subjects initially have a veridical rotation percept which, as in the case of rotation about an upright yaw axis, gradually decreases over time. However, since OVAR also constantly stimulates the otolith organs, the perception of rotation decreases and concurrently there is a build-up of a sense of illusory translation along a circular trajectory (Lackner and Graybiel, 1978a,b; Mittelstaedt et al., 1989). Subjects describe that they feel swayed around a cone with the summit below the head, and with their head following a circle in a direction opposite to the actual rotation (Fig. 4B). The perceived translational self-motion along the circular trajectory is typically devoid of actual rotatory sensation. This illusion, which also occurs during roll/pitch OVAR (Bos and Bles, 2002; Mayne, 1974), is referred to as the `Ferris wheel illusion', as subjects eventually experience a circular path of self-motion without a sense of turning, just like in a gondola of a Ferris wheel.

Fig. 4. Misperceptions of translation due to changes in tilt.

(A) Off-vertical axis rotation (OVAR) rotates the subject about an axis that is not aligned with gravity. Thus the subject's head is constantly changing its orientation relative to gravity. (B) Subjects undergoing OVAR perceive that they are swaying around a cone. The head's orientation changes from nose-down (ND) to right-ear-down (RED) to nose-up (NU) to left-ear-down (LED), while facing the same direction in space. (C), (D) Over time, the subject's perception of rotation dissipates and, in an apparently compensatory fashion, the subject begins to perceive that they are translating. Replotted with permission from Vingerhoets RA, Van Gisbergen JA, Medendorp WP. Verticality perception during off-vertical axis rotation. J. Neurophysiol. 97: 3256–3268, 2007.
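The otolith stimulation during OVAR can be made concrete with a small geometric sketch. It computes the direction of gravity in head coordinates for a head that rotates in yaw about an axis tilted away from gravity. The coordinate conventions (x along the nose, y along the interaural axis, z along the rotation axis, yaw angle measured from an arbitrary starting orientation) and the tilt angle are assumptions made for this illustration.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def gravity_in_head(axis_tilt_deg, yaw_deg):
    """Gravity vector in head coordinates during yaw rotation about a tilted axis.

    Assumed convention: the head z-axis is the rotation axis, tilted by
    axis_tilt_deg from the gravity direction; yaw_deg is the accumulated
    rotation angle of the head about that axis.
    """
    tilt = math.radians(axis_tilt_deg)
    yaw = math.radians(yaw_deg)
    gx = G * math.sin(tilt) * math.cos(yaw)
    gy = -G * math.sin(tilt) * math.sin(yaw)
    gz = -G * math.cos(tilt)
    return gx, gy, gz


# Hypothetical 30 deg axis tilt, sampled every quarter turn.
for yaw_deg in (0, 90, 180, 270):
    gx, gy, gz = gravity_in_head(30.0, yaw_deg)
    print(f"yaw {yaw_deg:3d} deg: gx={gx:6.2f}  gy={gy:6.2f}  gz={gz:6.2f}")
```

Under these assumptions the component of gravity along the rotation axis is constant, while the component in the head-horizontal plane has constant magnitude and sweeps around the head once per revolution, in the direction opposite to the rotation. This continuously rotating linear acceleration signal is the otolith input whose interpretation (tilt versus translation) is at issue in the text.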

Following up on earlier observations using verbal reports (Denise et al., 1988; Guedry, 1974), Vingerhoets and colleagues (2006; 2007) used a 2AFC task to quantify these sensations in human subjects. They showed that the initially veridical rotation percept during prolonged OVAR decays gradually (Fig. 4C) and that a percept of circular head translation opposite to the direction of rotation emerges and persists throughout the constant velocity rotation (Fig. 4D). Such a translational self-motion percept, although strong, is not as large as would be expected if the total otolith activation was perceived as self-motion. Instead, part of the otolith drive is interpreted as tilt (even though vertical canal cues are not concurrently activated), as judged by the fact that the movement path feels conical rather than cylindrical (Fig. 4B). Of course, visual information is an important additional cue that prevents the illusions that would otherwise be present if perception were based on otolith signals alone.

The neural basis of these motion illusions remains unexplored. Based on lesion studies (Angelaki and Hess 1995a,b; Wearne et al. 1998), a role of the vestibulo-cerebellum, particularly vermal lobules X (nodulus) and IX (uvula), in spatial orientation has been suggested. Indeed, the simple spike responses of nodulus/uvula Purkinje cells have been shown to reflect the intricate spatially- and temporally-matched otolith/canal convergence necessary to resolve the otolith sensory ambiguity at high frequencies (Yakusheva et al. 2007). Importantly, simple spike responses also seem to reflect the failure of otolith/canal convergence to resolve the ambiguity at low frequencies (Yakusheva et al. 2008). Although nodulus/uvula Purkinje cells have yet to be examined during tilt and translation illusions, the possibility that neural correlates of the zero inertial acceleration prior postulated in Fig. 3 can be identified in either the simple or complex spike activity of the cerebellar cortex represents an exciting direction for future research.

Role of vestibular signals in the estimation of visual vertical

An intriguing aspect of spatial vision, known as orientation constancy, is our ability to maintain at least a roughly correct percept of allocentric visual orientation despite changes in head orientation. Specifically, as we examine the world visually, the projection of the scene onto the retina continuously changes because of eye movements, head movements, and changes in body orientation. Despite the changing retinal image, the percept of the scene as a whole remains stably oriented along an axis called `earth-vertical', defined by a vector normal to the earth's surface. This suggests that the neural representation of the visual scene is modified by static vestibular/proprioceptive signals that indicate the orientation of the head/body.

There is extensive literature on human psychophysical studies showing that static vestibular/somatosensory cues can generate a robust percept of earth-vertical (i.e., which way is up?). The classical laboratory test involves asking subjects to rotate a luminous line until it is aligned with the perceived earth-vertical. These tasks reveal that such lines are systematically tilted towards the ipsilateral side when a subject is lying on his side in an otherwise dark environment (Aubert, 1861). As a result, subjects systematically underestimate the true vertical orientation for tilts greater than 70° (known as the A-effect). For small tilt angles, the reverse is true, as subjects tend to overestimate the subjective vertical (known as the E-effect; Muller, 1916). These perceived deviations from true vertical contrast with verbal (i.e., non-visual) estimates of body orientation that are subject to smaller systematic errors, although with larger variability across all tilt angles (Van Beuzekom and Van Gisbergen, 2000).

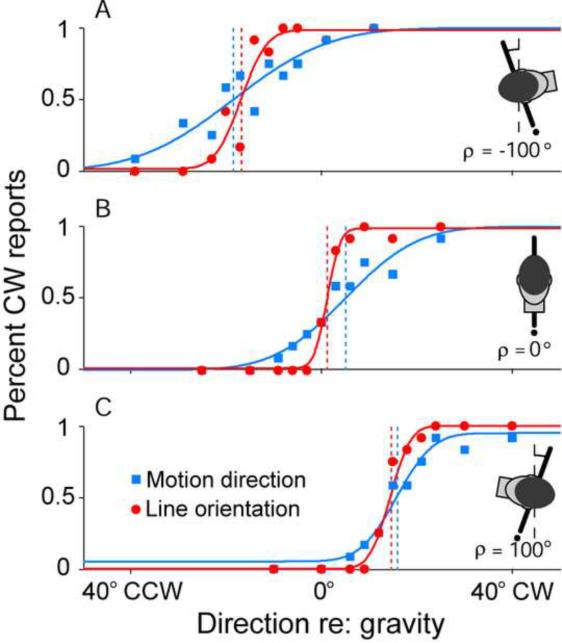

Using a 2AFC task, it was recently shown that the systematic errors characterizing incomplete compensation for head tilt in the estimation of earth-vertical line orientation also extend to visual motion direction (De Vrijer et al., 2008). Subjects were asked to either report line orientation relative to the earth-vertical, or the direction of visual motion of a patch of dots relative to the earth-vertical. That the two tasks are characterized by similar systematic errors is illustrated in Fig. 5, which shows the performance of a typical subject at three tilt angles (A: 100° left ear-down, B: upright, C: 100° right ear-down) for both the motion direction and line orientation tasks (blue squares versus red circles, respectively). The horizontal position of the psychometric function along the abscissa illustrates the accuracy of the subject in the task and quantifies the systematic errors in the estimation of visual vertical (e.g., the A-effect). The slope of the psychometric function reflects the precision of the subject in the task. As indicated by the steeper slopes of the line orientation fits, subjects are less certain about the motion vertical than about the line vertical; i.e., there is greater precision with line judgments. Nevertheless, the shifts of the psychometric functions away from true earth-vertical (vertical dashed lines) were in the same direction for both tasks. These shared systematic errors point to common processing and compensation for roll tilt for both spatial motion and pattern vision.

Fig. 5. Accuracy and precision in the perception of vertical line orientation and vertical visual motion direction during static body tilts.

A 2AFC task was implemented to gauge subjects' abilities to perceive either (1) the direction of motion of a random dot pattern relative to gravity (blue squares) or (2) the orientation of a visible line relative to gravity (red circles). Subjects' performances were quite accurate when upright (B – center of psychometric function is near zero on abscissa), but became inaccurate with large body tilts (A and C). Errors were always in the same direction as the body tilt. Note that systematic errors in accuracy (illustrated by the bias of the psychometric functions) were similar for motion perception and the visual vertical tasks. However, precision (illustrated by the slope of the psychometric function) was higher for the line orientation than the motion direction task (steeper psychometric functions reflect higher precision). Vertical dashed lines indicate performance at 50% CW reports. Replotted with permission from De Vrijer M, Medendorp WP, Van Gisbergen JA. Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J. Neurophysiol. 99: 915–930, 2008.
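The distinction between accuracy and precision in such 2AFC data can be sketched with a cumulative Gaussian psychometric function, a common descriptive choice that is assumed here rather than taken from the cited study. The function and parameter names, and the numerical values, are hypothetical.

```python
import math


def p_clockwise(stimulus_deg, bias_deg, sigma_deg):
    """Probability of a clockwise (CW) report for a cumulative Gaussian observer.

    bias_deg: stimulus value at which CW and CCW reports are equally likely
              (horizontal shift of the curve, i.e., accuracy).
    sigma_deg: spread of the underlying Gaussian; a smaller value gives a
               steeper curve (i.e., higher precision).
    """
    z = (stimulus_deg - bias_deg) / (sigma_deg * math.sqrt(2.0))
    return 0.5 * (1.0 + math.erf(z))


# Two hypothetical tasks sharing the same bias but differing in precision.
BIAS_DEG = 15.0
SIGMA_LINE_DEG = 3.0     # steeper curve, higher precision
SIGMA_MOTION_DEG = 9.0   # shallower curve, lower precision

for stimulus in (0.0, 10.0, 15.0, 20.0, 30.0):
    line = p_clockwise(stimulus, BIAS_DEG, SIGMA_LINE_DEG)
    motion = p_clockwise(stimulus, BIAS_DEG, SIGMA_MOTION_DEG)
    print(f"{stimulus:5.1f} deg: P(CW) line={line:.3f}  motion={motion:.3f}")
```

In this description, a shared systematic error corresponds to both curves crossing 50% CW at the same stimulus value, while the difference in certainty between the two tasks appears only in the slopes.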

But why are such systematic errors in body orientation present at all? Why can the brain not accurately estimate body orientation in space at all tilt angles? The most comprehensive hypothesis to date models the systematic errors in the perceived visual vertical with a statistically-optimal Bayesian approach that uses existing knowledge (priors) in the interpretation of noisy sensory information (De Vrijer et al., 2008; Eggert, 1998; MacNeilage et al., 2007). The approach is similar to what has been used previously to account for apparent shortcomings in other aspects of sensory processing; e.g., visual motion speed underestimation for low contrast stimuli (Stocker and Simoncelli, 2006) and the reduced ability to detect visual motion during saccades (Niemeier et al., 2003). In this framework, the estimation of the visual vertical is biased by an a priori assumption about the probability that a particular tilt may have occurred. Since we spend most of our time in an upright orientation, it is assumed that the subjective vertical is most likely to be aligned with the long axis of the body.

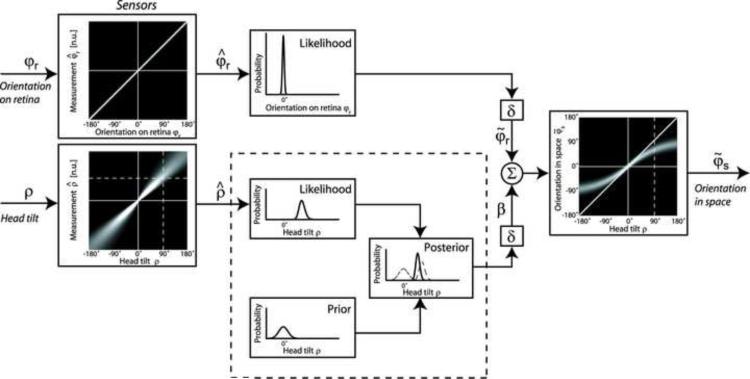

A schematic version of such a Bayesian model is illustrated in Fig. 6 (De Vrijer et al., 2008). Inputs to the model are static head orientation in space (ρ) and visual orientation of the bar relative to the retina (φr). The sensory tilt signal is assumed to be noisy (i.e., unreliable), as illustrated by the thick white cloud around the mean and the relatively broad likelihood function whose variance increases with tilt angle. To supplement this noisy input, prior knowledge is taken into account to obtain a statistically optimal estimate. The prior distribution assumes that the head is usually oriented near upright and is implemented by a Gaussian distribution that peaks at zero tilt. The model also assumes that Bayesian processing of the tilt signal is applied at a stage prior to the computation of the visual vertical. As a result, the internal tilt signal (β), which ultimately transforms retinal signals (φr) to visual orientation in space (φs), is obtained as the posterior distribution by multiplying the two probability distributions, the tilt sensory likelihood and the prior (panels in dashed box of Fig. 6).

Fig. 6. A Bayesian model explains the observed errors in body-tilt perception.

In order to determine the orientation of a visual line in space (φs), the brain takes into account the sensory likelihoods of the orientation of lines on the retina (φr) and head tilt (ρ), the latter of which is noisy (as indicated by thick white cloud around the mean). The brain also takes into account prior knowledge that head tilts around 0° are much more likely than head tilts far away from upright (prior box). The tilt likelihood and prior probabilities multiply to yield the posterior distribution (posterior box). The resultant orientation in space is determined by summing the retinal orientation (φr) with the posterior tilt estimate (β). The three functions in the dashed box can be related back to the three functions in Figs. 1A,B and 3. Replotted with permission from De Vrijer M, Medendorp WP, Van Gisbergen JA. Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J. Neurophysiol. 99: 915–930, 2008.

To understand the predictions of the model, consider the interpretation of a noisy sensory input in the presence of a strong prior to be analogous to multisensory integration; the Bayesian rules applied to the functions in the dashed box are the same as those originally proposed with equations [1] and [2] and illustrated in Fig. 1B (see also Fig. 3). Here we are no longer dealing with two cues (cue 1 and cue 2, as in Fig. 1B), but rather with the sensory likelihood of one cue (i.e., head tilt) and the prior probability. Again, the resultant posterior (black curves in Fig. 3) lies closer to the distribution with the smallest variance (prior versus sensory likelihood). According to equation [2], at small tilt angles the orientation estimate is dominated by the head tilt signal (due to its smaller variability relative to the prior distribution). In contrast, at large tilt angles, the variance of the tilt likelihood is assumed to be high, such that the orientation estimate is now dominated by the prior, thus resulting in an underestimation of tilt angle (A-effect). In fact, the narrower the prior distribution, the more the perceived tilt shifts away from the sensory estimate and towards the prior. An advantage of this systematic bias is a decrease in the noise of the internal tilt estimate. A disadvantage is that, at large tilt angles, perception is erroneously biased (Fig. 6; De Vrijer et al., 2008). Thus, in summary, systematic perception biases are explained as follows: in the presence of noisy (i.e., unreliable) sensory inputs, a systematic bias in perception can improve performance at small tilt angles, but produces large systematic errors at (rarely encountered) large tilt angles. Notably, most astronauts in space feel as if vertical is always aligned with their body axis (Clément et al., 2001; Glasauer and Mittelstaedt, 1992), a finding that is consistent with a prior whereby the earth-vertical is most likely to be aligned with the long axis of the body.
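These predictions can be illustrated with a short numerical sketch. It assumes a Gaussian prior centered on upright and a Gaussian tilt likelihood whose standard deviation grows linearly with tilt angle. The parameter values and function names are hypothetical, chosen only to show the qualitative behavior, and are not the fitted values of the cited model. The sketch does not attempt to capture the E-effect.

```python
import math

# Hypothetical parameters, for illustration only.
PRIOR_SD_DEG = 20.0     # width of the prior centered on upright (0 deg)
NOISE_BASE_DEG = 2.0    # SD of the tilt signal when upright
NOISE_GAIN = 0.1        # increase in SD per degree of tilt


def tilt_likelihood_sd(true_tilt_deg):
    """SD of the sensory tilt likelihood, assumed to grow with tilt angle."""
    return NOISE_BASE_DEG + NOISE_GAIN * abs(true_tilt_deg)


def internal_tilt_estimate(true_tilt_deg):
    """Posterior mean (beta) and SD for a likelihood centered on the true tilt."""
    like_var = tilt_likelihood_sd(true_tilt_deg) ** 2
    prior_var = PRIOR_SD_DEG ** 2
    weight = prior_var / (prior_var + like_var)   # weight on the sensory signal
    beta = weight * true_tilt_deg                 # the prior mean is zero
    post_sd = math.sqrt(prior_var * like_var / (prior_var + like_var))
    return beta, post_sd


for tilt in (10.0, 50.0, 100.0):
    beta, post_sd = internal_tilt_estimate(tilt)
    print(f"tilt {tilt:5.1f} deg: estimate {beta:6.1f} deg, "
          f"undershoot {tilt - beta:5.1f} deg, "
          f"SD {post_sd:4.1f} (sensory SD {tilt_likelihood_sd(tilt):4.1f})")
```

Under these assumptions the estimate is nearly veridical at small tilts and increasingly undershoots at large tilts, while the posterior is always less variable than the sensory signal alone, which is the trade-off between bias and noise described above.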

Although visual orientation constancy is of fundamental importance to the perceptual interpretation of the visual world, little is known currently about its physiological basis. An early study in anesthetized cats suggested that ~6% of V1 neurons shift their orientation preference (relative to a retinotopic coordinate system) with changes in head/body orientation so as to maintain visual orientation constancy (Horn et al., 1972). In macaques, Sauvan and Peterhans (1999) reported that <10% of cells in V1 and ~38% of neurons in a mixed population of V2 and V3/V3A also showed qualitative changes in their orientation preferences after changes in head/body orientation. A much more vigorous exploration of how the orientation tuning of visual cells changes quantitatively with body orientation and the specific roles of static vestibular cues has yet to be performed.

Visual orientation constancy is only one particular case of the much more general and fundamental function of perceptual stability during eye, head and body movements. Dynamic vestibular signals are also tightly coupled to spatial memory and visuospatial constancy. Next we briefly summarize how vestibular signals contribute to visuospatial constancy (for a more extensive review, see Klier and Angelaki, 2008).

Visual constancy and spatial updating

Visuospatial constancy (i.e., the perception of a stable visual world despite constantly changing retinal images caused by eye, head and body movements) is functionally important for both perception (e.g., to maintain a stable percept of the world) and sensorimotor transformations (e.g., to update the motor goal of an eye or arm movement). Visuospatial updating, which is the means by which we maintain spatial constancy, has been extensively studied and shown to be quite robust using saccadic eye movements (Duhamel et al., 1992; Hallett and Lightstone, 1976; Mays and Sparks, 1980; Sparks and Mays, 1983). But is spatial constancy also maintained after passive displacements that introduce vestibular stimulation?

A typical spatial updating paradigm for passive movements includes the following sequence: (1) the subject fixates a central head-fixed target, (2) a peripheral space-fixed target is briefly flashed, (3) after the peripheral target is extinguished, the subject is either rotated or translated to a new position (while maintaining fixation on the head-fixed target that moves along with them), and (4) once the motion ends the subject makes a saccade to the remembered location of the space-fixed target. Updating ability is then measured by examining the accuracy of the remembered saccade.

The first such experiments examined updating after intervening yaw rotations and found poor performance, suggesting an inability to integrate stored vestibular signals with retinal information about target location (Blouin et al., 1995a,b; also see Baker et al. 2003). Subsequent studies have shown that subjects are able to localize remembered, space-fixed targets better after roll rotations (Klier et al., 2005; 2006). However, not all roll rotations are equally compensated for. Spatial updating about the roll axis from an upright orientation was 10 times more accurate than updating about the roll axis in a supine orientation. Thus roll rotations likely use dynamic gravitational cues effectively whenever they are present (derived from either the otolith organs, proprioceptive cues or both), resulting in relatively accurate memory saccades (Klier et al., 2005; 2006; Van Pelt et al., 2005). In contrast, subjects, on average, can only partially update the remembered locations of visual targets after yaw rotations – irrespective of whether the yaw rotation changes the body's orientation relative to gravity (which occurs, for example, during yaw rotation while supine; Klier et al., 2006).

Visuospatial updating has also recently been examined after translations (Klier et al., 2008; Li and Angelaki, 2005; Li et al., 2005; Medendorp et al. 2003). Updating for translations is more complex than updating for rotations because translations change both the direction and the distance of an object from the observer. As a result, updating accuracy is measured by changes in both ocular version and vergence. Both humans (Klier et al., 2008) and trained macaques (Li and Angelaki, 2005) can compensate for traveled distances in depth and make vergence eye movements that are appropriate for the final position of the subject relative to the target. Notably, trained animals lose their ability to properly adjust memory vergence angle after destruction of the vestibular labyrinths (Li and Angelaki, 2005). Such deficits are also observed for lateral translation and yaw rotation. However, while yaw rotation updating deficits recover over time, updating capacity after forward and backward movements remains compromised even several months following a lesion (Wei et al., 2006). These uncompensated deficits are reminiscent of the permanent loss observed for fine direction discrimination after labyrinthine lesions (Gu et al., 2007) and suggest a dominant role of otolith signals for the processing of both self-motion information and spatial updating in depth.
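Why translation requires updating of both version and vergence can be seen in a simple geometric sketch. It assumes a target in the horizontal plane, eyes separated by a fixed interocular distance, and a pure translation of the observer without rotation. The interocular distance, target positions, sign conventions (positive angles rightward) and function names are hypothetical choices for this illustration.

```python
import math

IOD_M = 0.065  # hypothetical interocular distance (m)


def version_vergence_deg(target_x_m, target_z_m):
    """Version and vergence (deg) needed to fixate a target.

    The target position is given relative to the midpoint between the eyes:
    x is rightward, z is straight ahead (depth).
    """
    left_eye = math.atan2(target_x_m + IOD_M / 2.0, target_z_m)
    right_eye = math.atan2(target_x_m - IOD_M / 2.0, target_z_m)
    version = math.degrees((left_eye + right_eye) / 2.0)
    vergence = math.degrees(left_eye - right_eye)
    return version, vergence


def target_after_translation(target_x_m, target_z_m, move_x_m, move_z_m):
    """Head-relative position of a space-fixed target after the observer translates."""
    return target_x_m - move_x_m, target_z_m - move_z_m


start = (0.0, 0.5)                                        # straight ahead, 0.5 m away
after_forward = target_after_translation(*start, 0.0, 0.2)   # 0.2 m forward
after_lateral = target_after_translation(*start, 0.1, 0.0)   # 0.1 m rightward

for label, pos in (("start", start), ("forward", after_forward), ("lateral", after_lateral)):
    version, vergence = version_vergence_deg(*pos)
    print(f"{label:8s} version={version:7.2f} deg  vergence={vergence:6.2f} deg")
```

In this geometry a forward translation towards a straight-ahead target leaves the required version unchanged but increases the required vergence, whereas a lateral translation mainly changes the required version. An accurate memory-guided eye movement after translation therefore has to update both quantities.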

Like many other aspects of vestibular-related perception, the neural basis of how vestibular information changes the goal of memory-guided eye movements remains to be explored. Current thinking surrounds a process known as visual remapping in which signals carrying information about the amplitude and direction of an intervening movement are combined with cortical visual information regarding the location of a target in space (Duhamel et al., 1992; Goldberg and Bruce, 1990; Nakamura and Colby, 2002). Preliminary findings suggest that gaze-centered remapping is also present with vestibular movements (Powell and Goldberg, 1997; White and Snyder 2007). It is likely that information about the intervening movements is derived sub-cortically because updating ability across hemifields remains largely intact after destruction of the interhemispheric connections (Heiser et al., 2005; Berman et al., 2007). To this end, sub-cortical vestibular cues may reach cortical areas implicated in spatial updating via the thalamus (Meng et al., 2007), as patients with thalamic lesions are unable to perform vestibular memory-contingent saccades (Gaymard et al., 1994).

Concluding Remarks

We now know that vestibular-related activity is found in multiple regions of the cerebral cortex including parieto-temporal, frontal, somatosensory and even visual cortices. Most of these neurons are multisensory, receiving converging vestibular, visual and/or somatosensory inputs. Significant progress has been made in recent years, but much more needs to be done. For example, how do vestibular signals in multiple visuomotor and visual motion areas differ from each other? And do these site-specific vestibular signals aid in processes already associated with these areas (e.g., motion perception in MSTd versus eye movement generation in FEF), or do they serve a unique set of goals?

Borrowing from computational neuroscience, we have now started characterizing vestibular responses not just by their mean values but also by their neuronal variability. Thus, tools based exclusively on deterministic control systems have now been complemented by stochastic processing, signal detection theory and Bayesian population-style concepts. In addition, recent studies have begun to use more functionally-relevant approaches in an attempt to understand not only the basic response properties of these areas, but also the functional significance of diverse cortical representations of vestibular information. The heading discrimination task mentioned in this review is one such example in which vestibular signals are tested in the framework of a behaviorally-relevant task (i.e., the monkey must make a conscious decision about its direction of motion). Single-unit studies should also be performed in which animals are actively perceiving visual illusions or are performing subjective visual vertical tasks. Such studies will begin to reveal some of the many functional properties of the multiple cortical vestibular representations.

From a computational standpoint, it is also important to find out how well the Bayesian framework explains the diversity of behavioral data. Such a framework has clear advantages. By taking into account the reliability of each cue, the variability in neural responses and sensory processing, and the probability of certain parameter values over others based on prior experience, it is possible to model and predict multiple experimental observations without arbitrary curve-fitting and parameter estimation. But do our brains really make use of the variability in neuronal firing? And how realistic is it that our brains actually implement such a complex framework? Some of these questions may be answered using the multisensory approaches we advocate here.

As another example, visual constancy via spatial updating has been studied almost exclusively using saccades and a stationary body. Only recently have vestibular contributions to spatial updating been examined in any format, and it has quickly become apparent that both canal and otolith cues not only help us keep track of our ongoing movements, but also allow us to update the location of visual targets in space. However, we still do not know how these brainstem-derived vestibular signals travel to the cortical areas associated with spatial updating, or in fact, which cortical areas specifically exhibit spatial updating using vestibular cues.

The next several years will be important in further exploring these multisensory interactions as more and more studies use functionally relevant tasks to understand higher-level vestibular influences in both spatial perception and motor control. Fortunately, these questions leave the vestibular field wide open to new opportunities for conducting cortical research on spatial perception using novel experiments and multisensory paradigms.

Acknowledgements

We thank members of our lab for useful comments on the manuscript. Supported by DC007620 and DC04260. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIDCD or the National Institutes of Health.

References

- Angelaki DE. Three-dimensional organization of otolith-ocular reflexes in rhesus monkeys. III. Responses to translation. J. Neurophysiol. 1998;80:680–695. doi: 10.1152/jn.1998.80.2.680.

- Angelaki DE. Eyes on target: what neurons must do for the vestibuloocular reflex during linear motion. J. Neurophysiol. 2004;92:20–35. doi: 10.1152/jn.00047.2004.

- Angelaki DE, Cullen KE. Vestibular system: the many facets of a multimodal sense. Annu. Rev. Neurosci. 2008;31:125–150. doi: 10.1146/annurev.neuro.31.060407.125555.

- Angelaki DE, Hess BJ. Inertial representation of angular motion in the vestibular system of rhesus monkeys. II. Otolith-controlled transformation that depends on an intact cerebellar nodulus. J. Neurophysiol. 1995a;73:1729–1751. doi: 10.1152/jn.1995.73.5.1729.

- Angelaki DE, Hess BJ. Lesion of the nodulus and ventral uvula abolish steady-state off-vertical axis otolith response. J. Neurophysiol. 1995b;73:1716–1720. doi: 10.1152/jn.1995.73.4.1716.

- Angelaki DE, Hess BJ. Self-motion-induced eye movements: effects on visual acuity and navigation. Nat. Rev. Neurosci. 2005;6:966–976. doi: 10.1038/nrn1804.

- Angelaki DE, McHenry MQ, Dickman JD, Newlands SD, Hess BJ. Computation of inertial motion: neural strategies to resolve ambiguous otolith information. J. Neurosci. 1999;19:316–327. doi: 10.1523/JNEUROSCI.19-01-00316.1999.

- Angelaki DE, Shaikh AG, Green AM, Dickman JD. Neurons compute internal models of the physical laws of motion. Nature. 2004;430:560–564. doi: 10.1038/nature02754.

- Aubert H. Eine scheinbare bedeutende Drehung von Objekten bei Neigung des Kopfes nach rechts oder links. Arch. of Pathol. Anat. 1861;20:381–393.

- Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat. Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Baker JT, Harper TM, Snyder LH. Spatial memory following shifts of gaze. I. Saccades to memorized world-fixed and gaze-fixed targets. J. Neurophysiol. 2003;89:2564–2576. doi: 10.1152/jn.00610.2002.

- Balaban CD. Vestibular autonomic regulation (including motion sickness and the mechanism of vomiting). Curr. Opin. Neurol. 1999;12:29–33. doi: 10.1097/00019052-199902000-00005.

- Berman RA, Heiser LM, Dunn CA, Saunders RC, Colby CL. Dynamic circuitry for updating spatial representations. III. From neurons to behavior. J. Neurophysiol. 2007;98:105–121. doi: 10.1152/jn.00330.2007.

- Berthoz A, Pavard B, Young LR. Perception of linear horizontal self-motion induced by peripheral vision (linear vection) basic characteristics and visual-vestibular interactions. Exp. Brain Res. 1975;23:471–489. doi: 10.1007/BF00234916.

- Blouin J, Gauthier GM, Van Donkelaar P, Vercher JL. Encoding the position of a flashed visual target after passive body rotations. Neuroreport. 1995a;6:1165–1168. doi: 10.1097/00001756-199505300-00023.

- Blouin J, Gauthier GM, Vercher JL. Failure to update the egocentric representation of the visual space through labyrinthine signal. Brain Cogn. 1995b;29:1–22. doi: 10.1006/brcg.1995.1264.

- Bos JE, Bles W. Theoretical considerations on canal-otolith interaction and an observer model. Biol. Cybern. 2002;86:191–207. doi: 10.1007/s00422-001-0289-7.

- Bottini G, Sterzi R, Paulesu E, Vallar G, Cappa SF, Erminio F, Passingham RE, Frith CD, Frackowiak RS. Identification of the central vestibular projections in man: a positron emission tomography activation study. Exp. Brain Res. 1994;99:164–169. doi: 10.1007/BF00241421.

- Brandt T, Dieterich M. The vestibular cortex. Its locations, functions, and disorders. Ann. N Y Acad. Sci. 1999;871:293–312. doi: 10.1111/j.1749-6632.1999.tb09193.x.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur. J. Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur. J. Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann. N Y Acad. Sci. 1999;871:272–281. doi: 10.1111/j.1749-6632.1999.tb09191.x.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J. Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

- Britten KH, Van Wezel RJ. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat. Neurosci. 1998;1:59–63. doi: 10.1038/259.

- Brody CD, Hernandez A, Zainos A, Lemus R, Romo R. Analysing neuronal correlates of the comparison of two sequentially presented sensory stimuli. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2002;357:1843–1850. doi: 10.1098/rstb.2002.1167.

- Bronstein AM. The interaction of otolith and proprioceptive information in the perception of verticality. The effects of labyrinthine and CNS disease. Ann. N Y Acad. Sci. 1999;871:324–333. doi: 10.1111/j.1749-6632.1999.tb09195.x.

- Bronstein AM. Vision and vertigo: some visual aspects of vestibular disorders. J. Neurol. 2004;251:381–387. doi: 10.1007/s00415-004-0410-7.

- Clark JJ, Yuille AL. Data Fusion for Sensory Information Processing Systems. Kluwer Academic Publishers; Norwell, MA: 1990.

- Clément G, Deguine O, Parant M, Costes-Salon MC, Vasseur-Clausen P, Pavy-LeTraon A. Effects of cosmonaut vestibular training on vestibular function prior to spaceflight. Eur. J. Appl. Physiol. 2001;85:539–545. doi: 10.1007/s004210100494.

- Cohen MR, Newsome WT. What electrical microstimulation has revealed about the neural basis of cognition. Curr. Opin. Neurobiol. 2004;14:169–177. doi: 10.1016/j.conb.2004.03.016.

- Cullen KE, Roy JE. Signal processing in the vestibular system during active versus passive head movements. J. Neurophysiol. 2004;91:1919–1933. doi: 10.1152/jn.00988.2003.

- Denise P, Darlot C, Droulez J, Cohen B, Berthoz A. Motion perceptions induced by off-vertical axis rotation (OVAR) at small angles of tilt. Exp. Brain Res. 1988;73:106–114. doi: 10.1007/BF00279665.

- De Vrijer M, Medendorp WP, Van Gisbergen JA. Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J. Neurophysiol. 2008;99:915–930. doi: 10.1152/jn.00921.2007.

- De Waele C, Baudonniere PM, Lepecq JC, Tran Ba Huy P, Vidal PP. Vestibular projections in the human cortex. Exp. Brain Res. 2001;141:541–551. doi: 10.1007/s00221-001-0894-7. [DOI] [PubMed] [Google Scholar]

- Dichgans J, Diener HC, Brandt T. Optokinetic-graviceptive interaction in different head positions. Acta. Otolaryngol. 1974;78:391–398. doi: 10.3109/00016487409126371. [DOI] [PubMed] [Google Scholar]

- Dichgans J, Held R, Young LR, Brandt T. Moving visual scenes influence the apparent direction of gravity. Science. 1972;178:1217–1219. doi: 10.1126/science.178.4066.1217. [DOI] [PubMed] [Google Scholar]

- Duffy CJ. MST neurons respond to optic flow and translational movement. J. Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816. [DOI] [PubMed] [Google Scholar]

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J. Neurophysiol. 1991;65:1329–1345. doi: 10.1152/jn.1991.65.6.1329. [DOI] [PubMed] [Google Scholar]

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J. Neurosci. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duhamel JR, Colby C, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movement. Science. 1992;255:90–92. doi: 10.1126/science.1553535. [DOI] [PubMed] [Google Scholar]

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J. Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126. [DOI] [PubMed] [Google Scholar]

- Ebata S, Sugiuchi Y, Izawa Y, Shinomiya K, Shinoda Y. Vestibular projection to the periarcuate cortex in the monkey. Neurosci. Res. 2004;49:55–68. doi: 10.1016/j.neures.2004.01.012. [DOI] [PubMed] [Google Scholar]

- Eggert T. Thesis/Dissertation. Munich Technical University; 1998. Der Einfluss orientierter Texturen auf die subjective Vertikale und seine systemtheoretische Analyse. [Google Scholar]

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a. [DOI] [PubMed] [Google Scholar]

- Fasold O, von Brevern M, Kuhberg M, Ploner CJ, Villringer A, Lempert T, Wenzel R. Human vestibular cortex as identified with caloric stimulation in functional magnetic resonance imaging. Neuroimage. 2002;17:1384–1393. doi: 10.1006/nimg.2002.1241. [DOI] [PubMed] [Google Scholar]

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. I. Response to static tilts and to long-duration centrifugal force. J. Neurophysiol. 1976;39:970–984. doi: 10.1152/jn.1976.39.5.970. [DOI] [PubMed] [Google Scholar]

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic re-weighting of visual and vestibular cues during self-motion perception. J. Neurosci. 2009a doi: 10.1523/JNEUROSCI.2574-09.2009. (in press) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Neural mechanisms of reliability-based cue re-weighting in the macaque. Soc. Neurosci. Abstr. 2009b:803.8. [Google Scholar]

- Friberg L, Olsen TS, Roland PE, Paulson OB, Lassen NA. Focal increase of blood flow in the cerebral cortex of man during vestibular stimulation. Brain. 1985;108:609–623. doi: 10.1093/brain/108.3.609. [DOI] [PubMed] [Google Scholar]

- Fukushima K. Corticovestibular interactions: anatomy, electrophysiology, and functional considerations. Exp. Brain Res. 1997;117:1–16. doi: 10.1007/pl00005786. [DOI] [PubMed] [Google Scholar]

- Fukushima J, Akao T, Takeichi N, Kurkin S, Kaneko CR, Fukushima K. Pursuit-related neurons in the supplementary eye fields: discharge during pursuit and passive whole body rotation. J. Neurophysiol. 2004;91:2809–2825. doi: 10.1152/jn.01128.2003. [DOI] [PubMed] [Google Scholar]

- Fukushima K, Sato T, Fukushima J, Shinmei Y, Kaneko CR. Activity of smooth pursuit-related neurons in the monkey periarcuate cortex during pursuit and passive whole-body rotation. J. Neurophysiol. 2000;83:563–587. doi: 10.1152/jn.2000.83.1.563. [DOI] [PubMed] [Google Scholar]

- Gaymard B, Rivaud S, Pierrot-Deseilligny C. Impairment of extraretinal eye position signals after central thalamic lesions in humans. Exp. Brain Res. 1994;102:1–9. doi: 10.1007/BF00232433. [DOI] [PubMed] [Google Scholar]

- Gdowski GT, McCrea RA. Neck proprioceptive inputs to primate vestibular nucleus neurons. Exp. Brain Res. 2000;135:511–526. doi: 10.1007/s002210000542. [DOI] [PubMed] [Google Scholar]

- Glasauer S. Linear acceleration perception: frequency dependence of the hilltop illusion. Acta. Otolaryngol. Suppl. 1995;520:37–40. doi: 10.3109/00016489509125184. [DOI] [PubMed] [Google Scholar]

- Glasauer S, Mittelstaedt H. Determinants of orientation in microgravity. Acta. Astronaut. 1992;27:1–9. doi: 10.1016/0094-5765(92)90167-h. [DOI] [PubMed] [Google Scholar]

- Goldberg ME, Bruce CJ. Primate frontal eye fields. III. Maintenance of a spatially accurate saccade signal. J. Neurophysiol. 1990;64:489–508. doi: 10.1152/jn.1990.64.2.489. [DOI] [PubMed] [Google Scholar]

- Goldberg JM, Fernandez C. Physiology of peripheral neurons innervating semicircular canals of the squirrel monkey. I. Resting discharge and response to constant angular accelerations. J. Neurophysiol. 1971;34:635–660. doi: 10.1152/jn.1971.34.4.635. [DOI] [PubMed] [Google Scholar]

- Green AM, Angelaki DE. Resolution of sensory ambiguities for gaze stabilization requires a second neural integrator. J. Neurosci. 2003;23:9265–9275. doi: 10.1523/JNEUROSCI.23-28-09265.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Green AM, Angelaki DE. An integrative neural network for detecting inertial motion and head orientation. J. Neurophysiol. 2004;92:905–925. doi: 10.1152/jn.01234.2003. [DOI] [PubMed] [Google Scholar]

- Green AM, Shaikh AG, Angelaki DE. Sensory vestibular contributions to constructing internal models of self-motion. J. Neural Eng. 2005;2:164–179. doi: 10.1088/1741-2560/2/3/S02. [DOI] [PubMed] [Google Scholar]

- Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat. Neurosci. 2007;10:1038–1047. doi: 10.1038/nn1935. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J. Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guedry FE., Jr. Psychophysics of vestibular sensation. In: Guedry FE Jr., editor. Handbook of Sensory Physiology – Vestibular System Part 2 - Psychophysics, Applied Aspects and General Interpretations. Springer-Verlag; Berlin: 1974. pp. 1–154. [Google Scholar]

- Guldin WO, Grüsser OJ. Is there a vestibular cortex? Trends Neurosci. 1998;21:254–259. doi: 10.1016/s0166-2236(97)01211-3. [DOI] [PubMed] [Google Scholar]

- Hallett PE, Lightstone AD. Saccadic eye movements to flashed targets. Vision Res. 1976;16:107–114. doi: 10.1016/0042-6989(76)90084-5. [DOI] [PubMed] [Google Scholar]

- Heiser LM, Berman RA, Saunders RC, Colby CL. Dynamic circuitry for updating spatial representations. II. Physiological evidence for interhemispheric transfer in area LIP of the split-brain macaque. J. Neurophysiol. 2005;94:3249–3258. doi: 10.1152/jn.00029.2005. [DOI] [PubMed] [Google Scholar]

- Hillis JM, Watt SJ, Landy MS, Banks MS. Slant from texture and disparity cues: optimal cue combination. J Vis. 2004;4:967–992. doi: 10.1167/4.12.1. [DOI] [PubMed] [Google Scholar]

- Horn G, Stechler G, Hill RM. Receptive fields of units in the visual cortex of the cat in the presence and absence of bodily tilt. Experimental Brain Research. 1972;15:113–132. doi: 10.1007/BF00235577. [DOI] [PubMed] [Google Scholar]

- Kaptein RG, Van Gisbergen JA. Canal and otolith contributions to visual orientation constancy during sinusoidal roll rotation. J. Neurophysiol. 2006;95:1936–1948. doi: 10.1152/jn.00856.2005. [DOI] [PubMed] [Google Scholar]

- Karnath HO, Dieterich M. Spatial neglect – a vestibular disorder? Brain. 2006;129:293–305. doi: 10.1093/brain/awh698. [DOI] [PubMed] [Google Scholar]

- Kersten D, Mamassian P, Yuille A. Object perception as Bayesian inference. Annu. Rev. Psychol. 2004;55:271–304. doi: 10.1146/annurev.psych.55.090902.142005. [DOI] [PubMed] [Google Scholar]

- Klam F, Graf W. Vestibular response kinematics in posterior parietal cortex neurons of macaque monkeys. European J. Neurosci. 2003;18:995–1010. doi: 10.1046/j.1460-9568.2003.02813.x. [DOI] [PubMed] [Google Scholar]

- Klier EM, Angelaki DE. Spatial updating and the maintenance of visual constancy. Neuroscience. 2008;156:801–818. doi: 10.1016/j.neuroscience.2008.07.079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klier EM, Angelaki DE, Hess BJM. Roles of gravitational cues and efference copy signals in the rotational updating of memory saccades. J. Neurophysiol. 2005;94:468–478. doi: 10.1152/jn.00700.2004. [DOI] [PubMed] [Google Scholar]

- Klier EM, Hess BJ, Angelaki DE. Differences in the accuracy of human visuospatial memory after yaw and roll rotations. J. Neurophysiol. 2006;95:2692–2697. doi: 10.1152/jn.01017.2005. [DOI] [PubMed] [Google Scholar]

- Klier EM, Hess BJ, Angelaki DE. Human visuospatial updating after passive translations in three-dimensional space. J. Neurophysiol. 2008;99:1799–1809. doi: 10.1152/jn.01091.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vision Res. 2003;43:2539–2558. doi: 10.1016/s0042-6989(03)00458-9. [DOI] [PubMed] [Google Scholar]

- Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007. [DOI] [PubMed] [Google Scholar]

- Lackner JR, Graybiel A. Postural illusions experienced during Z-axis recumbent rotation and their dependence upon somatosensory stimulation of the body surface. Aviat. Space Environ. Med. 1978a;49:484–489. [PubMed] [Google Scholar]

- Lackner JR, Graybiel A. Some influences of touch and pressure cues on human spatial orientation. Aviat. Space Environ. Med. 1978b;49:798–804. [PubMed] [Google Scholar]

- Laurens J, Droulez J. Bayesian processing of vestibular information. Biol. Cybern. 2007;96:405. doi: 10.1007/s00422-007-0141-9. [DOI] [PubMed] [Google Scholar]

- Lewis RF, Haburcakova C, Merfeld DM. Roll tilt psychophysics in rhesus monkeys during vestibular and visual stimulation. J. Neurophysiol. 2008;100:140–153. doi: 10.1152/jn.01012.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J. Comp. Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9. [DOI] [PubMed] [Google Scholar]

- Li N, Angelaki DE. Updating visual space during motion in depth. Neuron. 2005;48:149–158. doi: 10.1016/j.neuron.2005.08.021. [DOI] [PubMed] [Google Scholar]

- Li N, Wei M, Angelaki DE. Primate memory saccade amplitude after intervened motion depends on target distance. J. Neurophysiol. 2005;94:722–733. doi: 10.1152/jn.01339.2004. [DOI] [PubMed] [Google Scholar]

- Lobel E, Kleine JF, Bihan DL, Leroy-Willig A, Berthoz A. Functional MRI of galvanic vestibular stimulation. J. Neurophysiol. 1998;80:2699–2709. doi: 10.1152/jn.1998.80.5.2699. [DOI] [PubMed] [Google Scholar]

- MacNeilage PR, Banks MS, Berger DR, Bulthoff HH. A Bayesian model of the disambiguation of gravitoinertial force by visual cues. Exp. Brain Res. 2007;179:263–290. doi: 10.1007/s00221-006-0792-0. [DOI] [PubMed] [Google Scholar]