Abstract

Designing usable geovisualization tools is an emerging problem in GIScience software development. We are often satisfied that a new method provides an innovative window on our data, but functionality alone is insufficient assurance that a tool is applicable to a problem in situ. As extensions of the static methods from which they evolved, geovisualization tools are expected to enable new knowledge creation. We have yet to learn how to adapt techniques from interaction designers and usability experts to our tools in order to maximize this potential. This is especially challenging because there is limited existing guidance for the design of usable geovisualization tools. Their design requires knowledge about the context of work within which they will be used, and it should involve user input at all stages, as is the practice in any human-centered design effort. Toward that goal, we have employed a wide range of techniques in the design of ESTAT, an exploratory geovisualization toolkit for epidemiology. These techniques include verbal protocol analysis, card sorting, focus groups, and an in-depth case study. This paper reports the design process and evaluation results from our experience with the ESTAT toolkit.

Introduction

The task of understanding the causes, controls, and distribution of cancer presents an array of diverse and intricate challenges to those who wish to develop geovisualization tools to support this work. Within the discipline of cancer epidemiology, there is still relatively little insight into the complex, interconnected set of factors that influence outcomes (mortality and incidence) and their primary spatial components. An important new focus in cancer epidemiology research encourages exploration of geographic health data to generate new hypotheses (Cockings et al. 2004; Khan and Skinner 2003). Cartography has a tradition of enabling the development of new and enhanced hypotheses with respect to health data, from the seminal spatial epidemiology of John Snow (1855) to contemporary atlases (Pickle et al. 1999; Wennberg et al. 1999). We therefore expect that new methods of geovisualization will foster this kind of knowledge creation as well. Indeed, the interactivity and dynamism inherent in geovisualization have the potential to make exploration more effective than prior cartographic techniques allowed (MacEachren et al. 1998).

There are a handful of recent software packages designed to facilitate spatial epidemiology. Examples include SaTScan (Kulldorff and Information Management Services 2004) and ClusterSEER (Jacquez and Estberg 2003), both of which were developed through projects with the National Cancer Institute (NCI). These tools were designed to aid in the detection and analysis of disease clusters. The Cancer Atlas Viewer (http://www.biomedware.com/software/atlas.html) appears to be the only other example of an interactive geovisualization environment designed specifically to support health analysis. It provides access to a variety of linked visualization tools but is limited to the display of a single mortality database provided by NCI.

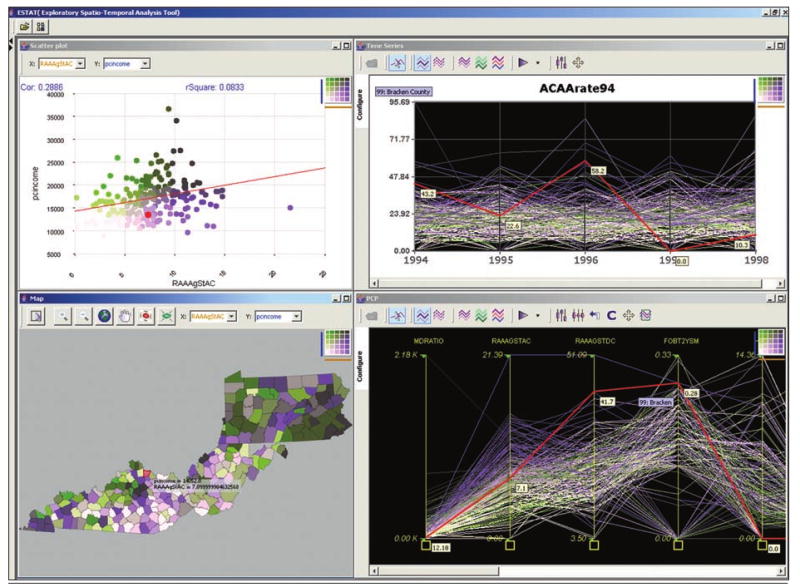

In this paper, we introduce the Exploratory Spatio-Temporal Analysis Toolkit (ESTAT), a geovisualization toolkit developed for the Statistical Research & Applications Branch of the National Cancer Institute by the Geographic Visualization Science, Technology, and Applications (GeoVISTA) Center at The Pennsylvania State University. ESTAT features four primary visual analysis elements. We have combined a scatter plot, bivariate map, time series plot, and a parallel coordinate plot (PCP) in a dynamically linked interface (Figure 1). In each analytic tool, brushing, selection, classification, and color scheme choice are linked in real time; this supports quick exploration of both multivariate relationships and the geographic aspects of those relationships. ESTAT is open source and is available both as a standalone application and as a design document for use in its parent application, GeoVISTA Studio (Takatsuka and Gahegan 2002).

Figure 1.

The ESTAT Geovisualization Toolkit featuring a scatterplot (top left), bivariate map (bottom left), time series graph (top right), and parallel coordinate plot (bottom right).
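The dynamic linking described above can be illustrated with a small observer-pattern sketch. ESTAT is Java-based and its internal classes are not described in this paper, so `SelectionModel`, `LinkedView`, and their methods are hypothetical names, and Python is used only for brevity. The idea is that brushing in any one view updates a single shared selection, which then notifies every other view; classification and color scheme choice could be shared the same way.

```python
from typing import Callable, List, Set

# Hypothetical callback type: a view receives the set of selected record ids.
Listener = Callable[[Set[int]], None]


class SelectionModel:
    """Hypothetical shared selection state for dynamically linked views."""

    def __init__(self) -> None:
        self._selected: Set[int] = set()
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def select(self, record_ids: Set[int]) -> None:
        # Replace the current selection and push a copy to every linked view.
        self._selected = set(record_ids)
        for listener in self._listeners:
            listener(set(self._selected))


class LinkedView:
    """Stand-in for a map, scatterplot, time series, or PCP component."""

    def __init__(self, name: str, model: SelectionModel) -> None:
        self.name = name
        self.highlighted: Set[int] = set()
        model.subscribe(self.on_selection_changed)

    def on_selection_changed(self, record_ids: Set[int]) -> None:
        # A real view would repaint here; this sketch only records the ids.
        self.highlighted = record_ids


model = SelectionModel()
views = [LinkedView(n, model) for n in ("scatterplot", "bivariate map", "time series", "PCP")]
model.select({3, 7})  # e.g. the user brushes two counties in the scatterplot
```

Routing every brush through one model, rather than letting views call each other directly, keeps the number of connections linear in the number of views.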

This paper presents the development history of the ESTAT application, specifically reporting on our efforts to make geovisualization tools usable for epidemiology. ESTAT is the result of a user-centered design process; the series of assessments we have employed are described and discussed. In particular, we focus attention on a case study application using ESTAT for a specific problem in cancer epidemiology. Additionally, we detail the process of usability research that led up to the decision to embark on a long-term case study and collaboration. The results of our case study are discussed, but our primary focus is on the methods we have employed to assess the utility and enhance the interfaces of our geovisualization tools.

Background

User issues and interface design are common themes in current geovisualization research. The work reported here attempts to address interface and cognitive/usability goals outlined by MacEachren and Kraak (2001), particularly their call, “…to develop a comprehensive user-centered design approach to geovisualization usability”.

There are a number of recent examples of usability studies with geovisualization tools (Andrienko et al. 2002; Edsall 2003; Haklay and Tobon 2003; Montello et al. 2003; Slocum et al. 2003; Suchan 2002), and many of the methods described herein are inspired by aspects of this body of work. Of these examples, we draw the most from Slocum et al. (2003), who describe a six-stage design process for the creation of a user-centered tool to visualize issues related to water balance. In the research they report, however, end-user input is incorporated only after key functionality and interface design issues have been decided; the authors ultimately lament the lack of early and repeated user input in their discussion of results. Their recommendation is that user participation should happen from start to finish, rather than after key elements have been decided by developers. This recommendation matches common practice in user-centered design outside of GIScience (Gabbard et al. 1999; Nielsen 1993; Norman 2002).

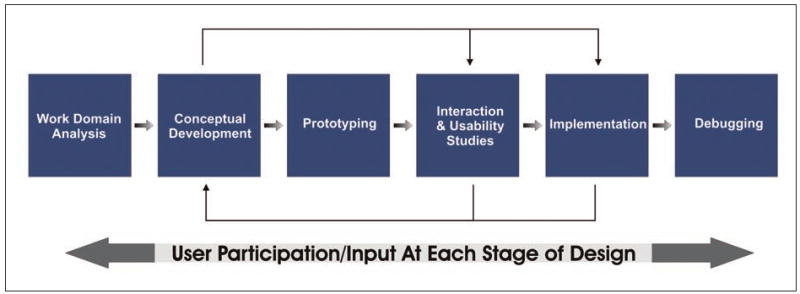

As a result, we have adopted a design process (Figure 2) that incorporates end-users throughout. The ways in which user input and information are incorporated into this process differ from stage to stage, and these differences are detailed below along with brief descriptions of each procedural element.

Figure 2.

Our adaptation of the user-centered design process.

The first stage, work domain analysis, represents the initial communication of ideas and requirements between the client (in this case, NCI) and developers (GeoVISTA), as well as our focused research into the tasks and traditions of epidemiology. The latter aspect of this stage is reported on in another paper we are preparing. As input to the broader method and tool development project that ESTAT builds upon, we interviewed domain members and studied their published work in order to develop a detailed picture of epidemiologists and the work they pursue.

Conceptual development refers to the outline of desired features that comes from understanding the work domain. During this stage, the layout, tools, and architecture are discussed and the application is drawn as a graphical concept prototype. This stage iterates through multiple designs, and each iteration benefits from stakeholder feedback. During the ESTAT development process, designs are discussed through regular meetings and via informal email communication.

After conceptual development defines the core of the application, prototyping begins. At this stage, working models of the application are created. During the development of ESTAT, this stage has been essentially concurrent with the stage that follows it in our process diagram—interaction/usability assessment. In this paper, we use the term ‘assessment’ when we refer to formal and informal evaluation of both the overall usability of our tools, as well as the interactions that they foster. We are especially interested in the latter of these two aspects.

Interaction/usability assessment activities are crucial to understanding the pieces of an application that work well, as well as those that need further re-design. Formal assessment efforts may take place in a usability laboratory where audio and video can be captured while users attempt to work with an application. They may also include interviews and focus groups that discuss the application in question. Informal assessment occurs as end-users are asked to try out prototypes and forward comments, questions, and ideas. Additionally, a wide range of informal assessment activities occur on the development side while the application is being critiqued internally. Each of these methods has been employed during the design of ESTAT, in addition to a hybrid of these techniques that emerged through long-term case study collaboration.

Implementation follows assessment activities, and it typically spawns its own fresh set of design issues. It is difficult to simulate “real” work well enough during the assessment stage to ensure that no significant problems will arise during implementation. We therefore include feedback loops from both the assessment and implementation stages back into conceptual development and initial design. In our experience, the results of both stages have often sent us back to the proverbial drawing board to try other options. Similarly, what we learned during these activities has inspired us to explore new designs for other domains and tasks. Furthermore, if concept development is viewed as a stage that spans multiple projects, the lessons learned from usability assessment on one project become extremely valuable inputs to the early steps of other, similar projects.

The final stage in this process is debugging. In this portion of the design process the application is adjusted to enhance stability and compatibility, and to make the most out of the computing infrastructure in which it has been implemented. Mechanisms for user feedback at this stage include web-based issue trackers such as JIRA (http://www.atlassian.com/software/jira), links to email support in help documentation, and follow-up phone calls to individual users.

The remainder of this paper focuses attention on stage four, that of interaction and usability assessments. The following sections outline the array of techniques we have applied and the things we have learned from them about our tools, our users, and strategies for usability assessment of complex, exploratory geovisualization environments.

Assessing ESTAT

We have opted to combine several methods of usability assessment in order to examine the ESTAT toolkit. The following sub-sections briefly describe the techniques of card sorting, verbal protocol analysis, focus groups, and ethnographic case studies.

Card sorting (Nielsen 1993) is a simple and fast method of assessing the structure of an interface. Users are given 3 × 5 note cards labeled with individual functions and asked to arrange them in categories and an order that they think makes the most sense. This method has found particular success in website usability research, as designers seek end-user guidance to help them arrange web pages.
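Card-sort data are often summarized as a co-occurrence matrix: for each pair of cards, the fraction of participants who placed them in the same group. The sketch below assumes each participant's sort is recorded as a list of sets of card labels. The card labels and sorts are hypothetical and are not ESTAT's actual control names or our participants' data.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def cooccurrence(sorts: List[List[Set[str]]]) -> Dict[FrozenSet[str], float]:
    """Fraction of participants who put each unordered pair of cards in the same group."""
    counts: Counter = Counter()
    for groups in sorts:              # one entry per participant
        for group in groups:          # one set of card labels per pile
            for a, b in combinations(group, 2):
                counts[frozenset((a, b))] += 1
    return {pair: c / len(sorts) for pair, c in counts.items()}


# Hypothetical sorts from three participants.
sorts = [
    [{"load data", "choose variables"}, {"color scheme", "classification"}],
    [{"load data", "choose variables", "classification"}, {"color scheme"}],
    [{"load data"}, {"choose variables"}, {"color scheme", "classification"}],
]
sim = cooccurrence(sorts)
```

Pairs that never share a pile are simply absent from the result, which is equivalent to a co-occurrence of zero.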

Verbal protocol analysis (VPA) captures users' experiences in real time as they “think aloud” (Ericsson and Simon 1993). It is especially valuable for understanding both the critical needs of an application and its expected behavior from the perspective of the end-user. Typically, users are given a task to achieve using the application in question, and they are instructed to verbalize their thought processes as they work. VPA has the inherent benefit (or detriment) of generating a massive amount of data very quickly.

Focus groups (Morgan et al. 1998) solicit ideas and feedback through group discussion. They are moderated by a discussion leader who asks questions and prompts for elaboration, following a plan set in advance by those sponsoring the session. Focus groups are even faster than VPA and generate a similarly large amount of data. In general, focus groups allow users to share their experiences with an application and develop hypothetical situations and ideas quickly and efficiently. These aspects make focus groups an applicable method across multiple stages of the software design process. We have chosen to combine VPA with follow-up focus groups to capture both the details inherent in the epidemiological workflow and the reflections our users have about how our tools could be situated more appropriately for their daily usage.

Ethnographic case studies combine a real-world application of methods with participant observation practices (Yin 1994). Case studies are often undertaken as proof-of-concept exercises to demonstrate the utility of a particular tool or method. Ethnographic case studies are different in the sense that they are undertaken not only to evaluate tool utility, but also so that researchers may observe how work takes place around these tools. Data from such studies are usually collected ad hoc, in notes, through informal and formal communication with subjects, and from direct observation. Ethnographic case studies require a significant time commitment in order to complete, and the results are subject to interpretation. They do, however, yield deep knowledge of the situated work experience.

There is a clear emphasis in our work with ESTAT on methods that elicit qualitative results. While we recognize the value of quantitative measures to answer some categories of questions, at the stage of work presented here, we are interested in how people are working (or not working) with exploratory geovisualization tools; thus our focus is on formative rather than summative evaluation. Formative evaluations are carried out early in the design process (focusing on assessing the needs of users and the extent to which the overall conceptual approach fits those needs), while summative evaluations occur after a design has been completed (and their aim is to directly compare the new design to other applications designed to accomplish the same tasks) (Gabbard et al. 1999). In general, formative studies lend themselves to qualitative methods, while summative evaluations rely more often on quantitative measures.

In many instances, usability work seeks to reduce the time it takes to perform a routine task or to limit the number of errors that occur when solving specific problems. Recently, Saraiya et al. (2004) presented an intriguing model for summative studies that quantifies the number of “insights” generated during exploration. Insights were judged for validity by outside experts, in their case from microbiology. In contrast to the tasks studied by Saraiya et al., the tasks we are examining are exploratory and ideally result in a hypothesis. In our case it is not clear that we could rely on measuring validity, because our users are themselves the experts we might ask to judge it, though their insight-based study is a useful model for future work we may pursue. In the future we will shift toward summative evaluations in order to assess the utility of ESTAT against existing methods of spatial data exploration in epidemiology.

The Assessment Process

Following our work to understand the work domain and devise initial conceptual designs, we have been conducting usability and interaction assessments for ESTAT since October 2003. Initially, we focused our efforts on building prototypes based on contract specifications and informal communication with NCI staff; these prototypes could be quickly evaluated by GIScience graduate students with expertise in spatial data analysis. This was followed by formal user testing with health researchers at NCI, the results of which were later augmented by a long-term case study collaboration with an epidemiologist. Each of these stages is described below.

Rapid Prototype Assessment

As noted above, the first prototype of ESTAT featured the core features and functionality that had been requested by our colleagues at NCI and augmented by our research into their work domain. Our initial evaluation efforts focused on a single component of ESTAT, the parallel coordinate plot tool, and used the card-sorting method and verbal protocol analysis with GIScience graduate student participants. Our aim here was to quickly determine whether the tool was understandable enough to accomplish the tasks we were trying to facilitate. These evaluations were easy for us to execute, but in general they raised more questions than they answered. Few of our initial testers understood how to use a Parallel Coordinate Plot, and, more importantly, most were unable to simulate the tasks of epidemiology. When we asked users to explore epidemiological outcomes along with population information and predictor variables, none were able to develop the kind of hypothesis that ESTAT was supposed to yield. These initial assessments did, however, provide some useful information. Analysis of the protocol transcripts identified a number of instances in which testers expressed frustration with the basic layout of the tools, the data-loading process, and the lack of consistency in our interfaces.
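Identifying recurring complaints in protocol transcripts usually means coding transcript segments into categories and then counting them. A minimal sketch follows, assuming an analyst has already assigned each segment a code; the participant ids, codes, and segments are hypothetical and only echo the three problem areas named above. It reports both how often each code occurs and how many distinct participants raised it, since one talkative tester can inflate raw counts.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical (participant_id, code) pairs produced by hand-coding transcripts.
coded_segments: List[Tuple[str, str]] = [
    ("P1", "layout"), ("P1", "layout"), ("P1", "data loading"),
    ("P2", "data loading"), ("P2", "consistency"),
    ("P3", "layout"), ("P3", "data loading"),
]


def tally(segments: List[Tuple[str, str]]) -> Tuple[Counter, Dict[str, int]]:
    """Return (segments per code, distinct participants per code)."""
    totals = Counter(code for _, code in segments)
    who: Dict[str, Set[str]] = {}
    for pid, code in segments:
        who.setdefault(code, set()).add(pid)
    reach = {code: len(pids) for code, pids in who.items()}
    return totals, reach


totals, reach = tally(coded_segments)
```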

We applied the card-sorting method to try to reorganize our interface. Again, our participants were GIScience graduate students. Our card-sorting results suggested two general interface groupings that we could implement. While we were able to uncover these possible reconfigurations of the interface controls, we still had no sense of how epidemiologists might choose to organize the ESTAT interface differently.
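If card-sort results are summarized as pairwise co-occurrence proportions (the share of participants who placed two cards in the same group), one simple way to surface candidate groupings is to link any two cards whose co-occurrence meets a chosen threshold and read off the connected components. The paper does not say how the two groupings were derived; this threshold approach (single-linkage clustering cut at one level), the 0.5 cut-off, and the toy similarity values are assumptions for illustration. Full hierarchical clustering over the matrix is a common alternative.

```python
from typing import Dict, FrozenSet, Iterable, List, Set

# Hypothetical co-occurrence proportions for four hypothetical cards.
sim: Dict[FrozenSet[str], float] = {
    frozenset({"load data", "choose variables"}): 2 / 3,
    frozenset({"classification", "color scheme"}): 2 / 3,
    frozenset({"choose variables", "classification"}): 1 / 3,
    frozenset({"classification", "load data"}): 1 / 3,
}
cards = ["load data", "choose variables", "classification", "color scheme"]


def group_cards(cards: Iterable[str], sim: Dict[FrozenSet[str], float],
                threshold: float) -> List[Set[str]]:
    """Connected components of the graph linking cards with co-occurrence >= threshold."""
    parent = {c: c for c in cards}

    def find(c: str) -> str:
        while parent[c] != c:
            parent[c] = parent[parent[c]]  # path halving keeps trees shallow
            c = parent[c]
        return c

    for pair, share in sim.items():
        if share >= threshold:
            a, b = tuple(pair)
            parent[find(a)] = find(b)      # union the two components

    groups: Dict[str, Set[str]] = {}
    for c in parent:
        groups.setdefault(find(c), set()).add(c)
    return list(groups.values())


groups = group_cards(cards, sim, threshold=0.5)
```

Lowering the threshold merges components, so trying a few cut-offs shows how stable a proposed grouping is.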

Assessment with Domain Experts

At this stage, we turned to our collaborators at NCI for additional input. A formal usability assessment of the alpha stage ESTAT prototype occurred in February 2004 with a group of testers identified by our primary NCI contacts as likely ESTAT end-users. Each user worked through a tutorial and a brief set of epidemiological tasks before participating in a focus group discussion to verbally assess the tools.

During the tutorial and task sessions, we captured audio and video of the session and encouraged participants to both ask questions (of the two moderators) and describe what they were attempting to accomplish. Although it was essentially a cognate of a protocol analysis session, we did not use a “keep talking” prompt or otherwise force our participants to vocalize their interactions. Our method was driven by the fact that we had a limited time to work with a relatively large number of users (17) in two short sessions over a period of two days. During these sessions the moderators took notes, and subsequent analysis of the video and audio helped to augment these notes with further detail.

Immediately following the tutorial and task sessions, a focus group was held to discuss various aspects of the ESTAT toolkit. During the focus group, the same two moderators led the discussion, with occasional input and questions from two of the NCI project leaders who collaborate with the GeoVISTA Center. In hindsight, it would have been better not to have this additional input, as we were not aware before the session of the questions they wanted to ask or the issues they would be most interested in exploring. In general, the NCI project leaders were interested in hearing about what new features were still required, while we were focused on determining whether ESTAT functioned effectively as an exploratory visualization toolkit. As a result, our focus group discussion was somewhat more discontinuous than it might have been had the full time and scope been within our control.

Both the modified VPA and focus group approaches to tool assessment by domain experts were extremely valuable to the development of ESTAT. From the tutorial/task sessions we were able to determine that our data-loading mechanism needed to be completely redesigned in order to be reasonably efficient. While we had our own informal debates regarding this part of ESTAT prior to testing at NCI, having documentation of end-users trudging through the interface had a greater impact on our developers. The tutorial/task sessions revealed that most users never got very far into actual epidemiological analysis because of the clumsiness of the interface and their lack of familiarity with the visualization methods being applied. This latter point was an aspect of our work that we had not anticipated—we found that the visualizations that NCI had requested we build in ESTAT were not widely understood by the users NCI had in mind. In particular, most users needed tutoring to understand the Parallel Coordinate Plot and Time Series graphs. We had included descriptions about how these work in our tutorial. However, for users who were experiencing this kind of analysis tool for the first time, more focused training was clearly needed.

From our focus group discussions we were provided with insights into the modifications and additions needed for ESTAT in order to make it usable for epidemiological work. In general, our users were excited about the potential that geovisualization tools hold for their discipline. The version of ESTAT that was tested, however, lacked a number of the essential ingredients needed to make the software practical for use by typical public-health researchers.

Specifically, our users repeatedly mentioned a desire to see descriptive statistics of the data displayed, in order to help them assess the character of the data patterns and relationships in question. One user went so far as to ask, “Why is it called ESTAT if there are no stats?” When we pressed our users for more details regarding the specific statistics they would require, we were met with a wide range of possibilities, including regression values/lines, correlation coefficients, means/modes/medians, and significance values. A small number of users had specific suggestions for more advanced statistics, including Poisson regression, Bayesian models, and the ability to use spatial analysis methods. For these more advanced functions we were advised that it would be worthwhile for our tools to be able to “talk to” a statistical software package so that users could create and execute complex and customized routines on data they have viewed, initially, in ESTAT.

As a result, we were faced with the challenge of deciding which statistical methods to incorporate into our tools and which we should leave to other software. Additionally, the suggestion to enable our tools to “talk to” a statistical package is not easy to implement because most statistical software is designed to be self-contained, and coordination with our Java-based software involves a substantial software engineering effort.
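Live coupling with a statistical package is hard, as noted above. A much looser alternative, sketched here as an assumption rather than anything ESTAT implements, is to export the currently brushed records to a plain CSV file that a separate statistics package can import. The field names and records below are hypothetical.

```python
import csv
import io
from typing import Dict, Iterable, List, Set, TextIO


def export_selection(rows: Iterable[Dict[str, object]], selected_ids: Set[int],
                     fields: List[str], out: TextIO) -> int:
    """Write only the brushed records as CSV; return how many rows were written."""
    # extrasaction="ignore" drops any keys that are not listed in `fields`.
    writer = csv.DictWriter(out, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    written = 0
    for row in rows:
        if row["id"] in selected_ids:
            writer.writerow(row)
            written += 1
    return written


# Hypothetical county records; only ids 1 and 3 are currently brushed.
rows = [
    {"id": 1, "county": "A", "rate": 41.2, "note": "internal"},
    {"id": 2, "county": "B", "rate": 37.5, "note": "internal"},
    {"id": 3, "county": "C", "rate": 45.0, "note": "internal"},
]
buffer = io.StringIO()  # in practice this would be a file opened with newline=""
n = export_selection(rows, {1, 3}, ["id", "county", "rate"], buffer)
```

This avoids cross-language engineering at the cost of a manual round trip: results computed in the statistics package do not flow back into the linked views.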

Case Study Collaboration

While we gathered many useful ideas from these tests at NCI, it was clear that we could pursue a more elegant and effective solution by embarking on an in-depth collaboration over a significant period of time with an epidemiologist. Doing so would provide deeper insight into common methods of epidemiological analysis, help determine the kinds of statistical/mathematical methods that are used most frequently to assess the quality and general characteristics of data, and allow us to understand in greater detail the kinds of interfaces that epidemiologists encounter regularly and therefore might be most comfortable adopting. Furthermore, we could augment the results gathered from our other assessment efforts and begin to triangulate areas of common agreement.

The process we followed draws upon the practice of interaction design (Preece et al. 2002). Interaction design methodology calls for special attention to the situation in which an application will be used, as well as the users and the tasks they wish to accomplish. We adopted a participant-observation approach to studying and cataloguing the actions and ideas of our collaborator, with goals similar to those we had in mind during the work domain analysis stage of our design process. Over a period of roughly four months, Anthony Robinson worked together with Dr. Eugene Lengerich, an epidemiologist from the Penn State University College of Medicine. Lengerich has been a colleague in several medical geography projects we host at the GeoVISTA Center and, therefore, was familiar with our staff, software, and approach. Our goal from the beginning was to identify a problem in epidemiology that we could use ESTAT to explore, and by doing so together we would attempt to uncover a wide array of issues regarding the functionality and usefulness of our geovisualization toolkit. The case study work took place in several semi-formal sessions where we worked together using a laptop, sometimes augmented by a projector so that other colleagues could participate as well. Outside of these sessions, Robinson and Dr. Lengerich often collaborated via email and phone.

Initially, our meetings were largely informational, as we began to know each other and determine how we might work together on a specific epidemiological research question. These first steps allowed us to build an understanding of the kinds of problems that were interesting to our colleague, and conversely provided Dr. Lengerich with ample opportunity to see a wide array of what we were working on and what kinds of tools were at his disposal. This is the kind of mutual understanding that is difficult to achieve when an outside expert is brought in for a limited amount of time, as is typically the case in many structured evaluations of software tools. Participant observation provided us with the ability to effectively develop this understanding into real synergy between our group of geovisualization experts and a researcher focused on epidemiology.

Over the course of several meetings, Dr. Lengerich decided he would like to try using these tools to augment a more traditional epidemiological study. Specifically, he wanted to be certain that ESTAT could echo the results he would obtain from a structured mathematical analysis. The example analysis was a study of colon cancer incidence in the Appalachian counties of Pennsylvania, Kentucky, and West Virginia. This area is a focus of the Appalachian Cancer Network, for which our colleague is a research director. He had a working hypothesis that there are differences between the spatial patterns exhibited for the incidence of colon cancer, depending on whether that cancer occurs in the ascending or descending colon. This idea stems from current research in epidemiology that is examining potential etiologic differences in colon cancer that occurs in the ascending colon versus the descending colon (Hopenhayn et al. 2004; Iacopetta 2002). Moreover, Dr. Lengerich was interested in exploring how ascending/descending malignancies might differ according to variables representing prevalence of health screening and access to healthcare facilities. Our initial work with ESTAT on this problem centered on issues related to the data we wanted to analyze, and a great deal of work became necessary in order to create the right multivariate dataset that covered enough detail to explore a rather broad hypothesis.

The final sessions of our case study collaboration focused on using ESTAT to explore the colon cancer hypothesis. Dr. Lengerich verbally confirmed his findings as we used ESTAT to examine the relationships he had examined statistically. He was able to visually explore the same data he had previously analyzed and identify corroborating evidence to support his conclusions.

In a statistical analysis completed prior to our collaboration, Dr. Lengerich found a significant positive correlation between the number of physicians per 100,000 persons (doctor ratio) and the incidence of ascending colon cancer (and this possible relationship was of great interest to him). There was not a significant correlation (positive or negative) between the doctor ratio and descending colon cancer. Neither type of colon cancer was significantly correlated with the number of hospitals per 100,000 persons. Additionally, Dr. Lengerich observed that both types of colon cancer incidence showed significant positive correlation with per capita income, and negative correlation with unemployment rates.
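The analysis summarized above relies on two routine steps: expressing counts as rates per 100,000 persons and testing correlations for significance. The paper does not name the test Dr. Lengerich used; this sketch assumes a Pearson correlation with the standard t-test on n - 2 degrees of freedom. The county values are invented for illustration and are not his data.

```python
import math
from typing import Sequence


def per_100k(count: float, population: float) -> float:
    """Convert a count (e.g. physicians) into a rate per 100,000 persons."""
    return 100_000 * count / population


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt((n - 2) / (1 - r^2)); compare with Student's t on n - 2 df."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical counties: physicians, population, ascending colon cancer incidence.
doctors = [12, 30, 8, 45, 20]
population = [40_000, 90_000, 25_000, 110_000, 60_000]
incidence = [18.0, 24.5, 15.2, 27.9, 20.1]

doctor_ratio = [per_100k(d, p) for d, p in zip(doctors, population)]
r = pearson_r(doctor_ratio, incidence)
t = t_statistic(r, len(incidence))
```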

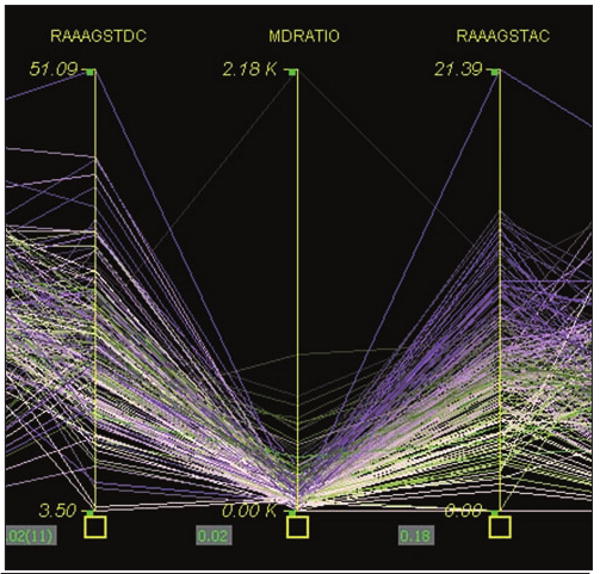

Our approach to integrating ESTAT with this analysis was to visualize the same data and use these findings as a guide for what to look for. Dr. Lengerich preferred to use the parallel coordinate plot to visually compare the differences between ascending and descending colon cancer incidences and the covariate in question. In the doctor ratio example, Dr. Lengerich turned on the PCP correlation values and observed the same results his analysis had uncovered (Figure 3). One by one, each of the other findings was examined in this way, often with the help of a scatterplot.

Figure 3.

Using the parallel coordinate plot to analyze one covariate between two outcomes. Ascending colon cancer incidence is on the right, and descending incidence is on the left. Between them is the doctor ratio indicator. The correlation value is displayed between the axes.
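Figure 3 places a covariate between two outcomes so that each outcome sits next to it, with a correlation value shown between neighboring axes. A sketch of that computation follows, assuming the displayed value is a Pearson r for each adjacent pair of axes (the paper does not specify the coefficient). The column values are toy numbers, not the Appalachian data.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def adjacent_axis_correlations(columns: Dict[str, List[float]],
                               axis_order: List[str]) -> List[Tuple[str, str, float]]:
    """Correlation for each neighboring pair of PCP axes, in display order."""
    return [(left, right, pearson_r(columns[left], columns[right]))
            for left, right in zip(axis_order, axis_order[1:])]


# Toy county columns arranged as in Figure 3: descending | doctor ratio | ascending.
columns = {
    "descending": [10.0, 12.0, 9.0, 14.0],
    "doctor_ratio": [80.0, 95.0, 70.0, 120.0],
    "ascending": [20.0, 26.0, 18.0, 31.0],
}
pairs = adjacent_axis_correlations(columns, ["descending", "doctor_ratio", "ascending"])
for left, right, r in pairs:
    print(f"{left} | {right}: r = {r:.2f}")
```

Because only adjacent pairs get a value, axis order matters: placing the covariate between the two outcomes is what makes both comparisons visible at once.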

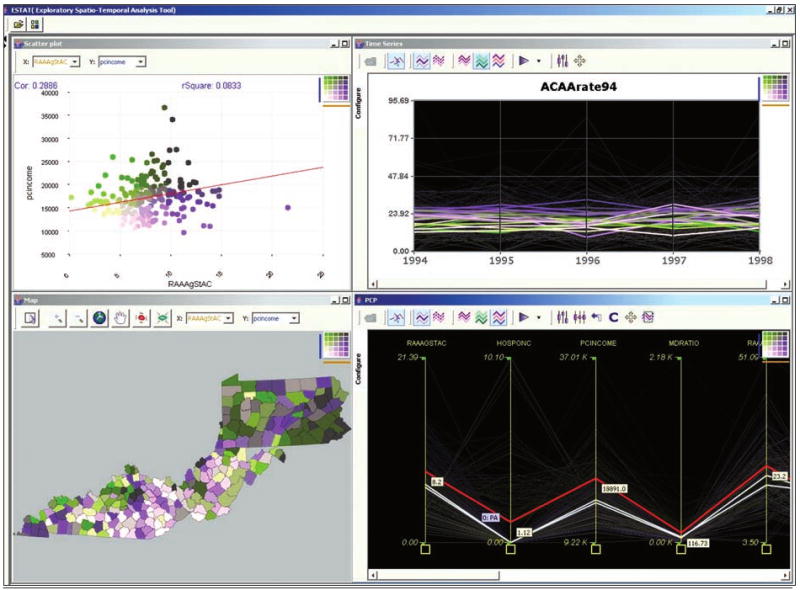

Following this confirmatory activity, Dr. Lengerich explored the spatial portion of this problem using the bivariate map and PCP together. He used the category median summary line tool to create median lines for each of the three states in question and then brushed over these to look at individual states and their patterns of colon cancer incidence and socioeconomic indicators (Figure 4). During this process, he verbalized a desire to try and determine why Pennsylvania appeared to have different patterns for colon cancer than Kentucky and West Virginia. While Pennsylvanians were generally more affluent and had better access to doctors and screening, they also had higher rates of colon cancer incidence of both types.

Figure 4.

Exploring state differences across socioeconomic indicators and colon cancer incidence in three states in Appalachia. This capture shows the state summary line for Pennsylvania as a red highlighted string in the PCP. One can see that on average, Pennsylvania has higher rates of ascending colon cancer, as well as higher access to health care, doctors, and higher per capita income than Kentucky and West Virginia. The map shows the rate of ascending colon cancer incidence versus per capita income, the darkest counties showing high rates of cancer and relatively high income.
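The category median summary lines in Figure 4 condense many county strings into one string per state. ESTAT's implementation is not described here; the sketch assumes, as the tool's name suggests, that each summary line is the per-category median of every axis variable. The records and values are hypothetical.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List


def category_medians(records: Iterable[dict], category_field: str,
                     variables: List[str]) -> Dict[str, Dict[str, float]]:
    """Median of each variable within each category; one PCP summary line per category."""
    grouped: Dict[str, List[dict]] = defaultdict(list)
    for record in records:
        grouped[record[category_field]].append(record)
    return {
        category: {v: median(r[v] for r in rows) for v in variables}
        for category, rows in grouped.items()
    }


# Hypothetical county records (incidence per 100,000; income in thousands of dollars).
records = [
    {"state": "PA", "ascending": 30.0, "income": 25.0},
    {"state": "PA", "ascending": 34.0, "income": 27.0},
    {"state": "PA", "ascending": 40.0, "income": 29.0},
    {"state": "KY", "ascending": 20.0, "income": 18.0},
    {"state": "KY", "ascending": 26.0, "income": 20.0},
    {"state": "WV", "ascending": 22.0, "income": 19.0},
]
summary = category_medians(records, "state", ["ascending", "income"])
```

A median rather than a mean keeps a single extreme county from pulling a state's summary line.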

Our experiences with NCI research staff indicated that most cancer researchers will be unsatisfied with purely visual analysis and that they want a range of descriptive statistics as well. For this case study, Dr. Lengerich had carried out statistical analysis of the data prior to using ESTAT, so he had less need for integrated statistical tools. Perhaps more importantly, ESTAT presented a geographic picture of Dr. Lengerich's analysis that he had not seen before. Robinson and Dr. Lengerich spent time iterating through each state in the three-state study region to explore the geographic pattern in greater detail. In this stage of data exploration it would have been valuable to have access to spatial statistics in ESTAT to examine the geography more systematically. In general, the spatial patterns confirmed Dr. Lengerich's suspicion that Pennsylvania was experiencing a different health situation from Kentucky and West Virginia with respect to colon cancer and a wide array of socioeconomic covariates. These patterns mirrored the aforementioned relationships between economic affluence (and correspondingly better access to health care and screening) and high rates in both types of colon cancer.
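The bivariate map in Figure 4 crosses ascending colon cancer incidence with per capita income, with the darkest counties high on both. The paper does not state how those classes were formed; the sketch assumes a common 3 × 3 scheme with tercile (quantile) breaks on each variable, and the function names are hypothetical. `statistics.quantiles` requires Python 3.8 or later.

```python
from bisect import bisect_right
from statistics import quantiles
from typing import List, Sequence, Tuple


def tercile_breaks(values: Sequence[float]) -> List[float]:
    """Two cut points that split the values into three roughly equal-count classes."""
    return quantiles(values, n=3)


def bivariate_class(x: float, y: float,
                    x_breaks: List[float], y_breaks: List[float]) -> Tuple[int, int]:
    """(row, col) in a 3 x 3 legend: row from y (cancer rate), col from x (income).

    (2, 2) is the high-high cell, usually drawn darkest in a bivariate scheme.
    """
    return bisect_right(y_breaks, y), bisect_right(x_breaks, x)


# Hypothetical county values.
income = [18.0, 19.5, 21.0, 23.0, 24.5, 26.0, 27.5, 29.0, 31.0]
rate = [20.0, 35.0, 22.0, 28.0, 31.0, 26.0, 38.0, 33.0, 41.0]
xb, yb = tercile_breaks(income), tercile_breaks(rate)
classes = [bivariate_class(x, y, xb, yb) for x, y in zip(income, rate)]
```

Quantile breaks guarantee that each marginal class holds about a third of the counties, which keeps all nine legend cells in use; equal-interval breaks would instead preserve the spacing of the raw values.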

The different conclusions that our formal testing at NCI and our case study suggested about the need for statistics were likely influenced by two factors. First, our users at NCI had only a short time to familiarize themselves with ESTAT, and the alpha version they used lacked even simple measures of correlation or regression; these had been implemented by the time Lengerich began using ESTAT. Second, for the case study we positioned ESTAT as a tool that would augment and confirm a “typical” epidemiological analysis, a departure from the “explore and hypothesize” approach we had encouraged at NCI. This change in focus happened primarily because Lengerich wanted to make sure ESTAT would echo his traditional analysis before he began to rely on it for exploratory tasks. Lengerich's conservative approach was mirrored in many instances by users at NCI who voiced skepticism about visualization techniques and how they might misrepresent various aspects of the data.

Discussion

As a result of our efforts to systematically evaluate ESTAT in terms of its design and usability, we have been able to better shape our toolkit for the tasks of epidemiology. We were especially fortunate to gain a window into the discipline through our case study collaboration. We knew this would provide only a limited viewpoint because we were working with a single user. In practice, it is sometimes difficult to separate individual idiosyncrasies from the more valuable common themes that can refine and enhance a design. However, our one-on-one collaboration enabled a deeper understanding of one expert's data analysis strategies, giving us a basis for strategic decisions about which suggestions to follow (from this expert and others) and which to reserve for further input from future evaluations.

Insights derived from our case study are complemented by those from our group evaluation sessions at NCI, as well as by our early internal usability testing. Combining multiple usability methods with multiple participants from different backgrounds provided rich and comprehensive input into our software design process. Virtually all aspects of ESTAT's interface and functionality were addressed by at least one component of our assessment strategy. Furthermore, focusing on user input and participation throughout the design process has shifted our emphasis from developing new methods in isolation to developing toolkit components that have an immediate application.

The case study portion of our usability work with ESTAT was particularly beneficial to our general understanding of the problem domain, and our colleague's specific and implicit suggestions led us to focus on issues that we had previously neglected: loading and sorting data, and interface icons and controls. It cannot be stressed enough that, without attention to the actual use of our geovisualization tools, it would have been difficult for us to predict these deficiencies accurately, though it might have been possible had we taken a proactive stance and modeled user interactions with our design. Moreover, our experiences in the design process presented here (and in other related work) support Buttenfield's (1999) argument that converging methods are essential to assessing complex geospatial interfaces.

While it is possible to learn a great deal from a single exercise, a single exercise is not an effective way to tailor designs to a particular context of use. Evaluation must mirror development, in that it should continue throughout the project. We advocate incorporating use and usability assessment from the start of any software development project. Furthermore, developers should not be expected to “take care” of usability issues on their own. The situation is analogous to cartographic design: we would not expect those who create commercial GIS software, or the databases we use, to be responsible for producing elegant and appropriate maps for distribution. Internally, our development process has changed substantially since we dedicated resources to research assistants who do not develop software and instead focus explicitly on user issues.

We made a series of modifications to our software in response to usability issues raised by our case study collaboration and other interaction and usability assessments. Our initial in-house focus on the PCP component led to a complete redesign of its interface and behaviors. Subsequent evaluation activities have led to a similar redesign of the entire ESTAT environment. The following sections describe the major issues that emerged from our assessment work with ESTAT.

Issues Emerging as a Result of Assessments

Data

ESTAT has gone through an extensive reworking of the way it loads and handles datasets for epidemiological research. Our initial testing at NCI revealed that our data-loading tool was far too complicated as a single panel (Figure 5). Armed with a video showing our end-users struggling with its interface, we were able to demonstrate to our developers the need for a redesign. As a group, we decided to create a data-loading wizard that would guide users through each step more efficiently. After building an initial prototype, we rewrote each prompt in natural language in place of the technical “programmer-speak” it had originally used. At the same time, we reworked the icons to provide visual cues to the functions they represent. Both of these changes, as well as others we have implemented, are inspired by interface design guidelines discussed by Shneiderman and Plaisant (2005).

Figure 5.

The original ESTAT data loader was a single complicated panel with multiple controls. The new data-loading wizard features a set of easy-to-understand steps that guide users through creating a project and selecting a set of variables to explore.

The case study collaboration was particularly valuable to our understanding of data handling in epidemiology. We had placed less importance on this stage of analysis than on the visual exploration tools, because visual exploration is the focus of our GeoVISTA research center and of the primary research grant supporting this work. We found that our epidemiologists needed a mechanism for visualizing and making sense of complex datasets before they could select variables and explore them with our exploratory geovisualization tools. While we work on a visual method to support variable selection, we have added categorizing and sorting tools to our data-loading wizard. These tools provide a structure for data selection that complements the analysis strategy we observed in our case study work with Dr. Lengerich. We were able to confirm the utility of these added features in our case study meetings as we explored the colon cancer hypothesis.
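One simple way to provide categorizing and sorting of the kind described above is to tag each variable with a category and present the variables grouped by category, sorted alphabetically within each group. The sketch below assumes a hypothetical list of `(name, category)` pairs; the function name, category labels, and variable names are invented for illustration and do not reflect ESTAT's actual data model.

```python
from collections import defaultdict


def organize_variables(variables):
    """Group hypothetical (name, category) pairs by category.

    Categories are returned in alphabetical order, and variable names are
    sorted case-insensitively within each category.
    """
    groups = defaultdict(list)
    for name, category in variables:
        groups[category].append(name)
    return {
        category: sorted(names, key=str.lower)
        for category, names in sorted(groups.items())
    }


# Hypothetical variables and categories.
catalog = organize_variables([
    ("PCI", "Socioeconomic"),
    ("asc_crc", "Cancer incidence"),
    ("DOCS", "Socioeconomic"),
    ("desc_crc", "Cancer incidence"),
])
# catalog == {"Cancer incidence": ["asc_crc", "desc_crc"],
#             "Socioeconomic": ["DOCS", "PCI"]}
```

Sorting case-insensitively keeps mixed-case variable names from being split into separate uppercase and lowercase runs in a long list.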

We have also created a simple metadata file to accompany each project. The metadata provide detailed variable descriptions to help alleviate the comprehension problems that arise when many truncated variable names are on the screen at once. Rollovers in our tools now display the full description of each variable.
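The format of the metadata file is not specified here. As a minimal sketch, assume a hypothetical plain-text file with one `NAME<TAB>description` entry per line and `#` marking comments; a lookup then maps each truncated variable name to the text shown on rollover, falling back to the name itself when no description exists. The function names and example variables are hypothetical.

```python
def parse_metadata(text):
    """Parse hypothetical 'NAME<TAB>description' lines into a dict.

    Blank lines and lines starting with '#' are ignored. A missing
    description falls back to the variable name.
    """
    descriptions = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, description = line.partition("\t")
        name = name.strip()
        descriptions[name] = description.strip() or name
    return descriptions


def rollover_text(variable, descriptions):
    """Text to display when the pointer rests on a variable label."""
    return descriptions.get(variable, variable)


metadata = parse_metadata(
    "# hypothetical project metadata\n"
    "ASC_CRC\tAscending colon cancer incidence rate\n"
    "PCI\tPer capita income\n"
)
print(rollover_text("PCI", metadata))  # Per capita income
```

Falling back to the short name means a variable missing from the metadata still gets a (less helpful) rollover instead of an error.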

Supplementary Statistics

A crucial issue that arose repeatedly during our evaluations was a strong desire for basic descriptive statistics to characterize the data being visualized. The initial ESTAT prototype did not incorporate such statistics, and our users were reluctant to explore the data visually without some sense of their mathematical structure up front. Because our tester group at NCI had already expressed this sentiment, we implemented basic descriptive statistics before embarking on our case study collaboration.

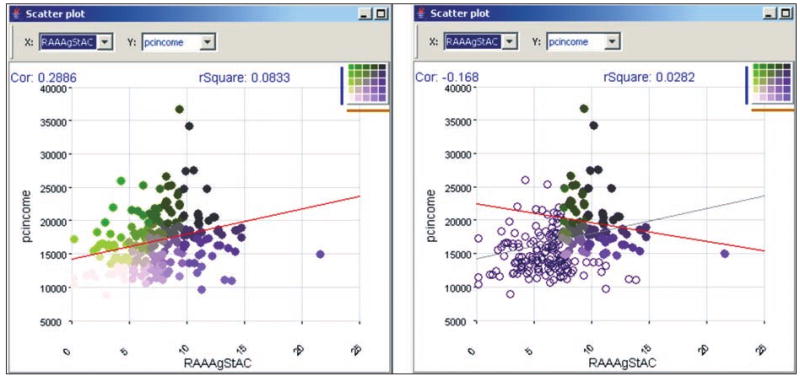

Correlation coefficients are now available for each pair of PCP and Time Series axes, as well as for the distribution displayed in the scatter plot. In addition, the scatter plot can show a regression line and r-squared value. The scatter plot calculates these statistics on the fly, and selecting a subset of points on the plot causes the values to update to reflect that subset (Figure 6).
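The text does not say which correlation coefficient or regression method ESTAT uses. Assuming Pearson's r and an ordinary least-squares line (for which r-squared equals the square of Pearson's r), the on-the-fly statistics, including recomputation for a selected subset and correlations for every pair of axes, can be sketched as below. All function names are hypothetical; this is an illustration, not ESTAT's code.

```python
from itertools import combinations
from math import sqrt


def _centered_sums(xs, ys):
    """Return (mean_x, mean_y, Sxy, Sxx, Syy) for paired samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return mean_x, mean_y, sxy, sxx, syy


def pearson_r(xs, ys):
    """Pearson correlation coefficient (assumed, not confirmed, for ESTAT)."""
    _, _, sxy, sxx, syy = _centered_sums(xs, ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def regression_line(xs, ys):
    """Least-squares y = slope * x + intercept; returns (slope, intercept, r_squared)."""
    r = pearson_r(xs, ys)  # also rejects constant variables before dividing
    mean_x, mean_y, sxy, sxx, _ = _centered_sums(xs, ys)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept, r * r


def subset_statistics(xs, ys, selected):
    """Recompute r and the regression line using only the selected point indices."""
    sub_x = [xs[i] for i in selected]
    sub_y = [ys[i] for i in selected]
    return pearson_r(sub_x, sub_y), regression_line(sub_x, sub_y)


def axis_pair_correlations(columns):
    """Pearson r for every pair of axes in {axis_name: values}."""
    return {
        (a, b): pearson_r(columns[a], columns[b])
        for a, b in combinations(columns, 2)
    }


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
# Whole plot: slope about 0.6, intercept about 2.2, r-squared about 0.6.
slope, intercept, r_squared = regression_line(xs, ys)
# Selecting the first three points: slope about 1.5.
r_sub, (slope_sub, intercept_sub, r2_sub) = subset_statistics(xs, ys, [0, 1, 2])
```

Recomputing from the raw selected values keeps the code simple; for very large datasets, running sums could be updated incrementally as points enter or leave a selection.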

Figure 6.

Subset correlation as displayed on the ESTAT scatter plot.

Performance/Computing Infrastructure Issues

The disparity between the computers available in our research center and those in daily use by our colleagues in epidemiology (which typically have less memory, lower-resolution screens, and slower processors) has led us to focus on improving the performance of our software tools on machines that are not on the cutting edge of computing technology.

A more vexing issue is that our visual tools require considerable screen space, and many of our developers build them on dual-monitor machines. Not surprisingly, few of our end-users have, or will soon have, this capability at their disposal. While it is reasonable to expect that higher-resolution monitors will become more affordable and commonplace over time, we have begun to encourage scrutiny of interfaces in terms of how much space they consume. Innovative window management tools, such as those available in Mac OS X, may also alleviate some of these issues.

Future Directions

At present, we are working on further methods for evaluating and redesigning ESTAT for epidemiology. An in-depth individual user assessment with a small number of participants is scheduled to take place at NCI in the near future. This activity will again focus on giving domain experts the ability to perform quick visual exploration of multivariate spatial data in order to formulate new and enhanced hypotheses. The verbal protocol analysis technique will be employed in its more traditional form, and after their individual sessions the users will discuss their experiences with the latest version of ESTAT in a focus group. We hope that this evaluation effort will give us a greater understanding of which aspects of ESTAT are useful for exploration and how epidemiologists from a wide range of backgrounds situate visualization within their current research.

In the near term, we will incorporate a suite of spatial statistics into ESTAT and its parent application, GeoVISTA Studio, through our software development collaboration with the creators of the GeoDa spatial analysis toolkit at the Spatial Analysis Laboratory of the University of Illinois at Urbana-Champaign.
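As one illustration of the kind of spatial statistic involved, GeoDa offers global Moran's I, a measure of spatial autocorrelation: I = (n / S0) · Σᵢ Σⱼ wᵢⱼ (xᵢ − x̄)(xⱼ − x̄) / Σᵢ (xᵢ − x̄)², where S0 is the sum of all weights wᵢⱼ. The sketch below assumes binary contiguity weights (wᵢⱼ = 1 when areas i and j are neighbors) stored as a hypothetical neighbor-list dictionary. It is neither the GeoDa nor the ESTAT implementation, and it omits the significance testing (for example, permutation inference) that a real analysis would require.

```python
def morans_i(values, neighbors):
    """Global Moran's I with binary (0/1) contiguity weights.

    values: list of attribute values, indexed by area.
    neighbors: {area_index: [indices of adjacent areas]}; each listed
        neighbor relation contributes a weight of 1, so S0 is the total
        number of listed relations.
    """
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    s0 = sum(len(adjacent) for adjacent in neighbors.values())
    if s0 == 0:
        raise ValueError("at least one neighbor relation is required")
    cross_products = sum(
        deviations[i] * deviations[j]
        for i, adjacent in neighbors.items()
        for j in adjacent
    )
    variance_sum = sum(d * d for d in deviations)
    if variance_sum == 0:
        raise ValueError("Moran's I is undefined for a constant variable")
    return (n / s0) * (cross_products / variance_sum)


# Four hypothetical areas in a row: 0 - 1 - 2 - 3.
chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
print(morans_i([1, 1, 5, 5], chain))  # positive: similar values are adjacent
print(morans_i([1, 5, 1, 5], chain))  # negative: values alternate
```

Values near zero suggest no spatial pattern; positive values indicate that similar rates cluster geographically, which is the kind of systematic check the case study found lacking.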

Finally, we hope to address the challenge of adapting common summative evaluation techniques to our exploratory tools. We wish to answer the call for more definitive evidence that visualization, and geovisualization in particular, provides clear advantages over other methods of exploratory analysis. Our case study results in particular provide some evidence that this goal is within reach. ESTAT, along with sample datasets, is available for download at http://www.geovista.psu.edu/ESTAT/.

Acknowledgments

The research reported here has been supported in part by a contract from the National Cancer Institute (to construct the initial ESTAT application) and by grant CA95949 from the National Cancer Institute, which supported the development of our approach to user-centered design and the study of ESTAT use.

Contributor Information

Anthony C. Robinson, Email: arobinson@psu.edu.

Jin Chen, Email: jxc93@psu.edu.

Eugene J. Lengerich, Email: elengerich@psu.edu.

Hans G. Meyer, Email: hmeyer@psu.edu.

Alan M. MacEachren, Email: maceachren@psu.edu.

References

- Andrienko GL, Andrienko NV, Voss H, Bernardo F, Hipolito J, Kretchmer U. Testing the usability of interactive maps in CommonGIS. Cartography and Geographic Information Science. 2002;29(4):325–42.

- Buttenfield B. Usability evaluation of digital libraries. Science & Technology Libraries. 1999;17(3):39–59.

- Cockings S, Dunn CE, Bhopal RS, Walker DR. Users' perspectives on epidemiological, GIS and point pattern approaches to analysing environment and health data. Health & Place. 2004;10:169–82. doi: 10.1016/j.healthplace.2003.09.001.

- Edsall RM. Design and usability of an enhanced geographic information system for exploration of multivariate health statistics. Professional Geographer. 2003;55(2):605–19.

- Ericsson KA, Simon HA. Protocol analysis: Verbal reports as data. Cambridge, MA: MIT Press; 1993.

- Gabbard JL, Hix D, Swan JE II. User-centered design and evaluation of virtual environments. IEEE Computer Graphics and Applications. 1999;19(6):51–9.

- Haklay M, Tobon C. Usability evaluation and PPGIS: Towards a user-centered design approach. International Journal of Geographical Information Science. 2003;17(6):577–92.

- Hopenhayn C, Moore DB, Huang B, Redmond J, Tucker TC, Kryscio RJ, Boissonneault GA. Patterns of colorectal cancer incidence, risk factors, and screening in Kentucky. Southern Medical Journal. 2004;97(3):216–23. doi: 10.1097/01.SMJ.0000116041.78617.92.

- Iacopetta B. Are there two sides to colorectal cancer? International Journal of Cancer. 2002;101(5):403–08. doi: 10.1002/ijc.10635.

- Jacquez GM, Estberg L. Clusterseer 2.0: Software for the detection and analysis of spatial, temporal, and spatio-temporal patterns. BioMedware, Inc. http://www.terraseer.com/products/clusterseer.html.

- Khan OA, Skinner R, editors. Geographic information systems and health applications. Hershey: Idea Group Publishers; 2003.

- Kulldorff M, Information Management Services, Inc. SaTScan v5.1: Software for the spatial and space-time scan statistics. http://www.satscan.org/.

- MacEachren AM, Boscoe F, Haug D, Pickle LW. Geographic visualization: Designing manipulable maps for exploring temporally varying georeferenced statistics. Proceedings of the IEEE Information Visualization Symposium; Research Triangle Park, NC; October 19–20, 1998.

- MacEachren AM, Kraak MJ. Research challenges in geovisualization. Cartography and Geographic Information Science. 2001;28(1):3–12.

- Montello DR, Fabrikant SI, Ruocco M, Middleton RS. Testing the first law of cognitive geography on point-display spatializations. Proceedings, Conference on Spatial Information Theory (COSIT '03), Lecture Notes in Computer Science 2825; Ittingen, Switzerland; September 24–28, 2003.

- Morgan DL, Krueger RA, King JA. The focus group kit. Thousand Oaks, CA: Sage Publications; 1998.

- Nielsen J. Usability engineering. Boston, MA: Academic Press, Inc.; 1993.

- Norman D. The design of everyday things. New York: Basic Books; 2002.

- Pickle LW, Mungiole M, Jones GK, White AA. Exploring spatial patterns of mortality: The new atlas of United States mortality. Statistics in Medicine. 1999;18(23):3211–20. doi: 10.1002/(sici)1097-0258(19991215)18:23<3211::aid-sim311>3.0.co;2-q.

- Preece J, Rogers Y, Sharp H. Interaction design: Beyond human-computer interaction. New York: John Wiley & Sons; 2002.

- Saraiya P, North C, Duca K. An evaluation of microarray visualization tools for biological insight. Proceedings of the IEEE Symposium on Information Visualization 2004; Austin, TX; October 10–12, 2004.

- Shneiderman B, Plaisant C. Designing the user interface: Strategies for effective human-computer interaction. Boston, MA: Addison-Wesley; 2005.

- Slocum T, Cliburn D, Feddema J, Miller J. Evaluating the usability of a tool for visualizing the uncertainty of the future global water balance. Cartography and Geographic Information Science. 2003;30(4):299–317.

- Snow J. On the mode of communication of cholera. New York: The Commonwealth Fund; 1855.

- Suchan TA. Usability studies of geovisualization software in the workplace. Proceedings of the National Conference for Digital Government Research; Los Angeles, CA; May 19–22, 2002.

- Takatsuka M, Gahegan M. GeoVISTA Studio: A codeless visual programming environment for geoscientific data analysis and visualization. Computers and Geosciences. 2002;28(10):1131–44.

- Wennberg JE, Cooper MM, Birkmeyer JD, Bronner KK, Bubolz TA, Campbell DE, Fisher EF, O'Connor GT, Poage JF, Sharp SM, Skinner J, Stukel TA, Wennberg DE. The Dartmouth atlas of health care 1999. Chicago, IL: American Hospital Publishing, Inc.; 1999.

- Yin RK. Case study research: Design and methods. 2nd ed. Thousand Oaks, CA: Sage Publications; 1994.