Abstract

Objectives

To ascertain hospital inpatient mortality in England and to determine which factors best explain variation in standardised hospital death ratios.

Design

Weighted linear regression analysis of routinely collected data over four years, with hospital standardised mortality ratios as the dependent variable.

Setting

England.

Subjects

Eight million discharges from NHS hospitals when the primary diagnosis was one of the diagnoses accounting for 80% of inpatient deaths.

Main outcome measures

Hospital standardised mortality ratios and predictors of variations in these ratios.

Results

The four year crude death rates varied across hospitals from 3.4% to 13.6% (average for England 8.5%), and standardised hospital mortality ratios ranged from 53 to 137 (average for England 100). The percentage of cases that were emergency admissions (60% of total hospital admissions) was the best predictor of this variation in mortality, with the ratios of hospital doctors to beds and of general practitioners to head of population the next best predictors. When analyses were restricted to emergency admissions (which covered 93% of all patient deaths analysed), the number of doctors per bed was the best predictor.

Conclusion

Analysis of hospital episode statistics reveals wide variation in standardised hospital mortality ratios in England. The percentage of total admissions classified as emergencies is the most powerful predictor of variation in mortality. The ratios of doctors to head of population served, both in hospital and in general practice, seem to be critical determinants of standardised hospital death rates; the higher these ratios, the lower the death rates in both cases.

Key messages

Between 1991-2 and 1994-5 average standardised hospital mortality ratios in English hospitals reduced by 2.6% annually, but the ratios varied more than twofold among the hospitals

After adjustment for the percentage of emergency cases and for age, sex, and primary diagnosis, the best predictors of standardised hospital death rates were the numbers of hospital doctors per bed and of general practitioners per head of population in the localities from which hospital admissions were drawn

England has one of the lowest numbers of physicians per head of population of the OECD countries, only 59% of the OECD average

It is now possible to control for factors outside the direct influence of hospital policy and thereby produce a more valid measure of hospital quality of care

Introduction

Wide variations in English hospital inpatient death rates have been observed over many years,1–4 and concerns have been expressed that such variations could reflect important differences in the quality of medical care available in different hospitals.5,6 Hitherto, research has provided contradictory evidence about the relation of hospital mortality to quality of care.6–9 While differences in patients’ age and severity of illness may explain some of the variation in hospital death rates, adjustment for age, sex, and severity leaves a large amount of unexplained variation.10–15

Comparisons of hospital inpatient death rates, published annually in the United States as league tables, have resulted in lively discussion and debate about their compilation and usefulness.13,16–18 Meaningful comparison of hospital death rates requires adjustments for severity of illness, length of hospital stay, age, diagnosis, and type of admission. Suitably standardised hospital death rates are used both as indicators of quality of care and in the setting of standards in the United States.19–22

The NHS offers unique opportunities for examining the reasons for differences in hospital death rates because it provides a virtually closed system of care available to almost everyone in the country. Since 1987, data have been routinely collected nationally on every admission to hospital, providing a comprehensive database on all inpatient admissions. By linking other sources of routinely collected data to analyse inpatient hospital death rates, we attempted to ascertain differences in hospital mortality in England and to determine the main factors explaining the variation between hospitals over a four year period.

Methods

Data sources

We obtained data from three main sources: the NHS hospital episode statistics data system,23 the national decennial census,24,25 and other routine NHS data such as hospital characteristics,26,27 hospital staffing levels, and general practitioner distribution over England.28 For 51 hospitals, the results of a patient centred survey were available.29

The hospital episode statistics database from 1991-2 to 1994-5 includes information on every inpatient spell in NHS hospitals in England. Each spell includes the following information: patient’s age, sex, postcode of residence, primary diagnosis and up to six additional subdiagnoses coded with the International Classification of Diseases, Ninth Revision (ICD-9), type of admission (emergency or elective), and length of stay.

We obtained census data from 1991 at the level of the 8595 English electoral wards (average population 5500 residents), which provided a range of socioeconomic indicators30–33 and the percentage of people with self reported, limiting, longstanding illness. The census data also contained information about the NHS facilities, hospices, and local authority or nursing home places available within each area.

We used other data sources, many routinely published by the NHS, such as numbers of hospital beds and indicators of staffing levels of hospital doctors and nurses and general practitioners per head of population.

Data extraction

NHS hospitals vary greatly in their size and purpose. Our goal was to compare roughly similar facilities, and we therefore selected the data using criteria based on type and size as well as on the quality of the data recorded in the hospital episode statistics database.

We looked at four years of data, from 1991-2 to 1994-5. We excluded community and specialty institutions, small hospitals (under 9000 admissions during the four years), and hospitals without accident and emergency units. We also excluded any hospital that had poor quality data (more than 30% of inpatient episodes without a valid discharge or more than 30% of primary diagnoses recorded as “unknown”) for at least one of the four years. We included discharge records only, that is, episodes which ended in discharge (alive or dead) from the hospital rather than in transfer to the care of another consultant within the hospital. In this paper, we use the terms admission and discharge to refer to the same outcome measure, namely the number of hospital discharges, alive or dead; the term hospital refers to hospital trusts, which may occupy more than one site.
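The exclusion rules above can be expressed as a simple filter. This is a minimal sketch under assumptions: the `Hospital` and `HospitalYear` records and their field names are hypothetical per-hospital summaries, not fields of the hospital episode statistics database, and the data-quality percentages are assumed to have been precomputed for each hospital and year.

```python
from dataclasses import dataclass


@dataclass
class HospitalYear:
    # Hypothetical per-year data-quality summary for one hospital
    pct_invalid_discharge: float  # % of inpatient episodes without a valid discharge
    pct_unknown_diagnosis: float  # % of primary diagnoses recorded as "unknown"


@dataclass
class Hospital:
    name: str
    is_acute_general: bool        # False for community and specialty institutions
    has_a_and_e: bool             # has an accident and emergency unit
    admissions_four_years: int
    years: list[HospitalYear]


def is_eligible(h: Hospital) -> bool:
    """Apply the exclusion criteria described in the text."""
    if not h.is_acute_general or not h.has_a_and_e:
        return False
    if h.admissions_four_years < 9000:  # "small" = under 9000 admissions in four years
        return False
    # Poor quality data (more than 30%) in any single year excludes the hospital
    return all(
        y.pct_invalid_discharge <= 30 and y.pct_unknown_diagnosis <= 30
        for y in h.years
    )
```

For example, a hospital with 12 000 admissions, an accident and emergency unit, and 31% invalid discharges in one of its four years would be rejected.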

Discharges were included in the analysis if the primary diagnosis was one of 85 primary diagnoses which accounted for 80% of deaths. We eliminated from the analyses all transfers between hospitals (2% of admissions and 3% of discharges). Data on deaths outside hospital were unavailable, so it was difficult to take account of differences in discharge practices that could affect comparisons of inpatient mortality. To address this, we recorded the availability of other NHS resources within each hospital health authority area, selected patients by lengths of stay of less than 14, 21, or 28 days, and used length of stay as a possible explanatory variable.
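The text does not say exactly how the 85 diagnoses were chosen. One plausible reading, assumed here, is to rank primary diagnoses by their number of deaths and take them in descending order until their cumulative deaths reach 80% of the total. The function name and the input layout (a mapping from diagnosis code to deaths) are hypothetical.

```python
def diagnoses_covering(deaths_by_diagnosis: dict[str, int], share: float = 0.80) -> list[str]:
    """Return diagnoses, in descending order of deaths, until their cumulative
    deaths reach `share` of all deaths (an assumed selection rule)."""
    total = sum(deaths_by_diagnosis.values())
    ranked = sorted(deaths_by_diagnosis.items(), key=lambda kv: kv[1], reverse=True)
    selected: list[str] = []
    running = 0
    for code, deaths in ranked:
        if running >= share * total:
            break  # the 80% threshold has already been reached
        selected.append(code)
        running += deaths
    return selected
```

Taking diagnoses greedily by death count gives the smallest number of diagnoses that reaches the threshold.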

Measures of coexisting illness

Several studies stress the importance of adjusting for severity of illness in hospital admissions when comparing quality of health care.34–41 Since hospital statistics of inpatient episodes do not include detailed data on clinical severity, in addition to standardising for primary diagnosis, we calculated several measures of comorbidity based on discharge diagnoses for each hospital: the number of bodily systems affected by disease, the percentage of patient admissions with one of the 15 most serious primary diagnoses (responsible for 50% of all deaths), and the percentage both of cases and of deaths with comorbidities (that is, subdiagnoses) in each of the 85 diagnoses that led to 80% of all deaths. We ranked subdiagnoses by their univariable correlation with hospital standardised mortality ratios and created a measure of comorbidity by combining the top two or three comorbidity diagnoses. We used each of these measures in our model as independent estimates of the severity of illness treated.

Analysis

Because initial findings suggested that the percentage of emergency admissions was the strongest predictor of hospital standardised mortality ratios, we built two models: the first (model A) included all admissions (both emergency and elective), and the second (model B) looked at mortality for emergency admissions only.

We conducted weighted multiple linear regressions that took account of the varying hospital volumes of cases (weights were defined as the reciprocal of the standard error squared, where standard errors were derived using the normal approximation to a Poisson distribution of observed deaths). Each potential explanatory variable was first used separately in a univariable regression model, and then multivariable analyses were performed. Backwards and forwards stepwise selection techniques were used, with a significance level of P=0.01. We report the adjusted R², the proportion of variation explained by the model after adjustment for the number of variables in the model.
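A minimal sketch of the weighting and the adjusted R² in NumPy follows. It assumes that the standard error of a hospital's ratio, 100·O/E with O observed and E expected deaths, is approximated as 100·√O/E, so each weight is 1/SE². The authors' software and exact formula are not stated, and the stepwise selection itself is omitted.

```python
import numpy as np


def hsmr_weights(observed: np.ndarray, expected: np.ndarray) -> np.ndarray:
    """Weights = 1/SE^2, assuming SE(HSMR) = 100*sqrt(O)/E from a normal
    approximation to a Poisson count of observed deaths O."""
    se = 100 * np.sqrt(observed) / expected
    return 1.0 / se**2


def weighted_ols(X: np.ndarray, y: np.ndarray, w: np.ndarray):
    """Weighted least squares with an intercept; returns (coefficients, adjusted R^2)."""
    n, p = X.shape
    A = np.column_stack([np.ones(n), X])  # prepend intercept column
    sw = np.sqrt(w)
    # Scaling rows by sqrt(w) turns WLS into ordinary least squares
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    resid = y - A @ beta
    y_bar = np.average(y, weights=w)      # weighted mean of the outcome
    r2 = 1 - np.sum(w * resid**2) / np.sum(w * (y - y_bar) ** 2)
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - p - 1)
    return beta, adj_r2
```

Hospitals with more expected deaths get smaller standard errors and hence more influence on the fit, which is the intent of weighting by case volume.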

The residuals were checked with standard diagnostic methods and were found to be satisfactory.42 The stability of the final model was checked by repeating the fitting procedure after removing observations with high influence. Fractional polynomials were also used to check for curvature in the explanatory variables, and no curvature was found.43

Dependent variable—Our dependent variable was the hospital indirectly standardised mortality ratio, which is defined as the ratio of actual number of deaths to expected deaths multiplied by 100. We calculated death rates for the four years studied stratified by age (using 10 year age groups), sex, and the 85 primary diagnoses. These were used to calculate the expected deaths for each hospital by multiplying the number of hospital inpatient admissions in each stratum of age, sex, and primary diagnosis by the stratum specific rates. We also calculated hospital standardised mortality ratios using direct standardisation, which produced similar results to those from indirect methods.
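Indirect standardisation can be sketched as follows: death rates r_s are computed for each stratum s (age group, sex, primary diagnosis) across all hospitals, a hospital's expected deaths are E_h = Σ_s n_hs·r_s, and HSMR_h = 100·O_h/E_h. The record layout `(hospital, stratum, died)` is hypothetical, and the reference population here is simply all records supplied.

```python
from collections import defaultdict


def hsmr_indirect(records):
    """records: iterable of (hospital, stratum, died) for each discharge,
    where stratum is e.g. (age group, sex, primary diagnosis) and died is 0/1."""
    records = list(records)
    adm = defaultdict(int)  # reference admissions per stratum
    dth = defaultdict(int)  # reference deaths per stratum
    for _, stratum, died in records:
        adm[stratum] += 1
        dth[stratum] += died
    rate = {s: dth[s] / adm[s] for s in adm}  # stratum-specific death rates

    observed = defaultdict(int)
    expected = defaultdict(float)
    for hosp, stratum, died in records:
        observed[hosp] += died
        expected[hosp] += rate[stratum]  # summing over admissions gives n_hs * r_s
    return {h: 100 * observed[h] / expected[h] for h in observed}
```

Because the reference rates come from the pooled records, total observed deaths equal total expected deaths across all hospitals, so the overall ratio is 100 by construction.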

Independent variables—The Appendix lists each of the independent variables considered in a univariable analysis. Three types of variables were used: aggregated discharge data such as the percentage of emergency cases, individual hospital data such as total number of beds, and community attributed data such as the percentage of patients with limiting longstanding illness. Aggregated discharge data were taken from the individual discharge records and aggregated across each hospital. Community data were taken from geographical areas (1991 electoral wards and 1995 health authorities), attributed to each discharge from the patient's area of residence (via postcode), and then averaged across discharges for each hospital.
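The attribution of community data can be sketched as a postcode lookup followed by a per-hospital average. The lookup tables and function name are hypothetical, and skipping discharges whose postcode cannot be matched to a ward is an assumption; the text does not say how unmatched postcodes were handled.

```python
from collections import defaultdict


def attribute_community_value(discharges, postcode_to_ward, ward_value):
    """Average an area-level attribute over each hospital's discharges.

    discharges: iterable of (hospital, postcode) pairs
    postcode_to_ward: postcode -> 1991 electoral ward code (hypothetical lookup)
    ward_value: ward code -> attribute, e.g. % with limiting longstanding illness
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for hosp, postcode in discharges:
        ward = postcode_to_ward.get(postcode)
        if ward is None or ward not in ward_value:
            continue  # assumption: unmatched postcodes are skipped
        totals[hosp] += ward_value[ward]
        counts[hosp] += 1
    return {h: totals[h] / counts[h] for h in totals}
```

Averaging over discharges rather than over wards means that wards sending more patients to a hospital carry more weight in its community score.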

Results

Descriptive statistics

We retained 183 acute general hospital trusts for analysis, roughly two hospitals per health authority in England. Over the four year study period, 7.7 million admissions were considered, of which 60% were classified as emergencies, accounting for 93% of all deaths considered (table 1). These 183 hospitals covered 85% of all admissions (88% of emergency admissions) for the 85 diagnoses in the hospital episode statistics data for England.

Table 1.

Descriptive statistics for admissions to the 183 study hospitals during 1991-2 to 1994-5

| | 1991-2 | 1992-3 | 1993-4 | 1994-5 | All |
|---|---|---|---|---|---|
| All cases (emergency and elective admissions) | | | | | |
| No of admissions: total | 1 773 598 | 1 858 320 | 1 996 160 | 2 025 449 | 7 653 527 |
| No of admissions: mean (SD) | 9 692 (4 235) | 10 155 (4 422) | 10 908 (4 982) | 11 068 (5 359) | 41 823 (18 547) |
| Total No of deaths | 157 083 | 154 500 | 162 348 | 150 200 | 624 131 |
| Mean (SD) crude death rate (%) | 9.2 (2.3) | 8.6 (2.0) | 8.4 (2.0) | 7.6 (1.9) | 8.5 (1.9) |
| Mean (SD) HSMR | 104.9 (14.7) | 101.1 (14.2) | 100.4 (14.6) | 97.0 (13.2) | 100.8 (13.0) |
| Emergencies (%) | 61 | 60 | 60 | 58 | 60 |
| Emergency admissions only | | | | | |
| No of admissions: total | 1 076 647 | 1 121 077 | 1 197 893 | 1 173 430 | 4 569 047 |
| No of admissions: mean (SD) | 5 883 (2 315) | 6 126 (2 317) | 6 546 (2 571) | 6 412 (2 690) | 24 967 (9 578) |
| Total No of deaths | 143 590 | 142 807 | 151 611 | 140 616 | 578 624 |
| Mean (SD) crude death rate (%) | 13.5 (2.6) | 12.9 (2.4) | 12.8 (2.4) | 12.0 (2.4) | 12.8 (2.2) |
| Mean (SD) HSMR | 102.6 (11.3) | 99.8 (11.1) | 99.9 (11.8) | 98.1 (10.7) | 100.1 (9.9) |

HSMR=hospital standardised mortality ratios.

Crude death rates varied between hospitals from 3.4% to 13.6%, with a mean mortality of 8.5%. The mean annual mortality fell from 9.2% to 7.6% over the four years. When annual death rates were standardised by age, sex, and primary diagnosis, the mean hospital standardised mortality ratio fell from 104.9 to 97.0 (an average annual fall of 2.6%).
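The 2.6% figure is consistent with a compound average over the three annual steps from 1991-2 to 1994-5, as this short check shows. The text does not state which averaging method was used, so the compound formula is an assumption, and the helper name is hypothetical.

```python
def average_annual_change(start: float, end: float, years: int) -> float:
    """Compound average annual percentage change between two values."""
    return 100 * ((end / start) ** (1 / years) - 1)


# Mean HSMR fell from 104.9 (1991-2) to 97.0 (1994-5): three annual steps
print(round(average_annual_change(104.9, 97.0, 3), 1))  # -2.6
```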

Regression analyses

Length of stay proved not to be significant, so table 2 shows results for all lengths of stay only: the predictors associated with hospital standardised mortality ratios at the 1% significance level, with their regression coefficients. Table 3 shows the univariable associations for these predictors.

Table 2.

Results of stepwise regression analyses: variables associated with hospital standardised mortality ratios at 1% significance level and tabulated in order of selection.

| Variable | Regression coefficient (95% CI)* | P value | Mean (SD) |
|---|---|---|---|
| Model A: all cases (emergencies and electives) in 183 hospitals (adjusted R²=0.65) | | | |
| Percentage of cases admitted as emergency | 0.58 (0.41 to 0.75) | <0.001 | 61.4 (8.5) |
| No of hospital doctors per 100 hospital beds in 1994-5 | −0.47 (−0.64 to −0.30) | <0.001 | 25.4 (8.0) |
| No of general practitioners per 100 000 population† | −0.67 (−1.05 to −0.30) | <0.001 | 54.6 (3.4) |
| Standardised admissions ratio‡ | −0.15 (−0.23 to −0.06) | 0.001 | 107 (14.4) |
| Percentage of live discharges to home | 1.61 (0.71 to 2.50) | 0.001 | 98.0 (1.5) |
| Percentage of cases with comorbidity§ | 1.51 (0.47 to 2.55) | 0.005 | 4.2 (1.4) |
| NHS facilities per 100 000 population‡ | −1.12 (−1.92 to −0.32) | 0.007 | 3.43 (1.6) |
| Model B: emergencies only in 183 hospitals (adjusted R²=0.50) | | | |
| No of hospital doctors per 100 hospital beds in 1994-5 | −0.51 (−0.65 to −0.38) | <0.001 | 25.4 (8.0) |
| Percentage of cases with comorbidity¶ | 4.51 (3.11 to 5.92) | <0.001 | 1.7 (0.8) |
| Percentage of live discharges to home | 1.24 (0.74 to 1.73) | <0.001 | 97.0 (2.3) |
| NHS facilities per 100 000 population‡ | −1.62 (−2.27 to −0.97) | <0.001 | 3.4 (1.6) |
| Standardised admissions ratio‡ | −0.12 (−0.19 to −0.04) | 0.003 | 107 (14.4) |

* Coefficients represent the expected change in hospital standardised mortality ratios for a given change in the associated variable. For example, an increase of 10% in numbers of hospital doctors would increase the average doctor:bed ratio (doctors per 100 beds) by 2.54, from 25.4 to 27.94. From model A, this increase would be expected to lower hospital standardised mortality ratios by 1.19 (2.54 × −0.47 = −1.19).

† According to Office of National Statistics.

‡ For health authority where hospital located.

§ Percentage comorbidity of bronchopneumonia or heart failure or fracture of neck of femur.

¶ Percentage comorbidity of bronchopneumonia or malignant neoplasm.
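The worked example in the first footnote to table 2 can be reproduced with a few lines of arithmetic. The helper name is hypothetical, and the calculation assumes, as a linear model does, that all other predictors are held constant.

```python
def predicted_hsmr_change(coef: float, baseline: float, pct_increase: float) -> float:
    """Change in HSMR implied by a linear coefficient when a predictor with
    mean value `baseline` rises by `pct_increase` per cent."""
    delta_x = baseline * pct_increase / 100  # absolute change in the predictor
    return coef * delta_x


# Model A: doctors per 100 beds, mean 25.4, coefficient -0.47; a 10% rise
print(round(predicted_hsmr_change(-0.47, 25.4, 10), 2))  # -1.19
```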

Table 3.

Univariable associations between hospital standardised mortality ratios and variables used in models A and B of regression analyses

| Variables | Regression coefficient (95% CI) |
|---|---|
| Model A | |
| Percentage of cases admitted as emergency | 1.01 (0.86 to 1.17) |
| No of hospital doctors per 100 hospital beds in 1994-5 | −0.90 (−1.09 to −0.71) |
| No of general practitioners per 100 000 population* | −1.53 (−2.05 to −1.0) |
| Standardised admissions ratio† | −0.18 (−0.31 to −0.05) |
| Percentage of live discharges to home | −0.61 (−2.02 to 0.81) |
| Percentage of cases with comorbidity‡ | 4.89 (3.62 to 6.15) |
| NHS facilities per 100 000 population† | −2.92 (−4.02 to −1.81) |
| Model B | |
| No of hospital doctors per 100 hospital beds in 1994-5 | −0.57 (−0.73 to −0.42) |
| Percentage of cases with comorbidity§ | 5.71 (4.06 to 7.34) |
| Percentage of live discharges to home | 0.58 (−0.09 to 1.26) |
| NHS facilities per 100 000 population† | −1.99 (−2.83 to −1.16) |
| Standardised admissions ratio† | −0.11 (−0.21 to −0.01) |

* According to Office of National Statistics.

† For health authority where hospital located.

‡ Percentage comorbidity of bronchopneumonia or heart failure or fracture of neck of femur.

§ Percentage comorbidity of bronchopneumonia or malignant neoplasm.

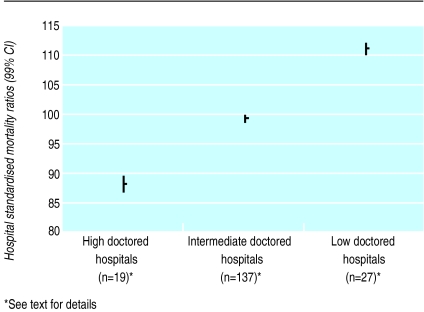

For model A, based on emergency and elective admissions, the adjusted R² was 0.65. For model B, based on emergency admissions only, the adjusted R² was 0.50. In model A, after adjustment for the percentage of emergency admissions, the best predictors of hospital mortality were the numbers of hospital doctors per 100 hospital beds and of general practitioners per 100 000 population. The figure displays hospital standardised mortality ratios for three groups of hospitals and areas: low doctored (numbers of doctors per bed and of general practitioners per head of population both at least ½ SD below the mean), with a mean hospital standardised mortality ratio of 112; high doctored (doctors per bed and general practitioners per head of population both more than ½ SD above the mean), with a mean ratio of 88; and intermediate hospitals, with a mean ratio of 99.
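The grouping used in the figure can be sketched as a three-way classification. The function name is hypothetical; the means and SDs in the usage example are those in table 2, and whether the authors used the sample or population SD is not stated.

```python
def doctoring_group(doc_per_bed: float, gp_per_pop: float,
                    doc_stats: tuple[float, float], gp_stats: tuple[float, float]) -> str:
    """Classify a hospital and its area as 'low', 'high' or 'intermediate' doctored.

    doc_stats and gp_stats are (mean, SD) across all hospitals.
    """
    (dm, dsd), (gm, gsd) = doc_stats, gp_stats
    # Low: both ratios at least half an SD below their means
    if doc_per_bed <= dm - 0.5 * dsd and gp_per_pop <= gm - 0.5 * gsd:
        return "low"
    # High: both ratios more than half an SD above their means
    if doc_per_bed > dm + 0.5 * dsd and gp_per_pop > gm + 0.5 * gsd:
        return "high"
    return "intermediate"


# Table 2 means (SD): doctors per 100 beds 25.4 (8.0); GPs per 100 000 54.6 (3.4)
print(doctoring_group(20.0, 52.0, (25.4, 8.0), (54.6, 3.4)))
```

Requiring both ratios to meet the threshold means a hospital that is well staffed but sits in an area short of general practitioners falls into the intermediate group.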

In model A, higher hospital standardised mortality ratios were associated with higher percentages of emergency admissions, lower numbers of hospital doctors per hospital bed, and lower numbers of general practitioners per head of population. The numbers of hospital doctors of different grades were also considered as explanatory variables, but total doctors per bed was found to be the best predictor. Higher hospital standardised mortality ratios were also associated with four other factors: lower standardised admissions ratios for the health authority where the hospital was located; higher percentages of live discharges who went home (that is, non-death discharges to normal residence); higher percentages of cases with comorbidity of bronchopneumonia, heart failure, or fracture of neck of femur; and lower availability of NHS facilities per 100 000 population for the health authority where the hospital was located. At the 5% level of significance, only one other predictor entered the model: possession of a specialist renal unit, which was associated with lower hospital standardised mortality ratios. At the 0.1% level, only the proportion of emergency admissions, numbers of hospital doctors per bed, and numbers of general practitioners per head of population were significant.

For model B, the percentage of cases with comorbidity of bronchopneumonia or malignant neoplasm was a significant predictor, and the number of general practitioners per 100 000 population was no longer significant. At the 5% level of significance, two further variables entered the model: the proportion of grade A nurses (auxiliary nurses in training) among all hospital nurses, and bed occupancy. High percentages of grade A nurses and high bed occupancy were associated with higher hospital standardised mortality ratios.

By removing the effect of factors beyond the direct control of the hospital (that is, all predictors except doctors per bed), it is possible to calculate a hospital standardised mortality ratio that is likely to be a more valid measure of hospital quality of care. When we did this, the range of resulting hospital standardised mortality ratios narrowed to 79-125.
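The text does not give the formula used for this adjustment. One simple approach, assumed here purely for illustration, is to subtract from each hospital's ratio the fitted contribution of every external predictor, measured from that predictor's mean across hospitals. The function and variable names are hypothetical, and the usage example uses only two of the external predictors with invented hospital values.

```python
def externally_adjusted_hsmr(hsmr: float, x: dict[str, float], means: dict[str, float],
                             coefs: dict[str, float], external: list[str]) -> float:
    """Remove the fitted contribution of predictors outside hospital control
    (an assumed method, not necessarily the authors').

    x: this hospital's predictor values; means: means across hospitals;
    coefs: regression coefficients; external: predictors to adjust away.
    """
    shift = sum(coefs[k] * (x[k] - means[k]) for k in external)
    return hsmr - shift


# Hypothetical hospital: 70% emergencies, 51.2 GPs per 100 000, HSMR 115
x = {"emergency_pct": 70.0, "gp_per_100k": 51.2}
means = {"emergency_pct": 61.4, "gp_per_100k": 54.6}    # table 2 means
coefs = {"emergency_pct": 0.58, "gp_per_100k": -0.67}   # table 2, model A
adjusted = externally_adjusted_hsmr(115.0, x, means, coefs, list(x))
```

A hospital with more emergencies and fewer local general practitioners than average has part of its excess ratio attributed to those factors, which is why the range of adjusted ratios is narrower.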

Discussion

We have calculated hospital death ratios adjusted for age, sex, and diagnosis and looked at their association with factors likely, on clinical grounds, to be associated with quality of care. We focused on factors in the hospital and in the community surrounding the hospital that took account of financial and human resources, such as the number of doctors and nurses per hospital bed and the number of general practitioners per head of the population from which hospital admissions were drawn.

Implications of results

The overall standardised death ratio in the 183 hospitals studied decreased on average by 2.6% a year between 1991-2 and 1994-5, but the variation between hospitals remained large. The association we found between lower numbers of general practitioners per head of population and higher death rates has several possible explanations. When general practitioners are relatively overworked, the patients whom they send to hospital may be relatively sicker, and in these areas patients are more likely to be admitted as emergencies: a high percentage of emergency admissions was significantly correlated with low numbers of general practitioners per 100 000 population, that is, with high average list size (r=−0.35, P<0.001). In model A of our regression analysis, a reduction of 5000 hospital deaths per year was associated with a 27% increase in hospital doctors (9000 more doctors) or an 8.7% increase in general practitioners (2300 more general practitioners). In other words, our results suggest that a 1% increase in the number of hospital doctors per bed (333 more hospital doctors if the number of beds remains unchanged) is associated with a 0.119% decrease in hospital standardised mortality ratios (186 fewer deaths), and that a 1% increase in general practitioners per head of population (267 more general practitioners if the population is unchanged) is associated with a 0.368% decrease in hospital standardised mortality ratios (575 fewer deaths).
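The arithmetic behind these figures can be approximately reproduced from the rounded coefficients and means in table 2 and the four year death total in table 1. This sketch assumes that annual deaths are the four year total divided by four and that a fall in the ratio (mean about 100) translates proportionally into deaths. With the rounded general practitioner coefficient it gives about 571 deaths and an 8.8% increase rather than the 575 and 8.7% quoted, presumably because unrounded coefficients were used in the original calculation.

```python
# Inputs from table 1 and table 2 (model A)
deaths_per_year = 624_131 / 4                 # assumed: four year total / 4
doctor_ratio_mean, doctor_coef = 25.4, -0.47  # doctors per 100 beds
gp_ratio_mean, gp_coef = 54.6, -0.67          # GPs per 100 000 population


def deaths_averted_per_1pct(ratio_mean: float, coef: float) -> float:
    """Deaths per year averted by a 1% rise in a staffing ratio, treating the
    fall in HSMR (mean about 100) as a proportional fall in deaths."""
    hsmr_change = coef * ratio_mean * 0.01    # HSMR points per 1% increase
    return -hsmr_change / 100 * deaths_per_year


doc = deaths_averted_per_1pct(doctor_ratio_mean, doctor_coef)  # about 186
gp = deaths_averted_per_1pct(gp_ratio_mean, gp_coef)           # about 571
# Percentage rise needed in each ratio for 5000 fewer deaths a year
print(round(doc), round(5000 / doc, 1))
print(round(gp), round(5000 / gp, 1))
```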

In discussing risk and safety in hospital practice, Vincent puts heavy clinical workloads at the top of a list of conditions in which unsafe acts may occur.44 Compared with other countries in the Organisation for Economic Cooperation and Development (OECD), the United Kingdom has a low number of physicians per head of population,45 although it is planned to change this (Department of Health press release 98/337, 14 August 1998). In 1994 the United Kingdom had 1.6 physicians per 1000 population, which is more than one standard deviation below the mean of 2.7 for the 28 countries recorded by the OECD, the UK average amounting to only 59% of the OECD average for that year.

A higher percentage of live discharges to patients’ homes was also associated with higher hospital standardised mortality ratios. This probably reflects the fact that, where there are more NHS facilities, hospices, and local authority or nursing home places available, patients are more likely to be discharged to one of these for recovery and any deaths that follow would not be in hospital. The number of NHS facilities per head of population in the district surrounding the hospital is also a good predictor—the more facilities, the lower the hospital standardised mortality ratio. This effect may be similar to that of non-home discharges—that is, where these facilities do not exist patients are more likely to remain in hospital to die.

The age standardised admission ratio was also an important predictor, with higher admission rates being associated with lower mortality ratios—possibly indicating that some hospitals may admit relatively higher percentages of less sick patients because they have lower thresholds for admission.

At the 5% level of significance, hospitals with a specialist renal unit had lower hospital standardised mortality ratios—possession of a renal unit possibly being a marker of the quality of hospital care generally. Measures of social deprivation of the area of residence were not significantly related to mortality ratios. However, the percentage of hospital nurses graded A (the lowest grade, which indicates auxiliary nurses in training) was associated with higher hospital standardised mortality ratios: this result further reinforces the relation between staffing factors and outcomes.

Contrary to recent US data,46 teaching hospital status was significant at the univariable level, but, once adjusted for doctor:bed ratio in the multivariable regression, proved not to be significant. University teaching hospitals had 56% higher doctor:bed ratios than non-teaching hospitals (mean values 0.378 v 0.243 respectively).

Considerable care should be exercised in interpreting hospital mortality data. In view of the literature on case mix,9,13,16,34,36,47 it is surprising that only one of our measures of comorbidity was significant in the model (table 3), and this might be related to the lack of data on severity of illness. Data for individual hospitals could prove useful, especially if broken down by individual diagnoses or specialties, provided that the number of cases is sufficient to give narrow confidence intervals and the data adjustments described can be made.48–51 Results could prompt hospitals with high standardised mortality ratios to examine their care processes and staff ratios.

Future studies

We have found an association between mortality rates and doctor numbers (in hospital and in general practice). We know of no studies that have looked at this association before, and our findings need to be validated by further investigations. A matched pair study of patients admitted to hospitals with high and low standardised mortality ratios could help to elucidate these findings. In such an investigation detailed data would have to be collected to allow for accurate adjustment of case mix.

Most of the significant predictors in our two models are outside the direct influence of hospital policy (except doctor numbers per bed), and adjustment for these external factors narrows the range of mortality ratios. This finding indicates that variation in quality of hospital care is smaller than incompletely adjusted statistics suggest, and that our model may be used to produce more valid indicators of quality of care.

Figure.

Hospital standardised mortality ratios (with 99% confidence intervals) for hospitals and areas with low, medium, and high staffing levels of doctors

Acknowledgments

We thank Professor John Henry and Dr Paul Aylin for reading the paper, Debbie Hart for data preparation, and the BMJ’s referees for their comments.

Appendix: Independent variables included in univariable analysis for each hospital showing those used in the regression analysis

Aggregate discharge data

Percentage of emergency cases*

Percentage of live discharges who went home

Percentage of cases and deaths with comorbidity (subdiagnoses) of the 85 diagnoses leading to 80% of all deaths and combinations of those with the highest correlations with hospital standardised mortality ratios*

Percentage of cases and deaths with each of the top 15 diagnoses which account for 50% of all deaths*

Percentage of cases with comorbidity (subdiagnoses) of the two or three conditions most highly correlated with hospital mortality*

Average number of diseased bodily systems

Standardised admissions ratio for health authority where hospital located*

Average length of stay

Number of cases

Hospital data

Hospital doctors per bed* and per case

Percentage of nurses at grades A* to I, nurses per doctor and per bed

Number of hospital beds

Percentage of geriatric beds

Bed occupancy*

Location—inner London,* outer London,* or outside London

University teaching,* non-university teaching, other general hospital

Provision of a range of specialist units

Hospital income per bed and per case

Total and first accident and emergency attendances

Hospital charter standards (including waiting times)

Results of survey of patient centred care (51 hospitals only)

Community attributed data†

General practitioners per 100 000 population according to Office of National Statistics (based on health authority of patient residence,* individual data averaged at health authority of residence level)

General practice nurses per 1000 population according to Office of National Statistics in hospital local health authority

NHS facilities per 100 000 population in hospital local health authority*

Underprivileged area score* (individual data averaged at electoral ward of residence level)

Percentage of patients with various social factors: elderly living alone, children aged under 5, one parent families,* social class V, unemployed, overcrowded accommodation, mobility,* ethnic minority (individual data averaged at electoral ward of residence level)

Percentage of patients with limiting longstanding illness (individual data averaged at electoral ward of residence level)

Provision of nursing homes and residential care homes in hospital local health authority area

Footnotes

Funding: None

Competing interest: Professor Jarman is the medical member of the Bristol Royal Infirmary inquiry, but this research was completed before he was appointed on 26 January 1999.

* Variables with high adjusted R² from univariable regression entered into multivariable regression models

† Based on electoral ward of patient residence and averaged for all admissions (aggregate health authority of hospital data except where stated)

References

- 1.Nightingale F. Notes on hospitals. 3rd ed. London: Longman Green; 1863. [Google Scholar]

- 2.Buckle F. Vital and economical statistics of the hospitals, infirmaries, etc of England and Wales for the year 1863. London: Churchill; 1865. [Google Scholar]

- 3.National Confidential Enquiry into Perioperative Deaths (NCEPOD) Report 1992. London: NCEPOD; 1992. [Google Scholar]

- 4.Jarman B, Lang H, Ruggles R, Wallace M, Gault S, Astin P. The contribution of London’s academic medicine to healthcare and the economy – report commissioned by the deans of the medical schools of the University of London. London: University of London; 1997. [Google Scholar]

- 5.Commission on Professional Hospital Activities. Risk adjusted hospital mortality norms 1986 workbook. Ann Arbor, MI: CPHA; 1987. [Google Scholar]

- 6.DuBois RW, Rogers WH, Moxley JH, Draper D, Brook RH. Hospital inpatient mortality: is it a predictor of quality? N Engl J Med. 1987;317:1674–1680. doi: 10.1056/NEJM198712243172626. [DOI] [PubMed] [Google Scholar]

- 7.Fottler DM, Slovensky DJ, Rogers SJ. Public release of hospital specific death rates, guidelines for health care executives. Hosp Health Serv Administration. 1987;32(3):343–356. [PubMed] [Google Scholar]

- 8.Blumberg MS. Comments on HCFA hospital death rate statistical outliers. Health Serv Res. 1987;21:715–739. [PMC free article] [PubMed] [Google Scholar]

- 9.Thomas JW, Hofer TP. Research evidence on the validity of risk-adjusted mortality rate as a measure of hospital quality of care. Med Care Res Rev. 1998;55:371–404. doi: 10.1177/107755879805500401. [DOI] [PubMed] [Google Scholar]

- 10.Chassin MR, Park RE, Lohr KN, Keesey J, Brook RH. Differences among hospitals in Medicare patient mortality. Health Serv Res. 1989;24:1–31. [PMC free article] [PubMed] [Google Scholar]

- 11.Bradbury RC, Stearns FE, Steen PM. Interhospital variations in admission severity—adjusted hospital mortality and morbidity. Health Serv Res. 1991;26:407–424. [PMC free article] [PubMed] [Google Scholar]

- 12.Al-Haider AS, Wan TTH. Modelling organizational determinants of hospital mortality. Health Serv Res. 1991;26:303–323. [PMC free article] [PubMed] [Google Scholar]

- 13. Park RE, Brook RH, Kosecoff J, Keesey J, Rubenstein L, Keeler E. Explaining variations in hospital death rates: randomness, severity of illness, quality of care. JAMA. 1990;264:484–490.

- 14. Iezzoni LI, Ash AS, Shwartz M, Daley J, Hughes JS, Mackiernan YD. Judging hospitals by severity-adjusted mortality rates: the influence of the severity-adjustment method. Am J Public Health. 1996;86:1379–1387. doi: 10.2105/ajph.86.10.1379.

- 15. Iezzoni LI. The risks of risk adjustment. JAMA. 1997;278:1600–1607. doi: 10.1001/jama.278.19.1600.

- 16. Goss MEW, Read JI. Evaluating the quality of hospital care through severity adjusted death rates: some pitfalls. Med Care. 1974;12:202. doi: 10.1097/00005650-197403000-00002.

- 17. Thomas JW, Holloway JJ, Guire KE. Validating risk-adjusted mortality as an indicator for quality of care. Inquiry. 1993;30:6–22.

- 18. Hartz AJ, Gottlieb MS, Kuhn EM, Rimm AA. The relationship between adjusted hospital mortality and the results of peer review. Health Serv Res. 1993;27:765–777.

- 19. Lipworth L, Lee JAH, Morris JN. Case fatality in teaching and nonteaching hospitals, 1956-1959. Lancet. 1963;i:71.

- 20. Palmer RH, Reilly MC. Individual and institutional variables which may serve as indicators of quality of medical care. Med Care. 1979;17:693–717. doi: 10.1097/00005650-197907000-00001.

- 21. Luft HS, Hunt SS. Evaluation of individual hospital quality through outcome statistics. JAMA. 1986;255:2780.

- 22. Hartz AJ, Krakauer H, Kuhn EM, Young M, Jacobsen SJ, Greer G. Hospital characteristics and mortality rates. N Engl J Med. 1989;321:1720–1725. doi: 10.1056/NEJM198912213212506.

- 23. Department of Health. Hospital episode statistics. England: financial years 1991-92, 1992-93, 1993-94 and 1994-95. London: DoH; 1993-1996.

- 24. Office of Population Censuses and Surveys. 1991 census definitions. Great Britain. London: HMSO; 1992.

- 25. Dale A, Marsh C, editors. The 1991 census users guide. London: HMSO; 1993.

- 26. NHS Executive. Hospital and ambulance services: the patient’s charter comparative performance guide 1993-1994. London: Department of Health; 1995.

- 27. Department of Health. Waiting times for first outpatient appointments in England: quarter ended 30 September 1994. Statistical bulletin 1995/3. London: DoH; 1995.

- 28. Department of Health. GMS (general medical services) GMP (general medical practitioner) census. London: DoH; 1997.

- 29. Bruster S, Jarman B, Bosanquet N, Weston D, Erens R, Delbanco TL. The patient’s view: a national survey of hospital patients. BMJ. 1994;309:1542–1546. doi: 10.1136/bmj.309.6968.1542.

- 30. Jarman B. Underprivileged areas: validation and distribution of scores. BMJ. 1984;289:1587–1592. doi: 10.1136/bmj.289.6458.1587.

- 31. Townsend P, Phillimore P, Beattie A. Inequalities in health in the Northern region. Bristol: Northern RHA, University of Bristol; 1986.

- 32. Carstairs V, Morris R. Deprivation and health in Scotland. Aberdeen: Aberdeen University Press; 1991.

- 33. Office of Population Censuses and Surveys. Classification of occupations 1980. London: HMSO; 1980.

- 34. Greenfield S, Aronow HU, Elashoff RM, Watanabe D. Flaws in mortality data: the hazards of ignoring comorbid disease. JAMA. 1988;260:2253–2255.

- 35. Green J, Wintfeld N, Sharkey P, Passman LJ. The importance of severity of illness in assessing hospital mortality. JAMA. 1990;263:241–246.

- 36. Dicing with death rates [editorial]. Lancet. 1993;341:1183–1184.

- 37. Orchard C. Comparing health care outcomes. BMJ. 1994;308:1493–1496. doi: 10.1136/bmj.308.6942.1493.

- 38. McKee M, Hunter D. Mortality league tables: do they inform or mislead? Qual Health Care. 1995;4:5–12. doi: 10.1136/qshc.4.1.5.

- 39. Goldfarb MG, Coffey RM. Case mix differences between teaching and non-teaching hospitals. Inquiry. 1987;24:68–84.

- 40. Charlson ME, Pompei P, Ales KL, MacKenzie CR. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J Chron Dis. 1987;40:373–383. doi: 10.1016/0021-9681(87)90171-8.

- 41. Orchard C. Measuring the effects of casemix on outcomes. J Eval Clin Pract. 1996;2:111–121. doi: 10.1111/j.1365-2753.1996.tb00035.x.

- 42. Royston JP. A simple method for evaluating the Shapiro-Francia W test for non-normality. Statistician. 1983;32:297–300.

- 43. Royston P, Altman DG. Regression using fractional polynomials of continuous covariates: parsimonious parametric modelling (with discussion). Appl Stat. 1994;43:429–467.

- 44. Vincent C. Framework for analysing risk and safety in clinical medicine. BMJ. 1998;316:1154–1157. doi: 10.1136/bmj.316.7138.1154.

- 45. OECD. Health data: a comparative analysis of 29 countries. Paris: OECD; 1998.

- 46. Kassirer JP. Hospitals, heal yourselves. N Engl J Med. 1999;340:309–310. doi: 10.1056/NEJM199901283400410.

- 47. Goldstein H, Spiegelhalter DJ. League tables and their limitations: statistical issues in comparisons of institutional performance (with discussion). J R Stat Soc A. 1996;159:385–443.

- 48. Roemer MI, Friedman JW. Doctors in hospitals: medical staff organization and hospital performance. Baltimore: Johns Hopkins University; 1971.

- 49. Selker HP. Systems for comparing actual and predicted mortality rates: characteristics to promote co-operation in improving hospital care. Ann Intern Med. 1993;118:820–822. doi: 10.7326/0003-4819-118-10-199305150-00010.

- 50. NHS Executive. ‘Faster access to modern treatment’: how NICE appraisal will work. London: Department of Health; 1999.

- 51. McKee M. Indicators of clinical performance. BMJ. 1997;315:142. doi: 10.1136/bmj.315.7101.142.