Abstract

Random dot motion (RDM) displays have emerged as one of the standard stimulus types employed in psychophysical and physiological studies of motion processing. RDMs are convenient because it is straightforward to manipulate the relative motion energy for a given motion direction, in addition to stimulus parameters such as speed, contrast, duration, density, and aperture. However, RDMs vary in their implementation details as widely as they are employed. As a result, it is often difficult to make direct comparisons across studies employing different RDM algorithms and parameters. Here, we systematically measure the ability of human subjects to estimate motion direction for four commonly used RDM algorithms under a range of parameters in order to understand how these algorithms compare in their perceptibility. We find that parametric and algorithmic differences can produce dramatically different performance. These effects, while surprising, can be understood in relation to pertinent neurophysiological data on the spatiotemporal displacement tuning properties of cells in area MT and on how this tuning changes with stimulus contrast and retinal eccentricity. These data provide a baseline against which different RDM algorithms can be compared, demonstrate a need to report RDM details clearly in the methods sections of papers, and pose new constraints and challenges for models of motion direction processing.

Keywords: Motion Processing, Random Dot Motion, Spatiotemporal Displacement Tuning, Luminance Contrast Effects, Direction Estimation

1. Introduction

The ability to perceive the direction of a moving object in the environment is an important visual function. Random dot motion (RDM) stimuli are standard inputs for probing motion perception, both because arbitrary amounts of relative motion energy in given directions and speeds can easily be manipulated and because, lacking coherent form cues, they target the dorsal visual pathway (the “where” stream). A typical RDM stimulus consists of a sequence of frames in which the dots move through space and time according to a particular algorithm, evoking direction and speed percepts at some level of coherence (i.e., motion strength). For example, in a 5% coherent motion display, 5% of the dots (signal dots) move in the signal direction from one frame to the next, while the other 95% (noise dots) move randomly. As one would expect, the higher the coherence, the easier it is to perceive the global motion direction.

Psychophysical and neurophysiological experiments based on RDM stimuli have helped us to understand mechanisms and principles underlying motion perception (Britten, Shadlen, Newsome, & Movshon, 1992), motion decision-making (Gold & Shadlen, 2007; Roitman & Shadlen, 2002), perceptual learning (Ball & Sekuler, 1982; Seitz & Watanabe, 2003; Watanabe, Nanez, Koyama, Mukai, Liederman, & Sasaki, 2002; Zohary, Celebrini, Britten, & Newsome, 1994), fine (Purushothaman & Bradley, 2005) and coarse (Britten, Newsome, Shadlen, Celebrini, & Movshon, 1996) direction discrimination, motion transparency (Bradley, Qian, & Andersen, 1995), motion working memory (Zaksas & Pasternak, 2006), and depth perception from motion (Nadler, Angelaki, & DeAngelis, 2008), among other issues. Even a cursory look at this extensive literature reveals that a large variety of RDM stimuli have been employed. As a result, it is often difficult to make direct comparisons across these studies. RDMs vary not only in their parameters (such as duration, speed, luminance contrast, aperture size, etc.), but also in the underlying algorithms that generate them.

While a number of studies have parametrically investigated aspects of a given RDM algorithm, little attention has been paid to how the choice of algorithm affects the perception of moving dot fields under various parameters. Such comparative studies are important; as Watamaniuk and Sekuler (1992) suggest, “differences in the algorithms used to generate the displays may account for differences in temporal integration limits” found between two previous studies. More recently, Benton and Curran (2009) considered how differences in the stimulus parameters employed, the refresh rate in particular, can explain why both increasing and decreasing effects of coherence on perceived speed have been reported in the literature. A few studies (Scase, Braddick, & Raymond, 1996; Snowden & Braddick, 1989; Williams & Sekuler, 1984) have specifically compared how some RDM algorithms affect direction discrimination performance (see the General Discussion). For the algorithms tested, the main conclusion was that performance did not differ significantly. Scase et al. (1996) also found little difference in overall performance under nominal variations in dot density and speed.

However, a number of questions remain. Do other RDM algorithms in current use produce different performance? Do parameters affect perception differently for different algorithms? Would a different perceptual task, namely direction estimation (Nichols & Newsome, 2002), which is more sensitive than discrimination, reveal divergent performance across RDM algorithms? Can comparing human performance across algorithms shed light on the mechanisms underlying motion direction processing? Can these results be linked to known neurophysiological data on the spatiotemporal displacement tuning of motion-selective cortical neurons?

The goal of this paper is to provide answers to these questions. Here we directly address how parametric and algorithmic differences affect perception of motion directionality for RDM stimuli by comparing direction estimation performances of human subjects. The estimation task is more natural than discrimination for humans and animals alike as it does “not impose perceptual categories on the [subjects’] directional estimates”, thus allowing a direct correspondence between motion representation in the brain and the perceptual report (Nichols & Newsome, 2002). The following four commonly used RDM algorithms are considered (see Figure 1 for illustrations), of which algorithms MN and LL have not previously been comparatively investigated:

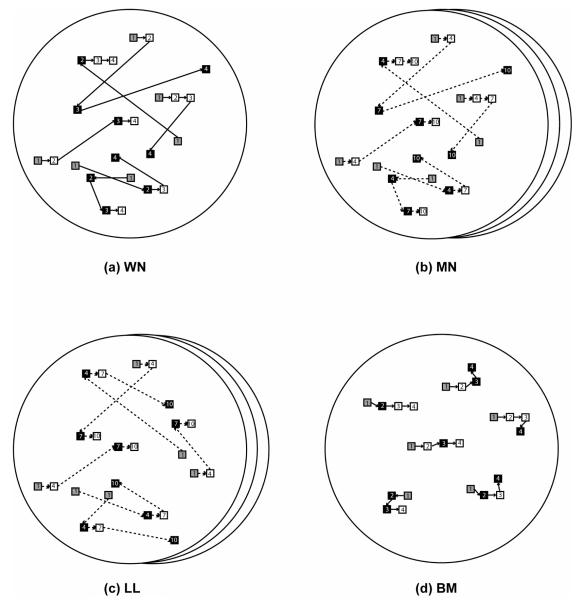

Figure 1.

Illustration of the four RDM algorithms under consideration. Each panel shows sample trajectories of six dots moving rightward at 50% coherence for 4 frames. Each frame displays only the 6 dots; the number on each dot indicates the frame in which it flashes. Gray dots constitute the first frame, and black and white dots represent noise and signal dots, respectively. Note that signal dots are chosen afresh from each frame to the next. The layered apertures in (b) and (c) depict the interleaving of three uncorrelated motion sets; i.e., any given frame is correlated only with the frames 3 frames before or after it. The dashed arrows in (b) and (c) represent dot motion across non-consecutive frames.

A. White Noise (WN)

A new set of signal dots is randomly chosen to move in the signal direction from each frame to the next, and the remaining (noise) dots are randomly relocated; i.e., each noise dot is given a random direction and speed (Britten et al., 1996; Britten et al., 1992).

B. Movshon/Newsome (MN)

This is similar to WN, but three uncorrelated random dot sequences are generated and frames from each are interleaved to form the presented motion stimulus; i.e., signal dots move from frame 1 to 4 and then from 4 to 7 and so on, from frame 2 to 5 and then from 5 to 8 and so on, and from frame 3 to 6 and then from 6 to 9 and so on (Roitman & Shadlen, 2002; Shadlen & Newsome, 2001).

C. Limited Lifetime (LL)

This is similar to MN, but with the constraint that from one frame to the next in each of the three interleaved sequences the dots with the longest lifetime as signal are the first to be chosen to become noise dots, which are then randomly relocated (Law & Gold, 2008). This restricts the signal dot lifetime from a probabilistic function of coherence level (employed by the other three algorithms) to a hard cutoff, where no dot moves as signal for more than one displacement for coherences below 50%.

D. Brownian Motion (BM)

This is similar to WN, but all dots move with the same speed; i.e., noise dots are only given random directions (Seitz, Nanez, Holloway, Koyama, & Watanabe, 2005; Seitz & Watanabe, 2003).
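To make the four update rules concrete, here is a minimal Python sketch of how frames could be generated under each algorithm. It is an illustration, not the code used in this paper or in the cited studies. Assumptions: positions are in degrees within a bounding square of half-width `half` (9° for the 18° aperture; the circular mask is omitted); every moved dot wraps around the square's edges; WN, MN and LL noise dots are replotted uniformly within the square; and speed is defined per constituent motion set, so an interleaved MN or LL set jumps three frames' worth of travel per presentation. The names `wrap`, `step_set` and `generate_rdm` are hypothetical.

```python
import math
import random

def wrap(v, half):
    """Toroidal wrap-around inside the bounding square [-half, half)."""
    return (v + half) % (2.0 * half) - half

def step_set(dots, ages, coherence, theta, step, half, algorithm, rng):
    """Advance one motion set by one displacement; return new dots, ages, signal set."""
    n = len(dots)
    k = round(coherence * n)
    if algorithm == "LL":
        # Limited lifetime: dots that have been signal longest become noise first,
        # so signal dots are drawn from those with the shortest signal age.
        order = sorted(range(n), key=lambda i: (ages[i], rng.random()))
        signal = set(order[:k])
    else:
        # WN, MN, BM: a fresh random subset every step, so a dot can by chance
        # remain signal for several consecutive displacements.
        signal = set(rng.sample(range(n), k))
    new_dots, new_ages = [], []
    for i, (x, y) in enumerate(dots):
        if i in signal:
            a = theta
        elif algorithm == "BM":
            a = rng.uniform(0.0, 2.0 * math.pi)  # noise: same step, random direction
        else:
            # WN, MN, LL noise (assumed): replotted anywhere in the square
            new_dots.append((rng.uniform(-half, half), rng.uniform(-half, half)))
            new_ages.append(0)
            continue
        new_dots.append((wrap(x + step * math.cos(a), half),
                         wrap(y + step * math.sin(a), half)))
        new_ages.append(ages[i] + 1 if i in signal else 0)
    return new_dots, new_ages, signal

def generate_rdm(algorithm, n_frames, n_dots, coherence, direction_deg=0.0,
                 speed_deg_s=12.0, refresh_hz=85.0, half=9.0, seed=0):
    """Return (frames, signals): frames[t] lists the dot positions (deg) shown on
    frame t; signals[t] holds the indices of dots that moved as signal into
    frame t (empty for each motion set's initial random frame)."""
    rng = random.Random(seed)
    n_sets = 3 if algorithm in ("MN", "LL") else 1
    # Speed is defined per motion set, so an interleaved set jumps n_sets frames' worth.
    step = speed_deg_s * n_sets / refresh_hz
    theta = math.radians(direction_deg)
    sets = [[(rng.uniform(-half, half), rng.uniform(-half, half))
             for _ in range(n_dots)] for _ in range(n_sets)]
    ages = [[0] * n_dots for _ in range(n_sets)]
    frames, signals = [], []
    for t in range(n_frames):
        s = t % n_sets  # interleaving: frame t shows motion set t mod n_sets
        sig = set()
        if t >= n_sets:
            sets[s], ages[s], sig = step_set(sets[s], ages[s], coherence, theta,
                                             step, half, algorithm, rng)
        frames.append(list(sets[s]))
        signals.append(sig)
    return frames, signals
```

For example, `generate_rdm("LL", n_frames=34, n_dots=50, coherence=0.3)` would give a 400 ms LL stimulus at 85 Hz. Ranking dots by their current run of signal displacements implements the LL rule: below 50% coherence, the dots that were noise on the previous step always outnumber the required signal dots, so no dot moves as signal twice in a row.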

Note that in algorithms MN and LL, speed is defined with respect to signal dots belonging to the same constituent motion set. Thus, for the same speed, a signal dot jumps from one presentation to the next by a displacement three times larger than in algorithms WN and BM. In this article, spatial displacement refers to the spatial separation between one flash of a signal dot and the next, and temporal displacement to the interval between their onsets; speed is therefore spatial displacement divided by temporal displacement. Also note that in algorithms WN, MN and BM a dot may be chosen as signal for more than one consecutive displacement, whereas in algorithm LL this never happens at the coherences used in this paper.
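The displacement arithmetic can be checked with a few lines of Python. The helper `displacements` is hypothetical; it assumes frame-locked presentation at the 85 Hz refresh rate of Experiment 1 and only restates the definitions above (speed = spatial displacement / temporal displacement).

```python
def displacements(speed_deg_s, refresh_hz=85.0, n_sets=1):
    """Spatial (deg) and temporal (ms) displacement between successive flashes
    of a signal dot; n_sets=3 for the interleaved MN and LL, 1 for WN and BM."""
    dx_deg = speed_deg_s * n_sets / refresh_hz
    dt_ms = 1000.0 * n_sets / refresh_hz
    return dx_deg, dt_ms

for speed in (4.0, 12.0):
    for name, k in (("WN/BM", 1), ("MN/LL", 3)):
        dx, dt = displacements(speed, n_sets=k)
        print(f"{speed:4.0f} deg/s {name}: {dx:.3f} deg every {dt:.1f} ms")
```

At 85 Hz, a 12°/s signal dot jumps about 0.141° every 11.8 ms under WN and BM but about 0.424° every 35.3 ms under MN and LL. MN and LL at 4°/s share the 0.141° spatial displacement of WN and BM at 12°/s, with a temporal displacement three times longer.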

In Experiment 1, we examined the effect of viewing duration on the ordinal relationship among the motion algorithms with respect to human direction estimation performance. In Experiment 2, we examined the effects of contrast and speed. In Experiment 3, we examined the effects of contrast, speed and aperture size. In Experiment 4, we examined whether speed, or the particular combination of spatial and temporal displacements, determines the relative perceptibility of the RDM algorithms under variations of contrast and aperture size. Our emphasis was on understanding both the differences among algorithms as parametric conditions change and the effects of these parameters on performance for each algorithm. Our results show dramatic interactions in performance both between and within algorithms under different parameters, some of which are counterintuitive. We interpret these results as behavioral correlates of various neurophysiological data obtained from motion-selective cortical neurons.

2. Experiment 1

How does the brain estimate the direction of a moving object in clutter? In primates, directional transient responses are thought to be produced in V1 by local motion detectors, which can be implemented in several ways (Derrington, Allen, & Delicato, 2004), namely the correlation-type motion detector (Reichardt, 1961), the null-direction inhibition model (Barlow & Levick, 1965), and the motion-energy filter model (Adelson & Bergen, 1985). In the next stage, directional short-range filters (Braddick, 1974) give rise to directional V1 simple cells by accumulating directional transients over relatively short spatial and temporal ranges. Directional long-range filters then accumulate local directional signals from V1 over relatively large spatial and temporal ranges to create global motion direction cells. Some models (Chey, Grossberg, & Mingolla, 1997; Grossberg, Mingolla, & Viswanathan, 2001) also propose a motion capture mechanism in which global motion cells mutually inhibit each other across space, to a degree that depends on how opposite their preferred directions are, leading to a gradual refinement of the brain’s motion representation and thereby a robust percept. A directional estimate may then be obtained, in either parietal or frontal cortex, by computing the vector average of global motion cells coding various directions (Nichols & Newsome, 2002), pooled across their tuning to other motion parameters such as speed, spatiotemporal frequency, and spatiotemporal displacement.
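The final read-out stage can be illustrated with a population-vector sketch: each global motion cell contributes a unit vector along its preferred direction, weighted by its response, and the estimate is the direction of the sum. The von Mises-shaped `tuning` function, its parameters, and the 16-cell population are illustrative assumptions rather than a fitted model, and the earlier stages (local detectors, short- and long-range filters, motion capture) are not modeled.

```python
import math

def vector_average_direction(preferred_deg, responses):
    """Direction (deg, in [0, 360)) of the response-weighted sum of unit vectors
    along each cell's preferred direction (a population-vector read-out)."""
    x = sum(r * math.cos(math.radians(p)) for p, r in zip(preferred_deg, responses))
    y = sum(r * math.sin(math.radians(p)) for p, r in zip(preferred_deg, responses))
    return math.degrees(math.atan2(y, x)) % 360.0

def tuning(preferred_deg, stimulus_deg, baseline=0.2, kappa=2.0):
    """Hypothetical von Mises-shaped direction tuning curve (arbitrary units)."""
    d = math.radians(stimulus_deg - preferred_deg)
    return baseline + math.exp(kappa * (math.cos(d) - 1.0))

# 16 hypothetical global motion cells with evenly spaced preferred directions
preferred = [22.5 * k for k in range(16)]
responses = [tuning(p, 67.5) for p in preferred]
print(vector_average_direction(preferred, responses))  # ≈ 67.5
```

Because the preferred directions are evenly spaced, the uniform baseline cancels in the vector sum and only the tuned part of the responses steers the estimate.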

An RDM stimulus consists of a sequence of frames, each containing a fixed number of dots on a plain background. A single dot moving in a particular direction creates no local directional ambiguity. However, when a stimulus contains multiple moving dots, local motion mechanisms can be fooled, so that cells tuned to incoherent (non-signal, or noise) directions also become active (see Figure 2). This informational uncertainty in the directional short-range filters can be aggravated by stimulus factors such as low coherence, resulting in only partial resolution of the neural code for global motion direction (Grossberg & Pilly, 2008). In other words, low coherence increases the number of incorrect local motion signals relative to the coherent ones and, as a result, produces only a weak directional percept. Short viewing durations, in turn, limit both the accumulation of evidence in the global motion cells that code the signal direction and the extent of mutual suppression of incoherent motion signals across space.

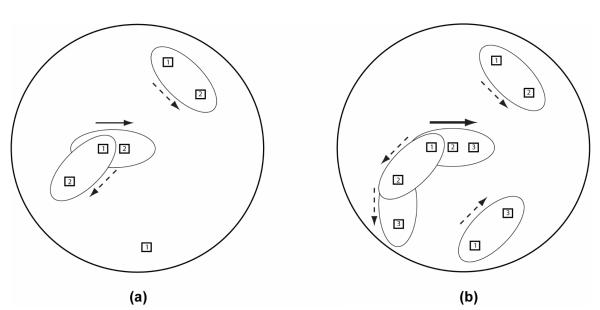

Figure 2.

Two illustrations of the local directional ambiguity elicited by an RDM stimulus. In both panels, the coherence level is 33%, the signal direction (solid arrow) is rightward, and the refresh rate is 60 Hz (16.67 ms per frame). Note that incoherent local motion signals (dashed arrows) are also activated. In the direction estimation task, the analog signal direction must be extracted in the presence of this directional clutter. The oval receptive fields represent directional short-range filters, which have relatively short spatial and temporal limits within which directional evidence can be accumulated. In (b), note that the same dot was chosen to move in the signal direction for two frames, which is expected to produce a stronger local motion signal (thicker arrow) in the activated short-range filter population coding the signal direction.

In Experiment 1, we made a first comparison of the four motion algorithms at different coherence levels and viewing durations. The main goal was to rank the algorithms by subjects’ performance and to examine whether this ranking changed with viewing duration (100, 200, 400, and 800 ms). These durations were chosen to span most of the range of integration times used in perceptual decisions about motion direction at different levels of motion coherence (Palmer, Huk, & Shadlen, 2005).

Based on the theoretical framework discussed above, we made the following predictions. Longer viewing durations will tend to improve performance irrespective of the motion algorithm. Estimation accuracy will be best for stimuli generated by algorithm BM, as it produces the least local directional ambiguity of the four algorithms, given that its noise dots are only locally repositioned (see Figure 1). Among the remaining algorithms, performance will be better for algorithm WN than for algorithms MN and LL, because the interleaving of three uncorrelated motion sets is expected to decrease the effective stimulus coherence through additional random local motion groupings of the transient signals evoked by dots from frames of different motion sets. Performance is predicted to be worst for algorithm LL, as the limited lifetime constraint, owing to the lack of long-lasting signal dots (see Figure 2), should reduce the strength of activation in the short-range filters that code the signal direction and, as a result, the effectiveness of the global motion capture process.

2.1. Methods

2.1.1. Subjects

Twelve subjects (9 male, 3 female; age range: 18-33 years) were recruited for Experiment 1, and six subjects (3 male, 3 female; age range: 18-30 years) for a control experiment. All participants had normal or corrected-to-normal vision and were naïve regarding the purpose of the experiment.

All subjects gave informed written consent, received compensation for their participation, and were recruited from the Riverside, CA and Boston, MA areas. The Institutional Review Boards of the University of California, Riverside and Boston University approved the methods used in the study, which was conducted in accordance with the Declaration of Helsinki.

2.1.2. Apparatus

Subjects sat on a height-adjustable chair 60 cm from a Dell M992 CRT monitor (36 cm wide horizontally) set to a resolution of 1280 × 960 and a refresh rate of 85 Hz. The viewing distance was fixed by having subjects position their head in a chin rest with a head bar. Care was taken that the eyes and the monitor center were at the same height. Stimuli were presented using Psychtoolbox Version 2 (Brainard, 1997; Pelli, 1997) for MATLAB 5.2.1 (The MathWorks, Inc.) on a Macintosh G4 running OS 9 natively.

2.1.3. Stimuli

Motion stimuli consisted of RDM displays. White dots (113 cd/m²) moved at a speed of 12°/s on a black background (~0 cd/m²). Each dot was a 3 × 3 pixel square and, at the screen center, subtended a visual angle of 0.08°. Dots were displayed within an invisible 18° diameter circular aperture centered on the screen. Dot density was fixed at 16.7 dots deg⁻² s⁻¹ (Britten, Shadlen, Newsome, & Movshon, 1993; Shadlen & Newsome, 2001). The number of dots in each frame was thus $16.7 \times \pi \times 9^2 \times (1/85) \approx 50$, which corresponds to 0.2 dots deg⁻². Dot motion was first computed within a bounding square from which the circular aperture was carved out. Signal dots that would have moved out of the square were wrapped around to reappear on the opposite side, conserving dot density. It is important to note that while stimuli generated by the different algorithms look perceptually different (in particular, BM vs. the others), they were all tested with the same ‘physical’ parameters.
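The dot-count and visual-angle figures follow from the stated display geometry (36 cm wide screen, 1280 horizontal pixels, 60 cm viewing distance, 85 Hz). The sketch below reproduces them; the helper names are hypothetical, and the last line assumes, for illustration only, that the same dot density is applied to an 8° aperture.

```python
import math

def dots_per_frame(density, aperture_diam_deg, refresh_hz):
    """Dots per frame = density (dots deg^-2 s^-1) x aperture area (deg^2) / refresh (Hz)."""
    area_deg2 = math.pi * (aperture_diam_deg / 2.0) ** 2
    return density * area_deg2 / refresh_hz

def pixels_to_deg(n_px, screen_width_cm, h_res_px, distance_cm):
    """Visual angle (deg) subtended at the screen center by n_px horizontal pixels."""
    size_cm = n_px * screen_width_cm / h_res_px
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

n = dots_per_frame(16.7, 18.0, 85.0)                  # Experiment 1 geometry
print(round(n))                                       # 50 dots per frame
print(round(n / (math.pi * 9.0 ** 2), 2))             # 0.2 dots per deg^2
print(round(pixels_to_deg(3, 36.0, 1280, 60.0), 2))   # 0.08 deg per 3-pixel dot
# Assumption for illustration: same density in an 8 deg aperture
print(round(dots_per_frame(16.7, 8.0, 85.0), 1))      # 9.9
```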

2.1.4. Procedure

The experiment was conducted in a dark room. Subjects were required to fixate a 0.2° green point in the center of the screen, around which the stimulus appeared. In each trial, subjects viewed the RDM binocularly for a fixed duration (100, 200, 400, or 800 ms) and then, after a 500 ms delay period, reported the perceived motion direction. This analog response was made by orienting a response bar in the judged direction via mouse movements and then clicking the mouse button. Subjects had 4 s to respond, and trials were separated by a 400 ms intertrial interval. This procedure is depicted in Figure S1. Unclicked responses, which were rare, were excluded from the data analysis. During stimulus viewing, subjects were specifically instructed not to track any individual dot but to maintain central fixation while estimating the motion direction.

All subjects participated in three experimental sessions. The first session consisted of about 5 minutes of practice to familiarize them with the procedure. In each practice trial, random dot motion generated by one of the four algorithms was presented for 400 ms at a relatively easy coherence level (60, 70, 80, 90, or 100%) and in one of 8 directions (0, 45, 90, 135, 180, 225, 270, or 315°). If the response was within 22.5° of the presented direction, visual and auditory feedback indicating a correct response was given.

Subjects then participated in two one-hour sessions conducted on different days. The method of constant stimuli was employed with a different set of 8 equally spaced, non-cardinal directions (22.5, 67.5, 112.5, 157.5, 202.5, 247.5, 292.5, 337.5°), 10 coherence levels (2, 4, 6, 8, 10, 15, 20, 25, 30, 50%) and 4 durations (100, 200, 400, 800 ms) for each motion algorithm. No response feedback was given in these main sessions. Each session comprised 1280 trials (8 directions × 10 coherences × 4 durations × 4 algorithms), divided into four sections with the possibility of a short rest between sections. Trials were arranged in blocks of 80, each containing the 8 directions at each of the 10 coherence levels, randomly interleaved, for a given motion algorithm at a given duration. This design was based on initial observations in which we found that blocking trials of the same duration and algorithm helped overall performance (however, a control experiment showed that the basic pattern of results also holds when all parametric conditions, including duration and algorithm, are interleaved; see Figure S4). The blocks were randomly ordered on a per-subject, per-session basis.
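A sketch of one main-session schedule under this blocked design: the 16 algorithm × duration blocks are shuffled, and within each block the 80 direction × coherence combinations are shuffled. The function name and seeding scheme are hypothetical. With 1280 trials, each of the four sections would hold four blocks (320 trials).

```python
import itertools
import random

DIRECTIONS = [22.5 + 45.0 * k for k in range(8)]        # non-cardinal, deg
COHERENCES = [2, 4, 6, 8, 10, 15, 20, 25, 30, 50]       # percent
DURATIONS_MS = [100, 200, 400, 800]
ALGORITHMS = ["WN", "MN", "LL", "BM"]

def session_schedule(seed):
    """Return one session's trials as (algorithm, duration_ms, direction_deg,
    coherence_pct) tuples: 16 shuffled algorithm x duration blocks of 80 trials,
    each block shuffling all direction x coherence combinations."""
    rng = random.Random(seed)  # hypothetical: one seed per subject and session
    blocks = list(itertools.product(ALGORITHMS, DURATIONS_MS))
    rng.shuffle(blocks)
    trials = []
    for algorithm, duration in blocks:
        block = list(itertools.product(DIRECTIONS, COHERENCES))
        rng.shuffle(block)
        trials.extend((algorithm, duration, d, c) for d, c in block)
    return trials

trials = session_schedule(seed=1)
sections = [trials[i:i + 320] for i in range(0, len(trials), 320)]  # 4 sections
```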

2.1.5. Accuracy Measure

To evaluate estimation performance, we first calculated the absolute error between the subject’s analog choice of direction and the presented direction. On average, chance-level performance yields an absolute error of 90° (see Appendix in the Supplementary Information). Based on this, the accuracy measure for each trial is defined as a percentage ratio, $\text{accuracy} = \dfrac{90^\circ - |\text{error}|}{90^\circ} \times 100\%$, such that accuracy spans from 0% to 100% as performance improves from purely random guessing to perfect estimation. In the data figures, we display the average accuracy across trials. Accuracy as a function of coherence is termed the coherence response function.
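A minimal sketch of the per-trial measure, assuming accuracy is the linear mapping 100 × (90° − |error|)/90° (a 90° error maps to 0% and a perfect response to 100%) and that the absolute error is the smaller angle between response and target. The Monte Carlo check illustrates why uniform random guessing gives a mean absolute error of 90°: the absolute error is then uniformly distributed between 0° and 180°. Function names are hypothetical.

```python
import random

def abs_angular_error(response_deg, target_deg):
    """Smallest absolute angle (0-180 deg) between response and target directions."""
    d = (response_deg - target_deg) % 360.0
    return min(d, 360.0 - d)

def trial_accuracy(response_deg, target_deg):
    """Percent accuracy: 100 for a perfect response, 0 for a 90 deg error
    (the chance-level mean); single trials with errors above 90 deg go negative."""
    return 100.0 * (90.0 - abs_angular_error(response_deg, target_deg)) / 90.0

# Monte Carlo: uniformly random guesses give a mean absolute error near 90 deg
rng = random.Random(0)
errors = [abs_angular_error(rng.uniform(0.0, 360.0), 67.5) for _ in range(100_000)]
chance_error = sum(errors) / len(errors)
print(chance_error, 100.0 * (90.0 - chance_error) / 90.0)
```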

2.2. Results

To quantify the data collected in Experiment 1, we performed a four-way ANOVA with repeated measures to evaluate the effects of Coherence × Algorithm × Duration × Session as factors. As one would expect, we found a highly significant effect of coherence (F(9,99)=184.5, p<0.0001). We also found a highly significant effect of algorithm (F(3,33)=7.26, p<0.001) and duration (F(3,33)=47.5, p<0.0001), but no effect of session (F(1,11)=0.8, p=0.39). There was no interaction between session and algorithm (F(3,33)=0.57, p=0.64), but there were highly significant interactions between coherence and algorithm (F(27,297)=3.69, p<0.0001) and between coherence and duration (F(27,297)=2.73, p<0.0001), and significant interactions between coherence and session (F(9,99)=2.0, p<0.05) and between algorithm and duration (F(27,297)=2.46, p<0.01). We will generally not remark on effects of, and interactions involving, coherence in the rest of the paper. And given the lack of effect of session we will collapse across sessions for the remaining analyses. Also, given the limited number of presentations per combination of parameters, results are averaged across the 8 directions to obtain a better coherence response function for each algorithm under the main parameters of interest. While it is potentially interesting to consider interactions involving the 8 motion directions, there is little theoretical ground to justify such an investigation, especially considering that all the tested directions are non-cardinal.

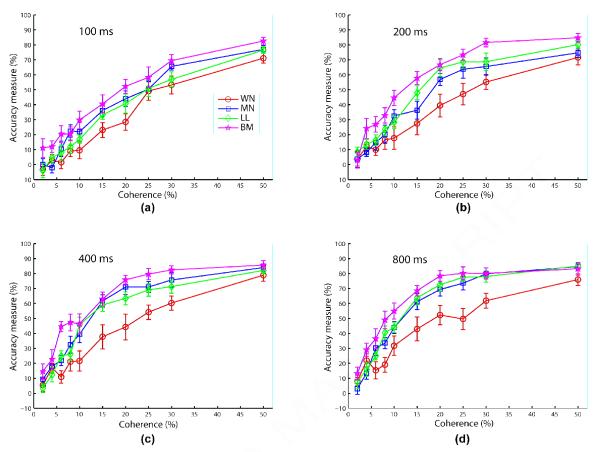

Figure 3 shows the coherence response functions of the motion algorithms at each viewing duration separately. Overall, human subjects are best at estimating the motion direction of stimuli generated by the Brownian Motion (BM) algorithm, followed by the Movshon/Newsome (MN) and Limited Lifetime (LL) algorithms (which did not differ significantly), and worst with the White Noise (WN) algorithm. This reveals the following ordinal relationship: WN <* LL < MN <* BM, where the asterisk marks significance in the sense that the error bars (standard error) of the corresponding overall accuracy measures, computed by further averaging across coherence levels, do not overlap. As expected, we also found (see Figure S2 for each algorithm as a function of duration) that longer viewing durations tend to improve performance for each RDM algorithm. The same pattern of results was found with other metrics of identification performance, such as vector dispersion and the percentage of choices within the quadrant of the target direction; see Figure S3.

Figure 3.

Experiment 1 results comparing the coherence response functions, combined across the two sessions, of the four algorithms (WN: circle/blue; MN: square/green; LL: diamond/red; BM: pentagram/cyan) at each viewing duration. Error bars represent standard error of mean. The legend for all panels is shown in (a). Fixed stimulus parameters are 18° aperture diameter, 12°/s speed, and high contrast.
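The asterisk criterion used for the ordinal relationships can be expressed as a small function. This sketch assumes the standard error is computed across subjects from each subject's overall accuracy (already averaged across coherence levels) and compares only neighbours in the sorted order; `ordinal_relation` and its input format are hypothetical, and no data from this study are reproduced.

```python
import math
import statistics

def mean_sem(values):
    """Mean and standard error of the mean across subjects."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))

def ordinal_relation(per_subject_acc):
    """per_subject_acc maps algorithm -> per-subject overall accuracies.
    Returns e.g. 'WN <* LL < MN <* BM', where '<*' marks neighbours whose
    mean +/- SEM intervals do not overlap."""
    stats = {a: mean_sem(v) for a, v in per_subject_acc.items()}
    order = sorted(stats, key=lambda a: stats[a][0])
    text = order[0]
    for lower, higher in zip(order, order[1:]):
        (m1, s1), (m2, s2) = stats[lower], stats[higher]
        text += (" <* " if m1 + s1 < m2 - s2 else " < ") + higher
    return text
```

Non-overlapping standard-error bars are a descriptive criterion rather than a formal significance test, which is why the text defines the asterisk explicitly.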

We examined the distribution of the subjects’ directional choices relative to the presented direction for each algorithm and viewing duration, using the trials at the various coherence levels pooled across the two sessions; see Table S1 for the vector average and circular variance. For each algorithm, the relative errors were distributed symmetrically around 0° (the target direction).
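The vector average and circular variance are standard circular statistics. A sketch, assuming the common definition of circular variance as 1 − R, where R is the length of the mean resultant vector (the exact definition used for Table S1 is not stated here); `circular_stats` is a hypothetical name.

```python
import math

def circular_stats(rel_errors_deg):
    """Return (vector-average direction in deg, circular variance 1 - R) for a
    list of signed response errors relative to the target direction."""
    n = len(rel_errors_deg)
    c = sum(math.cos(math.radians(e)) for e in rel_errors_deg) / n
    s = sum(math.sin(math.radians(e)) for e in rel_errors_deg) / n
    r = math.hypot(c, s)  # mean resultant length R, between 0 and 1
    return math.degrees(math.atan2(s, c)), 1.0 - r
```

Errors distributed symmetrically around 0° give a vector average near 0°; the circular variance approaches 0 as responses cluster tightly and 1 as they spread uniformly around the circle. Treating 350° and −10° identically is what makes this preferable to an arithmetic mean of angles.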

As mentioned in Section 2.1.4, some stimulus conditions, namely algorithm and viewing duration, were blocked. To make sure that this blocked design did not confound the data trends, we performed a control experiment on new subjects (n = 6) that was essentially similar to Experiment 1 but used a completely interleaved design and fewer conditions (2 algorithms: WN and MN; 1 viewing duration: 400 ms; the same sets of coherences and directions). The resulting coherence response functions for algorithms WN and MN resembled the corresponding results from Experiment 1 (compare Figure S4 with Figure 3c), and there was a highly significant effect of algorithm (p<0.01, repeated measures ANOVA).

2.3. Discussion

Notably, our initial model failed to predict the relative performance of subjects across the motion algorithms. Of its predictions about the algorithms, the only one that turned out to be correct was that performance would be best for algorithm BM. We expected performance to be worse for algorithms MN and LL than for WN; however, the opposite relationship was found. We also expected a significant difference in performance between algorithms MN and LL, which was not observed. While there are some individual differences among subjects in the ordinal performance across the motion algorithms (see Figure S5), the results point to a problem with our initial model of how these motion stimuli are processed and perceived. One notable issue that the model does not handle well is that, although the algorithms were tested at the same speed (12°/s), the spatial and temporal displacements between two consecutive signal dot flashes for the interleaved algorithms (MN and LL) are three times those for the other algorithms (WN and BM); see Experiment 4 and the General Discussion for further treatment of this issue. Thus, to better understand the mechanisms underlying these effects, and to verify that these results hold under different stimulus conditions, we performed Experiments 2-4.

3. Experiment 2

In Experiment 2, we examined the effects of speed and contrast on the coherence response functions of the RDM algorithms. We employed two speeds, slow (4°/s) and fast (12°/s), and both low- and high-contrast dots, given the increasing number of psychophysical and neurophysiological studies showing that motion processing is non-trivially affected by stimulus contrast (Krekelberg, van Wezel, & Albright, 2006; Livingstone & Conway, 2007; Pack, Hunter, & Born, 2005; Peterson, Li, & Freeman, 2006; Seitz, Pilly, & Pack, 2008; Tadin, Lappin, Gilroy, & Blake, 2003; Thompson, Brooks, & Hammett, 2006). Under low contrast, sensitivity to stimulus features is generally thought to be reduced, as assumed by the model discussed for Experiment 1.

3.1. Methods

Eight new subjects (3 male, 5 female; age range: 18-25 years) were recruited for this experiment. The methods were identical to those of Experiment 1 with the following exceptions. The viewing duration was fixed at 400 ms, as viewing duration was not found to change the relative order of the algorithms and at 400 ms performance was good for most subjects without much additional benefit from longer viewing durations; see Figure S2. The aperture diameter was reduced from 18° to 8°. Reduction in aperture size allowed us to indirectly examine the effects of stimulus size and retinal eccentricity, given results from Experiment 1.

Dots were shown at either low (11.25 cd/m²) or high (117 cd/m²) luminance on a dark background (4.5 cd/m²) to produce the low- or high-contrast condition, respectively. As in Experiment 1, trials were blocked by algorithm, contrast and speed, and coherence levels and directions were randomly interleaved within each block. The first half of the trials in each session used one contrast and the second half the other (the order was counterbalanced between sessions and across subjects). Within each half session, the blocks of algorithm and speed at that contrast were randomly arranged. The experiment was conducted in dim illumination in order to minimize the impact of dark adaptation and to avoid nonstationarities in performance resulting from contrast changes.
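The text does not specify which contrast metric corresponds to the low and high conditions. For reference, this sketch computes two common ones, Weber and Michelson contrast, from the stated dot and background luminances; whether either is the metric the authors intended is an assumption.

```python
def weber_contrast(l_dot, l_background):
    """Weber contrast of a dot against its background."""
    return (l_dot - l_background) / l_background

def michelson_contrast(l_dot, l_background):
    """Michelson contrast between dot and background luminance."""
    return (l_dot - l_background) / (l_dot + l_background)

BACKGROUND = 4.5  # cd/m^2, Experiment 2 background
for label, l_dot in (("low", 11.25), ("high", 117.0)):
    print(label, weber_contrast(l_dot, BACKGROUND),
          round(michelson_contrast(l_dot, BACKGROUND), 3))
```

The low and high conditions correspond to Weber contrasts of 1.5 and 25, or Michelson contrasts of about 0.43 and 0.93.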

3.2. Results

To quantify the results from Experiment 2, we performed a five-way ANOVA with repeated measures to evaluate the effects of Coherence × Algorithm × Speed × Contrast × Session as factors. Again we found a highly significant effect of algorithm (F(3,21)=12.1, p<0.0001), and no effect of session (F(1,7)=0.01, p=0.91). We also found a significant effect of contrast (F(1,7)=7.7, p<0.05), and a marginal effect of speed (F(1,7)=3.1, p=0.1). While the individual effects of speed and contrast were small, there were highly significant interactions between algorithm and contrast (F(3,21)=18.5, p<0.0001), between algorithm and speed (F(3,21)=93.3, p<0.0001), and between speed and contrast (F(1,7)=38.14, p<0.0001).

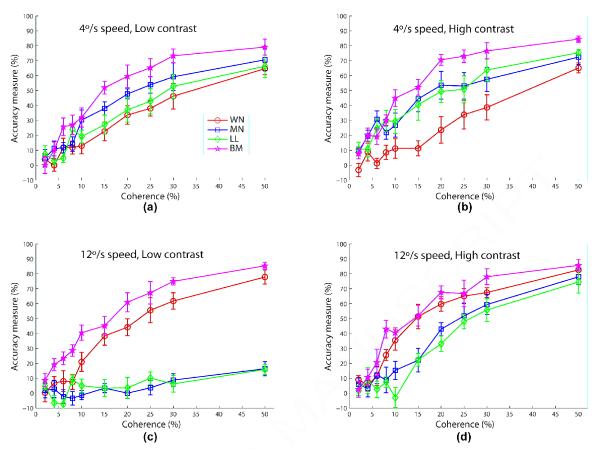

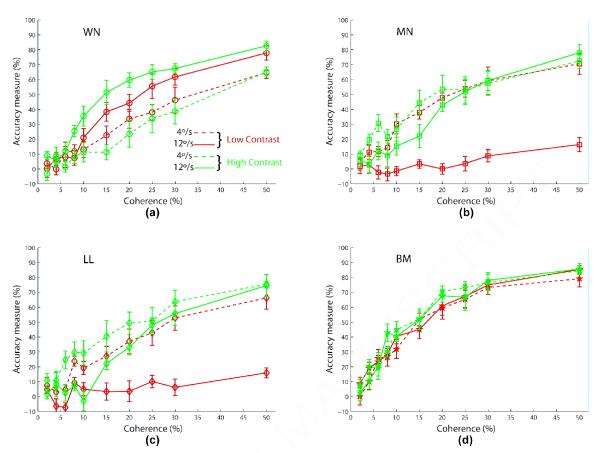

The main results of Experiment 2 are shown in Figure 4, where each subplot shows the relative performance of the motion algorithms for one of the four combinations of speed and contrast. Whereas Figures 4a,b (slow speed, 4°/s) show a pattern reminiscent of Figure 3 (WN < LL < MN <* BM, WN <* MN at low contrast; WN <* LL < MN <* BM at high contrast), Figures 4c,d (fast speed, 12°/s) show a very different trend (MN < LL <* WN <* BM at low contrast; LL < MN <* WN < BM at high contrast). Indeed, at the fast speed (12°/s), algorithms MN and LL now show the worst performance, and at low contrast (Figure 4c) subjects perform close to chance level for these interleaved algorithms even at 50% coherence.

Figure 4.

Experiment 2 results comparing the coherence response functions of the four algorithms (WN: circle/blue; MN: square/green; LL: diamond/red; BM: pentagram/cyan) for each speed and contrast condition. Error bars represent standard error of mean. The legend for all panels is shown in (a). Fixed stimulus parameters are 400 ms duration, and 8° aperture diameter.

Given the highly significant interactions between algorithm and speed and between algorithm and contrast, Figure 5 replots the data of Figure 4 grouped by algorithm. From this figure we can make the following observations (the data were quantified with a four-way repeated measures ANOVA for each RDM algorithm, with Coherence × Speed × Contrast × Session as factors):

Figure 5.

Experiment 2 results showing the coherence response functions of each algorithm for various speed (4°/s: dashed; 12°/s: solid) and contrast (low: blue; high: red) conditions. Error bars represent standard error of mean. The legend for all panels is shown in (a). Fixed stimulus parameters are 400 ms duration, and 8° aperture diameter.

Algorithm WN

At both low and high contrast, performance improved with increased speed. However, at the slow speed (4°/s), performance decreased slightly with increased contrast, whereas at the fast speed (12°/s) it increased with contrast. We found a highly significant effect of speed (F(1,7)=35.5, p<0.001), but not of contrast (F(1,7)=0.4, p=0.55), and a highly significant interaction between speed and contrast (F(1,7)=15.4, p<0.0001).

Algorithms MN, LL

At low contrast, performance dropped sharply with increased speed. At high contrast, performance decreased slightly with speed. At slow speed (4°/s), higher contrast helped for algorithm LL and, to a lesser extent, for MN, whereas at fast speed (12°/s) higher contrast greatly improved performance. We found significant effects of speed (MN: F(1,7)=13.0, p<0.01; LL: F(1,7)=14.5, p<0.01) and of contrast (MN: F(1,7)=17.9, p<0.01; LL: F(1,7)=10.1, p<0.01), and highly significant interactions between speed and contrast (MN: F(1,7)=39.4, p<0.0001; LL: F(1,7)=10.6, p<0.001).

Algorithm BM

Performance was marginally better for high contrast than for low contrast, but there was no notable effect of speed. Accordingly, we found a significant effect of contrast (F(1,7)=9.5, p<0.05) but not of speed (F(1,7)=0.31, p=0.59), and no significant interaction between speed and contrast (F(1,7)=2.51, p=0.11).

3.3. Discussion

The results of this experiment are striking in that speed and contrast had very different effects across the algorithms. For instance, performance on algorithm WN generally improved with speed (4°/s < 12°/s), whereas performance on algorithms MN and LL was impaired, and algorithm BM was largely unaffected by speed. Also, while we had expected performance to be better at high contrast, we did not expect the dramatic impact of contrast at fast speed (12°/s) found for algorithms MN and LL, nor the slight reduction in performance with increased contrast found for algorithm WN at slow speed (4°/s). Statistically, these observations account for the highly significant interaction between speed and contrast found for each of the algorithms WN, MN and LL. These results may reflect the known effect of lowering contrast on the spatiotemporal receptive field structure of motion-selective cortical neurons (Krekelberg, van Wezel, & Albright, 2006; Livingstone & Conway, 2007; Pack, Hunter, & Born, 2005; Peterson, Li, & Freeman, 2006; Seitz, Pilly, & Pack, 2008). This link is considered further in Experiments 3 and 4, and analyzed in the General Discussion.

Other interesting results in Experiment 2 come from the ordinal relations among algorithms. First, as in Experiment 1, algorithms MN and LL yield nearly equal performance, now in each of the new combinations of speed, contrast and aperture size. This suggests that, at least for the dot density used here, the directional long-range filters, which summate local motion signals across a large spatial range, may be agnostic to how regular or irregular the local groupings in the signal direction are across space and time. Similar ideas discussed by Snowden and Braddick (1989) and Scase, Braddick, and Raymond (1996) for direction discrimination thus also appear to hold for direction estimation. Second, subjects continue to estimate the direction best for algorithm BM, in accordance with the theoretical framework articulated in the introduction to Experiment 1.

An additional curiosity is that, while we find WN <* MN in the slow speed (4°/s) condition as in Experiment 1, the relationship reverses in the fast speed (12°/s) condition (i.e., MN <* WN). This is particularly surprising given that the results shown in Figure 4d were obtained for the same parametric combination of contrast, speed and duration as is plotted in Figure 3c. While it is possible that this is due to individual subject differences (see Figure S5) or contextual effects due to the different sets of conditions presented in the two experiments, it is also possible that the relative performance differences result from the difference in aperture size (18° vs. 8°) between the two experiments. Experiment 3 was designed to test this hypothesis.

4. Experiment 3

In Experiment 3, we conducted a more thorough examination of the effects of speed, contrast and aperture size on the direction estimability of RDM stimuli. In particular, we explored whether the difference in the aperture sizes employed in Experiments 1 and 2 can explain the observed differences in ordinal relations among the algorithms. Given that performance on algorithm BM was the best across conditions, and that performance on algorithms MN and LL was largely similar in both experiments, we explored only algorithms WN and MN in this experiment for efficiency. By reducing the number of algorithms, we were able to examine the effects of aperture size, in addition to speed and contrast, in a within-subjects design without reducing the number of trials per condition.

4.1. Methods

Seven new subjects (1 male, 6 female; age range: 18-25 years) were recruited for this experiment. The methods were identical to those of Experiment 2 with the following exceptions. Only motion algorithms WN and MN were used, and two aperture sizes (8° dia., 18° dia.) were tested. As in Experiment 2, trials were blocked for contrast, algorithm, speed, and aperture size, and within each block directions and coherences were randomly interleaved.

4.2. Results

To examine the results from Experiment 3 statistically, we performed a six-way repeated-measures ANOVA with Coherence × Algorithm × Speed × Contrast × Aperture × Session as factors. We found a significant effect of aperture size (F(1,6)=6.5, p<0.05), with performance higher for the larger aperture (see Figures S6 and S7 for plots showing overlaid data for the two apertures). However, there were no significant interactions involving aperture size. There was no effect of algorithm (F(1,6)=1.1, p=0.34), but there were highly significant interactions between algorithm and contrast (F(1,6)=119, p<0.0001), and between algorithm and speed (F(1,6)=453, p<0.0001). We also observed a significant interaction between speed and contrast (F(1,6)=9.22, p<0.005).

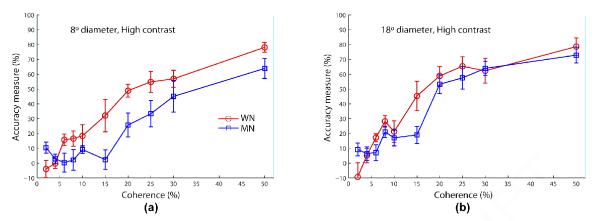

First, all results for algorithms WN and MN from Experiment 2 are replicated here with one exception: for algorithm WN, the crossed interaction between speed and contrast is no longer evident, and performance is now surprisingly reduced with increased contrast at both speeds; see Figure S8a. Second, the effects of speed and contrast for each algorithm are consistent between the two aperture sizes; see Figure S8. Third, while a larger aperture tends to improve performance, algorithm MN in the fast speed (12°/s) and high contrast condition benefited relatively more from the bigger aperture (18° dia.) than in the other conditions (compare panel (d) with the other panels in Figure S7 and with Figure S6). Fourth, the relative order of the two algorithms at slow speed (4°/s) under both low and high contrasts is the same for the two apertures, namely WN <* MN (see Figure S9). The opposite order (MN <* WN) at fast speed (12°/s) is seen in all contrast and aperture size conditions, except for the high contrast and bigger aperture (18° dia.) case, in which subjects performed nearly equally for the two algorithms (MN < WN); see Figures S10 and 6. Fifth, this latter result confirms that the different aperture sizes at least partially account for the different relational results found in Experiments 1 and 2.

Figure 6.

Experiment 3 results comparing the coherence response functions of the two algorithms WN (circle/blue) and MN (square/green) at fast speed (12°/s) under high contrast in each aperture size condition. Error bars represent standard error of mean. The legend for both panels is shown in (a). Fixed stimulus duration is 400 ms.

4.3. Discussion

In Experiment 3, we find that even in the larger aperture, at fast speed (12°/s) and low contrast, performance is particularly poor for algorithm MN. At 12°/s, the underlying spatial and temporal displacements for algorithm MN (and also LL) are 0.42° and 35.29 ms, respectively, which are three times those for algorithm WN (and also BM). As mentioned in the Discussion of Experiment 2, the spatiotemporal receptive field structure of neurons involved in motion processing changes at low contrast (Krekelberg, van Wezel, & Albright, 2006; Livingstone & Conway, 2007; Pack, Hunter, & Born, 2005; Peterson, Li, & Freeman, 2006; Seitz, Pilly, & Pack, 2008), and it is likely that under low contrast the larger spatiotemporal combination of (0.42°, 35.29 ms) does not evoke strong enough responses in the motion-selective cortical neurons that code the signal direction. This potential explanation assumes that the underlying spatiotemporal displacement, and not speed, is the factor that determines the perceptibility of an RDM stimulus.
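The displacement figures quoted above follow from the 85 Hz refresh rate used in Experiments 1-3. The short sketch below reproduces that arithmetic. It assumes, as the factor of three in the text implies, that WN and BM move each signal dot on every frame while MN and LL move each dot only every third frame (three interleaved dot sets); `signal_displacement` and its `interleave` parameter are illustrative names, not taken from the stimulus code used in this study.

```python
def signal_displacement(speed_deg_per_s, refresh_hz, interleave=1):
    """Spatial (deg) and temporal (ms) displacement between successive
    presentations of the same signal dot.

    interleave=1 models an update on every frame (WN, BM); interleave=3
    models three interleaved dot sets updated in turn (assumed for MN, LL).
    """
    dt_s = interleave / refresh_hz        # time between successive flashes of a dot
    dx_deg = speed_deg_per_s * dt_s       # distance covered in that time at this speed
    return dx_deg, dt_s * 1000.0


if __name__ == "__main__":
    # 85 Hz refresh rate, as in Experiments 1-3.
    for name, k in (("WN", 1), ("MN", 3)):
        for speed in (4.0, 12.0):
            dx, dt = signal_displacement(speed, 85.0, k)
            print(f"{name} at {speed:.0f} deg/s: {dx:.3f} deg, {dt:.2f} ms")
```

At 4°/s the same arithmetic gives about 0.047° for WN and 0.14° for MN, the values used later in the General Discussion.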

This experiment also reveals a counterintuitive result: lowering contrast helps subjects estimate the direction better for algorithm WN at both speeds in both apertures. The lack of this effect for algorithm MN at the same speeds further indicates an interaction between contrast and spatiotemporal displacement, rather than between contrast and speed.

The positive influence of aperture size on estimation accuracy found in all conditions may result from a selective increase in the occurrence of correct local motion groupings (i.e., in the signal direction) when compared to incorrect ones (i.e., in other directions) at the directional short-range filter stage, which thereby increases the effectiveness of the global directional grouping stage in inhibiting cells that code incoherent (noise) directions. Additionally, a bigger aperture size invokes more eccentric motion-selective neurons, which have bigger receptive fields and are known to be able to register a directional signal for relatively bigger spatial displacements (psychophysics: Baker & Braddick, 1982, 1985a; Nakayama & Silverman, 1984; and neurophysiology: Mikami, Newsome, & Wurtz, 1986; Pack, Conway, Born, & Livingstone, 2006 [Figures 3 and 4]). This suggests that the overall spatial displacement tuning broadens with a peak shift towards larger spatial displacements for bigger apertures. This effect is supported by the substantial gain in performance with aperture size seen, in particular, for algorithm MN under high contrast at 12°/s speed, which corresponds to a higher spatial displacement of 0.42°. Also, the switch in the relative order of the two algorithms with respect to speed, except in the high contrast and bigger aperture condition, likely results from the overall spatial displacement tuning of direction-selective cells and the effects of aperture size and contrast on it (see Figure 9 and General Discussion).

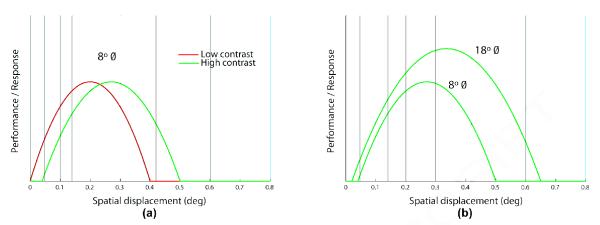

Figure 9.

Cartoon of how the spatial displacement tuning changes with contrast and aperture size. In (a), lowering contrast is shown to shift the tuning towards smaller spatial displacements. In (b), increasing aperture size is shown to broaden the tuning with a peak shift towards larger spatial displacements.

Results regarding the main aim of Experiment 3, shown in Figure 6, seem to indicate some inconsistency between the comparative results of Experiments 1 (WN <* MN) and 3 (MN < WN) for the same parametric combination of high contrast, bigger aperture, 12°/s speed and 400 ms viewing duration; compare Figure 3c with Figure 6b. The only procedural difference between Experiments 1 and 3 is that the former was conducted in the dark, whereas the latter was conducted in a dimly illuminated room. Thus, the mean stimulus luminance differed between Experiments 1 (0.14 cd/m²) and 3 (4.64 cd/m²); see Table S2. The disparate results may therefore be reconciled given previous psychophysical studies showing that the upper spatial displacement limit (Dmax) for direction discrimination of two-exposure dot fields in a 2AFC task is inversely proportional to mean luminance (Dawson & Di Lollo, 1990; Lankheet, van Doorn, Bourman, & van de Grind, 2000), if we assume that increasing mean luminance shifts the spatial displacement tuning function towards smaller displacements; see the General Discussion for how this might contribute to the explanation. This hypothesis needs to be examined rigorously in future work.

5. Experiment 4

In Experiment 4, we set out to confirm that the observed effects of speed, in particular the interactions between speed and contrast, are not related to speed per se, but are actually an effect of the underlying combination of spatial and temporal displacements, as hypothesized in the previous experiment. To test this, we used two different monitor refresh rates (60 Hz and 120 Hz), so that the same speed could be tested with two different combinations of spatial and temporal displacements for each RDM algorithm. We were also interested in how subjects respond to algorithms WN and MN for matched spatial displacements, instead of matched speeds as in the previous three experiments.

5.1. Methods

Eight new subjects (0 male, 8 female; age range: 18-25 years) were recruited for this experiment. The methods were identical to those of Experiment 3 with the following exceptions. A different CRT monitor (Dell P991 Trinitron) was employed so that stimuli could be presented at 120 Hz as well as at 60 Hz, with the monitor set to a reasonable resolution (1024 × 768). Note that a refresh rate of 85 Hz was used in Experiments 1-3. Dot size, and dot and background luminance values were approximately the same as those used in Experiments 1-3. We used 12°/s and 36°/s speeds for algorithm WN, and 4°/s and 12°/s speeds for algorithm MN. Given that the spatial and temporal displacements for algorithm MN are three times those for algorithm WN at a fixed speed, these parameter choices gave both algorithms matched spatial displacements of 0.2° and 0.6° at 60 Hz, and 0.1° and 0.3° at 120 Hz. The underlying temporal displacements, however, were not matched: they were 16.67 ms (WN) and 50 ms (MN) at 60 Hz, and 8.33 ms (WN) and 25 ms (MN) at 120 Hz. We avoided spatial aliasing in the stimulus by ensuring that the smallest tested spatial displacement (0.1°) was substantially greater than the size of a pixel (0.034°). As in Experiment 3, trials were blocked for contrast, algorithm, spatial displacement, and aperture size, and within each block directions and coherences were randomly interleaved. Trials of different refresh rates were blocked by session, and the order of their presentation was counterbalanced across subjects.
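As a check on the design arithmetic, the following hypothetical sketch enumerates the Experiment 4 conditions from the speeds and refresh rates given above. It confirms that the two algorithms share the same spatial displacements at each refresh rate while their temporal displacements differ by a factor of three. It again assumes a one-frame update for WN and a three-frame update for MN; the `DESIGN` table and the `conditions` generator are illustrative, not part of the original methods.

```python
from itertools import product

# Speeds (deg/s) per algorithm as described in the Methods; the interleave
# factor (frames between updates of the same dot) is an assumption.
DESIGN = {
    "WN": {"speeds": (12.0, 36.0), "interleave": 1},
    "MN": {"speeds": (4.0, 12.0), "interleave": 3},
}
REFRESH_RATES_HZ = (60.0, 120.0)


def conditions():
    """Yield (algorithm, refresh Hz, speed deg/s, dx deg, dt ms) for each cell."""
    for alg, refresh in product(DESIGN, REFRESH_RATES_HZ):
        spec = DESIGN[alg]
        dt_s = spec["interleave"] / refresh
        for speed in spec["speeds"]:
            yield alg, refresh, speed, round(speed * dt_s, 3), round(dt_s * 1000, 2)


if __name__ == "__main__":
    for row in conditions():
        print(*row)
```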

5.2. Results

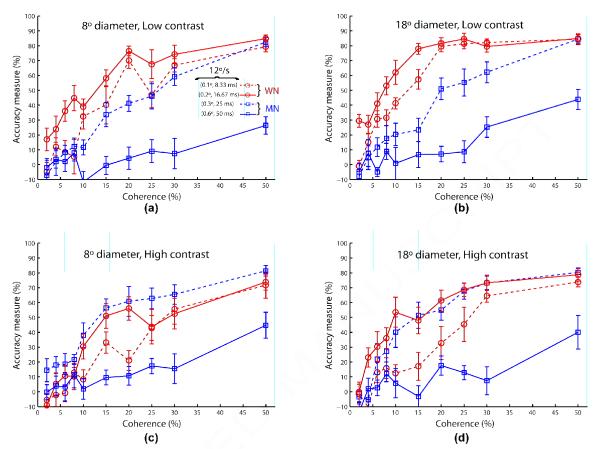

We quantified the data from Experiment 4 by performing, separately for each algorithm, a four-way repeated-measures ANOVA with Coherence × Contrast × Aperture × Displacement as factors. For algorithm WN, we found a highly significant effect of spatial displacement (F(1,7)=74.9, p<0.0001), a significant effect of contrast (F(1,7)=6.6, p<0.05), and significant interactions between contrast and spatial displacement (F(1,7)=7.5, p<0.01), and between contrast and aperture size (F(1,7)=5.33, p<0.05). In the ANOVA for algorithm MN, we found significant effects of displacement (F(1,7)=8.6, p<0.05) and contrast (F(1,7)=22.15, p<0.005), and a highly significant effect of aperture (F(1,7)=48.14, p<0.001), without any notable interactions.

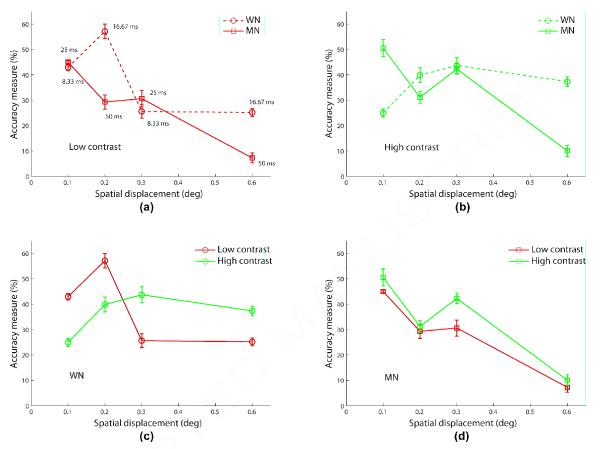

Figure 7 compares the coherence response functions of the two algorithms for the same speed (12°/s) but different underlying spatial and temporal displacements ([0.1°, 8.33 ms] and [0.2°, 16.67 ms] for algorithm WN; [0.3°, 25 ms] and [0.6°, 50 ms] for algorithm MN) in separate subplots for each aperture size and contrast condition. These results clearly show that direction estimation performance for algorithms WN and MN is not determined by speed, but depends more directly on the underlying spatial and temporal displacements.

Figure 7.

Experiment 4 results comparing the coherence response functions of the two algorithms WN (circle/blue) and MN (square/green) at the same speed (12°/s) but different underlying spatial and temporal displacements (blue/dashed: (0.1°, 8.33 ms); blue/solid: (0.2°, 16.67 ms); green/dashed: (0.3°, 25 ms); green/solid: (0.6°, 50 ms)) for each contrast and aperture size condition. Error bars represent standard error of mean. The legend for all panels is shown in (a). Fixed stimulus duration is 400 ms.

Given that speed is not a direct factor determining the perceptibility of RDM stimuli, we looked at how the underlying spatial and temporal displacements influence accuracy. In Figure 8, we plot performance averaged across all coherences and the two aperture sizes for both algorithms at each of the four spatial displacements (0.1°, 0.2°, 0.3°, 0.6°) under low (8a) and high (8b) contrast conditions. In these panels, performance corresponds between algorithms at 0.3° under both contrasts and at 0.1° under low contrast, whereas it differs more at 0.2° and 0.6° under either contrast. This divergence can be understood given the relatively large temporal displacement of 50 ms for algorithm MN at these spatial displacements. With the exception of 0.1° under high contrast, these results provide evidence that, for direction estimation, spatial displacement is the more decisive factor when temporal displacement is small (<< 50 ms). Data from panels (a) and (b) in Figure 8 are replotted in panels (c) and (d) for each algorithm separately. In the bottom row of Figure 8, we can make the interesting observation that when both spatial and temporal displacements are small, estimation performance is counterintuitively better for low contrast than for high contrast, indicating an interaction among contrast, spatial displacement and temporal displacement. We conclude that both spatial and temporal displacements are individually important in predicting how subjects perceive RDM stimuli. However, a systematic behavioral study of the degree of interaction between spatial and temporal displacements is beyond the scope of this article.

Figure 8.

Experiment 4 results showing performances, combined across all coherence levels and the two apertures, in response to algorithms WN (circle) and MN (square) as a function of matched spatial displacement under (a) low and (b) high contrast conditions. The temporal displacements of algorithms WN and MN are 8.33 ms and 25 ms, respectively, at spatial displacements of 0.1° and 0.3°, and 16.67 ms and 50 ms, respectively, at 0.2° and 0.6°; these values are shown near the corresponding data points in panel (a). The data in the first row are grouped by algorithm in panels (c) and (d) for WN and MN, respectively. Fixed stimulus duration is 400 ms.

5.3. Discussion

The results shown in Figure 7 are consistent with neurophysiological data showing that directionally selective cells in V1 and MT, on average, respond best to spatial displacements of around 0.26° and 0.31° (Dopt), respectively, and that the maximal spatial displacements to which they respond are around 0.59° and 0.67° (Dmax), respectively, as measured using a reverse correlation mapping technique with two-dot motion stimuli at 60 Hz (Pack et al., 2006). These Dmax values are also consistent with another study's finding that directional selectivity in V1 and MT for moving dot fields is, on average, lost at spatial displacements of 0.66° and 0.675°, respectively (Churchland, Priebe, & Lisberger, 2005). Maximal spatial displacements (Dmax) for direction discrimination of two-exposure (Braddick, 1974) and multi-exposure (Nakayama & Silverman, 1984) motion stimuli were first reported psychophysically. While Dopt and Dmax values will differ across parametric conditions, they would be expected to covary with the values found in Pack et al. (2006). Likewise, given that spatial displacement tuning falls off for displacements smaller and larger than Dopt, the performance for algorithm WN in Figure 7 is better at 0.2° than 0.1°, and that for algorithm MN is better at 0.3° than 0.6°.
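The ordinal predictions in the last sentence can be made explicit with a toy calculation. The sketch assumes, purely for illustration, that spatial displacement tuning falls off symmetrically on a logarithmic displacement axis around the population Dopt values quoted above from Pack et al. (2006); `preferred` is a hypothetical helper, not a model fitted to any data.

```python
import math

# Population-average preferred spatial displacements (deg) quoted in the text
# from Pack et al. (2006). The log-symmetric fall-off is an assumption.
DOPT_DEG = {"V1": 0.26, "MT": 0.31}


def log_distance(dx_deg, dopt_deg):
    """Distance of a displacement from the preferred value on a log axis."""
    return abs(math.log(dx_deg / dopt_deg))


def preferred(dx_a, dx_b, area="MT"):
    """Return whichever of two displacements lies closer to Dopt for `area`."""
    dopt = DOPT_DEG[area]
    return dx_a if log_distance(dx_a, dopt) <= log_distance(dx_b, dopt) else dx_b


if __name__ == "__main__":
    for area in DOPT_DEG:
        print(area, "WN:", preferred(0.1, 0.2, area), "MN:", preferred(0.3, 0.6, area))
```

With either set of Dopt values, the toy rule favors 0.2° over 0.1° for WN and 0.3° over 0.6° for MN, matching the direction of the differences in Figure 7.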

Pack et al. (2006) also quantified the degree of separability, called the separability index (SI), in the spatiotemporal receptive field structures of V1 and MT neurons. A neuron with an SI of 0 is perfectly tuned to speed, and an SI of 1 implies that the neuronal response depends separately on the underlying spatial and temporal displacements, and not their ratio. In other words, for an SI of 1 the spatiotemporal structure is realized by the product of individual spatial and temporal displacement tuning functions. Interestingly, the mean SI values for V1 and MT neurons reported in the above study are 0.71 and 0.7, respectively. The lack of “speed tuning” in the direction estimation performances found in the experiments reported here is very consistent with these SI values, and also with discrimination studies involving two-exposure (Baker & Braddick, 1985b: human) and multi-exposure (Kiorpes & Movshon, 2004: macaque) motion stimuli.
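To make the two limiting cases concrete, the sketch below defines a purely speed-tuned response (SI = 0) and a fully separable response (SI = 1) using hypothetical log-Gaussian tuning curves. It then evaluates both on the two WN conditions of Experiment 4 that share a speed of 12°/s. The preferred values and widths are arbitrary assumptions, and the code does not compute the SI itself as Pack et al. (2006) did.

```python
import math


def log_gauss(x, pref, width=0.7):
    """Hypothetical log-Gaussian tuning curve with peak value 1 at `pref`."""
    return math.exp(-0.5 * (math.log(x / pref) / width) ** 2)


def speed_tuned(dx_deg, dt_ms, pref_speed=10.0):
    """SI = 0 limit: response depends only on the ratio dx/dt (deg/s)."""
    return log_gauss(dx_deg / (dt_ms / 1000.0), pref_speed)


def separable(dx_deg, dt_ms, pref_dx=0.3, pref_dt=20.0):
    """SI = 1 limit: product of independent spatial and temporal tuning."""
    return log_gauss(dx_deg, pref_dx) * log_gauss(dt_ms, pref_dt)


# Two WN conditions from Experiment 4, both at 12 deg/s.
FAST_REFRESH = (0.1, 1000.0 / 120)  # 0.1 deg, 8.33 ms at 120 Hz
SLOW_REFRESH = (0.2, 1000.0 / 60)   # 0.2 deg, 16.67 ms at 60 Hz

if __name__ == "__main__":
    for label, model in (("speed-tuned", speed_tuned), ("separable", separable)):
        print(label, round(model(*FAST_REFRESH), 3), round(model(*SLOW_REFRESH), 3))
```

The speed-tuned model cannot tell the two conditions apart, whereas the separable model responds differently to them; behavioral differences between such equal-speed conditions are therefore what one would expect from mechanisms with SI values well above 0.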

Also, the finding that human estimation performance for algorithms WN and MN depends mainly on spatial rather than temporal displacement agrees with a similar dependence of cortical responses shown in other physiological studies (MT: Newsome, Mikami, & Wurtz, 1986; MST: Churchland, Huang, & Lisberger, 2007), and with how macaque discrimination sensitivities are tuned to spatial displacement (Kiorpes & Movshon, 2004).

It should be noted that given the different stimuli (vis-à-vis viewing duration, contrast, retinal eccentricity, etc.), tasks (direction estimation in this study vs. passive fixation), and species used in these studies (humans vs. macaques), the above comparisons between neurophysiology and behavior can only be qualitative; but they nonetheless provide an intuitive understanding of our data.

The interaction between contrast and spatial displacement, which is evident in Figure 8c, has recently been investigated in detail by Seitz, Pilly, and Pack (2008). They reported that the behavioral spatial displacement tuning, obtained from human subjects performing the direction estimation task, shifts towards smaller spatial displacements as stimulus contrast is reduced, and that this shift correlates strikingly, at a qualitative level, with a similar influence of contrast on speed tuning in macaque area MT (Pack, Hunter, & Born, 2005). Contrast (Eagle & Rogers, 1997; Seitz, Pilly, & Pack, 2008) and mean luminance (Dawson & Di Lollo, 1990; Lankheet et al., 2000) have been found to have opposite effects on Dmax. It will be interesting to test whether reducing mean luminance at a fixed contrast improves motion performance for stimuli involving larger spatial displacements. In this regard, it would be preferable to measure a well-sampled tuning function (Seitz, Pilly, & Pack, 2008), rather than just the upper or lower limit as was done in most previous psychophysical studies (Dawson & Di Lollo, 1990; Eagle & Rogers, 1997; Nakayama & Silverman, 1984; etc.), because, for example, two tuning curves with similar upper and lower limits can differ in the intermediate parameter range.

6. General Discussion

The main contributions of this work are to directly compare the ability of human subjects to estimate the direction of random dot motion stimuli driven by four commonly used RDM algorithms under various parameters of viewing duration, speed, contrast, aperture size, spatial displacement and temporal displacement, and also to explain these results as behavioral correlates of pertinent neurophysiological data particularly from area MT.

The first result is that we did not observe a consistent ordinal relationship among the algorithms. Performance can differ greatly across the different motion algorithms as various parameters are changed. Generally, subjects estimated the direction best for algorithm BM (Brownian Motion), and the relative performances for the other algorithms were shown to depend on parameters such as contrast, aperture size, spatial displacement and temporal displacement, but not speed. Accordingly, in some conditions subjects performed worst for algorithm WN (White Noise), and in other conditions for algorithms MN (Movshon/Newsome) or LL (Limited Lifetime). Accuracy differences between algorithms WN and MN were dramatic for matched speeds, but small for matched spatial displacements unless the temporal displacement of either algorithm was large (~50 ms). Also, subjects performed roughly the same in response to algorithms MN and LL under the conditions that we tested.

This lack of significant performance differences between MN and LL is consistent with previous comparative studies. In particular, Williams and Sekuler (1984) and Snowden and Braddick (1989) used multi-frame stimuli to compare discrimination performance in a 2AFC task under two conditions: same (the signal dots are fixed for the entire sequence) and different (Williams & Sekuler, 1984: signal dots are chosen afresh in each frame, which is the same as algorithm WN; Snowden & Braddick, 1989: the signal dots in each frame get the least preference to be chosen as signal in the next frame, which is the non-interleaved version of algorithm LL). Similar to our study, they found no significant difference in performance between the two conditions. The conclusion hence is that for both direction discrimination and estimation, our motion processing system does not take much advantage of long-lived signal dots, at least for a relatively dense motion stimulus like the ones used in the present study. What matters most is the proportion of dots moving in the signal direction from one frame to the next in the sequence, and not which ones. This suggests that the directional percept in response to RDM stimuli is indeed determined by global motion processing mechanisms.
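The point that only the per-frame proportion of signal dots matters can be illustrated with a hypothetical sketch of the three signal-selection rules described above: a fixed set (same), a fresh random set on every frame (different, as in WN), and a rule that gives the least preference to the previous frame's signal dots (the non-interleaved LL variant). The function names, dot counts and the fallback used when too few fresh dots remain are assumptions for illustration, not the code of any of the cited studies.

```python
import random


def choose_signal(n_dots, coherence, policy, previous, rng):
    """Return the set of dot indices that move coherently on this frame."""
    if policy not in ("same", "different", "ll"):
        raise ValueError(f"unknown policy: {policy!r}")
    n_signal = round(coherence * n_dots)
    if policy == "same" and previous:
        # Fixed signal set: keep whatever was chosen on the first frame.
        return set(previous)
    if policy == "ll" and previous:
        # Least preference for last frame's signal dots: draw from the other
        # dots first and reuse old signal dots only if there are too few.
        fresh = [i for i in range(n_dots) if i not in previous]
        rng.shuffle(fresh)
        picked = fresh[:n_signal]
        if len(picked) < n_signal:
            old = sorted(previous)
            rng.shuffle(old)
            picked += old[: n_signal - len(picked)]
        return set(picked)
    # "different" policy, and the first frame of every policy.
    return set(rng.sample(range(n_dots), n_signal))


def signal_sequence(n_frames, n_dots=100, coherence=0.25, policy="different", seed=0):
    """Signal-dot sets for successive frames under one selection policy."""
    rng = random.Random(seed)
    frames, previous = [], set()
    for _ in range(n_frames):
        previous = choose_signal(n_dots, coherence, policy, previous, rng)
        frames.append(previous)
    return frames


if __name__ == "__main__":
    for policy in ("same", "different", "ll"):
        frames = signal_sequence(5, policy=policy)
        overlap = [len(a & b) for a, b in zip(frames, frames[1:])]
        print(policy, "signal dots per frame:", len(frames[0]), "overlap:", overlap)
```

Under all three policies every frame carries the same number of signal dots; only the identity of those dots over time differs.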

Another comparative study (Scase, Braddick, & Raymond, 1996) primarily compared three motion algorithms (random position: each noise dot is given a random direction and displacement; random walk: each noise dot is given a random direction, but the same displacement as signal dots; random direction: similar to random walk but each dot moves only in its designated direction, which is randomly assigned at the beginning, whenever it is chosen to be noise) in a 2AFC task, under same and different conditions as in Williams and Sekuler (1984), with nominal variations in dot density and speed. Note that algorithms random position and random walk under the different condition correspond to algorithms WN and BM, respectively. They also found no significant overall performance differences between same and different conditions, and their practical conclusion was that the motion algorithms did not differ much in overall discriminability. But, as in our study, they did report WN <* BM; i.e., subjects discriminated the direction better for random walk than random position in the different condition. Within the framework of the model described in Experiment 1, this result, which holds for estimation as well, is intuitive because algorithm BM causes the least directional ambiguity in the short-range filter stage when compared to the other algorithms, thereby eliciting the most effective motion capture of incoherent directional signals at the global motion stage. Another reason could be the lack of speed clutter, which results in less mutual inhibition from global motion cells tuned to non-stimulus speeds, leading to higher firing rates in the winner population coding the signal direction and speed. This improved selection in the neural code of, say, area MT directly results in a more accurate directional estimate.
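The difference between the noise-dot rules of the random position (WN) and random walk (BM) algorithms can be sketched as a single frame update. This is a minimal illustration under stated assumptions: dots live in a square field, aperture wrap-around and signal-dot selection are omitted, and the `update` function and its parameters are hypothetical.

```python
import math
import random


def update(dots, signal_idx, step, direction_deg, rule, field, rng):
    """Advance every dot by one frame.

    dots: list of (x, y) positions in deg; signal_idx: set of signal-dot indices.
    Signal dots step `step` deg in `direction_deg`. Noise dots follow `rule`:
      "random_walk"     -> same step length, random direction (BM)
      "random_position" -> replotted anywhere in the field (WN)
    Aperture wrap-around is omitted for brevity.
    """
    theta = math.radians(direction_deg)
    moved = []
    for i, (x, y) in enumerate(dots):
        if i in signal_idx:
            moved.append((x + step * math.cos(theta), y + step * math.sin(theta)))
        elif rule == "random_walk":
            phi = rng.uniform(0.0, 2.0 * math.pi)
            moved.append((x + step * math.cos(phi), y + step * math.sin(phi)))
        elif rule == "random_position":
            moved.append((rng.uniform(0.0, field), rng.uniform(0.0, field)))
        else:
            raise ValueError(f"unknown rule: {rule!r}")
    return moved


if __name__ == "__main__":
    rng = random.Random(0)
    dots = [(rng.uniform(0, 8), rng.uniform(0, 8)) for _ in range(100)]
    signal = set(rng.sample(range(100), 25))   # 25% coherence
    for rule in ("random_walk", "random_position"):
        new = update(dots, signal, 0.14, 0.0, rule, 8.0, rng)
        steps = [math.hypot(b[0] - a[0], b[1] - a[1]) for a, b in zip(dots, new)]
        print(rule, "max step:", round(max(steps), 2))
```

Under `random_walk` every dot, signal or noise, travels the same distance per frame, so only direction is noisy. Under `random_position` noise dots also jump by arbitrary amounts, which introduces the speed clutter discussed above.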

In contrast to these previous studies, the algorithms considered in our study did not all share the same spatial and temporal displacements between consecutive signal dot flashes. Thus by including the now dominant RDM algorithm MN, and its variant LL, we were able to examine the effects of spatial and temporal displacements, especially their interactions with contrast, and how they are affected by aperture size or retinal eccentricity. We also provide a unified account of our results based on recent neurophysiological and previous psychophysical studies.

Comparison of performance under various parametric and algorithmic conditions in our study primarily suggests that spatial displacement, and not speed, explains much of the variance in performance between the motion algorithms WN and MN (or LL). Our data give evidence of a spatial displacement tuning function that broadens, with a peak shift towards larger spatial displacements, as aperture size is increased (see Discussion of Experiment 3), and that shifts towards smaller spatial displacements as contrast is lowered (see Discussion of Experiment 4). It has been suggested that behavioral estimates of motion direction may be obtained by computing the directional vector average of directionally tuned cells in area MT (Nichols & Newsome, 2002). Given the spatiotemporal displacement tuning properties of MT cells (Churchland, Priebe, & Lisberger, 2005; Mikami, Newsome, & Wurtz, 1986; Newsome, Mikami, & Wurtz, 1986; Pack et al., 2006), an accurate model of motion direction estimation must take into account not only the spatial and temporal displacements between consecutive signal dot presentations in random dot motion stimuli, but also how they interact with contrast and aperture size.

An example of how the spatial displacement tuning function may vary with contrast and aperture size is shown in Figure 9. This cartoon helps explain why the relative order of performance for algorithms WN and MN (or LL) changes under different parametric conditions, and why the parameters have different effects on each algorithm. For example, subjects estimate the direction for algorithm MN better than for WN at 12°/s speed under high contrast with an 18° aperture diameter (WN <* MN in Experiment 1), but the relationship reverses (MN <* WN in Experiment 2) with an 8° aperture diameter. At 12°/s, the spatial displacement of MN (0.42°) is closer to Dopt for the 18° aperture than that of WN (0.14°), and this reverses for the 8° aperture, as can be seen in Figure 9b. At 4°/s, subjects perform better for MN than for WN under both contrasts in either aperture, because the corresponding spatial displacement of MN (0.14°) is nearer to the Dopt values in all four conditions than that of WN (0.047°). Figure 9a also suggests why lowering contrast surprisingly improves estimation accuracy only for algorithm WN in Experiment 3 at speeds of 4°/s and 12°/s, which yield the smaller spatial displacements of 0.047° and 0.14°, respectively.
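The qualitative argument built on Figure 9b can be turned into a toy calculation. The sketch below assumes a log-Gaussian spatial displacement tuning curve whose peak and width depend on aperture size. The specific `dopt` and `width` values are invented to mimic the cartoon (a broader curve peaking at larger displacements for the 18° aperture) and are not estimates from the data. With the 85 Hz displacements of WN (one-frame step) and MN (three-frame step), it reproduces the orderings described above.

```python
import math

# Illustrative tuning parameters only (not fitted): the 18 deg aperture curve
# is broader and peaks at a larger displacement, as in the Figure 9b cartoon.
TUNING = {
    "8 deg aperture": {"dopt": 0.20, "width": 0.6},
    "18 deg aperture": {"dopt": 0.35, "width": 0.9},
}


def tuning(dx_deg, dopt, width):
    """Hypothetical log-Gaussian spatial displacement tuning, peak value 1."""
    return math.exp(-0.5 * (math.log(dx_deg / dopt) / width) ** 2)


def better_algorithm(speed, condition, refresh_hz=85.0):
    """Predict whether WN (1-frame step) or MN (3-frame step) drives more."""
    p = TUNING[condition]
    dx = {"WN": speed / refresh_hz, "MN": 3 * speed / refresh_hz}
    resp = {alg: tuning(d, p["dopt"], p["width"]) for alg, d in dx.items()}
    return max(resp, key=resp.get)


if __name__ == "__main__":
    for condition in TUNING:
        for speed in (4.0, 12.0):
            print(condition, f"{speed:.0f} deg/s ->", better_algorithm(speed, condition))
```

A low-contrast entry with a smaller `dopt` would sketch panel (a) in the same way. The toy model only shows that a fixed threefold ratio between the two algorithms' displacements can favor either one, depending on where the tuning peak sits.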

7. Conclusions

Our results point to the importance of carefully choosing, and accurately reporting, the algorithmic details and parameters of RDM stimuli used in vision research. We found some dramatic differences in performance between motion displays when changing algorithm and parameters. We note that contrast, spatial displacement and temporal displacement, which are often not reported in papers, have a fundamental impact on performance, whereas speed, which is usually reported, explains little of the variance in performance. The results offer novel insight into how directional grouping occurs in the brain for an estimation task, and provide new constraints and challenges for existing mechanistic models of motion direction perception.

Supplementary Material

Acknowledgements

PKP was supported by NIH (R01-DC02852), NSF (IIS-0205271 and SBE-0354378) and ONR (N00014-01-1-0624). ARS was supported by NSF (BCS-0549036) and NIH (R21-EY017737). We would like to thank Joshua Gold and Christopher Pack for helpful comments, and Joshua Gold for sharing the code used in generating some of the motion stimuli.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

8. References

1. Adelson EH, Bergen JR. Spatiotemporal energy models for the perception of motion. J Opt Soc Am A. 1985;2(2):284–299. doi: 10.1364/josaa.2.000284.

2. Ball K, Sekuler R. A specific and enduring improvement in visual motion discrimination. Science. 1982;218(4573):697–698. doi: 10.1126/science.7134968.

3. Baker CL, Jr., Braddick OJ. The basis of area and dot number effects in random dot motion perception. Vision Res. 1982;22(10):1253–1259. doi: 10.1016/0042-6989(82)90137-7.

4. Baker CL, Jr., Braddick OJ. Eccentricity-dependent scaling of the limits for short-range apparent motion perception. Vision Res. 1985a;25:803–812. doi: 10.1016/0042-6989(85)90188-9.

5. Baker CL, Jr., Braddick OJ. Temporal properties of the short-range process in apparent motion. Perception. 1985b;14(2):181–192. doi: 10.1068/p140181.

6. Barlow HB, Levick WR. The mechanism of directionally selective units in the rabbit’s retina. J Physiol. 1965;178:477–504. doi: 10.1113/jphysiol.1965.sp007638.

7. Benton CP, Curran W. The dependence of perceived speed upon signal intensity. Vision Res. 2009;49:284–286. doi: 10.1016/j.visres.2008.10.017.

8. Braddick O. A short-range process in apparent motion. Vision Res. 1974;14(7):519–527. doi: 10.1016/0042-6989(74)90041-8.

9. Bradley DC, Qian N, Andersen RA. Integration of motion and stereopsis in middle temporal cortical area of macaques. Nature. 1995;373(6515):609–611. doi: 10.1038/373609a0.

10. Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10(4):433–436.

11. Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13(1):87–100. doi: 10.1017/s095252380000715x.

12. Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12(12):4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

13. Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Vis Neurosci. 1993;10(6):1157–1169. doi: 10.1017/s0952523800010269.

14. Chey J, Grossberg S, Mingolla E. Neural dynamics of motion grouping: From aperture ambiguity to object speed and direction. J Opt Soc Am A. 1997;14:2570–2594.

15. Churchland MM, Priebe NJ, Lisberger SG. Comparison of the spatial limits on direction selectivity in visual areas MT and V1. J Neurophysiol. 2005;93(3):1235–1245. doi: 10.1152/jn.00767.2004.

16. Churchland AK, Huang X, Lisberger SG. Responses of neurons in the medial superior temporal visual area to apparent motion stimuli in macaque monkeys. J Neurophysiol. 2007;97(1):272–282. doi: 10.1152/jn.00941.2005.

17. Dawson M, Di Lollo V. Effects of adapting luminance and stimulus contrast on the temporal and spatial limits of short-range motion. Vision Res. 1990;30(3):415–429. doi: 10.1016/0042-6989(90)90083-w.

18. Derrington AM, Allen HA, Delicato LS. Visual mechanisms of motion analysis and motion perception. Annu Rev Psychol. 2004;55:181–205. doi: 10.1146/annurev.psych.55.090902.141903.

19. Eagle RA, Rogers BJ. Effects of dot density, patch size and contrast on the upper spatial limit for direction discrimination in random-dot kinematograms. Vision Res. 1997;37(15):2091–2102. doi: 10.1016/s0042-6989(96)00153-8.

20. Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

21. Grossberg S, Mingolla E, Viswanathan L. Neural dynamics of motion integration and segmentation within and across apertures. Vision Res. 2001;41(19):2521–2553. doi: 10.1016/s0042-6989(01)00131-6.

22. Grossberg S, Pilly PK. Temporal dynamics of decision-making during motion perception in the visual cortex. Vision Res. 2008;48(12):1345–1373. doi: 10.1016/j.visres.2008.02.019.

23. Kiorpes L, Movshon JA. Development of sensitivity to visual motion in macaque monkeys. Vis Neurosci. 2004;21(6):851–859. doi: 10.1017/S0952523804216054.

24. Krekelberg B, van Wezel RJ, Albright TD. Interactions between speed and contrast tuning in the middle temporal area: Implications for the neural code for speed. J Neurosci. 2006;26:8988–8998. doi: 10.1523/JNEUROSCI.1983-06.2006.

25. Lankheet MJ, van Doorn AJ, Bouman MA, van de Grind WA. Motion coherence detection as a function of luminance level in human central vision. Vision Res. 2000;40(26):3599–3611. doi: 10.1016/s0042-6989(00)00187-5.

26. Law CT, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat Neurosci. 2008;11(4):505–513. doi: 10.1038/nn2070.

27. Livingstone MS, Conway BR. Contrast affects speed tuning, space-time slant, and receptive-field organization of simple cells in macaque V1. J Neurophysiol. 2007;97(1):849–857. doi: 10.1152/jn.00762.2006.

28. Mikami A, Newsome WT, Wurtz RH. Motion selectivity in macaque visual cortex. II. Spatiotemporal range of directional interactions in MT and V1. J Neurophysiol. 1986;55:1328–1339. doi: 10.1152/jn.1986.55.6.1328.

29. Nadler JW, Angelaki DE, DeAngelis GC. A neural representation of depth from motion parallax in macaque visual cortex. Nature. 2008;452(7187):642–645. doi: 10.1038/nature06814.

30. Nakayama K, Silverman GH. Temporal and spatial characteristics of the upper displacement limits for motion in random dots. Vision Res. 1984;24(4):293–299. doi: 10.1016/0042-6989(84)90054-3.

31. Newsome WT, Mikami A, Wurtz RH. Motion selectivity in macaque visual cortex. III. Psychophysics and physiology of apparent motion. J Neurophysiol. 1986;55(6):1340–1351. doi: 10.1152/jn.1986.55.6.1340.

32. Nichols MJ, Newsome WT. Middle temporal visual area microstimulation influences veridical judgments of motion direction. J Neurosci. 2002;22(21):9530–9540. doi: 10.1523/JNEUROSCI.22-21-09530.2002.

33. Pack CC, Conway BR, Born RT, Livingstone MS. Spatiotemporal structure of nonlinear subunits in macaque visual cortex. J Neurosci. 2006;26(3):893–907. doi: 10.1523/JNEUROSCI.3226-05.2006.

34. Pack CC, Hunter JN, Born RT. Contrast dependence of suppressive influences in cortical area MT of alert macaque. J Neurophysiol. 2005;93(3):1809–1815. doi: 10.1152/jn.00629.2004.

35. Palmer J, Huk AC, Shadlen MN. The effect of stimulus strength on the speed and accuracy of a perceptual decision. J Vis. 2005;5(5):376–404. doi: 10.1167/5.5.1.

36. Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10(4):437–442.

37. Peterson MR, Li B, Freeman RD. Direction selectivity of neurons in the striate cortex increases as stimulus contrast is decreased. J Neurophysiol. 2006;95:2705–2712. doi: 10.1152/jn.00885.2005.

38. Purushothaman G, Bradley DC. Neural population code for fine perceptual decisions in area MT. Nat Neurosci. 2005;8(1):99–106. doi: 10.1038/nn1373.

39. Reichardt W. Autocorrelation, a principle for the evaluation of sensory information by the central nervous system. In: Rosenblith WA, editor. Sensory Communication. Wiley Press; New York, NY: 1961. pp. 303–317.

40. Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J Neurosci. 2002;22(21):9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002.

41. Scase MO, Braddick OJ, Raymond JE. What is noise for the motion system? Vision Res. 1996;36(16):2579–2586. doi: 10.1016/0042-6989(95)00325-8.

42. Seitz AR, Nanez JE, Holloway SR, Koyama S, Watanabe T. Seeing what is not there shows the costs of perceptual learning. Proc Natl Acad Sci U S A. 2005;102(25):9080–9085. doi: 10.1073/pnas.0501026102.

43. Seitz AR, Pilly PK, Pack CC. Interactions between contrast and spatial displacement in visual motion processing. Curr Biol. 2008;18(19):R904–R906. doi: 10.1016/j.cub.2008.07.065.

44. Seitz AR, Watanabe T. Psychophysics: Is subliminal learning really passive? Nature. 2003;422(6927):36. doi: 10.1038/422036a.

45. Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J Neurophysiol. 2001;86(4):1916–1936. doi: 10.1152/jn.2001.86.4.1916.

46. Snowden RJ, Braddick OJ. Extension of displacement limits in multiple-exposure sequences of apparent motion. Vision Res. 1989;29(12):1777–1787. doi: 10.1016/0042-6989(89)90160-0.

47. Tadin D, Lappin JS, Gilroy LA, Blake R. Perceptual consequences of centre-surround antagonism in visual motion processing. Nature. 2003;424(6946):312–315. doi: 10.1038/nature01800.

48. Thompson P, Brooks K, Hammett ST. Speed can go up as well as down at low contrast: implications for models of motion perception. Vision Res. 2006;46(6–7):782–786. doi: 10.1016/j.visres.2005.08.005.

49. Watamaniuk SN, Sekuler R. Temporal and spatial integration in dynamic random-dot stimuli. Vision Res. 1992;32(12):2341–2347. doi: 10.1016/0042-6989(92)90097-3.

50. Watanabe T, Nanez JE, Sr., Koyama S, Mukai I, Liederman J, Sasaki Y. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nat Neurosci. 2002;5(10):1003–1009. doi: 10.1038/nn915.

51. Williams DW, Sekuler R. Coherent global motion percepts from stochastic local motions. Vision Res. 1984;24(1):55–62. doi: 10.1016/0042-6989(84)90144-5.

52. Zaksas D, Pasternak T. Directional signals in the prefrontal cortex and in area MT during a working memory for visual motion task. J Neurosci. 2006;26(45):11726–11742. doi: 10.1523/JNEUROSCI.3420-06.2006.

53. Zohary E, Celebrini S, Britten KH, Newsome WT. Neuronal plasticity that underlies improvement in perceptual performance. Science. 1994;263(5151):1289–1292. doi: 10.1126/science.8122114.
