Abstract

The drift-diffusion model (DDM) describes decision making in simple, two-alternative forced choice (2AFC) tasks. It accurately fits response-time distributions and implements an optimal decision procedure for stationary 2AFC tasks: for a given accuracy, no other model achieves faster average response times. The value of a decision threshold applied to accumulated information also determines a speed-accuracy tradeoff (SAT) for the DDM, thereby accounting for a ubiquitous feature of human performance in speeded response tasks. However, little is known about how participants settle on particular tradeoffs. One possibility is that they select SATs that maximize the rate of earned rewards. For the DDM, there exist unique, reward-rate-maximizing values for its threshold and starting point parameters in free response tasks that reward correct responses (Bogacz et al., 2006). These optimal values vary as a function of response-stimulus interval, prior stimulus probability and relative reward magnitude for correct responses. We tested the resulting quantitative predictions regarding response time, accuracy and response bias under these task manipulations and found that grouped data conformed well to the predictions of an optimally parameterized DDM.

When an organism extracts signals out of noisy inputs from the environment, it faces a fundamental tradeoff: should it spend more time observing a stimulus to increase certainty about its identity and the appropriate response to it, or should it act more quickly at the cost of greater inaccuracy? Such a tradeoff between speed and accuracy has long been recognized as a ubiquitous feature of human behavior in speeded response tasks (Fitts, 1966; Garrett, 1922; Pachella & Pew, 1968; Schouten & Bekker, 1967; Wickelgren, 1977). Yet the factors that lead to a particular tradeoff are still not well understood.

Clues about the nature of speed-accuracy tradeoff (SAT) selection have emerged from theoretical and behavioral research on decision making in simple, two-alternative forced choice (2AFC) tasks, which require participants to choose one or the other alternative on every trial (e.g., Audley & Pike, 1965; Busemeyer & Townsend, 1993; LaBerge, 1962; Laming, 1968; Link, 1975; Link & Heath, 1975; Ratcliff, 1978; Smith & Vickers, 1989; Stone, 1960; Usher & McClelland, 2001; Vickers, 1970). Other clues come from physiological research on the neural mechanisms that may underlie this type of decision making (e.g., Carpenter & Williams, 1995; Gold & Shadlen, 2002; Hanes & Schall, 1996; Ratcliff, Cherian, & Segraves, 2003; Roitman & Shadlen, 2002; Schall, 2001; Shadlen & Newsome, 2001; Smith & Ratcliff, 2004). In particular, a large body of evidence (e.g., Palmer, Huk, & Shadlen, 2005; Ratcliff & Rouder, 2000; Ratcliff, Thapar, Gomez, & McKoon, 2004; Voss, Rothermund, & Voss, 2004) now strongly suggests that decision making in 2AFC tasks can be accurately described by the drift-diffusion model (DDM) (Ratcliff, 1978), for which the SAT can be controlled by adjusting a single parameter (the decision threshold parameter, described below).

In its simplest form, the DDM is an application of the sequential probability ratio test (SPRT) to a decision-making task (Stone, 1960). The SPRT (Wald, 1945) is the optimal algorithm for two-alternative hypothesis testing when the likelihoods of data samples under each hypothesis are known and stationary (constant from trial to trial): on average, the SPRT is fastest to reach a decision for a given level of accuracy, and most accurate for a given response time (RT), relative to any other procedure (Wald & Wolfowitz, 1948).

In sequential sampling models (which include the SPRT as a special case), evidence favoring each of the two alternatives is added to any prior expectations by repeatedly sampling a given stimulus. When the sampling happens continuously, the iterative log-likelihood-ratio computation of the SPRT is equivalent to a drift-diffusion (DD) process (Feller, 1968), which we discuss in the following section. If the task involves free responding, in which participants can respond at any time after stimulus onset, then the corresponding response is made when the evidence favoring one alternative crosses a decision threshold. The choice of threshold determines the SAT: lower thresholds permit faster responses, but at the expense of less accumulation of information and therefore less accurate performance; higher thresholds support greater accuracy but at the expense of slower responding. The choice of starting point determines the response bias: if the starting point of evidence accumulation is closer to one response's threshold, then the probability of that response increases.
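The log-likelihood-ratio accumulation described above can be illustrated with a discrete-time SPRT. The following is a minimal sketch under assumptions of our own, not the stimulus model of this study: each sample is Gaussian with mean +μ under one hypothesis and −μ under the other, with a common standard deviation σ, so each sample adds 2μx/σ² to the log-likelihood ratio. The prior log odds set the starting point, and ±a is a symmetric threshold. The function names and parameter values are hypothetical.

```python
import math
import random


def sprt_trial(mu, sigma, a, rng, prior_plus=0.5, truth_plus=True, max_samples=100_000):
    """One SPRT trial: H+ says samples ~ N(+mu, sigma^2), H- says N(-mu, sigma^2).

    The log-likelihood ratio starts at the prior log odds and the trial stops at
    the first crossing of +a or -a. Returns (chose_plus, n_samples).
    """
    llr = math.log(prior_plus / (1.0 - prior_plus))
    mean = mu if truth_plus else -mu
    for n in range(1, max_samples + 1):
        x = rng.gauss(mean, sigma)
        # log N(x; +mu, sigma) - log N(x; -mu, sigma) = 2 * mu * x / sigma**2
        llr += 2.0 * mu * x / sigma ** 2
        if llr >= a:
            return True, n
        if llr <= -a:
            return False, n
    raise RuntimeError("no decision within max_samples")


def run_block(a, n_trials=2000, mu=0.5, sigma=1.0, seed=0):
    """Accuracy and mean number of samples for equally likely stimuli."""
    rng = random.Random(seed)
    correct, samples = 0, 0
    for _ in range(n_trials):
        truth_plus = rng.random() < 0.5
        chose_plus, n = sprt_trial(mu, sigma, a, rng, truth_plus=truth_plus)
        if chose_plus == truth_plus:
            correct += 1
        samples += n
    return correct / n_trials, samples / n_trials


for a in (1.0, 3.0):
    acc, mean_n = run_block(a)
    print(f"threshold a={a}: accuracy={acc:.3f}, mean samples={mean_n:.1f}")
```

Raising a should increase accuracy at the cost of more samples per decision, which is the SAT in its discrete form.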

The drift parameter of the DDM is equivalent to the average rate at which information accumulates. If drift is determined by the logarithm of the stimulus likelihood ratios (and not modulated, for example, by strategic control processes), then the conditions of the SPRT-optimality theorem apply, and no other model can make decisions faster on average than the DDM, for a given level of accuracy. But what level of accuracy — and therefore, which point along the model's SAT function — should be preferred? And how should prior expectations be incorporated into the decision process?

The SPRT does not specify how to select a particular SAT (by specifying a threshold value) or a particular response bias (by specifying a starting point), and little is known about how human participants do so. One possibility is that they seek to maximize the number of correct responses per unit time, especially in fixed-duration tasks in which faster responding leads to a greater total number of trials. This is equivalent to maximizing the rate of reward when correct responses earn rewards. Reward maximizing behavior has long been used in signal detection theory to construct receiver-operating-characteristic (ROC) curves (Tanner & Swets, 1954), and the effectiveness and logical consistency of payoffs as feedback in human behavioral research in general has been recognized at least since the 1960s (Edwards, 1961). Recent theoretical work has demonstrated that, for any given set of task parameters, there is a unique, optimal combination of threshold and starting point for the DDM that will maximize the expected reward rate (Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006). This result can be used to make quantitative predictions about the way in which task factors should influence SAT and response bias. In this study, we sought to test these predictions and determine whether human participants adjust SATs and response biases in order to maximize reward rate.

Our study focuses on three factors in particular: the average response-stimulus interval (RSI), which determines the pace of the task, the prior probability of each of the two stimuli, and the relative reward associated with correct responses to each stimulus. Bogacz et al. (2006) examined the influence of these variables on the optimal threshold and the optimal starting point of evidence accumulation for the DDM. In the section that follows, we briefly review this theoretical work, and the behavioral predictions it entails. We then describe three experiments conducted to test these predictions. Their results provide new support for the DDM as a model of human decision making performance in 2AFC tasks; additionally, they support the hypothesis that human participants adapt response thresholds and starting points in order to maximize rewards, as predicted by a reward-rate-optimized DDM.

The Drift Diffusion Model

We now briefly describe the DDM and the quantitative RT and accuracy predictions that we test in our experiments.

A drift-diffusion (DD) process is the limiting case of a random walk in which the time between steps becomes vanishingly small (Feller, 1968). Technically, it is defined by the simple stochastic differential equation:

| (1) |

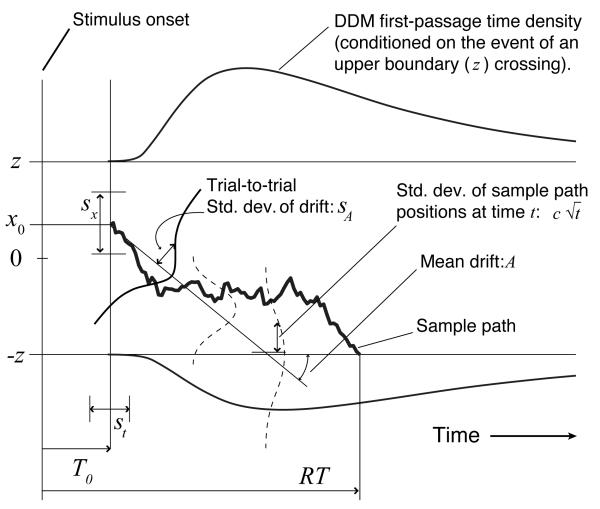

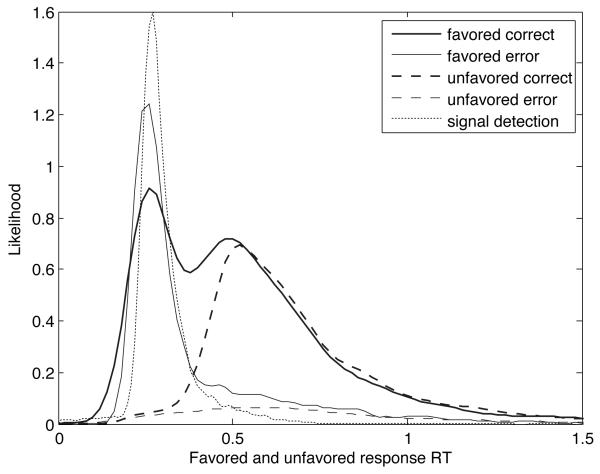

Here x represents the net evidence accumulated in favor of one of the two alternatives (and −x evidence in favor of the other); the drift A represents the discriminability of the stimulus favoring one alternative (with −A favoring the other, assuming equal discriminabilities); and c weights the influence of a Wiener (Brownian motion) process W, which represents the cumulative effect on x of white noise in the stimulus¹ (see Fig. 1). A sample path of the process (i.e., a particular random walk trajectory) begins with x at a specified starting point x0, which can be taken to represent the decision maker's prior belief about the relative likelihood of each stimulus type. It ends when x first reaches either threshold, +z or −z. This ‘first-passage’ across a threshold defines the decision time (DT) of the process. In fitting to empirical data, an additional residual latency component T0 (reflecting sensory and motor processes unrelated to the decision itself) is added to DT to derive the predicted RT: RT = DT + T0.

Figure 1.

Parameters, first-passage density and sample path for the extended drift-diffusion model (DDM). Parameters of the DDM are labeled according to the terminology of Bogacz et al. (2006); see Appendix A for a translation into the terminology of Ratcliff and colleagues (e.g., Ratcliff & Rouder, 1998).
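A sample path of Eq. 1 can be approximated with the Euler-Maruyama scheme: over a step of length dt, x changes by A·dt plus a Gaussian increment with standard deviation c·√dt. The sketch below is a minimal illustration, not the fitting procedure used in this study; it assumes the pooled-fit values of A, c and T0 from Table 1 (time in seconds), an arbitrary step size of 1 msec, and hypothetical function names. Raising z should lower the proportion of errors and lengthen mean RT, reproducing the SAT described earlier.

```python
import math
import random


def simulate_ddm(A, c, z, rng, x0=0.0, dt=1e-3, max_time=10.0):
    """Euler-Maruyama sample path of dx = A dt + c dW from x0 to the first
    crossing of +z or -z. Returns (hit_upper, decision_time_in_seconds)."""
    x, t = x0, 0.0
    noise_sd = c * math.sqrt(dt)  # std dev of c * dW over one step of length dt
    while t < max_time:
        x += A * dt + noise_sd * rng.gauss(0.0, 1.0)
        t += dt
        if x >= z:
            return True, t
        if x <= -z:
            return False, t
    raise RuntimeError("no threshold crossing within max_time")


def summarize(A, c, z, T0, n_trials=500, seed=1):
    """Proportion of errors and mean RT (DT + T0). With A > 0 the correct
    response is the +z crossing, so -z crossings are counted as errors."""
    rng = random.Random(seed)
    errors, total_rt = 0, 0.0
    for _ in range(n_trials):
        hit_upper, decision_time = simulate_ddm(A, c, z, rng)
        if not hit_upper:
            errors += 1
        total_rt += decision_time + T0
    return errors / n_trials, total_rt / n_trials


# Pooled-fit values from Table 1 (T0 = 345.47 msec expressed in seconds).
A, c, T0 = 0.17348, 0.1, 0.34547
for z in (0.03, 0.06, 0.09):
    er, rt = summarize(A, c, z, T0)
    print(f"z={z:.2f}: proportion of errors={er:.3f}, mean RT={rt:.3f} s")
```

Because crossings are only checked at discrete steps, the simulated decision times slightly overestimate the continuous-time values; shrinking dt reduces this bias at the cost of run time.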

For the DDM with starting point equidistant from both thresholds,² expected decision time (denoted ⟨DT⟩) and the expected proportion of errors (denoted ⟨ER⟩) depend only on the signal-to-noise ratio A/c and the threshold-to-signal ratio z/A, as described by the following analytic expressions (Busemeyer & Townsend, 1992; cf. Bogacz et al., 2006 and Gardiner, 2004):

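$$\langle DT\rangle = \frac{z}{A}\tanh\!\left(\frac{Az}{c^2}\right) \tag{2}$$

$$\langle ER\rangle = \frac{1}{1 + e^{2Az/c^2}} \tag{3}$$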

This allows quantitative predictions to be made about ⟨DT⟩ and ⟨ER⟩ for a given drift A, noise level c, and threshold z. Drift and noise reflect the influence of two primary factors: the intrinsic discriminability of the stimulus in the environment, and the signal-to-noise properties of the internal processes responsible for transducing, encoding, and attending to the stimulus. The former can be experimentally manipulated, and the latter is frequently assumed to be relatively stable for motivated performance within a given task condition. Accordingly, A and c can be estimated for a particular stimulus and individual. What is less clear is the basis on which decision makers choose the threshold z and the starting point x0: that is, how they choose to trade off speed against accuracy, and how they choose a response bias (if any). We test the hypothesis that participants make decisions using a DD process and that they parameterize the process so as to maximize reward rate, under the assumption of a physically unavoidable upper bound on the signal-to-noise ratio (SNR), A/c.
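Eqs. 2 and 3 translate directly into code. The sketch below is illustrative only: the function names are hypothetical, and the drift and noise values are taken from the pooled fit in Table 1, with time in seconds. Because both quantities depend only on A/c and z/A, multiplying A, c and z by a common factor should leave them unchanged, which serves as a quick check.

```python
import math


def expected_error_rate(A, c, z):
    """Eq. 3: expected proportion of errors with the start point midway (x0 = 0)."""
    return 1.0 / (1.0 + math.exp(2.0 * A * z / c ** 2))


def expected_decision_time(A, c, z):
    """Eq. 2: expected decision time with x0 = 0, in the time unit in which
    A and c^2 are expressed as rates (seconds here)."""
    return (z / A) * math.tanh(A * z / c ** 2)


# Pooled-fit drift and noise from Table 1; thresholds span the fitted range.
A, c = 0.17348, 0.1
for z in (0.03, 0.06, 0.09):
    print(f"z={z:.2f}: <ER>={expected_error_rate(A, c, z):.3f}, "
          f"<DT>={expected_decision_time(A, c, z):.3f} s")
```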

Reward-rate optimization of the DDM

Recent theoretical work (Bogacz et al., 2006) has shown that when drift, noise, mean RSI (⟨D_RSI⟩), prior stimulus probability and the relative reward for correct responses to each stimulus are held constant in a free-response 2AFC task, there exist unique, optimal threshold and starting point values for the DDM³ that maximize expected reward rate (⟨RR⟩), defined as follows (Gold & Shadlen, 2002):

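$$\langle RR\rangle = \frac{1 - \langle ER\rangle}{\langle DT\rangle + T_0 + \langle D_{RSI}\rangle} \tag{4}$$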

Here we assume that errors are unrewarded. We now examine how optimal DDM parameterizations (those that maximize Eq. 4) depend on the task conditions that we manipulate in our experiments.
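Substituting Eqs. 2 and 3 into Eq. 4 gives the expected reward rate ⟨RR⟩ as an explicit function of the threshold z, which can be maximized by brute force over a grid. The following is a minimal sketch, assuming x0 = 0, unrewarded errors, and illustrative values A = 0.2, c = 0.1 and T0 = 0.3 sec chosen by us rather than fitted; the function names are hypothetical.

```python
import math


def reward_rate(z, A, c, T0, rsi):
    """Eq. 4 with Eqs. 2 and 3 substituted (x0 = 0, errors unrewarded)."""
    er = 1.0 / (1.0 + math.exp(2.0 * A * z / c ** 2))
    dt = (z / A) * math.tanh(A * z / c ** 2)
    return (1.0 - er) / (dt + T0 + rsi)


def best_threshold_on_grid(A, c, T0, rsi, z_max=0.3, n=3001):
    """Threshold on an evenly spaced grid over [0, z_max] with the highest <RR>."""
    grid = [z_max * i / (n - 1) for i in range(n)]
    return max(grid, key=lambda z: reward_rate(z, A, c, T0, rsi))


A, c, T0 = 0.2, 0.1, 0.3  # illustrative values, not fits
for rsi in (0.5, 1.0, 2.0):
    z_best = best_threshold_on_grid(A, c, T0, rsi)
    rr = reward_rate(z_best, A, c, T0, rsi)
    print(f"RSI={rsi} s: best z on grid={z_best:.4f}, <RR>={rr:.3f} per s")
```

A grid search is crude, but it makes the single interior peak of ⟨RR⟩ easy to see; solving the stationarity condition for z directly gives the same optimum more precisely.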

Response-stimulus interval

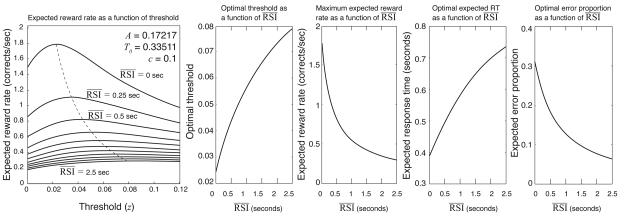

By substituting Eqs. 2 and 3 into Eq. 4 and maximizing ⟨RR⟩ with respect to z, the following equation can be derived describing the optimal value of z as a function of A, c, T0 and ⟨D_RSI⟩ (Bogacz et al., 2006):

$$e^{2Az/c^2} - 1 = \frac{2A^2}{c^2}\left(T_0 + \langle D_{RSI}\rangle - \frac{z}{A}\right) \tag{5}$$

(Here we assume that the starting point is equidistant between the two thresholds — x0 = 0 — since this maximizes expected reward rate in tasks with equally likely and equally rewarded stimuli.)

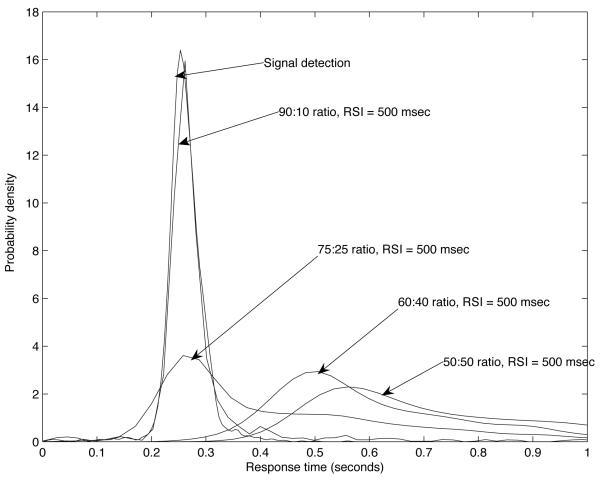

Since the left-hand side of Eq. 5 increases with z while the right-hand side decreases, there is a unique value of z that solves Eq. 5 for a given combination of A, c, T0 and ⟨D_RSI⟩. This can be seen clearly in panel A of Fig. 2, where expected reward rate (⟨RR⟩, given by Eq. 4) is plotted as a function of threshold for representative values of A, c and T0 (obtained by fitting the DDM to behavioral data), and for a variety of average RSI values. The figure shows that a unique, reward-rate-maximizing threshold exists for each RSI, and that this optimal threshold value grows as RSI increases (the specific value can be determined by solving Eq. 5 numerically, e.g., by Newton's method). Insofar as A, c, T0 and z are stable for a given individual and task condition, their values can be estimated from behavioral performance and used to evaluate the goodness of fit of the DDM. Furthermore, if evidence suggests that A, c and T0 are stable for a given individual across manipulations of task variables such as ⟨D_RSI⟩, then changes in z in response to such manipulations can be estimated and compared to the optimal values predicted by Eq. 5. Panel B of Fig. 2 plots optimal threshold values as a function of ⟨D_RSI⟩. Optimal threshold predictions in turn entail specific expected reward rates, RTs and accuracies that can be compared to data (remaining panels of Fig. 2). Experiment 1 was designed to test these predictions.
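Eq. 5 can be solved with Newton's method, as mentioned above, after substituting θ = 2Az/c², which turns it into exp(θ) − 1 + θ = (2A²/c²)(T0 + ⟨D_RSI⟩). The sketch below uses illustrative parameter values (not fits) and a hypothetical function name. Starting from θ = ln(1 + right-hand side), where the left side already exceeds the right, the iterates decrease monotonically to the unique root because the function being zeroed is increasing and convex.

```python
import math


def optimal_threshold(A, c, T0, rsi, tol=1e-12, max_iter=100):
    """Solve Eq. 5 for the reward-rate-maximizing threshold by Newton's method.

    With theta = 2 A z / c^2, Eq. 5 reads
        h(theta) = exp(theta) - 1 + theta - (2 A^2 / c^2) (T0 + rsi) = 0.
    h is strictly increasing and convex, so the root is unique; starting to
    its right (h > 0 at log(1 + rhs)), Newton's iterates decrease monotonically.
    """
    rhs = 2.0 * A ** 2 / c ** 2 * (T0 + rsi)
    theta = math.log1p(rhs)
    for _ in range(max_iter):
        step = (math.exp(theta) - 1.0 + theta - rhs) / (math.exp(theta) + 1.0)
        theta -= step
        if abs(step) < tol:
            break
    return theta * c ** 2 / (2.0 * A)


A, c, T0 = 0.2, 0.1, 0.3  # illustrative values, not fits
for rsi in (0.5, 1.0, 2.0, 4.0):
    print(f"RSI={rsi} s: optimal z={optimal_threshold(A, c, T0, rsi):.4f}")
```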

Figure 2.

A: Expected reward rate (⟨RR⟩) plotted as a function of threshold z for a range of ⟨D_RSI⟩ values (dashed curve connects the peaks of each curve). B: Optimal threshold as a function of ⟨D_RSI⟩. C: ⟨RR⟩ as a function of ⟨D_RSI⟩, assuming optimal thresholds at each ⟨D_RSI⟩. D: Expected RT as a function of ⟨D_RSI⟩, assuming optimal thresholds. E: Expected proportion of errors (⟨ER⟩) as a function of ⟨D_RSI⟩, assuming optimal thresholds.

Stimulus probability

Thus far we have focused on conditions in which each stimulus is presented equally often. If one stimulus appears more often than the other, then maximization of reward requires that the starting point of evidence integration for the DDM (x0) be moved closer to the threshold corresponding to the more frequent response (Bogacz et al., 2006; cf. Edwards, 1965, and Laming, 1968). This produces faster RTs when the drift is in the direction of the closer threshold and more errors when the drift is in the opposite direction. However, because trials of the latter kind are less frequent, their increased error rate is outweighed by the faster responding on the more frequent trials. Specifically, if Π denotes the probability of stimuli for which crossings of +z are correct, then for optimal performance (Edwards, 1965) the initial condition of x should be set as

$$x_0 = \frac{c^2}{2A}\ln\!\left(\frac{\Pi}{1-\Pi}\right) \tag{6}$$

Note that x0 should equal 0 when Π = 1/2. In addition, a value of Π greater than 0.5 produces a reduction in the optimal threshold value, which in the case of unequal stimulus ratios is obtained by numerically solving the following equation (Bogacz et al., 2006):

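$$e^{2Az/c^2} - 1 = \frac{2A^2}{c^2}\left(T_0 + \langle D_{RSI}\rangle - \frac{z}{A}\right) + (1 - 2\Pi)\ln\!\left(\frac{\Pi}{1-\Pi}\right) \tag{7}$$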

(Eq. 7 reduces to Eq. 5 for Π = 1/2.) Expected accuracy and decision time for the optimally parameterized DDM with Π > 1/2 are given in Appendix B.
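Eq. 6 gives the optimal starting point in closed form, while Eq. 7 requires a one-dimensional root search; with θ = 2Az/c² it becomes exp(θ) − 1 + θ = (2A²/c²)(T0 + ⟨D_RSI⟩) + (1 − 2Π) ln(Π/(1 − Π)). The sketch below solves it by bisection (our choice; Newton's method would also work), using illustrative parameter values and hypothetical function names, and flags non-integrative responding whenever the Eq. 6 starting point reaches or passes the Eq. 7 threshold.

```python
import math


def optimal_start(A, c, p):
    """Eq. 6: x0 = (c^2 / 2A) ln(p / (1 - p)); p = probability of the +z stimulus."""
    return c ** 2 / (2.0 * A) * math.log(p / (1.0 - p))


def optimal_threshold_biased(A, c, T0, rsi, p, tol=1e-12):
    """Solve Eq. 7 for z by bisection on theta = 2 A z / c^2, where Eq. 7 reads
        exp(theta) - 1 + theta = (2A^2/c^2)(T0 + rsi) + (1 - 2p) ln(p / (1 - p)).
    The left side is increasing and equals 0 at theta = 0, so a positive root
    exists only if the right side is positive."""
    rhs = 2.0 * A ** 2 / c ** 2 * (T0 + rsi) + (1.0 - 2.0 * p) * math.log(p / (1.0 - p))
    if rhs <= 0.0:
        raise ValueError("Eq. 7 has no positive root for these parameters")
    lo, hi = 0.0, 1.0
    while math.exp(hi) - 1.0 + hi < rhs:  # expand until the root is bracketed
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if math.exp(mid) - 1.0 + mid < rhs:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) * c ** 2 / (2.0 * A)


A, c, T0 = 0.2, 0.1, 0.3  # illustrative values, not fits
for rsi, p in ((1.0, 0.5), (1.0, 0.6), (0.1, 0.95)):
    x0 = optimal_start(A, c, p)
    z = optimal_threshold_biased(A, c, T0, rsi, p)
    mode = "integrative" if x0 < z else "non-integrative"
    print(f"RSI={rsi} s, p={p}: x0={x0:.4f}, z={z:.4f} -> {mode}")
```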

Although the optimal value of x0 does not depend on RSI, it interacts in interesting ways with the optimal threshold as the mean RSI is changed. Fig. 2 (panel B) shows that as RSI increases, the optimal threshold also increases. This relationship also holds in the case of unequal stimulus frequencies. Thus, simultaneously decreasing the RSI while increasing the inequality in stimulus ratios effectively exaggerates the shift of the starting point toward the threshold for the favored response (i.e., the response corresponding to the more likely stimulus), because the optimal threshold moves in toward the starting point while the starting point moves out toward the threshold. For RSIs that are sufficiently short and values of Π that are sufficiently close to 1, Eq. 6 places the optimal starting point beyond the favored response threshold. In this case, the simplest interpretation of the theory predicts that the decision maker should forgo integration and choose the favored response on every trial. Assuming that there is a penalty for anticipatory responding (that is, responding before stimulus onset), RT should simply reflect signal detection and therefore equal T0, and the proportion of errors should equal the probability of the less likely stimulus. We will refer to this behavior as non-integrative responding to indicate that no integration of evidence is being carried out by the decision maker; non-integrative responding is equivalent to making fast-guess responses (Ollman, 1966; Yellott, 1971), except that it always involves the same guess, namely that the favored response is correct.

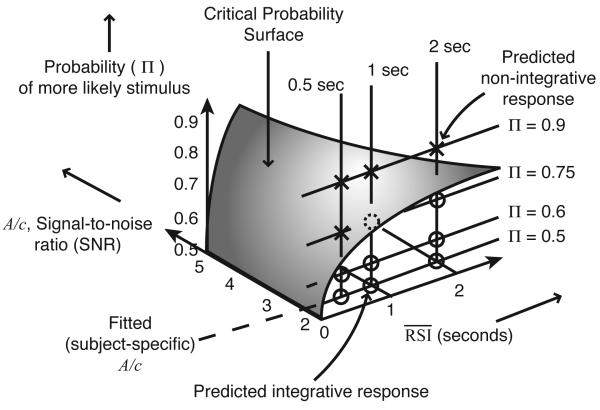

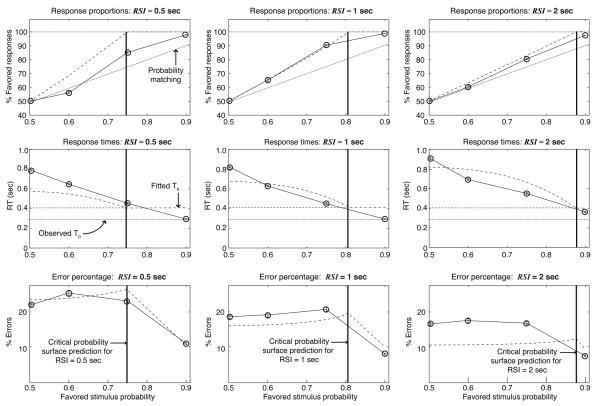

Bogacz et al. (2006) describe task conditions in which non-integrative responding is expected by dividing the three-dimensional task parameter space into two regions separated by a curved, two-dimensional critical probability surface. This surface, on which the optimal starting point and threshold coincide, is defined by Eq. 8, which expresses ⟨D_RSI⟩ as a function of Π, A, c and T0:

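$$\langle D_{RSI}\rangle = \frac{c^2}{2A^2}\left[\frac{2\Pi - 1}{1 - \Pi} + 2\Pi\ln\!\left(\frac{\Pi}{1-\Pi}\right)\right] - T_0 \tag{8}$$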

This surface is depicted in Fig. 3. The parameters defining this space are the SNR (A/c), the average RSI, and the probability of the more likely stimulus, Π. The residual latency (T0) determines the height of the surface. For asymmetries Π above this surface, non-integrative responding is expected; for points below the surface, integrative responding is expected.
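Eq. 8 can be evaluated directly to locate the critical probability surface for given A, c and T0. In the sketch below (illustrative parameter values, hypothetical function name), a negative result means that no nonnegative mean RSI produces non-integrative responding at that Π. A useful check is that at the returned RSI the Eq. 7 threshold coincides with the Eq. 6 starting point, i.e., 2Az/c² = ln(Π/(1 − Π)).

```python
import math


def critical_rsi(A, c, T0, p):
    """Eq. 8: mean RSI at which the optimal starting point (Eq. 6) meets the
    optimal threshold (Eq. 7), for probability p > 1/2 of the +z stimulus.
    Mean RSIs shorter than this value are predicted to yield non-integrative
    responding; a negative value means integration is predicted at every RSI."""
    log_odds = math.log(p / (1.0 - p))
    bracket = (2.0 * p - 1.0) / (1.0 - p) + 2.0 * p * log_odds
    return c ** 2 / (2.0 * A ** 2) * bracket - T0


A, c, T0 = 0.2, 0.1, 0.3  # illustrative values, not fits
for p in (0.6, 0.7, 0.8, 0.9):
    print(f"p={p}: critical mean RSI={critical_rsi(A, c, T0, p):.3f} s")
```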

Figure 3.

Critical probability surface, dividing parameter space into predicted integrative and non-integrative conditions.

It seems reasonable to expect that a sufficiently strong asymmetry in stimulus ratios would lead participants to choose exclusively one alternative in speeded-response 2AFC tasks irrespective of other factors, such as RSI. However, Eq. 8 prescribes a parametric and possibly counterintuitive relationship between DDM parameters and task parameters that should produce non-integrative responding. In particular, this relationship implies that a given asymmetry Π should produce non-integrative responding for short RSIs, but not for longer RSIs. Fits of the DDM parameters (particularly T0 and the ratio A/c) allow prediction of the values of Π and ⟨D_RSI⟩ at which this transition should occur if reward rate is being maximized. In Experiment 2, we covaried mean RSIs and stimulus ratios in order to determine whether such a surface exists and, if so, whether its shape conforms to the predictions of the DDM concerning reward rate maximization.

Relative reward

Since we assume that participants seek to maximize reward rate, direct manipulations of the reward associated with each response should also produce predictable effects on behavior. Bogacz et al. (2006) also investigated tasks in which a proportion r of some unit of reward is assigned to one response (when it is correct), and the remaining proportion 1 − r is assigned to the other response when correct. In contrast to the case of unequal stimulus proportions, analytical expressions for optimal starting points were not obtainable in the case of reward asymmetries. However, numerical results indicated that differences in reward should produce effects similar to those of unequal stimulus proportions, except that values of r were predicted to produce stronger response biases than those produced by equivalent values of Π (in contrast to relative reward, the absolute magnitude of the rewards was predicted to be irrelevant).

Specifically, two expressions were obtained that define an interval within which the optimal starting point should lie. As the sum of ⟨D_RSI⟩ and T0 grows small, Eq. 9 defines the upper boundary of this interval, which is the same as the optimal starting point for unequal stimulus probabilities (Eq. 6) if Π is replaced by r:

$$x_0 = \frac{c^2}{2A}\ln\!\left(\frac{r}{1-r}\right) \tag{9}$$

As the sum of ⟨D_RSI⟩ and T0 grows large, Eq. 10 defines the lower boundary of this interval:

$$x_0 = \frac{c^2}{4A}\ln\!\left(\frac{r}{1-r}\right) \tag{10}$$

The optimal starting point shift is thus smaller in the case of reward asymmetry than in the case of an equivalent stimulus ratio (r = Π). Optimal thresholds, in contrast, are dramatically reduced in response to reward asymmetry relative to stimulus proportion asymmetry. The net effect is that the optimal separation between the starting point and the favored response threshold is smaller in the case of reward asymmetry.
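Eqs. 9 and 10 are easy to evaluate, and the interval they define can be compared against a brute-force search over starting points and thresholds. The reward-rate function in the sketch is our own construction, not an expression taken from Bogacz et al. (2006): it assumes equally likely stimuli, a reward of r for correct +z responses and 1 − r for correct −z responses, unrewarded errors, and standard first-passage results for a drift-diffusion process started at x0 between −z and +z. Parameter values are illustrative and function names hypothetical.

```python
import math


def start_point_interval(A, c, r):
    """Eqs. 10 and 9: (lower, upper) bounds on the optimal starting point when a
    correct +z response earns r and a correct -z response earns 1 - r (r > 1/2)."""
    log_odds = math.log(r / (1.0 - r))
    return c ** 2 / (4.0 * A) * log_odds, c ** 2 / (2.0 * A) * log_odds


def reward_rate_asym(x0, z, A, c, T0, rsi, r):
    """Expected reward per second for equally likely stimuli, start x0 in [-z, z].
    Uses first-passage probabilities of a drift-diffusion process and
    E[DT] = (E[x at exit] - x0) / drift; errors earn nothing."""
    k = 2.0 * A / c ** 2
    s = math.exp(k * z) - math.exp(-k * z)
    p_err_plus = (math.exp(-k * x0) - math.exp(-k * z)) / s   # drift +A exits at -z
    p_err_minus = (math.exp(k * x0) - math.exp(-k * z)) / s   # drift -A exits at +z
    dt_plus = (z - 2.0 * z * p_err_plus - x0) / A
    dt_minus = (z - 2.0 * z * p_err_minus + x0) / A
    reward = 0.5 * r * (1.0 - p_err_plus) + 0.5 * (1.0 - r) * (1.0 - p_err_minus)
    return reward / (0.5 * (dt_plus + dt_minus) + T0 + rsi)


def grid_optimum(A, c, T0, rsi, r, n_z=200, n_x=101, z_max=0.2):
    """Brute-force search; returns (best <RR>, x0, z)."""
    best = (-1.0, 0.0, 0.0)
    for i in range(1, n_z + 1):
        z = z_max * i / n_z
        for j in range(n_x):
            x0 = -z + 2.0 * z * j / (n_x - 1)
            rr = reward_rate_asym(x0, z, A, c, T0, rsi, r)
            if rr > best[0]:
                best = (rr, x0, z)
    return best


A, c, T0, rsi, r = 0.2, 0.1, 0.3, 1.0, 0.7  # illustrative values, not fits
lower, upper = start_point_interval(A, c, r)
rr, x0, z = grid_optimum(A, c, T0, rsi, r)
print(f"Eq. 10 to Eq. 9 interval: [{lower:.4f}, {upper:.4f}]")
print(f"grid optimum: x0={x0:.4f}, z={z:.4f}, <RR>={rr:.3f} per s")
```

For r > 1/2 the grid optimum should place x0 on the side of the more highly rewarded response; where it falls relative to the Eq. 9 and Eq. 10 bounds depends on the sum of ⟨D_RSI⟩ and T0.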

Thus, we should expect unequal rewards to bias decision makers toward one response over the other in a manner qualitatively like that predicted for unequal stimulus probabilities. Bogacz et al. (2006) numerically computed a critical reward surface that is analogous to the critical probability surface of Fig. 3, but which predicts a transition to non-integrative responding at larger values of ⟨D_RSI⟩. Experiment 3 was designed to test this prediction.

Extended DDM and data fitting

The theoretical work described above has focused on the simplest version of the DDM, in which the absolute value of the drift, the starting point, and the residual latency are all assumed to be constant for a given participant and a given task condition. We will hereafter refer to this version of the DDM as the pure DDM. The pure DDM, like the SPRT itself, predicts equal mean RTs for correct and error responses, but this prediction is frequently violated in practice and has led some to reject the SPRT as a decision-making model (e.g., Luce, 1986). However, assuming random variability across trials in A, x0, and T0 corrects this deficiency (Ratcliff & Rouder, 1998; Ratcliff, Van Zandt, & McKoon, 1999). We will refer to this form of the model (the pure DDM with three additional parameters, sA, sx, and st respectively, as well as a fourth parameter, p0, specifying the proportion of contaminant RTs uniformly distributed between the minimum and maximum RT as in Ratcliff & Tuerlinckx, 2002) as the extended DDM (depicted in Fig. 1). (Assuming thresholds are set optimally, the pure-DDM/SPRT equivalence and the theorem of Wald and Wolfowitz (1948) imply that rewards are maximized when sA, sx, st and p0 are all 0.)

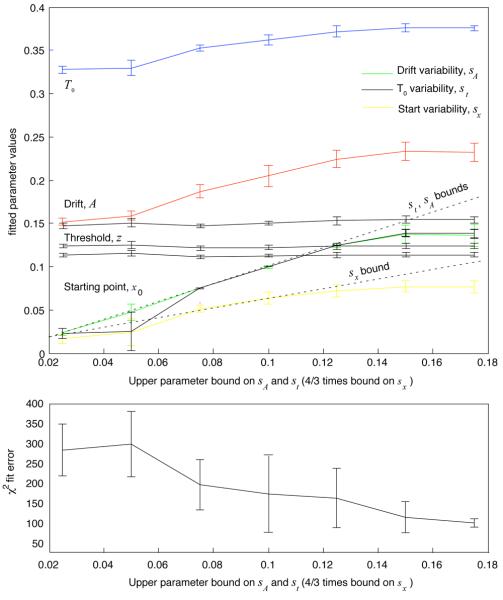

The extended DDM fits a broader range of empirical data sets (especially those with differences in average RT between correct and error responses), but it has not yet proven amenable to formal analysis (although see Bogacz et al. (2006) for analytical approximations and numerical approaches). Thus the extended DDM does not yield explicit relationships such as those of Eqs. 5-7. Furthermore, although adding parameters gives the DDM enough flexibility to fit data, it also exacerbates a problem that occurs during fitting: fitted values of DDM parameters are correlated with each other (Ratcliff & Tuerlinckx, 2002). For example, a minimum-fit-error parameter set can be modified by simultaneously increasing both drift and threshold; the resulting parameter set has larger values but may have a fit error nearly as low as the original. Reducing multiple parameters simultaneously can similarly preserve a good fit. Thus there is a tendency for parameter values to rise and fall together during fitting. Because variability parameters are equal to 0 in the pure DDM and cannot be less than 0 in the extended DDM (indeed, their fitted values are almost always greater than 0), these correlations among parameters appear to explain why, in fits to our empirical data, the extended DDM always yields larger drift, threshold and T0 values than the pure DDM.⁴

The values of these parameters are critical for the numerical accuracy of the predictions of Eqs. 5-7, but no widely accepted method exists for controlling parameter inflation as parameters are added to the simpler, pure DDM. If fit error is the only criterion on which parameter values are judged, then larger values are acceptable. If a source of bias toward larger values exists, however, then techniques should be considered for limiting the growth of parameters during fitting.

Our approach to the parameter-inflation phenomenon was to use the extended DDM to fit data, but to constrain its variability parameters by applying upper bounds on their allowable values. This approach left the pure-DDM parameters free to take on any values (including those that would disconfirm our hypotheses) while demonstrably reducing parameter inflation. Fig. 4, for example, shows that drift and T0 increased as upper bounds on drift variability, starting point variability and residual latency variability were relaxed in fits to the data from Experiment 1 (standard error bars were generated in a cross-validation procedure that involved fitting 150 subsets of half the data at each upper bound value). Threshold values in the three RSI conditions, in contrast, remained flat across bound values. Starting points (not plotted) showed the same constancy. At the same time, fit error naturally decreased as constraints were relaxed. Validation error, computed by applying the fitted parameters in each fit to the unfitted half of the data, showed no signs of overfitting; that is, it never increased as bounds were relaxed. However, failure to find evidence of overfitting does not imply the absence of possible bias in the fitting procedure.

Figure 4.

Top panel: Average extended DDM parameter values from fits to 150 subsets of half the data (sampled with replacement) in each condition of Experiment 1, plotted as a function of the upper bound applied to the st and sA parameters during fitting (error bars represent the standard error of the mean). Drift A and residual latency T0 inflate as upper bounds on sA, sx and st increase, indicating a possible source of bias in parameter estimation. Bottom panel: Chi-square fit error as a function of upper bound values. Average fit error for the bound value closest to the bounds used in our analyses was approximately 200.

Since we currently have no method for selecting an optimal tradeoff between parameter inflation and fit error, we relied on simulations to choose bound values. We set the bounds in our data analyses (listed in Table 1) roughly equal to the variability-parameter values recovered in the most accurate fits of A and T0 to the simulated data sets of Ratcliff and Tuerlinckx (2002) (see Fig. 6 in that paper). We relied on these extended DDM simulations because they used parameter values that were relatively close to those obtained by fits to our data, and because these values are representative of fits to data from a wide range of experiments (e.g., Ratcliff & Rouder, 1998, 2000; Ratcliff & Smith, 2004; Ratcliff et al., 1999). Also, since the simulated data in the correlation analyses of Ratcliff and Tuerlinckx (2002) assumed a constant value of T0, the bound on its corresponding variability parameter st came from our cross-validation procedure. The bounds occur roughly halfway between an asymptotic fit error of approximately 100 for completely unconstrained fits at the right edge of the graphs, and a fit error of approximately 300 for the maximally constrained model (which better approximates the pure DDM) at the left edge. (The exact placement of these bounds does not drastically affect the numerical accuracy of our analytical predictions of optimal parameter values until it results in drift values well above 0.2 and T0 values well above 370 msec, at which point predictions and fitted values match only qualitatively.)

Table 1.

Fitted parameter values for group data from 0.5, 1 and 2 sec RSI conditions, with equally likely stimuli. Comparisons to empirical histograms for this fit appear in Fig. 7, and comparisons to empirical quantile-probability plots appear in Fig. 6.

Pooled participant data

| Parameter | Value | Upper bound |
|---|---|---|
| Drift A | 0.17348 | |
| Noise coefficient c | 0.1 | |
| Drift std. dev. sA | 0.068683 | 0.08 |
| Start range sx | 0.03 | 0.03 |
| T0 range st | 0.1 | 0.10 |
| Contaminant proportion p0 | 0.029182 | 0.05 |
| Residual latency T0 | 345.47 msec (compare to avg. signal detection RT of 301 msec) | |
| Total χ2 fit error | 194.5232 | |

| RSI condition | 500 msec | 1 sec | 2 sec |
|---|---|---|---|
| Threshold z | 0.0558 | 0.0606 | 0.0734 |
| Optimal threshold | 0.0438 | 0.0566 | 0.0730 |
| Starting point x0 | 0.0029 | 0.0016 | 0.0016 |
| Optimal starting point | 0 | 0 | 0 |
| χ2 fit error | 84.58 | 46.44 | 62.47 |
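As a consistency check on Eq. 5, the optimal thresholds in Table 1 can be recomputed from the pooled fit. The sketch below assumes that T0 and the RSIs enter Eq. 5 in seconds and that A and c are the tabulated values; it solves Eq. 5 by bisection (a choice of ours) and prints each fitted threshold next to the computed optimum. The function and constant names are hypothetical.

```python
import math

# Pooled fit from Table 1, with T0 converted from msec to seconds.
A_FIT, C_FIT, T0_FIT = 0.17348, 0.1, 0.34547
FITTED_Z = {0.5: 0.0558, 1.0: 0.0606, 2.0: 0.0734}  # RSI (s) -> fitted threshold


def optimal_threshold(A, c, T0, rsi, tol=1e-12):
    """Solve Eq. 5 by bisection on theta = 2 A z / c^2, i.e.
        exp(theta) - 1 + theta = (2 A^2 / c^2) (T0 + rsi),
    whose left side is strictly increasing from 0 at theta = 0."""
    target = 2.0 * A ** 2 / c ** 2 * (T0 + rsi)
    lo, hi = 0.0, 1.0
    while math.exp(hi) - 1.0 + hi < target:  # expand until the root is bracketed
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if math.exp(mid) - 1.0 + mid < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) * c ** 2 / (2.0 * A)


for rsi, z_fit in FITTED_Z.items():
    z_opt = optimal_threshold(A_FIT, C_FIT, T0_FIT, rsi)
    print(f"RSI {rsi:.1f} s: fitted z = {z_fit:.4f}, optimal z = {z_opt:.4f}")
```

Under these assumptions the computed optima should agree with the Optimal threshold row of Table 1 to within rounding.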

Figure 6.

Quantile probability plot for pooled data from all participants in Experiment 1. Solid lines connect the nth quantile of the empirical data; X's and dashed lines represent the predicted quantiles for the best fit (listed in Table 1).

The result was a model that could be fit much faster than the pure DDM. Resulting fit errors were small enough that the Akaike information criterion (AIC; Akaike, 1974) favored the model over the pure DDM, but fitted values of the theory-critical A and T0 parameters were nevertheless close to those obtained by fitting the pure DDM. We used the resulting estimates of A and T0 to make predictions about the effects of ⟨D_RSI⟩ on threshold setting in Experiment 1, the interaction of RSI with stimulus probabilities in Experiment 2, and the effect of unequal rewards for left and right responses in Experiment 3.

Experiment 1

In this experiment, we held the SNR of the stimulus constant and manipulated the mean RSI across blocks of trials in a free-response, 2AFC motion discrimination task with equally likely stimuli (i.e., Π = 0.5). We sought to test the hypothesis that participants' SATs would shift across conditions in the absence of explicit instructions. We also sought to determine whether the extended DDM could account for RT distributions and accuracy in all conditions, and whether fitting the model to data would produce parameter estimates that conform to the following predictions of the pure DDM,⁵ parameterized to maximize reward rate (Bogacz et al., 2006):

1a) Estimates of drift (A) should be constant across all RSI conditions, reflecting the assumption that participants are motivated and allocate maximum attention to the task, and further reflecting the fact that the optimal strategy is to extract as much information as possible from the stimulus (which has a fixed SNR) in all task conditions;

1b) Estimates of residual latency (T0) should be constant across conditions and commensurate with an independently observed signal detection RT (in a signal detection task with easily detectable signals);

1c) Estimates of the starting point x0 should be 0 in all conditions, reflecting no predisposition toward either response;

1d) Estimates of the threshold parameter (z) should increase as ⟨D_RSI⟩ increases, reflecting a shift toward accuracy (see Fig. 2, panel B);

1e) Estimates of the threshold parameter should equal the function z(A, c, T0, ⟨D_RSI⟩) defined implicitly by Eq. 5, evaluated at the current RSI and with the fitted values of A/c and T0.

Method

Participants

Twelve participants, ranging in age from 19 to 64 (mean 26), were recruited from the Princeton University campus area to participate in ten one-hour task sessions. Experiment 1 consisted of the first five sessions; the second five sessions constituted Experiment 2. For their performance, participants were paid the greater of $10.00 or their total earnings in the task. Participants earned one cent for each correct response, and no explicit penalties were imposed for errors. Average earnings were around $15.00 per session.

One participant performed at chance in all sessions, and this data was discarded. One participant dropped out after a single session. Data from two sessions was corrupted by power failures for a third participant, and this participant's remaining data was excluded from analysis. Another participant did not comply with instructions and did not wear vision-correcting glasses during some sessions, so this data was excluded as well. Finally, an older participant's data was excluded (reducing average age to 23 and maximum age to 27) so that age-related performance changes would not affect our findings. Data was therefore analyzed for seven participants who completed the ten sessions. Data for each participant was analyzed only for the last seven of ten sessions in order to reduce the impact of practice effects on the analysis.

Apparatus and stimuli

Stimuli were presented on a standard computer monitor; button-press responses were entered on a standard keyboard. Stimulus display and response collection were done with the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997) extensions to MATLAB running on an Apple Power Mac G4 with the OS 9 operating system. Stimulus generation software was created for use with the Psychophysics Toolbox by J. I. Gold.

Stimuli were random dot kinematograms, similar to those used in a series of psychophysical and decision making experiments involving monkeys as participants (e.g., Britten, Shadlen, Newsome, & Movshon, 1992; Gold & Shadlen, 2001; Shadlen & Newsome, 2001). Stimuli consisted of an aperture of approximately 3 inch diameter viewed from approximately 2 feet (approximately 8 degrees visual angle) in which white dots (2 × 2 pixels) moved on a black background. A subset of dots moved coherently either to the left or to the right on each trial, and the remainder of dots were distractors that jumped randomly from frame to frame of the display. Motion coherence was defined as the percentage of coherently moving dots. Dot density was 17 dots/square degree, selected so that individual dots could not easily be tracked.

Procedure

Motion coherence was adapted manually at the end of each of the first three experimental sessions in order to produce errors in at least 10% of responses. This was done to produce a substantial sample of error RTs, which is useful for constraining fits of the DDM (Ratcliff & Tuerlinckx, 2002). Some participants required no coherence adaptation, and average motion coherence ranged from the default value of 10% to a lower limit of 5%. No participants required an increase in motion coherence (except for the participant who performed consistently at chance, and whose data was excluded from analysis).

Responses involved presses of the Z key on the lower left of the keyboard with the left index finger to signal perception of leftward motion and presses of the M key on the lower right with the right index finger to signal perception of rightward motion, as in the empirical work presented in Bogacz et al. (2006) and Bogacz, Hu, Cohen, and Holmes (in review). Correct responses were signaled by an auditory beep, and after every five trials, the current total of correct responses was displayed in the center of the screen in place of the motion aperture for a duration equal on average to the mean RSI duration in each block of trials. Errors were indicated by the absence of the auditory beep.

Two measures were taken to prevent anticipatory responses, in which participants do not integrate stimulus information but instead prepare a response before stimulus onset in order to reduce RT and thereby increase the total opportunity for reward. First, the RSI on a given trial was selected from a normal distribution with a standard deviation of 100 msec to make stimulus onset unpredictable. Second, whenever responses were recorded prior to or within 100 msec after stimulus onset, a penalty delay of four seconds was imposed to reduce the opportunity to earn rewards, and a buzzing error tone was presented.
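The two anti-anticipation measures can be sketched as trial-timing logic. In this sketch we assume that response time is measured from stimulus onset (negative for presses before onset), that the 4 s penalty is added on top of the normally drawn RSI, and that negative RSI draws are clipped at zero; the text does not specify these details, and the function names are hypothetical.

```python
import random

RSI_SD_S = 0.100        # SD of the trial-by-trial RSI distribution (from the text)
ANTICIPATION_S = 0.100  # presses before or within 100 msec of onset count as anticipations
PENALTY_S = 4.0         # penalty delay imposed after an anticipation (from the text)

def draw_rsi(mean_rsi_s, rng):
    """Draw one RSI from Normal(mean, 0.1 s); clipping at zero is our assumption."""
    return max(0.0, rng.gauss(mean_rsi_s, RSI_SD_S))

def delay_before_next_stimulus(rt_s, mean_rsi_s, rng):
    """Return (delay in seconds, anticipated?) for a response made rt_s after onset."""
    anticipated = rt_s <= ANTICIPATION_S
    delay = draw_rsi(mean_rsi_s, rng)
    if anticipated:
        delay += PENALTY_S  # hypothetical: penalty added to the drawn RSI
    return delay, anticipated

rng = random.Random(0)
print(delay_before_next_stimulus(0.05, 0.5, rng))  # anticipation: delay includes the penalty
print(delay_before_next_stimulus(0.62, 0.5, rng))  # integrative response: ordinary RSI
```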

In the first five hour-long task sessions, the two stimulus types were equally likely. Each session consisted of one four-minute practice block (reduced to two minutes in sessions 4 and 5), followed by twelve four-minute blocks, within each of which the mean RSI was held constant. The mean RSI was 500 msec in three blocks, 1 second in three blocks, and 2 seconds in six blocks. There were twice as many 2 sec-RSI blocks because these produced significantly fewer trials per four-minute block. The order of blocks and conditions was counterbalanced across sessions and across participants with a Latin square design. Self-paced rest periods occurred between blocks.
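The text does not detail the Latin square, so the following is only a generic sketch of one common construction: a cyclic Latin square over the three RSI conditions, in which each condition appears once in every row and every column. Using rows for participants (or sessions) and columns for ordinal position within a cycle of conditions is our assumption, as is the helper name.

```python
def cyclic_latin_square(conditions):
    """Row i is `conditions` rotated left by i, so every condition occurs exactly
    once in each row and once in each column."""
    n = len(conditions)
    return [[conditions[(i + j) % n] for j in range(n)] for i in range(n)]

rsi_conditions = ["0.5 s", "1 s", "2 s"]
for participant, order in enumerate(cyclic_latin_square(rsi_conditions)):
    print(participant, order)
```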

Participants were informed that the RSI might be different in different blocks, and that blocks would always last four minutes — therefore, faster responding would lead to more trials overall. They were encouraged to earn as much money as possible.

The twelve blocks in each session were followed by two two-minute blocks of a signal detection task with easily detectable stimuli. In each signal detection block, stimuli were the same as in previous blocks (with a mean RSI of 500 msec), but only a single response earned rewards (a left button press in one block, and a right button press in the other). In these blocks, participants were instructed to respond with the designated button press as quickly as possible as soon as the stimulus appeared, regardless of the direction of coherent motion. While discriminating motion direction was relatively difficult in the preceding 2AFC blocks, simply detecting the presence of a high-luminance, moving-dots stimulus was not: signal detection RT was rapid and narrowly distributed, no misses occurred, and false alarms (anticipations) were rare. These blocks were used to establish a minimum signal detection RT for each response (left and right index fingers) that could be compared, as a baseline, to estimates of T0 from the signal discrimination trials and to the RTs of any potentially non-integrative responses in Experiments 2 and 3.

Analysis

We directly examined RT distributions to assess the magnitude of SAT adjustment across RSI conditions, and also compared observations to speed and accuracy predictions based on model fits of the DDM and on the theory of optimal threshold parameterization in Eq. 5.

To maximize statistical power and assess the generality of our findings, we focused our analysis on group-averaged data (while noting that similar results hold for almost all individual participants; individual performance for a selected participant is examined in Appendix E). Although pooling raw data from multiple participants presents potential dangers for interpretation (Estes & Maddox, 2005; Ratcliff, 1979), group RT distributions have been shown to be useful for analyzing RT data from multiple participants (Ratcliff, 1979), and they have been used successfully in practice (Spieler, Balota, & Faust, 1996; Ratcliff et al., 2004).

Group performance was assessed by pooling together the data from all participants. Frequently, a Vincentizing procedure is used to construct group RT distributions from individual RT distributions (Ratcliff, 1979; Van Zandt, 2000). This involves averaging (or taking the median of) the quantiles of individual RT distributions in order to derive the quantiles of an estimated RT distribution for the ‘average’ participant. One virtue of this approach is that a set of unimodal, individual distributions cannot lead to a multimodal ‘average’ distribution (which clearly would not represent the typical participant), although some evidence suggests that this approach has drawbacks (Rouder & Speckman, 2004). In our case, though, the Vincentized distribution appeared nearly identical to the distribution of RTs obtained simply by pooling the raw data from multiple participants (possibly because our manipulations of motion coherence in the first three sessions in order to obtain at least 10% errors tended to equalize response time and accuracy among participants). We therefore carried out the analyses that follow by pooling untransformed RT data from multiple participants; the analysis of Vincentized data leads to nearly identical results.
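The two ways of forming group distributions compared here can be sketched with NumPy. The helper names and the synthetic shifted-lognormal RTs are illustrative assumptions; the quantile probabilities are the five used later in the text.

```python
import numpy as np

QUANTILES = (0.1, 0.3, 0.5, 0.7, 0.9)

def vincentize(rts_by_participant, probs=QUANTILES, use_median=False):
    """Vincentized group quantiles: mean (or median) of each participant's quantiles."""
    per_participant = np.array([np.quantile(np.asarray(r, float), probs)
                                for r in rts_by_participant])
    if use_median:
        return np.median(per_participant, axis=0)
    return per_participant.mean(axis=0)

def pooled_quantiles(rts_by_participant, probs=QUANTILES):
    """Quantiles of the raw RTs concatenated across participants."""
    pooled = np.concatenate([np.asarray(r, float) for r in rts_by_participant])
    return np.quantile(pooled, probs)

# Synthetic group of seven participants (illustrative only)
rng = np.random.default_rng(0)
group = [0.3 + rng.lognormal(mean=-1.6, sigma=0.5, size=400) for _ in range(7)]
print(vincentize(group))
print(pooled_quantiles(group))
```

When participants' distributions are similar, as the text reports for this data set, the two sets of quantiles come out close to each other.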

Results

While five out of seven participants displayed clear evidence of SAT adaptation across RSI conditions by the fifth session of Experiment 1, two participants did not. However, data from these participants was not excluded from the pooled data analysis (and these participants did show evidence of SAT adaptation in Experiment 2).

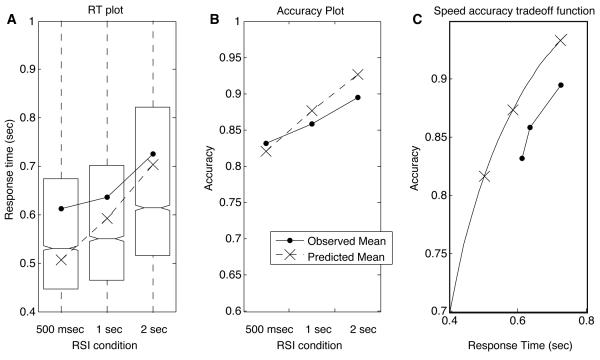

Differences in SAT across RSI conditions

A boxplot of RT data across the three RSI conditions in Fig. 5 (left panel) shows that RTs for the average participant increased as RSI increased. All pairwise median RT differences were significant (p < 0.05, Wilcoxon rank-sum test). Notches in the boxes in Fig. 5 represent nonparametric 95% confidence intervals around the median, which is denoted by the horizontal line in each box. The observed average RTs are indicated with circle markers and superimposed on these plots, and the corresponding predictions based on optimal threshold values and fitted values of A and T0 are shown with X's. (Note that the mismatch between predictions and observations in Fig. 5 cannot derive entirely from suboptimal threshold selection, which would lead either to longer RTs and greater accuracy or to shorter RTs and lower accuracy than predicted. Instead, the estimates of A and T0 must be somewhat noisy, since in the 1 and 2 sec-RSI conditions RTs are longer than predicted and accuracy is lower than predicted.)
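A sketch of the two statistics used here, under stated assumptions: SciPy's `scipy.stats.ranksums` for the two-sided Wilcoxon rank-sum test, and the notch rule that matplotlib uses for notched boxplots (median ± 1.57 × IQR/√n), which we assume approximates the notches in Fig. 5; the text does not state which rule was used. The synthetic RTs are purely illustrative.

```python
import numpy as np
from scipy.stats import ranksums

def median_notch(rts):
    """Approximate 95% CI for the median: median +/- 1.57 * IQR / sqrt(n)."""
    rts = np.asarray(rts, float)
    q1, med, q3 = np.percentile(rts, [25, 50, 75])
    half_width = 1.57 * (q3 - q1) / np.sqrt(rts.size)
    return med - half_width, med + half_width

def rank_sum_p(rts_a, rts_b):
    """Two-sided Wilcoxon rank-sum p-value for two independent RT samples."""
    return ranksums(rts_a, rts_b).pvalue

rng = np.random.default_rng(2)
rt_short = 0.30 + rng.gamma(shape=2.0, scale=0.08, size=600)  # e.g., a short-RSI condition
rt_long = 0.30 + rng.gamma(shape=2.0, scale=0.11, size=300)   # e.g., a long-RSI condition
print(median_notch(rt_short), median_notch(rt_long), rank_sum_p(rt_short, rt_long))
```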

Figure 5.

A: Boxplot of response times for pooled data from all participants. Boxes represent the interquartile range (difference between first and third quartiles), and lines bisecting the boxes represent medians. Notches represent non-parametric 95% confidence intervals around the median RTs. Dashed lines and X markers indicate the expected RT predicted by Eq. 3 for a DDM with optimal thresholds, given values of A and T0 obtained from the best fit of the model to the data. Solid lines and circle markers indicate observed RT averages (these are higher than the medians indicated in the boxplots because of the skew of the RT distributions). B: Accuracy across conditions. Solid lines and circles indicate the observed proportions of correct responses. Dashed lines and X's indicate the expected proportions, 1 − ER, where ER in each condition is obtained by substituting fitted A and T0 values along with the optimal z value into Eq. 2. C: Predicted speed-accuracy tradeoff function (SATF), i.e., accuracy as a function of mean RT, based on a fit of the DDM. Circles indicate the observed tradeoffs in each condition; X's indicate the optimal tradeoffs.

Accuracy also increased as RSI increased, as shown in the center panel of Fig. 5. Error bars indicating the standard error of the mean are barely visible; differences in error proportions were highly significant. These results are consistent with an increase in threshold as RSI increases, in accord with prediction 1d.

A predicted speed-accuracy tradeoff function (SATF) is shown in the right panel of Fig. 5, where accuracy is plotted as a function of RT. The solid SATF curve is generated by holding all DDM parameters constant while gradually increasing thresholds. Observed RT/accuracy pairs are marked with circles; predictions for SATs in corresponding RSI conditions are marked with X's.
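The construction of the SATF curve can be sketched as a threshold sweep, assuming the pure-DDM expressions ER = 1/(1 + exp(2Az/c²)) and DT = (z/A) tanh(Az/c²) for an unbiased start (our reading of Eqs. 2 and 3). The drift and residual-latency values are illustrative, not the fitted values in Table 1.

```python
import numpy as np

def accuracy_and_mean_rt(z, A, T0, c=0.1):
    """Accuracy (1 - ER) and mean RT (DT + T0) of the pure DDM, vectorized over z."""
    z = np.asarray(z, float)
    accuracy = 1.0 - 1.0 / (1.0 + np.exp(2.0 * A * z / c**2))
    mean_rt = (z / A) * np.tanh(A * z / c**2) + T0
    return accuracy, mean_rt

# Hold drift and residual latency fixed while raising the threshold gradually
thresholds = np.linspace(0.005, 0.12, 50)
acc, rt = accuracy_and_mean_rt(thresholds, A=0.2, T0=0.3)
# Each (rt[i], acc[i]) pair is one point on the SATF; plot acc against rt to draw it.
```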

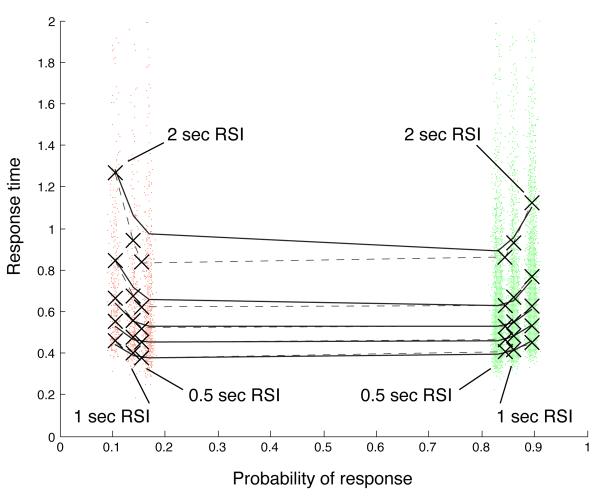

Quantile probability plots

Quantile probability plots (Ratcliff, 2001) provide a compact form of representation for RT and accuracy data across multiple conditions. In a quantile probability plot (such as Fig. 6), quantiles of a distribution of RTs of a particular type (say, correct responses) are plotted as a function of the proportion of responses of that type: thus a vertical column of N markers would be centered above the position 0.8 if N quantiles were computed from the correct RTs in a task condition in which accuracy was 80%. (Following Ratcliff and Tuerlinckx (2002) in both plotting and model-fitting, we used five RT quantiles: 0.1, 0.3, 0.5, 0.7 and 0.9.) The ith quantile in each distribution is then connected by a line to the ith quantiles of other distributions.

Here we have further elaborated quantile probability plots to include a superimposed scatterplot of individual RTs in each condition. Each sample point is plotted at a vertical coordinate corresponding to its RT value, and at a horizontal coordinate corresponding to the response probability, plus a normally distributed, random offset (laterally scattering individual RTs so that they can be discerned). This adds a visual representation of the number of responses in each condition to a quantile probability plot. Correct response RTs are plotted in green; error RTs are plotted in red.
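The data behind one pair of columns in such a plot (correct and error responses for one condition), plus the jittered horizontal positions of individual RTs, can be sketched as follows. The function names, the synthetic data and the jitter standard deviation (0.005 in probability units) are our assumptions.

```python
import numpy as np

QUANTILES = (0.1, 0.3, 0.5, 0.7, 0.9)

def qp_points(rts, correct, probs=QUANTILES):
    """Map 'correct'/'error' to (response proportion, RT quantiles) for one condition."""
    rts = np.asarray(rts, float)
    correct = np.asarray(correct, bool)
    points = {}
    for label, mask in (("correct", correct), ("error", ~correct)):
        if mask.any():
            q = np.quantile(rts[mask], probs)
        else:
            q = np.full(len(probs), np.nan)
        points[label] = (mask.mean(), q)
    return points

def jittered_x(proportion, n, rng, sd=0.005):
    """Horizontal positions for n individual RTs: the proportion plus normal noise."""
    return proportion + rng.normal(0.0, sd, size=n)

rng = np.random.default_rng(3)
correct = rng.random(800) < 0.85
rts = 0.3 + rng.gamma(2.0, 0.1, size=800)
pts = qp_points(rts, correct)
x_correct = jittered_x(pts["correct"][0], int(correct.sum()), rng)
```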

In Fig. 6, the quantile probability plot for the pooled participant data is shown for the fourth and fifth sessions together. The five lines correspond to the five RT quantiles that were computed. The six RT distributions depicted in the plot correspond to correct and error RTs in each of the three different RSI conditions: 500 msec, 1 sec and 2 sec. Performance was much better than chance in all conditions, so the correct RT distributions appear on the right side of the plot.

For the average participant (represented by the pooled data), accuracy increased with RSI, so blocks with longer RSIs were associated with a higher proportion of correct responses. (This pattern also held for all but two of the participants individually.) Thus the correct responses for the 2 sec-RSI condition appear as the rightmost column of quantiles, and the error responses in this condition form the leftmost column.

Fig. 6 clearly shows that the more likely correct responses coincided with longer RTs. Similarly, the corresponding error RTs were longer for the less likely errors. Thus a tradeoff between speed and accuracy is depicted in the U-shaped plot. In fact, the data are consistent with the theory of optimal DDM parameterization and SAT adaptation: blocks with longer RSIs were associated with more accurate but slower responses. In contrast, when changes in the drift parameter produce changes in accuracy, speed and accuracy do not trade off against each other; instead, response time and accuracy are negatively correlated. The resulting quantile probability plot in that case has an inverted U shape, as in Ratcliff and Tuerlinckx (2002), where variations in drift, but not threshold, were simulated. This pattern of increasing RT as RSI increased was observed in all but two participants. The results for the average participant are thus — so far — consistent with threshold adaptation, but not with drift adaptation.
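The contrast between threshold and drift manipulations can be checked with the pure-DDM expressions ER = 1/(1 + exp(2Az/c²)) and DT = (z/A) tanh(Az/c²) (our reading of Eqs. 2 and 3, with an unbiased start and c = 0.1); the parameter values are illustrative. Raising z increases both accuracy and mean RT, whereas raising A increases accuracy while decreasing mean RT.

```python
import math

def accuracy_and_rt(A, z, T0=0.3, c=0.1):
    """Pure-DDM accuracy and mean RT for an unbiased start."""
    u = A * z / c**2
    return 1.0 - 1.0 / (1.0 + math.exp(2.0 * u)), (z / A) * math.tanh(u) + T0

base = accuracy_and_rt(A=0.2, z=0.05)
higher_threshold = accuracy_and_rt(A=0.2, z=0.07)  # slower and more accurate: a tradeoff
higher_drift = accuracy_and_rt(A=0.3, z=0.05)      # faster and more accurate: no tradeoff
print(base, higher_threshold, higher_drift)
```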

There is also no significant difference between the median correct RT and the median error RT in the 500 msec (p = 0.3239, Wilcoxon rank-sum test) and 1 sec (p = 0.28) RSI conditions, although there is a trend in which average error RT is slower than average correct RT by about 30 msec. Data from these conditions are therefore arguably consistent with the pure DDM. Average error RTs are significantly slower, by about 50 msec, in the 2 sec-RSI condition (medians are significantly different at p = 0.0192); data from this condition are therefore inconsistent with the pure DDM.

Model fits

We fit RT distributions using a constrained optimization algorithm implemented in MATLAB's fmincon.m function. Appendix D details the model-fitting procedure, but we note here that researchers often use an unconstrained Simplex algorithm (Nelder & Mead, 1965) to fit the DDM to data (e.g., Ratcliff & McKoon, 2008). In contrast, constrained optimization approaches allow a user to restrict parameter values with equality and inequality constraints, including bounding parameters above or below by a constant. As we noted previously, we restricted the extended DDM's additional variability parameters by bounding them above. Table 1 lists the bounds we used during fitting. We examined a range of upper bound values and found that fitted A and T0 values bottomed out at values near those obtained from a pure DDM fit as the bounds were reduced to the following: 0.04 for sz, 0.03 for sA and 0.08 for T0. As previously discussed, we chose the bounds in Table 1 (0.03, 0.08, 0.1, respectively, as well as 0.05 for contaminant proportion p0) because they appeared to be the variability parameter values that were recovered in the most accurate fits of A and T0 in the simulated data sets of Ratcliff and Tuerlinckx (2002) (see Fig. 6 in that paper), and because their simulations used pure DDM parameter values close to those obtained by fits to our data.
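For readers working outside MATLAB, the idea of bound-constrained fitting can be sketched with SciPy's `scipy.optimize.minimize` using the L-BFGS-B method, which accepts simple lower and upper bounds per parameter, much like the bound arguments of `fmincon`. This is a deliberately simplified stand-in for the procedure in Appendix D: it fits only the pure DDM (not the extended model), matches accuracy and mean RT rather than RT quantiles, shares A and T0 across conditions while letting z vary, and uses weights, bounds and starting values that are our own assumptions.

```python
import numpy as np
from scipy.optimize import minimize

C = 0.1  # noise (scaling) parameter, fixed as in the text

def predict(A, z, T0):
    """Pure-DDM accuracy and mean RT (unbiased start), vectorized over z."""
    accuracy = 1.0 - 1.0 / (1.0 + np.exp(2.0 * A * z / C**2))
    mean_rt = (z / A) * np.tanh(A * z / C**2) + T0
    return accuracy, mean_rt

def loss(theta, obs_acc, obs_rt):
    """Weighted squared error; the 0.01 and 0.02 s scales are arbitrary weights."""
    A, T0, z = theta[0], theta[1], theta[2:]
    acc, rt = predict(A, z, T0)
    return np.sum(((acc - obs_acc) / 0.01) ** 2 + ((rt - obs_rt) / 0.02) ** 2)

def fit_shared_drift(obs_acc, obs_rt):
    """Shared A and T0, one threshold per condition, box bounds on every parameter."""
    obs_acc = np.asarray(obs_acc, float)
    obs_rt = np.asarray(obs_rt, float)
    n = obs_acc.size
    start = np.array([0.15, 0.25] + [0.05] * n)
    bounds = [(0.01, 1.0), (0.0, 1.0)] + [(0.005, 0.3)] * n
    return minimize(loss, start, args=(obs_acc, obs_rt), method="L-BFGS-B", bounds=bounds)

# Synthetic "observations" generated from known parameters (illustrative only)
true_acc, true_rt = predict(0.2, np.array([0.04, 0.05, 0.07]), 0.3)
result = fit_shared_drift(true_acc, true_rt)
print(result.x)  # [A, T0, z_1, z_2, z_3]
```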

Table 1 lists parameter values from a fit to the group data. Following the practice of Ratcliff and colleagues, we set the value of the noise parameter c to 0.1 (c is a ‘scaling parameter’: multiplying c by any factor k produces identical fits if drift, threshold and starting point, together with their variability parameters, are also multiplied by k, so the actual value of c is irrelevant; Ratcliff & Tuerlinckx, 2002). In these simultaneous fits to data from each RSI condition, all parameters other than threshold and starting point were constrained to be equal across RSI conditions. This is consistent with the notion that drift is constant when the DDM is parameterized optimally, and it maximizes the power of the analysis to detect changes in threshold. At the same time, it leaves open the possibility that starting points will violate the prediction of being equidistant from the two thresholds. (Furthermore, a separate parametric bootstrap analysis with unconstrained fits showed no significant differences between any parameters other than threshold across conditions.)
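The scaling property can be checked with the pure-DDM expressions ER = 1/(1 + exp(2Az/c²)) and DT = (z/A) tanh(Az/c²) (assumed here for Eqs. 2 and 3): both depend on A, z and c only through Az/c² and z/A, so multiplying A, z and c by the same k leaves the predictions unchanged, while T0 is unaffected. The values below are illustrative.

```python
import math

def er_and_dt(A, z, c):
    """Pure-DDM error rate and mean decision time for an unbiased start."""
    u = A * z / c**2
    return 1.0 / (1.0 + math.exp(2.0 * u)), (z / A) * math.tanh(u)

k = 3.0
original = er_and_dt(A=0.2, z=0.05, c=0.1)
rescaled = er_and_dt(A=0.2 * k, z=0.05 * k, c=0.1 * k)
print(original, rescaled)  # equal up to floating-point rounding
```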

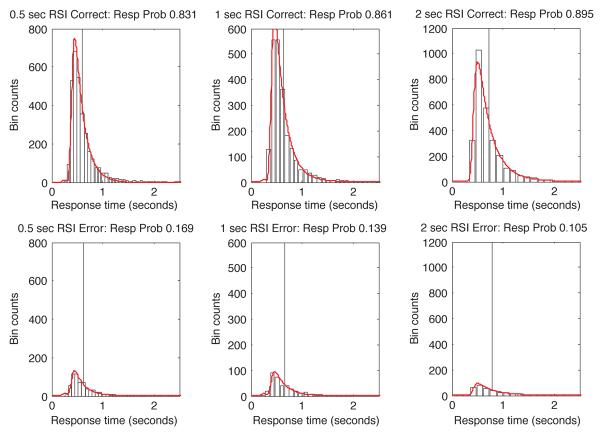

Fig. 7 shows a graphical comparison between histograms of the empirical data and the appropriately scaled RT densities corresponding to this model-fit, separately for correct and error responses (top and bottom rows of plots respectively). Visually, the match is close. However, model-data mismatches are more visible in quantile-probability plots than in density plots, so we superimpose fitted quantile-probability plots (X markers) on the empirical plots in Fig. 6. Visually, the match in Fig. 6 is also close, except in the case of the 500 msec-RSI condition, where accuracy is slightly overestimated and the 0.9 quantile RT is significantly underestimated, and in the last two error quantiles for the 1 sec-RSI condition, where RT is underestimated. These shortcomings can be rectified by leaving the extended DDM's variability parameters completely unconstrained, but this comes at the cost of inflated drift and T0 estimates. Fit-error can be further reduced by allowing all parameters to vary, but this comes at the cost of weakening the power to detect threshold changes across conditions.
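Fit quality in quantile terms is commonly summarized with the chi-square statistic of Ratcliff and Tuerlinckx (2002): the five RT quantiles split each response type's RTs into six bins holding 10, 20, 20, 20, 20 and 10% of those responses, and the observed bin proportions are compared with the proportions a model predicts between the same quantiles. The sketch below assumes this form and takes the model's defective CDF (probability of that response with RT ≤ t) as a user-supplied function; the names and the exponential toy model are hypothetical.

```python
import numpy as np

# Mass between the 0, .1, .3, .5, .7, .9 and 1 quantiles
BIN_MASS = np.array([0.1, 0.2, 0.2, 0.2, 0.2, 0.1])

def chi_square_term(n_trials, p_response, rt_quantiles, model_cdf, model_p_response):
    """Chi-square contribution of one response type (correct or error) in one condition.

    p_response: observed proportion of this response type;
    model_cdf(t): model probability of this response with RT <= t;
    model_p_response: model probability of this response type overall.
    """
    observed = p_response * BIN_MASS
    cdf_at_q = np.array([model_cdf(t) for t in rt_quantiles])
    predicted = np.diff(np.concatenate(([0.0], cdf_at_q, [model_p_response])))
    predicted = np.maximum(predicted, 1e-10)  # guard against empty predicted bins
    return n_trials * np.sum((observed - predicted) ** 2 / predicted)

# Toy check: exponential RTs, with quantiles taken from the same model
tau, p = 0.3, 0.85
q = -tau * np.log(1.0 - np.array([0.1, 0.3, 0.5, 0.7, 0.9]))
exact = lambda t: p * (1.0 - np.exp(-t / tau))
print(chi_square_term(1000, p, q, exact, p))  # approximately 0
```

Summing such terms over response types and RSI conditions gives the overall fit statistic that a constrained optimizer would minimize.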

Figure 7.

Group RT histograms and predicted RT densities from a fit of the DDM, sessions 4-5, Experiment 1. Columns correspond to distinct RSI conditions. The top row shows RT distributions for correct responses, while the bottom row shows the distributions of error RTs. Vertical lines indicate average RTs in each condition, computed separately for errors and corrects. Histogram bin widths were the same in both the correct and error plots for each RSI, and were determined by the Freedman-Diaconis rule (described in Appendix D).
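The Freedman-Diaconis rule sets the bin width to 2 × IQR × n^(−1/3). The sketch below computes shared bin edges for one RSI condition; computing the width from the combined correct and error RTs is our assumption, since the caption says only that both plots used the same width. NumPy also offers `np.histogram_bin_edges(x, bins="fd")`, which applies the same rule but rounds to a whole number of equal bins spanning the data range.

```python
import numpy as np

def fd_bin_width(x):
    """Freedman-Diaconis bin width: 2 * IQR * n^(-1/3)."""
    x = np.asarray(x, float)
    q1, q3 = np.percentile(x, [25, 75])
    return 2.0 * (q3 - q1) / np.cbrt(x.size)

def shared_edges(correct_rts, error_rts):
    """Common bin edges for the correct and error histograms of one condition."""
    all_rts = np.concatenate([np.asarray(correct_rts, float), np.asarray(error_rts, float)])
    width = fd_bin_width(all_rts)
    return np.arange(all_rts.min(), all_rts.max() + width, width)

# Synthetic RTs for one condition (illustrative only)
rng = np.random.default_rng(4)
correct_rts = 0.3 + rng.gamma(2.0, 0.10, 900)
error_rts = 0.3 + rng.gamma(2.0, 0.12, 120)
edges = shared_edges(correct_rts, error_rts)
counts_correct, _ = np.histogram(correct_rts, bins=edges)
counts_error, _ = np.histogram(error_rts, bins=edges)
```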

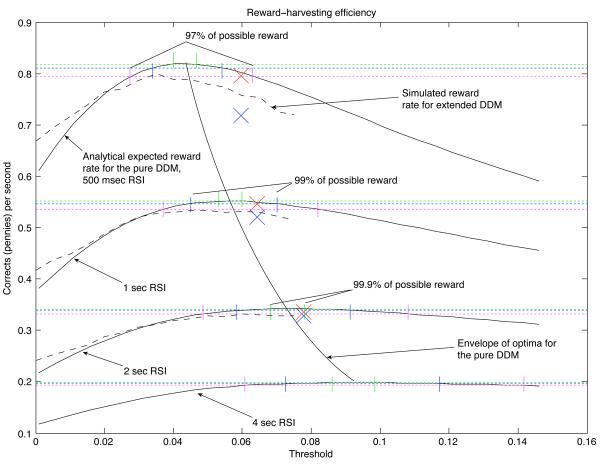

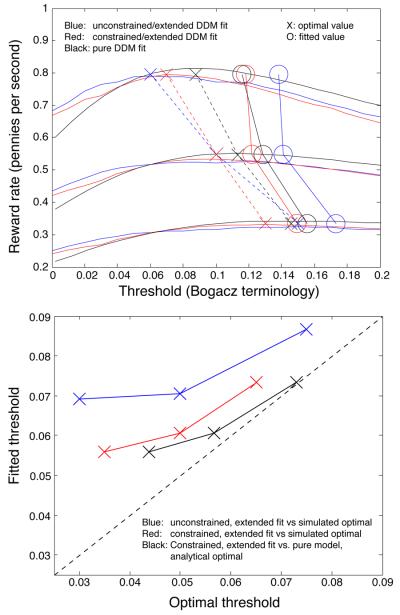

Quantitatively, the extended DDM's variability parameters contributed to a large reduction in fit error relative to pure DDM fits (pure DDM fits, not listed, had chi-square fit errors on the order of 1800, compared to 195 for the constrained, extended DDM). However, these variability parameters were not obviously so large as to rule out application of the optimality theory developed for the pure DDM. To confirm this, we simulated the extended DDM with the fitted parameter values and a range of threshold values to numerically estimate the expected reward rate as a function of threshold. This approximation (plotted in Fig. 10) was close to the function predicted analytically by the pure DDM, with optimal thresholds appearing to be generally smaller than the optimal thresholds for the pure DDM (peaks of the extended DDM's simulated reward rate function are to the left of the peaks of the pure DDM's analytical reward rate function). We discuss this figure in more detail when we compare fitted thresholds to optimal values for the pure DDM below.
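The numerical reward-rate estimate for the extended DDM can be sketched as a vectorized Euler-Maruyama simulation. We assume Ratcliff's usual conventions for the variability parameters (drift drawn from a normal distribution with SD sA, starting point uniform over a range of width sz, residual latency uniform over a range of width st), thresholds at ±z with the upper one correct, and reward rate computed as total rewards divided by total time. The step size, trial count and time-out are our choices, the contaminant process (p0) is omitted, and the parameter values in the usage lines are illustrative rather than those in Table 1.

```python
import numpy as np

def simulate_reward_rate(z, A, T0, rsi, sA=0.0, sz=0.0, st=0.0, c=0.1,
                         n_trials=2000, dt=1e-3, max_t=5.0, rng=None):
    """Monte Carlo reward rate (rewards per second) of an extended DDM.

    Assumes sz / 2 < z. Trials that do not finish within max_t are dropped.
    """
    rng = np.random.default_rng() if rng is None else rng
    drift = rng.normal(A, sA, n_trials)                      # across-trial drift variability
    x = rng.uniform(-sz / 2, sz / 2, n_trials)               # starting-point variability
    t_res = rng.uniform(T0 - st / 2, T0 + st / 2, n_trials)  # residual-latency variability
    decision_t = np.full(n_trials, np.nan)
    correct = np.zeros(n_trials, dtype=bool)
    active = np.ones(n_trials, dtype=bool)
    noise_sd = c * np.sqrt(dt)
    for step in range(1, int(round(max_t / dt)) + 1):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        # Euler-Maruyama update for the trials that are still accumulating
        x[idx] += drift[idx] * dt + noise_sd * rng.standard_normal(idx.size)
        up = x[idx] >= z
        down = x[idx] <= -z
        finished = idx[up | down]
        decision_t[finished] = step * dt
        correct[idx[up]] = True
        active[finished] = False
    done = ~active
    total_time = np.sum(decision_t[done] + t_res[done] + rsi)
    return np.count_nonzero(correct[done]) / total_time

rng = np.random.default_rng(5)
for z in (0.03, 0.05, 0.07, 0.09):
    print(z, simulate_reward_rate(z, A=0.2, T0=0.3, rsi=1.0, sA=0.03, sz=0.04, st=0.08, rng=rng))
```

With all variability parameters set to zero, the estimate should approach the analytical pure-DDM reward rate, which gives a simple check on the simulation.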

Figure 10.

Reward harvesting efficiency of participants in three RSI conditions. One solid reward-rate curve per RSI condition represents the analytical expected reward rate for the pure DDM with the A and T0 values listed in Table 1, and with extended-DDM variability parameters set to 0. Dashed reward-rate curves show the numerical average reward rate for the extended DDM with the nonzero variability parameters listed in Table 1, simulated 10,000 times at 16 different threshold values. Green vertical lines bound intervals within which a threshold setting is expected to produce 99.9% of the maximum reward; blue lines bound 99% intervals, and magenta lines bound 97% intervals. Superimposed on these plots are blue X's denoting the fitted threshold in each condition and the observed rate of reward in each condition (total of rewards divided by total duration). Red X's correct for the penalty delays incurred by anticipatory responding, illustrating the larger proportion of anticipations in conditions with a shorter mean RSI.

Confidence intervals for parameter estimates

In order to carry out hypothesis tests regarding the adaptation of model parameters across task conditions, we used the parametric bootstrap method (Efron & Tibshirani, 1993) to construct confidence intervals around the fitted parameter values in each condition.

To test whether thresholds were adapted across conditions — and that other parameter adaptations were not the primary contributors to SAT adaptation — we generated 300 bootstrap samples of simulated RTs for the parameters obtained by fitting the extended DDM to the pooled RT data. Simulated RTs were generated with the probability integral transform method discussed in Tuerlinckx, Maris, Ratcliff, and De Boeck (2001) and computed in MATLAB with the cumulative RT distribution function CDFDif.m of Tuerlinckx (2004). We then fit each simulated data set and computed non-parametric 95% confidence intervals around the median of the parameter estimates in order to test the statistical significance of parameter adaptations across RSI conditions.
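The two ingredients of the parametric bootstrap can be sketched generically: sampling RTs by the probability integral transform (drawing U ~ Uniform(0, 1) and solving F(t) = U, here by vectorized bisection rather than with CDFDif.m), and taking the 2.5th and 97.5th percentiles of the refitted parameter across bootstrap replicates. The shifted-exponential "model" with a one-parameter moment estimator is a toy stand-in for the DDM fit, and all names are hypothetical.

```python
import numpy as np

def sample_inverse_cdf(cdf, n, lo, hi, rng, iters=60):
    """Draw n samples by solving cdf(t) = U on [lo, hi] with vectorized bisection.
    `cdf` must be nondecreasing and accept NumPy arrays."""
    u = rng.uniform(size=n)
    a = np.full(n, float(lo))
    b = np.full(n, float(hi))
    for _ in range(iters):
        m = 0.5 * (a + b)
        below = cdf(m) < u
        a = np.where(below, m, a)
        b = np.where(below, b, m)
    return 0.5 * (a + b)

def percentile_ci(estimates, level=0.95):
    """Percentile bootstrap interval."""
    alpha = (1.0 - level) / 2.0
    lo, hi = np.quantile(estimates, [alpha, 1.0 - alpha])
    return lo, hi

# Toy model: RT = T0 + Exponential(tau) with T0 known; tau is estimated as mean(RT) - T0
T0 = 0.3

def model_cdf(tau):
    return lambda t: 1.0 - np.exp(-np.maximum(t - T0, 0.0) / tau)

rng = np.random.default_rng(6)
observed = sample_inverse_cdf(model_cdf(0.2), 500, 0.0, 10.0, rng)
tau_hat = observed.mean() - T0  # "fit" to the observed data
boot = [sample_inverse_cdf(model_cdf(tau_hat), 500, 0.0, 10.0, rng).mean() - T0
        for _ in range(300)]    # 300 replicates, as in the text
print(tau_hat, percentile_ci(boot))
```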

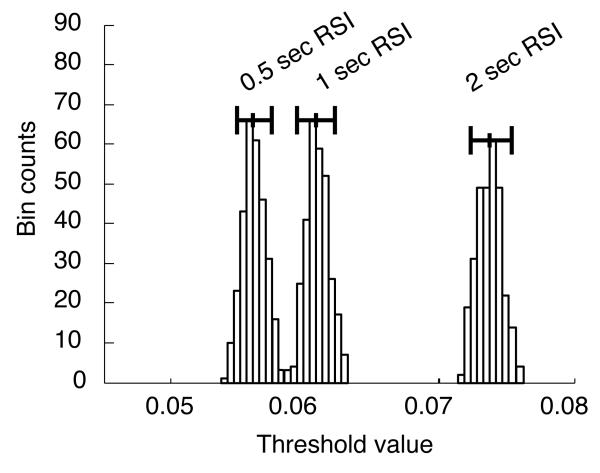

Fig. 8 shows superimposed histograms for the three different threshold estimates. The leftmost histogram corresponds to the 500 msec condition, the middle histogram to the 1 sec RSI condition, and the rightmost histogram to the 2 sec RSI condition. Whisker-bars plotted at the top of the tallest histogram bins denote 95% percentile confidence intervals for each parameter-estimate. They indicate significant differences in the parameter estimates across conditions.

Figure 8.

Parametric bootstrap estimates of threshold z, showing significant differences in threshold across conditions. Horizontal whisker lines denote 95% bootstrap confidence intervals around the median threshold value.

Thresholds and starting points were the only parameters that were allowed to range freely across RSI conditions in this bootstrap analysis. In other fits to group data that allowed all extended-DDM parameters to range freely, only the threshold parameters showed any significant differences across conditions. In contrast, fits to data from some individual participants did appear to show an increase in drift with increasing RSI. Such an increase in drift is inconsistent with prediction 1a. Whether this increase in estimated drift was due simply to correlations between drift, threshold and residual latency (which showed an increasing trend as RSI increased in individual fits), or whether the SNR for the individual participants concerned actually increased when RSI was longer is an open question. However, no participants displayed an inverted-U shape in their quantile probability plots, and most clearly displayed a U-shape. This suggests that at minimum, thresholds were increasing simultaneously with adaptations in drift across RSI conditions. Fits to individual performance for some participants also suggested that T0 may have increased as RSI increased. This increase violates prediction 1b, but again, this may be an artifact of parameter correlations. There is no evidence of T0 adaptation for the average participant.

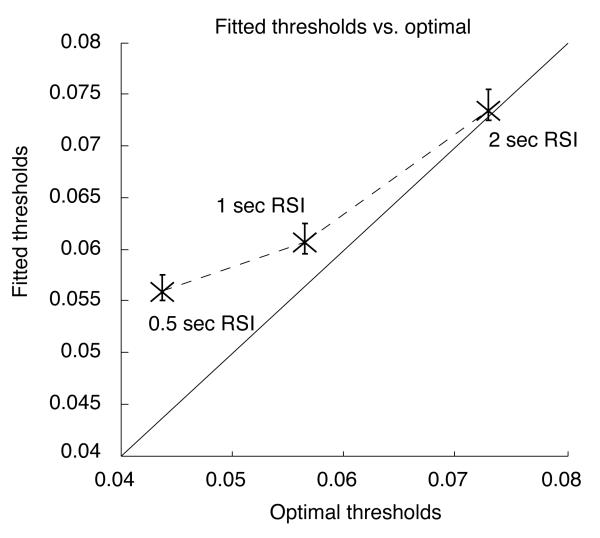

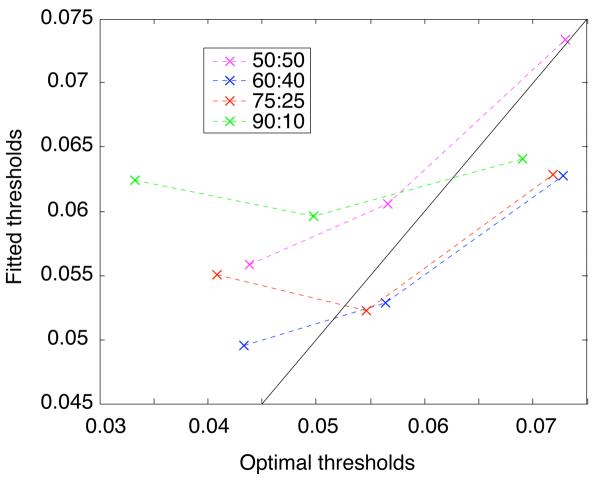

Proximity of fitted thresholds to optimal values

Fig. 9 shows fitted thresholds plotted as a function of the optimal thresholds for each condition. Optimal values were computed by numerically solving Eq. 5 after substituting fitted drift and residual latency parameters. The best approximation to the optimal threshold occurred in the 2 second RSI condition (the optimal value was within the 95% confidence interval obtained by the parametric bootstrap analysis). The approximation was worse in the 1 second RSI condition, and was quite far off in the 500 msec RSI condition. In the latter two cases, thresholds were suboptimally large. This is consistent with previous observations in the literature, which have been interpreted as reflecting an emphasis on accuracy over speed that results in a failure to maximize reward (Maddox & Bohil, 1998).

Figure 9.

Plot of fitted thresholds vs. optimal thresholds. Vertical crossbars indicate 95% confidence intervals around the fitted threshold values plotted as X's.

As we relaxed the upper bounds on the extended DDM's variability parameters during fitting, the fitted values of A and T0 inflated. Substituting these inflated values into Eq. 5 led to decreased values of the predicted optimal threshold, causing fitted thresholds to appear much larger than optimal. It is possible, however, that if participants implement the DDM but cannot control variability in starting point, drift and T0, then they may still be able to set thresholds to nearly optimal values for the extended DDM. These values might then only appear to be suboptimal according to an analysis based on Eq. 5.

Analytical expressions for reward rate as a function of threshold do not exist for the extended DDM, so we tested this hypothesis by numerically simulating the extended DDM with the parameters from Table 1. The resulting reward rate curves are close to the analytical curves for the pure DDM, but appear to have even smaller optimal thresholds (we also did this for a completely unconstrained fit of the extended DDM; results shown in Fig. F1 of Appendix F demonstrate a larger mismatch between fitted and optimal thresholds). The match between simulations of the constrained, extended DDM and analytical results suggests that predictions based on the pure DDM are likely to be useful in practice even if there is some variability in parameters that the pure DDM assumes to be constant.

Fig. 10 shows these simulation-based curves along with the analytical reward rate curves for the pure DDM, and illustrates the efficiency of reward gathering in the different RSI conditions of Experiment 1. Participants were able to achieve 97% of the maximum reward rate in the 500 msec-RSI condition, 99% of the maximum in the 1 second-RSI condition, and 99.9% in the 2 second-RSI condition. Since relative reward harvesting efficiency increases as RSI increases, we speculate that performance might be even closer to optimal with longer RSIs (a 4 second-RSI curve is plotted in Fig. 10 for comparison).
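The efficiency intervals shown in Fig. 10 (thresholds yielding at least 97%, 99% or 99.9% of the maximum reward rate) can be sketched for the pure DDM with a grid search, assuming RR = (1 − ER)/(DT + T0 + RSI) with the unbiased-start expressions ER = 1/(1 + exp(2Az/c²)) and DT = (z/A) tanh(Az/c²). Parameter values are illustrative, and the grid bounds are our choice.

```python
import numpy as np

def reward_rate(z, A, T0, rsi, c=0.1):
    """Pure-DDM expected reward rate, vectorized over z."""
    er = 1.0 / (1.0 + np.exp(2.0 * A * z / c**2))
    dt = (z / A) * np.tanh(A * z / c**2)
    return (1.0 - er) / (dt + T0 + rsi)

def efficiency_interval(A, T0, rsi, fraction, c=0.1, z_max=0.3, n=30001):
    """Smallest and largest grid thresholds whose reward rate is >= fraction * max.
    Because RR is unimodal in z, the qualifying thresholds form a single interval."""
    z = np.linspace(1e-6, z_max, n)
    rr = reward_rate(z, A, T0, rsi, c)
    ok = z[rr >= fraction * rr.max()]
    return ok.min(), ok.max()

for rsi in (0.5, 1.0, 2.0):
    print(rsi, [efficiency_interval(0.2, 0.3, rsi, f) for f in (0.97, 0.99, 0.999)])
```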

Fig. 10 also shows the effect of anticipations. X's mark the fitted threshold and the reward rate earned in each condition. Blue X's are based on summing up all rewards and dividing by the duration of blocks of trials. This duration may also include a number of 4-second penalty delays incurred for anticipatory responses. The DDM predictions of Eq. 4 do not incorporate these delays, however, so we subtracted out the total penalty duration from the block duration in each condition to get a corrected, earned reward rate estimate for comparison with the DDM predictions; these estimates are plotted with red X's. The differences between the blue and red X's in each condition therefore indicate the proportion of anticipations in each condition, and they demonstrate that the frequency of anticipations decreased dramatically as RSI increased.
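The correction amounts to removing the penalty time from the denominator. A minimal sketch, with hypothetical counts chosen only to make the arithmetic easy to check:

```python
PENALTY_S = 4.0  # penalty delay per anticipatory response (from the text)

def reward_rates(n_rewards, block_duration_s, n_anticipations, penalty_s=PENALTY_S):
    """Return (raw, penalty-corrected) reward rates in rewards per second."""
    raw = n_rewards / block_duration_s
    corrected = n_rewards / (block_duration_s - n_anticipations * penalty_s)
    return raw, corrected

raw, corrected = reward_rates(n_rewards=150, block_duration_s=240.0, n_anticipations=5)
# raw = 150 / 240 = 0.625; corrected = 150 / (240 - 20), about 0.682
```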

Discussion

Consistent with the predictions of an optimally tuned DDM, fits to pooled data from all participants (and to data from individual participants) suggest that threshold values increased with RSI across blocks (prediction 1d), and that starting points remained equidistant from both thresholds (prediction 1c). In the case of pooled data, no other parameters were seen to covary with mean RSI (predictions 1a and 1b). An SAT function relating expected RT and accuracy is also determined by the drift parameter of the DDM, and this function was approximated by the observed SATs in the three RSI conditions. However, both individual participants and the average participant represented by pooled data appeared to set thresholds at values higher than optimal in two of the RSI conditions (violating prediction 1e).

A possible explanation for suboptimally high thresholds and the suboptimally high accuracy that results is that participants may derive intrinsic value from accuracy itself (Maddox & Bohil, 1998). Another possible explanation for a propensity toward suboptimally high thresholds was proposed in Bogacz et al. (2006). There it was argued that if errors in threshold selection were to occur, then it would be better to err toward higher rather than lower thresholds. This argument derives from the skewed shape of the curve defining reward rate as a function of threshold (see Fig. 2A and Fig. 10). This skew implies that reward rate decreases more rapidly as thresholds become suboptimally small than as they become suboptimally large.

The proportion of anticipatory responses in each RSI condition suggests a third possibility: participants may need to set thresholds higher than the optimum in conditions where anticipations are more likely. It may be that anticipation becomes a prepotent behavior at high response rates (which are much higher in the 500 msec-RSI condition than in the 2 second-RSI condition, for example). If so, then setting thresholds artificially high may reduce the likelihood of anticipation by slowing the response rate, and the need for this slowing should decrease as RSI increases. Consistent with this explanation (or at least with a general impairment of strategic control at short RSIs), several researchers have found that RTs increase and accuracy decreases as RSI decreases below 500 msec (Jentzsch & Dudschig, 2009; Sommer, Leuthold, & Soetens, 1999).

Another curious aspect of the data is that the reward rate curves plotted as a function of threshold in Fig. 10 flatten as the RSI increases. Under simple hill-climbing strategies for optimizing thresholds (e.g., Myung & Busemeyer, 1989), this flatness would suggest that deviations from optimal thresholds should be larger as RSIs increase. However, it may be that the amount of reward earned as a proportion of the total possible is the quantity that determines performance (such proportional judgments have often been proposed to underlie Weber's law for just-noticeable differences in perceptual judgments, for example). If such ratios are what determine performance, then absolute amounts of reward (and flatter maxima of reward rate curves for longer RSIs) are irrelevant. These two factors together — proportional reward rate estimation and performance degradation with increasing task pace — constitute a possible explanation for improvements in performance as RSIs increase.

A fourth possibility is that reward simply does not have as strong an effect as predicted on behavior. Importantly, though, the theory of optimal DDM parameterization also predicts dramatic, qualitative changes in behavior in the case of unequally likely stimuli and unequally rewarded responses that result from optimal threshold and starting point shifts. Observing behavior consistent with these predictions would bolster the case for strategic threshold adaptation in Experiment 1. We assess these predictions in Experiments 2 and 3.

Experiment 2

In decision making tasks involving multiple trials, stimulus ratios provide potentially useful information to the decision maker. When stimuli are unequally likely, a decision maker can exploit estimates of prior probability to improve earnings by favoring the response to the more frequent stimulus (we refer to this response as the favored response, and the more likely stimulus as the favored stimulus). Optimizing the pure DDM produces precise, quantitative predictions about how the decision maker should respond to changes in stimulus probabilities (Π and 1 − Π) when stimulus discriminability is held constant. The first two of these predictions are identical to those in Experiment 1, and the remainder are modified to account for unequal stimulus probabilities:

2a. Estimates of drift (A) should be constant across all stimulus-probability and RSI conditions.

2b. Estimates of residual latency (T0) should be constant across conditions.

2c. Estimates of the starting point x0 should be shifted toward the favored response threshold as specified by Eq. 6, reflecting a bias toward the favored response; the size of the optimal starting-point shift should be independent of the mean RSI.

2d. As in Experiment 1, estimates of the threshold parameter (z) should increase as RSI increases, reflecting a shift of the SAT toward greater accuracy; threshold magnitudes in this case should equal the function z(A, c, T0, RSI, Π) defined implicitly by Eq. 7, evaluated at the current values of RSI and Π and the fitted values of A, c, and T0.

2e. Estimates of the threshold parameter (z) should decrease according to Eq. 7 as Π increases; as shown numerically in Bogacz et al. (2006), the optimal threshold decrease should be smaller than the optimal starting-point shift.
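Prediction 2c can be sketched numerically. The sketch assumes that Eq. 6 takes the log-prior-odds form x0 = (c²/2A) ln(Π/(1 − Π)), in which the starting point equals the prior log odds expressed in units of accumulated evidence (multiplying x0 by 2A/c² recovers ln(Π/(1 − Π))). This form is an assumption. With the pooled A and c, it reproduces the "Optimal start pt" rows of Table 2, and the RSI does not enter it. The function name is hypothetical.

```python
import math

A = 0.17348  # pooled-fit drift (Table 2)
C = 0.1      # noise coefficient c


def optimal_start_point(prior, a=A, c=C):
    """Assumed form of Eq. 6: shift toward the favored threshold,
    x0 = (c^2 / 2A) * ln(prior / (1 - prior)); independent of RSI."""
    return (c**2 / (2.0 * a)) * math.log(prior / (1.0 - prior))


for prior in (0.6, 0.75, 0.9):
    print(f"P = {prior:.2f}: optimal x0 = {optimal_start_point(prior):.6f}")
```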

Expected reward rate for the pure DDM in Experiment 2 is thus maximized by shifting the starting point of evidence integration (x0) in the direction of the favored response threshold, by slightly reducing both thresholds, and by leaving drift to be determined entirely by the stimulus. (In contrast, for the extended DDM, it is possible that strategically adapting the mean drift value along with thresholds and starting points across conditions could maximize the expected reward rate.)

A particularly strong prediction of the optimally parameterized DDM is that, for combinations of a sufficiently short RSI and sufficiently asymmetric stimulus ratios, the shift in starting point places it beyond the response threshold for the favored response. At this point, participants should exhibit non-integrative responding: on every trial they should make the response corresponding to the more frequent stimulus, with average RT comparable to that observed in an easy signal detection task. Eq. 8 expresses this prediction as a function of task conditions (RSI and stimulus probability) and defines the surface depicted in Fig. 3. For conditions falling below the surface, participants should exhibit non-integrative responding. Behavior conforming to these predictions would constitute strong support both for the DDM and for the hypothesis that participants adjust the parameters of their decision processes to maximize reward rate. To test these quantitative predictions, we conducted an experiment similar to Experiment 1 that manipulated the probabilities of the two stimuli in addition to the RSI.
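The non-integrative condition can be checked directly against the optimal values reported in Table 2. Responding should become non-integrative wherever the optimal starting point x0 lies at or beyond the optimal threshold z. The sketch below compares against the tabulated optimal thresholds rather than re-deriving Eq. 7 or Eq. 8. It again assumes the log-prior-odds form of Eq. 6, and the function names are hypothetical.

```python
import math

A, C = 0.17348, 0.1          # pooled-fit drift and noise (Table 2)
PRIORS = (0.6, 0.75, 0.9)    # prior probability of the favored stimulus
# "Optimal thresh" rows of Table 2, keyed by mean RSI in seconds.
OPTIMAL_Z = {
    0.5: (0.043370, 0.040829, 0.033304),
    1.0: (0.056341, 0.054623, 0.049731),
    2.0: (0.072819, 0.071804, 0.069020),
}


def optimal_start_point(prior, a=A, c=C):
    """Assumed form of Eq. 6: x0 = (c^2 / 2A) * ln(prior / (1 - prior))."""
    return (c**2 / (2.0 * a)) * math.log(prior / (1.0 - prior))


def non_integrative_priors(rsi):
    """Priors for which the optimal start lies at or beyond the favored threshold."""
    return [p for p, z in zip(PRIORS, OPTIMAL_Z[rsi]) if optimal_start_point(p) >= z]


for rsi in sorted(OPTIMAL_Z):
    print(f"RSI {rsi:.1f} s: non-integrative at P in {non_integrative_priors(rsi)}")
```

With these values, the comparison flags the 90:10 conditions at the 500-msec and 1-sec RSIs. This matches the "short RSI, asymmetric ratio" region described above.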

Method

Participants

Participants were the same as in Experiment 1. They had completed the five sessions of Experiment 1 prior to the five sessions constituting this experiment.

Apparatus and stimuli

Apparatus and stimuli were identical to those in Experiment 1.

Procedure

Participants engaged in five hour-long task sessions consisting of blocks of trials in which one stimulus (one direction of coherent motion) was more likely than the other. For each block, the direction of motion designated as more likely was selected at random, with both directions equally probable. Participants were informed that the stimulus probabilities, in addition to the RSI, might differ across blocks. They were again informed that blocks would always last four minutes; therefore, faster responding would produce more trials overall. They were encouraged to earn as much money as possible.

Each session consisted of one practice block of 2-4 minutes (practice was reduced in later sessions), followed by twelve four-minute blocks, within each of which a given set of task parameters was held constant. The task parameters were the RSI and the proportions of leftward and rightward stimuli (equivalently, the prior probability Π of the favored stimulus). For each participant, motion coherence was set to the same value as in sessions 4 and 5 of Experiment 1. As in Experiment 1, the actual RSI on a given trial was jittered around the average value with a standard deviation of 100 msec, in order to discourage anticipations. A 4-second penalty delay between trials was again enforced whenever responses occurred less than 100 msec after stimulus presentation. RSI and Π were factorially covaried, with RSI taking values of 500 msec, 1 sec, or 2 sec, and Π taking values of 0.6, 0.75 or 0.9. The order of conditions was counterbalanced across sessions and across participants with a Latin square design. Two consecutive blocks of trials were allocated to each condition in which RSI was 2 seconds, since a 2-second RSI produced far fewer trials within a four-minute block than did an RSI of 1 second or 0.5 seconds. Finally, the twelve blocks in each session were followed by two two-minute blocks of a signal detection task identical to that of Experiment 1.
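The block structure can be sketched as follows. Nine RSI × Π conditions, with each 2-s condition doubled, yield twelve blocks per session. Several details here are our own assumptions, since the text does not specify them:

* The Latin square is built cyclically, with one row per session.
* The RSI jitter is Gaussian.
* The favored direction is drawn independently for every block.

All names are hypothetical.

```python
import random

RSIS = (0.5, 1.0, 2.0)       # mean RSI, seconds
PRIORS = (0.6, 0.75, 0.9)    # prior probability of the favored stimulus
CONDITIONS = [(rsi, prior) for rsi in RSIS for prior in PRIORS]  # 3 x 3 design


def session_blocks(row):
    """Twelve-block order for one session. The condition order is row `row`
    of a cyclic 9 x 9 Latin square (a hypothetical construction); each 2-s
    RSI condition is expanded into two consecutive blocks."""
    n = len(CONDITIONS)
    order = [CONDITIONS[(row + i) % n] for i in range(n)]
    blocks = []
    for rsi, prior in order:
        blocks.extend([(rsi, prior)] * (2 if rsi == 2.0 else 1))
    return blocks


def favored_direction(rng):
    """Direction designated as more likely in a block, chosen with equal probability."""
    return rng.choice(("left", "right"))


def jittered_rsi(mean_rsi, rng, sd=0.1):
    """Actual RSI on one trial; the Gaussian shape of the jitter is an assumption."""
    return rng.gauss(mean_rsi, sd)


rng = random.Random(7)
for rsi, prior in session_blocks(row=0):
    print(f"RSI {rsi:.1f} s, P = {prior:.2f}, favored: {favored_direction(rng)}")
```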

Analysis

To assess predictions, we examined in detail the performance of the average participant, represented by the pooled data from all participants. (Data for an individual participant are presented in Appendix E.) Since estimates of A and T0 were all that were required to make behavioral predictions, we were able to base our predictions in Experiment 2 entirely on a fit to the data from Experiment 1. Estimates of A and T0 were used to predict the optimal threshold z and starting point x0 based on Eq. 5 and Eq. 6, respectively (c was assumed to be 0.1, as noted previously). These values of A, T0, x0 and z (and the values of the variability parameters st, sx, and sA derived from extended DDM fits) in turn predicted a specific RT, accuracy and proportion of right vs. left responses as a function of mean RSI and stimulus probability in the various conditions of Experiment 2.
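The chain from parameters to behavioral predictions can be sketched for the pure DDM. This simplified version omits the variability parameters st, sx and sA that the predictions above include. It takes the threshold as an input (for example, an "Optimal thresh" entry of Table 2) instead of solving Eq. 5 or Eq. 7, and it assumes the log-prior-odds form of Eq. 6 for x0. The mean decision time uses the standard optional-stopping identity for drift-diffusion. Function names are hypothetical.

```python
import math

# Pooled-fit values from Table 2; time in seconds.
A, C, T0 = 0.17348, 0.1, 0.34547


def error_rate(z, x0, a=A, c=C):
    """Probability of reaching -z first for drift +a, thresholds at +/-z,
    start x0 with -z < x0 < z."""
    k = 2.0 * a / c**2
    return (math.exp(-k * x0) - math.exp(-k * z)) / (math.exp(k * z) - math.exp(-k * z))


def decision_time(z, x0, a=A, c=C):
    """Mean decision time: x(t) - a*t is a martingale, so a*E[T] = E[x(T)] - x0,
    with E[x(T)] = z * (1 - 2 * ER)."""
    return (z * (1.0 - 2.0 * error_rate(z, x0, a, c)) - x0) / a


def predict(z, prior, a=A, c=C, t0=T0):
    """(accuracy, mean RT, P(favored response)) for a pure DDM whose start x0
    follows the assumed Eq. 6 form and whose threshold z is supplied."""
    x0 = (c**2 / (2.0 * a)) * math.log(prior / (1.0 - prior))
    if x0 >= z:                     # start at or past the favored threshold
        return prior, t0, 1.0       # non-integrative: always the favored response
    er_fav = error_rate(z, x0, a, c)    # favored stimulus: start nearer correct bound
    er_unf = error_rate(z, -x0, a, c)   # unfavored stimulus: mirror image
    acc = prior * (1.0 - er_fav) + (1.0 - prior) * (1.0 - er_unf)
    rt = t0 + prior * decision_time(z, x0, a, c) + (1.0 - prior) * decision_time(z, -x0, a, c)
    p_fav = prior * (1.0 - er_fav) + (1.0 - prior) * er_unf
    return acc, rt, p_fav


# 1-second RSI, 60:40 condition, optimal threshold from Table 2.
print(predict(0.056341, 0.6))
```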

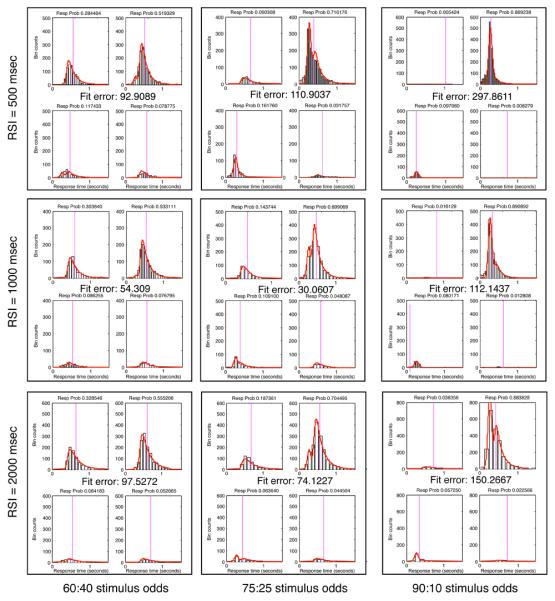

We also fit the data of Experiment 2 simultaneously with the data from Experiment 1 (these simultaneous fits are the ones listed in Tables 1 and 2), and the critical A and T0 parameters were within 8% of the values found in fits to the data from Experiment 1 alone. However, fitting in this experiment was complicated by the stimulus-proportion manipulation. Although the data conformed to our prediction of non-integrative responding when RSI was small and Π was large, the resulting RT distributions (both for pooled data and for individual participants) were bimodal, or showed hints of bimodality, in most conditions. Within participants, bimodality appeared to result from runs of non-integrative trials interspersed with runs of integrative trials (see Fig. E2); in the pooled data, it also arose in some conditions because some participants integrated while others did not. Since the DDM with a single set of parameters cannot produce a bimodal RT distribution, fitting the DDM to data from Experiment 2 was effectively impossible when stimulus ratios were greater than 60:40 and RSI was less than 1 sec.7

Table 2.

Fitted parameter values for the average participant (pooled data from all participants). Data from Experiment 1 and Experiment 2 were fit simultaneously, leading to parameter values identical to Table 1 for all parameters other than threshold, starting point, mixture weight and drift increment.

Pooled participant data, unequal stimulus probabilities

| Parameter | Value | Bound |
|---|---|---|
| Drift A | 0.17348 | |
| Noise coefficient c | 0.1 | |
| Residual latency T0 | 345.47 msec (compare to avg. signal detection RT of 301 msec) | |
| Drift std dev sA | 0.068683 | 0.08 |
| Start range sx | 0.03 | 0.03 |
| T0 range st | 0.1 | 0.10 |
| Contaminant proportion p0 | 0.029182 | 0.05 |
| Fast-guess RT mean | 266.77 msec | 290 |
| Fast-guess RT std dev | 49.099 msec | 100 |
| Total χ² fit error | 1020.104 | |

| Parameter, mean RSI | Stim prob 60:40 | Stim prob 75:25 | Stim prob 90:10 |
|---|---|---|---|
| Threshold z | | | |
| 500 msec | 0.049545 | 0.055035 | 0.062455 |
| 1 second | 0.052885 | 0.05222 | 0.059620 |
| 2 seconds | 0.062745 | 0.06289 | 0.064055 |
| Optimal threshold | | | |
| 500 msec | 0.043370 | 0.040829 | 0.033304 |
| 1 second | 0.056341 | 0.054623 | 0.049731 |
| 2 seconds | 0.072819 | 0.071804 | 0.069020 |
| Starting point x0 | | | |
| 500 msec | 0.000915 | 0.012183 | 0.021868 |
| 1 second | 0.004557 | 0.010700 | 0.011437 |
| 2 seconds | 0.003909 | 0.005578 | 0.011804 |
| Optimal starting point | | | |
| 500 msec | 0.011686 | 0.03166 | 0.063330 |
| 1 second | 0.011686 | 0.03166 | 0.063330 |
| 2 seconds | 0.011686 | 0.03166 | 0.063330 |
| Mixture weight | | | |
| 500 msec | 0.079295 | 0.48188 | 0.84125 |
| 1 second | 0.031668 | 0.22471 | 0.70061 |
| 2 seconds | 0.016402 | 0.13526 | 0.45467 |
| Drift increment | | | |
| 500 msec | 0.01536 | 0.00377 | 0 |
| 1 second | 0.00304 | 0.0001 | 0.083964 |
| 2 seconds | 0 | 0.03522 | 0.057137 |
| χ² fit error | | | |
| 500 msec | 92.91 | 110.90 | 297.86 |
| 1 second | 54.31 | 30.06 | 112.14 |
| 2 seconds | 97.53 | 74.12 | 150.27 |

Although a mixture of integrative and non-integrative responding is not predicted by an optimally parameterized, pure DDM, this result should be expected if there is variability in the model's parameters from trial to trial; this is precisely what is assumed in the extended DDM. In order to fit the data from Experiment 2, we therefore fit a model that was a mixture of a non-integrative or fast-guess distribution (consisting only of guesses that the more likely stimulus was present), together with an RT distribution generated by the DDM. Since the fast responses made in the signal detection blocks at the end of each session appeared almost normally distributed, we modeled the non-integrative mixture component as coming from a normal distribution.8
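The mixture idea can be illustrated with a minimal generative sketch. On each trial, with probability equal to the mixture weight, the response is a fast guess of the favored response with a normally distributed RT. Otherwise, the RT is T0 plus a decision time from a DDM trial. The sketch assumes a pure DDM simulated by Euler steps, so it has none of the extended DDM's variability. It uses the 75:25, 500-msec values from Table 2 and ignores that condition's drift increment. Function names are hypothetical.

```python
import random

# Pooled-fit values from Table 2; time in seconds.
A, C, T0 = 0.17348, 0.1, 0.34547
GUESS_MEAN, GUESS_SD = 0.26677, 0.049099   # fast-guess RT mean and SD


def ddm_trial(z, x0, drift, rng, c=C, dt=0.001):
    """Euler simulation of one pure-DDM trial (no trial-to-trial variability).
    Returns (choice, decision time): +1 = favored threshold, -1 = other."""
    x, t = x0, 0.0
    step_sd = c * dt ** 0.5
    while -z < x < z:
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
    return (1 if x >= z else -1), t


def mixture_trial(weight, z, x0, drift, rng):
    """With probability `weight`, a non-integrative fast guess of the favored
    response with normally distributed RT; otherwise a DDM trial plus T0.
    Returns (choice, RT, was_guess)."""
    if rng.random() < weight:
        return 1, rng.gauss(GUESS_MEAN, GUESS_SD), True
    choice, decision = ddm_trial(z, x0, drift, rng)
    return choice, T0 + decision, False


# 75:25 stimulus ratio, 500-msec RSI (Table 2 mixture weight, threshold, start).
rng = random.Random(0)
trials = []
for _ in range(4000):
    drift = A if rng.random() < 0.75 else -A   # favored stimulus on 75% of trials
    trials.append(mixture_trial(0.48188, 0.055035, 0.012183, drift, rng))

guess_rts = [rt for _, rt, guess in trials if guess]
ddm_rts = [rt for _, rt, guess in trials if not guess]
print(f"guess share {len(guess_rts) / len(trials):.2f}, "
      f"mean guess RT {sum(guess_rts) / len(guess_rts):.3f} s, "
      f"mean DDM RT {sum(ddm_rts) / len(ddm_rts):.3f} s")
```

Pooling the two components produces one RT mode near the fast-guess mean and another well above T0. This is the kind of bimodality that a single DDM parameter set cannot produce.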

We also fit a model that allowed an increment to be added to the drift term; in this way, response biasing could be achieved by increasing drift toward the more likely response threshold, no matter which stimulus was presented. This is equivalent to changing the reference point in the 1-dimensional stimulus space that determines a drift value of 0 (see Ratcliff, 1985, for a discussion of how the 0-point of drift relates to the criterion parameter of signal detection theory). This type of model has been successfully fit to monkey behavioral and neurophysiological data in tasks that vary signal discriminability from trial to trial (e.g., Yang et al., 2005). Adapting the average drift across conditions may also be the optimal strategy in tasks with constant discriminability if the variability parameters of the extended DDM are large enough (and if participants cannot act to reduce this variability below a given level); the current lack of analytical results for the extended DDM makes this result (or its opposite) difficult to prove. Empirically, though, including a drift increment term that could vary across conditions allowed us to test whether human participants can be modeled as adapting drift across conditions when signal discriminability is constant from trial to trial (a circumstance in which optimal performance, in contrast, requires a pure DDM and no drift adaptation).
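The two biasing mechanisms can be compared in a small sketch. Adding an increment to the drift on every trial raises accuracy for the favored stimulus and lowers it for the unfavored one, qualitatively as a starting-point shift does. The sketch uses closed-form hitting probabilities for a pure DDM. It takes the threshold, drift increment and fitted starting point of the 1-second, 90:10 condition from Table 2 purely as illustrative inputs. Function names are hypothetical.

```python
import math

A, C = 0.17348, 0.1   # pooled-fit drift and noise (Table 2)


def p_upper(drift, z, x0=0.0, c=C):
    """Probability that a pure DDM started at x0 reaches +z (the favored-response
    threshold) before -z."""
    if abs(drift) < 1e-12:                     # driftless limit
        return (x0 + z) / (2.0 * z)
    k = 2.0 * drift / c**2
    return (1.0 - math.exp(-k * (x0 + z))) / (1.0 - math.exp(-2.0 * k * z))


def accuracies(z, increment=0.0, x0=0.0, a=A):
    """(accuracy on favored-stimulus trials, accuracy on unfavored-stimulus trials)
    when `increment` is added to the drift toward +z on every trial."""
    favored = p_upper(a + increment, z, x0)           # correct response is +z
    unfavored = 1.0 - p_upper(-a + increment, z, x0)  # correct response is -z
    return favored, unfavored


# 1-second RSI, 90:10 condition of Table 2 (fitted threshold, increment, start).
z = 0.059620
print("no bias          ", accuracies(z))
print("drift increment  ", accuracies(z, increment=0.083964))
print("start-point shift", accuracies(z, x0=0.011437))
```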

Results

Quantile probability plots

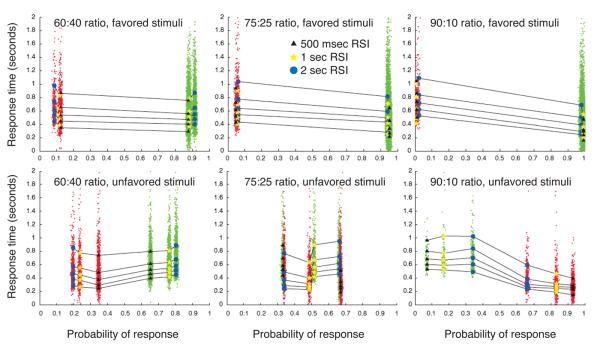

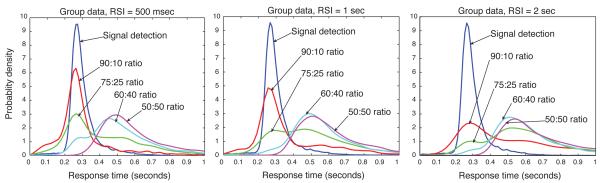

The top row of panels in Fig. 11 displays the quantile probability plots for trials in which the favored stimulus is presented; the bottom row displays the quantile probability plots for unfavored-stimulus trials.

Figure 11.

Quantile probability plots for all conditions of Experiment 2. Superimposed scatterplots of RT data are plotted in green for correct responses and red for errors. Left column: 60:40 stimulus ratio. Middle column: 75:25 stimulus ratio. Right column: 90:10 stimulus ratio. The top row of panels shows quantile probability plots for responses to the more likely stimulus. The bottom row plots responses to the less likely stimulus; note the exchange of correct and error probabilities as stimulus-ratio asymmetry increases.

In the superimposed scatterplot of RTs, correct response RTs are plotted in green; error RTs are plotted in red. This makes visible the shift of error and correct RT probabilities in response to unfavored stimuli as Π increases (bottom row of panels in Fig. 11). This approach also highlights the occurrence and relative frequency of anticipatory responding across conditions.
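Each column in such a plot can be computed from trial data, as the following sketch shows. Within one stimulus class (favored or unfavored), the RT quantiles of correct responses are placed at the proportion of correct responses p, and those of errors at 1 − p. The choice of the .1, .3, .5, .7 and .9 quantiles is an assumption (a common convention for these plots), and the demonstration data are hypothetical.

```python
from statistics import quantiles


def qp_points(trials):
    """trials: (is_correct, rt) pairs for ONE stimulus class. Returns
    {"correct": (p, rt_quantiles), "error": (p, rt_quantiles)}; each column of
    RT quantiles is plotted at x = its response probability p."""
    groups = {"correct": [rt for ok, rt in trials if ok],
              "error": [rt for ok, rt in trials if not ok]}
    n = sum(len(rts) for rts in groups.values())
    points = {}
    for label, rts in groups.items():
        if len(rts) < 2:                     # too few RTs to estimate quantiles
            points[label] = (len(rts) / n, [])
            continue
        deciles = quantiles(rts, n=10)       # cut points at .1, .2, ..., .9
        # Keep .1, .3, .5, .7, .9 (assumed convention).
        points[label] = (len(rts) / n, [deciles[i] for i in (0, 2, 4, 6, 8)])
    return points


# Hypothetical trials for one stimulus class: 80 correct, 20 errors.
demo = [(True, 0.40 + 0.01 * i) for i in range(80)] + [(False, 0.30 + 0.01 * i) for i in range(20)]
for label, (p, qs) in qp_points(demo).items():
    print(label, round(p, 2), [round(q, 3) for q in qs])
```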

For a stimulus ratio of 60:40 (Π = 0.6), quantile probability plots (shown in the leftmost column of Fig. 11) continue to show SAT adaptation of the type shown in Experiment 1: for both types of stimuli, the plots retain roughly the U-shape seen in Fig. 6, consistent with prediction 2d. In response to unfavored stimuli, accuracy decreased (as indicated by the shift of the quantile columns toward the middle of the graph). In response to favored stimuli, correct responses in a given condition tended to be faster than errors. Conversely, in response to unfavored stimuli, error responses were typically faster than corrects.

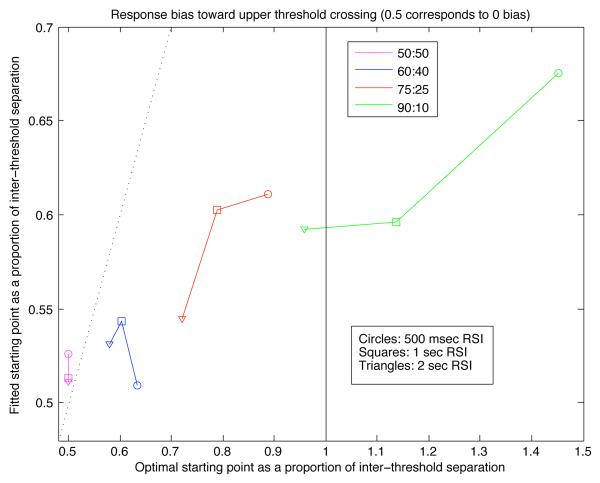

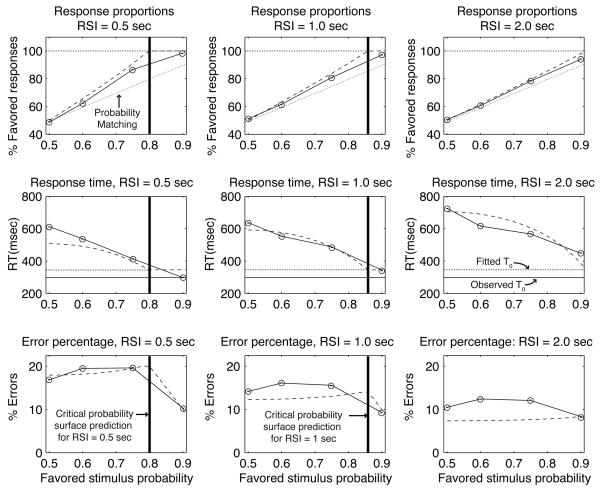

For a stimulus ratio of 75:25, performance resulted in quantile probability plots with radically different shapes (middle column of Fig. 11). Accuracy in response to favored stimuli increased markedly relative to the 60:40 condition, moving correct quantile columns to the right edge of the plot and error columns to the left. Unfavored stimuli, in contrast, produced quantile columns that were shifted further toward the center, and correct responses became less likely than errors when RSI was 500 msec. Furthermore, errors were much faster than correct responses to the unfavored stimulus, and this asymmetry in RT was more exaggerated for shorter RSIs. Both of these phenomena are consistent with an optimally tuned DDM, in which threshold magnitudes decrease as RSI decreases and the starting point moves closer to the response threshold for the favored stimulus as Π increases. Similar starting-point shifts and relative constancy of drift (but not anticipatory responding) were observed by Ratcliff and McKoon (2008) in their investigation of stimulus probability effects in a two-alternative motion discrimination task similar to Experiment 2, but using a fixed RSI and response deadline bands.
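The two unfavored-stimulus effects can be reproduced in a minimal simulation. The setting is the 75:25, 500-msec condition, using the optimal threshold and starting point listed in Table 2. On an unfavored-stimulus trial, the start sits close to the favored threshold, which is now the error threshold, while the drift points toward the correct threshold. Errors therefore become more likely than correct responses, and they are also faster. The sketch assumes a pure DDM simulated by Euler steps, so the simulated error proportion differs somewhat from the closed-form value because of discretization overshoot. Function names are hypothetical.

```python
import math
import random

A, C = 0.17348, 0.1   # pooled-fit drift and noise (Table 2)


def error_prob(z, x0, drift, c=C):
    """Closed-form probability of reaching +z (here, the error threshold)
    before -z, starting from x0."""
    k = 2.0 * drift / c**2
    return (1.0 - math.exp(-k * (x0 + z))) / (1.0 - math.exp(-2.0 * k * z))


def simulate(z, x0, drift, n, rng, c=C, dt=0.0005):
    """Euler-simulated pure DDM; returns decision times of trials ending at +z
    and of trials ending at -z."""
    upper, lower = [], []
    step_sd = c * math.sqrt(dt)
    for _ in range(n):
        x, t = x0, 0.0
        while -z < x < z:
            x += drift * dt + rng.gauss(0.0, step_sd)
            t += dt
        (upper if x >= z else lower).append(t)
    return upper, lower


# Unfavored stimulus: start x0 near +z (error threshold), drift toward -z (correct).
z_opt, x0_opt, n_trials = 0.040829, 0.03166, 2000
errors, corrects = simulate(z_opt, x0_opt, -A, n_trials, random.Random(2))
print(f"P(error): closed form {error_prob(z_opt, x0_opt, -A):.3f}, "
      f"simulated {len(errors) / n_trials:.3f}")
print(f"mean decision time: errors {sum(errors) / len(errors):.3f} s, "
      f"corrects {sum(corrects) / len(corrects):.3f} s")
```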