Abstract

This study investigated the influence of knowledge, particularly Internet, Web browser, and search engine knowledge, and of cognitive abilities on older adults’ information seeking on the Internet. The emphasis on aspects of cognition was informed by a modeling framework of search engine information-seeking behavior. Participants from two older age groups were recruited: twenty people in a younger-old group (ages 60–70) and twenty people in an older-old group (ages 71–85). Ten younger adults (ages 18–39) served as a comparison group. All participants had at least some Internet search experience. The experimental task consisted of six realistic search problems, all of which involved information related to health and well-being and varied in complexity. The results indicated that Internet-related knowledge, though necessary, was not sufficient to explain information-seeking performance, and suggested that a combination of knowledge and key cognitive abilities is important for successful information seeking. In addition, the cognitive abilities found to be critical for task performance depended on the search problem’s complexity. Significant differences in task performance between the younger group and the two older age groups were found on complex problems but not on simple ones. Overall, the results of this study have implications for instructing older adults on Internet information seeking and for the design of Web sites.

General Terms: Theory, Experimentation, Performance, Measurement, Human Factors

Additional Key Words and Phrases: Human-computer interaction: older adults, health information seeking, Internet, mental models, Pathfinder networks, search engines

1. INTRODUCTION

The emergence of the Internet has given people access to an information environment with several unique and outstanding qualities. First, the Internet contains vast amounts of information—as of August 2005, the search engine Google contained an index of more than 8 billion Web pages [Google 2005]. Second, this information is interlinked, allowing a person to jump from page to page. Third, the information can be accessed relatively rapidly, depending on the speed of the connection. Lastly, the Internet provides search tools, services, indexes, and directories that help the user find information. Yet despite these virtues, for many people the activity of information seeking continues to be problematic [Pew Internet and American Life Project 2004].

Many of the issues concerning information seeking on the Internet are similar to those that continue to confront traditional information retrieval (IR) research [Baeza-Yates and Ribeiro-Neto 1999]. Historically, IR systems were viewed as technological artifacts that required search experts to perform search and retrieval activities. With the introduction of user-centered perspectives to library and information science, the research agenda shifted away from studying experts and toward examining the capabilities and limitations as well as the information needs of end-users interacting with these IR systems [Nahl 1997; 2003]. It was not until the late 1990s that we began to see an emphasis on user-centered Internet information seeking (e.g., Wang et al. [2000]).

The goal of the study reported in this article was to investigate the influence of various domains of Internet-related knowledge and cognitive abilities on Internet information-seeking performance by older adults. The focus was on health-related information, as older adults are likely to search for this type of information. In this regard, the study contributes to the growing body of research on e-health [Bass 2003]. As noted by Powell et al. [2003], the Internet is altering the knowledge-based balance of power between healthcare professionals and healthcare consumers. Essentially, it is spurring the public to become more involved in healthcare decision making by enabling access to data and information that were previously inaccessible. The Internet thus has the potential to empower older adults by providing information that could help them deal more effectively with issues that could impact their health, independence, and well-being.

Despite an increasing older adult population (by 2030, people aged 65 and older will make up 20% of the US population [U.S. Department of Health and Human Services 2001]), research on this group’s ability to use the Internet to find information has been limited, especially when the information-seeking activities relate to problem solving and decision making. Of particular concern for older adults is that the inherent complexity of the Internet may make it difficult for them to negotiate Web sites and utilize available resources. In addition, information seeking is an activity that places heavy demands on cognitive abilities such as working memory, spatial ability, and reasoning. For many older adults who have limited experience and knowledge concerning the Internet and exhibit declines in cognitive abilities, effective Web-based information seeking can be a daunting task. The contention here is that understanding the factors that hinder and influence older adults’ Internet information-seeking activity can lead to the design of better Web sites, search engines, and instruction that take into account the capabilities and limitations of older adults.

1.1 Modeling Information-Seeking Behavior

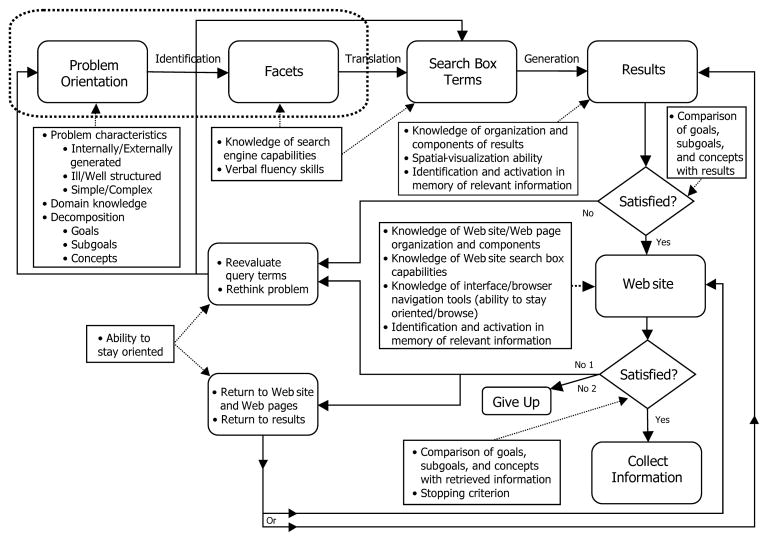

Information-seeking behavior has been the focus of much research and theoretical activity. The methodology of this study was based on an Internet information-seeking model (Figure 1) that was influenced primarily by the work of Sutcliffe and Ennis [1998] and Marchionini [1997], but also by several other researchers including Borgman [1986; 1996] and Kulthau [2003].

Fig. 1. A model of search engine information-seeking behavior.

The theoretical framework proposed by Sutcliffe and Ennis [1998] consists of four cyclical cognitive activities: problem identification, need articulation, query formulation, and results evaluation. Problem identification involves identifying the information need from a problem statement. During need articulation, the information seeker expresses the information need by selecting low-level terms from long-term memory, which may lead to a refinement or restatement of the information need. Query formulation is the process of generating queries and depends on the information seeker’s skill level and on the capabilities and possibilities of the IR system. Finally, results evaluation is a decision-making process whereby the information seeker decides whether to accept the retrieved results or continue searching for more results. The framework of Sutcliffe and Ennis also attributes four types of knowledge to each information seeker: (1) domain knowledge (knowledge about the specific domain searched); (2) device knowledge (knowledge regarding system support facilities for browsing, querying, and evaluating results); (3) information resources knowledge (knowledge regarding the specific database(s) that can be searched by a system); and (4) IR knowledge (knowledge of search strategies).

Marchionini [1997] conceptualized information seeking as guided by analytical strategies at one end of a continuum and browsing strategies at the other end. Analytically-guided search occurs when querying a Web search engine and reviewing the results, whereas with browsing the search has a serendipitous quality and the information-seeking goal is attained more indirectly. He proposed a process model of information seeking consisting of several subprocesses that can be run in parallel: recognize and accept the problem; define the problem; select the source; formulate the query; execute the query; examine the results; extract information; and reflect, iterate, or stop.

Overall, the model depicted in Figure 1 is consistent with the view of information seeking as a problem-solving process [Mayer and Whittrock 1996; Marchionini 1997], whereby the problem-solver’s knowledge and other mental representations are manipulated in order to achieve a goal. The problem-solving process consists of three subprocesses: representation, planning, and execution. During representation, the problem statement is internalized through the creation of a mental representation of the facts. In Figure 1, this is represented by the Problem Orientation component. The planning process consists of generating a method for coming up with a solution, which often requires breaking the problem up into parts or subgoals; in Figure 1, aspects of Problem Orientation as well as the Facets component represent the planning process. Execution involves carrying out the operations that were developed during the planning process. These processes are iterative in nature (depicted by the iterative loops in Figure 1) as planning may spur further insights into the problem and thus promote modified problem representations. Also represented in the model are the potential impacts of domain and technical system knowledge and the potential role of cognitive abilities during navigation and evaluation activities related to information seeking.

Taken together, knowledge and cognitive abilities are assumed to be critical in keeping the user oriented, that is, in preventing the user from getting confused or lost. These factors are expected to assume an even greater role, especially for older adults, as the problem-solving (i.e., information-seeking) process becomes more complex. Problems can generally be described as consisting of an initial state, a goal state, and a set of allowable operations and constraints that govern transitions between states. When problems are complex or ill-defined, one or more of these components may not be clearly specified [Chi and Glaser 1985], and the information-seeking process can become exceedingly challenging. Overall, the information-seeking model depicted in Figure 1 provides the basis for the emphasis in this study on knowledge, cognitive abilities, and problem complexity.

1.2 Knowledge and Mental Models

The relationship between knowledge and information-seeking performance is far from unequivocal. In examining the effects of knowledge about hypertext and search experience on search performance using a hypertext-based bibliographic search system, Dimitroff and Wolfram [1995] found that individuals with little or no search experience did as well as those with knowledge of hypertext-searching techniques. In contrast, Bhavnani [2001] found that search experts working within their domain of expertise were able to use domain-specific declarative knowledge related to URLs (Web addresses) to directly type in the URL of a useful Web site, which led to effective searches. However, when they searched for information outside their domain of expertise, they relied on general-purpose search knowledge and on clicking links, which led to ineffective and, at times, aborted searches.

Another important and related construct in Internet information seeking is that of mental models. These models are essentially mental representations that embody information about the structure, function, relationships, and other characteristics of objects in the world, and thus can help people explain and predict the behavior of things in the world around them [Craik 1943; Brewer 2003]. The importance of mental models for successful information-seeking behavior was noted by Borgman [1986], who found that subjects trained with an analogical model of an online system (training manuals described the online system in terms of a card catalog) performed better in a complex task condition, but not in a simple task condition, and suggested that mental models might be useful in situations where problem-solving behavior was required (i.e., when no method of solution is obvious). A number of other researchers, including Liebscher and Marchionini [1988], Slone [2002], and Zhang and Chignell [2001], have used the concept of mental models as a theoretical basis for their information retrieval and Web studies.

1.3 Cognitive Abilities

Few if any studies on Internet information seeking have examined the role of the user’s cognitive abilities in relation to search performance. Evidence from studies on database information search and retrieval indicates that age-related declines in many cognitive abilities may make this factor especially important when examining the Internet information-seeking performance of older adults. For example, in a simulated health insurance customer service task, Czaja et al. [2001] found that factors such as working memory and spatial skills influenced search performance. In a simulated telecommuting task in which older adults were required to respond to queries from fictitious customer emails by navigating through a database configured in the form of a hyperlinked information environment similar to the Internet, measures of verbal ability, memory span, attention/concentration, and perceptual speed were found to strongly predict performance [Sharit et al. 2004].

Another aspect of cognition that can have important implications for Internet information-seeking performance is field dependence, as measured by the Group Embedded Figures Test, a visual recognition test that assesses susceptibility to visual interference. This measure can differentiate those individuals whose perception is dependent on the organization of the surrounding perceptual field (i.e., field dependent (FD)) from those whose perception is independent (i.e., field independent (FI)) of such influences [Sternberg and Grigorenko 1997]. Its relevance to Internet information-seeking performance derives from the results of a number of studies that have incorporated the FD/FI distinction. For example, Weller et al. [1994] found that FI participants accessed more hypermedia nodes and achieved greater learning than their FD counterparts. They proposed that the FD/FI distinction reflects how well a learner restructures the information presented in a hypermedia-type information environment by relying on salient cues and the arrangement of elements in the perceptual field. Palmquist and Kim [2000] found that in comparison to FI novice users, FD novice users used more embedded links (ordinary clickable links on a Web page), which reflected a more passive navigational style, and became lost more frequently. These researchers concluded that FD novice users should receive special attention from Web designers in the form of well-structured and visually simple Web sites.

1.4 Study Objectives

An important objective of this study was to investigate the relationship between Internet-related knowledge and information-seeking performance and whether this relationship differs depending on whether the search problems are simple or complex. Toward this end, a structured interview instrument was developed to assess four different types of declarative (factual) and procedural (how one does something) knowledge: general Internet, Web browser, simple search, and advanced search. In addition, the Pathfinder methodology [Dorsey et al. 1999] was used as a means for evaluating the importance of Internet-related structural knowledge (how key concepts regarding the Internet and Web-based information search are related) for search performance.

Another objective was to evaluate the mental models people have concerning the Internet, Web browsers, and search engines and how these mental models impact search performance on both simple and complex problems. For this analysis, selected items from the structured interview instrument were examined.

In light of findings regarding the importance of cognitive abilities for older adult information-seeking performance, a key objective of this study was to determine if cognitive abilities predicted performance after accounting for Internet-related knowledge. Also of interest was determining how problem complexity might influence which cognitive ability measures are predictive of performance and if age had an influence on performance after both knowledge and cognitive abilities were taken into account.

Overall, the goal of this research was to provide a better understanding of the factors that influence the ability of older adults to find information on the Internet. Knowledge of this type is fundamental to the development of guidelines for interface designs and instructional strategies that are tailored to the specific needs of older adults.

2. EXPERIMENTAL METHODS

2.1 Sample

A sample of 40 older adults was recruited in two age groups: a younger-old group (60–70 years of age, M = 65.2, SD = 3.17, n = 20) and an older-old group (71–85 years of age, M = 76.7, SD = 4.08, n = 20). A comparison group of 10 younger adults (18–39 years of age, M = 27.9, SD = 6.39) was also included in the study to establish benchmark performance comparisons. There were 17 males and 33 females in the sample: 2 males and 8 females in the younger group, 8 males and 12 females in the younger-old group, and 7 males and 13 females in the older-old group. With respect to ethnicity, the sample consisted of 5 Black/African-American, 31 White/Caucasian, and 11 Hispanic/Latino participants, and 3 participants of other ethnicities. The highest education attained for the younger group was as follows: 8 with some college, 1 with a bachelor’s degree, and 1 with a master’s degree; for the younger-old adults: 1 with high school, 11 with some college, 1 with a bachelor’s degree, 4 with a master’s degree, and 3 with an advanced degree; and for the older-old adults: 1 with high school, 6 with some college, 5 with a bachelor’s degree, 1 with some graduate school, 4 with a master’s degree, and 3 with an advanced degree. The relatively large “some college” classification for the younger participants reflects the fact that many of these participants were still pursuing their undergraduate degrees. Grouping education level into two categories (some college and below, and bachelor’s degree and above) resulted in no significant difference in education level between the 20 younger-old adults and the 20 older-old adults, χ²(df = 1, n = 40) = 0.11, p > .05. All participants had at least minimal Internet and Web-based search engine experience and spoke fluent English.

2.2 Setting and Materials

Performance of the Internet search tasks, the Pathfinder task, and administration of the structured interview instrument took place in an office equipped with the experimental computer workstation. Participants performed their information search tasks on a Dell computer system that had a 19” flat panel display, mouse, and high-sensitivity microphone, and accessed the Internet through the University of Miami’s high-speed broadband connection. The system was configured with Microsoft Internet Explorer 6.0, the Hypercam 2.10.00 screen capture utility [Hyperionics Technology 2006], Weblogger software [Reeder et al. 2000], Windows Media Player, and PCKNOT software for Pathfinder network analysis [Interlink 1998].

The Hypercam screen capture utility enabled each participant’s onscreen task to be recorded in the form of a Windows-based digital movie. This movie was used primarily for review purposes. Verbal protocol data was also captured using this software. The Weblogger application, which creates a visual map in Microsoft Visio of the participants’ solution path through the Internet, was used to collect time and URL information related to the participants’ information-seeking moves.

2.3 Procedure

The study was conducted over two days. On the first day, a battery of tests consisting of 21 standardized measures of component cognitive abilities was administered to each participant in a group testing format. These measures are widely used in the literature and have demonstrated reliability and validity. The measures were chosen to assess a broad range of abilities including verbal fluency, psychomotor speed, perceptual speed, attention/concentration, long-term memory, memory span, working memory, spatial-visualization ability, reasoning, and lifelong knowledge. There were at least two markers for each of the abilities. Details concerning this cognitive battery can be found in Czaja et al. [2006]. A list of the component cognitive abilities and their corresponding markers is presented in Table I. For this study, we also included the Group Embedded Figures Test as the distinction between FI and FD styles has been shown to be related to information-seeking performance.

Table I. Component Cognitive Abilities and their Test Measures

| Cognitive Ability | Test Measure | Reference |
|---|---|---|
| Psychomotor Speed | RT Both Mean | Choice Reaction Time [Wilkie et al. 1990] |
| Perceptual Speed | Digit Symbol | Digit Symbol Substitution Test [Wechsler 1981] |
| Attention/Concentration | Stroop Test – Color Word | Stroop Color Word Association Test [Golden 1978] |
| Long-Term Memory | Meaningful Memory | Meaningful Memory [Institute for Personality and Ability Testing, Inc. 1982] |
| Memory Span | CVLT-Immediate | California Verbal Learning Test – Immediate [Delis et al. 1987] |
| Verbal Fluency | Reading Comprehension Score | Nelson-Denny Reading Comprehension [Brown et al. 1993] |
| Spatial-Visualization | Paper Folding Correct | Paper Folding [Ekstrom et al. 1976] |
| Working Memory | Computation Span – Simple | Computation Span [Salthouse and Babcock 1991] |
| Reasoning | Inference Test Correct | Inference Test [Ekstrom et al. 1976] |
| Life Knowledge | MAB Information | Multidimensional Aptitude Battery [Jackson 1998] |

Additional instruments completed on the first day included a demographic and health questionnaire, a technology and computer experience questionnaire, and a Web experience questionnaire. On the second day, participants completed the individualized testing protocol that included tests of vision (Snellen Near and Far), psychomotor speed, attention, and the Group Embedded Figures Test. Following a lunch break, they were given a brief introduction to the experiment and a short introduction to the verbal protocol methodology, followed by a practice exercise. The exercise consisted of having participants imagine their home and then count aloud all of the windows they encountered as they mentally traversed the space.

The participants then proceeded to find information that would answer six information problems (Table II) that were presented in a fixed sequence. The simplest problems, 1(a) and 1(b), were presented first. The sequence of the remaining four problems was determined by randomization, and the same sequence was used for all participants. Each question was printed on a separate laminated card. To perform their searches, participants were free to use any search engine and any approach to solving the problem, as long as they found the answer somewhere on the Internet (e.g., they could proceed directly to a Web site or Web page by using its URL). The experimenter then launched the Web browser, cleared the history feature, and pointed the browser to Google’s homepage. The Google search engine was selected because it is a general-purpose search engine with a simple interface and powerful search features. Although its use was not required, in almost all cases the participants chose to conduct their searches using Google.

Table II. Search Task Information Problems

| Search Task ID | Search Task Text |
|---|---|
| 1(a) | The US Government has a department that deals with aging and issues which concern older adults. Find a Web site for one of these departments, the Administration on Aging. |
| 1(b) | In the Administration on Aging Web site, find a Web page containing information on ways to remodel a home or apartment that make it more senior-friendly or more comfortable for older adults. |
| 2 | Suppose you have a friend and suspect he or she is overweight. You remember something called the BMI that might help determine whether your friend is overweight or not. You know 2 facts about your friend: |
| 3 | Flu season is coming around and you’re interested in getting a flu shot. However, you want to be sure you don’t belong to the group of people who should not receive this shot. Find information on at least 3 types of people who should not get a flu shot. |
| 4 | You’ve decided that you want to get back in shape. Using the Internet, find information on 5 things you can do to get back into shape. Remember that these recommendations must be appropriate for your age. |
| 5 | A friend of yours uses a wheelchair. He wants to get a new one and has asked you to help him find information on the Internet regarding new models and prices. Wheelchairs are also known as mobility solutions and you can recommend new designs that don’t look like traditional wheelchairs but are still recommended for older adults. Find 3 mobility solutions and corresponding prices for your friend. They cannot all be wheelchairs. |

Participants were handed each of the problems, one at a time, on laminated cards. After reading the card, the subject placed it on a stand next to the computer. Participants had up to 15 minutes to solve each problem. However, in a few cases where it was judged that the participant was close to a solution, the time limit was exceeded by a few minutes. All of the problems dealt with medical, health, and wellness-related issues. Problems classified as complex were generally ill-structured, with the exception of problem 3 (concerning flu shots).

The experimenter monitored the participant’s search process. By examining the final screen outputs and guided by a scoring sheet, the experimenter determined the correctness of the answer offered as a solution to each of the problems. As discussed in the following section, problems were scored as incorrect, partially correct, or correct.

After completing the search tasks, participants were given a rest break and then a brief introduction to the Pathfinder task. This task required judging the relatedness of a pair of concepts displayed in the PCKNOT software window; the concepts were drawn from a pool of Internet and search-related concepts. For this study, a total of 14 concepts were used (Table III). Participants provided pairwise ratings by entering a number from one (Unrelated) to nine (Related). Most of the participants completed the 90 pairwise ratings in under 15 minutes. These data were then analyzed with PCKNOT, which generates a graphical network representation of the participant’s knowledge structure for these concepts and computes a similarity rating that compares each participant’s Pathfinder network to a referent (e.g., expert) network.
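To make the idea of a network similarity rating concrete, the following is a minimal sketch of one common link-based measure: the number of links two networks share divided by the number of links that appear in either network. The text does not specify how PCKNOT computes its rating, so this formula, the function names, and the example links are all illustrative assumptions rather than the software's actual procedure.

```python
from typing import FrozenSet, Iterable, Set, Tuple

Link = Tuple[str, str]


def as_link_set(links: Iterable[Link]) -> Set[FrozenSet[str]]:
    """Treat links as undirected, so ("A", "B") and ("B", "A") are the same link."""
    return {frozenset(pair) for pair in links}


def link_similarity(participant: Iterable[Link], referent: Iterable[Link]) -> float:
    """Shared links divided by links present in either network (assumed measure)."""
    a, b = as_link_set(participant), as_link_set(referent)
    either = a | b
    if not either:
        # Assumption: two networks with no links at all are scored 0.0.
        return 0.0
    return len(a & b) / len(either)


# Hypothetical networks built from concepts in Table III (not study data).
participant_links = [
    ("Web Site", "Web Page"),
    ("Search Engine", "Keywords or terms"),
    ("Internet", "Web Site"),
]
referent_links = [
    ("Web Page", "Web Site"),
    ("Search Engine", "Keywords or terms"),
    ("Search Engine", "Hits or Page of Results"),
]

score = link_similarity(participant_links, referent_links)
print(score)
```

Under this assumed measure, a participant whose network contains exactly the referent's links would score 1.0, and a participant sharing no links with the referent would score 0.0.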

Table III. The 14 Internet and Search-Related Concepts Used for the Pathfinder Task

| Number | Internet or Search-Related Concept |
|---|---|
| 1 | Internet Browser - Software |
| 2 | Hits or Page of Results |
| 3 | Site Map |
| 4 | Stop Button |
| 5 | Back Button |
| 6 | Web Directory or Directory of Web Sites |
| 7 | Web Address or URL |
| 8 | Web Site |
| 9 | Web Page |
| 10 | Internet |
| 11 | Clickable Link |
| 12 | Home Page |
| 13 | Search Engine |
| 14 | Keywords or terms |

Finally, each participant took part in a structured interview that consisted of questions assessing declarative and procedural knowledge related to Internet information seeking. This instrument contained 74 questions divided into four parts: a general Internet section, followed by a section on Web browsers, and ending with a two-part section related to simple and advanced Internet search knowledge. In addition to fundamental factual knowledge, the questions also required demonstration of practical hands-on knowledge related to Internet information seeking. Contained within this 74-item instrument were 10 questions that specifically probed the participant’s mental models of the Internet, Web browser, and search engine (Table IV). All questions were read aloud to the participant, who was required to verbalize a response. The participant’s response was immediately rated by the researcher. The scoring of the structured interview questions varied according to the nature and complexity of the question. Some questions were simply marked correct or incorrect. Other, more complex questions were scored correct, partially correct, or incorrect, and, for some questions, the score reflected the number of correct items identified by the participant (e.g., all that apply). The maximum possible score was 300. The structured interviews were audio-taped.

Table IV. The 10 Mental Model Questions from the 74-item Structured Interview Instrument

| Question ID | Structured Interview Question Text |
|---|---|
| 1 | Ok, here’s a computer. If I asked you what a computer is, you could say something like “It’s a device that’s made up of a screen, a keyboard, the computer, a printer, and it runs software.” Please describe the Internet in a similar way. What is the Internet? (Internet domain) |
| 2 | The Internet has millions of Web sites and more are being added every day. Where are Web sites stored and what features of the Internet permit you to see these Web sites? (Internet domain) |
| 3 | Suppose you’re sitting in front of a computer that’s connected to the Internet. You type an Internet address, that is, a Web site address, and hit the enter key. Describe as best as you can how a Web page that’s stored on a computer somewhere on the Internet actually makes it into your computer and onto your screen. I don’t want technical details; just give me the main events. (Internet domain) |
| 4 | Take a look at this map of an Internet network in the United States. Suppose you are in Chicago, Illinois, using a computer and you are viewing a Web site that’s stored on a computer in Dallas, Texas. Look at the connection that ties these two computers together and tell me what would happen if the connection suddenly stopped working or was broken or interrupted in some way? What would happen to your experience viewing and interacting with the Web site? (Internet domain) |
| 5 | How does the Web browser work? In other words, how does it communicate with the computer that stores the Web site? (Web browser domain) |
| 6 | Try to explain the sorts of things that might go wrong if a Web browser can’t access a particular Web site or Web page? (Web browser domain) |
| 7 | Can you describe what a search engine does? (Simple and advanced search domains) |
| 8 | When a search engine tries to match your search query words, is it searching the Internet or something else? (Simple and advanced search domains) |
| 9 | Can search engines look for information on the entire World Wide Web? Explain. (Simple and advanced search domains) |
| 10 | Is there a difference between the Internet and the World Wide Web or are they the same thing? Explain. (Simple and advanced search domains) |

All participants were compensated $75.00 for their participation in the study. The study protocol was approved by the University’s Institutional Review Board.

2.4 Measures

The primary measures of this study were: (1) task performance scores for simple, complex, and all problems; (2) knowledge subcategory domain scores and a total knowledge domain score; (3) Pathfinder similarity scores; (4) cognitive ability test scores, including the Group Embedded Figures Test; and (5) measures of education and Web experience.

For each participant, a task performance score ($TPS_{ij}$) was computed corresponding to the performance of person $i$ on problem $j$. This performance score was a function of problem correctness, problem completion time, and problem complexity. It proved to be more sensitive than either time or accuracy alone and had good (normal) distributional characteristics.

$TPS_{ij}$ was computed as follows. First, for any given problem $j$, the participants were divided into three groups according to how correctly they answered the problem: those that provided incorrect answers or no answer to the problem, those that provided partially correct answers to the problem, and those that answered the problem correctly. Let $c_{ij}$ represent the category of correctness for participant $i$ on problem $j$. If the problem solution was incorrect, $c_{ij} = 0$; if the problem solution was partially correct, $c_{ij} = 1$; and if the problem solution was correct, $c_{ij} = 2$. All problems had to be answered from information found on the Internet (i.e., they could not be answered based on the participant’s prior knowledge of the problem content).

Next, “time to completion” of the problem was considered. Let tcij represent the time to completion value assigned to participant i on problem j. These values were only assigned to participants whose problem solutions were either correct or partially correct (for those participants whose solutions to problem j were incorrect, TPSij was assigned a value of zero for that problem). For those participants whose solutions to problem j were partially correct, tcij = tmax,j / tij, where tmax,j was the time taken by the slowest participant whose solution to problem j was partially correct, and tij represented the time each participant within this group took to complete that problem. Thus, among those participants whose completion of problem j was partially correct, the participant who had the longest completion time (that is, tij = tmax,j) had a tcij value of one. Faster completion times (i.e., tij < tmax,j) indicated better performance and led to tcij values greater than one.

For those participants who completed problem j correctly, their tcij values were computed as follows. First, the highest tcij value from the next best group, the partially correct group, was determined. This value was then added to the tmax,j / tij value computed for each participant who had completed problem j correctly. Thus, any participant who answered problem j correctly would be assigned a higher tcij value than any participant in the partially correct group.

Finally, problem difficulty weights, dj, were assigned to each problem j. Based on the consensus of three of the study’s investigators, problem difficulty was rated on a four-point scale with the easiest and hardest problems assigned weights of one and four, respectively. Problem weights were derived according to the number of answers the problem required and whether any of its parts, such as initial conditions or expected outcomes, were not well-defined. The weights were assigned as follows: 1 for problems 1(a) and 2; 2 for problem 1(b); 4 for problem 3; and 3 for problems 4 and 5.

For any given problem j, the task performance score of participant i was computed as TPSij = cij × tcij × dj. As indicated before, if participant i completed problem j incorrectly, then TPSij = 0 because cij = 0.
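The scoring procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `task_performance_scores` and the example times are hypothetical, and two interpretive choices are assumptions. First, for the correct group, the slowest time is taken within the correct group itself. Second, when no participant was partially correct, the offset added to the correct group is taken to be zero.

```python
def task_performance_scores(correctness, times, difficulty):
    """Return TPS_ij for each participant i on a single problem j.

    correctness: list of c_ij values (0 = incorrect, 1 = partial, 2 = correct)
    times:       list of completion times t_ij (any consistent unit)
    difficulty:  problem weight d_j (1 to 4)
    """
    partial = [t for c, t in zip(correctness, times) if c == 1]
    correct = [t for c, t in zip(correctness, times) if c == 2]

    # Highest tc value in the partially correct group (its fastest member).
    # Assumption: zero offset when nobody was partially correct.
    offset = max(partial) / min(partial) if partial else 0.0

    scores = []
    for c, t in zip(correctness, times):
        if c == 0:
            tc = 0.0                   # incorrect answer: TPS is zero
        elif c == 1:
            tc = max(partial) / t      # slowest partial scorer gets tc = 1
        else:
            # Assumption: tmax for this group is its own slowest time.
            tc = offset + max(correct) / t
        scores.append(c * tc * difficulty)
    return scores


# Hypothetical times (seconds) for five participants on a problem with d_j = 3.
example = task_performance_scores(
    correctness=[0, 1, 1, 2, 2],
    times=[300, 200, 100, 400, 200],
    difficulty=3,
)
print(example)  # [0.0, 3.0, 6.0, 18.0, 24.0]
```

In this toy case every correct answer outscores every partially correct one, which is the ordering property the offset is meant to guarantee.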

A participant i’s overall task performance score was derived by summing TPSij across all j problems. For each participant, task performance scores were also derived for two categories of problems: simple and complex. The task performance score for simple problems was computed by summing TPSij across problems 1(a), 1(b), and 2; the task performance score for complex problems was computed by summing TPSij across problems 3, 4, and 5. Simple problems generally dealt with common conditions, required few answers, and were relatively well-defined, whereas the complex problems generally dealt with uncommon conditions, required many answers, and were more ill-defined.
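As a small illustration of the aggregation just described, the sketch below sums per-problem scores into the simple, complex, and overall measures. The problem labels, the function name `aggregate`, and the per-problem values are hypothetical; only the grouping of problems follows the text.

```python
# Grouping of problems as described in the text.
SIMPLE = ("1a", "1b", "2")
COMPLEX = ("3", "4", "5")


def aggregate(tps_by_problem):
    """Sum one participant's TPS values into the three performance measures."""
    simple = sum(tps_by_problem[p] for p in SIMPLE)
    complex_ = sum(tps_by_problem[p] for p in COMPLEX)
    return {"simple": simple, "complex": complex_, "all": simple + complex_}


# Hypothetical per-problem scores for one participant.
totals = aggregate({"1a": 4.0, "1b": 6.0, "2": 2.0, "3": 24.0, "4": 0.0, "5": 9.0})
print(totals)  # {'simple': 12.0, 'complex': 33.0, 'all': 45.0}
```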

We included the measure of overall task performance in the analyses as it reflects a complexity-free measure. That is, it provides a measure of task performance that is not based on subjective judgment with respect to the classification of problems into simple and complex categories. Also, by aggregating scores from more problems, the overall task performance measure is expected to provide more reliable statistical results.

3. RESULTS

3.1 Task Performance, Internet-Related Domain Knowledge, and Age

As noted, three task performance measures were computed: simple task performance, complex task performance, and overall task performance (performance on all problems). In addition, four measures of Internet-related domain knowledge, as well as the total knowledge score (their sum), were computed for each participant. The means and standard deviations for each of the knowledge measures are presented in Table V. Means and standard deviations for each of the three task performance measures are presented in Table VI (note that complex problem performance scores were higher than those for simple problems because they were given more weight). As previously described, we also categorized the sample of older participants into younger-old and older-old adults.

Table V.

Means and Standard Deviations of the Total Knowledge Score and the Individual Knowledge Domain Scores

| Knowledge Domain | Younger M | Younger SD | Younger-Old M | Younger-Old SD | Older-Old M | Older-Old SD |
|---|---|---|---|---|---|---|
| Internet | 34.6 | 19.0 | 34.6 | 17.0 | 26.7 | 15.0 |
| Web Browser | 37.0 | 10.9 | 27.1 | 11.5 | 25.0 | 11.0 |
| Simple Search | 31.6 | 13.2 | 28.0 | 12.3 | 23.2 | 7.82 |
| Advanced Search | 30.6 | 24.7 | 21.6 | 18.2 | 16.1 | 9.34 |
| Total Knowledge | 133.8 | 62.3 | 111.2 | 47.8 | 89.9 | 35.2 |

Table VI.

Means and Standard Deviations of the Task Performance Measures

| Group | All Problems M | All Problems SD | Simple Problems M | Simple Problems SD | Complex Problems M | Complex Problems SD |
|---|---|---|---|---|---|---|
| Younger (n = 10) | 228.3 | 107.9 | 90.0 | 64.6 | 138.3 | 67.0 |
| Younger-Old (n = 20) | 180.9 | 67.6 | 77.2 | 34.9 | 103.7 | 54.6 |
| Older-Old (n = 20) | 158.0 | 69.8 | 74.9 | 35.5 | 83.1 | 46.2 |
| All Older (n = 40) | 169.4 | 68.8 | 76.1 | 34.8 | 93.4 | 51.0 |

Table VII presents the correlations between the three task performance measures and each of the knowledge scores. These data indicate different patterns in relationships between the knowledge and the task performance measures across the younger-old and older-old age groups. Specifically, significant correlations between the various knowledge scores and the task performance measures, especially for performance on all problems, were much more evident for the younger-old participants. Also evident from this analysis was the importance of Internet knowledge for the simple problems and advanced search knowledge for the complex problems for both the younger-old and the older-old participants.

Table VII.

Correlations between Measures of Performance and Measures of Knowledge for the Younger-Old and the Older-Old Participants

| Knowledge Domain | Younger-Old: All Problems | Younger-Old: Simple Problems | Younger-Old: Complex Problems | Older-Old: All Problems | Older-Old: Simple Problems | Older-Old: Complex Problems |
|---|---|---|---|---|---|---|
| Internet | .408 | .469* | .205 | .398 | .485* | .228 |
| Web Browser | .501* | .384 | .376 | .161 | .282 | .026 |
| Simple Search | .484* | .184 | .482* | .188 | .181 | .146 |
| Advanced Search | .603** | .330 | .537* | .455* | .303 | .454* |
| Total Knowledge | .619** | .431 | .491* | .381 | .415 | .258 |

Note: * p < .05; ** p < .01 (younger-old: n = 20; older-old: n = 20)

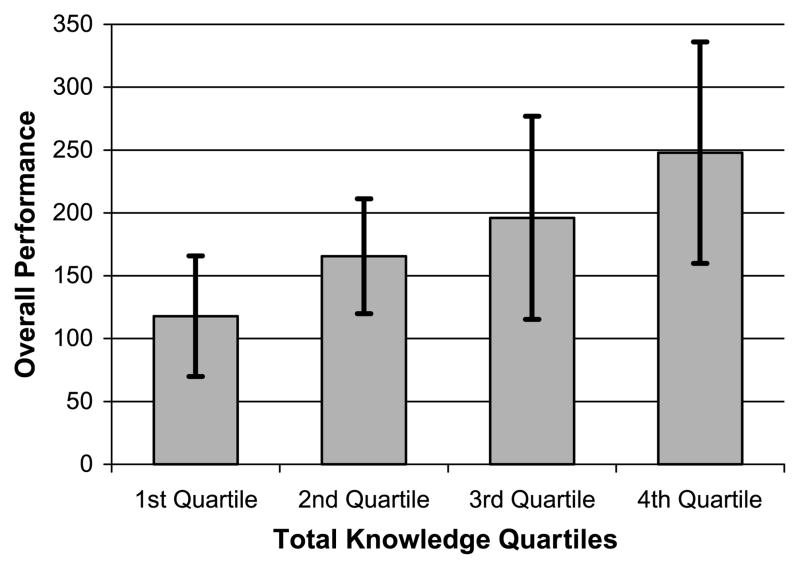

To further examine the relationship between knowledge and performance, total knowledge scores were recoded into four quartile groups: 1st quartile (worst knowledge scores), n = 10; 2nd quartile, n = 10; 3rd quartile, n = 10; and 4th quartile (best knowledge scores), n = 10. Using overall task performance as the dependent measure, an analysis of variance (ANOVA) was then used to test for differences in performance as a function of knowledge quartile. A significant effect of quartiles was found, F(3, 36) = 4.90, p < .01 (Figure 2). Post-hoc tests using Tukey’s HSD revealed significant differences between the 1st and the 3rd quartiles (p < .05) and between the 1st and the 4th quartiles (p < .01). Similar results were found for the simple and complex problems.

Fig. 2.

Mean performance on all problems across total knowledge expressed in terms of quartile groups. Error bars indicate +/− one standard deviation.
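For readers who want to see how the F statistic and its degrees of freedom in the quartile analysis arise, the following is a minimal one-way ANOVA sketch. The function name `one_way_anova` and all scores are hypothetical toy values, not the study's data. The second call only illustrates that four groups of ten participants give 3 and 36 degrees of freedom.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy data: three groups of three scores.
f_toy, df_b_toy, df_w_toy = one_way_anova([[1, 2, 3], [2, 3, 4], [5, 6, 7]])

# Toy data shaped like the quartile design: four groups of ten scores.
quartiles = [[float(q * 10 + i) for i in range(10)] for q in range(4)]
_, df_b, df_w = one_way_anova(quartiles)
print(df_b, df_w)  # 3 36
```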

Interestingly, although Figure 2 implies a monotonically increasing relationship between overall performance and total knowledge, the top five performers had a mean performance score of M = 293.36 (SD = 39.25) and a mean knowledge score of M = 141.6 (SD = 45.87), while the individuals who had the top five total knowledge scores had a much higher mean knowledge score (M = 176.4, SD = 9.10) but a much lower mean performance score (M = 219.89, SD = 72.24).

Differences in knowledge between the younger-old and the older-old participants (Table V) were examined using t-tests. No significant differences were found for total knowledge or any of the four knowledge domains. However, when the knowledge scores of the 40 older adults were compared with those of the 10 younger adults (the comparison sample), a trend toward significance was found for total knowledge, t(48) = 2.00, p = .051, and a significant effect was found for the Web browser knowledge domain, t(48) = 2.80, p = .007, with the younger adults scoring higher in both cases.

Finally, differences in performance between the younger-old and older-old participants (Table VI) were examined on each of the three task performance measures: all problems, simple problems, and complex problems (these two groups did not significantly differ in previous Web experience). The results indicated that there were no significant differences on any of the three task performance measures. It should be noted, however, that when the performance of the 40 older adults was compared to that of the 10 younger adults, significant results were found for all problems, t(48) = 2.15, p = .037, as well as for complex problems, t(48) = 2.48, p = .023, but not for simple problems, t(48) = 0.94. In both cases, the younger adults achieved higher scores than the older adults (Table VI).
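The younger versus older comparison can be approximated from the summary statistics in Table VI. The sketch below assumes a pooled-variance (Student's) t-test, which the 48 degrees of freedom suggest but the text does not state explicitly. The function name `pooled_t` is hypothetical, and because the table values are rounded the results are approximate. Only the all-problems and simple-problems rows are shown.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups, computed from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Table VI: younger adults (n = 10) versus all older adults (n = 40).
t_all, df_all = pooled_t(228.3, 107.9, 10, 169.4, 68.8, 40)
t_simple, df_simple = pooled_t(90.0, 64.6, 10, 76.1, 34.8, 40)
print(round(t_all, 2), df_all)        # 2.15 48
print(round(t_simple, 2), df_simple)  # 0.94 48
```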

3.2 Task Performance and Structural Knowledge (Pathfinder)

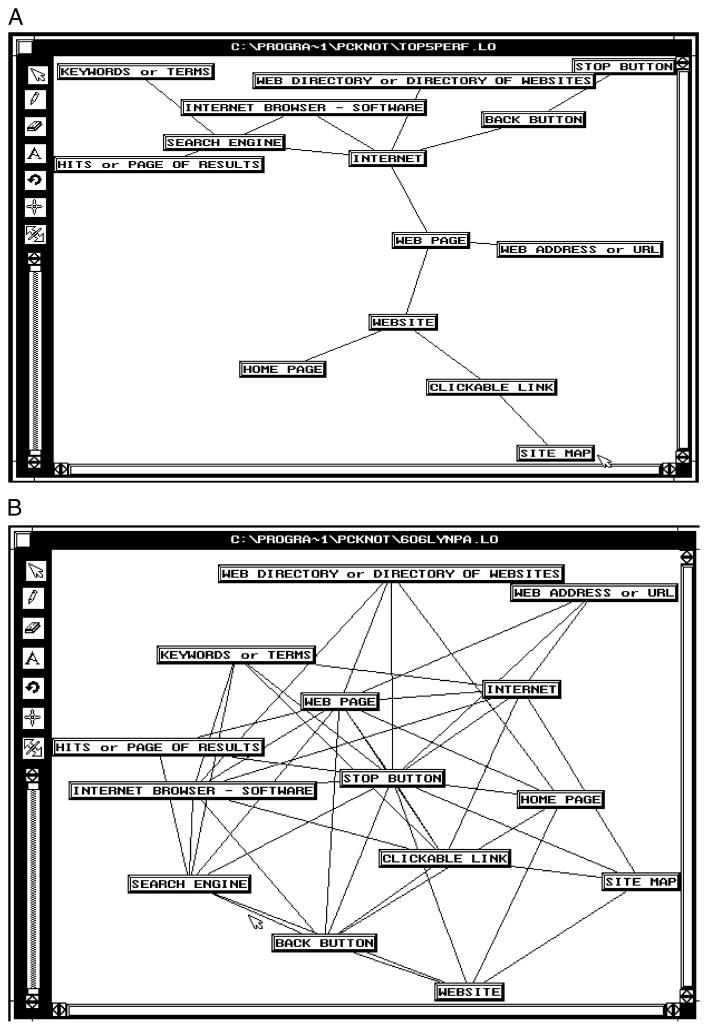

Pathfinder, a psychological scaling technique, was used in this study to examine the importance of Internet-related structural knowledge for task performance. Pathfinder’s measure of structural knowledge, the similarity score, requires that each individual’s network of relationships be compared to a referent network. In this study, the referent network consisted of the top five performers, based on the overall task performance measure, in the sample (Figure 3(a)). An example of a single participant’s Pathfinder network is shown in Figure 3(b) where the many links between concepts and the somewhat symmetrical nature of this network suggest poor structural knowledge.

Fig. 3.

(a) Pathfinder consensus referent structure corresponding to the top five performers. (b) A single participant’s Pathfinder network. The multiple links between concepts suggest poor structural knowledge.

The correlations between the Pathfinder similarity scores and each of the domain knowledge scores derived from the structured interview instrument were as follows: total knowledge, r(40) = .504, p < .01; Internet, r(40) = .41, p < .01; Web browser, r(40) = .439, p < .01; simple search, r(40) = .516, p < .01; and advanced search, r(40) = .316, p < .05. These data indicate relatively strong relationships between structural knowledge and the other knowledge measures. However, there were no significant correlations between the Pathfinder similarity scores and any of the three task performance measures.

3.3 Predictors of Information-Seeking Performance

A hierarchical regression analysis was performed to examine the extent to which cognitive abilities predicted task performance after accounting for the effects of knowledge and to determine the influence of age on performance after accounting for differences in knowledge and cognitive abilities. As noted earlier, a battery of tests consisting of 21 standardized measures of a broad range of component cognitive abilities was administered to each participant, with at least two markers for each of the abilities [Czaja et al. 2006]. From each of these component cognitive abilities, one marker was selected, based on the test measure that most highly correlated with the measure of overall task performance, to explore the role of cognitive abilities on Internet information seeking. In addition, we included the Group Embedded Figures Test score.

For these analyses, the total knowledge score was entered in the first step. We chose to use total knowledge as it served as the best measure of overall Internet-related knowledge. All ten cognitive ability measures as well as the Group Embedded Figures Test score were entered in the second step using the stepwise procedure. Age was then entered in the last step.

Table VIII summarizes the results of each step of the procedure for each of the three task performance measures. Note that the second step actually consists of several substeps where each substep is the addition of a cognitive variable by the stepwise procedure. Given that we forced age into step 3 to examine the impact of age over and beyond the model in step 2 and that age was not a significant predictor of any of the performance measures after accounting for knowledge and cognitive abilities, the final models are those specified in step 2.

Table VIII.

Summary of Regression Analysis for the Three Performance Measures

| Performance Measure | Model Step | Variable | R2 | β | t-score | p |
|---|---|---|---|---|---|---|
| All Problems | 1 | Total Knowledge | .292 | .541 | 3.86 | <.001 |
| All Problems | 2 | Total Knowledge | .292 | .313 | 2.18 | .037 |
| All Problems | 2 | Reasoning | .405 | .305 | 2.07 | .046 |
| All Problems | 2 | Working Memory | .474 | .268 | 2.19 | .035 |
| All Problems | 2 | Perceptual Speed | .537 | .253 | 2.11 | .042 |
| All Problems | 3 | Age* | .537 | .021 | 0.16 | .874 |
| Simple Problems | 1 | Total Knowledge | .174 | .417 | 2.75 | .009 |
| Simple Problems | 2 | Total Knowledge | .174 | .102 | .635 | .530 |
| Simple Problems | 2 | Reasoning | .388 | .560 | 3.50 | .001 |
| Simple Problems | 3 | Age* | .388 | −.008 | −.059 | .954 |
| Complex Problems | 1 | Total Knowledge | .190 | .435 | 2.90 | .006 |
| Complex Problems | 2 | Total Knowledge | .190 | .373 | 2.62 | .013 |
| Complex Problems | 2 | Working Memory | .312 | .355 | 2.49 | .018 |
| Complex Problems | 3 | Age* | .312 | .001 | .003 | .997 |

Note: * Step 2 represents the final model, as adding age in step 3 was not significant.

From Table VIII, the changes in R2 obtained by adding a variable in step 2 can be derived by subtracting the R2 value associated with the model without that variable (the preceding R2 value in the column) from the R2 value associated with the model containing that variable. The beta values that are provided are the standardized beta coefficients. The squares of these coefficients can be used to obtain estimates of the relative contributions of the predictor variables to the overall variance of the performance measure.
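As a worked illustration of the two calculations just described, the sketch below derives the R2 increments and the squared standardized betas for the all-problems model. The numbers are copied from Table VIII; the function names `r2_increments` and `squared_betas` are hypothetical.

```python
# Cumulative R^2 and standardized betas for the step 2 model of
# performance on all problems, as listed in Table VIII.
steps = [
    ("Total Knowledge", 0.292, 0.313),
    ("Reasoning", 0.405, 0.305),
    ("Working Memory", 0.474, 0.268),
    ("Perceptual Speed", 0.537, 0.253),
]


def r2_increments(rows):
    """Change in R^2 as each variable enters, in order of entry."""
    increments, previous = {}, 0.0
    for name, r2, _beta in rows:
        increments[name] = round(r2 - previous, 3)
        previous = r2
    return increments


def squared_betas(rows):
    """Squared standardized coefficients, rounded to three decimals."""
    return {name: round(beta ** 2, 3) for name, _r2, beta in rows}


print(r2_increments(steps))
# {'Total Knowledge': 0.292, 'Reasoning': 0.113, 'Working Memory': 0.069, 'Perceptual Speed': 0.063}
print(squared_betas(steps))
# {'Total Knowledge': 0.098, 'Reasoning': 0.093, 'Working Memory': 0.072, 'Perceptual Speed': 0.064}
```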

Not surprisingly, the highest R2 (.537) was found for performance on all problems as this measure reflected the largest number of problems performed by the participant. In addition to knowledge, three cognitive ability measures were found to be predictors of overall performance: reasoning, working memory, and perceptual speed, with each measure accounting for a reasonably large proportion of the variance in performance.

For performance on the simple problems, following the inclusion of total knowledge the only cognitive ability measure found to be significant was reasoning, resulting in a final model with R2 = .388. Interestingly, knowledge was no longer significant when reasoning was added to the model.

Finally, for the performance of the complex problems, following the inclusion of total knowledge, the only cognitive ability measure found to be significant was working memory, resulting in a final model with R2 = .312. Also, in contrast to the final (step 2) model for performance on the simple problems, the knowledge variable remained significant even after the inclusion of working memory.

For the sample of 40 older adults, a weak correlation was found between age and overall task performance, r(40) = −.223, p = .083, and between age and complex task performance, r(40) = −.219, p = .087. Still, even for this restricted age range, there was a clear indication of a relationship between age and performance and specifically for performance on the complex problems. However, the results of the regression analysis clearly indicate that after accounting for knowledge and cognitive ability factors, the influence of age is negligible.

3.4 Task Performance and Mental Models

To understand the relationship between the user’s mental model and performance, ten questions were extracted from the structured interview instrument (Table IV) and grouped into three categories according to the type of mental model they addressed, either Internet, Web browser, or search engine (the latter comprised questions from both the simple search and advanced search sections of the structured interview instrument). In each of these cases, having a better mental model was operationally defined by composite scores of 4 or greater on the questions corresponding to that mental model. Twenty-two of the 40 older participants had a better mental model of the Internet; 14 had a better mental model of Web browsers; and 14 had a better mental model of search engines.

To determine if goodness of mental model was reflected in performance, t-tests were conducted. Specifically, for each of the three measures of task performance, the impact on performance of each of the mental model categories was assessed where the two groups were defined based on whether the participant was in the better or worse group for that mental model category. The results of these tests, which are summarized in Table IX, indicated that the mental model of the Internet had the most impact on overall and simple task performance, while the search engine mental model had the most impact on performance of the complex problems.

Table IX.

T-tests of Performance for Superior vs. Inferior Types of Mental Models

| Mental Model | All Problems | Simple Problems | Complex Problems |
|---|---|---|---|
| Internet | 2.19** | 3.24*** | 0.84 |
| Web Browser | 0.76 | 0.56 | 0.64 |
| Search Engine | 1.88* | 0.93 | 1.89* |

The values in the table are t-values used for comparison of mean performance for superior versus inferior types of mental models.

Note: *** p < .01; ** p < .05; * p < .10 (N = 40).

4. DISCUSSION

Older adults have the potential to benefit tremendously from the vast stores of information on the Internet. With rising healthcare costs and an overloaded healthcare system, access to health-related information can empower older adults by enabling them to become better informed about their own health and healthcare choices. This, in turn, could impact their independence and well-being and thus have a substantial impact on their lives and on society as a whole. However, despite these potential benefits one should not lose sight of the fact that information seeking is an activity that places demands on many cognitive abilities, and the dynamics and processes of finding information are often obscure. For many older adults who possess limited knowledge concerning the Internet and search engine processes and who may also exhibit declines in cognitive abilities, Web-based information seeking can be an intimidating and frustrating experience.

The goals in this study were to examine the influence of various types of Internet-domain knowledge, cognitive abilities, and problem complexity on the Internet health information-seeking capabilities of older adults. An important overall finding was that knowledge of various aspects of the Internet, though necessary, was not sufficient in explaining information-seeking performance. As a general illustration of this point, consider those older participants with the five best total knowledge scores. Compared to these participants, the older participants with the five best overall performance scores had, on average, a lower total knowledge score (141.6 vs. 176.4). However, these five best performers had, on average, a much higher overall performance score (293.36 vs. 219.89). Thus, although having good knowledge was found to be strongly related to effective information seeking (Figure 2), other factors besides knowledge had an impact on information seeking.

In particular, a number of cognitive abilities were critical for task performance. Importantly, the data also indicated that the relationship between abilities and performance depended on problem complexity. For overall task performance, after accounting for individual differences in knowledge, significant predictors of performance were reasoning, working memory, and perceptual speed. For the complex problems, knowledge and working memory were significant predictors of performance. However, for simple problems, only reasoning ability was found to be a significant predictor of performance. Thus as problems become more complex, information-seeking performance appears to become more dependent on a combination of knowledge and abilities.

The importance of working memory in Web navigation has been noted in a number of studies [Kubeck et al. 1999; Laberge and Scialfa 2005], in which recall of where one is, planning of where one wants to go, and comprehension of information on Web pages need to be carried out more or less concurrently. The coordination of the processing and storage of information is a critical aspect of working memory activity [Salthouse and Babcock 1991] and, as implied in Figure 1, can play an important role in keeping the user oriented during more complex information-seeking activities. With simpler problems, the emphasis may be on identifying relevant facets and ultimately search box terms that are more likely to map into immediately recognizable solutions. Therefore, for simpler problems that do not generate complex navigational behaviors, reasoning ability would likely be more important than working memory. However, for more complex problems, in addition to working memory, knowledge pertaining to search would also play an instrumental role with respect to the translation of facets into search box terms as implied in Figure 1.

The finding that perceptual speed was predictive of the overall task performance measure, even after accounting for knowledge, reasoning, and working memory, is also not surprising. First, speed was one of the components of the task performance measure. Second, according to the model depicted in Figure 1, a deficit in perceptual speed, which is related to visual scanning ability, could adversely affect information seekers during the Results stage by decreasing the number of results and result pages that they would be able to process.

It should also be noted that intuitively the identification of relevant facets or search terms would depend as well on verbal fluency and lifelong knowledge abilities. While these two latter abilities did not emerge as significant predictors of performance in the regression models, they were highly correlated with the performance measures as well as with reasoning ability. Thus their shared variance with reasoning ability would likely require much larger sample sizes for their effects to become manifest.

This study also incorporated the Group Embedded Figures Test to differentiate field dependent (FD) from field independent (FI) individuals. The results from this study were not consistent with findings that a field-independent cognitive style is associated with better search performance on Internet-based information-seeking tasks [Palmquist and Kim 2000]. The lack of sensitivity of this measure to performance in this study could be due to a number of factors, including reduced variability in this measure for the restricted age range comprising this sample of participants, or perhaps to the reduced influence of visual complexity when using powerful search engines for problem solving.

What the analyses in this study do suggest is that certain cognitive abilities, in particular reasoning, working memory, and perceptual speed, and to some extent verbal fluency, have a large impact on the extent to which older adults can negotiate the demands inherent in Internet information seeking. The importance of reasoning ability also suggests that with the powerful search engines currently available (such as Google), the crucial information-seeking skills may be shifting from navigation activities to translation of the problem into appropriate facets and discriminating useful from unhelpful results (Figure 1).

With respect to knowledge, the data suggest that its role in information seeking varies with problem complexity. Specifically, the findings indicate that for complex problems, the most critical Internet domain knowledge is search knowledge, and in particular, advanced search knowledge (Table VII). The mental model data are also consistent with this finding. As indicated in Table IX, good mental models of the Internet appear to support performance on simple problems, while there is a trend toward good search engine mental models supporting complex task performance. The latter result is consistent with findings from Borgman [1996], which indicated that participants trained on an analogical model of an online search system showed better performance on complex problems compared to those who received procedural training. The ability of older adults to successfully negotiate more complex Web-based searches is crucial in light of the sheer quantity of health-related information on the Internet and the complex nature of health issues. Importantly, these results imply the need to incorporate knowledge related to search engines into instructional programs targeting older adults.

Another consideration in the relationship between knowledge and Internet information-seeking behavior is the role of structural knowledge. The findings of significant and positive correlations between Pathfinder similarity scores, the total knowledge score, and the individual knowledge domain scores imply that the more knowledge a participant had, the more organized that knowledge was, and are in line with other findings from other task domains (e.g., Conner et al. [2004]). However, despite the rather impressive visual demonstrations of differences in knowledge organization provided by the Pathfinder network graphs (e.g., Figures 3(a) and 3(b)), there were no significant correlations between the Pathfinder similarity scores and task performance by the older participants. Studies have found mixed relationships between Pathfinder similarity scores and performance (e.g., Wyman and Randel [1998]; Dorsey et al. [1999]). The absence in this study of stronger relationships to task performance may have been due to the specific set of concepts used in the pairwise comparisons (Table III), which may not have been as relevant to information-seeking performance as were the declarative and procedural knowledge elements of the structured interview instrument.

Overall, it appears that while knowledge related to various aspects of the Internet is critical, what may be most crucial to performance is attaining some threshold of this knowledge; beyond this point the benefits in performance may derive from relatively undiminished functioning on key cognitive abilities. Further studies are necessary for clarifying the relative roles that knowledge and cognitive abilities play in the Internet information-seeking performance of older adults. In any case, it is important to note that there is no implication from this study that knowledge can compensate for widespread deficits in cognitive abilities or, conversely, that having good cognitive abilities can compensate for very poor knowledge.

On the practical side, the results suggest that instruction directed at the four domains of knowledge investigated in this study (with emphasis on search-related knowledge for more complex problems) could significantly enhance the older adult’s ability to perform information searches on the Internet. In addition, training aimed at improving cognitive abilities such as reasoning, working memory, and perceptual speed, while more challenging, may also result in performance improvements. Evidence exists demonstrating the effectiveness of training interventions of this kind. Nyberg [2005], in a review of cognitive training studies, concludes that there is “robust evidence for the potential for plasticity in older age” (p. 311). Working memory declines in older adults can also be compensated for by good Web designs, for example, designs that utilize dynamic navigational side-trees or breadcrumbs [Maldonado and Resnick 2002].

A limitation of this study that should be noted is that prior knowledge that participants may have had about the problem domains was not measured. This aspect of domain knowledge could encompass several areas, including general health literacy skills, knowledge of specific Web sites and their related Web addresses (URLs), and specific prior knowledge related to the content of each problem (e.g., flu shots). However, we intentionally designed the questions so that it would be difficult to identify a particular relevant URL. In addition, the participants were instructed that they could proceed directly to a Web site or Web page by using its URL rather than a search engine. As it turned out, the participants usually initiated their searches using the search engine. Also, having any specialist knowledge, for example, of wheelchairs or flu shots, would not have benefited their performance scores as these scores were based on their ability to find the correct information from the Internet. Thus, while not measuring problem domain knowledge was a limitation of this study, we do not feel that the omission of this type of measure detracts from the study’s results or the important implications of the findings.

In conclusion, our overall goal is to ensure that any adult, regardless of his or her age, computer, or information-seeking knowledge and expertise, is successful at finding useful information on the Internet in reasonable time no matter how difficult the nature of the information problem. However, Marchionini [2004] has voiced concern over precisely this kind of exalted goal and cautions information science against harboring unreasonable expectations. He states: (1) “Our expectations that people can find and understand information without thinking and investing effort are unreasonable”; and (2) “Our hopes that we can create systems (solutions) that ‘do’ IR for us are unreasonable” (slide 32). Instead, he argues for IR systems that evolve using the interactions of people with computers. Both entities would continuously learn and change by virtue of their symbiotic relationship, much as today’s Google search engine evolves month-to-month by adding the intellectual investment of hundreds of employees who are dedicated to finetuning the system [Marchionini 2004].

Acknowledgments

This research was supported by the National Institute on Aging of the National Institutes of Health Grant P01 AG17211-0252.

We thank Sankaran Nair, Rod Wellens, and Timothy Goldsmith for their help.

Footnotes

The entire instrument is available upon request.

Contributor Information

JOSEPH SHARIT, Department of Industrial Engineering, University of Miami, P.O. Box 248294, Coral Gables, FL 33124.

MARIO A. HERNÁNDEZ, Department of Psychiatry and Behavioral Sciences, University of Miami Miller School of Medicine, 1695 N.W. 9th Ave, Miami, FL 33136

SARA J. CZAJA, Email: sczaja@med-miami.edu, Department of Psychiatry and Behavioral Sciences, University of Miami Miller School of Medicine, 1695 N.W. 9th Ave, Miami, FL 33136.

PETER PIROLLI, Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, CA 94304.

References

- Baeza-Yates R, Ribeiro-Neto B. Modern Information Retrieval. ACM Press/Addison Wesley; New York, NY: 1999.

- Bass SB. How will Internet use affect the patient? A review of computer network and closed Internet-based system studies and the implications in understanding how the use of the Internet affects patient populations. J Health Psych. 2003;8:25–38. doi: 10.1177/1359105303008001427.

- Bhavnani SK. Important cognitive components of domain-specific search knowledge. Proceedings of TREC’01. 2001:571–578.

- Borgman CL. The user’s mental model of an information retrieval system: An experiment on a prototype online catalog. Int J Man-Mach Stud. 1986;24(1):47–64.

- Borgman CL. Why are online catalogs still hard to use? J Amer Soc Inform Sci. 1996;47(7):493–503.

- Brown JL, Fishco VV, Hanna G. The Nelson-Denny Reading Test. Riverside Publishing Co.; Chicago, IL: 1993.

- Brewer WF. Mental models. In: Nadel L, editor. Encyclopedia of Cognitive Science. Nature Publishing Group / Macmillan Publishers Ltd.; London, UK: 2003.

- Chi MTH, Glaser R. Problem solving ability. In: Sternberg RJ, editor. Human Abilities: An Information Processing Approach. Freeman; New York, NY: 1985.

- Craik KJW. The Nature of Explanation. Cambridge University Press; Cambridge, UK: 1943.

- Czaja SJ, Charness N, Fisk AD, Hertzog C, Nair SN, Rogers WA, Sharit J. Factors predicting the use of technology: Findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psych Aging. 2006;21:333–352. doi: 10.1037/0882-7974.21.2.333.

- Czaja SJ, Sharit J, Ownby R, Roth DL, Nair SN. Examining age differences in performance of a complex information search and retrieval task. Psych Aging. 2001;16:564–579. doi: 10.1037/0882-7974.16.4.564.

- Delis DC, Kramer JH, Kaplan E, Ober BA. California Verbal Learning Test: Adult version. The Psychological Corporation; San Antonio, TX: 1987.

- Dimitroff A, Wolfram D. Searcher response in a hypertext-based bibliographic information retrieval system. J Amer Soc Inform Sci. 1995;46(1):22–29.

- Dorsey DW, Campbell GE, Foster LL, Miles DE. Assessing knowledge structures: Relations with experience and posttraining performance. Human Perform. 1999;12(1):31–57.

- Ekstrom RB, French JW, Harman HH, Dermen D. Manual for Kit of Factor-Referenced Cognitive Tests. Educational Testing Service; Princeton, NJ: 1976.

- Golden CJ. Stroop Color and Word Test. A Manual for Clinical and Experimental Uses. Stoelting Company; Wood Dale, IL: 1978.

- Google 2005. www.google.com.

- Heppner PP. The Problem Solving Inventory (PSI) Manual. Consulting Psychologists Press; Palo Alto, CA: 1988.

- Hyperionics Technology 2006. HyperCam. Murrysville, PA. info@hyperionics.com.

- Institute for Personality and Ability Testing, Inc. Manual for the Comprehensive Ability Battery. Champaign, IL: 1982.

- Interlink. 1998. PCKNOT Version 4.3 for Pathfinder Network Analysis. Gilbert, AZ. http://interlinkinc.net.

- Jackson DN. MAB-II. Multidimensional Aptitude Battery. Sigma Assessment Systems; Port Huron, MI: 1998.

- Johnson K, Magusin E. Exploring the Digital Library: A Guide for Online Teaching and Learning. Jossey-Bass/Wiley; San Francisco, CA: 2005.

- Kubeck JE, Miller-Albrecht SA, Murphy MM. Finding information on the World Wide Web: Exploring older adults’ exploration. Educ Geront. 1999;25:167–183.

- Kulthau G. Seeking Meaning: A Process Approach to Library and Information Services. 2nd ed. Libraries Unlimited; Westport, CT: 2003.

- Laberge J, Scialfa CT. Predictors of Web navigation performance in a life span sample of adults. Human Factors. 2005;47(2):289–302. doi: 10.1518/0018720054679470.

- Liebscher P, Marchionini G. Browse and analytical search strategies in a full-text CD-ROM encyclopedia. School Library Media Quart. 1988 Summer:223–233.

- Marchionini G. Information Seeking in Electronic Environments. Cambridge University Press; Cambridge, UK: 1997.

- Marchionini G. Human-computer information retrieval. 2004. http://www.ils.unc.edu/~march/HCIRMIT.pdf.

- Mayer RE, Wittrock M. Problem-solving transfer. In: Berliner DC, Calfee RC, editors. Handbook of Educational Psychology. Simon & Schuster; New York, NY: 1996.

- Maldonado CA, Resnick ML. Do common user interface design patterns improve navigation? Proceedings of the Human Factors and Ergonomics Society 46th Annual Meeting. 2002:1315–1319.

- Nahl D. The user-centered revolution: 1970–1995. In: Kent A, Williams J, editors. Encyclopedia of Microcomputers. Vol. 19. Marcel Dekker; New York, NY: 1997.

- Nahl D. The user-centered revolution. In: Drake M, editor. Encyclopedia of Library and Information Science. 2nd ed. Marcel Dekker Inc.; New York, NY: 2003.

- Nyberg L. Cognitive training in healthy aging: A cognitive neuroscience perspective. In: Cabeza R, Nyberg L, Park D, editors. Cognitive Neuroscience of Aging: Linking Cognitive and Cerebral Aging. Oxford University Press; Oxford, UK: 2005.

- Palmquist RA, Kim KS. Cognitive style and online database search experience as predictors of Web search performance. J Amer Soc Inform Sci. 2000;51(6):558–566.

- Pew Internet and American Life Project 2004. Older Americans and the Internet. Washington, D.C. http://www.pewinternet.org.

- Powell JA, Darvell M, Gray JAM. The doctor, the patient and the World Wide Web: How the Internet is changing healthcare. J Royal Soc Medicine. 2003;96:74–76. doi: 10.1258/jrsm.96.2.74.

- Reeder R, Pirolli P, Card SK. Weblogger: A data collection tool for Web-use studies. 2000. UIR Tech. Rep. UIR-R-2000-06, Xerox PARC.

- Salthouse TA, Babcock RL. Decomposing adult age differences in working memory. Develop Psych. 1991;27:763–776.

- Sharit J, Czaja SJ, Hernández MA, Yang Y, Perdomo D, Lewis JE, Lee CC, Nair S. An evaluation of performance by older persons on a simulated telecommuting task. J Geront: Psycho Sci. 2004;59B(6):305–316. doi: 10.1093/geronb/59.6.p305.

- Slone DJ. The influence of mental models and goals on search patterns during Web interaction. J Amer Soc Inform Sci Tech. 2002;53(13):1152–1169.

- Sternberg RJ, Grigorenko EL. Are cognitive styles still in style? Amer Psych. 1997;52(7):700–712.

- Sutcliffe A, Ennis M. Towards a cognitive theory of IR. Interact Comput. 1998;10:321–351.

- U.S. Department of Health and Human Services. A Profile of Older Americans: 2001. Administration on Aging; Washington, D.C: 2001.

- Wang P, Hawk WB, Tenopir C. Users’ interaction with World Wide Web Resources: An exploratory study using a holistic approach. Inform Process Manag. 2000;36:229–251.

- Wechsler D. Manual for the Wechsler Memory Scale-Revised. The Psychological Corp.; New York, NY: 1981.

- Weller HG, Repman J, Rooze GE. The relationship of learning, behavior, and cognitive styles in hypermedia-based instruction: Implications for design of HBI. Comput Schools. 1994;10:401–420.

- Wilkie FK, Eisdorfer C, Morgan R, Lowenstein DA, Szapocznik J. Cognition in early HIV infection. Archives Neurol. 1990;47:433–440. doi: 10.1001/archneur.1990.00530040085022.

- Wyman BG, Randel JM. The relation of knowledge organization to performance of a complex cognitive task. J Appl Cognit Psych. 1998;12:251–264.

- Zhang X, Chignell M. Assessment of the effects of user characteristics on mental models of information retrieval systems. J Amer Soc Inform Sci Tech. 2001;52(6):445–459.