Abstract

Probably one of the most characteristic features of a living system is its continual propensity to change as it juggles the demands of survival with the need to replicate. Internally these changes are manifest as changes in metabolite, protein and gene activities. Such changes have become increasingly obvious to experimentalists with the advent of high-throughput technologies. In this chapter we highlight some of the quantitative approaches used to rationalize the study of cellular dynamics. The chapter focuses attention on the analysis of quantitative models based on differential equations using biochemical control theory. Basic pathway motifs are discussed, including straight chain, branched and cyclic systems. In addition, some of the properties conferred by positive and negative feedback loops are discussed particularly in relation to bistability and oscillatory dynamics.

Keywords: Motifs, control analysis, stability, dynamic models

1 Introduction

Probably one of the most characteristic features of a living system is its continual propensity to change, even though it is also arguably the one characteristic that, as molecular biologists, we often ignore. Part of the reason for this neglect is the difficulty of making time-dependent quantitative measurements of proteins and other molecules, although that is rapidly changing with advances in technology. The dynamics of cellular processes, and in particular cellular networks, is one of the defining attributes of the living state and deserves special attention.

Before proceeding to the main discussion, it is worth briefly listing the kinds of questions that can be, and have been, answered by a quantitative approach (see Table 1). For example, the notion of the rate-limiting step was originally a purely intuitive invention; once analyzed quantitatively, however, it was shown to be inconsistent with both logic and experimental evidence. There are many examples such as this where a quantitative analysis has overturned a long-held view of how cellular networks operate. In the long term, one of the aims of a quantitative approach is to uncover general principles of cellular control and organization. In turn, this will lead to new approaches to engineering organisms and the development of new therapeutics.

Table 1.

Some problems amenable to a quantitative approach

| Problem | Representative Solution |
|---|---|
| Rate-Limiting Steps | Kacser and Burns (1973) |
| Role of Feedback and Robustness | Savageau (1976) |
| Analysis of Cell-to-cell Variation | Mettetal et al. (2006) |
| Rationalization of Network Structure | Voit et al. (2006) |
| Design of Synthetic Networks | Kaern and Weiss (2006) |
| New Principles of Regulation | Altan-Bonnet and Germain (2005) |
| New Therapeutic Approaches | Bakker et al. (2000) |
| Origin of Dominance and Recessivity | Kacser and Burns (1981) |
| Missing Interactions | Ingolia (2004) |
| Multistationary Systems | Many Examples Exist (Kholodenko 2006) |

Although traditionally, the discipline of molecular biology has had little need for the machinery of mathematics, the non-trivial nature of cellular networks and the need to quantify their dynamics has made mathematics a necessary addition to our arsenal. In this chapter we can only sketch some of the quantitative results and approaches that can be used to describe network dynamics. We will not cover topics such as flux balance, bifurcation analysis, or stochastic models, all important areas of study for systems biology. For the interested reader, much more detail can be had by consulting the reading list at the end of the chapter. Moreover, in this chapter we will not deal with the details of modeling specific systems because this topic is covered in other chapters.

1.1 Quantitative Approaches

The most common formal approach to representing cellular networks has been to use a deterministic and continuous formalism, based almost invariably on ordinary differential equations (ODEs). The reason for this is twofold: first, ODEs have been shown in many cases to represent adequately the dynamics of real networks, and second, there is a huge range of analytical results on ODE-based models that one can draw upon. Such analytical results are crucial to enabling a deeper understanding of the network under study.

An alternative approach to describing cellular networks is to use a discrete, stochastic approach, based usually on the solution of the master equation via the Gillespie method (Gillespie 1976; Gillespie 1977). This approach takes into account the fact that at the molecular level, species amounts are whole numbers and change in discrete, integer steps. In addition, changes in molecular amounts are assumed to be brought about by the inherent random nature of microscopic molecular collisions. Many researchers view the stochastic approach as, in principle, a superior representation because it directly attempts to describe the molecular milieu of the cellular space. However, the approach has two severe limitations. The first is that the method does not scale: when simulating large systems, particularly where the number of molecules is large (> 200), it is computationally very expensive. The second is that there are few analytical results available for stochastic models, which means that analysis is largely confined to numerical studies from which it is difficult to generalize. One of the great and exciting challenges for the future is to develop the stochastic approach to a point where it is as powerful a description as the continuous, deterministic approach. Without doubt, there is a growing body of work, such as studies on measuring gene expression in single cells, that depends very much on a stochastic representation. Unfortunately, the theory required to interpret and analyze stochastic models is still immature, though rapidly changing (Paulsson and Elf 2006; Scott et al. 2006). The reader may consult the companion chapter by Resat et al. for the latest developments in stochastic dynamics.
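To make the discrete, stochastic description concrete, the following is a minimal sketch of Gillespie's direct method for a hypothetical birth-death process (constant production of a species S, first-order degradation). The function name, the rate constants `k_prod` and `k_deg`, and the example network are illustrative assumptions, not taken from the cited papers.

```python
import math
import random


def gillespie_birth_death(k_prod, k_deg, n0, t_end, seed=0):
    """Simulate  0 -> S (rate k_prod)  and  S -> 0 (rate k_deg * n).

    Returns the reaction times and the molecule count after each event.
    """
    rng = random.Random(seed)
    t, n = 0.0, n0
    times, counts = [t], [n]
    while True:
        a_prod = k_prod          # propensity of the production reaction
        a_deg = k_deg * n        # propensity of the degradation reaction
        a_total = a_prod + a_deg
        if a_total == 0.0:
            break                # no reaction can fire any more
        # The waiting time to the next event is exponentially distributed.
        t += -math.log(1.0 - rng.random()) / a_total
        if t > t_end:
            break
        # Pick the reaction with probability proportional to its propensity.
        if rng.random() * a_total < a_prod:
            n += 1
        else:
            n -= 1
        times.append(t)
        counts.append(n)
    return times, counts


times, counts = gillespie_birth_death(k_prod=10.0, k_deg=0.1, n0=0, t_end=50.0)
```

Each event changes the molecule count by exactly one, which is the integer-valued behavior described above. Every event also costs one loop iteration, which is why the method becomes expensive when molecule numbers, and therefore propensities, are large.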

In this chapter we will concentrate on some properties of network structures using a deterministic, continuous approach.

2 Stoichiometric Networks

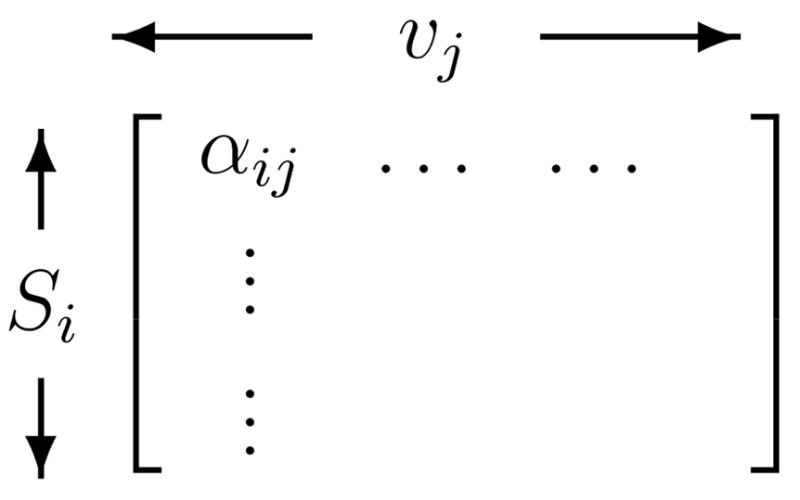

The analysis of any biochemical network starts by considering the network’s topology. This information is embodied in the stoichiometry matrix, N. In the following description we will follow the standard formalism introduced by Reder (Reder and Mazat 1988). The columns of the stoichiometry matrix correspond to the distinct chemical reactions in the network, the rows to the molecular species, one row per species. Thus the intersection of a row and column in the matrix indicates whether a certain species takes part in a particular reaction or not, and, according to the sign of the element, whether it is a reactant or product, and by the magnitude, the relative quantity of substance that takes part in that reaction. Stoichiometry thus concerns the relative mole amounts of chemical species that react in a particular reaction; it does not concern itself with the rate of reaction.

If a given network is composed of m molecular species involved in n reactions then the stoichiometry matrix is an m × n matrix. Only those molecular species which evolve through the dynamics of the system are included in this count. Any source and sink species needed to sustain a steady state (non-equilibrium in the thermodynamic sense) are set at a constant level and therefore do not have corresponding entries in the stoichiometry matrix.
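As an illustration of these conventions, the sketch below assembles N from a list of reactions. The helper `stoichiometry_matrix` and the three-step chain used as input are hypothetical; boundary species are left out simply by not listing them among the floating species.

```python
def stoichiometry_matrix(species, reactions):
    """Return N as a list of rows: one row per species, one column per reaction.

    `reactions` is a list of (reactants, products) pairs, each a dict that
    maps a species name to its stoichiometric amount. Names that are not in
    `species` (fixed boundary species) get no row in the matrix.
    """
    index = {name: i for i, name in enumerate(species)}
    N = [[0] * len(reactions) for _ in species]
    for j, (reactants, products) in enumerate(reactions):
        for name, amount in reactants.items():
            if name in index:
                N[index[name]][j] -= amount   # consumed: negative entry
        for name, amount in products.items():
            if name in index:
                N[index[name]][j] += amount   # produced: positive entry
    return N


# Hypothetical chain Xo -> S1 -> S2 -> X1 with Xo and X1 held fixed.
species = ["S1", "S2"]
reactions = [
    ({"Xo": 1}, {"S1": 1}),
    ({"S1": 1}, {"S2": 1}),
    ({"S2": 1}, {"X1": 1}),
]
N = stoichiometry_matrix(species, reactions)
```

Here m = 2 and n = 3, so N is a 2 × 3 matrix even though four species appear in the reaction scheme.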

2.1 The System Equation

To fully characterize a system one also needs to consider the kinetics of the individual reactions as well as the network’s topology. Modeling the reactions by differential equations, we arrive at a system equation which involves both the stoichiometry matrix and the rate vector, thus:

$$\frac{dS}{dt} = N\, v(S) \qquad (1)$$

where N is the m × n stoichiometry matrix and v is the n-dimensional rate vector, whose ith component gives the rate of reaction i as a function of the species concentrations.
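The system equation can be evaluated directly once N and the rate vector are available. The following sketch does this for a hypothetical three-step chain with irreversible mass-action rates; the rate constants and the boundary concentration `Xo` are arbitrary illustrative values.

```python
def rates_of_change(N, v, S):
    """Evaluate dS/dt = N v(S) for a stoichiometry matrix N given as rows."""
    rates = v(S)
    return [sum(n_ij * r_j for n_ij, r_j in zip(row, rates)) for row in N]


# Hypothetical chain Xo -> S1 -> S2 -> X1 (Xo and X1 are fixed).
N = [[1, -1, 0],
     [0, 1, -1]]


def v(S, Xo=1.0, k=(2.0, 1.0, 0.5)):
    """Rate vector: one irreversible mass-action rate per reaction."""
    S1, S2 = S
    return [k[0] * Xo, k[1] * S1, k[2] * S2]


dSdt = rates_of_change(N, v, [1.0, 2.0])
```

The topology (N) and the kinetics (v) enter separately, so either can be changed without touching the other.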

2.2 Conservation Laws

In many models of real systems, there will be mass constraints on one or more sets of species. Such species are termed conserved moieties (Reich and Selkov 1981). A recent review of conservation analysis which also highlights the history of stoichiometric analysis can be found in Sauro and Ingalls 2004. In this section only the main results will be given.

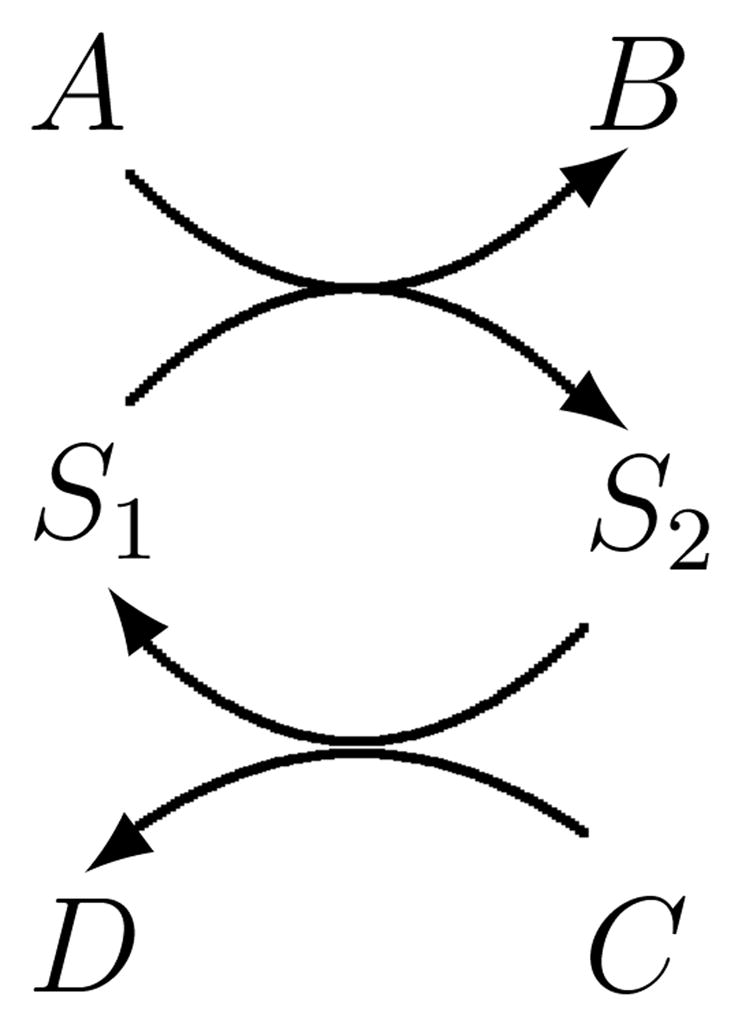

A typical example of a conserved moiety in a computational model is the conservation of adenine nucleotide, i.e. when the total amount of ATP, ADP and AMP is constant during the evolution of the model. Other examples include NAD/NADH, phosphate, phosphorylated protein forms, and so on. Figure 2 illustrates the simplest possible network which displays a conserved moiety; in this case the total mass, S1 + S2, is constant during the entire evolution of the network.

Figure 2.

Simple conserved cycle with the constraint, S1 + S2 = T

The total amount of a particular moiety in a network is time invariant and is determined solely by the initial conditions imposed on the system.

Conserved moieties in the network reveal themselves as linear dependencies in the rows of the stoichiometry matrix (Hofmeyr 1986; Cornish-Bowden and Hofmeyr 2002).

If we examine the system equations for the model depicted in Figure 2, it is easy to see that the rate of appearance of S1 must equal the rate of disappearance of S2, in other words dS1/dt = −dS2/dt. This identity is a direct result of the conservation of mass, namely that the sum S1 + S2 is constant throughout the evolution of the system.

The stoichiometry matrix for the network depicted in Figure 2 has two rows [1, −1] and [−1, 1]. Since either row can be derived from the other by multiplication by −1, they are linearly dependent, and the rank of the matrix is 1. Whenever the network exhibits conserved moieties, there will be dependencies among the rows of N, and so the rank of N (rank(N)) will be less than m, the number of rows of N. The rows of N can be rearranged so that the first rank(N) rows are linearly independent. The species which correspond to these rows can then be defined as the independent species (Si). The remaining m − rank(N) are called the dependent species (Sd).

In the simple example shown in Figure 2, there is one independent species, S1 and one dependent species, S2 (or, alternatively, S2 is independent and S1 dependent).

Once the matrix N has been rearranged as described, we can partition it as

$$N = \begin{bmatrix} N_R \\ N_0 \end{bmatrix}$$

where the submatrix NR is full rank, and each row of the submatrix N0 is a linear combination of the rows of NR. Following Reder (Reder 1988), we make the following construction. Since the rows of N0 are linear combinations of the rows of NR we can define a link-zero matrix L0 which satisfies N0 = L0NR. We can combine L0 with the identity matrix (of dimension rank(N)) to form the link matrix, L, and hence we can write:

$$N = \begin{bmatrix} N_R \\ N_0 \end{bmatrix} = \begin{bmatrix} I \\ L_0 \end{bmatrix} N_R = L\, N_R$$

By partitioning the stoichiometry matrix into dependent and independent sets we also partition the system equation. The full system equation which describes the dynamics of the network is thus:

$$\begin{bmatrix} dS_i/dt \\ dS_d/dt \end{bmatrix} = \begin{bmatrix} I \\ L_0 \end{bmatrix} N_R\, v$$

where the terms dSi/dt and dSd/dt refer to the independent and dependent rates of change respectively. From the above equation, it can be shown that the relationship between the dependent and independent species is given by: Sd(t) − Sd(0) = L0 [Si(t) − Si(0)] for all time t. Introducing the constant vector T = Sd(0) − L0Si(0), and recalling that S = (Si, Sd), we can introduce Γ = [−L0 I], and write the vector T concisely as

$$\Gamma S = T$$

Γ is called the conservation matrix.

In the example shown in Figure 2, the conservation matrix, Γ, can be shown to be

$$\Gamma = \begin{bmatrix} 1 & 1 \end{bmatrix}$$

A more complex example is illustrated in Box 1. Algorithms for evaluating the conservation constraints and the Link matrix can be found in (Hofmeyr 1986; Cornish-Bowden and Hofmeyr 2002; Sauro and Ingalls 2004; Vallabhajosyula et al. 2006).
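The cited algorithms are more careful about numerical robustness, but the underlying idea can be sketched with exact rational arithmetic: row-reduce N while recording the row operations, and read the conservation relations from the rows that reduce to zero. The function below is an illustrative sketch, not the method of any of the cited papers, and the four-species enzyme cycle used as the second input is a hypothetical network chosen so that it has the conserved totals S1 + S2 + ES and E + ES.

```python
from fractions import Fraction


def conservation_analysis(N):
    """Return (rank, gamma), where every row g of gamma satisfies g N = 0."""
    m, n = len(N), len(N[0])
    # Augment N with the identity so that row operations are recorded.
    A = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(m)]
         for i, row in enumerate(N)]
    rank = 0
    for col in range(n):
        pivot = next((r for r in range(rank, m) if A[r][col] != 0), None)
        if pivot is None:
            continue                      # no new independent row in this column
        A[rank], A[pivot] = A[pivot], A[rank]
        for r in range(m):
            if r != rank and A[r][col] != 0:
                factor = A[r][col] / A[rank][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[rank])]
        rank += 1
    # Rows below `rank` have a zero N-part; their recorded combination of
    # the original rows is a conservation relation.
    gamma = [row[n:] for row in A[rank:]]
    return rank, gamma


# Two-species cycle of Figure 2 (rows S1, S2).
rank_cycle, gamma_cycle = conservation_analysis([[1, -1], [-1, 1]])

# Hypothetical enzyme cycle: E + S1 -> ES, ES -> E + S2, S2 -> S1
# (rows ordered ES, S1, E, S2).
N_enzyme = [[1, -1, 0],
            [-1, 0, 1],
            [-1, 1, 0],
            [0, 1, -1]]
rank_enzyme, gamma_enzyme = conservation_analysis(N_enzyme)
```

The number of rows returned in `gamma` equals m − rank(N), the number of dependent species.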

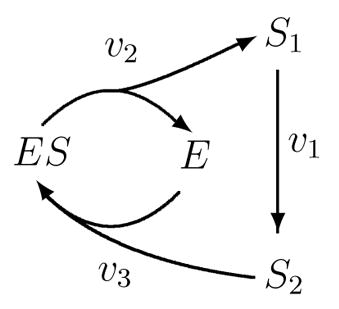

Box 1. Conservation Analysis.

Consider the simple reaction network shown on the left below:

The stoichiometry matrix for this network is shown on the right. This network possesses two conserved cycles given by the constraints: S1 + S2 + ES = T1 and E + ES = T2. The set of independent species includes: {ES, S1} and the set of dependent species {E, S2}.

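With the species ordered as Si = (ES, S1) and Sd = (E, S2), the L0 matrix can be shown to be:

$$L_0 = \begin{bmatrix} -1 & 0 \\ -1 & -1 \end{bmatrix}$$

The complete set of equations for this model is therefore:

$$\begin{bmatrix} dES/dt \\ dS_1/dt \end{bmatrix} = N_R\, v, \qquad E = T_2 - ES, \qquad S_2 = T_1 - S_1 - ES$$

Note that even though there appear to be four variables in this system, there are in fact only two independent variables, {ES, S1}, and hence only two differential equations and two linear constraints.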

An excellent source of material related to the analysis of the stoichiometry matrix can be found in the text book by Heinrich and Schuster (Heinrich and Schuster 1996) and more recently (Klipp et al. 2005).

3 Biochemical Control Theory

The system equation (1) describes the time evolution of the network. This evolution can be characterized in three ways: thermodynamic equilibrium, where all net flows are zero and no concentrations change in time; steady state, where net flows of mass traverse the boundaries of the network and no concentrations change in time; and finally the transient state, where flows and concentrations are both changing in time. Only the steady state and transient states are of real interest in biology. Steady states can be further characterized as stable or unstable, which will be discussed in a later section.

The steady state solution for a network is obtained by setting the left-hand side of the system equation (1) to zero, Nv = 0, and solving for the concentrations. Consider the simplest possible model:

$$X_o \xrightarrow{\;v_1\;} S_1 \xrightarrow{\;v_2\;} X_1 \qquad (2)$$

where we will assume that Xo and X1 are boundary species that do not change in time and that each reaction is governed by simple mass-action kinetics, $v_1 = k_1 X_o$ and $v_2 = k_2 S_1$. With these assumptions we can write down the system equation for the rate of change of S1 as:

$$\frac{dS_1}{dt} = k_1 X_o - k_2 S_1$$

We can solve for the transient behavior of this system by integrating the system equation and setting an initial condition S1(0) = Ao, to yield:

$$S_1(t) = \frac{k_1 X_o}{k_2}\left(1 - e^{-k_2 t}\right) + A_o\, e^{-k_2 t}$$

This equation describes how the concentration of S1 changes in time. The steady state can be determined either by letting t go to infinity or by setting the system equation to zero and solving for S1. Either way, the steady state concentration of S1 can be shown to be:

$$S_1 = \frac{k_1 X_o}{k_2}$$

Although simple systems such as this can be solved analytically for both the time course evolution and the steady state, the method rapidly becomes unworkable for larger systems. The problem becomes particularly acute when, instead of simple mass-action kinetics, we begin to use enzyme kinetic rate laws which introduce nonlinearities into the equations. For all intents and purposes, analytical solutions for biologically interesting systems are unattainable. Instead one must turn to numerical solutions. However, a numerical solution is a particular solution, valid only for one set of parameter values and initial conditions, whereas an analytical solution is general. As a result, to obtain a thorough understanding of a model, many numerical simulations may need to be carried out. In view of these limitations, many researchers apply small perturbation theory (linearization) around some operating point, usually the steady state. By analyzing the behavior of the system using small perturbations, only the linear modes of the model are stimulated and therefore the mathematics becomes tractable. This is a tried and tested approach that has been used extensively in many fields, particularly engineering, to deal with systems where the mathematics makes analysis difficult.

Probably the first person to consider the linearization of biochemical models was Joseph Higgins at the University of Pennsylvania in the 1950s. Higgins introduced the idea of a ‘reflection coefficient’ (Higgins 1959; Higgins 1965), which described the relative change of one variable to another for small perturbations. In his Ph.D. thesis, Higgins describes many properties of the reflection coefficients. In later work, three groups, Savageau (Savageau 1972; Savageau 1976), Heinrich and Rapoport (Heinrich and Rapoport 1974b; Heinrich and Rapoport 1974a) and Kacser and Burns (Burns 1971; Kacser and Burns 1973), independently and simultaneously developed this work into what is now called Metabolic Control Analysis or Biochemical Systems Theory. These developments extended Higgins’ original ideas significantly and the formalism is now the theoretical foundation for describing deterministic, continuous models of biochemical networks. The theory has in the last twenty years or so been further developed with the most recent important advances by Ingalls (Ingalls 2004) and Rao (Rao et al. 2004). In this chapter we will call this approach Biochemical Control Theory, or BCT.
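The contrast between a particular numerical solution and the general analytical one can be seen on the two-step model itself. The sketch below assumes irreversible first-order mass-action steps, so that dS1/dt = k1·Xo − k2·S1, and integrates this with a classical fourth-order Runge-Kutta scheme; the parameter values and function names are illustrative assumptions.

```python
import math


def simulate(k1, k2, Xo, A0, t_end, steps=1000):
    """Integrate dS1/dt = k1*Xo - k2*S1 from S1(0) = A0 using classical RK4."""
    def f(s):
        return k1 * Xo - k2 * s

    h = t_end / steps
    s = A0
    for _ in range(steps):
        a = f(s)
        b = f(s + 0.5 * h * a)
        c = f(s + 0.5 * h * b)
        d = f(s + h * c)
        s += h * (a + 2.0 * b + 2.0 * c + d) / 6.0
    return s


def analytic(k1, k2, Xo, A0, t):
    """Closed-form solution of the same linear equation."""
    steady = k1 * Xo / k2
    return steady + (A0 - steady) * math.exp(-k2 * t)


numeric = simulate(k1=2.0, k2=0.5, Xo=1.0, A0=0.0, t_end=5.0)
exact = analytic(k1=2.0, k2=0.5, Xo=1.0, A0=0.0, t=5.0)
```

The numerical result answers the question for one choice of k1, k2, Xo and A0 only; the closed form shows at a glance that the steady state is k1·Xo/k2 for any initial condition.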

3.1 Linear Perturbation Analysis

3.1.1 Elementary Processes

The fundamental unit in biological networks is the chemical transformation. Such transformations vary, ranging from simple binding processes, transport processes, to more elaborate aggregated kinetics such as Michaelis-Menten and complex cooperative kinetics.

Traditionally chemical transformations are described using a rate law. For example the rate law for a simple irreversible Michaelis-Menten reaction is often given as

$$v = \frac{V_{max}\, S}{K_m + S} \qquad (3)$$

where S is the substrate concentration and Vmax and Km are kinetic constants. Such rate laws form the basis of larger pathway models.

A fundamental property of any rate law is the so-called kinetic order, sometimes also called the reaction order. In simple mass-action chemical kinetics, the kinetic order is the power to which a species is raised in the kinetic rate law. Reactions with zero-order, first-order and second-order are common types of reactions found in chemistry, and in each case the kinetic order is zero, one and two respectively. It is possible to generalize the kinetic order as the scaled derivative of the reaction rate with respect to the species concentration, thus

$$\varepsilon^{v}_{S} = \frac{\partial v}{\partial S}\,\frac{S}{v} = \frac{\partial \ln v}{\partial \ln S}$$

When expressed this way, the kinetic order in biochemistry is called the elasticity coefficient. Applied to a simple mass-action rate law, such as v = kS, we can see that $\varepsilon^{v}_{S} = 1$. For a generalized mass-action law such as

$$v = k \prod_i S_i^{\,n_i}$$

the elasticity for the ith species is simply ni, that is, it equals the kinetic order. For aggregate rate laws such as the Michaelis-Menten rate law, the elasticity is more complex; for example, the elasticity for the rate law (3) is:

$$\varepsilon^{v}_{S} = \frac{K_m}{K_m + S}$$

This equation illustrates that the kinetic order, though a constant for simple rate laws, is a variable for complex rate laws. In this particular case, the elasticity approaches unity at low substrate concentrations (first-order) and zero at high substrate concentrations (zero-order).
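The limiting behavior can be checked numerically by estimating the scaled derivative with a central difference. This is a minimal sketch; the helper `elasticity` and the values Vmax = 10 and Km = 2 are arbitrary illustrative choices.

```python
def elasticity(rate_law, S, rel_step=1e-6):
    """Estimate the scaled derivative (dv/dS)(S/v) by a central difference."""
    dS = S * rel_step
    dv = rate_law(S + dS) - rate_law(S - dS)
    return (dv / (2.0 * dS)) * S / rate_law(S)


def michaelis_menten(S, Vmax=10.0, Km=2.0):
    """Irreversible Michaelis-Menten rate law."""
    return Vmax * S / (Km + S)


def mass_action(S, k=3.0):
    """First-order mass-action rate law."""
    return k * S


low = elasticity(michaelis_menten, 0.002)    # S << Km: close to first order
half = elasticity(michaelis_menten, 2.0)     # S = Km
high = elasticity(michaelis_menten, 2000.0)  # S >> Km: close to zero order
first_order = elasticity(mass_action, 5.0)
```

At S = Km the estimate is one half, matching Km/(Km + S), while the mass-action law returns an elasticity of one at any concentration.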

Elasticity coefficients can be defined for any effector molecule that might influence the rate of reaction, this includes substrates, products, inhibitors, activators and so on. Elasticities are positive for substrates and activators but negative for products and inhibitors.

At this point, elasticities might seem like curiosities and of no great value; left on their own this might well be true. The real value of elasticities is that they can be combined into expressions that describe how the whole pathway responds collectively to perturbations. To explain this statement one must consider an additional measure, the control coefficient.

3.1.2 Control Coefficients

Unlike an elasticity coefficient, which describes the response of a single reaction to perturbations in its immediate environment, a control coefficient describes the response of a whole pathway to perturbations in the pathway’s environment.

At steady state, a reaction network will sustain a steady rate called the flux, often denoted by the symbol, J. The flux describes the rate of mass transfer through the pathway. In a linear chain of reactions, the steady state flux has the same value at every reaction. In a branched pathway, the flux divides at the branch points. The flux through a pathway can be influenced by a number of external factors, these include factors such as enzyme activities, rate constants and boundary species. Thus, changing the gene expression that codes for an enzyme in a metabolic pathway will have some influence on the steady state flux through the pathway. The amount by which the flux changes is expressed by the flux control coefficient.

$$C^{J}_{E_i} = \frac{dJ}{dE_i}\,\frac{E_i}{J} = \frac{d \ln J}{d \ln E_i} \qquad (4)$$

In the expression above, J is the flux through the pathway and Ei the enzyme activity of the ith step. The flux control coefficient measures the fractional change in flux brought about by a given fractional change in enzyme activity. Note that control coefficients, like elasticity coefficients, are defined for small changes.

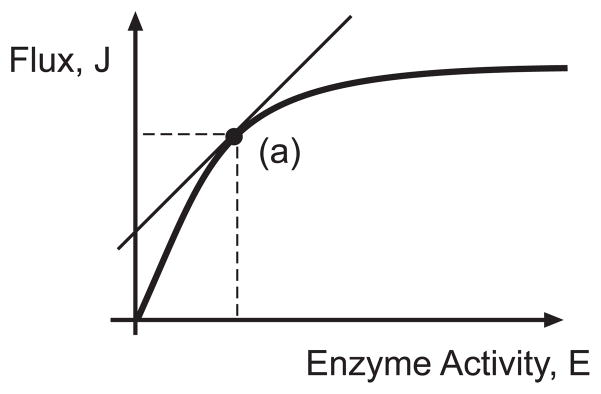

For a reaction pathway one can plot (Figure 3) the steady state flux, J, as a function of the activity of one of the enzymes. The flux control coefficient can be interpreted on this graph as the scaled slope of the response at a given steady state. Given that the curve is a function of the enzyme activity it should be clear that the value of the control coefficient is also a function of enzyme activity and consequently the steady state. Control Coefficients are not constants but vary according to the current steady state.

Figure 3.

Typical response of the pathway steady state flux as a function of enzyme activity. The flux control coefficient is defined at a particular operating point, marked (a) on the graph. The value of the coefficient is measured by the scaled slope of the curve at (a).

One can also define a similar coefficient, the concentration control coefficient, with respect to species concentrations, thus:

$$C^{S}_{E_i} = \frac{dS}{dE_i}\,\frac{E_i}{S} = \frac{d \ln S}{d \ln E_i} \qquad (5)$$
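Both kinds of control coefficient can be estimated by perturbing an enzyme activity slightly and recomputing the steady state. The sketch below does this for a hypothetical two-step pathway Xo → S → X1 with v1 = E1·k1·(Xo − S/q1) and v2 = E2·k2·S, whose steady state is available in closed form; the pathway, parameter values and function names are assumptions made for illustration.

```python
import math


def steady_state(E1, E2, Xo=1.0, k1=1.0, q1=2.0, k2=1.0):
    """Steady-state concentration S and flux J of the two-step pathway."""
    S = E1 * k1 * Xo / (E1 * k1 / q1 + E2 * k2)
    J = E2 * k2 * S
    return S, J


def control_coefficients(step, E=(1.0, 1.0), delta=1e-4):
    """Return (C_J, C_S) for enzyme `step` (0 or 1) by a small perturbation.

    The scaled derivatives are estimated as d ln(J) / d ln(E) and
    d ln(S) / d ln(E) using a symmetric perturbation of size delta.
    """
    up, down = list(E), list(E)
    up[step] *= 1.0 + delta
    down[step] *= 1.0 - delta
    S_up, J_up = steady_state(*up)
    S_dn, J_dn = steady_state(*down)
    dlnE = math.log(up[step] / down[step])
    return math.log(J_up / J_dn) / dlnE, math.log(S_up / S_dn) / dlnE


CJ1, CS1 = control_coefficients(0)
CJ2, CS2 = control_coefficients(1)
```

Because the coefficients are slopes at an operating point, repeating the calculation with a different baseline `E` gives different values.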

3.1.3 Relationship between Elasticities and Control Coefficients

One of the most significant discoveries made early on in the development of BCT (Biochemical Control Theory) was the existence of a relationship between the elasticities and the control coefficients. This enabled one, for the first time, to describe in a general way, how properties of individual enzymes could contribute to pathway behavior. More importantly this relationship could be studied without the need to solve, analytically, the system equation (1). Particular examples of these relationships will be given in the subsequent sections, here we will concentrate on the general relationship.

There are two related ways to derive the relationship between elasticities and control coefficients, the first is via the differentiation of the system equation (1) at steady state and the second by the connectivity theorem.

System Equation Derivation

The system equation can be written more explicitly to show its dependence on the enzyme activities (or any parameter set) of the system: Nv(s(E), E) = 0. By differentiating this expression with respect to E, we obtain

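$$\frac{ds}{dE} = -\left(N \frac{\partial v}{\partial s}\right)^{-1} N\, \frac{\partial v}{\partial E} \qquad (6)$$

This form assumes that $N\,\partial v/\partial s$ is invertible, that is, that the network has no conserved moieties; when conserved moieties are present, the same derivation is carried out on the independent species using NR and the link matrix L (Reder 1988). The terms ∂v/∂s and ∂v/∂E are unscaled elasticities (see (Reder 1988; Heinrich and Schuster 1996; Hofmeyr 2001; Klipp et al. 2005) for details of the derivation). By scaling the equation with the species concentration and enzyme activity, the left-hand side becomes the concentration control coefficient expressed in terms of scaled elasticities. The flux control coefficients can also be derived by differentiating the expression J = v(s(E), E) to yield:

$$\frac{dJ}{dE} = \frac{\partial v}{\partial E} + \frac{\partial v}{\partial s}\,\frac{ds}{dE} = \left[\,I - \frac{\partial v}{\partial s}\left(N \frac{\partial v}{\partial s}\right)^{-1} N\right] \frac{\partial v}{\partial E} \qquad (7)$$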

Again, the flux expression can be scaled by E and J to yield the scaled flux control coefficients. These expressions, though unwieldy to some degree, are very useful for deriving symbolic expressions relating the control coefficients to the elasticities. A very thorough treatment together with derivations of these equations and much more can be found in (Hofmeyr 2001).
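The differentiation route can be followed numerically on a pathway small enough that no matrix library is needed. The sketch below assumes a hypothetical pathway Xo → S → X1 with v1 = E1·k1·(Xo − S/q1) and v2 = E2·k2·S and no conserved moieties, so that N·∂v/∂s is a 1 × 1 matrix. It evaluates ds/dE = −(N ∂v/∂s)⁻¹ N ∂v/∂E and dJ/dE = ∂v/∂E + (∂v/∂s)(ds/dE), then scales the results.

```python
def control_by_differentiation(E1=1.0, E2=1.0, Xo=1.0, k1=1.0, q1=2.0, k2=1.0):
    """Scaled control coefficients (C_S, C_J) from unscaled elasticities."""
    S = E1 * k1 * Xo / (E1 * k1 / q1 + E2 * k2)    # steady-state concentration
    J = E2 * k2 * S                                 # steady-state flux
    N = [1.0, -1.0]                                 # one species, two reactions
    dv_ds = [-E1 * k1 / q1, E2 * k2]                # unscaled elasticities dv/ds
    dv_dE = [[k1 * (Xo - S / q1), 0.0],             # dv/dE is diagonal
             [0.0, k2 * S]]
    N_dv_ds = sum(n * d for n, d in zip(N, dv_ds))  # N dv/ds, here a scalar
    N_dv_dE = [sum(N[i] * dv_dE[i][j] for i in range(2)) for j in range(2)]
    ds_dE = [-x / N_dv_ds for x in N_dv_dE]         # unscaled concentration control
    # Unscaled flux control, using the first reaction as the reference flux.
    dJ_dE = [dv_dE[0][j] + dv_ds[0] * ds_dE[j] for j in range(2)]
    E = [E1, E2]
    C_S = [ds_dE[j] * E[j] / S for j in range(2)]   # scale by E/S
    C_J = [dJ_dE[j] * E[j] / J for j in range(2)]   # scale by E/J
    return C_S, C_J


C_S, C_J = control_by_differentiation()
```

No steady-state perturbation is needed here: once the steady state is known, the coefficients follow from the elasticities and N alone.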

Theorems

Examination of expressions (6) and (7) yields some additional and unexpected relationships between the control coefficients and elasticities, called the summation and connectivity theorems. These theorems were originally discovered by modeling small networks using an analog computer (Jim Burns, personal communication) but have since been derived by other means.

The flux summation theorem states that the sum of all the flux control coefficients in any pathway is equal to unity:

$$\sum_{i=1}^{n} C^{J}_{E_i} = 1$$

It is also possible to derive a similar relationship with respect to species concentrations, namely

$$\sum_{i=1}^{n} C^{S}_{E_i} = 0$$

In both relationships, n is the number of reaction steps in the pathway. The flux summation theorem indicates that there is a finite amount of ‘control’ (or sensitivity) in a pathway and implies that control is shared between all steps. In addition, it states that if one step were to gain control then one or more other steps must lose control.

Arguably the most important relationship is between the control coefficients and the elasticities:

$$\sum_{i=1}^{n} C^{J}_{E_i}\, \varepsilon^{v_i}_{S} = 0$$

This theorem, and its relatives (Westerhoff and Chen 1984; Fell and Sauro 1985a; Fell and Sauro 1985b), is called the connectivity theorem and is probably the most significant relationship in computational systems biology because it relates two different levels of description, the local level, in the form of elasticities and the system level, in the form of control coefficients. Given the summation and connectivity theorems it is possible to combine them and solve for the control coefficients in terms of the elasticities. For small networks this approach is a viable way to derive the relationships (Fell and Sauro 1985a) especially when combined with software such as MetaCon (Thomas and Fell 1994) which can compute the relationships algebraically. Box 2 illustrates a simple example of this method.

Box 2. Using Theorems to Derive Control Equations.

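Consider the simple reaction network, comprised of three enzyme catalyzed reactions, shown below:

$$X_o \xrightarrow{\;v_1\;} S_1 \xrightarrow{\;v_2\;} S_2 \xrightarrow{\;v_3\;} X_1$$

where Xo and X1 are fixed boundary species. The flux summation theorem can be written down as:

$$C^{J}_{E_1} + C^{J}_{E_2} + C^{J}_{E_3} = 1$$

while the two connectivity theorems, one centered around each species, are given by:

$$C^{J}_{E_1}\varepsilon^{v_1}_{S_1} + C^{J}_{E_2}\varepsilon^{v_2}_{S_1} = 0, \qquad C^{J}_{E_2}\varepsilon^{v_2}_{S_2} + C^{J}_{E_3}\varepsilon^{v_3}_{S_2} = 0$$

These three equations can be recast in matrix form as:

$$\begin{bmatrix} C^{J}_{E_1} & C^{J}_{E_2} & C^{J}_{E_3} \end{bmatrix} \begin{bmatrix} 1 & \varepsilon^{v_1}_{S_1} & 0 \\ 1 & \varepsilon^{v_2}_{S_1} & \varepsilon^{v_2}_{S_2} \\ 1 & 0 & \varepsilon^{v_3}_{S_2} \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix}$$

The matrix equation can be rearranged to solve for the vector of control coefficients, by inverting the elasticity matrix, to yield:

$$\begin{bmatrix} C^{J}_{E_1} & C^{J}_{E_2} & C^{J}_{E_3} \end{bmatrix} = \frac{1}{D} \begin{bmatrix} \varepsilon^{v_2}_{S_1}\varepsilon^{v_3}_{S_2} & -\varepsilon^{v_1}_{S_1}\varepsilon^{v_3}_{S_2} & \varepsilon^{v_1}_{S_1}\varepsilon^{v_2}_{S_2} \end{bmatrix}, \qquad D = \varepsilon^{v_2}_{S_1}\varepsilon^{v_3}_{S_2} - \varepsilon^{v_1}_{S_1}\varepsilon^{v_3}_{S_2} + \varepsilon^{v_1}_{S_1}\varepsilon^{v_2}_{S_2}$$

Further details of the procedure can be found in (Fell and Sauro 1985a; Fell and Sauro 1985b). For larger systems equation (7) can be used in conjunction with software tools such as Maple, bearing in mind that equation (7) yields unscaled coefficients.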
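For a numerical rather than symbolic answer, the summation and connectivity equations of a three-step chain Xo → S1 → S2 → X1 can be solved as an ordinary linear system. The sketch below assumes each intermediate affects only the step that produces it and the step that consumes it; the small Gauss-Jordan solver and the elasticity values are illustrative.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def flux_control(e11, e21, e22, e32):
    """Flux control coefficients of the three steps.

    e<i><j> is the elasticity of reaction i with respect to species S_j.
    Row 1 is the summation theorem; rows 2 and 3 are the connectivity
    theorems centered on S1 and S2.
    """
    A = [[1.0, 1.0, 1.0],
         [e11, e21, 0.0],
         [0.0, e22, e32]]
    return solve(A, [1.0, 0.0, 0.0])


# Product elasticities are negative, substrate elasticities positive.
C1, C2, C3 = flux_control(e11=-0.5, e21=1.0, e22=-0.5, e32=1.0)
```

With these elasticities control is shared between all three steps, with the largest share at the first step and the smallest at the last.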

3.2 Linear Analysis of Pathway Motifs

In the following sections we will describe the application of BCT to some basic and common motifs found in cellular networks. These include straight chains, branches, cycles and feedback loops.

3.2.1 Straight Chains

Although linear sequences of reaction steps are actually quite rare in cellular networks (most networks are so heavily branched that uninterrupted sequences are quite uncommon), their study can reveal some basic properties that are instructive to know.

One of the oldest concepts in cellular regulation is the notion of the rate-limiting step. It was Blackman in 1905 (Blackman 1905) who wrote the famous phrase: ‘when a process is conditioned as to its rapidity by a number of separate factors, the rate of the process is limited by the pace of the slowest factor’. It was this statement that started a century-long love affair with the idea of the rate-limiting step in biochemistry, a concept that has lasted to this very day. From the 1930s to the 1950s there were, however, a number of published papers which were highly critical of the concept, most notably those of Burton (Burton 1936), Morales (Morales 1921) and Hearon (Hearon 1952). Unfortunately, much of this work did not find its way into the rapidly expanding fields of biochemistry and molecular biology after the Second World War, and instead the intuitive idea first pronounced by Blackman still remains today one of the basic but erroneous concepts in cellular regulation. This is all the more surprising because a simple quantitative analysis shows that it cannot be true, and there is ample experimental evidence (Heinisch 1986; Burrell et al. 1994) to support the alternative notion, that of shared control.

The confusion over the existence of rate-limiting steps stems from a failure to realize that rates in cellular networks are governed by the law of mass-action, that is, if a concentration changes, then so does its rate of reaction. Many researchers try to draw analogies between cellular pathways and human experiences such as traffic congestion on freeways or customer lines at shopping store checkouts. In each of these analogies, the rate of traffic and the rate of customer checkouts do not depend on how many cars are in the traffic line or how many customers are waiting. Such situations warrant the correct use of the phrase rate-limiting step. Traffic congestion and the customer line are rate-limiting because the only way to increase the flow is to either widen the road or increase the number of cash tills, that is, there is a single factor that determines the rate of flow. In reaction networks, flow is governed by many factors including the capacity of the reaction (Vmax) and substrate/product/effector concentrations. In biological pathways, rate-limiting steps are therefore the exception rather than the rule. Many hundreds of measurements of control coefficients have borne out this prediction. A simple quantitative study will also make this clear.

Consider a simple linear sequence of reactions governed by reversible mass-action rate laws:

$$X_o \rightleftharpoons S_1 \rightleftharpoons S_2 \rightleftharpoons \cdots \rightleftharpoons S_{n-1} \rightleftharpoons X_n$$

where Xo and Xn are fixed boundary species so that the pathway can sustain a steady state. If we assume the reaction rates to have the simple form:

$$v_j = k_j \left( S_{j-1} - \frac{S_j}{q_j} \right)$$

where qj is the thermodynamic equilibrium constant and kj the forward rate constant (with S0 = Xo and Sn = Xn), we can compute the steady state flux, J, to be (Heinrich and Schuster 1996):

$$J = \frac{X_o \prod_{j=1}^{n} q_j - X_n}{\sum_{l=1}^{n} \frac{1}{k_l} \prod_{m=l}^{n} q_m}$$

By modifying the rate laws to include an enzyme factor, such as $v_j = E_j k_j (S_{j-1} - S_j/q_j)$, we can also compute the flux control coefficients as (Heinrich and Schuster 1996):

$$C^{J}_{E_i} = \frac{\frac{1}{k_i} \prod_{m=i}^{n} q_m}{\sum_{l=1}^{n} \frac{1}{k_l} \prod_{m=l}^{n} q_m}$$

where the expression is evaluated at Ej = 1 (otherwise each kj is replaced by Ejkj). Both equations show that the ability of a particular step to limit the flux is governed not only by the particular step itself but by all other steps. Prior to the 1960s this was a well-known result (Morales 1921; Hearon 1952) but was subsequently forgotten with the rapid expansion of biochemistry and molecular biology. The control coefficient equation also puts limits on the values for the control coefficients in a linear chain, namely $0 \le C^{J}_{E_i} \le 1$ and

$$\sum_{i=1}^{n} C^{J}_{E_i} = 1$$

which is the flux control coefficient summation theorem. In a linear pathway the control of flux is therefore most likely to be distributed among all steps in the pathway. This simple study shows that the notion of the rate-limiting step is too simplistic and a better way to describe a reaction’s ability to limit flux is to state its flux control coefficient.
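The distribution of control along a chain is easy to explore numerically. The sketch below assumes rate laws of the form v_j = k_j (S_{j−1} − S_j/q_j) with fixed boundary species, for which the steady-state flux is (Xo·Πq_j − Xn) divided by Σ_l (1/k_l)·Π_{m≥l} q_m, and each flux control coefficient is the corresponding term of that sum divided by the whole sum. The function name and parameter values are illustrative.

```python
def chain_flux_and_control(k, q, Xo, Xn):
    """Steady-state flux and flux control coefficients of a linear chain.

    k[j] and q[j] are the forward rate constant and equilibrium constant
    of step j (0-based). Enzyme factors are taken to be absorbed into k.
    """
    n = len(k)
    # tail[l] is the product q[l] * q[l+1] * ... * q[n-1]; tail[n] is 1.
    tail = [1.0] * (n + 1)
    for l in range(n - 1, -1, -1):
        tail[l] = q[l] * tail[l + 1]
    terms = [tail[l] / k[l] for l in range(n)]
    total = sum(terms)
    J = (Xo * tail[0] - Xn) / total
    C = [term / total for term in terms]
    return J, C


J, C = chain_flux_and_control(k=[2.0, 1.0], q=[2.0, 4.0], Xo=1.0, Xn=0.5)
```

Making one rate constant very small drives that step's coefficient toward one and the others toward zero, which is the limiting case that the phrase rate-limiting step describes; for comparable rate constants the control is shared.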

Although a linear chain puts bounds on the values of the flux control coefficients, branched systems offer no such limits. It is possible that increases in enzyme activity in one limb can decrease the flux through another, hence the flux control coefficient can be negative. In addition it is possible for the flux control coefficient to be greater than unity.

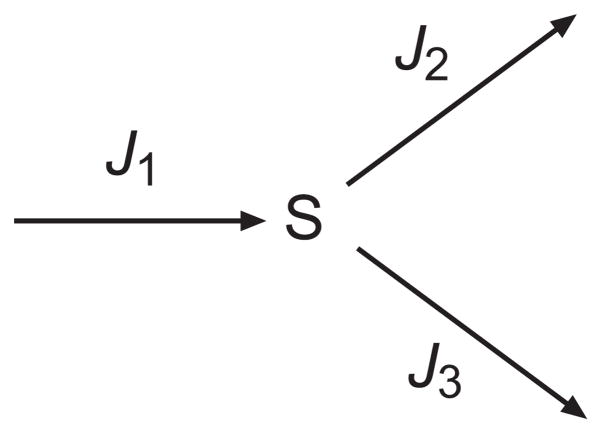

3.2.2 Branched Systems

Branching structures in metabolism are probably one of the most common metabolic patterns. Even a pathway such as glycolysis, often depicted as a straight chain in textbooks, is in fact a highly branched pathway.

A linear perturbation analysis of a branched pathway can reveal some interesting potential behavior. Consider a simple branched pathway in which a single step, catalyzed by E1, produces the intermediate S, and two steps, catalyzed by E2 and E3, consume it,

where Ji are the steady state fluxes. By the law of conservation of mass, at steady state, the fluxes in each limb are governed by the relationship:

$$J_1 = J_2 + J_3$$

In terms of control theory, there will be four sets of control coefficients, one concerned with changes in the intermediate, S, and three sets corresponding to each of the individual fluxes.

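Let the fraction of flux through J2 be given by α = J2/J1 and the fraction of flux through J3 be 1 − α = J3/J1. Writing $\varepsilon^{v_i}_{S}$ for the elasticity of step i with respect to S, the flux control coefficients of J2 with respect to steps two and three can be derived and shown to be equal to (Fell and Sauro 1985a):

$$C^{J_2}_{E_2} = \frac{\varepsilon^{v_1}_{S} - (1-\alpha)\,\varepsilon^{v_3}_{S}}{\varepsilon^{v_1}_{S} - \alpha\,\varepsilon^{v_2}_{S} - (1-\alpha)\,\varepsilon^{v_3}_{S}}, \qquad C^{J_2}_{E_3} = \frac{(1-\alpha)\,\varepsilon^{v_2}_{S}}{\varepsilon^{v_1}_{S} - \alpha\,\varepsilon^{v_2}_{S} - (1-\alpha)\,\varepsilon^{v_3}_{S}}$$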

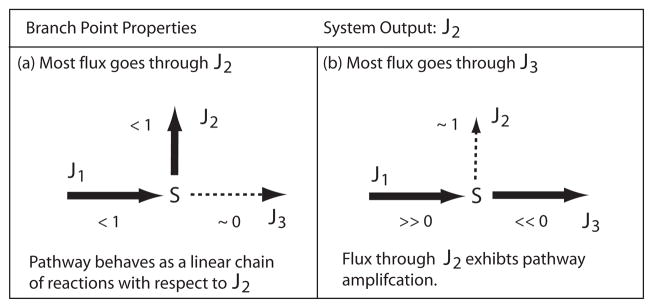

Note that the flux control coefficient, $C^{J_2}_{E_3}$, is negative, indicating that increases in the activity of E3 decrease the flux in the other limb. To understand the properties of a branched system it is instructive to look at different flux distributions. For example, consider the case when the bulk of flux moves down J3 and only a small amount goes through the upper limb J2, that is α → 0 and 1 − α → 1 (see Figure 5(b)). Let us examine how the small amount of flux through J2 is influenced by the two branch limbs, E2 and E3.

Figure 5.

The figure shows two flux extremes relative to the flux through branch J2. In case (a), where most of the flux goes through J2, the branch reverts functionally to a simple linear sequence of reactions comprised of J1 and J2. In case (b), where most of the flux goes through J3, the flux through J2 now becomes very sensitive to changes in activity at J1 and J3. Given the right kinetic settings, the flux control coefficients can become ‘ultrasensitive’ with values greater than one (less than minus one for activity changes at J3). The values next to each reaction indicate the flux control coefficient for the flux through J2 with respect to activity at the reaction.

The first thing to note is that E2 tends to have proportional influence over its own flux. Since J2 only carries a very small amount of flux, any changes in E2 will have little effect on S, hence the flux through E2 is almost entirely governed by the activity of E2. Because of the flux summation theorem and the fact that $C^{J_2}_{E_2} \approx 1$, the remaining two coefficients must be equal and opposite in value. Since $C^{J_2}_{E_3}$ is negative, $C^{J_2}_{E_1}$ must be positive. Unlike a linear chain, the values for $C^{J_2}_{E_1}$ and $C^{J_2}_{E_3}$ are not bounded between zero and one, and depending on the values of the elasticities it is possible for the control coefficients to greatly exceed one (Kacser 1983; LaPorte et al. 1984). It is conceivable to arrange the kinetic constants so that every step in the branch has a control coefficient of unity (one of which must be −1). Using the old terminology, we would conclude from this that every step in the pathway is the rate limiting step.

Let us now consider the other extreme, when most of the flux is through J2, that is α → 1 and 1 − α → 0 (See Figure 5(a)). Under these conditions the control coefficients yield:

In this situation the pathway has effectively become a simple linear chain. The influence of E3 on J2 is negligible. Figure 5 summarizes the changes in sensitivities at a branch point.
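The two flux extremes can be explored numerically. The following is a minimal sketch, not the derivation of Fell and Sauro: it assumes a hypothetical branch with linear rate laws (v1 = e1(k1·X0 − km1·S), v2 = e2·k2·S, v3 = e3·k3·S) and illustrative parameter values, and estimates the scaled flux control coefficients of J2 by finite differences. With linear kinetics the coefficients cannot become ultrasensitive, but the sign pattern, the summation theorem and the two limits are visible.

```python
# Hypothetical branched pathway: X0 -> S (v1), S -> (v2), S -> (v3).
# Rate laws and parameter values are illustrative assumptions.

def steady_state_j2(e1, e2, e3, x0=10.0, k1=1.0, km1=0.5, k2=1.0, k3=1.0):
    """Steady-state flux through limb J2 for linear kinetics.

    v1 = e1*(k1*x0 - km1*S), v2 = e2*k2*S, v3 = e3*k3*S
    """
    s = e1 * k1 * x0 / (e1 * km1 + e2 * k2 + e3 * k3)
    return e2 * k2 * s


def flux_control_coefficients(h=1e-6, **params):
    """Scaled coefficients d ln J2 / d ln e_i (i = 1, 2, 3) by central differences."""
    base = [1.0, 1.0, 1.0]
    j0 = steady_state_j2(*base, **params)
    coeffs = []
    for i in range(3):
        up, down = list(base), list(base)
        up[i] *= 1.0 + h
        down[i] *= 1.0 - h
        dj = steady_state_j2(*up, **params) - steady_state_j2(*down, **params)
        coeffs.append(dj / (2.0 * h * j0))
    return coeffs


if __name__ == "__main__":
    # Most flux through J3 (alpha -> 0): E2 has near-proportional control of J2.
    print("alpha -> 0:", flux_control_coefficients(k2=0.01, k3=1.0))
    # Most flux through J2 (alpha -> 1): the influence of E3 on J2 vanishes.
    print("alpha -> 1:", flux_control_coefficients(k2=1.0, k3=0.01))
```

In both cases the three coefficients sum to one, and the coefficient for E3 is negative.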

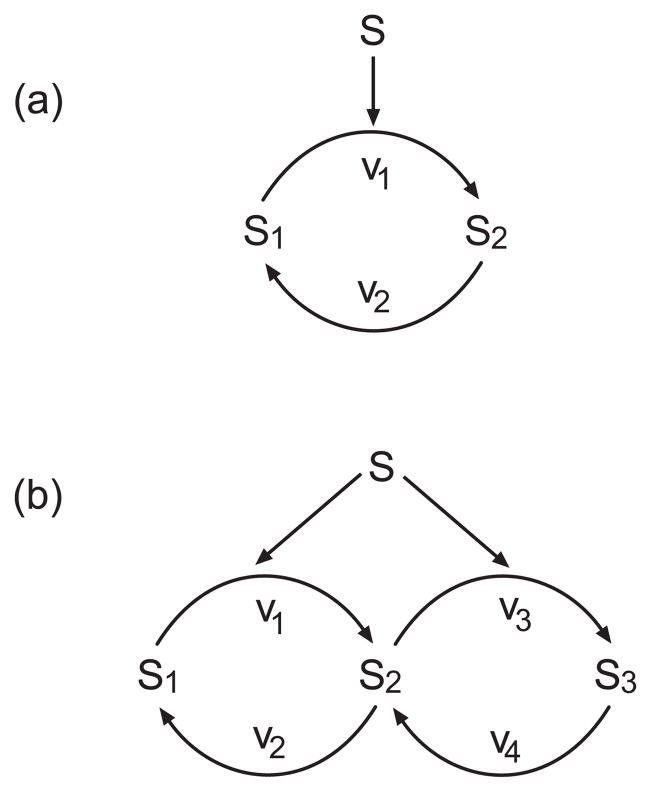

3.2.3 Cyclic Systems

Cyclic systems are extremely common in biochemical networks; they can be found in metabolic, genetic and particularly signaling pathways. The functional role of cycles is not, however, fully understood, although in some cases their operational function is beginning to become clear. We can use linear perturbation analysis to uncover some of the main properties of cycles.

Figure 6 illustrates two common cyclic structures found in signaling pathways. Such cycles are often formed by a combination of a kinase and a phosphatase. In many cases only one of the molecular species is active. For example, in Figure 6(a), let us assume that S2 is the active (output) species, while in Figure 6(b), S3 is the active (output) species. In a number of cases one observes multiple cycles formed by multi-site phosphorylation. Figure 6(b) shows a common two stage multi-site cycle. Note that in each case, the cycle steady state is maintained by the turnover of ATP. One question that can be addressed is how the steady state output of each cycle, S2 and S3, depends on the input stimulus, S. This stimulus is assumed to be a stimulus of the kinase activity.

Figure 6.

Two common cyclic motifs found in signalling pathways. (a) Single covalent modification cycle, S2 is the active species, S is the stimulus; (b) Double cycle with S3 the active species, S is the stimulus.

One approach to this is to build a detailed kinetic model and solve for the steady state concentration of S2 and S3 as a function of S. This has been done analytically in a few cases (Goldbeter and Koshland 1981; Goldbeter and Koshland 1984) but requires the modeler to choose a particular kinetic model for the kinase and phosphatase steps. A perturbation analysis based on BCT need only be concerned with the response characteristics of the kinase and phosphatase steps, not the details of the kinetic mechanism. The response of S2 to changes in the stimulus S can be shown to be given by the expression (Small and Fell 1990; Sauro and Kholodenko 2004):

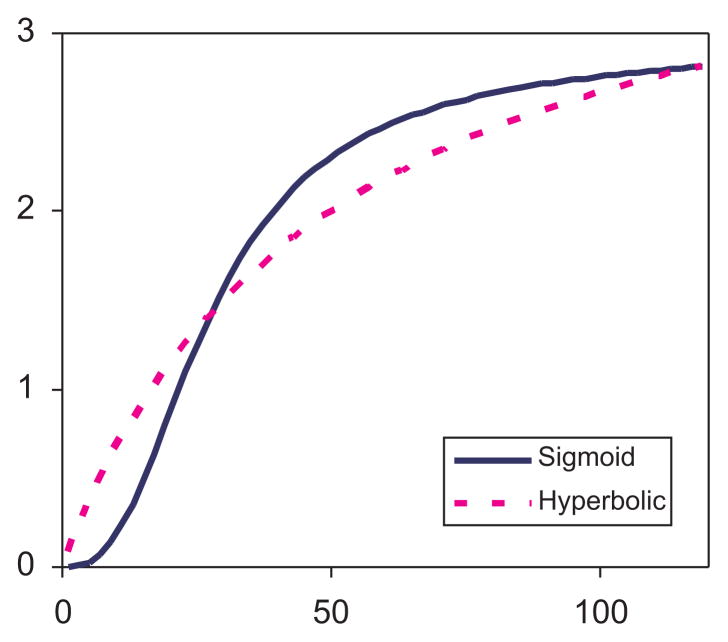

where $C^{S_2}_{S}$ is the control coefficient of S2 with respect to S, M1 and M2 are the mole fractions of S1 and S2 respectively, $\varepsilon^{1}_{S_1}$ is the elasticity of v1 with respect to S1 and $\varepsilon^{2}_{S_2}$ is the elasticity of v2 with respect to S2. If kinase and phosphatase are operating below saturation, then the elasticities will equal one, $\varepsilon^{1}_{S_1} = 1$ and $\varepsilon^{2}_{S_2} = 1$; the response of S2 to S is therefore simply given by the mole fraction M1, which means that the response is bounded between zero and one. This situation is equivalent to the non-ultrasensitive response, sometimes termed the hyperbolic response (Goldbeter and Koshland 1984).

In contrast, if the kinase and phosphatase operate closer to saturation, such that the elasticities are much smaller than one, then the denominator in the response equation can be less than the numerator and the control coefficient can exceed one. This situation is representative of zero-order ultrasensitivity and corresponds to the well known sigmoid response (Goldbeter and Koshland 1984). Thus without any reference to detailed kinetic mechanisms it is possible to uncover the ultrasensitive behavior of the network. We can carry out the same kind of analysis on the dual cycle, Figure 6(b), to derive the following expression for $C^{S_3}_{S}$.

If we assume linear kinetics on each reaction such that all the elasticities equal one, the equation simplifies to:

This shows that given the right ratios for S1, S2 and S3, it is possible for $C^{S_3}_{S} > 1$. Therefore, unlike the case of a single cycle where near saturation is required to achieve ultrasensitivity, multiple cycles can achieve ultrasensitivity with simple linear kinetics (See Figure 7).
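The claim that a double cycle can be ultrasensitive with linear kinetics can be checked with a small numerical sketch. The model below is an assumption made for illustration: the stimulus S is taken to act on both kinase steps (v1 = k1·S·S1, v3 = k3·S·S2), the phosphatase steps are first order (v2 = k2·S2, v4 = k4·S3), and only the ratios a = k1/k2 and b = k3/k4 matter. For these assumed rate laws the log-derivative of S3 with respect to S works out to (2·S1 + S2)/(S1 + S2 + S3), which the code uses as a cross-check; it is not necessarily the form in which the chapter's expression is written.

```python
def s3_fraction(s, a=1.0, b=1.0):
    """Steady-state S3/T for the assumed linear double cycle (a = k1/k2, b = k3/k4)."""
    den = 1.0 + a * s + a * b * s * s
    return a * b * s * s / den


def response_coefficient(s, h=1e-6, **kw):
    """Scaled response d ln S3 / d ln S by central finite differences."""
    up = s3_fraction(s * (1.0 + h), **kw)
    down = s3_fraction(s * (1.0 - h), **kw)
    return (up - down) / (2.0 * h * s3_fraction(s, **kw))


def mole_fraction_formula(s, a=1.0, b=1.0):
    """(2*M1 + M2) for the same model, where Mi = Si/T; derived from the rate laws above."""
    den = 1.0 + a * s + a * b * s * s
    m1, m2 = 1.0 / den, a * s / den
    return 2.0 * m1 + m2


if __name__ == "__main__":
    for s in (0.1, 1.0, 10.0):
        print(s, response_coefficient(s), mole_fraction_formula(s))
```

At low stimulus, where most of the protein is in the S1 form, the coefficient approaches two; it falls below one as S3 accumulates.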

Figure 7.

Steady state responses for the cycles shown in Figure 6. The simplest cycle 6(a) shows a hyperbolic response when the kinase and phosphatase operate below saturation (dotted line). The double cycle 6(b) shows more complex behavior in the form of a sigmoid response, the kinetics again operating below saturation (solid line). This shows that zero order kinetics is not a necessary condition of ultrasensitivity.

The cyclic models considered here assume negligible sequestration of the cycle species by the catalyzing kinase and phosphatase. In reality this is not likely to be the case because experimental evidence indicates that the concentrations of the catalyzing enzymes and cycle species are comparable (See (Blüthgen et al. 2006) for a range of illustrative data). In such situations additional effects are manifest (Fell and Sauro 1990; Sauro 1994). Of particular interest is the emergence of new regulatory feedback loops which can alter the behavior quite markedly (See Markevich et al. 2004 and Ortega et al. 2006).

3.2.4 Negative Feedback

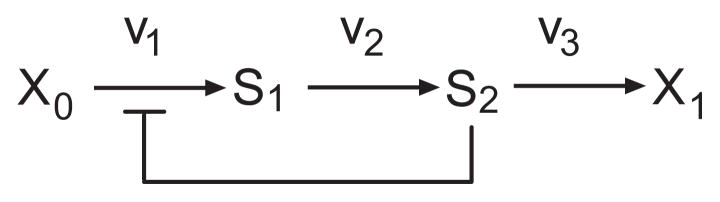

A common regulatory motif found in cellular networks is the negative feedback loop (Figure 8). Feedback has the potential to confer many interesting properties on a pathway, with homeostasis probably being the most well known. In this chapter we do not have space to cover all the effects of negative feedback and will focus instead on two properties, homeostasis and instability; more details can be found in (Sauro and Kholodenko 2004). Using BCT it is easy to show the effect of negative feedback on a pathway.

Figure 8.

Simple negative feedback loop. v1, v2 and v3 are the reaction rates. S2 acts to inhibit its own production by inhibition of v1.

The flux control coefficients for the three steps in Figure 8 are shown below (Savageau 1976; Kacser 1983). To aid comparison, the left-hand equations show the equations with feedback while the right-hand equations have been derived assuming no feedback. The feedback term is represented by a single elasticity term, $\varepsilon^{1}_{S_2}$. This elasticity measures the strength of the feedback and has a negative value, indicating that changes in S2 result in decreases in the reaction rate of v1. For cooperative enzymes, the elasticity may also have values less than −1.

The first difference to notice in the equations is that the denominator, though remaining positive in value, has an additional term compared to the system without feedback, $-\varepsilon^{1}_{S_2}\varepsilon^{2}_{S_1}$. This additional term includes the elasticity of the feedback mechanism.

The numerators for E1 and E2 are both unaffected by the feedback. However, because the denominator has an additional positive term, the ratio of numerator to denominator in both cases must be smaller. The flux control coefficients for E1 and E2 are therefore reduced in the presence of feedback. This result might appear at first glance counter-intuitive: surely the ‘controlled’ step must have more ‘control’ (as many undergraduate textbooks will assert)? Closer inspection, however, will reveal a simple explanation. Suppose the concentrations of either E1 or E2 are increased. This will cause the concentration of the signal metabolite, S2, to increase. An increase in S2 will have two effects: the first is to increase the rate of the last reaction step, the second is to inhibit the rate through E1. The result of this is that the rate increase originally achieved by the increase in E1 or E2 will be reduced by the feedback. Therefore, compared to the non-feedback pathway, both enzymes, E1 and E2, will have less control over the pathway flux. In addition, the greater the feedback elasticity, the smaller the control coefficients $C^{J}_{E_1}$ and $C^{J}_{E_2}$. Thus the stronger the feedback, the less ‘control’ E1 and E2 have over the flux.

What about the flux control coefficient distal to the feedback signal, $C^{J}_{E_3}$? According to the summation theorem, which states that the flux control coefficients of a pathway must sum to unity, if some steps experience a reduction in control then other steps must acquire control. If the flux control of the first two steps declines then it must be the case that control at the third step increases. Examination of the third control coefficient equation reveals that as the feedback elasticity ($\varepsilon^{1}_{S_2}$) strengthens, $C^{J}_{E_3}$ approaches unity, that is, the last step of the feedback system acquires most of the control.
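The redistribution of flux control can be illustrated numerically. The sketch below is an assumption-laden toy model rather than the pathway analyzed in the cited works: v1 = e1(k1·X0 − km1·S1)/(1 + (S2/Ki)^n), v2 = e2·k2·S1 and v3 = e3·k3·S2, with illustrative parameter values. Setting Ki to infinity removes the feedback. Each variant is evaluated at its own steady state, so the comparison is qualitative.

```python
def steady_state_flux(e, ki, n=4, x0=10.0, k1=1.0, km1=1.0, k2=1.0, k3=1.0):
    """Pathway flux at steady state, found by bisection on S2 (toy model, see text)."""
    e1, e2, e3 = e

    def net_rate(s2):
        s1 = e3 * k3 * s2 / (e2 * k2)  # from v2 = v3
        v1 = e1 * (k1 * x0 - km1 * s1) / (1.0 + (s2 / ki) ** n)
        return v1 - e3 * k3 * s2       # decreases monotonically on [0, hi]

    lo, hi = 0.0, k1 * x0 * e2 * k2 / (km1 * e3 * k3)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if net_rate(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return e3 * k3 * 0.5 * (lo + hi)


def flux_control(ki, h=1e-4):
    """Scaled flux control coefficients for E1, E2, E3 by central differences."""
    base = [1.0, 1.0, 1.0]
    j0 = steady_state_flux(base, ki)
    coeffs = []
    for i in range(3):
        up, down = list(base), list(base)
        up[i] *= 1.0 + h
        down[i] *= 1.0 - h
        dj = steady_state_flux(up, ki) - steady_state_flux(down, ki)
        coeffs.append(dj / (2.0 * h * j0))
    return coeffs


if __name__ == "__main__":
    print("no feedback  :", flux_control(float("inf")))
    print("with feedback:", flux_control(1.0))
```

With these values the coefficients for E1 and E2 fall when the feedback is switched on, while the coefficient for E3 rises from zero to well over one half; in both cases the three coefficients sum to one.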

For drug companies wishing to target pathways, this simple analysis would suggest that the best place to target a drug would be steps distal to a controlling signal. Traditionally many have believed it to be the controlled step that should be targeted, however this analysis indicates that the controlled step is the worst step to target, since it has the least effect on the system. This argument assumes that the targeting does not affect the strength of the feedback itself.

As mentioned previously, one of the most well known effects of negative feedback is to enhance homeostasis. In this case homeostasis refers to the stabilization of the end product, S2. We can examine the effect of negative feedback on the homeostasis of S2 by writing down the concentration control coefficient $C^{S_2}_{E_3}$.

Note that the numerator is unaffected by the presence of the feedback, whereas the denominator has an additional positive term originating from the feedback mechanism. This means that the feedback decreases the sensitivity of the end product, S2, with respect to the distal step, E3. The effect of the feedback is to stabilize the end product concentration in the face of changing demand from distal steps. This allows a pathway to satisfy changing demand characteristics of a subsystem distal to the negative feedback loop. We see such an arrangement in many metabolic pathways; clear examples include glycolysis, where demand is measured by ATP consumption, and amino acid biosynthesis, where demand is protein synthesis. In both cases one could imagine that it is important for the demand system, energy consumption or protein production, to be unimpeded by supply restraints.

Negative feedback therefore has the important task of matching different cellular systems. Hofmeyr and Cornish-Bowden (Hofmeyr and Cornish-Bowden 2000) have written extensively on this topic which they call supply-demand analysis. Interfacing different cellular modules using negative feedback, particularly in signalling pathways, is also discussed in (Sauro and Kholodenko 2004).

Only a simple feedback loop has been considered here. For readers who are interested in a more exhaustive analysis, the work by Savageau and co-workers (Savageau 1974; Savageau 1976; Alves and Savageau 2000) is highly recommended. Moreover, feed-forward negative loops have recently been found to be a common motif and further details can be found in (Mangan and Alon 2003).

3.3 Relationship to Engineering Control Theory

In engineering there is much emphasis on questions concerning the stability and performance of technological systems. Over the years, engineers have developed an elaborate and general theory of control which is applicable to many different technological systems. It is therefore all the more surprising that engineering control theory has had little impact on understanding control systems found in biological networks. Part of the problem is related to the rich terminology and abstract nature of some of the mathematics that engineers use, which in turn makes the connection to biological systems difficult to see. This also partly explains why the biological community developed its own theory of control in the form of BCT. Until recently there was little appreciation of what connection, if any, existed between these two approaches. It turns out that the connection is rather more direct than anyone expected. The work by Ingalls (Ingalls 2004) in particular (but also Rao et al. 2004) showed that the control coefficients in BCT and the transfer functions used so often in engineering are one and the same thing. This means that much of the machinery of engineering control theory, rather than being perhaps unrelated to biology, can in fact be transferred directly to biological problems.

Following Ingalls (Ingalls 2004), let us write down the system equation in the following form:

This equation can be linearized around a suitable operating point such as a steady state to obtain the linearized equation:

| (8) |

This equation describes the rate of change of a perturbation, x around the steady state. For a stable system, the perturbation x will decay towards the steady state and x(t) will thus tend to zero. The linearized equation has the standard state space form commonly used in engineering control theory, that is

with

| (9) |

u(t) is the input vector to the system, and may represent a set of perturbations in boundary conditions, kinetic constants or depending on the particular model, gene expression changes.

Because of its equivalence to the state space form, Equation (8) marks the entry point for describing biological control systems using the machinery of engineering control theory. In the following sections two applications, frequency analysis and stability analysis will be presented that apply engineering control theory, rephrased using BCT, to biological problems.

3.3.1 Frequency Response

It has been noted previously (Arkin 2000) that chemical networks can act as signal filters, that is, amplify or attenuate specific varying inputs. It may be the case that the ability to filter out specific frequencies has biological significance; for example, a cell may receive many different varying inputs that enter a common signaling pathway, and signals that have different frequencies could then be distinguished. In addition, multiple signals could be embedded in a single chemical species (such as Ca2+) and demultiplexed by different target systems. Finally, gene networks tend to be sources of noisy signals which may interfere with normal functioning; one could imagine specific control systems that reduce the noise by filtering out high frequencies (Cox et al. 2006).

In steady state, sinusoidal inputs to a linear or linearized system generate sinusoidal responses of the same frequency but of differing amplitude and phase. These differences are functions of frequency. For a more detailed explanation Ingalls (Ingalls 2004) provides a readable introduction to the concept of the frequency response of a system in a biological context.

Whereas the linearized equation (8) describes the evolution of the system in the time domain, the frequency response must be determined in the frequency domain. Mathematically there is a standard approach, called the Laplace transform, for moving a time domain representation into the frequency domain. By taking the ratio of the Laplace transform of the output to the transform of the input one can derive the transfer function, which is a complex expression describing the relationship between the input and output in the frequency domain. The change in the amplitude between the input and output is calculated by taking the absolute magnitude of the transfer function. The phase shift, which indicates how much the output signal has been delayed, is obtained from the phase angle of the transfer function. Note that under a linear treatment, the frequency does not change.

In biological systems the outputs are often the species concentrations or fluxes while the inputs are parameters such as kinetic constants, boundary conditions or gene expression levels. By taking the Laplace transform of equation (8) one can generate its transfer function (Ingalls 2004; Rao et al. 2004). The transfer function for the species vector, s, with respect to a set of parameters, p, is given by:

| (10) |

The response at zero frequency is given by

Comparison of the above equation with the concentration control coefficient equation (6) shows they are equivalent. This is a most important result because it links classical control theory directly with BCT. Moreover, it gives a biological interpretation to the transfer functions so familiar to engineers. The transfer functions can be interpreted as a sensitivity of the amplitude and phase of a signal to perturbations in the input signal. The control coefficients of BCT are the transfer functions computed at zero frequency. Moreover, the denominator term in the transfer functions can be used to ascertain the stability of the system, a topic which will be covered in a later section.

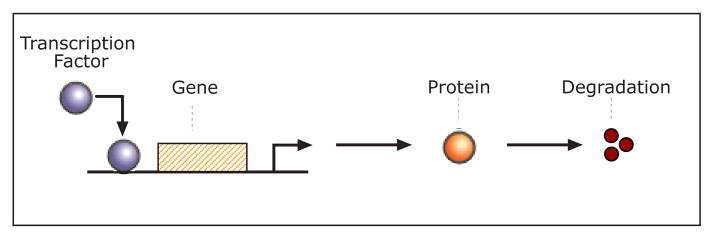

Frequency Analysis of Simple Linear Reaction Chains

The simplest example to consider for a frequency analysis is a two step pathway which can be represented as a single gene expressing a protein which undergoes degradation. This simple system has been considered previously by (Arkin 2000) who used a conventional approach to compute the response. Here we will use the BCT approach which allows us to express the frequency response in terms of elasticities. Using equation (10) and assuming that the protein concentration has no effect on its synthesis, we can derive the following expression:

where $\varepsilon^{v_2}_{P}$ is the elasticity for protein degradation with respect to the protein concentration, i is the imaginary unit and ω is the input frequency. At zero frequency (ω = 0) the equation reduces to the traditional control coefficient.

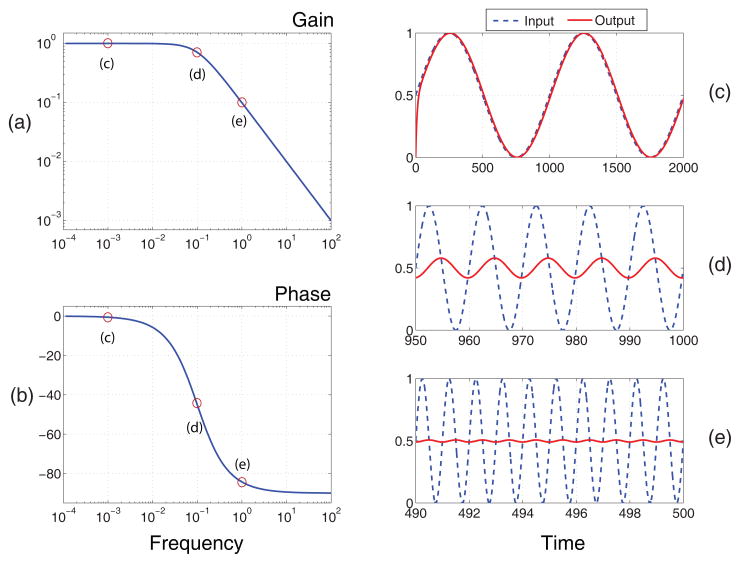

The frequency response of this simple network is shown in Figure 9. This response shows a classic low pass filter response, where at low frequencies the response is high and as the frequency increases the response of the system falls off. The explanation for this is straightforward: at high frequencies, kinetic mechanisms are simply too sluggish to respond fast enough to a rapidly changing signal and the system is unable to pass the input to the output.
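The low pass behavior is easy to reproduce. The following sketch assumes the simplest linear model of the circuit, dP/dt = k_syn·u − k_deg·P, where u is the input perturbation; `k_syn` and `k_deg` are hypothetical unscaled elasticities chosen for illustration. Its transfer function is k_syn/(iω + k_deg), and the gain and phase shift follow from the magnitude and angle of that complex number.

```python
import cmath
import math


def gene_transfer(omega, k_syn=1.0, k_deg=0.5):
    """Transfer function of dP/dt = k_syn*u - k_deg*P evaluated at s = i*omega."""
    return k_syn / (1j * omega + k_deg)


def gain_and_phase(omega, **kw):
    """Amplitude ratio and phase shift (radians) of the output relative to the input."""
    h = gene_transfer(omega, **kw)
    return abs(h), cmath.phase(h)


if __name__ == "__main__":
    for omega in (0.0, 0.05, 0.5, 5.0, 50.0):
        gain, phase = gain_and_phase(omega)
        print(f"omega={omega:6.2f}  gain={gain:.4f}  phase={math.degrees(phase):7.2f} deg")
```

The gain at zero frequency is k_syn/k_deg, the steady-state sensitivity; at ω = k_deg the gain has dropped by a factor of √2 and the output lags the input by 45 degrees.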

Figure 9.

Simple genetic circuit that can act as a low pass filter.

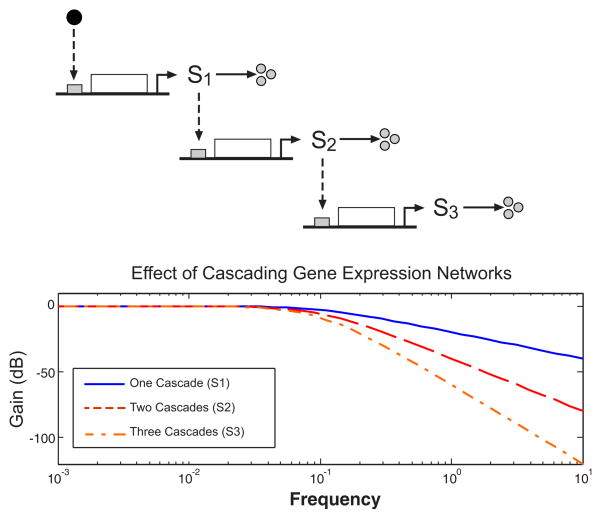

If we cascade a series of genes one after the other (Figure 11), the effect is simply to increase the attenuation so that even moderate frequencies are filtered out.

Figure 11.

Cascade of simple genetic circuits. See Figure 9 for symbolism. The graph shows the frequency response as the cascade grows in stages. The more stages the greater the attenuation.

The unscaled response equation for the model shown in Figure 11 is given by

where the tilde, ~ indicates an unscaled elasticity. n equals the number of genetic stages. We assume that all the elasticities are equal in value.
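The effect of adding stages can be sketched under the simplifying assumption that every stage contributes the same first-order factor, e_syn/(iω + e_deg), so that the cascade response is that factor raised to the power n. The names `e_syn` and `e_deg` stand for the unscaled synthesis and degradation elasticities and their unit values are hypothetical.

```python
def cascade_gain(omega, n, e_syn=1.0, e_deg=1.0):
    """Gain of n identical first-order stages in series at frequency omega."""
    stage = e_syn / (1j * omega + e_deg)
    return abs(stage ** n)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        gains = [cascade_gain(w, n) for w in (0.1, 1.0, 10.0)]
        print(n, ["%.2e" % g for g in gains])
```

With unit elasticities the zero-frequency gain stays at one for any n, while the gain at ω = 1 falls from about 0.71 for one stage to 0.25 for four stages.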

Many simple systems behave as low pass filters because physically they are unable to respond fast enough at higher frequencies. Chemical systems are not unusual in this respect. It is possible however through added regulation to change the frequency response. In the paper by Paladugu (Paladugu et al. 2006) examples of networks exhibiting a variety of frequency responses are given, including high pass and band pass filters. In the next section we will consider how negative feedback can significantly change the frequency response.

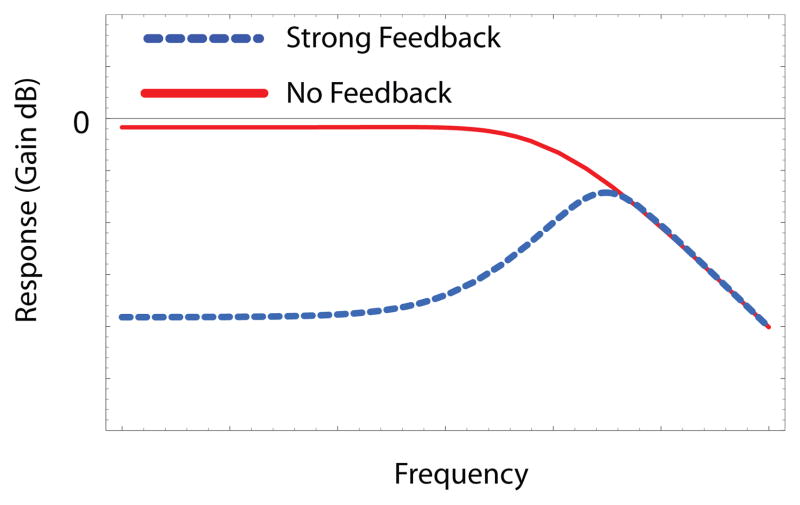

Frequency Analysis of a Simple Negative Feedback

In earlier discussions on the effect of negative feedback, the analysis focused on the response to step perturbations on the steady state, effectively the response at zero frequency in the frequency response curve. Here we wish to investigate the frequency response across the entire frequency range.

Figure 12 shows the frequency response for the simple network shown in Figure 8. The figure includes two graphs, one computed with negative feedback and another without feedback. Without feedback the pathway operates as a simple low pass filter (solid line). With feedback (dotted line), the frequency response is different. As expected, the response at low frequencies is attenuated, which reflects the homeostatic properties of the pathway. What is more interesting is the increase in responsiveness at higher frequencies, that is, the system becomes more sensitive to disturbances over a certain frequency range. This suggests that negative feedback adds a degree of resonance to the system and, given the right conditions, can cause the system to become unstable and spontaneously oscillate. The shift in sensitivity to higher frequencies as a result of negative feedback has been observed experimentally in synthetic networks (Austin et al. 2006).

Figure 12.

Frequency response of end product S2 with respect to the input species Xo for a model of the kind shown in Figure 8.
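Both effects, attenuation at low frequency and enhanced sensitivity at higher frequency, appear in a linearized sketch of the Figure 8 network. The model below is an assumption made for illustration: perturbations x1 and x2 in S1 and S2 obey dx1/dt = b·u − k2·x1 − f·x2 and dx2/dt = k2·x1 − k3·x2, where f = −∂v1/∂S2 ≥ 0 is the unscaled feedback strength and all numerical values are hypothetical. The transfer function from u to x2 is then b·k2/((s + k2)(s + k3) + f·k2).

```python
def s2_gain(omega, feedback=0.0, b=1.0, k2=1.0, k3=1.0):
    """Gain from input u to S2 for the linearized two-variable loop at s = i*omega."""
    s = 1j * omega
    return abs(b * k2 / ((s + k2) * (s + k3) + feedback * k2))


if __name__ == "__main__":
    print(" omega   no feedback   feedback=8")
    for omega in (0.0, 0.5, 1.0, 2.0, 3.0, 5.0, 10.0):
        print(f"{omega:6.1f}   {s2_gain(omega):.4f}        {s2_gain(omega, feedback=8.0):.4f}")
```

For this model the feedback curve lies below the open-loop curve at low frequency and crosses above it once ω² exceeds k2·k3 + f·k2/2, which is about ω = 2.2 for the values used here.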

3.3.2 Stability Analysis

The stability of a system is the response it has to a disturbance to its internal state. Such disturbances can arise as a result of stochastic fluctuations in the concentrations of species or as external disturbances that impose changes on the internal species. If the system recovers to the original state after a disturbance then it is classed as stable, if the system diverges then it is classed as unstable. An excellent review by Jorg Stucki that focuses on stability in biochemical systems can be found in (Stucki 1978).

Consider the simple pathway shown in equation (2). The differential equation for this simple pathway is given by

$$\frac{dS_1}{dt} = k_1 X_o - k_2 S_1 \tag{11}$$

It can easily be shown that disturbances to S1 are stable. At steady state, dS1/dt = 0, thus by making a small disturbance, δS1 in S1 we can compute the effect this has on the rate of change of δS1 to be:

$$\frac{d(\delta S_1)}{dt} = -k_2\,\delta S_1 \tag{12}$$

This shows that after the initial disturbance, the disturbance itself declines exponentially to zero, in other words the system returns to the original steady state and the system is therefore stable. By dividing both sides by δS1 and taking the limit to infinitesimal changes, one can show (Klipp et al. 2005) that the term, −k2, is equal to, ∂(dS1/dt)/∂S1. The stability of this simple system can therefore be determined by inspecting the sign of ∂(dS1/dt)/∂S1.

Now consider a change to the kinetic law, k1Xo, governing the first reaction. Instead of simple linear kinetics let us use a cooperative enzyme which is activated by the product S1. The rate law for the first reaction is now given by:

$$v_1 = \frac{k_1 X_o (X_o + 1)(S_1 + 1)^2}{(S_1 + 1)^2 (X_o + 1)^2 + 80000}$$

Setting Xo = 1, k1 = 100, k2 = 0.14, a steady state concentration of S1 can be determined to be 66.9. Evaluating the derivative ∂(dS1/dt)/∂S1 at this steady state yields a value of 0.084, which is clearly positive. This means that any disturbance to S1 at this particular steady state will grow rather than decay; in other words, this steady state is unstable.

For single variable systems the question of stability reduces to determining the sign of the ∂(dS1/dt)/∂S1 derivative. For larger systems the stability of a system can be determined by looking at all the terms ∂(dSi/dt)/∂Sj, which are given collectively by the expression:

| (13) |

where J is called the Jacobian matrix containing elements of the form ∂(dSi/dt)/∂Sj. Equation (12) can be generalized to:

Analysis shows that solutions to the disturbance equations (12) and (13) are sums of exponentials where the exponents of the exponentials are given by the eigenvalues of the Jacobian matrix, J (Klipp et al. 2005). If all the eigenvalues have negative real parts then the exponentials decay (stable), whereas if any eigenvalue has a positive real part the corresponding exponential grows (unstable).

Another way to obtain the eigenvalues is to look at the roots (often called the poles in engineering) of the characteristic equation, which can be found in the denominator of the transfer function, equation (10). For stability, the real parts of all the poles of the transfer function should be negative. If any pole has a positive real part then the system is unstable. The characteristic equation can be written as a polynomial, where the order of the polynomial reflects the size of the model.

If all the poles are to have negative real parts, a necessary condition is that all the coefficients of the polynomial have the same sign and that none of them is zero. A technique called the Routh-Hurwitz criterion can be used to determine the stability. This procedure involves the construction of a “Routh array”, shown in Table 2. The third and fourth rows of the table are computed using the relations:

$$b_1 = \frac{a_{n-1}a_{n-2} - a_n a_{n-3}}{a_{n-1}}, \qquad b_2 = \frac{a_{n-1}a_{n-4} - a_n a_{n-5}}{a_{n-1}}, \qquad c_1 = \frac{b_1 a_{n-3} - a_{n-1} b_2}{b_1}, \qquad c_2 = \frac{b_1 a_{n-5} - a_{n-1} b_3}{b_1}$$

Table 2.

Routh-Hurwitz Table

| Row | 1st column | 2nd column | 3rd column | ··· |
|---|---|---|---|---|
| 1 | an | an−2 | an−4 | ··· |
| 2 | an−1 | an−3 | an−5 | ··· |
| 3 | b1 | b2 | b3 | ··· |
| 4 | c1 | c2 | c3 | ··· |
| etc. | | | | |

Rows are added to the table until a row of zeros is reached. Stability is then determined by the number of sign changes in the first column, which is equal to the number of poles with real parts greater than zero. Table 3 shows the Routh table for the characteristic equation s³ + s² − 3s − 1 = 0 where s = iω. From Table 3 we see one sign change, between the second and third rows. This tells us that there must be one root with a positive real part. Since there is one such root, the system from which this characteristic equation was derived is unstable.

Table 3.

Routh-Hurwitz Table

| Row | 1st column | 2nd column |
|---|---|---|
| 1 | 1 | −3 |
| 2 | 1 | −1 |
| 3 | −2 | 0 |
| 4 | −1 | 0 |

The advantage of using the Routh-Hurwitz table is that entries in the table will be composed from elasticity coefficients. Thus sign changes (and hence stability) can be traced to particular constraints on the elasticity coefficients. Examples of this will be given in the next section.
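The construction of the array is mechanical and can be sketched in a few lines. The function below is a minimal illustration that handles only the regular case (no zero appears in the first column); the function names are hypothetical. Coefficients are supplied from the highest power down, so s³ + s² − 3s − 1 becomes `[1, 1, -3, -1]`.

```python
def routh_first_column(coeffs):
    """First column of the Routh array for a polynomial given highest power first.

    Regular case only: raises ValueError if a zero appears in the first column.
    """
    row_a = [float(c) for c in coeffs[0::2]]
    row_b = [float(c) for c in coeffs[1::2]]
    width = len(row_a)
    row_b += [0.0] * (width - len(row_b))
    rows = [row_a, row_b]
    for _ in range(len(coeffs) - 2):
        upper, lower = rows[-2], rows[-1]
        if lower[0] == 0.0:
            raise ValueError("zero in first column; special-case handling needed")
        new_row = [
            (lower[0] * upper[j + 1] - upper[0] * lower[j + 1]) / lower[0]
            for j in range(width - 1)
        ]
        new_row.append(0.0)
        rows.append(new_row)
    return [row[0] for row in rows]


def count_sign_changes(column):
    """Number of sign changes, i.e. roots with positive real part (regular case)."""
    return sum(1 for a, b in zip(column, column[1:]) if a * b < 0)


if __name__ == "__main__":
    column = routh_first_column([1, 1, -3, -1])
    print(column, count_sign_changes(column))
```

For the example polynomial the first column is 1, 1, −2, −1, giving a single sign change and hence one root in the right half plane.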

3.4 Dynamic Motifs

3.4.1 Bistable Systems

The question of stability leads on to the study of systems with non-trivial behaviors. In the previous section a model was considered which was shown to be unstable. This model was described by the following set of rate equations:

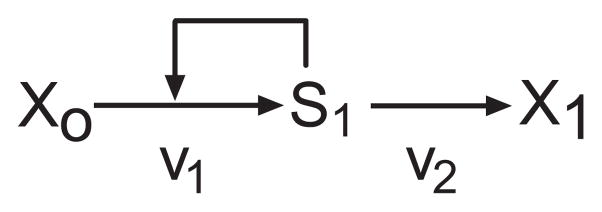

The network is depicted in Figure 13 and illustrates a positive feedback loop, that is S1 stimulates its own production.

Figure 13.

Simple pathway with positive feedback

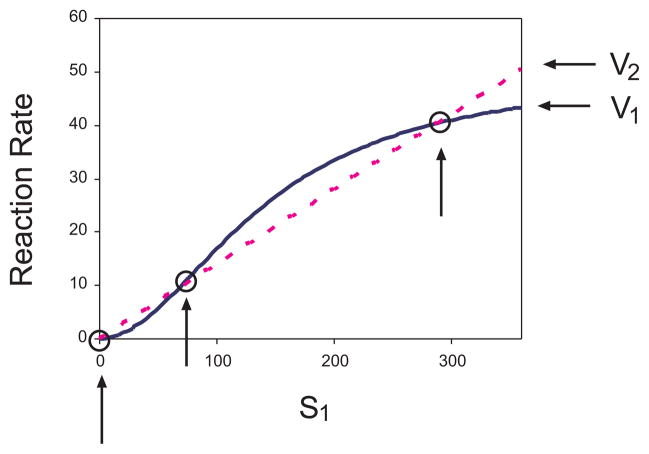

The steady state of this simple model is computed at dS1/dt = v1 − v2 = 0 or v1 = v2. If v1 and v2 are plotted against S1 (Figure 14), the points where the curves intersect correspond to the steady states of the system. Inspection of Figure 14 shows three intersection points.

Figure 14.

Graph showing v1 and v2 plotted against the species concentration, S1, for the model depicted in Figure 13. The intersection points, where v1 = v2, are marked with small circles and indicate three possible steady states. Rate equations: v1 = (k1Xo(Xo + 1)(S1 + 1)²)/((S1 + 1)²(Xo + 1)² + 80000), v2 = k2S1, and parameter values Xo = 1, k1 = 100, k2 = 0.14. The steady state solutions correspond to values of S1 at 0.019, 66.89, and 288.23.

The steady state solution that was examined earlier (S1 = 66.9) corresponds to the second intersection point and as shown, this steady state is unstable. Solutions to the system can be found at values of S1 at 0.019, 66.89, and 288.23. By substituting these values into the equation for dS1/dt we can compute the Jacobian element in each case (Table 4).

Table 4.

Table of steady state S1 and corresponding value for the Jacobian element. Negative Jacobian values indicate a stable steady state, positive elements indicate an unstable steady state. The table shows two stable steady states and one unstable steady state.

| Steady State S1 | Jacobian Element: ∂(dS1/dt)/∂S1 |
|---|---|
| 0.019 | −0.135 |
| 66.89 | 0.084 |
| 288.23 | −0.086 |

This system possesses three steady states, one unstable and two stable. Such a system is known as a bistable system because it can rest in one of two stable states. One question which arises is what the conditions for bistability are. This can be easily answered using BCT. The unscaled frequency response of S1 with respect to v1 can be computed using equation (10) to yield:

Constructing the Routh-Hurwitz table indicates one sign change, which is determined by the term $\tilde{\varepsilon}^{2}_{S_1} - \tilde{\varepsilon}^{1}_{S_1}$. Note that $\tilde{\varepsilon}^{1}_{S_1}$ has a positive value because S1 activates v1. Because the pathway is a linear chain the elasticities can be scaled without changing the stability criterion, thus the pathway is stable if

$$\varepsilon^{2}_{S_1} > \varepsilon^{1}_{S_1}$$

If we assume first order kinetics in the decay step, v2, then the scaled elasticity, $\varepsilon^{2}_{S_1}$, will be equal to unity, hence stability requires

$$\varepsilon^{1}_{S_1} < 1$$

This result shows that it is only possible to achieve bistability if the elasticity of the positive feedback is greater than one (assuming the consumption step is first-order). The only way to achieve this is through some kind of cooperative or multimeric binding, such as dimerization or tetramer formation. The bistability observed in the lac operon is a possible example of this effect (Levandoski et al. 1996; Laurent and Kellershohn 1999).
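The elasticity condition can be checked against the model of Figure 13 using the rate equations and parameter values given in the Figure 14 caption. The sketch below locates the three steady states by bisection and evaluates, at each one, the Jacobian element ∂(dS1/dt)/∂S1 and the scaled elasticity of v1 with respect to S1. The search brackets are an assumption, read off the shape of the curves, and the derivatives are finite-difference estimates.

```python
K1, K2, XO = 100.0, 0.14, 1.0  # parameter values from the Figure 14 caption


def v1(s1):
    """Cooperative, product-activated synthesis rate (Figure 14 caption)."""
    u2 = (s1 + 1.0) ** 2
    return K1 * XO * (XO + 1.0) * u2 / (u2 * (XO + 1.0) ** 2 + 80000.0)


def v2(s1):
    """First-order consumption step."""
    return K2 * s1


def ds1dt(s1):
    return v1(s1) - v2(s1)


def bisect(f, lo, hi, iters=200):
    """Root of f in [lo, hi]; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def derivative(f, x, h=1e-5):
    return (f(x + h) - f(x - h)) / (2.0 * h)


BRACKETS = [(0.0, 1.0), (1.0, 150.0), (150.0, 400.0)]  # assumed search intervals


def analyse():
    """List of (steady state S1, Jacobian element, scaled elasticity of v1)."""
    rows = []
    for lo, hi in BRACKETS:
        s = bisect(ds1dt, lo, hi)
        rows.append((s, derivative(ds1dt, s), derivative(v1, s) * s / v1(s)))
    return rows


if __name__ == "__main__":
    for s, jac, eps in analyse():
        state = "unstable" if jac > 0 else "stable"
        print(f"S1={s:8.3f}  jacobian={jac:+.3f}  elasticity={eps:.3f}  {state}")
```

Only at the middle steady state does the scaled elasticity of v1 exceed one (about 1.6), and only there is the Jacobian element positive; at the two outer states the elasticity is below one and the state is stable.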

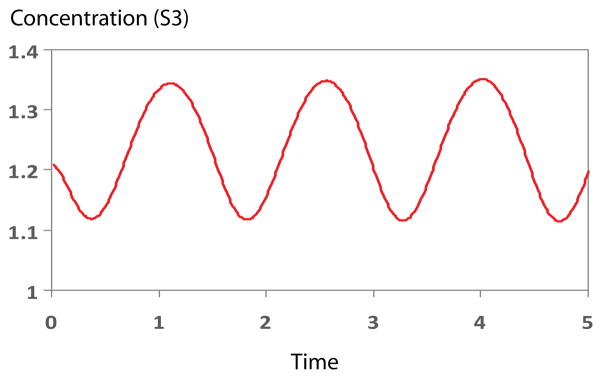

3.4.2 Feedback and Oscillatory Systems

The study of oscillatory systems in biochemistry has a long history dating back to at least the 1950s. Until recently, however, there was very little interest in the topic from mainstream molecular biology. In fact, one suspects that the concept of oscillatory behavior in cellular networks was considered more a curiosity, and a rare one at that, than anything serious. With the advent of new measurement technologies, particularly high quality microscopy, and the ability to monitor specific protein levels using GFP and other fluorescence techniques, a whole new world has opened up to many experimentalists. Of particular note is the recent discovery of oscillatory dynamics in the p53/Mdm2 couple (Lahav et al. 2004; Geva-Zatorsky et al. 2006) and NF-κB (Hoffmann et al. 2002) signaling; thus rather than being a mere curiosity, oscillatory behavior is in fact an important, though largely unexplained, phenomenon in cells.

Basic Oscillatory Designs

There are two basic kinds of oscillatory designs, one based on negative feedback and a second based on a combination of negative and positive feedback. Both kinds of oscillatory design have been found in biological systems. An excellent review of these oscillators and specific biological examples can be found in (Tyson et al. 2003; Fall et al. 2002). A more technical discussion can be found in (Tyson 1975; Tyson and Othmer 1978).

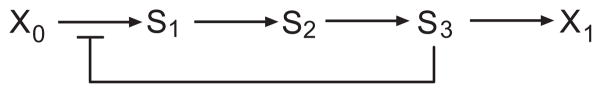

Negative Feedback Oscillator

Negative feedback oscillators are the simplest kind to understand and probably one of the first to be studied theoretically (Goodwin 1965). Savageau (Savageau 1976) in his book provides a detailed analysis and summary of the properties of feedback oscillators. Figure 8 shows a simple example of a system with a negative feedback loop. We can use BCT to analyze this system by deriving the characteristic equations (the denominator of the frequency response) and constructing a Routh-Hurwitz table. Using this technique it can be easily shown that a pathway with only two intermediates in the feedback loop cannot oscillate. In general a two variable system with a negative feedback is stable under all parameter regimes. Once a third variable has been added the situation changes and the pathway shown in Figure 15, which has three variables, can admit oscillatory behavior.

Figure 15.

Simple negative feedback model with three variables, S1, S2 and S3. This network can oscillate.
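The Routh-Hurwitz argument can be checked numerically. The sketch below is an illustration only, not the chapter's own calculation: it assumes a chain of first-order steps with rate constants k_i closed by a single feedback loop, for which linearization gives the characteristic polynomial prod(λ + k_i) + g·prod(k_i), where g stands for the loop gain −εinh/εsub. The function names and the unit rate constants are hypothetical.

```python
def loop_polynomial(rate_constants, gain):
    """Coefficients (highest power first) of prod(lam + k_i) + gain * prod(k_i)."""
    coeffs = [1.0]
    product = 1.0
    for k in rate_constants:
        # Multiply the running polynomial by (lam + k).
        coeffs = [a + k * b for a, b in zip(coeffs + [0.0], [0.0] + coeffs)]
        product *= k
    coeffs[-1] += gain * product
    return coeffs


def routh_first_column(coeffs):
    """First column of the Routh array (zero pivots are not handled)."""
    width = (len(coeffs) + 1) // 2
    rows = [list(coeffs[0::2]), list(coeffs[1::2])]
    rows = [row + [0.0] * (width - len(row)) for row in rows]
    for _ in range(len(coeffs) - 2):
        upper, lower = rows[-2], rows[-1]
        new_row = [
            (lower[0] * upper[j + 1] - upper[0] * lower[j + 1]) / lower[0]
            for j in range(width - 1)
        ]
        rows.append(new_row + [0.0])
    return [row[0] for row in rows]


def is_stable(rate_constants, gain):
    """Stable when every entry in the first column of the Routh array is positive."""
    column = routh_first_column(loop_polynomial(rate_constants, gain))
    return all(entry > 0 for entry in column)


if __name__ == "__main__":
    # Two intermediates: stable even with a very strong feedback.
    print(is_stable([1.0, 1.0], gain=1000.0))
    # Three intermediates: stability is lost once the gain passes 8.
    print(is_stable([1.0, 1.0, 1.0], gain=7.9))
    print(is_stable([1.0, 1.0, 1.0], gain=8.1))
```

For two intermediates every coefficient of the quadratic is positive whatever the gain, which is why the first call reports a stable system; with three intermediates the middle entry of the first column changes sign at g = 8 when all rate constants are equal.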

A critical factor that determines the onset of oscillations, apart from the number of variables, is the strength of the feedback. Savageau (Savageau 1976) showed that if the substrate elasticities were equal (e.g. all first-order kinetics) then the ratio of the feedback elasticity (εinh) to the output elasticity (εsub) determined the onset of oscillations (Table 5). Table 5 shows that as the pathway becomes longer, less feedback inhibition is required to destabilize the pathway. This highlights the other factor that contributes to instability, the delay in routing the signal around the network. All feedback oscillators require some device to provide amplification of a signal combined with a suitable time delay so that the signal response can go out of phase. In metabolic pathways amplification is often provided by a cooperative enzyme while the delay is provided by the intermediate steps in the pathway. In signaling pathways, amplification can be generated by covalent modification cycles. Amplification can also be provided by another means. The criterion for instability is the ratio of the inhibition elasticity to the substrate elasticity. If the output reaction of the pathway is governed by a saturable enzyme then it is possible to have εsub less than unity. This means that it is possible to trade cooperativity at the inhibition site with saturation at the output reaction. The modified Goodwin model of Bliss (Bliss et al. 1982) illustrates this trade-off: it has no cooperativity at the inhibition site but some saturation at the output reaction, introduced through a simple Michaelis-Menten rate law.

Table 5.

Relationship between the pathway length and the degree of feedback inhibition on the threshold for stability. εinh is the elasticity of the feedback inhibition and εsub is the elasticity of the distal step with respect to the signal.

| Length of Pathway | Instability Threshold −εinh/εsub |
|---|---|
| 1 | stable |
| 2 | stable |
| 3 | 8.0 |
| 4 | 4.0 |
| 5 | 2.9 |
| 6 | 2.4 |
| 7 | 2.1 |
| ⋮ | ⋮ |
| ∞ | 1.0 |
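The thresholds in Table 5 agree with the closed form sec^n(π/n), often referred to as the secant condition for a loop of n identical first-order steps. The chapter does not state this formula, so the sketch below should be read as an assumption that happens to reproduce the tabulated values; the function name is hypothetical.

```python
import math


def secant_threshold(n_steps):
    """Loop gain -e_inh/e_sub above which n identical first-order steps lose stability."""
    if n_steps < 3:
        # One- and two-step loops are stable for any feedback strength.
        return math.inf
    return (1.0 / math.cos(math.pi / n_steps)) ** n_steps


if __name__ == "__main__":
    for n in range(1, 8):
        value = secant_threshold(n)
        label = "stable" if math.isinf(value) else f"{value:.1f}"
        print(n, label)
```

As n grows the value falls towards 1, matching the last row of the table.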

A second property uncovered by BCT is that stability is enhanced if the kinetic parameters of the participating reactions are widely separated, that is a mixture of ‘fast’ and ‘slow’ reactions. The presence of ‘fast’ reactions effectively shortens the pathway and thus it requires higher feedback strength to destabilize the pathway since the delay is now less.
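The effect of separating time scales can be illustrated for a three-step loop. Assuming first-order steps with rate constants k1, k2 and k3 and the characteristic polynomial (λ + k1)(λ + k2)(λ + k3) + g·k1·k2·k3, the Routh-Hurwitz condition gives a critical loop gain g = (k1 + k2 + k3)(k1k2 + k1k3 + k2k3)/(k1k2k3) − 1. The function below is a hypothetical helper that evaluates this expression; the rate constants are arbitrary illustrative values.

```python
def three_step_threshold(k1, k2, k3):
    """Critical loop gain for a three-step first-order chain with one feedback loop."""
    total = k1 + k2 + k3
    pairwise = k1 * k2 + k1 * k3 + k2 * k3
    return total * pairwise / (k1 * k2 * k3) - 1.0


if __name__ == "__main__":
    print(three_step_threshold(1.0, 1.0, 1.0))     # equal rates
    print(three_step_threshold(1.0, 1.0, 10.0))    # one fast step
    print(three_step_threshold(1.0, 10.0, 100.0))  # widely separated rates
```

With equal rate constants the threshold is 8, as in Table 5. Making one step ten times faster raises it to about 24, and spreading the three rates over two orders of magnitude raises it to about 122, so a much stronger feedback is needed before the loop destabilizes.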

One of the characteristics of negative feedback oscillators is that they tend to generate smooth oscillations (Figure 16) and in man-made devices they are often used to generate simple trigonometric functions.

Figure 16.

Plot of S3 versus time for the model shown in Figure 15. Note that the profile of the oscillation is relatively smooth.

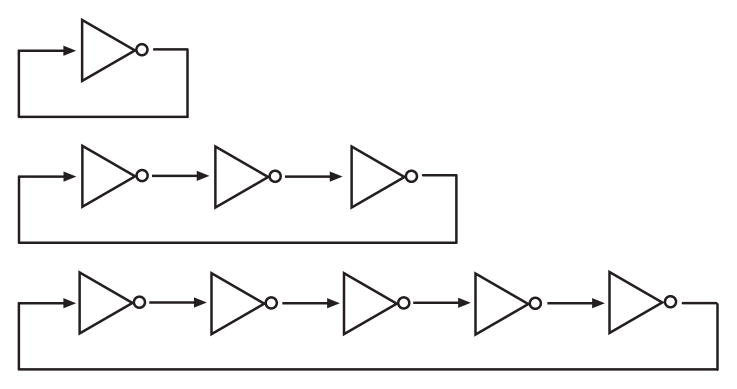
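A three-variable negative feedback loop of this kind can be simulated in a few lines. The sketch below uses a Goodwin-type model with Hill inhibition of the first step by S3 and first-order kinetics elsewhere. The rate law, the parameter values (v0, K, n, k) and the fixed-step Runge-Kutta integrator are all assumptions made for illustration; they are not the model used to produce Figure 16.

```python
def goodwin_rhs(state, v0=10.0, K=1.0, n=20, k=1.0):
    """Rates of change for S1, S2, S3 with S3 inhibiting the first step."""
    s1, s2, s3 = state
    return (
        v0 / (1.0 + (s3 / K) ** n) - k * s1,
        k * s1 - k * s2,
        k * s2 - k * s3,
    )


def rk4_step(rhs, state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(state)
    k2 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k1)))
    k3 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k2)))
    k4 = rhs(tuple(x + dt * d for x, d in zip(state, k3)))
    return tuple(
        x + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for x, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def simulate_goodwin(t_end=100.0, dt=0.01, start=(0.0, 0.0, 0.0)):
    """Return the time course of S3 sampled every dt."""
    state = start
    s3 = [state[2]]
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(goodwin_rhs, state, dt)
        s3.append(state[2])
    return s3


def mean_crossings(values):
    """Number of times the series crosses its own mean."""
    mean = sum(values) / len(values)
    return sum(1 for a, b in zip(values, values[1:]) if (a - mean) * (b - mean) < 0)


if __name__ == "__main__":
    tail = simulate_goodwin()[5000:]  # discard the initial transient
    print("S3 range:", min(tail), max(tail))
    print("mean crossings:", mean_crossings(tail))
```

With these assumed parameters the loop gain at the steady state is well above the threshold of 8 for a three-step pathway, so S3 settles into a sustained oscillation instead of approaching the steady state.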

A related oscillator that operates in a similar way to the feedback oscillator is the ring oscillator (See Figure 17). This device is composed of an odd number of signal inverters connected into a closed chain. Instability requires sufficient amplification between each inverter so that the signal strength is maintained. A ring oscillator has been implemented experimentally in E. coli (Elowitz and Leibler 2000) where it was termed a repressilator. Ring oscillators with an even number of inverters can be used to form memory units or toggle switches. The even number of units means that the signal latches to either on or off, the final state depending on the initial conditions. Toggle circuits have also been implemented experimentally in E. coli (Gardner et al. 2000).

Figure 17.

Three ring oscillators, one stage, three stage and five stage oscillators. All ring oscillators require an odd number of gating elements. Even rings behave as toggle switches.

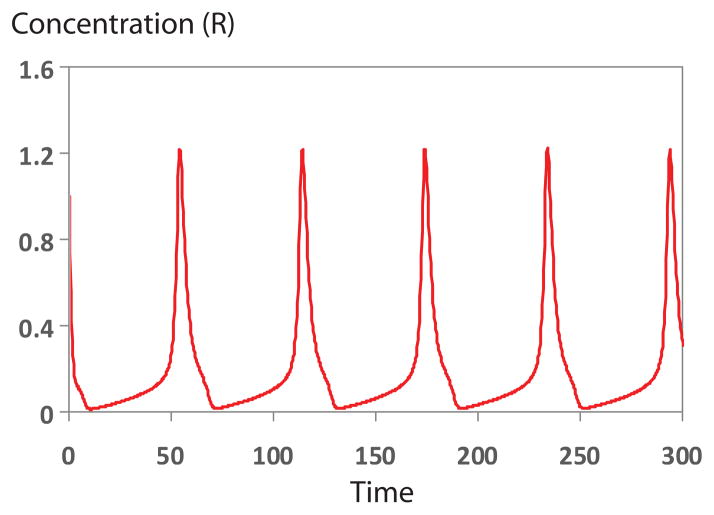
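The difference between odd and even rings can be seen in a small simulation. In the sketch below each element is repressed by the one before it through a Hill function and decays with first-order kinetics. The rate law and the values of `a` and `n` are hypothetical choices for illustration, not the published repressilator or toggle switch models.

```python
def ring_rhs(state, a=10.0, n=4):
    """Each element is repressed by its predecessor; index -1 closes the ring."""
    return tuple(
        a / (1.0 + state[i - 1] ** n) - state[i] for i in range(len(state))
    )


def rk4_step(rhs, state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(state)
    k2 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k1)))
    k3 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k2)))
    k4 = rhs(tuple(x + dt * d for x, d in zip(state, k3)))
    return tuple(
        x + dt / 6.0 * (p + 2.0 * q + 2.0 * r + s)
        for x, p, q, r, s in zip(state, k1, k2, k3, k4)
    )


def simulate_ring(start, t_end=60.0, dt=0.01):
    """Integrate a ring whose size is set by the length of the start tuple."""
    state = tuple(start)
    history = [state]
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(ring_rhs, state, dt)
        history.append(state)
    return history


if __name__ == "__main__":
    three = simulate_ring((1.0, 1.5, 2.0))
    tail = [s[0] for s in three[len(three) // 2:]]
    print("three-stage ring, range of x1:", min(tail), max(tail))

    print("two-stage ring from (2, 1):", simulate_ring((2.0, 1.0))[-1])
    print("two-stage ring from (1, 2):", simulate_ring((1.0, 2.0))[-1])
```

Under these assumptions the three-stage ring keeps cycling, while the two-stage ring latches with one element high and the other low, and which element ends up high depends only on the starting point.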

Relaxation Oscillators

A favorite oscillator design amongst theorists (Lotka 1920; Nicolis 1971; R et al. 1972; Field and Noyes 1974) as well as biological evolution (van der Pol and van der Mark 1928; FitzHugh 1955; Goldbeter 1997; Pomerening et al. 2003; Chen et al. 2004) is the relaxation oscillator. This kind of oscillator operates by charging a species concentration which, upon reaching a threshold, changes the state of a bistable switch. When the switch changes state it causes the species to discharge. Once the species has discharged the bistable switch returns to the original state and the sequence begins again. Positive feedback or a two-step ring oscillator forming a toggle switch is used to generate the bistability and a negative feedback loop provides the signal to switch the bistable switch.

One of the characteristics of a relaxation oscillator is the ‘spiky’ appearance of the oscillations. This is due to the rapid switching of the bistable circuit which is much faster compared to the operation of the negative feedback. Man-made devices that utilize relaxation oscillators are commonly used to generate saw-tooth signals. Figure 18 illustrates a plot from a hypothetical relaxation oscillator published by Tyson’s group (Tyson et al. 2003).

Figure 18.

Typical spiky appearance of oscillatory behavior from a relaxation oscillator, from Tyson et al. 2003, model 2(c).

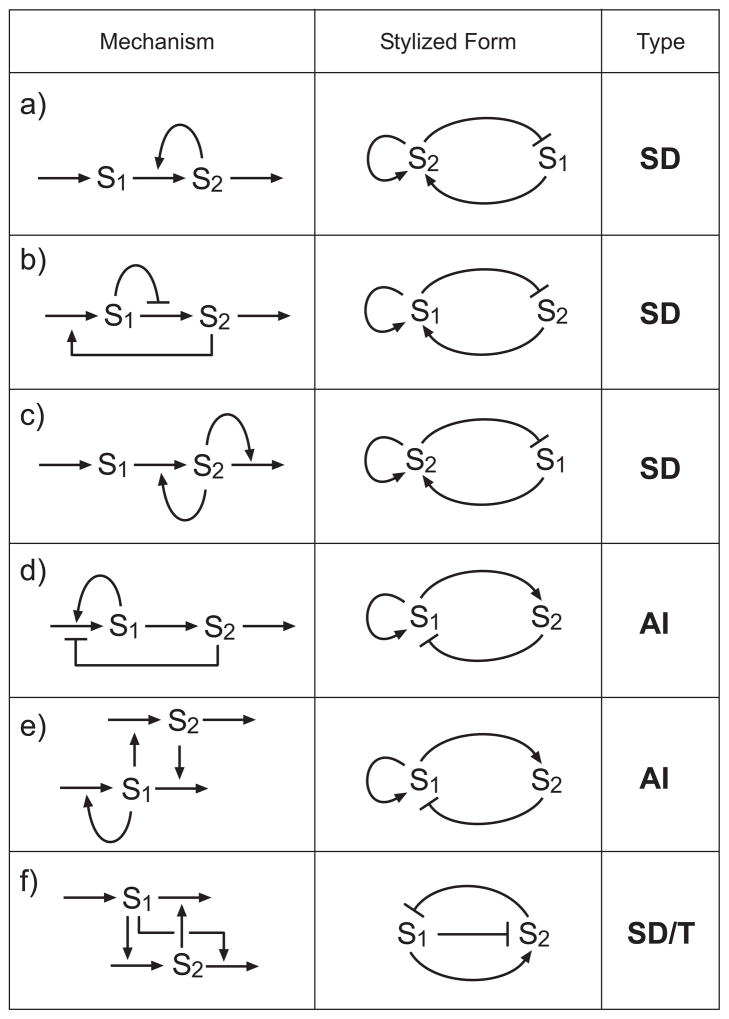
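The contrast between slow charging and fast switching can be reproduced with the van der Pol oscillator, one of the relaxation oscillators cited above. It is used here only as a compact stand-in and is not the biochemical model plotted in Figure 18. The damping parameter `mu`, the step size and the starting point are illustrative assumptions.

```python
def van_der_pol(state, mu=5.0):
    """x'' - mu (1 - x^2) x' + x = 0 written as two first-order equations."""
    x, v = state
    return (v, mu * (1.0 - x * x) * v - x)


def rk4_step(rhs, state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(state)
    k2 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k1)))
    k3 = rhs(tuple(x + 0.5 * dt * d for x, d in zip(state, k2)))
    k4 = rhs(tuple(x + dt * d for x, d in zip(state, k3)))
    return tuple(
        x + dt / 6.0 * (p + 2.0 * q + 2.0 * r + s)
        for x, p, q, r, s in zip(state, k1, k2, k3, k4)
    )


def simulate_van_der_pol(t_end=60.0, dt=0.002, start=(0.5, 0.0)):
    """Return position and velocity over the second half of the run."""
    state = start
    xs, vs = [], []
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(van_der_pol, state, dt)
        xs.append(state[0])
        vs.append(state[1])
    half = len(xs) // 2
    return xs[half:], vs[half:]


if __name__ == "__main__":
    xs, vs = simulate_van_der_pol()
    speeds = [abs(v) for v in vs]
    print("amplitude:", max(xs))
    print("peak speed / mean speed:", max(speeds) / (sum(speeds) / len(speeds)))
```

The ratio of peak to mean speed is a crude measure of how spiky the waveform is: the system creeps along for most of each cycle and then jumps quickly, which is the behavior described for the bistable switch.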

Oscillator Classification

As previously discussed, oscillators fall into two broad categories, feedback oscillators and relaxation oscillators. Some authors (Tyson et al. 2003) have proposed dividing the relaxation oscillators into two, and possibly three, additional subgroups: substrate-depletion, activator-inhibitor and toggle based relaxation oscillators. The grouping is based on two-variable oscillators and a comparison of the sign patterns in the Jacobian matrix. Although toggle based relaxation oscillations have the same Jacobian sign pattern as substrate-depletion based oscillations, the bistability is implemented differently.

Figure 19 shows examples of six different oscillators together with their classification and stylized forms.

Figure 19.

Classification of Relaxation Oscillators into substrate-depletion, activator-inhibitor and toggle based. Note that although the mechanistic networks are quite variable, the underlying operation is the same as shown in the stylized column. Type codes: SD = Substrate-Depletion; AI = Activator-Inhibitor; SD/T = Substrate-Depletion/Toggle. The stylized form is generated by computing the Jacobian matrix for each network. Elements in the Jacobian indicate how each species influences changes in another. Model a) corresponds to model c) in Figure 2 of (Tyson et al. 2003) and model e) to model b) in Figure 2 of (Tyson et al. 2003).

Even though each mechanistic form (first column) in Figure 19 looks different, the stylized forms (second column) fall into one of three types. The stylized forms reflect the structure of the Jacobian for each model. Only a limited number of sign patterns in the Jacobian can yield oscillators (Higgins 1967). Using evolutionary algorithms (Deckard and Sauro 2004; Paladugu et al. 2006), many hundreds of related mechanisms can be generated; see the model repository at www.sys-bio.org for a large range of examples. Although many of these evolved oscillators look quite different, each one can be classified into only a few basic configurations.
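For two-variable systems the restriction on sign patterns follows from the trace and determinant of the Jacobian. Writing the Jacobian as [[a, b], [c, d]], an unstable focus needs a positive trace and complex eigenvalues, that is (a − d)² + 4bc < 0, which is only possible when b and c have opposite signs. The sketch below enumerates the sixteen sign patterns with nonzero entries on that basis. It is a simplified illustration and not Higgins' full analysis, and the two names attached to patterns follow the usual two-variable convention, taken here as an assumption and not read from Figure 19.

```python
from itertools import product


def can_be_unstable_focus(signs):
    """signs = (a, b, c, d), each +1 or -1, for the Jacobian [[a, b], [c, d]]."""
    a, b, c, d = signs
    complex_pair_possible = b * c < 0          # needed for (a - d)^2 + 4bc < 0
    positive_trace_possible = a > 0 or d > 0   # needed for a + d > 0
    return complex_pair_possible and positive_trace_possible


def classify(signs):
    """Label the two patterns in which species 1 is autocatalytic and species 2 decays."""
    if signs == (1, -1, 1, -1):
        return "activator-inhibitor"
    if signs == (1, 1, -1, -1):
        return "substrate-depletion"
    return "other"


def is_unstable_focus(a, b, c, d):
    """Numerical check for a specific Jacobian."""
    trace = a + d
    determinant = a * d - b * c
    return trace > 0 and trace * trace - 4.0 * determinant < 0


if __name__ == "__main__":
    candidates = [s for s in product((1, -1), repeat=4) if can_be_unstable_focus(s)]
    for signs in candidates:
        print(signs, classify(signs))
    # One activator-inhibitor Jacobian with magnitudes chosen by hand.
    print(is_unstable_focus(1.0, -2.0, 2.0, -0.5))
```

Only six of the sixteen patterns survive. The patterns labeled "other" are either the same two motifs with the species relabeled or have both diagonal entries positive.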

4 Summary

This chapter has focused on describing some of the theory that is available to analyze the dynamics of deterministic/continuous models of biochemical networks. Some areas have been omitted. In particular, bifurcation analysis has not been discussed, although it is probably one of the more important tools at our disposal because it can be used to uncover the different qualitative behavioral regimes a network might possess. Bifurcation analysis would require an entire chapter to describe; good starting points include the chapter by Conrad and Tyson (Conrad and Tyson 2006) and the book by Izhikevich (Izhikevich 2007).

The most significant area missing from this chapter is undoubtedly a discussion on stochastic modeling (Wilkinson 2006). As more experimental data becomes available on time series changes in species concentrations, it is becoming abundantly clear that many processes, particularly genetic networks, are noisy. In prokaryotic systems we are often dealing with small numbers of molecules and the stochastic nature of reaction dynamics becomes an important consideration. Unfortunately there is at present little accessible theory on the analysis of stochastic models, which greatly impedes their utility. In almost all cases the analysis of stochastic systems relies exclusively on numeric simulation, which means generalizations are difficult to make. Some researchers have started to consider the theoretical analysis of stochastic systems (Paulsson and Elf 2006; Scott et al. 2006) and the field is probably one of the more exciting areas to consider in the near future.

Reading List

The following lists books and articles which cover the material in this chapter in much more depth.

Introductory and Advanced Texts on Systems Analysis

Fell, D (1996) Understanding the Control of Metabolism, Ashgate Publishing, ISBN: 185578047X

Heinrich R, Schuster S (1996) The Regulation of Cellular Systems. Chapman and Hall. ISBN: 0412032619

Klipp E, et al. (2005) Systems Biology in Practice: Concepts, Implementation and Application. Wiley-VCH Verlag, ISBN: 3527310789

Izhikevich, E. M (2007) Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting, MIT Press, ISBN: 0262090430

Control Theory

Ingalls, BP (2004) A Frequency Domain Approach to Sensitivity Analysis of Biochemical Systems, Journal of Physical Chemistry B, 108, 1143–1152

Bistability and Oscillations

Tyson J, et al. (2003) Sniffers, buzzers, toggles and blinkers: dynamics of regulatory and signaling pathways in the cell. Current Opinion in Cell Biology 15:221–231

Stochastic Modeling

Wilkinson DJ (2006) Stochastic Modeling for Systems Biology. Chapman and Hall. ISBN: 1584885408

Figure 1.

Stoichiometry matrix: N: m × n, where αij is the stoichiometric coefficient. Si denotes the ith species, and vj the jth reaction.

Figure 4.

A simple branched pathway. This pathway has three different fluxes, J1, J2, and J3 which at steady state are constrained by J1 = J2 + J3.

Figure 10.

Low pass frequency response of the simple genetic circuit, Figure 9. The two plots on the left indicate the amplitude and phase response respectively. The three plots on the right show in each case the input signal and corresponding output signal. Each plot on the right was computed at a different frequency, these frequencies are indicated by the marked circles on the plots on the left.

Acknowledgments

I wish to acknowledge Ravishankar R. Vallabhajosyula for assistance in preparing the simulation data and figures for the gene cascade circuits. The preparation of this chapter was supported by generous grants from the NSF (award number CCF-0432190) and NIGMS program 1R01GM081070-01.

Footnotes

A recent and potentially confusing trend has been to use the symbol, S to signify the stoichiometry matrix. The use of the symbol N has however a long tradition in the field, the letter N being used to represent ‘number’, indicating stoichiometry. The symbol, S, is usually reserved for species.

There are rare cases when a ‘conservation’ relationship arises out of a non-moiety cycle. This does not affect the mathematics but only the physical interpretation of the relationship. For example, A → B + C; B + C → D has the conservation, B − C = T.

Possibly inviting the use of the term, ultra-rate-limiting?

References

- Altan-Bonnet G, Germain RN. Modeling T cell antigen discrimination based on feedback control of digital ERK responses. PLoS Biol. 2005;3(11):1925–1938. doi: 10.1371/journal.pbio.0030356.

- Alves R, Savageau MA. Effect of Overall Feedback Inhibition in Unbranched Biosynthetic Pathways. Biophys J. 2000;79:2290–2304. doi: 10.1016/S0006-3495(00)76475-7.

- Arkin AP. Signal Processing by Biochemical Reaction Networks. In: Walleczek J, editor. Self-Organized Biological Dynamics and Nonlinear Control. Cambridge University Press; 2000. pp. 112–144.

- Austin DW, Allen MS, McCollum JM, Dar RD, Wilgus JR, Sayler GS, Samatova NF, et al. Gene network shaping of inherent noise spectra. Nature. 2006;439(7076):608–611. doi: 10.1038/nature04194.

- Bakker BM, Westerhoff HV, Opperdoes FR, Michels PAM. Metabolic control analysis of glycolysis in trypanosomes as an approach to improve selectivity and effectiveness of drugs. Mol Biochem Parasitology. 2000;106:1–10. doi: 10.1016/s0166-6851(99)00197-8.

- Blackman FF. Optima and limiting factors. Ann Botany. 1905;19:281–295.

- Bliss RD, Painter PR, Marr AG. Role of feedback inhibition in stabilizing the classical operon. J Theor Biol. 1982;97(2):177–193. doi: 10.1016/0022-5193(82)90098-4.

- Blüthgen N, Bruggeman FJ, Legewie S, Herzel H, Westerhoff HV, Kholodenko BN. Effects of sequestration on signal transduction cascades. FEBS J. 2006;273(5):895–906. doi: 10.1111/j.1742-4658.2006.05105.x.

- Burns JA. Ph. D. thesis. University of Edinburgh; 1971. Studies on Complex Enzyme Systems. http://www.sys-bio.org/BurnsThesis.

- Burrell MM, Mooney PJ, Blundy M, Carter D, Wilson F, Green J, Blundy KS, et al. Genetic manipulation of 6-phosphofructokinase in potato tubers. Planta. 1994;194:95–101.

- Burton AC. The Basis of the Principle of the Master Reaction in Biology. Journal Cellular and Comparative Physiology. 1936;9(1):1–14.