Abstract

Purpose

Oncologists in academic cancer centers usually generate professional fees that are insufficient to cover salaries and other expenses, despite significant clinical activity; therefore, supplemental funding is frequently required in order to support competitive levels of physician compensation. Relative value units (RVUs) allow comparisons of productivity across institutions and practice locations and provide a reasonable point of reference on which funding decisions can be based.

Methods

We reviewed the clinical productivity and other characteristics of oncology physicians practicing in 13 major academic cancer institutions with membership or shared membership in the National Comprehensive Cancer Network (NCCN). The objectives of this study were to develop tools that would lead to better-informed decision making regarding practice management and physician deployment in comprehensive cancer centers and to determine benchmarks of productivity using RVUs accrued by physicians at each institution. Three hundred fifty-three individual physician practices across the 13 NCCN institutions in the survey provided data describing adult hematology/medical oncology and bone marrow/stem-cell transplantation programs. Data from the member institutions participating in the survey included all American Medical Association Current Procedural Terminology (CPT®) codes generated (billed) by each physician during each organization's fiscal year 2003 as a measure of actual clinical productivity. Physician characteristic data included specialty, clinical full-time equivalent (CFTE) status, faculty rank, faculty track, number of years of experience, and total salary by funding source. The median adult hematologist/medical oncologist in our sample would produce 3,745 RVUs if he/she worked full-time as a clinician (100% CFTE), compared with 4,506 RVUs for a 100% CFTE transplant oncologist.

Results and Conclusion

Our results suggest specific clinical productivity targets for academic oncologists and provide a methodology for analyzing potential factors associated with clinical productivity and for developing clinical productivity targets specific to physicians with a mix of research, administrative, teaching, and clinical salary support.

Introduction

For medical oncologists in academic hospital-based practices, revenues from professional fees alone typically are inadequate to cover the portion of salary consistent with time spent delivering clinical services. Additional sources for clinical salary support are therefore often provided by the health care facility, either as direct compensation or indirectly as academic or administrative program support. Division and department chairs and hospital CEOs alike have an interest in defining reasonable benchmarks of clinical productivity when determining how much direct compensation and supplemental funding are necessary to support clinical practice activities.

Although survey data compiled by the Medical Group Management Association (MGMA) and other organizations provide some guidance,1 very little information is available regarding physician productivity in major National Cancer Institute (NCI)-designated Comprehensive Cancer Centers. The National Comprehensive Cancer Network (NCCN), a not-for-profit corporation established in 1995, is an alliance of 19 major cancer centers (most of them NCI-designated) that maintains clinical care guidelines on all aspects of cancer care and provides a variety of other services to its members. We reviewed the clinical productivity characteristics of oncology physicians practicing in 13 institutions representing 12 NCCN member organizations. The objective of this study was to develop tools that would lead to better-informed decision making regarding practice management and physician deployment in comprehensive cancer centers. Our results suggest specific clinical productivity targets for academic medical oncologists, hematologists, and bone marrow/stem-cell transplant physicians, and provide a methodology for analyzing potential factors associated with clinical productivity.

Methods

Survey Participants and Consultants

Thirteen institutions, representing 12 of the 19 NCCN member organizations, were surveyed regarding selected measures of physician productivity. (Member institutions surveyed were the Fox Chase Cancer Center, Fred Hutchinson Cancer Research Center and University of Washington, H. Lee Moffitt Cancer Center & Research Institute at the University of South Florida, Stanford Hospital & Clinics, Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins, UCSF Comprehensive Cancer Center, University of Michigan Comprehensive Cancer Center, Roswell Park Cancer Institute, University of Alabama at Birmingham Comprehensive Cancer Center, Dana-Farber Cancer Institute, Comprehensive Cancer Center–Arthur G. James Cancer Hospital and Richard J. Solove Research Institute at The Ohio State University, and the University of Nebraska Medical Center. University of Washington and Fred Hutchinson Cancer Research Center were analyzed as separate institutions, although they share a single NCCN membership.) ECG Management Consultants Inc, under the direction of Robert L. Wasserman, was retained to develop the database and to collect and analyze the peer data.

Scope of Survey

The survey instrument was developed by NCCN member representatives on the organization's Best Practices Committee. The instrument contained 12 sections, including both narrative and data elements. The survey was piloted with two institutions over several months, refined with the assistance of the NCCN committee, and distributed via e-mail to member institutions. It was sent to 17 of the 19 NCCN member institutions, yielding a 70.5% response rate. The two institutions not solicited were unable to participate because their physicians were not employees of the institutions. Four institutions did not respond, two of which have physician counts at the higher end of the range of survey participants. Their participation would have strengthened the survey results, although we are not aware of any bias introduced by their nonresponse.

Three hundred fifty-three individual physician practices (79 transplant and 274 oncology/hematology physicians) across the 13 institutions in the 2003 survey provided data describing adult hematology/medical oncology and bone marrow/stem-cell transplantation programs. Data from the member institutions participating in the survey included all CPT codes generated (billed) by each physician during each organization's 2003 fiscal year as a measure of actual clinical productivity, together with physician characteristic data including specialty, clinical full-time equivalent (CFTE) status (defined later and in Table 1), faculty rank, faculty track, number of years of experience, and total salary by funding source. Institutional characteristics, including admissions and inpatient days, were also provided. In addition, the survey included the number of physician extenders assigned to each specialty service, including fellows, residents, physician assistants, nurse practitioners, and other nursing personnel.

Table 1.

RVUs and CFTEs

| Term | Definition | Productivity Index |
|---|---|---|
| Work RVU | Standardized RVU representing physician work and excluding other RVU measurements such as malpractice and practice expense | |
| CFTE | Portion of time physician spends in billable inpatient and outpatient activities (eg, clinical sessions) | RVU/CFTE: productivity per assigned time spent in clinical work (measures efficiency) |
| Funded CFTE | Portion of total salary funded through professional fees or other clinical support dollars | RVU/funded CFTE: productivity per clinical dollars attributed to salary support (measures productivity in context of other salary support, eg, research grants, etc) |

Abbreviations: RVU, relative value units; CFTE, clinical full-time equivalent.

Calculations of Relative Value Units

Relative value units (RVUs) measure the time and nontime inputs required to perform physician services. By assigning weights to factors such as personnel time, level of skill, stress level, and sophistication of the equipment required to render a service, they permit comparison of the resources consumed by different services.2–5 Medicare RVUs published by the federal Centers for Medicare & Medicaid Services (CMS) were used in this study. Under the Medicare system, RVUs are assigned to three major components of each service or procedure: physician work (work RVUs), practice expense, and malpractice expense. Work RVUs measure physician effort/output and increase with the intensity of the service provided. For example, the work RVU value for a new patient visit (CPT code 99203) is 1.34, while that for intracavitary chemotherapy (CPT code 96445) is 2.20.

RVU data were collected at the physician level using a common set of data element definitions. RVUs were calculated from CPT codes using a common relative value scale for all respondents to allow comparison across institutions. Because relative value scales change over time, longitudinal comparisons should likewise use a common relative value scale for all study periods. Work RVUs were calculated for each physician by assigning fiscal year 2003 Medicare RVUs (published annually in the Federal Register) to the CPT activity reported for that physician.
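The per-physician calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the survey's actual tooling. The two work RVU values are the ones quoted earlier for CPT 99203 and 96445. `WORK_RVU_SCALE` and `total_work_rvus` are hypothetical names, and a real analysis would load the complete fiscal year 2003 Medicare scale rather than this two-entry excerpt.

```python
from collections import Counter

# Work RVU values quoted in the text (FY2003 Medicare scale). A real analysis
# would load the complete published scale instead of this two-entry excerpt.
WORK_RVU_SCALE = {
    "99203": 1.34,  # new patient office visit
    "96445": 2.20,  # intracavitary chemotherapy
}


def total_work_rvus(billed_codes, scale=WORK_RVU_SCALE):
    """Sum work RVUs for one physician's billed CPT codes.

    Every physician is valued against the same scale so that totals are
    comparable across institutions. A code missing from the scale raises
    KeyError instead of being silently dropped.
    """
    counts = Counter(billed_codes)
    return sum(scale[code] * n for code, n in counts.items())


# Hypothetical physician: ten new-patient visits and two intracavitary treatments.
codes = ["99203"] * 10 + ["96445"] * 2
print(round(total_work_rvus(codes), 2))  # 17.8
```

Keying every physician's activity to one shared table is what makes the totals comparable. Valuing some respondents' codes with a different year's table would break that comparability.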

Under the Medicare relative value system, certain modifiers applied to CPT codes increase or decrease the assigned level of physician effort. A CPT modifier is a two-digit suffix to a CPT service or procedure code indicating that the service or procedure was altered in some way. For example, it may indicate that the service consisted of only a professional or technical component, that it was performed bilaterally (eg, bilateral bone marrow biopsy), or that it was extended or reduced. Where applicable, RVUs used in this study were adjusted to reflect the impact of CPT modifiers (Appendix 1 explains the modifier adjustments).

CFTEs

CFTEs based on clinical time, as defined in this survey, represented the portion of assigned time each physician spent in inpatient and outpatient billable activities (eg, four half-day clinics per week coupled with 4 weeks of inpatient service per year). This definition included any time spent with residents and other students directly related to patient care. It did not include clinical activity for which a patient bill was not generated (eg, telephone triage). “CFTE based on clinical funding,” or funded CFTE, represented the portion of total salary funded through professional fees or other clinical support dollars. These measures provided two different units of input against which units of output (RVUs) were compared to generate productivity indicators (Table 1).
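The two productivity indicators in Table 1 are simple ratios of output (work RVUs) to the two input measures. The Python sketch below assumes that CFTE and funded CFTE are expressed as fractions of a full-time position. The `Physician` class, the function names, and the sample physician are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Physician:
    work_rvus: float    # annual work RVUs after modifier adjustments
    cfte: float         # share of time in billable clinical activity (0-1)
    funded_cfte: float  # share of salary paid from clinical sources (0-1)


def rvu_per_cfte(p: Physician) -> float:
    """Efficiency: work RVUs per unit of time actually spent in clinical work."""
    return p.work_rvus / p.cfte


def rvu_per_funded_cfte(p: Physician) -> float:
    """Work RVUs per unit of salary attributed to clinical sources."""
    return p.work_rvus / p.funded_cfte


# Hypothetical physician: 2,000 work RVUs, 0.5 CFTE, 60% of salary from clinical sources.
doc = Physician(work_rvus=2000, cfte=0.5, funded_cfte=0.6)
print(rvu_per_cfte(doc))                # 4000.0
print(round(rvu_per_funded_cfte(doc)))  # 3333
```

Reading the two numbers together is the point. This physician's per-funded-CFTE figure is lower than the efficiency figure because clinical sources fund a larger share of salary (0.6) than the share of time actually spent in billable work (0.5).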

Validation of Data

Project staff worked with each participating institution to validate reported clinical effort. Actual RVU production for each physician was compared against his or her reported CFTE. Institution-specific outliers were defined as physicians whose inpatient or outpatient RVU production per CFTE was more than three standard deviations above or below the institutional mean. Clinical managers reviewed each outlier and were able to recharacterize the clinical effort of most of these physicians, primarily because time scheduled at the beginning of the academic year may not have reflected actual effort. Physicians whose variance could not be explained (13 of 366; 3.6%) were excluded from the survey. The average number of physician providers per institution, across specialties, was 13, with a range of two to 46.
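The outlier screen described above can be sketched in Python as follows. This is a minimal version under stated assumptions. The survey does not say whether the population or the sample standard deviation was used, so the sketch assumes the sample standard deviation. `flag_outliers` and the example institution are hypothetical.

```python
import statistics


def flag_outliers(rvu_per_cfte_by_md, k=3.0):
    """Return the IDs of physicians whose RVU/CFTE lies more than k standard
    deviations above or below their institution's mean.

    Uses the sample standard deviation (an assumption; the survey does not name
    the estimator). Run it once per institution, separately for inpatient and
    outpatient RVUs.
    """
    values = list(rvu_per_cfte_by_md.values())
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # requires at least two physicians
    return sorted(md for md, v in rvu_per_cfte_by_md.items() if abs(v - mean) > k * sd)


# Hypothetical institution: 19 physicians at 4,000 RVU/CFTE and one at 9,000.
institution = {f"md{i:02d}": 4000 for i in range(19)}
institution["md19"] = 9000
print(flag_outliers(institution))  # ['md19']
```

The rule itself has a limitation. With the sample standard deviation, no value can lie more than (n - 1)/sqrt(n) standard deviations from its group mean. As a result, a 3-SD threshold cannot be crossed in a comparison group of 10 or fewer physicians.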

Statistical Analysis

Clinical productivity was summarized by institution as the median value among practicing physicians at each institution. Correlations between institutional productivity and amount of nonattending provider support (midlevel providers, fellows, residents) were evaluated using Pearson correlation coefficients. Comparisons of productivity between institutions with and without incentive-based compensation were evaluated using t tests.

Results

Physician Characteristics

Table 2 presents a summary of physicians' faculty rank, years of experience, and faculty track. Physicians who spent more than 50% of their time in clinical activities (billable or nonbillable) were assumed to be on a clinician teacher faculty track for the purpose of this analysis. Those with more than 50% time spent in research-related activities were assumed to be on a physician scientist track.

Table 2.

Distribution of Faculty by Rank, Experience, and Faculty Track

| Characteristic | N | % of Total* | CFTE† | % of Total†‡ | CFTE/N (%) |
|---|---|---|---|---|---|
| Faculty rank | | | | | |
| Instructor | 51 | 14.4 | 26.5 | 15.5 | 51.8 |
| Assistant professor | 134 | 38.0 | 71.1 | 41.7 | 53.1 |
| Associate professor | 90 | 25.5 | 43.0 | 25.2 | 47.8 |
| Professor | 74 | 21.0 | 28.5 | 16.7 | 38.5 |
| Department chair | 4 | 1.1 | 1.6 | 0.9 | 40.0 |
| Total faculty | 353 | 100.0 | 170.5 | 100.0 | 48.3 |
| Years of experience | | | | | |
| Less than 2 years | 38 | 10.8 | 19.2 | 11.3 | 50.5 |
| More than 2 years | 315 | 89.2 | 151.3 | 88.7 | 48.0 |
| Total | 353 | 100.0 | 170.5 | 100.0 | 48.3 |
| Faculty track | | | | | |
| Clinician teacher | 174 | 49.3 | 121.7 | 68.2 | 69.9 |
| Physician scientist | 150 | 42.5 | 36.9 | 24.6 | 24.6 |
| Other | 29 | 8.2 | 12.2 | 7.2 | 42.1 |
| Total | 353 | 100.0 | 170.5 | 100.0 | 48.3 |

* Based on total number of faculty.

† Clinical full-time equivalent (CFTE) positions based on time spent performing clinical work.

‡ Based on total number of CFTE.

The majority of physicians were adult medical oncologists or hematologists (274 of 353). Bone marrow/stem-cell transplant physicians represented fewer than one-quarter (79 of 353) of those surveyed. Faculty were distributed among the instructor, assistant, associate, and full professor ranks, and nearly 90% had more than 2 years of clinical faculty experience. Using these definitions, almost 50% of the physicians were designated clinician teachers and approximately 43% were designated physician scientists. Faculty members who met neither definition (more than 50% research time to qualify as a physician scientist, or more than 50% clinical time to qualify as a clinician teacher) may have divided their time differently (eg, with more didactic teaching). In a few cases, a division had no explicit “track” and may have been reluctant to apply our standardized definitions.

In Table 2, the ratio CFTE/N is computed by taking each physician's percentage of time spent in direct clinical activities, summing these percentages for all faculty in a given rank, and dividing by the number of physicians in that rank. For example, in aggregate, instructors in this survey spent 51.8% of their time in clinical activities.
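The CFTE/N calculation amounts to summing the clinical fractions and dividing by headcount. The Python sketch below shows this with a hypothetical group of four physicians and then recomputes the ratio from Table 2's aggregate columns for three ranks. For those ranks it reproduces the table's 53.1, 47.8, and 38.5. `cfte_ratio_pct` is an illustrative name.

```python
def cfte_ratio_pct(cfte_fractions):
    """CFTE/N as a percentage: sum each physician's clinical fraction for the
    group, then divide by the number of physicians in the group."""
    return 100 * sum(cfte_fractions) / len(cfte_fractions)


# Hypothetical group of four physicians at 0.4, 0.5, 0.6, and 0.6 CFTE.
print(round(cfte_ratio_pct([0.4, 0.5, 0.6, 0.6]), 1))  # 52.5

# The same ratio recomputed from Table 2's aggregate columns (summed CFTE, N).
table2 = {
    "Assistant professor": (71.1, 134),
    "Associate professor": (43.0, 90),
    "Professor": (28.5, 74),
}
for rank, (cfte_sum, n) in table2.items():
    print(rank, round(100 * cfte_sum / n, 1))
```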

Nonattending Providers

Nonattending providers included physician assistants (PAs), nurse practitioners (NPs), nurse case managers/clinical nurse specialists, fellows, and residents. As presented in Table 3, PAs were used most heavily by stem-cell transplantation programs and residents by medical oncology services. Fellows and NPs were used at similar mean rates by both services. Clinical nurse specialists and nurse case managers were used by both services, although more heavily in transplantation (0.55 vs 0.10 per 1.0 faculty CFTE).

Table 3.

Utilization of Nonattending Providers (Mean Nonattending Providers per 1.0 Faculty CFTE)

| Specialty | Residents | Fellows | Physician Assistants | Nurse Practitioners | Clinical Nurse Specialists/Case Managers |
|---|---|---|---|---|---|
| Adult hematology/medical oncology | 0.27 | 0.74 | 0.03 | 0.35 | 0.10 |
| Institutional range | 0.11–0.86 | 0.03–0.86 | 0.0–0.2 | 0.03–1.22 | 0.0–0.81 |
| Adult bone marrow/stem-cell transplantation | 0.05 | 0.72 | 0.66 | 0.41 | 0.55 |
| Institutional range | 0.0–0.28 | 0.0–5.63 | 0.0–2.18 | 0.0–2.6 | 0.0–1.99 |

Abbreviation: CFTE, clinical full-time equivalent.

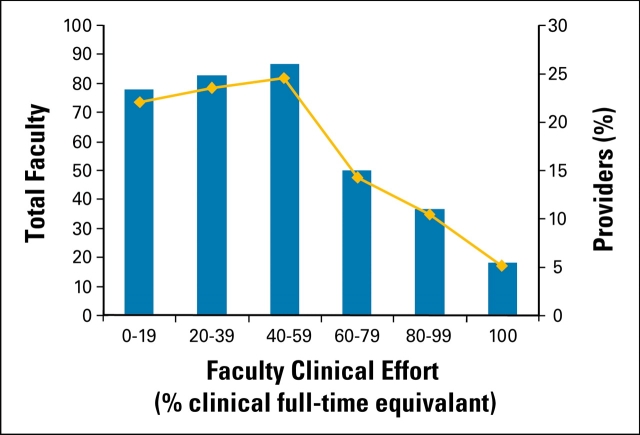

The distribution of time spent performing billable clinical activities is shown in Figure 1. Approximately 70% of physicians in this survey spent less than 60% of their time performing billable clinical activities.

Figure 1.

Percentage of time spent in billable clinical activity.

Production and Compensation Findings

Aggregate production and compensation findings for the two specialties are summarized in Table 4 under five summary-level measures.

Table 4.

Aggregate Productivity and Compensation

| Median Statistics for All Faculty | Adult Hematology/Medical Oncology | Adult Bone Marrow/Stem-Cell Transplantation |
|---|---|---|
| Work RVUs per 1.0 CFTE | | |
| Median | 3,745 | 4,506 |
| Institutional range | 2,421–7,465 | 2,481–7,118 |
| Inpatient work RVUs per 1.0 CFTE | | |
| Median | 5,016 | 6,655 |
| Institutional range | 3,700–11,463 | 1,634–11,311 |
| Outpatient work RVUs per 1.0 CFTE | | |
| Median | 3,438 | 3,259 |
| Institutional range | 1,936–6,411 | 1,598–5,927 |
| Work RVUs per 1.0 FTE paid from clinical sources | | |
| Median | 4,567 | 4,731 |
| Institutional range | 2,602–7,615 | 2,747–13,174 |
| Faculty salary | | |
| Median, $ | 151,368 | 160,344 |
| Institutional range, $ | 122,500–201,410 | 105,000–223,095 |

Abbreviations: RVU, relative value units; CFTE, clinical full-time equivalent.

Work RVUs per 1.0 CFTE reflect the “efficiency” of practice (ie, the number of work RVUs generated per unit of time actually spent in clinical care). Work RVUs per 1.0 FTE (full-time equivalent [position]) paid from clinical sources reflect the number of RVUs generated for the “expected time” in clinical service. This is the time that remains after salary support from research grants, endowments, or administrative sources is subtracted from 100% FTE. As presented in Table 4, the median adult hematologist/medical oncologist would produce 3,745 RVUs if he or she worked full-time as a clinician, while a similar physician focusing on transplantation would produce 4,506 RVUs. The wide institutional variation partly reflects the small number of physicians in some categories (eg, transplantation at a particular institution). It may also relate to differing institutional standards of expected productivity or to differences among physicians in CPT coding practices.

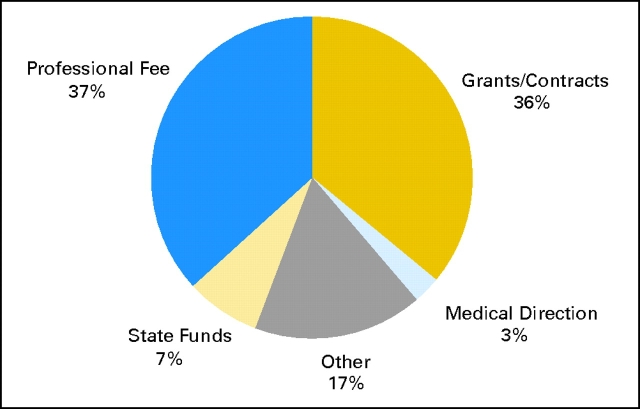

Faculty Salary Funding Source

Figure 2 shows the distribution of faculty salaries according to funding source. Nonclinical funding varied across the sample population, and this variation may have contributed to the substantial differences observed in clinical productivity per faculty member. For example, faculty members A and B may have produced the same total work RVUs. If faculty member A had more grant support, however, less of his or her salary would need to be supported by clinical revenues. The resulting lower funded CFTE would give faculty member A a higher RVU productivity per funded CFTE (normalized to 1.0 CFTE). “Other” may include support from endowments, fixed institutional support, and so on.

Figure 2.

Distribution of faculty salaries according to funding source.
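The faculty member A and B example can be made concrete with a short Python sketch. It assumes that funded CFTE equals 1.0 minus the shares of salary paid from nonclinical sources, following the “expected time” definition used for Table 4. The function names and both faculty members' figures are hypothetical.

```python
def funded_cfte(grant=0.0, admin=0.0, other=0.0):
    """Share of salary expected from clinical sources: 1.0 minus the shares
    funded by research grants, administrative roles, and other sources."""
    share = 1.0 - (grant + admin + other)
    if share <= 0:
        raise ValueError("no salary share remains for clinical sources")
    return share


def rvus_per_funded_cfte(work_rvus, **nonclinical_shares):
    """Normalize total work RVUs to 1.0 funded CFTE."""
    return work_rvus / funded_cfte(**nonclinical_shares)


# Faculty members A and B (hypothetical) each produce 3,000 work RVUs.
a = rvus_per_funded_cfte(3000, grant=0.5)  # A: 50% grant support
b = rvus_per_funded_cfte(3000, grant=0.2)  # B: 20% grant support
print(round(a), round(b))  # 6000 3750
```

The same output therefore looks very different once it is normalized to the salary that clinical revenues are expected to cover. This is why the funding mix in Figure 2 matters when productivity is compared.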

Effects of Faculty Rank and Track and Incentive-Based Compensation Plans

There were no significant differences in productivity according to faculty rank or track, either within any institution or in aggregate (data not shown). Productivity at the five institutions with incentive plans did not differ significantly from productivity at institutions without incentive plans.

Effects of Nonattending Providers

An analysis was undertaken to examine the relationship between clinical productivity (work RVUs per 1.0 CFTE) and two institutional characteristics. For this purpose, the clinical productivity of an institution was represented as the median productivity value among its participating physicians. The institutional characteristics were defined by the amount of nonattending provider support available (expressed as FTEs of nonattending provider support per FTE of physician clinical effort).

These relationships were evaluated for total, inpatient, and outpatient productivity within each specialty and for oncology as a whole. No statistically significant associations were found, although there was a suggestion of a trend toward higher inpatient productivity with greater inpatient nonattending provider support. Because of the small sample size, we cannot conclude that these factors do not influence institutional or individual productivity.

Productivity Data at the Evaluation and Management CPT Code Level

Table 5 (online only) presents aggregate productivity data at the Evaluation and Management (E&M) CPT code level for both specialties. In general, bone marrow/stem-cell transplant physicians more frequently billed the higher-level E&M codes for outpatient consultations and subsequent hospital (inpatient) care. For example, E&M level 3 (99233), the highest level of subsequent hospital care, accounted for 51.3% (institutional range, 9.7% to 93.3%) of these visits in oncology and hematology, versus 70.0% (range, 0.3% to 99.3%) in bone marrow/stem-cell transplantation. This may reflect differences in the acuity of care between the two inpatient services.

Table 5.

Weighted Average Distribution of Charges by CPT Code Office Visits

| CPT Code | Adult Hematology/Medical Oncology, Mean (%) | Adult Hematology/Medical Oncology, Institutional Range (%)* | Adult Bone Marrow/Stem-Cell Transplantation, Mean (%) | Adult Bone Marrow/Stem-Cell Transplantation, Institutional Range (%)* |
|---|---|---|---|---|
| New office/outpatient visits | | | | |
| 99201 | 0.4 | 0.0–.3 | 0.5 | 0.0–1.6 |
| 99202 | 1.8 | 0.0–34.1 | 1.7 | 0.0–13.2 |
| 99203 | 6.5 | 0.3–50.6 | 8.9 | 0.0–22.6 |
| 99204 | 23 | 19.1–52.3 | 17.5 | 7.4–41.0 |
| 99205 | 68.1 | 10.4–89.9 | 71.4 | 41.0–92.6 |
| Mean count across all institutions | 651 | | 83 | |
| Established office/outpatient visits | | | | |
| 99211 | 2.1 | 0.0–8.9 | 0.9 | 0.0–4.6 |
| 99212 | 2.0 | 0.5–8.5 | 2.0 | 0.1–13.9 |
| 99213 | 20.7 | 3.6–61.5 | 15.7 | 0.6–75.6 |
| 99214 | 52.8 | 23.9–65.5 | 52.6 | 4.7–90.2 |
| 99215 | 22.5 | 0.5–44.2 | 28.8 | 61.0–99.1 |
| Mean count across all institutions | 13,162 | | 2,904 | |
| Total office/outpatient visits | | | | |
| New | 4.7 | 1.9–9.4 | 2.4 | 0.2–5.8 |
| Established | 95.3 | 90.6–98.1 | 97.6 | 94.2–99.8 |
| Consults | | | | |
| Outpatient consults | | | | |
| 99241 | 0.3 | 0.0–0.7 | 0.4 | 0.0–1.5 |
| 99242 | 0.8 | 0.0–2.2 | 0.6 | 0.0–2.4 |
| 99243 | 4.7 | 0.4–10.7 | 2.3 | 0.0–7.3 |
| 99244 | 17.9 | 2.6–90.6 | 10.9 | 0.7–33.7 |
| 99245 | 76.4 | 3.1–96.8 | 85.8 | 61.0–99.1 |
| Mean count across all institutions | 1,471 | | 238 | |
| Initial inpatient consults | | | | |
| 99251 | 3.2 | 0.0–9.4 | 2.6 | 0.0–10.5 |
| 99252 | 7.8 | 0.0–38.8 | 9.0 | 0.0–26.7 |
| 99253 | 19.0 | 5.8–55.8 | 23.9 | 0.0–60. |
| 99254 | 34.1 | 2.0–75.9 | 11.6 | 0.0–36.8 |
| 99255 | 35.8 | 2.0–76.7 | 52.9 | 3.3–100 |
| Mean count | 217 | | 10 | |
| Follow-up consults | | | | |
| 99261 | 18.6 | 9.7–21.2 | 6.7 | |
| 99262 | 63.3 | 51.6–71.6 | 86.7 | |
| 99263 | 18.1 | 8.0–38.7 | 6.7 | |
| Mean count across all institutions | 14 | | 2 | |
| Confirmatory consults | | | | |
| 99271 | 0.5 | 0.0–2.9 | 1.9 | 0.0–6.1 |
| 99272 | 0.7 | 0.0–3.1 | 0.0 | 0.0–0.0 |
| 99273 | 3.7 | 0.0–7.6 | 2.9 | 0.0–4.8 |
| 99274 | 15.8 | 3.6–57.1 | 2.9 | 0.0–6.1 |
| 99275 | 79.2 | 34.3–95.9 | 92.2 | 84.8–100 |
| Mean count across all institutions | 238 | | 11 | |
| Consults, all categories | | | | |
| Outpatient | 75.8 | 54.8–100 | 90.8 | 64.1–100 |
| Initial inpatient | 11.2 | 0.0–30.7 | 5.2 | 0.0–15.5 |
| Follow-up | 0.7 | 0.0–4.8 | 0.5 | 0.0–15.3 |
| Confirmatory | 12.3 | 0.0–27.2 | 3.5 | 0.0–32.8 |
| Inpatient care | | | | |
| Initial hospital care | | | | |
| 99221 | 11.5 | 0.0–73.4 | 12.2 | 0.0–40.1 |
| 99222 | 16.4 | 0.9–52.4 | 12.0 | 0.0–83.3 |
| 99223 | 72.1 | 21.4–97.6 | 75.8 | 16.7–99.8 |
| Mean count | 824 | | 216 | |
| Subsequent hospital care | | | | |
| 99231 | 5.2 | 0.0–15.1 | 1.2 | 0.0–32.6 |
| 99232 | 43.5 | 6.5–84.9 | 28.8 | 0.5–99.7 |
| 99233 | 51.3 | 9.7–93.3 | 70.0 | 0.3–99.3 |
| Mean count across all institutions | 5,729 | | 3,090 | |
| Prolonged physician service | | | | |
| 99354 | 45.6 | | 35.2 | |
| 99355 | 22.1 | | 17.2 | |
| 99356 | 32.4 | | 44.5 | |
| 99357 | 0.0 | | 3.1 | |
| Mean count across all institutions | 5 | | 38 | |

Abbreviations: CPT, American Medical Association Current Procedural Terminology.

* Institutional range for institutions with at least 10 visits (not given if number of institutions less than three).

Current Procedural Terminology (CPT) is copyright 2005 American Medical Association. All Rights Reserved. No fee schedules, basic units, relative values, or related listings are included in CPT. The AMA assumes no liability for the data contained herein. Applicable FARS/DFARS restrictions apply to government use.
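Table 5's footnote implies two summaries per code: a weighted mean across institutions, and a range restricted to institutions with at least 10 visits that is omitted when fewer than three institutions qualify. The Python sketch below is one reading of that footnote, not the survey's documented method. It assumes that the weighted mean pools visit counts, so that high-volume institutions weigh more, and that the 10-visit threshold applies to the code family as a whole. `pooled_and_range` and the counts are hypothetical.

```python
def pooled_and_range(counts_by_institution, code, min_visits=10, min_institutions=3):
    """Weighted (pooled) share of one CPT code within a code family, plus the
    range of per-institution shares.

    counts_by_institution maps institution -> {cpt_code: count} for a single
    family (eg, 99231-99233). Only institutions with at least `min_visits`
    visits enter the range, and the range is None when fewer than
    `min_institutions` institutions qualify.
    """
    totals = {inst: sum(c.values()) for inst, c in counts_by_institution.items()}
    code_total = sum(c.get(code, 0) for c in counts_by_institution.values())
    pooled_pct = 100 * code_total / sum(totals.values())
    shares = [
        100 * c.get(code, 0) / totals[inst]
        for inst, c in counts_by_institution.items()
        if totals[inst] >= min_visits
    ]
    rng = (min(shares), max(shares)) if len(shares) >= min_institutions else None
    return pooled_pct, rng


# Hypothetical subsequent-hospital-care counts for four institutions.
counts = {
    "A": {"99231": 5, "99232": 45, "99233": 50},
    "B": {"99232": 10, "99233": 30},
    "C": {"99232": 3, "99233": 2},  # fewer than 10 visits: excluded from the range
    "D": {"99231": 2, "99232": 8, "99233": 10},
}
pooled, rng = pooled_and_range(counts, "99233")
print(round(pooled, 1), rng)  # 55.8 (50.0, 75.0)
```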

Discussion

Oncologists in academic practices frequently are prohibited from billing for the technical components of chemotherapy, laboratory, and radiology procedures, unlike physicians in private office practices. Therefore, revenues from professional fees alone typically are inadequate to cover the clinical portion of their compensation. Our study provides strong support for the concepts that RVUs can be used to develop comparative measures of oncologist clinical productivity and that such measures can play a reasonable role in decisions regarding appropriate levels of institutional salary support.

Our results demonstrate that it is possible to measure clinical productivity in two ways, using RVUs/CFTE and RVUs/funded CFTE. Productivity per funded CFTE measures clinical output per unit of compensation derived from clinical sources. A low number might indicate a variety of causes ranging from inefficiency in practice to the use of clinically derived compensation to support nonclinical academic activities. A high number might be indicative of high practice efficiency, generous funding from other sources, or perhaps a misallocation of resources. Coupling this measure with measures of productivity per actual CFTE (ie, actual efficiency of practice) allows the analyst to begin the process of discriminating among competing explanations and devising appropriate remedial actions. A key component of the analytic process is the availability of benchmark or normative productivity measures against which individual institutional findings can be compared.

Based on our study, it appears practical to create and maintain the necessary productivity benchmarks. We have established that reasonable annual median numbers of work RVUs for a full-time equivalent practicing academic physician are 3,745 for adult hematology/oncology and 4,506 for bone marrow/stem-cell transplant physicians. When productivity is defined as RVUs per full-time equivalent paid from clinical sources, the median values shift to 4,567 and 4,731 RVUs, respectively. Inpatient work appears to generate more RVUs per unit of clinical time than outpatient work. Although our data are persuasive, there is wide variation among the surveyed institutions. This variation may relate to small numbers of physicians in a particular practice (eg, stem-cell transplantation), differences in expected productivity, variations in the use of E&M codes, and other institutional idiosyncrasies. Using our model, RVU targets can be “fitted” to generate a required number of clinics, clinic visits, and/or inpatient rotations. For example, a hematologist/oncologist with 40% salary support from research and 10% from administrative sources needs 50% of salary from clinical sources. Applying that 50% to the median of 4,567 RVUs per FTE paid from clinical sources gives an annual target of 2,283 RVUs. Translated into CPT-coded activity, one possible way to meet this target would be 2 months of inpatient service plus 2.5 days of outpatient clinic per week, with three new patients and 15 follow-up patients per clinic day. There are, of course, many ways to schedule clinical activities to meet an RVU target. The key point is that a relatively abstract concept such as an RVU target can easily be converted into measures of clinical activity that practicing physicians readily understand.
With productivity targets established and agreed on, it becomes a simple matter to estimate both physician compensation support likely to be derived from clinical practice and the amount of any necessary supplemental institutional funding.
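The target arithmetic in the worked example above can be written out as a short Python sketch. `annual_rvu_target` and `annual_rvus_from_schedule` are hypothetical helpers. The 4,567 benchmark and the 40%/10% salary split come from the text. The per-visit RVU weights needed to turn a target into clinic sessions are not given in this article, so the schedule function takes them as inputs rather than assuming values.

```python
import math


def annual_rvu_target(benchmark_rvus_per_clinical_fte, research_pct=0, admin_pct=0, other_pct=0):
    """RVU target = benchmark x share of salary expected from clinical sources.

    Percentages are integers to keep the arithmetic exact. The result is
    rounded down, which reproduces the 2,283 figure in the text.
    """
    clinical_pct = 100 - research_pct - admin_pct - other_pct
    return math.floor(benchmark_rvus_per_clinical_fte * clinical_pct / 100)


def annual_rvus_from_schedule(clinic_days_per_week, clinic_weeks, new_per_day, followup_per_day,
                              rvu_per_new, rvu_per_followup, inpatient_rvus):
    """Estimate annual work RVUs for a proposed schedule. The per-visit RVU
    weights and inpatient RVUs must come from the institution's own coding
    mix; none are assumed here."""
    per_day = new_per_day * rvu_per_new + followup_per_day * rvu_per_followup
    return clinic_days_per_week * clinic_weeks * per_day + inpatient_rvus


# Worked example from the text: 40% research and 10% administrative support,
# benchmarked against the 4,567 median RVUs per FTE paid from clinical sources.
print(annual_rvu_target(4567, research_pct=40, admin_pct=10))  # 2283
```

A proposed schedule can then be checked by comparing `annual_rvus_from_schedule(...)` with the target and adjusting clinic days, visit mix, or inpatient months until the two agree.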

Our study documents patterns of physician productivity, but does not evaluate the influence of reported productivity on cost per unit of service or per episode of care. This area needs further study. For example, it may be possible to achieve high levels of physician productivity by overbuilding or overstaffing facilities, but if this does not lead to lower average total costs, the process can be self-defeating.6 Various models have been proposed to link resources with productivity.7

We did evaluate the number of nonattending providers per program (not per physician), but could draw no conclusions about their effects on productivity. Well-trained nonattending providers can render outstanding care and, if used effectively, might independently contribute to enhanced overall clinical productivity.

Although our study did not address this, links between physician productivity targets and compensation seem to be most effective for increasing overall productivity.8 Some institutions that have implemented incentive programs that reward both individual and group productivity have seen a high degree of physician satisfaction.6,9 With missions that often extend beyond clinical care, a variety of academic centers include other measures of productivity (eg, teaching).10 Since multiple departments and the hospital often compete for resources with the cancer center, the organizational structure of an institution may play an important role. Within the cancer center, the establishment of a clear line of administrative authority, a unified budget and aligned financial incentives among all stakeholders (departments, hospital, cancer center), and physician accountability for their effort (RVUs, grants) within the center may serve to maximize financial and academic success for the entire institution.11 Future studies should focus on the impact of “incentives” to optimize productivity.

The validity of RVUs as a measure of productivity is dependent on accuracy in the clinical documentation and coding process, which also has important implications for legal and regulatory compliance. Information of the type generated in this survey can be used to identify physicians who display unusual coding patterns and analyze their practices for under- and overcoding. Incorporation of these concepts into a billing compliance program may, in addition to enhancing measurements of physician productivity, yield the additional benefit of improving coding accuracy and billing compliance.11,12
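As an illustration of the compliance screening described above, the Python sketch below flags physicians whose share of top-level visits in one E&M family falls outside a peer range. The function names, the physicians, and the 30% to 85% peer range are all hypothetical. A flag only marks a practice for documentation review and is not by itself evidence of under- or overcoding.

```python
from collections import Counter


def top_level_share(codes, family, top_code):
    """Percentage of a physician's visits in one E&M family billed at top_code."""
    in_family = Counter(c for c in codes if c in family)
    total = sum(in_family.values())
    return 100 * in_family[top_code] / total if total else 0.0


def unusual_coders(codes_by_md, family, top_code, peer_low, peer_high):
    """Return {physician: share} for physicians whose top-level share falls
    outside the peer range, as candidates for documentation review."""
    flagged = {}
    for md, codes in codes_by_md.items():
        share = top_level_share(codes, family, top_code)
        if not peer_low <= share <= peer_high:
            flagged[md] = round(share, 1)
    return flagged


family = ("99231", "99232", "99233")  # subsequent hospital care
codes_by_md = {
    "dr_x": ["99232"] * 40 + ["99233"] * 60,                  # 60% top level
    "dr_y": ["99233"] * 98 + ["99232"] * 2,                   # 98% top level
    "dr_z": ["99231"] * 30 + ["99232"] * 65 + ["99233"] * 5,  # 5% top level
}
print(unusual_coders(codes_by_md, family, "99233", peer_low=30.0, peer_high=85.0))
# {'dr_y': 98.0, 'dr_z': 5.0}
```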

In summary, major academic cancer centers need good management reporting systems to track and manage physician productivity and billing compliance, and benchmarks based on comparative reporting of RVUs can play an important role in the process. Well-defined and generally accepted productivity targets (measured in RVUs per clinical FTE or RVUs per FTE derived from clinical sources) can be important tools in managing practice efficiency, and help provide a rational basis on which to make resource allocation decisions, particularly in deciding appropriate levels of faculty salary support.

Authors' Disclosures of Potential Conflicts of Interest

Although all authors completed the disclosure declaration, the following authors or their immediate family members indicated a financial interest. No conflict existed for drugs or devices used in a study if they are not being evaluated as part of the investigation.

| Authors | Employment | Leadership | Consultant | Stock | Honoraria | Research Funds | Testimony | Other |
|---|---|---|---|---|---|---|---|---|
| Marcy Waldinger | University of Michigan CCC | Stanford External Advisory Board; Dana-Farber External Advisory Board | | | | | | |


Acknowledgment

We thank Jennifer Scarborough for her help with this manuscript. The authors also thank the thirteen institutions that participated in the study: Fox Chase Cancer Center, Fred Hutchinson Cancer Research Center, H. Lee Moffitt Cancer Center & Research Institute at the University of South Florida, Stanford Hospital & Clinics, Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins, UCSF Comprehensive Cancer Center, University of Michigan Comprehensive Cancer Center, University of Washington, Roswell Park Cancer Institute, University of Alabama at Birmingham Comprehensive Cancer Center, Dana-Farber Cancer Institute, Comprehensive Cancer Center–Arthur G. James Cancer Hospital and Richard J. Solove Research Institute at The Ohio State University, and the University of Nebraska Medical Center.

Appendix 1: Explanation of Modifier Adjustments

The following modifier adjustments were applied, expressed as modifier/percentage of the unmodified RVU value: 21/125%, 22/125%, 47/25%, 50/150%, 51/50%, 52/50%, 53/50%, and 80/20%. After the RVU components were adjusted, work RVUs were summed for each physician. Most physicians worked less than full-time clinically, and some were employed for less than 1 year. Therefore, each physician's RVU total was divided by his or her CFTE to arrive at the equivalent production for a 1.0 CFTE.
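The appendix procedure can be sketched as follows. The sketch assumes that each billed line carries at most one of the listed modifiers and that lines without a listed modifier count at 100%. It also assumes that base work RVUs for each CPT code have already been looked up, for example from the Medicare physician fee schedule. The data layout and function names are hypothetical, and the RVU values in the example are made up for illustration.

```python
from collections import defaultdict

# Percentage of the unmodified RVU credited for each CPT modifier (Appendix 1).
MODIFIER_FACTOR = {
    "21": 1.25, "22": 1.25, "47": 0.25, "50": 1.50,
    "51": 0.50, "52": 0.50, "53": 0.50, "80": 0.20,
}


def adjusted_work_rvu(base_work_rvu, modifier=None):
    """Scale one billed line's work RVU by its modifier; other or absent modifiers count at 100%."""
    return base_work_rvu * MODIFIER_FACTOR.get(modifier, 1.0)


def rvus_per_cfte(billed_lines, cfte_by_physician):
    """Sum adjusted work RVUs for each physician, then divide by that physician's CFTE.

    billed_lines: iterable of (physician_id, base_work_rvu, modifier_or_None).
    cfte_by_physician: clinical FTE per physician, assumed to already reflect
    any partial-year employment.
    """
    totals = defaultdict(float)
    for physician, base_rvu, modifier in billed_lines:
        totals[physician] += adjusted_work_rvu(base_rvu, modifier)
    return {p: total / cfte_by_physician[p] for p, total in totals.items()}


if __name__ == "__main__":
    # Hypothetical billed lines; base RVUs are made-up values, not fee-schedule figures.
    lines = [
        ("A", 2.0, None),
        ("A", 4.0, "51"),   # multiple-procedure reduction: 4.0 -> 2.0
        ("B", 1.0, "22"),   # increased services: 1.0 -> 1.25
        ("B", 3.0, None),
    ]
    print(rvus_per_cfte(lines, {"A": 0.5, "B": 0.25}))  # {'A': 8.0, 'B': 17.0}
```

Dividing by CFTE is what makes a 0.25 CFTE physician and a 1.0 CFTE physician directly comparable. This normalization is the basis for the per-CFTE figures reported in the abstract.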

Footnotes

Current Procedural Terminology (CPT) is copyright 2005 American Medical Association. All Rights Reserved. No fee schedules, basic units, relative values, or related listings are included in CPT. The AMA assumes no liability for the data contained herein. Applicable FARS/DFARS restrictions apply to government use.

References

1. Medical Group Management Association. Physician Compensation and Production Survey: 2005 Report Based on 2004 Data. Englewood, CO: MGMA; 2005.

2. Hsiao WC. Resource Based Relative Values of Selected Medical and Surgical Procedures in Massachusetts: Final Report on Research Contract for Rate Setting Commission, Commonwealth of Massachusetts. Boston, MA: Harvard School of Public Health; 1985.

3. Hsiao WC, Braun P, Dunn DL, et al. An overview of the development and refinement of the resource based relative value scale: The foundation for reform of U.S. physician payment. Med Care. 1992;30:NS1–NS12. doi: 10.1097/00005650-199211001-00001.

4. Hsiao WC, Stason WB. Toward developing a relative value scale for medical and surgical services. Health Care Financ Rev. 1979;1:23–38.

5. Centers for Medicare & Medicaid Services. Introduction and background. www.cms.hhs.gov/physcians/physicians/pfs.

6. Willis DR, Kelton GM, Saywell RM, Jr, et al. An incentive compensation system that rewards individual and corporate productivity. Fam Med. 2004;36:270–278.

7. Blalock J, Mackowiak PA. A resource-allocation model to enhance productivity of academic physicians. Acad Med. 1998;73:1062–1066. doi: 10.1097/00001888-199810000-00013.

8. Sussman AJ, Fairchild DG, Coblyn J, et al. Primary care compensation at an academic medical center: A model for the mixed-payer environment. Acad Med. 2001;76:693–699. doi: 10.1097/00001888-200107000-00009.

9. Stewart MG, Jones DB, Garson AT. An incentive plan for professional fee collections at an indigent-care teaching hospital. Acad Med. 2001;76:1094–1099. doi: 10.1097/00001888-200111000-00009.

10. Khan NS, Simon HK. Development and implementation of a relative value scale for teaching in emergency medicine: The teaching value unit (TVU). Acad Emerg Med. 2003;10:904–907. doi: 10.1111/j.1553-2712.2003.tb00639.x.

11. Spahlinger DA, Pai CW, Waldinger MB, et al. New organizational and funds flow models for an academic cancer center. Acad Med. 2004;79:623–627. doi: 10.1097/00001888-200407000-00003.

12. Mast LJ. Designing an internal audit process for physician billing compliance. Healthc Financ Manage. 1998;52:80–84.
