Abstract

Gaze direction signals another's focus of social attention. Here we recorded ERPs to a multi-face display in which a gaze aversion created three different social scenarios: social attention, mutual gaze exchange, and gaze avoidance. N170 was unaffected by social scenario. P350 latency was shortest for social attention and mutual gaze exchange, whereas P500 was largest for gaze avoidance. Our data suggest that neural activity after 300ms post-stimulus may index processes associated with extracting social meaning, whereas activity before 300ms may index processing of gaze changes independent of social context.

Keywords: social cognition, eyes, gaze shifts, N170, P350, ERPs, temporal cortex, STS

Introduction

The eyes, and where they look, are important social signals. Gaze shifts indicate a change in the focus of attention, and can also convey indifference, attraction, or deceit [1, 2]. In humans and monkeys, the superior temporal sulcus, orbitofrontal cortex, and amygdala are important for social cognition [3-5] and for evaluating gaze changes (e.g. [2, 6-8]). The superior temporal sulcus responds selectively to facial movements, including gaze changes [9, 10]. Baron-Cohen's (1995) ‘mindreading’ model proposes multiple neurocognitive mechanisms for interpreting the intentions of others [4]. These include an Eye Direction Detector, which detects eyes and the direction of their gaze.

Human scalp event-related potential (ERP) studies have shown a posterior temporal negativity around 170ms (N170) to static images of faces and eyes [11]. N170s are larger and earlier when the gaze of a face (or of isolated eyes) averts than when it turns toward the viewer, and later ERPs are also modulated by gaze changes [12]. When observers judge isolated eyes using verbal labels for affective state or for gender, a late ERP (270-400ms) is more negative for mental state judgments than for gender judgments [13]. Taken together, these data show that gaze changes and judgments of mental state reliably modulate scalp-recorded neural activity.

To date, only neural responses to single faces have been studied, so it is not clear how social context influences this activity. Here we recorded high-density (124-channel) ERPs to gaze aversions in multi-face displays depicting 3 different simulated social contexts. A pilot study with a small number of subjects and a 64-channel electrode array based on the 10-10 system elicited N170s (Fig. 1.4 of [14]), but later ERPs were less clearly seen. The small sample and the sparser electrode sampling may together have made the later ERP peaks harder to observe.

Methods

Subjects

Fourteen healthy volunteers (age 22-56 years, mean age 31.3 ± 10.2 years; 7 males; 2 left-handers) with normal or corrected-to-normal vision participated. The West Virginia University Institutional Review Board for the Protection of Human Research approved the study.

Experiment

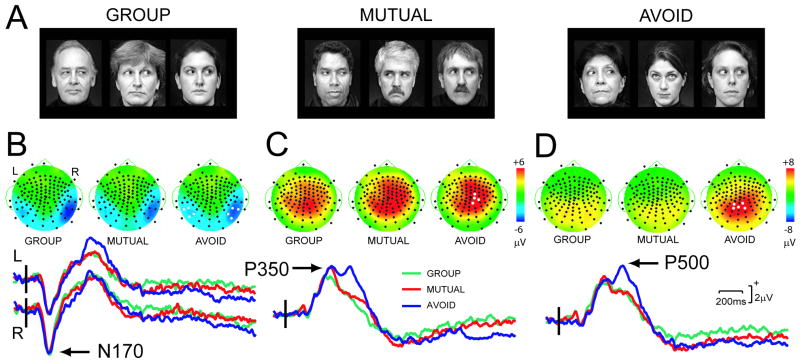

Subjects sat in a recliner approx. 170cm from a 35cm computer monitor in a quiet, dimly lit room. Neuroscan Stim software presented displays of 3 grayscale faces with neutral expressions on a black background (Fig. 1A). All faces subtended a total visual angle of 3.4 × 1.4 deg (horizontal × vertical) and each pair of eyes 0.5 × 0.1 deg.
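The display dimensions above are given as visual angles. As an illustration only, the Python sketch below converts between visual angle and on-screen size using the standard relation θ = 2·atan(s / 2d). It assumes the reported approximate viewing distance of 170cm is exact; the function names are our own and are not part of the Neuroscan Stim software.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) needed to subtend angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


VIEWING_DISTANCE_CM = 170.0  # assumption: the approximate distance from the Methods

# Horizontal x vertical extents reported in degrees.
display_w = size_for_angle_cm(3.4, VIEWING_DISTANCE_CM)
display_h = size_for_angle_cm(1.4, VIEWING_DISTANCE_CM)
eyes_w = size_for_angle_cm(0.5, VIEWING_DISTANCE_CM)
eyes_h = size_for_angle_cm(0.1, VIEWING_DISTANCE_CM)

print(f"3-face display: {display_w:.1f} x {display_h:.1f} cm")
print(f"each pair of eyes: {eyes_w:.2f} x {eyes_h:.2f} cm")
```

Under these assumptions the three-face display spans roughly 10cm by 4cm on the monitor, and each pair of eyes roughly 1.5cm by 0.3cm.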

Figure 1. Stimulus conditions and elicited group average topographic voltage maps and ERP waveforms.

A. Stimulus condition examples at a point in time immediately after gaze aversion on the central face. B. N170. Voltage maps at peak of N170 activity show clear activity in the bilateral posterior temporal scalp, and ERP waveforms have a clear N170 in all conditions (arrow). C. P350. Voltage maps show broadly distributed scalp activity, and ERPs have a P350 peak in all conditions (arrow). D. P500. Voltage maps depict broadly distributed centroparietal activity. Averaged ERPs show a clear P500 in AVOID (arrow). LEGEND for B-D: R=right, L=left. On maps black circles denote electrodes, and white circles show electrodes whose averaged data were analyzed with ANOVA. Color scale (right) indicates microvolts (μV) (identical for B-C). For ERPs, vertical bar indicates stimulus onset, waveform color identifies stimulus condition.

Each trial began with a 3-face display in which the central face looked at the viewer and the two flanker faces looked away in the same direction, either to the left or to the right (BASELINE). After 1.5sec the central face looked away while the two flankers kept their averted gaze (Fig. 1A). This configuration was displayed for 3.5sec before the next trial began with a new set of 3 faces. Three gaze change stimulus types were repeatedly presented in random order. In the GROUP condition, the central face looked in the same direction as the flankers, so that all 3 faces gazed at a common point to the side (a shared focus of attention). In MUTUAL, the central face looked opposite to the flankers, so that the central face and one flanker shared a mutual gaze exchange. In AVOID, the central face looked up, avoiding eye contact with the flankers, i.e. ‘interacting’ with neither face. Subjects pressed one of 3 buttons to indicate whether the central face shared an ‘interaction’ with both faces (GROUP), one face (MUTUAL), or no face (AVOID). The response was made after the central face's gaze aversion. Reaction time (ms) and accuracy were recorded for each trial. The experiment was run in two 10-minute sessions with a short rest in between.
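The three conditions are defined by where the central face looks relative to the two flankers. The hypothetical Python sketch below encodes that rule and the corresponding correct response (2, 1, or 0 interacting faces). The names `Gaze`, `classify_condition`, `EXPECTED_INTERACTIONS`, and `is_correct` are illustrative and are not taken from the original stimulus software.

```python
from enum import Enum


class Gaze(Enum):
    LEFT = "left"
    RIGHT = "right"
    UP = "up"


# Hypothetical mapping from condition to the number of flankers the central
# face 'interacts' with, i.e. the correct button for that display.
EXPECTED_INTERACTIONS = {"GROUP": 2, "MUTUAL": 1, "AVOID": 0}


def classify_condition(central: Gaze, flankers: Gaze) -> str:
    """Label the display after the central face's gaze aversion.

    Assumes both flankers look the same horizontal way, as in BASELINE.
    """
    if flankers is Gaze.UP:
        raise ValueError("flankers look left or right in this design")
    if central is Gaze.UP:
        return "AVOID"   # looks at neither flanker
    if central is flankers:
        return "GROUP"   # all three faces share one focus to the side
    return "MUTUAL"      # turns toward the flanker that is looking back at it


def is_correct(central: Gaze, flankers: Gaze, button: int) -> bool:
    """True if the pressed button (0, 1 or 2 interacting faces) matches the display."""
    return EXPECTED_INTERACTIONS[classify_condition(central, flankers)] == button


print(classify_condition(Gaze.RIGHT, Gaze.LEFT))
```

Encoding the condition from the gaze directions, rather than storing it as a separate label, keeps the stimulus definition and the scoring of responses consistent by construction.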

ERP Recordings

Subjects wore a Neuroscan Electrocap with silver/silver chloride electrodes, a frontal ground, and a dual reference on each side of the nose. The horizontal electro-oculogram was recorded from the outer canthus of each eye, and the vertical electro-oculogram from above and below the left eye. A continuous 124-channel electroencephalogram (band pass=0.1-100Hz, gain=5,000) was recorded (Neuroscan 4.2 software). Subjects kept movements to a minimum and restricted blinking during the recording.

ERP analysis

The continuous electroencephalogram was segmented into 2044ms epochs beginning 100ms before each gaze change. Epochs with amplitudes exceeding ±75μV were rejected with an automated procedure, and the remaining epochs were inspected visually to reject trials with more subtle electromyographic and electro-oculographic artifacts. Baseline was corrected by subtracting the pre-stimulus amplitude from all data points in the epoch. Epochs with correct behavioral responses were averaged by stimulus type for each subject. Each subject's averaged ERPs were then digitally filtered (60Hz notch; zero phase shift).
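The preprocessing steps above can be sketched in Python with NumPy and SciPy. This is a minimal sketch under stated assumptions, not the Neuroscan 4.2 pipeline: the 500Hz sampling rate and the notch quality factor (Q=30) are hypothetical, baseline correction uses the mean of the 100ms pre-stimulus interval, and the visual inspection step is not modelled. Zero phase shift is obtained by running the notch filter forward and backward with `scipy.signal.filtfilt`.

```python
import numpy as np
from scipy.signal import filtfilt, iirnotch

FS_HZ = 500.0      # HYPOTHETICAL sampling rate: the Methods do not report one
PRE_MS = 100.0     # pre-stimulus interval
EPOCH_MS = 2044.0  # total epoch length
REJECT_UV = 75.0   # automated rejection threshold (+/- microvolts)


def ms_to_samples(ms, fs=FS_HZ):
    return int(round(ms * fs / 1000.0))


def epoch_and_average(eeg_uv, onsets, fs=FS_HZ):
    """Epoch continuous EEG around gaze-change onsets, reject, baseline-correct, average.

    eeg_uv: (n_channels, n_samples) continuous EEG in microvolts.
    onsets: sample indices of gaze changes on correct-response trials.
    Returns (average of shape (n_channels, n_epoch_samples), number of epochs kept).
    """
    pre = ms_to_samples(PRE_MS, fs)
    n = ms_to_samples(EPOCH_MS, fs)
    kept = []
    for onset in onsets:
        start = int(onset) - pre
        if start < 0 or start + n > eeg_uv.shape[1]:
            continue  # epoch would run past the recording
        ep = eeg_uv[:, start:start + n]
        if np.abs(ep).max() > REJECT_UV:
            continue  # automated +/-75 uV criterion; visual inspection not modelled
        # Baseline: subtract each channel's mean pre-stimulus amplitude (assumption).
        kept.append(ep - ep[:, :pre].mean(axis=1, keepdims=True))
    if not kept:
        raise ValueError("no epochs survived artifact rejection")
    return np.mean(kept, axis=0), len(kept)


def notch_60hz(avg_uv, fs=FS_HZ, q=30.0):
    """60Hz notch applied forward and backward (filtfilt), giving zero phase shift."""
    b, a = iirnotch(60.0, q, fs=fs)
    return filtfilt(b, a, avg_uv, axis=-1)
```

At the assumed 500Hz, a 2044ms epoch contains 1022 samples, of which the first 50 form the baseline. Filtering the averaged ERPs, rather than the continuous record, matches the order of operations described above.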

Grand average ERPs were created for each condition across subjects. ERP peak latencies and amplitudes were identified within predetermined latency windows using a semi-automated method (N170: 124-270ms; P350: 250-450ms; P500: 460-650ms). Grand average ERPs were visually inspected, and topographic voltage maps were created at times corresponding to ERP peaks. Electrode clusters with maximal amplitudes were identified for subsequent statistical analyses.
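The automated part of the peak identification can be sketched as a search for the most extreme point of the expected polarity within each predetermined window (negative for N170, positive for P350 and P500). The Python sketch below assumes a waveform sampled on a known time axis in ms; the manual verification that makes the original method semi-automated is not modelled, and `find_peak` and `pick_all` are hypothetical names.

```python
import numpy as np

# Windows (ms) from the Methods, with the expected polarity of each component.
WINDOWS_MS = {"N170": (124, 270, -1), "P350": (250, 450, +1), "P500": (460, 650, +1)}


def find_peak(waveform, times_ms, lo, hi, polarity):
    """Return (latency_ms, amplitude) of the most extreme point of the given
    polarity (-1 = negative peak, +1 = positive peak) within [lo, hi] ms."""
    mask = (times_ms >= lo) & (times_ms <= hi)
    if not mask.any():
        raise ValueError("window lies outside the epoch")
    seg = waveform[mask]
    idx = int(np.argmax(polarity * seg))
    return float(times_ms[mask][idx]), float(seg[idx])


def pick_all(waveform, times_ms):
    """Apply find_peak to every predetermined window."""
    return {name: find_peak(waveform, times_ms, lo, hi, pol)
            for name, (lo, hi, pol) in WINDOWS_MS.items()}
```

Because the N170 and P350 windows overlap (250-270ms), each component is located only by its own window and polarity, which is one reason a visual check of the automatically picked peaks is still useful.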

Statistical Analysis

Behavior

Mean reaction time and accuracy were calculated across trials for each subject and condition. Differences were analyzed by one-way repeated measures Analysis of Variance (ANOVA) for Stimulus (GROUP, MUTUAL, AVOID) with Greenhouse-Geisser epsilon correction (SPSS V9). Group average reaction times and accuracies aided interpretation of the ANOVA.
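For readers who want to reproduce this kind of analysis outside SPSS, the sketch below implements a one-way repeated-measures ANOVA with the Greenhouse-Geisser (Box) epsilon estimated from the double-centred covariance matrix of the conditions. It is an illustrative Python/NumPy implementation, not the SPSS V9 procedure used here, and the function name `rm_anova_oneway` is hypothetical. With 14 subjects and 3 conditions, the nominal degrees of freedom are (3-1, (3-1)(14-1)) = (2, 26), matching those reported in the Results.

```python
import numpy as np
from scipy import stats


def rm_anova_oneway(y):
    """One-way repeated-measures ANOVA with Greenhouse-Geisser correction.

    y: (n_subjects, k_conditions) array, e.g. 14 x 3 mean reaction times
    for GROUP, MUTUAL and AVOID.
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)
    ss_err = np.sum((y - grand) ** 2) - ss_cond - ss_subj  # Stimulus x Subject residual
    df1, df2 = k - 1, (k - 1) * (n - 1)
    f_val = (ss_cond / df1) / (ss_err / df2)

    # Greenhouse-Geisser epsilon from the double-centred condition covariance matrix;
    # it lies between 1/(k-1) (maximal sphericity violation) and 1 (sphericity holds).
    s = np.cov(y, rowvar=False)
    c = np.eye(k) - np.ones((k, k)) / k
    s_dc = c @ s @ c
    eps = np.trace(s_dc) ** 2 / ((k - 1) * np.sum(s_dc ** 2))

    # Corrected p value: both degrees of freedom are scaled by epsilon.
    p_gg = stats.f.sf(f_val, eps * df1, eps * df2)
    return {"F": f_val, "df": (df1, df2), "epsilon": eps, "p_gg": p_gg}
```

A pairwise contrast with 1 and 13 degrees of freedom should correspond to passing just two columns: with two levels, epsilon is 1 and F equals the squared paired t statistic.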

ERPs

For each subject mean ERP peak latencies and amplitudes were calculated from the electrode cluster where ERP activity was greatest. Latency and amplitude differences were assessed by 2-way repeated measures ANOVA with Greenhouse-Geisser epsilon factor correction (Statistical Package for the Social Sciences V9) for main effects of Stimulus (GROUP, MUTUAL, AVOID) and Hemisphere (right, left). Main effects, interactions, and contrasts were deemed significant if P<0.05 (Greenhouse-Geisser corrected).

Results

Behavioral data

Means and standard deviations (SD) of reaction time and accuracy appear in Table 1. ANOVA revealed a significant main effect of Stimulus for reaction time only (F[2,26]=3.77, P<0.05). Contrasts revealed that subjects responded faster in GROUP than in MUTUAL (F[1,13]=7.55, P<0.05), and faster in AVOID than in MUTUAL (F[1,13]=6.85, P<0.05). Accuracy did not differ significantly across stimulus conditions.

Table 1.

Mean reaction time (ms) and accuracy scores as a function of stimulus condition.

| Measure | Group Attention (GROUP) | Gaze Exchange (MUTUAL) | Control (AVOID) |
|---|---|---|---|
| Reaction time (ms), mean | 958 | 1025 | 942 |
| Reaction time (ms), SD | 207 | 192 | 216 |
| Accuracy (% correct), mean | 95.8 | 95.7 | 94.8 |
| Accuracy (% correct), SD | 3.5 | 4.2 | 3.8 |

ERPs

The clearest and earliest ERP component in all conditions was N170 (Fig. 1B), which was largest over the posterior temporal scalp. N170 latency ranged from 190 to 203ms in the left hemisphere and from 194 to 200ms in the right hemisphere. ANOVA revealed no significant main effects (Stimulus, Hemisphere) or interactions for N170 amplitude or latency.

Two subsequent positivities were observed, one at around 250-450ms (P350, Fig. 1C) and the other at around 460-650ms (P500, Fig. 1D). P350 was a broad, centrally distributed potential. ANOVA showed no significant effect of stimulus on P350 amplitude. P350 latency varied significantly as a function of stimulus (Fig. 1C; F[2,26]=5.90, P<0.01). Contrasts showed that P350 latencies were shorter in GROUP than in AVOID (F[1,13]=6.54, P<0.05), and in MUTUAL than in AVOID (F[1,13]=13.80, P<0.005), but did not differ between GROUP and MUTUAL.

P500 was maximal centroparietally (Fig. 1D) and was larger to AVOID, as shown by a significant main effect of Stimulus (F[2,26]=13.36, P<0.001). Contrasts showed that P500 was significantly smaller in GROUP than in AVOID (F[1,13]=18.24, P<0.005), and in MUTUAL than in AVOID (F[1,13]=15.26, P<0.005). P500 amplitude did not differ significantly between GROUP and MUTUAL, and P500 latency did not differ significantly across stimulus type.
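As a rough consistency check on the reported statistics, the upper-tail probability of each F value can be computed from the F distribution. The Python sketch below uses the nominal degrees of freedom because the Greenhouse-Geisser epsilons are not reported; for the F[2,26] tests the corrected P values could be larger, whereas for the single-degree-of-freedom contrasts no sphericity correction applies. The list and function names are our own.

```python
from scipy import stats

# (test, F, df1, df2, reported bound on P), copied from the Results text.
REPORTED_F_TESTS = [
    ("RT, Stimulus", 3.77, 2, 26, 0.05),
    ("RT, GROUP vs MUTUAL", 7.55, 1, 13, 0.05),
    ("RT, AVOID vs MUTUAL", 6.85, 1, 13, 0.05),
    ("P350 latency, Stimulus", 5.90, 2, 26, 0.01),
    ("P350 latency, GROUP vs AVOID", 6.54, 1, 13, 0.05),
    ("P350 latency, MUTUAL vs AVOID", 13.80, 1, 13, 0.005),
    ("P500 amplitude, Stimulus", 13.36, 2, 26, 0.001),
    ("P500 amplitude, GROUP vs AVOID", 18.24, 1, 13, 0.005),
    ("P500 amplitude, MUTUAL vs AVOID", 15.26, 1, 13, 0.005),
]


def uncorrected_p_values(tests=REPORTED_F_TESTS):
    """Upper-tail F probabilities using the nominal (uncorrected) degrees of freedom."""
    return [(name, float(stats.f.sf(f_val, df1, df2)), bound)
            for name, f_val, df1, df2, bound in tests]


for name, p, bound in uncorrected_p_values():
    print(f"{name}: uncorrected p = {p:.4f} (reported P < {bound})")
```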

Discussion

Changing social context with gaze aversions in a multi-face display elicited three main ERPs, two of which were affected by social context and one of which was not. We discuss each in turn, along with its possible functional significance.

The early potential – N170

N170 is sensitive to gaze changes: averting gaze on single faces elicits larger and earlier N170s than gaze returning to look at the viewer [12]. Here, however, N170 was unaffected by social context. N170 also appears to be unaffected by judgments of mental state. Sabbagh and colleagues [13] used images of isolated eyes extracted from faces depicting different emotional expressions. Subjects judged either the gender or the mental state of the individual whose eyes were shown, making forced-choice judgments from verbal labels presented immediately before the eye stimulus. The amplitude and latency of N170 (and of P100) did not differ between gender and mental state judgments. Taken together, these studies suggest that the N170 elicited by faces or eyes is insensitive to judgments of mental state and to social context, but is sensitive to gaze changes.

A likely source for N170 is the superior temporal sulcus and surrounding regions. Facial movements, including gaze aversion, elicit fMRI activation in this region [9]. Hoffman and Haxby (2000) reported superior temporal sulcus activation when subjects judged gaze direction in the absence of social context [7]. Judging gaze direction in isolated faces is impaired when right superior temporal cortex is stimulated with transcranial magnetic stimulation [15]. In addition, invasive field potential recordings from human lateral temporal cortex show robust activity around 200ms to faces and to eyes in isolation [16]. Taken together, these findings suggest that the Eye Direction Detector proposed by Baron-Cohen (1995) [4] may reside in the superior temporal sulcus and surrounding regions and be reflected by N170. The Eye Direction Detector is thought to detect eyes and gaze changes irrespective of social context, and the behavior of N170 in this and previous studies is consistent with this idea. Additionally, the superior temporal sulcus relies on input from ventral and dorsal higher-order visual regions (e.g. [10]), which is important because a person's gaze signals attention to locations in visual space.

However, neither N170 nor superior temporal sulcus activation is sensitive only to eye movements. Puce et al. (2003) showed larger N170s to faces in which the mouth opened and closed than to scrambled images matched for luminance, contrast, and local motion features [17]. In the same subjects, responses to mouth movements relative to scrambled controls were also observed. Other studies report N170 [12] or superior temporal sulcus activation [9] to mouth movements; specifically, N170s are larger to mouth opening than to mouth closing. In this context, N170 may reflect a more general detector of facial part motion.

The later potentials: P350 & P500

P350 latency was shorter when the central face shared an ‘interaction’ with at least one other face. In contrast, the centroparietal P500 was largest when the central face ‘avoided’ the gaze of the others.

From a social cognition standpoint, P350 might index a neural process that places gaze changes in a social context. Such a process might be engaged when individuals make eye contact or share a common focus of visual attention. Indeed, Baron-Cohen's model includes a ‘Shared Attention Mechanism’ [4], proposed to allow an individual to follow another's gaze or pointing finger to a person or object of interest. It is tempting to speculate that P350 indexes this type of shared social attention.

It is possible, however, that the P350 latency differences reflect an attentional shift unrelated to social cognition. GROUP and MUTUAL both involved horizontal gaze shifts towards a flanker, whereas AVOID involved a vertical gaze shift. However, our previous data show no differences in ERPs (early or late) to horizontal versus vertical gaze shifts [16, 18]. Gaze shifts can trigger reflexive shifts of attention, even when this is detrimental to task performance (e.g. [19, 20]). Changes in another's gaze may trigger automatic shifts of attention in the viewer, as shown by better detection of peripheral targets in the extinguished hemifield by patients with unilateral neglect [21]. Spatial attention effects can also occur in the N2, as late as 280ms post-stimulus [22]. However, the gaze changes in GROUP and MUTUAL moved the net spatial focus of attention in opposite directions, yet P350 latency did not differ between these two conditions. Hence, we believe it unlikely that the P350 latency changes are attributable to spatial attention alone.

Unlike P350, P500 was larger in AVOID, where gaze was directed to a region of space containing no face. fMRI studies have reported temporal and parietal cortex activation when the gaze of a single face is evaluated [7, 9, 23]. Pelphrey et al. (2003) reported greater intraparietal sulcus and superior temporal sulcus activation when a face shifted its gaze to a location opposite to that of a congruent target than when it looked at the target [23]. In our study, a congruent target is a face that ‘looks’ at the central face. The upward gaze is also a social signal: the central face ‘ignores’ the flankers and ‘looks at’ something, or someone, not seen by the viewer. P500 may be sensitive to this spatial aspect of social context.

In addition to the superior temporal sulcus, orbitofrontal cortex and cortex in and around the anterior cingulate have been associated with social cognition [5]. Orbitofrontal activity is difficult to detect in ERP studies, whereas paracingulate activity can be recorded at the scalp [24]. One generator of P500 could lie in this region. Kampe et al. (2003) reported paracingulate cortex activation when subjects viewed faces with direct gaze [25].

Conclusion

Gaze is a powerful social cognitive cue. Impairments in using this information are thought to contribute to the social impairments observed in autism [4]. We suggest that N170, which is unaffected by social context, might be the neural correlate of the Eye Direction Detector in Baron-Cohen's mindreading model. Later ERPs sensitive to the context in which the gaze change occurs may reflect an evaluation of that social context.

Acknowledgments

We thank Dr Charlie Schroeder for his constructive comments on an earlier version of this manuscript, and Leah Henry and Mary Pettit for assistance with manuscript preparation.

References

1. Kleinke CL. Gaze and eye contact: A research review. Psychological Bulletin. 1986;100:78–100.

2. Emery NJ. The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience & Biobehavioral Reviews. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.

3. Brothers L. Friday's Footprint: How Society Shapes the Human Mind. Oxford: Oxford University Press; 1997.

4. Baron-Cohen S. Mindblindness: An Essay on Autism and Theory of Mind. Cambridge, MA: MIT Press; 1995.

5. Frith U, Frith CD. Development and neurophysiology of mentalizing. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2003;358:459–473. doi: 10.1098/rstb.2002.1218.

6. Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Ito K, et al. The human amygdala plays an important role in gaze monitoring. A PET study. Brain. 1999;122:779–783. doi: 10.1093/brain/122.4.779.

7. Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–84. doi: 10.1038/71152.

8. Adolphs R, Baron-Cohen S, Tranel D. Impaired recognition of social emotions following amygdala damage. Journal of Cognitive Neuroscience. 2002;14:1264–1274. doi: 10.1162/089892902760807258.

9. Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

10. Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2003;358:435–445. doi: 10.1098/rstb.2002.1221.

11. Bentin S, Allison T, Puce A, Perez A, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

12. Puce A, Smith A, Allison T. ERPs evoked by viewing facial movements. Cognitive Neuropsychology. 2000;17:221–239. doi: 10.1080/026432900380580.

13. Sabbagh MA, Moulson MC, Harkness KL. Neural correlates of mental state decoding in human adults: an event-related potential study. Journal of Cognitive Neuroscience. 2004;16:415–426. doi: 10.1162/089892904322926755.

14. Puce A, Constable RT, Luby ML, McCarthy G, Nobre AC, Spencer DD, et al. Functional magnetic resonance imaging of sensory and motor cortex: comparison with electrophysiological localization. Journal of Neurosurgery. 1995;83:262–270. doi: 10.3171/jns.1995.83.2.0262.

15. Pourtois G, Sander D, Andres M, Grandjean D, Reveret L, Olivier E, et al. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. European Journal of Neuroscience. 2004;20:3507–3515. doi: 10.1111/j.1460-9568.2004.03794.x.

16. McCarthy G, Puce A, Belger A, Allison T. Electrophysiological studies of human face perception. II: Response properties of face-specific potentials generated in occipitotemporal cortex. Cerebral Cortex. 1999;9:431–444. doi: 10.1093/cercor/9.5.431.

17. Puce A, Syngeniotis A, Thompson JC, Abbott DF, Wheaton KJ, Castiello U. The human temporal lobe integrates facial form and motion: evidence from fMRI and ERP studies. Neuroimage. 2003;19:861–869. doi: 10.1016/s1053-8119(03)00189-7.

18. Allison T, Lieberman D, McCarthy G. Here's not looking at you kid: An electrophysiological study of a region of human extrastriate cortex sensitive to head and eye aversion. Society for Neuroscience Abstracts. 1996;22:400.

19. Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron-Cohen S. Gaze perception triggers visuospatial orienting. Visual Cognition. 1999;6:509–540.

20. Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review. 1998;5:490–495.

21. Vuilleumier P. Perceived gaze direction in faces and spatial attention: a study in patients with parietal damage and unilateral neglect. Neuropsychologia. 2002;40:1013–1026. doi: 10.1016/s0028-3932(01)00153-1.

22. Woldorff MG, Liotti M, Seabolt M, Busse L, Lancaster JL, Fox PT. The temporal dynamics of the effects in occipital cortex of visual-spatial selective attention. Brain Research Cognitive Brain Research. 2002;15:1–15. doi: 10.1016/s0926-6410(02)00212-4.

23. Pelphrey KA, Singerman JD, Allison T, McCarthy G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia. 2003;41:156–170. doi: 10.1016/s0028-3932(02)00146-x.

24. Luu P, Flaisch T, Tucker DM. Medial frontal cortex in action monitoring. Journal of Neuroscience. 2000;20:464–469. doi: 10.1523/JNEUROSCI.20-01-00464.2000.

25. Kampe KK, Frith CD, Frith U. “Hey John”: signals conveying communicative intention toward the self activate brain regions associated with “mentalizing,” regardless of modality. Journal of Neuroscience. 2003;23:5258–5263. doi: 10.1523/JNEUROSCI.23-12-05258.2003.