Abstract

In an effort to clarify whether semantic integration is impaired in verbal and nonverbal auditory domains in children with developmental language impairment (LI; also known as specific language impairment, SLI), the present study obtained behavioral and neural responses to words and environmental sounds in children with LI and their typically developing, age-matched controls (ages 7–15 years). Event-related brain potentials (ERPs) were recorded while children performed a forced-choice matching task on semantically matching and mismatching visual-auditory picture-word and picture-environmental sound pairs. Behavioral accuracy and reaction time measures were similar for both groups of children, with environmental sounds eliciting more accurate responses than words. In picture-environmental sound trials, the behavioral performance of the children with LI and their brain response to semantic incongruency (i.e., the N400 effect) were comparable to those of their typically developing peers. However, in picture-word trials, children with LI tended to be less accurate than their controls, and their N400 effect was significantly delayed in latency. Thus, the children with LI demonstrated a semantic integration deficit that was somewhat specific to the verbal domain. The delayed N400 effect in particular is consistent with the storage deficit hypothesis of language impairment (Kail & Leonard, 1986), suggesting weakened and/or less efficient connections within the language networks of children with LI.

Keywords: EEG, event-related potentials, ERP, N400, Environmental Sounds, Language Impairment, LI, SLI

INTRODUCTION

Developmental language impairment (LI), also known as Specific Language Impairment (SLI), is characterized by language deficits with relative sparing of other cognitive domains (Bishop, 1997; Leonard, 1997). From the earliest stages of language development, children with LI appear to have lexical and semantic deficits, and there is evidence suggesting that these deficits may become even more marked with age (e.g., Hayes, 1992; Stothard, Snowling, Bishop, Chipchase, & Kaplan, 1998). Children with LI produce their first words at an older age (Trauner, Wulfeck, Tallal, & Hesselink, 1995) and have smaller vocabularies (Bishop, 1997) than children with normal language development. In experimental word-learning contexts, children with LI learn fewer new words than typically developing children (e.g., Rice, Buhr, & Nemeth, 1990; Rice, Oetting, Marquis, Bode, & Pae, 1994). In naming tasks, they are often slower and less accurate than their typically developing peers (e.g., Katz, Curtiss, & Tallal, 1992; Lahey & Edwards, 1996; Leonard, Nippold, Kail, & Hale, 1983; Miller, Kail, Leonard, & Tomblin, 2001). Moreover, these children have difficulties in retrieving lexical items (see Messer & Dockrell, 2006, for a review), and their most frequent naming error type is semantic mislabeling (e.g., Lahey & Edwards, 1999; McGregor & Appel, 2002; McGregor, Newman, Reilly, & Capone, 2002).

Various hypotheses have been put forward to account for the semantic and lexical deficits in LI. Kail and Leonard (1986) proposed the storage deficit hypothesis, according to which naming (i.e., lexical) problems in children with LI are a byproduct of delayed language development. These authors proposed that the lexicons of children with LI resemble those of younger typically developing children in that only sparse information about lexical concepts has been mapped, and associations between related concepts have yet to be strengthened. Such weak interconnections among semantic representations are thought to become apparent during word retrieval (e.g., McGregor & Appel, 2002; McGregor et al., 2002). Consistent with this idea, McGregor and Appel (2002) and McGregor et al. (2002) observed that the items children with SLI misnamed were also the items they drew with fewer features, defined less informatively, and comprehended less accurately.

While a lexical-semantic deficit in LI might be most apparent and most readily measured in the language domain, it may extend to the nonverbal domain as well (e.g., Karmiloff-Smith, 1998). For example, one account of LI implicates problems with auditory perception at a preverbal level (e.g., Marler, Champlin, & Gillam, 2002; Tallal & Piercy, 1974, 1975; Tallal, Stark, & Mellits, 1985a, 1985b; Čeponienė et al., in revision). Evidence suggests that children with LI might have problems encoding and representing both verbal and complex nonverbal auditory information (Kraus, McGee, Carrell, et al., 1996; Uwer, Albrecht, & von Suchodoletz, 2002; McArthur & Bishop, 2005). It is currently debated whether individuals with LI demonstrate a generalized auditory semantic processing deficit that spans both the verbal and nonverbal domains, or whether their semantic integration deficit is specific to the verbal domain.

One way to test whether semantic processing of verbal and nonverbal information follows the same path in typically developing children and in those with LI is to compare behavioral and neural responses to spoken words with those elicited by environmental sounds. Speech and environmental sounds are two different types of auditory information that can serve the same purpose: they convey meaningful information about people and environmental events. Thus, our perception and cognition of both types of auditory information may proceed in a similar fashion (for a more detailed discussion, see Cummings, Čeponienė, Dick, Saygin, & Townsend, 2008; Cummings, Čeponienė, Koyama, Saygin, Townsend, & Dick, 2006). Consistent with this idea, recent behavioral evidence has suggested that word and sound processing change similarly during infancy (Cummings, Saygin, Bates, & Dick, in press), typical development (Borovsky, Saygin, Cummings, & Dick, in preparation), and aging (Dick, Saygin, Pitzalis, et al., 2007; Saygin, Dick, & Bates, 2005), and in patients with brain lesions (Saygin, Dick, Wilson, Dronkers, & Bates, 2003).

An unpublished behavioral study from our research group compared how children with LI and their age-matched controls (n = 28 in each group, mean age = 12.3 years) process words and environmental sounds (Borovsky et al., in preparation). In this study, children had to select which of two pictures matched an auditory label – either a spoken word (noun + verb-ing, e.g., “piano playing”) or an environmental sound (e.g., a brief melody from a Bach fugue). Children with LI responded more slowly than their controls to both the word and the environmental sound labels. Moreover, in the children with LI, reaction times in the two auditory domains were robustly correlated. These results are consistent with the notion of sparse semantic representations and/or weak connections between semantic representations in children with LI in both the verbal and nonverbal domains (e.g., Kail & Leonard, 1986; Lahey & Edwards, 1996; Montgomery, 2002; Windsor & Hwang, 1999). Alternatively, however, a more general, non-specific mechanism (e.g., motor, memory, attention, or cognitive slowness) might also account for such a uniform pattern.

Indeed, although word and meaningful nonlinguistic sound processing appear to yield similar behavioral indices overall, the neural routes involved in verbal vs. meaningful nonverbal processing are likely to be different. One measure that allows identification and assessment of distinct stages of neural processing is the event-related brain potential (ERP), which reflects the precise timing of synchronous events in the neural encoding of stimuli. ERPs can therefore reveal subtle differences in the processing of words and environmental sounds that may not be detected using behavioral measures. Specifically, all semantic stimuli (auditory or visual, orthographic or pictorial) elicit an N400 peak in the ERPs (e.g., Kutas & Federmeier, 2000; Kutas & Hillyard, 1980; Kutas & Hillyard, 1983). The ERP region around the N400 peak (not just the peak itself) becomes more negative when the stimulus does not match an expectancy set by a preceding message. This enhancement, termed the N400 effect, is used to assess semantic integration across the components of a message, since its timing and magnitude correlate with the degree of semantic incongruency. ERP difference waves (i.e., semantic mismatch minus semantic match trials) are the standard way to evaluate the semantic mismatch N400 effect.

Using a cross-modal picture-sound match/mismatch paradigm, we previously compared the N400 effect elicited by words and environmental sounds in groups of healthy pre-adolescent, adolescent, and adult participants (Cummings et al., 2006, 2008). In college-age adults, the N400 effect elicited by words peaked later than the N400 effect elicited by the corresponding environmental sounds (Cummings et al., 2006). We attributed this latency difference to an additional lexical processing loop that words undergo before their meaning can be accessed, whereas environmental sounds may directly activate the corresponding semantic representations, yielding an earlier N400 effect peak.

In a subsequent study of typically developing children (Cummings et al., 2008), we found no major maturational changes in the N400 effect between pre-adolescent and adolescent children. However, compared with adults, children showed significantly longer latencies and larger amplitudes of the N400 effect. Interestingly, these developmental differences were driven by stimulus type: the environmental sound N400 effect decreased in latency from adolescence to adulthood, while no age effects were observed in response to words. Thus, the semantic processing of single words appears to be established by 7 years of age, whereas the processing efficiency of environmental sounds continues to improve into adulthood. This pattern was attributed to the predominance of verbal processing in everyday life: words are the substrates of thought, and they are actively produced and heard during interpersonal communication as well as from various media sources. It was further suggested that, because verbal input predominates in the environment, both verbal and nonverbal sounds might pass through the lexical loop early in development. Once higher-order, high-efficiency automatic subroutines come online, the need for environmental sounds to pass through the lexical loop decreases, leading to reduced N400 latencies (Cummings et al., 2008).

While no electrophysiological (ERP) study has broached the verbal versus nonverbal question in children with LI, one recent study with learning-disabled¹ and healthy adults (n = 16 in each group, ages 18 to 36 years) did address this issue. Plante, van Petten, and Senkfor (2000) presented their participants with visual-auditory word pairs (e.g., typed word “apple” – spoken word “orange”) and picture-sound pairs (e.g., picture of a bird – sound of a birdsong). Both the typical and the learning-disabled college students showed robust context N400 effects in response to mismatching picture-sound pairs. However, in response to the word pairs, the learning-disabled students exhibited a significantly smaller context N400 effect than their controls. Thus, Plante and colleagues (2000) provided some evidence that college-aged adults with learning impairments are specifically impaired in the processing of verbal items. However, the verbal trials in their study consisted of a printed word paired with a spoken word, introducing a possible confound between lexical and orthographic processing.

The N400 effect in language impaired populations

Several studies have examined the N400 response in children with LI, with rather variable results. Miles and Stelmack (1994) presented children with reading, spelling, and learning disabilities (n = 8, ages 10 to 13 years) with printed words that were primed (i.e., preceded) by drawings (visual-visual pairs) or spoken words (auditory-visual pairs) that were either related or unrelated in meaning to the printed word. As expected, the control children exhibited an N400 effect (termed N450 in that study) in both context conditions. In contrast, the children with learning disabilities did not demonstrate the N400 effect in either condition. The authors suggested that an impairment of the auditory-verbal associative systems in the children with reading and spelling disabilities might have curtailed semantic integration in this task.

In a study of sentence processing in children with LI (n = 12, ages 8 to 10 years), written sentences were presented visually one word at a time, with the final word being either congruent or anomalous with the sentence context (Neville, Coffey, Holcomb, & Tallal, 1993). In response to the sentence-final words, the children with LI tended (p < .06) to exhibit larger N400 effects than their controls. The authors hypothesized that the larger N400 responses in children with LI reflected a compensatory increase in the effort required to integrate words into the context, as well as a greater reliance on context for word recognition, as compared with control children. These results contrast with those of a more recent study (Sabisch, Hahne, Glass, von Suchodoletz, & Friederici, 2006) in which German children with LI (n = 16, mean age = 9;7) and their controls listened to short spoken sentences that were either syntactically and semantically correct or contained a semantic violation. While the control children exhibited a broadly distributed N400 effect in response to semantic violations, the children with LI did not show an N400 effect. In addition, Sabisch and colleagues (2006) reported that larger N400 effects were associated with better use of word knowledge (i.e., vocabulary as assessed by the German version of the Wechsler Intelligence Scale for Children, HAWIK-III).

The reviewed N400 studies suggest that children with LI have lexical-semantic processing deficits in both visual and auditory domains. However, given various design confounds, such as asking children with known reading impairments to read printed words, or using sentential paradigms with complex syntax, it remains to be shown how children with LI process single words in simple, unconfounded semantic association tasks. Further, no ERP study has examined how children with LI process meaningful nonverbal material or compared it with verbal semantic processing. Our own behavioral evidence (Borovsky et al., in preparation) suggests that children with LI are less efficient in processing both verbal and nonverbal auditory information. However, the use of verbal and nonverbal auditory stimuli in an ERP paradigm may reveal processing differences between words and environmental sounds that are only apparent at the neural level.

Specifically, our previous work has reported that the primary electrophysiological difference between words and environmental sounds is a latency difference, with words eliciting a longer-latency N400 effect (Cummings et al., 2006, 2008). This was hypothesized to reflect verbal input passing through a “lexical loop” before accessing supra-modal semantic representations, whereas environmental sounds might bypass this lexical activation stage and activate those representations directly. This phenomenon allows us to examine whether similar differences between the processing of the two input domains are expressed in children with language impairment.

In summary, the goals of the current study were to examine, using behavioral and electrophysiological measures, 1) whether children with language impairment show verbal semantic integration deficits at a single-item level and, if so, 2) whether such an impairment extends to the nonverbal domain. Examining how children with language impairment process words and environmental sounds, as compared with their healthy age-matched peers, could provide valuable information regarding the extent of the semantic deficits observed in developmental language impairment.

METHODS

Participants

Sixteen children with language impairment (LI; ages 7 to 15 years; 13 male) and 16 age-matched typically developing controls (TD; ages 7 to 15 years; 12 male) participated in the experiment. Three children from each language group completed the Noun Experiment, and the other 13 children in each group completed the Verb Experiment.² All participants were right-handed, monolingual English speakers. They were screened for neurological disorders, hearing loss, uncorrected visual impairment, and emotional and behavioral problems. Hearing was tested with a portable audiometer using pure tones of 500, 1000, 2000, and 4000 Hz; thresholds of 20 dB or lower were required to pass. All participants signed informed consent in accordance with the UCSD Human Research Protections Program.

The specific inclusionary/exclusionary criteria for the TD children were as follows: a) normal developmental and medical history, as assessed by a phone screening interview with the parents, medical and family history questionnaires developed in our Center, and a neurological assessment; b) normal intelligence: a standard score at or above 85 based on IQ screening with the age-appropriate Wechsler Revised/3rd Edition Vocabulary and Block Design subtests (Wechsler, 1974, 1991), established to have high validity (validity coefficient [r] > .90; Sattler, 1988); c) normal language, as indicated by a passing score on the Clinical Evaluation of Language Fundamentals – Revised Screening Test (CELF-R; Semel, Wiig, & Secord, 1989); d) grade-level academic functioning.

The specific inclusionary/exclusionary criteria for children with language impairment were as follows: a) a nonverbal (performance) IQ of 80 or higher (McGregor et al., 2002); b) Expressive Language scores, computed from CELF-3 subtests, 1.5 or more standard deviations below the age-appropriate mean (Semel, Wiig, & Secord, 1995); c) no specific neurological diagnosis (cerebral palsy, stroke, seizures); d) no diagnosis of autism and/or emotional disturbance. One child with LI had concomitant attention-deficit hyperactivity disorder (ADHD). Standardized testing results are shown in Table 1.

Table 1.

Participant Characteristics of the children with LI and typically developing (TD) children.

| Measure | TD (n=16) | LI (n=16) | Group comparison |
|---|---|---|---|
| Age: Mean (SD) | 10;11 (2;6) | 11;5 (2;6) | t=0.59, p > .56 |
| WISC-3/WISC-R: Full-scale IQ | 109.4 (9.9) | 93.5 (13.1) | t=3.41, p < .003 |
| WISC-3/WISC-R: Verbal IQ | 110.9 (14.0) | 87.9 (14.1) | t=4.09, p < .0002 |
| WISC-3/R Vocabulary scaled score | 12.7 (3.2) | 7.5 (1.9) | t=4.89, p < .0001 |
| WISC-3/WISC-R: Nonverbal IQ | 106.5 (9.6) | 101.6 (17.4) | t=0.86, p > .79 |
| WISC-3/R Block Design scaled score | 11.5 (2.3) | 10.3 (2.7) | t=1.19, p > .24 |
| CELF-3 Receptive Language standard score | Screen pass | 77.8 (11.9) | |
| CELF-3 Expressive Language standard score | Screen pass | 69.9 (13.3) | |
| CELF-3 Total Language standard score | Screen pass | 72.1 (11.6) | |
| PPVT-R standard score | 121.1 (8.8) | 87.6 (9.2) | t=8.76, p < .0001 |
| Hearing | Within normal limits | Within normal limits | |
| Speech delays | n=1 | n=8 | |
| Fine motor problems | n=1 | n=1 | |
| Reading problems | - | n=5 | |
| Learning disability | - | n=2 | |
| ADHD | - | n=1 | |

• The Clinical Evaluation of Language Fundamentals (CELF-3; Semel, Wiig, & Secord, 1995) has Receptive Language, Expressive Language, and Total Language measures. Sub-tests within these areas include assessments of semantics, morphology, syntax, and sentence memory.

• The CELF-3, Peabody Picture Vocabulary Test – Revised (PPVT-R; Dunn & Dunn, 1981), and Wechsler Intelligence Scale for Children – Revised/3rd edition (WISC-R/WISC-3; Wechsler, 1974, 1991) yield standard scores with M = 100 and SD = 15.

• WISC-3/R Block Design and Vocabulary scaled scores have M = 10 and SD = 3.

• For the typically developing children, estimated Full-scale, Verbal, and Nonverbal IQ values are reported.

Stimuli

This study employed a picture-sound matching design to assess electrophysiological brain activity related to semantic integration as a function of auditory input type (Words vs. Environmental Sounds; Figure 1). Colorful pictures of objects were presented with either a word or an environmental sound; the sound started 600 ms after picture onset, and both stimuli ended together. This design yielded clean visual ERPs to the pictures, left no time for conscious labeling (verbalization) of the pictures, and avoided working memory load.³ In half of the trials the pictures and sounds matched, and in the other half they mismatched. Auditory stimuli were digitized at 44.1 kHz with 16-bit resolution. The average intensity of all auditory stimuli was normalized to 65 dB SPL.

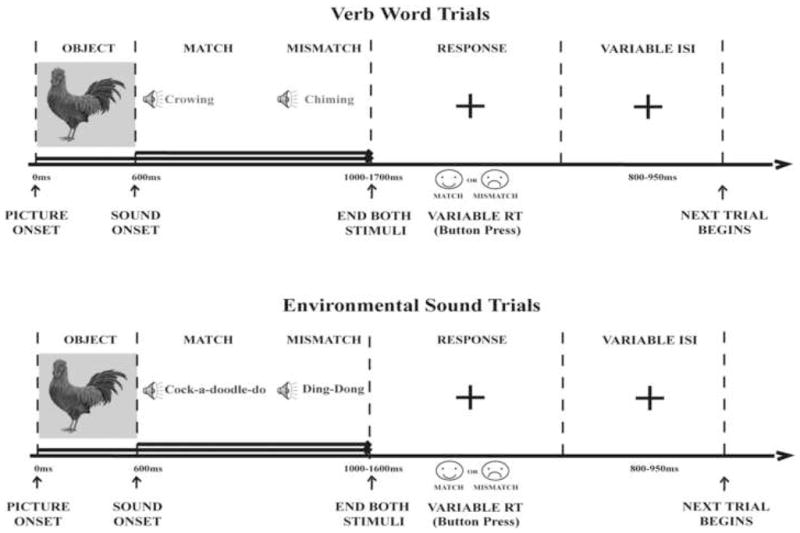

Figure 1.

Experimental design. A picture of a real object was presented on a computer screen, followed 600 ms later by a word or environmental sound. The auditory stimulus either matched or mismatched the visual stimulus. Once the auditory stimulus ended, the picture disappeared from the screen and was replaced by a fixation cross. Participants pressed the button marked with a happy face for matching trials and the button marked with a sad face for mismatching trials.

Environmental Sounds

The sounds came from a range of nonlinguistic categories: animal cries (n=15, e.g. cow mooing), human nonverbal vocalizations (n=3, e.g. sneezing), machine noises (n=8, e.g. car honking), alarms/alerts (n=7, e.g. phone ringing), water sounds (n=1, toilet flushing), event sounds (n=12, e.g. bubbles bubbling), and music (n=9, e.g. piano playing). The sounds ranged in duration from 400–870 ms (mean = 574 ms, SD = 104 ms).

Words

Words were spoken by three North American speakers (one female, two male) to increase acoustic variability. The words were digitally recorded in a sound-isolated room (Industrial Acoustics Company, Inc., Winchester, UK) using a Beyer Dynamic (Heilbronn, Germany) Soundstar MK II unidirectional dynamic microphone and a Behringer (Willich, Germany) Eurorack MX602A mixer. Noun stimuli ranged in duration from 262–940 ms (M = 466 ms, SD = 136 ms), and verb stimuli ranged in duration from 395–1154 ms (M = 567 ms, SD = 158 ms).

Visual stimuli

Pictures were full-color, digitized photos (280 × 320 pixels) of common action-related objects that could produce an environmental sound and be described by a verb or a noun. All pictures subtended 6.9 degrees of visual angle and were presented in the middle of the computer monitor on a gray background. The same set of pictures was used for both the matching and mismatching picture contexts. The only constraint on the semantically mismatching trials was that the mismatch had to be unambiguous (e.g., the picture of a basketball was not presented with the sound of hitting a golf ball).
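As a worked check of the display geometry, the visual angle θ subtended by an object of size s viewed at distance d is θ = 2·arctan(s / (2d)). The short sketch below assumes the 120 cm viewing distance given in the Procedure and solves for the physical picture extent that corresponds to 6.9 degrees; that extent is a derived figure, not one reported in the original, and the function names are illustrative.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of size_cm viewed at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse relation: physical size (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 120.0  # assumed: screen distance stated in the Procedure

picture_cm = size_for_angle_cm(6.9, VIEWING_DISTANCE_CM)
print(f"picture extent: {picture_cm:.1f} cm")  # about 14.5 cm
print(f"check: {visual_angle_deg(picture_cm, VIEWING_DISTANCE_CM):.2f} deg")
```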

Procedure

The stimuli were presented in blocks of 54 trials. All trial types occurred with equal probability, in a pseudo-random order with the constraints that no picture was presented twice in a row and that certain sounds could not be mismatched with certain pictures, as mentioned above. Thus, there was no immediate semantic priming across word and environmental sound trials, or across matching and mismatching trials. Six stimulus blocks (324 trials altogether) were presented to every participant. The pictures and sounds were delivered by stimulus presentation software (Presentation software, Version 0.70, www.neurobs.com). Pictures were presented on a computer screen situated 120 cm in front of the participant, and sounds were played via two loudspeakers situated 30 degrees to the right and left of the midline in front of the participant. The sounds were perceived as coming from the midline. The participants’ task was to press the button marked with the happy face as quickly as possible if they thought the picture and auditory stimulus matched, and the button marked with the sad face if they thought the stimuli mismatched.
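The adjacency constraint on trial order (no picture on two consecutive trials) can be met with a randomized greedy construction that restarts on dead ends. The sketch below is a hypothetical illustration, not the Presentation script used in the study: the `Trial` record, the function name, and the 48-trial block (12 pictures × 2 sound types × 2 trial types) are assumptions, and the rule about which sounds may be mismatched with which pictures is assumed to be handled earlier, when the trial list is built.

```python
import random
from collections import namedtuple

# Hypothetical trial record; the field names are illustrative, not the study's.
Trial = namedtuple("Trial", ["picture", "sound_type", "match"])


def constrained_shuffle(trials, rng, max_restarts=1000):
    """Randomly order trials so that no picture appears on two consecutive trials.

    Builds the sequence greedily from a shuffled pool, always drawing a trial whose
    picture differs from the previous one; on a dead end it restarts from scratch.
    """
    for _ in range(max_restarts):
        pool = list(trials)
        rng.shuffle(pool)
        order = []
        while pool:
            prev = order[-1].picture if order else None
            candidates = [i for i, t in enumerate(pool) if t.picture != prev]
            if not candidates:
                break  # only repeats of the previous picture remain: restart
            order.append(pool.pop(rng.choice(candidates)))
        if not pool:
            return order
    raise RuntimeError("no valid order found; check the picture counts")


# Illustrative block: 12 pictures x {word, environmental sound} x {match, mismatch}.
block = [Trial(p, s, m) for p in range(12) for s in ("word", "env") for m in (True, False)]
order = constrained_shuffle(block, random.Random(0))
assert all(a.picture != b.picture for a, b in zip(order, order[1:]))
```

Restarting, rather than backtracking, keeps the sketch short; with many distinct pictures per block, dead ends are rare, so few restarts are needed in practice.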

Trial length was variable, comprising stimulus presentation (approximately 900–1700 ms), the participant’s reaction time (condition means ranged from 919 to 998 ms), and a variable inter-trial interval (ITI; 800–950 ms). The happy face sticker on a response button always represented a match response, and the sad face sticker always represented a mismatch response. Response hands were counterbalanced across participants: half of the participants had the happy face button on the right side of the button box, and the other half had it on the left side. The experiment also included trials of non-meaningful stimuli (as described in Cummings et al., 2006, 2008), consisting of fractal-like pictures accompanied by computer-generated non-meaningful sounds. Because the meaningfulness dimension was not a focus of the present paper, data from the non-meaningful trials are not reported.

Behavioral Data Analysis

Accuracy on each trial was scored according to whether the participant’s button press correctly classified the picture-sound pair as matching or mismatching. Median reaction times for correct trials were calculated from the onset of the auditory stimulus to the button press (means yielded comparable results). Effects of Language Group and Sound Type were examined in a Language Group (LI, TD) × Sound Type (Words, Environmental Sounds) × Trial Type (Match, Mismatch) ANOVA.
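The two behavioral measures can be summarized per participant and condition as sketched below: accuracy as the proportion of correct responses, and RT as the median over correct trials only, measured from auditory onset. The dictionary keys and example trials are hypothetical; the resulting per-condition values would then feed the Language Group × Sound Type × Trial Type ANOVA.

```python
from collections import defaultdict
from statistics import median


def condition_key(trial):
    """Condition label used for aggregation: (sound type, trial type)."""
    return (trial["sound_type"], trial["trial_type"])


def accuracy_by_condition(trials):
    """Proportion of correct responses per (sound type, trial type) condition."""
    counts = defaultdict(lambda: [0, 0])  # condition -> [n_correct, n_total]
    for t in trials:
        counts[condition_key(t)][0] += t["correct"]
        counts[condition_key(t)][1] += 1
    return {cond: n_ok / n for cond, (n_ok, n) in counts.items()}


def median_rt_by_condition(trials):
    """Median RT (ms from auditory onset) per condition, using correct trials only."""
    rts = defaultdict(list)
    for t in trials:
        if t["correct"]:
            rts[condition_key(t)].append(t["rt_ms"])
    return {cond: median(vals) for cond, vals in rts.items()}


# Hypothetical trials for one participant.
example = [
    {"sound_type": "word", "trial_type": "match", "correct": True, "rt_ms": 900},
    {"sound_type": "word", "trial_type": "match", "correct": True, "rt_ms": 1000},
    {"sound_type": "word", "trial_type": "match", "correct": False, "rt_ms": 400},
    {"sound_type": "word", "trial_type": "match", "correct": True, "rt_ms": 950},
]
print(accuracy_by_condition(example))   # {('word', 'match'): 0.75}
print(median_rt_by_condition(example))  # {('word', 'match'): 950}
```

The median is less sensitive than the mean to occasional very slow responses, which is a common reason to prefer it for children’s RTs.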

EEG Recording and Averaging

Continuous EEG was recorded using a 32-electrode cap (Electrocap, Inc.) with the following electrodes attached to the scalp, according to the International 10–20 system: FP1, FP2, F7, F8, FC6, FC5, F3, Fz, F4, TC3, TC4 (30% distance from T3-C3 and T4-C4, respectively), FC1, FC2, C3, Cz, C4, PT3, PT4 (halfway between P3-T3 and P4-T4, respectively), T5, T6, CP1, CP2, P3, Pz, P4, PO3, PO4, O1, O2, and right mastoid. Eye movements were monitored with two electrodes, one attached below the left eye and another at the outer corner of the right eye. During data acquisition, all channels were referenced to the left mastoid; offline, the data were re-referenced to the average of the left- and right-mastoid tracings.
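Because every channel, including the right mastoid, was recorded against the left mastoid, the offline re-reference to the mastoid average only requires the recorded right-mastoid channel: x − (LM + RM)/2 = (x − LM) − (RM − LM)/2. The minimal NumPy sketch below, with a hypothetical function name and a channels × samples layout assumed, applies that identity and checks it against synthetic “true” potentials.

```python
import numpy as np


def rereference_to_linked_mastoids(eeg, rm_index):
    """Re-reference left-mastoid-referenced EEG to the average of both mastoids.

    eeg: array (n_channels, n_samples) in which every channel, including the
    right mastoid at row `rm_index`, was recorded against the left mastoid.
    Because x - (LM + RM) / 2 = (x - LM) - (RM - LM) / 2, it suffices to subtract
    half of the recorded right-mastoid channel from every channel.
    """
    eeg = np.asarray(eeg, dtype=float)
    return eeg - 0.5 * eeg[rm_index]


# Check against synthetic "true" potentials (rows: scalp channel, RM, LM).
rng = np.random.default_rng(1)
true = rng.normal(size=(3, 5))
recorded = true[:2] - true[2]  # what is acquired vs. the left mastoid
rereferenced = rereference_to_linked_mastoids(recorded, rm_index=1)
assert np.allclose(rereferenced, true[:2] - true[1:].mean(axis=0))
```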

The EEG (0.01–100 Hz) was amplified 20,000× and digitized at 250 Hz for off-line analyses. Prior to averaging, independent component analysis (ICA; Jung et al., 2000) was used to correct for eye blinks and lateral eye movements. Remaining trials containing artifacts due to excessive muscle activity, amplifier blocking, or gross body movements were rejected from further analyses using rejection thresholds adjusted for each participant individually. Epochs spanning 100 ms before to 900 ms after auditory stimulus onset were baseline-corrected relative to the pre-stimulus interval and averaged by stimulus type: Picture-Word Match, Picture-Word Mismatch, Picture-Environmental Sound Match, and Picture-Environmental Sound Mismatch. A low-pass Gaussian digital filter was used to remove frequencies above 60 Hz. On average, the remaining individual data contained 85 (SD = 13) Word trials (TD M = 87 (SD = 11); LI M = 81 (SD = 16)) and 88 (SD = 13) Environmental Sound trials (TD M = 89 (SD = 9); LI M = 86 (SD = 17)). The N400 effect was measured from difference waves, computed by subtracting the ERP responses to the matching trials from the ERP responses to the mismatching trials of each sound type (Figure 2, right column).
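The averaging steps described above (baseline correction to the 100 ms pre-stimulus interval, averaging by condition, and mismatch-minus-match subtraction) can be sketched in NumPy as follows. This is a minimal illustration on synthetic data, assuming epochs stored as trials × channels × samples at 250 Hz; it omits ICA correction, artifact rejection, and the 60 Hz low-pass filter, and the function names are hypothetical.

```python
import numpy as np

FS = 250                                 # sampling rate (Hz), from the text
TIMES = np.arange(-100, 900, 1000 / FS)  # epoch time axis in ms: -100 ... 896 in 4-ms steps


def average_erp(epochs, times=TIMES):
    """Baseline-correct single-trial epochs and average them into an ERP.

    epochs: array (n_trials, n_channels, n_times), time-locked to auditory onset.
    The mean of the pre-stimulus samples (t < 0 ms) is subtracted per trial and channel.
    """
    epochs = np.asarray(epochs, dtype=float)
    baseline = epochs[..., times < 0].mean(axis=-1, keepdims=True)
    return (epochs - baseline).mean(axis=0)


def n400_difference_wave(mismatch_epochs, match_epochs, times=TIMES):
    """N400-effect difference wave: mismatch-trial ERP minus match-trial ERP."""
    return average_erp(mismatch_epochs, times) - average_erp(match_epochs, times)


# Synthetic demo: a -8 uV negativity centred at 400 ms on mismatch trials only.
rng = np.random.default_rng(0)
n400 = -8.0 * np.exp(-(((TIMES - 400) / 60) ** 2))
match = rng.normal(0.0, 1.0, (80, 2, TIMES.size)) + 5.0  # DC offset removed by baseline
mismatch = rng.normal(0.0, 1.0, (80, 2, TIMES.size)) + 5.0 + n400
diff = n400_difference_wave(mismatch, match)
print(diff.shape, TIMES[np.argmin(diff.mean(axis=0))])
```

Subtracting the match ERP removes activity common to both conditions (e.g., the auditory evoked response), leaving the mismatch-related negativity.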

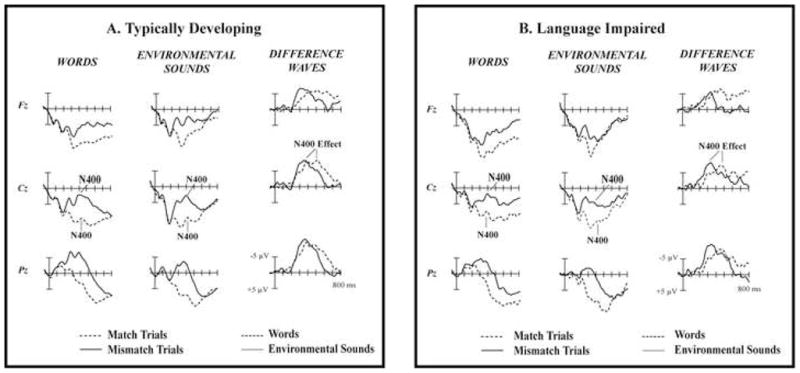

Figure 2.

The N400 peak is visible in the ERPs to both matching and mismatching words (left column) and environmental sounds (middle column), and it was larger to mismatching than to matching stimuli. The N400 effect is the difference between the mismatch and match trial ERPs in the 300–500 ms range (difference waves, right column). A. Typically developing children. B. Children with language impairment.

ERP Measurements

ERP responses from correct trials were analyzed. The N400 effect was measured at the 15 electrodes at which it was present in the grand-average waveforms: F3/F4, Fz, FC1/FC2, C3/C4, Cz, CP1/CP2, P3/P4, Pz, and PO3/PO4. First, peak latencies of the N400 effect were measured in the grand averages of each of the two Language Groups between 300 and 500 ms after sound onset. A “center” latency of the N400 effect for each group was then calculated as the mean of these latencies across the 15 electrodes (Table 3). Mean amplitudes and peak latencies of each participant’s N400 effect were then measured at each of the 15 electrodes in a 50 ms window extending from 25 ms before to 25 ms after the “center” latency of the participant’s group. In the few cases in which the peak fell outside this window, the peak was selected manually, with the constraint that it had to fall within the 300–500 ms window.
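The two-stage measurement (group “center” latency from the grand average, then a ±25 ms window per participant) can be sketched as below. This is a hypothetical NumPy illustration on a synthetic three-electrode grand average; the manual peak selection used for out-of-window peaks is not modelled, and the function names are assumptions.

```python
import numpy as np


def peak_latency(wave, times, lo, hi):
    """Latency (ms) of the most negative sample of `wave` within [lo, hi] ms."""
    mask = (times >= lo) & (times <= hi)
    return times[mask][np.argmin(wave[mask])]


def group_center_latency(grand_average, times, lo=300, hi=500):
    """Mean of per-electrode peak latencies in a group grand average (channels x times)."""
    return float(np.mean([peak_latency(ch, times, lo, hi) for ch in grand_average]))


def measure_participant(diff_wave, times, center, half_width=25):
    """Mean amplitude and peak latency per electrode within center +/- half_width ms.

    diff_wave: one participant's difference wave, array (channels x times).
    """
    mask = (times >= center - half_width) & (times <= center + half_width)
    mean_amplitude = diff_wave[:, mask].mean(axis=1)
    peak = times[mask][np.argmin(diff_wave[:, mask], axis=1)]
    return mean_amplitude, peak


times = np.arange(-100, 900, 4)  # 250 Hz sampling -> 4-ms steps
# Hypothetical grand average: three electrodes peaking at 392, 400 and 408 ms.
grand = np.stack([-np.exp(-(((times - p) / 50) ** 2)) for p in (392, 400, 408)])
center = group_center_latency(grand, times)
amplitude, latency = measure_participant(grand, times, center)
print(center, latency)  # 400.0 [392 400 408]
```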

Table 3.

N400 Amplitude (SEM) in microvolts for children with LI and TD children recorded at three midline electrodes. All mean amplitudes were significant at p < .0001 as compared to the pre-stimulus baseline.

| Sound Type | All Groups | LI: Fz | LI: Cz | LI: Pz | TD: Fz | TD: Cz | TD: Pz |
|---|---|---|---|---|---|---|---|
| Words | −7.93 (.16) | −8.40 (.83) | −9.01 (1.02) | −9.17 (1.06) | −6.88 (1.24) | −8.93 (1.37) | −10.57 (1.30) |
| Environmental Sounds | −8.36 (.18) | −6.48 (1.57) | −9.36 (1.51) | −11.87 (1.27) | −8.06 (1.07) | −9.33 (1.00) | −11.46 (.89) |
| Both Sound Types | −7.91 (.18) | −8.38 (.17) | | | | | |

Two-tailed independent-sample t-tests were conducted to test the significance of the N400 effect elicited by the two sound types at the midline electrodes. Effects of Language Group and Sound Type were examined in a Language Group (LI, TD) × Sound Type (Words, Environmental Sounds) × Electrode (15 levels) ANOVA. When applicable, Greenhouse-Geisser-corrected p-values are reported.
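The Greenhouse-Geisser correction multiplies both degrees of freedom of a repeated-measures F test by an estimate ε of the departure from sphericity. To illustrate what the correction computes, here is a minimal NumPy sketch for a single within-subject factor such as Electrode (15 levels); the function name and data are hypothetical. Statistical packages applying the correction in a mixed design like this one typically estimate ε from the covariance matrix pooled within groups, which this simplified sketch does not do.

```python
import numpy as np


def greenhouse_geisser_epsilon(data):
    """Greenhouse-Geisser epsilon for a single within-subject factor.

    data: array (n_subjects, k_levels), e.g. one N400 value per electrode.
    With S the k x k covariance of the levels and H = I - J/k the centering
    matrix, epsilon = tr(HSH)^2 / ((k - 1) * tr((HSH)^2)); it lies between
    1/(k - 1) (maximal violation of sphericity) and 1 (sphericity holds).
    """
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    S = np.cov(data, rowvar=False)
    H = np.eye(k) - np.ones((k, k)) / k
    Sc = H @ S @ H
    return np.trace(Sc) ** 2 / ((k - 1) * np.trace(Sc @ Sc))


rng = np.random.default_rng(2)
# Hypothetical data: 32 subjects x 15 electrodes with unequal variances.
amps = rng.normal(size=(32, 15)) * np.linspace(1, 3, 15)
eps = greenhouse_geisser_epsilon(amps)
df_effect, df_error = 14, 14 * 31  # uncorrected dfs for a one-way repeated-measures design
print(eps, eps * df_effect, eps * df_error)
```

The corrected p-value is then read from the F distribution with the scaled degrees of freedom, for example with `scipy.stats.f.sf(F, eps * df_effect, eps * df_error)`.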

The N400 effect in the children was broad, so Language Group or Sound Type differences might have been confined to narrower latency intervals (e.g., the Word N400 effect appeared smaller in amplitude in the children with LI than in the TD children specifically at 500–600 ms post-stimulus; Figure 3). We therefore measured the mean amplitude of the difference waves elicited by words and environmental sounds in both language groups in four 100 ms time windows: 300–400 ms, 400–500 ms, 500–600 ms, and 600–700 ms. The ANOVA was then re-run with these time intervals as an additional factor: Language Group (LI, TD) × Sound Type (Words, Environmental Sounds) × Time Window (1, 2, 3, 4) × Electrode (15 levels).
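Extracting the four window means from a difference wave takes one masked average per window. In the hypothetical sketch below, windows are treated as half-open, [start, end), so that adjacent windows do not share the boundary sample; the original does not specify how boundary samples were assigned, so that choice is an assumption.

```python
import numpy as np

# The four 100-ms windows named in the text.
WINDOWS_MS = [(300, 400), (400, 500), (500, 600), (600, 700)]


def window_means(diff_wave, times, windows=WINDOWS_MS):
    """Mean difference-wave amplitude per time window and electrode.

    diff_wave: array (channels x times). Returns array (n_windows, n_channels).
    Windows are half-open, [start, end), an assumption of this sketch.
    """
    return np.stack([diff_wave[:, (times >= a) & (times < b)].mean(axis=1)
                     for a, b in windows])


times = np.arange(-100, 900, 4)
ramp = np.tile(times, (2, 1)).astype(float)  # toy wave whose value equals its latency
print(window_means(ramp, times)[:, 0])       # [348. 448. 548. 648.]
```

One such (window × electrode) array per participant and sound type supplies the cells of the Time Window × Electrode ANOVA.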

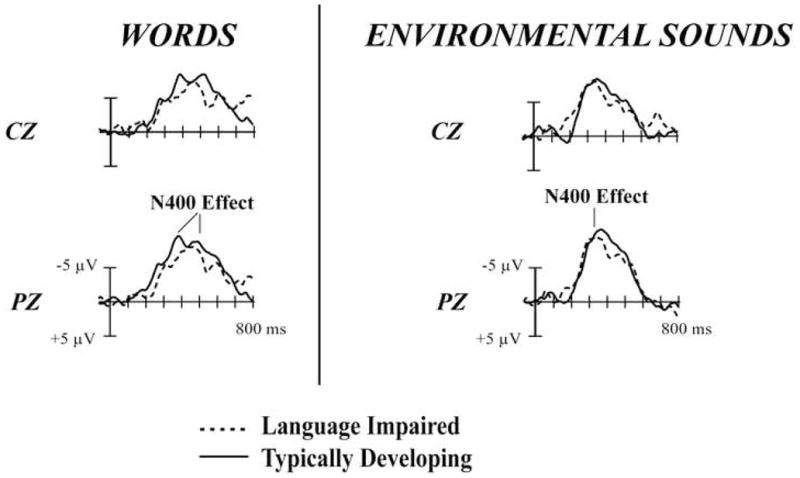

Figure 3.

Mismatch-ERP minus match-ERP difference waves to words and environmental sounds in the children with LI and TD children. Both language groups showed similar N400 effect amplitudes and latencies in response to the environmental sound stimuli. In response to the word stimuli, the children with LI showed a significantly later N400 effect than the TD children, whereas the N400 effect amplitude did not differ between the two language groups.

Scalp distribution analyses were conducted to test for Language Group and Sound Type differences along the Laterality and Anterior-Posterior dimensions. These analyses included mean amplitudes from 12 electrodes, comprising 6 anterior-posterior levels × 2 hemispheres (left, right): F3/F4, FC1/FC2, C3/C4, CP1/CP2, P3/P4, and PO3/PO4. All scalp distribution analyses involving interactions between variables used amplitudes normalized with a z-score technique, calculated separately for the responses to the Word and Environmental Sound stimuli (Picton et al., 2000). Normalized amplitudes were used only for between-group scalp distribution analyses.
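One common way to implement such a z-score normalization is to standardize each participant’s amplitudes across the 12 electrodes, separately for each sound type, so that overall amplitude differences between groups cannot masquerade as distribution differences. The sketch below assumes that per-participant scheme; the original does not state over which units the z-scores were computed, and the names and data are hypothetical.

```python
import numpy as np

# The 12 electrodes of the scalp-distribution analysis, as left/right pairs
# ordered from anterior to posterior.
ELECTRODES = ["F3", "F4", "FC1", "FC2", "C3", "C4",
              "CP1", "CP2", "P3", "P4", "PO3", "PO4"]


def zscore_across_electrodes(amps):
    """z-score each participant's amplitudes across electrodes.

    amps: array (n_participants, 12) for ONE sound type (words and environmental
    sounds are normalized separately). Each row is centred on its own mean and
    divided by its own standard deviation, removing overall amplitude differences
    while keeping the shape of the scalp distribution.
    """
    amps = np.asarray(amps, dtype=float)
    return (amps - amps.mean(axis=1, keepdims=True)) / amps.std(axis=1, keepdims=True)


profile = np.linspace(-12.0, -4.0, 12)  # hypothetical amplitudes (uV)
z = zscore_across_electrodes([profile, 2 * profile - 3])
# Reshape to (participant, anterior-posterior level, hemisphere) for the ANOVA.
z_grid = z.reshape(len(z), 6, 2)
print(np.allclose(z[0], z[1]))  # True: same shape, different overall amplitude
```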

For both the Anterior-Posterior and Laterality analyses, scalp distribution differences between the Language Groups were examined as a function of Sound Type: Language Group (LI, TD) × Sound Type (Word, Environmental Sound) × Anterior-Posterior (6 levels) or Laterality (2 levels).

RESULTS

Behavioral Results

Accuracy

Overall, participants responded more accurately to Environmental Sounds than to Words (F(1,30) = 16.71, p < .0004, η² = .358; Table 2). No effect of Language Group was observed in this analysis (p < .28). A trend toward a Sound Type × Language Group interaction was found (F(1,30) = 3.92, p < .06, η² = .116); however, post hoc ANOVAs did not reveal significant accuracy differences between the two Language Groups for either the word or the environmental sound stimuli.
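The effect sizes reported in the Results can be cross-checked against their F ratios: each η² value matches, after rounding, partial eta squared, η²p = F·df_effect / (F·df_effect + df_error). The snippet below is our own consistency check, not part of the authors' analysis; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported eta squared) taken from the Results text.
REPORTED = [
    (16.71, 1, 30, 0.358),   # Sound Type, accuracy
    (3.92, 1, 30, 0.116),    # Sound Type x Language Group, accuracy
    (24.52, 1, 30, 0.45),    # Trial Type, accuracy
    (7.64, 1, 30, 0.203),    # Trial Type, reaction time
    (11.90, 5, 150, 0.284),  # Anterior-Posterior distribution
]

for f, df1, df2, reported in REPORTED:
    print(f"F({df1},{df2}) = {f}: eta_p^2 = {partial_eta_squared(f, df1, df2):.3f}"
          f" (reported {reported})")
```

The same identity holds for the within-group tests (e.g., F(1,15) = 15.00 gives 15 / 30 = .50), which suggests the reported values are partial rather than classical eta squared.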

Table 2.

Accuracy and Median Reaction Times for Words and Environmental Sounds in children with LI and TD children.

| Sound Type | All Groups: OV | All Groups: Match | All Groups: Mismatch | LI: OV | LI: Match | LI: Mismatch | TD: OV | TD: Match | TD: Mismatch |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy, % correct (SD) | | | | | | | | | |
| Words | 88% (9) | 83% (9) | 92% (7) | 86% (11) | 80% (9) | 92% (8) | 90% (7) | 86% (7) | 92% (7) |
| Environmental Sounds | 91% (6) | 89% (6) | 92% (6) | 90% (7) | 89% (6) | 92% (8) | 91% (6) | 90% (7) | 93% (5) |
| Both Sound Types | | 86% (8) | 92% (7) | 88% (9) | 84% (9) | 92% (6) | 90% (7) | 88% (7) | 93% (6) |
| RT in ms for correct responses (SEM) | | | | | | | | | |
| Words | 969 (34) | 954 (49) | 983 (48) | 966 (58) | 953 (83) | 978 (84) | 972 (37) | 956 (56) | 989 (50) |
| Environmental Sounds | 956 (35) | 934 (49) | 978 (50) | 939 (60) | 919 (85) | 959 (86) | 973 (36) | 948 (50) | 998 (52) |
| Both Sound Types | | 944 (34) | 981 (34) | 952 (41) | 936 (59) | 969 (59) | 973 (26) | 952 (37) | 994 (35) |

OV = Overall

Mismatching trials also elicited greater overall accuracy than matching trials (F(1,30) = 24.52, p < .0001, η² = .45), in both language groups (LI: F(1,15) = 9.56, p < .008, η² = .389; TD: F(1,15) = 15.00, p < .003, η² = .50). That is, participants found it harder to determine that a word or environmental sound matched (i.e., described) an object than to determine that it did not match.

Reaction Time

Sound Type (Word/Environmental Sound) did not affect participants’ reaction times (Table 2), and there was neither a Language Group effect nor a Sound Type × Language Group interaction. Thus, both groups of children responded with similar speed to the word and environmental sound stimuli. In contrast to the accuracy data, reaction times were faster in matching than in mismatching trials (F(1,30) = 7.64, p < .01, η² = .203). Trial Type did not interact with Language Group.

ERP Results

N400 Effect Amplitude

There was no overall effect of Sound Type or Language Group on the N400 effect magnitude (Table 3). No Sound Type × Language Group interaction was observed, either. Thus, both child groups showed similar-sized N400 effects to words and environmental sounds. Moreover, no effects were found on mean amplitude measures compiled over the four narrower 100 ms intervals.

N400 Effect Latency

The environmental sound stimuli elicited an earlier N400 effect than word stimuli (F(1,30) = 25.53, p < .0001, η² = .46; Table 4, Figures 2 & 3). Further, the N400 effect peaked later in the LI group than in the TD group (F(1,30) = 4.296, p < .05, η² = .125).
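Peak latency of the N400 effect can be illustrated as the time of the most negative point of the difference wave within a search window. The 250–600 ms window below is a hypothetical choice for illustration, since this section does not state the window used, and the function name is ours.

```python
import numpy as np


def n400_peak_latency(diff_wave, times_ms, window=(250.0, 600.0)):
    """Latency (ms) of the most negative sample of a 1-D difference wave.

    window: hypothetical [start, end] search range in ms, inclusive.
    """
    diff_wave = np.asarray(diff_wave, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)
    in_window = (times_ms >= window[0]) & (times_ms <= window[1])
    # argmin picks the most negative point, i.e. the N400 effect peak.
    idx = np.argmin(diff_wave[in_window])
    return float(times_ms[in_window][idx])
```

Applied per child, sound type, and electrode, latencies obtained this way would form the input to the group comparisons; a simple peak picker like this one is sensitive to noise, so it is usually applied to averaged (not single-trial) waveforms.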

Table 4.

N400 effect latency (SD), in milliseconds, for children with LI and TD children at three midline electrodes.

| Sound Type | All Groups | LI: Fz | LI: Cz | LI: Pz | TD: Fz | TD: Cz | TD: Pz |
|---|---|---|---|---|---|---|---|
| Words | 415 (49) | 433 (61) | 430 (57) | 431 (49) | 389 (44) | 397 (33) | 400 (37) |
| Environmental Sounds | 375 (49) | 376 (58) | 384 (72) | 369 (64) | 365 (52) | 363 (39) | 363 (31) |
| Both Sound Types (group mean) | | 406 (62) | | | 385 (39) | | |

The Language Group × Sound Type (Word/Environmental Sound) interaction showed a trend toward significance (F(1,30) = 3.30, p < .08, η² = .10). Because this interaction was of primary interest at the outset of the study, pre-planned ANOVAs were conducted. Both the children with LI (F(1,15) = 20.33, p < .0001, η² = .575) and the TD children (F(1,15) = 6.24, p < .03, η² = .294) showed significantly later N400 effects to the Word than to the Environmental Sound stimuli, but the latency difference between the two stimulus types was much greater in the LI group (LI = 54 ms vs. TD = 27 ms). Consistent with this, pre-planned ANOVAs conducted separately for each sound type revealed that the word N400 effect peaked significantly later (by 35 ms) in the LI group than in the TD group (F(1,30) = 9.71, p < .005, η² = .245), whereas the environmental sound N400 effect latency did not differ between the groups. In other words, in children with LI the responses to words were significantly delayed relative to those of their TD peers, whereas the responses to nonverbal semantic information appeared to follow a similar neural activation timeline.

N400 Effect Scalp Distribution

A main effect of Anterior-Posterior distribution was observed (F(5,150) = 11.90, p < .0001, η² = .284). The N400 effect was smallest at the most anterior sites (F3/F4; M = −6.64 μV) and largest over the centro-parietal sites (CP1/CP2; M = −10.49 μV; Figure 2). No effect of Laterality was observed (p < .79) for either sound type (right hemisphere: Words = −8.42 μV, Environmental Sounds (ES) = −9.38 μV; left hemisphere: Words = −8.71 μV, ES = −9.23 μV). No interactions involving Language Group or Sound Type were found in either analysis.

DISCUSSION

This study compared behavioral and electrophysiological indices of semantic integration of words and environmental sounds in typically developing children and children with language impairment (ages 7 to 15 years). The main finding was delayed neural processing of picture-word trials in the LI group. This finding suggests that children with LI have a semantic integration deficit that is somewhat specific to the verbal domain.

Similarities in word and environmental sound processing between the LI and TD children

For the most part, the children with LI demonstrated response patterns to words and environmental sounds that were similar to those of their TD peers. Both groups were more accurate in responding to environmental sounds than to words and had comparable reaction times across the two sound types. N400 effect amplitudes did not differentiate the two language groups in either sound type condition. Moreover, the children with LI exhibited the same N400 effect latency pattern seen in our previous studies of healthy adults and typically developing children (Cummings et al., 2006, 2008): environmental sounds elicited earlier N400 effects than did words. Thus, in this semantic matching task, the children with LI appeared to show developmentally appropriate responses.

These findings contrast with those of previous studies of the N400 semantic context effect in children with LI, which found diminished (Miles & Stelmack, 1994; Sabisch et al., 2006) or enhanced (Neville et al., 1993) N400 effects in this population. These discrepancies likely stem from differences in experimental design. For example, both Neville et al. (1993) and Miles and Stelmack (1994) required the children with language/learning disorders to read sentences or single words. Given that these populations had diagnosed reading disorders (Miles & Stelmack, 1994) or possible reading delays (Neville et al., 1993), the reading component of the task likely introduced a substantial confound. This interpretation is supported by the fact that in the Miles and Stelmack (1994) study the N400 effect was missing not only in the trials with spoken words as primes but also in the trials with nonverbal drawings as primes, since in both cases the target stimuli were printed words.

Another important design difference is the nature of the task. The Miles and Stelmack (1994) design was the most similar to the present one in that single semantic items were used. However, their task also required the children to memorize the visually presented words. Although the children were “encouraged to try and guess whether the target [the second, primed] word would be the same or different than the first stimulus”, their main task was to remember the primed word in each pair for a subsequent recognition memory task. Thus, the children were performing two tasks: semantic matching and memorization. Even though N400 generation appears to be largely automatic (Cummings et al., 2006), our own unpublished data in adults indicate that the N400 is strongly diminished when attention is not directed to the semantic dimension of the stimuli. Therefore, the absence of the N400 effect in the Miles and Stelmack (1994) study might be more related to diminished procedural capacities in the LI population than to a semantic integration deficit per se.

A third design difference is the linguistic complexity of the stimulus material. In the present study, the semantic context was established by a single picture that largely overlapped in time with the following word or sound. Thus, memory load and grammatical skills were not taxed. The present results suggest that in such a simple match/mismatch context, the neural response (in terms of amplitude) to a semantically anomalous stimulus is quite similar across the LI and TD groups. Semantic processing differences may surface only when the task becomes more linguistically demanding, as in the processing of complex sentences (e.g., Neville et al., 1993).

Differences in word and environmental sound processing in LI and TD children

While the timing of the neural responses to words and environmental sounds followed the same general pattern in children with LI as in their typically developing peers, the word-elicited N400 effect was disproportionately delayed in the LI group. Importantly, this was not the case in the environmental sound trials. Therefore, at a neural level, the children with LI in our sample appeared to exhibit slowness specifically in integrating verbal information.

The presence of ERP differences during verbal semantic processing despite high behavioral accuracy might suggest that the children with LI used different, less efficient physiological mechanisms to accomplish the verbal semantic association task. However, given the typical scalp distribution of their responses, it does not appear that they used different neural networks than their typically developing peers. Moreover, because the shape and size of their waveforms were similar to those of the control children, there is little evidence for an atypical configuration of semantic networks in LI.

Since most of the literature surrounding the N400 semantic context effect in language-impaired populations has reported amplitude findings (e.g., Miles & Stelmack, 1994; Neville et al., 1993; Plante et al., 2000; Sabisch et al., 2006), it is somewhat unexpected that our language group differences concerned the N400 effect latency. However, while not highlighted in their paper, Neville et al. (1993) did report that the N400 to anomalous word endings occurred later in their LI group than in their controls. Indeed, visual inspection of their difference waves suggests that the peak of the N400 effect to sentence-final words in the children with LI was 50 to 75 ms later than that of the control children. Thus, whether engaged in a simple semantic matching task, such as ours, or a more complex sentence processing task, such as that found in Neville et al. (1993), children with language impairment appear to demonstrate delayed verbal semantic integration.

It is interesting to note that although the N400 effect latencies were later in the children with LI than in the TD children, their behavioral reaction times did not differ. Our previous studies (Cummings et al., 2006, 2008) reported similar word N400 latency-RT discrepancies in young adults and typically developing children; these were hypothesized to result from more efficient associations between the lexicon and behavioral response mechanisms, owing to the continuous use and production of words (Cummings et al., 2008). However, this account is at odds with the significantly delayed word N400 effect in the current LI group. Therefore, an additional explanation is needed to account for the shorter interval between the word N400 effect and the button press in these children.

One distinct possibility is that there is no direct relationship between N400 effect latency and reaction time. Indeed, a single-trial ERP analysis in our previous study with adults using the same design (Cummings et al., 2006) revealed that the timing of the N400 peak was not correlated with behavioral response times. The ERP waveforms to mismatching stimuli contained both the “basic” N400 peak and an overlapping N400 effect, and as a result reflected the N400 effect-RT relationship. This finding was taken to support the automatic nature of the N400 generating process: factors other than N400 effect latency appear to underlie the reaction time differences between matching and mismatching trials. Therefore, it is likely that in the children with LI this relatively early and involuntary semantic processing stage lacks efficiency in the verbal domain. Moreover, these children appear able to compensate for this early slowdown at subsequent, voluntary processing stages, resulting in largely normal timing of the behavioral response. It must be emphasized, however, that this seemingly full compensation occurred in the context of a very simple, single-item matching design and is likely to be insufficient in more complex, sentential paradigms.

The verbal domain-specificity of the semantic integration deficit in the LI population might be due to the relative weights of individual sound-object, as compared with word-object, associations (Cummings et al., 2006, 2008). More specifically, relatively few environmental sound representations can be mapped onto any given object or action, whereas many different lexical representations (nouns, verbs, and even adjectives) can be associated with the same object. Moreover, words may be the more expected or familiar label for simple pictures depicting objects and actions. If so, any picture would be associated with, and pre-activate, a greater number of “average” word representations than environmental sound representations. Such an unequal association may make the word match/mismatch task more complex.

The word semantic match/mismatch task could be even more challenging for children with LI if they have sparse verbal semantic representations and/or weakened or less efficient connections within their language networks, as predicted by the storage deficit hypothesis of Kail and Leonard (1986). Specifically, such connectivity issues could account for the greater delay observed in their N400 effect to words. This account is consistent with neuroimaging data showing reduced long-distance connectivity during sentence comprehension in individuals with high-functioning autism (Just, Cherkassky, Keller, & Minshew, 2004), as well as with ERP data indicating that the N400 effect reflects the activation of pre-established, automatically activated semantic representations (Cummings et al., 2006). In other words, children with LI arguably have sparse verbal semantic representations, or weakened connections between them, so that they need more time than their age-matched peers to compute the semantic relationship between the picture prime and the target word.

CONCLUSIONS

Children with LI appear to have a semantic integration deficit at a single-item level, specifically in the verbal domain. This deficit appears to involve early, automatic stages of semantic processing and might originate from sparse, weaker, and/or less efficient connections within their language networks.

Acknowledgments

AC was supported by NIH training grants #DC00041 and #DC007361 and the SDSU Lipinsky Family Doctoral Fellowship. RC was supported by NINDS grant #P50 NS22343. We would like to thank Dr. Ayse P. Saygin and Dr. Frederic Dick for help with stimuli creation.

Footnotes

This study was completed at the Project in Cognitive and Neural Development at UCSD.

As stated by Plante et al. (2000), a learning disability diagnosis was defined as involving a deficit in one or more of the components of language, which negatively impacts academic performance including listening, speaking, reading, and writing. A designation of a learning disability is closely related to, and overlaps with, other diagnostic categories including developmental dyslexia and specific language impairment.

The study was initially designed to address possible processing differences between nouns and verbs. Given that our adult and child data (Cummings et al., 2006, 2008) did not show any differences between noun and verb N400 effects, the data from the Verb and Noun Experiments were collapsed.

The 600 ms window was based on evidence from Simon-Cereijido, Bates, Wulfeck, Cummings, Townsend, Williams, and Ceponiene (2006), who reported that young adults took approximately 800–1000 ms to overtly name objects presented in pictures.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Bishop D. Uncommon understanding. East Sussex, UK: Psychology Press; 1997.

- Borovsky A, Saygin AP, Cummings A, Dick F. Emerging paths to sound understanding: language and environmental sound comprehension in specific language impairment and typically developing children. Unpublished data.

- Čeponienė R, Cummings A, Wulfeck B, Ballantyne A, Townsend J. Spectral vs. temporal auditory processing in developmental language impairment: An event-related potential study. doi: 10.1016/j.bandl.2009.04.003. Under revision.

- Cummings A, Čeponienė R, Dick F, Saygin AP, Townsend J. A developmental ERP study of verbal and non-verbal semantic processing. Brain Research. 2008;1208:137–149. doi: 10.1016/j.brainres.2008.02.015.

- Cummings A, Čeponienė R, Koyama A, Saygin AP, Townsend J, Dick F. Auditory semantic networks for words and natural sounds. Brain Research. 2006;1115:92–107. doi: 10.1016/j.brainres.2006.07.050.

- Cummings A, Saygin AP, Bates E, Dick F. Infants’ recognition of meaningful verbal and nonverbal sounds. Language Learning and Development. doi: 10.1080/15475440902754086. In press.

- Dick F, Saygin AP, Galati G, Pitzalis S, Bentrovato S, D’Amico S, Wilson S, Bates E, Pizzamiglio L. What is involved and what is necessary for complex linguistic and nonlinguistic auditory processing: Evidence from functional magnetic resonance imaging and lesion data. Journal of Cognitive Neuroscience. 2007;19(5):799–816. doi: 10.1162/jocn.2007.19.5.799.

- Dunn L, Dunn L. Peabody Picture Vocabulary Test-Revised. Bloomington, MN: Pearson Assessments; 1981.

- Hayes C. Vocabulary deficit – One problem or many? Child Language Teaching and Therapy. 1992;8:1–17.

- Just M, Cherkassky V, Keller T, Minshew N. Cortical activation and synchronization during sentence comprehension in high-functioning autism: Evidence of underconnectivity. Brain: A Journal of Neurology. 2004;127(8):1811–1821. doi: 10.1093/brain/awh199.

- Jung T, Makeig S, Westerfield M, Townsend J, Courchesne E, Sejnowski T. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clinical Neurophysiology. 2000;111(10):1745–1758. doi: 10.1016/s1388-2457(00)00386-2.

- Kail R, Leonard L. Word-finding abilities in language-impaired children. ASHA Monographs, Vol. 25. Rockville, MD: American Speech-Language-Hearing Association; 1986.

- Karmiloff-Smith A. Development itself is the key to understanding developmental disorders. Trends in Cognitive Sciences. 1998;2(10):389–398. doi: 10.1016/s1364-6613(98)01230-3.

- Katz W, Curtiss S, Tallal P. Rapid automatized naming and gesture by normal and language impaired children. Brain and Language. 1992;43:623–641. doi: 10.1016/0093-934x(92)90087-u.

- Kraus N, McGee TJ, Carrell TD, Zecker SG, Nicol TG, Koch DB. Auditory neurophysiologic responses and discrimination deficits in children with learning problems. Science. 1996;273:971–973. doi: 10.1126/science.273.5277.971.

- Kutas M, Federmeier KD. Electrophysiology reveals semantic memory use in language comprehension. Trends in Cognitive Sciences. 2000;4(12):463–470. doi: 10.1016/s1364-6613(00)01560-6.

- Kutas M, Hillyard S. Event-related brain potentials to semantically inappropriate and surprisingly large words. Biological Psychology. 1980;11(2):99–116. doi: 10.1016/0301-0511(80)90046-0.

- Kutas M, Hillyard S. Event-related brain potentials to grammatical errors and semantic anomalies. Memory & Cognition. 1983;11(5):539–550. doi: 10.3758/bf03196991.

- Lahey M, Edwards J. Why do children with specific language impairments name pictures more slowly than their peers? Journal of Speech and Hearing Research. 1996;39:1081–1098. doi: 10.1044/jshr.3905.1081.

- Lahey M, Edwards J. Naming errors of children with specific language impairment. Journal of Speech, Language, and Hearing Research. 1999;42:195–205. doi: 10.1044/jslhr.4201.195.

- Leonard L. Children with Specific Language Impairment. Cambridge, MA: MIT Press; 1999.

- Leonard L, Nippold M, Kail R, Hale C. Picture naming in language impaired children. Journal of Speech and Hearing Research. 1983;26:609–615. doi: 10.1044/jshr.2604.609.

- Marler JA, Champlin CA, Gillam RB. Auditory memory for backward masking signals in children with language impairment. Psychophysiology. 2002;39:767–780. doi: 10.1111/1469-8986.3960767.

- McArthur GM, Bishop DV. Speech and non-speech processing in people with specific language impairment: A behavioural and electrophysiological study. Brain and Language. 2005;94(3):260–273. doi: 10.1016/j.bandl.2005.01.002.

- McGregor K, Appel A. On the relation between mental representation and naming in a child with specific language impairment. Clinical Linguistics & Phonetics. 2002;16(1):1–20. doi: 10.1080/02699200110085034.

- McGregor K, Newman R, Reilly R, Capone N. Semantic representation and naming in children with specific language impairment. Journal of Speech, Language, and Hearing Research. 2002;45:998–1014. doi: 10.1044/1092-4388(2002/081).

- Messer D, Dockrell J. Children’s naming and word-finding difficulties: Descriptions and explanations. Journal of Speech, Language, and Hearing Research. 2006;49:309–324. doi: 10.1044/1092-4388(2006/025).

- Miles J, Stelmack R. Learning disability subtypes and the effects of auditory and visual priming on visual event-related potentials to words. Journal of Clinical and Experimental Neuropsychology. 1994;16:43–64. doi: 10.1080/01688639408402616.

- Miller C, Kail R, Leonard L, Tomblin JB. Speed of processing in children with specific language impairment. Journal of Speech, Language, and Hearing Research. 2001;44:416–433. doi: 10.1044/1092-4388(2001/034).

- Montgomery J. Examining the nature of lexical processing in children with specific language impairment: Temporal processing or processing capacity deficit? Applied Psycholinguistics. 2002;23:447–470.

- Neville H, Coffey S, Holcomb P, Tallal P. The neurobiology of sensory and language processing in language-impaired children. Journal of Cognitive Neuroscience. 1993;5:235–253. doi: 10.1162/jocn.1993.5.2.235.

- Picton T, Bentin S, Berg P, Donchin E, Hillyard S, Johnson R, Jr, Miller G, Ritter W, Ruchkin D, Rugg M, Taylor M. Guidelines for using human event-related potentials to study cognition: Recording standards and publication criteria. Psychophysiology. 2000;37(2):127–152.

- Plante E, van Petten C, Senkfor A. Electrophysiological dissociation between verbal and nonverbal semantic processing in learning disabled adults. Neuropsychologia. 2000;38(13):1669–1684. doi: 10.1016/s0028-3932(00)00083-x.

- Rice M, Buhr J, Nemeth M. Fast mapping and word-learning abilities of language-delayed preschoolers. Journal of Speech and Hearing Disorders. 1990;55:33–42. doi: 10.1044/jshd.5501.33.

- Rice M, Oetting J, Marquis J, Bode J, Pae S. Frequency of input effects on word comprehension of children with specific language impairment. Journal of Speech and Hearing Research. 1994;37:106–122. doi: 10.1044/jshr.3701.106.

- Sabisch B, Hahne A, Glass E, von Suchodoletz W, Friederici A. Lexical-semantic processes in children with specific language impairment. NeuroReport. 2006;17(14):1511–1514. doi: 10.1097/01.wnr.0000236850.61306.91.

- Sattler J. Assessment of Children. 3rd ed. San Diego, CA: Jerome M. Sattler; 1988.

- Saygin AP, Dick F, Bates E. An on-line task for contrasting auditory processing in the verbal and nonverbal domains and norms for younger and older adults. Behavior Research Methods. 2005;37(1):99–110. doi: 10.3758/bf03206403.

- Saygin A, Dick F, Wilson S, Dronkers N, Bates E. Neural resources for processing language and environmental sounds: evidence from aphasia. Brain. 2003;126(Pt 4):928–945. doi: 10.1093/brain/awg082.

- Semel E, Wiig E, Secord W. Clinical Evaluation of Language Fundamentals-Revised (CELF-R). San Antonio, TX: The Psychological Corporation; 1989.

- Semel E, Wiig E, Secord W. Clinical Evaluation of Language Fundamentals 3 (CELF-3). San Antonio, TX: The Psychological Corporation; 1995.

- Simon-Cereijido G, Bates E, Wulfeck B, Cummings A, Townsend J, Williams C, Čeponienė R. Picture naming in children with Specific Language Impairment: Differences in neural patterns throughout development. Poster presented at the Annual Symposium on Research in Child Language Disorders; Madison, WI. 2006.

- Stothard S, Snowling M, Bishop D, Chipchase B, Kaplan C. Language-impaired preschoolers: A follow-up into adolescence. Journal of Speech, Language, and Hearing Research. 1998;41:407–418. doi: 10.1044/jslhr.4102.407.

- Tallal P, Piercy M. Developmental aphasia: Rate of auditory processing and selective impairment of consonant perception. Neuropsychologia. 1974;12:83–94. doi: 10.1016/0028-3932(74)90030-x.

- Tallal P, Piercy M. Developmental aphasia: The perception of brief vowels and extended stop consonants. Neuropsychologia. 1975;13:69–74. doi: 10.1016/0028-3932(75)90049-4.

- Tallal P, Stark RE, Mellits ED. Identification of language-impaired children on the basis of rapid perception and production skills. Brain and Language. 1985a;25:314–322. doi: 10.1016/0093-934x(85)90087-2.

- Tallal P, Stark RE, Mellits ED. The relationship between auditory temporal analysis and receptive language development: evidence from studies of developmental language disorder. Neuropsychologia. 1985b;23:527–534. doi: 10.1016/0028-3932(85)90006-5.

- Trauner D, Wulfeck B, Tallal P, Hesselink J. Neurologic and MRI profiles of language impaired children (Publication No. CND-9513). San Diego: Center for Research in Language, University of California; 1995.

- Uwer R, Albrecht R, von Suchodoletz W. Automatic processing of tones and speech stimuli in children with specific language impairment. Developmental Medicine and Child Neurology. 2002;44:527–532. doi: 10.1017/s001216220100250x.

- Wechsler D. Wechsler Intelligence Scale for Children-Revised. San Antonio, TX: The Psychological Corporation; 1974.

- Wechsler D. Wechsler Intelligence Scale for Children. 3rd ed. San Antonio, TX: The Psychological Corporation; 1991.

- Windsor J, Hwang M. Testing the generalized slowing hypothesis in specific language impairment. Journal of Speech, Language, and Hearing Research. 1999;42:1205–1218. doi: 10.1044/jslhr.4205.1205.