Abstract

The present study contrasted the neural correlates of encoding item-context associations according to whether the contextual information was visual or auditory. Subjects (N=20) underwent fMRI scanning while studying a series of visually-presented pictures, each of which co-occurred with either a visually- or an auditorily-presented name. The task requirement was to judge whether the name corresponded to the presented object. In a subsequent memory test subjects judged whether test pictures were studied or unstudied and, for items judged as studied, indicated the presentation modality of the associated name. Dissociable cortical regions demonstrating increased activity for visual vs. auditory trials (and vice versa) were identified. A subset of these modality-selective regions also showed modality-selective subsequent source memory effects, that is, enhanced responses on trials associated with correct modality judgments relative to those for which modality or item memory later failed. These findings constitute direct evidence for the proposal that successful encoding of a contextual feature is associated with enhanced activity in the cortical regions engaged during the online processing of that feature. In addition, successful encoding of visual objects within auditory contexts was associated with more extensive engagement of the hippocampus and adjacent medial temporal cortex than was the encoding of such objects within visual contexts. This raises the possibility that the encoding of across-modality item-context associations places more demands on the hippocampus than does the encoding of within-modality associations.

Keywords: item-context association, episodic memory, fMRI, subsequent memory effect, source memory, medial temporal lobe

Episodic memory (memory for unique events) depends on the ability to bind the different elements of an event, such as a study item and its spatiotemporal context, into a cohesive memory representation (Tulving, 1983). According to one widely held proposal (e.g. Norman & O'Reilly, 2003), mnemonic binding is achieved through the establishment in the hippocampus of a representation of the patterns of cortical activity elicited by an episode as it is processed in real time¹. Retrieval occurs when the hippocampal representation is reactivated by a retrieval cue, which in turn leads to the reinstatement of the pattern of cortical activity encoded by the representation (Alvarez & Squire, 1994; Persson & Nyberg, 2000; Rolls, 2000; Nyberg et al., 2000; 2001; Shastri, 2002; Norman & O'Reilly, 2003). Over the past few years, functional neuroimaging studies employing designs of increasing sophistication have provided strong support for the notion of retrieval-related ‘cortical reinstatement’ (e.g. Wheeler et al., 2000, 2006; Vaidya et al., 2002; Wheeler & Buckner, 2003, 2004; Gottfried et al., 2004; Kahn et al., 2004; Khader et al., 2005; Woodruff et al., 2005; Johnson & Rugg, 2008). Here, we focus on the related but less well-investigated question of whether the neural correlates of successful episodic encoding are also content-sensitive, as would be expected within the above theoretical framework (Rugg et al., 2008).

Starting with Brewer et al. (1998) and Wagner et al. (1998), numerous studies have investigated the neural correlates of successful memory encoding with the ‘subsequent memory procedure’. In this procedure, event-related functional magnetic resonance imaging (fMRI) is used to contrast the neural activity elicited by study items according to how the items are endorsed on a subsequent memory test (e.g. whether the items are correctly recognized or misclassified as ‘new’). Studies employing this procedure have provided strong evidence in favor of the proposal outlined above that the hippocampus plays a key role in the formation of cohesive episodic memory representations. Thus, there are numerous reports that items associated with later successful recollection of contextual details of a study episode elicit greater levels of study activity in the hippocampus and surrounding medial temporal lobe (MTL) than do items for which such details cannot be recollected on the later memory test (e.g., Davachi et al., 2003; Ranganath et al., 2004; Kensinger & Schacter, 2006; Staresina & Davachi, 2006, 2008; Uncapher & Rugg, in press; for reviews see Davachi, 2006; Diana et al., 2007; see Gold et al., 2006, and Kirwan et al., 2008, for contradictory findings).

More germane here are findings that support the proposal that episodic memories are encoded in terms of the processes engaged as events are experienced in real time. For example, several studies have reported that the cortical loci of subsequent memory effects differ according to the nature of the study task, and that these task-selective effects are found in regions selectively engaged by the task itself (Otten & Rugg, 2001; Otten et al., 2002; Rugg et al., 2002; Mitchell et al., 2004; Park et al., 2008). Additionally, and of more relevance to the present study, it has been reported that the localization of the cortical activity supporting the encoding of a contextual feature differs according to the nature of the feature (Uncapher et al., 2006; Uncapher & Rugg, in press). In the study of Uncapher et al. (2006), two orthogonally varying contextual features (font color and location) were associated with each study item. Subsequent memory effects predicting later memory for item-color associations were localized to a different cortical region from those where activity predicted memory for item-location associations.

Moreover, the regions demonstrating these color- and location-selective encoding effects overlapped regions implicated by prior studies in the processing of color and location information respectively. This finding is consistent with the proposal that cortical subsequent memory effects reflect modulation of activity supporting the on-line processing of the study episode (see above). Uncapher et al. (2006) proposed that feature-selective subsequent memory effects are a consequence of trial-wise fluctuations in the allocation of attentional resources to the feature. They argued that as the allocation of attentional resources to a particular feature increases, so does activity in the cortical regions engaged by the feature. This enhanced activity strengthens the feature's on-line representation, and increases the probability that the associated pattern of cortical activity will be bound into a (hippocampally-mediated) episodic memory representation. This proposal receives further support from a subsequent study that explicitly manipulated attention to color and location, and which reported dissociable subsequent memory effects for the two features that were selectively enhanced by feature-specific attention (Uncapher & Rugg, in press).

In the present experiment, we further address the question of whether the encoding of different contextual features is associated with dissociable subsequent memory effects. The principal aim of the experiment was to obtain direct evidence that feature-selective subsequent memory effects are localized to the same cortical regions that are selectively engaged during the processing of the feature. Such evidence would both replicate and significantly extend the findings of Uncapher et al. (2006) and Uncapher & Rugg (in press). In both of those studies, the conclusion that feature-selective subsequent memory effects overlapped cortical regions engaged during the processing of color and location information depended on findings obtained from prior studies (i.e. on ‘reverse inference’; Poldrack, 2006) rather than on data acquired in the course of the experiment. By contrast, the design of the present experiment permitted a direct assessment of the extent of the overlap between subsequent memory effects predictive of later memory for a contextual feature and the cortical regions selectively activated by the processing of that feature. This was accomplished by employing sensory modality as a contextual feature. Subjects studied a series of pictures, each accompanied by a word that was presented either visually or auditorily. At test, they were required to identify each studied picture and to recollect the modality of the associated word. We expected that subsequent memory effects predictive of successful memory for the visual modality would be found in the regions where activity was greater on visual than on auditory study trials, whereas auditory subsequent memory effects would overlap auditorily-selective cortical regions.
We further predicted that, regardless of modality, successful context memory would be associated with enhanced activity in the hippocampus and adjacent MTL cortex (as was found by Uncapher & Rugg, in press, albeit for different visual features rather than sensory modalities). In addition, although the dearth of prior subsequent memory studies employing auditory stimuli (though see Peters et al., 2007; Poppenk et al., 2008) precludes formulation of a specific prediction, the present study affords the opportunity to address the question of whether MTL encoding-related activity is modality-sensitive.

Materials and Methods

Subjects

Twenty-two subjects consented to participate in the study. All subjects reported themselves to be in good general health, right-handed, with no history of neurological disease or other contraindications for MR imaging, and to have learned English as their first language. They were recruited from the University of California, Irvine (UCI) community and remunerated for their participation, in accordance with the human subjects procedures approved by the Institutional Review Board of UCI. The data from two subjects were excluded from all analyses because they had fewer than twelve trials in the ‘miss’ experimental condition (Ns of 7 and 8, respectively). Data are reported from the remaining 20 subjects (nine females), ranging in age from 19 to 29 years (mean = 20 years).

Stimulus Materials

Three hundred and six stimulus triplets were used in the experiment. Each triplet consisted of a picture of a commonly encountered object together with a visually and an auditorily presented word naming the object. The colored pictures were drawn from Hemera Photo Objects 50,000 Volume III (http://www.hemera.com/index.html). The names of the pictures were between three and ten letters long, with a mean written frequency between 1 and 100 counts per million (Kucera & Francis, 1967). Visually presented words were displayed in black uppercase 30-point Helvetica font on a gray background. Auditory words were recorded in the laboratory by a male speaker, edited to a constant sound pressure level, and filtered to remove ambient noise (http://audacity.sourceforge.net). Auditory stimuli were presented binaurally via MR-compatible headphones and did not exceed 1000 ms in duration (mean duration 650 ms). Before scanning, presentation volume was adjusted in the scanner to a comfortable listening level for each volunteer.

Of the 306 item triplets, ten served as buffers (two at the beginning and two at the end of each study list, and two at the beginning of the test list), and 16 additional triplets were used in the practice phases preceding the study and test sessions (see below). For every subject, 160 pictures and their corresponding names were randomly selected from the remaining 280 stimulus triplets to serve as the critical stimuli in the study session. These stimuli were further randomly divided into two groups of congruent trials: 80 pictures associated with their corresponding visual name, and 80 pictures associated with their corresponding auditory name.

Twenty additional pictures were randomly selected for the study phase. These pictures were not paired with their own names; instead, names of unused pictures in the pool were selected, such that for these 20 pictures the accompanying name did not correspond to the object (incongruent trials). For 10 of these pictures the mismatching name was presented visually, and for the other 10 it was presented auditorily.

Two study lists were created from the 180 study pictures (160 pictures with corresponding names, 20 pictures with mismatching names) for each subject. Each list contained a pseudo-random ordering of 40 pictures associated with matching visual words, 40 pictures associated with matching auditory words, five pictures associated with mismatching visual words, and five pictures associated with mismatching auditory words. The study task required a decision as to whether or not the words corresponded to the pictured objects.

Test items consisted of the 160 pictures from the congruent study trials and 80 new pictures. The test requirement was to judge whether the item had been presented at study and, if so, to indicate whether the associated word had been presented visually or auditorily. Both study and test items were presented in a subject-unique pseudo-random order, such that there were no more than three consecutive presentations of items belonging to any one experimental condition.

Study items were back-projected onto a screen and viewed via a mirror mounted on the scanner headcoil. Pictures were presented in central vision within a continuously displayed solid gray frame and subtended maximum visual angles of 5.7° × 5.7°. Words were centered 1° below the pictures and subtended maximum horizontal and vertical visual angles of 5.7° and 1.15°, respectively. Pictures and words displayed together subtended a maximum visual angle of 5.7° × 8° (width by height).

Test items were presented outside the scanner on a computer screen. The pictures and associated cues were presented in central vision within a continuously presented solid gray frame (subtending 6.8° × 6.8° of visual angle at the 1 m viewing distance).

Experimental Tasks and Procedures

The experiment comprised a single study-test cycle.

Study Procedure

Instructions and practice were administered outside the scanner. The study phase of the experiment proper consisted of two blocks of items separated by a brief rest period (approx. 1 minute). Each study trial began with a red fixation character presented in the center of the display frame for 500 ms. This was replaced by a picture presented for 1500 ms. Five hundred milliseconds after picture onset, a second item was presented concurrently: either a visual word (visual condition) or an auditory word (auditory condition). Following picture offset, a centrally presented black fixation character was displayed for a further 1500 ms, completing the trial. Subjects were informed that they would receive no advance warning of the modality of the word on each trial. Congruency judgments were signaled by a button press with the left or right index finger, and the response mapping was counterbalanced across subjects. Instructions placed equal emphasis on speed and accuracy.

The stimulus onset asynchrony (SOA) of study trials was stochastically distributed, with a minimum of 3500 ms, by the addition of 40 randomly intermixed null trials (Josephs and Henson, 1999). Trials were presented in pseudo-random order, with no more than three trials of one type (visual condition, auditory condition, or null) occurring consecutively. Each block consisted of 94 trials: 80 critical study items (congruent trials), 10 noncritical items (incongruent trials), and four buffer items, giving a total of 160 critical study items across the two blocks.
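The sequencing constraints above can be sketched as follows. This is a minimal illustration, not the authors' stimulus-presentation code: it assumes the 40 null trials were split evenly across the two blocks (20 per block, which the text does not state), enforces the three-in-a-row limit by simple rejection sampling, and treats every trial slot as lasting one 3500 ms SOA, so that null slots lengthen the interval between real trials. All function names are hypothetical.

```python
import random

SOA_S = 3.5  # minimum stimulus onset asynchrony (3500 ms)


def has_long_run(seq, key, max_run):
    """True if more than `max_run` consecutive items share the same key."""
    run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if key(prev) == key(cur) else 1
        if run > max_run:
            return True
    return False


def constrained_shuffle(items, key, max_run=3, rng=None, max_tries=10_000):
    """Rejection sampling: reshuffle until no run exceeds `max_run`."""
    rng = rng or random.Random()
    items = list(items)
    for _ in range(max_tries):
        rng.shuffle(items)
        if not has_long_run(items, key, max_run):
            return items
    raise RuntimeError("no valid order found; relax the constraint")


def build_block(n_nulls=20, seed=0):
    """One study block: (modality, congruent) tuples plus onset times in seconds."""
    rng = random.Random(seed)
    trials = ([("visual", True)] * 40 + [("auditory", True)] * 40
              + [("visual", False)] * 5 + [("auditory", False)] * 5
              + [("null", None)] * n_nulls)
    middle = constrained_shuffle(trials, key=lambda t: t[0], rng=rng)
    buffers = [("buffer", None)] * 2  # two buffers at each end of the list
    seq = buffers + middle + buffers
    # Each slot lasts one SOA; null slots show only fixation, so the interval
    # between successive real trials is a random multiple of 3.5 s.
    onsets = [i * SOA_S for i, t in enumerate(seq) if t[0] != "null"]
    return seq, onsets
```

With these assumed counts each block yields 94 real trials, matching the block size given in the text.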

Test Procedure

Following the completion of the second study block, volunteers were removed from the scanner and taken to a neighboring testing room. Only then were they informed of the source memory test and given instructions and a short practice test. Approximately 30 minutes elapsed between the completion of the second study block and the beginning of the memory test. Each test trial began with a red fixation character presented in the center of a gray frame for 500 ms, followed by a centrally presented picture displayed for 500 ms within a solid gray frame.

The test items consisted of the 160 critical study items (i.e. from the congruent trials) and 80 randomly interspersed unstudied (new) pictures, with no more than three items of one type presented consecutively. Instructions were to judge whether each picture was old or new, and to indicate the decision with the right index finger (old) or left index finger (new). If uncertain whether an item was old or new, volunteers were instructed to respond ‘new’, so as to maximize the likelihood that subsequent source memory judgments (see below) would be confined to confidently recognized items. If a picture was judged ‘new’, the test advanced to the next trial (with a 1 s inter-trial interval, during which a black fixation character was presented). If the picture was judged ‘old’, the prompt “Heard, Seen, Unsure?” appeared in black uppercase letters, and subjects were required to recall the modality of the name that had accompanied the picture at study. The modality judgment was signaled with the right index finger for ‘heard’ and the right middle finger for ‘seen’. Subjects were further instructed that if they were unable to retrieve the modality they should respond ‘unsure’ with their right ring finger. The test was self-paced, and subjects were instructed to complete it as quickly as possible without sacrificing accuracy for speed. The test was presented as a single block lasting approximately 20 minutes.
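The response contingencies of the test phase amount to a mapping from each studied picture's test responses onto the outcome bins used in Table 1. The sketch below assumes the response labels shown (‘old’/‘new’ and ‘seen’/‘heard’/‘unsure’); the function and bin names are illustrative and are not taken from the authors' analysis code.

```python
from typing import Optional

# Correct source response for each study modality.
CORRECT_SOURCE = {"visual": "seen", "auditory": "heard"}


def classify_trial(studied_modality: str, old_new: str,
                   source: Optional[str]) -> str:
    """Map one studied picture's test responses onto a subsequent-memory bin.

    studied_modality: "visual" or "auditory" (modality of the name at study)
    old_new: "old" or "new"
    source: "seen", "heard" or "unsure"; None when the picture was called new
    """
    if old_new == "new":
        return "item_miss"
    if source == "unsure":
        return "source_unsure"
    if source == CORRECT_SOURCE[studied_modality]:
        return "source_hit"
    return "source_miss"
```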

fMRI Data Acquisition

A Philips Achieva 3T MR scanner (Philips Medical Systems, Andover, MA, USA) was used to acquire both T1-weighted anatomical images (240 × 240 matrix, 1 mm³ voxels, 160 slices, sagittal acquisition, 3D MP-RAGE sequence) and T2*-weighted echoplanar images (EPI) [80 × 79 matrix, 3 × 3 mm in-plane resolution, axial acquisition, flip angle 70°, echo time (TE) 30 ms] with blood-oxygenation level dependent (BOLD) contrast. The data were acquired using a sensitivity encoding (SENSE) reduction factor of 2 on an eight-channel parallel imaging headcoil. Each EPI volume comprised 30 axial slices, 3 mm thick and separated by 1 mm, oriented parallel to the AC-PC plane and positioned to give full coverage of the cerebrum and most of the cerebellum. Data were acquired in two sessions of 260 volumes each, with a repetition time (TR) of 2 s/volume. Volumes within sessions were acquired continuously in ascending sequential order. The first five volumes of each session were discarded to allow equilibration of tissue magnetization.
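Several derived acquisition quantities follow directly from the parameters above. The sketch assumes that slices “separated by 1 mm” means a 1 mm gap between adjacent 3 mm slices; the constant and function names are illustrative.

```python
TR_S = 2.0
VOLUMES_PER_SESSION = 260
DISCARDED = 5               # dummy volumes removed at the start of each session
N_SLICES = 30
SLICE_THICKNESS_MM = 3.0
SLICE_GAP_MM = 1.0          # assumed interslice gap
MATRIX = (80, 79)
IN_PLANE_MM = 3.0


def acquisition_summary():
    """Derived quantities for one EPI session."""
    session_s = VOLUMES_PER_SESSION * TR_S          # total scan time per session
    analysed = VOLUMES_PER_SESSION - DISCARDED      # volumes entering the analysis
    # 30 slices with 29 gaps between them span the slab
    coverage_mm = N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * SLICE_GAP_MM
    fov_mm = tuple(n * IN_PLANE_MM for n in MATRIX)  # in-plane field of view
    return {
        "session_s": session_s,
        "analysed_volumes": analysed,
        "coverage_mm": coverage_mm,
        "fov_mm": fov_mm,
    }


print(acquisition_summary())
```

Under this reading each session lasts 520 s, 255 volumes per session are analysed, and the slab spans 119 mm.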

fMRI Data Analysis

Data were analyzed with Statistical Parametric Mapping (SPM5, Wellcome Department of Cognitive Neurology, London, UK; Friston et al., 1995) implemented under Matlab 2006a (The MathWorks Inc., USA). Functional images were subjected to a two-pass spatial realignment. In the first pass, images were realigned to the first image and a mean image of the sessions was generated; in the second pass, the raw images were realigned to this mean image. The images were then reoriented, spatially normalized to a standard EPI template (based on the Montreal Neurological Institute (MNI) reference brain; Cocosco et al., 1997) and smoothed with an 8 mm FWHM Gaussian kernel. Functional time series were concatenated across sessions.

Statistical analyses of the study-phase data were performed in two stages of a mixed-effects model. In the first stage, neural activity elicited by the study pictures was modeled by delta functions (impulse events) coinciding with the onset of each picture. The ensuing BOLD response was modeled by convolving these delta functions with a canonical hemodynamic response function (HRF) and its temporal and dispersion derivatives (Friston et al., 1998), yielding regressors in a General Linear Model (GLM) that modeled the BOLD response to each event type.
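The convolution step can be illustrated with a double-gamma function of the kind SPM uses for its canonical HRF. The parameters below (peak gamma shape 6, undershoot shape 16, peak-to-undershoot ratio 6, 32 s kernel) are what we understand SPM's defaults to be, but treat them as assumptions. The temporal derivative is approximated by differencing the regressor against a copy with onsets shifted by 1 s, and the dispersion derivative is omitted for brevity. All names are hypothetical.

```python
import math

import numpy as np


def gamma_pdf(t, shape, scale=1.0):
    """Gamma density evaluated elementwise; zero for t <= 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = np.exp((shape - 1) * np.log(t[pos] / scale) - t[pos] / scale
                      - math.lgamma(shape)) / scale
    return out


def canonical_hrf(dt=0.1, length_s=32.0, peak=6.0, undershoot=16.0, ratio=6.0):
    """Double-gamma HRF sampled every dt seconds, normalized to unit sum."""
    t = np.arange(0.0, length_s, dt)
    h = gamma_pdf(t, peak) - gamma_pdf(t, undershoot) / ratio
    return t, h / h.sum()


def event_regressor(onsets_s, n_scans, tr=2.0, dt=0.1):
    """Delta functions at picture onsets convolved with the HRF, sampled once per TR."""
    _, hrf = canonical_hrf(dt=dt)
    n_fine = int(round(n_scans * tr / dt))
    stick = np.zeros(n_fine)
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if idx < n_fine:
            stick[idx] = 1.0
    fine = np.convolve(stick, hrf)[:n_fine]
    return fine[::int(round(tr / dt))]


def temporal_derivative(onsets_s, n_scans, tr=2.0, shift_s=1.0):
    """Finite-difference approximation to the temporal-derivative regressor."""
    shifted = [o + shift_s for o in onsets_s]
    return event_regressor(onsets_s, n_scans, tr) - event_regressor(shifted, n_scans, tr)
```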

For the reasons discussed in the Results section, the principal analyses were confined to four events of interest: studied pictures that were later recognized and correctly endorsed as having been paired with a visual or an auditory word (visual and auditory source hits, respectively), and visual and auditory studied pictures that on the later memory test either received an inaccurate source judgment (incorrect or unsure) or were misclassified as new (visual and auditory source forgotten trials). A fifth category comprised events of no interest, namely incongruent trials, buffer trials, and trials associated with incorrect or omitted study responses. Six regressors modeling movement-related variance (three rigid-body translations and three rotations determined from the realignment stage, concatenated across sessions) and session-specific constant terms modeling the mean over scans in each session were also entered into the design matrix.

For each voxel, the functional time series was high-pass filtered to 1/128 Hz and scaled within session to yield a grand mean of 100 across voxels and scans. Parameters for the events of interest were estimated using the GLM. Nonsphericity of the error covariance was accommodated by an AR(1) model, in which the temporal autocorrelation was estimated by pooling over suprathreshold voxels (Friston et al., 2002). The parameters for each covariate and the hyperparameters governing the error covariance were estimated using Restricted Maximum Likelihood (ReML). Effects of interest were tested using linear contrasts of the parameter estimates. These contrasts were carried forward to a second stage in which subjects were treated as a random effect. Unless otherwise specified, only effects surviving an uncorrected threshold of p < .001 and comprising nine or more contiguous voxels were interpreted. The peak voxels of clusters exhibiting reliable effects are reported in MNI coordinates.
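SPM implements the 1/128 Hz high-pass filter by regressing out a set of low-frequency discrete cosine basis functions. The sketch below follows that idea for a single session. The basis-size formula mirrors our understanding of SPM's `spm_filter`, and the grand-mean scaling is simplified: SPM computes the global mean over in-brain voxels, whereas the plain mean is used here. Function names are hypothetical.

```python
import numpy as np


def dct_basis(n_scans, tr, cutoff_s=128.0):
    """Orthonormal discrete cosine regressors with periods longer than cutoff_s.

    The constant term is excluded because session means are modeled separately.
    """
    k = int(np.floor(2 * n_scans * tr / cutoff_s + 1))
    n = np.arange(n_scans)
    cols = [np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * n + 1) * j / (2 * n_scans))
            for j in range(1, k)]
    return np.column_stack(cols) if cols else np.zeros((n_scans, 0))


def highpass(y, tr, cutoff_s=128.0):
    """Remove the projection of y (scans, or scans x voxels) onto the DCT basis."""
    X0 = dct_basis(y.shape[0], tr, cutoff_s)
    return y - X0 @ (X0.T @ y)


def grand_mean_scale(data, target=100.0):
    """Scale a session (scans x voxels) so its mean over voxels and scans is `target`."""
    return data * (target / data.mean())
```

For one 255-volume session at TR = 2 s, this gives seven cosine regressors.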

Regions of overlap between the outcomes of two contrasts were identified by inclusive masking of the relevant SPMs. When the two contrasts were independent, the statistical significance of the resulting SPM was computed using Fisher's method for estimating the conjoint significance of independent tests (Fisher, 1950; Lazar et al., 2002). In all cases, the SPM to be masked was thresholded at p < .01 (again with a nine-voxel extent threshold) and the SPM that constituted the mask was thresholded at p < .001, giving a conjoint significance level of p < 10⁻⁴. Exclusive masking was used to identify voxels where effects were not shared between two contrasts. Contrasts to be masked were thresholded at p < .001 and the SPM constituting the exclusive mask was thresholded at p < .05 (p < .1 for bi-directional F contrasts). Note that the more liberal the threshold of an exclusive mask, the more conservative the masking procedure.
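Fisher's method combines k independent p-values through the statistic X = −2 Σ ln pᵢ, which follows a chi-square distribution with 2k degrees of freedom under the joint null. For an even number of degrees of freedom the chi-square tail probability has a closed form, so no statistics library is needed. A minimal sketch (the function name is hypothetical): for the two thresholds used here, p = .01 and p = .001, the combined value evaluates to roughly 1.25 × 10⁻⁴, that is, on the order of 10⁻⁴.

```python
import math


def fisher_combined_p(pvalues):
    """Fisher's method for independent tests.

    X = -2 * sum(ln p) is chi-square distributed with 2k df under the joint null;
    for even df the survival function is exp(-X/2) * sum_{i<k} (X/2)**i / i!.
    """
    k = len(pvalues)
    x = -2.0 * sum(math.log(p) for p in pvalues)
    half = x / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(k))


print(fisher_combined_p([0.01, 0.001]))
```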

Results

Behavioral Performance

Study Task

Accuracy of congruency judgments was high (.98 correct in both conditions). Mean reaction time (RT) for judgments on visual words was 1199 ms (SD = 226), compared with 1349 ms (SD = 186) for auditory words. Study RTs segregated according to later memory performance are shown in Table 1. A 2 × 3 ANOVA [factors of modality and later memory (source hit, source miss, item miss)] revealed a main effect of modality (F(1,19) = 105.63, p < .001), but no effect of memory and no memory by modality interaction. This analysis was repeated after collapsing RTs across the three classes of incorrect trial, analogous to the fMRI analyses reported below. A 2 × 2 ANOVA [factors of modality and memory (source hit vs. forgotten)] revealed only a main effect of modality (F(1,19) = 115.39, p < .001).
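In the 2 × 2 RT analysis both factors have two levels and both are within-subject, so each effect has one numerator degree of freedom and its F(1, n − 1) equals the square of a one-sample t computed on a per-subject contrast score. The sketch below illustrates that equivalence on an array of cell means; it is not the software used for the reported ANOVAs, and the function name is hypothetical.

```python
import numpy as np


def within_2x2(cells):
    """F statistics for a fully within-subject 2 x 2 design.

    cells: array of shape (n_subjects, 2, 2) indexed [subject, modality, memory].
    Returns {effect: (F, (df1, df2))}, with F = t**2 of the per-subject contrast.
    """
    cells = np.asarray(cells, dtype=float)
    n = cells.shape[0]
    contrasts = {
        "modality": cells[:, 0, :].mean(axis=1) - cells[:, 1, :].mean(axis=1),
        "memory": cells[:, :, 0].mean(axis=1) - cells[:, :, 1].mean(axis=1),
        "interaction": (cells[:, 0, 0] - cells[:, 0, 1])
                       - (cells[:, 1, 0] - cells[:, 1, 1]),
    }
    out = {}
    for name, d in contrasts.items():
        t = d.mean() / (d.std(ddof=1) / np.sqrt(n))  # one-sample t on the contrast
        out[name] = (t ** 2, (1, n - 1))
    return out
```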

Table 1.

Mean reaction times (ms) for congruency decisions at study segregated by subsequent memory and mean accuracy rates at test (SD in parentheses).

| | Visual | Auditory |
|---|---|---|
| Reaction time (ms) | | |
| Source Hit | 1257 (208) | 1450 (204) |
| Source Unsure | 1257 (288) | 1336 (199) |
| Source Miss | 1337 (229) | 1417 (265) |
| Item Miss | 1207 (215) | 1423 (200) |
| Accuracy | | |
| Source Hit | .51 (.15) | .56 (.15) |
| Source Unsure | .12 (.09) | .14 (.10) |
| Source Miss | .15 (.08) | .11 (.08) |
| Item Miss | .21 (.12) | .20 (.10) |

Retrieval Task

Hit rate collapsed over source accuracy was .79 (SD = .12) for items presented in the visual condition and .80 (SD = .10) for items from the auditory condition, against a false alarm rate of .03. Unsurprisingly, these two hit rates did not differ significantly. Source memory performance conditionalized on items receiving a correct recognition judgment is given in Table 1, where it can be seen that the proportions of correct source judgments for the two modalities are very similar and, as for the hit rates, did not differ significantly (t(19) < 1). An overall measure of source recollection (Psr) was estimated using an index derived from a single high-threshold model (Snodgrass and Corwin, 1988), in which the probability of recollection was computed as Psr = [p(Source Hit) − 0.5 × (1 − p(Source Unsure))] / [1 − 0.5 × (1 − p(Source Unsure))], where ‘Source Hit’ refers to studied items that were recognized and assigned to their correct encoding context, and ‘Source Unsure’ refers to recognized items followed by an “unsure” modality response. The estimate of source recollection was 0.44 (SD = 0.13), significantly greater than the chance value of 0 (t(19) = 15.19, p < .001).
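The Psr index can be written as a small function. In this sketch, proportions are conditionalized on correctly recognized studied items, following the description above. The 0.5 term reflects the assumption that a recognized but non-recollected item receiving a definite modality response is assigned to the correct modality by chance. Per-subject counts are the assumed inputs, and the function names are hypothetical.

```python
def source_recollection(p_source_hit: float, p_source_unsure: float) -> float:
    """Single-high-threshold estimate of source recollection (Psr)."""
    guess = 0.5 * (1.0 - p_source_unsure)  # chance-correct definite source responses
    return (p_source_hit - guess) / (1.0 - guess)


def subject_psr(n_source_hit: int, n_source_unsure: int, n_recognized: int) -> float:
    """Psr for one subject, from counts conditionalized on recognized studied items."""
    return source_recollection(n_source_hit / n_recognized,
                               n_source_unsure / n_recognized)
```

Computing Psr separately for each subject allows the group mean to be tested against the chance value of 0 with a one-sample t-test.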

fMRI Results

The distribution of responses in the test phase meant that, whereas all subjects contributed sufficient study trials to the source correct condition (mean [range] of 45 [17-64] and 45 [22-68] trials for the visual and auditory conditions, respectively), the great majority of subjects had too few (< 12) trials in one or more of the remaining trial types (source miss, source unsure, and item miss) to allow stable estimates of the activity elicited by these trial types. These trials were therefore collapsed to form a single category of ‘source forgotten’ trials containing all study items for which source-specifying information was unavailable. Consequently, the analyses reported below identify the neural correlates of successful visual and auditory source memory but do not speak to the neural correlates of item recognition in the absence of source-specifying information. Mean (range) trial numbers for the forgotten trials were 38 (21-62) and 35 (12-58) for the visual and auditory conditions, respectively.
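The collapsing step and the trial-count criterion can be sketched as follows. This assumes each congruent study trial is tagged with its study modality and its test outcome (source hit, source miss, source unsure, item miss). It also assumes that the 12-trial minimum applies to each of the four resulting cells. Label and function names are illustrative.

```python
from collections import Counter

MIN_TRIALS = 12  # minimum trials per condition for a stable estimate
FORGOTTEN = {"source_miss", "source_unsure", "item_miss"}
CELLS = [(m, c) for m in ("visual", "auditory")
         for c in ("source_hit", "source_forgotten")]


def collapse(label):
    """Merge every outcome lacking correct source memory into 'source_forgotten'."""
    if label == "source_hit":
        return "source_hit"
    if label in FORGOTTEN:
        return "source_forgotten"
    raise ValueError(f"unexpected outcome label: {label!r}")


def condition_counts(trials):
    """trials: iterable of (modality, outcome) pairs for one subject."""
    return Counter((modality, collapse(label)) for modality, label in trials)


def usable(counts, min_trials=MIN_TRIALS):
    """True if every modality x memory cell has enough trials."""
    return all(counts.get(cell, 0) >= min_trials for cell in CELLS)
```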

We first identified regions demonstrating subsequent source memory effects common to the two modality conditions. We then performed hypothesis-driven analyses to assess whether, as predicted, modality-selective subsequent source memory effects (that is, subsequent source memory effects that were reliable for items studied in one of the two encoding conditions, but not the other) overlapped regions demonstrating generic modality effects (see Introduction). Lastly, we identified regions where subsequent source memory effects were selective for only one of the two modalities when the analyses were unconstrained by the generic effects of modality.

Modality-Independent Subsequent Memory Effects

Regions where subsequent source memory effects were insensitive to modality were identified by exclusively masking the main effect of subsequent memory (source hit > forgotten, collapsed over modality) with the bi-directional (F) contrast for the subsequent memory × modality interaction. As detailed in table 2 and illustrated in figure 1, this analysis identified effects in bilateral inferior frontal gyrus (greater in extent on the left), bilateral fusiform and middle occipital cortex, left posterior intraparietal sulcus (IPS), and left anterior MTL in the vicinity of the amygdala.

Table 2.

Regions demonstrating modality-independent and auditorily-selective subsequent memory effects.

| Coordinates (x, y, z) | Z (# voxels) | Region | BA |
|---|---|---|---|
| Modality independent | | | |
| -48 6 33 | 4.83 (84) | L inferior frontal gyrus | 6/9 |
| -21 -3 -21 | 3.72 (9) | L amygdala | |
| -45 -48 -24 | 3.49 (12) | L fusiform gyrus | 37 |
| -48 -69 -15 | 3.96 (86) | L middle occipital gyrus | 19 |
| -24 -75 51 | 4.26 (48) | L posterior intraparietal sulcus | 7/19 |
| 48 9 27 | 3.55 (17) | R inferior frontal gyrus | 6/9 |
| 45 -54 -18 | 4.52 (304) | R fusiform gyrus | 37 |
| 48 -81 0 | 3.53 (25) | R middle occipital gyrus | 19 |
| Auditorily selective | | | |
| -27 -3 -21 | 4.40 (44) | L anterior medial temporal lobe/amygdala | |
| -36 -36 -27 | 3.44 (9) | L cerebellum | |
| 36 -15 -36 | 4.13 (10) | R entorhinal cortex | 20 |
| 15 -15 -24 | 4.34 (59) | R hippocampus | |
| 21 -36 -3 | 3.74 (9) | R posterior hippocampus/parahippocampal gyrus | |

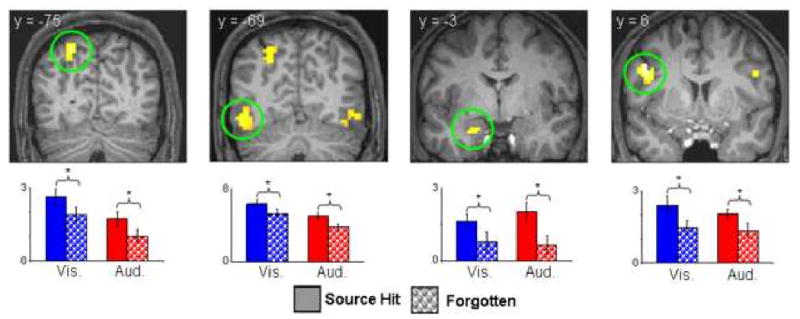

Figure 1.

Modality-independent subsequent source memory effects (displayed at p < .001, nine-voxel extent threshold) projected onto sections of a representative subject's normalized structural image. Bar plots show (left to right) parameter estimates (in arbitrary units) for source hit and forgotten trials at peak voxels in left intraparietal sulcus (-24 -75 51), left middle occipital gyrus (-48 -69 -15), left amygdala (-21 -3 -21), and left inferior frontal gyrus (-48 6 33). * = p < .05.

Overlap between Subsequent Source Memory and Modality Effects

Visual effects: Visually-selective subsequent source memory effects that overlapped with regions selectively responsive on visual vs. auditory study trials were identified by inclusively masking the visual subsequent memory contrast with the main effect of modality (visual > auditory; see Materials and Methods). To ensure that any resulting effects were selective for the visual modality, voxels where analogous auditory subsequent memory effects were also significant were removed by using the auditory subsequent memory contrast as an exclusive mask (p < .05). This procedure identified several clusters (see table 3 and figure 2b), localized predominantly to bilateral intraparietal sulcus and right fusiform cortex.
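The combination of inclusive masking, exclusive masking and the extent threshold used for the visually-selective overlap analysis can be sketched on voxelwise p-value maps. This is an illustration, not SPM's implementation. It assumes three co-registered 3-D p-value arrays and face-plus-edge (18-neighbour) connectivity for defining clusters. It also applies the nine-voxel extent threshold after masking; the text does not specify the order. Function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def extent_threshold(mask, min_voxels=9):
    """Keep connected clusters of at least min_voxels voxels (18-connectivity)."""
    structure = ndimage.generate_binary_structure(3, 2)
    labels, n = ndimage.label(mask, structure=structure)
    if n == 0:
        return np.zeros(mask.shape, dtype=bool)
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_voxels
    keep[0] = False  # label 0 is background
    return keep[labels]


def visual_selective_overlap(p_vis_sme, p_vis_gt_aud, p_aud_sme, min_voxels=9):
    """Voxels with a visual SME inside visually-selective regions, excluding auditory SMEs."""
    target = p_vis_sme < 0.01         # visual subsequent memory contrast (SPM to be masked)
    inclusive = p_vis_gt_aud < 0.001  # main effect of modality, visual > auditory
    exclusive = p_aud_sme < 0.05      # auditory subsequent memory contrast
    return extent_threshold(target & inclusive & ~exclusive, min_voxels)
```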

Table 3.

Regions where visually- and auditorily-selective subsequent memory effects overlapped with global modality effects

| Coordinates (x, y, z) | Z (# voxels) | Region | BA |
|---|---|---|---|
| Visual effects | | | |
| -27 -72 54 | 3.90 (108) | L intraparietal sulcus | 7 |
| 27 -54 45 | 3.46 (53) | R intraparietal sulcus | 7 |
| 36 -63 -24 | 3.49 (20) | R fusiform gyrus | 19 |
| 30 -63 -12 | 3.27 (23) | R fusiform gyrus | 37 |
| 36 -84 0 | 2.73 (19) | R middle occipital gyrus | 19 |
| Auditory effects | | | |
| 60 -9 -15 | 3.08 (25) | R middle superior temporal sulcus | 21 |
| 45 -42 -3 | 2.69 (9) | R posterior temporal lobe (white matter) | |

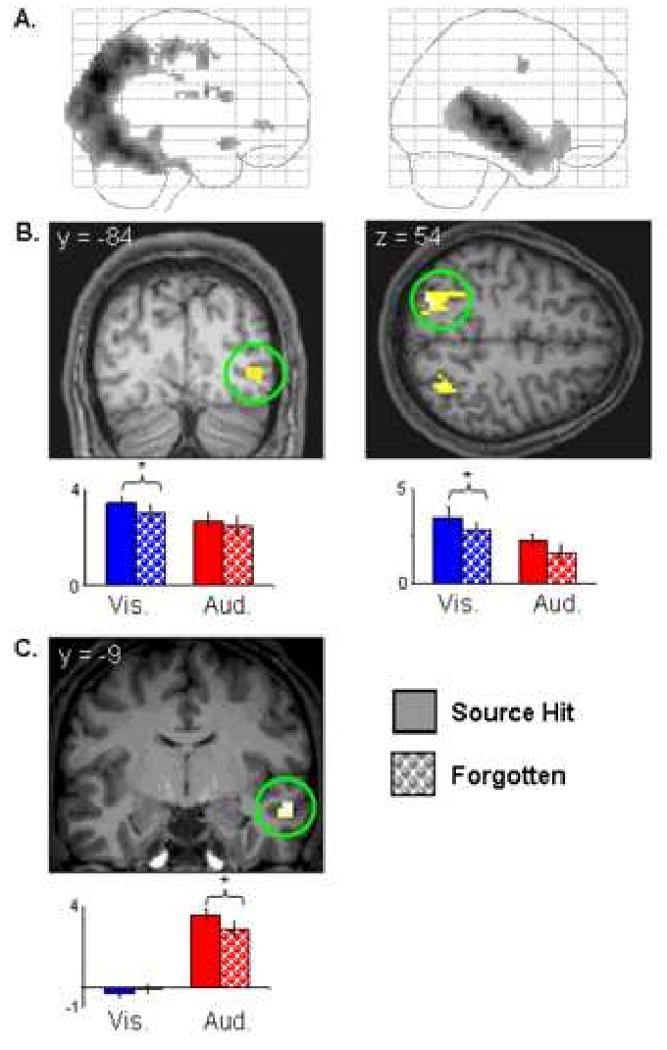

Figure 2.

A: Maximum intensity projections showing visually- (left) and auditorily- (right) selective regions. B: Upper: Visually-selective subsequent memory effects (p < .01) that overlap regions demonstrating visually-selective activity, projected onto sections of a representative subject's normalized structural image. Lower: Peak parameter estimates (in arbitrary units) for source hit and forgotten trials at peak voxels in (left to right) right middle occipital gyrus (36 -84 0) and left intraparietal sulcus (-27 -72 54). C: Upper: Auditorily-selective subsequent memory effects (p < .01) that overlap auditorily-selective regions, projected onto sections of a representative subject's normalized structural image. Lower: Peak parameter estimates (in arbitrary units) for source hit and forgotten trials at the peak voxel in right middle superior temporal sulcus (60 -9 -15). * = p < .05. All subsequent memory effects displayed at p < .001, nine-voxel extent threshold.

Auditory effects: An analogous approach was used to identify overlap between auditory subsequent memory effects and auditorily-selective regions. A cluster was identified in right middle superior temporal sulcus (see table 3 and figure 2c), along with a small cluster localized to white matter adjacent to posterior STS (see table 3).

To determine whether, in each of the regions exhibiting modality-selective effects, the subsequent source memory effects elicited by visual and auditory study trials differed in magnitude, the peak parameter estimates representing the visual and auditory subsequent memory effects in each region were directly contrasted (equivalent to the interaction term of a 2 (source correct vs. incorrect) × 2 (auditory vs. visual) design). In one region associated with subsequent visual source memory, right fusiform cortex (30 -63 -12), the visual effects were reliably greater than the auditory effects (t(19) = 1.93, p<.05, 1-tailed; see figure 2b). A reliable difference in the opposite direction was evident for the right STS region that exhibited an auditorily-selective subsequent source memory effect (t(19) = 4.37, p<.001, 1-tailed; see figure 2c).
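Because each subject contributes one peak parameter estimate per condition, one way to compute the interaction contrast described above is a one-sample t-test on each subject's difference of subsequent memory effects, (visual hit - visual forgotten) - (auditory hit - auditory forgotten), with N - 1 degrees of freedom. The sketch below uses only the Python standard library and assumes the per-subject estimates are available as equal-length lists in the same subject order. The function names are hypothetical, and the hard-coded one-tailed critical value (1.729) is valid only for df = 19, that is, N = 20.

```python
import math
from statistics import mean, stdev

# One-tailed critical value of t for alpha = .05 with 19 degrees of freedom.
T_CRIT_05_ONE_TAILED_DF19 = 1.729


def interaction_t(vis_hit, vis_forgotten, aud_hit, aud_forgotten):
    """One-sample t on per-subject (visual SME - auditory SME).

    Each argument is a list of per-subject peak parameter estimates,
    in the same subject order. Returns (t, df).
    """
    diffs = [
        (vh - vf) - (ah - af)
        for vh, vf, ah, af in zip(vis_hit, vis_forgotten, aud_hit, aud_forgotten)
    ]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two subjects")
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1


def visual_exceeds_auditory(t, df):
    """Directional (visual > auditory) test at alpha = .05, one-tailed."""
    if df != 19:
        raise ValueError("critical value is hard-coded for df = 19 only")
    return t > T_CRIT_05_ONE_TAILED_DF19


# Toy data for four hypothetical subjects (not data from the study).
t, df = interaction_t([1, 2, 3, 4], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0])
print(round(t, 3), df)  # prints 3.873 3
```

For the opposite (auditory > visual) prediction, the sign of the difference is reversed, for example by comparing -t against the same critical value; with df = 19, both reported values (t = 1.93 and t = 4.37) exceed 1.729.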

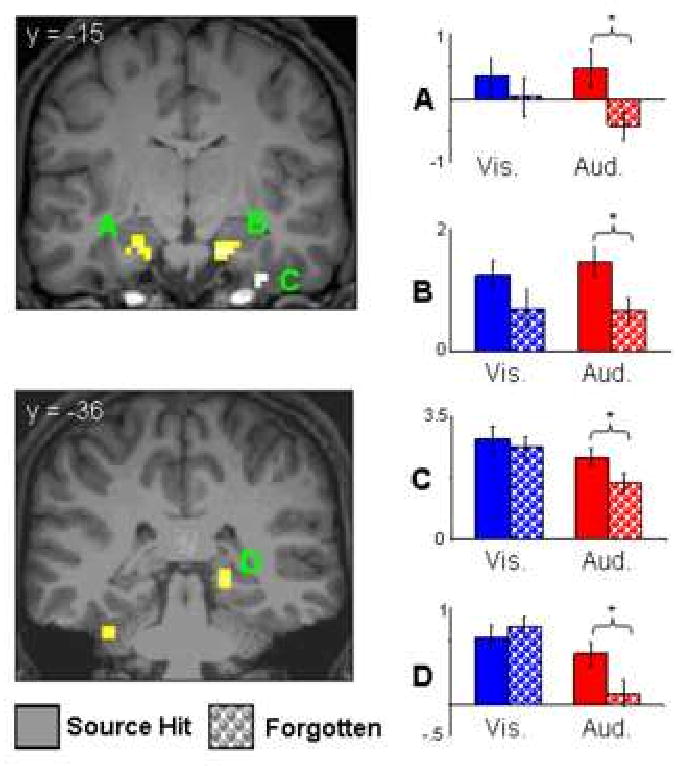

Modality-Selective Subsequent Memory Effects Unconstrained by Global Modality Effects

Visually-selective subsequent source memory effects were identified by the contrast between visual source hits and visual misses (p<.001), exclusively masked by the analogous contrast for the auditory condition (p<.05). This procedure revealed a single effect in right IPS (9 voxels, 27 -54 45, peak Z = 3.46, BA 7) that overlapped one of the regions identified in the analysis of visually-selective effects described above. The analogous procedure identified five clusters where subsequent source memory effects were auditorily selective (table 2, figure 3). Three clusters were located in bilateral MTL in the vicinity of the hippocampus and amygdala; the remaining two were located in right entorhinal cortex and left cerebellum. With the exception of the left anterior MTL cluster (-27 -3 -21), contrasts of the peak parameter estimates showed that in each case the auditory subsequent memory effects were significantly larger than the visual effects (t(19) = 1.78 to 3.79, ps<.05 to <.001, 1-tailed).

Figure 3.

Auditorily-selective subsequent source memory effects unconstrained by the global modality effect (displayed at p<.001, nine voxel extent threshold) projected onto sections of a representative subject's normalized structural image. Bar plots (from top to bottom) illustrate peak parameter estimates (in arbitrary units) for source hit and forgotten trials at peak voxels in left amygdala (A; -27 -3 -21), right hippocampus (B; 15 -15 -24), right entorhinal cortex (C; 36 -15 -36), and right posterior hippocampus/parahippocampal gyrus (D; 21 -36 -3). * = p<.05

Discussion

We contrasted the neural correlates of the incidental encoding of associations between pictures and the visual or auditory contextual information carried by a co-presented word. In addition to regions where subsequent source memory effects were common to the two types of association, we identified other regions where source effects were modality-selective, that is, reliable for only one of the two modalities. As predicted, some of these modality-selective effects were evident in regions that also responded preferentially to the corresponding class of study trials. To the best of our knowledge, the present findings constitute the first direct evidence that successful encoding of a contextual feature of a study episode is associated with enhanced activity in cortical regions selectively engaged during its online processing (cf. Uncapher et al., 2006; Uncapher & Rugg, in press).

Behavioral Findings

Study RTs were significantly longer for auditory than for visual trials, an effect that likely reflects the more temporally extended delivery of the auditory information. Crucially, however, there was no evidence of a subsequent memory effect in RTs or judgment accuracy for either class of study trial. Thus, neither the visual nor the auditory fMRI subsequent memory effects discussed below can be attributed to differences in study performance between later remembered and later forgotten trials.

fMRI Findings

A number of regions were identified where activity was predictive of accurate source memory for both visual and auditory trials, including bilateral fusiform cortex, bilateral dorsal IFG, and left anterior MTL in the vicinity of the amygdala. Subsequent memory effects predictive of successful episodic retrieval have been identified previously in all of these regions (see Cansino et al., 2002; Ranganath et al., 2004; and Gold et al., 2006 for examples of prior fusiform, dorsal IFG and amygdala effects, respectively). We assume that these modality-independent effects reflect the successful encoding of episodic information (such as the identity of the picture) that was independent of the modality of the associated word, and to which modality-specific information was bound. Arguably, such effects are to be expected, since failure to incorporate information about picture identity into the memory representation of the study episode would likely lead to failure on the subsequent memory test. In addition, some of these modality-independent effects (notably, those in posterior prefrontal cortex and IPS, components of the ‘dorsal frontoparietal attentional network’; Corbetta & Shulman, 2002) may reflect the benefit to modality memory of efficiently redeploying attention from the centrally presented picture to the later-presented word.

One seeming inconsistency with prior findings is the absence of modality-independent subsequent source memory effects in the hippocampus (cf. Davachi et al., 2003; Ranganath et al., 2004; Uncapher & Rugg, in press). This inconsistency may, however, be more apparent than real. When the threshold of the contrast employed to identify modality-independent effects was lowered to p<.01, the right anterior MTL effect was joined by a more posterior cluster in right anterior hippocampus (27 -12 -21, Z = 2.97). This effect remained significant (p<.05) after a small volume correction using a 5mm radius sphere centered on the right hippocampal subsequent source memory effect reported by Uncapher et al. (2006; 27 -15 -15).
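The search volume for a small volume correction of this kind is the set of voxels falling inside the sphere. The sketch below only enumerates that search volume; it does not implement the corrected statistic itself, which in SPM-style analyses, for example, is derived from random field theory over the restricted volume. It assumes, hypothetically, a 3mm isotropic voxel grid passing through the sphere's center; the function name `sphere_voxels` is likewise illustrative.

```python
def sphere_voxels(center, radius_mm, voxel_mm=3):
    """Return grid coordinates (mm) lying within radius_mm of center.

    Assumes an isotropic grid with spacing voxel_mm that passes through center.
    """
    cx, cy, cz = center
    max_step = int(radius_mm // voxel_mm)  # largest whole-voxel offset along one axis
    steps = range(-max_step, max_step + 1)
    voxels = []
    for i in steps:
        for j in steps:
            for k in steps:
                dist_sq = (i * voxel_mm) ** 2 + (j * voxel_mm) ** 2 + (k * voxel_mm) ** 2
                if dist_sq <= radius_mm ** 2:  # inside or on the sphere surface
                    voxels.append((cx + i * voxel_mm, cy + j * voxel_mm, cz + k * voxel_mm))
    return voxels


# 5mm sphere centered on the prior hippocampal peak quoted in the text.
roi = sphere_voxels((27, -15, -15), radius_mm=5)
print(len(roi))  # prints 19
```

Under these assumptions the sphere contains only 19 voxels (the center, its 6 face neighbors and its 12 edge neighbors; the 8 corner neighbors lie about 5.2mm away), which illustrates how small the corrected search volume is.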

Analysis of modality-selective subsequent memory effects focused on the prediction that source effects should overlap with regions that responded preferentially to the corresponding class of study trials (see Introduction). This prediction was confirmed for both context modalities. Visually-selective subsequent source memory effects were evident in several posterior regions, whereas an auditorily-selective source memory effect was identified in right mid-STS (figure 2). It should be noted, however, that only one of the visually-selective effects significantly exceeded the corresponding (albeit non-significant) auditory effect, and then only at a modest level of statistical significance. Thus, the evidence for overlap between modality-selective subsequent memory effects and modality-sensitive cortical activity is arguably stronger for the auditory than for the visual condition. That said, these results substantially extend our prior finding that the cortical regions engaged during episodic encoding differ according to the nature of the accompanying contextual information (Uncapher et al., 2006).

The findings suggest that successful encoding of the modality of the study words was associated with enhanced processing in only a subset of the cortical regions engaged by each class of study trial. The localization of the visual subsequent source memory effects to right ventral fusiform cortex and bilateral IPS suggests that memory for visual contextual information was facilitated when the context word elicited a strong visual representation of its referent (the posterior IPS has been shown to represent object-level information; Konen & Kastner, 2008). In addition, because successful encoding in the visual condition required a shift of visuospatial attention (from the picture to the word presented below it), the visually-selective IPS subsequent memory effects might, like the modality-independent effects discussed above, reflect the mnemonic benefit of efficient attentional reorienting. Analogously, the localization of the auditory source memory effect to right mid-STS, a region selectively activated during perception of speech relative to other auditory input (Scott et al., 2006; Hickok & Poeppel, 2007; Hein & Knight, 2008), suggests that memory for auditory information benefited when processing of the phonological attributes of the context word was emphasized.

In addition to the modality-selective subsequent source memory effects that overlapped the main effects of modality, further source effects were identified in analyses unconstrained by these generic modality effects. Of most interest is the finding of an auditorily-selective effect in bilateral MTL, including the hippocampus (figure 3). This finding is consistent with the numerous prior reports of hippocampal subsequent source memory effects (see above and Introduction). The question arises, however, why memory for auditory information should have been associated with more extensive hippocampal effects than memory for visual contexts. Given the equivalent levels of visual and auditory source memory on the later memory test, this finding is unlikely to reflect differences in the difficulty or efficacy of visual vs. auditory encoding. An intriguing possibility is that successful encoding of across-modality item-context associations places more demands on hippocampal resources than does encoding of within-modality associations, consistent perhaps with the proposal that ‘across-domain’ associations are more dependent upon the hippocampus than are ‘within-domain’ associations (Mayes et al., 2007). Alternatively, the findings might reflect a simple modality effect, indicating that memory for auditory information places more demands on hippocampally-supported encoding processes than does memory for visual information (perhaps because the temporally extended auditory items imposed heavier or more sustained attentional demands than did the visual words). The lack of prior studies investigating the neural correlates of the encoding of auditory episodic information (though see Peters et al., 2007; Poppenk et al., 2008) means that resolution of this issue will have to await further research.

In conclusion, the present findings extend prior research on the neural correlates of episodic encoding in three principal ways: they provide direct evidence that encoding of a contextual feature is associated with enhanced activity in cortical regions engaged during its on-line processing; they demonstrate that such feature-selective encoding effects extend to the auditory modality; and they suggest that encoding of across-modality associations, or, perhaps, episodes that contain auditory contextual information, places greater demands on the hippocampus than does the encoding of exclusively visual episodic information.

Acknowledgments

This research was supported by the National Institute of Mental Health (NIH 1R01MH074528). The authors thank the members of the UCI Research Imaging Center for their assistance with fMRI data acquisition.

Footnotes

In an extension of these proposals (Rugg et al., 2008; Uncapher et al., 2006; Uncapher & Rugg, in press; see also Moscovitch, 1992), the hippocampus ‘captures’ only the most behaviorally relevant or salient aspects of the activity elicited by a study event within different cortical regions, rather than representing the entirety of the activity.


References

- Alvarez P, Squire LR. Memory consolidation and the medial temporal lobe: a simple network model. Proceedings of the National Academy of Sciences USA. 1994;91:7041–7045. doi: 10.1073/pnas.91.15.7041.

- Brewer JB, Zhao Z, Desmond JE, Glover GH, Gabrieli JDE. Making memories: brain activity that predicts how well visual experience will be remembered. Science. 1998;281:1185–1187. doi: 10.1126/science.281.5380.1185.

- Cansino S, Maquet P, Dolan RJ, Rugg MD. Brain activity underlying encoding and retrieval of source memory. Cerebral Cortex. 2002;12:1048–1056. doi: 10.1093/cercor/12.10.1048.

- Cocosco CA, Kollokian V, Kwan RS, Evans AC. BrainWeb: online interface to a 3D MRI simulated brain database. Proceedings of the 3rd International Conference on Functional Mapping of the Human Brain; Copenhagen, Denmark. 1997. p. S245.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:201–215. doi: 10.1038/nrn755.

- Davachi L, Mitchell JP, Wagner AD. Multiple routes to memory: distinct medial temporal lobe processes build item and source memories. Proceedings of the National Academy of Sciences USA. 2003;100:2157–2162. doi: 10.1073/pnas.0337195100.

- Diana RA, Yonelinas AP, Ranganath C. Imaging recollection and familiarity in the medial temporal lobe: a three-component model. Trends in Cognitive Sciences. 2007;11:379–386. doi: 10.1016/j.tics.2007.08.001.

- Fisher RA. Statistical methods for research workers. 11th ed. London: Oliver & Boyd; 1950.

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R. Event-related fMRI: characterizing differential responses. NeuroImage. 1998;7:30–40. doi: 10.1006/nimg.1997.0306.

- Friston KJ, Penny W, Phillips C, Kiebel S, Hinton G, Ashburner J. Classical and Bayesian inference in neuroimaging: theory. NeuroImage. 2002;16:465–483. doi: 10.1006/nimg.2002.1090.

- Friston K, Holmes AP, Worsley K, Poline J, Frith C, Frackowiak R. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995:189–210.

- Gold JJ, Smith CN, Bayley PJ, Shrager Y, Brewer JB, Stark CEL, et al. Item memory, source memory, and the medial temporal lobe: concordant findings from fMRI and memory-impaired patients. Proceedings of the National Academy of Sciences USA. 2006;103:9351–9356. doi: 10.1073/pnas.0602716103.

- Gottfried JA, Smith APR, Rugg MD, Dolan RJ. Remembrance of odors past: human olfactory cortex in cross-modal recognition memory. Neuron. 2004;42:687–695. doi: 10.1016/s0896-6273(04)00270-3.

- Guillot A, Collet C, Nguyen VA, Malouin F, Richards C, Doyon J. Brain activity during visual versus kinesthetic imagery: an fMRI study. Human Brain Mapping. 2008. doi: 10.1002/hbm.20658.

- Hein G, Knight RT. Superior Temporal Sulcus-It's My Area: Or Is It? Journal of Cognitive Neuroscience. 2008;20:2125–2136. doi: 10.1162/jocn.2008.20148.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8:393–402. doi: 10.1038/nrn2113.

- Johnson JD, Rugg MD. Recollection and the reinstatement of encoding-related cortical activity. Cerebral Cortex. 2007;17:2507–2515. doi: 10.1093/cercor/bhl156.

- Josephs O, Henson RN. Event-related functional magnetic resonance imaging: modelling, inference and optimization. Philosophical Transactions of the Royal Society B: Biological Sciences. 1999;354:1215–1228. doi: 10.1098/rstb.1999.0475.

- Kahn I, Davachi L, Wagner AD. Functional-neuroanatomic correlates of recollection: implications for models of recognition memory. Journal of Neuroscience. 2004;24:4172–4180. doi: 10.1523/JNEUROSCI.0624-04.2004.

- Kensinger EA, Schacter DL. Amygdala activity is associated with the successful encoding of item, but not source, information for positive and negative stimuli. Journal of Neuroscience. 2006;26:2564–2570. doi: 10.1523/JNEUROSCI.5241-05.2006.

- Khader P, Burke M, Bien S, Ranganath C, Rösler F. Content-specific activation during associative long-term memory retrieval. NeuroImage. 2005;27:805–816. doi: 10.1016/j.neuroimage.2005.05.006.

- Kirwan CB, Wixted JT, Squire LR. Activity in the medial temporal lobe predicts memory strength, whereas activity in the prefrontal cortex predicts recollection. Journal of Neuroscience. 2008;28:10541–10548. doi: 10.1523/JNEUROSCI.3456-08.2008.

- Konen CS, Kastner S. Two hierarchically organized neural systems for object information in human visual cortex. Nature Neuroscience. 2008;11:224–231. doi: 10.1038/nn2036.

- Kucera H, Francis W. Computational analysis of present-day American English. Providence, RI: Brown University Press; 1967.

- Lazar NA, Luna B, Sweeney JA, Eddy WF. Combining brains: a survey of methods for statistical pooling of information. NeuroImage. 2002;16:538–550. doi: 10.1006/nimg.2002.1107.

- Mayes A, Montaldi D, Migo E. Associative memory and the medial temporal lobes. Trends in Cognitive Sciences. 2007;11:126–135. doi: 10.1016/j.tics.2006.12.003.

- Mitchell JP, Macrae CN, Banaji MR. Encoding-specific effects of social cognition on the neural correlates of subsequent memory. Journal of Neuroscience. 2004;24:4912–4917. doi: 10.1523/JNEUROSCI.0481-04.2004.

- Moscovitch M. Memory and working with memory: a component process model based on modules and central systems. Journal of Cognitive Neuroscience. 1992;4:257–267. doi: 10.1162/jocn.1992.4.3.257.

- Newman SD, Klatzky RL, Lederman SJ, Just MA. Imagining material versus geometric properties of objects: an fMRI study. Brain Research: Cognitive Brain Research. 2005;23:235–246. doi: 10.1016/j.cogbrainres.2004.10.020.

- Norman KA, O'Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: a complementary-learning-systems approach. Psychological Review. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611.

- Nyberg L, Habib R, McIntosh AR, Tulving E. Reactivation of encoding-related brain activity during memory retrieval. Proceedings of the National Academy of Sciences USA. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120.

- Nyberg L, Petersson KM, Nilsson LG, Sandblom J, Aberg C, Ingvar M. Reactivation of motor brain areas during explicit memory for actions. NeuroImage. 2001;14:521–528. doi: 10.1006/nimg.2001.0801.

- Otten LJ, Rugg MD. Task-dependency of the neural correlates of episodic encoding as measured by fMRI. Cerebral Cortex. 2001;11:1150–1160. doi: 10.1093/cercor/11.12.1150.

- Otten LJ, Henson RNA, Rugg MD. State-related and item-related neural correlates of successful memory encoding. Nature Neuroscience. 2002;5:1339–1344. doi: 10.1038/nn967.

- Park H, Uncapher MR, Rugg MD. Effects of study task on the neural correlates of source encoding. Learning & Memory. 2008;15:417–425. doi: 10.1101/lm.878908.

- Persson J, Nyberg L. Conjunction analyses of cortical activations common to encoding and retrieval. Microscopy Research and Technique. 2000;51:39–44. doi: 10.1002/1097-0029(20001001)51:1<39::AID-JEMT4>3.0.CO;2-Q.

- Peters J, Suchan B, Köster O, Daum I. Domain-specific retrieval of source information in the medial temporal lobe. European Journal of Neuroscience. 2007;26:1333–1343. doi: 10.1111/j.1460-9568.2007.05752.x.

- Poldrack RA. Can cognitive processes be inferred from neuroimaging data? Trends in Cognitive Sciences. 2006;10:59–63. doi: 10.1016/j.tics.2005.12.004.

- Poppenk J, Walia G, McIntosh AR, Joanisse MF, Kohler S. Why is the meaning of a sentence better remembered than its form? An fMRI study on the role of novelty-encoding processes. Hippocampus. 2008;18:909–918. doi: 10.1002/hipo.20453.

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D'Esposito M. Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia. 2004;42:2–13. doi: 10.1016/j.neuropsychologia.2003.07.006.

- Rolls ET. Memory systems in the brain. Annual Review of Psychology. 2000;51:599–630. doi: 10.1146/annurev.psych.51.1.599.

- Rugg MD, Johnson JD, Park H, Uncapher MR. Encoding-retrieval overlap in human episodic memory: a functional neuroimaging perspective. Progress in Brain Research. 2008;169:339–352. doi: 10.1016/S0079-6123(07)00021-0.

- Rugg MD, Otten LJ, Henson RNA. The neural basis of episodic memory: evidence from functional neuroimaging. Philosophical Transactions of the Royal Society B: Biological Sciences. 2002;357:1097–1110. doi: 10.1098/rstb.2002.1102.

- Scott SK, Rosen S, Lang H, Wise RJS. Neural correlates of intelligibility in speech investigated with noise vocoded speech: a positron emission tomography study. Journal of the Acoustical Society of America. 2006;120:1075–1083. doi: 10.1121/1.2216725.

- Shastri L. Episodic memory and cortico-hippocampal interactions. Trends in Cognitive Sciences. 2002;6:162–168. doi: 10.1016/s1364-6613(02)01868-5.

- Snodgrass J, Corwin J. Perceptual identification thresholds for 150 fragmented pictures from the Snodgrass and Vanderwart picture set. Perceptual and Motor Skills. 1988;67:3–36. doi: 10.2466/pms.1988.67.1.3.

- Staresina BP, Davachi L. Differential encoding mechanisms for subsequent associative recognition and free recall. Journal of Neuroscience. 2006;26:9162–9172. doi: 10.1523/JNEUROSCI.2877-06.2006.

- Staresina BP, Davachi L. Selective and shared contributions of the hippocampus and perirhinal cortex to episodic item and associative encoding. Journal of Cognitive Neuroscience. 2008;20:1478–1489. doi: 10.1162/jocn.2008.20104.

- Tulving E. Elements of episodic memory. Oxford: Oxford University Press; 1983.

- Uncapher MR, Otten LJ, Rugg MD. Episodic encoding is more than the sum of its parts: an fMRI investigation of multifeatural contextual encoding. Neuron. 2006;52:547–556. doi: 10.1016/j.neuron.2006.08.011.

- Uncapher MR, Rugg MD. Selecting for memory? The influence of selective attention on the mnemonic binding of contextual information. Journal of Neuroscience. In press. doi: 10.1523/JNEUROSCI.1043-09.2009.

- Vaidya CJ, Zhao M, Desmond JE, Gabrieli JDE. Evidence for cortical encoding specificity in episodic memory: memory-induced re-activation of picture processing areas. Neuropsychologia. 2002;40:2136–2143. doi: 10.1016/s0028-3932(02)00053-2.

- Wagner AD, Schacter DL, Rotte M, Koutstaal W, Maril A, Dale AM, Rosen B, Buckner RL. Building memories: remembering and forgetting of verbal experiences as predicted by brain activity. Science. 1998;281:1188–1191. doi: 10.1126/science.281.5380.1188.

- Wheeler ME, Buckner RL. Functional dissociation among components of remembering: control, perceived oldness, and content. Journal of Neuroscience. 2003;23:3869–3880. doi: 10.1523/JNEUROSCI.23-09-03869.2003.

- Wheeler ME, Buckner RL. Functional-anatomic correlates of remembering and knowing. NeuroImage. 2004;21:1337–1349. doi: 10.1016/j.neuroimage.2003.11.001.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proceedings of the National Academy of Sciences USA. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Wheeler ME, Shulman GL, Buckner RL, Miezin FM, Velanova K, Petersen SE. Evidence for separate perceptual reactivation and search processes during remembering. Cerebral Cortex. 2006;16:949–959. doi: 10.1093/cercor/bhj037.

- Woodruff CC, Johnson JD, Uncapher MR, Rugg MD. Content-specificity of the neural correlates of recollection. Neuropsychologia. 2005;43:1022–1032. doi: 10.1016/j.neuropsychologia.2004.10.013.