Short abstract

To standardize oncology clinical practice and improve patient outcomes, multiple organizations have developed cancer-specific metrics on the basis of a systematic background review, expert guidance, and fundamental elements of cancer care—staging and treatment.

Abstract

Purpose:

To describe the extent to which poor documentation hinders assessment of quality of care provided to patients with colorectal cancer (CRC) in the community and academic oncology settings.

Methods:

This was a retrospective review of 499 medical records of patients obtained from 13 community and academic oncology practices in the southeastern United States. Data on diagnosis, TNM stage, any stage documentation, age, pathology report information, clinical care received, and care dates were abstracted. Descriptive statistics were used, and proportions of evaluable charts in which documented care conformed to accepted national metrics were calculated.

Results:

Of the 499 patients, 43% were women, and 40% were men, whereas sex was not identified in 17%; 64% had colon cancer, and 21% had rectal cancer; 54% were white, and 17% were African American, whereas data on race or ethnicity were missing or unknown in 26%; mean age at diagnosis was 61 years (standard deviation, 14 years). Limited data availability hindered assessment of quality; of the 499 eligible patients, only 61 could be included in the full analysis. Only 86% of the 499 medical records confirmed diagnosis; 38% provided TNM stage (stage documentation improved to 73% when any clinical notation of stage was accepted); 71% documented age. Pathology reports were missing in 34% of medical records. When chemotherapy was initiated, more than 10% of medical records did not report dates of administration. When electronic medical records were used, reporting improved, but documentation problems persisted. In medical records containing data sufficient to evaluate conformance to CRC metrics, conformance was low (50% to 70%).

Conclusion:

Assessment of quality of CRC care is impeded by the absence of sufficient documentation of data elements required to calculate performance.

Introduction

Colorectal cancer (CRC), the third most common cancer in the United States,1 incurs a substantial burden of illness. Continued high mortality rates in CRC seem to be related to late-stage diagnosis; early diagnosis and treatment are critical to improving patient outcomes.2

In an effort to standardize oncology clinical practice and improve patient outcomes, multiple organizations, including ASCO, the National Comprehensive Cancer Network (NCCN), the National Cancer Institute, and the National Quality Forum (NQF), have developed cancer-specific metrics on the basis of a systematic background review and expert guidance. In August 2006, ASCO and the NCCN released an initial set of quality metrics for CRC and breast cancer. Using separate processes and methodologies, the Commission on Cancer of the American College of Surgeons (ACoS) developed a similar set of measures for CRC and breast cancer and submitted them to the NQF for endorsement as part of the NQF Cancer Project. The NQF, ACoS, and ASCO/NCCN measures were then synchronized and presented publicly in April 2007. Four CRC-related metrics (two for rectal cancer, one for colon cancer, and one for CRC overall) were specified.

The metrics were determined on the basis of the most fundamental elements of cancer care—staging and treatment. In surgical cases, a first measure of appropriate CRC management is removal and examination of a number of lymph nodes sufficient to determine stage of disease; international consensus holds that at least 12 nodes should be removed for adequate staging of tumors.3 Identification of tumor stage serves as the basis for diagnosis, treatment decisions, and prognosis. Adequate documentation of staging thus represents a second litmus test for quality of CRC care. Once stage is established, chemotherapy for stage III colon cancer, or chemotherapy and radiation for stage II and III rectal cancer, should follow, provided that the patient is an appropriate candidate.

As third-party payers and consumers seek to ensure best care, demonstration of quality using predefined metrics will become increasingly important. In this article, we designate the consensus national metrics as CRC1, CRC2, CRC3, and CRC4 (Table 1). Our study explored whether academic and community oncology practices are currently prepared to report on these CRC metrics; specifically, we evaluated whether medical records contain enough information to allow assessment of quality of care. We then sought to assess the quality of care provided to patients with CRC, measured as the extent to which care documented in their medical records complied with the CRC quality metrics articulated by ASCO and the NCCN in April 2007.

Table 1.

Colorectal Cancer Metrics and Method of Calculation

| Metric | Numerator | Denominator | Notes |
|---|---|---|---|
| CRC1: If a patient has stage II or III colon or rectal cancer, has not received preoperative chemotherapy or radiation therapy, and has undergone curative colon or rectal surgery, a minimum of 12 lymph nodes should be removed and examined | All patients in the denominator who had at least 12 lymph nodes examined | Patients with stage II or III CRC who were between ages 19 and 79 years and who had undergone surgical excision | Patients for whom number of lymph nodes examined was not documented were considered to have not met this guideline |
| CRC2: If a patient is younger than age 80 years and has stage III colon cancer, the patient should receive adjuvant chemotherapy within 4 months of diagnosis | All patients in the denominator who had a record of adjuvant chemotherapy within 4 months of diagnosis | Patients with stage III colon cancer who were between ages 19 and 79 years and who had undergone surgical excision | Patients for whom diagnosis or chemotherapy date was missing were not considered to have met this guideline; if patients received both neoadjuvant and adjuvant chemotherapy, they were considered to have met the guideline if at least one of those therapies was initiated within 4 months of diagnosis |
| CRC3: If a patient is younger than age 80 years and has stage II or III rectal cancer, the patient should receive postoperative adjuvant chemotherapy within 9 months of diagnosis | All patients in the denominator who had a record of adjuvant chemotherapy within 9 months of diagnosis | Patients with stage II or III rectal cancer who were between ages 19 and 79 years and who had undergone surgical excision | Patients for whom diagnosis or adjuvant chemotherapy date was missing were not considered to have met this guideline |
| CRC4: If a patient is younger than age 80 years and has stage II or III rectal cancer, the patient should receive pelvic radiation therapy either before or after surgical excision | All patients in the denominator who had a record of radiotherapy either before or after surgery | Patients with stage II or III rectal cancer who were between ages 19 and 79 years and who had undergone surgical excision | Missing dates were not an issue for this measure because time frame was not specific; any indication that the patient received radiation was taken as indication of having met the guideline |

Abbreviation: CRC, colorectal cancer.
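To make the numerator and denominator rules in Table 1 concrete, the following Python sketch applies them to individual patient records. It is a minimal illustration under stated assumptions, not the study's abstraction software: the `Patient` fields and function names are hypothetical, "within N months" is read as calendar months counted from the diagnosis date, the 19-to-79-year age range is applied to every metric, and the CRC1 exclusion for preoperative therapy (which appears in the metric wording but not in the denominator column) is not modeled. Missing lymph node counts or dates count as not meeting the guideline, as the Table 1 notes specify.

```python
import calendar
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Patient:
    # Hypothetical abstraction fields; None means "not documented in the chart".
    site: str                                  # "colon" or "rectal"
    stage: Optional[str]                       # "I", "II", "III", "IV", or None
    age: Optional[int]                         # age at diagnosis, years
    had_surgery: bool
    nodes_examined: Optional[int] = None
    diagnosis_date: Optional[date] = None
    neoadjuvant_chemo_date: Optional[date] = None
    adjuvant_chemo_date: Optional[date] = None
    received_radiation: bool = False           # any pre- or postoperative radiation


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def within_months(start: Optional[date], end: Optional[date], months: int) -> bool:
    # A missing diagnosis or treatment date counts as not meeting the guideline.
    if start is None or end is None:
        return False
    return start <= end <= add_months(start, months)


def in_denominator(p: Patient, sites: set, stages: set) -> bool:
    return (p.site in sites and p.stage in stages and p.age is not None
            and 19 <= p.age <= 79 and p.had_surgery)


def crc1(p: Patient) -> Optional[bool]:
    if not in_denominator(p, {"colon", "rectal"}, {"II", "III"}):
        return None
    # Undocumented node counts are treated as not meeting the 12-node minimum.
    return p.nodes_examined is not None and p.nodes_examined >= 12


def crc2(p: Patient) -> Optional[bool]:
    if not in_denominator(p, {"colon"}, {"III"}):
        return None
    # Met if either neoadjuvant or adjuvant chemotherapy began within 4 months.
    return any(within_months(p.diagnosis_date, d, 4)
               for d in (p.neoadjuvant_chemo_date, p.adjuvant_chemo_date))


def crc3(p: Patient) -> Optional[bool]:
    if not in_denominator(p, {"rectal"}, {"II", "III"}):
        return None
    return within_months(p.diagnosis_date, p.adjuvant_chemo_date, 9)


def crc4(p: Patient) -> Optional[bool]:
    if not in_denominator(p, {"rectal"}, {"II", "III"}):
        return None
    return p.received_radiation  # timing is not required for this metric


def conformance(patients, metric):
    """Return (number meeting the metric, number in its denominator)."""
    scored = [r for r in (metric(p) for p in patients) if r is not None]
    return sum(scored), len(scored)
```

Returning `None` rather than `False` for patients outside a metric's denominator keeps "not applicable" distinct from "not met", which matters when missing documentation is scored as nonconformance.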

Methods

Overview

This was a secondary analysis conducted as part of a medical record abstraction study evaluating patterns of metastatic CRC care. The study screened 743 medical records of patients from 13 academic and community sites in the United States. Sites differed in size, patient demographics, and payer profile. The study was approved by the institutional review board of each site. Patients were adults with CRC whose metastatic disease was diagnosed between June 1, 2003, and June 30, 2006. Patients were observed for at least 1 year after diagnosis (ie, data were collected through June 30, 2007). Medical records were abstracted to obtain demographic, treatment, and evaluation (eg, stage and lymph node) data.

Study Procedures

A list of potentially eligible patients was generated for each participating site. The local tumor registry of each site was used for first-line identification of patients potentially meeting eligibility criteria; billing or other available on-site databases were used to identify potentially eligible patients at those sites that did not have tumor registries or had tumor registries that included analytic cases only. The list of potential patients for each site was shuffled into random order, and patients on the randomized list for each site were reviewed from top to bottom to determine eligibility; at least 40 medical records per site were sought. Selected medical records were photocopied by site-based personnel and mailed to central study personnel using confidential procedures compliant with the Health Insurance Portability and Accountability Act. All medical records were redacted to maintain confidentiality and abstracted by central study personnel using standard abstraction procedures.4 Data were double-entered into a customized study-specific database that included prespecified validation parameters to maximize data quality. Data discrepancies were reviewed by a lead study coordinator. Corrections to the database were entered into a detailed log of corrections, which was reviewed and approved by a senior study investigator.
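The site-level selection procedure (shuffle the candidate list, then review from the top until enough eligible records are found) can be sketched as follows. This is a hypothetical illustration: the `is_eligible` callback stands in for the study's eligibility review, and stopping once the target is reached is an assumption, since the text states only that at least 40 records per site were sought.

```python
import random
from typing import Callable, Hashable, List, Optional, Sequence, Tuple


def screen_site(candidates: Sequence[Hashable],
                is_eligible: Callable[[Hashable], bool],
                target: int = 40,
                seed: Optional[int] = None) -> Tuple[List[Hashable], int]:
    """Randomly order one site's candidate list and review it top to bottom.

    Returns the eligible records selected and the number of records screened.
    """
    order = list(candidates)
    random.Random(seed).shuffle(order)  # seeded so the ordering can be audited
    selected: List[Hashable] = []
    screened = 0
    for record in order:
        screened += 1
        if is_eligible(record):
            selected.append(record)
            if len(selected) >= target:  # assumed stopping rule
                break
    return selected, screened
```

Seeding the shuffle makes the randomized order reproducible, which helps if a later audit needs to confirm which records were reviewed and in what sequence.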

All abstractors were trained in general medical record abstraction using standardized forms and in procedures specific to this study (eg, reporting chemotherapeutic regimens). As a quality check, a physician coinvestigator (M.P.) re-reviewed a random 20% sample of the medical records, visually inspecting every data element. Quality checks were performed weekly throughout the study; data discrepancies were reviewed and corrected, and patterns of data errors were discussed with the study team each week to maximize data quality.
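The 20% physician re-review amounts to drawing a simple random sample of abstracted records and comparing the two abstractions field by field. A minimal sketch, assuming records are keyed by an integer ID and each abstraction is a flat dictionary of field names to values (both hypothetical structures, not the study's database schema):

```python
import math
import random
from typing import Dict, Iterable, List, Optional

_MISSING = object()  # sentinel so a field recorded in only one abstraction is flagged


def qc_sample(record_ids: Iterable[int], fraction: float = 0.20,
              seed: Optional[int] = None) -> List[int]:
    """Draw a simple random sample (size rounded up) of records for re-review."""
    ids = sorted(record_ids)
    k = math.ceil(fraction * len(ids))
    return sorted(random.Random(seed).sample(ids, k))


def discrepant_fields(first: Dict[str, object], second: Dict[str, object]) -> List[str]:
    """Fields whose values differ between two abstractions of the same record."""
    fields = set(first) | set(second)
    return sorted(f for f in fields
                  if first.get(f, _MISSING) != second.get(f, _MISSING))
```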

The following data were retrieved from medical records included in the study: basic demographics, specific information about CRC diagnosis, surgeries, adjuvant chemotherapy, adjuvant radiation, first-line metastatic CRC chemotherapy regimen, date of initiation of chemotherapy, number of lymph nodes sampled, presence of pathology report, date of death, and other variables not relevant to this report (eg, hospice care). Stage was evaluated by TNM staging if available; if TNM stage was not available, other modes of documentation (eg, medical record notes and treatment received) were used to determine stage.
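The stage-assignment rule (TNM stage when available, otherwise stage inferred from other documentation) is a simple fallback, and tallying which source was used yields the two availability measures reported in Table 3 (TNM stage and any documentation of stage). A sketch with hypothetical field names; how a stage is inferred from notes or treatment received is left to the abstractor and is not modeled here:

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def resolve_stage(tnm_stage: Optional[str],
                  other_stage: Optional[str]) -> Tuple[Optional[str], str]:
    """Return (stage, source), preferring TNM over other documentation."""
    if tnm_stage:
        return tnm_stage, "TNM"
    if other_stage:
        return other_stage, "other"
    return None, "missing"


def stage_availability(records: Iterable[Tuple[Optional[str], Optional[str]]]) -> Tuple[int, int]:
    """Percent of records (rounded half up) with TNM stage and with any stage."""
    sources = Counter(resolve_stage(tnm, other)[1] for tnm, other in records)
    total = sum(sources.values())

    def pct(n: int) -> int:
        return int(100 * n / total + 0.5)

    return pct(sources["TNM"]), pct(sources["TNM"] + sources["other"])
```

Feeding in the overall counts from Table 3 (188 records with TNM stage, a further 178 with stage from other documentation, and 133 with none) gives the 38% and 73% figures reported there.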

Analysis

Metrics were numbered to reflect the order of customary diagnosis and treatment: CRC1, assessment of lymph nodes; CRC2, provision of colon cancer chemotherapy; CRC3, provision of rectal cancer chemotherapy; and CRC4, provision of rectal cancer radiotherapy (Table 1).

Basic descriptive statistics were used to summarize data. Rates of conformance to quality metrics were calculated in accordance with Table 1. Conformance rates were also calculated within subgroups defined by type of practice (academic or community), use of electronic medical records (EMRs; yes or no), and payer source (Medicaid/Medicare, private insurance, or self-pay or no insurance). SAS 9.1.3 (SAS Institute, Cary, NC) was used for all statistical computations.
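Subgroup conformance is a grouped proportion: for each subgroup, the number of patients meeting a metric divided by the number in that metric's denominator. The sketch below uses a hypothetical function name and rounds percentages half up (an assumption about rounding); it rebuilds the CRC1 practice-type rows of Table 6 from their counts.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def conformance_by_group(rows: Iterable[Tuple[Hashable, bool]]) -> Dict[Hashable, Tuple[int, int, int]]:
    """rows: (subgroup label, met guideline?) for each patient in a metric's denominator.

    Returns {subgroup: (number meeting, number evaluated, percent rounded half up)}.
    """
    counts = defaultdict(lambda: [0, 0])
    for group, met in rows:
        counts[group][0] += int(met)
        counts[group][1] += 1
    return {g: (m, n, math.floor(100 * m / n + 0.5)) for g, (m, n) in counts.items()}


# CRC1 by practice type, rebuilt from the Table 6 counts.
rows = ([("Academic based", True)] * 11 + [("Academic based", False)] * 6
        + [("Community based", True)] * 27 + [("Community based", False)] * 13
        + [("Missing or unknown", True)] * 3 + [("Missing or unknown", False)] * 1)
print(conformance_by_group(rows))
```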

Results

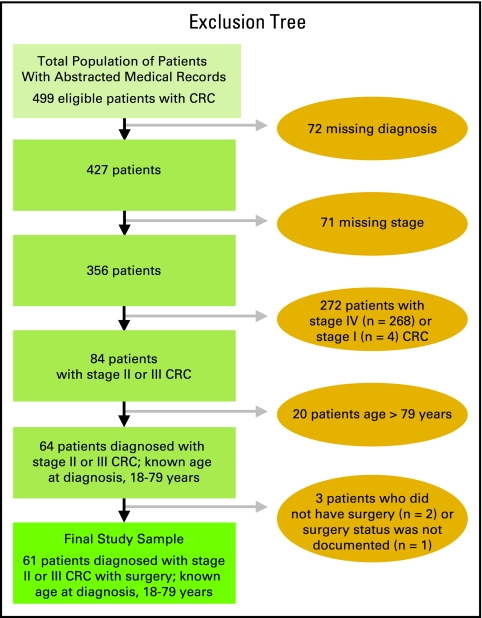

In total, 743 medical records of patients with CRC were screened; 499 were eligible and were therefore abstracted, and these 499 medical records constitute the total population of patients. The 499 medical records were then reviewed for eligibility and completeness for inclusion in the quality metric analyses. The main reason for exclusion at this stage was incomplete case notes, such that 1 year of data could not be retrieved (Fig 1; for patients who died within this period, data through the date of death were considered available). For 61 patients, stage II or III cancer was adequately documented, age at diagnosis was 19 to 79 years, and surgery had been performed, allowing all four CRC metrics to be calculated in this group. These 61 patients made up the final study sample (Fig 1).

Figure 1.

Derivation of the study sample.

The total population studied (N = 499) included 216 women (43%) and 199 men (40%), whereas sex was not identified for 84 patients (17%); 321 patients (64%) had colon cancer, and 106 (21%) had rectal cancer, whereas diagnosis was missing for 72 patients (14%); 271 patients (54%) were white, and 86 (17%) were African American, whereas race or ethnicity was missing or unknown for 131 patients (26%); mean age at diagnosis was 61.1 years (standard deviation, 13.8 years; Table 2).

Table 2.

Patient Demographics and Clinical Characteristics

| Demographic or Characteristic | Total Population (N = 499), No. | Total Population (N = 499), % | Final Study Sample (n = 61), No. | Final Study Sample (n = 61), % |
|---|---|---|---|---|
| Diagnosis | | | | |
| Colon cancer | 321 | 64 | 48 | 79 |
| Rectal cancer | 106 | 21 | 13 | 21 |
| Missing or unknown | 72 | 14 | — | — |
| Stage | | | | |
| I | 4 | 1 | — | — |
| II | 19 | 4 | 11 | 18 |
| III | 65 | 13 | 50 | 82 |
| IV | 278 | 56 | — | — |
| Missing or unknown | 133 | 27 | — | — |
| Sex | | | | |
| Female | 216 | 43 | 31 | 51 |
| Male | 199 | 40 | 30 | 49 |
| Missing or unknown | 84 | 17 | — | — |
| Race/ethnicity | | | | |
| Native American | 8 | 2 | 2 | 3 |
| Asian | 3 | 1 | — | — |
| African American | 86 | 17 | 16 | 26 |
| White | 271 | 54 | 41 | 67 |
| Missing or unknown | 131 | 26 | 2 | 3 |
| Age at diagnosis, years | | | | |
| > 79 | 27 | 5 | — | — |
| Mean | 61.1 | | 59.8 | |
| SD | 14 | | 12 | |

Abbreviation: SD, standard deviation.

Poor availability of the data necessary to calculate the metrics prevented full assessment of quality of CRC care. Diagnosis was documented in 86% of medical records (Table 3; Fig 1). Complete TNM stage was available for 38% of patients; documentation of stage improved to 73% if any clinical notation of stage was accepted. Age, a requirement for calculating the metrics, was documented in only 71% of medical records. Medical records missing basic demographic information were frequently missing stage information as well. Oncology practices using EMRs had more complete staging information, but TNM stage was still missing in 56% of their medical records, and 19% provided no staging information at all.

Table 3.

Availability of Data in Medical Records of Total Population of Patients (N = 499)

| Characteristic | Total No. of Medical Records | Diagnosis, No. | Diagnosis, % | TNM Stage, No. | TNM Stage, % | Any Documentation of Stage, No. | Any Documentation of Stage, % | Age, No. | Age, % |
|---|---|---|---|---|---|---|---|---|---|
| Overall | 499 | 427 | 86 | 188 | 38 | 366 | 73 | 356 | 71 |
| Practice type | | | | | | | | | |
| Academic based | 66 | 65 | 99 | 29 | 44 | 56 | 85 | 65 | 99 |
| Academic in community | 5 | 4 | 80 | 2 | 40 | 4 | 80 | 4 | 80 |
| Community based | 297 | 282 | 95 | 124 | 42 | 242 | 82 | 253 | 85 |
| Missing | 131 | 76 | 58 | 33 | 25 | 64 | 49 | 34 | 26 |
| Age at diagnosis, years | | | | | | | | | |
| 18-25 | 3 | 3 | 100 | 1 | 33 | 3 | 100 | 3 | 100 |
| 26-40 | 25 | 25 | 100 | 9 | 36 | 20 | 80 | 25 | 100 |
| 41-55 | 90 | 89 | 99 | 46 | 51 | 79 | 88 | 90 | 100 |
| 56-70 | 142 | 141 | 99 | 56 | 39 | 123 | 87 | 142 | 100 |
| > 70 | 96 | 93 | 97 | 40 | 42 | 77 | 80 | 96 | 100 |
| Missing | 143 | 76 | 53 | 36 | 25 | 64 | 45 | 0 | 0 |
| Sex | | | | | | | | | |
| Female | 216 | 205 | 95 | 84 | 39 | 175 | 81 | 182 | 84 |
| Male | 199 | 188 | 95 | 87 | 44 | 164 | 82 | 174 | 87 |
| Missing or unknown | 84 | 34 | 41 | 17 | 20 | 27 | 32 | 0 | 0 |
| Race/ethnicity | | | | | | | | | |
| Native American | 8 | 8 | 100 | 4 | 50 | 8 | 100 | 8 | 100 |
| Asian | 3 | 3 | 100 | 2 | 67 | 3 | 100 | 3 | 100 |
| African American | 86 | 83 | 97 | 34 | 40 | 69 | 80 | 72 | 84 |
| White | 271 | 258 | 95 | 119 | 44 | 225 | 83 | 248 | 92 |
| Missing or unknown | 131 | 75 | 57 | 29 | 22 | 61 | 47 | 25 | 19 |
| Method of payment | | | | | | | | | |
| Private insurance | 242 | 231 | 96 | 114 | 47 | 208 | 86 | 220 | 91 |
| Medicaid/Medicare | 98 | 95 | 97 | 33 | 34 | 76 | 78 | 80 | 82 |
| Self-pay or no insurance | 48 | 46 | 96 | 16 | 33 | 36 | 75 | 36 | 75 |
| Missing or unknown | 111 | 55 | 50 | 25 | 23 | 46 | 41 | 20 | 18 |
| Use of EMRs | | | | | | | | | |
| No | 176 | 127 | 72 | 45 | 26 | 106 | 60 | 105 | 60 |
| Yes | 287 | 265 | 92 | 125 | 44 | 232 | 81 | 232 | 81 |
| Missing or unknown | 36 | 35 | 97 | 18 | 50 | 28 | 78 | 19 | 53 |

Abbreviation: EMR, electronic medical record.

Although staging determines which metric applies, each metric in turn requires specific clinical data for its calculation. Calculation of CRC2 and CRC3 requires documentation of chemotherapy, date of diagnosis, and date of chemotherapy initiation. Calculation of CRC4 requires documentation of radiation therapy, date of radiation therapy, and date of surgical excision. For patients who received neoadjuvant chemotherapy, 68% of medical records in the total population (N = 499) and 83% of medical records in the final study sample (n = 61) recorded date of neoadjuvant chemotherapy initiation (Table 4). For patients who received adjuvant chemotherapy, 90% and 89% of medical records recorded date of adjuvant chemotherapy initiation in the total population and study sample, respectively. For patients who received either pre- or postoperative radiation, 83% and 100% of medical records recorded date of radiation therapy initiation in the total population and study sample, respectively.

Table 4.

Summary of Data Documented in Medical Records

| Data Element | Percentage of Total Population of Patients (N = 499) | Percentage of Final Study Sample (n = 61) |
|---|---|---|
| Pathology report | 66 | 84 |
| Diagnosis date | 82 | 100 |
| Surgery date* | 96 | 98 |
| Adjuvant chemotherapy initiation date† | 90 | 89 |
| Neoadjuvant chemotherapy initiation date‡ | 68 | 83 |
| Radiotherapy initiation date§ | 83 | 100 |

*Among patients who had surgery.

†Among patients who received adjuvant chemotherapy.

‡Among patients who received neoadjuvant chemotherapy.

§Among patients who received either pre- or postoperative radiation therapy.

Application of the metrics to any given patient assumes that stage of disease was known; to determine stage accurately, a sufficient number of lymph nodes must have been retrieved by a surgeon and evaluated by a pathologist. Sixty-six percent of medical records in the total population and 84% in the study sample contained pathology reports to provide lymph node data (Table 4). Although the median number of lymph nodes removed and examined met the 12-node threshold in both the total population (N = 499; median, 13) and the study sample (n = 61; median, 17), complete documentation, including the numbers of lymph nodes removed, examined, and involved, was frequently missing (Table 5).

Table 5.

Lymph Node Documentation and Retrieval

| Measure | Total Population (N = 499): Records With Documentation, No. | Total Population: Records With Documentation, % | Total Population: Lymph Nodes Retrieved, Median | Total Population: Lymph Nodes Retrieved, Minimum | Total Population: Lymph Nodes Retrieved, Maximum | Final Study Sample (n = 61): Records With Documentation, No. | Final Study Sample: Records With Documentation, % | Final Study Sample: Lymph Nodes Retrieved, Median | Final Study Sample: Lymph Nodes Retrieved, Minimum | Final Study Sample: Lymph Nodes Retrieved, Maximum |
|---|---|---|---|---|---|---|---|---|---|---|
| Lymph nodes removed | 161 | 32 | 13 | 0 | 52 | 25 | 41 | 17 | 0 | 36 |
| Lymph nodes examined* | 290 | 58 | 13 | 0 | 161 | 59 | 92 | 17 | 0 | 161 |
| Lymph nodes involved | 298 | 60 | 3 | 0 | 50 | 59 | 92 | 2 | 0 | 19 |

*Documentation of lymph nodes examined is the critical measure used to calculate the CRC1 quality metric.
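Table 5 separates two questions: whether a lymph node count was documented at all, and how the documented counts are distributed. A sketch of that summary for a single field, using hypothetical counts (None marks an undocumented value) rather than study data; the function name and output keys are illustrative only:

```python
import math
from statistics import median
from typing import Dict, Optional, Sequence


def summarize_node_field(counts: Sequence[Optional[int]]) -> Dict[str, float]:
    """Documentation rate plus median/min/max of the documented node counts."""
    n = len(counts)
    documented = [c for c in counts if c is not None]
    pct = math.floor(100 * len(documented) / n + 0.5) if n else 0
    summary: Dict[str, float] = {"documented": len(documented), "percent_documented": pct}
    if documented:
        summary.update(median=median(documented),
                       minimum=min(documented),
                       maximum=max(documented),
                       # documented records reaching the CRC1 12-node threshold;
                       # undocumented records would count as not met under CRC1
                       at_least_12=sum(c >= 12 for c in documented))
    return summary


# Hypothetical values for six records; two have no documented count.
print(summarize_node_field([13, None, 17, 8, None, 22]))
```

A median at or above 12 can coexist with many records failing CRC1, because records without a documented count drop out of the median but still count against the metric.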

Because data were lacking for a substantial proportion of patients, analysis of conformance of care to CRC quality metrics was limited (Table 6). All patients in the study sample (n = 61) could be evaluated for conformance to CRC1, and 41 (67%) met the performance metric. Of the 61 patients with stage II or III CRC who were between the ages of 19 and 79 years and who had had surgery, 38 had stage III colon cancer and could be evaluated for conformance to CRC2, and only 26 (68%) met the guideline; among the 13 patients with rectal cancer, conformance to CRC3 and CRC4 was only 54% (7 of 13) on each metric.

Table 6.

Conformance to Performance Metrics Developed by ASCO and the National Comprehensive Cancer Network

| Characteristic | CRC1*: Total No. of Patients | CRC1: Meeting Guideline, No. | CRC1: Meeting Guideline, % | CRC2†: Total No. of Patients | CRC2: Meeting Guideline, No. | CRC2: Meeting Guideline, % | CRC3‡: Total No. of Patients | CRC3: Meeting Guideline, No. | CRC3: Meeting Guideline, % | CRC4§: Total No. of Patients | CRC4: Meeting Guideline, No. | CRC4: Meeting Guideline, % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall | 61 | 41 | 67 | 38 | 26 | 68 | 13 | 7 | 54 | 13 | 7 | 54 |
| Practice type | | | | | | | | | | | | |
| Academic based | 17 | 11 | 65 | 11 | 6 | 55 | 3 | 0 | 0 | 3 | 1 | 33 |
| Community based | 40 | 27 | 68 | 24 | 18 | 75 | 10 | 7 | 70 | 10 | 6 | 60 |
| Missing or unknown | 4 | 3 | 75 | 3 | 2 | 67 | 0 | 0 | — | 0 | 0 | — |
| EMR available | | | | | | | | | | | | |
| No | 15 | 9 | 60 | 11 | 7 | 64 | 2 | 1 | 50 | 2 | 2 | 100 |
| Yes | 40 | 28 | 70 | 25 | 18 | 72 | 10 | 5 | 50 | 10 | 5 | 50 |
| Missing or unknown | 6 | 4 | 67 | 2 | 1 | 50 | 1 | 1 | 100 | 1 | 0 | 0 |
| Method of payment | | | | | | | | | | | | |
| Private insurance | 44 | 31 | 71 | 28 | 21 | 75 | 8 | 4 | 50 | 8 | 5 | 63 |
| Medicaid/Medicare | 12 | 6 | 50 | 8 | 3 | 37 | 3 | 2 | 67 | 3 | 0 | 0 |
| Self-pay or no insurance | 2 | 2 | 100 | 1 | 1 | 100 | 0 | 0 | — | 0 | 0 | — |
| Missing or unknown | 3 | 2 | 67 | 1 | 1 | 100 | 2 | 1 | 50 | 2 | 2 | 100 |

Abbreviations: CRC, colorectal cancer; EMR, electronic medical record.

*For patients with stage II or III CRC who had surgery and had 12 or more lymph nodes examined.

†For patients with stage III colon cancer who had surgery and received neoadjuvant or adjuvant chemotherapy within 4 months of diagnosis.

‡For patients with stage II or III rectal cancer who had surgery and received adjuvant chemotherapy within 9 months of diagnosis.

§For patients with stage II or III rectal cancer who had surgery and received pre- or postoperative radiation therapy.

In an effort to better describe the patterns of practice—specifically patterns of nonconformance to established quality metrics in CRC care—we analyzed performance by practice type (academic or community), presence or absence of EMRs, and payment method (Medicaid/Medicare, private insurance, or self-pay or no insurance; Table 6). Performance on all metrics was better in community-based practices than it was in academic ones. Use of EMRs was not associated with a clear pattern of improvement in performance against the quality metrics (Table 6), although documentation of diagnosis, TNM stage, stage classified by any method, and age was better in sites using EMRs than it was in those not using EMRs (Table 3). There was a trend of better performance when private insurance was the method of payment (Table 6); medical records of patients with private insurance also had better documentation of TNM stage, stage classified by any method, and age (Table 3). Although these comparisons may provide some indication of trends, the data are too few to support conclusions.

Discussion

As health care moves slowly but inexorably into a pay-for-performance landscape, the ability of institutions to respond to quality metrics becomes increasingly important. Quality of care and performance of clinicians are already, albeit unevenly, beginning to be evaluated on the basis of conformance to standardized recommendations, such as those articulated through quality metrics and evidence-based clinical practice guidelines.

This study addressed fundamental questions that arise as we face a pay-for-performance future: Are we in oncology ready for this metric-based method of evaluation? Do the data required to implement these sorts of metrics exist within current medical records, and what is the quality of those data?

The striking finding from this study was that documentation was inadequate to allow for assessment of performance against quality metrics. Most importantly, only 86% of medical records confirmed diagnosis, 38% reported TNM stage, 73% documented stage by any clinical notation, and 71% documented age (Table 3). Documentation was a problem regardless of practice type or payer mix, and it was only partially improved with implementation of EMRs.

The four CRC metrics articulated by the NQF, the ACoS, ASCO, and the NCCN provide a starting point from which to begin to evaluate and standardize performance. Application of these well-defined algorithms for calculating the metrics might seem, at first glance, to be a straightforward matter. However, this study highlights the fact that certain data must be available before metrics can be applied. If quality is only assessed when complete data are available (as is currently done), then reported performance on quality metrics likely does not represent quality of actual care. Because patients included will be those whose medical records reflect the best documentation—and hence will be those who are most likely to be receiving best care—reported performance is likely to be inflated. As was necessary in this study, medical records with poor documentation are systematically dropped from calculations for simple reasons such as inadequate staging information.
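The inflation argument can be stated with the law of total probability. Let $D$ denote the event that a patient's record is documented well enough to be scored and $M$ the event that care met the metric (notation introduced here for illustration only). Then

$$
P(M) = P(M \mid D)\,P(D) + P(M \mid \bar{D})\,\bigl(1 - P(D)\bigr),
$$

so the gap between the rate reported from evaluable records and the rate across all eligible patients is

$$
P(M \mid D) - P(M) = \bigl(1 - P(D)\bigr)\,\bigl[P(M \mid D) - P(M \mid \bar{D})\bigr].
$$

Whenever some records cannot be scored ($P(D) < 1$) and conformance is higher among well-documented patients, as argued above, the reported rate overstates true conformance, and for fixed conditional rates the overstatement grows as documentation becomes less complete.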

Poor conformance of medical care to quality metrics has been demonstrated in other areas, such as chronic kidney disease, when data were retrieved from claims databases.5 Our study used medical record abstraction to obtain the data elements needed to calculate the CRC quality metrics, because through initiatives like the Quality Oncology Practice Initiative from ASCO, oncology currently relies on medical records to capture data on care provided to patients. Medical record abstraction remains a standard method of retrieving clinical data and reconstructing a picture of care that was presumably delivered. However, concerns exist about the reliability and validity of medical record abstraction, especially when it is used as the basis for quality measurement. Studies have historically found that data populating medical records have not been systematically recorded for research or quality improvement purposes.6,7 Incompleteness, inaccuracy, and—in the case of paper medical records—illegibility of recorded data can compromise the validity of quality conclusions. In a prospective study8 designed to examine quality of medical record abstraction in 160 physician-patient encounters, medical record abstraction was compared with standardized patient reports on four aspects of the encounters (history, examination, diagnosis, and treatment); an overall sensitivity of only 70% in medical record abstraction was found. That exercise highlighted the importance of differentiating between quality of care actually provided and quality of documentation of that care. Limitations in documentation may prevent the quality evaluator from knowing what care was actually delivered and therefore may preclude a true assessment of quality of care.

Because of uncertainty about the quality of the data they contain, medical records present an imperfect instrument for assessing performance. Although EMRs seem to be more reliable tools, their completeness and quality also depend on the people entering the data. The transition from paper medical records to EMRs will not necessarily improve the quality of data collected, nor our ability to use these data to assess quality, unless the discrete data elements required by quality metrics are accurately entered into the EMR system. In this study, documentation was somewhat better in EMRs than in paper medical records, but TNM stage was still missing in 56% of EMR-based medical records; performance on metrics was similar between EMRs and paper medical records.

The quality of the abstraction process of medical records (whether paper or electronic) merits consideration. A 1996 study9 of 244 emergency medicine research articles that relied on medical record abstraction found low methodologic standards. Quality assurance methods, such as abstractor training, monitoring, blinding, and standardized abstraction forms, were rarely mentioned; inter-rater reliability was mentioned in 5% and tested statistically in 0.4% of articles. A 2003 follow-up study10 assessed 79 medical record review studies and found some improvement, although adherence fell below 50% in seven of 12 criteria for methodologic quality. To obtain a fair and accurate evaluation, both the data and the process by which those data are retrieved must be of the highest quality. Our quality assurance processes included abstractor training, standardized forms, random review of 20% of medical records, and weekly project meetings. Inter-rater reliability was not calculated, and this is a limitation.
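Because a random 20% of records was abstracted twice, inter-rater reliability could in principle be estimated from those paired abstractions. Cohen's kappa, a standard chance-corrected agreement statistic for two raters assigning categorical labels, is one option, $\kappa = (p_o - p_e)/(1 - p_e)$ with $p_o$ the observed and $p_e$ the chance-expected agreement. The sketch below is illustrative; the function name and the example stage labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal proportions, summed over labels.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        # Both raters used one identical label throughout; kappa is undefined,
        # and 1.0 is returned here by choice.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical stage labels from an abstractor and a physician re-reviewer.
print(cohens_kappa(["II", "III", "III", "IV"], ["II", "III", "IV", "IV"]))
```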

One of the most contentious issues in oncology is stage. Is it critical to document stage, if this information does not hold particular meaning in the clinical realm? In CRC, stage occupies the intersection of multiple disciplines: surgery, pathology, chemotherapy, and radiation. In the case of these CRC quality metrics, stage represents a fundamental starting point, without which the appropriate metric cannot be selected. Stage was missing in at least 27% of medical records of patients; all of these patients were omitted from subsequent analyses. Can we conclude that 27% of patients received low-quality care? Or did clinicians at the points of care simply not require stage or documentation of stage to provide care? Or, given that the disparate information required to determine stage comes from multiple sources at different times, does the absence of stage documentation indicate inadequate processes and systems to make staging simple and efficient? Could there be disagreement among the various health care providers responsible for documenting stage? Systematically evaluating local practices and barriers to staging could substantially improve documentation of stage. EMRs and other electronic platforms provide efficient vehicles for improving staging data, but as this study shows, presence of EMRs does not guarantee documentation of stage. Because stage is the critical focal point for both care and quality assessment, a thorough discussion of the need to document and determine stage, along with systems to make staging efficient and convenient, is critical.

The primary limitation of this study was the focus on metastatic disease. Eligibility criteria required that patients chosen for this review had a metastatic diagnosis during the required timeframe. We had an oversampling of medical records of patients with advanced disease, but there were fewer patients with stage II or III CRC. Documentation for patients with metastatic cancer may have been poorer if physicians were less concerned with process variables (eg, staging) in these patients with advanced disease. However, we reviewed the medical records of all study patients in full, not just for the metastatic periods; complete care information for the nonmetastatic periods should nonetheless have been documented. All limitation concerns applicable to retrospective medical record review studies apply to this study as well. Data were retrospective and dependent on clinician recall and legible documentation. Although quality assurance measures were in place, medical record abstraction techniques can differ among study personnel.

In sum, this study found that documentation in CRC care remains a major issue that impedes assessment of quality and performance against emerging metrics. Low quality of reporting was found in areas of critical importance to management of CRC—most notably in two areas, documentation of stage and pathology, including lymph node information. On the basis of metrics that could be calculated with available data, quality of care in CRC seemed to be low; this result may approximate the true quality of care or may simply reflect inadequate documentation.

Authors' Disclosures of Potential Conflicts of Interest

Although all authors completed the disclosure declaration, the following author(s) indicated a financial or other interest that is relevant to the subject matter under consideration in this article. Certain relationships marked with a “U” are those for which no compensation was received; those relationships marked with a “C” were compensated. For a detailed description of the disclosure categories, or for more information about ASCO's conflict of interest policy, please refer to the Author Disclosure Declaration and the Disclosures of Potential Conflicts of Interest section in Information for Contributors.

Employment or Leadership Position: None

Consultant or Advisory Role: Amy P. Abernethy, Pfizer (C)

Stock Ownership: None

Honoraria: Amy P. Abernethy, Pfizer (C)

Research Funding: None

Expert Testimony: None

Other Remuneration: None

Acknowledgment

We thank Ms Laura Criscione for the time and energy she put into this project, redacting charts, managing data, and assisting with overall coordination. We also thank all of the nurses and oncology fellows at Duke University who helped with medical record abstraction. This study was supported by an outcomes research service agreement with Pfizer. The study was conducted, analyzed, and interpreted independently of Pfizer, which does not have access to individual data.

References

1. National Cancer Institute: A snapshot of colorectal cancer. http://planning.cancer.gov/disease/Colorectal-Snapshot.pdf

2. National Cancer Institute: Cancer Trends Progress Report: 2005 Update. http://progressreport.cancer.gov/2005/

3. Compton CC, Fielding LP, Burgart LJ, et al: Prognostic factors in colorectal cancer: College of American Pathologists Consensus Statement 1999. Arch Pathol Lab Med 124:979-994, 2000

4. Patwardhan MB, Matchar DB, Samsa GP, et al: Opportunities for improving management of advanced chronic kidney disease. Am J Med Qual 23:184-192, 2008

5. Patwardhan MB, Samsa GP, Matchar DB, et al: Advanced chronic kidney disease practice patterns among nephrologists and non-nephrologists: A database analysis. Clin J Am Soc Nephrol 2:277-283, 2007

6. Reiser SJ: The clinical record in medicine: Part 2. Reforming content and purpose. Ann Intern Med 114:980-985, 1991

7. Tang PC, Fafchamps D, Shortliffe EH: Traditional medical records as a source of clinical data in the outpatient setting. Proc Annu Symp Comput Appl Med Care 575-579, 1994

8. Luck J, Peabody JW, Dresselhaus TR, et al: How well does chart abstraction measure quality? A prospective comparison of standardized patients with the medical record. Am J Med 108:642-649, 2000

9. Gilbert EH, Lowenstein SR, Koziol-McLain J, et al: Chart reviews in emergency medicine research: Where are the methods? Ann Emerg Med 27:305-308, 1996

10. Worster A, Bledsoe RD, Cleve P, et al: Reassessing the methods of medical record review studies in emergency medicine research. Ann Emerg Med 45:448-451, 2005