Abstract

The two goals of this technology transfer study were to (1) increase the number and appropriateness of services received by substance abuse patients, and thereby (2) give clinical meaning and value to research-based assessment information. A software-based Resource Guide was developed to allow counselors to easily identify local resources for referring their patients to additional clinical and social services, and two hours of training were provided on its use. We hoped that this software and training would give counselors a concrete method of linking Addiction Severity Index (ASI) assessment information on patient problems to appropriate, available community services. We expected improved treatment planning, increased problem–service matching, better patient–counselor rapport and satisfaction, and better patient performance during treatment. Data were analyzed from 131 patients of 33 counselors in 9 treatment programs, which were randomly assigned to 2 groups: Standard Assessment (SA) or Enhanced Assessment (EA).

Patients of counselors in the EA group (1) had treatment plans that were better matched to their needs, (2) received significantly more and better-matched services than patients in the SA group, and (3) were less likely to leave treatment against medical advice and more likely to complete the full course of treatment than patients of counselors in the SA group. However, EA patients did not report higher patient satisfaction or helping alliance scores. These findings are discussed with regard to integrating empirically supported procedures into contemporary, community-based substance abuse treatment.

Keywords: Technology transfer, Assessment, Training, Matching, Science-based treatment

1. Introduction

There has long been recognition of a gap between what is known to be effective clinical practice, as judged from the scientific literature, and what is common practice under “real world” conditions (Lamb et al., 1998). Over the past 10 years, there have been significant advances in the development of effective medications, procedures and behavioral interventions for the treatment of addictive disorders (See Institute of Medicine, 1995; Lamb et al., 1998). For example, a number of admission procedures have been found to significantly increase intake attendance, including phone reminders (Dexter and Goetzke, 1995; Kluger and Karras, 1983), mailed reminders and phone orientations (O’Loughlin, 1990; Swenson and Pekarick, 1988), and a shorter delay between the initial call and the appointment (Festinger et al., 1996; Stark et al., 1990). Additionally, intensive case management (McLellan et al., 1999), the participation of family members in treatment (Higgins et al., 1994; Sisson and Azrin, 1989; Carise, 1992, 1995), Behavioral Couples Therapies (Fals-Stewart et al., 1996; Epstein and McCrady, 1998; O’Farrell and Murphy, 1995) and Motivational Interviewing (Miller and Rollnick, 1991) have all been shown to improve treatment effects.

While these therapies and interventions have established empirical support and acceptance within the scientific community, they have largely remained undelivered in community treatment programs. New and effective treatments, in any form, cannot help patients if they are not practical, accessible or utilized, and researchers cannot simply assume that a “scientifically” better intervention will be advantageous, desirable, or even cost-effective. To bring validated, effective interventions into community-based treatment programs, scientists must be aware of the significant economic, political, technological, and practical issues faced by the treatment community (see McLellan et al., 2003; Carise and Gurel, 2002; Carise et al., 2002). There is a recognized need to increase the transfer of what we have learned through clinical research into widespread treatment practice (Backer and David, 1995; Lamb et al., 1998).

Perhaps the best place to begin this technology transfer effort is with one of the most fundamental clinical processes: the initial assessment and treatment planning activities. Accurate patient assessment, which enables substance abuse treatment providers to meet their patients’ needs, may be one of the most important yet under-emphasized elements of contemporary addiction treatment. The clinical logic behind the patient assessment and service planning process is straightforward. If patients’ problems are accurately and comprehensively assessed, patients may feel “heard” by their counselor, potentially leading to the development of rapport and even a stronger helping alliance (See Luborsky et al., 1996; Barber et al., 1999, 2001). If this process leads to a jointly determined and feasible treatment plan for addressing the identified problems, the likelihood that the patient continues in treatment increases. If, in addition to assessing and recognizing problems, the counselor and the treatment program offer potentially effective services for the identified problems (either onsite or via referral), patients may obtain relief from those problems and, with it, become more likely to continue participating, to remain in treatment (See Higgins et al., 1994), and to begin the sustained, positive behavioral changes referred to as recovery.

However, current treatment assessment activities are widely thought to be time-consuming and not clinically useful (See McLellan et al., 2003). Thus, if more clinically sensitive and useful assessment methods were developed, and if they helped clinicians meet requirements such as treatment planning and the biopsychosocial assessment, there is reason to think that programs might be broadly willing to put them into practice.

1.1. The Addiction Severity Index (ASI)

The ASI is a research-derived problem assessment interview that allows comprehensive assessment of patients’ problems at the time of treatment admission. The ASI interview produces reliable and valid measures of the nature and severity of patients’ problems (McLellan et al., 1985, 1992a). Research has shown that it can be used effectively as the basis for providing tailored, appropriate treatment services, and that patients who receive services for their identified problems are more likely to remain in treatment and to have better during-treatment and post-treatment outcomes (See McLellan et al., 1997, 1992b; Hser et al., 1999). Training procedures for the interview are well specified (See Fureman et al., 1994), and two decades of research have shown that problem assessment and service planning with the ASI can be reliably, validly and usefully applied by researchers and clinicians across a wide range of patient populations and treatment settings. As a result, the ASI has been widely adopted by researchers and treatment providers in numerous countries (Sweden, Thailand, Egypt, Iran, Ireland, Chile, Brazil, France, Scotland and others), in 27 states across the United States, in at least 80 cities or counties, and by large treatment provider systems (e.g., the Veterans Administration, the Indian Health Service, Kaiser Permanente, Value Behavioral Health).

Despite the broad use of the ASI in patient assessment, survey research in the US has shown the instrument is used largely because it has been mandated by state, county, or program administrators—not because it is valued for its utility by the staff who are asked to use it (See McLellan et al., 2003; Crevecoeur et al., 2000). Indeed, a recent survey of a nationally representative sample of treatment programs in the United States indicated that staff in these treatment programs considered the problem assessment–service planning phase of treatment to be merely “paperwork” with no inherent clinical value (See McLellan et al., 2003).

Why would this clinically important and administratively required procedure be considered so trivial? Based on hundreds of ASI trainings, we had a simple working premise: completing an ASI (or any admission assessment) is frustrating to counselors because it is time-consuming and, in most cases, does not replace the additional admission forms and narrative summaries they are required to produce. Moreover, most programs do not have adequate onsite services to address the various health and social problems presented by their patients. Finding appropriate off-site services for these problems is inherently difficult and time-consuming, and most addiction counselors are not trained in this type of case management activity.

We hypothesized that if this research-derived assessment tool (the ASI) could be made more relevant to the clinical tasks of assessment and treatment planning, it would be better implemented and would lead to increased patient engagement and better treatment performance. With support from the US Office of National Drug Control Policy, we developed the computer-assisted ASI software suite known as the DENS ASI (the Drug Evaluation Network System Addiction Severity Index) (See Carise et al., 1999). The DENS ASI software reduces the time needed to complete the ASI interview and, more importantly, produces many of the reports and patient-level narrative summaries required by treatment regulatory agencies and by accrediting and managed care organizations.

In the course of more than 250 ASI and DENS trainings in the United States, our staff had encountered large, phone-book-style compilations of social service providers within local communities, including providers of physical and mental health, housing, parenting, employment, legal and other services. One example of such a compilation is the directory produced by the United Way (“First Call for Help”). However, most of these books showed little sign of use, often because they were unwieldy or physically removed from the assessment process. We reasoned that if we could convert this type of information on available community services into an easy-to-use electronic format, and then develop a brief training on how to link appropriate, accessible services (onsite and within the community) to the problems patients presented in their ASI assessment interviews, we might enable a more comprehensive assessment and service planning process.

To this end, we requested permission from the United Way to use their electronic database as a framework to create the linking software for the Philadelphia area (referred to as the Computer-assisted System for Patient Assessment and Referral—CASPAR), and have made this software available at no charge to all our collaborating treatment programs in the Philadelphia, Pennsylvania area.

With this background, the present paper reports initial tests of implementing the CASPAR system in nine substance abuse treatment programs in Philadelphia, PA, USA. We hypothesized that if the technology were provided and counselors were trained in assessment and treatment planning (including using the CASPAR to link the patient problems from the ASI with free or low-cost services targeted to those problems), those counselors would show evidence of:

A better match between the problems identified by the patient on the ASI assessment and the problems included by the counselor on the treatment plan, and

a larger number and better-matched services or service referrals.

And their patients would show:

Better counselor–patient rapport—specifically better helping alliance and patient satisfaction scores, and

better treatment performance—specifically increased attendance and program completion rates.

2. Materials and methods

2.1. Participants

This study included both counselors and patients as participants. Data are reported on 33 counselors and 131 patients from 9 community-based substance abuse treatment programs.

2.2. Instruments and measures

2.2.1. Programs

The Addiction Treatment Inventory (ATI) (Carise et al., 2000) was used to measure program characteristics. The ATI is a semi-structured interview completed with treatment program directors. It is designed to provide standard information on the staffing profile, treatment orientation, organizational structure, financing, and services delivered in all modalities of addiction treatment programs.

2.2.2. Counselors

A 33-item Counselor Characteristics questionnaire was administered that collected demographic information (age, race, gender) as well as information about work history (types of positions held, date hired, previous training on the ASI or treatment planning, years in the field, resources used for finding services), and personal history with addiction (recovery status, prior treatment experiences).

2.2.2.1. Treatment plan (TP)

All counselors in Philadelphia are required to develop a Treatment Plan (TP) for each patient as part of their standard clinical duties. TPs were collected, and blinded reviewers recorded the level and types of problems described and the nature and amount of services planned for those problems. These blinded reviewers also used a previously validated system (see below) to measure the degree to which the services planned by counselors in the TPs matched the needs of patients as measured by the ASI intake assessment.

2.2.3. Patients

2.2.3.1. Patient problems

The Addiction Severity Index (ASI) (McLellan et al., 1985, 1992a) was used to measure patient background and pre-treatment status. The ASI provides measures of the nature and severity of problems in seven areas: medical status, employment, alcohol use, drug use, legal status, family relations and psychiatric functioning. The reliability and validity of the instrument have been found to be high across a wide range of substance abusers (McLellan et al., 1985, 1992a).

2.2.3.2. Patient services received

The Treatment Services Review (TSR) (McLellan et al., 1992b, 1993) was used to measure the nature and amount of services actually received by the patients—either on-site or off-site via referrals from the treating program. The TSR is a brief (10–15 min), structured interview administered in person or over the phone to collect information on the types and amounts of services provided (directly or indirectly) to a substance abuse patient while in treatment. The treatment service questions are divided into the same seven problem areas as are covered in the ASI. The TSR has been shown to provide a reliable and valid record of these services in several studies (McLellan et al., 1992b, 1993, 1999).

Within each of these problem areas, information on the types of services received is categorized as general or specialized. “General Services” include advice or even peer counseling received during general group or individual counseling sessions. “Specialized Services” are those provided by a staff member with special training (e.g., vocational counselor, case manager, family therapist, etc.) and the distinguishing feature is their focus upon a single topic or problem. For example, a group or individual counseling session where a patient discussed problems of depression would be counted as a general counseling session in the Psychiatric section of the TSR. “Specialized Services” in the psychiatric area would include evaluations or testing for psychological or emotional problems, biofeedback, or a session with a psychiatrist, psychologist or psychiatric social worker to discuss emotional problems.

2.2.3.3. Patient satisfaction

A Client Satisfaction Questionnaire (CSQ) was used to measure patient acceptance of, and satisfaction with, their experience during treatment. The CSQ consists of 18 four-point bipolar items related to client satisfaction with services. This scale has been shown to have stable psychometric properties (Attkisson and Zwick, 1982). It was administered to all patients at the time of their second TSR interview.

2.2.3.4. Patient–counselor relationship

The Helping Alliance Questionnaire (HAQ) was used to measure features of the relationship between the patient and the counselor. The HAQ is a 12-item self-administered measure of the patient’s feelings about the nature of his/her relationship with the counselor. This measure has been used regularly in therapy trials and has been a consistent predictor of favorable outcomes (Luborsky et al., 1996). It provides a sensitive indication of the early engagement of the patient and has been used to predict retention.

2.3. Procedures

2.3.1. Site recruitment and assignment

A list of all Philadelphia treatment programs was provided by Philadelphia’s Coordinating Office of Drug and Alcohol Programs (CODAP). We approached a randomly selected group of 20 adult outpatient community-based substance abuse treatment programs. Programs were offered $200 for each participating counselor (up to a maximum of $1000) to cover the cost of staff time lost to research activities. In addition, CODAP accepted the ASI training offered by the project for the continuing education credits that programs and counselors need for their annual certification by the city. The counselors within these programs also received a $75 incentive for their participation as well as continuing education credits. The treatment programs that agreed to participate in the study were randomly assigned to one of the two training conditions (SA and EA groups) and provided with training.

2.3.2. Counselor recruitment

All counselors at the treatment programs were recruited for participation in the study independently of the program. Counselors at each site were free to decline participation and were assured that their employment would not be affected in any way by participation or non-participation. Initially, no counselors declined to participate in the study. Both counselors and patients provided written informed consent. Procedures were in accord with the standards of the Committee on Human Experimentation and were approved by the Institutional Review Board of the Treatment Research Institute.

2.3.3. Patient recruitment

Following ASI training, each counselor was asked to recruit the next five consecutive patients assigned to him/her for admission assessment (as previously agreed). Counselors approached these patients at the time of intake, using a standard script rehearsed during the ASI training. Patients were told that the research project would not interfere with their treatment and that all information would be kept confidential. Patients were informed that if they agreed to participate, their ASI admission information would be shared with the research team conducting the study, and that staff from the Treatment Research Institute would contact them for brief, confidential interviews at 2 and 4 weeks following admission. Patients were offered $10.00 for each of the two 15-min telephone interviews and were told that they could withdraw from the study at any time. A total of 132 patients were approached during the course of the study, and all agreed to participate; however, one patient subsequently did not participate due to medical problems. The study design called for each participating counselor to recruit five patients, but the average number recruited per counselor was approximately 4 (131 patients across 33 counselors). Because this study was conducted in randomly selected community-based treatment programs, we could not enforce a minimum number of recruited patients: some counselors left their jobs before collecting five assessments, and some recruited only three or four.

In summary, data reported here result from nine substance abuse treatment programs, 33 counselors and 131 patients who participated in the study—4 programs, 18 counselors and 74 patients from the EA condition and 5 programs, 15 counselors and 57 patients from the SA condition.

2.3.4. Training on the ASI

Counselors in both conditions (EA and SA) received 12 h of training on administering the ASI using a software program called the DENS ASI (Carise et al., 1999), along with ASI manuals, ongoing access to a toll-free help line, and post-training competency feedback. As previously indicated, the DENS ASI program was designed to make ASI data collection easier, more accurate and more valuable to clinicians. The software provides item-by-item instructions for the interviewer, including coding and probing suggestions. The program has 150 built-in automated consistency checks to ensure accurate coding, and all items are range checked. To improve the clinical utility of the collected information, the software also generates the “biopsychosocial narrative” required by most state and agency regulators.
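The idea of automated range and consistency checks can be illustrated with a short sketch. The item names, allowed ranges, and the single cross-item rule below are hypothetical stand-ins chosen for illustration; they are not the actual DENS ASI checks, whose specifications are not given here.

```python
# Hypothetical ASI-style item names and valid ranges (not the DENS ASI specification).
ITEM_RANGES = {
    "med_days_past30": (0, 30),          # days with medical problems, past 30 days
    "med_troubled_rating": (0, 4),       # patient rating, 0 = not at all ... 4 = extremely
    "med_hospitalizations_lifetime": (0, 99),
}


def range_errors(record: dict) -> list:
    """Return one message per item that is missing or outside its allowed range."""
    errors = []
    for item, (low, high) in ITEM_RANGES.items():
        value = record.get(item)
        if value is None:
            errors.append(f"{item}: missing")
        elif not low <= value <= high:
            errors.append(f"{item}: {value} outside {low}-{high}")
    return errors


def consistency_errors(record: dict) -> list:
    """Apply one hypothetical cross-item rule: no problem days implies no distress rating."""
    errors = []
    if record.get("med_days_past30") == 0 and record.get("med_troubled_rating", 0) > 0:
        errors.append("med_troubled_rating > 0 but med_days_past30 == 0; probe and recode")
    return errors


if __name__ == "__main__":
    record = {"med_days_past30": 0, "med_troubled_rating": 2, "med_hospitalizations_lifetime": 1}
    print(range_errors(record))        # []
    print(consistency_errors(record))  # one flagged inconsistency
```

Range checks look at each item alone, while consistency checks compare items with one another; keeping them as separate functions makes it easy to add rules of either kind.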

Importantly, the ASI training provided in this study (to all counselors in both conditions) also included training in use of the DENS ASI software and automated report generating functions, now often viewed as the standard method of ASI training (See Rawson and Stein, 2002). This training method assists counselors in recognizing relevant problems in their patients at the time of admission to treatment and facilitates accurate data collection.

2.3.4.1. ASI training competency measures

Following the training, standard competency measures were collected from all counselors to test their understanding of the ASI (Fureman et al., 1994). There were no significant between-groups differences in ASI coding competency on either of two measures, the ASI quiz and an ASI video coding exercise. On the standardized ASI coding quiz, counselors in the EA group averaged 77% and counselors in the SA group averaged 69% (F(1, 32) = 2.139, p = 0.16). On the standardized video coding exercise, there was likewise no significant between-groups difference in counselor competence (EA = 87%, SA = 82%, F(1, 22) = 2.181, p = 0.16). These scores are considered acceptable evidence of counselor competence in understanding and using the ASI (Fureman et al., 1994).

2.3.5. Training on the resource guide (RG)

The only difference between the training interventions in the two conditions was an additional 2 h of training for counselors in the EA condition on integrating assessment information and using the computerized RG software to find social and personal health services (See Gurel et al., 2005).

As indicated, the RG was adapted from the Electronic Edition of the First Call for Help directory developed by the United Way of Southeastern Pennsylvania in cooperation with Dorland’s Directories (Mackie and Walton, 1998). The RG included information on 1524 agencies, sorted by agency name, services provided, and 131 keywords. It included substantial descriptive information about each agency (programs, special services available, fee structure, eligibility, etc.) and all necessary contact information to facilitate easy needs-services matching (See Gurel et al., 2005).
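One way to picture keyword-based lookup in a directory like the RG is as an inverted index from keywords to agencies. The sketch below is illustrative only: the three agency records, their keywords, and the mapping from ASI problem areas to search keywords (`AREA_KEYWORDS`) are hypothetical and are not taken from the United Way database or the actual CASPAR software.

```python
from collections import defaultdict

# Hypothetical agency records (the real RG described 1524 agencies and 131 keywords).
AGENCIES = [
    {"name": "Agency A", "keywords": {"housing", "emergency shelter"}, "fee": "free"},
    {"name": "Agency B", "keywords": {"employment", "job training"}, "fee": "sliding scale"},
    {"name": "Agency C", "keywords": {"housing", "legal aid"}, "fee": "free"},
]

# Hypothetical mapping from ASI problem areas to RG search keywords.
AREA_KEYWORDS = {
    "employment": ["employment", "job training"],
    "legal": ["legal aid"],
}


def build_keyword_index(agencies):
    """Map each keyword to the names of agencies listing it, in input order."""
    index = defaultdict(list)
    for agency in agencies:
        for keyword in agency["keywords"]:
            index[keyword].append(agency["name"])
    return index


def candidate_referrals(index, problem_area):
    """Return agencies matching any keyword for an ASI problem area, without duplicates."""
    names = []
    for keyword in AREA_KEYWORDS.get(problem_area, []):
        for name in index.get(keyword, []):
            if name not in names:
                names.append(name)
    return names


if __name__ == "__main__":
    index = build_keyword_index(AGENCIES)
    print(candidate_referrals(index, "employment"))  # ['Agency B']
    print(candidate_referrals(index, "legal"))       # ['Agency C']
```

Building the index once means each lookup touches only the agencies that list a relevant keyword, rather than scanning every record.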

2.3.6. Post admission data collection

Independent research staff contacted each patient by phone, 2 and 4 weeks following their intake assessment, to conduct TSR interviews. The HAQ and the CSQ were also completed at 4 weeks. At week 2, research staff collected data from 126 of the 131 patients (96%), and at the 4-week follow-up point, 115 patients provided data (87%).

2.3.7. Procedures for defining and scoring “problems”, “services”, and “matching”

The study analyses required standardized operational definitions of a “problem,” both at admission assessment and on the treatment plan, and of “adequate, appropriate services” for addressing those problems during treatment. We felt these definitions needed a simple format if they were to be applied under real-world conditions and interpreted unambiguously. Finally, we needed an a priori algorithm to indicate a “match” between the problems presented at assessment and the services provided during treatment. To these ends, we based our definitions and matching algorithms on our prior, published work on matching services to problems (See McLellan et al., 1997), as described below.

2.3.7.1. Defining “problem status” based on ASI data

“Problem” status in each of the seven ASI areas was defined using combinations of the most objective items in each section to derive a simple, three-point (0-1-2) score for each content area. A score of “0” indicated no problem, “1” indicated some problem, and “2” indicated a significant problem. For example, in the medical section, a patient who reports no days of medical problems receives a problem score of “0”. A patient who reports having a chronic problem, taking medications or receiving a medical disability payment, and who has had 1–9 days of problems in the past 30 days, receives a problem score of “1”. A patient who has been hospitalized in the past month, or who reports more than 9 days of problems in the past month, receives a problem score of “2”. In this way, the score (0-1-2) identifying the level of problem in each ASI area was standardized and automated. These methods had been used effectively in a prior matching study (See McLellan et al., 1997).
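The medical-area rule above can be written as a small function. This is a sketch of the stated example only: the item combinations for the other six areas are not given in this section, and two points are our own assumptions (labeled in the code): the score-2 conditions are checked first, and the one combination the text leaves undefined (1–9 problem days with no chronic problem, medication, or disability payment) returns `None` rather than a guessed score.

```python
from typing import Optional


def medical_problem_score(
    days_past30: int,
    chronic_problem: bool,
    on_medication: bool,
    disability_payment: bool,
    hospitalized_past30: bool,
) -> Optional[int]:
    """Three-point (0-1-2) medical problem score following the example rules in the text.

    Returns None for 1-9 problem days with no chronic problem, medication, or
    disability payment, a combination the stated rules do not define.
    """
    if not 0 <= days_past30 <= 30:
        raise ValueError("days_past30 must be between 0 and 30")
    # Score 2: hospitalized in the past month, or more than 9 problem days.
    # Assumption: checked first, so a hospitalization overrides a report of 0 days.
    if hospitalized_past30 or days_past30 > 9:
        return 2
    # Score 0: no days of medical problems.
    if days_past30 == 0:
        return 0
    # Score 1: 1-9 problem days plus a chronic problem, medication, or disability payment.
    if chronic_problem or on_medication or disability_payment:
        return 1
    return None  # undefined by the stated rules


if __name__ == "__main__":
    print(medical_problem_score(5, chronic_problem=True, on_medication=False,
                                disability_payment=False, hospitalized_past30=False))  # 1
```

Returning `None` for the undefined case keeps the sketch from silently inventing a scoring rule; an implementation would need the full item definitions to resolve it.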

2.3.7.2. Defining “problem status” in the treatment plan

We again used a simple, three-point (0-1-2) rating to determine whether the patient’s treatment plan addressed problems in each area. In rating the treatment plan, a score of “0” indicated that a problem was not mentioned or identified in that area; “1” indicated a mention of or comment on the problem but no clear plan, time frame or suggested service; and “2” indicated that the problem was clearly identified with a specific plan and/or a time frame for dealing with it. All TP scores were assigned by experienced research associates who were blind to study group as well as to patient and program names.
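The treatment plan rubric was applied by human reviewers, but its decision logic can be summarized in code. In this sketch the three boolean inputs are a hypothetical simplification of the reviewers’ judgments, and folding a suggested service into the “specific plan” flag is our assumption.

```python
def treatment_plan_score(mentioned: bool, specific_plan_or_service: bool, time_frame: bool) -> int:
    """Rate how a treatment plan addresses one problem area (0-1-2).

    0: problem not mentioned or identified in the plan.
    1: problem mentioned or commented on, but no clear plan, time frame or service.
    2: problem clearly identified with a specific plan and/or a time frame.
    """
    if not mentioned:
        return 0
    if specific_plan_or_service or time_frame:
        return 2
    return 1


if __name__ == "__main__":
    # A plan that notes an employment problem but gives no plan or time frame.
    print(treatment_plan_score(mentioned=True, specific_plan_or_service=False, time_frame=False))  # 1
```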

2.3.7.3. Defining problems as “addressed”

The 2-week and 4-week TSR data were summed to give the number and types of services received by each patient in each of the seven problem areas. Prior work (See McLellan et al., 1997, 1999) had shown a differential impact of “specialized” sessions compared with simply mentioning a problem in a “general” group counseling session. Examples of specialized services included seeing a social worker for an employment or housing problem, meeting with a physician or nurse for a medical problem, or meeting with a family therapist for a relationship issue. After analyzing results for total services received, general counseling sessions and specialized services were analyzed again separately.

“Level” of specialized services received was coded with the same three-point rating scale described above: a problem received an “addressed score” of “2” if the TSR showed receipt of at least two specialized services, “1” if one specialized service was received, and “0” if no specialized services were received. The same clinical reviewers who rated the treatment plans also rated the level of services received based on the TSR data.
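Because the 2-week and 4-week TSR counts are summed before scoring, the addressed score for one problem area reduces to capping the total at 2. A minimal sketch, assuming the inputs are the counts of specialized services in a single problem area at each TSR interview (the parameter names are ours):

```python
def addressed_score(specialized_week2: int, specialized_week4: int) -> int:
    """Addressed score (0-1-2) for one problem area from summed TSR specialized-service counts."""
    if specialized_week2 < 0 or specialized_week4 < 0:
        raise ValueError("service counts cannot be negative")
    total = specialized_week2 + specialized_week4  # 2-week and 4-week TSR data are summed
    return min(total, 2)  # 0 -> 0, 1 -> 1, 2 or more -> 2


if __name__ == "__main__":
    print(addressed_score(1, 0))  # 1
    print(addressed_score(1, 3))  # 2
```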

2.3.7.4. Judging the “match” between assessment-treatment plan and assessment-services received

The three-point (0-1-2) ratings from the Assessment (ASI) were compared with those from the Treatment Plan and from the Treatment Services received (TSR) to determine the level of matching. In these calculations, each of the seven problem areas was examined separately. In each area, problem scores of zero were excluded from the matching calculations, as these patients had no need for services in that area. For non-zero problem scores, a Treatment Plan score was considered “matched” if it was equal to or higher than the corresponding ASI assessment score. That is, for a patient who received a “1” in the employment problem area, Treatment Plan scores of “1” or “2” were scored as “matched”; if a patient’s problem score was “2”, however, only a Treatment Plan score of “2” counted as a match. The scores in each problem area from the ASI assessment and from the TSR service report were compared using the same rule. χ² tests were then used to compare the two groups on the proportion of matched scores in each area.
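The matching rule can be expressed directly in code. In this sketch, `comparison_score` stands for either the Treatment Plan score or the TSR addressed score for the same area; the `match_rate` helper, which pools areas for one hypothetical patient, is our own illustration of how matched proportions could be tallied and is not a procedure described in the text.

```python
from typing import Iterable, Optional, Tuple

VALID_SCORES = (0, 1, 2)


def is_matched(asi_score: int, comparison_score: int) -> Optional[bool]:
    """Match rule for one problem area.

    Returns None when the ASI score is 0 (no need, so the area is excluded);
    otherwise the area is matched if the comparison score is at least the ASI score.
    """
    if asi_score not in VALID_SCORES or comparison_score not in VALID_SCORES:
        raise ValueError("scores must be 0, 1 or 2")
    if asi_score == 0:
        return None
    return comparison_score >= asi_score


def match_rate(pairs: Iterable[Tuple[int, int]]) -> Optional[float]:
    """Proportion of matched areas among those with a non-zero ASI score."""
    judged = [m for m in (is_matched(a, c) for a, c in pairs) if m is not None]
    if not judged:
        return None
    return sum(judged) / len(judged)


if __name__ == "__main__":
    # Hypothetical (ASI score, TP score) pairs for four problem areas of one patient.
    print(match_rate([(0, 0), (1, 2), (2, 1), (2, 2)]))  # 2 of 3 eligible areas matched
```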

Prior to any analyses, all sets of scores for the ASI and TSR, and all matching determinations, were independently reviewed by four trained individuals. The 131 ASIs yielded a total of 917 “problem scores” across the seven ASI sections (131 × 7), and the 126 TSRs yielded a total of 882 service scores across the seven TSR sections (126 × 7). Agreement among independent judges on problem and service coding was virtually 100%, and the few remaining differences were resolved through discussion.

Thus, the three-point scoring and matching algorithms were easy to implement and reliable. We recognize that this is an extremely simple (some would argue simplistic) method for defining problems, services and problem–service matching. It was used for two reasons. First, this method had produced successful problem–service matching in our prior published work (McLellan et al., 1997). Second, consistent with our goal of enabling direct translation of research to practice, the scoring system is simple enough to be used by clinical supervisors to monitor the match between patients’ problems, their treatment plans and the services they received.

2.3.7.5. Analyses

χ² tests were performed for between-condition (EA versus SA) comparisons on descriptive, categorical variables such as treatment program characteristics, counselor race, gender, degrees held and recovery status, and descriptive patient variables. Logistic regression analyses were used to compare the groups on all “matching” variables. Analysis of covariance (ANCOVA) models were used to compare the intervention groups on continuous variables such as numbers of services received, helping alliance scores and patient satisfaction scores. Variables on which the intervention groups (counselors and patients) differed significantly (p < 0.05) were considered for inclusion as covariates in the logistic regression and ANCOVA models. All analyses were performed using SPSS 11.5.
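For readers who want to see what a between-condition χ² comparison of two proportions involves, the sketch below computes the Pearson statistic for a 2×2 table (condition by matched/not matched) without a continuity correction. It is an illustration only: the analyses reported here were run in SPSS 11.5, whose exact options are not stated, and the counts in the usage line are hypothetical, not study data.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-square test (no continuity correction) for a 2x2 table:

                 matched   not matched
        EA          a           b
        SA          c           d

    Returns (statistic, p_value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row_ea, row_sa = a + b, c + d
    col_matched, col_unmatched = a + c, b + d
    if 0 in (row_ea, row_sa, col_matched, col_unmatched):
        raise ValueError("every row and column total must be positive")
    statistic = n * (a * d - b * c) ** 2 / (row_ea * row_sa * col_matched * col_unmatched)
    # For 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


if __name__ == "__main__":
    # Hypothetical counts: EA 30 matched / 10 not; SA 15 matched / 25 not.
    stat, p = chi_square_2x2(30, 10, 15, 25)
    print(round(stat, 2), p < 0.001)  # 11.43 True
```

The closed-form shortcut avoids computing expected counts cell by cell; for 1 degree of freedom the p-value can be obtained from the complementary error function without a statistics library.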

3. Results

3.1. Descriptive findings

3.1.1. Treatment programs

Despite our best efforts, only 10 of 20 outpatient programs approached agreed to participate. Among those agencies declining, seven reported that their high level of staff turnover would not allow participation in a 6-month project, two more had a policy of never participating in any type of research, and one program wanted increased monetary compensation for their participation. Many of these agencies reported that their staff were simply overwhelmed. Because of consent limitations, we were not able to collect information on agencies that declined participation. We know of no obvious differences in size, organization, or operating characteristics between those agencies that did not participate and those that did. However, we cannot rule out some subtle bias in our participating sample.

Of the 10 outpatient treatment programs that agreed to participate, one agency dropped out immediately after receiving training. Although the study had been fully described and a written description provided, and although they had signed consent forms itemizing when they would receive incentive payments (following the recruitment of patients into the study), the counselors wanted their incentive payments immediately after attending the training and receiving the Resource Guide software. When these payments were not provided, they dropped out of the study. We do not believe this site’s drop-out could have affected the study results, as only three counselors were enrolled at this site.

Data were compared on 42 variables from the ATI (Addiction Treatment Inventory). There were no significant differences (p > 0.10) between the groups (EA and SA) on 39 general measures of program characteristics (such as IRS status, University affiliation, length of program, number of treatment hours per week, average waiting list, average days wait, availability of medical, psychiatric or employment services, number of sessions with counselors, case managers, psychologists, etc.).

Differences were found in three measures: the average number of individual sessions provided by family therapists, with patients in the SA group receiving more services (EA = 0 versus SA = 0.4, p < 0.001); the average number of group sessions provided by family therapists, with patients in the EA group receiving more services (EA = 0.33 versus SA = 0, p < 0.01); and the average number of group sessions provided by a psychiatrist, with EA patients receiving more services (EA = 0.25 versus SA = 0, p < 0.05).

We also evaluated whether there had been different levels of use of any similar community resource guides by counselors in the two groups prior to the start of the intervention. In general, there were very low levels of use and there were no significant differences between groups on the use of referral sources such as yellow, white, or ‘blue’ pages or previous use of the United Way First Call for Help guide (p > 0.10).

3.1.2. Counselors

Fifty-six counselors were initially trained, approximately 5–6 counselors per program. Although not all counselors recruited patients into the study, no counselor refused the opportunity to participate in the training.

3.1.2.1. Participating/recruiting counselors versus those who dropped-out

Among the 56 counselors initially trained, 33 (59%) recruited at least one patient into the study and 23 (41%) did not recruit any patients, either because they left their job entirely or because other job requirements proved too demanding and they stopped participating. There were no significant between-groups differences in the percentage of counselors who recruited patients into the study (p = 0.79). These job turnover rates are consistent with those found in other studies of treatment programs in the United States (McLellan et al., 2003).

We compared the 33 recruiting counselors with the 23 counselors who left or withdrew from the study on 22 variables. There were no significant between-groups differences (p > 0.10) on 17 of the comparisons, including: number of years working in the substance abuse field, previous experience with the ASI, the number of treatment plans previously conducted, or counselors' opinion about the usefulness of the ASI data in treatment planning prior to the study. Additionally, there were no differences between those who recruited and those who did not on prior history of receiving substance abuse treatment, whether they felt that treatment was matched to their needs, or current recovery status.

Male counselors were more likely than female counselors to recruit patients after training (males = 74%, females = 44%, p < 0.05), and recruiting counselors had, on average, less education.

Finally, we do not believe that counselor compensation contributed to differing rates of patient recruitment, because counselor incentives were tied to the recruitment of patients, not just to attendance at the training.

3.1.2.2. Recruiting EA counselors versus recruiting SA counselors

We then compared the recruiting counselors in the EA group with those in the SA group. The 33 participating counselors recruited an average of four patients each over the 6-month enrollment period: 18 EA counselors recruited 74 patients and 15 SA counselors recruited 57 patients, for a total of 131 patients. The original goal was for each counselor to recruit five patients, for a total of 165 patients; however, as noted earlier, it is difficult to enforce a minimum number of recruited patients in a community-based treatment sample, as some counselors left their jobs before collecting five assessments and some recruited only three or four.
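The recruitment figures in this section follow from simple arithmetic on the reported counts. The short Python sketch below (standard library only; variable names are illustrative, not part of the study materials) recomputes the share of trained counselors who recruited, the mean number of patients per recruiting counselor, and the original enrollment target.

```python
# Counts reported in this section (counselor and patient recruitment).
trained_counselors = 56
recruiting = {"EA": 18, "SA": 15}   # counselors who recruited at least one patient
patients = {"EA": 74, "SA": 57}     # patients recruited in each group
target_per_counselor = 5            # original recruitment goal per counselor

n_recruiting = sum(recruiting.values())                  # recruiting counselors
n_patients = sum(patients.values())                      # total patients
share_recruiting = 100 * n_recruiting / trained_counselors
mean_per_counselor = n_patients / n_recruiting
target_total = target_per_counselor * n_recruiting       # planned sample size

print(f"{n_recruiting} of {trained_counselors} counselors recruited ({share_recruiting:.0f}%)")
print(f"{n_patients} patients, {mean_per_counselor:.2f} per recruiting counselor")
print(f"original target: {target_total} patients")
```

The mean of about 3.97 patients per recruiting counselor corresponds to the "average of four" reported above, and the gap between 131 and 165 reflects counselors who left or recruited fewer than five patients.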

We compared recruiting counselors in the EA and SA groups on the same 22 variables noted above. There were no significant between-groups differences (p > 0.10) on 19 of the comparisons, including: number of years working in the substance abuse field, previous training on the ASI, number of ASIs previously conducted, or their prior opinion about the usefulness of the ASI data in treatment planning. Additionally, there were no differences between groups on prior history of receiving substance abuse treatment, whether they felt that treatment was matched to their needs, or current recovery status.

There was a significantly higher percentage of African-American counselors (73 versus 17%, p < 0.01) in the SA group. The SA group also had a significantly higher proportion of counselors with greater than a high school education (74 versus 53% in EA group, p < 0.01). Finally, counselors from the SA group had more experience writing treatment plans than those in the EA group (82 versus 41, p < 0.005).

3.1.3. Patients

As described above, the 18 counselors in the EA group recruited 74 patients and the 15 counselors in the SA group recruited 57 patients (total = 131). The majority of those patients (71%) were African American, 19% were European American, 9% were Hispanic American, and 1% Native American. Sixty-three percent of the patients were male.

There were few significant between-groups patient differences at baseline on 64 demographic, patient history and problem severity measures from the ASI. There were no differences between groups on medical problems such as lifetime hospitalizations, need for treatment, chronic medical problems, or interviewer severity ratings. There were no significant differences between groups on employment issues such as years of education, interviewer severity ratings, having a driver’s license or a car, or recent employment pattern. Differences were not seen on drug and alcohol variables such as number of days or years of alcohol or cocaine use, days of drug or alcohol problems, importance of treatment or interviewer severity ratings. Differences were not found on the following legal, family and psychiatric variables: number of lifetime convictions, percent awaiting charges, trial or sentencing, days of family or social conflicts, patient ratings on need for treatment for legal, family or psychiatric problems, nor interviewer severity ratings for these areas. Finally, there were no differences found between groups on number or type of psychiatric problems, history of prior treatment or experiences with depression, anxiety, hallucinations, or suicidal thoughts or attempts.

There were several differences: patients in the EA group received less money from welfare in the past month ($78 versus $230, p < 0.01) and reported having fewer dependents (0.05 versus 1.1, p < 0.05). EA patients also reported spending more money on drugs in the 30 days prior to treatment ($267 versus $25, p < 0.001) and having more days of illegal activities in the past month (1.6 days versus 0 days, p < 0.001). Additionally, EA patients were more likely to be on probation or parole (43 versus 14%, p < 0.001) and more likely to have had someone from the criminal justice system suggest they enter substance abuse treatment (38 versus 9%, p < 0.001). Finally, there were more males (73 versus 47%, p < 0.001) and fewer African American patients (60 versus 84%, p < 0.01) in the EA group.

Including all of these patient-level variables in the analysis model is impractical, so some judgment was used in selecting those to include. Some of these client-level differences appeared to result from between-site heterogeneity in the type of patients enrolled. For example, differences on some variables related to criminality seemed to be due to the fact that one site in the intervention group specifically recruited patients from the criminal justice system.

As a result of these considerations, ethnicity and gender of clients, and the money spent on drugs in the previous 30 days, were included as covariates in the models.

3.2. Use of the resource guide

3.2.1. Pertains to EA group only

Eleven of 18 counselors (61%) made at least one referral using the CASPAR system following the training. Four counselors used CASPAR with all of their patients, and nine used it with more than half of their patients. Patients received a total of 64 ‘wrap-around’ service referrals from the CASPAR system, an average of two referrals per patient (range = 0–8).

Psychiatric services accounted for the largest number of referrals (39%, n = 25) and employment services accounted for 27% of referrals (n = 17). Family/social service referrals (n = 10) and medical service referrals (n = 8) accounted for 16 and 13%, respectively. Legal services accounted for only 4% (n = 3) and housing services for 3% (n = 2). More detail on the use of the resource guide can be found in Gurel et al. (2005).

3.3. Findings related to hypotheses

3.3.1. H1: Patients in the EA group would have treatment plans that better matched their problems identified at admission

It should be noted that significant levels of problems (and need for treatment) were identified in six of the seven problem areas on the ASI (drug, alcohol, medical, psychiatric, employment and family); however, only a very small number of patients reported problems in the Legal section. This could be because, by the time patients with legal problems are sent into the treatment system, they are already linked with the needed services. As a result, data are not reported for the legal domain in the figures showing unadjusted percent matching analyses.

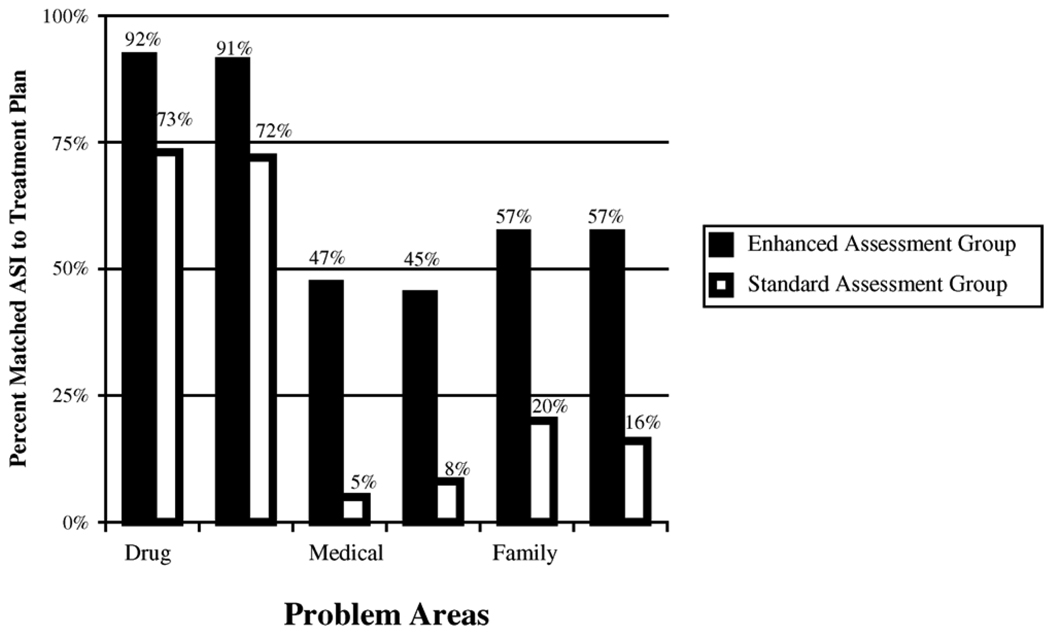

Fig. 1 shows the percent of patient problems at admission that were addressed by the Treatment Plan (TP). ASI (problem)–TP matching rates for the EA and SA groups are shown for six problem areas (drug, alcohol, medical, employment, psychiatric, and family), for all patients reporting problems in that area, with unadjusted significance levels obtained from χ2-tests. Again, if there was no problem and no mention in the TP, this was not scored as a match. In each of the six problem areas presented, patients in the EA group were more likely than patients in the SA group to have their specific problems addressed in the treatment plan (all p < 0.05).

Fig. 1.

Percent of patients whose treatment plans list services that match the problems identified at the admission assessment, by treatment group (SA, standard assessment; EA, enhanced assessment). In all content areas the EA group was significantly better matched than the SA group (p < 0.05 for family problems, p < 0.01 for drug and alcohol problems, p < 0.001 for psychiatric problems and p < 0.001 for medical problems). Note: unadjusted rates.
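The matching rule described above (only patients who report a problem in a domain enter the denominator, so "no problem and no mention" is never a match) can be made concrete with a small sketch. This is an illustrative Python reconstruction under assumed data structures, not the study's scoring software; the `PatientRecord` fields and the example records are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PatientRecord:
    """Hypothetical per-patient record; field names are illustrative only."""
    group: str                                           # "EA" or "SA"
    asi_problems: set[str] = field(default_factory=set)  # domains flagged on the ASI at admission
    plan_domains: set[str] = field(default_factory=set)  # domains addressed in the treatment plan


def match_rate(records: list[PatientRecord], domain: str, group: str) -> float | None:
    """Percent of patients in `group` with an admission problem in `domain`
    whose treatment plan addresses that domain.

    Patients with no problem in the domain are left out of the denominator,
    so "no problem and no mention in the plan" never counts as a match.
    Returns None when no patient in the group has a problem in the domain.
    """
    at_risk = [r for r in records if r.group == group and domain in r.asi_problems]
    if not at_risk:
        return None
    matched = sum(domain in r.plan_domains for r in at_risk)
    return 100 * matched / len(at_risk)


# Hypothetical records for illustration (not study data).
records = [
    PatientRecord("EA", {"drug", "family"}, {"drug", "family"}),
    PatientRecord("EA", {"drug"}, {"drug"}),
    PatientRecord("EA", set(), set()),              # no problem, no mention: excluded
    PatientRecord("SA", {"drug"}, set()),
    PatientRecord("SA", {"drug", "medical"}, {"drug"}),
]
for g in ("EA", "SA"):
    print(g, match_rate(records, "drug", g))
```

Returning `None` rather than 0 for a domain with no at-risk patients keeps an empty denominator from being reported as a 0% match.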

As described previously, there were significant differences between the intervention groups in counselor ethnicity and level of education, and in client gender, ethnicity and money spent on drugs, so these variables were included as covariates in logistic regression models comparing problem-to-treatment-plan matching. Table 1 shows both unadjusted and adjusted odds ratios and p-values obtained from these analyses for the seven domains. Adjusted rates of matching were significantly higher in the EA group for all areas: alcohol (p < 0.01), drug (p < 0.01), psychiatric (p < 0.01), employment (p < 0.001), medical (p < 0.0001), family (p < 0.01), and legal (p < 0.01).
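For a single binary predictor (EA versus SA), an unadjusted odds ratio is the cross-product ratio of a 2 × 2 table of group by match status; it equals exp(β) from a logistic regression with group as the only predictor. The Python sketch below computes it with a Wald 95% confidence interval. The counts are hypothetical, and the adjusted odds ratios would instead require fitting the full logistic model with the covariates listed under Table 1, which this sketch does not attempt.

```python
from __future__ import annotations

import math


def odds_ratio(a: int, b: int, c: int, d: int, z: float = 1.96) -> tuple[float, float, float]:
    """Odds ratio and Wald confidence interval for a 2x2 table.

    Rows: EA (a = matched, b = not matched) and SA (c = matched, d = not matched).
    Assumes every cell is non-zero.
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    lo = math.exp(math.log(or_) - z * se_log)
    hi = math.exp(math.log(or_) + z * se_log)
    return or_, lo, hi


# Hypothetical counts, for illustration only (not study data).
or_, lo, hi = odds_ratio(a=30, b=10, c=15, d=20)
print(f"OR = {or_:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

With these counts the odds of a match are 3.0 in the first row and 0.75 in the second, giving an odds ratio of 4.0.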

Table 1.

Adjusted and unadjusted odds ratios (OR) and p-values for intervention (EA) group (reference group = SA) for ASI–TCP matches and ASI–TSR matches

| Match type | ASI domain | Unadjusted OR | Unadjusted p | Adjusted OR<sup>a</sup> | Adjusted p<sup>a</sup> |
|---|---|---|---|---|---|
| ASI–TCP | Alcohol | 3.92 | 0.0181 | 7.45 | 0.021 |
| | Drug | 4.22 | 0.0086 | 13.2 | 0.0023 |
| | Psych | 7.20 | 0.0001 | 8.32 | 0.0051 |
| | Employ | 9.34 | <0.0001 | 10.66 | 0.0003 |
| | Medical | 14.52 | <0.0001 | 35.93 | <0.0001 |
| | Family | 5.26 | 0.0023 | 11.79 | 0.009 |
| | Legal | 1.5312 | <0.0001 | 1.2812 | 0.0067 |
| ASI–TSR | Alcohol | 10.00 | <0.0001 | 13.98 | 0.0010 |
| | Drug | 9.64 | <0.0001 | 15.74 | 0.0012 |
| | Psych | 12.75 | <0.0001 | 11.23 | 0.0026 |
| | Employ | 2.78 | 0.0010 | 1.86 | 0.2346 |
| | Medical | 4.93 | 0.0010 | 5.20 | 0.0183 |
| | Family | 0.57 | 0.3078 | 0.77 | 0.7558 |
| | Legal | 3.2111 | 0.1276 | – | – |

<sup>a</sup> Covariates in model include: counselor race (1 = black, 0 = other), counselor education (1 = beyond high school, 0 = high school or lower), client race (1 = black, 0 = other), client gender (1 = male, 0 = female), and money spent on drugs during past 30 days (1 = yes, 0 = no).
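The covariate coding in the Table 1 footnote can be expressed as a small encoding function. This Python sketch is illustrative only: the input labels (`"black"`, `"beyond_high_school"`, `"male"`) are hypothetical placeholders for however the raw data were recorded, and "money spent on drugs (1 = yes, 0 = no)" is assumed here to mean any spending greater than zero.

```python
from __future__ import annotations


def encode_covariates(counselor_race: str, counselor_education: str,
                      client_race: str, client_gender: str,
                      drug_spending_30d: float) -> dict[str, int]:
    """Binary covariate coding following the Table 1 footnote.

    Input label values are hypothetical placeholders; the drug-spending
    indicator assumes 'yes' means any amount greater than zero.
    """
    return {
        "counselor_black": int(counselor_race == "black"),
        "counselor_beyond_hs": int(counselor_education == "beyond_high_school"),
        "client_black": int(client_race == "black"),
        "client_male": int(client_gender == "male"),
        "any_drug_spending_30d": int(drug_spending_30d > 0),
    }


# Hypothetical example row (not study data).
row = encode_covariates("black", "high_school", "white", "male", 267.0)
print(row)
```

Each indicator uses the footnote's reference category as 0, so the fitted coefficients would compare against "other" race, high school or lower education, female clients and no drug spending.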

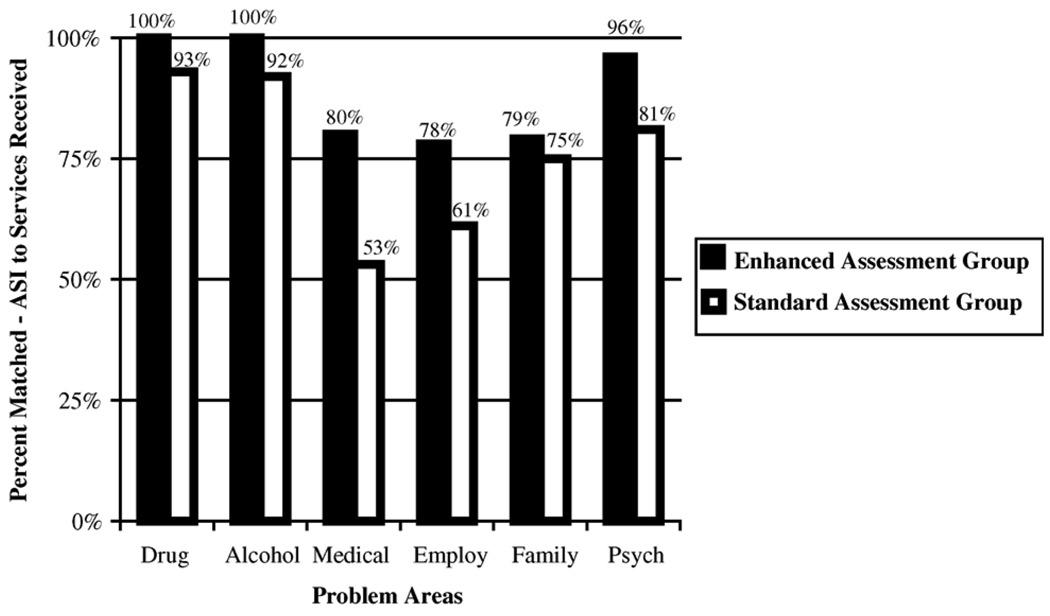

3.3.2. H2: Patients in the EA group would receive services that were better matched to their admission problems

Fig. 2 shows the percent of patients whose problems at admission (ASI) were addressed by services received. The ASI-to-TSR (problem to services) matching rates for the EA and SA groups are presented for the same problem areas as above, with unadjusted significance levels obtained from χ2-tests. Data were collected for services received over the first 4 weeks of treatment, and analyses were restricted to the receipt of “specialized” services, as those were the type of services we were trying to increase. The problem-to-services match was significantly higher for patients in the EA group in the areas of drug, alcohol, medical and psychiatric problems (all p < 0.01). EA patients received more services in the employment and family domains; however, these differences were not significant.

Fig. 2.

Percent of patients who received services that match the problems identified at the admission assessment, by treatment group (SA, standard assessment; EA, enhanced assessment). The EA group received more services in the drug, alcohol, medical and psychiatric areas (p < 0.01). Note: unadjusted rates.
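The problem-to-services match differs from the treatment-plan match in two ways described above: only "specialized" services count, and only those received during the first 4 weeks of treatment. The Python sketch below illustrates that filter for one patient. The `Service` fields are hypothetical, and the 28-day window assumes days are counted from 1 at admission.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical service-log entry; field names are illustrative only."""
    domain: str        # e.g. "psychiatric"
    specialized: bool  # True for "specialized", False for "general" services
    day: int           # day of treatment on which the service was received (1 = admission day)


def services_match(asi_problems: set[str], services: list[Service], domain: str,
                   window_days: int = 28) -> bool | None:
    """True if an admission problem in `domain` was met by a specialized service
    within the first `window_days` of treatment; None if there was no problem
    in that domain (the patient is then excluded from the denominator)."""
    if domain not in asi_problems:
        return None
    return any(s.domain == domain and s.specialized and s.day <= window_days
               for s in services)


# Hypothetical patient (not study data).
log = [Service("psychiatric", True, 10), Service("drug", False, 3), Service("medical", True, 40)]
problems = {"psychiatric", "drug", "medical"}
for dom in ("psychiatric", "drug", "medical", "family"):
    print(dom, services_match(problems, log, dom))
```

In this example the drug problem is not matched because only a general service was received, and the medical problem is not matched because the specialized service fell outside the 4-week window.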

The lower half of Table 1 shows the unadjusted and adjusted odds ratios and p-values obtained from logistic regression analyses on the seven problem domains. Adjusted rates of ASI–TSR (problem to services) matching were significantly higher in the EA group in the areas of alcohol (p < 0.001), drug (p < 0.01), psychiatric (p < 0.01), and medical (p < 0.05), with no significant differences found for the employment and family areas.

3.3.3. H3: Patients and counselors in the EA group would form better relationships

We hypothesized that EA patients would form stronger relationships with their counselors and have increased patient satisfaction compared with patients in the SA group. Contrary to our hypothesis, we found no between-groups differences in either helping alliance or patient satisfaction measures (p > 0.10). Scores for both instruments were average to above average for each group, indicating generally acceptable rates of overall rapport and satisfaction.

3.3.4. H4: Patients in the EA group would show better during-treatment performance

3.3.4.1. Number of services received

Table 2 shows the adjusted average numbers of services received in the EA and SA groups. The EA group averaged significantly more total sessions (specialized and general) than the SA group (F(1,111) = 22.24, p < 0.001). These differences were most pronounced (across problem domains) in the category of “specialized” services (F(1,111) = 22.81, p < 0.001), and less pronounced in “general” services (F(1,111) = 8.70, p < 0.01).

Table 2.

Average number of services received SA and EA groups

| Service type | SA group mean (S.D.) | EA group mean (S.D.) | F |
|---|---|---|---|
| Total # of specialized and general services received*** | 31 (29) | 100 (69) | 22.24 |
| Specialized services*** | 14 (23) | 67 (51) | 22.81 |
| General services** | 17 (12) | 34 (25) | 8.70 |
| Total # of specialized services received by content area | | | |
| Drug and alcohol*** | 5 (8) | 19 (16) | 11.06 |
| Psych services*** | 3 (7) | 25 (26) | 21.74 |
| Employment services** | 1 (2) | 5 (5) | 7.09 |
| Medical services* | 3 (10) | 15 (21) | 5.74 |
| Family services (p = 0.050) | 2 (3) | 3 (4) | 3.92 |
| Legal services | 0 (0) | 1 (2) | 2.39 |
| Total services received off-site via referral*** | 6 (15) | 20 (24) | 15.23 |
| Specialized services off-site via referral** | 5 (14) | 15 (17) | 37.74 |
| General services off-site via referral*** | 7 (3) | 5 (10) | 7.45 |

Analyses adjusted for counselor race, counselor education, client race, client gender and money spent on drugs during past 30 days.

\* p < 0.05; \*\* p < 0.01; \*\*\* p < 0.001.

Within the specific problem areas, the EA group received significantly more specialized services in the drug and alcohol (F(1,112) = 11.06, p < 0.001), psychiatric (F(1,112) = 21.74, p < 0.001), employment (F(1,112) = 7.09, p < 0.01) and medical (F(1,112) = 5.74, p < 0.05) domains, with no significant differences found in the legal (F(1,111) = 2.39, p = 0.13) and family (F(1,112) = 3.92, p = 0.05) areas.

Finally, with regard to services received specifically through off-site providers via referrals from the index treatment program, the EA group averaged significantly more off-site referred total sessions (specialized and general) than the SA group (F(1,126) = 15.23, p < 0.001). These differences were most pronounced in the category of “general” services (F(1,126) = 7.45, p < 0.001), and less pronounced in the category of “specialized” services (F(1,126) = 37.74, p < 0.01).

3.3.4.2. Treatment retention and completion rates

Type of discharge was available for 121 clients (64 EA and 57 SA). In the EA group, 34% of patients left treatment “against medical advice” (AMA), compared with 58% in the SA group (χ2(3, N = 121) = 10.30, p < 0.05). Program completion rates were also higher in the EA group, with 53% completing the planned duration and activities of treatment compared with 24% in the SA group (χ2(1, N = 108) = 9.72, p < 0.01).
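The completion comparison above is a χ2 test on a 2 × 2 table (group by completed/not completed, 1 degree of freedom); the AMA comparison uses four discharge categories (3 degrees of freedom) and is not covered here. The Python sketch below computes an uncorrected Pearson χ2 and its p-value for the 2 × 2 case, using the identity that for 1 degree of freedom P(X > χ2) = erfc(√(χ2/2)). The counts are hypothetical, and whether the original analysis applied a continuity correction is not stated.

```python
from __future__ import annotations

import math


def chi_square_2x2(table: list[list[int]]) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) and p-value for a 2x2 table, df = 1."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Hypothetical counts (rows: EA, SA; columns: completed, did not complete). Not study data.
chi2, p = chi_square_2x2([[30, 20], [15, 35]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.4f}")
```

The same statistic can be checked with the shortcut n(ad − bc)² / (row and column totals multiplied together), which gives about 9.09 for these counts.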

4. Discussion and limitations

We expected that an easy-to-use, computerized directory of available resources, together with a brief training (2 h) on its use in the context of a research-based assessment instrument, would improve the quality of patient treatment planning, the number and appropriateness of services delivered, patient–counselor alliance, and patient satisfaction. We found that we were indeed able to improve the “match” between information provided at intake and the issues targeted in the treatment plan. The CASPAR procedures were also associated with more services received by patients and a better “match” between those services and patients' admission problems. However, we did not see evidence that the CASPAR procedures improved either patient satisfaction or helping alliance.

4.1. Limitations

Before we discuss the implications of these findings it is important to note some limitations. Primary limitations in this study include its implementation in just one geographical location (Philadelphia, PA, USA), randomization at the program level instead of the counselor or patient level, the number of treatment programs that would not participate in this research study, staff turnover, and the lack of post-treatment outcome data.

This study was restricted to treatment programs in Philadelphia because we wanted to test this “technology transfer” method locally before incurring the expense of developing a broader application. CASPAR systems can be created for any geographic location, and the system is currently being developed for a study in two locations outside of Philadelphia. We are also discussing the design of CASPAR systems in several US states as well as at several international sites (Lebanon, Egypt, Iran and Northern Ireland).

With regard to random assignment to treatment conditions, we would of course have saved ourselves analysis problems by performing a “within programs” design with random assignment of counselors and patients to the two conditions. However, those procedures are not practical and simply would not be tolerated at community-based treatment programs. For example, it may seem more appropriate to train all counselors in the EA procedures and randomly assign patients to receive either the standard or enhanced assessment/treatment plan. This presupposes that counselors at a treatment program, trained in matching patient problems at assessment with services provided, and with access to a resource guide that allows them to easily find services in the community, will be willing to use these skills and resources on only specified patients. Our experience is that counselors will not and in fact, should not be asked to do this. A second alternative, randomly assigning counselors to the SA or EA groups, is also not realistic. Again, it assumes that a counselor assigned to the EA group, who receives the training and resource guide, will not share it with a fellow counselor who had been randomly assigned to the SA group, but works at the same treatment program. Therefore we randomly assigned treatment programs to either the EA or SA group and adjusted for noteworthy counselor and patient differences in our analyses.
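Program-level (cluster) randomization, as described above, means every counselor and patient inherits the condition assigned to their program, so trained and untrained counselors never share a site. The Python sketch below shows one simple way to do this with a seeded shuffle and an even split. The program and counselor identifiers are hypothetical, and this is not the procedure the authors report using; it only illustrates the cluster-level assignment principle.

```python
from __future__ import annotations

import random


def randomize_programs(program_ids: list[str], seed: int) -> dict[str, str]:
    """Assign whole programs (clusters) to EA or SA, split as evenly as possible.

    Counselors and patients inherit their program's arm, so no site mixes conditions.
    """
    ids = sorted(program_ids)       # fixed starting order so the seed alone determines the result
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {pid: ("EA" if k < half else "SA") for k, pid in enumerate(ids)}


# Hypothetical identifiers for 10 programs and two counselors (not study data).
programs = [f"program_{k:02d}" for k in range(1, 11)]
arms = randomize_programs(programs, seed=2024)
counselor_site = {"counselor_a": "program_03", "counselor_b": "program_07"}
counselor_arm = {c: arms[site] for c, site in counselor_site.items()}
print(arms)
print(counselor_arm)
```

Because analysis then compares clusters rather than individuals, counselor and patient differences between arms (such as those reported in Section 3.1) have to be handled by covariate adjustment, as was done in this study.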

An additional problem is that we had to approach 20 treatment programs to obtain 10 that were willing to participate in the study. Although these programs were randomly assigned, a larger number of participating programs (or a larger percentage of approached programs agreeing to participate) would have allowed for more confidence in the results. More importantly, half of the programs declined to participate even though the study had incentives for treatment program directors, counselors, and patients. This is important both for the generalizability of the findings and for the larger goal of improving technology transfer. Seven of the 10 treatment programs that declined participation reported that their staff turnover rates simply would not permit implementation of any type of study. This level of counselor turnover was particularly disconcerting from a clinical standpoint. We have seen this same type of employee instability in a US-based national study (see McLellan et al., 2003) and have noted the implications of this instability for technology transfer efforts of any kind. There is little doubt that many of these outpatient programs are overwhelmed by the severity of patient problems, the counselor turnover and the demands of managed care procedures. We continue to feel that those programs that did not participate would also have benefited, but it remains a challenge to convince overwhelmed and skeptical programs of the “added value” in adopting new research-based technology.

As discussed above, we originally trained 56 counselors. Over the 6 months of the study, 32% (18 of 56) had left their place of employment and another 27% (15 of 56) of counselors dropped out of the study (although some of these counselors recruited a small number of patients). This level of staff change is disruptive to clinical and management efforts and underscores the need for efforts to improve working conditions (salaries, training, advancement, etc.) for the primary care givers in substance abuse treatment programs.

Of note is the difference between the percentage of counselors leaving their jobs in the EA group (21%, 6 of 29 counselors) and in the SA group (44%, 12 of 27). It is possible, though admittedly speculative, that the EA procedures led to greater mastery and job satisfaction among counselors in that group.
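The turnover percentages reported in this section follow directly from the counts, and the EA and SA denominators (29 and 27) together make up the 56 counselors originally trained. The short Python check below recomputes them; the labels are illustrative.

```python
# Counselor turnover figures reported in this section: (numerator, denominator).
turnover = {
    "left employment (all)": (18, 56),
    "dropped out of study (all)": (15, 56),
    "left employment (EA)": (6, 29),
    "left employment (SA)": (12, 27),
}

pct = {label: 100 * k / n for label, (k, n) in turnover.items()}
for label, value in pct.items():
    print(f"{label}: {value:.1f}%")
```

No significance test is computed here; the comparison of EA and SA turnover remains descriptive, as in the text.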

Finally, patient follow-up measures would have provided valuable information for our study. Follow-up measures were not planned for this study because we felt it was important first to explore whether the training and resource guide would affect counselors' treatment planning, the services received by patients, and patients' levels of engagement and participation in treatment. Since these during-treatment results are in the expected direction, we now believe there is justification for further exploration of the longer-term effects of the enhanced admission assessment procedure, and we have implemented a new study that includes further data collection on patient progress and outcomes.

4.2. Implications

Although there are important limitations, the results of this study are nonetheless quite interesting and promising. In fact, we were surprised at the extent to which standard measures of performance were so significantly and consistently better in the EA group, especially given the modest level of additional training and that the software-based resource guide included only services that had always been available within the community. This is important since it indicates that improved counselor performance was not because of the availability of new or additional services in Philadelphia. The computerized resource guide developed for this study was a modification of a resource guide available in print form in most treatment programs and social service agencies throughout Philadelphia – the United Way’s First Call for Help (Mackie and Walton, 1998).

Further, we do not believe that the greater number and appropriateness of services received by EA patients was due to more experience with, or better learning of, the ASI by the EA counselors. Counselors showed approximately equal understanding of the ASI across groups after participating in the training. Finally, and most surprisingly, the better performance was not attributable to better helping alliances or greater patient satisfaction in the EA group, even though this was a specific hypothesis of the study.

We think our findings are due to two factors. First, we provided clear, concrete instruction to counselors regarding why and how to use the ASI assessment information. Second, we provided an easy-to-use resource, the CASPAR system, that makes it possible for counselors to carry out the service referrals without adding to their already heavy workloads. We believe this gave new meaning to the admission assessment, which had previously been thought by most to be just another mandatory piece of paperwork (see McLellan et al., 2003). It also gave a clear rationale for the development and use of treatment plans. We were happy to see that all categories of the resource guide were used at least once (the most frequently used services were in the educational, family, medical and psychological categories). Interestingly, counselors at several sites reported using the resource guide not only for their patients in treatment, but also for their own needs.

In summary, we are encouraged by these early efforts on this technology transfer project. We look forward to implementing further studies toward our larger goal of improving the accessibility and quality of patient care in substance abuse treatment programs.

Supplementary Material

Acknowledgments

The authors are particularly grateful to the United Way of Southeastern Pennsylvania for permission to use their electronic edition of the First Call for Help as a basis for our resource guide. The authors also thank the patients and counselors at the participating treatment agencies for their help throughout the data collection process; Meghan Love, David Festinger, Heather Eshelman and Molly Edwards, who helped in the development of this idea and provided the corresponding training; Joyelle McNellis, Keith Royal and Van Lam, who helped with development of the resource guide software; and Karin Eyrich, who helped in the final reviews of the manuscript. Additional thanks go to the Office of National Drug Control Policy for their continued support of DENS, to NIDA for their support through NIDA Grant # R01 DA13134-01, and to our project officers at NIDA, Jerry Flanzer and Thomas Hilton. The Philadelphia CASPAR Resource Guide developed as part of this grant is available at no cost to the clinical and research communities. None of the materials will be marketed or sold, and no author of this manuscript will profit from software or other materials made for this grant.

References

- Attkisson CC, Zwick R. The client satisfaction questionnaire: psychometric properties and correlations with service utilization and psychotherapy outcome. Eval. Program Plann. 1982;5:233–237. doi: 10.1016/0149-7189(82)90074-x.

- Backer TE, David SL. Synthesis of behavioral science learnings about technology transfer. In: Backer TE, David SL, Soucy G, editors. Reviewing the Behavioral Science Knowledge Base on Technology Transfer, 155. NIDA Res. Monogr. Rockville, MD: National Institute on Drug Abuse; 1995. pp. 262–279.

- Barber JP, Luborsky L, Crits-Christoph P, Thase ME, Weiss R, Frank A, Onken L, Gallop R. Therapeutic alliance as a predictor of outcome in treatment of cocaine dependence. Psychother. Res. 1999 Spring;9(1):54–73.

- Barber JP, Luborsky L, Gallop R, Crits-Christoph P, Frank A, Weiss RD, Thase ME, Connoly MB, Gladis M, Foltz C, Siqueland L. Therapeutic alliance as a predictor of outcome and retention in the National Institute on Drug Abuse collaborative cocaine treatment study. J. Consult. Clin. Psychol. 2001;69(1):119–124. doi: 10.1037//0022-006x.69.1.119.

- Carise D. Alcoholism: family disease/family treatment. In: McCown W, editor. Treatment in High-Risk Families: A Consultation/Crisis Intervention Paradigm. New York, NY: Hayworth; 1992.

- Carise D. Effect of drug of choice, family involvement, and employer involvement on treatment completion rates of substance abusers. In: Harris LS, editor. Problems of Drug Dependence 1994. Proceedings of the 56th Annual Scientific Meeting, vol. 152, no. 2, The College on Problems of Drug Dependence, Inc. NIDA Res. Monogr; Rockville, MD: National Institute on Drug Abuse; 1995. p. 352.

- Carise D, Cornely W, Gurel O. A successful researcher-practitioner collaboration in substance abuse treatment. J. Subst. Abuse Treat. 2002;23(2):157–162. doi: 10.1016/s0740-5472(02)00260-x.

- Carise D, Gürel O. Benefits of integrating technology with treatment: the DENS project. In: Sorensen JL, Rawson R, Guydish J, Zweben JE, editors. Drug Abuse Treatment Through Collaboration: Practice and Research Partnerships that Work. Washington, DC: APA; 2002. pp. 181–195. Chapter 11.

- Carise D, McLellan AT, Gifford LS, Kleber HD. Developing a national addiction treatment information system: an introduction to the Drug Evaluation Network System. J. Subst. Abuse Treat. 1999;17(1–2):67–77. doi: 10.1016/s0740-5472(98)00047-6.

- Carise D, McLellan AT, Gifford LS. Development of a ‘Treatment Program’ descriptor – the addiction treatment inventory. Subst. Use Misuse. 2000;35(12–14):1797–1818. doi: 10.3109/10826080009148241.

- Crevecoeur D, Finnerty B, Rawson RA. Los Angeles County Evaluation System (LACES): bringing accountability to alcohol and drug abuse treatment through a collaboration between providers, payers, and researchers. J. Drug Issues. 2000;32:865–880.

- Dexter SB, Goetzke J. A behavioral intervention to increase appointment compliance in a substance abuse treatment program; Proceedings of the presentation at the Association for Behavioral Analysts Conference; Washington, DC: 1995.

- Epstein EE, McCrady BS. Behavioral couples treatment of alcohol and drug use disorders: current status and innovations. Clin. Psychol. Rev. 1998;18:689–711. doi: 10.1016/s0272-7358(98)00025-7.

- Fals-Stewart W, Birchler GR, O’Farrell TJ. Behavioral couples therapy for male substance abusing patients: Effects on relationship adjustment and drug-using behavior. J. Consult. Clin. Psychol. 1996;64:959–972. doi: 10.1037//0022-006x.64.5.959.

- Festinger DS, Lamb RJ, Kirby KC, Marlowe DB. The accelerated intake: a method for increasing initial attendance to outpatient cocaine treatment. J. Appl. Behav. Anal. 1996;29(3):118–122. doi: 10.1901/jaba.1996.29-387.

- Fureman I, McLellan AT, Alterman A. Training for and maintaining interviewer consistency with the ASI. J. Subst. Abuse Treat. 1994;11(3):223–237. doi: 10.1016/0740-5472(94)90080-9.

- Gurel O, Carise D, Kendig K, McLellan AT. Developing CASPAR: A computer-assisted system for patient assessment and referral. J. Subst. Abuse Treat. 2005;28(3):281–289. doi: 10.1016/j.jsat.2005.02.005.

- Higgins ST, Budney AJ, Bickel WK, Badger GJ. Participation of significant others in outpatient behavioral treatment predicts greater cocaine abstinence. Am. J. Drug Alcohol Abuse. 1994;20:47–56. doi: 10.3109/00952999409084056.

- Hser YI, Polinsky ML, Maglione M, Anglin MD. Matching clients’ needs with drug treatment services. J. Subst. Abuse Treat. 1999;16(4):299–305. doi: 10.1016/s0740-5472(98)00037-3.

- Institute of Medicine. Development of Medications for the Treatment of Opiate and Cocaine Addictions: Issues for the Government and Private Sector. Washington, DC: National Academy Press; 1995.

- Kluger MP, Karras A. Strategies for reducing missed initial appointments in a community mental health center. Community Ment. Health J. 1983;19(2):137–143. doi: 10.1007/BF00877606.

- Lamb S, Greenlick MR, McCarty D, editors. Bridging the Gap between Practice and Research. Institute of Medicine Report. Washington, DC: National Academy Press; 1998.

- Luborsky L, Barber JP, Siqueland L, Johnson S, Najavits LM, Frank A, Daley D. The revised helping alliance questionnaire (HAQ-II): psychometric properties. J. Psychother. Pract. Res. 1996;5(3):260–271.

- Mackie MW, Walton T, editors. First Call for Help: A Guide to Community Services, 1998–1999. Dorland’s Directories. Philadelphia, PA: United Way of Southeastern Pennsylvania; 1998.

- McLellan AT, Luborsky L, Cacciola J, Griffith J, Evans F, Barr H, O’Brien CP. New data from the Addiction Severity Index: reliability and validity in three centers. J. Nerv. Ment. Dis. 1985;173(7):412–422. doi: 10.1097/00005053-198507000-00005.

- McLellan AT, Kushner H, Metzger D, Peters R, Grissom G, Pettinati H, Argeriou M. The fifth edition of the Addiction Severity Index. J. Subst. Abuse Treat. 1992a;9(3):199–213. doi: 10.1016/0740-5472(92)90062-s.

- McLellan AT, Alterman AI, Cacciola J, Metzger D, O’Brien CP. A new measure of substance abuse treatment: Initial studies of the Treatment Services Review. J. Nerv. Ment. Dis. 1992b;180(2):101–110. doi: 10.1097/00005053-199202000-00007.

- McLellan AT, Grissom G, Durell J, Alterman AI, Brill P, O’Brien CP. Substance abuse treatment in the private setting: are some programs more effective than others? J. Subst. Abuse Treat. 1993;10:243–254. doi: 10.1016/0740-5472(93)90071-9.

- McLellan AT, Grissom GR, Zanis D, Randall M, Brill P, O’Brien CP. Problem-service ‘matching’ in addiction treatment: a prospective study in four programs. Arch. Gen. Psychiatry. 1997;54:730–735. doi: 10.1001/archpsyc.1997.01830200062008.

- McLellan AT, Hagan TA, Levine M, Meyers K, Gould F, Bencivengo M, Durell J, Jaffe J. Does clinical case management improve outpatient addiction treatment? Drug Alcohol Depend. 1999;55(1–2):91–103. doi: 10.1016/s0376-8716(98)00183-5.

- McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public’s demand for quality care? J. Subst. Abuse Treat. 2003;25:1–5.

- Miller WR, Rollnick S. Motivational Interviewing. NY: The Guilford Press; 1991.

- O’Farrell TJ, Murphy CM. Marital violence before and after alcoholism treatment. J. Consult. Clin. Psychol. 1995;63:256–262. doi: 10.1037//0022-006x.63.2.256.

- O’Loughlin S. The effect of a pre-appointment questionnaire on clinical psychologist attendance rates. Br. J. Med. Psychol. 1990;63:5–9. doi: 10.1111/j.2044-8341.1990.tb02851.x.

- Rawson RA, Stein J. Blending clinical practice and research: Forging partnerships to enhance drug addiction treatment. J. Subst. Abuse Treat. 2002;23:67–68.

- Sisson RW, Azrin NH. The community reinforcement approach. In: Hester RK, Miller WR, editors. Handbook of Alcoholism Treatment Approaches: Effective Alternatives. NY: Pergamon Press; 1989. pp. 242–258.

- Stark MJ, Campbell BK, Brinkerhoff CV. Hello, may we help you? A study of attrition prevention at the time of the first phone contact with substance-abusing clients. Am. J. Drug Alcohol Abuse. 1990;16:67–76. doi: 10.3109/00952999009001573.

- Swenson TR, Pekarik G. Interventions for reducing missed initial appointments at a community mental health center. Community Ment. Health J. 1988;24(3):205–218. doi: 10.1007/BF00757138.