Abstract

Sleep deprivation adversely affects the ability to perform cognitive tasks, but theories range from predicting an overall decline in cognitive functioning (because of reduced stability in attentional networks) to claiming specific deficits in executive functions. In the present study, we measured the effects of sleep deprivation on a two-choice numerosity discrimination task. A diffusion model was used to decompose accuracy and response time distributions in order to produce estimates of distinct components of cognitive processing. The model assumes that, over time, noisy evidence from the task stimulus is accumulated to one of two decision criteria and that parameters governing this process can be extracted and interpreted in terms of distinct cognitive processes. The results showed that sleep deprivation affects multiple components of cognitive processing, ranging from stimulus processing to peripheral nondecision processes. Thus, sleep deprivation appears to have wide-ranging effects: Reduced attentional arousal and impaired central processing combine to produce an overall decline in cognitive functioning.

Sleep deprivation allows arousal levels to be manipulated to a greater degree than the experimental interventions typically used in cognitive research. Because the effects of acute sleep deprivation on cognition are reversible, sleep deprivation offers a different approach for investigating the underlying processes of cognition. Conversely, carefully selected tests of cognitive processing can provide new information about the effects of sleep deprivation on the brain.

Experimental and modeling studies of the effects of sleep deprivation on cognitive performance have thus far focused almost exclusively on global outcome measures (Durmer & Dinges, 2005; Van Dongen, 2004). Such research has revealed that overall performance declines as a function of time spent awake, modulated by circadian rhythm (Van Dongen & Dinges, 2005). However, not much is known about the effects of sleep deprivation on detailed performance outcomes, such as response time (RT) distributions, or on changes in specific cognitive components, such as decision processes. Attempts are being made to bridge this gap with the use of computational modeling that is based on cognitive architectures (Gunzelmann, Gluck, Price, Van Dongen, & Dinges, 2007), but these efforts need to be informed by precise information regarding which component processes of cognition are affected by sleep deprivation and how.

The present study addressed this issue. Participants were tested on a two-choice numerosity discrimination task at baseline, after 57 h of sleep deprivation, and again following 2 days of recovery sleep. Participants in a control group were tested at the same times, but without sleep deprivation. In each trial of the two-choice task, between 31 and 70 asterisks were placed in random positions in a 10 × 10 array, and the participants were instructed to judge whether the number was greater than 50 (large) or 50 or fewer (small). This task was selected because it places few demands on memory and involves no perceptual limitations (such as brief presentation or low contrast), allowing us to focus on central cognitive processes and decision processes.

We applied the diffusion model (Ratcliff, 1978, 1988; Ratcliff, Cherian, & Segraves, 2003; Ratcliff & McKoon, 2008; Ratcliff & Smith, 2004; Ratcliff, Van Zandt, & McKoon, 1999; Smith, 2000; Smith, Ratcliff, & Wolfgang, 2004; Voss, Rothermund, & Voss, 2004) to the data from each individual participant. From this, we were able to obtain estimates of the model parameter values for the baseline, deprivation, and recovery sessions in the experimental group, as well as for those in the control group. These parameter estimates allowed us to draw conclusions about the effects of sleep deprivation on components of cognitive processing.

METHOD

Participants

A total of 25 participants (13 women; age range, 22–38 years) completed the study. The participants were screened with physical examinations, urine and blood chemistry tests, and questionnaires to ensure they were physically and psychologically healthy and free of traces of drugs. They were good sleepers (getting between 6 and 10 hours per night), reported themselves as being neither extreme morning nor extreme evening types, and had no sleep or circadian disorder (including normal baseline polysomnogram). They had not traveled between time zones in the prior 3 months and had not worked shifts in the prior month. The participants were required to maintain their habitual sleep schedule in the week before the study, as monitored by sleep/wake logs, time-stamped voice recordings of bedtimes and rising times, and wrist actigraphy (wrist-worn activity monitoring). They had normal or corrected-to-normal vision. The study was approved by the Institutional Review Board of Washington State University, and all of the participants gave written informed consent.

Design

The participants were in the lab for 6 consecutive nights (7 days). They were randomized to either a sleep-deprivation condition (12 participants) or a control condition (13 participants). On Days 1 and 2, all of the participants had baseline sleep (10 h in bed each night). They practiced the numerosity discrimination task for 10 min twice on Day 1. On Day 3, participants in the experimental condition began 62 h of continuous wakefulness. At 17:00 on that day, while 9 h awake, they took their baseline test. Two days later, at 17:00, while 57 h awake (48 h after Test 1), they took their second test. After the 62 h of wakefulness, participants were allowed 2 recovery nights (10 h in bed each night). At 17:00 on the last day (48 h after Test 2), they took their recovery test. The control participants were tested at the same times but had sleep (10 h in bed) each night throughout the study. Participants continually stayed inside the isolated, environmentally controlled laboratory during the study and were behaviorally monitored at all times by trained research assistants.
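The timing of the three tests can be checked with simple clock arithmetic. The sketch below assumes a wake time of 08:00 on Day 3, inferred from the statement that the 17:00 baseline test took place after 9 h awake; the calendar date and the helper names `at` and `hours_between` are arbitrary illustrations.

```python
from datetime import datetime, timedelta

# Arbitrary calendar anchor for Day 1; only differences between times matter.
DAY1 = datetime(2000, 1, 1)


def at(day: int, hour: int) -> datetime:
    """Clock time hour:00 on study day `day` (Day 1 = DAY1)."""
    return DAY1 + timedelta(days=day - 1, hours=hour)


def hours_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


wake = at(3, 17) - timedelta(hours=9)   # assumed wake time: 08:00 on Day 3
test1, test2, test3 = at(3, 17), at(5, 17), at(7, 17)
end_of_wake = wake + timedelta(hours=62)

print(hours_between(wake, test2))       # hours awake at Test 2
print(hours_between(test1, test2), hours_between(test2, test3))
print(end_of_wake)                      # end of the 62-h wake period
```

Under these assumptions, Test 2 falls at 57 h awake, both test intervals are 48 h, and the 62-h wake period ends at 22:00 on Day 5, before the first recovery night.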

Procedure

Cognitive performance was tested on a two-choice numerosity discrimination task. On each trial, a number of asterisks between 31 and 70 was generated and placed in random positions in a 10 × 10 array of blank characters in the upper left-hand corner of an LCD monitor (from a viewing distance of 57 cm, the width of the asterisk array was 4°, and the height was 9°). The asterisks were clearly visible light characters presented against a dark background. Varying the number of asterisks causes accuracy to vary from near ceiling (100% correct) to near chance (50% correct), providing a full range of values of this dependent variable for the purpose of modeling. The participants were asked to press the “/” key if the number of displayed asterisks was judged to be large and the “z” key if it was judged to be small. Each test array remained on the screen until a response was made. “Small” responses to arrays of 31 to 50 asterisks and “large” responses to arrays of 51 to 70 asterisks were counted as correct. Feedback on the accuracy of each response was provided by displaying the word “error” or “correct,” as appropriate, on the screen for 500 msec. Each test session consisted of 30 blocks of 40 trials, with every number of asterisks from 31 to 70 presented once per block. The participants were instructed to respond quickly and accurately, but not so quickly that they would hit the wrong key, and they were informed that they could take a break between blocks. Examples of large and small numbers of asterisks were shown before the session to provide participants with a reference.
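A minimal sketch of how one session's trials could be generated from the description above. The text does not specify the order of numerosities within a block, so shuffling is an assumption, as are the function names and the random seed.

```python
import random

GRID = 10                       # 10 x 10 array of character positions
NUMEROSITIES = range(31, 71)    # 40 values; each appears once per block
N_BLOCKS = 30


def make_block(rng: random.Random) -> list[int]:
    """One block of 40 trials: every numerosity once, in shuffled order (order assumed)."""
    block = list(NUMEROSITIES)
    rng.shuffle(block)
    return block


def make_array(n: int, rng: random.Random) -> list[str]:
    """Place n asterisks at distinct random cells of a GRID x GRID array of blanks."""
    cells = set(rng.sample(range(GRID * GRID), n))
    return [
        "".join("*" if r * GRID + c in cells else " " for c in range(GRID))
        for r in range(GRID)
    ]


def correct_response(n: int) -> str:
    """31-50 asterisks -> "small" (z key); 51-70 -> "large" (/ key)."""
    return "large" if n > 50 else "small"


rng = random.Random(2009)
session = [make_block(rng) for _ in range(N_BLOCKS)]
first_display = make_array(session[0][0], rng)
print("\n".join(first_display))
print(correct_response(session[0][0]))
```

Sampling cells without replacement guarantees that the displayed count equals the intended numerosity, which is what makes the “small”/“large” scoring rule unambiguous.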

RESULTS

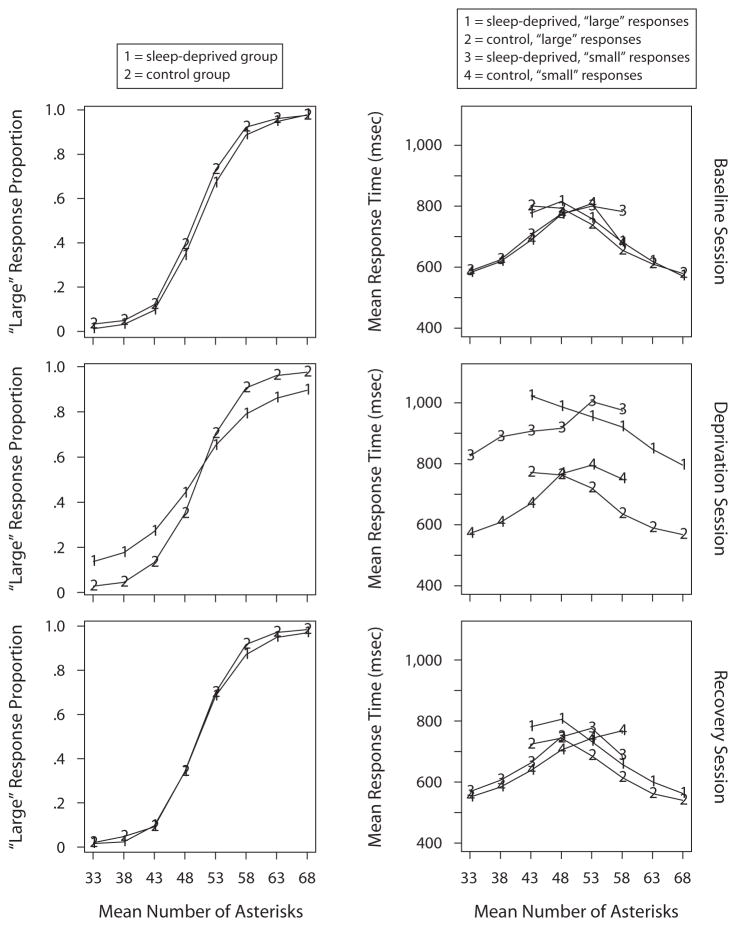

Summaries of the accuracy and RT data for the experimental and control groups for the three sessions are shown in Figure 1. Three kinds of responses were excluded: all responses from the first block of each session, the first response in each block, and outlier RTs in all blocks, defined as shorter than 280 msec or longer than 5,000 msec (less than 1.7% of the data in the sleep-deprivation session and less than 0.2% of the data in the other sessions). The left-hand panels show the proportion of “large” responses as a function of eight numerosity categories representing eight groups of numbers of asterisks (31–35, 36–40, 41–45, 46–50, 51–55, 56–60, 61–65, 66–70), and the right-hand panels show mean RT for “large” and “small” responses as a function of numerosity category. Response proportions were very similar for the experimental and control groups in the baseline and recovery sessions, but in the sleep-deprivation session the experimental participants were less accurate than the control participants. In the baseline session, RTs from the experimental and control groups were similar. In the sleep-deprivation session, mean RTs in the experimental group were more than 200 msec longer than those in the control group. In the recovery session, the experimental group responded slightly more slowly than the control group, but performance was almost back to baseline levels. These results are consistent with the documented overall effects of sleep deprivation on cognitive performance (Durmer & Dinges, 2005; Van Dongen & Dinges, 2005).
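The exclusion rules and the per-category summary can be expressed compactly. In this sketch, the `Trial` record and the function names are hypothetical, and mean RT is pooled over both responses for brevity, whereas Figure 1 plots “large” and “small” responses separately.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    block: int        # 1-based block number within the session
    position: int     # 1-based trial number within the block
    numerosity: int   # number of asterisks, 31-70
    response: str     # "large" or "small"
    rt_ms: float      # response time in milliseconds


def keep(t: Trial, lo_ms: float = 280.0, hi_ms: float = 5000.0) -> bool:
    """Drop block 1, the first trial of every block, and RTs outside [lo_ms, hi_ms]."""
    return t.block != 1 and t.position != 1 and lo_ms <= t.rt_ms <= hi_ms


def category(n: int) -> int:
    """Map 31-35 -> 1, 36-40 -> 2, ..., 66-70 -> 8."""
    return (n - 31) // 5 + 1


def summarize(trials):
    """Per category: (proportion of "large" responses, mean RT in msec) after trimming."""
    groups = defaultdict(list)
    for t in trials:
        if keep(t):
            groups[category(t.numerosity)].append(t)
    return {
        cat: (
            sum(t.response == "large" for t in ts) / len(ts),
            sum(t.rt_ms for t in ts) / len(ts),
        )
        for cat, ts in sorted(groups.items())
    }


example = [
    Trial(1, 2, 40, "small", 600.0),   # block 1: excluded
    Trial(2, 1, 40, "small", 600.0),   # first trial of a block: excluded
    Trial(2, 2, 33, "small", 500.0),
    Trial(2, 3, 34, "large", 700.0),
    Trial(2, 4, 68, "large", 250.0),   # faster than 280 msec: excluded
    Trial(2, 5, 69, "large", 800.0),
]
print(summarize(example))
```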

Figure 1.

Accuracy and mean response time (RT), as a function of numerosity category for the three sessions and two participant groups. Note that mean error RTs for the two extreme numerosity categories are not plotted; there were some participants with zero error responses in those categories.

Diffusion Model

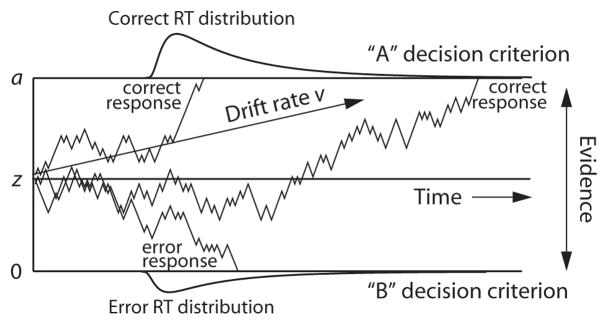

The diffusion model is designed to explain the cognitive processes involved in making simple two-choice decisions. The model separates the quality of evidence entering a decision from the decision criteria, as well as from nondecision processes. Decisions are assumed to be made by a noisy process that accumulates information over time, from a starting point z toward one of two response criteria, or boundaries: a and 0 (Figure 2). When a boundary is reached, a response is initiated. The rate of accumulation of information is called the drift rate (v), and it is determined by the quality of the information extracted from the stimulus in perceptual tasks and the quality of match between the test item and memory in lexical decision and memory tasks. The mean of the distribution of times taken up by the nondecision component (the combination of encoding, response execution, etc.) is labeled Ter. Within-trial variability (Gaussian-distributed noise) in the accumulation of information from the starting point toward the boundaries results in processes with the same mean drift rate terminating at different times (producing RT distributions) and, sometimes, terminating at the wrong boundary (producing errors).

Figure 2.

Illustration of the diffusion model with starting point z, boundary separation a, and drift rate v. Three sample paths are shown, illustrating variability within the decision process, and correct and error response time (RT) distributions are illustrated.
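To make the accumulation process concrete, the sketch below simulates it directly with a simple Euler discretization. It is an illustration rather than the fitting method used here. The within-trial noise scale s = 0.1 (a common scaling convention in diffusion-model work, not stated in the text), the step size, and the function names are assumptions, and across-trial variability is ignored. Because the single-trial process has a known closed-form probability of reaching a before 0, the simulated proportion of upper-boundary responses can be checked against it.

```python
import numpy as np


def simulate_diffusion(v, a, z, ter, s=0.1, dt=1e-3, n_trials=2000,
                       max_steps=20_000, rng=None):
    """Euler simulation of a Wiener diffusion absorbed at 0 and a.

    Returns (choice, rt): choice is 1 for the upper boundary a and 0 for the
    lower boundary 0; rt (seconds) is decision time plus ter. Trials that do
    not finish within max_steps get rt = nan.
    """
    rng = np.random.default_rng(rng)
    x = np.full(n_trials, float(z))         # current evidence on each trial
    t = np.zeros(n_trials)                  # elapsed decision time
    active = np.ones(n_trials, dtype=bool)  # trials still between the boundaries
    for _ in range(max_steps):
        if not active.any():
            break
        k = int(active.sum())
        # Drift v plus Gaussian within-trial noise with SD s * sqrt(dt) per step.
        x[active] += v * dt + s * np.sqrt(dt) * rng.standard_normal(k)
        t[active] += dt
        active &= (x > 0.0) & (x < a)
    choice = (x >= a).astype(int)
    rt = np.where(active, np.nan, t + ter)
    return choice, rt


def p_upper_exact(v, a, z, s=0.1):
    """Closed-form probability of reaching a before 0 (requires v != 0)."""
    k = 2.0 * v / s**2
    return (1.0 - np.exp(-k * z)) / (1.0 - np.exp(-k * a))


# Illustrative values loosely based on the experimental group's baseline row
# of Table 1 (a = .151, z = .075, Ter = .428 s, v3 = .271).
choice, rt = simulate_diffusion(v=0.271, a=0.151, z=0.075, ter=0.428, rng=1)
print(choice.mean(), p_upper_exact(0.271, 0.151, 0.075))
print(np.quantile(rt, [0.1, 0.3, 0.5, 0.7, 0.9]))
```

The discretization slightly overshoots the boundaries, so the simulated proportion agrees with the closed form only approximately; smaller `dt` tightens the agreement at the cost of run time.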

The values of the components of processing are assumed to vary from trial to trial, under the assumption that participants cannot accurately set the same parameter values from one trial to another (e.g., Laming, 1968; Ratcliff, 1978). Across-trial variability in drift rate is normally distributed with SD η, across-trial variability in starting point is uniformly distributed with range sz, and across-trial variability in the nondecision component is uniformly distributed with range st. This across-trial variability allows the model to fit the relative speeds of correct and error responses (Ratcliff et al., 1999). In signal detection theory, which deals only with accuracy, all sources of across-trial variability would be collapsed onto one parameter: variability in evidence across trials. In diffusion model fitting, by contrast, the separate sources of across-trial variability are identified. Thus, when the model is fit to simulated data, variability in drift rate, for example, is not incorrectly recovered as variability in starting point (Ratcliff & Tuerlinckx, 2002). Parameter identifiability comes partly from the requirement that the model fit both the correct and the error RT distributions, which provide very tight constraints on the model (see Ratcliff, 2002).
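Under these assumptions, every trial begins by drawing its own drift rate, starting point, and nondecision time, after which evidence accumulates as usual. The sketch below illustrates this with an Euler simulation; the noise scale s = 0.1, the step size, and the function names are assumptions, and sz must be small enough that every starting point lies between 0 and a.

```python
import numpy as np


def sample_trial_parameters(v, eta, z, sz, ter, st, n, rng=None):
    """Per-trial parameters under the across-trial variability assumptions.

    drift ~ Normal(v, eta); start ~ Uniform(z - sz/2, z + sz/2);
    nondecision time ~ Uniform(ter - st/2, ter + st/2).
    """
    rng = np.random.default_rng(rng)
    drift = rng.normal(v, eta, size=n)
    start = rng.uniform(z - sz / 2, z + sz / 2, size=n)
    nondecision = rng.uniform(ter - st / 2, ter + st / 2, size=n)
    return drift, start, nondecision


def simulate_full(v, eta, a, z, sz, ter, st, s=0.1, dt=1e-3, n=2000,
                  max_steps=20_000, rng=None):
    """Euler simulation with across-trial variability in drift, start, and Ter."""
    rng = np.random.default_rng(rng)
    drift, x, nondecision = sample_trial_parameters(v, eta, z, sz, ter, st, n, rng)
    t = np.zeros(n)
    active = np.ones(n, dtype=bool)
    for _ in range(max_steps):
        if not active.any():
            break
        k = int(active.sum())
        # Each trial uses its own drift; within-trial noise is shared in form.
        x[active] += drift[active] * dt + s * np.sqrt(dt) * rng.standard_normal(k)
        t[active] += dt
        active &= (x > 0.0) & (x < a)
    choice = (x >= a).astype(int)
    rt = np.where(active, np.nan, t + nondecision)
    return choice, rt


# Illustrative values: experimental group, baseline session (Table 1), using v3.
choice, rt = simulate_full(v=0.271, eta=0.171, a=0.151, z=0.075, sz=0.066,
                           ter=0.428, st=0.138, rng=1)
print("accuracy:", choice.mean())
print("mean correct RT:", rt[choice == 1].mean())
print("mean error RT:", rt[choice == 0].mean())
```

Comparing the mean correct and error RTs while varying `eta` and `sz` is one way to see how these two sources of variability shape the relative speed of errors.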

In almost all RT studies (e.g., Ratcliff, 1979, 1993), some proportion of responses are spurious contaminants. In previous applications of the diffusion model, these have been modeled explicitly as random delays in processing (Ratcliff & Tuerlinckx, 2002): Predicted RTs are mixtures of pure diffusion model processes and diffusion model processes with a delay added (usually 0%–2% of trials), which means that contaminant processes are just as accurate as processes without contaminants. In this application, we adopted the simplest alternative assumption, representing contaminants as random guesses (Vandekerckhove & Tuerlinckx, 2007) with RTs uniformly distributed over the range from the shortest to the longest RT for each numerosity category. Thus, the predicted RT distribution is a probability mixture of diffusion model processes and random guesses. Random guesses can be distinguished from added random delays because random guesses reduce accuracy, as was observed in the sleep-deprivation data. Unlike random delays added to diffusion model RTs, random guesses are processes outside the diffusion model's processing assumptions. Although this assumption may seem to provide a lot of model freedom, it adds no parameters beyond the proportion of contaminants, and the ability of the mixture to account for RT distributions (discussed below) supports it. Note that recovery of diffusion model parameters is reasonably robust to the assumed form of the contaminant distribution; for example, if exponentially distributed contaminants are simulated and the recovery program assumes uniformly distributed contaminants, the model parameters are recovered well (Ratcliff, 2008).
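The contrast between the two contaminant assumptions can be illustrated with a small sketch. A guess picks either response with probability .5, so it lowers accuracy, whereas a random delay would leave accuracy untouched. The function names are hypothetical, and `diffusion_density` stands for whatever model RT density is being mixed (not implemented here).

```python
import numpy as np


def add_guess_contaminants(choice, rt, po, rng=None):
    """Replace a random proportion po of trials with uniform random guesses.

    A guess picks either response with probability .5 and an RT drawn
    uniformly between the shortest and longest RT in `rt`.
    """
    rng = np.random.default_rng(rng)
    choice = np.asarray(choice).copy()
    rt = np.asarray(rt, dtype=float).copy()
    lo, hi = rt.min(), rt.max()
    is_guess = rng.random(rt.size) < po
    k = int(is_guess.sum())
    choice[is_guess] = rng.integers(0, 2, size=k)
    rt[is_guess] = rng.uniform(lo, hi, size=k)
    return choice, rt


def mixture_accuracy(p_correct, po):
    """Accuracy when a proportion po of responses are 50/50 guesses."""
    return (1.0 - po) * p_correct + po * 0.5


def mixture_density(t, diffusion_density, po, t_min, t_max):
    """RT density pooled over both responses: diffusion part plus uniform guesses."""
    t = np.asarray(t, dtype=float)
    uniform = np.where((t >= t_min) & (t <= t_max), 1.0 / (t_max - t_min), 0.0)
    return (1.0 - po) * diffusion_density(t) + po * uniform


# A participant who is 99% correct when responding from the stimulus,
# with 10% guesses mixed in:
print(mixture_accuracy(0.99, 0.10))
```

The uniform component adds a constant density of po / (t_max − t_min) across the whole RT range, which is what produces an elevated, long tail in the predicted distribution.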

The diffusion model was fit to the accuracy and RT distributions using a standard chi-square method (Ratcliff & Tuerlinckx, 2002). The values of all of the parameters, including the variability parameters, are estimated simultaneously from the data by fitting the model to all of the conditions of an experiment. It was assumed that “large” responses to large stimuli were symmetric with “small” responses to small stimuli; that is, drift rates were equal, but with opposite signs. Thus, there were four distinct drift-rate parameters (v1–v4) for the eight numerosity categories. However, participants can have a bias in the zero point of drift, so a drift criterion, vc (analogous to the criterion in signal detection theory), was added to each drift rate (Ratcliff & McKoon, 2008). Because the values for the drift rate parameter for the two middle, most difficult numerosity categories (i.e., v4, for 46–50 and 51–55 asterisks) were small and variable, they were not used in our statistical analyses; but they did help constrain the model fits. All of the parameters of the model (except drift rate) were kept constant across numerosity categories in each session, because it is routinely assumed that participants cannot change their decision criteria or adjust the duration of other components of processing as a function of the quality of the stimulus being presented. All of the parameters of the model were allowed to vary between sessions, because practice could alter any of the parameters. The model can be seen as decomposing accuracy and RT data for correct and error responses into distinct cognitive processes.
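The symmetry assumption and the drift criterion can be made explicit by mapping the four drift parameters onto the eight numerosity categories. In this sketch, drift toward the “large” boundary is taken as positive (an assumption about sign convention), and the function name is hypothetical.

```python
def category_drifts(v, vc):
    """Drift rates for the eight numerosity categories, 31-35 through 66-70.

    v = (v1, v2, v3, v4), ordered from the easiest categories (31-35, 66-70)
    to the hardest (46-50, 51-55). Drift toward the "large" boundary is taken
    as positive (an assumption), so the four "small" categories get -v.
    The drift criterion vc is added to every category.
    """
    v1, v2, v3, v4 = v
    small = [-v1, -v2, -v3, -v4]   # 31-35, 36-40, 41-45, 46-50
    large = [v4, v3, v2, v1]       # 51-55, 56-60, 61-65, 66-70
    return [d + vc for d in small + large]


# Experimental group, baseline session (Table 1): v1-v4 and vc.
labels = ["31-35", "36-40", "41-45", "46-50", "51-55", "56-60", "61-65", "66-70"]
for label, d in zip(labels, category_drifts((0.546, 0.416, 0.271, 0.093), 0.007)):
    print(label, round(d, 3))
```

Because vc is added to every category, a positive drift criterion makes “large” drifts slightly larger and “small” drifts slightly smaller in magnitude, which is how a response bias in the zero point of drift enters the model.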

Fits of the Diffusion Model

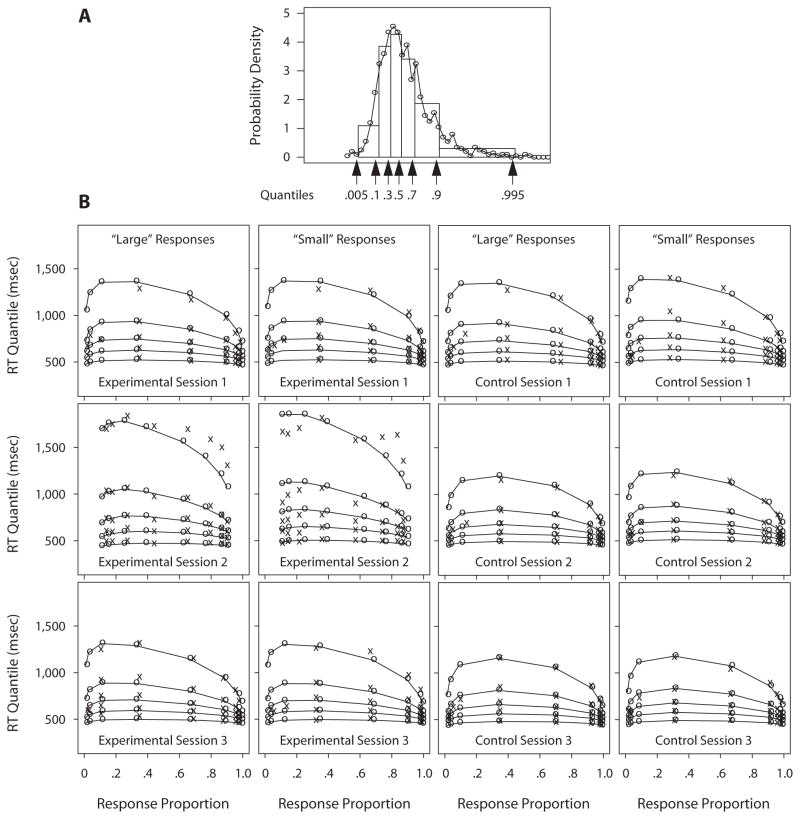

To display the data and the diffusion model fits, we computed the average over participants for quantile RTs (see Figure 3A) and for the proportion of “large” and “small” responses as a function of the numerosity categories. We also averaged the parameter estimates over participants and computed the corresponding model predictions; we then generated the quantile probability functions. To display the quality of fit of the model to the data, Figure 3B shows the observed and predicted quantile RTs (vertical) and the proportion of “large” and “small” responses (horizontal), as a function of the number of asterisks (numerosity categories) presented. These graphs contain information about all of the data and model predictions of the experiment: the probabilities of correct and error responses and the shapes of the RT distributions for both correct and error responses. As Figure 3B shows, the probability of a “large” response varied from near 1 for stimuli with large numbers of asterisks to near 0 for stimuli with small numbers of asterisks (cf. Figure 1). Median RT increased for the more difficult stimuli (numbers of asterisks close to 50), with most of the slowing coming from the skewing of the RT distributions, because the .9 quantile RTs increased much more than did the .1 quantile RTs.
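Averaging quantile RTs over participants (often called Vincent averaging) can be sketched for one numerosity category and one response as follows. The rule for excluding participants with too few responses of a given type is an assumption suggested by the note to Figure 3, and the function name is hypothetical.

```python
import numpy as np

QUANTILES = (0.1, 0.3, 0.5, 0.7, 0.9)


def quantile_probability_point(per_participant, response, min_n=5):
    """Group-level point for one numerosity category and one response.

    per_participant: one (responses, rts) pair per participant, where
    responses holds "large"/"small" labels and rts the matching RTs.
    Returns (mean proportion of `response`, mean of the five quantile RTs).
    Participants with fewer than min_n such responses contribute to the
    proportion but not to the quantile average (an assumed rule).
    """
    props, quants = [], []
    for responses, rts in per_participant:
        responses = np.asarray(responses)
        rts = np.asarray(rts, dtype=float)
        mask = responses == response
        props.append(mask.mean())
        if mask.sum() >= min_n:
            quants.append(np.quantile(rts[mask], QUANTILES))
    mean_q = np.mean(quants, axis=0) if quants else np.full(len(QUANTILES), np.nan)
    return float(np.mean(props)), mean_q


resp = ["large"] * 5 + ["small"] * 5
rts = [0.5, 0.6, 0.7, 0.8, 0.9, 0.55, 0.65, 0.75, 0.85, 0.95]
print(quantile_probability_point([(resp, rts), (resp, rts)], "large"))
```

Each returned pair is one column of a quantile probability plot: the proportion gives the horizontal position and the five averaged quantiles give the vertical positions.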

Figure 3.

Response time (RT) quantiles and response proportions. Panel A shows an RT distribution histogram (the circles joined by lines), with the quantile RTs identified on the abscissa and as rectangles with .2 area between the .1, .3, .5 (median), .7, and .9 quantiles and .095 area between extremes, the .005 and .1 quantiles and the .9 and .995 quantiles (we use .005 and .995 for illustration as being a little less variable than the maximum and minimum). Panel A shows that the six rectangles between and outside the five quantiles approximate the density function rather well. Panel B shows quantile probability functions for “large” and “small” responses for the three sessions in the experimental group (Session 1 baseline, Session 2 sleep-deprived, and Session 3 recovery) and in the control group. The quantile RTs in each vertical line of Xs from the bottom to the top are the .1, .3, .5 (median), .7, and .9 quantiles, respectively. The Xs represent the data, and the os joined with lines represent the predicted quantile RTs from the diffusion model. The eight columns of Xs in each graph are for eight different stimulus categories—namely, 31–35, 36–40, 41–45, 46–50, 51–55, 56–60, 61–65, and 66–70 asterisks for “large” responses; for “small” responses, the columns of Xs are for the same eight stimulus categories, but in the opposite order. The horizontal position of the columns of Xs indicates the response proportion for that category. Note that extreme error quantiles could not be computed for some of the numerosity categories because there were too few errors for some participants, so only the median RT value is plotted.
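The equal-area rectangles of Panel A can be computed directly from the quantiles: Each rectangle spans two adjacent quantile RTs and has an area equal to the probability between them, so its height is that area divided by its width. The sketch below uses illustrative simulated RTs (a shifted gamma distribution chosen only for its right skew), and the function name is hypothetical.

```python
import numpy as np

# Cumulative probabilities of the rectangle edges: the five quantiles plus
# the .005 and .995 quantiles used in place of the minimum and maximum.
EDGE_PROBS = np.array([0.005, 0.1, 0.3, 0.5, 0.7, 0.9, 0.995])


def equal_area_histogram(rts, edge_probs=EDGE_PROBS):
    """Return (edges, heights) of rectangles approximating the RT density.

    Rectangle areas are the probabilities between adjacent edges
    (.095, .2, .2, .2, .2, .095); height = area / width.
    """
    edges = np.quantile(np.asarray(rts, dtype=float), edge_probs)
    areas = np.diff(edge_probs)
    heights = areas / np.diff(edges)
    return edges, heights


rng = np.random.default_rng(0)
rts = 0.35 + rng.gamma(shape=2.0, scale=0.15, size=5000)   # illustrative RTs (s)
edges, heights = equal_area_histogram(rts)
print(np.round(edges, 3))
print(np.round(heights, 2))
```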

Parameter Estimates

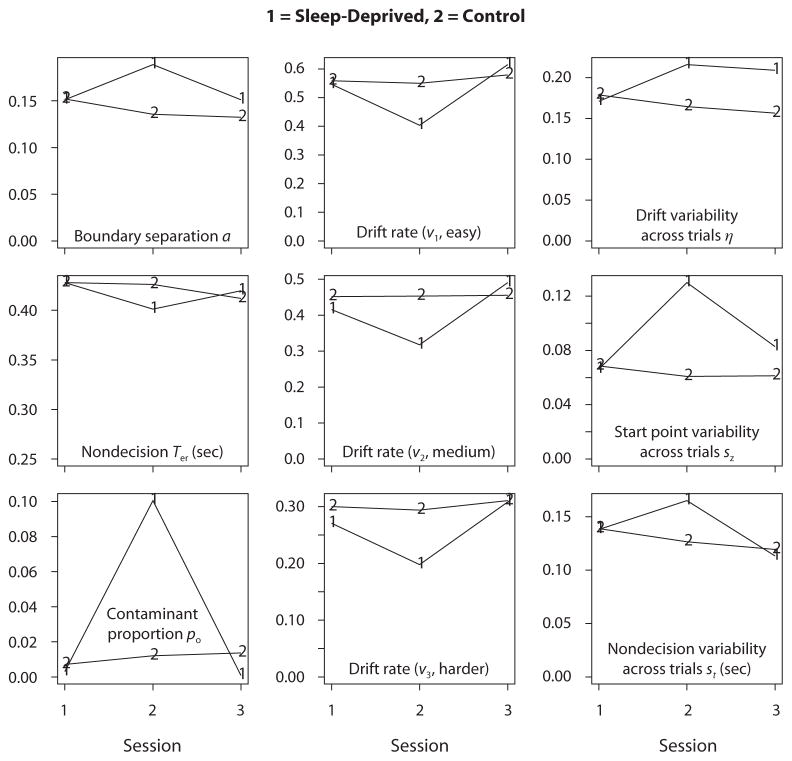

The average model parameter estimates are shown in Table 1 and plotted in Figure 4, as a function of session and experimental versus control groups. For the sleep-deprivation session, relative to the baseline and recovery sessions and to the control group, boundary separation increased, drift rates decreased, variability in starting point and nondecision components increased, and the proportion of contaminants increased.

Table 1.

Parameter Values Averaged over Participants

| Session | a | Ter | η | sz | v1 | v2 | v3 | v4 | po | st | vc | z | χ2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Experimental Group | | | | | | | | | | | | | |
| Baseline | .151 | .428 | .171 | .066 | .546 | .416 | .271 | .093 | .003 | .138 | .007 | .075 | 101 |
| Sleep-deprived | .189 | .401 | .216 | .130 | .403 | .318 | .198 | .083 | .101 | .165 | .011 | .089 | 163 |
| Recovery | .151 | .420 | .209 | .083 | .615 | .491 | .308 | .110 | .001 | .113 | .005 | .076 | 101 |
| Control Group | | | | | | | | | | | | | |
| Baseline | .152 | .428 | .179 | .069 | .558 | .452 | .300 | .097 | .007 | .139 | .004 | .073 | 88 |
| Control | .136 | .426 | .165 | .061 | .549 | .453 | .294 | .099 | .012 | .127 | .005 | .064 | 79 |
| Recovery | .132 | .412 | .156 | .061 | .579 | .456 | .311 | .100 | .014 | .119 | −.002 | .064 | 81 |

Note—a, upper boundary of accumulation of information over time; Ter, mean of the distribution of times taken up by the nondecision component, in seconds. η, sz, and st represent across-trial variability in drift rate, in starting point, and in the nondecision component, respectively. v1, v2, v3, and v4 represent the four distinct drift-rate parameters for the eight numerosity categories (see the text for details). po, proportion of contaminants; vc, drift criterion; z, starting point of accumulation of information over time. χ2 represents the goodness-of-fit index with critical value 102 for 76 degrees of freedom. For a total of k numerosity categories and a model with m parameters, the degrees of freedom are k(12 − 1) − m, where 12 comes from the number of bins between and outside the response time quantiles for correct and error responses for a single category (minus 1, because the total probability mass must be 1). There were k = 8 numerosity categories in the experiment and m = 12 parameters in the model, so df = 88 − 12 = 76.
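The degrees-of-freedom count and the fit statistic can be sketched as follows. The statistic is assumed to take the usual Pearson form over bins, N Σ (p_obs − p_pred)² / p_pred; the predicted bin proportions would come from the model's cumulative distributions evaluated at the observed quantile RTs, which are not implemented here. Function names are hypothetical.

```python
import numpy as np

# Proportions of a response's RTs falling in the six bins defined by the
# observed .1, .3, .5, .7, and .9 quantiles.
BIN_MASS = np.array([0.1, 0.2, 0.2, 0.2, 0.2, 0.1])


def observed_bin_props(p_response):
    """Observed bin proportions for one response, scaled by its probability."""
    return p_response * BIN_MASS


def chi_square(observed, predicted, n_trials):
    """Pearson chi-square for one condition: N * sum((p_obs - p_pred)^2 / p_pred)."""
    o = np.asarray(observed, dtype=float)
    p = np.asarray(predicted, dtype=float)
    return float(n_trials * np.sum((o - p) ** 2 / p))


def degrees_of_freedom(k_categories, m_params, bins_per_category=12):
    """k(12 - 1) - m: 12 bins per category, minus 1 because the bins sum to 1."""
    return k_categories * (bins_per_category - 1) - m_params


# One category with 90% correct and 10% error responses gives 12 observed bins.
obs = np.concatenate([observed_bin_props(0.9), observed_bin_props(0.1)])
print(obs.sum())
print(degrees_of_freedom(8, 12))
```

Summing `chi_square` over the eight numerosity categories would give a value comparable to the χ2 column of Table 1.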

Figure 4.

Plots of the diffusion model parameters averaged over participants for the three sessions in the experimental (sleep-deprived) group (1) and the control group (2). Sleep deprivation in Session 2 produced significant effects on boundary separation, the proportion of contaminants, the three drift rates v1–v3, starting-point variability across trials, and nondecision variability across trials. The effects on the mean nondecision time and on variability in drift rate across trials were not significant.

The parameter values represent the behavior of components of processing in the experiment, and we used them to interpret the effects of sleep deprivation on performance in the two-choice task. For each parameter, a two-way mixed-effects ANOVA (session × group) was performed; the group × session interaction statistics are given in the note to Table 2. Table 2 shows planned statistical comparisons between the experimental group and the control group, as well as between the sleep-deprivation session and the baseline and recovery sessions. For the baseline and recovery sessions, there were almost no significant differences between the experimental and control groups. For all comparisons between the sleep-deprivation session and the corresponding control session and for all comparisons between the sleep-deprivation session and the baseline and recovery sessions in the experimental group, there were significant differences for the following: boundary separation (one comparison was marginally significant), variability in starting point across trials, drift rates for the easier numerosity categories, proportion of contaminants, and variability in the duration of nondecision processes (one comparison was marginally significant). Although these comparisons involved multiple t tests, the results are consistent and clear. To summarize, the estimates for a, sz, v1, v2, v3, po, and st from the sleep-deprivation session differ from the estimates for the baseline and recovery sessions, as well as from all of the sessions in the control group.

Table 2.

p Values for Planned Comparisons of Parameters

| Comparison of Sessions | a | Ter | η | sz | v1 | v2 | v3 | v4 | po | st | vc | z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Session 1 | | | | | | | | | | | | |
| Experimental vs. control | .961 | .997 | .766 | .868 | .812 | .411 | .336 | .730 | .248 | .970 | .373 | .862 |
| Session 2 | | | | | | | | | | | | |
| Experimental vs. control | .008* | .279 | .076 | .000* | .013* | .011* | .006* | .255 | .028* | .023* | .467 | .021* |
| Session 3 | | | | | | | | | | | | |
| Experimental vs. control | .122 | .701 | .016* | .161 | .527 | .490 | .948 | .503 | .065 | .765 | .728 | .118 |
| Experimental Group Only | | | | | | | | | | | | |
| Sleep-deprived vs. baseline | .055 | .232 | .118 | .000* | .003* | .020* | .012* | .465 | .017* | .065 | .429 | .164 |
| Sleep-deprived vs. recovery | .042* | .392 | .755 | .003* | .000* | .002* | .003* | .064 | .015* | .004* | .570 | .179 |

Note—a, upper boundary of accumulation of information over time; Ter, mean of the distribution of times taken up by the nondecision component, in seconds. η, sz, and st represent across-trial variability in drift rate, in starting point, and in the nondecision component, respectively. v1, v2, v3, and v4 represent the four distinct drift-rate parameters for the eight numerosity categories (see the text for details). po, proportion of contaminants; vc, drift criterion; z, starting point of accumulation of information over time. Session 1, baseline; Session 2, sleep-deprived (or control); Session 3, recovery. Group × session interactions are as follows: For a, F(2,46) = 7.40; for Ter, F(2,46) = 4.20; for η, F(2,46) = 2.34; for sz, F(2,46) = 7.04; for v1, F(2,46) = 8.77; for v2, F(2,46) = 10.39; for v3, F(2,46) = 6.07; for po, F(2,46) = 8.08; for st, F(2,46) = 4.2. The critical value was F.95(2,46) = 3.2.

*p < .05.

The differences in the parameter estimates led to the following interpretation of the effects of sleep deprivation on the two-choice numerosity discrimination task. When deprived of sleep, most participants adopted moderately more conservative decision criteria (larger boundary separation), thus requiring more evidence before making a response. However, this difference was not large compared with the effects produced by speed–accuracy manipulations (Ratcliff, Thapar, & McKoon, 2001). Variability in the starting point and in the nondecision components of processing became larger with sleep deprivation. The former suggests that participants had difficulty resetting the starting point of the decision process to the normal range of values observed in the nondeprivation sessions. The latter indicates that participants were less stable in stimulus encoding and/or more variable in executing their responses.

The mean of drift rates v1, v2, and v3 for the most accurate numerosity categories decreased from .411 for the baseline session to .307 for the sleep-deprivation session, and then increased again to .438 for the recovery session. The change induced by sleep deprivation was substantial, suggesting that central information processing was affected by sleep deprivation—specifically, the cognitive processes that extracted an estimate of the relative numerosity of the asterisk array. Sleep-deprived participants also produced more responses that were not based on the stimulus, but were random guesses (contaminants). However, there were large individual differences.

Individual Differences

Most of the participants in the experimental group showed a degradation in the components of cognitive processing from the baseline session to the sleep-deprivation session and a rebound in the recovery session. Boundary separation was higher in the sleep-deprivation session for 9 of 12 participants, and the proportion of contaminants was larger for 11 of 12 participants. Most of the participants in the sleep-deprivation group showed a decrease in drift rate from the baseline session to the sleep-deprivation session and an increase to the recovery session. These results show that the main conclusions apply to most individuals in the study and are not the result of there having been just a few participants showing a large effect and others showing no effect. That said, systematic individual differences in the response to sleep deprivation were observed, as has been documented before (Van Dongen, Baynard, Maislin, & Dinges, 2004).

Contaminant Responses

The effect of sleep deprivation on the proportion of contaminants indicates that sleep-deprived participants produced more responses that were not based on the stimulus, but were random guesses. However, there were large individual differences: In the sleep-deprivation session, 5 participants had under 4% contaminants, 5 had between 8% and 11% contaminants, and 2 participants had over 30% contaminants. The high contaminant estimates for those 2 participants were corroborated by their accuracy in the easiest numerosity categories, which was around 70% in the sleep-deprivation session but over 95% in the baseline and recovery sessions. In the baseline and recovery sessions, none of the participants in the experimental group had over 3% contaminants. These observations are, again, consistent with previously documented individual differences in cognitive responses to sleep deprivation (Van Dongen et al., 2004).

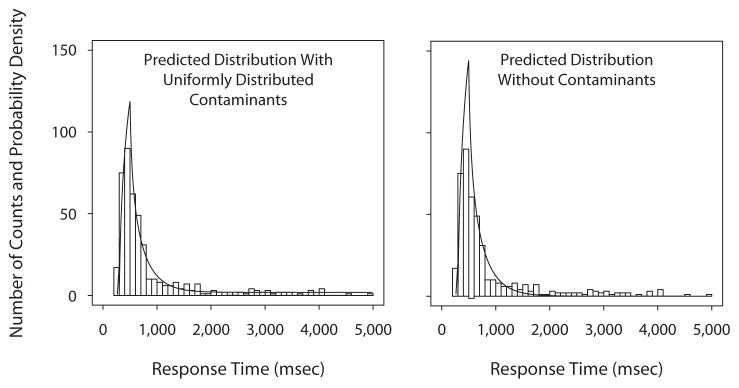

To show that the assumption of randomly distributed contaminants is reasonable, we used the data from the participant with the largest proportion of contaminants to evaluate the fit of the model to the RT distribution. This participant had similar proportions of “large” responses across five of the numerosity categories. The average proportion of “large” responses was .755, and the mean RT was 867 msec (the five categories had proportions ranging from .714 to .789 and mean RTs ranging from 794 to 875 msec); the estimated proportion of contaminants was .38. The left panel of Figure 5 shows the data histogram with the predicted distribution generated from the model parameters with the contaminant assumption; the right panel shows the predicted distribution generated from the same model parameters but with zero contaminants. The right panel shows that the tail of the distribution is mispredicted; but the left panel, with the assumption of uniform random guesses, shows the long, elevated tail that adequately describes the data.

Figure 5.

Response time (RT) distributions for the participant with the most estimated contaminants. Five numerosity categories are grouped (see the text). The left panel shows the predicted RT distribution with the contaminant assumption of uniform random guesses, and the right panel shows the predicted RT distribution without the contaminant assumption, but with the same parameter values as in the left panel. The assumption of uniform random guesses produces an RT distribution tail that adequately describes the data.

DISCUSSION

In this study, we investigated the effects of sleep deprivation on distinct cognitive processes in a two-choice decision paradigm. Data were fit with the diffusion model, which has previously been applied successfully in a number of other domains (see Ratcliff & McKoon, 2008) and which accounts for both speed and accuracy of processing in a unified approach. The model analysis showed that the processes that produce the evidence from the stimulus entering the decision process were impaired substantially by sleep deprivation: Drift rates decreased by about 30% relative to baseline values. This is a large effect, especially considering that the two-choice task imposes few, if any, perceptual or memory demands. Such a deficit in this simple two-choice task therefore argues for a potent effect of sleep deprivation on the ability to extract information from stimuli.

In the sleep-deprivation condition, as compared with the baseline condition, participants also adopted moderately more conservative decision criteria, which suggests some compensation for poorer processing in extracting information from the stimulus. Also, variability in the starting point across trials was larger, and variability in the nondecision component of processing across trials was modestly but significantly larger. These increases suggest a reduction in the ability to reset the decision process to the same starting point and suggest an increase in variability in the encoding and response-output processes.

There was a notable increase in the proportion of contaminants, indicating that sleep-deprived participants made more random responses, albeit with large individual differences. There was no trade-off across participants between the proportion of contaminants and other model parameters; all of the other components of processing had similar trends across participants, as a function of sleep deprivation.

Sleep deprivation did not increase the duration of the nondecision component of processing, which represents the duration of the stimulus-encoding and response-output processes. Nor did it increase the across-trial variability in drift rate, which suggests that sleep deprivation lowers the quality of the output from stimulus processing uniformly across trials, rather than lowering it considerably on some trials and hardly at all on others.
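A uniform drop in drift and increased across-trial drift variability can be told apart partly because, in the diffusion model, they make different predictions for error RTs. The sketch below (hypothetical function names and parameter values; Euler-Maruyama simulation with s = 0.1 and an unbiased start) draws each trial's drift from a normal distribution with SD eta. With eta = 0 and an unbiased start, correct and error decision times share the same distribution, whereas eta > 0 makes errors slower than correct responses, because errors come disproportionately from low-drift trials.

```python
import numpy as np


def simulate_decisions(n, v_mean, eta, a, rng, s=0.1, dt=0.001, max_steps=20_000):
    """Euler-Maruyama simulation of n trials starting at a/2, where each trial's
    drift is drawn from Normal(v_mean, eta). Returns (correct, decision_time).
    Trials unfinished after max_steps would be scored as errors (negligible here)."""
    v = rng.normal(v_mean, eta, size=n)  # across-trial drift variability
    x = np.full(n, a / 2.0)
    t = np.zeros(n)
    active = np.ones(n, dtype=bool)
    for _ in range(max_steps):
        if not active.any():
            break
        idx = np.flatnonzero(active)
        x[idx] += v[idx] * dt + s * np.sqrt(dt) * rng.standard_normal(idx.size)
        t[idx] += dt
        active[idx] = (x[idx] > 0.0) & (x[idx] < a)
    return x >= a, t


def summarize(v_mean, eta, a=0.12, n=20_000, seed=0):
    """Accuracy and mean decision times for correct and error responses."""
    rng = np.random.default_rng(seed)
    correct, t = simulate_decisions(n, v_mean, eta, a, rng)
    return correct.mean(), t[correct].mean(), t[~correct].mean()


# Hypothetical values: a uniform drop in drift vs. variable drift around a higher mean.
for label, v_mean, eta in [("uniform drop", 0.175, 0.0), ("variable drift", 0.25, 0.15)]:
    acc, mdt_correct, mdt_error = summarize(v_mean, eta)
    print(f"{label:>14}: accuracy = {acc:.3f}, "
          f"mean DT correct = {mdt_correct:.3f} s, error = {mdt_error:.3f} s")
```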

There are competing hypotheses about the mechanisms underlying the effects of sleep deprivation on performance in cognitive tasks. One hypothesis holds that sleep loss specifically affects cognitive processes mediated by the prefrontal cortex (PFC; Harrison, Horne, & Rothwell, 2000), thereby degrading higher-order cognitive functions, such as decision making (Harrison & Horne, 2000). Our results are consistent with this hypothesis insofar as drift rates, which index the central cognitive processes that produce evidence from the stimulus, were reduced by sleep deprivation. However, sleep deprivation did not affect such processes exclusively, so the hypothesis is only partially consistent with the experimental findings.

Another hypothesis, known as the state instability hypothesis (Doran, Van Dongen, & Dinges, 2001), postulates that sleep deprivation induces an escalating “state instability” that is particularly evident in experimental tasks requiring sustained attention. According to this hypothesis, sleep deprivation induces moment-to-moment fluctuations in sustained attention due to the influence of sleep-initiating mechanisms. Our results are consistent with the state instability hypothesis in that sleep deprivation increased the proportion of contaminants (guesses); increased the skew of the RT distributions (Dinges & Kribbs, 1991), partly by increasing the separation of the decision criteria; and increased the across-trial variability in both the starting point of the decision process and the nondecision component. State instability may also affect attentional networks (Posner, 2008) and, thereby, the functioning of the PFC (Durmer & Dinges, 2005), which could explain the degradation of stimulus processing. This argument has yet to be demonstrated experimentally, but the state instability hypothesis has the potential to provide the most comprehensive explanation of the present findings, even though the hypothesized moment-to-moment fluctuations in sustained attention would seem to extend to other aspects of cognition as well. The challenge is to link these hypotheses unambiguously to components of processing in explicit models, so that they can be subjected to experimental tests.

The nature and scope of the cognitive impairments resulting from sleep deprivation have been debated for more than a century (Doran et al., 2001; Horne, 1993; Kleitman, 1923; Lim & Dinges, 2008; Patrick & Gilbert, 1896; Williams, Lubin, & Goodnow, 1959). However, progress has been slowed by a tendency to focus on global performance outcomes, with little research examining the effects of sleep deprivation on distinct cognitive processes. The diffusion model allowed us to examine these effects directly. Within the framework of current theories about the effects of sleep deprivation on cognition (see Dinges & Kribbs, 1991; Harrison & Horne, 2000), the present results suggest that sleep deprivation degrades both attentional arousal (through state instability) and central processing. Indeed, our findings indicate that sleep deprivation has wide-ranging effects on brain functioning, affecting multiple, distinct components of cognition.

Acknowledgments

The present research was supported by USAMRMC Award W81XWH-05-1-0099 and DURIP Grant FA9550-06-1-0281. Preparation of this article was also supported by NIA Grant R01-AG17083 and NIMH Grant R37-MH44640 to the first author. We thank the research team of the Sleep and Performance Research Center at Washington State University–Spokane and Russ Childers of the Department of Psychology at Ohio State University for their help with the implementation of this study.

Contributor Information

Roger Ratcliff, Ohio State University, Columbus, Ohio.

Hans P. A. Van Dongen, Washington State University, Spokane, Washington.

References

- Dinges DF, Kribbs NB. Performing while sleepy: Effects of experimentally-induced sleepiness. In: Monk TH, editor. Sleep, sleepiness, and performance. Chichester, U.K.: Wiley; 1991. pp. 97–128.

- Doran SM, Van Dongen HPA, Dinges DF. Sustained attention performance during sleep deprivation: Evidence of state instability. Archives of Italian Biology. 2001;139:253–267.

- Durmer JS, Dinges DF. Neurocognitive consequences of sleep deprivation. Seminars in Neurology. 2005;25:117–129. doi: 10.1055/s-2005-867080.

- Gunzelmann G, Gluck KA, Price S, Van Dongen HPA, Dinges DF. Decreased arousal as a result of sleep deprivation: The unraveling of cognitive control. In: Gray WD, editor. Integrated models of cognitive systems. New York: Oxford University Press; 2007. pp. 243–253.

- Harrison Y, Horne JA. The impact of sleep deprivation on decision making: A review. Journal of Experimental Psychology: Applied. 2000;6:236–249. doi: 10.1037//1076-898x.6.3.236.

- Harrison Y, Horne JA, Rothwell A. Prefrontal neuropsychological effects of sleep deprivation in young adults: A model for healthy aging? Sleep. 2000;23:1067–1073.

- Horne JA. Human sleep, sleep loss and behaviour: Implications for the prefrontal cortex and psychiatric disorder. British Journal of Psychiatry. 1993;162:413–419. doi: 10.1192/bjp.162.3.413.

- Kleitman N. Studies on the physiology of sleep: The effects of prolonged sleeplessness on man. American Journal of Physiology. 1923;66:67–92.

- Laming DRJ. Information theory of choice-reaction times. London: Academic Press; 1968.

- Lim J, Dinges DF. Sleep deprivation and vigilant attention. Annals of the New York Academy of Sciences. 2008;1129:305–322. doi: 10.1196/annals.1417.002.

- Patrick GTW, Gilbert JA. On the effects of loss of sleep. Psychological Review. 1896;3:469–483.

- Posner MI. Measuring alertness. Annals of the New York Academy of Sciences. 2008;1129:193–199. doi: 10.1196/annals.1417.011.

- Ratcliff R. A theory of memory retrieval. Psychological Review. 1978;85:59–108.

- Ratcliff R. Group reaction time distributions and an analysis of distribution statistics. Psychological Bulletin. 1979;86:446–461.

- Ratcliff R. Continuous versus discrete information processing: Modeling accumulation of partial information. Psychological Review. 1988;95:238–255. doi: 10.1037/0033-295x.95.2.238.

- Ratcliff R. Methods for dealing with reaction time outliers. Psychological Bulletin. 1993;114:510–532. doi: 10.1037/0033-2909.114.3.510.

- Ratcliff R. A diffusion model account of reaction time and accuracy in a brightness discrimination task: Fitting real data and failing to fit fake but plausible data. Psychonomic Bulletin & Review. 2002;9:278–291. doi: 10.3758/bf03196283.

- Ratcliff R. The EZ diffusion method: Too EZ? Psychonomic Bulletin & Review. 2008;15:1218–1228. doi: 10.3758/PBR.15.6.1218.

- Ratcliff R, Cherian A, Segraves M. A comparison of macaque behavior and superior colliculus neuronal activity to predictions from models of two-choice decisions. Journal of Neurophysiology. 2003;90:1392–1407. doi: 10.1152/jn.01049.2002.

- Ratcliff R, McKoon G. The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation. 2008;20:873–922. doi: 10.1162/neco.2008.12-06-420.

- Ratcliff R, Smith PL. A comparison of sequential sampling models for two-choice reaction time. Psychological Review. 2004;111:333–367. doi: 10.1037/0033-295X.111.2.333.

- Ratcliff R, Thapar A, McKoon G. The effects of aging on reaction time in a signal detection task. Psychology & Aging. 2001;16:323–341.

- Ratcliff R, Tuerlinckx F. Estimating parameters of the diffusion model: Approaches to dealing with contaminant reaction times and parameter variability. Psychonomic Bulletin & Review. 2002;9:438–481. doi: 10.3758/bf03196302.

- Ratcliff R, Van Zandt T, McKoon G. Connectionist and diffusion models of reaction time. Psychological Review. 1999;106:261–300. doi: 10.1037/0033-295x.106.2.261.

- Smith PL. Stochastic dynamic models of response time and accuracy: A foundational primer. Journal of Mathematical Psychology. 2000;44:408–463. doi: 10.1006/jmps.1999.1260.

- Smith PL, Ratcliff R, Wolfgang BJ. Attention orienting and the time course of perceptual decisions: Response time distributions with masked and unmasked displays. Vision Research. 2004;44:1297–1320. doi: 10.1016/j.visres.2004.01.002.

- Vandekerckhove J, Tuerlinckx F. Fitting the Ratcliff diffusion model to experimental data. Psychonomic Bulletin & Review. 2007;14:1011–1026. doi: 10.3758/bf03193087.

- Van Dongen HPA. Comparison of mathematical model predictions to experimental data of fatigue and performance. Aviation, Space, & Environmental Medicine. 2004;75:A15–A36.

- Van Dongen HPA, Baynard MD, Maislin G, Dinges DF. Systematic interindividual differences in neurobehavioral impairment from sleep loss: Evidence of trait-like differential vulnerability. Sleep. 2004;27:423–433.

- Van Dongen HPA, Dinges DF. Circadian rhythms in sleepiness, alertness, and performance. In: Kryger MH, Roth T, Dement WC, editors. Principles and practice of sleep medicine. 4th ed. Philadelphia: Elsevier Saunders; 2005. pp. 435–443.

- Voss A, Rothermund K, Voss J. Interpreting the parameters of the diffusion model: An empirical validation. Memory & Cognition. 2004;32:1206–1220. doi: 10.3758/bf03196893.

- Williams HL, Lubin A, Goodnow JJ. Impaired performance with acute sleep loss. Psychological Monographs: General & Applied. 1959;73(Whole No. 484).