Abstract

Assessment of face specificity in prosopagnosia is hampered by difficulty in gauging pre-morbid expertise for non-face object categories, for which humans vary widely in interest and experience. In this study, we examined the correlation between visual and verbal semantic knowledge for cars to determine if visual recognition accuracy could be predicted from verbal semantic scores. We had 33 healthy subjects and six prosopagnosic patients first rate their own knowledge of cars. They were then given a test of verbal semantic knowledge that presented them with the names of car models, to which they were to match the manufacturer. Lastly, they were given a test of visual recognition, presenting them with images of cars to which they were to provide information at three levels of specificity: model, manufacturer and decade of make. In controls, while self-ratings were only moderately correlated with either visual recognition or verbal semantic knowledge, verbal semantic knowledge was highly correlated with visual recognition, particularly for more specific levels of information. Item concordance showed that less-expert subjects were more likely to provide the most specific information (model name) for the image when they could also match the manufacturer to its name. Prosopagnosic subjects showed reduced visual recognition of cars after adjusting for verbal semantic scores. We conclude that visual recognition is highly correlated with verbal semantic knowledge, that formal measures of verbal semantic knowledge are a more accurate gauge of expertise than self-ratings, and that verbal semantic knowledge can be used to adjust tests of visual recognition for pre-morbid expertise in prosopagnosia.

Keywords: semantic memory, vision, object recognition, face processing

Introduction

One of the continuing debates in the field of face recognition is whether the defect in prosopagnosia is truly selective for faces, or if faces are only the most obvious and dramatic stimulus type affected by a problem in making fine discriminations between highly similar exemplars within any object category (Damasio et al., 1990; Farah et al., 1998). This debate is also mirrored in the contrasting views of the fusiform face area as a face-specific cortical module or a region involved in making expertise judgements for many object categories (Gauthier et al., 2000a, b; Kanwisher, 2000; Yovel and Kanwisher, 2004).

While there are cases of prosopagnosic patients who have also lost the ability to recognize types of other objects such as cars, flowers, animals, clothing or buildings (Bornstein et al., 1969; Newcombe, 1979; Assal et al., 1984; Gomori and Hawryluk, 1984; Damasio et al., 1986; de Renzi, 1986, 1991; Henke et al., 1998), there are also reports of other prosopagnosic patients who have retained the ability to discriminate between such objects (Cole and Perez-Cruet, 1964; de Renzi, 1986; McNeil and Warrington, 1993; Henke et al., 1998; Riddoch et al., 2008). While variations in lesion anatomy can always be invoked to account for such discrepancies, a critical methodological issue also confounds attempts to address object specificity: how well should any given subject be able to identify other types of objects? Because recognition performance varies with perceptual expertise, it may be difficult to deduce from a certain level of performance whether the processing of other object categories is intact in a particular individual. For example, correct naming of a car as a Volkswagen may be sufficient evidence of intact car recognition in a novice observer, but insufficient in an automobile enthusiast, from whom a more detailed answer might be expected. This problem of accounting for pre-morbid expertise reflects the fact that, while it is reasonable to assume that most humans have a relatively uniform and significant expertise with face recognition, the same cannot be said for most other objects, for which we vary in our interest and experience.

To provide more definitive evidence regarding recognition in other object domains besides faces in prosopagnosia, it would be helpful to have a method of adjusting perceptual scores for pre-morbid expertise. One possibility we considered was whether one could index visual recognition for some object category by some measure of the subject's non-visual semantic knowledge about that category. This novel strategy, however, requires a strong correlation between visual semantic knowledge about objects—which would be involved in visual object recognition—and semantic knowledge about them in a non-visual modality. We first review the grounds for believing that such a correlation exists.

Semantic knowledge or memory, also known as conceptual knowledge, is a ‘general knowledge of objects, word meanings, facts and people, without connection to any particular time or place’ (Patterson et al., 2007). There are many varieties of semantic knowledge. In neuropsychology, distinctions have been made between information categories (e.g. living versus non-living things, actions versus objects) and modalities (e.g. visual semantic knowledge versus verbal knowledge), based on the dissociations between different forms of semantic knowledge in patients (McCarthy and Warrington, 1994; Gainotti, 2006).

Neurological disorders are not the only source of inter-subject variability in semantic knowledge. Semantic information is acquired through experience with the world: thus ‘meaning is updated or transformed by a dynamic memory system that learns continuously from personal experience’ (Funnell, 2001), and others define semantic memory as ‘knowledge of the world acquired during experience, which contributes to the formation and long-term representation of concepts, categories, facts, word meanings and so on’ (Moscovitch et al., 2005). ‘Multiple trace theory’ holds that the creation of multiple traces from specific episodes results in the extraction of common data and the formation of semantic information (Moscovitch et al., 2005). Thus in healthy subjects, semantic knowledge about a specific category will reflect the degree and frequency of past experiences with that category: this is the basis of expertise effects.

Some propose that category and modality are orthogonal variables in semantic knowledge (McCarthy and Warrington, 1994). Nevertheless, it is likely that for most humans the acquisition of semantic information about a particular category is frequently multimodal, either within or across the episodes from which semantic memory is created, and that, as a result, expertise in one modality is usually paralleled by expertise in another. (This may not always be the case: one can envision anomalous exceptions, such as that of a painter of birds who develops detailed visual semantic knowledge about birds but little familiarity with their habits, their calls or even their names.) If so, then it should be possible to predict the degree of knowledge in one modality from the degree measured in another, as we propose.

This assumption underlies recent examinations of subjects with Alzheimer's disease (Hodges et al., 1996; Lambon Ralph et al., 1997) and semantic dementia (Lambon Ralph et al., 1999), which tested these subjects for their ability to provide information in response to either the names or the pictures of objects. When asked to give verbal definitions to the spoken names of the objects, Alzheimer's patients had more difficulty with objects whose pictures they also could not name. Furthermore, for the objects that they could not name by sight, their semantic difficulty was greater for structural (i.e. perceptual) properties than for other types of information, such as the function of the object (Hodges et al., 1996). In a clearer contrast, in patients with semantic dementia the ability to provide core definitions for pictures of objects paralleled their ability to provide similar definitions for names of objects (Lambon Ralph et al., 1999).

While these data have been used to support an amodal concept of semantic knowledge (Patterson et al., 2007), it is not clear whether the intuitive assumption that semantic knowledge correlates across different modalities holds in healthy subjects. In a small group of 10 control subjects, the ability to provide correct semantic information did not differ between objects they could or could not name by sight, although there may have been a difference in the ability to provide core definitions (Hodges et al., 1996). However, the number of items not named by sight was too small to permit definitive conclusions.

This review thus suggests that, while there are grounds to believe that semantic knowledge may be correlated across different modalities, further study is required to establish the relationship between visual and verbal semantic knowledge before we can confidently use this strategy to index visual object recognition in prosopagnosia. We decided to examine knowledge about a specific object category for which the normal population shows a wide range of knowledge due to expertise effects: cars. This object category has been frequently used in studies of perceptual expertise in healthy humans (Gauthier et al., 2000a, b, 2003; Rossion et al., 2007; Tanaka and Corneille, 2007) and prosopagnosic subjects (Gomori and Hawryluk, 1984; Damasio et al., 1986; Sergent and Signoret, 1992; Henke et al., 1998; Rossion et al., 2003; Duchaine and Nakayama, 2005). While almost all humans in our culture have daily experience with cars, car knowledge is highly variable, reflecting factors such as occupation and interest.

Our first goal was to establish, in healthy subjects, whether car knowledge accessed through the verbal domain (names of car models) is related to car knowledge accessed through the visual domain (pictures of car models). If so, this relationship could be used to create a test that adjusts visual car recognition scores for each individual's level of expertise, as gauged by performance on a test of verbal semantic car knowledge. Our second goal was to use this test to determine, in a group of prosopagnosic subjects, if the relationship between visual recognition and verbal semantic knowledge differed from that of healthy subjects.

Methods

Subjects

Thirty-three healthy subjects participated (25 male, 8 female), with mean age of 24.9 years (range 18–43), all with normal corrected vision. The protocol was approved by the Institutional Review Boards of the University of British Columbia and Vancouver Hospital. All subjects gave informed consent in accordance with the principles of the Declaration of Helsinki.

We tested six prosopagnosic subjects. As part of an ongoing prosopagnosia study, all had structural and functional magnetic resonance imaging (MRI) (Fox et al., 2008), a complete neuro-ophthalmological examination including Goldmann perimetry, as well as a battery of standardized neuropsychological tests (Table 1). In addition, their prosopagnosia was characterized with a Famous Faces Familiarity Test that measured d′ for discriminating famous from anonymous faces (Barton et al., 2001) and a test of imagery for famous faces, designed to probe the status of facial memories (Barton and Cherkasova, 2003).

Table 1.

Neuropsychological testing

| Test | Max | R-IOT1 | R-IOT3 | R-IOT4 | B-AT1 | R-AT1 | R-AT2 |
|---|---|---|---|---|---|---|---|
| Visuo-perceptual | | | | | | | |
| Hooper Visual Organization | 30 | 27 | 27 | 22 | 20 | 25 | 28 |
| Benton Line Orientation | 30 | 29 | 25 | 24 | 28 | 29 | 28 |
| Boston Naming | 15 | 15 | 14 | 15 | – | 15 | 15 |
| Imagery | | | | | | | |
| Mental rotation | 10 | 10 | 9 | 10 | 10 | 10 | 9 |
| Attention | | | | | | | |
| Star cancellation | 54 | 54 | 54 | 54 | 54 | 54 | 54 |
| Visual search | 60 | 54 | 32a | 59 | 59 | 59 | |
| Memory | | | | | | | |
| Digit span—forward | 16 | 12 | 7a | 8 | 12 | 10 | 13 |
| Spatial span—forward | 16 | 9 | 6 | 10 | 10 | 8 | 9 |
| Word list | 48 | 28 | 31 | 37 | 27a | 37 | 35 |
| Words, WRMT | 50 | 41 | 47 | 50 | 45 | 41 | 47 |
| Faces—Identity | | | | | | | |
| Benton Face Recognition Test | 54 | 45 | 49 | 46 | 45 | 41 | 47 |
| Cambridge Face Perception Test | 0 | 62 | 92a | 76a | 48 | 58 | 40 |
| Faces—Expression | | | | | | | |
| Affect discrimination, FAB | 20 | 19 | 15 | 15 | 17 | 20 | 19 |
| Affect naming, FAB | 20 | 17 | 14a | 17 | 18 | 20 | 18 |
| Affect selection, FAB | 20 | 19 | 17 | 18 | 20 | 19 | 20 |
| Affect matching, FAB | 20 | 18 | 16 | 15 | 17 | 20 | 20 |
| Faces—Memory | | | | | | | |
| Faces, WRMT | 50 | 33a | 33a | 39a | 27a | 17a | 27a |
| Cambridge Face Memory Test | 72 | – | 38a | 27a | 39a | 19a | 30a |
| Famous face recognition (d′) | 3.92 | 1.96 | 0.29a | 1.28a | 1.52a,b | 1.22a | 0.65a |
| Face imagery (%) | 100 | 82 | 85 | 84 | NA | 71a | 73a |

a abnormal scores.

b Due to poor knowledge of celebrities, a version of this test using personally familiar faces was used.

FAB = Florida Affect Battery, WRMT = Warrington Recognition Memory Test.

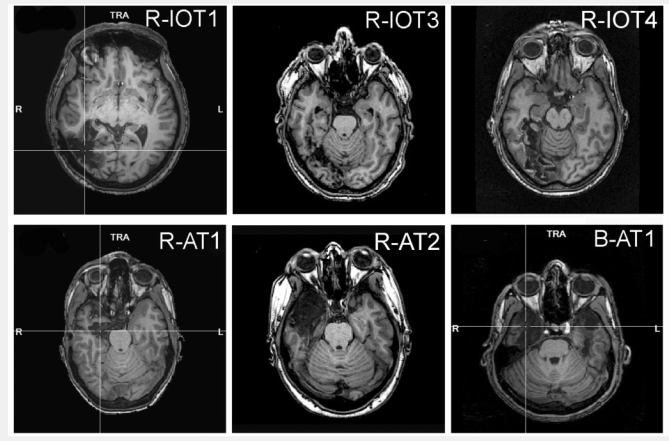

R-IOT1 is a 49 year-old left-handed male who, 12 years prior to testing, had a right occipital cerebral haemorrhage from a ruptured arteriovenous malformation. Since then he has had trouble recognizing faces, relying on hairstyle, facial hair or voice. He has a left superior quadrantanopia but normal acuity, and mild topographagnosia. He performed normally on all elements of the Visual Object and Space Perception Battery. Neuroimaging showed a right occipito-temporal lesion with loss of the right fusiform and occipital face areas (Fig. 1).

Figure 1.

Representative axial T1-weighted structural magnetic resonance images of the six prosopagnosic subjects. R-IOT1 had a haemorrhage from an arteriovenous malformation in the right posterior medial occipito-temporal cortex. R-IOT3 has a posterior cerebral arterial infarction of the right posterior medial occipito-temporal cortex. R-IOT4 has a similar infarct to R-IOT3. R-AT1 has a right amygdalohippocampectomy. R-AT2 has a right anterior temporal lesion from herpes encephalitis. B-AT1 has bilateral anterior temporal lesions from herpes encephalitis.

R-IOT3 is a 70 year-old right-handed male who had two sequential right occipital strokes 2 years prior to testing. Since then he has had trouble recognizing faces. Of note, he is a retired car mechanic. He has visual acuity of 20/25, normal colour vision and a macula-sparing left homonymous hemianopia. Neuroimaging showed a right occipito-temporal lesion with loss of the right fusiform face area.

R-IOT4 is a 57 year-old man who had a stroke 6 months prior to testing. Within a few hours of onset, he was aware that he could recognize his wife's voice but not her face. He also noted trouble recognizing his own house and began getting lost in familiar surroundings. He has visual acuity of 20/25, normal colour vision and a macula-sparing left superior quadrantanopia. Neuroimaging showed a right occipito-temporal stroke with loss of the right fusiform face area.

R-AT1 is a 24 year-old right-handed female who, 1 year prior to testing, had a right amygdalohippocampectomy for epilepsy. Since then she has had difficulty recognizing faces, relying on voice or other cues to recognize people. General mental functioning is intact and she is attending university, although she reports problems with visual memory. She has normal visual fields and acuity of 20/15. She scored perfectly on the Visual Object and Space Perception Battery. Neuroimaging showed the surgical lesion in the right anterior temporal lobe with preservation in both hemispheres of the fusiform and occipital face areas and the superior temporal sulci.

R-AT2 is a 30 year-old left-handed female who had herpes simplex encephalitis 5 years prior to testing, leaving her with right anterior temporal damage. Since recovery, she has had trouble recognizing faces, relying on body habitus, gait and voice cues instead. She has trouble recognizing buildings in her environment. She has mild problems with her memory but continues to function well in her work at a bank. She has visual acuity of 20/15 and normal peripheral visual fields. She had mild difficulty with recall on the Rey–Osterrieth figure, but did well on tests of verbal, episodic and spatial memory, as well as on all tests on the Visual Object and Space Perception Battery. Neuroimaging showed a large right anterior temporal lesion with preservation in both hemispheres of the fusiform and occipital face areas and the superior temporal sulci.

B-AT1 is a 24 year-old right-handed male who, 3 years prior to testing, suffered herpes simplex encephalitis. Since recovery, he has had extreme difficulty in recognizing faces, particularly with learning new faces, though he can recognize some family members. General memory and mental functioning is unaffected, allowing him to attend college and hold full-time employment. He has mild topographagnosia and difficulty recalling the names of low-frequency items (although semantic knowledge of these items is evident). He has normal peripheral visual fields with 20/20 visual acuity. He had some difficulties with progressive silhouettes (17/20) and silhouettes (10/30) on the Visual Object and Space Perception Battery. Neuroimaging showed bilateral anterior temporal lesions with preservation in both hemispheres of the fusiform and occipital face areas and the superior temporal sulci.

All subjects completed the self-ratings and the assessment of verbal semantic knowledge on paper before the perceptual test, which was presented on a computer display screen.

Self-rating of car expertise

Subjects were first asked to rate their car expertise on Likert scales, ranging from 0 (novice) to 10 (expert). A separate scale was used for each combination of six decades (1950s–2000s) and three regions of manufacture (North American, Asian and European). These 18 scores were averaged to give a mean self-rating of car knowledge.

Assessment of verbal semantic knowledge of cars

We compiled a list of all commercial car models made between 1950 and 2005 in North America, Asia and Europe, excluding trucks and sport-utility vehicles. There were 457 in total. These were divided into three groups for testing purposes: models designated by a number in the first position of the name-string (e.g. 450SL, 911; n = 63), by a letter in the first position of the name-string (e.g. SVX, A6; n = 77) or by an actual name (e.g. Corniche, Grand Am; n = 317). Subjects were given these three lists in random order and asked to write the name of the manufacturer in a blank space beside the printed name of each model. To assist them they were given a list of the possible answers, which comprised 63 manufacturers (20 North American, 16 Asian and 27 European). They were encouraged to guess and told that there would be no penalty for incorrect answers.

Ideally an assessment of knowledge should take into account the likelihood and frequency of exposure. Many variables can influence the probability of encounters with a certain car, including the number made, the number imported into the subject's country, media advertising, etc. We arbitrarily chose to make an approximate adjustment for exposure by weighting correct answers for the number of years a model was made, using a multiplicative factor of 0.1 points for each year it was available. Thus giving the correct answer that a Del Sol was made by Honda earned the subject 0.6 points, because it was available from 1993 to 1998 inclusive. The score for semantic knowledge was the sum of these weighted correct scores.
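The weighting rule can be illustrated with a minimal Python sketch. The function names and the dictionary layout are assumptions made for illustration, and only the Del Sol entry comes from the text; the second catalogue entry is hypothetical.

```python
def model_weight(first_year, last_year, points_per_year=0.1):
    """Weight for one model: 0.1 points per year of availability (inclusive)."""
    years_available = last_year - first_year + 1
    return points_per_year * years_available


def verbal_semantic_score(answers, catalogue):
    """Sum the weights of all models whose manufacturer was matched correctly."""
    total = 0.0
    for model, manufacturer in answers.items():
        entry = catalogue.get(model)
        if entry is not None and entry["manufacturer"] == manufacturer:
            total += model_weight(entry["first_year"], entry["last_year"])
    return total


# The Del Sol entry follows the example in the text; "Example GT" is hypothetical.
catalogue = {
    "Del Sol": {"manufacturer": "Honda", "first_year": 1993, "last_year": 1998},
    "Example GT": {"manufacturer": "Example Motors", "first_year": 1980, "last_year": 1989},
}

# One correct match (Del Sol) and one incorrect match (no penalty applied).
answers = {"Del Sol": "Honda", "Example GT": "Honda"}
score = verbal_semantic_score(answers, catalogue)
print(round(score, 2))
```

Here only the Del Sol answer earns credit (six years of availability at 0.1 points per year), and the incorrect answer contributes nothing, in line with the no-penalty instruction given to subjects.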

Assessment of visual semantic knowledge of cars

We obtained full-colour images of each of these 457 cars, in naturalistic settings, from the internet. From the list we selected 150 models, distributed approximately evenly over the six different decades and the three different continents of manufacture, with examples of all car configurations, such as sedans, sport cars and station wagons, and from a variety of viewpoints. We used Adobe Photoshop CS2 9.0.2 (www.adobe.com) to eliminate any identifying lettering or badges that explicitly denoted the model or manufacturer.

Images were initially randomized and then shown in the same order to all subjects on a display controlled by a G5 Powermac computer, in standard dim lighting, using Superlab Pro 2.0.4 (www.cedrus.com). Subjects were allowed to look at each image as long as they wished. Each image was numbered and subjects were asked to write the model, manufacturer and the decade of manufacture of the car shown. Short breaks were allowed.

Three separate scores were calculated, one for each of the three answers requested (model, manufacturer and decade). These scores were not weighted since each image is of a specific car made in a specific year, and not necessarily representative of all permutations of that model over the different years of manufacture.

Analysis

First, we determined the relationship between self-ratings and verbal semantic knowledge scores. This was done in two ways: we assessed the correlation across subjects between their global self-rating and their global verbal semantic score, using JMP IN 5.1 (www.jmpin.com). Also, to assess within-subject correlations, we assessed for each subject the correlation between the self-rating and the verbal semantic score for each of the 18 categories (six decades × three continents of manufacture).

Second, we performed two sets of multivariate analyses using JMP IN 5.1, one incorporating the self-ratings and each of the three visual recognition scores (model, manufacturer and decade), and the other incorporating the verbal semantic scores and the same three visual recognition scores. Because a main goal of this work was to create a test that could adjust visual recognition scores using an index of verbal semantic knowledge, we estimated multiplicative scaling factors for model, manufacturer and decade perceptual scores that, when combined, would generate a combined visual semantic index with the highest correlation (i) between self-ratings and visual recognition measures and (ii) between verbal semantic and visual recognition measures. To do this, we fixed the weight for the manufacturer perceptual score at one and created a 2D table of r-values for different weights for the model perceptual score along one dimension versus weights for the decade perceptual score along the other dimension. From this table, we could select the weight values that generated the highest r-value.
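The weight search described above can be sketched as a simple grid search in Python. This is only an illustration of the idea, not the procedure as run in JMP IN: the grid ranges and step sizes are assumptions, and the six-subject data set is invented so that the best weights are known in advance.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def best_weights(predictor, model, manufacturer, decade, model_weights, decade_weights):
    """Fix the manufacturer weight at 1 and scan a 2D grid of model and decade weights.

    Returns (r, model_weight, decade_weight) for the grid cell with the highest r.
    """
    best = None
    for w_model in model_weights:
        for w_decade in decade_weights:
            combined = [
                mf + w_model * mo + w_decade * de
                for mo, mf, de in zip(model, manufacturer, decade)
            ]
            r = pearson_r(predictor, combined)
            if best is None or r > best[0]:
                best = (r, w_model, w_decade)
    return best


# Hypothetical scores for six subjects (not data from the study).
model = [0, 2, 5, 9, 14, 20]
manufacturer = [3, 8, 10, 20, 22, 35]
decade = [30, 25, 41, 28, 45, 33]
# Toy predictor built as manufacturer + 2 * model, so the optimum is known.
predictor = [mf + 2 * mo for mo, mf in zip(model, manufacturer)]

model_grid = [i * 0.5 for i in range(7)]   # 0.0 to 3.0 (assumed range)
decade_grid = [i * 0.1 for i in range(6)]  # 0.0 to 0.5 (assumed range)
r, w_model, w_decade = best_weights(
    predictor, model, manufacturer, decade, model_grid, decade_grid
)
print(w_model, w_decade)
```

With real data the predictor would be either the self-rating or the verbal semantic score, and the table of r-values would be inspected for its maximum in the same way.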

Third, given the reports of item-concordance between visual and verbal knowledge in disease states (Hodges et al., 1996; Lambon Ralph et al., 1997), we were also interested in the concordance between visual recognition and verbal semantic scores for individual test items. Were healthy subjects more likely to visually recognize the cars for which they could provide correct information on the verbal semantic test, and did this vary with the level of expertise of the subject? We took, from the verbal semantic test, the 150 items that had a corresponding car image in the visual recognition test and noted for each item the answers given by the subjects on both tests. We created three 2 × 2 tables for each subject, with columns representing accuracy on the verbal semantic test and rows representing accuracy on the visual recognition test, one table each for manufacturer, model and decade identification on the visual component. These tables were summed for the 17 subjects with the lowest semantic scores (15–75), whom we thus considered as less expert with cars, and the 16 with the highest semantic scores (114–267), whom we considered as more expert. For these two groups, we calculated odds ratios (the odds of answering correctly on a visual item if the subject was correct on its corresponding verbal semantic item, divided by the odds of answering the visual item correctly if they were incorrect on the verbal item). We then tested whether the odds ratios for the more-expert group differed from those for the less-expert group with a test for the homogeneity of odds ratios (Fleiss, 1981).
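The odds-ratio calculation can be sketched in Python using the model-recognition counts reported in Table 3, reading each entry in the Odds column as visually correct responses over total items. The homogeneity statistic below uses inverse-variance (Woolf) weights on the log odds ratios. That choice is an assumption, since the text cites only Fleiss (1981), but it gives values close to those in the table.

```python
import math


def two_by_two(hits_verbal_correct, n_verbal_correct,
               hits_verbal_incorrect, n_verbal_incorrect):
    """Build 2 x 2 cells (a, b, c, d) from 'visually correct / total' counts.

    a, b: visually correct / incorrect when the verbal item was correct
    c, d: visually correct / incorrect when the verbal item was incorrect
    """
    a = hits_verbal_correct
    b = n_verbal_correct - hits_verbal_correct
    c = hits_verbal_incorrect
    d = n_verbal_incorrect - hits_verbal_incorrect
    return a, b, c, d


def odds_ratio(a, b, c, d):
    """Odds of a visual hit given verbal correct, over odds given verbal incorrect."""
    return (a / b) / (c / d)


def log_or_variance(a, b, c, d):
    """Large-sample variance of ln(OR); Woolf's formula (an assumed choice)."""
    return 1 / a + 1 / b + 1 / c + 1 / d


def homogeneity_chi2(tables):
    """Weighted sum of squared deviations of ln(OR) from the pooled ln(OR)."""
    logs = [math.log(odds_ratio(*t)) for t in tables]
    weights = [1 / log_or_variance(*t) for t in tables]
    pooled = sum(w * l for w, l in zip(weights, logs)) / sum(weights)
    return sum(w * (l - pooled) ** 2 for w, l in zip(weights, logs))


# Model-recognition counts from Table 3.
less_expert = two_by_two(26, 265, 6, 2285)
more_expert = two_by_two(402, 1070, 70, 1330)

or_less = odds_ratio(*less_expert)
or_more = odds_ratio(*more_expert)
chi2_homog = homogeneity_chi2([less_expert, more_expert])
print(round(or_less, 2), round(or_more, 2), round(chi2_homog, 2))
```

Run on these counts, the sketch gives odds ratios of about 41.3 and 10.8 and a homogeneity statistic of about 7.83 on one degree of freedom, in agreement with Table 3. The confidence intervals in the table are not reproduced here, because the text does not state how they were computed.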

Finally, to compare the prosopagnosic data to those of the healthy subjects, we used a covariance analysis of the two groups, to determine if the two regression lines for prosopagnosics and controls are significantly separated (http://department.obg.cuhk.edu.hk/ResearchSupport/Compare_2_regressions.asp). If the regression lines of visual versus verbal semantic knowledge differ between these two groups, such that for a given degree of verbal semantic knowledge the prosopagnosics have a lower score for visual recognition, this would be evidence of difficulty with non-face object recognition, even after adjustment for expertise.
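The logic of the adjustment can be sketched with a common-slope covariance analysis in Python. This is a simplified illustration rather than the online calculator used in the study: it computes only the adjusted group means, omits the significance tests, and uses invented data in which the patient group lies exactly 15 points below the control line.

```python
def mean(values):
    return sum(values) / len(values)


def pooled_slope(groups):
    """Common within-group slope of y on x, pooled across groups."""
    sxy = 0.0
    sxx = 0.0
    for xs, ys in groups:
        mean_x, mean_y = mean(xs), mean(ys)
        sxy += sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        sxx += sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def adjusted_means(groups):
    """Group means of y, adjusted to the grand mean of the covariate x."""
    slope = pooled_slope(groups)
    grand_x = mean([x for xs, _ in groups for x in xs])
    return [mean(ys) - slope * (mean(xs) - grand_x) for xs, ys in groups]


# Hypothetical data: x = verbal semantic score, y = visual recognition score.
controls_x = [20, 60, 100, 140, 180]
controls_y = [0.5 * x + 10 for x in controls_x]
patients_x = [50, 120, 190]
patients_y = [0.5 * x - 5 for x in patients_x]  # same slope, 15 points lower

adj_controls, adj_patients = adjusted_means(
    [(controls_x, controls_y), (patients_x, patients_y)]
)
difference = adj_controls - adj_patients
print(round(difference, 2))
```

Because both toy groups share the same slope, the adjusted mean difference recovers the 15-point offset even though the groups differ in their mean verbal semantic score. That is the sense in which the comparison is adjusted for expertise.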

Results

The relationship between self-ratings and verbal semantic knowledge

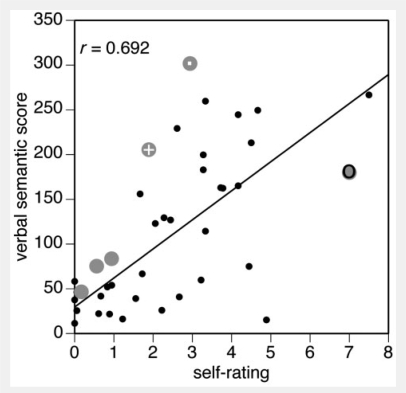

Global self-ratings were moderately but significantly correlated with verbal semantic knowledge (r = 0.68, P < 0.0001, Table 2, Fig. 2). To some degree, the moderate nature of the correlation may have represented between-subject variability in the values used in the Likert scales. To determine if there was better within-subject correlation between self-ratings and verbal semantic knowledge, we also correlated, for each subject, their self-ratings and verbal semantic scores for each of the 18 categories: the mean r-value was 0.56 (SD 0.21, range 0.03–0.83). Thus, self-ratings do correlate moderately with verbal semantic knowledge, but the range of correlations across individuals is large.

Table 2.

Multivariate analysis—pair-wise correlations

| | Manufacturer | Decade | Self-rating | Verbal-semantic |
|---|---|---|---|---|
| Visual-semantic scores | | | | |
| Model | 0.87 (<0.0001) | 0.62 (0.0001) | 0.71 (<0.0001) | 0.92 (<0.0001) |
| Manufacturer | | 0.39 (0.0235) | 0.70 (<0.0001) | 0.92 (<0.0001) |
| Decade | | | 0.42 (0.0144) | 0.50 (0.0033) |
| Self-rating | | | | 0.68 (<0.0001) |

Values are given as r (P).

Figure 2.

The relation between self-ratings of expertise and verbal semantic scores. Data from healthy subjects are shown as small black dots, with the line representing the linear regression of the relationship, and data from prosopagnosic patients are shown as large grey discs. The data point for R-IOT3 (a retired car mechanic) is marked by a grey disc with a dark ring, and those for two other more-expert subjects, R-IOT4 and R-AT2, are marked by grey discs with a dot and a white cross, respectively.

Across-subject correlations with visual semantic knowledge

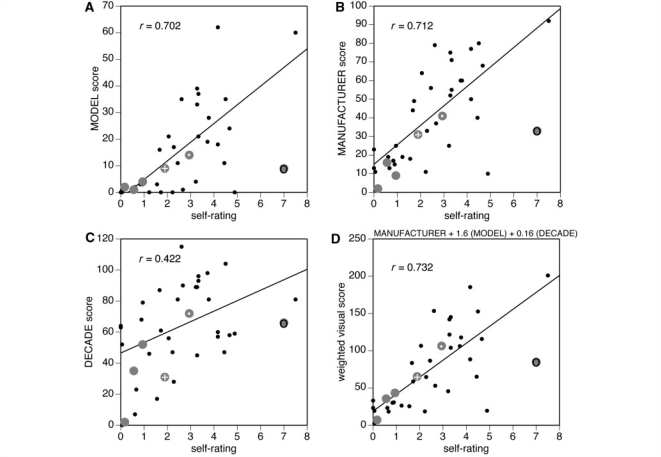

Pair-wise correlations from multivariate analysis showed that self-ratings also correlated moderately with perceptual recognition of model and manufacturer, but less so with decade of make (Table 2, Fig. 3). A weighted perceptual score of 1*(manufacturer score) + 1.6*(model score) + 0.16*(decade score) gave the optimum correlation of 0.73 (P < 0.0001).

Figure 3.

The relation between self-ratings of expertise and visual recognition scores. (A) Graph for naming the model, (B) graph for naming the manufacturer, and (C) graph for naming the decade of make. The (D) graph is for the weighted perceptual score. Data from healthy subjects are shown as small black dots, with lines representing the linear regressions; r-values are for the correlation for healthy subjects. Data from prosopagnosic patients are shown as large grey discs. The data point for R-IOT3 (a retired car mechanic) is marked by a grey disc with a dark ring, and those for two other more-expert prosopagnosic subjects, R-IOT4 and R-AT2, are marked by grey discs with a dot and a white cross, respectively.

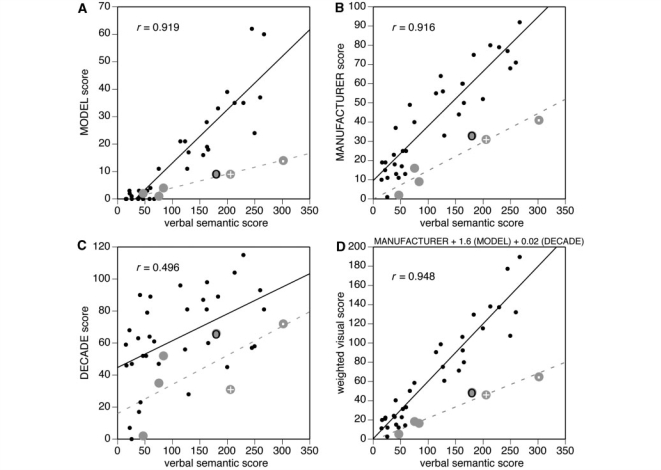

Verbal semantic scores were highly correlated with perceptual recognition of model and manufacturer, but again less so with decade of make (Table 2, Fig. 4). A weighted perceptual score of 1*(manufacturer score) + 1.6*(model score) + 0.02*(decade score) gave the optimum correlation of 0.95 (P < 0.0001).

Figure 4.

The relation between verbal semantic scores and visual recognition scores. (A) Graph for naming the model, (B) graph for naming the manufacturer, and (C) graph for naming the decade of make. The (D) graph is for the weighted perceptual score. Data from healthy subjects are shown as small black dots, with black lines representing the linear regressions; r-values are for the correlation for healthy subjects. Data from prosopagnosic patients are shown as large grey discs. The data point for R-IOT3 (a retired car mechanic) is marked by a grey disc with a dark ring, and those for two other more-expert prosopagnosic subjects, R-IOT4 and R-AT2, are marked by grey discs with a dot and a white cross, respectively.

Item concordance of verbal semantic and visual semantic knowledge

For all tests, healthy subjects in both the more- and less-expert groups were more likely to give the right answer on a visual recognition test if they had also given the right answer on the verbal semantic test: the confidence intervals for all odds ratios did not include the value of 1 (Table 3). The odds ratios were least for decade of make and greatest for model name; the test for homogeneity of odds ratios showed a significant difference across model, manufacturer and decade for both less-expert (χ2homog = 58.6, P < 0.0001) and more-expert subjects alike (χ2homog = 133.1, P < 0.0001). Thus, as the information demanded in the visual recognition task became more specific, the dependence of the probability of visual recognition on the accuracy of verbal semantic knowledge also increased.

Table 3.

Item concordance

| Verbal–semantic knowledge | Odds | Odds ratio (CI) | Statistical tests | |
|---|---|---|---|---|
| Model perceptual data | | | | |
| Less-expert subjects | | | | |
| Incorrect | 6/2285 | 41.32 (23.5–72.6) | χ2assoc | 355.95 |
| Correct | 26/265 | | χ2homog | 7.83 |
| More-expert subjects | | | P | 0.005 |
| Incorrect | 70/1330 | 10.83 (8.55–13.7) | | |
| Correct | 402/1070 | | | |
| Manufacturer perceptual data | | | | |
| Less-expert subjects | | | | |
| Incorrect | 262/2285 | 3.58 (2.71–4.73) | χ2assoc | 295.57 |
| Correct | 84/265 | | χ2homog | 0.0073 |
| More-expert subjects | | | P | NS |
| Incorrect | 381/1330 | 3.64 (3.07–4.30) | | |
| Correct | 635/1070 | | | |
| Decade perceptual data | | | | |
| Less-expert subjects | | | | |
| Incorrect | 751/2285 | 1.50 (1.15–1.95) | χ2assoc | 53.44 |
| Correct | 112/265 | | χ2homog | 1.00 |
| More-expert subjects | | | P | NS |
| Incorrect | 599/1330 | 1.75 (1.48–2.06) | | |
| Correct | 630/1070 | | | |

CI = confidence interval; NS = not significant.

The test for homogeneity of odds ratios showed that the more- and less-expert groups differed only in odds ratios for visual recognition of the model (χ2homog = 7.83, P = 0.005), but not for recognition of manufacturer or decade of make (Table 3). Less-expert subjects rarely recognized the model of car for which they had not been able to provide the correct verbal semantic information, and thus were 41.3 times more likely to name the model of a visually presented car if they had been able to match the model name with its manufacturer, compared with an odds ratio of 10.8 for more-expert subjects.

Prosopagnosic subjects

First, we examined how well prosopagnosic self-ratings compared with their verbal semantic knowledge about cars (Fig. 2). The data of the six prosopagnosic subjects were similar to those of the healthy subjects: the covariance analysis showed that the adjusted mean verbal semantic score was 110.5 for healthy subjects and 155.2 for prosopagnosic subjects, and that the mean difference of 44.7 (SD 29.3) was not significant [t (35) = 1.52, P = 0.13]. There was also no significant difference between the slopes of the regression in the two groups [t (35) = 0.86, P = 0.39]. This suggests that the correlation of verbal semantic knowledge with self-assessed expertise was not different in the prosopagnosic and control groups; indirectly, this may suggest that their lesions had not caused a significant loss of semantic knowledge.

Second, we examined whether their visual semantic knowledge was appropriate for their degree of verbal semantic knowledge. On visual inspection, for all parameters (model, manufacturer, decade and weighted score), all prosopagnosic subjects tended to fall below the mean regression line for healthy subjects (Fig. 4). However, this is less apparent in the data using self-ratings than in the data using the objective measure of verbal semantic knowledge (Fig. 3). In particular, for the overall weighted perceptual scores, three of the prosopagnosic subjects have data points that fall on the regression line for healthy subjects, including subject R-AT2, whose self-rating significantly underestimated her car knowledge (Fig. 2).

The covariance analysis showed significant differences between healthy and prosopagnosic subjects for all measures of visual recognition, as reflected in the adjusted scores. For model recognition, the adjusted mean visual recognition score was 16.4 for healthy subjects and 1.2 for prosopagnosic subjects, a significant mean difference of 15.2 [SD = 3.6, t (35) = 4.19, P = 0.0002]. For manufacturer recognition, the adjusted mean visual recognition score was 42.8 for healthy subjects and 13.8 for prosopagnosic subjects, also a significant mean difference of 29.0 [SD = 4.9, t (35) = 5.93, P < 0.0001]. Even for recognition of decade of make, the adjusted mean visual recognition score was 64.4 for healthy subjects and 37.7 for prosopagnosic subjects, a significant mean difference of 26.7 [SD = 10.8, t (35) = 2.48, P = 0.018]. Finally, using the weighted perceptual score, the covariance analysis showed an adjusted mean visual recognition score of 70.2 for healthy subjects and 16.5 for prosopagnosic subjects, with a highly significant adjusted mean difference of 53.7 [SD = 8.9, t (35) = 6.02, P < 0.0001]. These data thus show that after adjusting for verbal semantic knowledge, the prosopagnosic group has significantly worse visual recognition of cars than healthy subjects.
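As a consistency check on the figures above, each reported t value can be approximated by dividing the adjusted mean difference by its bracketed dispersion value. The sketch below assumes that the bracketed "SD" acts as the standard error of the adjusted difference; that is an inference from the reported numbers, not something stated in the text, and the helper name is hypothetical.

```python
# (measure, adjusted mean difference, reported "SD", reported t) from the text.
reported = [
    ("model", 15.2, 3.6, 4.19),
    ("manufacturer", 29.0, 4.9, 5.93),
    ("decade", 26.7, 10.8, 2.48),
    ("weighted", 53.7, 8.9, 6.02),
]


def t_from_difference(difference, standard_error):
    """t statistic for a difference, assuming the given value is its SE."""
    return difference / standard_error


# Recompute t for every measure and keep the reported value alongside it.
rows = [(name, t_from_difference(diff, se), t_rep)
        for name, diff, se, t_rep in reported]

for name, t_calc, t_rep in rows:
    print(f"{name:>12}: recomputed t = {t_calc:.2f}, reported t = {t_rep:.2f}")

# Largest gap between recomputed and reported t values.
max_gap = max(abs(t_calc - t_rep) for _, t_calc, t_rep in rows)
```

The recomputed values differ from the reported ones only in the second decimal place, which is what rounding of the published differences and dispersion values would produce.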

Discussion

These results show that verbal semantic knowledge about members of a specific object category (cars) is highly correlated with visual semantic knowledge about items in that category. This finding is consistent with expectations that one type of knowledge should be related to other types, given the acquisition of expertise through repeated episodes and encounters that involve different sensory modalities and multiple sources of information. Our results show that this relationship is strong enough (r = 0.95) to allow an accurate prediction of visual recognition ability from measures of verbal semantic knowledge, making this a potentially useful means of determining whether visual recognition is appropriate for the level of expertise indicated by verbal semantic knowledge.

Previous work has suggested that binary self-classification as expert or novice is correlated with the ability to match pictures of car models across different years (Gauthier et al., 2003). Our more detailed self-rating scores also show some correlation with perceptual performance, but this is more modest than the correlations between verbal semantic and visual recognition scores. This may be due to between-subject variation in the use of Likert scales. However, we also found that self-ratings were highly variable in their within-subject relationship to verbal semantic knowledge. This suggests that self-ratings are not as accurate a gauge of a subject's expertise as a formal assessment of verbal semantic knowledge.

Just as each subject's visual semantic score was highly correlated with their verbal semantic score, the item-concordance analysis showed that subjects were more likely to provide correct information for the image of an item they had correctly matched on the verbal semantic test, and that the odds ratio for this increased as the information required became more specific and detailed. At the most detailed level (model name), an expertise effect emerged. Less-expert subjects rarely recognized the image of a car whose name they had not matched correctly to manufacturer, whereas more-expert subjects showed less difference in visual recognition rates between cars whose names they had matched correctly and those whose names they had not. Thus, while less-expert subjects had lower rates of visual recognition of items overall, they showed greater linkage between verbal and visual semantic knowledge at the level of individual items. We speculate that this reflects the narrow and limited experience of less-expert subjects, compared with more-expert subjects who have had a larger and probably more variable bank of episodic encounters from which to derive semantic memory. If these episodes vary in the linkage between verbal and visual information, this would lead to lower concordance between such data in semantic memory.

It should be stated that a correlation between semantic knowledge across modalities in healthy subjects neither proves nor refutes amodal theories of semantic representation (Patterson et al., 2007). The multi-modal nature of most normal human experience would lead equally to cross-modality correlations in a model that segregates unimodal semantic data in distinct modules. Rather, this type of data can set the stage for examinations of dissociations following damage to structures involved in semantic processing, which can provide more definitive data to test these theories.

Assessing object recognition in prosopagnosia

Our investigation of the correlation between verbal and visual semantic knowledge was motivated by the question of object specificity in the study of prosopagnosia. Patients with prosopagnosia have lost the ability to recognize familiar faces, in the absence of other significant perceptual or memory dysfunction (Barton, 2003). General semantic knowledge is usually preserved in prosopagnosia, because such deficits are normally grounds for diagnostic exclusion, as they point to semantic dementia (Hodges and Patterson, 2007) or, if confined to knowledge about people, a ‘people-specific amnesia’ (Ellis et al., 1989; Hanley et al., 1989) rather than prosopagnosia (just as more general perceptual deficits point to general visual object agnosia rather than prosopagnosia). Thus, a non-visual assessment of expertise should provide a reasonable index of pre-morbid expertise. This may be particularly true of subjects whose lesions do not extend to the anterior temporal lobe, which has been implicated in disorders of semantic knowledge (Hodges and Patterson, 2007).

Adjusting assessments of non-facial visual object recognition for pre-morbid expertise would be desirable but has not been attempted quantitatively in prosopagnosic subjects thus far. Some have chosen object categories that they proposed were universal in experience, such as common household objects (Buxbaum et al., 1999) or fruits and vegetables (Barton et al., 2004). However, mere exposure may be insufficient to guarantee perceptual expertise, since motivated interest also plays a role. Subjective evidence of such interest has been used to guide the choice of object category in other reports. Thus a woman who worked in a restaurant was unable to recognize fruits and vegetables (de Renzi et al., 1991) and a racing fan could no longer recognize horses (Newcombe, 1979). On the other hand, a soldier could still recognize military insignia (Cole and Perez-Cruet, 1964) and R.M., a collector of miniature cars, could provide the manufacturer, model and year for a large number of cars (Sergent and Signoret, 1992). The ability to recognize personally familiar exemplars has also been used. Farmers were reported to have lost the ability to recognize their own cows (Bornstein et al., 1969; Assal et al., 1984), while W.J. was able to recognize the individuals in his flock of sheep (McNeil and Warrington, 1993), and Case 4 could recognize his own razor, wallet, neckties and glasses (de Renzi, 1986).

While these latter reports illustrate the range of associations possible between face perception and the recognition of other objects, it is clearly difficult to standardize the findings from such idiosyncratic testing material. Also, none have tried to verify the degree of expertise acquired through the reported experience of their subjects. Our results concerning the relationship between self-ratings and either verbal semantic knowledge or visual recognition raise questions about assumptions based on mere exposure or occupation. Is it reasonable to assume that any racing fan can recognize horses, and if so what degree of accuracy should we expect? In prosopagnosia, what level of accuracy for non-face object recognition is required to establish or deny a dissociation with impaired face processing? We can illustrate these difficulties by considering the case of R.M., the miniature car collector, who recognized 172 of 210 cars, whereas none of six controls, ‘two of whom claimed to have a definite interest in cars’ (p. 381), scored better than 128 (Sergent and Signoret, 1992). How can we be certain that the ‘interest’ of those two controls matched the expertise of R.M., who had up to 5000 cars in his collection? Even if their self-avowed interest is taken as a declaration of expertise, our data show the limits of self-assessment to index expertise, given that visual recognition correlates more poorly with self-ratings than with an objective verbal semantic measure. Thus, although R.M.'s score of 172 was impressive, without an objective measure of expertise, we cannot know if these two controls were appropriate matches for R.M. If they were not, it remains an open question as to whether controls with an objectively demonstrated proficiency similar to R.M.'s would have scored even better than his 172.

Our data from healthy subjects show that it is possible to use verbal semantic knowledge to estimate the visual recognition that we should expect from a subject. We found that the visual recognition accuracy of all six of our prosopagnosic subjects fell below the regression line of performance in healthy subjects, despite very different lesions. Thus, after adjustment for verbal semantic knowledge, prosopagnosic subjects recognized, on average, the manufacturer of 29 fewer cars in the 150-item test than did the healthy subjects. The data for R-IOT3, R-IOT4 and R-AT2 particularly underlined the utility and importance of adjusting for pre-morbid expertise. R-IOT3, a retired car mechanic, had scores for visual car recognition superior to those of many of our controls, but actually inferior when compared with controls with similar verbal semantic knowledge about cars. The same was true for R-IOT4 and R-AT2, two subjects with a non-professional interest in cars who had significantly underestimated their car knowledge on their self-ratings.

Further testing of more subjects is indicated, to determine if there is a consistent deficit for visual recognition of cars in prosopagnosia, regardless of lesion size, location or functional subtype (in this regard, we would be pleased to supply the test to anyone who emails the corresponding author). If so, this may support assertions that the prosopagnosic defect represents a more general failure in the ability to discriminate subtle differences between members of the same object category, sometimes referred to as the individuation hypothesis (Moscovitch et al., 1997). Proficiency at such discriminations may reflect an experience- and interest-dependent expertise, the loss of which the expertise hypothesis argues is the fundamental defect in prosopagnosia (Gauthier et al., 1999). While our results show a consistent deficit in car recognition in our sample of six prosopagnosic subjects, despite significant variation in the location, laterality and size of their lesions, it still remains possible that their difficulties with non-face object recognition stem from damage to regions adjacent to face-processing structures, and therefore are correlated with but not intrinsic to the face-processing deficit in prosopagnosia. It is therefore desirable to examine more prosopagnosic subjects, particularly those with relatively limited lesions, to determine if any prosopagnosic subject has truly spared car recognition, or if all show the same deficit. Our data show how use of verbal semantic knowledge to adjust visual recognition scores for pre-morbid expertise can increase confidence in the validity of the conclusions drawn from such perceptual data.

Funding

Canadian Institutes of Health Research (MOP-77615 and MOP-85004); National Institute of Mental Health (1R01 MH069898); Canada Research Chair and a Senior Scholar award from the Michael Smith Foundation for Health Research (to J.B.).

Acknowledgements

We thank Rana Khalil and George Malcolm for assistance with creation of the visual semantic test, Alla Sekunova for help with data collection, Brad Duchaine for assistance with recruitment and standardized testing, and Michael Scheel, Giuseppe Iaria and Chris Fox for providing neuroimaging analysis. Portions of this work were presented at the annual meeting of the Vision Sciences Society in Naples, Florida, in May 2008.

References

- Assal G, Favre C, Anderes JP. Nonrecognition of familiar animals by a farmer. Zooagnosia or prosopagnosia for animals. Rev Neurol (Paris) 1984;140:580–4.

- Barton JJ. Disorders of face perception and recognition. Neurol Clin. 2003;21:521–48. doi: 10.1016/s0733-8619(02)00106-8.

- Barton J, Cherkasova M. Face imagery and its relation to perception and covert recognition in prosopagnosia. Neurology. 2003;61:220–5. doi: 10.1212/01.wnl.0000071229.11658.f8.

- Barton J, Cherkasova M, O'Connor M. Covert recognition in acquired and developmental prosopagnosia. Neurology. 2001;57:1161–7. doi: 10.1212/wnl.57.7.1161.

- Barton JJ, Cherkasova MV, Press DZ, Intriligator JM, O'Connor M. Perceptual functions in prosopagnosia. Perception. 2004;33:939–56. doi: 10.1068/p5243.

- Bornstein B, Sroka H, Munitz H. Prosopagnosia with animal face agnosia. Cortex. 1969;5:164–9. doi: 10.1016/s0010-9452(69)80027-4.

- Buxbaum L, Glosser G, Coslett H. Impaired face and word recognition without object agnosia. Neuropsychologia. 1999;37:41–50. doi: 10.1016/s0028-3932(98)00048-7.

- Cole M, Perez-Cruet J. Prosopagnosia. Neuropsychologia. 1964;2:237–46.

- Damasio A, Tranel D, Damasio H. Face agnosia and the neural substrates of memory. Ann Rev Neurosci. 1990;13:89–109. doi: 10.1146/annurev.ne.13.030190.000513.

- Damasio AR, Damasio H, Tranel D. Prosopagnosia: anatomic and physiologic aspects. In: Ellis HD, Jeeves MA, Newcombe F, Young A, editors. Aspects of face processing. Dordrecht: Martinus Nijhoff; 1986. pp. 279–90.

- de Renzi E. Current issues in prosopagnosia. In: Ellis HD, Newcombe F, Young A, editors. Aspects of face processing. Dordrecht: Martinus Nijhoff; 1986. pp. 243–52.

- de Renzi E, Faglioni P, Grossi D, Nichelli P. Apperceptive and associative forms of prosopagnosia. Cortex. 1991;27:213–21. doi: 10.1016/s0010-9452(13)80125-6.

- Duchaine B, Nakayama K. Dissociations of face and object recognition in developmental prosopagnosia. J Cogn Neurosci. 2005;17:249–61. doi: 10.1162/0898929053124857.

- Ellis H, Young A, Critchley E. Loss of memory for people following temporal lobe damage. Brain. 1989;112:1469–83. doi: 10.1093/brain/112.6.1469.

- Farah M, Wilson K, Drain M, Tanaka J. What is "special" about face perception? Psychol Rev. 1998;105:482–98. doi: 10.1037/0033-295x.105.3.482.

- Fleiss JL. Statistical methods for rates and proportions. New York: Wiley; 1981.

- Fox CJ, Iaria G, Duchaine BC, Barton JJS. Behavioral and fMRI studies of identity and expression perception in acquired prosopagnosia. J Vision. 2008;8:708.

- Funnell E. Evidence for scripts in semantic dementia: implications for theories of semantic memory. Cogn Neuropsychol. 2001;18:323–41. doi: 10.1080/02643290042000134.

- Gainotti G. Anatomical functional and cognitive determinants of semantic memory disorders. Neurosci Biobehav Rev. 2006;30:577–94. doi: 10.1016/j.neubiorev.2005.11.001.

- Gauthier I, Behrmann M, Tarr MJ. Can face recognition really be dissociated from object recognition? J Cogn Neurosci. 1999;11:349–70. doi: 10.1162/089892999563472.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000a;3:191–7. doi: 10.1038/72140.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform "face area" is part of a network that processes faces at the individual level. J Cogn Neurosci. 2000b;12:495–504. doi: 10.1162/089892900562165.

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nat Neurosci. 2003;6:428–32. doi: 10.1038/nn1029.

- Gomori AJ, Hawryluk GA. Visual agnosia without alexia. Neurology. 1984;34:947–50. doi: 10.1212/wnl.34.7.947.

- Hanley J, Young A, Pearson N. Defective recognition of familiar people. Cogn Neuropsychol. 1989;6:179–210.

- Henke K, Schweinberger S, Grigo A, Klos T, Sommer W. Specificity of face recognition: recognition of exemplars of non-face objects in prosopagnosia. Cortex. 1998;34:289–96. doi: 10.1016/s0010-9452(08)70756-1.

- Hodges JR, Patterson K. Semantic dementia: a unique clinicopathological syndrome. Lancet Neurol. 2007;6:1004–14. doi: 10.1016/S1474-4422(07)70266-1.

- Hodges JR, Patterson K, Graham N, Dawson K. Naming and knowing in dementia of Alzheimer's type. Brain Lang. 1996;54:302–25. doi: 10.1006/brln.1996.0077.

- Kanwisher N. Domain specificity in face perception. Nat Neurosci. 2000;3:759–63. doi: 10.1038/77664.

- Lambon Ralph MA, Graham KS, Patterson K, Hodges JR. Is a picture worth a thousand words? Evidence from concept definitions by patients with semantic dementia. Brain Lang. 1999;70:309–35. doi: 10.1006/brln.1999.2143.

- Lambon Ralph MA, Patterson K, Hodges JR. The relationship between naming and semantic knowledge for different categories in dementia of Alzheimer's type. Neuropsychologia. 1997;35:1251–60. doi: 10.1016/s0028-3932(97)00052-3.

- McCarthy RA, Warrington EK. Disorders of semantic memory. Philos Trans R Soc Lond B Biol Sci. 1994;346:89–96. doi: 10.1098/rstb.1994.0132.

- McNeil J, Warrington E. Prosopagnosia: a face-specific disorder. Q J Exp Psychol. 1993;46A:1–10. doi: 10.1080/14640749308401064.

- Moscovitch M, Winocur G, Behrmann M. What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. J Cogn Neurosci. 1997;9:555–604. doi: 10.1162/jocn.1997.9.5.555.

- Moscovitch M, Rosenbaum RS, Gilboa A, Addis DR, Westmacott R, Grady C, et al. Functional neuroanatomy of remote episodic, semantic and spatial memory: a unified account based on multiple trace theory. J Anat. 2005;207:35–66. doi: 10.1111/j.1469-7580.2005.00421.x.

- Newcombe F. The processing of visual information in prosopagnosia and acquired dyslexia: functional versus physiological interpretation. In: Osborne O, Gruneberg M, Eiser J, editors. Research in psychology and medicine. London: Academic Press; 1979. pp. 315–22.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–87. doi: 10.1038/nrn2277.

- Riddoch MJ, Johnston RA, Bracewell RM, Boutsen L, Humphreys GW. Are faces special? A case of pure prosopagnosia. Cogn Neuropsychol. 2008;25:3–26. doi: 10.1080/02643290801920113.

- Rossion B, Caldara R, Seghier M, Schuller AM, Lazeyras F, Mayer E. A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain. 2003;126(Pt 11):2381–95. doi: 10.1093/brain/awg241.

- Rossion B, Collins D, Goffaux V, Curran T. Long-term expertise with artificial objects increases visual competition with early face categorization processes. J Cogn Neurosci. 2007;19:543–55. doi: 10.1162/jocn.2007.19.3.543.

- Sergent J, Signoret J-L. Varieties of functional deficits in prosopagnosia. Cereb Cortex. 1992;2:375–88. doi: 10.1093/cercor/2.5.375.

- Tanaka JW, Corneille O. Typicality effects in face and object perception: further evidence for the attractor field model. Percept Psychophys. 2007;69:619–27. doi: 10.3758/bf03193919.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–98. doi: 10.1016/j.neuron.2004.11.018.