Abstract

The equations of motion for deterministic molecular dynamics (MD) are chaotic, creating problems for their numerical treatment due to the exponential growth of error with time. Indeed, modeling and computational errors overwhelm numerical trajectories in typical simulations. Consequently, accuracy is expected only in a statistical sense, based on random initial conditions. Of great interest then is the relationship between errors in the dynamics and their effects on the accuracy of statistical quantities, specifically, expectations. This article provides a formula for the effect of a perturbation on an ensemble average, which explains the accuracy of such calculations. It also provides a formula for the effect of a perturbation on a time correlation function, which, however, fails to explain accuracy for these calculations. Additionally, this article clarifies the relationships among various dynamical properties of MD and provides an extension to a theory of non-Hamiltonian MD.

Keywords: molecular dynamics, symplectic integrator, perturbation, statistical mechanics, ergodic, divergence-free

1. Introduction

Molecular dynamics (MD) is heavily used in a variety of fields and consumes vast amounts of computing time. However, the equations of motion for MD are chaotic, creating problems for their numerical treatment due to the exponential growth of error with time. In particular, computational errors overwhelm numerical trajectories in typical simulations using classical mechanics, so the use of numerical integrators for computing statistical quantities needs to be defended. The book [13, p. 73] speculates that shadowing (the existence of a nearby exact trajectory) may provide the justification but concludes by saying, “that there is clearly still a corpse in the closet. We believe this corpse will not haunt us, and we quickly close the closet.” Indeed, despite some impressive successes for shadowing [18], it is unlikely that a shadowing result is possible for long durations for highly elliptic dynamical systems such as MD. This article contributes to this discussion in several ways: (i) It clarifies the significance of various dynamical properties of MD and their relationships. (ii) It extends the theory of non-Hamiltonian MD [28] by providing a general transformation of suitable dynamical equations to divergence-free form. (iii) It presents a formula for the effect of a perturbation on an ensemble average, which explains the accuracy of such a calculation. (iv) It presents a formula for the effect of a perturbation on a time correlation function, which exposes the essential difficulty in explaining the accuracy of such a calculation.

The main theme of this article is to explore the question of what makes MD work by means of a perturbation analysis. The principal motivation is to seek guidance in the construction of approximations, such as numerical integrators, fast methods for nonbonded interactions, and coarse-graining techniques. A perturbation analysis is also useful for making an informed choice among existing approximations. Additionally, such an analysis is the first step in deriving computable quantities that assess the error. Finally, error analysis increases our confidence in the results of computations. Empirical evidence, even that obtained from systematic studies, is applicable only to a narrow range of problems, whereas theoretical evidence has much broader applicability.

Section 2 discusses various basic properties of dynamical systems with random initial values. Included is an extension to a theory of non-Hamiltonian MD.

Since calculating accurate trajectories is not typically the aim of a molecular simulation, it is desirable to specify what the precise goal is. Section 3 details two specific goals: (i) that of computing the ensemble average 〈A〉 for some quantity A(Γ), where Γ is a point in phase space, and (ii) that of computing a time-dependent value expressible as a linear combination of unnormalized time correlation functions, which have the form 〈(A ○ Φt) · B〉, where Φt is the t-flow of the dynamics and B(Γ) is some (possibly) other quantity. Perhaps only time correlation functions and time averages are insensitive to perturbations, and perhaps they are all that can be extracted from long-time dynamics. These seem to be the only dynamical quantities calculated in textbooks on MD. Knowing what functionals of trajectories can be computed is very useful for making approximations; it indicates what aspects of behavior are to be reproduced and what is to be neglected.

Section 4 derives a formula for the effect of a perturbation in the energy function H(Γ) on an ensemble average (as does [3] apparently), and this indicates that computing such an average is formally well posed. Interestingly, the effect of a perturbation depends on only the zeroth derivative of the perturbation and the observable A(Γ), except in the case of the microcanonical ensemble for which there is a limited dependence on first derivatives.

Section 5 derives a formula for the effect of a perturbation on a time correlation function. However, the result is less than satisfactory because it fails to indicate that the problem is well posed as time t → ∞. (The use of the Fourier and Laplace transforms leads to interesting formulas; however, these do not seem to advance the cause of perturbation analysis.)

It is well known [22] that the error due to numerical integration is equivalent to perturbing the vector field defining the system of ordinary differential equations (ODEs) plus an exponentially small time-dependent term. The perturbation theory of sections 4 and 5 applies if we neglect the exponentially small term and consider the effect of a divergence-free perturbation to the vector field that conserves a perturbed energy. Such is the case for a symplectic integrator applied to a Hamiltonian system, where the effect of temporal discretization error is to change the Hamiltonian H(Γ) to H(Γ) + η(Γ). Evidence favoring the use of symplectic integrators is quite compelling, as discussed in section 6.

2. Model

Let Γ = [xT, pT]T comprise the phase space variables, where x consists of positions and p consists of momenta, and let the equations of motion defining the t-flow Φt(Γ) be

$$\frac{d}{dt}\Phi_t(\Gamma) = f(\Phi_t(\Gamma)), \qquad \Phi_0(\Gamma) = \Gamma.$$

For divergence-free dynamics, ∇ · f(Γ) = 0. A special case is Hamiltonian dynamics for which f(Γ) = J∇H(Γ), where $J = \begin{bmatrix} 0 & I \\ -I & 0 \end{bmatrix}$. Of special interest is the separable Hamiltonian $H(x, p) = \tfrac{1}{2} p^{\mathsf T} M^{-1} p + U(x)$, where M is a diagonal mass matrix and U(x) is the potential energy, which is a sum of few-body potentials for covalent bonded forces and 2-body potentials for nonbonded forces (electrostatic, van der Waals, excluded volume). For example, the numerical results presented in section 6 are from a simulation of 864 argon atoms (each of mass 39.95 atomic mass units (a.m.u.)) in a cubic box of side length 69.66 angstroms (Å) with periodic boundary conditions. Each pair of atoms i, j contributes a term to the potential energy, where $u(r) = 4\varepsilon\left((\sigma/r)^{12} - (\sigma/r)^{6}\right)$ (ε = 120 K times Boltzmann’s constant, σ = 3.405 Å) is the Lennard–Jones potential and S(r) is a switching function defined to be one for r ≤ ron = 10 Å, zero for r ≥ roff = 12 Å, and for values of r in between.
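As a quick sanity check on the argon parameters quoted above, the following sketch evaluates the Lennard–Jones pair potential with energies expressed in kelvin (that is, divided by Boltzmann's constant). The function name `lennard_jones` is hypothetical, and the switching function S(r) is deliberately left out because its formula is not reproduced here; this is an illustration, not the simulation code behind section 6.

```python
import math

EPS_K = 120.0   # epsilon / k_B in kelvin (value quoted in the text)
SIGMA = 3.405   # sigma in angstrom (value quoted in the text)

def lennard_jones(r, eps=EPS_K, sigma=SIGMA):
    """Unswitched pair energy u(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * eps * (sr6 * sr6 - sr6)

# The minimum of u lies at r = 2**(1/6) * sigma, where u = -eps.
r_min = 2.0 ** (1.0 / 6.0) * SIGMA
u_min = lennard_jones(r_min)

# Number density of the system described in the text: 864 atoms in a cubic box.
n_atoms, box = 864, 69.66
density = n_atoms / box ** 3   # atoms per cubic angstrom

print(f"r_min = {r_min:.4f} A, u(r_min) = {u_min:.4f} K, density = {density:.6f} A^-3")
```

Note that both ron = 10 Å and roff = 12 Å lie well beyond the minimum near 3.82 Å, so the switching only affects the weak attractive tail.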

The microscopic state Γ of a system is not known in practice; only partial information is given for the initial value Γ. Hence, the initial value Γ is assumed to be random with probability density function (PDF) denoted by ρ0(Γ). Formulation as a stochastic initial value problem dramatically changes the questions we ask, yielding problems that are well conditioned (it seems). The PDF ρ(Γ,t) for the dynamics Φt(Γ) can be shown to be given by

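$$\rho(\Gamma, t) = \rho_0(\Phi_{-t}(\Gamma))\,\left|\det \partial_\Gamma \Phi_{-t}(\Gamma)\right|, \tag{1}$$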

which can be shown to satisfy the continuity equation

$$\partial_t \rho + \nabla \cdot (\rho f) = 0,$$

which is simply a conservation law for probability. This equation is a linear hyperbolic PDE having trajectories Φt(Γ) as characteristics.

Of particular interest is the time average

$$\rho(\Gamma) = \lim_{t \to +\infty} \frac{1}{t} \int_0^t \rho(\Gamma, t')\,dt'$$

because it represents a case of minimal information about the microstate Γ. (The existence of the time average is guaranteed by a theorem of Birkhoff.) Not surprisingly, the density defined in this way can be shown to be stationary with respect to the flow Φt, meaning that (1) holds with ρ(Γ,t) = ρ(Γ), or equivalently ∇ · (ρf) = 0.

In practice, a stationary density ρ(Γ) is determined by specifying distributions of macroscopic quantities. Given such a stationary density, we define the ensemble average for an observable A(Γ) by

$$\langle A \rangle = \int A(\Gamma)\,\rho(\Gamma)\,d\Gamma.$$

From (1) with ρ(Γ,t) = ρ(Γ), it follows that

$$\langle A \circ \Phi_t \rangle = \langle A \rangle. \tag{2}$$

If there is additional information about the initial value Γ, e.g., Ak(Γ) = Ak,0, k = 1, 2,…,m, define the initial density as a conditional density:

Stationary solutions of the continuity equation are not unique, particularly if there are conserved quantities. Each distribution of the values of the conserved quantities can be used to define a different stationary solution. For simplicity, we assume that energy H(Γ) is the only conserved quantity. (In the typical case of periodic boundary conditions, angular momentum is not conserved. Also, it is desirable to set the linear momentum to zero, and this together with invariance of the center of mass can be used, in principle, to eliminate position and momentum coordinates of one of the atoms.) Extension to additional conserved quantities is outlined in [28].

It is easy to characterize stationary densities if ∇ · f = 0; hence, we assume that f is divergence-free in much of this article. Then it is easy to show that any probability density of the form

$$\rho(\Gamma) = \zeta(H(\Gamma))/Z$$

is stationary, where ζ is an arbitrary function independent of H. (This form of density is also necessary if ρ is required to be continuous, as discussed in section 2.2.) Typically, $\zeta(E) = e^{-\beta E}$, where β is inverse temperature, which gives a Boltzmann–Gibbs distribution. This is the distribution for the canonical ensemble, which represents a system in a fixed volume but exchanging energy with a much larger system of inverse temperature β. It is also the distribution for the grand canonical ensemble and for the isothermal-isobaric ensemble, popular for biomolecular simulations. The latter uses a Hamiltonian having enthalpy as its value and parameterized by volume V, 0 < V < +∞. This models a system where the volume V is variable, and there is mechanical contact with a much larger system of specified pressure P. Dependence of the potential energy on V arises from external compressing forces. For periodic boundary conditions, V is the volume of the periodic box.

2.1. Dynamics that is not divergence-free

If ∇ · f ≠ 0, it may still be possible to find stationary solutions to the continuity equation. Following [28], we try $\rho(\Gamma) = e^{-w(\Gamma)}\zeta(H(\Gamma))/Z$. Substitution into ∇ · (ρf) = 0 yields the equation

$$f \cdot \nabla w = \nabla \cdot f \tag{3}$$

for w. In general, the existence of a solution is not expected, just as the existence of an integral of a dynamical system is unexpected. Suppose, though, there does exist a solution w. (It will be unique only up to an additive function of H(Γ).) We might call it a “compressibility integral” because its derivative along a trajectory Φt is ∇ · f, which is the compressibility [28] of the flow.

The compressibility of the vector field f can be made to vanish by multiplying it by $e^{-w}$ (a Sundman transformation [20]), which has the effect merely of transforming time.
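The claim can be checked in one line. The computation below is a sketch that assumes only the product rule for the divergence and equation (3) in the form f · ∇w = ∇ · f, which is the form in which it is used in Example 1:

```latex
\nabla \cdot \bigl(e^{-w} f\bigr)
  = e^{-w}\,\nabla \cdot f + f \cdot \nabla\bigl(e^{-w}\bigr)
  = e^{-w}\bigl(\nabla \cdot f - f \cdot \nabla w\bigr)
  = 0 .
```

Because the factor multiplying f is a positive scalar, the rescaled field traces out the same curves in phase space as f, only at a different rate.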

Proposition 1. If (d/dt)Φt = f ○ Φt possesses an integral H and a compressibility integral w satisfying (3), then

is divergence-free, it conserves H, and its solution satisfies

where

Proof. Clearly . Also,

Example 1. Nosé–Hoover dynamics is defined by

where Q is a parameter. This has as an integral . The compressibility is ∇ · f = −3Nπ/Q, and a solution of f · ∇w = −3Nπ/Q is w = −3Nξ [28]. Hence, the vector field $e^{3N\xi}f$ is divergence-free. Alternatively, replacing ξ by $\xi' = e^{3N\xi}$ in the dynamical equations also yields a divergence-free system [28].

Example 2. The isokinetic ensemble [28] uses

to generate the canonical ensemble in configuration space. This has as an integral . The compressibility integral w satisfies

which is the derivative of (3N − 1)U(x)/(pTM−1p) along a trajectory, whence w = (3N − 1)U(x)/(pTM−1p). Again, a Sundman transformation can be used to make this divergence-free, thus providing an affirmative answer to the question posed in [28].

Clearly, other cases exist where the invariant density can be found: If a change of variables is performed for a divergence-free system with ρ = ζ(H)/Z, the transformed system has invariant and invariant density .

2.2. Ergodicity

For a stationary density ρ(Γ) to have the form ζ(H(Γ))/Z is equivalent to the following condition:

$$\rho(\Gamma \mid H(\Gamma) = E) = \delta(H(\Gamma) - E)/\Omega(E), \tag{4}$$

where Ω(E) = ∫ δ(H(Γ) − E)dΓ. This represents a uniform distribution over all Γ for which E ≤ H(Γ) < E + dE. It is a stationary density, the microcanonical ensemble, corresponding to ζ(E’) = δ(E’ − E). It is the probability density for an isolated system with energy E, with no exchange of energy, momentum, or mass between the system and its surroundings. It is almost exclusively a theoretical/computational tool. The microcanonical ensemble average of an observable A(Γ) is given by

$$\langle A \rangle_E = \frac{1}{\Omega(E)} \int A(\Gamma)\,\delta(H(\Gamma) - E)\,d\Gamma.$$

Analytical manipulations are facilitated if the Dirac delta function δ(·) is replaced by a differentiable approximation of arbitrarily narrow width. (It could be avoided altogether by using (d/dE) ∫ θ(H(Γ) − E)ρ(Γ)dΓ, where θ(·) is the Heaviside function.)

An assumption needed for some computational techniques is that Φt be ergodic on each manifold H(Γ) = E. We say that a measure-preserving flow Φt is ergodic if the only subsets of the manifold H(Γ) = E that are invariant under Φt have measure either zero or one. The flow Φt preserves the microcanonical ensemble measure, since the latter is a stationary density for the former. If Φt is assumed to be ergodic, the ergodic theorem implies that for any sufficiently smooth observable A(Γ), its time average equals its microcanonical ensemble average

$$\lim_{t \to +\infty} \frac{1}{t} \int_0^t A(\Phi_s(\Gamma_E))\,ds = \langle A \rangle_E$$

for almost any ΓE on the manifold H(Γ) = E.
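The equality of time and microcanonical averages can be seen concretely in a toy case. The sketch below assumes a one-dimensional harmonic oscillator H = (p² + x²)/2, whose energy surface is a circle covered by a single orbit, and the observable A = x²; the function names are hypothetical, and the example says nothing about whether a realistic MD system is ergodic.

```python
import numpy as np

def time_average_x2(energy, t_end=2000.0, n_samples=400_001):
    """Time average of A = x**2 along the exact trajectory of H = (p**2 + x**2)/2."""
    t = np.linspace(0.0, t_end, n_samples)
    x = np.sqrt(2.0 * energy) * np.cos(t)   # exact solution started at p = 0
    return float(np.mean(x ** 2))           # uniform sampling in time

def microcanonical_x2(energy, n_angles=100_000):
    """Average of x**2 over the energy circle x**2 + p**2 = 2E.

    |grad H| is constant on this circle, so the microcanonical measure
    is uniform in the polar angle.
    """
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    return float(np.mean(2.0 * energy * np.cos(theta) ** 2))

E = 1.5
ta = time_average_x2(E)
ma = microcanonical_x2(E)
print(f"time average = {ta:.5f}, microcanonical average = {ma:.5f}")
```

Both numbers approach E, with the time average converging like 1/t as the averaging window grows.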

Ergodicity implies that the trajectory {Φt(ΓE) | 0 ≤ t < +∞} is dense on the manifold H(Γ) = E for almost any ΓE, which, in practice, is the essence of ergodicity. Although systems of interest do not exactly have this property, it is believed that typical Hamiltonians are ergodic for all but a fraction of phase space, a fraction that becomes vanishingly small in the thermodynamic limit N → ∞.

Consider further the necessity of choosing the stationary density ρ(Γ) to have the form ζ(H(Γ))/Z or, equivalently, of ensuring that (4) hold. Suppose that the energy surface H(Γ) = E has a partition X1 + X2 + X3 + ⋯ into (disjoint) sets of positive measure such that Φt is ergodic on each set. It is reasonable to believe that the union of these sets has measure 1 if we assume that there exists no integral other than H(Γ) on any set of positive measure. The most general invariant density then has the form

where the coefficients ck are nonnegative and 1k is the indicator function for Xk. The continuous nature of H(Γ) on the energy surface H(Γ) = E makes it unreasonable to choose ρ(Γ|H(Γ) = E) to be anything but continuous on that surface. This forces the weights to be equal and (4) to hold.

3. Simulation tasks

In many applications, it is the expectation of an observable 〈A〉 = ∫ A(Γ)ρ(Γ)dΓ, e.g., internal energy 〈U〉, that is of interest, and equations of motion are not directly involved. Additionally, problems such as that of structure determination can be formulated this way: Suppose that configuration space (the set of all positions x) is partitioned into conformations corresponding to subsets X1, X2, …, XK of phase space. The problem is to find subsets Xk having the greatest probability 〈1k〉.

The expectation 〈A〉 can be estimated as an average of random samples A(Γ(ν)), ν = 1, 2, …, Ntrials. A popular sampling technique is to use nonphysical dynamics and calculate time averages. The dynamics must be ergodic and have the desired ensemble as its stationary density. Molecular dynamics with stochastic terms can be used. For example, Langevin dynamics adds friction and noise terms to the equation for (d/dt)p, where C is a diagonal matrix and W(t) is a collection of independent standard Wiener processes. Alternatively, deterministic molecular dynamics can be used if ergodic. For ensembles other than the microcanonical one, molecular dynamics with extended Hamiltonians are used.

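An alternative approach of possible use in some circumstances is to represent an ensemble average as a weighted average of microcanonical ensembles: By inserting $1 = \int \delta(H(\Gamma) - E)\,dE$ into the integral that defines 〈A〉, it follows that

$$\langle A \rangle = \frac{1}{Z} \int \zeta(E)\,\Omega(E)\,\langle A \rangle_E\,dE. \tag{5}$$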

The density of states Ω(E) can be calculated using techniques such as those in [34].
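To illustrate the idea of layering an ensemble average over energies, the sketch below compares a direct canonical average with an energy-weighted average of microcanonical averages, using weight ζ(E)Ω(E). It assumes the one-dimensional harmonic oscillator H = (p² + x²)/2, for which Ω(E) = 2π and 〈x²〉E = E are known in closed form; for a real system Ω(E) would have to be estimated numerically. The helper `trapezoid` and all variable names are hypothetical.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule on a (possibly nonuniform) grid."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

beta = 2.0

# Direct canonical average of A = x**2 on a phase-space grid.
grid = np.linspace(-10.0, 10.0, 801)
X, P = np.meshgrid(grid, grid, indexing="ij")
weight = np.exp(-beta * 0.5 * (X ** 2 + P ** 2))
direct = float(np.sum(X ** 2 * weight) / np.sum(weight))

# The same average layered over energy shells.
E = np.linspace(0.0, 40.0, 400_001)
omega = 2.0 * np.pi * np.ones_like(E)   # density of states for this oscillator
micro_avg = E                           # <x**2>_E for this oscillator
zeta = np.exp(-beta * E)                # canonical weight
layered = trapezoid(zeta * omega * micro_avg, E) / trapezoid(zeta * omega, E)

print(f"direct = {direct:.6f}, layered = {layered:.6f}, 1/beta = {1.0 / beta:.6f}")
```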

In some applications, kinetics (physical dynamics) is of interest. Representing the effects of the surroundings—exchange of particles, volume, and heat—in the dynamics is problematic, especially for periodic boundaries. For large N, these effects become negligible, so a conservative approach is to neglect them rather than risk contamination from postulated boundary conditions. The effect of surroundings can be checked by redoing the calculation for a different value of N.

A general cross-correlation function is given by

where the overbar denotes a complex conjugate. It is straightforward to show that

making it necessary to consider only (complex-valued) autocorrelation functions cAA(t). It follows from (2) that autocorrelation functions are symmetric in time in the sense that .

We assume that the quantity we want to compute can be expressed in terms of quantities like

$$m(t) = \langle (A \circ \Phi_t) \cdot B \rangle = \int A(\Phi_t(\Gamma))\,B(\Gamma)\,\rho(\Gamma)\,d\Gamma,$$

which implies an initial PDF ρ(Γ) that is stationary with respect to the dynamics. (A nonstationary initial PDF ρ0(Γ) is accommodated by taking the factor ρ0(Γ)/ρ(Γ) into B(Γ).) This formalism includes the Einstein relation for diffusion coefficients [13] and time correlation functions, including use of the Green–Kubo formula for diffusion coefficients [13]. For example, the velocity autocorrelation function for a system of N identical atoms is given by , where vk(x,p) is the kth component of M−1p. An important biological application is to compare orientational correlation functions with NMR spectra for the purpose of deducing protein structure [19]. Also, some aspects of conformational dynamics can be formulated this way, e.g., 〈(1Y ○ Φt) · 1X〉 is the probability of a transition from one region X of phase space to another Y. However, transition rates kXY and other dynamical quantities are more complicated than this, and appropriate formulations are needed. For example, transition rates can be expressed as time derivatives of time-correlation functions of indicator functions [6, 33] and can be extracted from the matrix logarithm of a matrix of normalized time-correlation functions of indicator functions [8]. Typically, m(t) decays exponentially to a limiting value as t → ∞, but, in some cases [19], decay is proportional to $t^{-3/2}$.

Transient observables m(t) can be calculated as an average of values A(Φt(Γ(ν))) · B(Γ(ν)), ν = 1, 2, …, Ntrials. Under the assumption of ergodicity, m(t) can be calculated more economically by sampling different energies E (instead of different points Γ(ν) in phase space) and computing just one trajectory Φt(ΓE) for each energy, where H(ΓE) = E: Ergodicity implies

$$\langle (A \circ \Phi_t) \cdot B \rangle_E = \lim_{T \to +\infty} \frac{1}{T} \int_0^T A(\Phi_{t+s}(\Gamma_E))\,B(\Phi_s(\Gamma_E))\,ds$$

for each energy E, and these can be averaged using (5).
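The single-trajectory estimate can be sketched as a sliding average over time origins. The code below assumes a uniformly sampled trajectory and, as a test case, the exactly solvable oscillator H = (p² + x²)/2 with unit mass, for which the microcanonical velocity autocorrelation is E cos t. The function name `correlation_from_trajectory` is hypothetical, and the direct loop over lags is chosen for clarity rather than speed.

```python
import numpy as np

def correlation_from_trajectory(a, b, max_lag):
    """Estimate <(A o Phi_t) . B>_E at lags 0..max_lag (in samples).

    a[n] and b[n] are the observables A and B sampled along one trajectory;
    each lag k averages a[n + k] * b[n] over the same set of time origins n.
    """
    n = len(a) - max_lag
    return np.array([np.mean(a[k:k + n] * b[:n]) for k in range(max_lag + 1)])

# Exact trajectory with energy E, started at x = sqrt(2E), p = 0 (so v = p).
E, dt, n_steps = 1.0, 0.01, 100_000
t = dt * np.arange(n_steps)
v = -np.sqrt(2.0 * E) * np.sin(t)

max_lag = 300
c = correlation_from_trajectory(v, v, max_lag)
exact = E * np.cos(dt * np.arange(max_lag + 1))   # closed form for this example
print("max deviation from E*cos(t):", float(np.max(np.abs(c - exact))))
```

For long trajectories and many lags an FFT-based estimator would be the usual choice; the loop above is kept because it mirrors the defining formula directly.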

3.1. Mixing

A dynamical property even stronger than ergodicity is that of mixing: With an initial density ρ0(Γ) = c(Γ)δ(H(Γ) − E) on the manifold H(Γ) = E, the subsequent density ρ(Γ,t) converges, in a weak sense, to the microcanonical density ρ(Γ) = δ(H(Γ) − E)/Ω(E) as t → +∞, meaning that

$$\lim_{t \to +\infty} \int A(\Gamma)\,\rho(\Gamma, t)\,d\Gamma = \int A(\Gamma)\,\rho(\Gamma)\,d\Gamma \tag{6}$$

for sufficiently smooth A(Γ). (A simple example of this is the weak convergence of sin tΓ to 0 as t → +∞.) Equation (6) can be reformulated in terms of a time correlation function using (1): We have

$$\int A(\Gamma)\,\rho(\Gamma, t)\,d\Gamma = \int A(\Phi_t(\Gamma))\,\rho_0(\Gamma)\,d\Gamma = \langle (A \circ \Phi_t) \cdot B \rangle_E,$$

where B(Γ) = ρ0(Γ)/ρ(Γ). Convergence of 〈(A ○ Φt) · B〉E to 〈A〉E〈B〉E as t → ∞ is equivalent to (6). Hence, microcanonical time correlation functions for general observables converge to zero if and only if the dynamics is mixing. (Note that convergence of a cross-correlation function cAB(t) to zero as t → ∞ cannot be expected for a general ensemble unless either 〈A〉E or 〈B〉E is independent of E.) It is remarkable that convergence to steady-state is possible for the linear, often time-reversible, hyperbolic PDE ∂tρ + ∇ · (ρf) = 0. It means the equation has the character of a parabolic PDE, which is to say that the ODE has the character of a stochastic differential equation.
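The weak convergence of sin tΓ mentioned above is easy to observe numerically. The sketch below pairs sin(tΓ) with one smooth test function, A(Γ) = exp(−(Γ − 1)²), chosen here purely for illustration; for this choice the integral has the closed form √π exp(−t²/4) sin t, so the pairing decays rapidly even though sin(tΓ) itself does not converge at any fixed Γ ≠ 0.

```python
import numpy as np

def weak_pairing(t, n=200_001):
    """Approximate the integral of A(G) * sin(t * G) dG with A(G) = exp(-(G - 1)**2)."""
    g = np.linspace(-9.0, 11.0, n)                # A is negligible outside this window
    y = np.exp(-(g - 1.0) ** 2) * np.sin(t * g)
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(g)))   # trapezoidal rule

values = {t: weak_pairing(t) for t in (1.0, 4.0, 8.0, 16.0)}
for t, val in values.items():
    closed_form = np.sqrt(np.pi) * np.exp(-t ** 2 / 4.0) * np.sin(t)
    print(f"t = {t:5.1f}  numerical = {val: .3e}  closed form = {closed_form: .3e}")
```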

4. Analysis for a perturbation to the ensemble

In this section, we suppose that the integral H is perturbed and consider the effect on an ensemble average. Related results have been developed independently in [3] with an emphasis on computable error estimates.

Following is the result of a perturbation analysis for a general distribution ζ(E). Note that dependence on the gradient of the perturbation η(Γ) is absent. For the canonical ensemble, $\zeta(E) = e^{-\beta E}$ and the effect of the perturbation simplifies to −β〈(A − 〈A〉)η〉.

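Proposition 2. If the integral H(Γ) is perturbed to become H(Γ) + η(Γ), the first order effect on 〈A〉 is

$$\langle A \rangle_{\mathrm p} - \langle A \rangle \approx \left\langle (A - \langle A \rangle)\,\frac{\zeta'(H)}{\zeta(H)}\,\eta \right\rangle,$$

where 〈·〉p denotes a perturbed ensemble average.

Proof. The result follows in a straightforward way from the expression

$$\langle A \rangle_{\mathrm p} = \frac{\int A\,\zeta(H + \eta)\,d\Gamma}{\int \zeta(H + \eta)\,d\Gamma},$$

where, for simplicity, we omit the argument Γ of functions.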
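The canonical-ensemble form of this result, −β〈(A − 〈A〉)η〉, can be checked by direct quadrature in a toy setting. The sketch below assumes one degree of freedom with H = x²/2 (the momentum integrates out and is omitted), the observable A = x², and a hypothetical perturbation η = εx⁴; for these choices the first-order prediction is −12ε when β = 1. All names are illustrative.

```python
import numpy as np

beta, eps = 1.0, 1e-4
x = np.linspace(-12.0, 12.0, 240_001)

H = 0.5 * x ** 2      # unperturbed energy (configurational part only)
eta = eps * x ** 4    # hypothetical small perturbation of the energy
A = x ** 2            # observable

def canonical_average(obs, energy):
    """Canonical average on the uniform grid; the grid spacing cancels in the ratio."""
    w = np.exp(-beta * energy)
    return float(np.sum(obs * w) / np.sum(w))

avg_A = canonical_average(A, H)
exact_change = canonical_average(A, H + eta) - avg_A
first_order = -beta * canonical_average((A - avg_A) * eta, H)

print(f"exact change = {exact_change:.6e}, first-order estimate = {first_order:.6e}")
```

The two numbers differ at second order in ε, and only values of η and A enter the estimate, not their derivatives.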

In the case of the microcanonical ensemble, ζ(E) does not have a sensible derivative, but integration by parts gives the alternative formula that follows. Here, the effect of a perturbation η depends not only on the value of η(Γ), but also its derivative in some arbitrary direction k(Γ).

Proposition 3. If the integral H(Γ) is perturbed to become H(Γ) + η(Γ), the first order effect on 〈A〉E is

| (7) |

where k(Γ) is an arbitrary vector field.

Proof.

and the result follows by integrating by parts.

The expression given by (7) is potentially quite complicated. The safe choice k = ∇H involves the Hessian of H. Another choice k(x, p) = [0T, pT]T leads to a much simpler expression, namely,

The integral does not blow up as p → 0 because the product dp1dp2 ⋯ dp3N compensates for pTM−1p in the denominator. (Consider the use of 3N-dimensional polar coordinates.)

The results above apply to the use of time averages to obtain microcanonical ensemble averages (for the given Hamiltonian or for an extended Hamiltonian) if the vector fields f and fp are both divergence-free and if Φt and are both ergodic.

5. Perturbation to dynamics

Suppose that the vector field f is perturbed to become fp. The goal is to express in terms of the difference g = fp − f, where is the t-flow for the vector field fp.

Lemma 4. The first order effect of a perturbation g to the vector field is

Proof. The difference satisfies

whence

to first order. The lemma follows from this together with

Proposition 5. The first order effect of a perturbation to the dynamics

satisfies

Proof. We have

Using (∇ A) ○ Φt · (∂ΓΦt) = ∂Γ(A ○ Φt) = ∇(A ○ Φt−s) ○ Φs · (∂ΓΦs), we get

It follows from (2) that Γ in the average can be replaced by Φ−s(Γ).

The effect of the perturbation depends on

Unfortunately, ∂ΓΦt−s is a matrix whose singular values approximate $e^{\lambda(t-s)}$ (for large t), where the λ are the Lyapunov exponents. It is difficult to argue that the effect of a perturbation is not large.
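The exponential growth of the tangent map is easiest to see in a discrete-time stand-in. The sketch below uses the Arnold cat map on the torus, which is not an MD system and is chosen only because its Jacobian is the same constant matrix at every point, so the Jacobian of the n-fold iterate is a matrix power. The function name `tangent_map` is hypothetical.

```python
import numpy as np

# Jacobian of the cat map (x, y) -> (2x + y, x + y) mod 1, identical at every point.
J_step = np.array([[2.0, 1.0], [1.0, 1.0]])

def tangent_map(n):
    """Jacobian of the n-fold iterate, a discrete analogue of the matrix d(Phi_t)/d(Gamma)."""
    return np.linalg.matrix_power(J_step, n)

# Largest Lyapunov exponent: log of the largest eigenvalue of J_step.
lyapunov = float(np.log((3.0 + np.sqrt(5.0)) / 2.0))

for n in (5, 10, 20):
    s_max = np.linalg.svd(tangent_map(n), compute_uv=False)[0]
    print(f"n = {n:2d}  log(s_max)/n = {np.log(s_max) / n:.6f}  lambda = {lyapunov:.6f}")
```

Because this Jacobian is symmetric, the growth rate matches the Lyapunov exponent for every n; for a genuine flow the agreement holds only asymptotically.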

In the special case of a Hamiltonian system with a Hamiltonian perturbation g = J∇η, one might ask whether the effect can be expressed in terms of just η rather than ∇η. This can be done by integration by parts if ζ(E) has a bounded first derivative, i.e., for ensembles other than the microcanonical one.

Proposition 6. Assuming also that f = J∇H and g = J∇η, the first order effect of a perturbation is

| (8) |

Proof. From Proposition 5,

Integrating by parts gives

We have

where the second equality is a consequence of Φ−s being symplectic. To complete the proof, we need the identity

which results from differentiating Φt ○ Φs = Φt+s with respect to s and setting s = 0. Using this gives

Of special concern in this result is the factor

which contains the exponentially growing matrix ∂ΓΦt. It is conceivable that the exponential growth is somehow mitigated by the dot products and integration.

5.1. The same perturbation for both dynamics and sampling

Consider now the case of a Hamiltonian system and a microcanonical ensemble in which the same perturbation η to H affects both the dynamics and the sampling, as would be the case if a time average were used for sampling. The effect of the perturbation on the dynamics is given formally by (8), and the effect on the sampling by

| (9) |

If these are combined as Δm(t) = Δmd(t) + Δms(t), there is no obvious simplification.

There is a simple case that can be analyzed exactly. Let and consider uniformly perturbed masses Mp = s²M, where s is slightly different from 1. Then it can be shown that , where Ds = diag(I, sI) and that , where Ap(Γ) = A(DsΓ) and Bp(Γ) = B(DsΓ). In particular, for a velocity autocorrelation function cp(t) = c(t/s), so the effect of the perturbation is to scale time.

For the case , it should be true that , since this is true for both the unperturbed and perturbed m(t). This provides a direct check on the correctness of the formulas.

Proposition 7. The effect of a perturbation Δm(t) = Δmd(t)+Δms(t) as given by (8) and (9) satisfies .

Proof. Substituting −t for t and then s − t for s in (8) gives

whence

The second equality uses the symplectic property of Φ−s as is done in the proof of Proposition 6. Hence,

6. Numerical integrators and shadow vector fields

There is abundant empirical evidence that numerical integrators can accurately calculate time averages and time correlation functions, e.g., see [9] for the accurate calculation of kinetic quantities over long times. It is of interest to explain why this is so and what properties numerical integrators must possess. The analysis here suggests that

phase-space volume preservation,

existence of a perturbed conserved energy, and

ergodicity

are sufficient for calculating time averages. More might be needed for accurate time correlation functions.

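Most numerical integrators of interest can be written Γn+1 = ΨΔt(Γn), where ΨΔt(Γ) is an approximation to ΦΔt(Γ). A prime example is the velocity Verlet method [26] for Hamiltonian systems with separable Hamiltonians:

$$\begin{aligned}
p_{n+1/2} &= p_n - \tfrac{1}{2}\Delta t\,\nabla U(x_n),\\
x_{n+1} &= x_n + \Delta t\,M^{-1} p_{n+1/2},\\
p_{n+1} &= p_{n+1/2} - \tfrac{1}{2}\Delta t\,\nabla U(x_{n+1}).
\end{aligned}$$

This is a symplectic method, meaning that (∂ΓΨΔt(Γ))TJ(∂ΓΨΔt(Γ)) = J.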
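A minimal sketch of velocity Verlet for a separable Hamiltonian follows, written in the usual kick-drift-kick form. The function signature, the diagonal `inv_mass` array, and the two-oscillator test problem are assumptions made for illustration; the code is not taken from the experiments reported here. It checks two properties that are cheap to test: near conservation of energy and time reversibility.

```python
import numpy as np

def velocity_verlet(x, p, grad_U, inv_mass, dt, n_steps):
    """Advance (x, p) by n_steps of velocity Verlet for H = p.M^{-1}p/2 + U(x).

    inv_mass holds the diagonal of M^{-1}; grad_U(x) returns the gradient of U.
    """
    x, p = np.array(x, dtype=float), np.array(p, dtype=float)   # work on copies
    g = grad_U(x)
    for _ in range(n_steps):
        p -= 0.5 * dt * g          # half kick with the old force
        x += dt * inv_mass * p     # drift
        g = grad_U(x)
        p -= 0.5 * dt * g          # half kick with the new force
    return x, p

def grad_U(x):
    """Hypothetical test potential U(x) = |x|**2 / 2 (two uncoupled oscillators)."""
    return x.copy()

inv_mass = np.ones(2)
x0, p0 = np.array([1.0, 0.0]), np.array([0.0, 0.5])
dt, n = 0.05, 2000

x1, p1 = velocity_verlet(x0, p0, grad_U, inv_mass, dt, n)
energy0 = 0.5 * p0 @ p0 + 0.5 * x0 @ x0
energy1 = 0.5 * p1 @ p1 + 0.5 * x1 @ x1

# Reversibility: flipping the momentum and integrating again returns to the start.
xb, pb = velocity_verlet(x1, -p1, grad_U, inv_mass, dt, n)
print("energy error:", energy1 - energy0, " return error:", np.max(np.abs(xb - x0)))
```

Only one force evaluation per step is needed because the gradient computed at the end of a step is reused at the start of the next.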

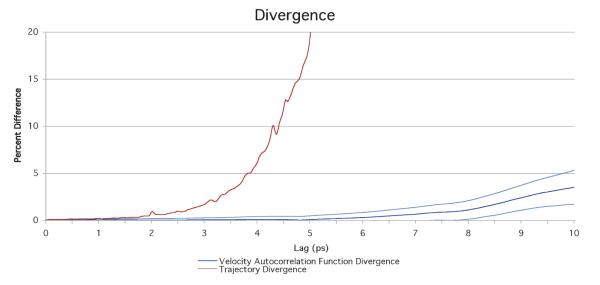

Figure 1 depicts the results of a numerical experiment that illustrates that time correlation functions can still be computed long after trajectories have lost all accuracy. The simulation is that of 864 argon atoms as described in section 2 with initial conditions chosen from the microcanonical ensemble for energy E that gives a temperature of 188.8 K. (Adjust E until , where β−1 is equal to Boltzmann’s constant times .) The experiment compares trajectories computed by the velocity Verlet method with step size 10 femtoseconds (fs) to those with step size 20 fs. The rapidly rising graph is the difference in the velocities for two trajectories using the different step sizes, , as a function of time 2nΔt, relative to this difference at infinite time. The more slowly rising graph is the difference between the two velocity autocorrelation functions (defined in section 3) relative to the current value of the autocorrelation function: |c2Δt(t)−cΔt(t)|/|cΔt(t)|. This graph has error bars computed from block averages that denote one standard deviation. A time average over 5.24288 nanoseconds (ns) is used. A second, quite different experiment was performed in which the atomic radius σ is increased by 0.5% and again by another 0.5%. The results are consistent with the assumed linear relationship between a perturbation and its effect on the time correlation function. However, these results are not so compelling for longer time lags, so the associated graphs are given in a separate note [24].

Fig. 1. Trajectory velocities for different step sizes diverge much faster than velocity correlation functions.

There is evidence that geometric integrators of some sort must be used. For example, it is observed [30] that the symplectic Euler method accurately calculates time correlation functions even for large step sizes, but that the same method with a projection on each step to exactly conserve energy produces inaccurate results for practical step sizes. Similar results are observed [31] in tests on numerical integrators for steady-state simulations. Poor results from energy projection methods had already been previously observed for MD [17, 7]. See [32] for recent additional positive results for calculating various kinetic quantities using velocity Verlet.

The modified equation approach [15] to error analysis provides some justification for MD: Formally, the numerical integrator , where the latter satisfies , with $f_{\mathrm p}(\Gamma) = f(\Gamma) + \Delta t^{q}\varphi_q(\Gamma) + \Delta t^{q+1}\varphi_{q+1}(\Gamma) + \cdots$. Here q is the order of accuracy of the integrator and φq(Γ), φq+1(Γ), … are vector fields. (Labeling this as a merely formal equality is supported by a proof in [5] of nonconvergence for the Euler method applied to (d/dt)y = y².) If f(Γ) = J∇H(Γ) for some Hamiltonian H(Γ), then fp(Γ) = J∇Hp(Γ) for some shadow Hamiltonian

$$H_{\mathrm p}(\Gamma) = H(\Gamma) + \Delta t^{q}\eta_q(\Gamma) + \Delta t^{q+1}\eta_{q+1}(\Gamma) + \cdots,$$

where ηq(Γ), ηq+1(Γ), … are scalar fields, if and only if ΨΔt is symplectic [1, 27]. Let f(k)(Γ) be the truncation of fp(Γ) just before the $\Delta t^{k}$ term. Then there exists k depending on Δt for which ΨΔt = Δt-flow of f(k) plus an exponentially small time-dependent term [1, 14, 21]. The duration of Hamiltonian dynamics is only , which is too short to really justify MD.

However, conservation of the shadow Hamiltonian endures for exponentially long time, in particular,

where H(k)(Γ) is the truncation of Hp(Γ) just before the $\Delta t^{k}$ term [1]. Conservation of the truncated shadow Hamiltonian constrains the actual energy of a second order integrator to stay within a range of for exponential time. It is thus inferred that the behavior of the actual energy consists of (i) fluctuations indicative of the discrepancy between energy and shadow energy, plus (ii) very slow secular drift.
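The distinction between energy and shadow energy can be made concrete in the one case where the shadow Hamiltonian is available in closed form. The sketch below assumes the linear oscillator H = p²/2 + ω²x²/2 integrated by velocity Verlet; for this special case the quadratic form p²/2 + ω²(1 − (ωΔt)²/4)x²/2 is conserved exactly by the discrete map when ωΔt < 2. For nonlinear MD potentials no such closed form is available and the shadow Hamiltonian is only a formal series, so this is an illustration, not a general recipe. The function name is hypothetical.

```python
import numpy as np

def verlet_energies(dt, n_steps, omega=1.0, x=1.0, p=0.0):
    """Run velocity Verlet on H = p**2/2 + omega**2 * x**2 / 2, recording two energies per step."""
    energy, modified = [], []
    for _ in range(n_steps):
        p -= 0.5 * dt * omega ** 2 * x
        x += dt * p
        p -= 0.5 * dt * omega ** 2 * x
        energy.append(0.5 * p ** 2 + 0.5 * omega ** 2 * x ** 2)
        # Quadratic form conserved exactly by this linear map (special to this example).
        modified.append(0.5 * p ** 2
                        + 0.5 * omega ** 2 * (1.0 - 0.25 * (omega * dt) ** 2) * x ** 2)
    return np.array(energy), np.array(modified)

for dt in (0.1, 0.2):
    e, m = verlet_energies(dt, 10_000)
    print(f"dt = {dt}: energy range = {e.max() - e.min():.3e}, "
          f"modified energy range = {m.max() - m.min():.3e}")
```

The energy fluctuates within a band that scales like Δt², as expected for a second order method, while the modified energy is constant up to roundoff.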

It is common with dynamics to monitor the energy for excessive drift. If the truncated shadow Hamiltonian were monitored instead, systematic fluctuations would be flattened, and excessive drift would be evident before wasting a large amount of computing time. Also, the shadow Hamiltonian can produce a posteriori estimates of the effect of integration errors for observables computed from time averages of extended Hamiltonian dynamics [2, 4]. However, as a practical tool, the truncated shadow Hamiltonian falls short: The formula for H(k) involves analytical derivatives of H and is expensive to compute. To solve this problem, [25] defines “interpolatory” shadow Hamiltonians

which are cheap and easy to evaluate. This construction applies to integrators based on splitting the Hamiltonian and is well defined even for Hamiltonians that are merely C1.

A subsequent article [11] implements the interpolatory shadow Hamiltonians up to 24th order. Associated experiments indicate that the shadow Hamiltonian is well conserved even if not all higher derivatives exist. Moreover, the fluctuations are exponentially small in the step size even for MD, where potential energy functions contain numerous singularities. Most surprising is the observation that as the order k increases, the magnitude of the fluctuations continues to decrease (to a positive limiting value). Hence, the expected divergence [15, p. 342] does not materialize for reasonable step sizes.

There are efficient nonsymplectic volume-preserving methods that conserve energy very well [12], but none are known that possess a perturbed energy that is conserved for exponentially long time. For example, the qth order symmetric linear multistep method with simple roots on the unit circle conserves energy for [15]. Another example, the simplified Takahashi–Imada/Rowlands method, introduced in [35, eq. (10.5)–(10.8)], conserves energy for [16].

Molecular simulations of the average length of butane versus step size, shown in [10, Fig. 2] and [23, Fig. 4], demonstrate that the dependence on step size is choppy though apparently quadratic. Presumably, this is a result of the dynamics being nonergodic, with the inaccessible portion of phase space varying as a function of Δt. Ergodicity has been proved for numerical integrations only under unrealistic assumptions: one proof [21] assumes uniformly hyperbolic dynamics and applies a shadowing lemma; another [29] assumes a step size small enough that trajectories are resolved. A weakened property, termed δ-ergodicity, is discussed in [29]. Recently, a weakened shadowing concept has been proposed [32] as an explanation for the success of molecular dynamics.

Acknowledgments

The author is grateful to Alicia Klinvex for performing the numerical experiments and to Wayne Hayes, Paul Tupper, and anonymous referees for suggestions that improved the presentation.

Footnotes

This work was supported by grant R01GM083605 from the National Institute of General Medical Sciences.

REFERENCES

- [1] Benettin G, Giorgilli A. On the Hamiltonian interpolation of near to the identity symplectic mappings with application to symplectic integration algorithms. J. Statist. Phys. 1994;74:1117–1143.

- [2] Bond S. Numerical Methods for Extended Hamiltonian Systems with Applications in Statistical Mechanics. Ph.D. thesis. Dept. of Mathematics, University of Kansas; Lawrence, KS: 2000.

- [3] Bond SD. Backward Error Analysis for Microcanonical Averages. 2008. Manuscript.

- [4] Bond SD, Leimkuhler BJ. Molecular dynamics and the accuracy of numerically computed averages. Acta Numer. 2007;16:1–65.

- [5] Borwein JM, Corless RM. Emerging tools for experimental mathematics. Amer. Math. Monthly. 1999;106:889–909.

- [6] Chandler D. Statistical mechanics of isomerization dynamics in liquids and the transition state approximation. J. Chem. Phys. 1978;68:2959–2970.

- [7] Chiu SW, Clark M, Subramaniam S, Jakobsson E. Collective motion artifacts arising in long-duration molecular dynamics simulations. J. Comput. Chem. 2000;21:121–131.

- [8] Chodera JD, Singhal N, Pande VS, Dill KA, Swope WC. Automatic discovery of metastable states for the construction of Markov models of macromolecular conformational dynamics. J. Chem. Phys. 2007;126:155101 (17 pp.). doi: 10.1063/1.2714538.

- [9] Crisanti A, Falcioni M, Vulpiani A. On the effects of an uncertainty on the evolution law in dynamical systems. Phys. A. 1989;160:482–502.

- [10] Deuflhard P, Dellnitz M, Junge O, Schütte Ch. Computation of essential molecular dynamics by subdivision techniques I: Basic concepts. In: Deuflhard P, Hermans J, Leimkuhler B, Mark A, Reich S, Skeel RD, editors. Computational Molecular Dynamics: Challenges, Methods, Ideas, Lect. Notes Comput. Sci. Eng. 4. Springer-Verlag; Berlin: 1998. pp. 91–108.

- [11] Engle RD, Skeel RD, Drees M. Monitoring energy drift with shadow Hamiltonians. J. Comput. Phys. 2005;206:432–452.

- [12] Faou E, Hairer E, Pham T-L. Energy conservation with non-symplectic methods: Examples and counter-examples. BIT. 2004;44:699–709.

- [13] Frenkel D, Smit B. Understanding Molecular Simulation: From Algorithms to Applications. 2nd ed. Academic Press; New York: 2002.

- [14] Hairer E, Lubich C. The life-span of backward error analysis for numerical integrators. Numer. Math. 1997;76:441–462.

- [15] Hairer E, Lubich C, Wanner G. Geometric Numerical Integration. Springer Ser. Comput. Math. Vol. 31. Springer-Verlag; Berlin: 2006.

- [16] Hairer E, McLachlan R, Skeel RD. On energy conservation of the modified Takahashi-Imada method. Math. Modelling Numer. Anal. To appear. doi: 10.1051/m2an/2009019.

- [17] Harvey SC, Tan RKZ, Cheatham TE. The flying ice cube: velocity rescaling in molecular dynamics leads to violation of energy equipartition. J. Comput. Chem. 1998;19:726–740.

- [18] Hayes W. Shadowing high-dimensional Hamiltonian systems: The gravitational n-body problem. Phys. Rev. Lett. 2003;90:054104 (4 pp.). doi: 10.1103/PhysRevLett.90.054104.

- [19] Leach AR. Molecular Modelling: Principles and Applications. 2nd ed. Prentice Hall; Englewood Cliffs, NJ: 2001.

- [20] Leimkuhler B, Reich S. Simulating Hamiltonian Dynamics. Cambridge University Press; London: 2004.

- [21] Reich S. Backward error analysis for numerical integrators. SIAM J. Numer. Anal. 1999;36:1549–1570.

- [22] Sanz-Serna JM. Numerical ordinary differential equations vs. dynamical systems. In: Broomhead DS, Iserles A, editors. The Dynamics of Numerics and the Numerics of Dynamics. Clarendon Press; Oxford: 1992. pp. 81–106.

- [23] Schlick T. Some failures and successes of long-timestep approaches to biomolecular simulations. In: Deuflhard P, Hermans J, Leimkuhler B, Mark A, Reich S, Skeel RD, editors. Algorithms for Macromolecular Modelling, Lect. Notes Comput. Sci. Eng. 4. Springer-Verlag; Berlin: 1998. pp. 221–250.

- [24] Skeel RD. Supplement to “What Makes Molecular Dynamics Work?”. http://bionum.cs.purdue.edu/08Skee.pdf. doi: 10.1137/070683660.

- [25] Skeel RD, Hardy DJ. Practical construction of modified Hamiltonians. SIAM J. Sci. Comput. 2001;23:1172–1188.

- [26] Swope WC, Andersen HC, Berens PH, Wilson KR. A computer simulation method for the calculation of equilibrium constants for the formation of physical clusters of molecules: Application to small water clusters. J. Chem. Phys. 1982;76:637–649.

- [27] Tang Y-F. Formal energy of a symplectic scheme for Hamiltonian systems and its applications (I). Comput. Math. Appl. 1994;27:31–39.

- [28] Tuckerman ME, Liu Y, Ciccotti G, Martyna GJ. Non-Hamiltonian molecular dynamics: Generalizing Hamiltonian phase space principles to non-Hamiltonian systems. J. Chem. Phys. 2001;115:1678–1702.

- [29] Tupper PF. Ergodicity and the numerical simulation of Hamiltonian systems. SIAM J. Appl. Dyn. Syst. 2005;4:563–587.

- [30] Tupper PF. A test problem for molecular dynamics integrators. IMA J. Numer. Anal. 2005;25:286–309.

- [31] Tupper PF. Computing statistics for Hamiltonian systems: A case study. J. Comput. Appl. Math. 2007;205:826–834.

- [32] Tupper PF. The relation between approximation in distribution and shadowing in molecular dynamics. SIAM J. Appl. Dyn. Syst. Submitted.

- [33] Voter AF, Doll JD. Dynamical corrections to transition state theory for multistate systems: Surface self-diffusion in the rare-event regime. J. Chem. Phys. 1985;82:80–92.

- [34] Wang F, Landau DP. Determining the density of states for classical statistical models: A random walk algorithm to produce a flat histogram. Phys. Rev. E. 2001;64:056101 (16 pp.). doi: 10.1103/PhysRevE.64.056101.

- [35] Wisdom J, Holman M, Touma J. Symplectic correctors. In: Marsden JE, Patrick GW, Shadwick WF, editors. Integration Algorithms and Classical Mechanics, Fields Inst. Commun. 10. American Mathematical Society; Providence, RI: 1996. pp. 217–244.