Abstract

Question:

Is there a means of assessing research impact beyond citation analysis?

Setting:

The case study took place at the Washington University School of Medicine Becker Medical Library.

Method:

This case study analyzed the research study process to identify indicators beyond citation count that demonstrate research impact.

Main Results:

The authors discovered a number of indicators that can be documented for assessment of research impact, as well as resources to locate evidence of impact. As a result of the project, the authors developed a model for assessment of research impact, the Becker Medical Library Model for Assessment of Research Impact.

Conclusion:

Traditional citation analysis alone is not a sufficient tool for assessing the impact of research findings, and it is not predictive of subsequent clinical applications resulting in meaningful health outcomes. The Becker Model can be used by both researchers and librarians to document research impact to supplement citation analysis.

INTRODUCTION

A traditional method of assessing research impact, citation analysis is performed by examining an individual publication and assessing how often it has been cited, if ever, by subsequent publications [1]. It is a tool for gauging the extent of a publication's influence in the literature and for tracking the advancement of knowledge with the inherent assumption that significant publications will demonstrate a high citation count [2–4]. While citation analysis is subject to some flaws, such as self-citing and reciprocal citing by colleagues [5,6], it is accepted as a standard tool for assessing the merits of a publication.

In May 2007, a principal investigator from the Ocular Hypertension Treatment Study (OHTS) [7] requested a citation analysis of OHTS articles after viewing a poster by Sieving, “The Impact of NEI-Funded Multi-Center Trials: Bibliometric Indications of Dissemination, Acceptance and Implementation of Trial Findings” [8], which had been presented at the 2007 meeting of the Association for Research in Vision and Ophthalmology. The authors performed a citation analysis of twenty-six OHTS peer-reviewed articles using the SCOPUS, Web of Science, and Essential Science Indicators databases.

Of the twenty-six journal articles, several demonstrated high rates of citation. That is, they were often cited by subsequent publications. In some instances, the citations exceeded baseline citation rates as noted on Essential Science Indicators, a database that assesses intellectual impact by analyzing the citation rate for a publication against other publications in a particular area of research or multiple areas of research.
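The comparison described above, a publication's citation count set against a baseline rate for its area of research, can be sketched in a few lines of Python. This is only an illustrative sketch: the class and function names are hypothetical, the baseline is assumed to be a single number supplied by the caller, and the figures are invented for the example rather than taken from the OHTS analysis.

```python
from dataclasses import dataclass


@dataclass
class ArticleCitations:
    """Citation count for one article (all values below are hypothetical)."""
    title: str
    times_cited: int


def exceeds_baseline(article: ArticleCitations, baseline_rate: float) -> bool:
    """Return True when the article is cited more often than the baseline rate."""
    return article.times_cited > baseline_rate


def highly_cited(articles, baseline_rate):
    """List the titles of articles whose citation count exceeds the baseline."""
    return [a.title for a in articles if exceeds_baseline(a, baseline_rate)]


# Invented sample data, not OHTS results.
sample = [
    ArticleCitations("Article A", 120),
    ArticleCitations("Article B", 8),
    ArticleCitations("Article C", 35),
]
flagged = highly_cited(sample, baseline_rate=30.0)
print(flagged)  # ['Article A', 'Article C']
```

A real analysis would look up a separate baseline for each article's research area rather than apply one value to every article.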

The high citation rates for the selected journal articles sparked the authors' interest in further investigation to determine why these articles were cited so often by other peer-reviewed journal articles. Was this indicative of significant findings that might have resulted in clinical outcomes? If so, what were those outcomes and how could they be revealed? What other evidence of research impact could the authors discover by going beyond citation analysis?

A cursory search of the Google and Yahoo search engines using key terms related to OHTS (i.e., open angle glaucoma, pachymetry, central corneal thickness, ocular hypertension) and the title of the study (i.e., OHTS, Ocular Hypertension Treatment Study) yielded findings such as practice guidelines, continuing education guidelines, curriculum guidelines, insurance coverage documents, and quality measures guidelines that noted OHTS as supporting documentation. Further analysis using other resources, such as government websites, revealed additional evidence of research impact attributed to OHTS findings. These materials are not usually indexed by databases, nor are they consistently noted as cited-by publications. Given the depth of evidence of research impact that was not revealed from citation analysis alone, the authors decided to perform a more systematic and comprehensive evaluation.

METHODOLOGY

The project methodology beyond the initial citation analysis was neither a tidy nor a linear process. The authors consulted with research investigators, clinicians, and other librarians to gain insight about the research process in both bench and clinical studies to identify tangible outcome indicators that can be documented and quantified for assessment of research impact. Indicators are defined as specific, concrete examples that demonstrate research impact as a result of a research finding or output. Examples of tangible indicators include new or ancillary research studies, research findings used as supporting documentation for implementation of clinical guidelines, or biological material developed.

After completing the initial analysis of the research process, the authors developed a preliminary framework using a logic model approach focused on the research process and incorporating specific indicators as criteria for assessment of research impact. Creation of the preliminary framework was based on the logic model as outlined by the W. K. Kellogg Foundation, which emphasizes inputs, activities, outputs, outcomes, and impact measures as a means of evaluating a program [9]. The versatility of the Kellogg logic model allows for modification to meet the needs of a particular project or institution or to try a new approach to determine effectiveness for evaluation purposes. With that in mind, the authors adapted parts of the Kellogg logic model to determine whether assessment of research impact could be evaluated, documented, and/or quantified.

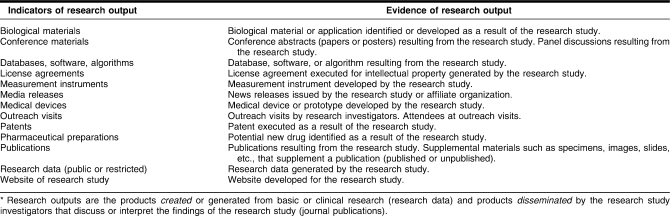

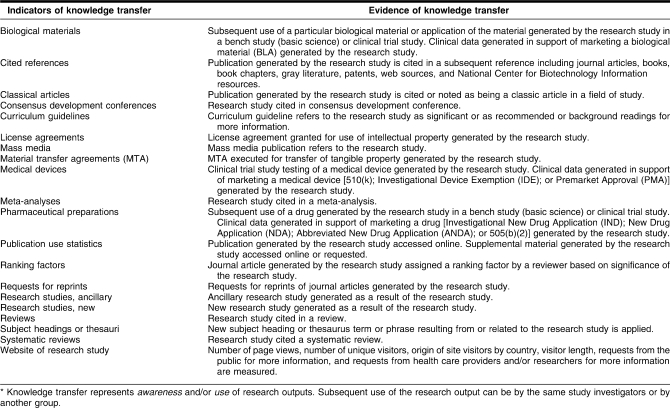

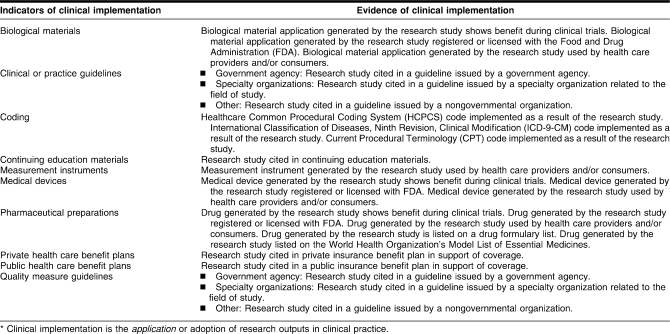

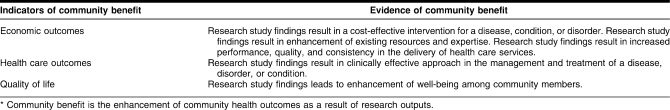

A literature review was conducted to locate a definition of research impact that could be used for the preliminary framework. Several framework examples were discovered [10–19], many of which had been developed outside the United States. The Flinders University Primary Health Care Research and Information Service provided a definition of research impact that was adapted for this project: “The term research impact describes the effects and outcomes, in terms of value and benefit, associated with the use of knowledge produced through research” [20]. Four stages of the research process were identified for the preliminary model: research output, knowledge transfer, clinical implementation, and community benefit. Indicators that demonstrate research impact were grouped at the appropriate stages along with specific criteria that serve as evidence of research impact for each indicator (Tables 1–4).

Table 1. Indicators and evidence of research output*

Table 2. Indicators and evidence of knowledge transfer*

Table 3. Indicators and evidence of clinical implementation*

Table 4. Indicators and evidence of community benefit*

A second analysis of OHTS findings was made to locate evidence of research impact using the preliminary framework. This phase of the project focused primarily on research output, knowledge transfer, and clinical implementation. Assessment of community benefit requires a different methodology and will be further addressed in a future project.

Research output

Research outputs are defined as products generated by a research study and disseminated by research investigators who discuss or interpret the findings of the research study. One form of research output utilized by OHTS is a public website. The OHTS website [7] contains a number of research outputs such as OHTS publications, a newsletter, supplemental materials, conference materials, and a risk calculator. These outputs serve to inform other research investigators, OHTS clinical trial participants, grant funding agencies, and the general public of research efforts and findings generated by OHTS. The OHTS website serves as an ideal means of not only disseminating the findings of a research study, but also documenting the output of a research study.

Knowledge transfer

Knowledge transfer is a form of research impact that can be documented and quantified but is not readily discoverable via citation analysis. Knowledge transfer represents awareness and/or use of research outputs created or disseminated by a research study. The research output can subsequently be used by the same study investigators who created or disseminated it or by another individual or group. Two key indicators of knowledge transfer that were identified are ancillary and new research studies. Ancillary studies are indicated by instances where the findings of a research study allow for expansion of research in related areas. Just as there are instances where knowledge gained from a research study results in future research studies that expand on the research findings, there are also new research studies that focus on previously unexplored areas identified by the initial research study. Two new and five ancillary research studies were identified by the OHTS investigators, based on their anecdotal knowledge and contact with other investigators in the field. While discovery of ancillary and new research studies relies heavily on anecdotal knowledge of research investigators, identification of these studies can be a powerful indicator of knowledge transfer resulting from a research study.

Clinical implementation

Clinical implementation is the application or adoption of research outputs in clinical practice. Research findings can help effect change in the understanding of a disease, disorder, or condition that results in effective clinical outcomes. One example of a clinical implementation indicator is medical coding. Current Procedural Terminology (CPT) codes are published by the American Medical Association and represent uniform terminology for describing medical, surgical, and diagnostic services. OHTS findings were used as supporting documentation for creating new CPT I and CPT III codes for pachymetry (measurement of the cornea). Clinical and practice guidelines are also examples of clinical implementation indicators. Most guidelines include documentation to support a particular recommendation, which can be used to document the influence of a study on a guideline. OHTS findings were noted as supporting documentation for a number of clinical guidelines related to glaucoma.

Trade publications (non-peer-reviewed) for ophthalmology and optometry were especially helpful for locating evidence of clinical applications resulting from OHTS findings. Many trade publications have experts in the field who summarize bench and clinical study findings for clinicians and health care providers and who provide guidance on new practice guidelines, new reimbursement procedures, or medical coding changes along with commentary. A number of articles in trade publications written by expert specialists provided an analysis of the OHTS findings and the ways the findings translated into clinical practice, resulting in changes in treatment and practice. The new CPT I and CPT III codes resulting from the OHTS findings were discovered using trade publications, which are not typically indexed in databases that provide citation analysis tools. The benefit of trade publications is not exclusive to OHTS; there are many other examples of trade publications that follow the same practice.

RESULTS

Given the depth of evidence of research impact for OHTS that was revealed using the preliminary framework, the authors refined the framework into a standardized model as a tool for assessment of research impact: the Becker Medical Library Model for Assessment of Research Impact. The Becker Model emphasizes analyzing outcomes of research impact that demonstrate benefit based on specific indicators. Outcomes that demonstrate benefit include contribution to the knowledge base; change in understanding of a disease, disorder, or condition; change in policy; change in practice; and/or change in community health. These outcomes have been categorized into stages. For each stage, a series of indicators was identified as well as specific criteria that serve as evidence of research impact for each indicator. This preliminary list of indicators is by no means exhaustive and will be expanded in the future. Each indicator has at least one specific criterion that demonstrates evidence of research impact; some indicators include multiple examples of impact.
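The stage, indicator, and evidence structure described above can be represented as a small data structure, which may help when documenting impact for a study. The following Python sketch is an assumption-laden illustration, not the Becker Model's own reporting template: the `Stage`, `Indicator`, and `evidence_counts` names are hypothetical, and the sample entries simply paraphrase OHTS examples mentioned in this article.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The four stages of the research process named in the article."""
    RESEARCH_OUTPUT = "research output"
    KNOWLEDGE_TRANSFER = "knowledge transfer"
    CLINICAL_IMPLEMENTATION = "clinical implementation"
    COMMUNITY_BENEFIT = "community benefit"


@dataclass
class Indicator:
    """One indicator plus the evidence items documented for it (hypothetical layout)."""
    stage: Stage
    name: str
    evidence: list = field(default_factory=list)


def evidence_counts(indicators):
    """Count documented evidence items per stage, including stages with none."""
    counts = {stage.value: 0 for stage in Stage}
    for indicator in indicators:
        counts[indicator.stage.value] += len(indicator.evidence)
    return counts


# Sample entries paraphrased from the OHTS examples in this article.
indicators = [
    Indicator(Stage.RESEARCH_OUTPUT, "public website",
              ["OHTS website with publications and a risk calculator"]),
    Indicator(Stage.KNOWLEDGE_TRANSFER, "ancillary research studies",
              ["ancillary studies identified by OHTS investigators"]),
    Indicator(Stage.CLINICAL_IMPLEMENTATION, "medical coding",
              ["CPT I code for pachymetry", "CPT III code for pachymetry"]),
]

summary = evidence_counts(indicators)
for stage_name, count in summary.items():
    print(f"{stage_name}: {count}")
```

Keeping every stage in the summary, even with a count of zero, makes gaps such as the not-yet-assessed community benefit stage visible in a report.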

Refining the preliminary framework into the Becker Model involved a reasonable amount of duplicated research and revision. Extensive review was undertaken to determine the most effective method of identifying a correlation between research output and evidence of some form of research impact. Search queries were repeated and revised to determine which was most effective in a given database. Databases and other resources were compared to determine which provided the most relevant results.

The Becker Model was subsequently published on a project website in March 2009 with no restrictions on access [21]. The website includes both guidance for quantifying and documenting research impact and resources for locating evidence of research impact. Specific databases and resources for each indicator are identified, and search tips are provided. Guidance includes tutorials from database vendors as well as those created by the authors using Camtasia software to provide concrete examples of how best to search a given database or resource.

A section of the website, Enhancing Your Impact, includes a series of strategies to help researchers enhance the visibility and awareness of their research output. These strategies provide tips and tools on how research investigators can prepare for publication, enhance the dissemination of their research outputs, and keep track of their research with the goal of enhancing their research impact. The website includes a reporting template based on the Becker Model that can be used for documenting and reporting research impact and a sample of a completed report, as used for OHTS. A comprehensive list of references related to citation analysis and assessment of research impact and a list of other frameworks and models for assessment of research are also available on the website.

DISCUSSION

Expanding the project beyond the initial citation analysis was a more exhaustive endeavor than anticipated. Not all indicators of research impact are readily tangible, nor can they be neatly quantified. A primary difficulty with the project was the lack of consensus or guidance as to indicators that represent evidence of research impact. Another challenge was establishing a clear pathway of diffusion of research output into knowledge transfer, clinical implementation, or community benefit outcomes as a result of a research study. The latter was due in part to the difficulty of establishing a direct correlation from a research finding to a specific indicator. In-depth review was required in some instances to locate a correlation, and often multiple research studies were cited as supporting documentation.

For some indicators, supporting documentation was not publicly available. In these instances, contact with policy makers or other officials was required to obtain confirmation that OHTS findings were used as supporting documentation. For example, supporting documentation for implementing CPT codes is not publicly available, even though each proposed CPT code must be supported by peer-reviewed literature. Contact with policy makers was required to confirm that OHTS findings were used as justification for implementing CPT I and CPT III codes. While awareness of implementation of new CPT codes was discovered via trade publications, confirmation of such was required via primary supporting documentation.

In addition, it is important to consider issues related to the establishment of an appropriate timeframe for using the Becker Model: When can a research investigator expect to be able to locate and document impact from a research study? Should this be done during or after completion of a research study? One can expect an assessment of research impact made ten years after completion of a study to yield more evidence of research impact than an assessment made while a study is in progress. However, the Becker Model can be useful in helping research investigators be aware of the importance of tracking and documenting research output, whether the tracking is of a single author, multiple authors, a bench or clinical study, a department, or an institution. While a single publication may not demonstrate as broad a spectrum of research impact as evidenced by a large clinical study, the Becker Model can serve, nevertheless, as a useful tool to supplement citation analysis.

Even with these limitations, the Becker Model may provide a useful tool for research investigators not only for documenting and quantifying research impact, but also for other uses as well (Table 5), including noting potential areas of anticipated research impact for funding agencies. Research investigators are increasingly under pressure to demonstrate research impact from biomedical research. A National Institutes of Health (NIH) application [22] states that renewal applications must provide information documenting the impact of the clinical research from the original application. NIH also recently issued a notice [23] on the peer-review process that lists criteria for reviewers. Reviewers will provide an impact score for applications based on selected criteria that demonstrate overall impact, such as:

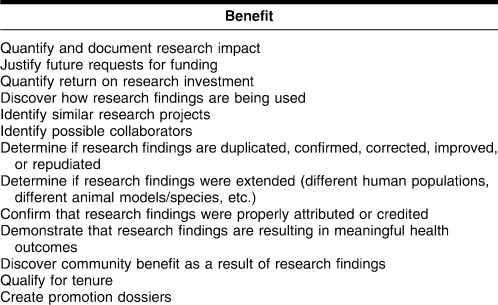

Table 5.

Benefits of assessment of research impact

How will successful completion of the aims change the concepts, methods, technologies, treatments, services, or preventative interventions that drive this field?

Does the application challenge and seek to shift current research or clinical practice paradigms by utilizing novel theoretical concepts, approaches or methodologies, instrumentation, or interventions?

If the aims of the project are achieved, how will scientific knowledge, technical capability, and/or clinical practice be improved?

Initial response to and feedback on the Becker Model has been positive. The authors are currently testing the Becker Model on two projects: one involving a single publication, the other involving three publications. To date, two indicators not included in the original Becker Model have been identified: legislation enacted and passed as a result of research findings and research findings used as supporting documentation in recommended guidelines for construction of health care facilities. The authors will add these and any other identified indicators to the Becker Model. In addition, the authors are collaborating with the Washington University Institute of Clinical and Translational Sciences to use parts of the model to assist with reporting to NIH for Clinical and Translational Science Awards. Librarians from a number of institutions have been quite receptive to the model and indicate that the model provides a wonderful opportunity for librarians to form new partnerships and provide valuable support at their institutions.

CONCLUSION

Citation analysis did not sufficiently reveal the full impact of OHTS findings and resulting synthesis into clinical practice and benefit to the community. As a result of expanding the project, the authors discovered that there are a number of resources available to track research diffusion above and beyond citation analysis. Reviewing the research process and analyzing the indicators of research impact using specialized databases, coupled with drawing on the expertise of librarians and a bioinformaticist, allowed the authors to locate quantifiable evidence of research impact and provide the OHTS investigators with a more comprehensive overview of research impact of their study findings.

Despite this exhaustive process, the authors felt that it was prudent to develop a model for methodology beyond citation counts to demonstrate a more robust and comprehensive evaluation of research impact. Citation analysis alone does not reveal whether research findings result in new diagnostic applications, a new standard of care, changes in health care policy, or improvement in public health. In short, citation analysis does not provide a full narrative of meaningful health outcomes. However, the Becker Model does not exclude the value of citation analysis but rather represents a supplemental tool that documents and quantifies research impact, thereby demonstrating a more meaningful measurement of health research outcomes. After all, citation analysis revealed the initial list of highly cited OHTS articles that served as markers prompting the need for further investigation.

Another reason for developing the Becker Model is to provide specific guidance regarding databases and resources that investigators, support staff, and librarians can use to locate evidence of research impact. The Becker Model presents both a practical do-it-yourself approach and an opportunity for medical librarians to collaborate with research investigators and institutes to facilitate the documentation and quantification of the impact of research at their institutions. Such joint endeavors are possible in light of the expertise of medical librarians in locating information coupled with the databases and resources to which medical libraries have access.

The authors actively seek feedback on ways to improve or expand the Becker Model and examples of additional indicators that can be used to document and quantify evidence of biomedical research impact or other resources that may be useful for locating evidence of biomedical research impact. Ongoing work and future plans for the Becker Model include testing the model on additional research projects, expanding the section on community benefits, and locating additional means of quantifying research output related to economic indicators.

Acknowledgments

The authors gratefully acknowledge Elizabeth Kelly, assessment and evaluation liaison for the National Network of Libraries of Medicine, MidContinental Region, and a specialist in logic and evaluation models, for her assistance in the creation of a logic model. The authors also acknowledge Robert Engeszer and Paul Schoening for their assistance and review of the manuscript and Mae Gordon for her initial question that inspired this project.

Contributor Information

Cathy C. Sarli, sarlic@wustl.edu, Scholarly Communications Specialist, Bernard Becker Medical Library, Washington University School of Medicine, 660 South Euclid Campus Box 8132, St. Louis, MO 63110.

Ellen K. Dubinsky, ellen.dubinsky@bridgew.edu, Digital Services Librarian, Clement C. Maxwell Library, Bridgewater State College, 10 Shaw Road, Bridgewater, MA 02325.

Kristi L. Holmes, holmeskr@wustl.edu, Bioinformaticist, Bernard Becker Medical Library, Washington University School of Medicine, 660 South Euclid Campus Box 8132, St. Louis, MO 63110.

REFERENCES

1. Nicolaisen J. Citation analysis. Annu Rev Inf Sci Technol. 2007;41(1):609–41.

2. Kostoff R.N. The use and misuse of citation analysis in research evaluation. Scientometrics. 1998 Sep;43(1):27–43.

3. Lawani S.M. Citation analysis and the quality of scientific productivity. BioScience. 1977 Jan;27(1):26–31.

4. Wade N. Citation analysis: a new tool for science administrators. Science. 1975 May 2;188(4187):429–32. doi: 10.1126/science.188.4187.429.

5. MacRoberts M.H, MacRoberts B.R. Problems of citation analysis. Scientometrics. 1996 Jul;36(3):435–44.

6. Phelan T.J. A compendium of issues for citation analysis. Scientometrics. 1999 May;45(1):117–36.

7. Washington University School of Medicine, Department of Ophthalmology and Visual Sciences, Vision Research Coordinating Center. Ocular hypertension treatment study [Internet]. St. Louis, MO: The School; 2009 [cited 29 May 2009]. 〈https://vrcc.wustl.edu〉.

8. Sieving P.C. The impact of NEI-funded multi-center trials: bibliometric indications of dissemination, acceptance and implementation of trial findings. Invest Ophthalmol Vis Sci [Internet]. 2007;48 E-abstract 2389 [cited 29 May 2009]. 〈http://abstracts.iovs.org/cgi/content/abstract/48/5/2389?maxtoshow=&HITS=10&hits=10&RESULTFORMAT=1&author1=sieving&andorexacttitle=and&andorexacttitleabs=and&andorexactfulltext=and&searchid=1&FIRSTINDEX=0&sortspec=relevance&fdate=2/1/2000&resourcetype=HWCI〉.

9. W. K. Kellogg Foundation. Logic model development guide [Internet]. Battle Creek, MI: The Foundation; 2004 [cited 29 May 2009]. 〈http://www.wkkf.org/Pubs/Tools/Evaluation/Pub3669.pdf〉.

10. Lavis J, Ross S, McLeod C, Gildiner A. Measuring the impact of health research. J Health Serv Res Policy. 2003 Jul;8(3):165–70. doi: 10.1258/135581903322029520.

11. Australian Research Council. Excellence in research for Australia (ERA) initiative [Internet]. Canberra, Australia: The Council; 2008 [cited 29 May 2009]. 〈http://www.arc.gov.au/pdf/ERA_ConsultationPaper.pdf〉.

12. Canadian Institutes of Health Research. Developing a CIHR framework for measuring the impact of health research: synthesis report of meetings, February 23, 24, and May 18, 2005 [Internet]. Ottawa, ON, Canada: The Institutes; 2005 [cited 29 May 2009]. 〈http://www.cihr-irsc.gc.ca/e/documents/meeting_synthesis_e.pdf〉.

13. Weiss A.P. Measuring the impact of medical research: moving from outputs to outcomes. Am J Psychiatry. 2007 Feb;164(2):206–14. doi: 10.1176/ajp.2007.164.2.206.

14. Trochim W.M, Marcus S.E, Mâsse L.C, Moser R.P, Weld P.C. The evaluation of large research initiatives: a participatory integrative mixed-methods approach. Am J Eval. 2008;29(1):8–28.

15. Hanney S.R, Grant J, Wooding S, Buxton M.J. Proposed methods for reviewing the outcomes of research: the impact of funding by the UK's Arthritis Research Campaign. Health Res Policy Syst. 2004 Jul 23;2(1):4. doi: 10.1186/1478-4505-2-4.

16. RAND Europe. Measuring the benefits from research [Internet]. Cambridge, UK: RAND Europe; 2006 [cited 29 May 2009]. 〈http://www.rand.org/pubs/research_briefs/2007/RAND_RB9202.pdf〉.

17. Tertiary Education Commission. Performance based research fund (PBRF) [Internet]. Wellington, New Zealand: The Commission; 2008 [cited 29 May 2009]. 〈http://www.tec.govt.nz/templates/standard.aspx?id=588〉.

18. Kuruvilla S, Mays N, Pleasant A, Walt G. Describing the impact of health research: a research impact framework. BMC Health Serv Res [Internet]. 2006 Oct 18;6:134. doi: 10.1186/1472-6963-6-134. [cited 29 May 2009]. 〈http://www.biomedcentral.com/1472-6963/6/134/〉.

19. Kalucy L, McIntyre E, Jackson-Bowers E. Primary health care research impact project: final report stage 1 [Internet]. Adelaide, Australia: Flinders University; 2007 [cited 29 May 2009]. 〈www.phcris.org.au/phplib/filedownload.php?file=/elib/lib/downloaded_files/publications/pdfs/phcris_pub_3338.pdf〉.

20. Flinders University, Adelaide Department of General Practice, Primary Health Care Research and Information Service. Focus on understanding and measuring research impact [Internet]. Adelaide, Australia: The University; 2005 [cited 29 May 2009]. 〈http://www.phcris.org.au/phplib/filedownload.php?file=/elib/lib/downloaded_files/publications/pdfs/phcris_pub_3236.pdf〉.

21. Washington University School of Medicine, Bernard Becker Medical Library. Becker Medical Library model for assessment of research impact [Internet]. St. Louis, MO: The School; 2009 [cited 29 May 2009]. 〈http://www.becker.wustl.edu/impact/assessment/〉.

22. National Institutes of Health. Rare Diseases Clinical Research Consortia (RDCRC) for the Rare Diseases Clinical Research Network (U54) [Internet]. Bethesda, MD: The Institutes; 2009 [cited 29 May 2009]. 〈http://www.grants.nih.gov/grants/guide/rfa-files/RFA-OD-08-001.html〉.

23. National Institutes of Health. Enhancing peer review: the NIH announces enhanced review criteria for evaluation of research applications received for potential FY2010 funding [Internet]. Bethesda, MD: The Institutes; 2009 [cited 29 May 2009]. 〈http://www.grants.nih.gov/grants/guide/notice-files/NOT-OD-09-025.html〉.