Abstract

The goals of our study were to determine the predictive value and usability of an audience response system (ARS) as a knowledge assessment tool in an undergraduate medical curriculum. Over a three-year period (2006–2008), data were collected from first-year didactic blocks in Genetics/Histology and Anatomy/Radiology (n = 42–50 per class). During each block, students answered clinically oriented multiple-choice questions using the ARS. Students’ performances were recorded, and cumulative ARS scores were compared with final examination performances. Correlation coefficients between these variables were calculated to assess the existence and direction of an association between ARS and final examination scores. Where associations existed, univariate models were constructed using ARS as a predictor of final examination score. Student and faculty perceptions of ARS difficulty, usefulness, effect on performance, and preferred use were evaluated using a questionnaire. There was a statistically significant positive correlation between ARS and final examination scores in all didactic blocks, and predictive univariate models were constructed for each relationship (all P < 0.0001). Students and faculty agreed that ARS was easy to use and a reliable tool for providing real-time feedback that improved their performance and participation. In conclusion, we found ARS to be an effective assessment tool that benefits both faculty and students in a curriculum focused on interaction and self-directed learning.

Keywords: audience response system, ARS, innovations, technology, medical curriculum, interactive learning, gross anatomy, histology, formative feedback, medical students

Introduction

In the past, most medical school curricula were based on the traditional model of teaching, in which educational activities were reduced to a transfer of information (Mazur, 2009). Lectures represented the major, and sometimes the only, venue for transferring information from content expert to student. Students often relied on memorization and became overly focused on a short-term objective: “What do I have to know to pass the exam?” In many instances, students failed to connect newly acquired material to preexisting knowledge and to apply the principles needed for problem solving and understanding concepts (Dufresne et al., 2000; Miller et al., 2002).

Creating an interactive and self-directed learning environment has been a critical part of enhancing student performance at Mayo Medical School (Jelsing et al., 2007). The audience response system (ARS) technology has played an important role in promoting individual students’ responsibility for their own learning and allowing for practical application of learned material (Zielinska and Pawlina, 2006).

Use of ARS technologies has been growing in popularity in higher education settings over the last 15 years (Banks and Bateman, 2004; Kay and LeSage, 2009). ARS was first developed by IBM in the 1960s and was originally used to survey focus group members anonymously in marketing research and in screenings of unreleased Hollywood movies (Collins, 2008). More recently, it was popularized by the television game show “Who Wants to Be a Millionaire” through its ask-the-audience lifeline (Caldwell, 2007). ARS allows instructors to integrate questions into PowerPoint® (Microsoft Corp., Redmond, WA) or equivalent software, while students use individual response pads or “keypads” (also known as “classroom clickers”) to select answer choices. Answers are then transmitted, usually via radio frequency (RF), to a “plug & play” USB RF receiver installed on the classroom computer and saved as a report for future use.

Instructors can use ARS for a variety of purposes (Robertson, 2000; Premkumar and Coupal, 2008). Primarily, it has been used to emphasize important topics and to hold students accountable for the teaching material. However, it can also serve as a creative and interactive solution for teachers trying to engage their students in active learning (Meyers and Jones, 1993; Roschelle, 2003). Classrooms using ARS have been shown to generate more student questions than classrooms that do not use the tool (Horowitz, 1988). When students must actively commit to an answer, they become more engaged in the follow-up discussion and are therefore more likely to ask questions and seek understanding (Beatty et al., 2006; Caldwell, 2007). Other teachers use ARS as a pre-test for the topics covered in the forthcoming lecture. Family medicine and obstetrics and gynecology residency programs employed this method and found that residents had significantly better long-term retention than residents who did not have ARS pre-tests (Schackow et al., 2004; Pradhan et al., 2005). Some teachers use ARS for opinion surveys simply to engage every student rather than only the outspoken few, thus helping to close the communication gap between lecturer and student.

Several studies suggest that ARS is an effective assessment tool (Beatty et al., 2006; Simpson and Oliver, 2007; Kay and LeSage, 2009); however, limited evidence exists on the degree to which ARS formative assessment correlates with performance on final course examinations in a medical school curriculum. The goal of our study was to determine whether, and to what degree, an individual student’s performance on the ARS correlates with his or her final examination score, and to use that information to predict final examination performance from a student’s ARS score. We also wanted to assess students’ and faculty members’ experiences with this system as a formative tool in education.

Methods

After an extensive evaluation of existing audience response systems, Mayo Medical School chose the TurningPoint® ARS manufactured by Turning Technologies LLC (TurningPoint, 2009), which uses a PowerPoint® (Microsoft Corp., Redmond, WA) software plug-in. Slides with interactive questions (ARS slides) can be quickly assembled from a variety of professionally designed templates using an additional TurningPoint® toolbar incorporated into the PowerPoint program screen. Interactive ARS slides can be interspersed among standard, non-ARS slides so that a session comprises a mixture of standard and interactive slides. Students select the correct answer to the question on the ARS slide using a personal ResponseCard® (TurningPoint, 2009). As shown in Figure 1, this keypad is a lightweight, compact device about the size of a credit card with 12 buttons: answer buttons numbered 0–9 and lettered A–J, a “?” button used to indicate that the student did not fully understand the item, and a “GO” button used to log in. Other response keypads contain buttons for power, send, and text (Caldwell, 2007). Some products use infrared transmission; however, radio frequency is more popular because the signal is stronger and there is less interference from objects within the transmission pathway or from classroom lights (Caldwell, 2007). The system collects student responses and displays them as a histogram. Each response card also transmits its user ID number, which can be traced back to the student using it, allowing individual student progress to be monitored. Results of ARS sessions can be saved as a customized report in Microsoft Word® or Excel® (Microsoft Corp., Redmond, WA). TurningPoint® ARS has been part of the Mayo Medical School curriculum since fall 2006 (Zielinska and Pawlina, 2006; TurningPoint, 2009).
Examples of other commercially available ARS products include CPS (DeKalb, IL), H-ITT (Cocoa, FL), iClicker (Houston, TX), Interwrite PRS (Denton, TX), and Qwizdom (Puyallup, WA) (Barber and Njus, 2007).
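The two functions described above, a class histogram per question and per-student tracking via each keypad’s ID, can be illustrated with a minimal sketch. The data layout here is hypothetical (a list of `(device_id, choice)` tuples per question plus a roster mapping keypad IDs to students) and is not the TurningPoint report format. The sketch also assumes that a keypad’s last answer counts and that unanswered questions are scored as incorrect; the function names are illustrative only.

```python
from collections import Counter

# Hypothetical data layout (not the TurningPoint export format):
# one question = list of (device_id, choice) tuples in arrival order.

def tally_question(responses):
    """Return (histogram, final_answers) for one ARS question.

    Assumption: if a keypad sends several answers, the last one counts.
    """
    final_answers = {}
    for device_id, choice in responses:
        final_answers[device_id] = choice  # later answers overwrite earlier ones
    histogram = Counter(final_answers.values())  # counts shown to the class
    return histogram, final_answers


def cumulative_percent(questions, roster):
    """Percent of questions each student answered correctly.

    questions: list of (responses, correct_choice) pairs.
    roster: maps keypad device ID -> student identifier.
    Unanswered questions count as incorrect (an assumption).
    """
    correct = {student: 0 for student in roster.values()}
    for responses, key in questions:
        _, answers = tally_question(responses)
        for device_id, choice in answers.items():
            student = roster.get(device_id)  # ignore unregistered keypads
            if student is not None and choice == key:
                correct[student] += 1
    return {s: 100.0 * c / len(questions) for s, c in correct.items()}


# Toy example (invented IDs and answers).
roster = {"A1": "student_1", "B2": "student_2"}
q1 = ([("A1", "B"), ("B2", "C"), ("A1", "C")], "C")  # A1 changes B -> C
q2 = ([("A1", "A"), ("B2", "D")], "A")
print(tally_question(q1[0])[0])         # Counter({'C': 2})
print(cumulative_percent([q1, q2], roster))  # {'student_1': 100.0, 'student_2': 50.0}
```

Keeping only the final answer per keypad mirrors a system that lets students change their choice before polling closes; a system that locks in the first answer would simply skip overwriting.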

Figure 1.

ResponseCard® from Turning Technologies is a portable, lightweight, and compact device measuring 3.3 × 2.1 × 0.3 inches. Note that the keypad is resting on the “plug & play” USB radio-frequency receiver, which is about the size of an average thumb drive. When inserted into a USB port of the classroom computer, this receiver picks up signals from classroom keypads within a 200-foot range (TurningPoint, 2009).

Approval for this study was granted by the Mayo Foundation Institutional Review Board (Protocol ID# 06-004894). Data for this report were collected over a period of three years from the Classes of 2010, 2011, and 2012 during their first two didactic blocks in medical school. The ResponseCard® clickers (see Figure 1; TurningPoint, 2009) were issued to each matriculating student during Orientation (Block I), which included a short training session. Several short surveys and program evaluations were conducted using ARS during this block. Systematic use of ARS was implemented in the six-week Block II (Genetics/Histology) and the subsequent Block III (Anatomy/Radiology). Both blocks reflect an integrative, student-centered approach to medical education. In Block III, a total of 120 contact hours (four hours every morning, five days a week) are devoted solely to gross anatomy and radiology. Except for the Basic Doctoring course, which integrates history and physical examination findings with anatomical knowledge, students have no other parallel courses or assignments. Didactic time in Block III is distributed among briefing sessions, laboratory exercises, and daily ARS sessions. Short, 45-minute briefing sessions presented to the entire class by a team of anatomists and radiologists replace traditional lectures. These sessions are designed to provide a daily overview and radiological interpretation of major clinical anatomy concepts, give important directions for laboratory exercises, and address any questions related to the assigned material.

There were 42–50 students in each class. Instructors presented clinically applied questions that were relevant to current class material. Questions were presented at the beginning or end of the daily briefing session in Block II and at the end of the laboratory exercise in Block III. Most questions were multiple-choice items written in the NBME style and contained clinical vignettes to test the application of core knowledge to a specific clinical scenario (Case and Swanson, 2002). Some questions were intended to test individual student knowledge, so students were asked to key in an answer choice without consulting their peers. About one-third of the question pool was designed to evaluate team effort; students were given more time to discuss possible answers with team members and then key in their group’s answer. The time allotted for team discussion of an ARS question varied with the subject area, difficulty level, and pedagogical goals of the instructor (Beatty et al., 2006). After students submitted their responses, the system displayed a histogram of the selected answer choices. Without first revealing the correct answer, the instructor discussed the item, solicited input from volunteers, and moderated a classroom discussion related to the question. ARS was used primarily to provide real-time formative feedback to students about their progress and to give them the opportunity to seek extra help if needed.

Each student’s classroom performance on the ARS was recorded during the Genetics/Histology and Anatomy/Radiology blocks (Blocks II and III) and accounted for 10% of the final course grade. The cumulative ARS score for each didactic block was calculated for each student and then correlated, using Pearson correlation, with the student’s score on the final written histology examination (Block II) and on the final written and practical gross anatomy examinations and the NBME Anatomy and Embryology Subject Examination (Block III). The statistical significance of these correlations was also assessed. For significant associations between block ARS score and block final examination score, predictive univariate linear regression models were constructed to quantify the relationship. A t-test was used to determine the significance of the ARS predictor variable in each univariate model. To assess potential confounding of the relationship between ARS and final examination score by academic year, multivariable linear regression models adjusting for class year were also generated.
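The correlation and univariate regression steps can be sketched in a few lines. This is a minimal illustration, not the JMP/SAS procedures the study used; the function names and the toy scores are hypothetical and are not study data. For a simple linear regression, the t statistic for the slope (with n − 2 degrees of freedom) equals r·√(n − 2)/√(1 − r²), so testing the slope and testing the correlation are equivalent. Converting t to a p value needs a Student t distribution, which the Python standard library lacks; SciPy’s `scipy.stats.pearsonr` and `scipy.stats.linregress` return p values directly.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def univariate_fit(x, y):
    """Least-squares fit y = intercept + slope * x, plus the slope's t statistic.

    The t statistic has n - 2 degrees of freedom.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    se_slope = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    return slope, intercept, slope / se_slope


# Toy percentages for illustration only (not study data).
ars = [60, 70, 80, 90, 100]
final = [62, 70, 74, 78, 85]
r = pearson_r(ars, final)
slope, intercept, t = univariate_fit(ars, final)
# Estimated mean final examination score for a student with an ARS score of 85:
predicted_mean = intercept + slope * 85
print(round(slope, 2), round(intercept, 1), round(predicted_mean, 1))  # 0.54 30.6 76.5
```

The fitted line plays the same role as the regression formulas reported with the correlation plots: plugging in an ARS score gives an estimated mean final examination score, not an individual student’s guaranteed result.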

In addition, we assessed students’ perceptions of ARS difficulty, usefulness, effect on performance, and preferred use with an e-mail questionnaire. Faculty perceptions of ARS on similar parameters were collected using a similar questionnaire. All questions were answered on a five-point Likert scale ranging from strongly agree (+2 points) through neutral (0 points) to strongly disagree (−2 points). The mean Likert score was calculated for each question, and the mean student or faculty score was compared with a score of 0 (neutral opinion) using t-tests.
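The Likert coding and the one-sample t-test against a neutral score of 0 can be sketched as follows. The five labels and their point values follow the scale given in the figure legends (+2 strongly agree, +1 slightly agree, 0 neutral, −1 slightly disagree, −2 strongly disagree); the function name and the sample responses are hypothetical. The sketch returns the t statistic and its degrees of freedom rather than a p value, since the standard library has no t distribution (SciPy’s `scipy.stats.ttest_1samp` provides one).

```python
import math
import statistics

# Point values taken from the five-point scale described in the text.
LIKERT_POINTS = {
    "strongly agree": 2,
    "slightly agree": 1,
    "neutral": 0,
    "slightly disagree": -1,
    "strongly disagree": -2,
}

def likert_t_vs_neutral(labels):
    """One-sample t-test of the mean Likert score against 0 (neutral).

    Returns (mean, standard error, t statistic, degrees of freedom).
    Raises ZeroDivisionError if every respondent chose the same option,
    because the standard error is then zero.
    """
    scores = [LIKERT_POINTS[label] for label in labels]
    n = len(scores)
    mean = statistics.mean(scores)
    se = statistics.stdev(scores) / math.sqrt(n)  # sample SD (n - 1) / sqrt(n)
    t = (mean - 0) / se
    return mean, se, t, n - 1


# Invented responses to a single questionnaire item.
responses = ["strongly agree", "slightly agree", "slightly agree", "neutral"]
mean, se, t, df = likert_t_vs_neutral(responses)
print(mean, round(se, 3), round(t, 3), df)  # 1 0.408 2.449 3
```

The standard error computed here is the quantity typically drawn as error bars around each mean response.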

All data analysis was conducted using JMP Version 8 and SAS Version 9.1 (SAS Institute Inc., Cary, NC) programs. No adjustments were made for multiple comparisons.

Results

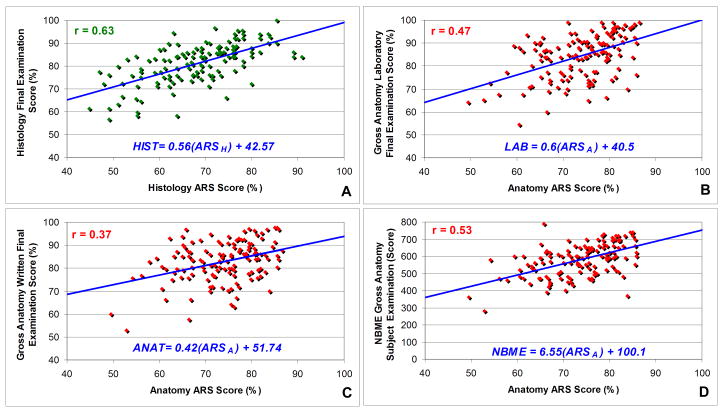

There was a statistically significant positive correlation between histology ARS score and histology final examination score (p < 0.0001; Figure 2A). There were also statistically significant positive correlations between anatomy ARS score and scores on the anatomy final written and laboratory practical examinations and the NBME Anatomy and Embryology Subject Examination (all p < 0.0001; Figure 2B–D). The strongest correlation, shown in Figure 2A, was between histology ARS score and histology final examination score (r = 0.63), whereas the strongest correlation involving anatomy ARS score was with the NBME Anatomy and Embryology Subject Examination (r = 0.53; Figure 2D).

Figure 2.

Correlation of students’ performance on audience response system (ARS) quizzes with final examination scores in histology (A) and gross anatomy (B–D). p < 0.0001 for all correlations and predictive univariate models. For each relationship, the linear regression equation shown estimates the mean final examination score for a given ARS score.

Given the statistically significant correlations for all four ARS associations tested, univariate linear regression models were created to predict a student’s final examination score from their ARS score (Figure 2). In each of these four predictive models, ARS score was a statistically significant predictor of final examination score (p < 0.0001; t-test). Since the exact relationship between ARS score and final examination score may be confounded by academic year, multivariable predictive linear regression models were generated to adjust for class year. Even after this adjustment, ARS scores still remained a statistically significant predictor of examination performance (data not shown).
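The class-year adjustment is reported only as “data not shown,” so the following is a minimal sketch of one common approach, assumed here rather than taken from the study: add indicator (dummy) variables for class year to the regression, with one year as the reference, and fit by ordinary least squares. The ARS coefficient is then the ARS effect adjusted for class year. The function names, the reference year, and the synthetic data are all hypothetical. The sketch computes point estimates only; a t-test on the adjusted ARS coefficient would also need its standard error from the residual variance and the inverse of XᵀX.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X: list of rows, each starting with 1.0 for the intercept.
    Solved by Gauss-Jordan elimination with partial pivoting.
    """
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    a = [xtx[i] + [xty[i]] for i in range(p)]  # augmented matrix
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        pv = a[col][col]
        if abs(pv) < 1e-12:
            raise ValueError("design matrix is singular")
        a[col] = [v / pv for v in a[col]]  # scale pivot row to 1
        for r in range(p):
            if r != col:  # eliminate this column from every other row
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][p] for i in range(p)]


def design_with_class_year(ars, years, reference):
    """Rows [1, ARS, dummy for each non-reference year] and column names."""
    levels = sorted(set(years) - {reference})
    X = [[1.0, float(s)] + [1.0 if yr == lv else 0.0 for lv in levels]
         for s, yr in zip(ars, years)]
    return X, ["intercept", "ars"] + [f"class_{lv}" for lv in levels]


# Synthetic scores built from a known model so the fit can be checked.
ars = [60, 70, 80, 65, 75, 85, 70, 80, 90]
years = [2010] * 3 + [2011] * 3 + [2012] * 3
shift = {2010: 0.0, 2011: 3.0, 2012: -2.0}
final = [10 + 0.5 * s + shift[yr] for s, yr in zip(ars, years)]
X, names = design_with_class_year(ars, years, reference=2010)
beta = ols(X, final)
print(dict(zip(names, (round(b, 6) for b in beta))))
```

Because the synthetic scores follow the model exactly, the fit recovers the intercept of 10, the ARS slope of 0.5, and the class shifts of +3 and −2; with real data, comparing the adjusted ARS slope with the univariate slope shows how much class year confounds the relationship.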

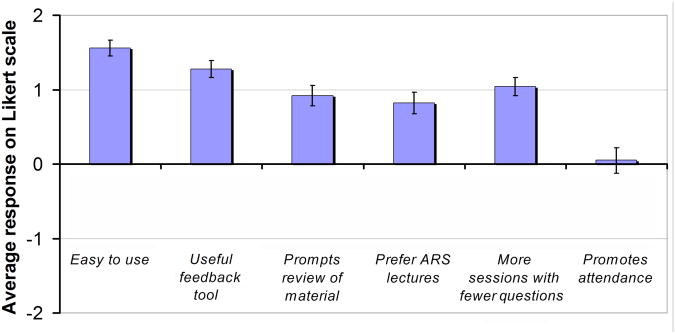

Students who used ARS in their curriculum were asked to give their perceptions of ARS (n = 75). A web-based questionnaire was administered through SurveyMonkey.com and scored on a five-point Likert scale. Mean student responses to these six questions were compared with a null hypothesis value of 0 points (Figure 3). Students felt that ARS was easy to use and was a useful feedback tool that prompted them to review material. Moreover, students preferred lectures that contained ARS questions (all p < 0.0001). Students also expressed a preference for more frequent ARS quizzes with fewer questions and indicated that these quizzes had no impact on their decision to attend class.

Figure 3.

Average student responses on questionnaire about the use of ARS (n = 75). All responses were scored along a 5-point Likert scale (+2 = strongly agree; +1 = slightly agree; 0 = neutral; −1 = slightly disagree; −2 = strongly disagree). Standard error of estimate represented by error bars.

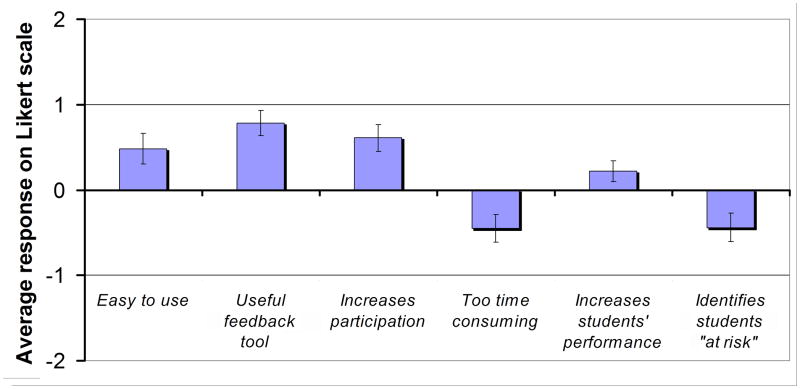

Faculty teaching in didactic blocks that used ARS (n = 51) also completed a similar ARS survey (Figure 4). Faculty also felt that ARS was easy to use, gave useful feedback on their instruction to the students, and increased student participation in class (all p < 0.01). They disagreed with the statement that ARS was too time-consuming (p = 0.008). Faculty indicated that ARS did not help to identify students “at risk” (p = 0.01) and expressed no significant opinion on the role of ARS in improving student performance (p = 0.09). Five respondents expressed a need for comparative data on this item. Like students, faculty preferred more frequent quizzes with fewer questions (45%); 40% were neutral, and only 15% preferred less frequent quizzes with more questions.

Figure 4.

Average faculty responses on questionnaire about the use of ARS (n = 51). All responses were scored along a 5-point Likert scale (+2 = strongly agree; +1 = slightly agree; 0 = neutral; −1 = slightly disagree; −2 = strongly disagree). Standard error of estimate represented by error bars.

Discussion

ARS technology is being adopted increasingly by medical schools and residency programs (Pradhan et al., 2005; Keengwe, 2007; Nayak and Erinjeri, 2008; Premkumar and Coupal, 2008; Rubio et al., 2008). Providing timely, high-quality feedback and reinforcement to students is vital to the synthesis and integration processes of learning (Simpson and Oliver, 2007). The use of classroom clickers has been shown to promote active learning (Meyers and Jones, 1993) and interactivity in the classroom environment (Horowitz, 1988), improve critical thinking skills, and increase retention and transfer of new information (Premkumar and Coupal, 2008; Rubio et al., 2008).

More recently, browser-based systems have also become available and can be adopted for online courses delivered through course management systems such as WebCT or Blackboard. These options allow students to log on to the ARS session through an IP address and submit answers over their internet connection (Barber and Njus, 2007; Collins, 2008). In principle, the system could be used to transmit answers while a student is taking an online class at home or watching a web-cast lecture. Students can also use the internet to submit answers during a live lecture with devices using WiFi (wireless fidelity) technology, such as pocket, notebook, laptop, or desktop personal computers, handheld video game consoles, or cellphones, as well as with non-WiFi, Web-enabled Windows Mobile® devices or BlackBerry® smartphones (Research in Motion, Waterloo, Ontario, Canada) (Streeter and Rybicki, 2006; TurningPoint, 2009). Interestingly, these recent developments in ARS methodology, which allow a student to participate remotely in an educational activity without being physically present in the classroom, are congruent with the opinions of our students, who indicated that ARS had no impact on their decision to attend classes. Owing to the team-based approach and emphasis on teamwork, attendance in Blocks II and III was nearly 100% (excluding excused absences). This may also be a positive reflection of students’ commitment to learning within a highly student-centered environment that emphasizes professionalism (Viggiano et al., 2007).

Our study shows that ARS can serve as an important tool for students to monitor their progress in the curriculum continuously. This formative feedback allows students to evaluate how well they comprehend the assigned material and to compare their progress instantly with that of their classmates.

Surveyed faculty, however, felt that ARS did not help to identify students “at risk” and therefore did not express a significant opinion on the role of ARS in improving student performance. This response appears to be in keeping with the fact that, at that time, the use of ARS in other courses in the Mayo Medical School curriculum was limited to formative feedback without a percentage contribution to the final course grade. Our correlation and modeling data from Blocks II and III, however, provide evidence that ARS scores obtained during the course are strong positive predictors of final examination performance in the Genetics/Histology and Anatomy/Radiology blocks. Students with low ARS scores were more likely to perform poorly on their final examination at the end of the didactic block.

Tracking student performance during the anatomy course, especially in a condensed curriculum, and providing appropriate formative feedback to students are essential to promoting successful outcomes. Teaching and learning interventions such as organized tutoring programs have proved effective in the undergraduate medical curriculum (Sobral, 2002). In the absence of a quantitative system, criteria for identifying “at-risk” students have been based largely on faculty perception and students’ self-assessment of tutoring needs. With access to ARS scores and their predictive value, faculty may be better able to identify students who require additional assistance and to implement intervention strategies earlier in the course.

Incorporation of new technology has the potential to change the educational environment and the way students learn (Boyle, 2006; Keengwe, 2007). At Mayo Medical School, daily use of ARS during the first two didactic blocks provided students with a learner-centered environment offering real-time reflection of their grasp of the educational material, study habits, and critical thinking skills (Johnson, 2005). Because ARS performance was associated with final examination performance, students were able to use this tool to assess their own progress and seek remediation if necessary.

Most students rated ARS as a useful tool for providing feedback on their performance, which is consistent with our correlation data. Many students reported that the daily ARS quizzes motivated them to study and review areas that, as reflected by their ARS scores, required additional attention. Students had virtually no difficulty with the ARS technology; it worked well with PowerPoint and rarely delayed or significantly interrupted lectures. The instructors also reported that the system was easy to use and that they did not find it time-consuming to incorporate ARS into their lectures. This impression is consistent with our own experience over the past three years, during which we successfully integrated ARS into the Genetics/Histology and Anatomy/Radiology didactic blocks as a standard component of teaching and learning activities. Instructors also used ARS in courses as formative feedback on their own instruction and enjoyed having a reliable tool to gauge student understanding of the material. Overall, ARS appears to be a relatively user-friendly and useful tool in a medical school curriculum. However, our results raise several questions for future research regarding students’ experience with ARS and its impact on their learning process. Although our study predicted course outcomes, it did not identify the study habits that led to positive outcomes in medical school courses.

Limitations

Several factors may affect the interpretation and presentation of our findings. Because of the extensive revision of the medical curriculum at Mayo Medical School in 2006 (Jelsing et al., 2007), it is difficult to compare current students’ performance on standardized examinations (i.e., the NBME Anatomy and Embryology Subject Examination) with that of students in the “old curriculum.” In addition, the specific nature of Blocks II and III (the only blocks with an extensive laboratory component and a structured leadership program) does not allow direct comparison with other didactic blocks. To various extents, almost all blocks at Mayo Medical School use ARS technology (mainly to provide feedback).

Other factors may also affect our interpretation of the correlation between ARS scores and final examination scores. The most obvious is intrinsic test-taking ability, which is part of a larger self-domain involving one’s overall competence as a student (Nowak and Vallacher, 1998). However, our ARS questions were structured according to NBME guidelines to eliminate item flaws and to provide a level playing field for testwise and not-so-testwise students (Case and Swanson, 2002). We therefore think that expertise in test-taking strategies had no major impact on our correlations. Students in both blocks were organized into four-member teams with rotating leadership. During the course, all students received anonymous peer-evaluation feedback, and leaders received formative evaluation from faculty on their performance as team leaders (Bryan et al., 2005). The ARS performance of each team was discussed with its leaders, who were encouraged to work with their teams to develop reciprocal peer-teaching activities. It is possible that students who scored lower on ARS questions early in the course could have benefited from their own team interactions.

Conclusion

Using ARS as an assessment tool is beneficial for students and faculty. It is a useful measure for predicting and tracking student performance. ARS provides immediate feedback to students and faculty, allowing for earlier identification of areas for improvement and implementation of strategies to promote more effective teaching and learning.

Acknowledgments

Grant support: This publication was made possible with statistical support funded by Grant Number 1 UL1 RR024150 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and the NIH Roadmap for Medical Research.

Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NCRR or NIH. Information on NCRR is available at http://www.ncrr.nih.gov/. Information on Reengineering the Clinical Research Enterprise can be obtained from http://nihroadmap.nih.gov/clinicalresearch/overviewtranslational.asp.

Footnotes

NOTES ON CONTRIBUTORS: CARA J. ALEXANDER, B.A., is a third-year medical student at Mayo Medical School, College of Medicine, Mayo Clinic, Rochester, Minnesota. She was a teaching assistant in the Human Structure didactic block for first-year medical students.

WERONIKA M. CRESCINI, M.D., is an anesthesiology resident at the Department of Anesthesiology, Duke University School of Medicine, Durham, North Carolina. She is a Mayo Medical School graduate and, while volunteering as a teaching assistant in the Basic Structure block in fall 2006, initiated the evaluation of ARS technology in basic science courses.

JUSTIN E. JUSKEWITCH, B.A., is an M.D./Ph.D. student at Mayo Medical School, College of Medicine, Mayo Clinic, Rochester, Minnesota. He is a student coordinator for the tutorial program for first- and second-year medical students.

NIRUSHA LACHMAN, Ph.D., is an assistant professor in the Department of Anatomy at the Mayo Medical School, College of Medicine, Mayo Clinic, Rochester, Minnesota. She teaches anatomy and histology to first-year medical students.

WOJCIECH PAWLINA, M.D., is a professor and chair of the Department of Anatomy and assistant dean for the Curriculum Development and Innovation at the Mayo Medical School, College of Medicine, Mayo Clinic, Rochester, Minnesota. He teaches anatomy and histology, and serves as a director of the Human Structure didactic block for first-year medical students.

LITERATURE CITED

- Banks DA, Bateman S. Audience Response System in Education: Supporting ‘lost in the Desert’ Learning Scenario. Proceedings of the International Conference on Computers in Education (ICCE 2004); Melbourne, Australia. 2004 Nov 30-Dec 3; Chung-Li, Taiwan: The Asia-Pacific Society for Computer in Education; 2004. pp. 1219–1223.

- Barber M, Njus D. Clicker evolution: Seeking intelligent design. CBE Life Sci Educ. 2007;6:1–8. doi: 10.1187/cbe.06-12-0206.

- Beatty ID, Leonard WJ, Gerace WJ, Dufresne RJ. Question driven instructions: Teaching science (well) with an audience response system. In: Banks DA, editor. Audience Response System in Higher Education: Applications and Cases. Hershey, PA: Information Science Publishing; 2006. pp. 96–115.

- Boyle J. Eight years of asking questions. In: Banks DA, editor. Audience Response System in Higher Education: Applications and Cases. Hershey, PA: Information Science Publishing; 2006. pp. 289–304.

- Bryan RE, Krych AJ, Carmichael SW, Viggiano TR, Pawlina W. Assessing professionalism in early medical education: Experience with peer evaluation and self-evaluation in the gross anatomy course. Ann Acad Med Singapore. 2005;34:486–491.

- Caldwell JE. Clickers in the large classroom: Current research and best-practice tips. CBE Life Sci Educ. 2007;6:9–20. doi: 10.1187/cbe.06-12-0205.

- Case SM, Swanson DB. Constructing Written Test Questions for the Basic and Clinical Sciences. 3. Philadelphia, PA: National Board of Medical Examiners; 2002. [Accessed 24 June 2009]. p. 180. URL: http://www.nbme.org/publications/item-writing-manual.html.

- Collins J. Audience response systems: Technology to engage learners. J Am Coll Radiol. 2008;5:993–1000. doi: 10.1016/j.jacr.2008.04.008.

- Dufresne RJ, Gerace WJ, Mestre JP, Leonard WJ. University of Massachusetts Physics Education Research Group (UMPERG) Technical Report. Scientific Reasoning Research Institute, University of Massachusetts Amherst; Amherst, MA: 2000. [accessed 3 June 2009]. ASK-IT/A2L: Assessing student knowledge with instructional technology; pp. 1–28. URL: http://srri.umass.edu/files/dufresne-2000ask.pdf.

- Horowitz HM. Student Response Systems: Interactivity in a classroom environment. New York, IBM Corporation, Corporate Education Center; Thornwood, NY: 1988. [accessed 3 June 2008]. URL: http://www.einstruction.com/News/index.cfm?fuseaction=News.display&Menu=newsroom&content=FormalPaper&id=210.

- Jelsing EJ, Lachman N, O’Neil AE, Pawlina W. Can a flexible medical curriculum promote student learning and satisfaction? Ann Acad Med Singapore. 2007;36:713–718.

- Johnson JT. Creating learner-centered classrooms: Use of an audience response system in pediatric dentistry education. J Dent Educ. 2005;69:378–381.

- Kay RH, LeSage A. A strategic assessment of audience response systems used in higher education. Australas J Educ Tech. 2009;25:235–249.

- Keengwe J. Faculty integration of technology into instruction and students’ perceptions of computer technology to improve student learning. J Info Tech Educ. 2007;6:169–179.

- Mazur E. Farewell, Lecture? Science. 2009;323:50–51. doi: 10.1126/science.1168927.

- Meyers C, Jones TB. Promoting Active Learning—Strategies for the College Classroom. 1. San Francisco, CA: Jossey-Bass Inc; 1993. p. 224.

- Miller SA, Perrotti W, Silverthorn DU, Dalley AF, Rarey KE. From college to clinic: Reasoning over memorization is key for understanding anatomy. Anat Rec. 2002;269:69–80. doi: 10.1002/ar.10071.

- Nowak A, Vallacher RR. Dynamical Social Psychology. 1. New York, NY: The Guilford Press; 1998. p. 318.

- Nayak L, Erinjeri JP. Audience response systems in medical student education benefit learners and presenters. Acad Radiol. 2008;15:383–389. doi: 10.1016/j.acra.2007.09.021.

- Pradhan A, Sparano D, Ananth CV. The influence of an audience response system on knowledge retention: An application to resident education. Am J Obstet Gynecol. 2005;193:1827–1830. doi: 10.1016/j.ajog.2005.07.075.

- Premkumar K, Coupal C. Rules of engagement 12 tips for successful use of “clickers” in the classroom. Med Teach. 2008;30:146–149. doi: 10.1080/01421590801965111.

- Robertson LJ. Twelve tips for using a computerised interactive audience response system. Med Teach. 2000;22:237–239.

- Roschelle J. Keynote paper: Unlocking the learning value of wireless mobile devices. J Comp Assist Learn. 2003;19:260–272.

- Rubio EI, Bassignani MJ, White MA, Brant WE. Effect of an audience response system on resident learning and retention of lecture material. Am J Roentgenol. 2008;190:W319–322. doi: 10.2214/AJR.07.3038.

- Schackow TE, Chavez M, Loya L, Friedman M. Audience response system: Effect on learning in family medicine residents. Fam Med. 2004;36:496–504.

- Simpson V, Oliver M. Electronic voting systems for lectures then and now: A comparison of research and practice. Australas J Educ Tech. 2007;23:187–208.

- Sobral DT. Cross-year peer tutoring experience in a medical school: Conditions and outcomes for student tutors. Med Educ. 2002;36:1064–1070. doi: 10.1046/j.1365-2923.2002.01308.x.

- Streeter JL, Rybicki FJ. A novel standard-compliant audience response system for medical education. Radiographics. 2006;26:1243–1249. doi: 10.1148/rg.264055212.

- TurningPoint. Turning Technologies LLC; Youngstown, OH: 2009. [accessed 3 June 2009]. URL: http://www.turningtechnologies.com/

- Viggiano TR, Pawlina W, Lindor KD, Olsen KD, Cortese DA. Putting the needs of the patient first: Mayo Clinic’s core value, institutional culture, and professionalism covenant. Acad Med. 2007;82:1089–1093. doi: 10.1097/ACM.0b013e3181575dcd.

- Zielinska W, Pawlina W. Evaluation of student performance using an audience response system. Proceedings of the E-Learn 2006 -World Conference on E-Learning in Corporate Government, Healthcare, and Higher Education; Honolulu, HI. 2006 Oct 13–17; Chesapeake, VA: Association for the Advancement of Computing in Education; 2006. p. 108.