Abstract

Objectives

This project assessed electroacoustic benefit for speech recognition with a competing talker.

Design

Using a cochlear implant subject with normal hearing in the contralateral ear, the contribution of low-pass and high-pass natural sound to speech recognition was systematically measured.

Results

High-frequency sound did not improve performance, but low-frequency sound did, even when unintelligible and limited to frequencies below 150 Hz.

Conclusions

The low-frequency sound assists separation of the two talkers, presumably using the fundamental frequency cue. Extrapolating this finding to regular cochlear implant users may suggest that using a hearing aid on the contralateral ear will improve performance, even with very limited residual hearing.

Keywords: cochlear implant, electroacoustic, bimodal hearing

INTRODUCTION

Traditional cochlear implant sound processors use a fixed-rate pulse carrier, modulated by low-pass filtered amplitude envelopes; the temporal fine structure is discarded. Pitch cues are poorly represented, leading to degraded performance in speech recognition in noise, music perception, talker identification, and intonation recognition. In particular, changes in human voice pitch, the fundamental frequency (F0), are difficult for cochlear implant users to perceive (Green et al. 2004). Some newer processing strategies now attempt to provide some temporal fine structure (Riss et al. 2008).

Cochlear implant simulation studies have demonstrated that the addition of low-frequency unprocessed sound improves speech recognition in noise (Brown and Bacon 2009; Chang et al. 2006; Kong and Carlyon 2007; Qin and Oxenham 2006). Chang et al. (2006) suggested that the presence of low-frequency sound helps to segregate the information-bearing high-frequency sounds into different auditory streams, thus assisting speech recognition with a competing talker. The exact mechanism for this improvement is still debated. Some researchers suggest that F0 plays an important role (Brown and Bacon 2009; Qin and Oxenham 2006), perhaps through glimpsing rather than segregation (Brown and Bacon 2009), while Kong and Carlyon (2007) proposed that the preservation of the first formant (F1) and other low-frequency phonetic cues is more important than F0.

This research involved an unusual cochlear implant subject with virtually normal hearing in the contralateral ear. Although cases of cochlear implantation in unilateral deafness have been reported (Vermeire and Van de Heyning 2008), it remains uncommon. The aim was to assess speech recognition with a competing talker in the ear using a cochlear implant, while systematically adding acoustic information to the normal-hearing ear.

MATERIALS AND METHODS

Subject

The subject was a 46-year-old male with virtually normal hearing in his left ear (≤ 20 dBHL re ANSI-1996 for octave frequencies between 0.25 and 8 kHz, except 35 dBHL at 4 kHz). He received a Clarion® HiRes 90k (Advanced Bionics Corporation, Sylmar, California) cochlear implant in his right ear 16 months prior to testing, due to intractable tinnitus. He used an Auria® speech processor and a HiRes® S clinical map with default frequency allocation. The study protocol was approved by the UC Irvine Institutional Review Board.

Test material

The target speech material was HINT sentences spoken by a male (Nilsson et al. 1994); two lists (20 sentences) were used for each condition. All test materials were digitized with a sampling rate of 44.1 kHz, and comprised mono 16-bit resolution wav files.

The masker was a female speaking the IEEE sentences (IEEE 1969) (used with permission from the Sensory Communication Group of the Research Laboratory of Electronics at MIT). She spoke 40 different sentences which were selected to have greater duration than the longest HINT sentence, ensuring that no part of the target was presented in quiet. The mean fundamental frequencies of the two voices were 109 Hz for the target male, and 214 Hz for the competing female.

Procedure

A MATLAB® (The MathWorks, Inc., Natick, Massachusetts) program, developed by the first author, was used to present and score the sentences. The target and masker were added digitally with a signal-to-noise ratio (SNR) of 0 dB. This SNR was chosen because the subject scored very poorly at this level with electrical stimulation alone, which allowed any improvement with electroacoustic stimulation to be shown. The masker sentence began approximately 0.45 seconds before the target. The resultant signal was split into two channels. One channel was sent unprocessed to the ear with the cochlear implant via direct connection to the speech processor. The other channel was low- or high-pass filtered and routed to the normal-hearing ear via an insert earphone. Digital filtering was implemented in MATLAB® using third-order elliptic filters with 1 dB of peak-to-peak ripple in the pass-band and 80 dB minimum attenuation in the stop-band. The cutoff frequencies were 150, 250, 500, 1000, 2000, 4000, and 6000 Hz. Although the root mean square (rms) level of the filtered sound was the same as that of the unfiltered sound, it sounded very quiet when heavily filtered. For the electroacoustic stimulation, the filtered acoustic information was presented to the normal-hearing ear at the same time as the full signal was presented to the speech processor.
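The mixing step described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB® program: the function names (`rms`, `scale_to_rms`, `mix_at_snr`) are hypothetical, the masker rms is computed over the whole masker (an assumption, since the source does not say over which segment levels were matched), and pure tones at the two reported mean F0 values stand in for the sentence recordings. Filtering is omitted here; `scale_to_rms` only shows how a filtered signal could be brought back to the rms of the unfiltered one.

```python
import math

FS = 44100            # sampling rate in Hz, as stated for the test materials
MASKER_LEAD_S = 0.45  # approximate masker head start, from the procedure


def rms(x):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(s * s for s in x) / len(x))


def scale_to_rms(x, target_rms):
    """Return a copy of x rescaled so that its rms equals target_rms."""
    gain = target_rms / rms(x)
    return [s * gain for s in x]


def mix_at_snr(target, masker, snr_db, lead_s=MASKER_LEAD_S, fs=FS):
    """Add target to masker at snr_db, with the masker starting lead_s earlier.

    Assumption: the SNR is defined from whole-signal rms levels.
    Returns (mixed signal, scaled masker).
    """
    lead = int(round(lead_s * fs))
    if len(masker) < lead + len(target):
        raise ValueError("masker too short to cover the whole target")
    # Scale the masker so that 20*log10(rms(target) / rms(masker)) == snr_db.
    masker_scaled = scale_to_rms(masker, rms(target) / 10 ** (snr_db / 20))
    mixed = list(masker_scaled)
    for i, s in enumerate(target):
        mixed[lead + i] += s
    return mixed, masker_scaled


# Stand-in signals: 1 s "target" at 109 Hz, 2 s "masker" at 214 Hz.
target = [math.sin(2 * math.pi * 109 * n / FS) for n in range(FS)]
masker = [0.3 * math.sin(2 * math.pi * 214 * n / FS) for n in range(2 * FS)]
mixed, masker_scaled = mix_at_snr(target, masker, snr_db=0.0)
```

At 0 dB SNR the scaled masker has the same rms as the target, and the first 0.45 s of the mix contains the masker only, which matches the requirement that no part of the target is presented in quiet.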

The subject was tested in three configurations: electroacoustic stimulation (cochlear implant in one ear plus filtered speech information in the other ear), cochlear implant alone, and acoustic information alone. To counteract the practice effect, he was tested first with his expected best configuration (electroacoustic), then with cochlear implant alone, then with acoustic alone. Within each configuration, he was again tested with the expected best conditions first. Before each condition, two lists were presented for practice, allowing the subject to become accustomed to that condition. Because the number of HINT sentences is limited, the practice lists used IEEE sentences.

The presentation level in the cochlear implant ear was adjusted to a comfortable listening level, using the speech processor adjustment. The rms level of the target remained constant throughout the testing (60 dBHL for the normal-hearing ear, and a comfortable level for the direct connection); the masker intensity was the same. The sentences were scored for words correct (not key words).
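As an illustration of scoring every word rather than key words only, the following Python sketch counts the target words that appear in a response. The matching rules are assumptions and are not taken from the authors' scoring program: comparison is case-insensitive, punctuation is ignored, word order is ignored, and each target word can be credited at most once. The function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(sentence):
    """Lower-case the sentence and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", sentence.lower())


def words_correct(target, response):
    """Return (number of target words reported, number of target words)."""
    target_counts = Counter(tokenize(target))
    response_counts = Counter(tokenize(response))
    # Counter intersection credits each target word at most as often as it occurs.
    matched = sum((target_counts & response_counts).values())
    return matched, sum(target_counts.values())


def percent_correct(pairs):
    """Percentage of words correct over a list of (target, response) pairs."""
    matched = total = 0
    for target, response in pairs:
        m, n = words_correct(target, response)
        matched += m
        total += n
    return 100.0 * matched / total


# Hypothetical response to one of the target sentences.
print(percent_correct([("The table has three legs", "the table had three eggs")]))  # 60.0
```

Pooling words across the sentences of a condition, as `percent_correct` does, weights longer sentences more heavily than averaging per-sentence percentages would; which of the two the authors used is not stated.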

RESULTS

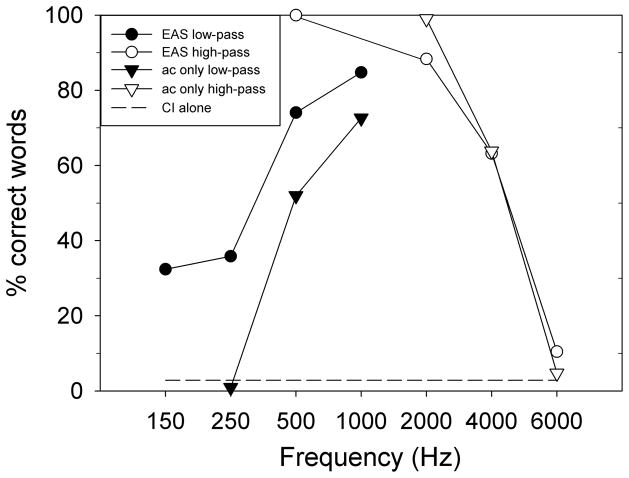

With the cochlear implant alone, the subject scored 3% words correct at 0 dB SNR. The cochlear implant alone condition was repeated at the end of the test session to check for any practice effect; the score at that point was 2%. Figure 1 shows the percentage correct word scores as a function of cutoff frequency. The cochlear implant alone score of 3% is shown by a dotted horizontal line. The acoustic only scores, represented by the triangles, show that information below 250 Hz was unintelligible (1% word score). The 150 Hz low-pass condition was not tested in the acoustic only configuration, as its score was assumed to be at or below the 250 Hz score. The score improved as the cutoff frequency increased, reaching 73% for information below 1000 Hz. In the high-pass conditions (represented by open triangles), information solely above 6000 Hz was virtually unintelligible (5% word score), and the score increased as the cutoff frequency was lowered, reaching 99% for information above 2000 Hz.

Figure 1.

Percentage correct word score on HINT sentences presented with a female talker masker at 0 dB SNR. The cochlear implant alone score of 3% is shown by a dotted horizontal line. The triangles represent scores for filtered acoustic stimuli presented to the normal-hearing ear at various cutoff frequencies. The circles represent scores for the combination of filtered acoustic stimuli to the normal-hearing ear and unfiltered stimuli to the cochlear implant ear (electroacoustic stimulation).

The electroacoustic scores are represented by the circles. Although the 150 and 250 Hz acoustic only conditions provided no intelligibility and the cochlear implant alone score was 3%, when these signals were presented together, the word scores were 32% and 36% for 150 and 250 Hz electroacoustic stimulation, respectively. This is clearly not a simple additive effect; adding the cochlear implant alone score to the acoustic only scores would raise the curve by only 3 percentage points. A similar electroacoustic advantage was seen for the 500 and 1000 Hz low-pass cutoffs. The high-frequency electroacoustic data showed a different pattern: high-frequency electroacoustic stimulation did not offer any advantage over the acoustic only condition.
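The arithmetic behind the "not simply additive" observation can be written out as a short Python sketch using only the scores quoted in the text. The variable and function names are hypothetical. For the 150 Hz cutoff, the acoustic only score was not measured, so the 250 Hz score is reused as an assumed upper bound, which makes the computed benefit a lower bound.

```python
# Word scores (percent correct) quoted in the Results text.
CI_ALONE = 3
ACOUSTIC_ALONE = {250: 1}             # 150 Hz low-pass was not tested alone
ELECTROACOUSTIC = {150: 32, 250: 36}


def benefit_beyond_additive(cutoff_hz, acoustic_score):
    """Percentage points by which the combined score exceeds a simple sum."""
    additive_prediction = CI_ALONE + acoustic_score
    return ELECTROACOUSTIC[cutoff_hz] - additive_prediction


# 250 Hz: measured acoustic only score.
print(benefit_beyond_additive(250, ACOUSTIC_ALONE[250]))  # 36 - (3 + 1) = 32

# 150 Hz: assumption, the 250 Hz acoustic only score is used as an upper bound.
print(benefit_beyond_additive(150, ACOUSTIC_ALONE[250]))  # 32 - (3 + 1) = 28
```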

DISCUSSION

In common with previous studies such as Chang et al. (2006) and Dorman et al. (2008), this research demonstrates that low-frequency acoustic sound provides significant benefit when combined with cochlear implant stimulation. Unlike in previous studies, this subject has normal hearing in the contralateral ear, which ensures that the electroacoustic benefit is not confounded by the hearing status of that ear. A novel finding is that this electroacoustic advantage is limited to low-frequency sound, and persists even if that sound contains only the fundamental frequency. The high-frequency electroacoustic condition produced virtually identical results to the high-frequency acoustic only condition, suggesting that speech cues from the high-frequency sound are redundant with those from the cochlear implant, whereas the low-frequency sound and the cochlear implant provide independent and complementary information. This demonstrates that it is not simply additional sound which provides the benefit; it is the low-frequency sound specifically.

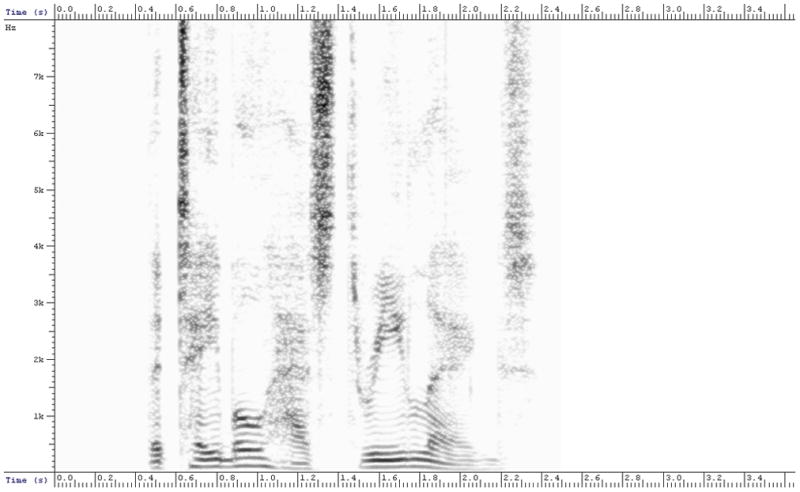

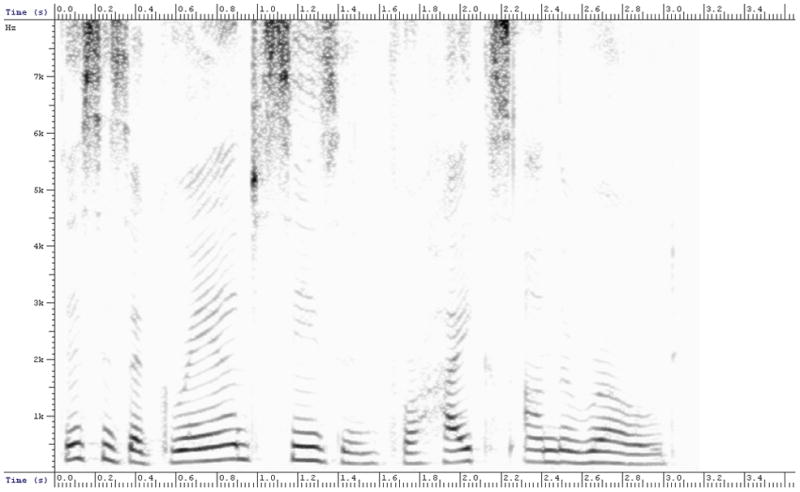

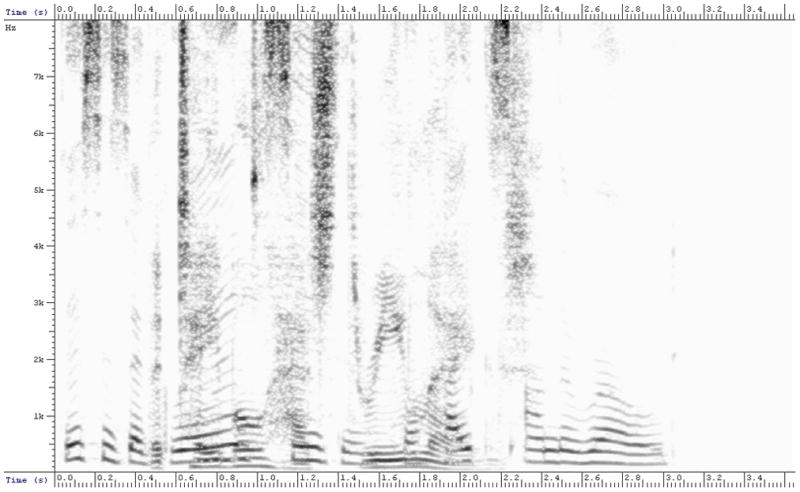

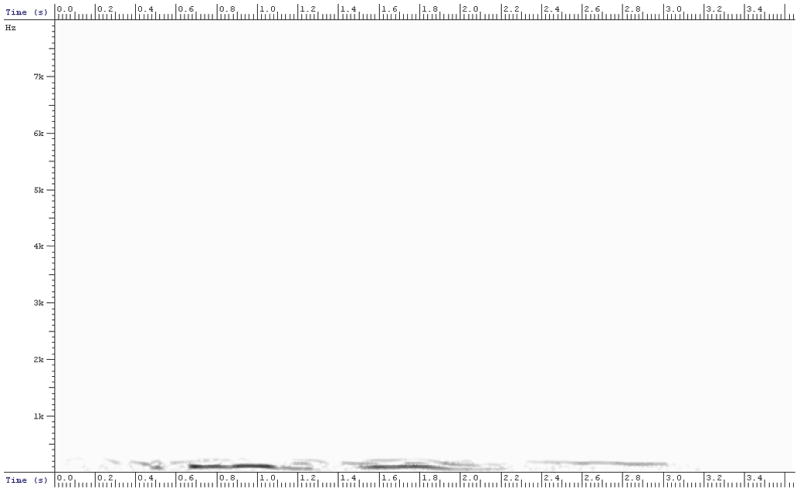

Even unintelligible information below 150 Hz provided substantial electroacoustic benefit. Figure 2 shows spectrograms for a) the target male, b) the female masker, c) the target and masker combined at 0 dB SNR, and d) the target and masker mix filtered at 150 Hz. Although the predominant signal present is F0 from the male, there is also some F0 from the female represented at reduced intensity, presumably at the limits of the filter. The authors suggest that the F0 cue in the low-frequency sound is helping central mechanisms to separate the two talkers.

Figure 2.

Spectrograms for a) the target male talker, b) the female masker, c) the target and masker combined at 0 dB SNR, and d) the low-pass sound filtered at 150 Hz. The target talker spoke the sentence ‘The table has three legs’; the female masker spoke the sentence ‘This is a grand season for hikes on the road’. The target and masker mix was filtered to produce d). The x axis represents time from 0 to 3.6 seconds; the y axis depicts frequency from 0 to 8 kHz.

First formant cues generally occur above 300 Hz (Hillenbrand et al. 1995); however, due to filter roll-off properties, there may have been some weak first formant cues even in the 150 Hz low-pass stimuli. In their simulation study, Kong and Carlyon (2007) suggested that low-frequency phonetic cues (for example formant transition, voicing, first formant) provided a mechanism for electroacoustic benefit. Results from this study suggest more reliance on F0, although the mechanism has not been specifically explored. Another consideration is that with the 150 Hz low-pass stimuli, the actual SNR would be higher than 0 dB because the male’s voice would predominate in this frequency region, thus allowing some reliance on target voicing cues.
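Why the male voice would predominate below 150 Hz can be seen from a simple count of harmonics, sketched here in Python. This is an idealization under stated assumptions: it uses the reported mean F0 values (109 Hz and 214 Hz) as if they were constant, and it treats each low-pass filter as a brick wall, whereas the real filters have a finite roll-off and the real voices vary around their means. The names are hypothetical.

```python
MEAN_F0_HZ = {"target (male)": 109.0, "masker (female)": 214.0}
LOWPASS_CUTOFFS_HZ = [150, 250, 500, 1000]


def harmonics_below(f0_hz, cutoff_hz):
    """Harmonic frequencies k * f0 (k = 1, 2, ...) at or below cutoff_hz."""
    count = int(cutoff_hz // f0_hz)
    return [k * f0_hz for k in range(1, count + 1)]


for cutoff in LOWPASS_CUTOFFS_HZ:
    for talker, f0 in MEAN_F0_HZ.items():
        n = len(harmonics_below(f0, cutoff))
        print(f"{cutoff:>5} Hz low-pass, {talker}: {n} harmonic(s) in the pass-band")
```

Under these assumptions, the 150 Hz pass-band contains the male fundamental and no female harmonic, while the 250 Hz pass-band already admits the female fundamental at 214 Hz.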

This subject has normal hearing in the contralateral ear. Regular cochlear implant users, by contrast, generally have a severe to profound hearing loss, and acoustic information will be more difficult to utilize in a very damaged ear. If the trend of these findings can be extrapolated to regular cochlear implant users, it suggests that they should benefit from electroacoustic stimulation by wearing a hearing aid on the contralateral ear, even with very limited residual hearing.

Acknowledgments

The authors gratefully acknowledge the participation of the research subject. This work was supported by National Institutes of Health (F31-DC008742 to HEC and RO1-DC008858 to FGZ).

Footnotes

This single-subject study assessed electroacoustic benefit for speech recognition with a competing talker. Using a cochlear implant subject with normal hearing in the contralateral ear, the contribution of low-pass and high-pass natural sound to speech recognition was systematically measured. High-frequency sound did not improve performance, but low-frequency sound did, even when unintelligible and limited to below 150 Hz. The fundamental frequency cue in the low-frequency sound assists separation of the two talkers. Extrapolating this finding to regular cochlear implant users may suggest that using a hearing aid on the contralateral ear will improve performance, even with very limited residual hearing.

References

- Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. J Acoust Soc Am. 2009;125:1658–1665. doi: 10.1121/1.3068441.

- Chang JE, Bai JY, Zeng FG. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans Biomed Eng. 2006;53:2598–2601. doi: 10.1109/TBME.2006.883793.

- Dorman MF, Gifford RH, Spahr AJ, et al. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurootol. 2008;13:105–112. doi: 10.1159/000111782.

- Green T, Faulkner A, Rosen S. Enhancing temporal cues to voice pitch in continuous interleaved sampling cochlear implants. J Acoust Soc Am. 2004;116:2298–2310. doi: 10.1121/1.1785611.

- Hillenbrand J, Getty LA, Clark MJ, et al. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97:3099–3111. doi: 10.1121/1.411872.

- IEEE. IEEE Recommended Practice for Speech Quality Measurements. IEEE Transactions on Audio and Electroacoustics, AU-17. 1969:225–246.

- Kong YY, Carlyon RP. Improved speech recognition in noise in simulated binaurally combined acoustic and electric stimulation. J Acoust Soc Am. 2007;121:3717–3727. doi: 10.1121/1.2717408.

- Nilsson M, Soli SD, Sullivan JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J Acoust Soc Am. 2006;119:2417–2426. doi: 10.1121/1.2178719.

- Riss D, Arnoldner C, Baumgartner WD, et al. A new fine structure speech coding strategy: speech perception at a reduced number of channels. Otol Neurotol. 2008;29:784–788. doi: 10.1097/MAO.0b013e31817fe00f.

- Vermeire K, Van de Heyning P. Binaural hearing after cochlear implantation in subjects with unilateral sensorineural deafness and tinnitus. Audiol Neurootol. 2008;14:163–171. doi: 10.1159/000171478.