Abstract

Several lines of research indicate that emotional and motivational information may be useful in guiding the allocation of attentional resources. Two areas of the frontal lobe that are particularly implicated in the encoding of motivational information are the orbital prefrontal cortex (PFo) and the dorsomedial region of prefrontal cortex, specifically the anterior cingulate sulcus (PFcs). However, it remains unclear whether these areas use this information to influence spatial attention. We used single-unit neurophysiology to examine whether, at the level of individual neurons, there was evidence for integration between reward information and spatial attention. We trained two subjects to perform a task that required them to attend to a spatial location across a delay under different expectancies of reward for correct performance. We balanced the order of presentation of spatial and reward information so we could assess the neuronal encoding of the two pieces of information independently and conjointly. We found little evidence for encoding of the spatial location in either PFo or PFcs. In contrast, both areas encoded the expected reward. Furthermore, PFo consistently encoded reward more quickly than PFcs, although reward encoding was subsequently more prevalent and stronger in PFcs. These results suggest a differential contribution of PFo and PFcs to reward encoding, with PFo potentially more important for initially determining the value of rewards predicted by sensory stimuli. They also suggest that neither PFo nor PFcs play a direct role in the control of spatial attention.

INTRODUCTION

Emotional and motivational stimuli preferentially attract attentional resources. For example, when subjects view two tachistoscopically presented faces of differing emotional valence, attention is preferentially directed toward threatening faces and away from happy faces (Bradley et al. 1997). Attentional resources are modulated by monetary rewards (Della Libera and Chelazzi 2006; Engelmann and Pessoa 2007) and are biased toward pictures of food when one is hungry (Mogg et al. 1998) and toward smoking-related pictures in smokers (Mogg et al. 2003). It is important to identify the neuronal underpinnings of these processes because they are potentially of clinical relevance. Attentional bias toward threatening stimuli, for example, may serve to maintain anxiety states in clinically anxious patients because they would be more likely to detect potential sources of danger in their environment (Mathews 1988). In support of this, clinically anxious individuals are faster to detect threatening words than controls (MacLeod et al. 1986; Mogg et al. 1992).

However, it remains unclear precisely how emotional and motivational information regulate attentional processes to guide behavior. One possibility is that increasing the incentive value of a behavior increases the allocation of attentional control processes, an effect that may underlie the tendency for behavioral performance to improve as incentive value increases (Roesch and Olson 2007). Recent findings in humans using functional magnetic resonance imaging (fMRI) suggest that orbital prefrontal cortex (PFo) may have an important role to play. When subjects saw two stimuli, one of which predicted an aversive event, their attention was biased toward the location of the aversive stimulus and there was activation in PFo (Armony and Dolan 2002). Critically, if both locations contained the aversive stimulus there was no activation in PFo. Thus activity did not simply relate to the expectancy of the aversive event but rather reflected the integration of the emotional stimulus with spatial attention. Similarly, activity in PFo correlates with the speed of attentional shifts toward food targets when a subject either is in a hungry or sated motivational state (Mohanty et al. 2008). However, neurophysiological studies, while clearly implicating PFo in the processing of reward information (Padoa-Schioppa and Assad 2006; Wallis 2007; Wallis and Miller 2003), have found a notable lack of spatial signals in this area (Padoa-Schioppa and Assad 2006; Tremblay and Schultz 1999; Wallis and Miller 2003). Thus it remains unclear whether PFo could serve the role of integrating attentional and reward-related processes.

The anterior cingulate sulcus (PFcs) may be a more likely region to serve this role. It receives convergent input from limbic and prefrontal areas and sends direct projections to the cingulate motor areas, which in turn project to the motor cortex and spinal cord (Bates and Goldman-Rakic 1993; Dum and Strick 1993; Morecraft and Van Hoesen 1998; Ongur and Price 2000; Van Hoesen et al. 1993). Neurons in PFcs encode reward information (Amiez et al. 2006; Kennerley et al. 2009; Matsumoto et al. 2007; Sallet et al. 2007; Seo and Lee 2007) and use this information to guide spatially distinct behavioral responses (Matsumoto et al. 2003; Williams et al. 2004). In addition, there is some evidence to implicate PFcs in working memory, a process that, at least at the neuronal level, appears to have much in common with attentional control (Everling et al. 2002; LaBar et al. 1999; Rainer et al. 1998). Single neurons in PFcs modulate their activity during delays in working memory tasks, and this delay activity can reflect the position of spatial cues or upcoming spatial responses (Amiez et al. 2006; Niki and Watanabe 1976; Procyk and Joseph 2001; Procyk et al. 2000). Furthermore, neuroimaging studies have repeatedly shown activations in and around PFcs in tasks requiring working memory and spatial attention (Mesulam et al. 2001; Nobre et al. 2000; Paus et al. 1998; Petit et al. 1998). Finally, damage to the dorsomedial frontal cortex including PFcs can produce impairments in tasks requiring spatial working memory (Meunier et al. 1997; Pribram et al. 1962; Rushworth et al. 2003). This has led to the suggestion that PFcs is one component of a network (along with parietal and frontal cortex) that is responsible for directing spatial attention, in particular, integrating motivational and attentional information to ensure that attention is directed toward motivationally relevant stimuli (Mesulam 1999). In support of this idea, studies using fMRI that manipulate both attentional focus and emotional valence typically find that the dorsomedial frontal cortex including PFcs is the only brain area sensitive to both manipulations (De Martino et al. 2008; Fichtenholtz et al. 2004).

In this study, we used single-unit neurophysiology to examine whether there was a functional dissociation in the neuronal properties of PFcs and PFo in the integration of reward-related information with spatial working memory. We trained two subjects to perform a task that required them to hold a spatial mnemonic stimulus in working memory under different expectancies of reward for correct performance. We predicted that neurons in both areas would encode the expected reward but that the integration of reward with spatial working memory would preferentially occur in PFcs. Specifically, we predicted that this integration would take the form of an increase in a neuron's spatial selectivity as the size of the expected reward increased, consistent with the idea of more focused spatial attention occurring in the presence of motivationally relevant stimuli.

METHODS

Subjects and neurophysiological procedures

Two male rhesus monkeys (Macaca mulatta) served as subjects. They were 5–6 yr of age and weighed 8–11 kg at the time of recording. We regulated their fluid intake to maintain motivation on the task. We recorded single neurons simultaneously from PFcs and PFo as well as lateral prefrontal cortex. We report the data from the lateral prefrontal cortex elsewhere (Kennerley and Wallis 2009b). The current paper focuses on the results from PFcs and PFo. We recorded PFo neurons from the cortex between the lateral and medial orbital sulci in both hemispheres of both subjects. We recorded PFcs neurons from the dorsal bank of the anterior cingulate sulcus from the left hemisphere in subject A and the right hemisphere in subject B. Although this region is in the cingulate sulcus, anatomists consider it part of prefrontal cortex (area 9) rather than anterior cingulate cortex. The anterior cingulate cortex begins in the ventral bank of the cingulate sulcus and includes areas 24, 25 and 32 of the cingulate gyrus (Petrides and Pandya 1994; Vogt et al. 2005).

For each recording session, we recorded neuronal activity from tungsten microelectrodes (FHC Instruments, Bowdoin, ME) using custom-built, manual microdrives. The microdrives were inserted into a custom-built grid that allowed access to neuronal tissue through grid holes in a 15 × 15-mm orientation with 1-mm spacing. We used a 1.5 T MRI scanner to ensure accurate positioning of our electrodes and to determine the approximate distance to lower the electrodes (Fig. 1 A). Each microdrive lowered two electrodes, and each day we lowered multiple pairs of electrodes into the areas of interest, typically lowering 10–24 electrodes per day. We typically recorded from a single grid position from 1 to 3 days, and each day the electrodes were at a different depth. Each day we lowered the electrodes until we found a well-isolated neuronal signal. This was typically 200-μm deeper than the previous day's recording location, although on occasion it could take ≤2 mm of advancement to find a suitable signal. Neuronal waveforms were digitized and analyzed off-line (Plexon Instruments, Dallas, TX). We randomly sampled neurons; we did not attempt to select neurons based on responsiveness. This procedure aimed to reduce bias in our estimate of neuronal activity thereby allowing a fair comparison of neuronal properties between the different brain regions. We reconstructed our recording locations by measuring the position of the recording chambers using stereotactic methods. We plotted the positions onto the MRI sections using commercial graphics software (Adobe Illustrator, San Jose, CA). We confirmed the correspondence between the MRI sections and our recording chambers by mapping the position of sulci and gray and white matter boundaries using neurophysiological recordings. We traced and measured the distance of each recording location along the cortical surface from the lip of the ventral bank of the principal sulcus. 
We also measured the positions of the other sulci relative to the principal sulcus in this way, allowing the construction of the unfolded cortical maps shown in Fig. 1B. All procedures were in accord with the National Institutes of Health guidelines and the recommendations of the University of California Berkeley Animal Care and Use Committee.

Fig. 1.

A: magnetic resonance imaging (MRI) scans illustrating the coronal slice from the middle of our recording locations illustrating potential electrode paths. B: flattened cortical reconstructions illustrating the recording locations. Top: anterior cingulate sulcus (PFcs); bottom: orbital prefrontal cortex (PFo). We measured the anterior-posterior (AP) position from the interaural line and the dorsoventral position from the ventral lip of the principal sulcus. The diameter of the circle indicates the number of recordings made at that particular location. CSd, dorsal bank of the cingulate sulcus; CSv, ventral bank of the cingulate sulcus; LOS, lateral orbital sulcus; MOS, medial orbital sulcus. In subject A, the genu of the arcuate sulcus was at AP 21 mm, the genu of the corpus callosum was at AP 29 mm, and the tip of the frontal pole was at AP 48 mm. In subject B, the genu of the arcuate sulcus was at AP 20 mm, the genu of the corpus callosum was at AP 30 mm, and the tip of the frontal pole was at AP 48 mm. C: in the RS task, the subject sees 2 cues separated by a delay. The 1st cue indicates the amount of juice to expect for successful performance of the task, and the 2nd cue indicates the location the subject must maintain in spatial working memory. The subject indicates his response by making a saccade to the location of the mnemonic cue 1 s later. The fixation cue changes to yellow to tell the subject to initiate his saccade. The SR task is identical except the cues appear in the opposite order. There are 5 different reward amounts, each predicted by 1 of 2 cues, and 24 spatial locations.

Behavioral task

We used NIMH Cortex (http://www.cortex.salk.edu) to control the presentation of the stimuli and the task contingencies. We monitored eye position and pupil dilation using an infrared system with a sampling rate of 125 Hz (ISCAN, Burlington, MA). We presented two cues sequentially separated by a delay, one of which was a spatial location that the subject had to hold in working memory (the mnemonic stimulus), and one of which indicated to the subject how much reward they would receive for performing the task correctly (the reward-predictive cue; Fig. 1C). We tested 24 spatial locations that formed a 5 × 5 matrix centered at fixation with each location separated by 4.5°. The subject had to maintain fixation within ±2° of the fixation cue throughout the trial until the fixation cue changed color. Failure to maintain fixation resulted in a 5-s timeout, and we recorded the trial as a break of fixation. Once the fixation cue changed color, the subject was free to saccade to the target. If the subject failed to acquire the target within 400 ms, or failed to maintain fixation of the target (within 3° of the target location) for 150 ms following target acquisition, we recorded an inaccurate response and terminated the trial without delivery of reward. There were five different sizes of reward. We instructed each reward amount with one of two pictures (i.e., 10 pictures in total). This allowed us to distinguish neuronal responses to the visual properties of the picture from neuronal responses that encoded the reward predicted by the picture. In the RS task, the first cue was the reward-predictive cue and the second cue the spatial mnemonic stimulus, whereas the cues occur in the opposite order for the SR task. We fully counterbalanced all experimental factors, and the different trial types were randomly intermingled. Subjects completed ∼600 correct trials per day.

Statistical methods

We conducted all statistical analyses using MATLAB (Mathworks, Natick, MA). To analyze the behavioral performance, we calculated the percentage of trials that the subject performed correctly in each recording session for each reward amount and each task. In addition, for each correctly performed trial, we calculated the subject's reaction time defined as the time from the fixation spot changing color until the time at which the subject's eye position moved outside of the fixation window.

Our first step in analyzing the neuronal data was to visualize it by constructing spike density histograms. We calculated the mean firing rate of the neuron across the appropriate experimental conditions using a sliding window of 100 ms. We then analyzed neuronal activity in six predefined epochs of 500 ms each, corresponding to the presentation of the two cues and the first and second half of each of the delays. We chose the epochs to ensure each was of equivalent size. For each neuron, we calculated its mean firing rate on each trial during each epoch. We used this information to visualize the mnemonic field of the neuron during each epoch. For each spatial location, we calculated the neuron's standardized firing rate by subtracting the mean firing rate of the neuron across all spatial locations and dividing by the SD of the firing rate across all spatial locations. We then performed five iterations of a two-dimensional linear interpolation and plotted the resulting matrix on a pseudocolor plot.
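The standardization step for the mnemonic field can be illustrated with a minimal Python sketch. It is not the original MATLAB code: the function name, the dictionary layout, and the toy firing rates are hypothetical, and the use of the sample SD is an assumption because the text does not say which SD was used. The interpolation and plotting steps are omitted.

```python
from statistics import mean, stdev

def standardize_by_location(rate_by_location):
    """Z-score each location's mean firing rate against the mean and SD
    computed across all locations (sample SD is an assumption)."""
    values = list(rate_by_location.values())
    mu = mean(values)
    sd = stdev(values)
    return {loc: (rate - mu) / sd for loc, rate in rate_by_location.items()}

# Hypothetical mean rates (spikes/s) at four of the grid locations,
# keyed by (x, y) grid coordinates.
rates = {(-2, 2): 4.0, (2, 2): 10.0, (-2, -2): 5.0, (2, -2): 5.0}
z = standardize_by_location(rates)
preferred = max(z, key=z.get)  # location with the highest standardized rate
```

With these toy values the top-right location, (2, 2), has the highest standardized rate, which is the kind of pattern the pseudocolor plots are meant to expose.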

To determine whether a neuron encoded an experimental factor, we used linear regression to quantify how well the experimental manipulation predicted the neuron's firing rate. Before conducting the regression, we standardized our dependent variable (i.e., firing rate) by subtracting the mean of the dependent variable from each data point and dividing each data point by the SD of the distribution. We also centered the independent variables by subtracting a single value from all of the data points to shift the scale of the predictor variables. Thus all our predictor variables (x position, y position and reward magnitude) ranged from −2 to +2. This reduced multicollinearity for interaction terms. We evaluated the significance of selectivity at the single neuron level using an alpha level of P < 0.01.
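The effect of centering on multicollinearity can be checked with a small worked example. The sketch below is illustrative only and assumes a hypothetical, fully crossed design of five grid columns by five reward levels; it compares the correlation between the reward predictor and a reward-by-position product term before and after centering. The helper names are not from the original analysis.

```python
from itertools import product
from math import sqrt

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / sqrt(va * vb)

def corr_with_interaction(design):
    """Correlation between the reward predictor and the x-by-reward product."""
    reward = [r for _, r in design]
    interaction = [x * r for x, r in design]
    return pearson(reward, interaction)

# Hypothetical balanced design: x position and reward level both coded 1..5.
raw = list(product(range(1, 6), repeat=2))
# Centering: subtract a single value so each predictor spans -2..+2.
centered = [(x - 3, r - 3) for x, r in raw]

r_raw = corr_with_interaction(raw)            # about 0.67
r_centered = corr_with_interaction(centered)  # 0 for this balanced design
```

In this balanced toy design the correlation drops from about 0.67 to zero once the predictors are centered. In real data with unequal trial counts the reduction would be smaller but in the same direction.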

We examined how neurons encoded reward information by performing a linear regression on the neuron's mean firing rate (F) during a given epoch using the size of the reward predicted by the cue as the predictive variable (Vr). We classified a neuron as reward selective if the equation F = b0 + brVr significantly predicted the neuron's firing rate. To determine this, we used the least-squares method to calculate an F statistic: we divided the sum of squared errors produced by the equation by the sum of squared errors for a least-squares equation with no independent variables (F = b0). We evaluated the significance of the F statistic on V and N – V – 1 degrees of freedom, where N is the total number of observations and V is the total number of predictor variables (V = 1 for reward analysis). To examine the time course of reward selectivity, we performed a “sliding” regression analysis to calculate at each time point whether the expected reward size significantly predicted the neuron's firing rate. We fit the regression equation to neuronal firing for overlapping 200-ms windows, beginning with the first 200 ms of the fixation period and then shifting the window in 10-ms steps until we had analyzed the entire trial. If the equation significantly predicted the neuron's firing rate for three consecutive time bins (evaluated at P < 0.005), then we took the first of these time bins as the neuron's latency. We determined this criterion by examining how many neurons reached the criterion during a baseline period consisting of a 500-ms epoch centered in the middle of the fixation period preceding the onset of the first cue. At this stage of the task, the subject has no information about the upcoming trial, and so any neurons that reached criterion must have done so by chance. Consequently we can use this information to determine the false alarm rate of our criterion. 
We calculated the proportion of neurons during the baseline period where the significance of the regression equation exceeded P < 0.005 for three consecutive time bins. We repeated this using significance levels of P < 0.01 and P < 0.05. For the encoding of reward information, 1.5% of neurons reached the criterion using P < 0.005. If we evaluated our criterion at P < 0.01, our false alarm rate increased to 4.4%, whereas a criterion of P < 0.05 yielded a false alarm rate of 20%. Thus we used P < 0.005 as our criterion because this yielded a false alarm rate that was clearly <5%.
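The latency criterion and the false alarm estimate described above can be sketched as follows. This is a minimal illustration, not the original MATLAB code: it assumes the per-window regression P values have already been computed, the function names are hypothetical, and the toy P values are invented. How a bin index maps onto trial time (window start versus window center) is not stated in the text, so the example reports the offset of the window start from the first window.

```python
def first_sustained_bin(p_values, alpha=0.005, n_consecutive=3):
    """Index of the first of `n_consecutive` successive bins with p < alpha,
    or None if the criterion is never met."""
    run = 0
    for i, p in enumerate(p_values):
        run = run + 1 if p < alpha else 0
        if run == n_consecutive:
            return i - n_consecutive + 1
    return None

def false_alarm_rate(baseline_p_by_neuron, alpha):
    """Proportion of neurons that reach the criterion during the baseline."""
    hits = sum(first_sustained_bin(p, alpha) is not None
               for p in baseline_p_by_neuron)
    return hits / len(baseline_p_by_neuron)

STEP_MS = 10  # window step from the text

# Hypothetical P values from successive sliding windows for one neuron.
p_trial = [0.2, 0.001, 0.3, 0.004, 0.001, 0.002, 0.5]
idx = first_sustained_bin(p_trial)
latency_ms = None if idx is None else idx * STEP_MS

# Hypothetical baseline P values for four neurons.
baseline = [
    [0.5, 0.04, 0.03, 0.02, 0.6],
    [0.9, 0.8, 0.7, 0.6, 0.5],
    [0.003, 0.001, 0.002, 0.4, 0.3],
    [0.2, 0.009, 0.008, 0.007, 0.1],
]
rates = {a: false_alarm_rate(baseline, a) for a in (0.005, 0.01, 0.05)}
```

The single significant bin at index 1 does not count because it is not part of a run of three, so the latency is taken from index 3. As in the reported analysis, relaxing the threshold increases the proportion of baseline false alarms.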

To examine how neurons encoded spatial information, we performed a linear regression using the neuron's mean firing rate (F) as the dependent variable and the x and y coordinates of the spatial cue as two predictive variables (Vx and Vy, respectively). We classified a neuron as spatially selective if the equation F = b0 + bxVx + byVy significantly predicted the neuron's firing rate. We evaluated the significance of the F statistic on V and N – V – 1 degrees of freedom, where N is the total number of observations and V is the total number of predictor variables (V = 2 for spatial analysis).
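For readers who want to see the regression test in concrete form, the sketch below fits F = b0 + bxVx + byVy by ordinary least squares and computes the textbook overall F statistic on V and N – V – 1 degrees of freedom. It is an illustration under stated assumptions, not the authors' MATLAB routine: the function names and toy data are hypothetical, the F statistic uses the standard (explained mean square)/(residual mean square) form, and the P value lookup is omitted because the Python standard library has no F distribution (in SciPy, `scipy.stats.f.sf(F, df1, df2)` would supply it).

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def regression_f(predictors, rates):
    """Least-squares fit with an intercept.
    Returns (coefficients [b0, b1, ...], F statistic, (df1, df2))."""
    n, v = len(rates), len(predictors[0])
    X = [[1.0, *p] for p in predictors]
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(v + 1)]
           for i in range(v + 1)]
    Xty = [sum(row[i] * y for row, y in zip(X, rates)) for i in range(v + 1)]
    b = solve(XtX, Xty)
    fitted = [sum(bi * xi for bi, xi in zip(b, row)) for row in X]
    y_bar = sum(rates) / n
    ss_res = sum((y - f) ** 2 for y, f in zip(rates, fitted))
    ss_tot = sum((y - y_bar) ** 2 for y in rates)
    df1, df2 = v, n - v - 1
    F = ((ss_tot - ss_res) / df1) / (ss_res / df2)
    return b, F, (df1, df2)

# Hypothetical data: nine trials on a coarse grid, with firing rate driven
# by x and y plus a small term the linear model cannot capture.
grid = [(x, y) for x in (-2, 0, 2) for y in (-2, 0, 2)]
rates = [3.0 + 1.0 * x + 0.5 * y + 0.1 * x * y for x, y in grid]
coeffs, F_stat, dof = regression_f(grid, rates)
```

Setting V = 1 and passing one-element tuples of reward size gives the corresponding test for the reward regression.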

RESULTS

We recorded the activity of 143 neurons from PFo (subject A: 75, subject B: 68) and 194 neurons from PFcs (A: 132, B: 62). We collected the data across 33 recording sessions for subject A and 12 recording sessions for subject B. We recorded neuronal activity from lateral prefrontal cortex during these same sessions, and so the subjects' behavior is the same as previously reported (Kennerley and Wallis 2009b). The previous report contains a more detailed analysis of the behavioral data. We summarize the main findings in the following text.

The subjects performed 90% of the RS trials correctly, and both subjects performed more trials correctly as the reward size increased, consistent with the notion that larger expected rewards increased spatial attention. We calculated whether there was a significant effect of the expected reward on behavioral performance by performing a linear regression using the equation B = b0 + brVr where B is the percentage of trials for a given reward amount that the subject performed correctly during each session and Vr is the size of the expected reward. For both subjects, there was a significant positive relationship between the expected reward and behavioral performance [subject A: F(1,143) = 44, P < 1 × 10⁻⁹, subject B: F(1,48) = 9.9, P < 0.005]. Subject A performed 6% more trials correctly for the largest expected reward (92% correct) compared with the smallest expected reward, whereas subject B performed 5% more trials correctly for the largest expected reward (94% correct) compared with the smallest expected reward. In addition, for subject A, there was a significant negative relationship between the expected reward size and reaction time [F(1,7638) = 40, P < 5 × 10⁻¹⁰] although the effect size was small (the subject was 7 ms quicker when he expected the largest reward compared with the smallest reward). Performing more quickly in addition to more accurately is another hallmark of increased attention. However, in subject B, the relationship between expected reward size and reaction time was not significant [F(1,2443) = 2.1, P > 0.1].

In the SR task, there was no relationship between the percentage of trials performed correctly and the expected reward (P > 0.1 for both subjects). The subjects found this task more difficult than the RS task, performing 82% of the trials correctly. In addition, subject A showed significantly longer reaction times as the size of the reward increased [F(1,6723) = 202, P < 1 × 10⁻¹⁵], taking 18 ms longer to respond when he expected the largest reward compared with the smallest reward. There was no relationship between reaction time and reward size in subject B [F(1,2212) < 1, P > 0.1]. In summary, SR task accuracy was generally worse than in the RS task, and for subject A, responses were slower with increased reward. Our previous interpretation of this effect (Kennerley and Wallis 2009b) was that in the SR task the centrally presented reward cue competes for attentional resources, interfering with ongoing working memory or spatial attention processes that are attempting to maintain the location of the peripherally presented mnemonic cue.

Neuronal encoding of reward and space

We examined the ability of neurons to encode spatial or reward information by initially focusing on the first cue and delay epochs of the SR and RS tasks, respectively. The predominant neuronal selectivity in both PFcs and PFo was the encoding of the expected reward. Figure 2 A illustrates a PFcs neuron that encodes the size of the expected reward in the RS task during the first cue epoch, showing an increase in firing rate as the size of the expected reward increases. However, it encodes no information about the location of the spatial mnemonic cue in the SR task. Figure 2B illustrates another PFcs neuron that encodes the expected reward during the first cue epoch, but this neuron shows an increase in firing rate as the size of the expected reward decreases. Figure 2C illustrates a PFcs neuron that encodes the size of the expected reward across the first delay, showing an increase in firing as the reward size increases. Finally, Fig. 2D illustrates a PFo neuron that encodes reward information showing an increase in firing rate as the size of the expected reward increases. It is one of the few neurons to encode spatial information as well as reward information, showing an increase in firing rate when the mnemonic cue appeared in the top right of the screen in the SR task.

Fig. 2.

A: spike density histogram from a PFcs neuron that encodes reward information during the cue epoch of the RS task, showing an increase in firing rate as the size of the predicted reward increases but does not encode spatial information during the SR task. The spike density histogram for the RS task shows the neuron's activity sorted by reward size, whereas the spike density histogram for the SR task shows the same neuron's activity sorted by the location of the mnemonic cue. We have collapsed the spatial data into 4 groups to enable clear visualization of spatial selectivity. Each group consists of 6 of the 24 locations as indicated by the spatial key. The gray bars indicate the presentation of the 1st and 2nd cue. Inset: the mean standardized firing rate of the neuron across the 24 spatial locations from the epoch that elicited the maximum spatial selectivity (cue 1, delay 1a, or delay 1b). B: a PFcs neuron that also encodes the size of the expected reward during the cue epoch of the RS task, but in this case, the neuron shows an increased firing rate as the size of the expected reward decreases. C: a PFcs neuron that encodes the size of the expected reward during the delay epoch rather than the cue epoch. This neuron shows an increase in firing rate as the size of the expected reward increases. D: a PFo neuron that encodes reward during the 1st cue and delay epochs of the RS task, and spatial information during the 1st cue epoch of the SR task. The neuron shows an increase in firing rate as the reward increases and prefers spatial locations in the top right of the screen.

We determined the proportion of reward-selective neurons in the first three epochs of the RS task: the first cue epoch (cue 1) and the two delay epochs corresponding to each half of the first delay (delay 1a and delay 1b). In every epoch, there were significantly more reward-selective neurons in PFcs than PFo (Table 1). In total, 101/194 (52%) of PFcs neurons exhibited reward selectivity in at least one of the three epochs compared with 43/143 (30%) of PFo neurons (χ2 = 15, P < 0.0001). In both areas, an approximately equal number of neurons had a positive relationship between firing rate and reward value as had a negative relationship (PFcs: 54 vs. 46%, PFo: 47 vs. 53%). Neither proportion differed from the 50/50 split that one would expect by chance (binomial test, P > 0.1 in both areas). In contrast to the prevalent encoding of reward in both areas, few neurons encoded spatial information (Table 1).
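The comparison of proportions between areas can be reproduced approximately from the counts given above. The sketch below computes a 2 × 2 χ2 statistic for 101/194 versus 43/143 selective neurons. The function name is hypothetical, and because the text does not say whether a continuity correction was applied, both variants are shown as an assumption-labeled illustration rather than a reconstruction of the original test.

```python
from math import erfc, sqrt

def chi2_2x2(sel_a, n_a, sel_b, n_b, yates=False):
    """Chi-square test (1 df) comparing two proportions.
    Returns (statistic, upper-tail P value)."""
    a, b = sel_a, n_a - sel_a   # area A: selective, non-selective
    c, d = sel_b, n_b - sel_b   # area B: selective, non-selective
    n = n_a + n_b
    diff = abs(a * d - b * c)
    if yates:
        # Optional continuity correction (an assumption, not from the text).
        diff = max(0.0, diff - n / 2)
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = erfc(sqrt(chi2 / 2))    # exact upper tail for chi-square with 1 df
    return chi2, p

chi2_plain, p_plain = chi2_2x2(101, 194, 43, 143)
chi2_yates, p_yates = chi2_2x2(101, 194, 43, 143, yates=True)
```

Both variants give a statistic between 15 and 17 with P < 0.0001, in line with the reported value.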

Table 1.

Percentage of neurons that encode different experimental parameters during the first cue and delay epochs

| | Cue 1: PFo | Cue 1: PFcs | Delay 1a: PFo | Delay 1a: PFcs | Delay 1b: PFo | Delay 1b: PFcs |
|---|---|---|---|---|---|---|
| Reward | 12 | 27 | 19 | 32 | 8 | 25 |
| Space | 9 | 8 | 12 | 7 | 4 | 6 |

Numbers in bold indicate that there was a significant difference between the areas in the percentage of selective neurons (χ2 test, P < 0.05). PFo and PFcs, orbital and cingulate sulcus prefrontal cortex, respectively.

We also investigated the effect of a more complete regression model that included the picture set from which the reward-predictive cue was drawn as a second dummy predictive variable (Vp) in addition to reward size (Vr). We used the linear equation F = b0 + brVr + bpVp + bp.rVpVr. On average, just 3% of the neurons showed a significant predictive relationship between their firing rate and either the picture set or the interaction between picture set and reward size (cue 1: 4%, delay 1a: 4%, delay 1b: 2%). Thus the size of the reward indicated by the reward-predictive cue modulated the firing rate of most neurons rather than the visual properties of the cue.

We next examined the extent to which a linear relationship adequately characterized neuronal encoding of reward. For each neuron and each epoch in turn, we performed a one-way ANOVA using the neuron's mean firing rate as the dependent variable and the amount of predicted reward as the independent variable. The advantage of ANOVA for this analysis is that it does not make any assumptions regarding the relationship between firing rate and reward size. For those neurons that showed significant reward encoding, we performed a post hoc trend analysis. For a clear majority of the reward-selective neurons, a linear contrast explained the differences between the means, and the residual variance was not significantly greater than the error variance (Fig. 3 A). In other words, there was no significant variability left to explain beyond that accounted for by the linear component. If there was residual variance, we tested a quadratic contrast, which tests for neurons the firing rate of which is greatest to the middle reward sizes. This contrast only explained the differences between the means for a small minority of the reward-selective neurons. As a further test, we determined for each reward-selective neuron which reward size elicited the maximum firing rate. If most neurons showed a linear relationship between firing rate and reward size, then most neurons should show their maximum firing rate to either the smallest or largest reward size, while few neurons should show their maximum firing rate to the intermediate reward sizes. This is indeed the pattern we observed (Fig. 3B).
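The logic of the trend analysis can be illustrated with a short sketch that partitions the between-reward variability into linear and quadratic components. It assumes equal trial counts per reward size and the standard orthogonal polynomial coefficients for five equally spaced levels; neither detail is given in the text, the function names and firing rates are hypothetical, and the significance tests against the error variance are omitted.

```python
# Orthogonal polynomial contrast coefficients for five equally spaced levels.
LINEAR = (-2, -1, 0, 1, 2)
QUADRATIC = (2, -1, -2, -1, 2)

def contrast_ss(means, coeffs, n_per_group):
    """Sum of squares captured by one contrast (equal group sizes assumed)."""
    psi = sum(c * m for c, m in zip(coeffs, means))
    return n_per_group * psi ** 2 / sum(c * c for c in coeffs)

def between_ss(means, n_per_group):
    """Total between-group sum of squares."""
    grand = sum(means) / len(means)
    return n_per_group * sum((m - grand) ** 2 for m in means)

# Hypothetical mean firing rates (spikes/s) for the five reward sizes.
means = [2.0, 4.0, 6.5, 8.0, 10.0]
n = 20  # hypothetical trials per reward size

ss_between = between_ss(means, n)
ss_linear = contrast_ss(means, LINEAR, n)
ss_quadratic = contrast_ss(means, QUADRATIC, n)
ss_residual_after_linear = ss_between - ss_linear
linear_fraction = ss_linear / ss_between
```

For this toy neuron the linear contrast accounts for more than 99% of the between-reward variability, so almost nothing is left for the quadratic term to explain, which is the pattern reported for most reward-selective neurons.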

Fig. 3.

A: percentage of neurons that are reward-selective during the 1st cue epoch (C1), the 1st half of the 1st delay epoch (Da), and the 2nd half of the 1st delay epoch (Db). The shading of the bars illustrates the proportion of neurons that demonstrate either a linear or quadratic relationship between their firing rate and the size of the reward. B: for each reward size, we plotted the percentage of reward-selective neurons whose maximum firing rate was elicited by that reward size.

We next examined neuronal encoding during the second cue and delay epochs. It was similar to the encoding during the first cue and delay, with strong encoding of the expected reward and little encoding of the spatial mnemonic cue (Table 2). Reward-selective neurons were more prevalent in PFcs than in PFo in every epoch, although this reached significance only in the cue epoch of the RS task. In the RS task, there was an equal number of neurons the firing rate of which had a positive relationship with the expected reward as had a negative relationship (PFo: 50 vs. 50%, PFcs: 52 vs. 48%, binomial, P > 0.1 in both cases). However, in the SR task, neurons with a positive relationship were more common (PFo: 65 vs. 35%, binomial test, P < 0.01, PFcs: 58 vs. 42%, binomial test, P = 0.054).

Table 2.

Percentage of neurons that encode different experimental parameters during the second cue and delay epochs for the RS and SR tasks

| Task | Parameter | Cue 2: PFo | Cue 2: PFcs | Delay 2a: PFo | Delay 2a: PFcs | Delay 2b: PFo | Delay 2b: PFcs |
|---|---|---|---|---|---|---|---|
| RS | Reward | 8 | 22 | 10 | 16 | 8 | 15 |
| RS | Space | 10 | 5 | 3 | 6 | 6 | 4 |
| SR | Reward | 17 | 23 | 20 | 28 | 15 | 24 |
| SR | Space | 3 | 5 | 5 | 3 | 3 | 4 |

Numbers in bold indicate that there was a significant difference between the areas in the percentage of selective neurons (χ2 test, P < 0.05). RS, reward-predictive cue followed by spatial mnemonic stimulus; SR, stimulus followed by reward cue.

Reward modulation of spatial selectivity

In a previous report, we found that information about the expected reward modulated the spatial representation of neurons in ventrolateral prefrontal cortex (PFvl) (Kennerley and Wallis 2009b). Furthermore, in the RS task (where increasing expected reward size produced better performance in each subject) increasing reward produced stronger spatial selectivity in the PFvl neurons. In contrast, in the SR task (where reward disrupted the subject's performance), increasing reward produced weaker spatial selectivity. We interpreted these effects as consistent with a role for PFvl in controlling resources related to the allocation of spatial attention.

We examined whether similar effects were present in PFo and PFcs neurons by performing the same analysis as in our previous report (Kennerley and Wallis 2009b). Specifically, for each neuron, task and epoch in turn, we calculated the strength of spatial selectivity when the expected reward was small (i.e., when the 2 smallest rewards were expected) and contrasted this to when the expected reward was large (i.e., when the 2 largest rewards were expected). We grouped the trials in this way to ensure that there were sufficient trials for each spatial location to permit an analysis with sufficient statistical power. We used the neuron's mean firing rate ($F$) as the dependent variable and the x and y coordinates of the spatial cue as two predictor variables ($V_x$ and $V_y$, respectively), using the equation $F = b_0 + b_x V_x + b_y V_y$. We used the $r^2$ value from the regression to calculate the percentage of the variance (PEV) in the neuron's firing rate that was explained by the spatial location of the mnemonic cue ($\mathrm{PEV}_{\mathrm{space}}$) for each of the two groups of trials. We then compared this value when the subject expected a large reward to when they expected a small reward.
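As an illustration of the spatial selectivity measure described above, the following is a minimal sketch of how the percentage of explained variance could be computed from a two-predictor linear regression. It is not the analysis code used in the study: the function name `pev_space`, the trial values, and the use of ordinary least squares solved through the centered normal equations are all assumptions.

```python
from statistics import mean


def pev_space(rates, xs, ys):
    """Percentage of firing-rate variance explained by cue location.

    Fits F = b0 + bx*Vx + by*Vy by ordinary least squares and
    returns 100 * r^2 (hypothetical helper, for illustration only).
    """
    mf, mx, my = mean(rates), mean(xs), mean(ys)
    # Centered sums of squares and cross-products
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxf = sum((x - mx) * (f - mf) for x, f in zip(xs, rates))
    syf = sum((y - my) * (f - mf) for y, f in zip(ys, rates))
    sff = sum((f - mf) ** 2 for f in rates)
    det = sxx * syy - sxy ** 2
    if det == 0 or sff == 0:
        raise ValueError("degenerate cue layout or constant firing rate")
    # Solve the 2 x 2 normal equations for the two slopes
    bx = (sxf * syy - syf * sxy) / det
    by = (syf * sxx - sxf * sxy) / det
    # Explained sum of squares divided by total sum of squares
    r2 = (bx * sxf + by * syf) / sff
    return 100.0 * r2


# Hypothetical trials: cue coordinates and firing rates (spikes/s)
xs = [-1, 0, 1, -1, 0, 1]
ys = [-1, -1, -1, 1, 1, 1]
small_reward_rates = [6.0, 5.0, 7.0, 5.5, 6.5, 6.0]
large_reward_rates = [3.0, 5.0, 7.0, 3.5, 5.5, 7.5]
difference = pev_space(large_reward_rates, xs, ys) - pev_space(small_reward_rates, xs, ys)
```

In this sketch a positive `difference` would correspond to stronger spatial selectivity when the larger rewards are expected.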

In general, few neurons (∼5%) showed spatial selectivity during the second cue and delay epochs of either the SR or the RS task. It was difficult to discern any consistent pattern in the effect of reward size on spatial selectivity (Fig. 4). Only one of our contrasts was significant: larger rewards produced stronger spatial selectivity in PFcs during the first half of the delay of the SR task [t(3) = 7.8, P < 0.005]. However, we are reluctant to draw any strong conclusions from this result, as it relied on the activity of only four neurons (2% of our sample).

Fig. 4.

Mean spatial selectivity in PFo and PFcs for the RS task (top) and SR task (bottom) when the subject expects 1 of the 2 smallest or 2 largest rewards. Asterisks indicate that the difference between the 2 reward conditions is significant, evaluated using a t-test at P < 0.05. The number underneath the bars indicates the number of neurons included in the analysis.

Comparison of the time course for encoding of reward information

To investigate the latency at which neurons encoded reward information, we focused on neuronal activity during the presentation of the reward-predictive cue (cue 1 for the RS task and cue 2 for the SR task). First, we examined how a neuron's reward selectivity compared during these epochs across the two tasks. For each neuron, each epoch and each task in turn, we calculated the beta coefficient associated with the reward-predictive cue ($b_r$) using the equation $F = b_0 + b_r V_r$, where $F$ is the neuron's mean firing rate during a given epoch and $V_r$ is the size of the reward predicted by the cue. We then compared these beta coefficients across the corresponding epochs of the two tasks (Fig. 5). There was a very strong correlation, indicating that an individual neuron's reward selectivity was very similar across the two tasks.
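The cross-task comparison can be sketched as two steps: estimate a reward slope per neuron and task, then correlate the slopes across neurons. The sketch below assumes ordinary least squares for the slope and a Pearson correlation for the comparison; the text does not specify the correlation statistic, and the function names and data are hypothetical.

```python
from statistics import mean


def reward_beta(rates, rewards):
    """Slope b_r of the regression F = b0 + b_r * V_r (ordinary least squares)."""
    mf, mv = mean(rates), mean(rewards)
    sxy = sum((v - mv) * (f - mf) for v, f in zip(rewards, rates))
    sxx = sum((v - mv) ** 2 for v in rewards)
    return sxy / sxx


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


# Hypothetical data: three neurons, four reward sizes, mean rates in spikes/s
rewards = [1, 2, 3, 4]
rs_rates = [[2.0, 4.0, 6.0, 8.0], [9.0, 7.5, 6.0, 4.0], [5.0, 5.2, 5.1, 5.4]]
sr_rates = [[2.5, 4.0, 6.5, 7.5], [8.0, 7.0, 5.0, 4.5], [5.1, 5.0, 5.3, 5.2]]

rs_betas = [reward_beta(r, rewards) for r in rs_rates]
sr_betas = [reward_beta(r, rewards) for r in sr_rates]
cross_task_r = pearson_r(rs_betas, sr_betas)
```

A value of `cross_task_r` close to 1 would indicate that neurons keep both the sign and the size of their reward tuning across the two tasks.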

Fig. 5.

Scatter plots illustrating the relationship between reward selectivity during the SR task (as measured by the beta coefficient from the linear regression) and reward selectivity during the RS task for each area and each epoch.

Because of this correlation, we collapsed across the two tasks to investigate the time course of reward selectivity. We used the sliding regression analysis to calculate the absolute value of the beta coefficient associated with the reward-predictive cue ($b_r$) at each time point (Fig. 6A). We then used this analysis to calculate the time at which reward selectivity occurred in each neuron. This method of determining neuronal selectivity yielded a higher estimate of the prevalence of reward-selective neurons than our analysis based on predefined task epochs (cf. Fig. 6A and Table 2). This higher incidence resulted from the analysis having greater statistical power (because it collapsed across both tasks) and being more sensitive to phasic neuronal responses. We calculated the number of neurons in each area that reached our statistical criterion for encoding reward at any point during the cue epoch (see methods). Our conclusion remained the same as in the analysis based on predefined epochs: reward selectivity was significantly more prevalent in PFcs (113/194 or 58%) than in PFo (57/143 or 40%, χ² = 10, P < 0.005).
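The prevalence comparison can be checked from the counts given above with a standard 2 × 2 χ² test. The sketch below is an illustration rather than the authors' code: the helper `chi2_2x2` is hypothetical, and because the text does not state whether a continuity correction was applied, both variants are computed.

```python
def chi2_2x2(a, b, c, d, yates=False):
    """Chi-square statistic (1 df) for the 2 x 2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Yates continuity correction (its use in the paper is an assumption)
        diff = max(0.0, diff - n / 2)
    return n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Counts reported in the text: selective neurons out of all recorded neurons
pfcs_selective, pfcs_total = 113, 194
pfo_selective, pfo_total = 57, 143

table = (
    pfcs_selective, pfcs_total - pfcs_selective,
    pfo_selective, pfo_total - pfo_selective,
)
chi2_uncorrected = chi2_2x2(*table)
chi2_corrected = chi2_2x2(*table, yates=True)
print(round(chi2_uncorrected, 1), round(chi2_corrected, 1))
```

Both statistics exceed 7.88, the critical value for P = 0.005 with one degree of freedom, and the corrected value rounds to the reported χ² of 10.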

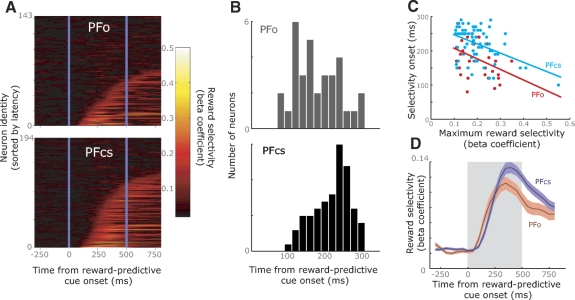

Fig. 6.

A: the time course of selectivity for encoding the predicted reward. For each brain area, the entire neuronal population from which we recorded is shown. On each plot, each horizontal line indicates the data from a single neuron, and the color code illustrates how the beta coefficient from the linear regression changes across the course of the trial. We have sorted the neurons on the y axis according to the latency at which their selectivity exceeds criterion during the cue epoch. The vertical blue lines indicate the extent of this epoch. The dark area at the top of each plot consists of neurons that did not reach the criterion during the cue epoch. B: the histograms illustrating the distribution of neuronal latencies at which selectivity appeared in each brain area. Selectivity appeared significantly earlier in PFo (median: 170 ms) relative to PFcs (median: 230 ms). C: scatter plot and corresponding least-squares line illustrating the relationship between the onset of reward selectivity and the maximum reward selectivity during the cue epoch for PFo and PFcs neurons. D: time course of reward selectivity in PFo and PFcs. The bold line indicates the mean of the population, while the colored shading indicates the standard error of the mean. The gray shading illustrates the extent of the cue epoch.

To determine whether the onset of reward selectivity occurred earlier in one area relative to the other, for each neuron we calculated the onset of selectivity focusing on the first 300 ms of the cue epoch (see methods). The onset of reward selectivity occurred significantly earlier in PFo neurons than PFcs neurons (Fig. 6B, median PFo = 170 ms, median PFcs = 230 ms, Wilcoxon's rank-sum test, P < 0.0005). A possible explanation for this effect may reside in the strength of the reward signal. A stronger signal is by definition easier (and therefore quicker) to detect. To examine this, we performed a one-way ANCOVA using the onset of selectivity as the dependent variable, area (PFo or PFcs) as the independent variable, and the neuron's maximum selectivity during the 500-ms cue epoch as the covariate (Fig. 6C). There was a significant effect of the covariate [F(1,95) = 20.6, P < 0.00005], indicating that neurons with earlier response onsets tended to be those with the largest maximum selectivity. However, there was also a significant effect of area [F(1,95) = 19.7, P < 0.00005], indicating that PFo neurons tended to show earlier selectivity than PFcs neurons after taking into consideration the maximum selectivity of the neurons. The interaction between the covariate and the independent variable was not significant [F(1,95) = 0.1, P > 0.1], indicating that the relationship between response onset and maximum selectivity was similar in both areas. Figure 6D illustrates the mean strength of the reward signal across time for those neurons that reached criterion during the first 300 ms of the cue epoch in both areas. This also shows that the initial increase in selectivity occurs earlier in PFo than PFcs.

Finally, we examined the extent to which neurons that reached the criterion for reward selectivity during the cue epoch subsequently sustained this selectivity in the ensuing delay. For each of these neurons, we calculated the beta coefficient associated with the reward-predictive cue ($b_r$) using the equation $F = b_0 + b_r V_r$, where $F$ is the neuron's mean firing rate during a given epoch and $V_r$ is the size of the reward predicted by the cue. We performed a two-way ANOVA using the beta coefficient as the dependent variable, and independent variables of area (PFcs or PFo) and epoch (the 3 epochs, corresponding to the cue and the first and second halves of the delay). There was a significant main effect of area [F(1,504) = 7.2, P < 0.01] due to greater selectivity strength in PFcs than PFo. This effect is also evident in Fig. 6D, with mean selectivity in PFcs stronger than in PFo. In addition, there was a significant main effect of epoch [F(2,504) = 5.8, P < 0.0005], with selectivity becoming gradually weaker as one moved from the cue epoch to the end of the delay. The interaction between the factors was not significant [F(2,504) < 1, P > 0.1], indicating that neither area was more or less likely to sustain its selectivity than the other.

In summary, neurons in PFo and PFcs showed similar responses to the reward-predictive cue irrespective of whether it occurred in the context of the RS or SR task. Encoding of the predicted reward occurred earlier in PFo, but the subsequent encoding of this information was stronger and more prevalent in PFcs.

Comparison with ventrolateral prefrontal cortex

In a previous report, we found that many neurons in PFvl showed strong encoding of both spatial and reward information in the current task (Kennerley and Wallis 2009b). We therefore compared the reward selectivity in PFvl with reward selectivity in PFo and PFcs. Figure 7A illustrates the incidence of selectivity for reward information in the three areas during each epoch for both tasks. Reward selectivity was significantly less prevalent in PFo compared with PFvl and PFcs during the first and second cue epochs and during the second half of the first delay epoch of the RS task. In addition, reward selectivity was more prevalent in PFcs during the second delay, although the difference did not reach significance. Figure 7B illustrates the prevalence of encoding of spatial information. Such encoding was very prevalent in PFvl and virtually absent in PFcs and PFo.

Fig. 7.

Prevalence of selectivity for the expected reward (A) and the spatial location (B) of the mnemonic cue across different task epochs and in different brain areas. *, a significant difference in the prevalence of selective neurons between the areas as determined by a χ2 test (evaluated at P < 0.017 to correct for multiple comparisons); —, which areas are significantly different from one another. C: scatter plot and corresponding least-squares line illustrating the relationship between the onset of reward selectivity and the maximum reward selectivity for PFo, PFcs, and ventrolateral prefrontal cortex (PFvl) neurons.

With regard to the onset of reward selectivity, the onset time in PFvl (median: 190 ms) was significantly earlier than the onset time in PFcs (median: 230 ms) but was not significantly different from the onset time in PFo (median: 170 ms; Kruskal-Wallis 1-way ANOVA, χ²(2,170) = 16, P < 0.0005; post hoc Tukey-Kramer test evaluated at P < 0.05). We reached a similar conclusion using a one-way ANCOVA, using onset of selectivity as the dependent variable, area (PFvl, PFo, or PFcs) as the independent variable, and the neuron's maximum selectivity during the 500-ms cue epoch as the covariate (Fig. 7C). There was a significant effect of area [F(2,167) = 10, P < 0.0001], which a post hoc Tukey-Kramer test revealed was due to significantly earlier onset times in PFo and PFvl relative to PFcs, whereas the onset times in PFo and PFvl did not significantly differ from one another. In addition, there was a significant effect of the covariate [F(1,167) = 24, P < 0.000005] but no interaction between the covariate and the independent variable [F(1,167) < 1, P > 0.1]. Thus the onset of reward selectivity was significantly earlier in PFvl and PFo than PFcs even after taking into consideration the maximum selectivity of the neurons.

DISCUSSION

We found little evidence that the integration of reward and spatial working memory took place in either PFo or PFcs. Neither PFcs nor PFo showed strong encoding of spatial information. In comparison to the weak encoding of spatial information, many neurons in PFcs and PFo encoded the amount of expected reward as indicated by the reward-predictive cue. PFo neurons consistently encoded reward information earlier than PFcs neurons. However, reward encoding was subsequently stronger and more prevalent in PFcs.

Reward selectivity in PFcs and PFo

Many neurons in PFcs (Amiez et al. 2006; Kennerley et al. 2009; Matsumoto et al. 2007; Quilodran et al. 2008; Sallet et al. 2007; Seo and Lee 2007) and PFo (Critchley and Rolls 1996; Kennerley et al. 2009; Padoa-Schioppa and Assad 2006; Tremblay and Schultz 1999, 2000; Wallis and Miller 2003) encode the value of expected rewards, as do neurons in adjoining cortical areas, such as the ventral bank of the cingulate sulcus (Shidara and Richmond 2002). Moreover, damage to either PFo (Baxter et al. 2000; Bechara et al. 1994; Burke et al. 2008; Izquierdo et al. 2004) or PFcs (Amiez et al. 2006; Hadland et al. 2003; Kennerley et al. 2006) impairs the ability to use rewards to guide behavior. Although the precise contribution of PFcs and PFo to reward processing remains unclear, the two areas show clear differences in their pattern of connections with the rest of the brain, which may constrain their functional roles. PFcs and PFo both connect with areas responsible for processing rewards, such as the amygdala and hypothalamus (Carmichael and Price 1995a; Ongur et al. 1998), and receive dopaminergic inputs (Williams and Goldman-Rakic 1993), which also carry reward signals (Bayer and Glimcher 2005; Matsumoto and Hikosaka 2009; Morris et al. 2006; Roesch et al. 2007; Schultz 1998). However, whereas PFo heavily connects with all sensory areas, including visual, olfactory, and gustatory cortices, it only weakly connects with motor areas (Carmichael and Price 1995b; Cavada et al. 2000; Croxson et al. 2005; Kondo et al. 2005). In contrast, PFcs strongly connects with cingulate motor areas but has few direct connections with sensory cortex (Carmichael and Price 1995b; Croxson et al. 2005; Dum and Strick 1993; Morecraft and Van Hoesen 1998; Vogt et al. 1987). Based on these anatomical differences, PFo may be more important for associating reward values with sensory stimuli, whereas PFcs may associate reward values with behavioral responses.

Several studies support this distinction. For example, monkeys with PFcs lesions show less influence of past reward history on motor selection (Kennerley et al. 2006), while PFo lesions interfere with the ability to update associations between a stimulus and its associated outcome in response to reinforcer devaluation (Baxter et al. 2000; Izquierdo et al. 2004). Lesions of PFo impair probabilistic matching tasks when the discriminanda are visual pictures but not when the discriminanda are motor responses, whereas the opposite pattern occurs for PFcs lesions (Rudebeck et al. 2008). The results of the current study are also consistent with this framework. In the current task, a picture signals the amount of reward expected, and PFo was the area in which reward encoding first appeared, consistent with its putative role in extracting the value of reward-predicting visual stimuli. However, reward encoding was subsequently more prevalent in PFcs, consistent with a putative role for PFcs in using information about predicted rewards to guide selection of the appropriate response.

The somewhat phasic nature of PFo reward encoding is evident in many previous studies that used visual stimuli to predict upcoming rewards (Padoa-Schioppa and Assad 2006; Roesch and Olson 2004, 2005). Indeed, studies that used long delays (>2 s) between the reward cue and the go cue report that <3% of PFo neurons maintain reward information throughout the delay (Tremblay and Schultz 1999, 2000). In terms of the prevalence of reward encoding in PFo, we found that 15% of the neurons encoded the reward during the presentation of the cue, 20% of the neurons encoded the reward in the epoch immediately following the offset of the cue, but only 12% encoded reward in the final half of the delay epoch. This is similar to previous studies in which typically 15–25% of PFo neurons are reward-selective (Padoa-Schioppa and Assad 2006; Roesch and Olson 2004, 2005; Tremblay and Schultz 1999). However, this is noticeably less than in our previous studies where we have found between 30 and 40% of the neurons encoding the predicted reward (Kennerley et al. 2009; Wallis and Miller 2003).

A potentially important difference between the current study and our previous studies is that our previous studies required the subject to choose between the stimuli to obtain the most preferred outcome. In contrast, in the current study, the stimuli simply indicated the outcome, but there was no choice. Neuroimaging results in humans show stronger PFo activation when a subject must actively choose between outcomes compared with when they are simply rating the desirability of the outcome (Arana et al. 2003).

In the current study, we also found that in both PFo and PFcs there was an approximately equal number of neurons that either increased or decreased their firing rate as the size of the predicted reward increased, which is consistent with several recent studies (Kennerley et al. 2009; Sallet et al. 2007; Seo and Lee 2009; Tremblay and Schultz 2000). However, other studies have found that PFo and PFcs neurons consistently increase their firing rate as the predicted reward increases (Hayden et al. 2009; Roesch and Olson 2004, 2005), while electroencephalograms (Gehring et al. 1993; Miltner et al. 1997) and fMRIs (Carter et al. 1998; Holroyd et al. 2004; Ullsperger and von Cramon 2003) suggest that PFcs activity is stronger to failure than success. Even our own studies are inconsistent in this regard. For example, in a recent task, we had the subjects choose between primary reinforcers (rather than visual stimuli predictive of primary reinforcers) and found that PFcs neurons consistently responded with increased firing rate to less desirable reinforcers (Luk and Wallis 2009). However, in another study, where we looked at neuronal responses to the primary reinforcement received following a choice between reward-predictive visual stimuli, we again found an even split in both PFcs and PFo of neurons that responded with either an increase or decrease in firing rate to more desirable outcomes (Kennerley and Wallis 2009a). Further research will be needed to determine when exactly these different encoding schemes occur. Some evidence suggests that neurons in both PFcs and PFo are highly sensitive to the context in which a reward occurs (Luk and Wallis 2009; Sallet et al. 2007; Tremblay and Schultz 1999). For example, a PFcs response to a large reward will be larger if the reward occurs in a block of relatively small rewards compared with a block of relatively large rewards (Sallet et al. 2007). 
Neuronal responses may depend on the precise reward scale and training history of the subject, which may underlie the complexity of the encoding schemes.

Spatial selectivity in PFcs and PFo

The role of PFo and PFcs in spatial attention is an issue that neurophysiological studies have rarely addressed. Unlike the lateral prefrontal cortex, where investigators have extensively mapped and examined the properties of neuronal spatial receptive fields (Funahashi et al. 1989; Kennerley and Wallis 2009b; Rao et al. 1997), there are no comparable studies in PFo and PFcs. A spatial delayed response task using two spatial positions found just 3% of PFo neurons encoded spatial location (Tremblay and Schultz 1999). In a study that used four different spatial locations, ∼12 and 4% of PFo neurons exhibited spatial selectivity during the cue and delay epochs, respectively, compared with 30 and 34% of lateral prefrontal cortex neurons in the respective epochs (Ichihara-Takeda and Funahashi 2007). Spatial delayed response tasks using two to four different spatial positions have found <10% of PFcs neurons encode spatial information (Nakamura et al. 2005; Niki and Watanabe 1976). A study using three different spatial positions found delay-related activity in PFcs, but the activity appeared to be related more to the serial order of upcoming movements rather than their spatial characteristics (Procyk and Joseph 2001; Procyk et al. 2000). However, all of these studies used only a small number of locations over which to assess spatial encoding (usually just 2), which might explain the low incidence of spatially selective neurons observed. The current study, which used a large matrix of 24 spatial positions, sought to provide a more thorough examination of the extent to which PFo and PFcs neurons exhibit spatial receptive fields.

Although several neuroimaging studies suggest that PFcs combines spatial and emotional information (Armony and Dolan 2002; De Martino et al. 2008; Fichtenholtz et al. 2004; Mohanty et al. 2008), we found little evidence that this was the case. There was little encoding of spatial information in either PFcs or PFo; in fact, rarely did the number of spatially selective neurons in either PFo or PFcs exceed what would be expected by chance. Remembering a cue across a delay and planning a saccade to that location does not appear to drive PFcs or PFo neurons. This is consistent with the fact that lesions to PFo or PFcs do not consistently impair performance in spatial delayed response tasks (Bachevalier and Mishkin 1986; Meunier et al. 1997). Thus there appears to be a discrepancy between the results from neuroimaging studies and those from neurophysiological studies regarding the role of PFcs in encoding spatial information. Importantly, however, neuroimaging studies that implicate the area in and around PFcs in spatial attention typically identify activity in this region in conditions where covert spatial attention occurs but do not necessarily show that this activity is associated with encoding particular regions of space (Mesulam et al. 2001; Nobre et al. 2000; Paus et al. 1998; Petit et al. 1998). PFcs may have a more generalized role in allocating attentional resources. For example, PFcs may detect circumstances that require increased attentional resources, such as when an error occurs or when there is uncertainty about which response to make (Bush et al. 2000; Kerns et al. 2004; Ridderinkhof et al. 2004; Rushworth et al. 2007). Moreover, although PFo and PFcs neurons do not appear to encode spatial parameters per se, these areas may participate in spatial attention indirectly.
The maintenance of reward information by PFo and PFcs neurons during delay periods could serve to promote the allocation of additional attentional resources, thereby ensuring that those areas responsible for the maintenance of the spatial information ultimately achieve the behavioral goal (Kouneiher et al. 2009).

Although we found little encoding of spatial information in either PFcs or PFo, it might be the case that the encoding of such information is more common in these areas when there is a requirement to select between responses of different value. Single neurons in PFcs encode the relationship between actions and their values and track the reward history of different actions (Luk and Wallis 2009; Matsumoto et al. 2003; Seo and Lee 2007; Williams et al. 2004). Damage to PFcs also impairs the ability to link actions with their outcomes and to learn the value of different actions (Amiez et al. 2006; Hadland et al. 2003; Kennerley et al. 2006; Rushworth et al. 2004). Moreover, damage to PFcs impairs performance in delayed alternation, a task that requires inhibition of one spatial response and selection of a different spatial response to obtain reward (Pribram et al. 1962; Rushworth et al. 2003). Rather than a functional role in encoding specific spatial positions, the role of PFcs in encoding motor parameters may be more related to its role in representing the value of different actions (Kennerley et al. 2006; Rushworth et al. 2007).

Localization of function within prefrontal cortex

In contrast to PFcs and PFo, we previously observed prominent encoding of spatial information in PFvl, in addition to encoding of reward information in PFvl (Kennerley and Wallis 2009b). Indeed despite recording from a large expanse of prefrontal cortex, including the medial, orbital, and lateral surfaces (including dorsal lateral areas and both banks of the principal sulcus), the only area where there was prominent encoding of both spatial and reward information was the inferior convexity, consisting of areas 47/12 and 45A. Thus while several theories of prefrontal function emphasize the flexibility in encoding information that prefrontal neurons exhibit (Duncan 2001; Duncan and Owen 2000; Miller and Cohen 2001), it is nevertheless clear that some cognitive processes are constrained to relatively restricted regions of prefrontal cortex. Theories of the functional organization of prefrontal cortex must therefore take into account the anatomical complexity of this region (Petrides and Pandya 1994).

Interpretational issues

The spatial delayed response task potentially taxes several cognitive processes including working memory, executive control, spatial attention, and motor planning. In addition, there has been considerable debate over whether some of these processes, such as attention and working memory, are even dissociable in principle or whether the differences are purely semantic (Awh and Jonides 2001; Lebedev et al. 2004; Rainer et al. 1998; Woodman et al. 2007). We did not design the current experiment to dissociate these possibilities. However, studies that have attempted to dissociate these possibilities have generally favored a sensory (Constantinidis et al. 2001; Funahashi et al. 1993) and attentional account of prefrontal activity (Everling et al. 2002; Lebedev et al. 2004). For example, in a task that required subjects to hold one spatial location in working memory while attending to an alternative location, prefrontal neurons tended to encode the attentional locus more than the remembered location (Lebedev et al. 2004). Although we did not design our task to dissociate between the various cognitive processes that might underlie spatial delayed response performance, our results show that the expected reward dynamically regulates the neuronal representations underpinning performance of the task, at least in PFvl. This is consistent with the notion that working memory consists of a limited resource that can be dynamically adjusted in response to ongoing task demands (Bays and Husain 2008).

Conclusion

In summary, neither PFcs nor PFo integrated reward information with spatial working memory. This contrasts with PFvl where strong encoding of both spatial and reward information occurs and where expected rewards modulate spatial selectivity (Kennerley and Wallis 2009b). These findings are compatible with an account of prefrontal organization in which the lateral prefrontal cortex is responsible for the control of attention and executive processing, whereas orbital and medial frontal regions are responsible for goal-directed behavior and decision-making (Miller and Cohen 2001; Wallis 2007).

GRANTS

The project was funded by National Institutes of Health Grants R01DA-19028 and P01NS-040813 to J. D. Wallis and NIH Training Grant F32MH-081521 to S. W. Kennerley.

ACKNOWLEDGMENTS

S. W. Kennerley and J. D. Wallis contributed to all aspects of the project.

REFERENCES

- Amiez C, Joseph JP, Procyk E. Reward encoding in the monkey anterior cingulate cortex. Cereb Cortex 16: 1040–1055, 2006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. J Neurosci 23: 9632–9638, 2003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Armony JL, Dolan RJ. Modulation of spatial attention by fear-conditioned stimuli: an event-related fMRI study. Neuropsychologia 40: 817–826, 2002 [DOI] [PubMed] [Google Scholar]

- Awh E, Jonides J. Overlapping mechanisms of attention and spatial working memory. Trends Cogn Sci 5: 119–126, 2001 [DOI] [PubMed] [Google Scholar]

- Bachevalier J, Mishkin M. Visual recognition impairment follows ventromedial but not dorsolateral prefrontal lesions in monkeys. Behav Brain Res 20: 249–261, 1986 [DOI] [PubMed] [Google Scholar]

- Bates JF, Goldman-Rakic PS. Prefrontal connections of medial motor areas in the rhesus monkey. J Comp Neurol 336: 211–228, 1993 [DOI] [PubMed] [Google Scholar]

- Baxter MG, Parker A, Lindner CC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J Neurosci 20: 4311–4319, 2000 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron 47: 129–141, 2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bays PM, Husain M. Dynamic shifts of limited working memory resources in human vision. Science 321: 851–854, 2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition 50: 7–15, 1994 [DOI] [PubMed] [Google Scholar]

- Bradley BP, Mogg K, Millar N, Bonham-Carter C, Fergusson E, Jenkins J, Parr M. Attentional biases for emotional faces. Cogn Emotion 11: 25–42, 1997 [Google Scholar]

- Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature 454: 340–344, 2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci 4: 215–222, 2000 [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J Comp Neurol 363: 615–641, 1995a [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Sensory and premotor connections of the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol 363: 642–664, 1995b [DOI] [PubMed] [Google Scholar]

- Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD. Anterior cingulate cortex, error detection, and the online monitoring of performance. Science 280: 747–749, 1998 [DOI] [PubMed] [Google Scholar]

- Cavada C, Company T, Tejedor J, Cruz-Rizzolo RJ, Reinoso-Suarez F. The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cereb Cortex 10: 220–242, 2000 [DOI] [PubMed] [Google Scholar]

- Constantinidis C, Franowicz MN, Goldman-Rakic PS. The sensory nature of mnemonic representation in the primate prefrontal cortex. Nat Neurosci 4: 311–316, 2001 [DOI] [PubMed] [Google Scholar]

- Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol 75: 1673–1686, 1996 [DOI] [PubMed] [Google Scholar]

- Croxson PL, Johansen-Berg H, Behrens TE, Robson MD, Pinsk MA, Gross CG, Richter W, Richter MC, Kastner S, Rushworth MF. Quantitative investigation of connections of the prefrontal cortex in the human and macaque using probabilistic diffusion tractography. J Neurosci 25: 8854–8866, 2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- De Martino B, Kalisch R, Rees G, Dolan RJ. Enhanced processing of threat stimuli under limited attentional resources. Cereb Cortex 19: 127–133, 2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Della Libera C, Chelazzi L. Visual selective attention and the effects of monetary rewards. Psychol Sci 17: 222–227, 2006 [DOI] [PubMed] [Google Scholar]

- Dum RP, Strick PL. Cingulate motor areas. In: Neurobiology of Cingulate Cortex and Limbic Thalamus: A Comprehensive Handbook, edited by Vogt BA, Gabriel M. Cambridge, MA: Birkhaeuser, 1993, p. 415–441

- Duncan J. An adaptive coding model of neural function in prefrontal cortex. Nat Rev Neurosci 2: 820–829, 2001

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci 23: 475–483, 2000

- Engelmann JB, Pessoa L. Motivation sharpens exogenous spatial attention. Emotion 7: 668–674, 2007

- Everling S, Tinsley CJ, Gaffan D, Duncan J. Filtering of neural signals by focused attention in the monkey prefrontal cortex. Nat Neurosci 5: 671–676, 2002

- Fichtenholtz HM, Dean HL, Dillon DG, Yamasaki H, McCarthy G, LaBar KS. Emotion-attention network interactions during a visual oddball task. Brain Res Cogn Brain Res 20: 67–80, 2004

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Dorsolateral prefrontal lesions and oculomotor delayed-response performance: evidence for mnemonic “scotomas.” J Neurosci 13: 1479–1497, 1993

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol 61: 331–349, 1989

- Gehring WJ, Goss B, Coles MGH, Meyer DE, Donchin E. A neural system for error detection and compensation. Psychol Sci 4: 385–390, 1993

- Hadland KA, Rushworth MF, Gaffan D, Passingham RE. The anterior cingulate and reward-guided selection of actions. J Neurophysiol 89: 1161–1164, 2003

- Hayden BY, Pearson JM, Platt ML. Fictive reward signals in the anterior cingulate cortex. Science 324: 948–950, 2009

- Holroyd CB, Nieuwenhuis S, Yeung N, Nystrom L, Mars RB, Coles MG, Cohen JD. Dorsal anterior cingulate cortex shows fMRI response to internal and external error signals. Nat Neurosci 7: 497–498, 2004

- Ichihara-Takeda S, Funahashi S. Activity of primate orbitofrontal and dorsolateral prefrontal neurons: task-related activity during an oculomotor delayed-response task. Exp Brain Res 181: 409–425, 2007

- Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci 24: 7540–7548, 2004

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci 21: 1162–1178, 2009

- Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci 29: 2061–2073, 2009a

- Kennerley SW, Wallis JD. Reward-dependent modulation of working memory in lateral prefrontal cortex. J Neurosci 29: 3259–3270, 2009b

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci 9: 940–947, 2006

- Kerns JG, Cohen JD, MacDonald AW, 3rd, Cho RY, Stenger VA, Carter CS. Anterior cingulate conflict monitoring and adjustments in control. Science 303: 1023–1026, 2004

- Kondo H, Saleem KS, Price JL. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkeys. J Comp Neurol 493: 479–509, 2005

- Kouneiher F, Charron S, Koechlin E. Motivation and cognitive control in the human prefrontal cortex. Nat Neurosci 12: 939–945, 2009

- LaBar KS, Gitelman DR, Parrish TB, Mesulam M. Neuroanatomic overlap of working memory and spatial attention networks: a functional MRI comparison within subjects. Neuroimage 10: 695–704, 1999

- Lebedev MA, Messinger A, Kralik JD, Wise SP. Representation of attended versus remembered locations in prefrontal cortex. PLoS Biol 2: e365, 2004

- Luk CH, Wallis JD. Dynamic encoding of responses and outcomes by neurons in medial prefrontal cortex. J Neurosci 29: 7526–7539, 2009

- MacLeod C, Mathews A, Tata P. Attentional bias in emotional disorders. J Abnorm Psychol 40: 653–670, 1986

- Mathews A. Anxiety and the processing of threatening information. In: Cognitive Perspectives on Emotion and Motivation, edited by Hamilton V, Bower G, Frijda N. Dordrecht: Nijhoff, 1988, p. 265–284

- Matsumoto K, Suzuki W, Tanaka K. Neuronal correlates of goal-based motor selection in the prefrontal cortex. Science 301: 229–232, 2003

- Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature 459: 837–841, 2009

- Matsumoto M, Matsumoto K, Abe H, Tanaka K. Medial prefrontal cell activity signaling prediction errors of action values. Nat Neurosci 10: 647–656, 2007

- Mesulam MM. Spatial attention and neglect: parietal, frontal and cingulate contributions to the mental representation and attentional targeting of salient extrapersonal events. Philos Trans R Soc Lond B Biol Sci 354: 1325–1346, 1999

- Mesulam MM, Nobre AC, Kim YH, Parrish TB, Gitelman DR. Heterogeneity of cingulate contributions to spatial attention. Neuroimage 13: 1065–1072, 2001

- Meunier M, Bachevalier J, Mishkin M. Effects of orbital frontal and anterior cingulate lesions on object and spatial memory in rhesus monkeys. Neuropsychologia 35: 999–1015, 1997

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci 24: 167–202, 2001

- Miltner WHR, Braun CH, Coles MGH. Event-related brain potentials following incorrect feedback in a time-estimation task: evidence for a “generic” neural system for error detection. J Cogn Neurosci 9: 788–798, 1997

- Mogg K, Bradley BP, Field M, De Houwer J. Eye movements to smoking-related pictures in smokers: relationship between attentional biases and implicit and explicit measures of stimulus valence. Addiction 98: 825–836, 2003

- Mogg K, Bradley BP, Hyare H, Lee S. Selective attention to food-related stimuli in hunger: are attentional biases specific to emotional and psychopathological states, or are they also found in normal drive states? Behav Res Ther 36: 227–237, 1998

- Mogg K, Mathews A, Eysenck M. Attentional bias to threat in clinical anxiety states. Cogn Emotion 6: 149–159, 1992

- Mohanty A, Gitelman DR, Small DM, Mesulam MM. The spatial attention network interacts with limbic and monoaminergic systems to modulate motivation-induced attention shifts. Cereb Cortex 18: 2604–2613, 2008

- Morecraft RJ, Van Hoesen GW. Convergence of limbic input to the cingulate motor cortex in the rhesus monkey. Brain Res Bull 45: 209–232, 1998

- Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci 9: 1057–1063, 2006

- Nakamura K, Roesch MR, Olson CR. Neuronal activity in macaque SEF and ACC during performance of tasks involving conflict. J Neurophysiol 93: 884–908, 2005

- Niki H, Watanabe M. Cingulate unit activity and delayed response. Brain Res 110: 381–386, 1976

- Nobre AC, Gitelman DR, Dias EC, Mesulam MM. Covert visual spatial orienting and saccades: overlapping neural systems. Neuroimage 11: 210–216, 2000

- Ongur D, An X, Price JL. Prefrontal cortical projections to the hypothalamus in macaque monkeys. J Comp Neurol 401: 480–505, 1998

- Ongur D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cereb Cortex 10: 206–219, 2000

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature 441: 223–226, 2006

- Paus T, Koski L, Caramanos Z, Westbury C. Regional differences in the effects of task difficulty and motor output on blood flow response in the human anterior cingulate cortex: a review of 107 PET activation studies. Neuroreport 9: R37–47, 1998

- Petit L, Courtney SM, Ungerleider LG, Haxby JV. Sustained activity in the medial wall during working memory delays. J Neurosci 18: 9429–9437, 1998

- Petrides M, Pandya DN. Comparative architectonic analysis of the human and macaque frontal cortex. In: Handbook of Neuropsychology, edited by Boller F, Grafman J. New York: Elsevier, 1994, p. 17–57

- Pribram KH, Wilson WA, Jr, Connors J. Effects of lesions of the medial forebrain on alternation behavior of rhesus monkeys. Exp Neurol 6: 36–47, 1962

- Procyk E, Joseph JP. Characterization of serial order encoding in the monkey anterior cingulate sulcus. Eur J Neurosci 14: 1041–1046, 2001

- Procyk E, Tanaka YL, Joseph JP. Anterior cingulate activity during routine and non-routine sequential behaviors in macaques. Nat Neurosci 3: 502–508, 2000

- Quilodran R, Rothe M, Procyk E. Behavioral shifts and action valuation in the anterior cingulate cortex. Neuron 57: 314–325, 2008

- Rainer G, Asaad WF, Miller EK. Selective representation of relevant information by neurons in the primate prefrontal cortex. Nature 393: 577–579, 1998

- Rao SC, Rainer G, Miller EK. Integration of what and where in the primate prefrontal cortex. Science 276: 821–824, 1997

- Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S. The role of the medial frontal cortex in cognitive control. Science 306: 443–447, 2004

- Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci 10: 1615–1624, 2007

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science 304: 307–310, 2004

- Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. J Neurophysiol 94: 2457–2471, 2005

- Roesch MR, Olson CR. Neuronal activity related to anticipated reward in frontal cortex: does it represent value or reflect motivation? Ann NY Acad Sci 1121: 431–446, 2007

- Rudebeck PH, Behrens TE, Kennerley SW, Baxter MG, Buckley MJ, Walton ME, Rushworth MF. Frontal cortex subregions play distinct roles in choices between actions and stimuli. J Neurosci 28: 13775–13785, 2008

- Rushworth MF, Buckley MJ, Behrens TE, Walton ME, Bannerman DM. Functional organization of the medial frontal cortex. Curr Opin Neurobiol 17: 220–227, 2007

- Rushworth MF, Hadland KA, Gaffan D, Passingham RE. The effect of cingulate cortex lesions on task switching and working memory. J Cogn Neurosci 15: 338–353, 2003

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends Cogn Sci 8: 410–417, 2004

- Sallet J, Quilodran R, Rothe M, Vezoli J, Joseph JP, Procyk E. Expectations, gains, and losses in the anterior cingulate cortex. Cogn Affect Behav Neurosci 7: 327–336, 2007

- Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol 80: 1–27, 1998

- Seo H, Lee D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J Neurosci 27: 8366–8377, 2007

- Seo H, Lee D. Behavioral and neural changes after gains and losses of conditioned reinforcers. J Neurosci 29: 3627–3641, 2009

- Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science 296: 1709–1711, 2002

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature 398: 704–708, 1999

- Tremblay L, Schultz W. Reward-related neuronal activity during go-nogo task performance in primate orbitofrontal cortex. J Neurophysiol 83: 1864–1876, 2000

- Ullsperger M, von Cramon DY. Error monitoring using external feedback: specific roles of the habenular complex, the reward system, and the cingulate motor area revealed by functional magnetic resonance imaging. J Neurosci 23: 4308–4314, 2003

- Van Hoesen GW, Morecraft RJ, Vogt BA. Connections of the monkey cingulate cortex. In: Neurobiology of Cingulate Cortex and Limbic Thalamus: A Comprehensive Handbook, edited by Vogt BA, Gabriel M. Cambridge, MA: Birkhaeuser, 1993, p. 249–284

- Vogt BA, Pandya DN, Rosene DL. Cingulate cortex of the rhesus monkey. I. Cytoarchitecture and thalamic afferents. J Comp Neurol 262: 256–270, 1987

- Vogt BA, Vogt L, Farber NB, Bush G. Architecture and neurocytology of monkey cingulate gyrus. J Comp Neurol 485: 218–239, 2005