Summary

In recent years, many methods have been developed for regression in high-dimensional settings. We propose covariance-regularized regression, a family of methods that use a shrunken estimate of the inverse covariance matrix of the features in order to achieve superior prediction. An estimate of the inverse covariance matrix is obtained by maximizing its log likelihood, under a multivariate normal model, subject to a constraint on its elements; this estimate is then used to estimate coefficients for the regression of the response onto the features. We show that ridge regression, the lasso, and the elastic net are special cases of covariance-regularized regression, and we demonstrate that certain previously unexplored forms of covariance-regularized regression can outperform existing methods in a range of situations. The covariance-regularized regression framework is extended to generalized linear models and linear discriminant analysis, and is used to analyze gene expression data sets with multiple class and survival outcomes.

Keywords: regression, classification, n ≪ p, covariance regularization

1. Introduction

In high-dimensional regression problems, where p, the number of features, is nearly as large as, or larger than, n, the number of observations, ordinary least squares regression does not provide a satisfactory solution. A remedy for the shortcomings of least squares is to modify the sum of squared errors criterion used to estimate the regression coefficients, using penalties that are based on the magnitudes of the coefficients:

$$\hat{\beta} = \arg\min_{\beta} \; \|y - X\beta\|^2 + \lambda_1 \|\beta\|_{p_1} + \lambda_2 \|\beta\|_{p_2} \tag{1}$$

(Here, the notation ||β||s is used to indicate $\sum_{i=1}^{p} |\beta_i|^s$.) Many popular regularization methods fall into this framework. For instance, when λ2 = 0, p1 = 0 gives best subset selection, p1 = 2 gives ridge regression (Hoerl & Kennard 1970), and p1 = 1 gives the lasso (Tibshirani 1996). More generally, for λ2 = 0 and p1 ≥ 0, the above equation defines the bridge estimators (Frank & Friedman 1993). Equation 1 defines the elastic net (up to a scaling) in the case that p1 = 1 and p2 = 2 (Zou & Hastie 2005). In this paper, we present a new approach to regularizing linear regression that involves applying a penalty not to the sum of squared errors, but rather to the log likelihood of the inverse covariance matrix under a multivariate normal model.

The least squares solution is β̂ = (XTX)−1XTy. In multivariate normal theory, the entries of (XTX)−1 that equal zero correspond to pairs of variables that have no partial correlation; in other words, pairs of variables that are conditionally independent, given all of the other features in the data. Non-zero entries of (XTX)−1 correspond to nonzero partial correlations. One way to perform regularization of least squares regression is to shrink the matrix (XTX)−1; in fact, this is done by ridge regression, since the ridge solution can be written as β̂ridge = (XTX + λI)−1XTy. Here, we propose a more general approach to shrinkage of the inverse covariance matrix. Our method involves estimating a regularized inverse covariance matrix by maximizing its log likelihood under a multivariate normal model, subject to a constraint on its elements. In doing this, we attempt to distinguish between variables that truly are partially correlated with each other and variables that in fact have zero partial correlation. We then use this regularized inverse covariance matrix in order to obtain regularized regression coefficients. We call the class of regression methods defined by this procedure the scout.

In Section 2, we present the scout criteria and explain the method in greater detail. We also discuss connections between the scout and pre-existing regression methods. In particular, we show that ridge regression, the lasso, and the elastic net are special cases of the scout. In addition, we present some specific members of the scout class that perform well relative to pre-existing methods in a variety of situations. In Sections 3, 4, and 5, we demonstrate the use of these methods in regression, classification, and generalized linear model settings on simulated data and on a number of gene expression data sets.

2. The Scout Method

2.1. The General Scout Family

Let X = (x1,…, xp) denote an n × p matrix of data, where n is the number of observations and p the number of features. Let y denote a vector of length n, containing a response value for each observation. Assume that the columns of X are standardized, and that y is centered. We can create a matrix X̃ = (X y), which has dimension n × (p+1). If we assume that X̃ is generated from the model N(0, Σ), then we can find the maximum likelihood estimator of the population inverse covariance matrix Σ−1 by maximizing

$$\log\det \Sigma^{-1} - \mathrm{tr}(S\Sigma^{-1}) \tag{2}$$

where S is the empirical covariance matrix of X̃. Assume for a moment that S is invertible. Then, the maximum likelihood estimator for Σ−1 is S−1 (we use the fact that ∂ log det W / ∂W = W−1 for a symmetric positive definite matrix W). Let $\Theta = \begin{pmatrix} \Theta_{xx} & \Theta_{xy} \\ \Theta_{xy}^T & \Theta_{yy} \end{pmatrix}$ denote a symmetric estimate of Σ−1. The problem of regressing y onto X is closely related to the problem of estimating Σ−1, since the least squares coefficients for the regression equal −Θxy/Θyy for Θ = S−1 (this follows from the partitioned inverse formula). If p > n, then some type of regularization is needed in order to estimate the regression coefficients, since S is not invertible. Even if p < n, we may want to shrink the least squares coefficients in some way in order to achieve superior prediction. The connection between estimation of Θ and estimation of the least squares coefficients suggests that, rather than shrinking the coefficients β by applying a penalty to the sum of squared errors for the regression of y onto X, as is done in e.g. ridge regression or the lasso, we can obtain shrunken β estimates through maximization of the penalized log likelihood of the inverse covariance matrix Σ−1.
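The link between the inverse covariance matrix and least squares can be checked numerically. The following is a minimal sketch, assuming simulated Gaussian data and an empirical covariance normalized by n (both are illustrative choices, not taken from the paper); it compares −Θxy/Θyy, computed from the inverse of the covariance of (X y), with the ordinary least squares coefficients.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 50, 5
X = rng.standard_normal((n, p))
y = X @ np.array([1.0, -2.0, 0.0, 0.5, 3.0]) + rng.standard_normal(n)

# Standardize the columns of X and center y, as assumed in the text.
X = (X - X.mean(axis=0)) / X.std(axis=0)
y = y - y.mean()

# Empirical covariance of the augmented matrix (X y); columns already have mean zero.
X_tilde = np.column_stack([X, y])
S = X_tilde.T @ X_tilde / n

Theta = np.linalg.inv(S)      # unpenalized estimate of the inverse covariance
theta_xy = Theta[:p, p]       # last column without the corner element
theta_yy = Theta[p, p]        # bottom-right corner element

beta_from_theta = -theta_xy / theta_yy
beta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
```

Here `beta_from_theta` and `beta_ols` should agree to numerical precision whenever S is invertible, which requires n > p + 1 in this sketch.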

To do this, one could estimate Σ−1 as Θ that maximizes

$$\log\det \Theta - \mathrm{tr}(S\Theta) - J(\Theta) \tag{3}$$

where J(Θ) is a penalty function. For example, J(Θ) = ||Θ||p denotes the sum of absolute values of the elements of Θ if p = 1, and it denotes the sum of squared elements of Θ if p = 2. Our regression coefficients would then be given by the formula β = −Θxy/Θyy. However, recall that if X̃ ~ N (0, Σ), then the ij element of Θ determines, up to sign and scaling, the correlation of x̃i with x̃j, conditional on all of the other variables in X̃. Note that y is included in X̃. So it does not make sense to regularize the elements of Θ as presented above, because we really care about the partial correlations of pairs of variables given the other variables, as opposed to the partial correlations of pairs of variables given the other variables and the response.

For these reasons, rather than obtaining an estimate of Σ−1 by maximizing the penalized log likelihood in Equation 3, we estimate it via a two-stage maximization, given in the following algorithm:

The Scout Procedure for General Penalty Functions

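1. Compute Θ̂xx, which maximizes

   $$\log\det \Theta_{xx} - \mathrm{tr}(S_{xx}\Theta_{xx}) - J_1(\Theta_{xx}) \tag{4}$$

2. Compute Θ̂, which maximizes

   $$\log\det \Theta - \mathrm{tr}(S\Theta) - J_2(\Theta) \tag{5}$$

   where the top left p × p submatrix of Θ̂ is constrained to equal Θ̂xx, the solution to Step 1.

3. Compute β̂, defined by β̂ = −Θ̂xy/Θ̂yy.

4. Compute β̂* = cβ̂, where c is the coefficient for the regression of y onto Xβ̂.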

β̂* denotes the regularized coefficients obtained using this new method. Step 1 of the Scout Procedure involves obtaining shrunken estimates of (Σxx)−1 in order to smooth our estimates of which variables are conditionally independent. Step 2 involves obtaining shrunken estimates of Σ−1, conditional on (Σ−1)xx = Θ̂xx, the estimate obtained in Step 1. Thus, we obtain regularized estimates of which predictors are dependent on y, given all of the other predictors. The scaling in the last step is performed because it has been found, empirically, to improve performance.

By penalizing the entries of the inverse covariance matrix of the predictors in Step 1 of the Scout Procedure, we are attempting to distinguish between pairs of variables that truly are conditionally dependent, and pairs of variables that appear to be conditionally dependent due only to chance. We are searching, or scouting, for variables that truly are correlated with each other, conditional on all of the other variables. Our hope is that sets of variables that truly are conditionally dependent will also be related to the response. In the context of a microarray experiment, where the variables are genes and the response is some clinical outcome, this assumption is reasonable: we seek genes that are part of a pathway related to the response. One expects that such genes will also be conditionally dependent. In Step 2, we shrink our estimates of the partial correlation between each predictor and the response, given the shrunken partial correlations between the predictors that we estimated in Step 1. In contrast to ordinary least squares regression, which uses the inverse of the empirical covariance matrix to compute regression coefficients, we jointly model the relationship that the p predictors have with each other and with the response in order to obtain shrunken regression coefficients.

We define the scout family of estimated coefficients for the regression of y onto X as the solutions β̂* obtained in Step 4 of the Scout Procedure. We refer to the penalized log likelihoods in Steps 1 and 2 of the Scout Procedure as the first and second scout criteria.

In the rest of the paper, when we discuss properties of the scout, for ease of notation we will ignore the scale factor in Step 4 of the Scout Procedure. For instance, if we claim that two procedures yield the same regression coefficients, we more specifically mean that the regression coefficients are the same up to scaling by a constant factor.
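The rescaling in Step 4 can be sketched as follows. This is an illustration under the assumption that the regression of y onto Xβ̂ is fit without an intercept (reasonable when y is centered and the columns of X are standardized); the function name `rescale` is hypothetical.

```python
import numpy as np

def rescale(X, y, beta):
    """Return c * beta, where c is the least squares slope of y on X @ beta."""
    fitted = X @ beta
    c = (fitted @ y) / (fitted @ fitted)   # no-intercept regression coefficient
    return c * beta

rng = np.random.default_rng(0)
X = rng.standard_normal((30, 4))
y = X @ np.array([2.0, 0.0, -1.0, 0.5]) + rng.standard_normal(30)
beta_hat = np.array([1.0, 0.1, -0.4, 0.2])   # any unscaled coefficient vector

beta_star = rescale(X, y, beta_hat)
beta_star_from_multiple = rescale(X, y, 7.0 * beta_hat)
```

Because any nonzero multiple of β̂ is mapped to the same β̂*, two procedures whose coefficients differ only by a constant factor give identical results after Step 4.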

Least squares, the elastic net, the lasso, and ridge regression result from the scout procedure with appropriate choices of J1 and J2 (up to a scaling by a constant). Details are in Table 1. The first two results can be shown directly by differentiating the scout criteria, and the others follow from Equation 11 in Section 2.4.

Table 1.

Special cases of the scout.

| J1(Θxx) | J2(Θ) | Method |
|---|---|---|
| 0 | 0 | Least Squares |
| tr(Θxx) | 0 | Ridge Regression |
| tr(Θxx) | ‖Θ‖1 | Elastic Net |
| 0 | ‖Θ‖1 | Lasso |
| 0 | ‖Θ‖2 | Ridge Regression |
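As a sketch of how the second row of Table 1 can be verified by differentiation, suppose the first scout criterion has the form log det Θxx − tr(SxxΘxx) − J1(Θxx), and write J1(Θxx) = λ tr(Θxx), where λ ≥ 0 is an illustrative tuning parameter that the table leaves implicit. Setting the derivative to zero gives

$$
\frac{\partial}{\partial \Theta_{xx}} \Big[ \log\det \Theta_{xx} - \mathrm{tr}(S_{xx}\Theta_{xx}) - \lambda\,\mathrm{tr}(\Theta_{xx}) \Big]
= \Theta_{xx}^{-1} - S_{xx} - \lambda I = 0
\quad\Longrightarrow\quad
\hat{\Theta}_{xx} = (S_{xx} + \lambda I)^{-1}.
$$

With J2 = 0, the coefficients are obtained by applying Θ̂xx to Sxy (see Section 2.4), so that β̂ = (Sxx + λI)−1Sxy, which is the ridge solution up to the scaling convention used for S and λ.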

2.2. Lp Penalties

Throughout the remainder of this paper, we will exclusively be interested in the case that J1(Θ) = λ1||Θ||p1 and J2(Θ) = (λ2/2)||Θ||p2, where the norm is taken elementwise over the entries of Θ, and where λ1, λ2 ≥ 0. For ease of notation, Scout(p1, p2) will refer to the solution to the scout criterion with J1 and J2 as just mentioned. If λ2 = 0, then this will be indicated by Scout(p1, ·), and if λ1 = 0, then this will be indicated by Scout(·, p2). Therefore, in the rest of this paper, the Scout Procedure will be as follows:

The Scout Procedure with Lp Penalties

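1. Compute Θ̂xx, which maximizes

   $$\log\det \Theta_{xx} - \mathrm{tr}(S_{xx}\Theta_{xx}) - \lambda_1 \|\Theta_{xx}\|_{p_1} \tag{6}$$

2. Compute Θ̂, which maximizes

   $$\log\det \Theta - \mathrm{tr}(S\Theta) - \frac{\lambda_2}{2} \|\Theta\|_{p_2} \tag{7}$$

   where the top left p × p submatrix of Θ̂ is constrained to equal Θ̂xx, the solution to Step 1. Note that because of this constraint, the penalty really is only being applied to the last row and column of Θ̂.

3. Compute β̂, defined by β̂ = −Θ̂xy/Θ̂yy.

4. Compute β̂* = cβ̂, where c is the coefficient for the regression of y onto Xβ̂.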

(Note that in Step 2, because the top left p × p submatrix of Θ̂ is fixed, the penalty on the top left p × p elements has no effect).

2.3. Simple Example

Here, we present a toy example in which n = 20 observations on p = 19 variables are generated under the model y = Xβ + ε, where βj = j for j ≤ 10 and βj = 0 for j > 10, and where ε ~ N (0, 25). In addition, the first 10 variables have correlation 0.5 with each other; the rest are uncorrelated.
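A minimal sketch of how data of this kind could be generated is shown below. It assumes that the features are jointly Gaussian with unit variance and that N(0, 25) denotes a variance of 25 (standard deviation 5); the text does not state either, and the function name `simulate_toy` is hypothetical.

```python
import numpy as np

def simulate_toy(n=20, p=19, n_corr=10, rho=0.5, noise_sd=5.0, seed=0):
    """Draw one data set from the toy model y = X beta + eps."""
    rng = np.random.default_rng(seed)
    # First n_corr features have pairwise correlation rho; the rest are uncorrelated.
    Sigma = np.eye(p)
    Sigma[:n_corr, :n_corr] = rho
    np.fill_diagonal(Sigma, 1.0)
    # beta_j = j for j <= n_corr and 0 otherwise.
    beta = np.zeros(p)
    beta[:n_corr] = np.arange(1, n_corr + 1)
    X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
    y = X @ beta + noise_sd * rng.standard_normal(n)
    return X, y, beta, Sigma

X, y, beta_true, Sigma_true = simulate_toy()
```

Averaging an estimator over repeated calls with different seeds would reproduce the kind of comparison summarized in the figure.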

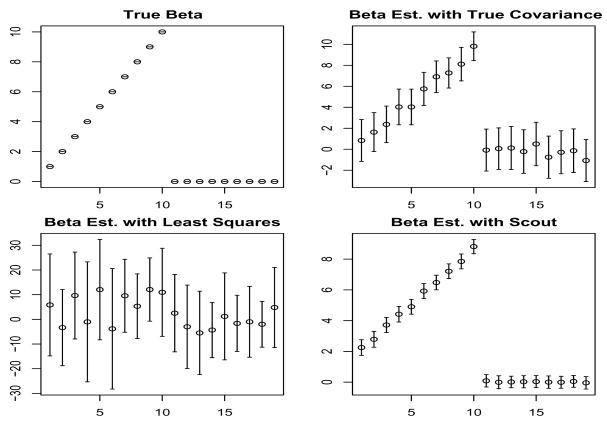

Figure 1 shows the average over 500 simulations of the following four quantities: the true value of β, the least squares regression coefficients, the estimate Σ−1Cov(X, y) where Σ is the true covariance matrix of X in the underlying model, and the Scout(1, ·) regression estimate. It is not surprising that least squares performs poorly in this situation, since n is barely larger than p. Scout(1, ·) performs extremely well; though it results in coefficient estimates that are slightly biased, they have much less variance than the estimates obtained using the true covariance matrix. This simple example demonstrates that benefits can result from the use of a shrunken estimate of the inverse covariance matrix.

Fig. 1.

Data were generated under a simple model; results shown are averaged over 500 simulations. Standard error bars and coefficient estimates are shown. Clockwise from the top left, panels show the true value of β, Σ−1Cov(X, y), (XTX)−1XTy, and Scout (1,·).

2.4. Minimization of the Scout Criteria with Lp Penalties

If λ1 = 0, then the first scout criterion is optimized by (Sxx)−1 (if Sxx is invertible). In the case that λ1 > 0 and p1 = 1, optimization of the first scout criterion has been studied extensively; see e.g. Meinshausen & Bühlmann (2006). The solution can be found via the “graphical lasso”, an efficient algorithm given by Banerjee et al. (2008) and Friedman et al. (2008b) that involves iteratively regressing one row of the estimated covariance matrix onto the others, subject to an L1 constraint, in order to update the estimate for that row.

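If λ1 > 0 and p1 = 2, the solution to Step 1 of the Scout Procedure is even easier. We want to find θxx that maximizes

$$\log\det \theta_{xx} - \mathrm{tr}(S_{xx}\theta_{xx}) - \lambda_1 \|\theta_{xx}\|_{2} \tag{8}$$

Differentiating with respect to θxx, we see that the maximum solves

$$\theta_{xx}^{-1} - 2\lambda_1 \theta_{xx} = S_{xx} \tag{9}$$

This equation implies that θxx and Sxx share the same eigenvectors. Letting θi denote the ith eigenvalue of θxx (we write θi rather than λi to avoid confusion with the tuning parameter λ1) and letting si denote the ith eigenvalue of Sxx, it is clear that

$$\frac{1}{\theta_i} - 2\lambda_1 \theta_i = s_i \tag{10}$$

This is a quadratic equation in θi, whose positive root is $\theta_i = \left(-s_i + \sqrt{s_i^2 + 8\lambda_1}\right)/(4\lambda_1)$. We can therefore solve the first scout criterion exactly in the case p1 = 2, in essentially just the computational cost of obtaining the eigenvalues of Sxx.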
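The eigenvalue approach can be sketched in a few lines. The code below assumes that Equations 9 and 10 take the form obtained by differentiating the L2-penalized criterion, namely that the solution satisfies (solution)−1 − 2λ1(solution) = Sxx, so that each eigenvalue is the positive root of a quadratic; the function name is hypothetical and λ1 must be strictly positive.

```python
import numpy as np

def solve_first_criterion_l2(S_xx, lam1):
    """Solve Theta^{-1} - 2*lam1*Theta = S_xx via the eigendecomposition of S_xx."""
    s, V = np.linalg.eigh(S_xx)                       # S_xx = V diag(s) V^T
    # Positive root of 2*lam1*t^2 + s*t - 1 = 0 for each eigenvalue s.
    theta = (-s + np.sqrt(s**2 + 8.0 * lam1)) / (4.0 * lam1)
    return (V * theta) @ V.T                          # V diag(theta) V^T

rng = np.random.default_rng(1)
n, p, lam1 = 10, 30, 0.2                              # p > n, so S_xx is singular
X = rng.standard_normal((n, p))
S_xx = X.T @ X / n

Theta_xx = solve_first_criterion_l2(S_xx, lam1)
# Residual of the stationarity condition; should be numerically zero.
residual = np.linalg.inv(Theta_xx) - 2.0 * lam1 * Theta_xx - S_xx
```

Note that the estimate is positive definite even though Sxx is singular here, which is what makes the approach usable when p > n.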

It turns out that if p2 = 1 or p2 = 2, then it is not necessary to minimize the second scout criterion directly, as there is an easier alternative:

Claim 1

For p2 ∈ {1, 2}, the solution to Step 3 of the Scout Procedure is equal to the solution to the following, up to scaling by a constant:

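$$\hat{\beta} = \arg\min_{\beta} \; \beta^T \hat{\Sigma}_{xx} \beta - 2 S_{xy}^T \beta + \lambda_2 \|\beta\|_{p_2} \tag{11}$$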

where Σ̂xx is the inverse of the solution to Step 1 of the Scout Procedure.

(The proof of Claim 1 is in Section 8.1.1 in the Appendix.) Therefore, we can replace Steps 2 and 3 of the Scout Procedure with an Lp2 regression. It is trivial to show that if λ2 = 0 in the Scout Procedure, then the scout solution is given by β̂ = (Σ̂xx)−1Sxy. It also follows that if λ1 = 0, then the cases λ2 = 0, p2 = 1, and p2 = 2 correspond to ordinary least squares regression (if the empirical covariance matrix is invertible), the lasso, and ridge regression, respectively.
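For p2 = 1, the regression described by Claim 1 can be solved by coordinate descent. The sketch below assumes that the objective in Equation 11 has the quadratic-plus-L1 form βᵀAβ − 2bᵀβ + λ2‖β‖1, with A playing the role of Σ̂xx and b the role of Sxy; this is one standard solver chosen for illustration, not necessarily the one used by the authors, and the function names are hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    """Soft-thresholding operator: sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def l1_quadratic(A, b, lam2, n_iter=500, tol=1e-10):
    """Minimize beta^T A beta - 2 b^T beta + lam2 * ||beta||_1 for positive definite A."""
    beta = np.zeros(len(b))
    for _ in range(n_iter):
        beta_old = beta.copy()
        for j in range(len(b)):
            # Partial residual excluding coordinate j.
            r = b[j] - A[j] @ beta + A[j, j] * beta[j]
            beta[j] = soft_threshold(r, lam2 / 2.0) / A[j, j]
        if np.max(np.abs(beta - beta_old)) < tol:
            break
    return beta

rng = np.random.default_rng(2)
M = rng.standard_normal((40, 4))
A = M.T @ M / 40 + 0.5 * np.eye(4)     # stands in for the inverse of the Step 1 solution
b = np.array([1.0, -0.5, 0.2, 0.0])    # stands in for S_xy

beta_l1 = l1_quadratic(A, b, lam2=0.3)
beta_unpenalized = l1_quadratic(A, b, lam2=0.0)   # should match solve(A, b)
```

With `lam2=0.0` the iteration reduces to a Gauss-Seidel solve of Aβ = b, which matches the statement that the unpenalized scout solution is (Σ̂xx)−1Sxy.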

In addition, we will show in Section 2.5.1 that if p1 = 2 and p2 = 1, then the scout can be re-written as an elastic net problem with slightly different data; therefore, fast algorithms for solving the elastic net (Friedman et al. 2008a) can be used to solve Scout(2, 1). The methods for minimizing the scout criteria are summarized in Table 2.

Table 2.

Minimization of the scout criteria: special cases. The scout criteria can be easily minimized if λ1 = 0 or p1 ∈ {1, 2}, and if λ2 = 0 or p2 ∈ {1, 2}.

| | λ2 = 0 | p2 = 1 | p2 = 2 |
|---|---|---|---|
| λ1 = 0 | Least Squares | L1 Regression | L2 Regression |
| p1 = 1 | Graphical Lasso | Graphical Lasso + L1 Reg. | Graphical Lasso + L2 Reg. |
| p1 = 2 | Eigenvalue Problem | Elastic Net | Eigenvalue Problem + L2 Reg. |

We compared computation times for Scout(2, ·), Scout(1, ·), Scout(2, 1), and Scout(1, 1) on an example with n = 100, λ2 = λ1 = 0.2, and X dense. All timings were carried out on an Intel Xeon 2.80 GHz processor. Table 3 shows the number of CPU seconds required for each of these methods for a range of values of p. For all methods, after the scout coefficients have been estimated for a given set of parameter values, estimation for different parameter values is faster due to a warm start (when p1 = 1) or because the eigen decomposition has already been computed (when p1 = 2).

Table 3.

Timing comparisons for minimization of the scout criteria. The numbers of CPU seconds required to run four versions of the scout are shown, for λ1 = λ2 = 0.2, n = 100, X dense, and various values of p.

| p | Scout(1, ·) | Scout(1, 1) | Scout(2, ·) | Scout(2, 1) |
|---|---|---|---|---|
| 500 | 1.685 | 1.700 | 0.034 | 0.072 |
| 1000 | 22.432 | 22.504 | 0.083 | 0.239 |
| 2000 | 241.289 | 241.483 | 0.260 | 0.466 |

2.5. Properties of the Scout

In this section, for ease of notation, we will consider an equivalent form of the Scout Procedure obtained by replacing Sxx with XT X and Sxy with XT y.

2.5.1. Similarities between Scout, Ridge Regression, and the Elastic Net

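Let X = UDVT denote the singular value decomposition of X with di the ith diagonal element of D and d1 ≥ d2 ≥ … ≥ dr > dr+1 = … = dp = 0, where r = rank(X) ≤ min(n, p). Consider Scout(2, p2). As previously discussed, the first step in the Scout Procedure corresponds to finding θ that solves

$$\theta^{-1} - 2\lambda_1 \theta = X^T X \tag{12}$$

Since θ and XTX therefore share the same eigenvectors, it follows that θ−1 = V(D2 + D̃2)VT where D̃2 is a p×p diagonal matrix with ith diagonal entry equal to $\tfrac{1}{2}\left(-d_i^2 + \sqrt{d_i^4 + 8\lambda_1}\right)$. It is not difficult to see that ridge regression, Scout(2, ·), and Scout(2, 2) result in similar regression coefficients:

$$
\begin{aligned}
\hat{\beta}^{\mathrm{ridge}} &= V (D^2 + \lambda I)^{-1} D U^T y \\
\hat{\beta}^{\mathrm{Scout}(2,\cdot)} &= V (D^2 + \tilde{D}^2)^{-1} D U^T y \\
\hat{\beta}^{\mathrm{Scout}(2,2)} &= V (D^2 + \tilde{D}^2 + \lambda_2 I)^{-1} D U^T y
\end{aligned}
\tag{13}
$$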

Therefore, while ridge regression simply adds a constant to the diagonal elements of D2 in the least squares solution, Scout(2, ·) instead adds a function that is monotone decreasing in the value of the diagonal element. (The consequences of this alternative shrinkage are explored under a latent variable model in Section 2.6). Scout(2, 2) is a compromise between Scout(2, ·) and ridge regression.
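The difference between the two kinds of shrinkage can be made concrete numerically. The sketch below assumes that the quantity added by Scout(2, ·) to each squared singular value is (−d² + √(d⁴ + 8λ1))/2, which is what the stationarity condition θ−1 − 2λ1θ = XTX implies; the function name and the example values are illustrative only.

```python
import numpy as np

def scout_added_term(d, lam1):
    """Amount added to d_i^2 by Scout(2, .): (-d^2 + sqrt(d^4 + 8*lam1)) / 2."""
    d2 = np.asarray(d, dtype=float) ** 2
    return 0.5 * (-d2 + np.sqrt(d2**2 + 8.0 * lam1))

d = np.array([0.0, 0.5, 1.0, 2.0, 4.0])    # example singular values
lam = 0.5

added_scout = scout_added_term(d, lam)      # decreases as d grows
added_ridge = np.full_like(d, lam)          # ridge adds the same constant everywhere

# Factor by which each least squares component is multiplied.
shrink_scout = d**2 / (d**2 + added_scout)
shrink_ridge = d**2 / (d**2 + added_ridge)
```

Directions with large singular values are therefore shrunk relatively little by Scout(2, ·), while directions with small singular values receive the largest added term.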

In addition, we note that the solutions to the naive elastic net and Scout(2, 1) are quite similar:

| (14) |

In fact, both solutions can be re-written:

| (15) |

where D̂ is a diagonal matrix with diagonal elements , X*= D̂(r)V(r)T, . D(r) and D̂(r) are the r × r submatrices of D and D̂ corresponding to non-zero diagonal elements, and U(r) and V(r) correspond to the first r columns of U and V. From Equation 15, it is clear that Scout(2, 1) solutions can be obtained using software for the elastic net on data X* (which has dimension no greater than the original data X) and y*. In addition, given the similarity between the elastic net and Scout(2, 1) solutions, it is not surprising that Scout(2, 1) shares some of the elastic net’s desirable properties, as is shown in Section 2.5.2.

2.5.2. Variable Grouping Effect

Zou & Hastie (2005) show that unlike the lasso, the elastic net and ridge regression have a variable grouping effect: correlated variables result in similar coefficients. The same is true of Scout(2, 1):

Claim 2

Assume that the predictors are standardized and that y is centered. Let ρ denote the correlation between xi and xj, and let β̂ denote the solution to Scout(2, 1). If sgn(β̂i) = sgn(β̂j), then the following holds:

| (16) |

The proof of Claim 2 is in Section 8.1.2 in the Appendix. Similar results hold for Scout(2, ·) and Scout(2, 2), without the assumptions about the signs of β̂i and β̂j.

2.5.3. Connections to Regression with Orthogonal Features

Assume that the features are standardized, and consider the scout criterion with p1 = 1. For λ1 sufficiently large, the solution θ̂xx to the first scout criterion (Equation 6) is a diagonal matrix with diagonal elements . (More specifically, if for all i ≠ j, then the scout criterion with p1 = 1 results in a diagonal matrix; see Banerjee et al. (2008) Theorem 4). Thus, if is the ith component of the Scout(1, ·) solution, then . If λ2 > 0, then the resulting scout solutions with p2 = 1 are given by a variation of the univariate soft thresholding formula for L1 regression:

| (17) |

Similarly, if p2 = 2, the resulting scout solutions are given by the following formula:

| (18) |

Therefore, as the parameter λ1 is increased, the solutions that are obtained range (up to a scaling) from the ordinary Lp2 multivariate regression solution to the regularized regression solution for orthonormal features.

2.6. An Underlying Latent Variable Model

Let X be a n × p matrix of n observations on p variables, and y a n × 1 vector of response values. Suppose that X and y are generated under the following latent variable model:

| (19) |

where ui and vj are the singular vectors of X, and ε is a n × 1 vector of noise.

Claim 3

Under this model, if d1 > d2, then Scout(2, ·) results in estimates of the regression coefficients that have lower variance than those obtained via ridge regression.

A more technical explanation of Claim 3, as well as a proof, are given in Section 8.1.3 of the Appendix. Note that a simple example of the above model would be the case of a block diagonal covariance matrix with two blocks, where one of the blocks of correlated features is associated with the outcome. In the case of gene expression data, these blocks could represent gene pathways, one of which is responsible for, and has expression that is correlated with, the outcome. Claim 3 shows that if the signal associated with the relevant gene pathway is sufficiently large, then Scout(2, ·) will provide a benefit over ridge.

3. Numerical Studies: Regression via the Scout

3.1. Simulated Data

We compare the performance of ordinary least squares, the lasso, the elastic net, Scout(2, 1), and Scout(1, 1) on a suite of five simulated examples. The first four simulations are based on those used in the original elastic net paper (Zou & Hastie 2005) and the original lasso paper (Tibshirani 1996). The fifth is of our own invention. All five simulations are based on the model y = Xβ + σε where ε ~ N(0, 1). For each simulation, each data set consists of a small training set, a small validation set (used to select the values of the various parameters) and a large test set. We indicate the size of the training, validation, and test sets using the notation ·/·/·. The five simulations are as follows:

1. Each data set consists of 20/20/200 observations, 8 predictors with coefficients β = (3, 1.5, 0, 0, 2, 0, 0, 0), and σ = 3. The pairwise correlation between xi and xj is $0.5^{|i-j|}$.

2. This simulation is as in Simulation 1, except that βi = 0.85 for all i.

3. Each data set consists of 100/100/400 observations and 40 predictors. βi = 0 for i ∈ {1, …, 10} and for i ∈ {21, …, 30}; for all other i, βi = 2. We also set σ = 15, and the correlation between all pairs of predictors is 0.5.

4. Each data set consists of 50/50/400 observations and 40 predictors. βi = 3 for i ∈ {1, …, 15} and βi = 0 for i ∈ {16, …, 40}, and σ = 15. The predictors are generated as follows:

   (20)

   Also, xi ~ N (0, 1) are independent and identically distributed for i = 16, …, 40, and are independent and identically distributed for i = 1, …, 15.

5. Each data set consists of 50/50/400 observations and 50 predictors; βi = 2 for i < 9 and βi = 0 for i ≥ 9. σ = 2 and Cor(xi, xj) = 0.5 · 1{i, j ≤ 9}.
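As an illustration of the set-up, the first simulation can be sketched as follows. The code assumes Gaussian predictors with unit variance and a correlation of 0.5 raised to the power |i − j|, as in the lasso paper on which this simulation is based; the function name `simulate_sim1` and the seeds are hypothetical.

```python
import numpy as np

def simulate_sim1(n, seed=0):
    """Draw n observations from Simulation 1: y = X beta + 3 * eps."""
    rng = np.random.default_rng(seed)
    beta = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
    idx = np.arange(beta.size)
    # Correlation between x_i and x_j decays geometrically with |i - j|.
    Sigma = 0.5 ** np.abs(idx[:, None] - idx[None, :])
    X = rng.multivariate_normal(np.zeros(beta.size), Sigma, size=n)
    y = X @ beta + 3.0 * rng.standard_normal(n)
    return X, y, beta, Sigma

# Training / validation / test sets of size 20/20/200.
X_train, y_train, beta_true, Sigma = simulate_sim1(20, seed=0)
X_val, y_val, _, _ = simulate_sim1(20, seed=1)
X_test, y_test, _, _ = simulate_sim1(200, seed=2)
```

Tuning parameters would be chosen on the validation set and the mean squared error reported on the test set, repeated over 200 such data sets.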

For each simulation, 200 data sets were generated, and the median mean squared errors (with standard errors given in parentheses) are given in Table 4. For each simulation, the two methods resulting in lowest median mean squared error are shown in bold. The scout provides an improvement over the lasso in all simulations. Both scout methods result in lower mean squared error than the elastic net in Simulations 2, 3, and 5; in Simulations 1 and 4, the scout methods are quite competitive. Table 5 shows median L2 distances between the true and estimated coefficients for each of the five models.

Table 4.

Median mean squared error over 200 simulated data sets is shown for each simulation. Standard errors are given in parentheses. For each simulation, the two methods with lowest median mean squared errors are shown in bold. Least squares was not performed for Simulation 5, because p = n.

| Simulation | Least Squares | Lasso | ENet | Scout(1, 1) | Scout(2, 1) |
|---|---|---|---|---|---|
| Sim 1 | 5.83(0.43) | 2.30(0.16) | **1.77(0.20)** | **1.71(0.13)** | 1.85(0.14) |
| Sim 2 | 5.83(0.43) | 2.84(0.10) | 1.90(0.10) | **0.89(0.08)** | **1.15(0.10)** |
| Sim 3 | 147.14(3.63) | 42.03(0.91) | 30.79(0.61) | **20.11(0.16)** | **18.22(0.27)** |
| Sim 4 | 961.57(42.82) | 46.44(2.14) | **20.49(1.97)** | **23.15(1.62)** | 23.70(1.89) |
| Sim 5 | NA | 1.32(0.06) | 0.55(0.02) | **0.27(0.02)** | **0.52(0.04)** |

Table 5.

Median L2 distance over 200 simulated data sets is shown for each simulation; details are as in Table 4.

| Simulation | Least Squares | Lasso | ENet | Scout(1, 1) | Scout(2, 1) |
|---|---|---|---|---|---|
| Sim 1 | 3.05(0.10) | 1.74(0.05) | 1.65(0.08) | **1.58(0.05)** | **1.62(0.06)** |
| Sim 2 | 3.05(0.10) | 1.95(0.02) | 1.62(0.03) | **0.90(0.03)** | **1.04(0.04)** |
| Sim 3 | 17.03(0.22) | 8.91(0.09) | 7.70(0.06) | **6.15(0.01)** | **5.83(0.03)** |
| Sim 4 | 168.40(5.13) | 17.40(0.16) | **3.85(0.13)** | 5.19(2.3) | **3.80(0.14)** |
| Sim 5 | NA | 1.23(0.04) | 1.03(0.03) | **0.62(0.03)** | **0.89(0.02)** |

3.2. Making Use of Observations without Response Values

In Step 1 of the Scout Procedure, we estimate the inverse covariance matrix based on the training set X data, and in Steps 2–4, we compute a penalized least squares solution based on that estimated inverse covariance matrix and Cov(X, y). Step 1 of this procedure does not involve the response y at all.

Now, consider a situation in which one has access to a large amount of X data, but responses are known for only some of the observations. (For instance, this could be the case for a medical researcher who has clinical measurements on hundreds of cancer patients, but survival times for only dozens of patients.) More specifically, let X1 denote the observations for which there is an associated response y, and let X2 denote the observations for which no response data is available. Then, one could estimate the inverse covariance matrix in Step 1 of the Scout Procedure using both X1 and X2, and perform Step 2 using Cov(X1, y). By also using X2 in Step 1, we achieve a more accurate estimate of the inverse covariance matrix than would have been possible using only X1.
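The idea of pooling labeled and unlabeled observations can be sketched as follows. For simplicity this sketch substitutes the closed-form L2 (eigenvalue) solution for Step 1 in place of the L1-penalized estimate used in the experiments of this section, omits the final rescaling step, and uses hypothetical function names; it is meant only to show where X2 enters.

```python
import numpy as np

def theta_l2(S_xx, lam1):
    """L2-penalized inverse covariance estimate via the eigenvalues of S_xx."""
    s, V = np.linalg.eigh(S_xx)
    theta = (-s + np.sqrt(s**2 + 8.0 * lam1)) / (4.0 * lam1)
    return (V * theta) @ V.T

def scout_with_unlabeled(X1, y, X2, lam1):
    """Unscaled coefficients: Step 1 uses X1 and X2, the covariance with y uses X1 only."""
    X_all = np.vstack([X1, X2])
    X_all_c = X_all - X_all.mean(axis=0)
    S_xx = X_all_c.T @ X_all_c / X_all.shape[0]       # pooled feature covariance
    X1c = X1 - X1.mean(axis=0)
    yc = y - y.mean()
    S_xy = X1c.T @ yc / X1.shape[0]                   # Cov(X1, y), labeled data only
    return theta_l2(S_xx, lam1) @ S_xy

rng = np.random.default_rng(0)
X1 = rng.standard_normal((12, 10))                    # labeled observations
y = X1[:, 0] + rng.standard_normal(12)
X2 = rng.standard_normal((36, 10))                    # observations without responses

beta_pooled = scout_with_unlabeled(X1, y, X2, lam1=0.5)
beta_labeled_only = scout_with_unlabeled(X1, y, np.empty((0, 10)), lam1=0.5)
```

Passing an empty X2 recovers the estimate based on the labeled data alone, so the two variants can be compared directly on held-out data.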

Such an approach will not provide an improvement in all cases. For instance, consider the trivial case in which the response is a linear function of the predictors, p < n, and there is no noise: y = X1β. Then, the least squares solution, using only X1 and not X2, is β̂ = (X1TX1)−1X1Ty = (X1TX1)−1X1TX1β = β. In this case, it clearly is best to only use X1 in estimating the inverse covariance matrix. However, one can imagine situations in which one can use X2 to obtain a more accurate estimate of the inverse covariance matrix.

Consider a model in which a latent variable has generated some of the features, as well as the response. In particular, suppose that the data are generated as follows:

| (21) |

In addition, we let εij, , ui ~ N (0, 1) i.i.d. The first five variables are “signal” variables, and the rest are “noise” variables. Suppose that we have three sets of observations: a training set of size n = 12, for which the y values are known, a test set of size n = 200, for which we wish to predict the y values, and an additional set of size n = 36 observations for which we do not know the y values and do not wish to predict them. This layout is shown in Table 6.

Table 6.

Making use of observations w/o response values: Set-up. The training set consists of 12 observations and associated responses. We wish to predict responses on a test set of 200 observations. We have access to 36 observations for which responses are not available and not of interest.

| | Sample Size | Response Description |
|---|---|---|
| Training Set | 12 | Available |
| Test Set | 200 | Unavailable - Must be predicted |
| Additional Obs. | 36 | Unavailable - Not of interest |

We compare the performances of the scout and other regression methods. The scout method is applied in two ways: using only the training set X values to estimate the inverse covariance matrix, and using also the observations without response values. All tuning parameter values are chosen by 5-fold cross-validation. The results in Table 7 are the average mean squared prediction errors obtained over 500 simulations. From the table, it is clear that both versions of scout outperform all of the other methods. In addition, using observations that do not have response values does result in a significant improvement.

Table 7.

Making use of observations w/o response values: Results. Mean squared prediction errors are averaged over 500 simulations; standard errors are shown in parentheses.

| Method | Mean Squared Prediction Error |
|---|---|
| Scout(1, ·) w/Additional Obs. | 25.65 (0.38) |
| Scout(1, ·) w/o Additional Obs. | 29.92 (0.62) |
| ENet | 32.38 (1.04) |
| Lasso | 47.24 (3.58) |
| Σ−1Cov(X, y) | 86.66 (2.07) |
| Least Squares | 1104.9 (428.84) |
| Null Model | 79.24 (0.3) |

In this example, twelve labeled observations on ten variables do not suffice to reliably estimate the inverse covariance matrix. The scout can make use of the observations that lack response values in order to improve the estimate of the inverse covariance matrix, thereby yielding superior predictions. It is worth noting that in this example, the formula β̂ = Σ−1Cov(X1, y) (where Σ is the true covariance matrix) yields an average prediction error that is higher than that of the null model. Therefore, it is clear that in this example, shrinkage is necessary to achieve good predictions.

4. Classification via the Scout

In classification problems, linear discriminant analysis (LDA) can be used if n > p, but when p > n, regularization of the within-class covariance matrix is necessary. Regularized linear discriminant analysis is discussed in Friedman (1989) and Guo et al. (2007). In Guo et al. (2007), the within-class covariance matrix is shrunken, as in ridge regression, by adding a multiple of the identity matrix to the empirical covariance matrix. Here, we instead estimate a shrunken within-class inverse covariance matrix by maximizing its log likelihood, under a multivariate normal model, subject to an Lp penalty on its elements.

4.1. Details of Extension of Scout to Classification

Consider a classification problem with K classes; each observation belongs to some class k ∈ 1, …, K. Let C(i) denote the class of training set observation i, which is denoted Xi. Our goal is to classify observations in an independent test set.

Let μ̂k denote the p × 1 vector that contains the mean of observations in class k, and let Swc denote the estimated within-class covariance matrix (based on the training set) that is used for ordinary LDA. Then, the scout procedure for classification is as follows:

The Scout Procedure for Classification

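1. Compute the shrunken within-class inverse covariance matrix as follows:

   $$\hat{\Sigma}_{wc}^{-1} = \arg\max_{\Sigma^{-1}} \; \log\det \Sigma^{-1} - \mathrm{tr}(S_{wc}\Sigma^{-1}) - \lambda \|\Sigma^{-1}\|_{s} \tag{22}$$

   where λ is a shrinkage parameter.

2. Classify test set observation X to class k′ if δk′(X) = maxk δk(X), where

   $$\delta_k(X) = X^T \hat{\Sigma}_{wc}^{-1} \hat{\mu}_k - \tfrac{1}{2} \hat{\mu}_k^T \hat{\Sigma}_{wc}^{-1} \hat{\mu}_k + \log \pi_k \tag{23}$$

   and πk is the frequency of class k in the training set.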

This procedure is analogous to LDA, but we have replaced Swc with a shrunken estimate.

This classification rule performs quite well on real microarray data (as is shown below), but has the drawback that it makes use of all of the genes. We can remedy this in one of two ways. We can apply the method described above to only the genes with highest univariate rankings on the training data; this is done in the next section. Alternatively, we can apply an L1 penalty in estimating the quantity Σ̂wc−1μ̂k, the product of the shrunken within-class inverse covariance matrix and the class mean; note (from Equation 23) that sparsity in this quantity will result in a classification rule that is sparse in the features. Details of this second method, which is not implemented here, are given in Section 8.2 of the Appendix. We will refer to the method detailed in Equation 23 as Scout(s, ·) because the penalized log likelihood that is maximized in Equation 22 is analogous to the first scout criterion in the regression case. The tuning parameter λ in Equations 22 and 23 can be chosen via cross-validation.
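A compact sketch of such a classifier with an L2 penalty is given below. It assumes the standard LDA discriminant score with the inverse of the within-class covariance replaced by its shrunken estimate, computes that estimate by the eigenvalue approach of Section 2.4, and normalizes the within-class covariance by n; the function names are hypothetical and the details may differ from the authors' implementation.

```python
import numpy as np

def fit_scout_lda(X, labels, lam):
    """Estimate class means, priors, and an L2-shrunken within-class inverse covariance."""
    classes = np.unique(labels)
    means = np.stack([X[labels == k].mean(axis=0) for k in classes])
    priors = np.array([(labels == k).mean() for k in classes])
    # Residuals of each observation from its own class mean.
    R = X - means[np.searchsorted(classes, labels)]
    S_wc = R.T @ R / X.shape[0]
    s, V = np.linalg.eigh(S_wc)
    theta = (-s + np.sqrt(s**2 + 8.0 * lam)) / (4.0 * lam)
    return classes, means, priors, (V * theta) @ V.T

def predict_scout_lda(model, X_new):
    """Assign each row of X_new to the class with the largest discriminant score."""
    classes, means, priors, Theta = model
    A = means @ Theta                                  # row k is mu_k^T Theta
    scores = X_new @ A.T - 0.5 * np.sum(A * means, axis=1) + np.log(priors)
    return classes[np.argmax(scores, axis=1)]

rng = np.random.default_rng(0)
X = np.vstack([rng.standard_normal((10, 30)) - 3.0,   # class 0
               rng.standard_normal((10, 30)) + 3.0])  # class 1
labels = np.array([0] * 10 + [1] * 10)

model = fit_scout_lda(X, labels, lam=1.0)
predicted = predict_scout_lda(model, X)
```

The example uses p = 30 features and only 20 observations, a setting in which ordinary LDA cannot be applied because the within-class covariance matrix is singular.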

4.2. Ramaswamy Data

We assess the performance of this method on the Ramaswamy microarray data set, which is discussed in detail in Ramaswamy et al. (2002) and explored further in Zhu & Hastie (2004) and Guo et al. (2007). It consists of a training set of 144 samples and a test set of 54 samples, each of which contains measurements on 16063 genes. The samples are classified into 14 distinct cancer types. We compare the performance of Scout(2, ·) to nearest shrunken centroids (NSC) (Tibshirani et al. 2002, 2003), the L2 penalized multinomial (Zhu & Hastie 2004), the support vector machine (SVM) with one-versus-all classification (Ramaswamy et al. 2002), and regularized discriminant analysis (RDA) (Guo et al. 2007). For each method, tuning parameter values were chosen by cross-validation. In addition to running Scout(2, ·) on all 16063 genes, we also ran it on only the genes with the highest univariate t-statistics (Tibshirani et al. 2002) in the training set. In the latter case, cross-validation was also used to select the number of genes to include in the model. (The model with 4000 genes had the lowest cross-validation error.) Note that the selection of genes with the highest t-statistics was performed separately in each training fold during cross-validation.
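Selecting genes separately within each training fold keeps the held-out samples from influencing which genes are chosen. The sketch below illustrates that bookkeeping only. As an assumption made for brevity, it ranks genes by a per-gene one-way ANOVA F-statistic, which stands in for the t-statistics of Tibshirani et al. (2002); the function names and the fold assignment scheme are likewise hypothetical.

```python
import numpy as np

def anova_f_scores(X, labels):
    """Per-gene one-way ANOVA F-statistic (a stand-in for the paper's t-statistics)."""
    classes = np.unique(labels)
    n, K = X.shape[0], len(classes)
    grand_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for k in classes:
        Xk = X[labels == k]
        between += len(Xk) * (Xk.mean(axis=0) - grand_mean) ** 2
        within += ((Xk - Xk.mean(axis=0)) ** 2).sum(axis=0)
    return (between / (K - 1)) / (within / (n - K))

def cv_folds_with_selection(X, labels, n_genes, n_folds=5, seed=0):
    """Yield (train_idx, held_out_idx, selected_genes); genes are ranked on training rows only."""
    rng = np.random.default_rng(seed)
    fold_of = rng.permutation(len(labels)) % n_folds
    for f in range(n_folds):
        train = np.flatnonzero(fold_of != f)
        held_out = np.flatnonzero(fold_of == f)
        scores = anova_f_scores(X[train], labels[train])
        selected = np.argsort(scores)[::-1][:n_genes]  # indices of the top-scoring genes
        yield train, held_out, selected
```

A classifier would then be fit on `X[train][:, selected]` and evaluated on `X[held_out][:, selected]`, repeating over a grid of `n_genes` values to choose the number of genes.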

The results can be seen in Table 8. Scout(2, ·) applied to the 4000 genes with the highest training set t-statistics had the lowest test error rate (8 errors out of 54) of all the methods that we considered.

Table 8.

Cross-validation and test set errors for the following methods are compared on the Ramaswamy Data: Scout(2, ·), Scout(2, ·) using the genes with highest t-statistics on the training set, regularized discriminant analysis, nearest shrunken centroids, support vector machine using one-versus-all classification, and the L2 penalized multinomial. With the exception of RDA, all methods were performed after cube roots of the data were taken and the patients were standardized. RDA was run on the standardized patients, without taking cube roots, as this led to much better performance.

| Method | CV Err. (of 144) | Test Err. (of 54) | No. Genes |
|---|---|---|---|
| NSC | 35 | 17 | 5217 |
| L2 Penalized Mult. | 29 | 15 | 16063 |
| SVM | 33 | 14 | 16063 |
| RDA | 34 | 10 | 16063 |
| Scout(2, ·) | 38 | 11 | 16063 |
| Scout(2, ·) High T-stat. | 21 | 8 | 4000 |

5. Extension to Generalized Linear Models and the Cox Model

We have discussed the application of the scout to classification and regression problems, and we have shown examples in which these methods perform well. In fact, the scout can also be used in fitting generalized linear models, by replacing the iteratively reweighted least squares step with a covariance-regularized regression. In particular, we discuss the use of the scout in the context of fitting a Cox proportional hazards model for survival data. We present an example involving four lymphoma microarray datasets in which the scout results in improved performance relative to other methods.

5.1. Details of Extension of Scout to the Cox Model

Consider survival data of the form (yi, xi, δi) for i ∈ {1, …, n}, where δi is an indicator variable that equals 1 if observation i is complete and 0 if censored, and xi is a vector of predictors (xi1, …, xip) for individual i. Failure times are t1 < t2 < … < tk; there are di failures at time ti. We wish to estimate the parameter β = (β1, …, βp)T in the proportional hazards model λ(t|x) = λ0(t)exp(Σj xjβj). We assume that censoring is noninformative. Letting η = Xβ, D the set of indices of the failures, Rr the set of indices of the individuals at risk at time tr, and Dr the set of indices of the failures at tr, the partial likelihood is given as follows (see e.g. Kalbfleisch & Prentice (1980)):

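$$L(\beta) = \prod_{r \in D} \frac{\exp\left(\sum_{j \in D_r} \eta_j\right)}{\left(\sum_{j \in R_r} \exp(\eta_j)\right)^{d_r}} \tag{24}$$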

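In order to fit the proportional hazards model, we must find the β that maximizes the partial likelihood above. Let $\ell = \log L$. Note that $\partial\ell/\partial\beta = X^T(\partial\ell/\partial\eta)$ and $\partial^2\ell/\partial\beta\,\partial\beta^T = X^T(\partial^2\ell/\partial\eta\,\partial\eta^T)X$. Let $u = \partial\ell/\partial\eta$ and $A = -\partial^2\ell/\partial\eta\,\partial\eta^T$, both evaluated at β = β0. The iteratively reweighted least squares algorithm that implements the Newton-Raphson method, for β0 the value of β from the previous step, involves finding β that solves

$$X^T A X(\beta - \beta_0) = X^T u. \tag{25}$$

This is equivalent to finding β that minimizes

$$(y^* - X^*\beta^*)^T(y^* - X^*\beta^*), \tag{26}$$

where $X^* = A^{1/2}X$, $y^* = A^{-1/2}u$, and $\beta^* = \beta - \beta_0$ (Green 1984).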

The traditional iteratively reweighted least squares algorithm involves solving the above least squares problem repeatedly, recomputing y* and X* at each step and setting β0 equal to the solution β attained at the previous iteration. We propose to solve the above least squares problem using the scout, rather than by a simple linear regression. We have found empirically that good results are obtained if we initially set β0 = 0, and then perform just one Newton-Raphson step (using the scout). This is convenient, since for data sets with many features, solving a scout regression can be time-consuming. Therefore, our implementation of the scout method for survival data involves simply performing one Newton-Raphson step, beginning with β0 = 0.
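To make the single Newton-Raphson step concrete, the following sketch computes the derivative of the log partial likelihood with respect to η and the negative second derivative, then solves one regularized least squares problem starting from β0 = 0. Several things here are assumptions rather than the authors' code: the partial likelihood is taken in its Breslow form, the function names are hypothetical, and a plain ridge penalty is swapped in where the paper would use a scout regression.

```python
import numpy as np

def cox_score_and_information(eta, time, event):
    """Return u = dl/d(eta) and A = -d^2 l / d(eta) d(eta)^T for the Breslow log partial likelihood."""
    n = len(eta)
    w = np.exp(eta - eta.max())  # rescaling leaves the risk-set ratios unchanged
    u = np.asarray(event, dtype=float).copy()
    A = np.zeros((n, n))
    for i in np.flatnonzero(event):
        at_risk = time >= time[i]        # risk set at the failure time of observation i
        p = np.where(at_risk, w, 0.0)
        p /= p.sum()
        u -= p
        A += np.diag(p) - np.outer(p, p)
    return u, A

def one_step_coefficients(X, time, event, ridge=1.0):
    """One Newton-Raphson step from beta0 = 0, with a ridge penalty standing in for the scout."""
    beta0 = np.zeros(X.shape[1])
    u, A = cox_score_and_information(X @ beta0, time, event)
    S_xx = X.T @ A @ X  # plays the role of X*^T X* with X* = A^{1/2} X
    S_xy = X.T @ u      # plays the role of X*^T y* with y* = A^{-1/2} u
    return beta0 + np.linalg.solve(S_xx + ridge * np.eye(X.shape[1]), S_xy)
```

Working with the cross-products XᵀAX and Xᵀu avoids forming A^{−1/2} explicitly, which matters because A computed this way has rows that sum to zero and is therefore singular.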

Using the notation and , the Scout Procedure for survival data is almost identical to the regression case, as follows:

The Scout Procedure for the Cox Model

1. Let Θ̂xx maximize

   (27)

2. Let Θ̂ maximize

   (28)

   where the top left p × p submatrix of Θ is constrained to equal Θ̂xx, obtained in the previous step.

3. Compute β̂ = −Θ̂xy/Θ̂yy.

4. Let β̂* = cβ̂, where c is the coefficient of a Cox proportional hazards model fit to y using Xβ̂ as a predictor.

β̂* obtained in Step 4 is the vector of estimated coefficients for the Cox proportional hazards model. In the procedure above, λ1, λ2 > 0 are tuning parameters. In keeping with the notation of previous sections, we will refer to the resulting coefficient estimates as Scout(p1, p2).

5.2. Lymphoma Data

We illustrate the effectiveness of the scout method on survival data using four different data sets, all involving survival times and gene expression measurements for patients with diffuse large B-cell lymphoma. The four data sets are as follows: Rosenwald et al. (2002) (“Rosenwald”), which consists of 240 patients, Shipp et al. (2002) (“Shipp”), which consists of 58 patients, Hummel et al. (2006) (“Hummel”), which consists of 81 patients, and Monti et al. (2005) (“Monti”), which consists of 129 patients. For consistency and ease of comparison, we considered only a subset of around 1482 genes that were present in all four data sets.

We randomly split each of the data sets into a training set, a validation set, and a test set of equal sizes. For each data set, we fit four models to the training set: the L1 penalized Cox proportional hazards (“L1 Cox”) method of Park & Hastie (2007), the supervised principal components (SPC) method of Bair & Tibshirani (2004), Scout(2, 1), and Scout(1, 1). For each data set, we chose the tuning parameter values that resulted in the predictor that gave the highest log likelihood when used to fit a Cox proportional hazards model on the validation set (this predictor was Xvalβtrain for L1 Cox and scout, and it was the first supervised principal component for SPC). We tested the resulting models on the test set. The mean value of 2(log(L) − log(Lo)) over ten separate training/validation/test set splits is given in Table 9, where L denotes the likelihood of the Cox proportional hazards model fit on the test set using the predictor obtained from the training set (for L1 Cox and scout, this was Xtestβtrain, and for SPC, this was the first supervised principal component), and Lo denotes the likelihood of the null model. From Tables 9 and 10, it is clear that the scout results in predictors that are quite competitive with, if not better than, the competing methods on all four data sets.
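The evaluation criterion 2(log(L) − log(Lo)) can be computed by fitting a Cox model with a single coefficient to the test set, using the one-dimensional predictor obtained from the training set. The sketch below is one way to do this and rests on assumptions: it uses the Breslow form of the partial likelihood, a Newton-Raphson iteration with step halving, and hypothetical function names; `z` stands for a predictor such as Xtestβtrain.

```python
import numpy as np

def log_partial_likelihood(eta, time, event):
    """Breslow log partial likelihood for the linear predictor eta."""
    total = 0.0
    for i in np.flatnonzero(event):
        risk = eta[time >= time[i]]
        m = risk.max()
        total += eta[i] - (m + np.log(np.exp(risk - m).sum()))  # stable log-sum-exp
    return total

def fit_single_predictor(z, time, event, n_iter=50, tol=1e-10):
    """Newton-Raphson with step halving for the scalar coefficient c of predictor z."""
    c = 0.0
    for _ in range(n_iter):
        grad, info = 0.0, 0.0
        for i in np.flatnonzero(event):
            zr = z[time >= time[i]]
            w = np.exp(c * zr - (c * zr).max())
            w /= w.sum()
            mean = w @ zr
            grad += z[i] - mean
            info += w @ (zr - mean) ** 2
        if info <= 0.0:
            break
        step = grad / info
        current = log_partial_likelihood(c * z, time, event)
        while log_partial_likelihood((c + step) * z, time, event) < current:
            step /= 2.0  # shrink the step until the likelihood does not decrease
        c += step
        if abs(step) < tol:
            break
    return c

def likelihood_ratio_statistic(z, time, event):
    """Return 2(log L - log Lo), where Lo is the likelihood of the null model (c = 0)."""
    c = fit_single_predictor(z, time, event)
    null = log_partial_likelihood(np.zeros_like(z), time, event)
    return 2.0 * (log_partial_likelihood(c * z, time, event) - null)
```

Since the coefficient is refit on the test set, the statistic does not depend on the scale of the predictor, so methods whose coefficient vectors differ in overall magnitude can still be compared on it.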

Table 9.

Mean of 2(log(L) − log(Lo)) on Survival Data. L1 Cox, supervised principal components, Scout(1, 1), and Scout(2, 1) are compared on the Hummel, Monti, Rosenwald, and Shipp data sets over ten random training/validation/test set splits. The predictor obtained from the training set is fit on the test set using a Cox proportional hazards model, and the mean value of 2(log(L) − log(Lo)) over the ten repetitions is reported. For each data set, the two highest mean values of 2(log(L) − log(Lo)) are shown in bold.

| Data Set | L1 Cox | SPC | Scout(1, 1) | Scout(2, 1) |
|---|---|---|---|---|
| Hummel | 2.640 | **3.823** | **4.245** | 3.293 |
| Monti | 1.647 | 1.231 | **2.149** | **2.606** |
| Rosenwald | **4.129** | 3.542 | 3.987 | **4.930** |
| Shipp | 1.903 | 1.004 | **2.807** | **2.627** |

Table 10.

Median Number of Genes Used for Survival Data. L1 Cox, supervised principal components, Scout(1, 1) and Scout(2, 1) are compared on the Hummel, Monti, Rosenwald, and Shipp data sets over ten random training/validation/test set splits; the median number of genes used in each of the resulting models is reported.

| Data Set | L1 Cox | SPC | Scout(1, 1) | Scout(2, 1) |
|---|---|---|---|---|
| Hummel | 14 | 33 | 78 | 13 |
| Monti | 18.5 | 17 | 801.5 | 144.5 |
| Rosenwald | 37.5 | 32 | 294 | 85 |
| Shipp | 5.5 | 10 | 4.5 | 5 |

6. Discussion

We have presented covariance-regularized regression, a class of regression procedures (the “scout” family) obtained by maximizing a penalized log likelihood of the inverse covariance matrix of the data, rather than by minimizing a penalized sum of squared errors. We have shown that three well-known regression methods (ridge, the lasso, and the elastic net) fall into the covariance-regularized regression framework. In addition, we have explored some new methods within this framework. We have extended the covariance-regularized regression framework to classification and generalized linear model settings, and we have demonstrated the performance of the resulting methods on a number of gene expression data sets.

A drawback of the scout method is that when p1 = 1 and the number of features is large, maximizing the first scout criterion can be quite slow. When more than a few thousand features are present, the scout with p1 = 1 is not a viable option at present. However, the scout with p1 = 2 is very fast, and we are confident that computational and algorithmic improvements will lead to increases in the number of features for which the scout criteria can be maximized with p1 = 1.

Covariance-regularized regression represents a new way to understand existing regularization methods for regression, as well as an approach to develop new regularization methods that appear to perform better in practice in many examples.

Acknowledgments

We thank Trevor Hastie for showing us the solution to the penalized log likelihood with an L2 penalty. We thank both Trevor Hastie and Jerome Friedman for valuable discussions and for providing the code for the L2 penalized multinomial and the elastic net. Daniela Witten was supported by a National Defense Science and Engineering Graduate Fellowship. Robert Tibshirani was partially supported by National Science Foundation Grant DMS-9971405 and National Institutes of Health Contract N01-HV-28183.

8. Appendix

8.1. Proofs of Claims

8.1.1. Proof of Claim 1

First, suppose that p2 = 1. Consider the penalized log-likelihood

| (29) |

with Θxx, the top left p × p submatrix of Θ, fixed to equal the matrix that maximizes the log likelihood in Step 1 of the Scout Procedure. It is clear that if Θ̂ maximizes the log likelihood, then . The subgradient equation for maximization of the remaining portion of the log-likelihood is

| (30) |

where Γi = 1 if the ith element of Θxy is positive, Γi = −1 if the ith element of Θxy is negative, and otherwise Γi is between −1 and 1.

Let β = Θxx(Θ−1)xy. Therefore, we equivalently wish to find β that solves

| (31) |

From the partitioned inverse formula, it is clear that sgn(β) = −sgn(Θxy). Therefore, our task is equivalent to finding β which minimizes

| (32) |

Of course, this is Equation 11. It is an L1-penalized regression of y onto X, using only the inner products, with Sxx replaced with (Θxx)−1. In other words, β̂ that solves Equation 11 is given by Θxx(Θ−1)xy, where Θ solves Step 2 of the Scout Procedure.

Now, the solution to Step 3 of the Scout Procedure is −Θxy/Θyy. By the partitioned inverse formula, Θxx(Θ−1)xy + Θxy(Θ−1)yy = 0, so −Θxy/Θyy = Θxx(Θ−1)xy/((Θ−1)yyΘyy). In other words, the solution to Step 3 of the Scout Procedure and the solution to Equation 11 differ by a factor of (Θ−1)yyΘyy. Since Step 4 of the Scout Procedure involves scaling the solution to Step 3 by a constant, it is clear that one can replace Step 3 of the Scout Procedure with the solution to Equation 11.
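The partitioned inverse identity used above is easy to check numerically. The sketch below does so in the unpenalized case, where Θ is simply the inverse of the joint cross-product matrix of (X, y), so that −Θxy/Θyy should reproduce the ordinary least squares coefficients. The function name and the returned dictionary keys are hypothetical and serve only this illustration.

```python
import numpy as np

def partitioned_inverse_check(X, y):
    """Compare -Theta_xy / Theta_yy with Theta_xx (Theta^{-1})_xy and with least squares."""
    p = X.shape[1]
    Z = np.column_stack([X, y])
    S = Z.T @ Z                 # joint cross-product matrix; here Theta^{-1} = S
    Theta = np.linalg.inv(S)
    Theta_xx, Theta_xy, Theta_yy = Theta[:p, :p], Theta[:p, p], Theta[p, p]
    S_xy, S_yy = S[:p, p], S[p, p]
    return {
        # Theta_xx (Theta^{-1})_xy + Theta_xy (Theta^{-1})_yy, which should vanish
        "identity_residual": Theta_xx @ S_xy + Theta_xy * S_yy,
        "step3": -Theta_xy / Theta_yy,
        "unscaled": Theta_xx @ S_xy,
        "scale": S_yy * Theta_yy,  # the factor (Theta^{-1})_yy Theta_yy
        "ols": np.linalg.lstsq(X, y, rcond=None)[0],
    }
```

In the output, `unscaled` equals `step3` multiplied by `scale`, which is the constant factor that Step 4 of the procedure absorbs.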

Now, suppose p2 = 2. To find Θxy that maximizes this penalized log-likelihood, we take the gradient and set it to zero:

| (33) |

Again, let β = Θxx(Θ−1)xy. Therefore, we equivalently wish to find β that solves

| (34) |

for some new constant λ3, using the fact, from the partitioned inverse formula, that Θxy = −β/(Θ−1)yy. The solution β minimizes

Of course, this is again Equation 11. Therefore, β̂ that solves Equation 11 is given (up to scaling by a constant) by Θxx(Θ−1)xy, where Θ solves Step 2 of the Scout Procedure. As before, by the partitioned inverse formula, and since Step 4 of the Scout Procedure involves scaling the solution to Step 3 by a constant, it is clear that one can replace Step 3 of the Scout Procedure with the solution to Equation 11.

8.1.2. Proof of Claim 2

Recall that the solution to Scout(2, 1) minimizes the following:

| (35) |

where D̃2 is a p × p diagonal matrix with ith diagonal entry equal to and X = UDVT. Equivalently, the solution minimizes

| (36) |

where D̄2 is the diagonal matrix with elements , because V is p × p orthogonal. It is easy to see that the solution also minimizes the following:

| (37) |

where . If β̂ minimizes Equation 37, and if we assume that sgn(β̂i) = sgn(β̂j), then it follows that

| (38) |

Note that

| (39) |

Therefore,

| (40) |

Now, . Since we assumed that the features are standardized, it follows that where ρ is the correlation between xi and xj. It also is easy to see that ||(D̄VT)i − (D̄VT)j||2 ≤ 1 − ρ. Therefore, it follows that

| (41) |

8.1.3. Proof of Claim 3

Consider data generated under the latent variable model given in Section 2.6. Note that it follows that

| (42) |

where d3 = … = dp = 0. We consider two options for the regression of y onto X: ridge regression and Scout(2, ·). Let β̂rr and β̂sc denote the resulting estimates, and let λrr and λsc be the tuning parameters of the two methods, respectively.

| (43) |

Similarly, using the fact that Scout(2, ·) results in replacing with :

| (44) |

It is clear that the signal part of β̂rr is , and that the signal part of β̂sc is . The following relationship between λrr and λsc results in signals that are equal:

| (45) |

From now on, we assume that Equation 45 holds. It is clear that the noise parts of β̂rr and β̂sc are and . Using Equation 45, we know that . So it suffices to compare and . Recall that d2 > 0. So,

| (46) |

If d1 > d2, then the above quantity is negative; if d1 < d2, then it is positive. Therefore, the scout solution has a smaller noise term than the ridge solution if and only if d1 > d2. In other words, if the portion of X that is correlated with y has a stronger signal than the portion that is orthogonal to y, then Scout(2, ·) will perform better than ridge, because it will shrink the parts of the inverse covariance matrix that correspond to variables that are uncorrelated with the response.

8.2. Feature Selection for Scout LDA

The method that we propose in Section 4.1 can be easily modified in order to perform built-in feature selection. Using the notation in Section 4.1, we observe that

| (47) |

and so we replace μ̂k in Equation 23 with

| (48) |

The above can be solved via an L1 regression, and it gives the following classification rule for a test observation X:

| (49) |

Contributor Information

Daniela M. Witten, Department of Statistics, Stanford University, 390 Serra Mall, Stanford CA 94305, USA. E-mail: dwitten@stanford.edu

Robert Tibshirani, Departments of Statistics and Health Research & Policy, Stanford University, 390 Serra Mall, Stanford CA 94305, USA. E-mail: tibs@stat.stanford.edu.

References

- Bair E, Tibshirani R. Semi-supervised methods to predict patient survival from gene expression data. PLOS Biology. 2004;2:511–522. doi: 10.1371/journal.pbio.0020108.

- Banerjee O, El Ghaoui LE, d’Aspremont A. Model selection through sparse maximum likelihood estimation for multivariate gaussian or binary data. Journal of Machine Learning Research. 2008.

- Frank I, Friedman J. A statistical view of some chemometrics regression tools (with discussion). Technometrics. 1993;35(2):109–148.

- Friedman J. Regularized discriminant analysis. Journal of the American Statistical Association. 1989;84:165–175.

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. 2008a. In preparation.

- Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008b. doi: 10.1093/biostatistics/kxm045.

- Green PJ. Iteratively reweighted least squares for maximum likelihood estimation, and some robust and resistant alternatives. Journal of the Royal Statistical Society, Series B. 1984;46:149–192.

- Guo Y, Hastie T, Tibshirani R. Regularized linear discriminant analysis and its application in microarrays. Biostatistics. 2007;8:86–100. doi: 10.1093/biostatistics/kxj035.

- Hoerl AE, Kennard R. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics. 1970;12:55–67.

- Hummel M, Bentink S, Berger H, Klappwe W, Wessendorf S, Barth FTE, Bernd H-W, Cogliatti SB, Dierlamm J, Feller AC, Hansmann ML, Haralambieva E, Harder L, Hasenclever D, Kuhn M, Lenze D, Lichter P, Martin-Subero JI, Moller P, Muller-Hermelink H-K, Ott G, Parwaresch RM, Pott C, Rosenwald A, Rosolowski M, Schwaenen C, Sturzenhofecker B, Szczepanowski M, Trautmann H, Wacker H-H, Spang R, Loefler M, Trumper L, Stein H, Siebert R. A biological definition of Burkitt’s lymphoma from transcriptional and genomic profiling. New England Journal of Medicine. 2006;354:2419–2430. doi: 10.1056/NEJMoa055351.

- Kalbfleisch J, Prentice R. The statistical analysis of failure time data. Wiley; New York: 1980.

- Meinshausen N, Bühlmann P. High dimensional graphs and variable selection with the lasso. Annals of Statistics. 2006;34:1436–1462.

- Monti S, Savage KJ, Kutok JL, Feuerhake F, Kurtin P, Mihm M, Wu B, Pasqualucci L, Neuberg D, Aguiar RCT, Dal Cin P, Ladd C, Pinkus GS, Salles G, Harris NL, Dalla-Favera R, Habermann TM, Aster JC, Golub TR, Shipp MA. Molecular profiling of diffuse large B-cell lymphoma identifies robust subtypes including one characterized by host inflammatory response. Blood. 2005;105:1851–1861. doi: 10.1182/blood-2004-07-2947.

- Park MY, Hastie T. An L1 regularization path algorithm for generalized linear models. Journal of the Royal Statistical Society, Series B. 2007;69(4):659–677.

- Ramaswamy S, Tamayo P, Rifkin R, Mukherjee S, Yeang C, Angelo M, Ladd C, Reich M, Latulippe E, Mesirov J, Poggio T, Gerald W, Loda M, Lander E, Golub T. Multiclass cancer diagnosis using tumor gene expression signature. PNAS. 2002;98:15149–15154. doi: 10.1073/pnas.211566398.

- Rosenwald A, Wright G, Chan WC, Connors JM, Campo E, Fisher RI, Gascoyne RD, Muller-Hermelink HK, Smeland EB, Staudt LM. The use of molecular profiling to predict survival after chemotherapy for diffuse large B-cell lymphoma. The New England Journal of Medicine. 2002;346:1937–1947. doi: 10.1056/NEJMoa012914.

- Shipp MA, Ross KN, Tamayo P, Weng AP, Kutok JL, Aguiar RC, Gaasenbeek M, Angelo M, Reich M, Pinkus GS, Ray TS, Koval MA, Last KW, Norton A, Lister TA, Mesirov J, Neuberg DS, Lander ES, Aster JC, Golub TR. Diffuse large B-cell lymphoma outcome prediction by gene-expression profiling and supervised machine learning. Nature Medicine. 2002;8:68–74. doi: 10.1038/nm0102-68.

- Tibshirani R. Regression shrinkage and selection via the lasso. J Royal Statist Soc B. 1996;58:267–288.

- Tibshirani R, Hastie T, Narasimhan B, Chu G. Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proc Natl Acad Sci. 2002;99:6567–6572. doi: 10.1073/pnas.082099299.

- Tibshirani R, Hastie T, Narasimhan B, Chu G. Class prediction by nearest shrunken centroids, with applications to DNA microarrays. Statistical Science. 2003:104–117.

- Zhu J, Hastie T. Classification of gene microarrays by penalized logistic regression. Biostatistics. 2004;5(2):427–443. doi: 10.1093/biostatistics/5.3.427.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. J Royal Stat Soc B. 2005;67:301–320.