Abstract

When both visual and proprioceptive information are available about the position of a part of the body, the brain weights and combines these sources to form a single estimate, often modeled by minimum variance integration. These weights are known to vary with different circumstances, but the type of information causing the brain to change weights (reweight) is unknown. Here we studied reweighting in the context of estimating the position of a hand for the purpose of reaching to it with the other hand. Subjects reached to visual (V), proprioceptive (P), or combined (VP) targets in a virtual reality setup. We calculated weights for vision and proprioception by comparing endpoints on VP reaches with endpoints on P and V reaches. Endpoint visual feedback was manipulated to control completely for the error history seen by subjects. In different experiments, we manipulated target salience, conscious effort, or statistics of the visual error history to see if these cues could cause reweighting. Most subjects could reweight strongly by conscious effort. Changes in target salience reliably caused reweighting, but seen error history alone did not. We also found that experimental weights can be predicted by minimizing the variance of visual and proprioceptive estimates, supporting the idea that minimum variance integration is an important principle of sensorimotor processing.

INTRODUCTION

One of the most common reaching behaviors involves moving objects from one hand to the other. Thus, estimating a hand's position for the purpose of reaching to it with the other hand is an important computation for humans (e.g., placing a teacup in a saucer held by the other hand). Hand position can be estimated by two independent sensory modalities: vision and proprioception. Because of inherent differences in the two sensory processing pathways, these estimates are not necessarily in perfect agreement (Smeets et al. 2006). Yet it is still thought to be optimal to weight and combine the available estimates into a single integrated estimate of hand position with which to guide movements (Ghahramani et al. 1997). The determination of these weights is often modeled by minimum variance integration (e.g., Ernst and Banks 2002; Ghahramani et al. 1997; van Beers et al. 1996; Welch 1978), which rests on the assumption that more variable sensory modalities are given less weight by the CNS in determining an integrated position estimate (Ghahramani et al. 1997).

However, the circumstances of sensory integration are more complex than such a basic model would suggest (Sober and Sabes 2005). Vision is often said to dominate proprioception (e.g., Botvinick and Cohen 1998; Hagura et al. 2007), but there are a number of circumstances in which other modalities are weighted as much as or greater than vision (e.g., Ernst and Banks 2002; Mon-Williams et al. 1997; Naito 2004; Shams et al. 2000; van Beers et al. 2002). Indeed, the reliability and usefulness of different sensory modalities, and the weights assigned to them, are not constant, depending instead on environmental conditions (Mon-Williams et al. 1997); characteristics of sensory organs (van Beers et al. 1999, 2002) and sensory information (Ernst and Banks 2002); attentional factors (Warren and Schmitt 1978; Welch and Warren 1980); and computations being performed (Sober and Sabes 2003, 2005).

While it is known that weights vary in different circumstances, the cues that cause the brain to reweight, i.e., to dynamically switch which modality it “listens to” the most in estimating a hand's position, are unknown. For example, when a person down-weights vision in low-light conditions (Mon-Williams et al. 1997), is it due to a conscious effort to rely less on vision? Is it because the person has experienced increased movement errors when relying on vision in such circumstances in the past? Does the decrease in salience of the target image itself cause reweighting? We manipulated conscious effort, seen error history, and target salience in separate experiments to determine which of these cues could cause subjects to reweight their reliance on vision versus proprioception when estimating the position of the target hand.

Minimum variance integration (i.e., weighting the least variable sensory estimate the most) has been demonstrated in a variety of tasks, e.g., height discrimination (Ernst and Banks 2002) and sound localization (Ghahramani et al. 1997). We therefore also asked whether subjects' weights could be predicted by applying the minimum variance model to their estimates of visual and proprioceptive target position.

METHODS

Our paradigm required people to reach to visual targets (V), proprioceptive targets (P), or both simultaneously (VP) in a virtual reality setup with no vision of either arm. Subjects received endpoint visual feedback only on the V and P reaches. The VP reaches were used to determine weightings of vision versus proprioception when estimating the position of a target hand with both modalities available. The different manipulations described in the following text were used to elicit a change in weightings.

Subjects

We studied 64 individuals (34 women, 30 men; age: 19–65, median: 27.5 yr). Each gave written informed consent. Twenty of the subjects participated in more than one experiment, making the total sample size 89. All subjects stated that they were neurologically healthy and had normal or corrected-to-normal vision. Protocols were approved by the Johns Hopkins Institutional Review Board.

Experimental setup

Experiments were performed in a reflected rear projection setup (Fig. 1A). Infrared-emitting markers were placed on each index fingertip, and an Optotrak 3020 (Northern Digital) was used to record three-dimensional (3D) position data at 100 Hz. Black fabric obscured the subject's view of both arms and the room beyond the apparatus.

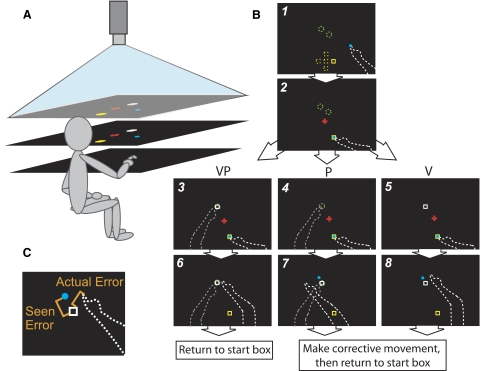

Fig. 1.

Experimental setup. A: subject looks down into a horizontal mirror at chin level (middle) and sees images reflected from a horizontal rear projection screen (top). The reaching hand rests on a hard acrylic reaching surface (bottom) 22.5 cm below the mirror. The target hand remains below the reaching surface at all times. The mirror is positioned midway between screen and reaching surface, such that objects in the mirror appear to be in the plane of the reaching surface. Not pictured: black drapes obscuring the subject's vision of his arms and the room outside the apparatus. B: timeline of a single reach and bird's eye view of the display. Solid images were visible to the subject; dashed lines were not. The entire display was scaled to 80% of the subject's maximum reach, for a reaching distance of 13–33 cm. Subjects were physically able to point ≥15 cm past the farther target. Figure not to scale. Screen 1: subjects placed their reaching finger (white dashed line) in the yellow start box, which appeared in 1 of 5 possible positions (yellow dashed squares), with the aid of an 8 mm-diam blue cursor indicating reaching finger position (veridical to minimize proprioceptive drift between reaches: Wann and Ibrahim 1992). Possible start box positions were at origin (∼200 mm in front of subject's chest at midline; 1), 20 mm ahead of origin (2), 20 mm behind origin (3), 20 mm left of origin (4), or 20 mm right of origin (5). Screen 2: a red fixation cross appeared, and subjects were instructed to fixate on it for the duration of the reach. Screens 3–5: subjects positioned their target finger (dashed gray line) as instructed: on 1 of the 2 tactile markers stuck to the bottom of the reaching surface ∼40 mm apart (green dashed circles) for a proprioceptive (P) or visual-proprioceptive (VP) target, or down in their lap for a V target, which appeared as a 12 × 12-mm white box in 1 of the 2 possible target locations. 
On VP reaches, the V target was projected in the same location as the P target. For a subject of about average arm length, target position 1 was 18 mm right of origin and 118 mm ahead of origin. Target position 2 was 18 mm left of origin and 155 mm ahead of origin. Once both hands were correctly positioned, subjects reached toward the target with the cursor disappearing at movement initiation. Movement speed was not restricted, and subjects were permitted to make adjustments. Screens 6–8: when the reaching finger had not moved >2 mm for 2 s, the red fixation cross disappeared and the endpoint visual feedback (blue dot, P and V reaches only) appeared in a predetermined location unrelated to the reaching finger's true endpoint position. On P reaches, a 12 × 12-mm white box also appeared directly over the target finger position so subjects could see the error. If feedback indicated a miss, subjects had to make a corrective movement such that the blue feedback dot reached the center of the target. We included this step because of evidence that visual feedback while a hand is moving is a richer signal than static feedback from a stationary hand (Cheng and Sabes 2007); we also found that subjects were less likely to believe static feedback was veridical. If endpoint visual feedback was initially within 8 mm of the target's center, the target appeared to explode, indicating a hit, and no correction was required. C: close-up of screen 7 or 8 showing the 2 types of error information available to the subject at the end of a reach to a V or P target: “seen error” and “actual error.” Seen error (perceived visually) is the distance from the endpoint visual feedback (blue dot) to the target (white box); the blue dot's location is predetermined (held constant across subjects in a given condition) and so independent of the actual error (distance between reaching finger's final position and the target, estimated nonvisually through proprioception or efference copy).

Each experiment was divided into two blocks of 90 reaches. In each block, the subject reached to 30 V targets (12 × 12 mm white square, Fig. 1B, screen 5), 30 P targets (target hand index fingertip touching a tactile marker beneath the reaching surface, Fig. 1B, screen 4), and 30 VP targets where the V component was always projected at the exact coordinates of the P component (Fig. 1B, screen 3). Targets could appear at either of two locations 4 cm apart and 33–53 cm (depending on arm length; mean: 40 cm) in front of the subject's chest at midline, and reaches could begin in any of five start locations centered 20 cm in front of the subject's chest at midline (details in Fig. 1B), making it difficult for subjects to memorize a specific reach direction or extent. Target modality and position order were randomized but identical for every subject. Subjects were told to indicate where they thought the target was with the fingertip of their dominant hand, and that the V target was directly on top of the P target on VP reaches. They were told not to worry about movement speed but to be as accurate as possible. The task timeline is illustrated in Fig. 1B.
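The block structure described above (30 V, 30 P, and 30 VP reaches in an order that is randomized but identical for every subject) can be illustrated with a seeded shuffle. This is only a sketch: the function name `build_block`, the seed value, and the even split of each modality across the two target positions are assumptions for illustration, not details reported in the text.

```python
import random
from collections import Counter

MODALITIES = ("V", "P", "VP")   # target types named in the text
TARGET_POSITIONS = (1, 2)       # two possible target locations
REACHES_PER_MODALITY = 30       # 30 V, 30 P, and 30 VP reaches per block


def build_block(seed):
    """Return a shuffled list of (modality, target_position) trials.

    Assumption: each modality is split evenly across the two target
    positions; the text does not state how positions were balanced.
    """
    per_position = REACHES_PER_MODALITY // len(TARGET_POSITIONS)
    trials = [(modality, position)
              for modality in MODALITIES
              for position in TARGET_POSITIONS
              for _ in range(per_position)]
    # A fixed seed gives an order that looks random but is reproducible,
    # so the same sequence can be presented to every subject.
    random.Random(seed).shuffle(trials)
    return trials


block = build_block(seed=1)  # hypothetical seed
print(len(block), Counter(modality for modality, _ in block))
```

Calling `build_block` twice with the same seed returns the same sequence, which is the property needed to hold trial order constant across subjects.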

Manipulations

We tested whether subjects could reweight reliance on vision versus proprioception based on: conscious effort, changes in the error history seen by subjects, or changes in target salience (13 experiments summarized in Table 1). In one block, we encouraged subjects to down-weight vision and up-weight proprioception through conscious effort, seen error history, or target salience (BadV block), and in the other we encouraged subjects to down-weight proprioception and up-weight vision (BadP block). The order of the two blocks was counterbalanced across subjects for all experiments.

Table 1.

Summary of manipulations, predictions, and results

| Manipulation | n | Predicted %ΔWv | Actual %ΔWv |
|---|---|---|---|
| Control | 7 | 0 | 0.5 |
| Conscious effort | 9 | − | −17.0 |
| | 5 | + | 51.3* |
| Error history | | | |
| Variance | 10 | − | −9.5 |
| | 6 | + | 11.0 |
| Bias | 6 | − | 3.4 |
| | 6 | + | −4.4 |
| Variance and bias | 10 | − | 9.5 |
| | 8 | + | 8.2 |
| Target salience | | | |
| Target salience | 6 | − | −18.4* |
| | 6 | + | 23.5* |
| Target salience and error history | 5 | − | −24.0* |
| | 5 | + | 30.9* |

For each experiment, some subjects had the blocks ordered such that they were predicted to down-weight vision (−) while others had the blocks reversed and were predicted to up-weight (+). %ΔWv refers to normalized between-block reweighting averaged across the group.

Asterisk (*): significant result in ANOVA.

Coordinates of the endpoint visual feedback were predetermined so that we could control and manipulate the variance and bias of error feedback. Figure 2A shows a distribution of endpoint visual feedback coordinates with zero bias and equal variance in x and y dimensions. For some experiments, this distribution was manipulated to change variance (Fig. 2B), bias (Fig. 2C), or both (Fig. 2D). In 89 testing sessions, no subject became explicitly aware of the manipulation. In fact, the manipulation of endpoint visual feedback made it appear that most subjects hit targets more often than they did in reality (compare the bias of the actual reaching endpoints in Fig. 3 with the endpoint visual feedback distributions in Fig. 2).
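As a rough illustration of how a predetermined feedback pattern could be rescaled and offset, the sketch below spreads a set of control points about their mean and adds a bias vector. The helper names, the four example points, and the scale factor of 2 are hypothetical; only the 15 mm and −10 mm offsets (an 18 mm bias) and the 8 mm hit radius come from the Fig. 2 caption, and treating exactly 8 mm as a hit is an assumption.

```python
import math
import statistics


def transform_feedback(points, sd_scale=1.0, bias=(0.0, 0.0)):
    """Scale the spread of (x, y) points about their mean, then shift by bias.

    Scaling the SD by sd_scale multiplies the variance by sd_scale ** 2.
    """
    cx = statistics.fmean(x for x, _ in points)
    cy = statistics.fmean(y for _, y in points)
    return [((x - cx) * sd_scale + cx + bias[0],
             (y - cy) * sd_scale + cy + bias[1])
            for x, y in points]


def is_hit(point, radius_mm=8.0):
    """True if feedback lies within radius_mm of the target at the origin."""
    return math.hypot(point[0], point[1]) <= radius_mm


# Hypothetical control pattern (mm) with zero bias and equal x/y spread.
control = [(5.0, 0.0), (-5.0, 0.0), (0.0, 5.0), (0.0, -5.0)]

# "Bad" modality: wider spread (hypothetical factor) plus the 15/-10 mm offset.
bad = transform_feedback(control, sd_scale=2.0, bias=(15.0, -10.0))

print(round(math.hypot(15.0, -10.0), 1))  # magnitude of the bias vector in mm
print(sum(is_hit(p) for p in control), sum(is_hit(p) for p in bad))
```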

Fig. 2.

Coordinates of endpoint visual feedback seen by subjects (seen errors). Targets were at the origin. Subjects saw the target explode if their feedback was within 8 mm of the origin. Left: feedback seen after reaches to targets of the “good” modality (e.g., P reaches in a BadV block). Right: feedback seen after reaches to targets of the “bad” modality (e.g., V reaches in a BadV block). A: Control feedback pattern. Every subject in the target salience, salience/error history, conscious effort, and control manipulations saw an identical pattern of endpoint visual feedback for both V and P targets. Mean of the distribution is at the origin and variance is equal in x and y. B: error history variance manipulation. The control feedback pattern was transformed to have low variance for the “good” modality and high variance for the “bad” modality. C: error history bias manipulation. The control feedback pattern was used for the “good” modality, and given a bias of 18 mm for the “bad” modality (mean of the points was offset 15 mm in the x direction and −10 mm in the y direction). D: error history variance/bias manipulation. The control feedback pattern was transformed to have low variance for the “good” modality, and high variance plus an 18 mm bias for the “bad” modality.

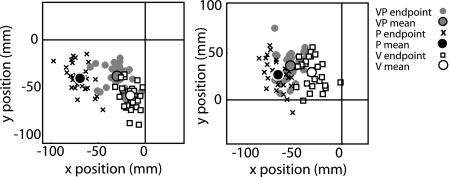

Fig. 3.

Example data from two subjects reaching to V, P, and VP targets (actual errors). Positions of reach endpoints depend on the type of target. Axes represent a bird's eye view of the reaching surface with the subject reaching from the direction of the negative y axis. All targets are at the origin, but reaching endpoints reveal that P targets are systematically estimated to be in a different location than V targets. VP endpoints tend to fall between the distributions of V endpoints and P endpoints rather than within one or the other distribution.

In the conscious effort manipulation, subjects were asked during VP reaches to consciously aim for the V and ignore the P component during one block (BadP) and aim for the P and ignore the V component in the other block (BadV). Subjects saw the same endpoint visual feedback for both P and V reaches in both blocks (Fig. 2A) so that seen error history was held constant across subjects.

Three error history manipulations were done to determine whether an increase in variance, bias, or both (i.e., mean squared error) of the error history seen by subjects could cue reweighting. In one block (BadV block, Fig. 2, B–D), endpoint visual feedback about reaches to V targets indicated worse performance than on P targets in terms of bias, variance, or both, and in the other block (BadP block) this was reversed.

In the target salience manipulation, we tested the effect of altering the salience of V or P targets. In a separate preliminary experiment, we examined a number of potential manipulations of V and P target salience (see appendix), presuming that a change in salience would be reflected by a change in the variance of subjects' actual reaching endpoints. We chose manipulations that were not identical in nature but produced changes in variance of reaching endpoint position on the same order of magnitude. For high salience P targets, subjects positioned their target finger on the tactile marker as usual; for low salience P targets, subjects placed their target finger on the bottom of a soft 10 cm-diameter plush ball. The high salience V targets were the white boxes used in the other experiments. For low salience V targets, the white box disappeared after the subject had viewed it for one second, and a random visual noise mask covered the entire display for six seconds before the subject reached to the remembered location of the white target box. Subjects saw the same distribution of endpoint visual feedback for both P and V reaches in both blocks (Fig. 2A) so that seen error history was held constant.

The last manipulation was to combine target salience and error history stimuli in an attempt to enhance reweighting: targets that were made less salient were paired with endpoint visual feedback that was more variable and more biased (high mean squared error; Fig. 2D). Finally, a control experiment was done where endpoint visual feedback was constant (Fig. 2A), and there was no manipulation of target salience or conscious effort.

Calculation of experimental weights

Our weighting calculation took advantage of the fact that reaches to visual versus proprioceptive targets are biased in different directions (e.g., Crowe et al. 1987; Foley and Held 1972; Haggard et al. 2000; Smeets et al. 2006). In our data (e.g., Fig. 3), the average two-dimensional distance between mean V endpoint position and mean P endpoint position was significant (33.1 mm). We reasoned that on VP reaches, subjects would point closer to their mean V endpoint position if they were assigning more weight to vision and closer to their mean P endpoint position if they were assigning more weight to proprioception. If Wv is experimental weight of vision and Wp is experimental weight of proprioception

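$$W_v = \frac{\text{P-to-VP distance}}{\text{P-to-VP distance} + \text{V-to-VP distance}} \tag{1}$$

$$W_p = 1 - W_v \tag{2}$$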

For simplicity, we will refer to weightings only in terms of vision (Wv). To compensate for any adaptation or drift in the position of V and P endpoints throughout the experiment, we computed a Wv for every VP reach. For the Wv associated with the ith VP reach (VPi), we used the mean position of the four V and four P endpoints occurring closest in time and compared these two positions to the mean position of VPi, VPi-1 and VPi+1. Thus, we could observe the evolution of reweighting on a trial-by-trial basis. We used bootstrapping to estimate the SD associated with each Wv.
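The weight calculation can be sketched as follows, assuming that Wv is the distance from the mean P endpoint to the mean VP endpoint divided by the sum of the P-to-VP and V-to-VP distances, so that Wv approaches 1 as VP endpoints approach the V endpoints. The function names and example coordinates are hypothetical, the selection of the four V and four P endpoints closest in time is left to the caller, and the resampling scheme in `bootstrap_sd` is one plausible choice rather than the procedure actually used.

```python
import math
import random
import statistics


def mean_point(points):
    """Return the centroid of a list of (x, y) endpoints."""
    xs, ys = zip(*points)
    return (statistics.fmean(xs), statistics.fmean(ys))


def weight_of_vision(v_points, p_points, vp_points):
    """Wv = d(P, VP) / (d(P, VP) + d(V, VP)), computed on mean positions."""
    v = mean_point(v_points)
    p = mean_point(p_points)
    vp = mean_point(vp_points)
    d_p = math.dist(p, vp)  # large when VP endpoints lie far from P
    d_v = math.dist(v, vp)  # small when VP endpoints lie close to V
    return d_p / (d_p + d_v)


def bootstrap_sd(v_points, p_points, vp_points, n_boot=1000, seed=0):
    """Assumed scheme: resample each endpoint set with replacement."""
    rng = random.Random(seed)

    def resample(points):
        return [rng.choice(points) for _ in points]

    samples = [weight_of_vision(resample(v_points),
                                resample(p_points),
                                resample(vp_points))
               for _ in range(n_boot)]
    return statistics.stdev(samples)


# Hypothetical endpoints (mm): four V, four P, and three VP reaches.
v = [(19.0, 1.0), (21.0, -1.0), (20.0, 2.0), (20.0, -2.0)]
p = [(-21.0, 0.0), (-19.0, 0.0), (-20.0, 1.0), (-20.0, -1.0)]
vp = [(9.0, 0.0), (10.0, 0.0), (11.0, 0.0)]

print(weight_of_vision(v, p, vp), bootstrap_sd(v, p, vp, n_boot=200))
```

In this example the mean VP endpoint lies 30 mm from the mean P endpoint and 10 mm from the mean V endpoint, so vision receives three quarters of the weight.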

Inherent in our method of weighting calculation is the assumption that vision and proprioception are integrated. In other words, we assume the brain does not ever rely totally on vision or proprioception in a winner-take-all manner, and Wv can only fall between 0 and 1; to detect if a winner-take-all strategy is used, it would have to be possible for Wv to be calculated as exactly 0 or 1. We checked to see if a more continuous vectorial method of calculating weights changed the results (i.e., using the position of the VP estimate in the dimension that connects the P and V estimates to calculate Wv; this makes it possible for Wv to be ≤0 or ≥1) and found it did not, although it increased intra- and intersubject variability. The integration assumption is also important in our method because we average three VP endpoints for each Wv. If a subject weights vision 100% on one VP reach and 0% on the other two, Wv would be 0.33, a misleading result in this case. We checked whether this affected our results in two ways. First, we tried the weighting calculation with one VP endpoint rather than averaging three; individual data were more variable, but group results were unchanged. Second, we checked how many individual VP reaches could be considered winner-take-all by calculating the percentage that fell within one SD of either the V or the P distribution. Across all subjects (n = 89), this occurred for only 17 ± 8.8% (mean ± SD) of VP reaches, suggesting vision and proprioception were integrated the vast majority of the time.
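One plausible reading of the vectorial alternative mentioned above is a scalar projection of the VP estimate onto the axis running from the P estimate to the V estimate. The sketch below shows that reading; the function name and coordinates are hypothetical, and the exact computation used for the check is not specified in the text.

```python
def projected_weight_of_vision(v, p, vp):
    """Project VP onto the P-to-V axis; 0 at P, 1 at V, unbounded outside."""
    axis = (v[0] - p[0], v[1] - p[1])
    offset = (vp[0] - p[0], vp[1] - p[1])
    axis_length_sq = axis[0] ** 2 + axis[1] ** 2
    if axis_length_sq == 0:
        raise ValueError("V and P estimates coincide; weight is undefined")
    return (offset[0] * axis[0] + offset[1] * axis[1]) / axis_length_sq


# Hypothetical mean positions (mm).
p_mean, v_mean = (0.0, 0.0), (10.0, 0.0)

print(projected_weight_of_vision(v_mean, p_mean, (5.0, 7.0)))   # midway along the axis
print(projected_weight_of_vision(v_mean, p_mean, (12.0, 3.0)))  # beyond V, so above 1
```

Unlike the distance-ratio form, this version ignores displacement perpendicular to the P-to-V axis and can return values at or beyond 0 and 1, which is what allows a winner-take-all strategy to be detected.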

Because our method of weighting calculation is meaningless if V and P endpoints are too close to each other, we discarded any Wv that resulted from a calculation where the V-to-P separation was smaller than half a SD of either the V or P endpoint distributions. This occurred for ∼5% of the 5134 VP reaches analyzed. Van Beers et al. (1999, 2002) have shown that weights are matrices rather than scalars; i.e., the brain uses one Wv to estimate hand position in azimuth and a different Wv for depth. Our calculation of a single Wv using two-dimensional distances is therefore a simplification, but this was necessary here because the visual and proprioceptive covariance matrices were not known.

Sensory weightings have generally been treated as though they are static in time throughout a given experimental condition, with data from the entire period used to calculate a single sensory weight (e.g., Ernst and Banks 2002; Ernst et al. 2000; van Beers et al. 2002). Here we use a method of calculating trial-by-trial experimental weightings that permits us to examine reweighting within a given condition as well as between conditions. We thus made two different comparisons to ascertain whether reweighting had taken place in each experiment: within-block and between-block. To determine the difference in weightings between Blocks 1 and 2, we subtracted the mean of all Wv in Block 1 from the mean of all Wv in Block 2. To quantify the change in Wv over the course of a single block, we subtracted the mean of the first four Wv of that block from the mean of the last four Wv of that block. In cases where V-to-P separation was not large enough to calculate Wv for all of the first or last four VP reaches in a block, we included Wv from the next set of four or left that subject out of the group data. Every group data calculation used 4–10 subjects per group, with most calculations using 6–9 subjects.
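The two comparisons can be written compactly. This is a minimal sketch with hypothetical function names and made-up Wv sequences; it does not implement the fallback of using the next set of four reaches when a Wv is missing.

```python
import statistics


def between_block_change(wv_block1, wv_block2):
    """Mean Wv in Block 2 minus mean Wv in Block 1."""
    return statistics.fmean(wv_block2) - statistics.fmean(wv_block1)


def within_block_change(wv_block, n_edge=4):
    """Mean of the last n_edge Wv minus mean of the first n_edge Wv."""
    if len(wv_block) < 2 * n_edge:
        raise ValueError("block too short for non-overlapping first/last sets")
    return statistics.fmean(wv_block[-n_edge:]) - statistics.fmean(wv_block[:n_edge])


# Hypothetical trial-by-trial Wv values for one subject.
block1 = [0.6, 0.6, 0.6, 0.6, 0.5, 0.5, 0.4, 0.4, 0.4, 0.4]
block2 = [0.5, 0.5, 0.6, 0.6, 0.6, 0.7, 0.7, 0.7, 0.7, 0.7]

print(within_block_change(block1), between_block_change(block1, block2))
```

A negative value indicates down-weighting of vision and a positive value indicates up-weighting.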

Normalization of reweighting

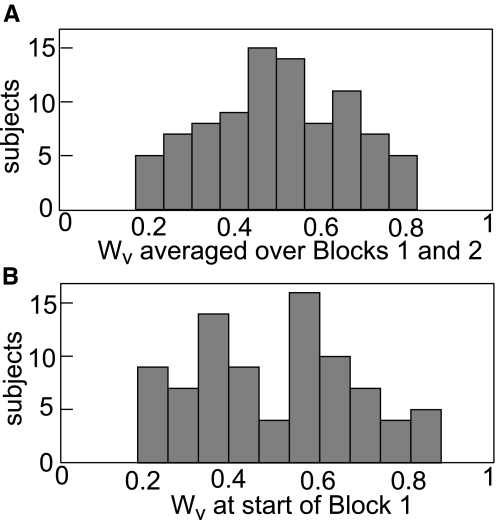

We first calculated the mean of all Wv in Blocks 1 and 2 and examined its distribution across subjects and experiments (Fig. 4A). This would reveal any systematic preference for vision or proprioception over the course of the entire experiment. Many subjects gave vision and proprioception approximately equal weight (distribution of mean Wv centered at 0.49 ± 0.16, Fig. 4A). The distribution of Wv at the very beginning of the experiment was similar in range and mean but bimodal, with individuals tending to have a slight bias toward favoring either vision or proprioception (Fig. 4B).

Fig. 4.

Distribution of Wv for subjects across all manipulations. A: histogram of subjects' weightings for the whole experiment (mean of all the Wv in Blocks 1 and 2). n = 89, mean = 0.49. Because every experiment consists of both a BadP block and a BadV block, the average of all Wv in both blocks should reveal any systematic preference for vision or proprioception. B: histogram of subjects' starting weights (mean of first 4 Wv in Block 1). n = 85, mean = 0.5. The bimodal distribution indicates that subjects began the experiment favoring either vision or proprioception.

In addition to a wide range of starting weights, we found that weight of vision tends to regress toward the mean. Results showed that a subject's weight at the start of a block predicts the direction and extent of reweighting over the course of that block (correlation between starting weight and change in weight: r = −0.41, P < 0.001 for Block 1; r = −0.35, P < 0.001 for Block 2; Fig. 5), and mean weight in Block 1 predicts between-block reweighting (r = −0.23, P < 0.03). In other words, a subject who starts with a high weight of vision is likely to down-weight vision. To isolate any effect of our manipulations, we normalized all changes in weighting (both within-block and between-block) to the subject's starting weight. This enabled us to compare, for example, a subject who started with a Wv of 0.8 and up-weighted vision by 0.1 with a subject who started at 0.6 and up-weighted by 0.1. The two reweightings are equal in absolute terms, but 0.1 is half the maximum amount the first subject could have up-weighted (0.2) and only a quarter of the maximum amount the second subject could have up-weighted (0.4). The normalized change in weight for these two subjects would thus be 50% and 25%, respectively. There was no significant correlation between starting weight and normalized within- or between-block reweighting (r = −0.15, P = 0.2 within Block 1; r = −0.08, P = 0.4 within Block 2; r = −0.01, P = 0.9 for between-block reweighting), suggesting that our normalization method is an effective way of isolating any effect of our manipulations from the effect of starting weight and regression toward the mean. All changes in weighting are normalized in this way (%ΔWv) unless otherwise specified (ΔWv). If vision is down-weighted, and α is the mean of the first four Wv in a block, while γ is the mean of the last four Wv in a block

$$\%\Delta W_v = \frac{\gamma - \alpha}{\alpha} \times 100 \tag{3}$$

Fig. 5.

Effect of starting weight on reweighting. Each point represents a single subject. Filled symbols = BadP in Block 1. Open symbols = BadV in Block 1. A: in Block 1, the first 4 weights predict absolute reweighting within Block 1, e.g., subjects who began with a high weight of vision were likely to down-weight vision (n = 80, r = −0.40, P = 0.00013). Here subjects indicated by filled symbols should have increased the weight of vision (i.e., filled symbols above the 0 line) and vice versa for subjects with open symbols. This did not occur. The fact that the center of the distribution is below 0 on the y axis reflects that many subjects down-weighted vision in Block 1, regardless of manipulation. B: in Block 2, the first 4 weights predict absolute reweighting within Block 2, e.g., subjects who began with a high weight of vision were likely to down-weight vision (n = 83, r = −0.36, P = 0.00076). Here subjects indicated by filled symbols should have decreased the weight of vision (i.e., filled symbols below the 0 line) and vice versa for subjects with open symbols. There was a tendency for this to occur.

To normalize between-block reweighting, α is the mean of all Wv in Block 1 and γ is the mean of all Wv in Block 2. If vision was up-weighted, we subtracted the denominator from 1

$$\%\Delta W_v = \frac{\gamma - \alpha}{1 - \alpha} \times 100 \tag{4}$$
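The normalization can be sketched as a single function, assuming that a decrease in Wv is divided by the starting weight (the largest possible decrease) and an increase is divided by one minus the starting weight (the largest possible increase). The function name is hypothetical; the first two calls reproduce the worked example from the text.

```python
def normalized_reweighting(alpha, gamma):
    """Percent change in Wv relative to the maximum possible change.

    alpha: starting weight (mean of first four Wv, or mean Wv in Block 1)
    gamma: ending weight (mean of last four Wv, or mean Wv in Block 2)
    """
    delta = gamma - alpha
    if delta == 0:
        return 0.0
    if delta < 0:
        # Down-weighting: vision can fall at most from alpha to 0.
        return 100.0 * delta / alpha
    # Up-weighting: vision can rise at most from alpha to 1.
    return 100.0 * delta / (1.0 - alpha)


print(normalized_reweighting(0.8, 0.9))  # about 50: half of the available 0.2
print(normalized_reweighting(0.6, 0.7))  # about 25: a quarter of the available 0.4
print(normalized_reweighting(0.5, 0.4))  # about -20: down-weighting
```

Both of the first two subjects changed Wv by 0.1 in absolute terms, yet the normalized values differ because the first subject had less room to up-weight vision.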

Minimum variance predictions

We wanted to know if subjects' weightings for VP target position estimation followed the minimum variance model (e.g., Ghahramani et al. 1997). In other words, if we apply the model to the variances of subjects' estimates of V target and P target position ($\sigma^2_{\text{V target}}$ and $\sigma^2_{\text{P target}}$), does the predicted weight of vision (Wv minvar) match our experimentally measured Wv?

$$W_{v\,\text{minvar}} = \frac{\sigma^2_{\text{P target}}}{\sigma^2_{\text{V target}} + \sigma^2_{\text{P target}}} \tag{5}$$

To answer this question, we use Eq. 6, where $\sigma^2_{\text{V endpoints}}$ and $\sigma^2_{\text{P endpoints}}$ are the variances of subjects' reaching endpoints for V and P targets, respectively. See appendix for derivation

| (6) |

To determine if the minimum variance model could explain subjects' experimental weightings, we evaluated Eq. 6 using the variance of the last 15 reach endpoints in Block 2 to P targets ($\sigma^2_{\text{P endpoints}}$) and V targets ($\sigma^2_{\text{V endpoints}}$). We then compared Wv minvar to the experimental Wv observed at the end of Block 2 and determined the correlation between the two. We also evaluated the relationship of Wv minvar and Wv for individual subjects but found the same pattern of results.
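For orientation, the standard minimum variance rule assigns vision a weight equal to the proprioceptive variance divided by the sum of the two variances, so the less variable modality receives the larger weight. The sketch below applies that rule to hypothetical variance values and correlates the predictions with hypothetical measured weights. It does not reproduce the endpoint-based form of Eq. 6 or its appendix derivation, and all names and numbers are illustrative assumptions.

```python
import math
import statistics


def wv_minvar(var_v, var_p):
    """Standard minimum variance weight of vision: var_p / (var_v + var_p)."""
    return var_p / (var_v + var_p)


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical per-subject variances (mm^2) and measured weights.
var_v = [40.0, 90.0, 60.0, 120.0]
var_p = [80.0, 60.0, 60.0, 40.0]
measured_wv = [0.70, 0.45, 0.50, 0.30]

predicted_wv = [wv_minvar(v, p) for v, p in zip(var_v, var_p)]
print(predicted_wv, pearson_r(predicted_wv, measured_wv))
```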

Statistical analysis

One-way ANOVAs were used to compare within-block and between-block reweighting in the control group and the two conscious effort groups. Where the effect was significant, post hoc comparisons (Scheffe's test) were used to determine which groups contributed. Factorial ANOVAs (manipulation × block order) were used to examine within- and between-block reweighting in the six error history groups and in the four target salience groups. Two-sample t-tests were used to ascertain whether the two error history variance groups were different from each other or from the control group. Two-sided values are reported for all hypothesis tests.
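As a reminder of what a one-way ANOVA computes, the sketch below derives the F statistic and its degrees of freedom from between-group and within-group sums of squares. The function name and data are hypothetical, and P values are omitted because they require the F distribution, which a statistics package would normally supply.

```python
import statistics


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of values."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = statistics.fmean(x for g in groups for x in g)
    # Variability of the group means around the grand mean.
    ss_between = sum(len(g) * (statistics.fmean(g) - grand_mean) ** 2
                     for g in groups)
    # Variability of observations around their own group mean.
    ss_within = sum((x - statistics.fmean(g)) ** 2
                    for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical values for three small groups.
example = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
print(one_way_anova_f(example))
```

With three groups of 7, 9, and 5 subjects, as in the control and conscious effort comparison, the same function yields 2 and 18 degrees of freedom.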

RESULTS

Conscious effort

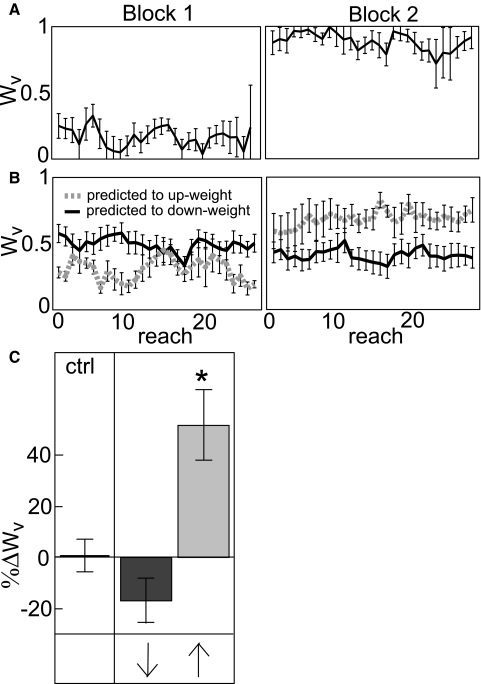

In this task, when reaching to VP targets, subjects were told to consciously aim for the V and ignore the P component (BadP block) or aim for the P and ignore the V component (BadV block) despite receiving endpoint visual feedback of equal variance and bias on V trials and P trials throughout both blocks (Fig. 2A). We only used experienced subjects in this experiment, i.e., subjects who had already done at least one of the other experiments and could be expected to understand what we wanted them to do. Most subjects were able to immediately shift their weighting toward vision or proprioception (Fig. 6, A and B).

Fig. 6.

Conscious effort manipulation. A: Wv of a subject who was told explicitly to aim for the P component of VP targets in Block 1 and the V component in Block 2 and was therefore predicted to up-weight vision between blocks. Error bars represent SD. B: trial-by-trial Wv averaged across subjects who were told to aim for the P component in Block 1 and the V component in Block 2 and thus predicted to up-weight between blocks (gray dashed line) or who performed the blocks in the reverse order and were predicted to down-weight between blocks (black line). Error bars represent standard error. C: group difference in Wv between Blocks 1 and 2, normalized to Block 1. Subjects were able to change weightings consciously, especially when trying to up-weight vision. Ctrl = control group. Dark gray bar: aiming for V in Block 1. Light gray bar: aiming for P in Block 1. Arrows: predicted direction of reweighting. Asterisk: significantly different from both other groups.

ANOVAs of within- and between-block reweighting in the two conscious effort groups and the control group revealed a strong effect of manipulation on between-block reweighting (F2 = 12.49, P < 0.0005). Post hoc analysis showed that the conscious effort group we expected to up-weight vision (%ΔWv of 51%, Fig. 6C, light gray bar) was significantly different from both the control group and the group we expected to down-weight vision (Scheffe's test MS = 0.061, dof = 18, P < 0.01 and P < 0.0005, respectively). The down-weighting group (%ΔWv of −17%, Fig. 6C, dark gray bar) was not significantly different from the control group (P > 0.3). In other words, it was easier to consciously up-weight vision than to down-weight. We also observed nonsignificant reweighting in the expected directions within Block 2, but within Block 1 there was a tendency for subjects to down-weight vision regardless of order, a phenomenon we observed in most experiments (Fig. 5A).

Error history

When we manipulated the variance of the endpoint visual feedback seen by subjects (Fig. 2B) while target salience was held constant, subjects reweighted a small but significant amount in the predicted directions between blocks (Fig. 7, A and B and 2nd panel of C; %ΔWv = −9.5 and 11.0%). In two-sample t-tests, the two error history variance groups were different from each other (t = 3.01, P = 0.009, significant at α = 0.025), but neither was different from the control group (both P values > 0.2). Manipulating the bias or bias and variance (i.e., mean squared error) of the endpoint visual feedback did not cause a predictable pattern of between-block reweighting (3rd and 4th panels of Fig. 7C). A factorial ANOVA of all six error history groups (3 manipulations × 2 experimental orders) revealed no effect of manipulation or experimental order on between-block reweighting (Fig. 7C) or within-block reweighting (all P values > 0.25). Overall, visual error history does not appear to be a robust reweighting cue.

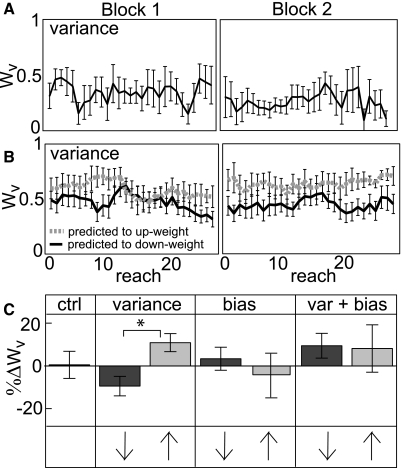

Fig. 7.

Error history manipulation. A: Wv of one subject in the error history variance condition (BadP in Block 1, BadV in Block 2; down-weighting of vision predicted between blocks). Error bars represent SD. B: trial-by-trial Wv averaged across subjects in the error history variance condition who were predicted to up-weight (gray dashed line) or down-weight (black line) vision between blocks. Error bars represent SE. C: group difference in Wv between Blocks 1 and 2, normalized to Block 1. Ctrl = control group. Dark gray bars: BadP in Block 1, BadV in Block 2. Light gray bars: BadV in Block 1, BadP in Block 2. Arrows indicate predicted direction of reweighting. Factorial ANOVA revealed no effect of manipulation or experimental order on reweighting, reflecting that the error history manipulations overall did not cause robust reweighting. The two error history variance manipulation groups were significantly different from each other but not from the control group.

Target salience

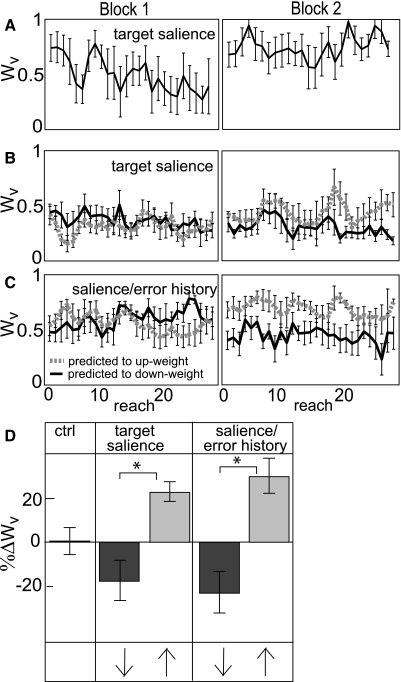

Figure 8A shows that target salience was an adequate cue to cause reweighting. This experiment was identical to the control experiment except for the use of high versus low salience targets for V and P conditions (see methods and appendix). Subjects reweighted in the expected directions both within each block and between Blocks 1 and 2 (Fig. 8, B and D, 2nd panel).

Fig. 8.

Target salience manipulations. A: Wv of one subject in the target salience experiment (BadV in Block 1, BadP in Block 2; predicted to up-weight vision). Error bars represent SD. B and C: trial-by-trial Wv averaged across subjects who were predicted to up-weight (gray dashed line) or down-weight (black line) vision between blocks. Target salience manipulation (B) and target salience/error history manipulation (C). Error bars represent standard error. D: group difference in Wv between Blocks 1 and 2, normalized to Block 1. Ctrl = control group. Dark gray bars: BadP in Block 1, BadV in Block 2. Light gray bars: BadV in Block 1, BadP in Block 2. Arrows indicate predicted direction of reweighting. Subjects reweighted significantly in the predicted directions when target salience was manipulated, whether or not error history was held constant (target salience panel) or manipulated in variance and bias so that less salient targets yielded larger seen errors (salience/error history panel). Asterisk: factorial ANOVA revealed a significant effect of experimental order, suggesting that target salience is a robust reweighting cue, independent of seen error history.

Recall that our manipulations of endpoint visual feedback alone (error history experiments) were insufficient to cue robust reweighting. However, in those experiments, there was no change in the environment or the subject to which the brain could attribute the change in seen error history. We wondered whether combining the error history and target salience manipulations (e.g., giving subjects more variable/biased endpoint visual feedback after reaches to targets of lower salience) would result in greater reweighting than either manipulation alone. Here the experiment was identical to the target salience manipulation except that subjects saw the endpoint visual feedback associated with the error history variance/bias manipulation (Fig. 2D) instead of the control feedback (Fig. 2A).

When target salience was altered and the statistics of endpoint visual feedback (seen error history) were manipulated as well, subjects reweighted in the expected directions both within blocks and between blocks (Fig. 8, C and D, 3rd panel) but not to a greater extent than in the target salience manipulation; a factorial ANOVA of the four groups (2 manipulations × 2 experimental orders) revealed no effect of manipulation on between-block reweighting (Fig. 8D) or within-block reweighting. There was, however, an effect of experimental order on between-block reweighting (F1,18 = 34.748, P = 0.00001, Fig. 8D), reflecting that our manipulation of target salience was sufficient to cue between-block reweighting, regardless of endpoint visual feedback manipulation.

Minimum variance predictions

Finally, we found that Wv minvar, predicted by minimizing the variance of subjects' estimates of V target and P target positions, was positively correlated with experimentally measured Wv (r = 0.68, P = 0.01). This significant correlation suggests that minimum variance integration is a good model for how subjects combine visual and proprioceptive information about the position of a VP target.
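For reference, the standard minimum variance weighting of two independent, unbiased estimates can be written out as follows. This is a generic sketch: the symbols $\hat{x}_V$, $\hat{x}_P$, and $\hat{x}_{VP}$ are notation assumed here for illustration, and the exact form of Eq. 5 in methods is not reproduced in this section.

$$\hat{x}_{VP} = W_V\,\hat{x}_V + (1 - W_V)\,\hat{x}_P, \qquad \sigma^2_{VP} = W_V^2\,\sigma^2_V + (1 - W_V)^2\,\sigma^2_P$$

Setting $d\sigma^2_{VP}/dW_V = 0$ gives the weight that minimizes the variance of the combined estimate:

$$W_V = \frac{\sigma^2_P}{\sigma^2_V + \sigma^2_P}, \qquad \sigma^2_{VP} = \frac{\sigma^2_V\,\sigma^2_P}{\sigma^2_V + \sigma^2_P}$$

The more variable modality therefore receives the smaller weight, and the combined variance is never larger than that of either estimate alone.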

DISCUSSION

We have shown that subjects can consciously choose to assign a higher weight to visual or proprioceptive estimates of a target hand's position when both modalities are available. Manipulations of visual or proprioceptive target salience can also drive reweighting in favor of the more discernable target. Our manipulations of endpoint visual feedback did not cause strong reweighting, however. Finally, we found that minimizing the variance of visual and proprioceptive target estimates predicts experimentally-measured sensory weights.

General observations

Vision is the dominant modality in many circumstances (e.g., Botvinick and Cohen 1998; Hagura et al. 2007; Smeets et al. 2006; van Beers et al. 1996, 1998). In the present study, however, this was not the case, likely because visual information about the hand was reduced to a 12 × 12-mm white square on the fingertip in a visually sparse environment. These conditions have been shown to increase reliance on proprioception (Mon-Williams et al. 1997). In addition, actively positioning the target finger likely caused subjects to rely more heavily on proprioception than if the target finger had been passively placed (Welch et al. 1979). Indeed, the majority of subjects weighted vision and proprioception approximately equally, with some strongly favoring vision throughout testing and a similar number strongly favoring proprioception.

Subjects typically down-weighted vision over the course of Block 1, independent of manipulation and starting weight. This is reflected in Fig. 5A where the entire distribution of changes in weight is shifted down relative to the origin. We suspect that this is a consequence of subjects transitioning from the brightly lit, visually rich environment of the lab to the dim, visually sparse experimental apparatus (Mon-Williams et al. 1997). It may therefore be important to acclimate subjects to the new visual environment for a fairly extended period of time before beginning an experiment involving visual weightings (a purpose served by Block 1 in the present study).

Sensory integration

Sensory integration research has yielded a great deal of information about how the nervous system makes use of the multiple sensory modalities available to it. In addition to environmental conditions (Mon-Williams et al. 1997), sensory integration can depend on properties of the sensory organs themselves. For example, vision is more precise in azimuth than in depth because the former estimate relies on an image's position on the retina, while the latter relies on less-precise binocular cues (van Beers et al. 1999). Thus, vision is weighted more heavily when localizing the hand in azimuth than in depth (van Beers et al. 2002).

The specific computation being performed also affects sensory integration (Sober and Sabes 2005). Subjects use a higher visual weight for planning movement vectors and a higher proprioceptive weight for computing the joint-based motor command when visual feedback is limited to fingertip position (Sober and Sabes 2003). Further, subjects weight vision more heavily when reaching to visual targets than to proprioceptive targets (Sober and Sabes 2005), which suggests that sensory integration minimizes coordinate transformations (Sober and Sabes 2005).

With so many variables affecting sensory integration, all of which are subject to change, the nervous system must be able to dynamically reweight the available sensory inputs. To determine what types of information might be involved in the reweighting process, we investigated the ability of conscious effort, seen error history, and target salience to cause reweighting in the context of estimating a target hand's location.

Conscious effort

Of the three cues studied here, conscious effort yielded the most robust reweighting. Previous studies of the role of attention in sensory integration have found that simply instructing subjects to attend to one modality or the other has an effect on prism adaptation (the nonattended modality adapts the most, Kelso et al. 1975) but not on intersensory bias, a measure of weightings obtained using prisms without adaptation (Welch and Warren 1980). Warren and Schmitt (1978) found that subjects could only direct their attention in a way that affected intersensory bias if, in addition to instructions, the nature of the task changed the focus of their attention to the desired modality. In the present study, conversely, subjects reweighted strongly on the basis of instructions alone; the task, environment, and even endpoint visual feedback remained constant. This suggests that humans can indeed exert conscious control over sensory integration. An important difference between the present study and that of Warren and Schmitt (1978) is that in our experiments, the target finger was never visible to the subject, and the fingertip's position was indicated visually by a small white square. Being able to see the target finger itself may have increased the salience of vision such that subjects could not ignore vision on the basis of instructions alone (Welch and Warren 1980).

In addition, we found that subjects were better able to up-weight vision than proprioception by conscious effort. Perhaps this is because humans have more practice at consciously directing their vision and tend to rely on proprioception in a less conscious manner. The difficulty of decreasing visual target salience enough to affect behavior (see appendix) supports this explanation.

Target salience

Target salience also appears to be a strong cue for reweighting. Decreasing the salience of a target of a given modality led to down-weighting of that modality and increased reliance on the other when estimating the target's position. This is clearly an adaptive strategy for the brain given that target salience can vary widely and change rapidly (e.g., visual salience decreases markedly when light is very low). Target salience is directly related to variance, so this result supports the minimum variance theory of sensory integration. Because subjects produced more variable reaching endpoints for the low salience target, it is possible that both the target salience and the resulting variable motor behavior (actual errors) were important cues for reweighting.

Error history

In the error history manipulation, we had expected subjects to down-weight the modality associated with more variability or bias in endpoint visual feedback. This prediction falls in line with evidence that visual noise affects the weighting of vision in a height discrimination task (Ernst and Banks 2002) and that when a random offset is added to continuous visual feedback in a fast reaching task, subjects are able to learn the new variability associated with their movements (Trommershauser et al. 2005). However, our manipulations of visual error history did not cause robust reweighting in the present study. Manipulating the bias or mean squared error of endpoint visual feedback did not cause reweighting in the predicted directions; manipulating variance of the endpoint visual feedback did cause reweighting in the predicted directions but of smaller magnitude than the conscious effort or target salience manipulations and not significantly different from controls.

There are a number of possible reasons why the error history manipulation was less effective than conscious effort or target salience. First, unlike the study by Trommershauser and colleagues (2005), we used a predetermined distribution of endpoint visual feedback coordinates. Even though subjects were unaware of the manipulation, seen errors (endpoint visual feedback) were unaffected by actual errors (estimated by the brain based on proprioception or efference copy), potentially diminishing the role of the endpoint visual feedback in sensory integration.

A second and perhaps more likely reason for the limited success of the error history manipulation is that integrating error history over time may be a slow and difficult process (Burge et al. 2008; Ernst and Bulthoff 2004), particularly when different modality targets are interleaved, requiring the brain to keep track of which errors went with which target type. Trommershauser et al. (2005) found that <120 trials were needed for subjects to learn a new variability associated with their movements, but learning to simultaneously associate two variabilities with two sensory modalities, as required in the present study, may be a more complex undertaking, especially because visual feedback was given only at movement endpoints rather than continuously. It is possible that seen error history, a weak reweighting cue in the ∼30-min periods studied here, may play a more important role on a longer time scale.

Minimum variance model

Minimum variance integration, in which the least variable sensory modality is weighted highest, has a well-demonstrated role in accounting for human behavior (e.g., Ernst and Banks 2002; Ghahramani et al. 1997; Jacobs 1999; van Beers et al. 1999). We might thus predict that sensory weightings would minimize the variance of seen reaching errors, i.e., if a person observes highly variable reaching endpoints when reaching to visual targets and very precise reaching endpoints when reaching to proprioceptive targets, he may down-weight vision when reaching to a visuoproprioceptive target. However, our manipulation of the variance of seen error history did not result in robust reweighting, supporting the conclusion that seen error history does not play a major role in sensory reweighting. Note that the weakness of the variance manipulation of seen error history does not indicate that variance is unimportant in this task; on the contrary, we were able to predict experimental weightings by applying the minimum variance model to sensory estimates of target position, supporting the idea that minimizing variance is an important principle of sensorimotor processing.

In sum, reliance on vision versus proprioception varies widely across individuals even in consistent experimental conditions. On the time scale we studied here (∼30 min), the brain can reweight sensory inputs appropriately with conscious effort or when target salience changes, but weights are not strongly affected by changing patterns of visual error history. Sensory weights appear to minimize the variance of visual and proprioceptive estimates of target position in accordance with the minimum variance model. The ability to reweight sensory modalities is one source of behavioral flexibility in humans, and it merits further study, as it could help us understand the brain's capacity to adjust behavior in the face of changing sensory information.

GRANTS

This work was supported by National Institutes of Health Grants R01HD-040289 and 1F31NS-061547-01.

ACKNOWLEDGMENTS

The authors thank C. Fuentes, N. Bhanpuri, and K. Bowman for helpful comments on the manuscript and Dr. Richard E. Thompson for advice on statistical analysis.

APPENDIX

Determination of target salience manipulations

To measure the ability of target salience to cue sensory reweighting, we first had to determine which target manipulations would equivalently affect visual and proprioceptive salience compared with their control conditions. A change in salience would be reflected by the variance of subjects' actual reaching endpoint errors. We tested four manipulations of V target salience and three manipulations of P target salience, in each case dividing endpoint variance on reaches to the manipulated target by endpoint variance on reaches to the “standard” target (the target finger on a tactile marker for P reaches, and the 12 × 12-mm white box for V reaches). Subjects made 30 reaches to a manipulated target followed by 30 reaches to the standard target. Individual reaches followed the same timeline as the main experiment (Fig. 1B), but no endpoint feedback was given. Two to four subjects were tested for each manipulation.
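The variance ratio described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' analysis code: endpoints are taken to be 2-D coordinates, and "endpoint variance" is assumed to be the sum of the sample variances along the two axes. The function names and the example coordinates are hypothetical.

```python
from statistics import variance


def endpoint_variance(endpoints):
    """Total variance of 2-D reach endpoints.

    Assumption: total variance is the sum of the x and y sample variances.
    """
    if len(endpoints) < 2:
        raise ValueError("need at least two reaches to estimate variance")
    xs = [float(x) for x, _ in endpoints]
    ys = [float(y) for _, y in endpoints]
    return variance(xs) + variance(ys)


def salience_variance_ratio(manipulated, standard):
    """Endpoint variance for the manipulated target divided by that for the standard target."""
    return endpoint_variance(manipulated) / endpoint_variance(standard)


# Hypothetical endpoint coordinates (mm), for illustration only.
standard_reaches = [(0, 0), (1, 0), (0, 1), (1, 1)]
manipulated_reaches = [(0, 0), (2, 0), (0, 2), (2, 2)]
ratio = salience_variance_ratio(manipulated_reaches, standard_reaches)
print(ratio)
```

A ratio above 1 indicates that the manipulation made reaching endpoints more variable than the standard target, and a ratio below 1 indicates that it made them less variable.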

Three P target salience manipulations changed the variance of subjects' behavior: using a 10-cm-diam soft plush ball as the tactile marker, having subjects drop their target hand 6 s before reaching to the remembered location of the target finger, and placing the target finger on a small vibrator attached to the tactile marker. The plush ball created both an unstable surface for the target finger and a 10-cm separation between the target finger and the reaching surface. Subjects reported that this manipulation made them less certain of the position of their target finger, and mean endpoint variance was two times as much as with the standard P target. Subjects attempting to reach to the remembered location of their target finger after a 6-s delay reported that they did not remember where their target finger had been and were forced to guess. Mean endpoint variance with this manipulation was 18 times as much as with the standard target. Vibration may have slightly improved subjects' ability to locate their target finger; mean endpoint variance was 0.8 times as much as with the standard target, but not all individual subjects decreased their pointing variance.

Four V target salience manipulations changed the variance of subjects' behavior: using a 20-cm gray circle in place of the 12 × 12-mm white box, having subjects wear blurred goggles with one eye covered, having the white box appear to vibrate, and making the white box disappear 6 s before the reach. However, only the final manipulation affected subjects' behavior in a robust and consistent manner. When subjects reached for the center of the 20-cm gray circle, mean endpoint variance was 1.4 times as much as with the standard V target. Subjects who wore goggles with one eye covered and the other eye blurred tended to become less variable (mean endpoint variance was 0.7 times as much as the standard, but this trend was not uniform across individual subjects). The effect of making the V target appear to vibrate was unclear; mean endpoint variance was 1.3 times as much as standard for a 5-mm-amplitude vibration and 0.7 times as much for a 50-mm-amplitude vibration, but not all individuals in the 5-mm group increased in endpoint variance. In the final manipulation, the 12 × 12-mm white box disappeared and the entire display was covered with a mask of random visual noise except for the fixation point. After 6 s, the mask and fixation point disappeared, and the subject reached to the remembered location of the V target. Mean endpoint variance was 3.6 times as much as with the standard target.

Given these results, we chose manipulations that were not identical in nature but produced changes in variance of endpoint error that were consistent and on the same order of magnitude. We used the “standard” V and P targets for the high-salience condition. The low-salience P targets were the soft plush 10-cm-diam balls. The low-salience V targets were the disappearing 12 × 12-mm white box followed by a random visual noise mask and 6-s delay.

Derivation of Eq. 6

In this study, we did not directly assess the variance of subjects' sensory estimates of target position ($\sigma^2_{V\,\mathrm{target}}$ and $\sigma^2_{P\,\mathrm{target}}$ in Eq. 5 from methods). We have only the variance of their actual reaching endpoints (Fig. 1C) to V targets ($\sigma^2_{V\,\mathrm{endpoints}}$) and to P targets ($\sigma^2_{P\,\mathrm{endpoints}}$), which reflects variance of the proprioceptive estimate of the reaching hand's position ($\sigma^2_{P\,\mathrm{reaching\ hand}}$) in addition to variance of the target ($\sigma^2_{V\,\mathrm{target}}$ or $\sigma^2_{P\,\mathrm{target}}$)

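$$\sigma^2_{V\,\mathrm{endpoints}} = \sigma^2_{V\,\mathrm{target}} + \sigma^2_{P\,\mathrm{reaching\ hand}} \tag{A1}$$

$$\sigma^2_{P\,\mathrm{endpoints}} = \sigma^2_{P\,\mathrm{target}} + \sigma^2_{P\,\mathrm{reaching\ hand}} \tag{A2}$$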

There may also be a contribution of motor variance due to movement of the reaching hand, but we are assuming this is small because subjects were told to move slowly and accurately and to make as many adjustments as they wanted.

To approximate the variance of subjects' sensory estimates of target position ($\sigma^2_{V\,\mathrm{target}}$ and $\sigma^2_{P\,\mathrm{target}}$), we can make use of the finding that proprioceptive variance of the two hands is similar (van Beers et al. 1998)

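$$\sigma^2_{P\,\mathrm{reaching\ hand}} \approx \sigma^2_{P\,\mathrm{target}} \tag{A3}$$

$$\sigma^2_{V\,\mathrm{endpoints}} \approx \sigma^2_{V\,\mathrm{target}} + \sigma^2_{P\,\mathrm{target}} \tag{A4}$$

$$\sigma^2_{P\,\mathrm{endpoints}} \approx 2\,\sigma^2_{P\,\mathrm{target}} \tag{A5}$$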

Solving for $\sigma^2_{V\,\mathrm{target}}$ and $\sigma^2_{P\,\mathrm{target}}$ yields

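$$\sigma^2_{V\,\mathrm{target}} \approx \sigma^2_{V\,\mathrm{endpoints}} - \frac{\sigma^2_{P\,\mathrm{endpoints}}}{2} \tag{A6}$$

$$\sigma^2_{P\,\mathrm{target}} \approx \frac{\sigma^2_{P\,\mathrm{endpoints}}}{2} \tag{A7}$$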

Now we can substitute Eqs. A6 and A7 into Eq. 5

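$$W_{V\,\mathrm{minvar}} = \frac{\sigma^2_{P\,\mathrm{target}}}{\sigma^2_{V\,\mathrm{target}} + \sigma^2_{P\,\mathrm{target}}} \approx \frac{\sigma^2_{P\,\mathrm{endpoints}}}{2\,\sigma^2_{V\,\mathrm{endpoints}}} \tag{A8}$$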
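Under the assumptions stated in this appendix (endpoint variance is the sum of target variance and reaching-hand proprioceptive variance, and proprioceptive variance is similar for the two hands), the predicted visual weight can be computed from endpoint variances alone. The following Python sketch illustrates the calculation. It assumes that Eq. 5 is the standard minimum variance weight, in which the visual weight equals the proprioceptive target variance divided by the sum of the two target variances; the function name and the example values are hypothetical.

```python
def wv_minvar(var_v_endpoints: float, var_p_endpoints: float) -> float:
    """Predicted visual weight from endpoint variances on V-target and P-target reaches.

    Assumptions (labeled, not taken from the authors' code):
      * endpoint variance = target variance + reaching-hand proprioceptive variance
      * reaching-hand proprioceptive variance ~= P target variance
      * Eq. 5 is the standard minimum variance weight
    """
    if var_v_endpoints <= 0 or var_p_endpoints <= 0:
        raise ValueError("endpoint variances must be positive")
    # Half of the P-endpoint variance is attributed to the P target estimate.
    var_p_target = var_p_endpoints / 2.0
    # The V-endpoint variance minus the reaching-hand share gives the V target variance.
    var_v_target = var_v_endpoints - var_p_target
    return var_p_target / (var_v_target + var_p_target)


# Hypothetical endpoint variances (mm^2), for illustration only.
equal_case = wv_minvar(100.0, 100.0)
noisy_vision_case = wv_minvar(200.0, 100.0)
print(equal_case, noisy_vision_case)
```

The expression reduces to the P-endpoint variance divided by twice the V-endpoint variance. Note that if the P-endpoint variance exceeds twice the V-endpoint variance, the estimated V target variance becomes negative and the predicted weight exceeds 1, which signals that the equal-variance assumption does not hold for that data set.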

REFERENCES

- Botvinick M, Cohen J. Rubber hands “feel” touch that eyes see. Nature 391: 756, 1998

- Burge J, Ernst MO, Banks MS. The statistical determinants of adaptation rate in human reaching. J Vis 8: 1–19, 2008

- Cheng S, Sabes PN. Calibration of visually guided reaching is driven by error-corrective learning and internal dynamics. J Neurophysiol 97: 3057–3069, 2007

- Crowe A, Keessen W, Kuus W, van Vliet R, Zegeling A. Proprioceptive accuracy in two dimensions. Percept Mot Skills 64: 831–846, 1987

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002

- Ernst MO, Banks MS, Bulthoff HH. Touch can change visual slant perception. Nat Neurosci 3: 69–73, 2000

- Ernst MO, Bulthoff HH. Merging the senses into a robust percept. Trends Cogn Sci 8: 162–169, 2004

- Foley JM, Held R. Visually directed pointing as a function of target distance, direction, and available cues. Percept Psychophys 12: 263–268, 1972

- Ghahramani Z, Wolpert DM, Jordan MI. Computational models for sensorimotor integration. In: Self-Organization, Computational Maps and Motor Control, edited by Morasso PG, Sanguineti V. Amsterdam: North-Holland, 1997, p. 117–147

- Haggard P, Newman C, Blundell J, Andrew H. The perceived position of the hand in space. Percept Psychophys 62: 363–377, 2000

- Hagura N, Takei T, Hirose S, Aramaki Y, Matsumura M, Sadato N, Naito E. Activity in the posterior parietal cortex mediates visual dominance over kinesthesia. J Neurosci 27: 7047–7053, 2007

- Jacobs RA. Optimal integration of texture and motion cues to depth. Vision Res 39: 3621–3629, 1999

- Kelso JA, Cook E, Olson ME, Epstein W. Allocation of attention and the locus of adaptation to displaced vision. J Exp Psychol Hum Percept Perform 1: 237–245, 1975

- Mon-Williams M, Wann JP, Jenkinson M, Rushton K. Synesthesia in the normal limb. Proc Biol Sci 264: 1007–1010, 1997

- Naito E. Sensing limb movements in the motor cortex: how humans sense limb movement. Neuroscientist 10: 73–82, 2004

- Shams L, Kamitani Y, Shimojo S. Illusions. What you see is what you hear. Nature 408: 788, 2000

- Smeets JB, van den Dobbelsteen JJ, de Grave DD, van Beers RJ, Brenner E. Sensory integration does not lead to sensory calibration. Proc Natl Acad Sci USA 103: 18781–18786, 2006

- Sober SJ, Sabes PN. Multisensory integration during motor planning. J Neurosci 23: 6982–6992, 2003

- Sober SJ, Sabes PN. Flexible strategies for sensory integration during motor planning. Nat Neurosci 8: 490–497, 2005

- Trommershauser J, Gepshtein S, Maloney LT, Landy MS, Banks MS. Optimal compensation for changes in task-relevant movement variability. J Neurosci 25: 7169–7178, 2005

- van Beers RJ, Baraduc P, Wolpert DM. Role of uncertainty in sensorimotor control. Philos Trans R Soc Lond B Biol Sci 357: 1137–1145, 2002

- van Beers RJ, Sittig AC, Denier van der Gon JJ. How humans combine simultaneous proprioceptive and visual position information. Exp Brain Res 111: 253–261, 1996

- van Beers RJ, Sittig AC, Denier van der Gon JJ. The precision of proprioceptive position sense. Exp Brain Res 122: 367–377, 1998

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol 81: 1355–1364, 1999

- Warren DH, Schmitt TL. On the plasticity of visual-proprioceptive bias effects. J Exp Psychol Hum Percept Perform 4: 302–310, 1978

- Wann JP, Ibrahim SF. Does limb proprioception drift? Exp Brain Res 91: 162–166, 1992

- Welch RB. The effect of experienced limb identity upon adaptation to simulated displacement of the visual field. Percept Psychophys 12: 453–456, 1972

- Welch RB. Perceptual Modification. New York: Academic, 1978

- Welch RB, Warren DH. Immediate perceptual response to intersensory discrepancy. Psychol Bull 88: 638–667, 1980

- Welch RB, Widawski MH, Harrington J, Warren DH. An examination of the relationship between visual capture and prism adaptation. Percept Psychophys 25: 126–132, 1979