Abstract

Driven by the desire to understand genomic functions through the interactions among genes and gene products, the research in gene regulatory networks has become a heated area in genomic signal processing. Among the most studied mathematical models are Boolean networks and probabilistic Boolean networks, which are rule-based dynamic systems. This tutorial provides an introduction to the essential concepts of these two Boolean models, and presents the up-to-date analysis and simulation methods developed for them. In the Analysis section, we will show that Boolean models are Markov chains, based on which we present a Markovian steady-state analysis on attractors, and also reveal the relationship between probabilistic Boolean networks and dynamic Bayesian networks (another popular genetic network model), again via Markov analysis; we dedicate the last subsection to structural analysis, which opens a door to other topics such as network control. The Simulation section will start from the basic tasks of creating state transition diagrams and finding attractors, proceed to the simulation of network dynamics and obtaining the steady-state distributions, and finally come to an algorithm of generating artificial Boolean networks with prescribed attractors. The contents are arranged in a roughly logical order, such that the Markov chain analysis lays the basis for the most part of Analysis section, and also prepares the readers to the topics in Simulation section.

1. INTRODUCTION

In most living organisms, genome carries the hereditary information that governs their life, death, and reproduction. Central to genomic functions are the coordinated interactions between genes (both the protein-coding DNA sequences and regulatory non-coding DNA sequences), RNAs and proteins, forming the so called gene regulatory networks (or genetic regulatory networks).

The urgency of understanding gene regulations from systems level has increased tremendously ever since the early stage of genomics research. A driving force is that, if we can build good gene regulatory network models and apply intervention techniques to control the genes, we may find better treatment for diseases resulting from aberrant gene regulations, such as cancer. In the past decade, the invention of high throughput technologies has made it possible to harvest large quantities of data efficiently, which is turning the quantitative study of gene regulatory networks into a reality. Such study requires the application of signal processing techniques and fast computing algorithms to process the data and interpret the results. These needs in turn have fueled the development of genomic signal processing and the use of mathematical models to describe the complex interactions between genes.

The roles of mathematical models for gene regulatory networks include:

Describing genetic regulations at a system level;

Enabling artificial simulation of network behavior;

Predicting new structures and relationships;

Making it possible to analyze or intervene in the network through signal processing methods.

Among various mathematical endeavors are two Boolean models, Boolean networks (BNs) [1] and probabilistic Boolean networks (PBNs) [2], in which each node (gene) takes on two possible values, ON or OFF (or 1 and 0), and the way genes interact with each other is formulated by standard logic functions. They constitute an important class of models for gene regulatory networks, in that they capture some fundamental characteristics of gene regulations, are conceptually simple, and their rule-based structures bear physical and biological meanings. Moreover, Boolean models can be physically implemented by electronic circuits, and demonstrate rich dynamics that can be studied using mathematical and signal processing theory (for instance, Markov chains [2, 3]).

In practice, Boolean models have been successfully applied to describe real gene regulatory relations (for instance, the drosophila segment polarity network [4]), and the attractors of BNs and PBNs have been associated with cellular phenotypes in the living organisms [5]. The association of network attractors and actual phenotypes has inspired the development of control strategy [6] to increase the possibility of reaching desirable attractors (“good” phenotypes) and decrease the likelihood of undesirable attractors (“bad” phenotypes such as cancer). The effort of applying control theory to Boolean models is especially appealing in the medical community, as it holds potential to guide the effective intervention and treatment in cancer.

The author would like to bring the fundamentals of Boolean models to a wider audience in light of their theoretical value and pragmatic utility. This tutorial will introduce the basic concepts of Boolean networks and probabilistic Boolean networks, present the mathematical essentials, and discuss some analyses developed for the models and the common simulation issues. It is written for researchers in the genomic signal processing area, as well as researchers with general mathematics, statistics, engineering, or computer science backgrounds who are interested in this topic. It intends to provide a quick reference to the fundamentals of Boolean models, allowing the readers to apply those techniques to their own studies. Formal definitions and mathematical foundations will be laid out concisely, with some in-depth mathematical details left to the references.

2. PRELIMINARIES

In Boolean models, each variable (known as a node) can take two possible values, 1 (ON) and 0 (OFF). A node can represent a gene, RNA sequence, or protein, and its value (1 or 0) indicates its measured abundance (expressed or unexpressed; high or low). In this paper, we use “node” and “gene” interchangeably.

A state in Boolean models is a binary vector of all the gene values measured at the same time, and is also called the gene activity (or expression) profile (GAP). The state space of a Boolean model consists of all the possible states, and its size will be 2n for a model with n nodes.

Definition 1[2, 7] A Boolean network is defined on a set of n binary-valued nodes (genes) , where each node xi has ki parent nodes (regulators) chosen from V, and its value at time t + 1 is determined by its parent nodes at t through a Boolean function fi,

| (1) |

ki is called the connectivity of xi, and fi is the regulatory function. Defining network function f = (f1,...,fn), we denote the Boolean network as β(V, f). Let the network state at time t be x(t) = (x1(t),...,xn(t)), the state transition x(t) → x(t + 1) is governed by f, written as x(t + 1) = f(x(t)).

In Boolean networks, genetic interactions and regulations are hard-wired with the assumption of biological determinism. However, any gene regulatory network is not a closed system and has interactions with its environment and other genetic networks, and it is also likely that genetic regulations are inherently stochastic; therefore, Boolean networks will have limitations in their modeling power. Probabilistic Boolean networks were introduced to address this issue [2, 7], such that they are composed of a family of Boolean networks, each of which is considered a context [8]. At any given time, gene regulations are governed by one component Boolean network, and network switchings are possible such that at a later time instant, genes can interact under a different context. In this sense, probabilistic Boolean networks are more flexible in modeling and interpreting biological data.

Definition 2 [2, 3, 7] A probabilistic Boolean network is defined on , and consists of r Boolean networks β1(V,f1),...,βr(V,fr), with associated network selection probabilities c1,...,cr such that . The network function of the j -th BN is . At any time, genes are regulated by one of the BNs, and at the next time instant, there is a probability q (switching probability) to change network; once a change is decided upon, we choose a BN randomly (from r BNs) by the selection probabilities. Let p be the rate of random gene perturbation (flipping a gene value from 0 to 1 or 1 to 0), the state transition of PBN at t (assuming operation under βj) is probabilistic, namely [3],

| (2) |

where ⊕ is bit-wise modulo-2 addition, γ=(γ1,...,γn) is a random vector with Pr{γi=1}= p, and x(t)⊕γ denotes a random perturbation on the state x(t) (one or more genes are flipped). Let the set of network functions be F={f1,...,fr}, and we denote the PBN by G(V,F,c,p) (see Remark 1).

Alternatively, the PBN can be represented as G(V,Ψ,α,p), with Ψ = {Ψ1,...,Ψn} and α={α1,...,αn}. In this representation, each node xi is regarded as being regulated by a set of l(i) Boolean functions with the corresponding set of function selection probabilities . The two representations are related such that any network function fj is a realization of the regulatory functions of n genes by choosing one function from the function set Ψi for each gene xi, and we can write

| (3) |

Moreover, if it is an independent PBN, namely , c and α are related by

| (4) |

Remark 1 q does not appear in the PBN representation, because according to the network switching scheme described, it can be shown that the probability of being in the βj at any time is equal to cj, regardless of q. However, if we modify the network switching scheme such that, once a network switch is decided upon, we randomly choose any network other than the current network, it will require the definition of r(r–1) conditional selection probabilities, , and the derivation of Pr{fj} (the probability of being in βj) is left as an exercise to the reader.

A Boolean model with finite number of nodes has a finite state space. From the definition of Boolean network, it follows that its state transitions are deterministic, that is, given a state, its successor state is unique. Naturally, if we represent the whole state space and the transitions among the sates of a BN graphically, we can have a state transition diagram.

Definition 3 The state transition diagram of an n-node Boolean network β(V, f) is a directed graph D(S,E). S is a set of 2n vertices, each representing a possible state of a Boolean network; E is a set of 2n edges, each pointing from a state to its successor state in state transition. If a state transits to itself, then the edge is a loop. The state transitions are computed by evaluating x(t+1) = f(x(t)) exactly 2n times, each time x(t) being 00...0,00...1,...,11...1 respectively.

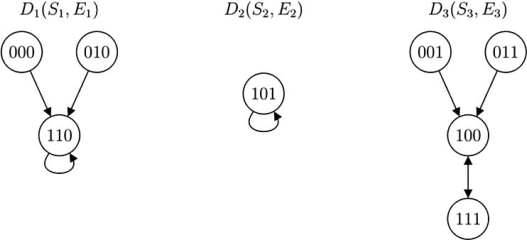

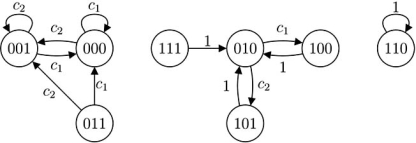

Fig. (1) is an example of state transition diagram of a three-node BN. Like BNs, a PBN also has finite state space. Although state transitions in a PBN are not deterministic, they can be represented probabilistically. We will show how to construct the state transition diagram of a PBN in the Simulation section.

Fig. (1).

A state transition diagram D(S, E).

With the help of state transition diagram, such as the one in Fig. (1), we can easily visualize that in a BN, any state trajectory in time x(0)→ x(1)→ x(2)→ ... must end up in a “trap”, and stay there forever unless a gene perturbation occurs. Similarly, if neither gene perturbation nor network switching has occurred, a time trajectory in a PBN will end up in a “trap” in one of the component BNs too; however, either gene perturbation or a network switch may cause it to escape from the trap. In spite of this, when gene perturbation and network switching are rare, a PBN is most likely to reach a “trap” before either occurs and will spend a reasonably long time there.

Definition 4 Starting from any initial state in a finite Boolean network, when free of gene perturbation, state transitions will allow the network to reach a finite set of states {a1,...,am} and cycle among them in a fixed order forever. The set of states is called an attractor, denoted by A. If A contains merely one state, it is a singleton attractor; otherwise, it is an attractor cycle. The set of states from which the network will eventually reach an attractor A constitutes the basin of attraction of A. A BN may have more than one attractor.

The attractors of a PBN are defined as the union of attractors of its component BNs. In particular, if a PBN is composed of r BNs, and the k -th BN has mk attractors, Ak1 ,Ak2,..., Akmk , then the attractors of PBN are .

In a BN, different basins of attraction are depicted in the state transition diagram as disjoint subgraphs. In Fig. (1), D(S, E) is composed of three disjoint subgraphs, D1(S1,E1), D2(S2,E2) , and D3(S3,E3). 110 and 101 are singleton attractors, while 100 and 111 constitute a cycle. Their respective basins of attraction are S1 = {000,010,110}, S2 = {101} and S3 = {001,011,100,111}.

We are interested in the attractors of a Boolean model for at least two reasons: (1) Attractors represent the stable states of a dynamic system, thus they are tied to the long term behavior of Boolean models; (2) Earlier researchers demonstrated the association of cellular phenotype with attractors [5], thus giving a biological meaning to the attractors. Intuitively, when an attractor has a large basin of attraction, the corresponding phenotype is more likely than that of an attractor with much smaller basin of attraction. To develop intervention strategies that change the long term behavior of Boolean models, it is important to study the attractors.

3. ANALYSES OF BOOLEAN MODELS

Although analysis and simulation are two parallel subjects with Boolean models, the former includes some essential results that lay a foundation for the latter. In this section, we visit Boolean model analysis first.

One of the central ideas with Boolean models is their connection with Markov chains (subsection 3.1). Because of this, Boolean models, under certain conditions, possess steady-state distributions. The steady-state probabilities of attractors, which indicate the long-run trend of network dynamics, can be found analytically via Markov chain analysis (subsection 3.2). Moreover, the relationship between PBNs and Bayesian networks (another class of gene regulatory network models) can be established in a similar manner (subsection 3.3). Lastly, a subsection will be dedicated to structural analysis, which opens a door to other topics beyond this tutorial (such as control of genetic networks).

3.1. Markov Chain Analysis

As readers will find out soon, the transition probability matrix introduced below is not only a convenience in Markov chain analysis, but also finds itself useful in simulation, to be discussed in Section 4.

3.1.1. Transition Probability Matrix

On a Boolean model of n nodes, a transition probability matrix can be defined where tij indicates the probability of transition from one state (which is equal to i–1 if we convert the binary vector to an integer) to another state (which corresponds to j–1).

In a Boolean network β(V,f), tij can be computed by

| (5) |

where dec(.) converts a binary vector to an integer, for instance, dec(00101) = 5. Since BN is deterministic, T contains one 1 on each row, and all other elements are 0's.

In a PBN consisting of r BNs β1(V,f1),...,βr(V,fr), tij can be computed as follows [2, 3]. Note that p (random gene perturbation rate) and γ are defined as in Definition 2, and ck is the selection probability of βk.

| (6) |

where 1 's are indicator functions, , l=number of 1's in the random vector γ=(γ1,...,γn), and l indicates the Hamming distance between s and w.

When taking a closer look at Eq. (6), we find that T is the sum of a fixed transition matrix and a perturbation matrix ,

| (7) |

| (8) |

where Tj and cj are the transition probability matrix and the network selection probability of the j -th Boolean network, respectively; ηij is the Hamming distance between states s and w, with dec(s) = i–1 and dec(w) = j–1.

Tj is sparse with only 2n non-zero entries (out of 2n×2n entries), where each is a state transition driven by the network function fj and involves n computations. depends only on n and p , and involves n computations. Thus, the computational complexity for T is [9].

3.1.2. Boolean Models are Markov Chains

Given the definition of T matrix in Section 3.1.1, we can see that a Boolean model with n genes is a homogeneous Markov chain of N = 2n states, with T being the Markov matrix and . A state x (a binary vector of length n) in Boolean model has one-to-one correspondence with the i -th state (1 ≤ i ≤ N) in the associated Markov chain by dec(x) = i–1.

What is the use of matrix T ? Let W = Tn, we can show that the (i,j) -th element of W is equal to the probability of transition from the i -th state to the j -th state of the Markov chain in n steps, .

The proof is left as an exercise to the reader.

An N -state Markov chain possesses a stationary distribution (or invariant distribution) if there exists a probability distribution π = (π1,...,πN) such that

π = πT.

π = πT implies . Thus in a Markov chain with stationary distribution π , if we start from the i -th state with probability πi, the chance of being in any state j after an arbitrary number of steps is always πj .

An N -state Markov chain possesses a steady-state distribution if starting from any initial distribution π

it means that regardless of the initial state, the probability of a Markov chain being in the i -th state in the long run is . A Markov chain possessing a stationary distribution does not necessarily possess a steady-state distribution.

Why should it be of our concern if the Markov chain has a steady-state distribution or not? This is because we are interested in the Boolean model associated with the Markov chain, and would like to know how it behaves in the long-run. As a reminder, the attractors of a Boolean model are often associated with cellular phenotype, and by finding out the steady-state probabilities of a given attractor, we can have a general picture of the likelihood of a certain phenotype. When a Boolean model possesses (namely, its Markov chain possesses) a steady-state distribution, we can find those probabilities by simulating the model for a long time, starting from an arbitrary initial state x(0). In fact, this implies the equivalence of “space average” and “time average”, as is a common concept in stochastic processes.

When will a Markov chain possess a steady-state distribution? It turns out that an ergodic Markov chain will do. A Markov chain is said to be ergodic if it is irreducible and aperiodic [10].

Definition 5 A Markov chain is irreducible if it is possible to go from every state to every state (not necessarily in one move).

Definition 6 In a Markov chain, a state has period d if starting from this state, we can only return to it in n steps and n is a multiple of d. A state is periodic if it has some period > 1. A Markov chain is aperiodic if none of its state is periodic.

A Boolean network possesses a stationary distribution, but not a steady-state distribution unless it has one singleton attractor and no other attractors. Here we show how to find a stationary distribution. Assume a BN has m singleton attractors, a1,...,am, or an attractor cycle {a1,...,am}, where dec(a1) = i1–1,...,dec(am)=im–1, then π with and is a stationary distribution (the proof is left as an exercise to the reader). If a BN has a combination of singleton attractors and cycles, π can be constructed such that the probabilities corresponding to the singleton attractors are equal, the probabilities corresponding to the states within each attractor cycle are equal, and . When there is only one attractor in the BN, the stationary distribution is unique.

When p,q> 0, a PBN possesses steady-state distribution, because the Markov chain corresponding to the PBN is ergodic. Interested readers can find the proof in [3]. Now that PBN has a steady-state distribution, we can obtain such distribution in two ways: (2) solving the linear equations (I is the identity matrix), and interested readers can consult books on linear algebra; (2) using the empirical methods in Section 4.3. If we are interested in the steady-state probabilities of the attractors only, an analytic method exists, to be discussed next.

3.2. Analytic Method for Computing the Steady-State Probabilities of Attractors

Recall from Section 2 that attractors are important to the long-term behavior of Boolean models because they are associated with cellular phenotypes; now we also know that PBNs possess steady-state distributions, which means that a PBN has a unique long-term trend independent of initial state. Therefore, we would naturally ask the question, how can we find the long-term probabilities of these attractors which are so important to us?

In the following, we will present a Markov chain based analytic method that answers this question, and more details, including proofs, can be found in [11].

3.2.1. Steady-State Distributions of Attractors in a BN with Perturbations

First consider a special case of PBN, Boolean network with perturbations (BNp), in which any gene has a probability p of flipping its value. A BNp inherits all the attractors and corresponding basins of attraction from the original BN. Because of the random gene perturbations, BNps possess steady-state distributions (the proof is similar to that of PBN, and it is left as an exercise to the reader).

A BNp defined on V = {x1,...,xn} with gene perturbation rate p can be viewed as homogenous irreducible Markov chain Xt with state space {0,1}n. Let be any two states, then at any time t,Py(x)=Pr{Xt+1=x|Xt=y} is the probability of state transition from y to x.

For Xt, there exists a unique steady-state distribution π. Let the steady-state probability of state x be π(x), and let be a collection of states, then the steady-state probability of B is .

Assume the BNp has attractors A1,...,Am, with corresponding basins of attraction (or simply referred to as basins) B1,...,Bm. Since the attractors are subsets of the basins,

| (9) |

Therefore, we can compute the steady-state probability of any attractor Ak by the following two steps: (1) the steady-state probability of basin Bk, π(Bk), and (2) the conditional probability of attractor Ak given its being in Bk, π(Ak|Bk).

(I). Obtaining the Steady-State Probability of Basin, π(Bk)

Define a random variable τ(t) which measures the time elapsed between the last perturbation and the current time t. τ(t) = 0 means a perturbation occurs at t. For any starting state h, let

| (10) |

and define the conditional probability of being in state given that the system is inside a set B, prior to a perturbation,

| (11) |

The following theorem represents the steady-state distribution of the basins as the solution of a group of linear equations, where the coefficients are 's. The lemma that follows gives the formula for the coefficients.

Theorem 1

| (12) |

Lemma 1

| (13) |

where is the probability that state transition goes from y to x in one step by gene perturbation.

Now the only unknown is π*(y|Bi). When p is small, the system spends majority of the time inside an attractor, and we can use the following approximation,

| (14) |

where |Ai| is the cardinality of Ai. Therefore,

| (15) |

(II). Obtaining the Steady-State Probability of Attractor, π(Ak)

Lemma 2 For basin Bk, initial state h, and fixed value j≥0,

| (16) |

Lemma 3 If δ(x,Ak) is the number of iterations of f needed to reach the attractor Ak from the state x, then for any ,

| (17) |

Applying the two lemmas and letting b=(1-p)n, we can obtain the steady-state probability of attractor Ak.

Theorem 2

| (18) |

When p is small, using the approximation in Eq. (14), we have

| (19) |

3.2.2. Steady-State Distributions of Attractors in a PBN

In a PBN, we represent the pair (x,f) as the state of a homogeneous Markov chain, (Xt,Ft), and the transition probabilities are defined as

| (20) |

Assume the PBN is composed of r BNs β1(V,f1),...,βr(V,fr). Within BN βk, the attractors and basins are denoted Aki and Bki, i = 1,...,mk. The computation of the steady-state probabilities are now split into three steps: (1) steady-state probabilities π(Bki,fk) of the basins, (2) conditional probabilities π(Aki,fk|Bki,fk), and (3) approximation to the marginal steady-state probabilities π(Aki) (since different BNs may have the same attractor).

The computations in steps (1) and (2) are similar to that of BNp, with (Bki,fk) in place of Bk whenever applicable, and there is one extra summation for the r component BNs. Interested readers can find details in [11].

From steps (1) and (2), we can obtain π(Aki,fk). The last step sums up π(Aki,fl) over l whenever the l -th BN has Aki as an attractor,

| (21) |

Since π(Aki,fl) is unknown when k ≠ l, we use the following approximation when p is small,

| (22) |

Thus,

| (23) |

and

| (24) |

3.3. Relationship Between PBNs and Bayesian Networks

Bayesian networks (BaN) are graphic models that describe the conditional probabilistic dependencies between variables, and have been used to model genetic regulatory networks [12]. An advantage of BaNs is that they involve model selection to optimally explain the observed data [2]; BaNs can use either continuous or discrete variables, which is more flexible for modeling. In comparison, Boolean models have explicit regulatory rules that carry biological information, which can be more appealing to biologist than the statistic representation of BaNs. Although Boolean models use binary-quantized variables which sets a limitation on the data usage, they are computational less complex than BaNs when learning the network structure from data (see Section 3.3 of [2] for a more detailed discussion and references). Since network structure learning is out of scope of this article, interested readers can refer to [12] for Bayesian learning, [13] for Boolean network learning, and [8, 14] for PBN learning.

While BNs are deterministic, PBNs and BaNs are related by their probabilistic nature; like PBNs, dynamic BaNs can be considered as Markov chains too. In the following analysis, we will show that equivalence between PBNs and BaNs can be established under certain conditions [15]. In this analysis, the random gene perturbation rate p in PBN is assumed to be 0.

A BaN with n random variables X1,...,Xn (not necessarily binary) is represented by Ba(H,Θ), where H is a directed acyclic graph whose vertices correspond to the n variables and Θ is a set of conditional probability distributions induced by graph H. Letting X = (X1,...,Xn), xi be a realization of the random variable Xi, and Pa(Xi) be the parents of Xi, the unique joint probability distribution over the n variables is given by

A dynamic Bayesian network (DBN) is a temporal extension of BaN, and consists of two parts: (1) an initial BaN Ba0 = (H0,Θ0) that defines the joint distribution of the variables x1(0),...,xn(0), and (2) a transition BaN Ba1 = (H1,Θ1) that defines the transition probabilities . Let x represent a realization of X, and the joint distribution of X(0),...,X(T) can be expressed by

| (25) |

In a PBN G(V,F,c), where and F = {f1,...,fr}, the joint probability distribution of states over the time period [0,T] can be expressed as

For an independent PBN,

| (26) |

3.3.1. An Independent PBN as a Binary-Valued DBN

Let the independent PBN be G(V,Ψα) (the alternative representation, see what follows Definition 2). First, since a BaN can represent arbitrary joint distribution, the distribution of the initial state of PBN, Pr{x0} , can be represented by some Ba0. Second, to construct Ba1(H1,Θ1) from the PBN, we let set denote the regulators of gene xi in function ,

| (27) |

We construct graph H1 such that there are two layers of nodes, the first layer has nodes X1(t–1),...,Xn(t–1), the second layer has nodes X1(t),...,Xn(t), and there exists a directed edge from in the PBN such that . Thus in H1, Pa(Xi(t)) corresponds to the set of all possible regulators of xi in the PBN.

Let Di be the joint distribution of the variables in Pa(xi), and recall that , then

| (28) |

| (29) |

| (30) |

and we have

| (31) |

Eq. (31) defines Θ1 (induced by H1) for each node, thus any independent PBN G(V,Ψ,α) can be expressed as a binary DBN (Ba0,Ba1).

Remark 2 Strictly speaking, the input variables for are a subset of Pa(xi), so the notations in Eqs. (29-31) are not accurate when we use the same vector x (or z) for and for Di (or Pa(Xi(t))). We should understand that those notations are only used as a convenience.

3.3.2. A Binary-Valued DBN as an Independent PBN

Assume DBN (Ba0,Ba1) defined on X=(X1,...,Xn) is given, and Xi's are binary-valued random variables. Now we demonstrate how to construct a PBN. Define the set of nodes V = {x1,...,xn} in PBN corresponding to X1,...,Xn, and let the distribution of PBN initial state x0 = (x1(0),...,xn(0)) match Θ0 in Ba0(H0,Θ0).

In , Ba1(H1,Θ1), assume Pa(Xi(t)) contains ki variables Xi1,...,Xiki. For each Xi, we enumerate each conditional probability regarding Xi(t) in Θ1 as a triplet and there are 2ki+1 such triplets. The triplets are arranged such that the first 2ki of them have zj = 1, and pj's are in ascending order. For every j≤2ki, define a sequence of symbols , where we choose the variable xjd for symbol , and choose (the negation of variable xjd) for symbol .

Letting l(i)=2ki+1, we define the set of l(i) Boolean functions for gene xi in the PBN as , where

| (32) |

is a disjunction of conjunctions, and is a zero function. Define the corresponding function selection probabilities it can be verified that

| (33) |

Therefore, a binary DBN can be represented as a PBN G(V,Ψ,α), where Ψ={Ψ1,...,Ψn}, α = {α1,...,αn}, and . It should be noted that the mapping from a binary DBN to an independent PBN is not unique, and the above representation is one solution.

Summarizing subsections 3.3.1 and 3.3.2, we have the following theorem [15].

Theorem 3 Independent PBNs G(V,Ψ,α) and binary-valued DBNs (Ba0,Ba1) whose initial and transition BNs Ba0 and Ba1 are assumed to have only within and between consecutive slice connections, respectively, can represent the same joint distribution over their common variables.

3.4. Structural Analysis

Boolean models, like any other networks, have two issues of interest: Is the model robust? Is the model controllable? From the standpoint of system stability, we require the model be robust, namely, resistent to small changes in the network; from the standpoint of network intervention, we desire that the network be controllable, such that it will respond to certain perturbation. There needs to be a balance of the two properties. These two questions encourage researchers to do the following, (1) Find structural properties of the network that are related to robustness and controllability; (2) Seek ways to analyze the effect of perturbations and to design control techniques.

In 3.4.1, (1) is addressed. We review some structural measures of Boolean models that quantify the propagation of expression level change from one gene to others (or vice versa). In 3.4.2, (2) is partly addressed, where we review structural perturbations, and present a methodology that analyzes the perturbation on Boolean functions. Since the control techniques are out of the scope of this paper, interested readers can find more information in the review articles [16, 17].

3.4.1. Quantitative Measures of the Structure

In gene regulatory networks, the interactions among genes are reflected by two facts: the connections among genes, and the Boolean functions defined upon the connection. No matter it is the robustness or the controllability issue we are interested in, it all boils down to one central question: how a change in the expression level of one gene leads to changes in other genes in the network and vice versa. Here, we introduce three measures of the structural properties that are related to the question: canalization, influence and sensitivity.

When a gene is regulated by several parent genes through function f, some parent genes can be more important in determining its value than others. An extreme case is canalizing function, in which one variable (canalizing variable) can determine the function output regardless of other variables.

Definition 7 [18] A Boolean function f : {0,1}n → {0,1} is said to be canalizing if there exists an and such that for all , if xi = u then f(x1,...,xn)=v.

In gene regulatory networks, canalizing variables are also referred to as the master genes. Canalization is commonly observed in real organisms, and it plays an important role in the stability of genetic regulation, as discussed in [19, 20]. Mathematically, researchers have shown that canalization is associated with the stability of Boolean networks. For more theoretical work, see [21-23].

Other than canalization, the degree of gene-gene interaction can be described in more general terms, and we define two quantitative measures, influence and sensitivity, as follows.

Consider a Boolean function f with input variables x1,...,xn. Letting x = (x1,...,xn), we define the influence of a gene on the function f.

Definition 8 [2] The influence of a variable xj on the Boolean function f is the expectation of the partial derivative with respect to the distribution D(x),

| (34) |

Note that the partial derivative of f with respect to xi is

| (35) |

in which x(j) = (x1,...,1-xj,...,xn) (with xj toggled).

In a BN, since each node xi has one regulatory function fi, so the influence of node xj (assuming it regulates xi) on xi is Ij(xi)=Ij(fi). In a PBN, let the set of regulating functions for xi is , with function selection probabilities , the influence of gene xj on xi

| (36) |

Thus for a Boolean model with n genes, an influence matrix Γ of dimension n × n can be constructed, where its i,j element being Γij = Ii(xj). We can define influence of gene xi to be the collective influence of xi on all other genes,

| (37) |

Related to influence, we define the sensitivity of a function,

| (38) |

Then the average sensitivity of f with respect to distribution D is

| (39) |

The meaning of average sensitivity is that, on average, how much the function f changes between the Hamming distance one neighbors (i.e., the input vectors differ by one bit). For PBNs, the average sensitivity of gene xi is (cf. Eq. (37))

| (40) |

Biologically, the influence of a gene indicates its overall impact on other genes. A gene with high influence has the potential to regulate the system dynamics and its perturbation has significant downstream effect. The sensitivity of a gene measures its stability or autonomy. Low sensitivity means that other genes have little effect on it, and the “house-keeping” genes usually have this property [2]. It is shown that such quantitative measures (or variants) can help guide the control of genetic networks [24] and aid in the steady-state analysis [25].

3.4.2. Structural Perturbation Analysis

There are two types of perturbation on Boolean models: perturbation on network states and perturbation on network structure. The former refers to a sudden (forced or spontaneous) change in the current state from x to x' , which causes the system dynamics to be disturbed temporarily. Such disturbance is transient in nature, because the network nodes and connections are intact, and the underlying gene regulation principles do not change. Therefore, the network attractors and the basins of attraction remain the same. However, if the perturbed Boolean model has multiple attractors, state perturbations may cause convergence to a different attractor than the original one, and may change the steady-state distribution of the network. This type of perturbation has been studied extensively (e.g. [26]), and finds its use in network control (e.g. [6]).

Perturbation on network structure refers to any change in the “wiring” or functions of the network. For instance, we may remove or add a gene to the network, change connections among genes, change the Boolean functions, or even change the synchronous Boolean network to an asynchronous model (where not all the genes are updated at the same time). Structural perturbation is more complex and less studied, compared to state perturbation. When network structure is perturbed, the network attractors and basins of attraction will be impacted, therefore the long-term consequence is more difficult to gauge than that of state perturbation.

The reasons for studying structural perturbation are: (1) modeling of gene regulatory networks is subject to uncertainty, and it is desirable to study the effect of small difference in network models on the network dynamic behavior; (2) it is likely that gene regulations, like other biological functions, have intrinsic stochasticity, and it is of interest to predict the consequence of any perturbation in regulation; (3) changing the network structure can alter the network steady-state distribution, thus structural perturbation can be an alternative way (with respect to state perturbation) of network control [25, 27, 28].

In [8], the authors developed theories to predict the impact of function perturbations on network dynamics and attractors, and main results are presented below. For more applications, see [28]. For further analysis in terms of steady-state distribution and application in network intervention, see [25].

Problem formulation. Given a Boolean network β(V,f), V = {x1,...,xn}, f = (f1,...,fn) , if one or more functions have one or more flips on their truth table outputs, we would like to predict the effect on state transitions and attractors.

Assume gene xi has ki regulators xi1,xi2,...,xiki then the truth table of fi has 2ki rows, as is shown below. The input vector on row j will be denoted , for instance, . If we flip the output on row j, then we call it a one-bit function perturbation on fi, and denote it

| Row label | fi(.) | ||||

|---|---|---|---|---|---|

| 1 | 0 | ||||

| 2 | 1 | ||||

| 0 |

Any state transition s → w contains n mappings, fi :s → wi. We define Ini(s)=(si1,si2,...,siki), which is a sub-vector of s that corresponds to the regulators of xi.

The following proposition and corollaries state the basic effects of one-bit function perturbation on the state transitions and attractors. Proofs and extensions to two-bit perturbations can be found in [28].

Proposition 1 A state transition s → w is affected by one-bit perturbation if and only if . If the state transition is affected, the new state transition will be s → w(i), where w(i) is defined to be the same as w except the i -th digit is flipped.

Corollary 1 If xi has ki regulators, then the one-bit perturbation will result in 2n-ki changed state transitions in the state transition diagram. This is equivalent to 2n-ki altered edges in the state transition diagram.

Corollary 2 (Invariant singleton attractor) Suppose state S is a singleton attractor. It will no longer be a singleton attractor following the one-bit perturbation if and only if .

Corollary 3 (Emerging singleton attractor) A non-singleton-attractor state S becomes a singleton attractor as a result of the one-bit perturbation if and only if the following are true: (1) , and (2) absent the perturbation, S → S(i).

We use the following toy example to demonstrate the above results. From these results, more applications can be derived, such as controlling the network steady-state distribution through function perturbation, or identifying functional perturbation by observing phenotype changes [28].

Example 1 Consider a BN with n = 3 genes,

| (41) |

| (42) |

| (43) |

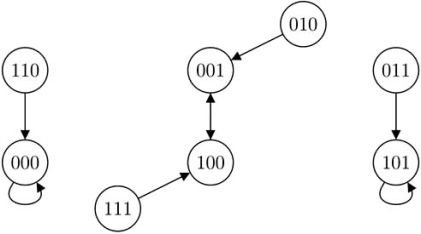

where the truth table of f3 is shown below and the state transition diagram is shown in Fig. (2).

Fig. (2).

State transition diagram of the original BN, Example 1.

| Row label | x1 | x2 | f3(.) |

|---|---|---|---|

| 1 | 0 | 0 | 0 |

| 2 | 0 | 1 | 1 |

| 3 | 1 | 0 | 1 |

| 4 | 1 | 1 | 0 |

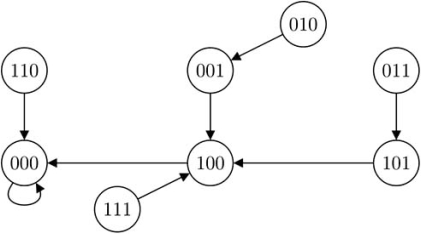

If a one-bit perturbation forces f3 to become , since k3 = 2, 2 state transitions will be affected. By Proposition 1, states 100 and 101 no longer transit to 001 and 101 but to 000 and 100 respectively. Because of that, attractor cycle {001, 100} will be affected. Moreover, Corollary 2 predicts that the singleton attractor 000 is robust to the perturbation while 101 is not. The predictions are confirmed by the new state transition diagram shown in Fig. (3).

Fig. (3).

State transition diagram of the perturbed BN, Example 1.

Finally, the author would like to remind the readers that other works on (various types of) structural perturbation are available. For instance, in [29], the authors added a redundant node to Boolean network, such that the bolstered network is more resistent to a one-bit function perturbation (as defined above). In [30], the effect of asynchronous update of a drosophila segment polarity network model is examined in terms of the phenotypes (steady-states). In [25], the authors derived analytical results of how function perturbations affects network steady-state distributions and applied them to structural intervention. In [31], the author modeled gene knockdown and broken regulatory pathway in Boolean networks, and analyzed the effects.

4. SIMULATION ISSUES WITH BOOLEAN MODELS

Recall from Section 2 that a Boolean model of n genes has a finite state space, and a BN has deterministic dynamic behavior which can be fully captured by the state transition diagram. A PBN is probabilistic in nature, therefore its state transition is also probabilistic. For both BNs and PBNs, attractors are characteristic of their long-term behavior. Given the above knowledge, if we would like to know anything about a Boolean model, we should find out its state transition diagram and attractors first. This is to be discussed in Section 4.1.

For Boolean models, the most commonly encountered simulation issues include: (1) how to generate the time sequence data of a network, x(0),x(1),...,x(t),...; (2) how to find the network steady-state distribution if it exists; and (3) how to produce artificial Boolean models with prescribed attractors to facilitate other studies. Among them, (1) is a basic practice that can be utilized in (2) and (3), and we will deal with them in Sections 4.2, 4.3 and 4.4 respectively. Note that the techniques in 4.1 is crucial to all the three issues.

4.1. Generating State Transition Diagram and Finding Attractors

To obtain the state transition diagram of a BN, we first compile a state transition table. Assuming n nodes in the network, x1,...,xn, we evaluate the current state x(t) to be 00...0, 00...1, ..., 11...0, and 11...1 in turn, compute their respective x(t+1) 's, and tabulate the results. The states can also be represented by integers instead of binary vectors. Table 1 is an example when n = 3. In practice, we only store the second row (“next states”) for computational purpose, because by default the current states are always arranged such that they correspond to integers 0,1,2,...,2n–1.

Table 1.

Example of a State Transition Table for a BN

| Current states | 000 | 001 | 010 | 011 | 100 | 101 | 110 | 111 |

| Next states | 000 | 000 | 100 | 000 | 010 | 010 | 110 | 010 |

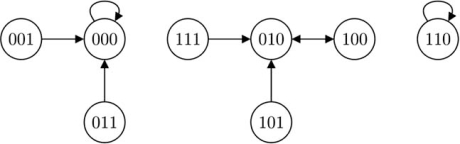

To obtain the state transition diagram of a BN, we draw 2n vertices, each representing a possible state, and connect two vertices by a directed edge if one state transits to the other based on the state transition table. If a state transits to itself, the edge points to itself. Fig. (4) is the state transition diagram based on Table 1.

Fig. (4).

State transition diagram of a Boolean network.

Similarly for a PBN, when gene perturbation rate p=0, we can draw its state transition diagram by combining the state transition diagrams of its component BNs. Now each edge has a probability attached to it, representing the possibility of one state transiting to the other. For example, if a PBN is composed of two BNs, where the first BN has state transitions shown in Table 1, and the second BN has state transitions shown in Table 2, and their selection probabilities are c1 and c2 = 1-c1 respectively, then when p = 0, the PBN's state transition diagram is shown in Fig. (5). When p > 0 , a state transition can either be driven by some network function or by random gene perturbations, and we may refer to its state transition matrix T when constructing the state transition diagram. It should be noted that the sum of probabilities of all the edges exiting a vertex should always be 1.

Table 2.

State Transition Table for the Second BN in a PBN

| Current states | 000 | 001 | 010 | 011 | 100 | 101 | 110 | 111 |

| Next states | 001 | 001 | 101 | 001 | 010 | 010 | 110 | 010 |

Fig. (5).

Example of state transition diagram of a probabilistic Boolean network when gene perturbation rate p = 0.

The following is a simple algorithm for finding the attractors of BN based on the state transition table (using integer representation of the states).

Algorithm 1 (Finding attractors)

Generate an array a of size 2n, and initialize all ai'S to 0. ai corresponds to state i–1.

Search for singleton attractors. For each state i between 0 and 2n–1, look up the (i+1) -th entry in the state transition table for its next state j. If j = 1, then j is a singleton attractor, set aj+1 :=1.

Search for attractor cycles. For each state i between 0 to 2n-1, if ai+1=0, look up the state transition table repeatedly for the successor states of i, such that i→j→k... until a singleton attractor or an attractor cycle is reached. If an attractor cycle is reached, save the cycle states and set the corresponding elements in a to 1.

4.2. Simulating a Dynamic System

A common practice with a Boolean model defined on V = {x1,...,xn} is to generate time sequence data. x(0),x(1),x(2),.... A direct method is to start from an initial state x(0), and plug in the Boolean functions repeatedly to find the subsequent states (for BNs and PBNs), sometimes taking into consideration network switches and gene perturbations (for PBNs).

An alternative way, which is more efficient when simulation time is long , is to utilize the information of state transition diagram (encoded in the state transition table) or transition probability matrix T. For BN, it entails converting the current state x(t) to an integer, and looking up the state transition table or matrix T for the next state x(t+1). For PBN, one can start from a randomly chosen initial state and a randomly chosen initial network (from r BNs), and follow either of the two protocols below. Note that we follow the notations in Definition 2 and use p, q to denote the random gene perturbation rate and network switching probability, respectively. Network selection probabilities are denoted by c1,...,cr.

Table-lookup and real-time computation based method. Construct r state transition tables for the r component BNs respectively (letting gene perturbation rate p = 0). At any time t, if at the k -th network, generate n independent [0,1] uniformly distributed random numbers p1,...,pn. If pi<p, flip xi(t) to get (no gene perturbation), convert x(t) to integer and look up the k -th state transition table to find x(t+1). Finally, generate a [0,1] uniformly distributed random number qs and compare to q to decide if the system will switch network at t+1; if switch will occur, choose from the r networks according to the selection probabilities.

T matrix based method. Compute the transition probability matrix T. If dec(x(t))=i–1, generate a [0,1] uniformly distributed random number pt. If (til is the (i,l) element of T), then convert j–1 to a n -bit vector s (dec(s)=j–1) and the next state is x(t+1)=S.

One other issue of simulating a PBN is the choice of parameters p and q. As stated in Section 2, network switching probability q does not affect the probability of being at any constituent BN, and in theory we can choose any value for q; however, we prefer to choose small q because in a biological system, switching network corresponds to the change of context (reflecting a change of regulatory paradigm, either caused by environment change or internal signals), which should not occur very often. Moreover, if q is large, or even q=1, then network switching is frequent, and a short time sequence of data x(t1),x(t1+1),...,x(t2) are more likely to come from several BNs instead of from one single BN. This may pose a difficulty if we try to identify the underlying PBN and its component BNs from the sequence data [32]. On the other hand, if q is too small, and the number of BNs in the PBN is large, it will take too long a time to obtain the steady-state distribution by simulation method. Usually p should be small to reflect the rarity of random gene perturbation, and we let p <<q. Also, small p is helpful if the generated sequence data will be used as artificial time-series data for the identification the underlying PBN and its component BNs. However, if p is too small, it will take longer to obtain the steady-state distribution. Usually, we can choose q = 0.01 ~ 0.2 and p = 0.01% ~ 0.5%.

4.3. Obtaining the Steady-State Distributions

4.3.1. Power Method

As discussed in Section 3.1, a PBN possesses a steady-state distribution when q, p > 0 [3]. By definition, this distribution π* is the solution to linear equations π = π.T with constraint is unique and can be estimated by iteration, given the transition probability matrix T (assuming n genes, and N = 2n ).

Algorithm 2 (Finding steady-state distribution)

Set δ* and generate an initial distribution ; Let k:=0.

-

DO

Compute π(k+1):=π(k).T;

k: = k+1;

UNTIL (δ<δ*)

π* :=π(k).

Note that ||.|| can be any norm, such as ||.||∞.

When the number of BNs in a PBN is large and some BNs have small selection probabilities, an approximation method for constructing T is proposed in [33]. In the approximation, is computed instead of T, which ignores r0 BNs whose selection probabilities ck1,...,ckr0 are less than a threshold value ε,

| (44) |

| (45) |

where Tj (1≤ j≤ r) and are defined as in Eqs. (7) and (8). If is used in place of T, and the solution for , the expected relative error in steady-state distribution is shown to be bounded by O(ε) [33]

| (46) |

The following is an alternative method of obtaining steady-state distribution. If we are interested in the attractors only, knowing that the majority of the steady-state probability mass is on attractors if p is small, we may apply the Markov chain based analytic method in Section 3.2.

4.3.2. Monte Carlo Simulation Method [34]

This method requires generation of a long time sequence of data, x(0),x(1),...,x(T), such that the frequencies of all the possible 2n states approach the steady-state distribution. In a given n -gene PBN with gene perturbation rate p, the smaller p is and the larger n is, the longer it takes to converge to the steady-state distribution. In general, we need to simulate at least 10.2n.p-1 steps.

To estimate when the PBN has converged to its steady-state distribution, we can use the Kolmogorov-Smirnov test. The basic idea of Kolmogorov-Smirnov test is to measure the closeness of an empirical probability distribution to the theoretical distribution. Since the latter (steady-state distribution) is unknown in this case, we will test the closeness of two empirical distributions.

To get two quasi-i.i.d (independently and identically distributed) samples in PBN, we select two samples , and the Kolmogorov-Smirnov statistic is defined as

| (47) |

In the definition, the maximum is over the state space {0,1}n, and 1[00...0,s](x) is an indicator function whose output equals 1 if and only if , and the output equals 0 otherwise.

4.4. Generating Artificial BNs with Prescribed Attractors [35]

In a simulation study of Boolean models, it is often necessary to create artificial networks with certain properties. Of special interest is the problem of generating artificial BNs with a given set of attractors, since attractors are hypothesized to correspond to cellular phenotypes and play an important role in the long term behavior of Boolean models.

First, note that the state transition diagram can be partitioned into level sets, where level set lj consists of all states that transit to one of the attractors in exactly j steps, and the attractors belong to the level set l0.

Problem formulation [35] Given a set of n nodes V={x1,...,xn}, a family of n subsets with 0<k≤|Pi|≤K, a set A of d states (binary vectors of n bits), and integers l,L satisfying 0<l<L, we will construct a BN defined on V, which satisfies the following constraints: the set of regulators of node xi is Pi (P={P1,...,Pn} is called the regulator set of V), the attractors are A1,...,Am such that , and the BN has between l and L level sets.

Specifically, if we are interested in constructing a BN with only singleton attractors, its state transition diagram will be a d -forest (containing d single-rooted trees) if the BN has d singleton attractors. The following theorem gives the number of all possible state transition diagrams that only contain singleton attractors (the proof can be found in [35]).

Theorem 4 The cardinality of the collection of all forests on N vertices is (N+1)N-1.

Since N=2n and the number of all possible state transition diagrams are NN, when n is large, the ratio (N+1)N-1/NN is asymptotically e/2n, thus a brute force search has a low success rate.

Assuming only singleton attractors are allowed, the following algorithm is for solving the search problem formulated above. A second algorithm is also given in [35], but shown to be less efficient.

Algorithm 3 (Generating artificial Boolean network)

Randomly generate or give in advance a set A of d states (as singleton attractors).

Randomly generate a predictor set P, where each Pi has k to K nodes. If Step 2 has been repeated more than a pre-specified number of times, go back to Step 1.

Check if the attractor set A is compatible with P, i.e. only the attractors (each transits to itself) of the state transition diagram are checked for compatibility against P. If not compatible, go back to Step 2.

Fill in the entries of the truth tables that correspond to the attractors generated in Step 1. Using the predictor set P and randomly fill in the remaining entries of the truth table. If Step 4 has been repeated more than a pre-specified number of times go back to Step 2.

Search for cycles of any length in the state transition diagram D based on the truth table generated in Step 4. If a cycle is found go back to Step 4, otherwise continue to Step 6.

If D has less than l or more than L level sets go back to Step 4.

Save the generated BN and terminate the algorithm.

5. CLOSING WORDS

This paper has presented the following analysis and simulation issues of Boolean networks and probabilistic Boolean networks, which are models for gene regulatory networks.

Analysis. An important aspect of Boolean models is that they can be viewed as homogeneous Markov chains; for a PBN, when the network switching probability q > 0 and gene perturbation rate p > 0, it possesses a steady-state distribution. Markov analysis serves as a basis for finding the steady-state probabilities of attractors and for proving the equivalence of PBN and dynamic Bayesian networks. Finally, a structural analysis is provided, where quantitative measures of gene-to-gene relationships are introduced, and the effect of perturbation on Boolean functions are analyzed.

Simulation. Central to the simulation of Boolean models is the use of state transition diagram and transition probability matrix. In network simulation, different methods are presented and simple guidelines of parameter selection are provided. To test the convergence of a simulated PBN to its steady-state distribution, we can employ Kolmogorov-Smirnov statistic. Lastly, an algorithm for generating artificial BNs with prescribed attractors is presented.

To find more references on Boolean models, and obtain a MATLAB toolbox for BN/PBN, readers can go to the following website, http://personal.systemsbiology.net/ilya/PBN/PBN.htm. Another online source of papers is http://gsp.tamu.edu/Publications/journal-publications.

ACKNOWLEDGEMENT

The author thanks Yuping Xiao for giving valuable critique on the initial manuscript. The anonymous reviewers' insightful comments have helped the author's revision considerably.

REFERENCES

- 1.Kauffman SA. The origins of order self-organization and selection in evolution. New York: Oxford University Press; 1993. [Google Scholar]

- 2.Shmulevich I, Dougherty ER, Kim S, Zhang W. Probabilistic Boolean networks: a rule-based uncertainty model for gene regulatory networks. Bioinformatics. 2002;18:261–274. doi: 10.1093/bioinformatics/18.2.261. [DOI] [PubMed] [Google Scholar]

- 3.Shmulevich I, Dougherty ER, Zhang W. Gene perturbation and intervention in probabilistic Boolean networks. Bioinformatics. 2002;18:1319–1331. doi: 10.1093/bioinformatics/18.10.1319. [DOI] [PubMed] [Google Scholar]

- 4.Albert R, Othmer HG. The topology of the regulatory interactions predicts the expression pattern of the segment polarity genes in Drosophila melanogaster. J. Theor. Biol. 2003;223:1–18. doi: 10.1016/s0022-5193(03)00035-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Huang S. Gene expression profiling, genetic networks, and cellular states: an integrating concept for tumorigenesis and drug discovery. J. Mol. Med. 1999;77:469–480. doi: 10.1007/s001099900023. [DOI] [PubMed] [Google Scholar]

- 6.Datta A, Choudhary A, Bittner ML, Dougherty ER. External control in Markovian genetic regulatory networks. Mach. Learn. 2003;52:169–191. doi: 10.1093/bioinformatics/bth008. [DOI] [PubMed] [Google Scholar]

- 7.Shmulevich I, Dougherty ER, Zhang W. From Boolean to probabilistic Boolean networks as models of genetic regulatory networks. Proc. IEEE. 2002;90:1778–1792. [Google Scholar]

- 8.Dougherty ER, Xiao Y. Design of probabilistic Boolean networks under the requirement of contextual data consistency. IEEE Trans. Signal Processing. 2006;54:3603–3613. [Google Scholar]

- 9.Zhang S-Q, Ching W-K, Ng MK, Akutsu T. Simulation study in probabilistic Boolean network models for genetic regulatory networks. Int. J. Data Min. Bioinform. 2007;1(3):217–240. doi: 10.1504/ijdmb.2007.011610. [DOI] [PubMed] [Google Scholar]

- 10.Çinlar E. Introduction to stochastic processes. 1st ed. Englewood Cliffs NJ: Prentice Hall; 1997. [Google Scholar]

- 11.Brun M, Dougherty ER, Shmulevich I. Steady-state probabilities for attractors in probabilistic Boolean networks. EURASIP J. Signal Processing. 2005;85:1993–2013. [Google Scholar]

- 12.Friedman N, Linial M, Nachman I, Pe’er D. Using Bayesian networks to analyze expression data. J. Comput. Biol. 2000;7:601–620. doi: 10.1089/106652700750050961. [DOI] [PubMed] [Google Scholar]

- 13.Ideker T, Thorsson V. Discovery of regulatory interactions through perturbation: inference and experiment design. Pac. Symp. Biocomput. 2000;5:302–313. doi: 10.1142/9789814447331_0029. [DOI] [PubMed] [Google Scholar]

- 14.Xiao Y, Dougherty ER. Optimizing consistency-based design of context-sensitive gene regulatory networks. IEEE Trans. Circ. Syst. I. 2006;53:2431–2437. [Google Scholar]

- 15.Lähdesmäki H, Hautaniemi S, Shmulevich I, Yli-Harja O. Relationship between probabilistic Boolean networks and dynamic Bayesian networks as models for gene regulatory networks. Signal Processing. 2006;86:814–834. doi: 10.1016/j.sigpro.2005.06.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Datta A, Pal R, Dougherty ER. Intervention in probabilistic gene regulatory networks. Curr. Genomics. 2006;1(2):167–184. [Google Scholar]

- 17.Datta A, Pal R, Choudhary A, Dougherty ER. Control approaches for probabilistic gene regulatory networks. IEEE Signal Processing Mag. 2007;24(1):54–63. [Google Scholar]

- 18.Shmulevich I, Lähdesmäki H, Dougherty ER, Astola J, Zhang W. The role of certain Post classes in Boolean network models of genetic networks. PNAS. 2003;100(19):10734–10739. doi: 10.1073/pnas.1534782100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Szallasi Z, Liang S. Modeling the normal and neoplastic cell cycle with realistic Boolean genetic networks: their application for understanding carcinogenesis and assessing therapeutic strategies. Pac. Symp. Biocomput. 1998;3:66–76. [PubMed] [Google Scholar]

- 20.Martins-Jr DC, Hashimoto RF, Braga-Neto U, Bittner ML, Dougherty ER. Intrinsically multivariate predictive genes. IEEE J. Select. Topics Signal Processing. 2008;2:424–439. [Google Scholar]

- 21.Kauffman S, Peterson C, Samuelsson B, Troein C. Genetic networks with canalizing Boolean rules are always stable. PNAS. 2004;101(49):17102–17107. doi: 10.1073/pnas.0407783101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Aldana M, Cluzel P. A natural class of robust networks. PNAS. 2003;100(15):8710–8714. doi: 10.1073/pnas.1536783100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Reichhardt CJO, Bassler KE. Canalization and symmetry in Boolean genetic regulatory networks. Journal of Physics A: Math. Theor. 2007;40:4339–4350. [Google Scholar]

- 24.Choudhary A, Datta A, Bittner ML, Dougherty ER. Assignment of terminal penalties in controlling genetic regulatory networks. American Control Conference; Portland, OR. 2005. [Google Scholar]

- 25.Qian X, Dougherty ER. Effect of function perturbation on the steady-state distribution of genetic regulatory networks: optimal structural intervention. IEEE Trans. Signal Processing. 2008;56(10):4966–4975. [Google Scholar]

- 26.Qu X, Aldana M, Kadanoff LP. Numerical and theoretical studies of noise effects in the Kauffman model. J. Stat. Phys. 2002;109(516):967–985. [Google Scholar]

- 27.Shmulevich I, Dougherty ER, Zhang W. Control of stationary behavior in probabilistic Boolean networks by means of structural intervention. Biol. Syst. 2002;10:431–445. [Google Scholar]

- 28.Xiao Y, Dougherty ER. The impact of function perturbations in Boolean networks. Bioinformatics. 2007;23:1265–1273. doi: 10.1093/bioinformatics/btm093. [DOI] [PubMed] [Google Scholar]

- 29.Gershenson C, Kauffman SA, Shmulevich I. The role of redundancy in the robustness of random Boolean networks. Artificial Life X, Proceedings of the Tenth International Conference on the Simulation and Synthesis of Living Systems; 2006. available online at http://arxiv.org/abs/nlin/0511018 . [Google Scholar]

- 30.Chaves M, Albert R, Sontag ED. Robustness and fragility of Boolean models for genetic regulatory network. J. Theor. Biol. 2005;235:431–449. doi: 10.1016/j.jtbi.2005.01.023. [DOI] [PubMed] [Google Scholar]

- 31.Xiao Y. Modeling gene knockdown and pathway blockage in gene regulatory networks. Proc. IEEE Workshop on Systems Biology and Medicine, Philadelphia, PA, November. 2008.

- 32.Marshall S, Yu L, Xiao Y, Dougherty ER. Inference of a probabilistic Boolean network from a single observed temporal sequence. EURASIP J. Bioinform. Syst. Biol. 2007;1 doi: 10.1155/2007/32454. Article ID 32454, 15 pages. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Ching W-K, Zhang S, Ng MK, Akutsu T. An approximation method for solving the steady-state probability distribution of probabilistic Boolean networks. Bioinformatics. 2007;23:1511–1518. doi: 10.1093/bioinformatics/btm142. [DOI] [PubMed] [Google Scholar]

- 34.Shmulevich I, Gluhovsky I, Hashimoto R, Dougherty ER, Zhang W. Steady-state analysis of genetic regulatory networks modeled by probabilistic Boolean networks. Comp. Funct. Genomics. 2003;4:601–608. doi: 10.1002/cfg.342. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Pal R, Ivanov I, Datta A, Bittner ML, Dougherty ER. Generating Boolean networks with a prescribed attractor structure. Bioinformatics. 2005;21:4021–4025. doi: 10.1093/bioinformatics/bti664. [DOI] [PubMed] [Google Scholar]