Abstract

The Veterans Health Administration (VHA) is a leader in the development and use of electronic patient records and clinical decision support. The VHA is currently reengineering a somewhat dated platform for its Computerized Patient Record System (CPRS). This process affords a unique opportunity to implement major changes to the current design and function of the system. We report on two human factors studies designed to provide input and guidance during this reengineering process. One study involved a card sort to better understand how providers tend to cognitively organize clinical data, and how that understanding can help guide interface design. The other involved a simulation to assess how redesign modifications to computerized clinical reminders, a form of clinical decision support in the CPRS, affect the learnability of the system for first-time users.

Introduction

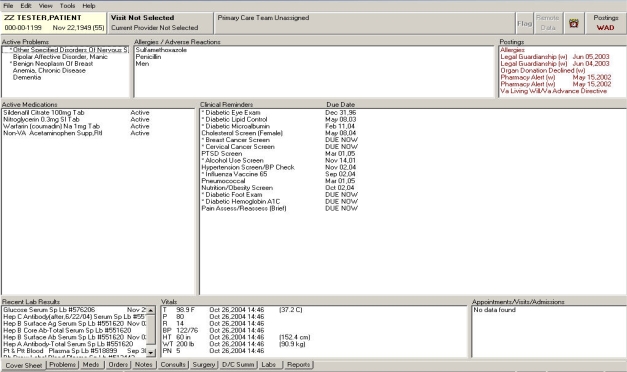

The VHA Computerized Patient Record System (CPRS) is an integrated, umbrella program with multiple dimensions of an information technology (IT) system designed to improve quality of care.1 The components of CPRS allow healthcare providers to order medications, laboratory tests, and consultations, and to document actions. CPRS uses a tab metaphor for organizing problem lists, medications, orders, progress notes, consultations, lab results, and other clinical data (Figure 1). CPRS applications provide order checking, allergy checking, notifications, and clinical reminders automatically.2 CPRS installation was nationally mandated by the VHA in 1999, and it is used in virtually all of the VHA’s medical centers and outpatient clinics.2 Because CPRS is so widely implemented, the VHA provides an ideal forum in which to study IT factors that may help promote patient care. CPRS is based on the Delphi programming language but is currently being “reengineered” to operate using Java and Java 2 Enterprise Edition (J2EE) technology. This change in platform affords a unique opportunity to design and implement new approaches to how the medical record and decision support are integrated. During this reengineering phase we are conducting studies meant to provide prompt feedback to developers, so that design changes and enhancements are supported by empirical human factors input.

Figure 1:

The VHA’s CPRS coversheet of a fictitious patient record

Human factors research and methodology, including usability and human-computer interaction (HCI), has a rich literature base outside of healthcare. However, the body of empirical work on the usability of clinical systems is smaller. Kushniruk and Patel provide a comprehensive methodological review of cognitive and usability engineering methods for evaluation of clinical information systems,3 including the importance of formative usability evaluation throughout the development process of a clinical information system in order to provide prompt and actionable feedback to developers prior to final implementation. In addition to usability testing, other forms of usability evaluation of clinical systems have included, for example, cognitive walkthrough or cognitive task analysis,4 and heuristic evaluation.5 Card sorting, a method used in this paper, is another usability approach that can help designers understand how users cognitively organize information and how that should inform information system design.6

In this paper we focus on two design issues: (1) a model for organizing the large amount of clinical information in the medical record and (2) enhancing the usability of the clinical reminders (CRs), the primary form of clinical decision support in CPRS.

For organizing the clinical information in the medical record, the question of whether to display clinical documents in several tabs, as in the current system (see bottom of Figure 1), or in a single consolidated document tab (Figure 2) has been debated for the CPRS reengineering. We conducted a card-sorting exercise to collect empirical data on this issue and to understand how physicians prefer to cognitively organize clinical data when using the medical record.

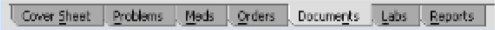

Figure 2:

Consolidated clinical ‘Documents’ tab with progress notes, consultation reports, surgery reports, and discharge summaries

For enhancing the usability of the clinical reminders, we introduced design enhancements derived from previous research7–10 meant to increase the learnability of the clinical reminders for new users, and conducted a simulation study to test these design modifications. The simulation results presented here come from the exploration phase of a larger study meant to evaluate user performance, workload, and usability of a CPRS redesign.11 We present results from the portion of this study meant to assess factors influencing the learnability of the system for first-time users.

Methods

Participants

Six resident physicians participated in the card sorting experiment, and 16 nurses participated in the simulation study. We recruited physicians for the card sorting experiment because physicians generally need to access a wider variety of clinical data in the CPRS than nursing personnel do (e.g., laboratory results, consultations, imaging). Resident physicians were chosen for this exercise because they have some exposure to CPRS through previous VA rotations, but do not use it frequently or regularly enough to be biased toward the current CPRS design. We limited the participants for the simulation study to nursing personnel because their clinical workflow and CR interaction differ greatly from those of physicians; we planned a separate, future simulation study for physicians. The nursing participants were all experienced with patient check-in/intake in outpatient clinic settings, but had no experience with the VHA’s CPRS software, so that they would not be biased toward one design over the other.

Apparatus

Both the card sorting experiment and the simulation study were conducted at the Veterans Administration (VA) Getting at Patient Safety (GAPS) Center’s Usability Laboratory, located at the Cincinnati VA Medical Center. For the card sorting experiment, participants performed the card sorting task on an empty table. For the simulation, participants used a clinical computer workstation (PC, Windows 2000, 17” monitor). The experimenter’s station consisted of media recording devices and a slave LCD TV monitor to observe the participant’s screen. We recorded audio and two video sources, one of the computer screen interaction and one of the participant’s face. The direct computer image was recorded using a video graphics array (VGA) splitter. All media sources were synchronized and recorded together on Mini-Digital Video tapes.

Card Sort

A card-sorting experiment was conducted to explore how physicians prefer to cognitively organize clinical data from a patient chart. Card sorting is a usability technique for investigating how people group items, which has implications for computer interface design. See Rugg and McGeorge for a comprehensive overview on card sorting methods.6 Each of six residents was given a stack of index cards that contained data from a comprehensive fictitious patient record, including problem list/past medical history, medications, allergies, orders, tasks, lab results, progress notes, consults, reports, and other clinical data one would expect to find in a complete patient record. Residents were asked to organize the cards into groupings that reflected their preferred organization of the data.

Simulation

The redesigned prototype was programmed in Hyper Text Markup Language (HTML) as a low-fidelity mock-up. That is, screen captures of the current design were used as a visual base and then redesigned. Links and buttons were made interactive to mimic the actual function of how the clinical reminders would work if fully programmed. To enable us to compare the redesign with the way the current system functions, we also “prototyped” the current system in the same fashion so that both designs were at the same simulation fidelity level.

The redesigned prototype (B) differed from the current design (A) in the following ways: (1) In design B, CRs were prefaced with a ‘P’ or ‘N’ for primary care provider or nurse to clarify who was responsible for attending to the reminder, (2) CR dialog boxes were accessible directly from the cover sheet via a single mouse click rather than through the new progress note submenu, and (3) CR dialog box formats were standardized; information was displayed as What, When, Who, and More Information. Figure 3 shows this standardization of format for an example clinical reminder: Influenza screening.

Figure 3:

Comparison of design A and B CR dialog box for influenza vaccine. Design B (redesign) is on the bottom

Each of the 16 nursing participants was introduced to designs A and B, in a counter-balanced fashion (i.e., participant 1 used design A first, participant 2 used design B first, etc.). Designs A and B were presented on the computer workstation. Each participant was given brief written instructions to satisfy a Pain Screening CR for each design, with relevant patient information necessary to satisfy the CR. Time to satisfy a single Pain Screening CR without prior training was recorded, with a maximum time limit of five minutes per design. We used this time measurement as an a priori measure of learnability for first-time users. To test if design B would be more “learnable” than A, we used a t-test to compare the time to complete a CR with A and B from the exploration session. We used only the data from the first design each participant was presented to control for the carryover learning effect.
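The alternating assignment described above can be sketched as follows. This is a minimal illustration rather than the procedure actually used in the study: the function name `assign_first_design` and the participant numbering are assumptions, and only the alternation rule (participant 1 starts with design A, participant 2 with design B, and so on) comes from the text.

```python
def assign_first_design(n_participants):
    """Return {participant_number: (first_design, second_design)}.

    Hypothetical helper: alternates the starting design so that half of
    the participants see design A first and half see design B first.
    """
    orders = {}
    for participant in range(1, n_participants + 1):
        # Odd-numbered participants start with A, even-numbered with B.
        if participant % 2 == 1:
            orders[participant] = ("A", "B")
        else:
            orders[participant] = ("B", "A")
    return orders


orders = assign_first_design(16)
a_first = [p for p, order in orders.items() if order[0] == "A"]
b_first = [p for p, order in orders.items() if order[0] == "B"]
print(len(a_first), len(b_first))
```

With 16 participants this yields eight per starting design, which is what allows the between-group comparison to use only each participant's first design.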

Results

Card Sort

Five of six residents organized the data into separate groupings for progress notes, consults, reports, and discharge summaries. Four of those residents created a separate grouping for each of the document types. The fifth preferred grouping consults from other physicians with the physician progress notes, but created separate groupings for the other document types.

Relevant quotes during debrief.

○ “I don’t want other documents mixed with my day to day notes, I want it separated, I don’t want to separate them myself.”

○ “I prefer these [documents] are pre-separated. I don’t want to have to separate them myself.”

One of six residents preferred organizing all of the clinical documents together.

Relevant quotes during debrief.

○ “I like seeing everything clumped together in a time-based view and then sort from there.”

Of the five residents who preferred separate organization of the clinical documents, three residents preferred even greater separation and grouped all non-physician notes and consults into an “ancillary staff documentation” grouping.

Simulation

For the eight individuals who used design A first, only one participant was able to complete the CR task within the five minute limit. Conversely, for those eight individuals who used design B first, only one participant did not complete the CR task within the five minute limit. A two-tailed t-test shows that time in seconds to satisfy a CR with design B (M = 141, SD = 84.6) was significantly less than time with design A (M = 286, SD = 40.3), t(14) = 4.37, p < .001.
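As a consistency check, the reported t statistic can be approximately recomputed from the summary statistics alone. The sketch below assumes a pooled-variance (Student's) independent-samples t-test with eight participants per group; the text does not name the variant, but the reported 14 degrees of freedom are consistent with that assumption. The function name `pooled_t_from_summary` is hypothetical.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t (equal variances assumed) from group summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_err = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / std_err, df


# Reported values: design A (M = 286, SD = 40.3), design B (M = 141, SD = 84.6).
# n = 8 per group is assumed from the eight participants who saw each design first.
t_stat, df = pooled_t_from_summary(286, 40.3, 8, 141, 84.6, 8)
print(f"t({df}) = {t_stat:.2f}")
```

Under these assumptions the statistic comes out at about 4.38 on 14 degrees of freedom, in line with the reported t(14) = 4.37; the small difference is plausibly due to rounding in the reported means and standard deviations.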

Discussion

With the increasing complexity of medical care, and with multiple providers and systems, the innovative use of information technology (IT) can help assemble accurate medical information to provide effective care and sound clinical decision-making.12 A major challenge for health care organizations is to better align the health information system with healthcare processes within the organization. There are multiple human factors or process engineering issues to consider in the design and testing of an integrated IT system to improve care delivery.12

When clinicians are faced with high workload and extreme time pressure, as in a typical clinic, they must be able to use the supporting clinical information systems during a patient visit without being hindered by suboptimal design. Modest changes to the user interface in clinical information systems can have an important and clinically significant impact on human performance, even as users are learning systems, as we demonstrated empirically with the simulation comparison of the current and redesigned prototypes for satisfying CRs. The redesigned CR interface, with only modest changes, significantly increased learnability for first-time users as measured by time to complete the first CR. Further, formative assessment of design alternatives with human factors methods, such as a card sorting task, can provide user data to help guide design prior to implementation. For example, the results of the card sort reported in this paper support a customizable view for the “reengineered” CPRS that allows each user to specify a preferred way to organize and present the clinical documents.

Our findings suggest that an initial view should keep clinical documents organized in separate tabs for each document type, as in the current design of CPRS. However, one potential limitation of this approach is that we did not do an exhaustive brainstorming or envisioned world discovery process in order to identify all possible approaches to reorganizing clinical information in the electronic medical record. Such an approach might allow testing of multiple data organization options in order to better integrate the health information into optimal work flow and practice. Also, the results reported in this paper relate to nurses’ use of CRs and resident physicians’ organization of clinical information. Because clinical workflow differs substantially between user groups, these results cannot necessarily be extrapolated from one group to another.

Conclusion

Human factors methods should be routinely used to rapidly collect empirical data to support design decisions formatively (i.e., prior to implementation) and throughout the redesign of a complex health information system. This is a model that is still not widely adopted when implementing new information systems. We reported on two such formative human factors studies meant to provide rapid feedback for the CPRS-reengineering effort. Adopting human factors input early and iteratively into clinical information system development can improve user performance and usability, as well as reduce cost by addressing important human-computer interaction considerations pre-implementation, when the cost to redesign is much lower than it is post-implementation.

Acknowledgments

The authors would like to thank Mike Hendry for reviewing this paper for accuracy and providing helpful suggestions. This research was supported by the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service (TRX 02-216) and the VA Office of Information, and also partially supported by HSRD Center grant #HFP 04-148. A VA HSR&D Advanced Career Development Award supported Dr. Asch and a VA HSR&D Merit Review Entry Program Award supported Dr. Patterson. The views expressed in this article are those of the authors and do not necessarily represent the view of the Department of Veterans Affairs.

References

- 1. Doebbeling BN, Vaughn TE, McCoy KD, Glassman P. Informatics implementation in the Veterans Health Administration (VHA) healthcare system to improve quality of care. Proc AMIA Symp. 2006:204–8.

- 2. Brown SH, Lincoln MJ, Groen PJ, Kolodner RM. VistA – U.S. Department of Veterans Affairs national-scale HIS. Int J Med Inf. 2005;69:135–56. doi: 10.1016/s1386-5056(02)00131-4.

- 3. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37:56–76. doi: 10.1016/j.jbi.2004.01.003.

- 4. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer-assisted clinical ordering. J Biomed Inform. 2003;36:4–22. doi: 10.1016/s1532-0464(03)00062-5.

- 5. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform. 2003;36:23–30. doi: 10.1016/s1532-0464(03)00060-1.

- 6. Rugg G, McGeorge P. The sorting techniques: A tutorial paper on card sorts, picture sorts and item sorts. Expert Systems. 1997;14(2):80–93.

- 7. Fung CH, Woods JN, Asch SM, Glassman P, Doebbeling BN. Variation in implementation and use of computerized clinical reminders in an integrated healthcare system. Am J Manag Care. 2004;10(11 Part 2):878–85.

- 8. Militello L, Patterson ES, Tripp-Reimer T, et al. Clinical reminders: Why don’t they use them? Proceedings of the Human Factors and Ergonomics Society 48th Annual Meeting; 2004. pp. 1651–5.

- 9. Patterson ES, Nguyen AD, Halloran JP, Asch SM. Human factors barriers to the effective use of ten HIV clinical reminders. J Am Med Inform Assoc. 2004;11(1):50–9. doi: 10.1197/jamia.M1364.

- 10. Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SM. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005;12(4):438–47. doi: 10.1197/jamia.M1777.

- 11. Saleem JJ, Patterson ES, Militello L, et al. Impact of clinical reminder redesign on learnability, efficiency, usability, and workload for ambulatory clinic nurses. J Am Med Inform Assoc. 2007;14(5). In press.

- 12. Doebbeling BN, Chou AF, Tierney WM. Priorities and strategies for the implementation of integrated informatics and communications technology to improve evidence-based practice. J Gen Intern Med. 2006;21:S50–7. doi: 10.1111/j.1525-1497.2006.00363.x.