Abstract

A software system to provide intuitive navigation for MRI-guided robotic transperineal prostate therapy is presented. In the system, the robot control unit, the MRI scanner, and the open-source navigation software are connected via Ethernet to exchange commands, coordinates, and images using an open network communication protocol, OpenIGTLink. The system has six states called “workphases” that provide the necessary synchronization of all components during each stage of the clinical workflow, and the user interface guides the operator linearly through these workphases. On top of this framework, the software provides the following features for needle guidance: interactive target planning; 3D image visualization with the current needle position; and treatment monitoring through real-time MR images of needle trajectories in the prostate. These features are supported by calibration of robot and image coordinates by fiducial-based registration. Performance tests show that the registration error of the system was 2.6 mm within the prostate volume. Registered real-time 2D images were displayed 1.97 s after the image location was specified.

Keywords: Image-guided therapy, MRI-guided intervention, Prostate brachytherapy, Prostate biopsy, MRI-compatible robot, Surgical navigation

1. Introduction

Magnetic resonance imaging (MRI) has been emerging as a guidance and targeting tool for prostate interventions, including biopsy and brachytherapy [1–3]. The main benefit of using MRI for prostate interventions is that it can delineate sub-structures of the prostate as well as surrounding critical anatomical structures such as the rectum, bladder, and neurovascular bundle. An early study by D’Amico et al. showed that MRI-guided prostate brachytherapy is possible using an open-configuration 0.5 T MRI scanner [4,5]. In that study, intra-operative MRI was used for on-site dosimetry planning as well as for guiding brachytherapy applicators to place radioactive seeds at the planned sites. Intra-operative MRI was particularly useful for visualizing the placed seeds, enabling the clinicians to update the dosimetry plan and place subsequent seeds according to the updated plan. A 1.5 T closed-bore scanner was used in the study by Susil et al., where prostate brachytherapy was performed by transferring the patient out of the bore for seed implantation and back into the bore for imaging [6]. Krieger et al. and Zangos et al. reported approaches that keep the patient inside the bore and insert needles using a manual needle driver [7] and a robotic driver [8], respectively. Robotic needle drivers continue to be a popular choice for enabling MRI-guided prostate intervention in closed-bore scanners [9–13]. While the usefulness of robotic needle drivers has been a focus of discussion in the abovementioned studies, the software integration strategy and solutions that enable seamless integration of a robot with MRI have not been well documented. It is well known from the literature on MRI-guided robots for organs other than the prostate [14,15] that the precision and efficacy of MRI-guided robotic therapy are best achieved by careful integration of control and navigation software with the MRI scanner for calibration, registration, and scanner control. Such an effort is underway in a related preliminary study, subcomponents of which have been reported in part elsewhere [16].

The objective of this study is to develop and validate an integrated control and navigation software system for MRI-guided prostate intervention using a pneumatically actuated robot [16], with emphasis on image-based calibration of the robot to the MRI scanner. Unlike the related studies, the calibration method presented here does not require any operator intervention to identify fiducial markers; instead, it performs calibration automatically using a Z-shaped frame marker. The user interface and workflow management were designed based on a thorough analysis of the clinical workflow of MRI-guided prostate intervention. The validation study included accuracy assessment of the on-line calibration and imaging.

2. Materials and methods

2.1. Software system overview

The software system consists of three subcomponents: (a) control software for the needle placement robot (Fig. 1), (b) software to control a closed-bore whole body 3 T MRI scanner (GE Excite HD 3T, GE Healthcare, Chalfont St. Giles, UK), and (c) open-source surgical navigation software (3D Slicer, http://www.slicer.org/) [17] (Fig. 2). The core component of the software system is 3D Slicer, running on a Linux-based workstation (Sun Java Workstation W2100z, Sun Microsystems, CA), which serves as an integrated environment for calibration, surgical planning, image guidance, and device monitoring and control. 3D Slicer communicates with the other components through 100 Base-T Ethernet to exchange data and commands using an open network communication protocol, OpenIGTLink [18]. We developed a software module in 3D Slicer that offers the features specifically required for MR-guided robotic prostate intervention, as follows: (1) management of the ‘workphase’ of all components in the system; (2) treatment planning by placing target points on the pre-operative 3D images loaded into 3D Slicer, and robot control based on the plan; (3) registration of the robot and patient coordinate systems; (4) integrated visualization of the real-time 2D image, the pre-operative 3D image, and the current needle position in the 3D viewer of 3D Slicer.

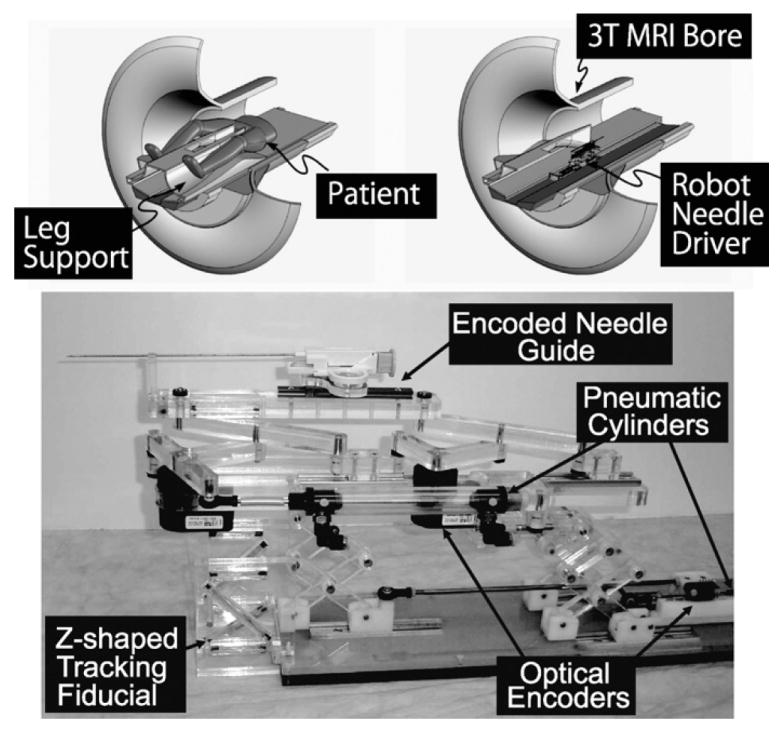

Fig. 1.

A robot for transperineal prostate biopsy and treatment [16]. Pneumatic actuators and optical encoders allow the robot to operate inside a closed-bore 3 T MRI scanner. A Z-shaped fiducial frame was attached for calibration.

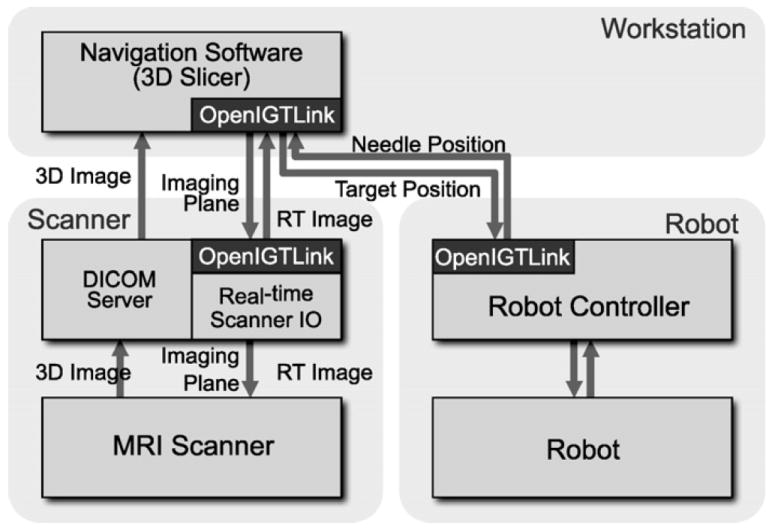

Fig. 2.

The diagram shows communication data flow of the proposed software system.

2.2. Workphases

We defined six states of the software system called ‘workphases,’ reflecting the six phases of the clinical workflow for prostate intervention using the robotic device: START-UP, PLANNING, CALIBRATION, TARGETING, MANUAL, and EMERGENCY. The workphase determines the behavior of the software system that is required for each clinical phase. Each component switches its workphase according to a command received from 3D Slicer, so the phases of all components are always synchronized. Details of each workphase are described below:

START-UP. The software system is initialized. Meanwhile, the operator prepares the robot by connecting the pneumatic system to pressurized air, connecting the device to the control unit, and attaching a sterilized needle driver kit and needles to the robot. The needle is adjusted to a pre-defined home position of the robot. The imaging coil is attached to the patient, who is then positioned in the scanner.

PLANNING. Pre-procedural 3D images, including T1- and T2-weighted images, are acquired and loaded into the 3D Slicer. Target points for needle insertions are interactively defined on the pre-operative images.

CALIBRATION. The transformation that registers robot coordinates to patient coordinates is calculated by acquiring images of the Z-shaped fiducial frame. This calibration procedure is performed for every intervention. Once the robot is calibrated, the robot control unit and 3D Slicer exchange target positions and the current position of the needle. Details of the Z-shaped fiducial frame are described in Section 2.4.

TARGETING. A current target is selected from the targets defined in the PLANNING workphase, and sent to the robot control unit. The robot moves the needle to the target while transmitting its current position to the 3D Slicer in real-time. After the needle guide is maneuvered to the desired position, the needle is manually inserted along an encoded guide to the target lesion. The insertion process is monitored through semi real-time 2D images, which are automatically aligned to a plane along the needle axis.

MANUAL. The operator can directly control the robot position remotely from 3D Slicer. The system enters this workphase when the needle position needs to be adjusted manually.

EMERGENCY. As soon as the system enters this workphase, all robot motion is halted and the actuators are locked to prevent unwanted motion and to allow manual needle retraction. This is an exceptional workphase provided as a safety consideration.

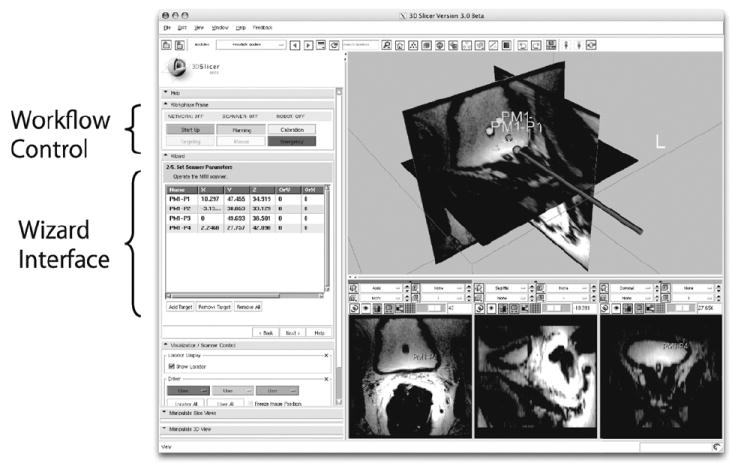

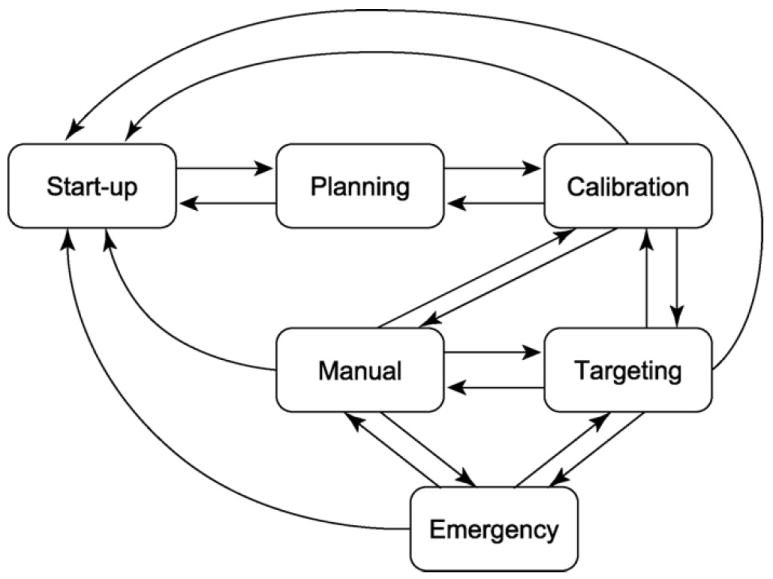

The transitions between workphases are invoked by the operator using a graphical user interface (GUI) within 3D Slicer. This wizard-style GUI provides one panel with 6 buttons to switch the workphase, and a second panel that only shows the functions available in the current workphase (Fig. 3). The buttons are activated or deactivated based on the workphase transition diagram (Fig. 4), in order to prevent the operator from skipping steps while going through the workphases.

Fig. 3.

The wizard-style GUI within 3D Slicer. One panel provides six buttons to switch the workphase, and a second panel shows only the functions available in the current workphase.

Fig. 4.

An example of a workphase transition diagram. The GUI only allows the transitions defined in the transition diagram in order to prevent the user from accidentally skipping necessary steps during clinical procedures.
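The button-gating behaviour described above can be sketched as a small state machine. This is a minimal illustration only: the six workphase names come from Section 2.2, but the transition table `ALLOWED`, the helper `enabled_buttons`, and the class `WorkphaseManager` are hypothetical, since the actual transitions are defined by the diagram in Fig. 4 and are not reproduced in the text. The sketch also assumes that EMERGENCY can be entered from any other workphase.

```python
from enum import Enum


class Workphase(Enum):
    START_UP = "START-UP"
    PLANNING = "PLANNING"
    CALIBRATION = "CALIBRATION"
    TARGETING = "TARGETING"
    MANUAL = "MANUAL"
    EMERGENCY = "EMERGENCY"


# Hypothetical transition table (assumption): the real table is given by Fig. 4.
ALLOWED = {
    Workphase.START_UP: {Workphase.PLANNING},
    Workphase.PLANNING: {Workphase.CALIBRATION},
    Workphase.CALIBRATION: {Workphase.PLANNING, Workphase.TARGETING, Workphase.MANUAL},
    Workphase.TARGETING: {Workphase.PLANNING, Workphase.MANUAL},
    Workphase.MANUAL: {Workphase.PLANNING, Workphase.TARGETING},
    Workphase.EMERGENCY: {Workphase.START_UP},
}


def enabled_buttons(current):
    """Return the workphases whose GUI buttons would be active."""
    targets = set(ALLOWED[current])
    if current is not Workphase.EMERGENCY:
        # Assumption: the safety workphase is reachable from every other phase.
        targets.add(Workphase.EMERGENCY)
    return targets


class WorkphaseManager:
    """Tracks the current workphase and rejects transitions that skip steps."""

    def __init__(self):
        self.current = Workphase.START_UP

    def request(self, target):
        """Switch to `target` if allowed; return True on success, False otherwise."""
        if target not in enabled_buttons(self.current):
            return False
        self.current = target
        return True
```

In a complete system, a successful `request` would also be the point at which the new workphase is sent to the robot controller and the scanner interface so that all components stay synchronized.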

2.3. Target planning and robot control

The software provides features for planning and managing a set of targets for tissue sampling in biopsy or radioactive seed implantation in brachytherapy. In the planning workphase, the user can interactively place fiducial points to define the targets on the pre-operative 3D MRI volume loaded into 3D Slicer. Other diagnostic images can also be used for planning, by registering them to the pre-operative 3D MRI volume. The fiducial points are visualized in both the 2D and 3D image views by 3D Slicer, allowing the physician to review the target distribution. The reviewed targets are then exported to the robot controller over the network. These targets can also be loaded from or saved to files on disk, allowing exchange of target data between 3D Slicer and other software.

2.4. Calibration

The integrated software system internally holds two independent coordinate systems: the image coordinate system and the robot coordinate system. The image coordinate system is defined by the MRI scanner, with its origin near the isocenter of the scanner. The robot coordinate system is defined in the robot control software, with a pre-defined origin on the robot. For integrated online control and exchange of commands among the components, one must know the transformation matrix that converts a coordinate in the robot coordinate system to the corresponding coordinate in the image coordinate system, and vice versa. The software system calibrates the robot by finding this transformation matrix using the Z-frame calibration device developed in [19] as a fiducial. The Z-frame is made of seven rigid glass tubes with 3 mm inner diameters that are filled with a contrast agent (MR Spots, Beekley, Bristol, CT) and placed on three adjacent faces of a 60 mm cube. The location and orientation of the Z-frame are automatically quantified in MRI by identifying the crossing points in a cross-sectional image of the Z-frame. Because the location and orientation of the Z-frame in the robot coordinate system are known from its design, comparing the pose of the Z-frame in the robot and image coordinate systems yields the transformation matrix between the two coordinate systems.
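The geometric idea behind locating a Z-shaped fiducial from its crossing points can be illustrated with a single "Z" motif, that is, two parallel tubes joined by a diagonal tube. The sketch below is not the algorithm of [19]; it only shows the standard similar-triangles relation, and the function name `diagonal_point` and the example tube layout are hypothetical. Because the three crossing points are collinear in the slice, the ratio of distances between them gives the fractional position along the diagonal tube, which holds for oblique slices as well.

```python
import numpy as np


def diagonal_point(p_a, p_b, p_c, diag_start, diag_end):
    """Locate where the slice cuts the diagonal tube, in Z-frame coordinates.

    p_a, p_b, p_c: image-space centers of the first parallel tube, the
        diagonal tube, and the second parallel tube (collinear in the slice).
    diag_start, diag_end: endpoints of the diagonal tube in frame coordinates,
        at the end joined to the first and second parallel tube respectively.
    """
    p_a, p_b, p_c = (np.asarray(p, dtype=float) for p in (p_a, p_b, p_c))
    # Fractional distance of the diagonal crossing between the parallel tubes.
    fraction = np.linalg.norm(p_b - p_a) / np.linalg.norm(p_c - p_a)
    start = np.asarray(diag_start, dtype=float)
    end = np.asarray(diag_end, dtype=float)
    return start + fraction * (end - start)


# Hypothetical layout on one face of a 60 mm cube (frame coordinates in mm):
# parallel tubes at x = -30 and x = +30, diagonal from (-30, 30, -30) to (30, 30, 30).
point = diagonal_point(
    p_a=(50.0, 80.0), p_b=(90.0, 80.0), p_c=(110.0, 80.0),
    diag_start=(-30.0, 30.0, -30.0), diag_end=(30.0, 30.0, 30.0),
)
```

Repeating this for the motifs on the three faces gives three points with known frame coordinates and known image coordinates, from which the pose of the frame in the image can be estimated.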

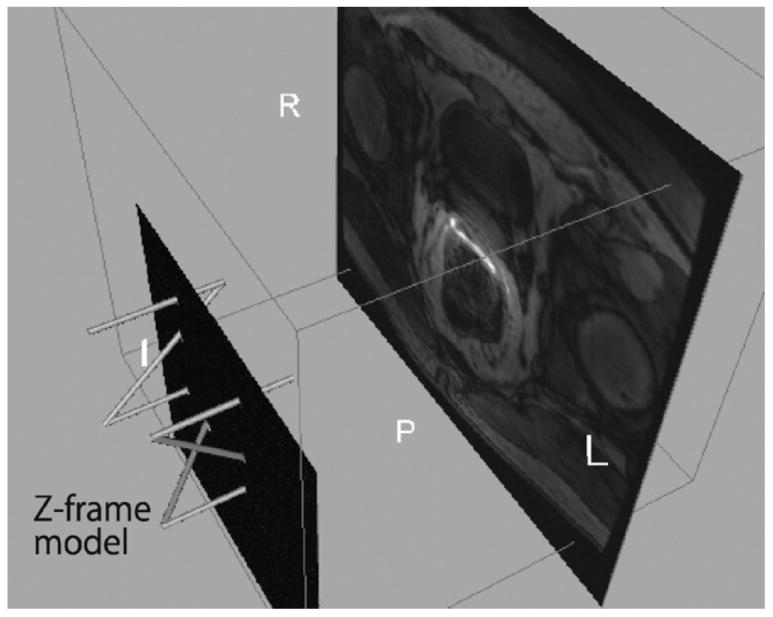

The imaging sequence for the Z-frame was a fast spoiled gradient recalled (SPGR) sequence with repetition time (TR)/echo time (TE): 34/3.8 ms, flip angle: 30°, number of excitations (NEX): 3, field of view (FOV): 160 mm. The automatic Z-frame digitization in MRI is implemented in 3D Slicer as part of the software plug-in module for robotic prostate interventions. Once the on-line calibration is completed, the three-dimensional model of the Z-frame appears in the 3D viewer of 3D Slicer (Fig. 5), allowing the user to confirm that the registration was performed correctly. The transformation matrix from the robot coordinate system to the image coordinate system is then transferred to the robot controller.

Fig. 5.

The 3D Slicer visualizes the physical relationship among the model of Z-frame, the 2D image intersecting the Z-frame, and the slice of the preoperative 3D image after the Z-frame registration. This helps the users to confirm that the Z-frame registration is performed correctly.
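As a minimal sketch of how the calibration result can be used, the robot-to-image transformation can be composed from the pose of the Z-frame in each coordinate system using 4 × 4 homogeneous matrices. The function names and the example poses below are hypothetical and chosen only for illustration; the sketch assumes rigid transformations and that both poses are expressed as "frame to system" matrices.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    t = np.eye(4)
    t[:3, :3] = rotation
    t[:3, 3] = translation
    return t


def robot_to_image(t_image_zframe, t_robot_zframe):
    """Compose T(image <- robot) = T(image <- zframe) @ inv(T(robot <- zframe))."""
    return t_image_zframe @ np.linalg.inv(t_robot_zframe)


def apply_transform(t, point):
    """Map a 3D point through a homogeneous transform."""
    return (t @ np.append(np.asarray(point, dtype=float), 1.0))[:3]


# Hypothetical poses: the Z-frame sits 50 mm along y in robot coordinates, and
# MRI localizes it rotated 90 degrees about z and shifted in image coordinates.
rot_z_90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
t_robot_zframe = make_transform(np.eye(3), [0.0, 50.0, 0.0])
t_image_zframe = make_transform(rot_z_90, [10.0, 0.0, -100.0])

t_image_robot = robot_to_image(t_image_zframe, t_robot_zframe)
# The Z-frame origin, given in robot coordinates, expressed in image coordinates.
zframe_origin_in_image = apply_transform(t_image_robot, [0.0, 50.0, 0.0])
```

The inverse of `t_image_robot` maps planned targets from image coordinates back to robot coordinates, which is the direction needed when targets are sent to the robot controller.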

2.5. Real-time MRI control and visualization

We developed proxy software on the MRI unit that allows the scan plane to be controlled from external components, e.g. 3D Slicer, and that also exports images over the network to the external component in real time. During the procedure, 3D Slicer sends the current position of the robot, obtained from the controller, to the scanner interface software, so that the MRI scanner can acquire images in planes parallel and perpendicular to the needle. The acquired image is then transferred back to 3D Slicer. The merit of importing the real-time image into 3D Slicer is that it allows 3D Slicer to extract an oblique slice of the pre-operative 3D image at the same position as the real-time image and fuse the two. This feature allows the physician to correlate the real-time image, which displays the actual needle, with a pre-operative image that provides better delineation of the target. The pulse sequences available for this purpose are fast gradient echo (FGRE) and spoiled gradient recalled (SPGR), which have been used for MR-guided manual prostate biopsy and brachytherapy [20]. Although the software system currently works with the GE Excite system, 3D Slicer can be adapted to other MRI scanner platforms by providing the necessary interface program.

The positions of the target lesion are specified on the 3D Slicer interface and transferred to the robot control unit. While the robot control unit is driving the needle towards the target, the needle position is calculated from the optical encoders and sent back to 3D Slicer every 100 ms. The imaging plane that intersects the needle’s axis is then computed by 3D Slicer and transferred to the scanner, which in turn acquires semi real-time images in that plane.
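The scan-plane computation described above can be sketched as follows. Given the needle tip and direction in image coordinates, the code builds two mutually orthogonal planes that contain the needle axis and one plane perpendicular to it, each represented by a center point and a unit normal. The function name `needle_planes` and the plane representation are assumptions made for illustration; the message format actually exchanged with the scanner is not described here.

```python
import numpy as np


def needle_planes(tip, direction):
    """Return (center, normal) pairs for planes aligned with the needle.

    Two planes contain the needle axis (their normals are perpendicular to it)
    and one plane is perpendicular to the needle (its normal is the axis).
    """
    axis = np.asarray(direction, dtype=float)
    axis = axis / np.linalg.norm(axis)

    # Pick a helper vector that is not nearly parallel to the needle axis.
    helper = np.array([1.0, 0.0, 0.0])
    if abs(axis @ helper) > 0.9:
        helper = np.array([0.0, 1.0, 0.0])

    u = np.cross(axis, helper)
    u = u / np.linalg.norm(u)
    v = np.cross(axis, u)  # already unit length: axis and u are orthonormal

    center = np.asarray(tip, dtype=float)
    return {
        "parallel_1": (center, u),
        "parallel_2": (center, v),
        "perpendicular": (center, axis),
    }


# Needle along the static field (inferior to superior), as in the transperineal case.
planes = needle_planes(tip=[5.0, -20.0, 40.0], direction=[0.0, 0.0, 2.0])
```

Recomputing the planes whenever a new needle position arrives keeps the semi real-time images aligned with the needle as the robot moves.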

3. Experimental design

We conducted a set of experiments to validate the accuracy of the Z-frame registration, which is crucial for both targeting and real-time imaging control. Our previous work demonstrated that the fiducial localization error (FLE) was 0.031 mm for in-plane motion, 0.14 mm for out-of-plane motion, and 0.37° for rotation when the Z-frame was placed at the isocenter of the magnet [19]. Given the distance between the Z-frame and the prostate (100 mm), the target registration error (TRE) is estimated to be less than 1 mm by calculating the offset at the target due to the rotational component of the FLE. However, the TRE in the clinical setting is still unknown because the coil configuration and the position of the Z-frame differ. In the clinical application, we will use a torso coil to acquire the Z-frame image instead of the head coil used in the previous work. In addition, the Z-frame is not placed directly at the isocenter, which causes larger distortion in the Z-frame image.
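The sub-millimeter TRE estimate can be reproduced with a short calculation. The sketch below takes the rotational FLE (0.37°) and the 100 mm lever arm from the text and computes the lateral offset at the target; the worst-case sum with the translational FLE components is an additional assumption made here as a conservative bound, not a calculation stated in the text.

```python
import math


def rotational_offset_mm(distance_mm, angle_deg):
    """Lateral offset at a target `distance_mm` away caused by a rotation error."""
    return distance_mm * math.tan(math.radians(angle_deg))


# Values from the text: 100 mm from Z-frame to prostate, 0.37 degree rotational FLE.
offset_mm = rotational_offset_mm(100.0, 0.37)

# Assumption: add the translational FLE components linearly as a worst case.
worst_case_mm = offset_mm + 0.031 + 0.14
```

The rotational term comes to roughly 0.65 mm, and even the linear worst-case sum stays below 1 mm, consistent with the estimate above.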

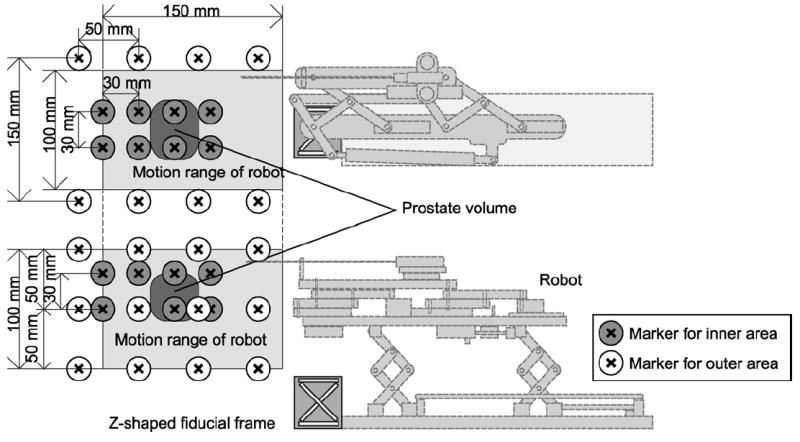

3.1. Accuracy of calibration

In this first experiment, we placed the Z-frame and a water phantom at approximately the same locations where the Z-frame and the prostate would be placed in the clinical setting. There were 40 markers made of plastic beads fixed on a plastic block in the water phantom, representing the planned target positions. Out of the 40 markers, 24 markers were placed near the outer end of the volume and 16 markers near the inner end of the volume, corresponding to the end of the motion range of the robot and the typical position of the prostate, respectively (Fig. 6). Both the plastic block and the Z-frame were fixed to the container of the water phantom allowing us to calculate the planned target positions in the image (patient) coordinate system after the Z-frame calibration. To focus on evaluating the registration error due to the Z-frame registration, we replaced the robot control unit with a software simulator, which receives the target position and returns exactly the same position to the 3D Slicer as a current virtual needle position. This allowed us to exclude from our validation any mechanical error from the robot itself, the targeting accuracy of which is described elsewhere [16]. The orientation of the virtual needle was fixed along the static magnetic field to simulate the transperineal biopsy/brachytherapy case, where the needle approaches from inferior to superior direction.

Fig. 6.

The physical relationship among the robot, Z-frame, targets, range of motion, and markers is shown. The Z-frame is attached. Twenty-four markers are in the outer area of the phantom, which covers the entire motion range of the robot, and 16 markers are in the inner area, covering the typical position of the prostate.

After placing the phantom and the Z-frame, we acquired a 2D image intersecting the Z-frame to locate the Z-frame using a 2D fast gradient recalled echo (FGRE) sequence (matrix = 256 × 256; FOV = 16 cm; slice thickness = 5 mm; TR/TE = 34/3.8 ms; flip angle = 60°; NEX = 3). Based on the position and orientation of the Z-frame, we calculated the marker positions in the image coordinate system. We also acquired a 3D image of the phantom using a 3D FGRE sequence (matrix = 256 × 256 × 72; FOV = 30 cm; slice thickness = 2 mm; TR/TE = 6.3/2.1 ms; flip angle = 30°) to locate the actual targets in the image coordinate system as the gold standard. The actual target positions were then compared with the calculated marker positions.
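The comparison between calculated and actual positions can be summarized as a root mean square (RMS) error. The sketch below assumes that the error for each marker is the 3D Euclidean distance between the two positions; the text does not state this explicitly, and the function name `rms_error` and the sample coordinates are hypothetical.

```python
import numpy as np


def rms_error(calculated, actual):
    """RMS of the Euclidean distances between paired 3D positions (in mm)."""
    calculated = np.asarray(calculated, dtype=float)
    actual = np.asarray(actual, dtype=float)
    distances = np.linalg.norm(calculated - actual, axis=1)
    return float(np.sqrt(np.mean(distances ** 2)))


# Hypothetical example with two markers displaced by 3 mm and 4 mm.
calculated_positions = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
actual_positions = [[3.0, 0.0, 0.0], [10.0, 4.0, 0.0]]
error_mm = rms_error(calculated_positions, actual_positions)
```

Applying the same function separately to the 24 outer markers and the 16 inner markers would give one RMS value per region.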

3.2. Latency of real-time MRI control and visualization

We also evaluated the performance of 2D real-time imaging in terms of the positional accuracy of the imaging plane and the latency of acquisition and visualization. For the accuracy, a 2D spoiled gradient recalled (SPGR) sequence was used with the following parameters: TR/TE = 12.8/6.2 ms; matrix = 256 × 256; FOV = 30 mm; slice thickness = 5 mm; flip angle = 30°. We acquired the images by specifying the image center position. Three images in orthogonal planes were acquired at each position. The positional error of the imaging plane was defined as the offset of the imaged target marker from the intersection of the three planes. For the combined latency of 2D real-time image acquisition, reconstruction, and visualization, we measured the time taken for the images to reflect a change in the imaging plane position after the new position was specified from 3D Slicer. We used the 2D FGRE sequence with two different image sizes and frame rates: a 128 × 128 matrix with TR = 11.0 ms (1.4 s/image) and a 256 × 256 matrix with TR = 12.7 ms (3.3 s/image). We oscillated the imaging plane position using 3D Slicer with a range of 100 mm and a period of 20 s while acquiring images of the phantom.
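The quoted acquisition times per image follow from the matrix size and TR. The sketch below assumes one phase-encoding line per TR, full k-space sampling, and a single excitation (NEX = 1); these assumptions are not stated in the text but reproduce the reported values. The function name `frame_time_s` is hypothetical.

```python
def frame_time_s(phase_encode_lines, tr_ms, nex=1):
    """Acquisition time of one 2D image, assuming one k-space line per TR."""
    return phase_encode_lines * tr_ms * nex / 1000.0


small = frame_time_s(128, 11.0)  # 128 x 128 matrix, TR = 11.0 ms
large = frame_time_s(256, 12.7)  # 256 x 256 matrix, TR = 12.7 ms
```

These evaluate to about 1.41 s and 3.25 s, matching the 1.4 s/image and 3.3 s/image quoted above after rounding.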

4. Results

The root mean square (RMS) positional error due to the calibration was 3.7 mm for the outer area and 1.8 mm for the inner area (representing the volume within the prostate capsule). The latencies between the receipt of the robot needle position by the navigation software and the subsequent display of semi real-time images in the navigation software were as follows for each frame rate: 1.97 ± 0.08 s (1.40 s/frame, matrix 128 × 128) and 5.56 ± 1.00 s (3.25 s/frame, matrix 256 × 256). Note that the latency here includes the time for both image acquisition and reconstruction. The result indicates that the larger image data caused a longer reconstruction time with a large deviation, partly because the reconstruction was performed on the host workstation of the scanner, where other processes were also running during the scan. The latency could be improved by performing image reconstruction on an external workstation with better computing capability.

5. Discussion

The design and implementation of a navigation system for robotic transperineal prostate therapy in closed-bore MRI are presented. The system is designed for a ‘closed-loop’ consisting of the physician, robot, imaging device, and navigation software, in order to provide instantaneous feedback that lets the physician control the procedure properly. Since all components in the system must share a coordinate system in ‘closed-loop’ therapy, we focused on the registration of the robot and image coordinate systems, and on real-time MR imaging. The instantaneous feedback will allow the physician to correct the needle insertion path by using a needle steering technique, such as [21], as necessary. In addition, we proposed software to manage the clinical workflow, which is separated into six ‘workphases’ that define the behavior of the system based on the stage of the treatment process. The workphases are defined based on the clinical workflow of MRI-guided robotic transperineal prostate biopsy and brachytherapy, but should be consistent with most MRI-guided robotic interventions (e.g., liver ablation therapy [14]).

The proposed software system incorporates a calibration based on the Z-frame to register the robot coordinate system to the image coordinate system. The accuracy study demonstrated that the integrated system provided sufficient registration accuracy for prostate biopsy and brachytherapy when compared with the clinically significant tumor size (0.5 cc) and the traditional grid spacing (5 mm). The result is also comparable with the accuracy reported in the study on clinical targeted biopsy by Blumenfeld et al. (6.5 mm) [22]. Since mechanical error was excluded from the methodology in the present report, the study must be continued to evaluate the overall targeting accuracy of the robotic device guided by the system. It was previously reported that the RMS positioning error due to the mechanism was 0.94 mm [16].
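To put the 0.5 cc figure on the same scale as the registration error, the volume can be converted to an equivalent diameter. The sketch below assumes a spherical lesion, which is a simplification introduced here for illustration and not a claim made in the text; the function name `sphere_diameter_mm` is hypothetical.

```python
import math


def sphere_diameter_mm(volume_cc):
    """Diameter of a sphere with the given volume (1 cc = 1000 cubic mm)."""
    volume_mm3 = volume_cc * 1000.0
    radius_mm = (3.0 * volume_mm3 / (4.0 * math.pi)) ** (1.0 / 3.0)
    return 2.0 * radius_mm


diameter_mm = sphere_diameter_mm(0.5)
radius_mm = diameter_mm / 2.0
```

Under this assumption a 0.5 cc lesion is roughly 9.8 mm across (radius about 4.9 mm), so the 1.8 mm RMS registration error within the inner area is well below the lesion radius.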

The latency of real-time MRI control and visualization that we measured includes the time for both image acquisition and reconstruction. The result indicates that the larger image data caused a longer reconstruction time with a large deviation, partly because the reconstruction was performed on the host workstation of the scanner, where other processes were also running during the scan. The latency could be improved by performing image reconstruction on an external workstation with better computing capability.

Our study also demonstrates that the semi real-time 2D MR images captured the target with clinically relevant positional accuracy. Semi real-time 2D images were successfully acquired in three orthogonal planes parallel and perpendicular to the simulated needle axis, and visualized on the navigation software. The RMS error between the specified target position and imaged target was 3.7 mm for the targets in the outer area and 1.8 mm for the inner area. The accuracy was degraded near the outer end of the phantom, where the distance from the Z-frame was larger than near the inner end. In addition, the image was distorted by the field inhomogeneity, causing positional error of the imaged target. Thus, a distortion correction after image reconstruction could be effective in improving accuracy.

In conclusion, the proposed system provides a user interface based on the workphase concept that allows operators to walk through the clinical workflow intuitively. The results demonstrate that the system provides semi real-time image guidance with adequate accuracy and speed for interactive needle insertion in MRI-guided robotic intervention for prostate therapy.

Acknowledgments

This work is supported by 1R01CA111288, 5U41RR019703, 5P01CA067165, 1R01CA124377, 5P41RR013218, 5U54EB005149, 5R01CA109246 from NIH. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. This study was also in part supported by NSF 9731748, CIMIT, Intelligent Surgical Instruments Project of METI (Japan).

Biographies

Junichi Tokuda is a Research Fellow of Brigham and Women’s Hospital and Harvard Medical School. He received a B.S. in Engineering in 2002, an M.S. in Information Science and Technology in 2004, and a Ph.D. in Information Science and Technology in 2007, from The University of Tokyo, Japan. His main research interest is MRI-guided therapy, including MR pulse sequences, navigation software, MRI-compatible robots, and the integration of these technologies for the operating environment.

Gregory S. Fischer is an Assistant Professor and Director of Automation and Interventional Medicine Laboratory at Worcester Polytechnic Institute. He received the B.S. degrees in electrical engineering and mechanical engineering from Rensselaer Polytechnic Institute, Troy, NY, in 2002 and the M.S.E. degrees in electrical engineering and mechanical engineering from The Johns Hopkins University, Baltimore, MD, in 2004 and 2005. He received the Ph.D. degree from The Johns Hopkins University in 2008. His research interests include development of interventional robotic systems, robot mechanism design, pneumatic control systems, surgical device instrumentation and MRI-compatible robotic systems.

Simon P. DiMaio received the B.Sc. degree in electrical engineering from the University of Cape Town, South Africa, in 1995. In 1998 and 2003, he completed the M.A.Sc. and Ph.D. degrees at the University of British Columbia, Vancouver, Canada, respectively. After completing his doctoral studies, he moved to Boston for a Research Fellowship at the Surgical Planning Laboratory, Brigham and Women’s Hospital (Harvard Medical School), where he worked on robotic mechanisms and tracking devices for image-guided surgery. In 2007, Dr. DiMaio joined the Applied Research Group at Intuitive Surgical Inc. in Sunnyvale, California. His research interests include mechanisms and control systems for surgical robotics, medical simulation, image-guided therapies and haptics.

Csaba Csoma is a Software Engineer at the Engineering Research Center for Computer Integrated Surgical Systems and Technology of Johns Hopkins University. He holds a B.Sc. degree in Computer Science. His recent activities concentrate on development of communication software and graphical user interface for surgical navigation and medical robotics applications.

Philip W. Mewes is a PhD student at the chair of pattern recognition at the Friedrich-Alexander University Erlangen-Nuremberg and Siemens Healthcare. He received his MSc in Computer Science in 2008, from the University Pierre et Marie Curie – Paris VI, France and his Electrical Engineering Diploma from the University of Applied Sciences in Saarland, Germany. His main research interest is image guided therapy including several computer vision techniques such as shape recognition and 3D reconstruction and the integration of these technologies for interventional medical environments.

Gabor Fichtinger received the B.S. and M.S. degrees in electrical engineering, and the Ph.D. degree in computer science from the Technical University of Budapest, Budapest, Hungary, in 1986, 1988, and 1990, respectively. He has been a charter faculty member since 1998 in the NSF Engineering Research Center for Computer Integrated Surgery Systems and Technologies at the Johns Hopkins University. In 2007, he moved to Queen’s University, Canada, as an interdisciplinary faculty member of Computer Assisted Surgery; he is currently an Associate Professor of Computer Science, Mechanical Engineering and Surgery. Dr. Fichtinger’s research focuses on computer-assisted surgery and medical robotics, with a strong emphasis on image-guided oncological interventions.

Clare M. Tempany is a medical graduate of the Royal College of Surgeons in Ireland. She is currently the Ferenc Jolesz chair and vice chair of Radiology Research in the Department of Radiology at the Brigham and Women’s Hospital and a Professor of Radiology at Harvard Medical School. She is also the co-principal investigator and clinical director of the National Center for Image Guided Therapy (NCIGT) at BWH. Her major areas of research interest are MR imaging of the pelvis and image-guided therapy. She now leads an active research group, the MR guided prostate interventions laboratory, which encompasses basic research in IGT and clinical programs.

Nobuhiko Hata was born in Kobe, Japan. He received the B.E. degree in precision machinery engineering in 1993 from School of Engineering, The University of Tokyo, Tokyo, Japan, and the M.E. and the Doctor of Engineering degrees in precision machinery engineering in 1995 and 1998 respectively, both from Graduate School of Engineering, The University of Tokyo, Tokyo, Japan. He is currently an Assistant Professor of Radiology, Harvard Medical School and Technical Director of Image Guided Therapy Program, Brigham and Women’s Hospital. His research focus has been on medical image processing and robotics in image-guided surgery.

References

1. Zangos S, Eichler K, Thalhammer A, Schoepf JU, Costello P, Herzog C, et al. MR-guided interventions of the prostate gland. Minim Invasive Ther Allied Technol. 2007;16(4):222–9. doi: 10.1080/13645700701520669.

2. Tempany C, Straus S, Hata N, Haker S. MR-guided prostate interventions. J Magn Reson Imaging. 2008;27(2):356–67. doi: 10.1002/jmri.21259.

3. Pondman KM, Futterer JJ, ten Haken B, Kool LJS, Witjes JA, Hambrock T, et al. MR-guided biopsy of the prostate: an overview of techniques and a systematic review. Eur Urol. 2008;54(3):517–27. doi: 10.1016/j.eururo.2008.06.001.

4. D’Amico AV, Cormack R, Tempany CM, Kumar S, Topulos G, Kooy HM, et al. Real-time magnetic resonance image-guided interstitial brachytherapy in the treatment of select patients with clinically localized prostate cancer. Int J Radiat Oncol Biol Phys. 1998;42(3):507–15. doi: 10.1016/s0360-3016(98)00271-5.

5. D’Amico AV, Tempany CM, Cormack R, Hata N, Jinzaki M, Tuncali K, et al. Transperineal magnetic resonance image guided prostate biopsy. J Urol. 2000;164(2):385–7.

6. Susil R, Camphausen K, Choyke P, McVeigh E, Gustafson G, Ning H, et al. System for prostate brachytherapy and biopsy in a standard 1.5 T MRI scanner. Magn Reson Med. 2004;52(3):683–7. doi: 10.1002/mrm.20138.

7. Krieger A, Susil RC, Menard C, Coleman JA, Fichtinger G, Atalar E, et al. Design of a novel MRI compatible manipulator for image guided prostate interventions. IEEE TBME. 2005;52:306–13. doi: 10.1109/TBME.2004.840497.

8. Zangos S, Eichler K, Engelmann K, Ahmed M, Dettmer S, Herzog C, et al. MR-guided transgluteal biopsies with an open low-field system in patients with clinically suspected prostate cancer: technique and preliminary results. Eur Radiol. 2005;15(1):174–82. doi: 10.1007/s00330-004-2458-2.

9. Elhawary H, Zivanovic A, Rea M, Davies B, Besant C, McRobbie D, et al. The feasibility of MR-image guided prostate biopsy using piezoceramic motors inside or near to the magnet isocentre. In: Larsen R, Nielsen M, Sporring J, editors. Medical image computing and computer-assisted intervention – MICCAI 2006. PT 1, vol. 4190 of lecture notes in computer science. 2006. pp. 519–26.

10. Goldenberg AA, Trachtenberg J, Kucharczyk W, Yi Y, Haider M, Ma L, et al. Robotic system for closed-bore MRI-guided prostatic interventions. IEEE-ASME Trans Mechatron. 2008;13(3):374–9.

11. Kaiser W, Fischer H, Vagner J, Selig M. Robotic system for biopsy and therapy of breast lesions in a high-field whole-body magnetic resonance tomography unit. Invest Radiol. 2000;35(8):513–9. doi: 10.1097/00004424-200008000-00008.

12. Lagerburg V, Moerland MA, van Vulpen M, Lagendijk JJW. A new robotic needle insertion method to minimise attendant prostate motion. Radiother Oncol. 2006;80(1):73–7. doi: 10.1016/j.radonc.2006.06.013.

13. Stoianovici D, Song D, Petrisor D, Ursu D, Mazilu D, Mutener M, et al. “MRI Stealth” robot for prostate interventions. Minim Invasive Ther Allied Technol. 2007;16(4):241–8. doi: 10.1080/13645700701520735.

14. Hata N, Tokuda J, Hurwitz S, Morikawa S. MRI-compatible manipulator with remote-center-of-motion control. J Magn Reson Imaging. 2008;27(5):1130–8. doi: 10.1002/jmri.21314.

15. Pfleiderer S, Marx C, Vagner J, Franke R, Reichenbach A, Kaiser W. Magnetic resonance-guided large-core breast biopsy inside a 1.5-T magnetic resonance scanner using an automatic system – in vitro experiments and preliminary clinical experience in four patients. Invest Radiol. 2005;40(7):458–63. doi: 10.1097/01.rli.0000167423.27180.54.

16. Fischer G, Iordachita I, Csoma C, Tokuda J, DiMaio SP, Tempany C, et al. MRI-compatible pneumatic robot for transperineal prostate needle placement. IEEE/ASME Trans Mechatron. 2008;13(3):295–305. doi: 10.1109/TMECH.2008.924044.

17. Gering DT, Nabavi A, Kikinis R, Hata N, O’Donnell J, Grimson WEL, et al. An integrated visualization system for surgical planning and guidance using image fusion and an open MR. J Magn Reson Imaging. 2001;13(6):967–75. doi: 10.1002/jmri.1139.

18. Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby A, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N. OpenIGTLink: An Open Network Protocol for Image-Guided Therapy Environment. Int J Med Robot Comput Assist Surg. 2009 doi: 10.1002/rcs.274. In print.

19. DiMaio S, Samset E, Fischer G, Iordachita I, Fichtinger G, Jolesz F, et al. Dynamic MRI scan plane control for passive tracking of instruments and devices. MICCAI. 2007;10:50–8. doi: 10.1007/978-3-540-75759-7_7.

20. Hata N, Jinzaki M, Kacher D, Cormack R, Gering D, Nabavi A, et al. MR imaging-guided prostate biopsy with surgical navigation software: device validation and feasibility. Radiology. 2001;220(1):263–8. doi: 10.1148/radiology.220.1.r01jl44263.

21. DiMaio S, Salcudean S. Needle steering and motion planning in soft tissues. IEEE Trans Biomed Eng. 2005;52(6):965–74. doi: 10.1109/TBME.2005.846734.

22. Blumenfeld P, Hata N, DiMaio S, Zou K, Haker S, Fichtinger G, et al. Transperineal prostate biopsy under magnetic resonance image guidance: a needle placement accuracy study. J Magn Reson Imaging. 2007;26(3):688–94. doi: 10.1002/jmri.21067.