Abstract

In concept and practice, clinical decision support (CDS) and performance measurement represent distinct approaches to organizational change, yet these two organizational processes are interrelated. We set out to better understand how the relationship between the two is perceived, as well as how they jointly influence clinical practice. To understand the use of CDS at benchmark institutions, we conducted semistructured interviews with key managers, information technology personnel, and clinical leaders during a qualitative field study. Improved performance was frequently cited as a rationale for the use of clinical reminders. Pay-for-performance efforts also appeared to provide motivation for the use of clinical reminders. Shared performance measures were associated with shared clinical reminders. The close link between clinical reminders and performance measurement causes these tools to have many of the same implementation challenges.

INTRODUCTION

Clinical decision support (CDS) and performance measurement are commonly discussed separately in the medical literature. CDS is oftentimes introduced as a tool or intervention that can improve health care quality1, 2; however, CDS is also discussed as a means to the ends of improved patient safety and clinical outcomes.

Performance improvement is commonly framed as an end towards which many organizational tools or interventions can be directed, including CDS. Yet performance or quality improvement can also be pursued through other interventions, such as interactive medical education, local opinion leaders, benchmarking, or financial incentives3.

In concept and practice, CDS and performance measurement represent distinct approaches to organizational change. Yet these two major organizational processes are interrelated. We set out here to better understand how the relationship between the two is perceived, as well as how they jointly influence clinical practice.

Opportunities exist to improve the use and dissemination of CDS and performance measurement by studying their current implementation and relationship with one another. A recent systematic review on CDS identified the Regenstrief Institute (RI), Partners HealthCare System (PHS), Intermountain Healthcare, and the Veterans Health Administration (VHA) as the four benchmark institutions most frequently demonstrating the efficacy of CDS in improving outcomes with high-quality research4. Detailed descriptions of each institution’s electronic health record have been previously published.5, 6 Because CDS is so widely implemented and used in these institutions, they provide excellent health care environments in which to study how key stakeholders view the relationship between CDS and performance measurement. To better understand this relationship, we conducted semistructured interviews among key stakeholders during a qualitative field study at benchmark institutions.

METHODS

Site Selection:

We selected three of the four benchmark institutions for health information technology for this study: RI, PHS, and VHA4. Two Veterans Affairs Medical Center (VAMC) sites were selected based on having a strong medical informatics presence, strong clinical performance, and being geographically distributed nationally (south and east). At each site, qualitative data was collected in multiple outpatient clinics using the same electronic health record system.

Qualitative Methods:

The researchers conducted key informant interviews, as well as opportunistic interviews during site visits, about CDS use and performance measurement at two VAMCs, RI, and PHS. Before each site visit, a local contact person, or “shepherd”, was engaged to identify key informants and serve as the liaison during the visit. This person introduced the interviewers and scheduled the ethnographic observations in outpatient clinics. Informed consent was obtained from all participants. The study was approved by the IU Institutional Review Board, the Indianapolis VA Medical Center Research Committee, and each individual study site.

Key Informant Interviews:

At each site, three key informants were identified. Key informants were chosen from multiple backgrounds to represent a range of diverse perspectives, including managers (three primary care clinic directors, a quality manager, and implementation director), information technology personnel (a programmer, human factors engineer, knowledge manager, and physician-investigator focused upon CDS), and clinical leaders (three primary care physicians, one gastroenterologist, and a medical assistant). The key informant interviews were conducted either in person during the site visit or afterwards by phone and then transcribed. The content of key informant interviews covered mechanisms used to facilitate CDS implementation. The same core questions were asked of each interviewee, and the semistructured format allowed for flexibility to cover important topics that arose during the course of the interviews.

Site Visits and Opportunistic Interviews:

The researchers used direct observation to understand the ways in which providers behave in their health care environments in real time, so as to gather data on the context and processes surrounding CDS and performance measurement use. During site visits, two to four observers experienced in ethnographic observation separately shadowed physicians and nurses. Observers conducted opportunistic interviews of providers on their use of CDS in the outpatient clinics to better understand the key informant and ethnographic data. This discussion covered why providers took certain actions, as well as opinions and feedback about the relationship between CDS and performance measurement. This opportunistic interview feedback was recorded via handwritten notes on a structured observation form during participant interaction with the CDS, capturing observable activities and verbalizations.

Data Analysis:

All the data from key informant interviews and opportunistic interviews during the site visits were analyzed using a coding template. The research team developed this template based on the sociotechnical model7. The coding template included a category for each component of the sociotechnical model: social subsystem, technical subsystem, external subsystem. For each of these categories, subcategory labels were identified and modified as coding proceeded and themes emerged from the data. Findings were integrated across sites into meaningful patterns and the data abstracted into emergent themes, as guided by qualitative analysis norms8.

RESULTS

Across sites, CDS was commonly used in support of performance measurement efforts. Improved performance, or quality, was frequently cited as the rationale for the use of clinical reminders. The link between clinical reminders and performance measurement appeared particularly strong in the VA. Pay-for-performance efforts also appeared to provide motivation for the use of clinical reminders, both inside and outside the VA. Clinical reminders and performance were sometimes discussed almost synonymously. Individuals tasked with quality management and information technology roles each had input into the implementation of both CDS and performance measures.

The remaining findings represent quotes and observations from the key informant and opportunistic interviews, related to the emergent themes about the relationship between CDS and performance measurement.

Paradigm shift

Performance measures have the potential to serve as the impetus for a paradigm shift in how providers operate in the health care environment. One primary care clinic director made a series of observations describing this shifting approach.

“You just kind of wrote your notes and looked to see if you wrote the notes or when you signed them or any of that stuff so you could get by, but with the advent of lots and lots of…performance measures, that model had to change.”

New CDS or clinical reminders oftentimes served as key organizational tools for health care systems to meet the demands of new performance measures. The adoption of clinical reminders in support of performance measurement was also described as being transformative, to the extent that after this paradigm shift, other changes could be met more easily.

“But with our new world view with performance measures, clinical reminders, I think everybody is…much more facile with change. We expect it whereas we hadn’t expected it for a long time, but now it just comes at us right and left”

One concrete way in which performance measures enabled health care managers to cope with change was to enable organizational self-assessment and planning: “getting the data so we see where we are, so we see where we need to go”.

Design issues

CDS can be programmed into pre-existing electronic health records (EHRs) to support performance measurement. Yet to support performance measurement most efficiently, this need must be considered early in the EHR design process. These observations stemmed from the interview with a knowledge manager who served as a liaison between developers and providers. Although many of the benchmark systems we observed were addressing performance measurement and quality, there was clearly a transition period, and the legacy systems posed challenges.

“There are a lot quality initiatives that we would love to be able to pull data from, and it’s not designed for that...I have colleagues at other health centers who are buying prepackaged electronic records, and that is one of the key things… they really need, and as a result, they’re getting that built in up front.”

In EHR design, the immediate usability of structured, as opposed to unstructured, data to support CDS and performance measurement needs to be considered. However, the time cost of capturing structured data within established patterns of provider workflow may be prohibitive.

“The problem is that it’s very possible that if a clinician is someone who dictates, their activity doesn’t hit the coded fields….In order to get the information out, such as…who’s overdue for colorectal screening, you have to have entered the data in the coded fields…If you dictate…it isn’t linked in any way with those coded fields. You have to go on to the health maintenance and do the clicking through the fields. If you do it, you have better data, but some people don’t find they have sufficient time during the patient visit or activate it to do that. So I think it’s a matter of…how it’s designed.”
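The data-capture problem described in this quote can be illustrated with a minimal sketch. The `Chart` record, its field names, and the screening interval below are hypothetical and do not describe any of the studied systems; the point is only that a query over coded fields cannot see a screening that was documented solely in a dictated note.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class Chart:
    """Hypothetical patient chart with coded fields and free-text dictation."""
    patient_id: str
    coded_screenings: Dict[str, date] = field(default_factory=dict)
    dictated_notes: List[str] = field(default_factory=list)


def overdue_patients(charts: List[Chart], screening: str,
                     today: date, interval: timedelta) -> List[str]:
    """Return IDs of patients with no coded screening within the interval.

    Only coded fields are consulted; dictated notes are never read.
    """
    overdue = []
    for chart in charts:
        last = chart.coded_screenings.get(screening)
        if last is None or today - last > interval:
            overdue.append(chart.patient_id)
    return overdue


# Illustrative data (invented): patient "b" was screened, but only the
# dictated note says so, so the coded-field query still reports "b" as overdue.
charts = [
    Chart("a", coded_screenings={"colorectal": date(2008, 5, 1)}),
    Chart("b", dictated_notes=["Colonoscopy completed last month, normal."]),
]
print(overdue_patients(charts, "colorectal", date(2009, 6, 1),
                       timedelta(days=3650)))
```

In this sketch the interval is an arbitrary parameter chosen for illustration, not a clinical recommendation.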

Means to an end

Clinical reminders were often discussed among health care managers, particularly in the VA, as a means to the end of performance measurement.

“Getting your staff to understand the clinical reminders so that they would accept them as a way to improve our performance and just a tool to that effect. The big fear with clinical reminders was that we were grading them on it, that we were measuring their performance on the clinical reminders”

Clinicians in a large organization or bureaucracy perceived a danger that clinical reminders might start as a means to the end of improved performance but later become an end in themselves.

VA managers perceived clinical reminders as having the ability to improve performance measures and consequently pursued mandatory implementation.

“So how am I going to remember to check off a microalbumin every year? This year it’s a performance measure. We need to be much more directive about it and mandate that that reminder is turned on for everybody”

The tight linkage of a clinical reminder with a performance measure appeared to be strong justification for its mandatory activation. This observation implies, conversely, that clinical reminders for clinical reasons alone may not warrant mandatory implementation.

“And the local ones we can mandate that everybody has turned on or we can leave it so that the provider has the choice of keeping it on or off. And, I just thought nobody had microalbumin on them. We were failing that performance measure….That’s what flagged it…saw we need to make this mandated.”

Pay-for-performance

Pay-for-performance provided an additional incentive to implement clinical reminders beyond performance measurement alone. In the VA, both managers and providers had financial incentives for better performance.

“So even the firm chiefs do, and even each individual provider gets paid for performance, and we link 80% of that to quality measures. So hemoglobin A1C, LDL…and we have reminders that help us get those up…So we like that kind of reminder.”

Pay-for-performance programs also influenced the implementation of clinical reminders outside the VA.

Not only reminders

CDS for performance measurement also took on forms other than clinical reminders, such as tables or reports, as described by a quality manager.

“And they go on the intranet, they click on the physician compensation page. They can see their compensation, how quality relates to it. They can click on…the quality table and pull up results for the company, their site and themselves on probably sixty different measures.”

Clinical appropriateness

Similar to risk adjustment with performance measures, issues of clinical appropriateness affected the implementation of clinical reminders. One clinician made the following comment.

“Sometimes it’s hard to get the rules right…so the rules and reminders trigger in a situation and it’s not exactly relevant.... so it’s not that they are really noncompliant, it’s just that…for my specific patient in these circumstances, the clinical rules are not refined to cover my patient case so that’s where they fall many times...It’s not exactly clear what it means.”

Shared measures

Unique to the VA’s integrated delivery system, shared performance measures led to the development of shared clinical reminders across primary care and mental health. Collaboration between the clinical services ensued.

“So we have joint reminders that are turned on both for the mental health providers and for the primary care providers. And they’re joint performance measures…so we’re both being forced to look at it. So I have to meet with my mental health counterpart, and we have to discuss our performance together and figure out how we’re doing…We can’t work individually anymore…We have to collaborate.”

Not all performance measures were supported by clinical reminders. Conversely, clinical reminders were not implemented solely in support of performance measurement, but were sometimes implemented for issues of perceived clinical significance. When serving more purely clinical purposes, the implementation of reminders may be at the request of health care providers: “these are reminders that providers had suggested, so we listened”.

DISCUSSION

The implementation of clinical reminders in support of performance measurement has the potential to serve as a catalyst for transformation among health care organizations. If an organization is able to undertake the changes necessary to effectively implement these tools into clinical practice, the paradigm shift required may move that entity closer to functioning as a learning organization9.

From a design standpoint, early consideration of the appropriate configuration among performance measurement, CDS, and the electronic medical record may reduce additional work later. This lesson is worth special consideration as the health care system prepares to undertake widespread implementation of health information technology10. In choosing between structured and unstructured data, designers should balance the competing needs for usability among front-line clinicians and usability among quality managers. When free text data is necessary for reasons of provider time, advances in natural language processing methods may enable the capture of additional meaning and value from unstructured data.

The managers with whom we spoke largely appeared convinced of the effectiveness of clinical reminders, motivated by performance measurement, in promoting improved quality. The medical literature suggests a modest benefit from audit and feedback3, although as noted earlier, benchmark institutions have reported substantial success with CDS4.

The achievement of higher scores on performance measures serves as a clear motivation for the implementation of clinical reminders by managers. Pay-for-performance added to the motivation and also created provider incentives. Financial incentives for high performance are present in both private and public (VA) health care settings.

Our interviews revealed that shared measures of performance between primary care and another specialty service led to the development of shared clinical reminders. This finding reinforces current health care reform proposals suggesting that shared accountability for performance will lead larger organizations to invest in coordinated quality improvement efforts11; the effect of CDS shared across clinical services is an area of future research.

Limitations of this study include the small number of sites, which may not be representative of the experience with CDS and performance measurement in organizations less experienced in implementing these approaches.

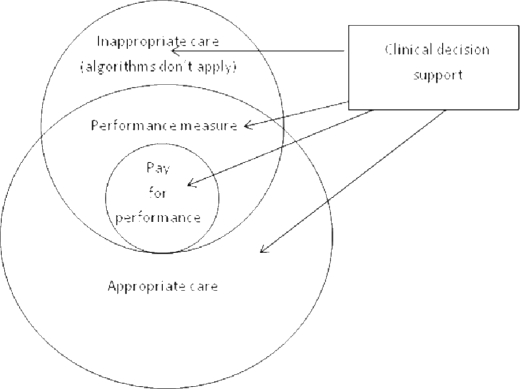

Both performance measures and clinical reminders face significant challenges when they attempt to operationalize clinical guidelines, including difficulties in electronic data availability and addressing clinical appropriateness. Performance measures do not capture all dimensions of appropriate care, and furthermore, performance measures can be inaccurate and label inappropriate care as being of high quality (Figure 1). The close link between CDS and performance measurement causes these tools to face many of the same implementation challenges.

Figure 1. Relationship of clinical decision support with performance measurement and clinical appropriateness.

For performance measures, statistical risk adjustment can be done for methodologic refinement, as these scores are averaged across populations. However, clinical reminders are targeted at a single patient at a time, and consequently, rule-based approaches are often necessary to handle clinical appropriateness. A clinical reminder may be thought of as a real-time performance measure with an n of one.
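The notion of a reminder as a performance measure with an n of one can be made concrete with a short sketch. It assumes a hypothetical annual microalbumin rule for patients with diabetes, with a hypothetical `excluded` flag standing in for a documented clinical exception; none of these names or thresholds come from the systems studied. The same rule is evaluated once per patient for the reminder and aggregated across eligible patients for the measure.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Patient:
    """Hypothetical minimal patient record for illustration only."""
    has_diabetes: bool
    last_microalbumin: Optional[date]
    excluded: bool = False  # assumed flag for a documented clinical exception


def reminder_due(p: Patient, today: date) -> bool:
    """Rule-based reminder for one patient (n of one)."""
    if not p.has_diabetes or p.excluded:
        return False  # rule does not apply to this patient
    if p.last_microalbumin is None:
        return True
    return today - p.last_microalbumin > timedelta(days=365)


def performance_rate(patients: List[Patient], today: date) -> Optional[float]:
    """Population measure: share of eligible patients whose reminder is satisfied."""
    eligible = [p for p in patients if p.has_diabetes and not p.excluded]
    if not eligible:
        return None  # measure is undefined without eligible patients
    met = sum(1 for p in eligible if not reminder_due(p, today))
    return met / len(eligible)
```

Here clinical appropriateness is handled by an explicit exclusion rule at the patient level; statistical risk adjustment of the aggregated rate is a separate step that this sketch does not attempt.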

Due to the close synergy between clinical reminders and performance measures, developers should consider how to integrate these tools when designing information systems. For instance, completion of clinical reminders should be linked to the completion of performance measures to help administrators more efficiently collect performance data, as well as give clinicians appropriate credit for their work. Conversely, completion of performance measures should be facilitated by clinical decision support. Clinicians also have a vital and necessary role in providing feedback about the clinical appropriateness of decision support to highlight exceptions to the decision rules and potential unintended consequences.
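One way such a linkage might look is sketched below, under the assumption that each resolved reminder writes a structured completion event that performance reporting reads directly. The `ReminderCompletion` record and the `credit_by_clinician` function are hypothetical names introduced for illustration, not features of any system described here.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable


@dataclass(frozen=True)
class ReminderCompletion:
    """Hypothetical event written when a clinician resolves a reminder."""
    patient_id: str
    clinician_id: str
    measure: str
    completed_on: date


def credit_by_clinician(events: Iterable[ReminderCompletion], measure: str,
                        start: date, end: date) -> Dict[str, int]:
    """Count distinct patients per clinician for one measure in a reporting period."""
    seen = set()
    credit: Counter = Counter()
    for e in events:
        key = (e.clinician_id, e.patient_id)
        in_period = start <= e.completed_on <= end
        if e.measure == measure and in_period and key not in seen:
            seen.add(key)  # a patient counts once per clinician
            credit[e.clinician_id] += 1
    return dict(credit)
```

Because the measure is computed from the same events the reminder produces, administrators would not need a separate chart review, and each clinician's completed work is attributed automatically. How credit should be shared when several clinicians act on the same patient is a policy choice this sketch leaves open.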

Acknowledgments

Dr. Haggstrom was supported by a VA HSR&D Career Development Award, grant #CD2-07016-1. This research was supported by the Agency for Healthcare Research and Quality (AHRQ) contract HSA2902006000131 and partially supported by the VA HSR&D Center of Excellence on Implementing Evidence-Based Practice, grant #HFP 04-148. The views expressed in this article are those of the authors and do not necessarily represent the view of the Department of Veterans Affairs.

References

- 1. Bates DW, Pappius E, Kuperman GJ, et al. Using information systems to measure and improve quality. Int J Med Inform. 1999 Feb–Mar;53(2–3):115–124. doi: 10.1016/s1386-5056(98)00152-x.

- 2. Doebbeling BN, Chou AF, Tierney WM. Priorities and strategies for the implementation of integrated informatics and communications technology to improve evidence-based practice. J Gen Intern Med. 2006 Feb;21(Suppl 2):S50–57.

- 3. Jamtvedt G, Young JM, Kristoffersen DT, O'Brien MA, Oxman AD. Audit and feedback: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2006;19(2):CD000259. doi: 10.1002/14651858.CD000259.pub2.

- 4. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006 May 16;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 5. McDonald CJ, Overhage JM, Tierney WM, et al. The Regenstrief Medical Record System: a quarter century experience. Int J Med Inform. 1999 Jun;54(3):225–253. doi: 10.1016/s1386-5056(99)00009-x.

- 6. Brown SH, Lincoln MJ, Groen PJ, Kolodner RM. VistA--U.S. Department of Veterans Affairs national-scale HIS. Int J Med Inform. 2003 Mar;69(2–3):135–156. doi: 10.1016/s1386-5056(02)00131-4.

- 7. Westbrook JI, Braithwaite J, Georgiou A, et al. Multimethod evaluation of information and communication technologies in health in the context of wicked problems and sociotechnical theory. J Am Med Inform Assoc. 2007 Nov–Dec;14(6):746–755. doi: 10.1197/jamia.M2462.

- 8. Xiao Y, Vicente KJ. A framework for epistemological analysis in empirical (laboratory and field) studies. Hum Factors. 2000 Spring;42(1):87–101. doi: 10.1518/001872000779656642.

- 9. Etheredge LM. A rapid-learning health system. Health Aff (Millwood). 2007 Mar–Apr;26(2):w107–118. doi: 10.1377/hlthaff.26.2.w107.

- 10. Clancy CM, Anderson KM, White PJ. Investing in health information infrastructure: can it help achieve health reform? Health Aff (Millwood). 2009 Mar–Apr;28(2):478–482. doi: 10.1377/hlthaff.28.2.478.

- 11. Fisher ES, Staiger DO, Bynum JP, Gottlieb DJ. Creating accountable care organizations: the extended hospital medical staff. Health Aff (Millwood). 2007 Jan–Feb;26(1):w44–57. doi: 10.1377/hlthaff.26.1.w44.