Abstract

Following up on a previous study that examined public health students’ intention to use e-resources for completing research paper assignments, the present study proposed two models to investigate whether public health students actually used the e-resources they intended to use and whether the determinants of intention to use predict actual use of e-resources. Focus groups and pre- and post-questionnaires were used to collect data. Descriptive analysis, data screening, and Structural Equation Modeling (SEM) techniques were used for data analysis. The study found that the determinants of intention to use significantly predict actual use behavior. The direct impact of perceived usefulness and the indirect impact of perceived ease of use on both behavior intention and actual behavior indicated the importance of ease of use at the early stage of technology acceptance. The non-significant intention-behavior relationship prompted reflection on how actual behavior is measured and on the multidimensional nature of the intention construct.

Introduction

To ensure effective deployment of information technology (IT) resources in an organization, IT adoption research has extensively examined the usage intention and actual behavior of individual users. Intention-based models that use behavior intention to predict usage have been widely applied. Previous studies have shown that behavior intention (BI) significantly affects actual behavior1–6. However, measures of usage intention and self-reported usage are often collected concurrently with measures of beliefs and attitude3, which may bias study results.

To develop a comprehensive understanding of technology acceptance and use, it is worth investigating the relationship between BI and actual behavior as well as their determinants. As a follow-up to a previous study that examined public health students’ intention to use electronic information resources (e-resources) for completing research papers at a U.S. Midwestern university7,8, this study proposed two research models revised from the previous study to answer two research questions: 1) does intention to use determine actual use of e-resources, and 2) can the determinants of intention to use e-resources predict actual use of e-resources?

Theoretical Background

The Baseline Model

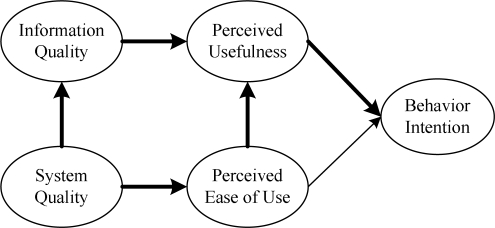

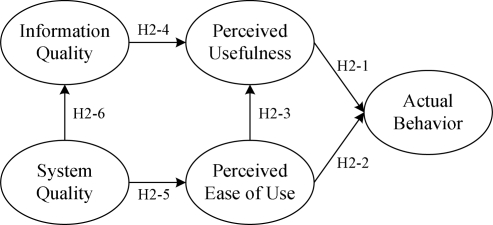

Based on the Technology Acceptance Model (TAM)9, the previous study proposed and tested an extended model examining the roles of two aspects of e-resource characteristics, namely information quality (IQ) and system quality (SQ), in predicting public health students’ intention to use e-resources for completing research papers7. It found five significant causal paths: perceived usefulness (USE) to BI, IQ to USE, SQ to perceived ease of use (EOU), EOU to USE, and SQ to IQ. The impact of EOU on BI was mediated through USE, and the impacts of both IQ and SQ on BI were mediated through USE and EOU (Figure 1; arrows in bold indicate the significant causal paths).

Figure 1.

The baseline model
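The mediated paths described above can be made concrete with the common path-analysis convention that an indirect effect equals the product of the standardized coefficients along the mediating chain. The sketch below assumes that product-of-coefficients convention; the coefficient values (`eou_to_use`, `use_to_bi`, `sq_to_eou`) are hypothetical placeholders, not estimates from the previous study.

```python
# Product-of-coefficients estimate of indirect (mediated) effects in a path model.
# The coefficient values are HYPOTHETICAL placeholders, not study estimates.

def indirect_effect(path_coefficients):
    """Multiply standardized coefficients along one mediated chain of paths."""
    effect = 1.0
    for coefficient in path_coefficients:
        effect *= coefficient
    return effect

# Hypothetical standardized path coefficients.
eou_to_use = 0.40
use_to_bi = 0.30
sq_to_eou = 0.50

# EOU -> USE -> BI: EOU reaches BI only through USE.
eou_on_bi = indirect_effect([eou_to_use, use_to_bi])

# SQ -> EOU -> USE -> BI: one of SQ's mediated routes to BI.
sq_on_bi_via_eou = indirect_effect([sq_to_eou, eou_to_use, use_to_bi])

print(f"EOU -> USE -> BI: {eou_on_bi:.2f}")
print(f"SQ -> EOU -> USE -> BI: {sq_on_bi_via_eou:.2f}")
```

A construct's total indirect effect is the sum over all of its mediating chains (for SQ in the baseline model, the routes through IQ and through EOU). Testing whether an indirect effect is significant usually requires a Sobel test or bootstrapping, which this sketch does not attempt.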

Research Models and Hypotheses

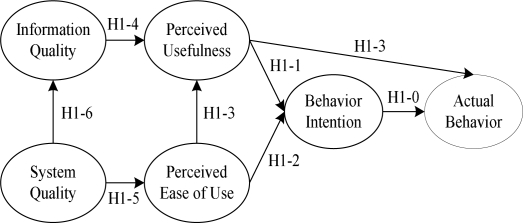

Based on the baseline model, two research models were proposed to examine whether or not BI can predict actual use behavior (AU) (Research model 1) and whether the determinants of BI can be used to predict AU (Research model 2).

Prior research has demonstrated that BI is the major determinant of AU1–6. Empirical evidence also suggests a direct effect of USE on AU2,4,10,11. Therefore, Research model 1 extended the baseline model by adding the AU construct and causal paths from BI to AU and from USE to AU (Figure 2).

Figure 2.

Research model 1

Because findings on the impact of BI on AU4,9,12 and of USE and EOU on AU10–13 have been inconsistent, Research model 2 was proposed, replacing BI in the baseline model with AU.

With the above empirical support, three new hypotheses were proposed:

Hypothesis 1-0: Intention to use an e-resource has a positive effect on actual use of the e-resource.

Hypothesis 1-3 & 2-1: Perceived usefulness has a positive effect on actual use of e-resources.

Hypothesis 2-2: Perceived ease of use has a positive effect on actual use of e-resources.

The relationships of USE-BI, EOU-BI, EOU-USE, IQ-USE, SQ-EOU, and SQ-IQ were hypothesized to follow the causal paths in the baseline model:

Hypothesis 1-1: Perceived usefulness has a positive effect on intention to use e-resources.

Hypothesis 1-2: Perceived ease of use has a positive effect on intention to use e-resources.

Hypothesis 1-3 & 2-3: Perceived ease of use has a positive effect on perceived usefulness.

Hypothesis 1-4 & 2-4: The quality of the information contained in e-resources has a positive effect on perceived usefulness.

Hypothesis 1-5 & 2-5: System quality has a positive effect on perceived ease of use.

Hypothesis 1-6 & 2-6: System quality has a positive effect on information quality.

Methods

All graduate students (N=284) enrolled in the School of Public Health during the spring 2008 semester at a U.S. Midwestern university were given two self-administered questionnaires. The pre-questionnaire, distributed from late March to early April before students began using any information resources to search for information for their paper assignments, asked about their intention to use e-resources (BI), their behavior beliefs (USE and EOU), and their perceptions of system characteristics (IQ and SQ). The post-questionnaire, distributed from late April to early May, asked about actual use of e-resources (AU) and the students’ demographic information. The face validity and content validity of both questionnaires were tested with two focus group interviews following the same procedures as the previous study7. Both questionnaires were administered through mass email (with two email reminders), campus mail, and face-to-face distribution in class. Ten students who attended the focus groups were excluded from the questionnaire distribution.

Measurements

Previously developed and validated measures using 7-point Likert scales, from 1 (strongly disagree) to 7 (strongly agree), were used to measure BI, USE, EOU, IQ, and SQ, with minor wording modifications to fit the study context7. AU was measured by the frequency and duration of e-resource use1,13.

Data Analysis

Data were analyzed using descriptive analysis, data screening, and Structural Equation Modeling (SEM) techniques in SPSS 15.0 for Windows and AMOS 7.0.

Results

Descriptive Analysis

A total of 180 students (180/284 = 63.4%) responded to the questionnaires, and 152 of them (152/180 = 84.4%) fully completed the questionnaires, including 135 master’s students and 17 PhD students. Of these, 133 students indicated that they intended to use e-resources to complete their paper assignments, and 125 actually used the e-resources they had intended to use. The other eight students (8/133 = 6.0%) instead used print books and course materials (4), course instructors (2), or reference librarians (2). The three most popular e-resources that students both intended to use and actually used were online databases, the Internet, and electronic journals.
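The percentages above are simple ratios of the reported counts. The snippet below recomputes them from those counts as a consistency check; the variable names are illustrative, and all numbers come from this section.

```python
# Recompute the response, completion, and switching percentages from the reported counts.
invited = 284          # graduate students given the questionnaires
responded = 180        # returned a questionnaire
completed = 152        # fully completed the questionnaires
masters, phd = 135, 17
intended = 133         # intended to use e-resources
actually_used = 125    # used the e-resources they intended to use

def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

response_rate = pct(responded, invited)
completion_rate = pct(completed, responded)
switched = intended - actually_used        # students who used other resources instead
switch_rate = pct(switched, intended)

print(response_rate, completion_rate, switched, switch_rate)
```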

Measurement Model Estimation

Data screening found no missing values or outliers, and all 24 measured items were normally distributed. Item reliability (squared factor loading, SFL) and construct reliability (Cronbach’s α and composite reliability ρ) were tested to assess the psychometric soundness of the data. Excluding the AU construct and its two measured items, the SFLs of the remaining 22 items ranged from 0.50 to 0.90, and Cronbach’s α and composite reliability ρ of the other five constructs ranged from 0.84 to 0.93 and from 0.72 to 0.91, respectively. All values met the recommended criteria14 (SFL ≥ 0.50, Cronbach’s α ≥ 0.70, and composite reliability ρ ≥ 0.70).
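The reliability and convergent-validity statistics used here have standard closed forms: Cronbach’s α = k/(k−1)·(1 − Σ item variances / variance of the summed scale), composite reliability ρ = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and AVE = mean of λ². The sketch below assumes standardized factor loadings λ (as a confirmatory factor analysis would report) and uses hypothetical responses and loadings; it is an illustration of the formulas, not the study’s data or the internal computation of SPSS or AMOS.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha; `responses` holds one row of item scores per respondent."""
    k = len(responses[0])
    item_columns = list(zip(*responses))                 # scores grouped by item
    sum_item_variances = sum(variance(column) for column in item_columns)
    total_scores = [sum(row) for row in responses]       # summed scale per respondent
    return k / (k - 1) * (1 - sum_item_variances / variance(total_scores))

def squared_factor_loadings(loadings):
    """Item reliability (SFL) of each item from its standardized loading."""
    return [lam ** 2 for lam in loadings]

def composite_reliability(loadings):
    """rho = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    loading_sum_sq = sum(loadings) ** 2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return loading_sum_sq / (loading_sum_sq + error_variance)

def average_variance_extracted(loadings):
    """AVE = mean squared standardized loading."""
    sfl = squared_factor_loadings(loadings)
    return sum(sfl) / len(sfl)

# HYPOTHETICAL 7-point Likert responses (rows = respondents, columns = items).
responses = [[6, 7, 6], [5, 5, 6], [7, 7, 7], [4, 5, 4], [6, 6, 5]]
# HYPOTHETICAL standardized loadings for the same three items.
loadings = [0.80, 0.85, 0.75]

alpha = cronbach_alpha(responses)
rho = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
meets_criteria = (
    all(s >= 0.50 for s in squared_factor_loadings(loadings))
    and alpha >= 0.70 and rho >= 0.70 and ave >= 0.50
)
print(f"alpha={alpha:.2f} rho={rho:.2f} AVE={ave:.2f} meets={meets_criteria}")
```

The cutoffs in `meets_criteria` are the ones stated in the text (SFL ≥ 0.50, α ≥ 0.70, ρ ≥ 0.70, AVE ≥ 0.50).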

Convergent validity was assessed with average variance extracted (AVE). The AVEs of the five constructs other than AU ranged from 0.52 to 0.73, exceeding the recommended threshold14 of 0.50. Discriminant validity is established when the average variance shared between a construct and its measured items (its AVE) is greater than the variance that construct shares with any other construct14. As Table 1 shows, the AVE of each of the five constructs other than AU (on the diagonal) was larger than the squared correlations between that construct and the other constructs.

Table 1.

Discriminant validity table (AVE on the diagonal; squared inter-construct correlations below the diagonal)

| Construct | BI | USE | EOU | IQ | SQ |
|---|---|---|---|---|---|
| BI | 0.73 | | | | |
| USE | 0.13 | 0.72 | | | |
| EOU | 0.04 | 0.22 | 0.67 | | |
| IQ | 0.08 | 0.27 | 0.11 | 0.70 | |
| SQ | 0.07 | 0.20 | 0.30 | 0.30 | 0.52 |
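The discriminant validity criterion described above (each construct’s AVE must exceed its squared correlation with every other construct) can be checked mechanically. The sketch below encodes the values from Table 1 as given; the helper names are illustrative only.

```python
# Fornell-Larcker discriminant validity check using the values in Table 1:
# diagonal entries are AVEs, off-diagonal entries are squared correlations.
constructs = ["BI", "USE", "EOU", "IQ", "SQ"]
ave = {"BI": 0.73, "USE": 0.72, "EOU": 0.67, "IQ": 0.70, "SQ": 0.52}
squared_corr = {
    ("USE", "BI"): 0.13,
    ("EOU", "BI"): 0.04, ("EOU", "USE"): 0.22,
    ("IQ", "BI"): 0.08, ("IQ", "USE"): 0.27, ("IQ", "EOU"): 0.11,
    ("SQ", "BI"): 0.07, ("SQ", "USE"): 0.20, ("SQ", "EOU"): 0.30, ("SQ", "IQ"): 0.30,
}

def shared_variance(a, b):
    """Look up a squared correlation regardless of the order of the pair."""
    return squared_corr.get((a, b), squared_corr.get((b, a)))

def fornell_larcker_ok(construct):
    """True if the construct's AVE exceeds its squared correlation with every other construct."""
    return all(
        ave[construct] > shared_variance(construct, other)
        for other in constructs
        if other != construct
    )

results = {c: fornell_larcker_ok(c) for c in constructs}
print(results)
```

SQ has the narrowest margin (AVE 0.52 against squared correlations of 0.30 with both EOU and IQ), but every construct satisfies the criterion.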

Although the AU construct failed to meet the reliability and validity criteria, it was retained because of its central importance to this study, and the mean of its two measured items was used in the subsequent structural model analysis.

Structural Model Analysis

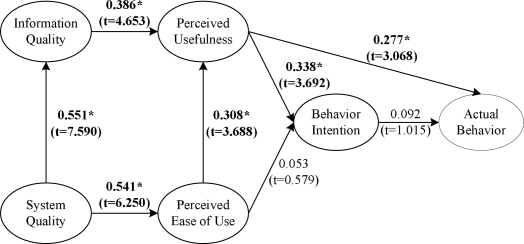

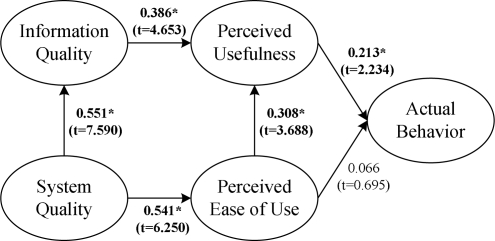

Maximum likelihood estimation was used to compute path coefficients between constructs. Figures 4 and 5 present the estimated path coefficients and associated t values for the two research models (* indicates p < 0.05). As in the baseline model, four hypothesized causal paths were significant: EOU→USE (H1-3 & H2-3), IQ→USE (H1-4 & H2-4), SQ→EOU (H1-5 & H2-5), and SQ→IQ (H1-6 & H2-6). In Research model 1, USE significantly affected BI (H1-1), whereas EOU did not (H1-2). Similarly, in Research model 2, USE significantly and directly affected AU (H2-1), whereas EOU did not (H2-2).

Figure 4.

Path coefficients in Research model 1

Figure 5.

Path coefficients in Research model 2

BI had no significant impact on AU, so H1-0 was not supported.
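The t values reported with the path coefficients are critical ratios: an estimate divided by its standard error. SEM software such as AMOS typically evaluates them against the standard normal distribution, so |t| > 1.96 corresponds to p < 0.05 (two-tailed). The sketch below assumes that large-sample normal approximation; the estimate and standard error are hypothetical values, not figures from Figures 4 or 5.

```python
import math

def critical_ratio(estimate, standard_error):
    """Critical ratio (reported as a t value): estimate divided by its standard error."""
    return estimate / standard_error

def two_tailed_p(ratio):
    """Two-tailed p-value under a standard normal reference distribution."""
    return math.erfc(abs(ratio) / math.sqrt(2))

# HYPOTHETICAL unstandardized estimate and standard error for one path.
estimate, standard_error = 0.35, 0.12

t_value = critical_ratio(estimate, standard_error)
p_value = two_tailed_p(t_value)
print(f"t = {t_value:.2f}, p = {p_value:.4f}, significant: {p_value < 0.05}")
```

`math.erfc(|z|/√2)` equals the two-tailed normal tail probability, which avoids depending on a statistics library for this one calculation.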

Overall, Research model 1 explained 13.4% of the variance in BI and 6.7% of the variance in AU, whereas Research model 2 explained 6.3% of the variance in AU.

The overall fit of the two research models was tested, and all model fit indices met the recommended criteria15 (Table 2).

Table 2.

Model fit indices report

| Model Fit Index | Criteria | Research Model 1 | Research Model 2 |
|---|---|---|---|
| χ² | p ≥ 0.05 | p = 0.374 | p = 0.366 |
| χ²/df | ≤ 3.00 | 1.072 | 1.066 |
| GFI | ≥ 0.90 | 0.987 | 0.994 |
| AGFI | ≥ 0.80 | 0.945 | 0.955 |
| NFI | ≥ 0.90 | 0.972 | 0.988 |
| CFI | ≥ 0.90 | 0.998 | 1.000 |
| RMR | ≤ 0.09 | 0.044 | 0.027 |
| RMSEA | ≤ 0.10 | 0.023 | 0.007 |
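Each row of Table 2 pairs an index with a cutoff, so the fit assessment reduces to a set of threshold comparisons. The sketch below encodes the criteria and values from Table 2 as given; the dictionary keys (`chi2_p`, `chi2_df`) are illustrative names for the χ² p-value and χ²/df rows.

```python
# Check the Table 2 fit indices against the cutoffs in its Criteria column.
criteria = {
    "chi2_p": lambda v: v >= 0.05,   # chi-square test p-value
    "chi2_df": lambda v: v <= 3.00,  # chi-square divided by degrees of freedom
    "GFI": lambda v: v >= 0.90,
    "AGFI": lambda v: v >= 0.80,
    "NFI": lambda v: v >= 0.90,
    "CFI": lambda v: v >= 0.90,
    "RMR": lambda v: v <= 0.09,
    "RMSEA": lambda v: v <= 0.10,
}

models = {
    "Research Model 1": {"chi2_p": 0.374, "chi2_df": 1.072, "GFI": 0.987, "AGFI": 0.945,
                         "NFI": 0.972, "CFI": 0.998, "RMR": 0.044, "RMSEA": 0.023},
    "Research Model 2": {"chi2_p": 0.366, "chi2_df": 1.066, "GFI": 0.994, "AGFI": 0.955,
                         "NFI": 0.988, "CFI": 1.000, "RMR": 0.027, "RMSEA": 0.007},
}

def failed_indices(values):
    """Names of the fit indices whose values miss their cutoff."""
    return [name for name, rule in criteria.items() if not rule(values[name])]

for model, values in models.items():
    print(model, "failed:", failed_indices(values) or "none")
```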

Discussion

Theoretical Implications

The study found no significant impact of behavior intention on actual use (H1-0) and a low correlation between intention and behavior (r = 0.13), which differs from prior findings1–6,16. Poor measurement of AU and the weaknesses of subjective self-reported usage may explain this inconsistency. Some scholars have suggested that self-reported usage measures are biased or relative and therefore cannot accurately represent actual usage12. In this study, students’ reports of “how often” (frequency) and “how many total hours” (duration) they actually used e-resources may reflect only their general use of e-resources in different contexts and at different times. Although previous studies reported significant causal relationships both between behavior intention and subjective self-reported usage1–6 and between intention and objective usage records (e.g., computer logs and actual counts)3,6, many studies found that self-reported usage was related more strongly to behavior beliefs and behavior intentions than computer-recorded system usage was2,4,10,12. The relationships among users’ behavior beliefs, behavior intentions, and actual usage, measured objectively at different times during the technology implementation process, are worth further investigation to improve the predictive power of TAM.

One possible cause of the discrepancy between intention and behavior is a change in intention due to new information or unforeseen obstacles to action18,19. Instability of intention over the course of user-system interaction may explain the inconsistent findings on the intention-behavior relationship in previous studies4,9,12. The causal relationships among USE, EOU, IQ, SQ, and AU in the research models mirrored those found in the baseline model, indicating that the determinants of intention to use can also predict actual use of e-resources. This finding suggests that intention itself may either fade or remain stable over time, so measuring intention and actual behavior at different times during the implementation process may yield different intention-behavior relationships. In addition, the questions “Do you intend to do X?” and “Will you do X?” may seem redundant, but they tap two different sub-concepts of intention: intention to perform a behavior and subjective estimates of actually performing it16,17. These denote different degrees of attempt to perform a behavior. Therefore, decomposing the intention construct into intention stability and strength, as well as into intention to perform a behavior and subjective estimates of actually performing it, would improve both the predictive and explanatory power of TAM.

Practical Implications

The study found that perceived usefulness had a significant impact on both intention to use and actual use of e-resources, while the effect of ease of use on intention to use and actual use was mediated by perceived usefulness. These results imply that, in a work- or study-related setting, e-resources that provide useful information will be used because such information helps students improve their professional or academic performance. Ease of use was not the students’ major concern in deciding whether to use e-resources because they already had some experience with e-resources and knew how to use them. Davis9, Chang20, and Szajna4 found that users with little or no previous experience of a system usually pay more attention to its ease of use than to its usefulness, but once they are familiar with the system, its usefulness becomes the major concern in deciding whether to continue using it. Users’ first impressions of a system’s ease of use therefore open the door to further exploration, and if the system also provides useful information, users are more likely to accept it eventually. However, if the system is not perceived as easy to use from the start, users may be “turned off” and be hard to win over later, despite improvements made to the system6. Early training during system implementation should therefore emphasize the system’s ease of use. Usefulness and ease of use jointly drive eventual technology adoption: ease of use plays a very important role in early acceptance, while usefulness is an important factor in continued acceptance. That both IQ and SQ affected BI and AU via USE and EOU indicates that IQ and SQ are two important system characteristics that can provide specific diagnostic information for system designers and adopters at any stage of a system’s implementation or use.

Study Limitations and Further Studies

The low proportions of variance explained in behavior intention (13.4%) and in actual use (6.7% and 6.3%) indicate that factors other than system utility and usability affect behavior intention and actual behavior. Interactions between technologies and users account for only some of the challenges of technology acceptance. How IT deployment strategies and other organizational factors affect users’ beliefs about technology and their subsequent use of it during the implementation process needs to be examined.

Some studies found that the effects of behavior beliefs and behavior intention on actual use change over time as users continue to use the technologies1,3,6. Given that the duration and strength of intentions are subject to change over time18,21, a longitudinal study of the impact of intention on actual behavior at different points in time would provide a comprehensive picture of how long intentions last, when they relate most strongly to actual behavior, and when they disappear. This would allow IT adopters to identify the best times to conduct interventions (e.g., training and promotion) to enhance technology adoption.

Conclusion

The present study proposed two revised models, based on the baseline model tested in a previous study, to investigate the intention-behavior relationship and the determinants of public health students’ actual use of e-resources. The findings offer thought-provoking theoretical and practical implications for researchers, system designers, and IT adopters. In particular, the direct impact of perceived usefulness and the indirect impact of perceived ease of use on both behavior intention and actual behavior indicated the importance of ease of use at the early stage of technology acceptance. The non-significant intention-behavior relationship prompted reflection on how actual behavior is measured and on the multidimensional nature of the intention construct.

Figure 3.

Research model 2

Acknowledgments

The author thanks Linda Nitta Holyoke for her editing help.

References

- 1. Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: A comparison of two theoretical models. Manage Sci. 1989;35:982–1003.

- 2. Davis FD, Bagozzi RP, Warshaw PR. Extrinsic and intrinsic motivation to use computers in the workplace. J Appl Soc Psychol. 1992;22:1111–32.

- 3. Taylor S, Todd PA. Understanding information technology usage: a test of competing models. Inform Syst Res. 1995;6:144–76.

- 4. Szajna B. Empirical evaluation of the revised Technology Acceptance Model. Manage Sci. 1996;42:85–92.

- 5. Venkatesh V, Davis FD. A theoretical extension of the Technology Acceptance Model: four longitudinal field studies. Manage Sci. 2000;46:186–204.

- 6. Venkatesh V, Speier C, Morris MG. User acceptance enablers in individual decision making about technology: toward an integrated model. Decision Sci. 2002;33:297–316.

- 7. Tao D. Understanding intention to use electronic information resources: A theoretical extension of the Technology Acceptance Model (TAM). In: Suermondt J, Evans RS, Ohno-Machado L, editors. American Medical Informatics Association Annual Symposium Proceedings; 2008. Washington DC: American Medical Informatics Association; 2008. pp. 717–21.

- 8. Tao D. Using Theory of Reasoned Action (TRA) in understanding selection and use of information resources: An information resource selection and use model [dissertation]. Columbia (MO): University of Missouri-Columbia; 2008.

- 9. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quart. 1989;13:319–40.

- 10. Adams DA, Nelson RR, Todd PA. Perceived usefulness, ease of use, and usage of information technology: A replication. MIS Quart. 1992;16:227–47.

- 11. Igbaria M, Guimaraes T, Davis GB. Testing the determinants of microcomputer usage via a Structural Equation Model. J Manage Inform Syst. 1995;11:87–114.

- 12. Straub D, Limayem M, Karahanna-Evaristo E. Measuring system usage: Implications for IS theory testing. Manage Sci. 1995;41:1328–42.

- 13. Lederer AL, Maupin DJ, Sena MP, Zhuang Y. The technology acceptance model and the World Wide Web. Decis Support Syst. 2000;29:269–82.

- 14. Fornell C, Larcker DF. Evaluating structural equation models with unobservable variables and measurement errors. J Marketing Res. 1981;18:39–50.

- 15. Meyers LS, Gamst G, Guarino AJ. Applied Multivariate Research: Design and Interpretation. Thousand Oaks, CA: Sage Publications, Inc; 2006.

- 16. Sheppard BH, Hartwick J, Warshaw PR. The Theory of Reasoned Action: A meta-analysis of past research with recommendations for modifications and future research. J Consum Res. 1988;15:325–43.

- 17. Warshaw PR, Davis FD. Disentangling behavioral intention and behavioral expectation. J Exp Soc Psychol. 1985;21:213–28.

- 18. Sheeran P, Orbell S, Trafimow D. Does the temporal stability of behavioral intentions moderate intention-behavior and past behavior-future behavior relations? Pers Soc Psychol Bull. 1999;25:724–34.

- 19. Kim H, Kwahk K. Comparing the usage behavior and the continuance intention of mobile Internet services. In: Proceedings of the 8th World Congress on the Management of eBusiness; 2007. Toronto, Canada: IEEE; 2007. pp. 15–23.

- 20. Chang IC, Li YC, Hung WF, Hwang HG. An empirical study on the impact of quality antecedents on tax payers’ acceptance of Internet tax-filing systems. Gov Inform Q. 2005;22:389–410. doi: 10.1016/j.giq.2005.05.002.

- 21. Ajzen I, Fishbein M. Understanding Attitude and Predicting Social Behavior. New Jersey: Prentice-Hall, Inc; 1980.