Abstract

In image-guided diagnosis and treatment of small peripheral lung lesions, the alignment of the pre-procedural lung CT images and the intra-procedural images is an important step to accurately guide and monitor the interventional procedure. Registering the serial images often relies on correct segmentation of the images and, conversely, the segmentation results can be further improved by temporal alignment of the serial images. This paper presents a joint serial image registration and segmentation algorithm. In this algorithm, serial images are segmented based on the current deformations, and the deformations among the serial images are iteratively refined based on the updated segmentation results. No temporal smoothness of the deformation fields is enforced, so the algorithm can tolerate the larger or discontinuous temporal changes that often appear during image-guided therapy. Physical procedure models could also be incorporated into our framework to better handle the temporal changes of the serial images during intervention. In experiments, we apply the proposed algorithm to align serial lung CT images. Results using both simulated and clinical images show that the new algorithm is more robust than a method that uses deformable registration alone.

1 Introduction

Lung cancer is the most common cause of cancer death in the world, and peripheral lung cancer constitutes more than half of all lung cancer cases. Decades of research in early detection and treatment methods have made little progress in improving the long-term survival of lung cancer patients [1]. It is generally believed that the key to improving long-term survival is early detection and intervention with novel therapies. The traditional approach of screening for lung cancer with Computed Tomography (CT) alone now appears to result in high false positive rates. On initial screening of high-risk populations, most studies report false positive rates between 10% and 20% [2]. The Mayo Clinic experience showed that when high-risk individuals are screened for lung cancer with CT, the likelihood that they undergo a thoracic resection for lung cancer increases tenfold [3]. These observations suggest that a better diagnostic and therapeutic strategy is needed to identify early-stage lung cancers and treat them accordingly, in order to make CT screening cost-effective [4] and to eliminate unnecessary surgeries. Molecular imaging-guided diagnosis can fill this gap through high-sensitivity detection that avoids biannual screening, and the image-guided platform offers the possibility of treating immediately after a confirmed diagnosis.

To tackle the common difficulties in identifying small lung lesions (< 1.5cm) at an early stage, an image-guided therapy approach is most promising. This approach relies on pre-procedural CT images and real-time electromagnetic (EM) tracking to guide a fiber-optic microendoscope to the small lesion of interest. With the injection of a fluorescent molecular imaging agent prior to the procedure, a high-sensitivity molecular imaging diagnosis can be performed intraoperatively. It also offers the possibility of treating the small lung lesion at the same time, eliminating unnecessary surgeries and the procedure-related mortality. This type of workflow integration has substantial financial implications: it can lower healthcare cost and allow peripheral lung cancer to be detected and treated earlier. Segmentation of the suspected lesion and vascular structures in pre-procedural high-resolution lung CT images can be used to accurately guide physicians during intraoperative procedures when used in conjunction with an EM-tracked real-time guidance system. Compared to the non-real-time guided interventional lung procedure, fewer punctures and a lower radiation dose are expected, because iterative CT imaging to confirm the needle location is no longer needed.

The image-guided system plays an important role in this procedure by accurately and efficiently guiding the interventional device to the target of interest. To reduce the intra-procedural time, a pre-procedural lung CT image is often captured days before the procedure, so that the lung, the suspected lesion, and the vascular structures can be segmented for surgical planning. During the interventional procedure, the image and its segmentation information can be quickly warped onto the CT scan acquired right before the intervention to highlight the target and surgical path. Then, with the help of the electromagnetic tracking and the visualization system, physicians can efficiently and accurately perform the microendoscopic optical imaging, or radio frequency ablation should the lesion be confirmed by the molecular imaging results. Thus, the alignment of the pre-procedural lung CT images as well as the intra-procedural serial images is an important step to accurately guide and monitor the interventional procedure in the diagnosis and treatment of these small lung lesions.

Traditional pairwise [5, 6, 7] and group-wise [8, 9] image registration algorithms have been used in these applications. However, the pairwise algorithms warp each image separately, and they often yield unstable measures across the serial images because no temporal information is used in the registration procedure. Groupwise image registration methods process multiple images simultaneously, but they treat the images as a group rather than a time series, so the temporal information is not used efficiently. For serial image registration, the relationship between temporally neighboring images is much more important than that between images separated by larger time intervals, since both the anatomical structure and the tissue properties of neighboring images tend to be more similar; moreover, these temporal changes can be characterized using specific physical process models.

Recently, several 4-D image processing algorithms as well as joint segmentation and registration algorithms have been proposed in the literature [10, 11, 12, 13, 14, 15, 16, 17, 18]. Serial image processing aims at calculating the image series in the 4-D space wherein temporal deformations among images are estimated simultaneously [10, 11, 12], while segmentation and registration can be combined together since image registration significantly benefits from segmentation and vice versa [13, 14, 15, 16, 17, 18]. Accurate registration of serial images often relies on correct extraction and matching of robust and distinctive image features. Thus it is an important step to segment the image into different tissue regions, which act as additional features in the image registration to further improve the temporal alignment of the serial images. Moreover the deformations across serial images also provide more robust image segmentation.

This paper presents a joint serial image registration and segmentation algorithm, wherein serial images are segmented based on the current temporal deformations so that the temporally corresponding tissues tend to be segmented into the same tissue type, and at the same time, temporal deformations among the serial images are iteratively refined based on the updated segmentation results. Notice that the simultaneous registration and segmentation framework has been studied in [19, 12, 20] for MR images. The previous work, CLASSIC [20], relies on a temporal smoothness constraint, and it is particularly suitable for longitudinal analysis of MR brain images of normal healthy subjects, where the changes from one time-point to another are small. In addition, in that algorithm the registration depends purely on the segmented images. In the new algorithm, no temporal smoothness of the deformation fields is enforced, so that our algorithm can tolerate the larger or discontinuous temporal changes that often appear during image-guided therapy. Moreover, physical procedure models could also be incorporated into our algorithm to better handle the temporal changes of the serial images during intervention.

We first demonstrate that, based on Bayes' rule, the registration of the current time-point image is related not only to the baseline image, but also to its temporally neighboring images. Based on this principle, a new serial image similarity measure is defined, and the deformation is modeled using the traditional Free Form Deformation (FFD) [6]. Based on the current estimate of temporal deformations, a 4-D clustering algorithm is applied for segmenting the serial images. No adaptive centroids are used because of the relatively small effect of inhomogeneity in CT. The proposed joint algorithm then iteratively refines the serial deformations and segments the images until convergence. The advantage of the proposed method is that it is particularly suitable for registering serial images in image-guided therapy applications with possibly large and discontinuous temporal deformations.

We have applied the proposed algorithm to both simulated and real serial lung CT images and compared it with the FFD. The dataset used in the experiments consists of anonymized serial lung CT images of lung cancer patients (N = 20). The spatial resolutions of the images from the same patient are different due to different settings and imaging protocols. For the serial images of the same patient, all the subsequent images have been globally aligned and re-sampled onto the space of the first image using the ITK package [21]. The results show that the proposed algorithm yields more robust registration results and more stable longitudinal measures.

2 Method

2.1 Software Workflow for Image-Guided Intervention

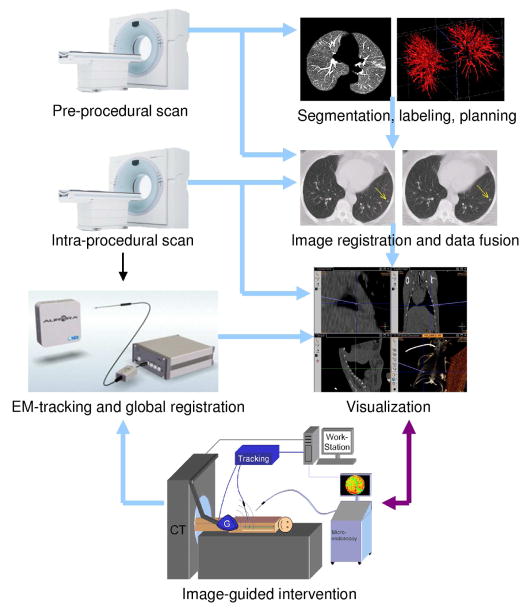

Fig. 1 illustrates the software workflow of the image-guided interventional system. First, a pre-procedural lung CT image is captured before the intervention. Then, the lung image, vascular structures, and suspected lesions can be segmented for surgical planning. In the interventional procedure, the intra-procedural image is first obtained, and the image registration and data fusion step is then performed in order to transfer all the diagnosis and surgical planning data onto the current image accurately and efficiently. During the interventional procedure, the coordinates of the electromagnetic (EM) tracking system and the CT images are registered or calibrated before visualization. Using this system, physicians can efficiently perform the intervention by referring to the feedback from the visualization system, which shows the real-time location of the interventional device in the 3D CT images. This paper focuses on the segmentation and registration algorithms used in the image-guided interventional system.

Figure 1.

The interventional system.

2.2 Algorithm Framework

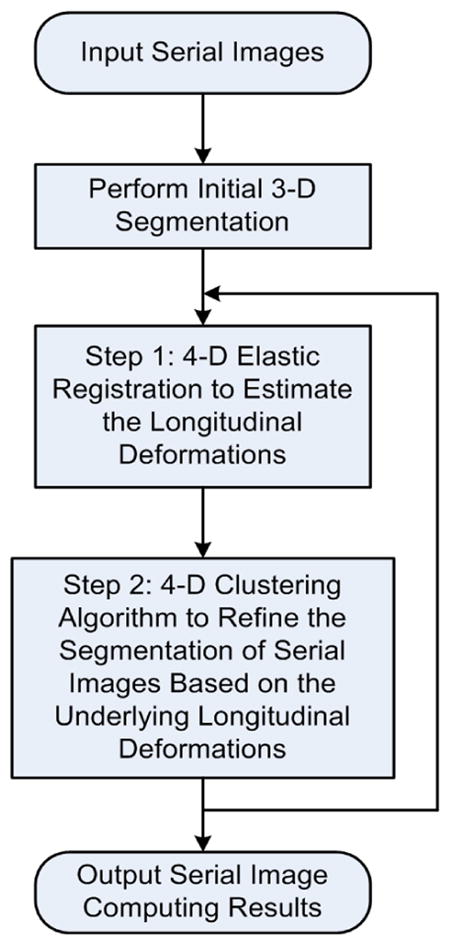

The algorithm incorporates an iterative serial image segmentation and registration strategy in order to improve the longitudinal stability for 3-D image series. It iteratively performs two steps [18]:

Step 1: Given the current segmentation results, the algorithm refines the underlying longitudinal deformations among the serial images using the 4-D elastic image warping algorithm. In Section 2.3, the new serial registration algorithm is proposed.

Step 2: Given a current estimate of the longitudinal deformations necessary to align serial 3-D images, the algorithm jointly segments the image series using the 4-D clustering algorithm. This algorithm was proposed in [18] and is briefly introduced in Section 2.4.

Fig. 2 illustrates the flowchart of this iterative algorithm. After a series of images is input, the initial 3-D segmentation of the serial images is performed using AdpkMean [22]. These segmented images are then used as the input for the 4-D elastic deformable registration, and after the longitudinal deformations among the serial images have been estimated, the 4-D clustering algorithm for serial images refines the segmentation. These 4-D registration and segmentation procedures are performed iteratively, and the algorithm stops when the difference between two consecutive iterations is smaller than a prescribed threshold.

Figure 2.

The framework of the algorithm.

In the registration algorithm, no temporal smoothness of the deformation fields is enforced, so that our algorithm can tolerate the larger or discontinuous temporal changes that often appear during image-guided therapy. Moreover, physical procedure models could also be incorporated into our algorithm to better handle the temporal changes of the serial images during intervention.
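The alternation between Step 1 and Step 2 can be sketched as a short driver loop. The sketch below is illustrative only: `register` and `segment` are hypothetical callables standing in for the 4-D registration and 4-D clustering steps, and the stopping rule (mean absolute change of the fuzzy memberships between iterations) is an assumption, since the text only states that the difference between two iterations is compared to a threshold.

```python
import numpy as np

def joint_register_segment(images, register, segment, tol=1e-3, max_iter=10):
    """Alternate registration and segmentation until the update is small.

    register(images, memberships, deformations) -> deformations   (hypothetical)
    segment(images, deformations) -> memberships                   (hypothetical)
    """
    # Initial segmentation without any deformation estimate.
    memberships = segment(images, None)
    deformations = None
    for iteration in range(1, max_iter + 1):
        # Step 1: refine deformations given the current segmentation.
        deformations = register(images, memberships, deformations)
        # Step 2: re-segment given the refined deformations.
        new_memberships = segment(images, deformations)
        # Assumed convergence measure: mean absolute membership change.
        change = float(np.mean(np.abs(new_memberships - memberships)))
        memberships = new_memberships
        if change < tol:
            break
    return deformations, memberships, iteration
```

Passing the two steps in as callables keeps the loop independent of how registration and clustering are actually implemented.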

2.3 The Serial Image Registration Algorithm

Given a series of images It, t = 0,..., T (I0 is usually called the baseline, and all the subsequent images have been globally aligned onto the space of the baseline by applying the rigid registration in Insight Toolkit (ITK)), we often need to estimate the deformations from the baseline onto each image, i.e., f0 → t or simply denoted as ft. Since no longitudinal information is used in pairwise registration, the temporal stability of the resultant serial deformations cannot be preserved. Groupwise registration jointly registers all the images with the baseline, however no temporal information has been considered effectively.

In this work, we formulate the serial image registration in a Bayesian framework, so that the registration of the current time-point image is related not only to the previous image but also to the following image (if available). No longitudinal smoothness constraints are applied to the serial deformations, so that our algorithm can tolerate temporal anatomical and tissue property changes. For the current image It, if the deformation of its previous image It−1, ft−1, and that of the next image It+1, ft+1, are known, the posterior probabilities involving the current deformation ft can be defined as p(ft|I0, It−1, It, ft−1) and p(ft+1|I0, It, It+1, ft), respectively. By jointly considering both the previous and the next images, we calculate the deformation of the current image, ft, by maximizing the combined posterior probabilities,

| (1) |

Using the chain rule of probability, we can rewrite Eq. (1) as,

| (2) |

where λ(t) collects the other probability terms, which are not related to ft. p(ft|ft−1) and p(ft+1|ft) are the conditional probabilities of the serial deformation fields. Since no temporal smoothness of the deformation fields is enforced in this work, so that our algorithm can tolerate the larger or discontinuous temporal changes that often appear during image-guided therapy, these two conditional probabilities are assumed to be constants. Therefore, ft can be calculated by minimizing,

| (3) |

where Es,t() is the serial image difference/similarity measure, and Er() is the regularization term of the deformation field. λr is the weight of Er. The serial image difference measure can be defined as,

| (4) |

where e[] is the operator for calculating a feature vector, and Ω is the image domain. In this work, the feature vector for each voxel consists of the intensity, gradient magnitude, and the fuzzy membership functions calculated from the segmentation step, i.e., e[v] = [I(v), |∇I(v)|, μv,1, μv,2,..., μv,K]T. When a physical process model is available, it can be incorporated into the second term of Eq. (4) or into the conditional probabilities of deformations (such as p(ft|ft−1) and p(ft+1|ft)), so that any feature changes from one image to another can be considered. Further, the deformations of neighboring images need not be treated as independent if a temporal model of deformations is embedded according to the physical model. However, that is outside the scope of this study. Er in Eq. (3) is the regularization energy of the deformation field ft, and it can be derived from the prior distribution of the deformation. If no prior distribution is available, the regularization term can consist of continuity and smoothness constraints. In this work, since the cubic B-Spline is used to model the deformation field, continuity and smoothness are guaranteed, and thus the regularization term Er is omitted.
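To make the feature vector e[v] concrete, the following NumPy sketch stacks intensity, gradient magnitude, and K fuzzy memberships per voxel. The function names are hypothetical, and the sum of squared feature differences used in `feature_ssd` is an assumed stand-in for the difference measure, not necessarily the exact form of Eq. (4). In practice the components would likely need to be normalized to comparable ranges, which is not shown here.

```python
import numpy as np

def feature_vectors(image, memberships):
    """Stack intensity, gradient magnitude and K fuzzy memberships per voxel.

    image: array of shape (X, Y, Z).
    memberships: array of shape (X, Y, Z, K).
    Returns an array of shape (X, Y, Z, K + 2).
    """
    image = image.astype(float)
    grads = np.gradient(image)                      # one derivative array per axis
    grad_mag = np.sqrt(sum(g ** 2 for g in grads))  # |grad I| at every voxel
    return np.concatenate(
        [image[..., None], grad_mag[..., None], memberships], axis=-1
    )

def feature_ssd(feat_a, feat_b):
    """Assumed difference measure: squared feature differences summed over the domain."""
    return float(np.sum((feat_a - feat_b) ** 2))
```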

The serial image registration algorithm then iteratively calculates/refines the deformation field ft of each time-point image by minimizing the energy function in Eq. (3) until convergence. Notice that in the first iteration, since the registration results for neighboring images are not available, only the first term of Eq. (4) is used, which is essentially a pairwise FFD.
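As a reminder of why a cubic B-spline FFD yields a smooth deformation without an explicit regularizer, the sketch below evaluates the four standard cubic B-spline basis functions and a 1-D displacement from a grid of control points. It is a simplified one-dimensional illustration with hypothetical function names and an assumed storage layout (one extra control point before the origin), not the 3-D implementation used in the experiments.

```python
import numpy as np

def bspline_basis(u):
    """The four cubic B-spline basis values at fractional offset u in [0, 1)."""
    return np.array([
        (1 - u) ** 3 / 6.0,
        (3 * u ** 3 - 6 * u ** 2 + 4) / 6.0,
        (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6.0,
        u ** 3 / 6.0,
    ])

def ffd_displacement_1d(x, control, spacing):
    """Displacement at position x from a 1-D grid of control-point displacements.

    control[j] holds the displacement of the control point at (j - 1) * spacing,
    so the four points influencing x are control[i], ..., control[i + 3]
    with i = floor(x / spacing).
    """
    i = int(np.floor(x / spacing))
    u = x / spacing - i
    return float(bspline_basis(u) @ control[i:i + 4])
```

Because the basis functions sum to one, a constant set of control-point displacements reproduces a constant translation, and each control point only influences a local neighborhood.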

2.4 The 4-D Clustering Algorithm for Image Segmentation

Given the serial images It, t = 0, 1,..., T and the longitudinal deformations ft, t = 1,..., T, the purpose of the 4-D segmentation is to calculate the segmented images by considering not only the spatial but also the temporal neighborhoods. The clustering algorithm is performed to classify each voxel of the serial image into different tissue types by minimizing the objective function,

| (5) |

where voxel v in image I0 corresponds to voxel ft(v) in image It, referred to as voxel (t, v), and μ, c, q, K follow the FCM formulation [23].

| (6) |

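where the two neighborhood sets are the spatial and temporal neighborhoods of voxel (t, v), and Mk = {m = 1,..., K; m ≠ k}. The fuzzy membership functions μ are subject to the usual FCM constraint that the memberships of each voxel sum to one over the K tissue types, for all t and v.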

The second term of Eq. (5) reflects the spatial constraints of the fuzzy membership functions, which is analogous to the FANTASM algorithm [24]. The difference is that an additional weight is used as an image-adaptive weighting coefficient, thus stronger smoothness constraints are applied in the image regions that have more uniform intensities, and vice versa. is defined as, , where (Dr * It)(t,v) refers to first calculating the spatial convolution (D r * It), and then taking its value at location (t, v), where Dr is a spatial differential operator along axis r. Similarly, the third term of Eq. (5) reflects the temporal consistency constraints, and is calculated as, , where Dt is the temporal differential operator, and (Dt * I(t,v))t refers to first calculating the temporal convolution (Dt * I(t, v)) and then taking its value at t. It is worth noting that the temporal smoothness constraint herein does not mean that the serial deformations have to be smooth across different time-points.

Using Lagrange multipliers to enforce the constraint of fuzzy membership function in the objective function Eq. (5), we get the following two equations to iteratively update the fuzzy membership functions and calculate the clustering centroids,

| (7) |

| (8) |
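For orientation, the sketch below shows one update of the standard fuzzy c-means (FCM) algorithm [23], on which the 4-D clustering builds. It deliberately omits the spatial and temporal neighborhood terms of Eq. (5), so it is not a reproduction of Eqs. (7) and (8); the function name and the small constant `eps` are assumptions made for this example.

```python
import numpy as np

def fcm_update(intensities, centroids, q=2.0, eps=1e-12):
    """One standard fuzzy c-means update (no spatial or temporal terms).

    intensities: 1-D array of N voxel intensities.
    centroids: 1-D array of K cluster centroids.
    Returns (memberships of shape (N, K), updated centroids of shape (K,)).
    """
    # Squared distance of every voxel to every centroid; eps avoids division by zero.
    dist2 = (intensities[:, None] - centroids[None, :]) ** 2 + eps
    inv = dist2 ** (-1.0 / (q - 1.0))
    memberships = inv / inv.sum(axis=1, keepdims=True)  # each row sums to one
    weights = memberships ** q
    new_centroids = (weights * intensities[:, None]).sum(axis=0) / weights.sum(axis=0)
    return memberships, new_centroids
```

Iterating this update until the centroids stop moving gives plain FCM; the 4-D variant described above adds neighborhood-dependent terms to the membership update.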

In summary, the proposed joint registration and segmentation algorithm iteratively performs the serial registration and the segmentation algorithms until the difference between two iterations is smaller than a prescribed value. No temporal smoothness of the deformation fields is enforced, in order to tolerate the larger or discontinuous temporal changes that often appear during image-guided diagnosis and treatment. Another advantage of this method is that physical procedure models could also be incorporated into our framework to better handle the temporal changes of the serial images during intervention. However, a relatively simple clustering segmentation algorithm is used in the iterative procedure, in which no anatomical information, such as the airways and blood vessels, is available. A more sophisticated segmentation might be used to better represent the lung information for more robust registration.

3 Experimental Results

The dataset used in the experiments consists of anonymized serial lung CT images of lung cancer patients (N = 20). The spatial resolutions of the images from the same patient differ because of different settings and imaging protocols. The typical voxel spacings in our data are 0.81mm×0.81mm×5mm, 0.98mm×0.98mm×5mm, and 0.7mm×0.7mm×1.25mm. For the serial images of the same patient, the time interval between scans in our data set is between two and three months, and all the subsequent images have been globally aligned and re-sampled onto the space of the first image using the ITK package.

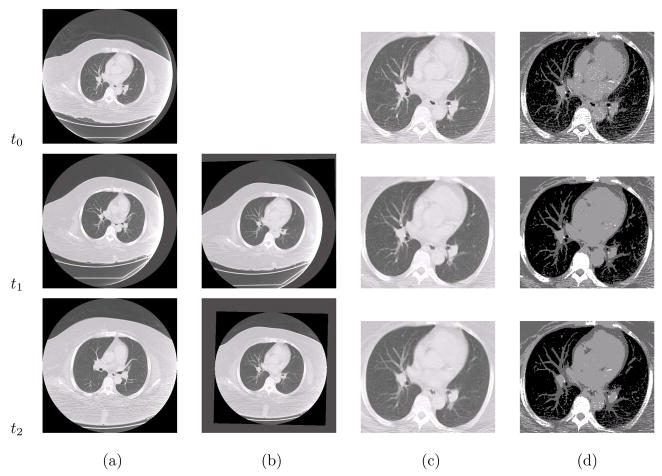

Fig. 3 gives an example of the segmentation results of our dataset. It can be seen that after global registration of the original images in Fig. 3(a), the lung regions are roughly aligned in Fig. 3(b). By performing the joint registration and segmentation for such serial images, the lung region can be precisely aligned as shown in Fig. 3(c) and (d). The parameters used in the experiments are as follows: K = 3, α = 200, β = 200, σs = 2, σt = 1, and the spatial and temporal neighboring voxels are used. All algorithms have been implemented in standard GNU C on an Intel Core 2 Duo CPU 2.2GHz with 2GB RAM. Based on the number of CPU cores, the program can divide the images to be processed into 2, 4 or 8 overlapping subimages, and perform registration of each subimage in parallel. The communication and updating of the data within the overlapping regions among the subimages are achieved by using the shared memory of the Linux system. On a dual-core CPU, the processing time for a series of 3 images with size 512 × 512 × 55 is about 2.5 minutes. The algorithm typically stops within 2 or 3 iterations.
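The division into overlapping subimages can be illustrated with simple index arithmetic. The Python sketch below only computes the slice ranges; the choice of axis, the overlap width, and the function name are assumptions for illustration, and the shared-memory communication of the actual C implementation is not shown.

```python
def split_with_overlap(n_slices, n_parts, overlap):
    """Return (start, stop) ranges that cover [0, n_slices) with overlapping borders."""
    base, extra = divmod(n_slices, n_parts)
    ranges, start = [], 0
    for p in range(n_parts):
        # Distribute the remainder over the first `extra` parts.
        stop = start + base + (1 if p < extra else 0)
        # Extend each part by `overlap` slices on both sides, clipped to the volume.
        ranges.append((max(0, start - overlap), min(n_slices, stop + overlap)))
        start = stop
    return ranges
```

For a 55-slice volume split into two parts with a 4-slice overlap, the two ranges share the slices around the cut, which is where data would have to be exchanged between the parallel workers.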

Figure 3.

Illustration of the joint registration and segmentation algorithm. (a) Input serial images, (b) the globally registered images, (c) the results of the proposed algorithm, and (d) the corresponding segmented images.

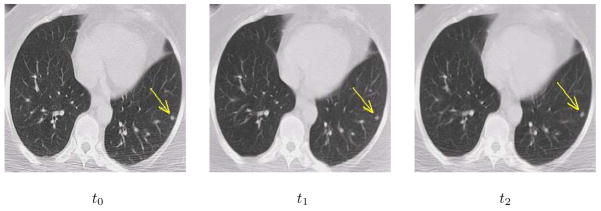

Fig. 4 illustrates the registration results of serial images with a small lesion. It can be seen that the lesion appears in the same slice and at the same location in the registered serial images. Using this technique, we can easily calculate the longitudinal changes of the lesion for diagnosis and assessment, and also precisely guide the interventional devices toward the lesion during image-guided therapy.

Figure 4.

Registration results of serial images with lesion. The arrows point to the lesion.

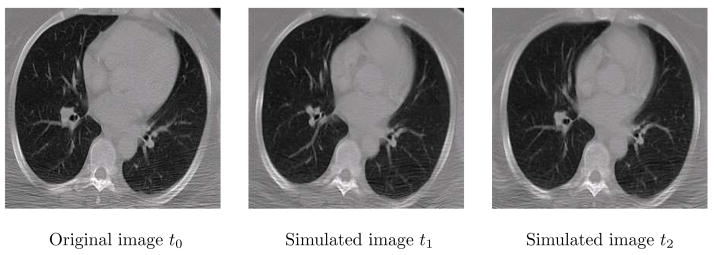

We evaluated the performance of the proposed algorithm using simulated images. The images are simulated by randomly sampling a statistical model trained on sample deformation fields. First, for each series of the sample images, the demons algorithm was used to register the subsequent images onto the first time-point image. Then, one sample image was selected as the template image, and the first time-point images of all the other subjects were deformed onto the template space using the same deformable registration algorithm. Finally, the statistical model of the spatially warped/normalized temporal deformations was calculated and then used to randomly generate longitudinal deformations of the template image. In this paper, the statistical model of deformation (SMD) presented in [25] was used to train such a model. In our image dataset, one image was selected as the template and the other 19 images were selected as the training samples. Fig. 5 shows an example of the simulated serial images from the template image.

Figure 5.

Example of the simulated serial images.

After applying the algorithm to the ten randomly simulated images, the registration errors with respect to the ground truth were calculated to assess the accuracy of the registration. The results show that the mean registration error is 0.9mm for images with resolution 0.7mm×0.7mm×1.5mm and 4.1mm for images with resolution 0.81mm×0.81mm×5mm. In general, the registration errors are smaller than the largest voxel spacing of the simulated images.
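One common way to compute such an error against a simulated ground truth is the mean Euclidean distance between the estimated and true displacement fields, converted to millimetres with the voxel spacing. The sketch below assumes this definition, which the text does not spell out; the function name and array layout are hypothetical.

```python
import numpy as np

def mean_registration_error(estimated, truth, spacing):
    """Mean Euclidean distance in mm between two displacement fields.

    estimated, truth: arrays of shape (X, Y, Z, 3), displacements in voxel units.
    spacing: voxel size in mm along each of the three axes.
    """
    diff_mm = (estimated - truth) * np.asarray(spacing, dtype=float)
    return float(np.mean(np.linalg.norm(diff_mm, axis=-1)))
```

With anisotropic voxels, a one-voxel error along the slice direction weighs far more than one in-plane, which is consistent with the larger errors reported for the 5mm slice spacing.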

In order to illustrate the advantages of joint registration and segmentation, we also calculated the temporal stability of the segmentation results. For each image series, we calculate the total number of tissue type changes along the serial deformation fields and normalize this number by the total number of voxels. For the 20 series of images we tested, the proposed algorithm yields tissue type changes across the temporal domain in about 25% of voxels, while the FFD yields tissue type changes in about 32% of voxels across the image series. The results indicate that the joint registration and segmentation generates relatively stable measures for serial images.
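The temporal stability measure can be sketched as follows. This is one possible reading of the description, assuming the label maps of all time points have already been mapped into the baseline space through the estimated deformations, and counting a voxel once if its tissue label changes between any two consecutive time points. The function name is hypothetical.

```python
import numpy as np

def tissue_change_fraction(labels):
    """Fraction of voxels whose tissue label changes across time.

    labels: integer array of shape (T, X, Y, Z), with all time points
    already mapped into the baseline space (an assumption of this sketch).
    """
    # True where the label differs between any two consecutive time points.
    changed = np.any(labels[1:] != labels[:-1], axis=0)
    return float(changed.mean())
```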

4 Conclusion

Integrated image-guided lung cancer diagnosis and therapy provides a paradigm shift in the workflow of early-stage lung cancer care. Image-guided molecular imaging has the potential to increase the diagnostic specificity and sensitivity in detecting small peripheral lung cancer in real time. The confirmed lesion can be treated immediately under image guidance. This change in the workflow could greatly reduce healthcare cost and provide a window of opportunity to detect and treat small peripheral lung cancer earlier. In our image-guided diagnostic and therapeutic system for small peripheral lung lesions, the alignment of the pre-procedural lung CT images as well as the intra-procedural serial images is an important step to accurately guide and monitor the interventional procedure. We proposed a joint registration and segmentation algorithm for serial image processing in lung cancer molecular image-guided therapy. In this algorithm, the serial image registration and segmentation procedures are performed iteratively to improve the robustness and temporal stability of serial image processing. A new serial image registration algorithm has been proposed based on a Bayesian framework, in which the registration of the current time-point image is related not only to the baseline image, but also to its temporally neighboring images. Based on this principle, a new serial image similarity measure is defined, and the deformation is modeled using the traditional Free Form Deformation (FFD). Experimental results using both simulated and real lung CT images show the accuracy and robustness of the algorithm compared to the free form deformation. Future work includes the incorporation of physical procedure models into our algorithm to better handle the temporal changes of the serial images during intervention, and the integration into the real-time EM-guided fiber-optic microendoscope system.

Acknowledgments

The research of STCW is partially supported by a grant NIH NLM G08LM008937.

References

- 1. McWilliams A, MacAulay C, Gazdar A, Lam S. Innovative molecular and imaging approaches for the detection of lung cancer and its precursor lesions. Oncogene. 2002;21:6949–6959. doi: 10.1038/sj.onc.1205831.

- 2. Wardwell N, Massion P. Novel strategies for the early detection and prevention of lung cancer. Semin Oncol. 2005;32(3):259–268. doi: 10.1053/j.seminoncol.2005.02.009.

- 3. Bach P, Jett J, Pastorino U, Tockman M, Swensen S, Begg C. CT screening and lung cancer outcomes. JAMA. 2007;297(9):953–961. doi: 10.1001/jama.297.9.953.

- 4. Black C, de Verteuil R, Walker S, Ayres J, Boland A, Bagust A, Waugh N. Population screening for lung cancer using CT, is there evidence of clinical effectiveness? A systematic review of the literature. Thorax. 2007;62(2):131–138. doi: 10.1136/thx.2006.064659.

- 5. Duncan J, Ayache N. Medical image analysis: Progress over two decades and the challenges ahead. IEEE Trans on Pattern Analysis and Machine Intelligence. 2000;22(1):85–106.

- 6. Rueckert D, Sonoda L, Hayes C, Hill C, Leach M, Hawkes DJ. Nonrigid registration using free-form deformations: application to breast MR images. IEEE Trans on Medical Imaging. 1999;18(8):712–721. doi: 10.1109/42.796284.

- 7. Johnson H, Christensen G. Consistent landmark and intensity-based image registration. IEEE Trans on Medical Imaging. 2002;21(5):450–461. doi: 10.1109/TMI.2002.1009381.

- 8. Bhatia K, Hajnal J, Puri B, Edwards A, Rueckert D. Consistent groupwise non-rigid registration for atlas construction. IEEE Symposium on Biomedical Imaging (ISBI). 2004:908–911.

- 9. Marsland S, Twining C, Taylor C. A minimum description length objective function for group-wise non-rigid image registration. Image and Vision Computing. 2008;26:333–346.

- 10. Shen D, Davatzikos C. Measuring temporal morphological changes robustly in brain MR images via 4-D template warping. NeuroImage. 2004;21(4):1508–1517. doi: 10.1016/j.neuroimage.2003.12.015.

- 11. Wang F, Vemuri B. Simultaneous registration and segmentation of anatomical structures from brain MRI. MICCAI. Palm Springs, CA: Springer; 2005.

- 12. Chen X, Brady M, Lo J, Moore N. Simultaneous segmentation and registration of contrast-enhanced breast MRI. IPMI. Glenwood Springs, CO: Springer; 2005.

- 13. Ayvaci A, Freedman D. Joint segmentation-registration of organs using geometric models. Conf Proc IEEE Eng Med Biol Soc. 2007;2007:5251–5254. doi: 10.1109/IEMBS.2007.4353526.

- 14. Droske M, Rumpf M. Multiscale joint segmentation and registration of image morphology. IEEE Trans Pattern Anal Mach Intell. 2007;29(12):2181–2194. doi: 10.1109/TPAMI.2007.1120.

- 15. Pohl KM, Fisher J, Grimson WE, Kikinis R, Wells WM. A Bayesian model for joint segmentation and registration. NeuroImage. 2006;31(1):228–239. doi: 10.1016/j.neuroimage.2005.11.044.

- 16. Wang F, Vemuri BC, Eisenschenk SJ. Joint registration and segmentation of neuroanatomic structures from brain MRI. Acad Radiol. 2006;13(9):1104–1111. doi: 10.1016/j.acra.2006.05.017.

- 17. Wyatt PP, Noble JA. MAP MRF joint segmentation and registration of medical images. Med Image Anal. 2003;7(4):539–552. doi: 10.1016/s1361-8415(03)00067-7.

- 18. Xue Z, Shen D, Davatzikos C. CLASSIC: consistent longitudinal alignment and segmentation for serial image computing. NeuroImage. 2006;30(2):388–399. doi: 10.1016/j.neuroimage.2005.09.054.

- 19. Wang F, Vemuri B. Simultaneous registration and segmentation of anatomical structures from brain MRI. MICCAI. Palm Springs, CA: Springer; 2005.

- 20. Xue Z, Shen D, Davatzikos C. CLASSIC: consistent longitudinal alignment and segmentation for serial image computing. NeuroImage. 2006;30(2):388–399. doi: 10.1016/j.neuroimage.2005.09.054.

- 21. Kitware. ITK: Insight Toolkit. http://www.itk.org/

- 22. Yan M, Karp J. An adaptive Bayesian approach to three-dimensional MR image segmentation. Conference on Information Processing in Medical Imaging. 1995.

- 23. Bezdek J, Hall L, Clarke L. Review of MR image segmentation techniques using pattern recognition. Medical Physics. 1993;20(4):1033–1048. doi: 10.1118/1.597000.

- 24. Pham P, Prince J. FANTASM: Fuzzy and noise tolerant adaptive segmentation method. http://iacl.ece.jhu.edu/projects/fantasm/

- 25. Xue Z, Shen D, Karacali B, Stern J, Rottenberg D, Davatzikos C. Simulating deformations of MR brain images for validation of atlas-based segmentation and registration algorithms. NeuroImage. 2006;33(3):855–866. doi: 10.1016/j.neuroimage.2006.08.007.