Abstract

The effect of feedback and materials on perceptual learning was examined in normal hearing listeners exposed to cochlear implant simulations. Generalization was most robust when feedback paired the spectrally degraded sentences with their written transcriptions, promoting mapping between the degraded signal and its acoustic-phonetic representation. Transfer appropriate processing theory suggests that such feedback was most successful because the original learning conditions were reinstated at testing: performance was facilitated when both training and testing contained degraded stimuli. In addition, the effect of semantic context on generalization was assessed by training listeners on meaningful or anomalous sentences. Training with anomalous sentences was as effective as with meaningful sentences, suggesting that listeners were encouraged to use acoustic-phonetic information to identify speech rather than to make predictions from semantic context.

Keywords: acoustic simulations, cochlear implants, deafness, perceptual learning

Cochlear implants (CI) have emerged as a successful treatment for many profoundly deaf adults and children who derive little or no benefit from hearing aids (e.g., Eddington, Dobelle, Brackmann, Mladejovsky, & Parkin, 1977; House, Berliner, & Eisenberg, 1981; Michelson, 1971). However, the amount of success CI users have with their devices depends on numerous variables such as onset, duration, degree, and etiology of deafness, electrode placement, and psychophysical detection and discrimination skills (Blamey, Pyman, Gordon, Clark, Brown, Dowell & Howell, 1992; Donaldson & Nelson, 2000). Moreover, a large portion of the variability in performance and outcome benefit between CI users remains unexplained, even after accounting for obvious differences in clinical and demographic variables (Burkholder & Pisoni, 2003; Collison, Munson, & Carney, 2004; Dawson, Busby, McKay, & Clark, 2002; Pisoni, Cleary, Geers, & Tobey, 2000).

Given that there are few well-established or universal methods of training available to new CI users, one possible source of variability in CI users’ performance may reside in the perceptual learning strategies that they use to understand speech with their new implant (Brown, Dowell, Martin, & Mecklenburg, 1990; Clark, 2003; McConkey-Robbins, 2000). Therefore, some of the unexplained variability in CI users’ performance may be related to differences in the basic auditory perceptual learning processes and the cognitive and linguistic skills that develop as a result. The present study sought to assess whether different types of training and feedback differentially affect perceptual learning of speech processed with a cochlear implant simulation in order to evaluate the efficacy of different rehabilitation methodologies for newly implanted individuals.

Vocoder simulations of cochlear implants that process the acoustic signal in a manner consistent with the output of a CI speech processor have proven to be a very effective and useful experimental tool for understanding the processes of electric hearing (Shannon, Zeng, Kamath, Wygonski & Ekelid, 1995). In such models, the acoustic signal is divided into a limited number of frequency bands to simulate the limited number of stimulation sites on the electrode array, the fine structure from each band is replaced with noise in order to simulate the spread of electrical current to the surrounding auditory nerve fibers, and modulated with the amplitude envelope from the original band in order to preserve the overall temporal profile of electric stimulation (Shannon et al., 1995). Vocoder CI simulations are severely spectrally degraded, but surprisingly intelligible. Previous work has demonstrated that normal hearing subjects listening to CI simulations perform similarly to CI users themselves. Normal hearing subjects listening to speech processed with 1, 2, 3 and 4-channel vocoders (Shannon et al., 1995) perform comparably to the best performing CI users with 1, 2 and 4 active electrodes (Fishman et al., 1997). Moreover, normal hearing subjects listening to six band simulations perform comparably to the best CI users with six channel CIS processors (Dorman & Loizou, 1998). Thus, vocoder simulations elicit speech perception levels similar to those experienced by CI users.

Although accurate open set speech recognition is a major goal of cochlear implantation, the type of training and materials that will provide the greatest benefit are not well understood. Synthetic approaches, which focus on the understanding of words in sentences or paragraphs (see Sweetow & Sabes, 2007 for a review) have a high degree of ecological validity, since we understand words in the context of the conversations in which they are embedded (Borg, 2000). They may not be most beneficial for training, however, in that context may fail to provide sufficient information for the accurate prediction of upcoming words or the restoration of partially heard words.

Recent work suggests that an analytic approach, which focuses the listener’s attention on the acoustic elements of the sounds themselves, may be more effective than synthetic training alone. Research has demonstrated that phonological disorders may be more effectively treated by using nonwords than real words, presumably because the former approach focuses the listeners’ attention on sublexical information and encourages them to modify their phonological inventories independently of the lexical representation (Martin & Gierut, 2004). Perceptual learning studies have also demonstrated that listeners generalize concepts such as novel pronunciations to new words and contexts when they appear to lack talker and word specificity (Kraljic & Samuel, 2006). While some cases can be attributed to a particular talker or context (Eisner & McQueen, 2005), generalization is promoted when the situation is not context specific. Thus, training that does not rely on lexical representations may prevent listeners from confining their learning to the words they were trained on or words they already know, and promote generalization to different phonological environments and novel words.

Recently, short-term auditory perceptual learning has been studied in normal-hearing listeners exposed to CI simulations in order to determine what stimulus materials were most effective in teaching normal-hearing listeners to understand spectrally degraded speech (Davis, Johnsrude, Hervais-Adelman, Taylor, & McGettigan, 2005; Fu, Nogaki, & Galvin, 2005; McCabe & Chiu, 2003; Loebach & Pisoni, 2008). Fu and colleagues (2005) found that training that focused on vowel contrasts using monosyllabic words was more effective than connected discourse tracking for improving listeners’ phoneme recognition. Davis and colleagues (2005) demonstrated that subjects trained with semantically anomalous sentences performed just as well as subjects trained with meaningful sentences, and significantly better than untrained subjects or subjects exposed to nonword sentences. They concluded that perceptual learning of degraded speech relies primarily on lexical rather than syntactic information and semantic predictability of the training stimuli. More recent work (Loebach & Pisoni, 2008) found that participants trained to identify meaningful environmental sounds demonstrated significant generalization to speech, but participants trained on speech did not show generalization to environmental sounds. These results suggest that analytic training with nonspeech acoustic stimuli may increase the sensitivity to acoustic elements of speech (Loebach & Pisoni, 2008).

In addition to determining which stimuli are most effective for training, it is also important to determine what type of feedback promotes robust perceptual learning. Post-lingually deafened cochlear implant recipients rarely receive any long-term systematic rehabilitative treatment to improve speech perception (Brown et al., 1990; Clark, 2003; McConkey-Robbins, 2000) or other auditory skills such as music appreciation (Gfeller, 2001) or environmental sound recognition (Reed & Delhorne, 2005). This may explain some of the variability in outcome and benefit across CI users: if each CI user undergoes a different process during adaptation, they may be on fundamentally different levels from the start (Loebach & Pisoni, 2008). Although a small selection of interactive training programs is available through manufacturers of cochlear implants (Rehabilitation Manual, Cochlear Corporation, 1998), there is little consensus among professionals, clinicians, and patients about the most beneficial form of treatment for newly implanted individuals. Examining the effects of different training methods on normal-hearing listeners’ abilities to learn to understand speech processed through acoustic CI simulations may therefore provide new insights into what training methods would be most useful to CI recipients.

Past research has demonstrated that feedback that induces a “pop-out effect” is most effective for promoting perceptual learning (Davis et al., 2005). Pop-out is the experience of rehearing a spectrally degraded stimulus after its identity has been revealed; upon the second presentation of the stimulus, the meaning suddenly becomes clear. Presumably, when a listener who knows the meaning of a sentence hears it again, the percept of all or part of the sentence may pop out (Remez, Rubin, Berns, Pardo, & Lang, 1994). In their training paradigm, Davis and colleagues (2005) used written or auditory feedback to induce a pop-out effect, finding that perceptual learning of meaningful sentences was most robust when participants heard an unprocessed version or saw the written version of the sentence followed by the re-presentation of the vocoded sentence.

One problem with Davis and colleagues’ (2005) methodology that limits application to CI users is the use of feedback that is impossible to use in the clinic. Unlike normal hearing listeners, deaf CI users cannot be presented with an “undegraded” repetition of a stimulus; given their hearing loss and their reliance on the CI speech processor, all auditory feedback would be spectrally degraded. Therefore, the extension of these data to cochlear implant users is limited. Moreover, Davis and colleagues (2005) used written feedback only during training with meaningful sentences, not with semantically anomalous sentences. As a result, our understanding of the effects of written feedback on the perceptual learning of semantically anomalous sentences is incomplete. If the goal is to understand how such perceptual learning can be applicable to CI users, studies of perceptual learning of CI simulations in normal-hearing listeners should include some form of written feedback that would be clinically viable.

Another limitation is that Davis and colleagues (2005) did not assess generalization of training to new materials. While previous research has demonstrated that perceptual learning of vocoded stimuli generalizes to new materials from the same or different class, only written feedback was assessed (Loebach & Pisoni, 2008). Therefore, a direct comparison of the efficacy of training using different types of feedback is currently lacking.

In order to address these issues, the present study compared the effects of training with different types of feedback on the perceptual learning of speech processed with a noise vocoder using a pre-/posttest design. Subjects were trained to identify meaningful or anomalous sentences using clinically applicable (written sentences paired with the vocoded signal) or inapplicable (undegraded auditory signal) feedback. In addition, two control groups were included. To control for exposure effects, one control group heard the spectrally degraded stimuli during training but received no feedback at all. To control for practice effects unrelated to adaptation to the degraded stimuli, a second control group was presented with the undegraded stimuli during training. We chose not to include a text-only feedback condition because we felt that it would not promote rapid perceptual learning without being paired with the re-presentation of the degraded stimulus.

We hypothesized that all groups would perform comparably at pretest, but the subjects who received written and degraded auditory feedback would display significantly higher performance at posttest and generalization than subjects who received undegraded auditory feedback. Additionally, we hypothesized that subjects trained on anomalous sentences would perform as well as or better than subjects trained on meaningful sentences because their training focused on the sublexical acoustic-phonetic structure of the stimuli. Therefore, the present experiment is a novel extension of previous work in that it directly compares the effects of clinically appropriate feedback on the perceptual learning of speech processed with an acoustic CI simulation, while also assessing generalization to new materials.

Method

Participants

One hundred forty-four young adults participated in the experiment. All participants were monolingual, native speakers of American English, reported no speech, hearing, language, or attentional disorders at testing and received partial course credit for participation in this study.

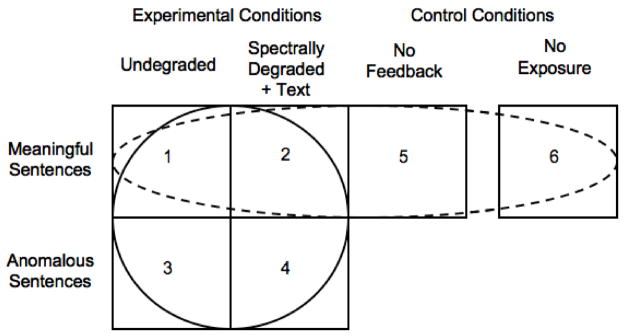

Subjects were randomly assigned to one of four experimental conditions or one of two control conditions (Figure 1) to assess the effect of feedback and sentence materials on the perceptual learning of spectrally degraded speech. The four experimental conditions varied according to the type of materials that subjects transcribed during training (spectrally degraded versions of either meaningful or anomalous sentences) and the type of feedback they received (undegraded speech, or spectrally degraded speech paired with the written orthographic representation of the sentences). Subjects in Group 5 transcribed spectrally degraded meaningful sentences during training, but did not receive any feedback (No Feedback). Subjects in Group 6 were not exposed to any spectrally degraded speech at all during training; they simply transcribed undegraded versions of the meaningful sentences (No Exposure).

Figure 1.

Experimental design of the six possible training conditions to which subjects were assigned. Two analyses were conducted. The circle indicated with the solid line around groups 1–4 shows the comparison that was used to assess the effect of feedback and materials on adaptation to spectrally degraded speech. The dashed line ellipse around groups 1, 2, 5, and 6 shows the comparison across experimental and control conditions to distinguish between perceptual learning effects and repetition/familiarity effects.

Acoustic Simulation of a Cochlear Implant

The signal processing strategy for the CI simulation followed the methods of Kaiser and Svirsky (2000). Each stimulus was pre-emphasized using a second order Butterworth low-pass filter (1200 Hz) and divided into eight frequency bands using a bank of band-pass IIR analysis filters (854–1467 Hz, 1467–2032 Hz, 2032–2732 Hz, 2732–3800 Hz, 3800–5150 Hz, 5150–6622 Hz, 6622–9568 Hz, 9568–11,000 Hz). The amplitude envelope was extracted from each band using a third order Butterworth low-pass filter (150 Hz) and used to modulate bands of white noise that were filtered with the same cutoff frequencies as the original analysis filters. The resulting stimuli contain eight spectral channels that lack the acoustic fine structure of the original stimulus, and simulate the perceptual experience of cochlear implant users who have an electrode array with eight stimulation points (e.g., Kaiser & Svirsky, 2000; Fu et al., 2005).
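The band-splitting, envelope-extraction, and noise-modulation steps described above can be sketched roughly as follows. This is a minimal illustration using NumPy and SciPy, not the authors' implementation: the function name `noise_vocode`, the band-pass filter order (`band_order`), the use of full-wave rectification before envelope smoothing, and the fixed random seed are all assumptions, and the pre-emphasis stage is left out.

```python
import numpy as np
from scipy.signal import butter, sosfilt

# Band edges (Hz) as listed in the text: eight contiguous analysis bands.
BAND_EDGES_HZ = [854, 1467, 2032, 2732, 3800, 5150, 6622, 9568, 11000]


def noise_vocode(signal, fs=22050, env_cutoff_hz=150.0, band_order=2, seed=0):
    """Return an eight-channel noise-vocoded version of a mono signal.

    Assumptions not stated in the text: band_order, full-wave
    rectification before envelope smoothing, and the fixed random seed.
    """
    signal = np.asarray(signal, dtype=float)
    rng = np.random.default_rng(seed)
    # Third-order Butterworth low-pass (150 Hz) used to smooth the envelope.
    env_sos = butter(3, env_cutoff_hz, btype="lowpass", fs=fs, output="sos")
    out = np.zeros_like(signal)
    for lo, hi in zip(BAND_EDGES_HZ[:-1], BAND_EDGES_HZ[1:]):
        band_sos = butter(band_order, [lo, hi], btype="bandpass", fs=fs, output="sos")
        # Analysis: isolate the band, then extract its amplitude envelope.
        band = sosfilt(band_sos, signal)
        envelope = np.maximum(sosfilt(env_sos, np.abs(band)), 0.0)
        # Synthesis: white noise limited to the same band, scaled by the envelope.
        carrier = sosfilt(band_sos, rng.standard_normal(signal.size))
        out += envelope * carrier
    return out
```

Calling `noise_vocode(x, fs=22050)` on a recorded sentence `x` would return a signal of the same length in which each band's fine structure has been replaced by noise; level normalization would still need to be applied afterwards.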

Stimuli

Six highly familiar nursery rhymes (e.g., Jack and Jill; Humpty Dumpty) were used to initially familiarize the subjects with the spectrally degraded stimuli. 140 meaningful Harvard (IEEE, 1969) and 60 meaningful Boys Town sentences (Stelmachowicz, Hoover, Lewis, Kortekaas & Pitman, 2000) were used in the study. All meaningful Harvard sentences contained 5 keywords (e.g., The ripe taste of cheese improves with age). All meaningful Boys Town sentences contained 4 keywords (e.g., Fresh bread smells great). All responses were scored based on the number of keywords correctly transcribed. Additionally, 90 anomalous Harvard (Herman & Pisoni, 2000) and 100 anomalous Boys Town sentences (Stelmachowicz et al., 2000) were also used in the study. To make the sentences semantically anomalous, the content words from one set of Harvard or Boys Town sentences were classified according to parts of speech and replaced with unrelated keywords from the appropriate syntactic category from another set of Harvard or Boys Town sentences. The overall syntactic structure of the sentences remained unchanged but the semantic relationships between the content words were removed. Since anomalous sentences are derived from meaningful sentences, the anomalous Harvard sentences also contained 5 unrelated keywords (e.g., Trout is straight and also writes brass) and anomalous Boys Town sentences contained 4 unrelated keywords (e.g., Strange nails taste dark).
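The construction of anomalous sentences can be illustrated with a short sketch. The part-of-speech tags, the donor word pool, and the random selection below are hypothetical and serve only to show the idea of swapping content words within a syntactic category while leaving function words in place; the code does not check that the substituted words are semantically unrelated, and it is not the procedure used to build the actual stimulus sets.

```python
import random


def make_anomalous(tagged_sentence, donor_words, rng):
    """Swap each content word for a donor word of the same part of speech.

    tagged_sentence: list of (word, pos) pairs; pos is None for function words.
    donor_words: dict mapping a part-of-speech label to candidate words
                 taken from a different sentence set.
    """
    result = []
    for word, pos in tagged_sentence:
        if pos is None:
            # Function words are kept so the syntactic frame is unchanged.
            result.append(word)
        else:
            result.append(rng.choice(donor_words[pos]))
    return result


# Hypothetical tags and donor pool, for illustration only.
source = [("the", None), ("ripe", "ADJ"), ("taste", "NOUN"), ("of", None),
          ("cheese", "NOUN"), ("improves", "VERB"), ("with", None), ("age", "NOUN")]
donors = {"ADJ": ["strange", "dark"],
          "NOUN": ["nails", "trout", "brass"],
          "VERB": ["writes", "tastes"]}
anomalous = make_anomalous(source, donors, random.Random(0))
```

The output has the same length and the same sequence of syntactic categories as the source sentence, which is the property the stimulus description relies on.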

All stimuli were produced by a female speaker who had highly intelligible speech (Burkholder, 2005). Recordings were made in a sound-attenuated booth (IAC Audiometric Testing Room, Model 402A) using a Shure head-mounted microphone (SM98). Recordings were digitized online at 22,050 Hz (16-bit analog-to-digital converter, DSC Model 240) and stored as Windows PCM .wav files. All stimuli were normalized to 65 dB(A) RMS.

Experimental Procedures

Participants were tested at individual testing stations equipped with a Gateway PC (P5-133) with a 15″ CRT monitor (Vivitron15). Auditory stimuli were presented over calibrated headphones (Beyer Dynamics DT100) at approximately 70 dB(A).

The experiment was divided into 5 phases: (1) familiarization; (2) pretest; (3) training; (4) posttest; and (5) generalization. In the familiarization block, participants listened to 6 spectrally degraded nursery rhymes while reading along with the printed text on the monitor.

In the pretest, all participants were asked to transcribe 20 spectrally degraded meaningful Harvard sentences. An on-screen dialog box appeared immediately after each sentence prompting subjects to begin typing their responses on the keyboard. Subjects did not receive any feedback during the pretest and were not permitted to repeat sentences.

During training, listeners were asked to transcribe 130 spectrally degraded sentences that were either meaningful (Groups 1, 2, and 5) or anomalous (Groups 3 and 4) (Figure 1). Listeners in Group 6 transcribed meaningful sentences that were not spectrally degraded. After hearing each sentence, listeners typed their responses into an on-screen dialog box.

Subjects in the experimental conditions (Groups 1, 2, 3 and 4) received feedback immediately after entering their responses. Subjects in the Undegraded conditions (Groups 1 and 3) were presented with an undegraded version of each sentence as feedback, whereas subjects in the Spectrally Degraded + Text conditions (Groups 2 and 4) were presented with the vocoded version of the sentence paired with the orthographic representation of the sentence. Subjects in the two control conditions (Groups 5 and 6) did not receive any feedback.

During the posttest, all listeners were asked to transcribe the same 20 spectrally degraded meaningful Harvard sentences that they heard in the pretest. Subjects did not receive feedback during the posttest and did not have the option to repeat sentences.

Two generalization blocks were used to assess the efficacy of training and feedback on the transfer of perceptual learning to novel spectrally degraded materials. In the first generalization block, subjects were asked to transcribe 50 novel meaningful Harvard sentences. In the second generalization block, subjects transcribed 20 novel anomalous Harvard sentences. The two blocks contained different numbers of stimuli because of availability: larger databases of meaningful sentences are available to researchers, and we used all of the anomalous sentences that were available to us.

Data Analysis

For each sentence, the percentage of keywords correct was calculated and averaged across each block. Typographical errors were scored as correct if a target letter was substituted by any immediately surrounding letter on the computer keyboard. Responses in which the correct letters were transposed were also considered as typographical errors and scored as correct. Keywords that contained obvious spelling errors and homophones were also scored as correct. However, changes in the word tense or other incorrect affixes were scored as incorrect responses.
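The two mechanical typo rules above (adjacent-key substitution and letter transposition) could be approximated in code along the following lines. This is only a sketch under stated assumptions: the QWERTY layout and its row offsets, the limit of one substitution or one adjacent transposition per word, and the choice of keywords in the usage note are all hypothetical, and the rules for spelling errors, homophones, and affixes are not implemented because they require human judgment.

```python
import string

# Hypothetical QWERTY layout; offsets approximate the physical row stagger.
QWERTY_ROWS = [("qwertyuiop", 0.0), ("asdfghjkl", 0.25), ("zxcvbnm", 0.75)]


def _build_neighbors():
    """Map each letter to the set of letters on immediately surrounding keys."""
    pos = {ch: (r, c + off)
           for r, (row, off) in enumerate(QWERTY_ROWS)
           for c, ch in enumerate(row)}
    return {a: {b for b, (rb, xb) in pos.items()
                if b != a and abs(rb - ra) <= 1 and abs(xb - xa) <= 1.0}
            for a, (ra, xa) in pos.items()}


NEIGHBORS = _build_neighbors()


def matches_keyword(target, typed):
    """True for an exact match, one adjacent-key slip, or one adjacent swap."""
    target, typed = target.lower(), typed.lower()
    if target == typed:
        return True
    if len(target) != len(typed):
        return False  # added or dropped letters (e.g. affixes) are not accepted
    diffs = [i for i, (a, b) in enumerate(zip(target, typed)) if a != b]
    if len(diffs) == 1:
        i = diffs[0]
        return typed[i] in NEIGHBORS.get(target[i], set())
    if len(diffs) == 2 and diffs[1] == diffs[0] + 1:
        i, j = diffs
        return typed[i] == target[j] and typed[j] == target[i]
    return False


def score_sentence(keywords, response):
    """Proportion of keywords matched by any word in the typed response."""
    cleaned = response.lower().translate(str.maketrans("", "", string.punctuation))
    tokens = cleaned.split()
    hits = sum(any(matches_keyword(k, t) for t in tokens) for k in keywords)
    return hits / len(keywords)
```

For example, assuming the keywords of the sample Harvard sentence are ripe, taste, cheese, improves, and age, the response "The ripe tasre of cheese improves with time." would score 4 of 5 keywords: "tasre" is accepted because r is next to t on the keyboard, while "time" does not match "age".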

A series of ANCOVAs were used to compare subject performance across training materials and feedback conditions. The first was a one-way ANCOVA that assessed the relative benefits of the four types of feedback and exposure conditions used when listeners were trained with meaningful sentences (Figure 1, dashed ellipse). These analyses compared the performance of groups 1 (undegraded auditory feedback), 2 (text plus spectrally degraded feedback), 5 (a control group that was exposed to the same stimuli as groups 1 and 2 but received no feedback at all) and 6 (another control group that was not even exposed to the spectrally degraded stimuli) across the five blocks of the experiment. The second analysis was a two-way ANCOVA (Figure 1, solid circle) comparing the effect of type of material presented (spectrally degraded meaningful vs. spectrally degraded anomalous sentences) and the type of feedback condition (undegraded auditory feedback vs. text plus spectrally degraded feedback) across the five blocks of the experiment. All analyses of performance during the training, posttest and generalization blocks included pretest performance as a covariate to ensure that the effects we observed were due to the experimental manipulations rather than to better initial performance before training. All post hoc comparisons used the Bonferroni correction to adjust for multiple comparisons.
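For readers less familiar with this design, one conventional way to write the one-way ANCOVA model is given below. The notation is ours, not the authors': $y_{ij}$ is the block score of subject $j$ in group $i$, $x_{ij}$ is that subject's pretest score, $\bar{x}$ is the grand mean pretest score, $\tau_i$ is the group (feedback) effect, and $\beta$ is a common regression slope, which assumes homogeneous slopes across groups.

$$
y_{ij} = \mu + \tau_i + \beta\,(x_{ij} - \bar{x}) + \varepsilon_{ij}
$$

The two-way analysis would add a materials effect and a materials-by-feedback interaction to the same model. The Bonferroni adjustment for $m$ post hoc comparisons is commonly expressed as

$$
p_{\text{adj}} = \min(1,\; m \cdot p),
$$

so that, assuming all pairwise contrasts among four groups are tested, $m = 6$; adjusted values capped at 1 are reported as $p = 1.00$.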

Although undegraded speech may be beneficial for training normal hearing subjects (Davis et al., 2005), such feedback cannot be used with CI users because they always receive spectrally degraded input due to the nature of their hearing loss and cochlear prostheses. It is of interest to determine whether the spectrally degraded sentence paired with the text representation would be beneficial to perceptual learning since such feedback is clinically feasible in CI users. Comparisons across training materials were used to determine whether sentence context influences pre- to posttest improvement in performance. In meaningful sentences, subjects can use semantic context to predict upcoming words based on their transitional probabilities and thematic relations. In anomalous sentences, however, subjects must rely primarily on the acoustic-phonetic information encoded in the auditory signal, because they cannot predict words based on semantic context. Comparing performance across these two conditions provides a way to assess whether training that focuses on the acoustic-phonetic information in the signal is as effective as training them to recognize words using semantic context.

Subjects in the control conditions were included to assess the amount of improvement that can be attributed to procedural learning. Subjects in the No Feedback condition (Group 5) provide a measure of whether the mere exposure to vocoded sentences without any feedback improves posttest performance, and allow the differentiation of training effects from exposure effects. Subjects in the No Exposure condition (Group 6) provide a measure of the amount of procedural learning that arises from being exposed to the same sentences in the pre- and posttest, allowing us to differentiate perceptual learning from procedural learning. Because subjects in the No Exposure group are exposed to undegraded sentences during training, they may show less improvement from pre- to posttest than the other groups: they are not being trained to attend to and use the acoustic-phonetic information in the spectrally degraded signal.

Results

A summary of the descriptive statistics for each group’s performance during the pre-, posttest, and generalization phases of the experiment is shown in Table 1.

Table 1.

Means and standard deviations of the percent of key words correctly identified by the six groups of listeners before, during, and after training (left three columns) and during generalization (right two columns).

| Group | Feedback | Training Materials | Pretest | Training | Posttest | Generalization: Novel Meaningful | Generalization: Novel Anomalous |
|---|---|---|---|---|---|---|---|
| 1 | Undegraded | Degraded Meaningful | .37 (.11) | .50 (.11) | .52 (.11) | .49 (.12) | .39 (.12) |
| 3 | Undegraded | Degraded Anomalous | .36 (.13) | .41 (.11) | .56 (.14) | .50 (.14) | .41 (.12) |
| 2 | Degraded + Text | Degraded Meaningful | .42 (.10) | .60 (.08) | .63 (.10) | .57 (.09) | .41 (.07) |
| 4 | Degraded + Text | Degraded Anomalous | .40 (.13) | .48 (.10) | .61 (.12) | .57 (.10) | .48 (.10) |
| 5 | None | Degraded Meaningful | .36 (.13) | .50 (.15) | .52 (.18) | .49 (.12) | .37 (.13) |
| 6 | None | Undegraded Meaningful | .36 (.11) | .95 (.02) | .47 (.14) | .44 (.13) | .31 (.11) |

Does orthographic and spectrally degraded auditory feedback promote greater training benefits than undegraded auditory feedback?

These analyses compare the performance of participants who were trained with meaningful sentences but received varying degrees of feedback (Figure 1, dashed ellipse). For all analyses, one-way ANOVAs and ANCOVAs were conducted using feedback (spectrally degraded + text, undegraded, no feedback and no exposure) as the between subjects variable.

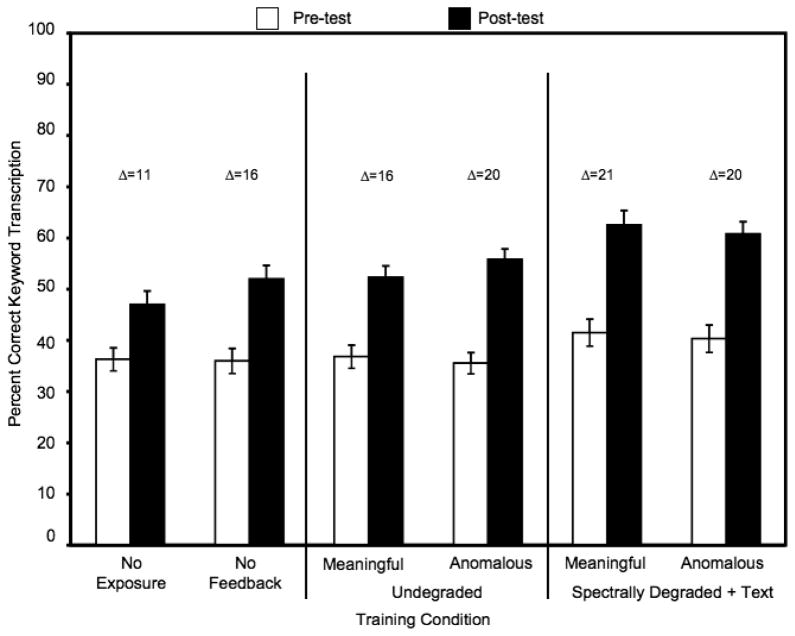

The ANOVA comparing the scores at pretest (Figure 2) failed to reveal a significant main effect of feedback (F (3, 91) = 1.087, p = .359, ηp2 = .035), thus establishing a baseline measure of performance and confirming that all subjects performed comparably before training began.
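As a reading aid, partial eta squared for a between-subjects effect can be recovered from the F statistic and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The helper below is a small sketch of that identity; the function name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Pretest ANOVA reported above: F(3, 91) = 1.087
pretest_effect = round(partial_eta_squared(1.087, 3, 91), 3)  # 0.035
```

The same calculation can be applied to any of the F tests reported in this section as a quick consistency check on the accompanying effect size.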

Figure 2.

Pre- and posttest performance of listeners who received No Feedback (left panel), Undegraded (middle panel), and Spectrally Degraded + Text feedback (right panel). The change in percentage points from pre-training to post-training appears above the bars representing each group. Error bars represent the standard error of the mean.

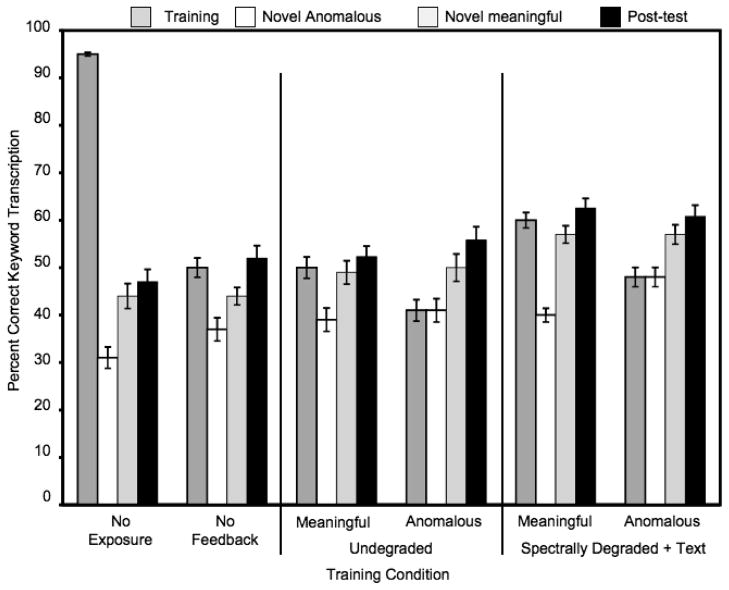

The ANCOVA comparing performance across groups during the training session (Figure 3) revealed a significant main effect of feedback (F (2, 68) = 6.004, p = .004, ηp2 = .888). Participants who received the Spectrally Degraded + Text feedback (M = .60, SD = .08) performed significantly better during training than participants who received Undegraded (M = .50, SD = .11, p = .013) or No Feedback (M = .50, SD = .15, p = .009), who performed similarly to each other (p = 1.00). Participants from Group 6, who transcribed undegraded sentences during training, were omitted from this analysis because their scores were near ceiling (M = .95, SD = .02).

Figure 3.

Generalization of perceptual learning to anomalous and meaningful sentences by listeners trained without feedback, with Undegraded or with Spectrally Degraded + Text feedback. Error bars represent the standard error of the mean.

The ANCOVA comparing posttest performance across groups (Figure 2) revealed a significant main effect of feedback (F (3, 90) = 8.675, p < .001, ηp2 = .224). Participants who received the Spectrally Degraded + Text feedback (M = .63, SD = .10) performed significantly better than participants in the Undegraded (M = .52, SD = .11, p = .023), No Feedback (M = .52, SD = .18, p = .044) and No Exposure (M = .47, SD = .14, p < .001) conditions, who did not differ from one another (all p > .110). The ANCOVA for the difference scores also revealed a significant main effect of feedback (F (3, 90) = 8.675, p < .001, ηp2 = .224), with the subjects in the Spectrally Degraded + Text condition showing significantly more gain from training (M = .21, SD = .07) than participants in the other feedback conditions (Undegraded: M = .16, SD = .08; No Feedback: M = .16, SD = .06; No Exposure: M = .11, SD = .05; all p < .04), who did not differ from each other (all p > .110).

The ANCOVA for novel meaningful sentences in the first generalization block (Figure 3) revealed a significant main effect of feedback (F (3, 90) = 4.722, p = .004, ηp2 = .136). Participants trained with Spectrally Degraded + Text feedback (M = .57, SD = .09) performed as well as participants trained with Undegraded (M = .49, SD = .12, p = .231) and No Feedback (M = .49, SD = .12, p = 0.43), and significantly better than subjects in the No Exposure group (M = .44, SD = .13, p = .002). All subjects in the Undegraded, No Feedback and No Exposure groups performed similarly to one another (all p > .31).

The ANCOVA for novel anomalous sentences in block 2 of generalization (Figure 3) revealed a significant main effect of feedback (F (3, 90) = 2.929, p = .038, ηp2 = .089). Participants in the Spectrally Degraded + Text group (M = .41, SD = .07) performed as well as participants in the Undegraded group (M = .39, SD = .12, p = 1.00), No Feedback group (M = .36, SD = .12, p = 1.00) and No Exposure group (M = .31, SD = .11, p = .112).

Taken together, these results suggest that Spectrally Degraded + Text feedback produces greater gains at posttest and during generalization to novel meaningful sentences than Undegraded feedback or No Feedback at all. Generalization to anomalous sentences appears to be equal across feedback conditions, and will be explored further in the next section.

Do meaningful sentences promote greater training benefits than anomalous sentences?

These analyses compare the performance of participants trained with meaningful or anomalous sentences with varying degrees of feedback (Figure 1, circle). For all analyses, two-way ANOVAs and ANCOVAs were conducted using materials (meaningful or anomalous sentences) and feedback (spectrally degraded + text, undegraded) as between subjects variables.

The ANOVA comparing the scores at pretest (Figure 2) failed to reveal a significant main effect of feedback (F (1,92) = 3.805, p = .054, ηp2 = .04) or materials (F (1, 92) = .257, p = .613, ηp2 = .003). This establishes a baseline measure of performance and confirms that all subjects performed comparably before any training began. However, since a numerical trend was observed favoring participants in the Spectrally Degraded + Text conditions, all analyses will include pretest performance as a covariate.

The ANCOVA comparing performance during the training session (Figure 3) revealed a significant main effect of materials (F (1, 92) = 55.581, p < .001, ηp2 = .379) and feedback (F (1, 92) = 15.751, p < .001, ηp2 = .148). Participants who were trained with meaningful sentences (M = .55, SD = .11) performed significantly better during training than participants trained with anomalous sentences (M = .44, SD = .11). Participants given Spectrally Degraded + Text feedback (M = .54, SD = .11) performed significantly better during training than participants given Undegraded feedback (M = .44, SD = .12).

The ANCOVA for keyword recognition scores at posttest (Figure 2) failed to reveal a significant main effect of materials (F (1, 91) = 1.573, p = 0.213, ηp2 = .017). Participants trained with meaningful sentences (M = .57, SD = .12) performed as well as participants trained with anomalous sentences (M = .58, SD = .13). A significant main effect of feedback was observed (F (1, 91) = 6.597, p = 0.012, ηp2 = .068), indicating that participants given Spectrally Degraded + Text feedback (M = .62, SD = .11) performed significantly better than participants given Undegraded feedback (M = .54, SD = .13).

The ANCOVA for the pre-/posttest difference scores revealed similar findings, with no main effect of materials (F (1, 90) = 1.735, p = .191, ηp2 = .019), indicating that participants trained with meaningful sentences (M = .18, SD = .08) showed a gain from training equivalent to that of participants trained with anomalous sentences (M = .20, SD = .07). A significant main effect of feedback (F (1, 90) = 6.036, p = .016, ηp2 = .063) was observed: participants trained with Spectrally Degraded + Text feedback (M = .21, SD = .07) improved more than participants who received Unprocessed feedback (M = .18, SD = .08).

The ANCOVA for keyword recognition scores for novel meaningful sentences in the first generalization block did not reveal a main effect of materials (F (1, 91) = .578, p = .449, ηp2 = .006) after controlling for pretest performance (Figure 3). Participants trained on meaningful sentences (M = .53, SD = .11) performed as well as participants trained on anomalous sentences (M = .54, SD = .12). A significant main effect of feedback was observed (F (1, 91) = 5.591, p = .02, ηp2 = .058), indicating that participants trained with Spectrally Degraded + Text feedback (M = .57, SD = .09) performed significantly better than participants trained with Unprocessed feedback (M = .50, SD = .13).

The ANCOVA for keyword recognition scores for novel anomalous sentences in the second generalization block did reveal a significant main effect of materials (F (1, 91) = 10.162, p = .002, ηp2 = .10). Participants trained on anomalous sentences showed better generalization of training to novel anomalous sentences (M = .45, SD = .11) than did participants trained on meaningful sentences (M = .40, SD = .10). A main effect of feedback was not observed (F (1, 91) = .979, p = .325, ηp2 = .011), indicating that participants trained with the Spectrally Degraded + Text feedback performed as well as participants trained with the Unprocessed feedback (Figure 3).

Taken together, these findings suggest that training with meaningful and anomalous sentences produces similar performance at posttest, similar pre-/posttest difference scores, and equivalent levels of generalization to the recognition of novel meaningful sentences. However, for the recognition of novel anomalous sentences, training specificity was observed: participants trained with anomalous sentences showed significantly better generalization than participants trained with meaningful sentences. Moreover, in all but one instance (generalization to novel anomalous sentences), feedback pairing the spectrally degraded signal with its orthographic form produced better performance than feedback using the unprocessed signal.
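To put the feedback advantage on a common scale across blocks, the reported group means and standard deviations can be converted to standardized mean differences. The Python sketch below does this under two loudly stated assumptions that are not confirmed by the text: the two feedback groups are treated as equal in size, and the reported means are treated as unadjusted for the pretest covariate. It is an illustration of the arithmetic, not a reanalysis, and the function and variable names are hypothetical.

```python
import math


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Standardized mean difference using a pooled SD.

    Assumes equal group sizes, so the pooled variance is the simple
    average of the two group variances.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# (M, SD) for Spectrally Degraded + Text, then (M, SD) for Unprocessed feedback,
# copied from the values reported in the Results above.
feedback_contrasts = {
    "training": (0.54, 0.11, 0.44, 0.12),
    "posttest": (0.62, 0.11, 0.54, 0.13),
    "pre/post gain": (0.21, 0.07, 0.18, 0.08),
    "novel meaningful sentences": (0.57, 0.09, 0.50, 0.13),
}

effect_sizes = {
    block: cohens_d(*values) for block, values in feedback_contrasts.items()
}

for block, d in effect_sizes.items():
    print(f"{block}: d = {d:.2f}")
```

The novel anomalous sentence block is omitted because group means by feedback condition are not reported for it.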

Discussion

The results of the present study support three conclusions about perceptual learning of speech that has been processed with a cochlear implant simulation. First, sentence recognition improves rapidly over a short period of time regardless of the amount of exposure to the stimuli, the presence or quality of feedback, or the semantic content of the training materials (Figure 2). Second, the magnitude and robustness of perceptual learning were influenced by exposure to the stimuli and the type of feedback subjects received (Figures 2 and 3). Third, training with anomalous sentences was just as effective as training with meaningful sentences: it produced equivalent levels of generalization to novel meaningful sentences and better generalization to novel anomalous sentences (Figure 3).

The initial stages of perceptual learning of speech processed through a CI simulation are rapid and robust. Even listeners not exposed to the spectrally degraded speech during training displayed significant gains from pre- to posttest. However, because the same set of sentences was used during both phases, this improvement represents a practice effect rather than an effect of adaptation to the processing condition. Simply exposing listeners to the spectrally degraded stimuli without providing them with explicit feedback during training (No Feedback) did not result in improvements that were greater than the practice effect alone. However, listeners trained on either meaningful or anomalous sentences with feedback (Undegraded or Spectrally Degraded + Text) achieved significantly higher posttest scores than listeners who received no feedback. This difference cannot be attributed to some subjects simply being better listeners, since the effects remained robust after pretest scores were factored out. Rather, it suggests that actively providing feedback yields greater benefit from training than passive exposure alone.

Additionally, the type of materials that a listener experiences during training had no overall effect on perceptual learning. Presentation of meaningful sentences during training did produce significantly higher scores across the 130 training trials compared to anomalous sentences, but this could be attributed to differences in relative task difficulty. Moreover, these differences did not bear out across the other experimental blocks (posttest, generalization to novel meaningful sentences), indicating that training with anomalous sentences was as effective as training with meaningful sentences. In addition, a training-specific effect was observed for anomalous sentences: while training with anomalous sentences generalized fully to novel meaningful sentences, training with meaningful sentences did not generalize as well to novel anomalous sentences. This finding is similar to that observed by Loebach and Pisoni (2008), who found that training specificities exist depending on the stimulus materials used during training. Taken together, these findings suggest that training with meaningful sentences may place the listener in an interpretive mode (synthetic), whereas training with anomalous sentences may allow the listener to be more analytic, focusing instead on the acoustic-phonetic structure of the signal.

More importantly, the type of feedback given to listeners during training was found to have a substantial effect on perceptual learning and posttest performance. The amount of improvement from pre- to posttest and the generalization to novel meaningful stimuli were greater for the group who received Spectrally Degraded + Text feedback than for the groups who heard the Undegraded stimuli alone or received no feedback at all. This result suggests that subjects were better able to adapt and adjust their internal representations of the spectrally degraded stimuli when these were paired with the orthographic representation of the sentence. Generalization to novel anomalous sentences, however, was not affected by feedback, and was instead driven by exposure to anomalous sentences during training.

The finding that pairing the spectrally degraded version of a stimulus with its textual representation as feedback is superior to presenting the undegraded auditory signal is both theoretically and clinically significant. Clinically, pairing the degraded signal with text is the only type of feedback examined in this study that would be possible to use with cochlear implant recipients in rehabilitative protocols. Unlike the normal-hearing listeners used in this study, deaf CI users do not have the option to hear a clear auditory stimulus as feedback. Although auditory and orthographic feedback would not be a viable form of feedback in an everyday adaptive online capacity, it could be used in clinic- or home-based targeted training paradigms. Given the findings of the present study, such training would be expected to generalize well to real-world listening environments and thereby produce an improvement in speech recognition overall. Indeed, a small but growing body of literature suggests that targeted speech training does enhance the perceptual abilities of CI users (e.g., Fu, Galvin, Wang, & Nogaki, 2006), although further research is necessary to determine the most efficient and effective training paradigms.

Theoretically, it is also important to consider why pairing the spectrally degraded signal with text as feedback is superior to undegraded auditory feedback, and why listeners receiving such feedback showed more robust generalization. Davis and colleagues (2005) suggested that feedback is most effective when it elicits a “pop-out” effect, enabling listeners to sub-vocally experience an utterance while simultaneously hearing the spectrally degraded stimulus. The Spectrally Degraded + Text feedback used in the present study allowed listeners to read along with the text of the sentence as it was being played. This presumably facilitated the mapping of the spectrally degraded acoustic-phonetic information in the signal onto their familiar phonological representations, providing an advantage over subjects who received auditory-alone feedback. Although it cannot be determined whether subjects in the present study experienced a “pop-out” effect, such an effect is certainly a possibility given previous research (Davis et al., 2005).

The effectiveness of the Spectrally Degraded + Text feedback condition can be further explained by reference to the literature on the transfer appropriate processing (TAP) theory of learning and memory (e.g., Lockhart, 2002; Morris, Bransford, & Franks, 1977; Rajaram, Srinivas, & Roediger, 1998; Roediger, Buckner, & McDermott, 1999). While generally applied to learning and recall of higher order material, TAP can be applied by extension to perceptual learning. TAP predicts that recall will be enhanced if the same cognitive processes are executed during both study and testing. For the transcription task in the present study, listeners were asked to formulate the acoustic-phonetic mapping for the degraded sentences in order to type them on the keyboard. During training, subjects who heard the spectrally degraded stimulus while seeing the orthographic representation would have been able to co-register the spectrally degraded stimulus with the identity of the sentence presented on the screen immediately, placing them in a specific cognitive mode during training (first order acoustic-phonetic mapping). Since this task is similar to that used during test, subjects in the Spectrally Degraded + Text feedback condition would be operating under similar cognitive constraints. In contrast, subjects who received the undegraded stimulus as feedback only heard the spectrally degraded stimuli once, and therefore had to co-register their sensory memory trace of the spectrally degraded stimulus with the undegraded version from memory (second order acoustic-phonetic mapping). This may have placed them in a different cognitive processing mode during training than would be evoked at testing, resulting in poorer performance. Thus, when both groups of subjects are presented with the spectrally degraded stimuli at test, subjects from the Spectrally Degraded + Text group may be more likely to utilize processes similar to those used during training than would subjects who heard the undegraded stimuli as feedback.

A similar phenomenon may explain the differences in performance of subjects in past research (Davis et al., 2005; Hervais-Adelman, Davis, Johnsrude & Carlyon, 2008). For meaningful sentences, subjects who received the DDC feedback (conditions where a second repetition of the distorted stimulus preceded the presentation of the clear version of the sentence) performed significantly more poorly than subjects who received the clear version before the distorted (DCD). In the DDC condition, subjects perform first order acoustic-phonetic mapping when transcribing the degraded stimulus, then hear a second presentation of the degraded stimulus before hearing the clear version, at which point they have to map the undegraded representation onto the degraded representation from memory (second order mapping). In the DCD condition, subjects are able to perform the first order acoustic-phonetic mapping more easily, since the clear version precedes the degraded version. Therefore at test, subjects in the DCD condition have the original conditions of training reinstated, thereby resulting in better performance.

Additional support for this argument comes from the training specificity effect observed for novel anomalous sentences. Subjects trained with meaningful sentences showed less generalization to anomalous sentences compared to subjects trained with anomalous sentences. As Loebach and Pisoni (2008) suggest, training with anomalous sentences may encourage the listener to form these first order acoustic-phonetic links automatically by decreasing reliance on sentence context. Therefore, when listeners trained with anomalous sentences are presented with novel anomalous sentences, they will evoke perceptual processes similar to those used during training (first order acoustic-phonetic mappings). That listeners trained with meaningful sentences did not show such an effect suggests that they may have been operating in a different processing mode (synthetic) at training than was required when tested with anomalous sentences.

In the present study, the type of materials with which subjects were trained also appeared to provide advantages at testing. As expected, listeners trained on meaningful sentences performed better during training than listeners trained on anomalous sentences. This result is consistent with earlier studies examining speech perception for stimuli that deviate from normal linguistic rules (Malgady & Johnson, 1977; Marks & Miller, 1964; Miller & Isard, 1963). In addition, listeners trained on meaningful sentences demonstrated larger repetition effects for stimuli that they heard during training than listeners trained on anomalous sentences. The difficulty in encoding and retaining the semantic content of anomalous sentences in memory (Malgady & Johnson, 1977; Marks & Miller, 1964) may be one factor responsible for the reduced repetition effects observed in the anomalous sentence training groups.

However, in the posttest phase, listeners who were trained on anomalous sentences performed comparably to listeners trained on meaningful sentences and achieved higher posttest scores than listeners who received no exposure to the spectrally degraded speech during training. Taken together, these results suggest that learning to perceive spectrally degraded speech can effectively occur even when the training materials lack semantic context. These results replicate the findings of Davis and colleagues (2005) but, importantly, extend them by directly comparing training effects resulting from clinically unusable feedback to feedback that can actually be used with hearing-impaired patients who use cochlear implants. We chose not to include a degraded-only feedback condition for two reasons. First, it would have been too close a replication of the work of Davis and colleagues (2005), who previously established the utility of this condition during the perceptual learning of vocoded speech. Second, we felt that such a condition would not benefit participants as much as a degraded-plus-text condition, since it would not correct the errors that participants make during transcription and could serve to reinforce their previous (possibly erroneous) interpretations.

That training on anomalous sentences would yield equivalent benefit to training on meaningful sentences is of theoretical interest because it suggests that being trained on more difficult materials lacking semantic interpretation may encourage listeners to rely more on bottom-up processing to encode the stimulus materials. While engaging more in bottom-up processing to identify words that are simply strung together in no predictable manner, listeners may become more aware of the fine acoustic-phonetic and sublexical changes that result from the acoustic simulation (analytic approach) than listeners who can rely on the sentential context (synthetic approach). Recent models of Hebbian learning would support similar predictions (e.g., Mirman, McClelland and Holt, 2006).

The suggestion that harder and unfamiliar training materials can lead to more robust learning and generalization is not new (e.g., Bjork, 1994). Recently, Martin and Gierut (2004) proposed that using nonword training materials to treat children with phonological disorders may result in faster and more generalizable perceptual learning. However, in treating a phonological disorder, the primary goal is to change speech production by focusing the speaker’s attention on aspects of the stimuli that are being incorrectly produced. In contrast, the present study examined changes in speech perception. Thus, the current evidence showing that training with anomalous sentences is as effective as training with meaningful sentences suggests that the benefits of using training materials that lack semantic interpretation may be observed in speech perception and production as well as in auditory perceptual learning of degraded signals.

Clinically, this is an important finding because it suggests that including semantically anomalous sentences as a subset of the training materials may provide additional benefits to cochlear implant users. When training focuses listeners’ attentional resources on sublexical acoustic-phonetic and phonological information rather than on lexical and semantic cues, CI users may better learn to “hear” using their prosthetic device, rather than learn to interpret the linguistic message based on thematic information and top-down processes using sentence context. Such an analytic skill may transfer more readily to other auditory tasks, such as the recognition of indexical information in speech, or the interpretation of environmental stimuli (Burkholder, 2005; Loebach & Pisoni, 2008).

Cochlear implant users frequently encounter communicative situations in which important top-down cues are absent. While using a telephone or listening to a public-service announcement, both lip-reading and pragmatic contextual cues are reduced or unavailable. Therefore, it is important to understand how perceiving speech through a cochlear implant is affected when the listener is forced to rely primarily on bottom-up processing of acoustic-phonetic and sublexical information. The results of the present investigation are encouraging because they suggest that training cochlear implant users to understand speech that lacks top-down processing cues could be a successful approach in promoting improved speech perception in a variety of everyday listening conditions where there is high variability and differential reliance on top-down context.

Conclusions

In conclusion, the results of the present study demonstrate that both the type of materials and the specific kind of feedback used during training contribute significantly to perceptual learning and generalization to new signals processed through a cochlear implant simulation. Feedback that allowed the listeners to read the content of the stimuli while listening to the spectrally degraded sentence was consistently better than the undegraded auditory-alone feedback. Spectrally degraded stimuli paired with written feedback are presumed to be more effective because this pairing may promote the reinstatement at the time of testing of the cognitive processes (first order acoustic-phonetic mapping) used during training (e.g., Lockhart, 2002; Morris et al., 1977; Rajaram et al., 1998).

The results of this study also replicate earlier findings that semantic information is important to the top-down processing skills used in identifying speech. However, semantic information is not required for listeners learning to understand spectrally degraded speech, because subjects trained with anomalous sentences showed levels of generalization equivalent to those of subjects trained with meaningful sentences. Learning to understand spectrally degraded speech may be more robust when semantic cues are removed because, under these circumstances, listeners are forced to rely on the low-level acoustic-phonetic signal instead of semantic context. Directing the listeners’ attention to the acoustic-phonetic properties of the speech signal may make it easier to generalize learning to new types of stimuli than traditional training methods, which rely more on interpreting semantic information and lexical cues. This approach aims to train the process by which speech is perceived (analytic processes), rather than the final product of perceptual analysis and spoken language comprehension (synthetic processes).

Acknowledgments

This research was supported by NIH-NIDCD research grants DC00111 and DC03937, training grant DC00012, and the American Hearing Research Foundation. We are grateful for the time and support from Speech Research Lab members who participated in the piloting of these experiments. We also thank Shivank Sinha and Luis Hernandez for their technical assistance on this project. We would like to thank Rose Burkholder-Juhasz for her assistance in data collection and for her contributions to an earlier draft of the manuscript.

Footnotes

Publisher's Disclaimer: The following manuscript is the final accepted manuscript. It has not been subjected to the final copyediting, fact-checking, and proofreading required for formal publication. It is not the definitive, publisher-authenticated version. The American Psychological Association and its Council of Editors disclaim any responsibility or liabilities for errors or omissions of this manuscript version, any version derived from this manuscript by NIH, or other third parties. The published version is available at www.apa.org/journals/xhp.

Contributor Information

Jeremy L. Loebach, Department of Psychological and Brain Sciences, Indiana University

David B. Pisoni, Department of Psychological and Brain Sciences, Indiana University, and Indiana University School of Medicine

Mario A. Svirsky, Department of Otolaryngology, New York University School of Medicine

References

- Bjork RA. Memory and metamemory considerations in the training of human beings. In: Metcalfe J, Shimamura A, editors. Metacognition: Knowing about knowing. Cambridge, MA: MIT Press; 1994. pp. 185–205.

- Blamey PJ, Pyman BC, Gordon MG, Clark GM, Brown AM, Dowell RC, Howell RD. Factors predicting postoperative sentence scores in postlinguistically deaf adult cochlear implant patients. Annals of Otology, Rhinology, and Laryngology. 1992;101:342–348. doi: 10.1177/000348949210100410.

- Borg E. Ecological aspects of auditory rehabilitation. Acta Oto-Laryngologica. 2000;120(2):234–241. doi: 10.1080/000164800750001008.

- Brown AM, Dowell RC, Martin LF, Mecklenburg DJ. Training of communication skills in implanted deaf adults. In: Clark GM, Tong YC, Patrick JF, editors. Cochlear Prostheses. Edinburgh, UK: Churchill Livingstone; 1990. pp. 181–192.

- Burkholder RA. Perceptual learning with acoustic simulations of cochlear implants. Unpublished doctoral dissertation. Indiana University-Bloomington; 2005.

- Burkholder RA, Pisoni DB. Speech timing and working memory in profoundly deaf children after cochlear implantation. Journal of Experimental Child Psychology. 2003;85:63–88. doi: 10.1016/s0022-0965(03)00033-x.

- Clark GM. Rehabilitation and habilitation. In: Cochlear Implants: Fundamentals and Applications. New York, NY: Springer-Verlag; 2003. pp. 654–706.

- Cochlear Corporation. Rehabilitation manual. Englewood, CO: Cochlear Corporation; 1998.

- Collison EA, Munson B, Carney AE. Relations among linguistic and cognitive skills and spoken word recognition in adults with cochlear implants. Journal of Speech, Language, and Hearing Research. 2004;47:496–508. doi: 10.1044/1092-4388(2004/039).

- Davis MH, Johnsrude IS, Hervais-Adelman A, Taylor K, McGettigan C. Lexical information drives perceptual learning of distorted speech: evidence from the comprehension of noise-vocoded sentences. Journal of Experimental Psychology: General. 2005;134(2):222–241. doi: 10.1037/0096-3445.134.2.222.

- Dawson P, Busby P, McKay C, Clark G. Short-term auditory memory in children using cochlear implants and its relevance to receptive language. Journal of Speech, Language, and Hearing Research. 2002;45:789–801. doi: 10.1044/1092-4388(2002/064).

- Donaldson GS, Nelson DA. Place-pitch sensitivity and its relation to consonant recognition by cochlear implant listeners using the MPEAK and SPEAK speech processing strategies. Journal of the Acoustical Society of America. 2000;107:1645–1658. doi: 10.1121/1.428449.

- Dorman MF, Loizou PC. The identification of consonants and vowels by cochlear implant patients using a 6-channel CIS processor and by normal hearing listeners using simulations of processors with two to nine channels. Ear and Hearing. 1998;19:162–166. doi: 10.1097/00003446-199804000-00008.

- Eddington DK, Dobelle WH, Brackmann DE, Mladejovsky MG, Parkin JL. Auditory prostheses research with multichannel intracochlear stimulation in man. Annals of Otology, Rhinology, & Laryngology, Suppl 53. 1978;87(6 part 2):1–39.

- Eisner F, McQueen JM. The specificity of perceptual learning in speech processing. Perception & Psychophysics. 2005;67:224–238. doi: 10.3758/bf03206487.

- Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. Journal of Speech, Language, and Hearing Research. 1997;40:1201–1215. doi: 10.1044/jslhr.4005.1201.

- Fu QJ, Nogaki G, Galvin JJ. Auditory training with spectrally shifted speech: implications for cochlear implant patient auditory rehabilitation. Journal of the Association for Research in Otolaryngology. 2005;6:180–189. doi: 10.1007/s10162-005-5061-6.

- Fu QJ, Galvin J, Wang X, Nogaki G. Moderate auditory training can improve speech performance of adult cochlear implant patients. Acoustics Research Letters Online. 2006;6(3):106–111.

- Gfeller K. Aural rehabilitation of music listening for adult cochlear implant recipients: addressing learner characteristics. Music Therapy Perspectives. 2001;19(2):88–95.

- Herman R, Pisoni DB. Perception of “elliptical speech” following cochlear implantation: use of broad phonetic categories in speech perception. The Volta Review. 2003;102(4):321–347.

- Hervais-Adelman A, Davis MH, Johnsrude IS, Carlyon RP. Perceptual learning of noise vocoded words: Effects of feedback and lexicality. Journal of Experimental Psychology: Human Perception and Performance. 2008;34(2):460–474. doi: 10.1037/0096-1523.34.2.460.

- House WF, Berliner KI, Eisenberg LS. The cochlear implant: 1980 update. Acta Oto-Laryngologica. 1981;91:457–462. doi: 10.3109/00016488109138528.

- IEEE. IEEE Recommended Practice for Speech Quality Measurements. New York: Institute of Electrical and Electronic Engineers; 1969.

- Kaiser AR, Svirsky MA. Using a personal computer to perform real-time signal processing in cochlear implant research. Paper presented at the Proceedings of the IXth IEEE-DSP Workshop; Hunt, TX; October 15–18, 2000.

- Kraljic T, Samuel AG. Generalization in perceptual learning for speech. Psychonomic Bulletin & Review. 2006;13(2):262–268. doi: 10.3758/bf03193841.

- Lockhart RS. Levels of processing, transfer-appropriate processing, and the concept of robust encoding. Memory. 2002;10(5–6):397–403. doi: 10.1080/09658210244000225.

- Loebach JL, Pisoni DB. Perceptual learning of spectrally degraded speech and environmental sounds. Journal of the Acoustical Society of America. 2008;123(2):1126–1139. doi: 10.1121/1.2823453.

- Malgady RG, Johnson MG. Recognition memory for literal, figurative, and anomalous sentences. Bulletin of the Psychonomic Society. 1977;9(3):214–216.

- Marks LE, Miller GA. The role of semantic and syntactic constraints in the memorization of English sentences. Journal of Verbal Learning and Verbal Behavior. 1964;3:1–5.

- Martin SM, Gierut JA. Sublexical effects in phonological treatment. Poster presented at the Symposium on Research in Child Language Disorders; Madison, WI. 2004.

- McCabe M, Chiu P. A comparison of speech recognition training programs for cochlear implant users: a simulation study. Poster session presented at the 146th meeting of the Acoustical Society of America; Austin, TX. 2003.

- McConkey-Robbins A. Rehabilitation after cochlear implantation. In: Niparko J, Kirk K, Mellon N, McConkey A, Tucci D, Wilson B, editors. Cochlear Implants: Principles and Practices. Philadelphia, PA: Lippincott Williams & Wilkins; 2000.

- Michelson RP. Electrical stimulation of the human cochlea. A preliminary report. Archives of Otolaryngology. 1971;93:317–323. doi: 10.1001/archotol.1971.00770060455016.

- Miller GA, Isard S. Some perceptual consequences of linguistic rules. Journal of Verbal Learning and Verbal Behavior. 1963;2:217–228.

- Mirman D, McClelland JL, Holt LL. An interactive Hebbian account of lexically guided tuning of speech perception. Psychonomic Bulletin & Review. 2006;13(6):958–965. doi: 10.3758/bf03213909.

- Morris CD, Bransford JD, Franks JJ. Levels of processing versus transfer-appropriate processing. Journal of Verbal Learning and Verbal Behavior. 1977;16:519–533.

- Pisoni D, Cleary M, Geers A, Tobey E. Individual differences in effectiveness of cochlear implants in children who are prelingually deaf: New process measures of performance. The Volta Review. 2000;101:111–164.

- Rajaram S, Srinivas K, Roediger H. A transfer-appropriate processing account of context effects in word-fragment completion. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24(4):993–1004.

- Reed CM, Delhorne LA. Reception of environmental sounds through cochlear implants. Ear and Hearing. 2005;26(1):48–61. doi: 10.1097/00003446-200502000-00005.

- Remez RE, Rubin PE, Berns SM, Pardo JS, Lang JM. On the perceptual organization of speech. Psychological Review. 1994;101(1):129–156. doi: 10.1037/0033-295X.101.1.129.

- Roediger HL, Buckner RL, McDermott KB. Components of processing. In: Foster JK, Jelicic M, editors. Memory: Systems, process, or function? Oxford, UK: Oxford University Press; 1999.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Stelmachowicz PG, Hoover BM, Lewis DE, Kortekaas RWL, Pittman AL. The relation between stimulus context, speech audibility, and perception for normal-hearing and hearing-impaired children. Journal of Speech, Language, and Hearing Research. 2000;43:902–914. doi: 10.1044/jslhr.4304.902.

- Sweetow RW, Sabes JH. Technological advances in aural rehabilitation: Applications and innovative methods of service delivery. Trends in Amplification. 2007;11(2):101–111. doi: 10.1177/1084713807301321.