Abstract

Background

A growing body of literature shows that patients accept the use of computers in clinical care. Nonetheless, studies have shown that computers unequivocally change both verbal and non-verbal communication style and increase patients' concerns about the privacy of their records. We found no studies that evaluated the effect of Electronic Health Records (EHRs) on psychiatric patient satisfaction specifically, nor any conducted exclusively in a psychiatric treatment setting. Because psychiatric diagnosis and evaluation rely especially heavily on communication, and because the confidentiality of psychiatric records is emphasized, the results of previous studies may not apply equally to psychiatric patients.

Method

We examined the association between EHR use and changes to the patient-psychiatrist relationship. A patient satisfaction survey was administered to psychiatric patient volunteers prior to and following implementation of an EHR. All subjects were adult outpatients with chronic mental illness.

Results

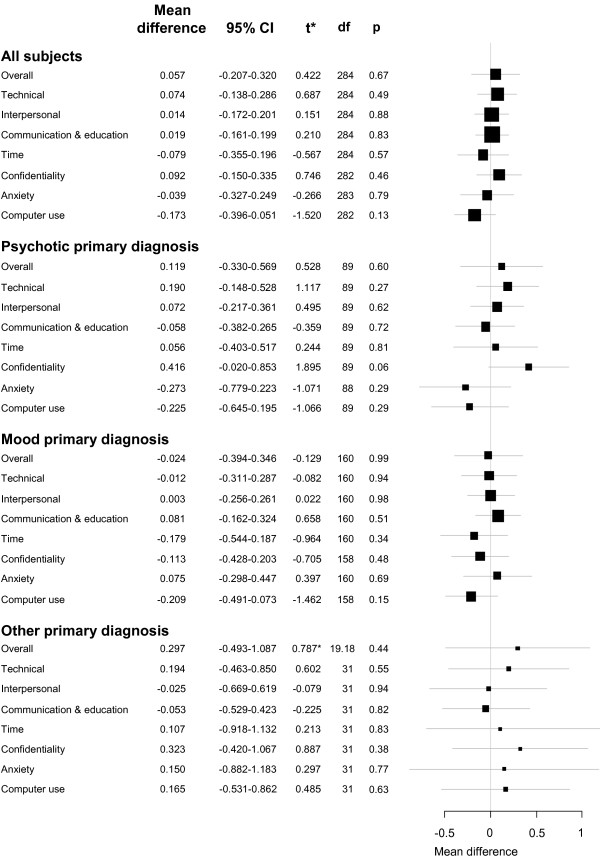

Survey responses were grouped into categories of "Overall," "Technical," "Interpersonal," "Communication & Education," "Time," "Confidentiality," "Anxiety," and "Computer Use." Multiple unpaired, two-tailed t-tests comparing pre- and post-implementation groups showed no significant differences (at the 0.05 level) in any questionnaire category, either for all subjects combined or when subjects were stratified by primary diagnosis category.

Conclusions

While many barriers to the adoption of electronic health records do exist, concerns about disruption to the patient-psychiatrist relationship need not be a prominent focus. Attention to communication style, interpersonal manner, and computer proficiency may help maintain the quality of the patient-psychiatrist relationship following EHR implementation.

Background

The current emphasis on the adoption and use of Electronic Health Records (EHRs) is well known. The Institute of Medicine advocated for EHR use as early as 2001 [1]. The Bush administration created the Office of the National Coordinator for Health Information Technology and set the goal of nationwide EHR implementation by 2014 [2,3]. The American Recovery and Reinvestment Act of 2009 will provide $20 billion in funding for health information technology, while at the same time stipulating that physician practices which do not use a certified EHR by 2014 may forfeit up to 3% of their Medicare reimbursements [4]. Recent Medicare and Medicaid legislation provides a 2% incentive for physicians to implement e-prescribing by 2009, while instituting a 2% penalty for those that do not by 2012 [5].

Although initial investment costs have become more favorable, barriers to EHR adoption remain [6]. Among these are effects on eye contact, time with the patient, and clinical workflow [7,8]; lack of interoperability between different EHR systems [9,10]; the need for training and the effects on time utilization [11]; culture changes, changes in the distribution of power, and user resistance [12]; uncertain or equivocal benefits [13,14]; and the introduction of new errors and other types of unintended consequences [15,16].

Patient satisfaction, however, does not seem to be a barrier. Since the 1980s, numerous studies have shown little change to overall patient satisfaction when physicians use computers in a clinical setting [17-23]. Patients generally seem to accept the use of computers in the delivery of their care. Some more recent studies have indicated an increase in patient satisfaction when EHRs are used [24,25]. Other studies have shown, however, that certain aspects of the patient-physician relationship are altered by computer use. Communication style becomes less fluent [26-29] and concerns about confidentiality of the health record increase [22,30-34]. Some early studies suggested that computer use may lead to increases (or smaller decreases) in anxiety over the course of an outpatient encounter [35-37] or that physicians who use computers during encounters are seen as "less ideal" than those who don't [38].

Unfortunately, psychiatric patients may be disproportionately influenced by these changes. The patient-psychiatrist relationship is arguably more reliant on communication skills, confidentiality, and psychodynamic interpretation than are patient-physician relationships in non-psychiatric specialties. Makoul [39] found that electronic records may lead to more "complete" documentation, but also observed a non-significant decrease in the amount of "patient-centered" communication and exploration of psychosocial issues. Changes to communication patterns [40] or eye contact [41] could conceivably lead practitioners to overlook or misinterpret the verbal and non-verbal cues that often prompt refined lines of inquiry. Similarly, the physical placement of computer equipment (such as in corners or around the perimeter of a room) could make sustained observation of patient behavior difficult, or lead to changes in the psychiatrist's body language that patients might misinterpret as lack of interest. The stigma of mental illness may magnify patients' concerns about confidentiality, leading to less open or less truthful communication [33,40,42], which could in turn alter screening for suicide or other high-risk events. Because symptoms of anxiety are associated with diagnoses of depression, bipolar disorder, schizophrenia, substance use, and posttraumatic stress disorder, changes in anxiety brought about by EHR use could potentially affect the accurate evaluation of these disorders. The "idealism" study by Cruickshank [38], performed in the United Kingdom in the early 1980s, is of uncertain significance today. It could represent discomfort with the then-emerging technology of the desktop computer, or a preference for a more traditional approach to medicine. More recent studies, however, have likened the computer to a "third party" in the examination room, one that alters the physician's focus on the patient and the quality of the therapeutic dyad [43-45].

We found no studies that looked exclusively at the effect of EHR use on the relationship between patients and their psychiatrists. This study investigates the effect of EHR use among psychiatric outpatients. A group of 161 psychiatric outpatients completed satisfaction surveys before EHR adoption, and another 141 completed surveys at least 4 months after EHR adoption. The primary objective was to examine the association between EHR use and aspects of the patient-psychiatrist relationship. We hypothesized that EHR use would decrease patient satisfaction scores related to communication, confidentiality, and anxiety.

Methods

Study Design

We used a quasi-experimental, pre-test and post-test design approved by the University of New Mexico (UNM) Health Sciences Center Human Research Review Committee (HRRC No. 04-365). The quasi-independent variable was exposure to paper charting (before an EHR implementation) or electronic charting (after implementation). The dependent variable was the quality of the patient-psychiatrist relationship as measured by a self-administered, paper-based questionnaire. Patient primary diagnosis was also recorded as a covariate.

Instrument & Data Collection

Because of its ease of administration and its public availability, we chose the Rand Corporation's previously validated Patient Satisfaction Questionnaire-18 (PSQ-18) as a starting point in survey design [46]. The PSQ-18 captures seven dimensions of satisfaction, including "General Satisfaction," "Technical Quality," "Interpersonal Manner," "Communication," "Financial Aspects," "Time Spent with Doctor," and "Accessibility and Convenience." In order to control for acquiescence bias, the PSQ-18 applies balanced keying, in which both positively and negatively worded questions are included. Subjects record their responses on a five-point Likert scale ranging from "Strongly Agree" (1) to "Strongly Disagree" (5). During scoring, the scores for positively-worded questions are reversed so that for all questions, low scores consistently indicate low satisfaction and high scores consistently indicate high satisfaction.
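The balanced-keying step can be illustrated with a short Python sketch. It assumes responses are stored as integers coded as described above (1 = "Strongly Agree" through 5 = "Strongly Disagree") and that a subscale score is the mean of its item scores after reversal. The item identifiers and the choice of which items count as positively worded are hypothetical, inferred from the item wording in Table 1; this is not the RAND scoring program.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 1 = "Strongly Agree", 5 = "Strongly Disagree"

def reverse(response: int) -> int:
    """Reverse-key a 1-5 Likert response (1<->5, 2<->4, 3 unchanged)."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response out of range: {response}")
    return SCALE_MIN + SCALE_MAX - response

def subscale_score(responses, positively_worded):
    """Mean item score for one subscale after reverse-keying positive items.

    responses: dict mapping a (hypothetical) item ID to its raw 1-5 response.
    positively_worded: set of item IDs whose raw scores must be reversed.
    Higher results indicate greater satisfaction.
    """
    return mean(
        reverse(raw) if item in positively_worded else raw
        for item, raw in responses.items()
    )

# Hypothetical IDs for the two Confidentiality items in Table 1:
#   "conf_safe"  = "My psychiatric record is kept safe."             (positively worded)
#   "conf_worry" = "I worry about who sees my psychiatric record."   (negatively worded)
score = subscale_score({"conf_safe": 1, "conf_worry": 4}, positively_worded={"conf_safe"})
print(score)  # 4.5
```

Here a respondent who strongly agrees that the record is kept safe (raw 1, reversed to 5) and disagrees that they worry about who sees it (raw 4, kept as is) receives a Confidentiality score of 4.5, near the high-satisfaction end of the scale.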

We included all of the original PSQ-18 questions except for those in the "Financial Aspects" and "Accessibility & Convenience" subscales. We removed those questions because the literature review did not suggest that EHR use would change patients' attitudes towards these factors. Where necessary to make questions psychiatry-specific, we replaced the word "medical" with "psychiatric," and "doctor" or "physician" with "psychiatrist." This resulted in a draft of only 12 questions. Next, we added questions from an unpublished and unvalidated survey that had been drafted locally during study inception. This locally drafted survey included all of the PSQ-18 subscales as well as three additional subscales: "Anxiety," "Computer use," and "Confidentiality." The resulting composite draft, consisting of both PSQ-18 and locally drafted questions, included 49 questions.

Because questions on the locally drafted survey had been rationally derived without statistical analysis, we solicited feedback on survey design and comprehensibility from a convenience sample of six inpatient volunteers from the UNM Psychiatric Center inpatient wards. We used the feedback to re-word confusing questions and to rank the questions by importance as perceived by the patients. In the final survey, we included all of the PSQ-18 questions (except for those in the "Financial Aspects" and "Accessibility & Convenience" subscales) and retained only enough of the highest-ranking local questions to yield a one-page survey with at least two questions in each subscale. This final, composite survey contained 23 questions, 12 from the PSQ-18 and 11 from the local survey. The questions and subscales of the final survey are shown in Table 1. We retained the original PSQ-18 Likert scale and practice of balanced keying.

Table 1.

Survey subscales and questions

| Subscales & questions | Original PSQ-18 subscale* |
|---|---|
| Overall: | |
| The psychiatric care I have been receiving is just about perfect. | General satisfaction |
| I am dissatisfied with some things about the psychiatric care I receive. | General satisfaction |
| Technical: | |
| I have some doubts about the ability of the psychiatrists who treat me. | Technical quality |
| Sometimes psychiatrists make me wonder if their diagnosis is correct. | Technical quality |
| My psychiatrist could be a lot better. | local |
| I think my psychiatrist's office has everything needed to provide complete psychiatric care. | Technical quality |
| When I go for psychiatric care, they are careful to check everything when treating and examining me. | Technical quality |
| Interpersonal: | |
| Psychiatrists act too businesslike and impersonal toward me. | Interpersonal manner |
| I wish that I had a different psychiatrist. | local |
| My psychiatrist treats me in a very friendly and courteous manner. | Interpersonal manner |
| Communication & Education: | |
| Psychiatrists sometimes ignore what I tell them. | Communication |
| My psychiatrist understands what I tell him or her. | local |
| The psychiatrist answers all of my questions. | local |
| My psychiatrist is too quiet. | local |
| Psychiatrists are good about explaining the reasons for tests. | Communication |
| Time: | |
| Those who provide my psychiatric care sometimes hurry too much when they treat me. | Time spent with doctor |
| Psychiatrists usually spend plenty of time with me. | Time spent with doctor |
| Confidentiality: | |
| My psychiatric record is kept safe. | local |
| I worry about who sees my psychiatric record. | local |
| Anxiety: | |
| I worry about the future. | local |
| I worry about my psychiatric care. | local |
| Computer Use: | |
| The computer gets in the way of the psychiatrist. | local |
| I am comfortable with the computer in my psychiatrist's office. | local |

*In the "Original PSQ-18 subscale" column, "local" indicates the question was based on an unpublished survey that had been drafted by the Principal Investigator during study inception. Otherwise, the question was based on the PSQ-18 and this column shows its PSQ-18 subscale. The Confidentiality, Anxiety, and Computer Use subscales contain locally drafted questions only and are not part of the original PSQ-18 scoring system. PSQ-18 questions belonging to the "Financial Aspects" and "Accessibility & Convenience" subscales were not used.

Setting & Subjects

Between November 2004 and December 2005, 161 pre-implementation subjects were recruited. A total of 141 post-implementation surveys were completed between December 2007 and December 2008. The 24-month interval between collection periods resulted from unanticipated delays in the EHR implementation date. It also included a four-month acclimation period between full-scale implementation and the beginning of post-implementation recruitment. This acclimation period was intended to avoid capturing transient effects while physicians became more proficient with the EHR.

All subjects were adult, ambulatory outpatients seen in the University of New Mexico Psychiatric Center (UNM-PC) Continuing Care Clinics. Approximately 2000 chronically mentally ill patients attend these clinics, which are staffed by approximately 10 attending physicians, 5 residents, 2 certified nurse practitioners, and 10 nurses. Approximately 20 to 40 patients per day are treated for a wide range of psychiatric disorders, including mood, psychotic, anxiety, and personality disorders. Treatment focuses on medication management, although short-term psychotherapies are used for select patients. Although case management is widely employed, the vast majority of patients are stabilized on medication and live independently in the community. Dually diagnosed patients do attend these clinics, but most patients whose primary diagnosis is substance use-related are seen at a different UNM facility. Additionally, patients with dementia or developmental disorders attend other clinics and were therefore not sampled. Patients who spoke no English (estimated to be less than 1% of the clinic population) were excluded from the study due to limited bilingual resources. Patients who required psychiatric hospital admission directly from their clinic appointment were also excluded. During the study period there were no significant changes to the clinic routine other than EHR implementation.

Consent & Procedure

Potential subjects were approached as they checked out from their outpatient appointments and asked if they would like to participate in a research project investigating the effect of computer use on the patient-psychiatrist relationship. Using a protocol based on order of arrival at the checkout desk, we attempted to approach every patient who checked out from clinic during the data collection periods. Patients who indicated interest were taken to an office or a secluded area of the waiting room, where the purpose, risks, and voluntary nature of the study were fully explained to them. Those who continued to express an interest in participating gave written consent. Each subject was permitted to complete only one satisfaction survey in each study period.

We obtained the participants' written consent for a psychiatric record review and manually recorded their most recent primary diagnosis from their psychiatric record. For comparison of the pre- and post-implementation groups, we also collected race, age, and sex from their hospital record.

Data Analysis

Target enrollment was 160 subjects per group. This would allow unpaired, two-tailed t-tests to detect a 5% change in survey responses with a 5% chance of Type I error (α = 0.05), a 20% chance of Type II error (80% power), and an assumed standard deviation of 0.8 (on a five-point Likert scale). Because actual enrollment was less than our target, the smallest detectable effect size increased to 7% at the same Type I and Type II error rates.
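The target of 160 subjects per group is consistent with the standard normal-approximation formula for comparing two means, n = 2((z₁₋α/₂ + z₁₋β)σ/Δ)² per group. The Python sketch below assumes that a "5% change" means 0.25 points (5% of the five-point scale); that reading is ours rather than a statement from the study, and an exact t-based calculation, such as the one R or dedicated power software would perform, gives a slightly larger n.

```python
from math import ceil, sqrt
from statistics import NormalDist

def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Approximate subjects per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) * sd / delta) ** 2.
    """
    z = NormalDist()  # standard normal distribution
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return ceil(2 * (z_sum * sd / delta) ** 2)

def detectable_difference(n1, n2, sd, alpha=0.05, power=0.80):
    """Smallest mean difference detectable with groups of size n1 and n2 (same approximation)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return z_sum * sd * sqrt(1 / n1 + 1 / n2)

# Assumption: a "5% change" is read as 0.25 points on the five-point scale.
print(n_per_group(delta=0.25, sd=0.8))                   # 161
print(round(detectable_difference(160, 160, sd=0.8), 3))  # about 0.25 points
```

Because the detectable difference scales with √(1/n₁ + 1/n₂), enrolling fewer subjects than planned raises the minimum detectable effect, which is the reason the study's detectable effect grew when enrollment fell short of target.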

The internal consistency reliability of the composite survey was assessed using standardized Cronbach's coefficient alpha. Comparison between pre-implementation and post-implementation groups was by chi-square tests for categorical variables and by two-tailed, unpaired t-tests for continuous variables. All t-tests used pooled variance except for the "Overall" subscale of the Mood stratum which used the Welch approximation to degrees of freedom due to unequal variances. All statistical analyses and graphics were prepared using version 2.9.0 of the open source and freely available R programming language and environment for statistical computing [47].
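The analysis plan above distinguishes pooled-variance (Student's) t-tests from the Welch approximation. The Python sketch below, which uses only the standard library and hypothetical data, shows how the two statistics and their degrees of freedom differ; it is not the authors' R code. Computing p-values would additionally require a t-distribution CDF, for example `scipy.stats.ttest_ind(a, b, equal_var=False)` for the Welch version. In R, `t.test()` applies the Welch correction by default and uses the pooled variance only when `var.equal = TRUE`.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(a, b):
    """Student's two-sample t statistic assuming equal variances."""
    n1, n2 = len(a), len(b)
    # Pooled variance weights each sample variance by its degrees of freedom.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

def welch_t(a, b):
    """Welch's t statistic with Welch-Satterthwaite approximate degrees of freedom."""
    n1, n2 = len(a), len(b)
    v1, v2 = variance(a) / n1, variance(b) / n2  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Hypothetical subscale scores for two small groups with unequal spread.
pre = [1, 2, 3]
post = [1, 3, 5, 7, 9]
print(pooled_t(pre, post))  # df = 6 (n1 + n2 - 2)
print(welch_t(pre, post))   # df between 5 and 6, reduced because variances differ
```

When group variances are similar, the two versions give nearly identical results; when they differ, Welch's degrees of freedom shrink and the test becomes more conservative. The df of 284 reported for age in Table 2 equals 149 + 137 − 2, the pooled-variance value.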

Results

Comparison of Groups

A total of 161 pre-implementation and 141 post-implementation surveys were initially collected. After eliminating duplicate surveys and surveys excluded because of patient withdrawal or unclear inclusion criteria found on subsequent review, 149 pre-implementation and 137 post-implementation surveys remained. During data analysis, infrequently reported races and infrequently given primary diagnoses were combined into "Other" categories. Table 2 compares demographic characteristics of the pre- and post-implementation groups. The two groups were similar with respect to age, race, sex, and primary diagnosis. Characteristics of non-responders were not recorded.

Table 2.

Comparison of groups

| | Pre-implementation | Post-implementation | χ² (t for age) | df | p |
|---|---|---|---|---|---|
| Number of respondents | 149 | 137 | | | |
| Average age (years) | 49.9 | 47.6 | t = 1.823 | 284 | 0.07 |
| % female (n) | 50% (75) | 55% (75) | 0.747 | 1 | 0.39 |
| Race* | | | 2.654 | 2 | 0.27 |
| Caucasian | 91 (61%) | 74 (54%) | | | |
| Hispanic | 39 (26%) | 48 (35%) | | | |
| Other | 19 (13%) | 15 (11%) | | | |
| Primary diagnosis** | | | 0.555 | 2 | 0.78 |
| Mood | 83 (55%) | 80 (59%) | | | |
| Psychotic | 48 (32%) | 43 (31%) | | | |
| Other | 19 (13%) | 14 (10%) | | | |

* Racial categories of "Black or African American" (9 pre-implementation; 7 post-implementation), "American Indian or Alaskan Native" (0 pre-implementation; 2 post-implementation), and "Other" (9 pre-implementation; 6 post-implementation) were combined into one "Other" category for statistical analysis.

** Primary diagnosis categories of "Anxiety" (16 pre-implementation; 8 post-implementation), "Substance use" (0 pre-implementation; 3 post-implementation), and "Other" (3 pre-implementation; 3 post-implementation) were combined into one "Other" category for statistical analysis.
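As an illustration of the chi-square tests described under Data Analysis, the following Python sketch recomputes the Pearson chi-square test of independence (without continuity correction) using the race rows of Table 2 as input. The p-value uses the closed form exp(−χ²/2), which is exact only for 2 degrees of freedom; other tables would need a general chi-square survival function such as `scipy.stats.chi2.sf`. This is a sketch, not the authors' analysis code.

```python
from math import exp

def chi_square_independence(table):
    """Pearson chi-square statistic and df for an r x c table (no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df

def p_value_df2(stat):
    """Upper-tail chi-square probability; exp(-x/2) is exact only for df = 2."""
    return exp(-stat / 2)

# Race rows of Table 2: columns are Caucasian, Hispanic, Other.
race = [
    [91, 39, 19],  # pre-implementation
    [74, 48, 15],  # post-implementation
]
stat, df = chi_square_independence(race)
assert df == 2, "closed-form p-value is only valid for df = 2"
print(f"chi2 = {stat:.3f}, df = {df}, p = {p_value_df2(stat):.2f}")
# chi2 = 2.654, df = 2, p = 0.27
```

The printed values match the race row of Table 2.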

Survey Internal Consistency Reliability

Table 3 shows the internal consistency reliability for each subscale of the composite survey. Only one subscale (Technical) met the 0.7 level that is usually considered the minimum for desirable reliability. The Communication & Education subscale scored lower at 0.64, although this value is identical to that of the original PSQ-18 Communication subscale [46]. Two of the three locally generated subscales, Confidentiality and Computer Use, had the lowest standardized alphas (0.24 and 0.38, respectively); the third, Anxiety, scored 0.59.
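Standardized alpha depends only on the number of items k and the mean inter-item correlation r̄: α = k·r̄ / (1 + (k − 1)·r̄). A minimal Python sketch follows, assuming item scores have already been reverse-keyed and are arranged as one list per item over the same respondents; it is an illustration, not the R code used in the study. Because most subscales of the composite survey contain only two or three items, the formula also helps explain the low values: for k = 2 it reduces to 2r/(1 + r), so the Confidentiality alpha of 0.24 corresponds to an inter-item correlation of only about 0.14.

```python
from itertools import combinations
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of item scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def alpha_from_mean_r(k, r_bar):
    """Standardized Cronbach's alpha from item count k and mean inter-item correlation."""
    return k * r_bar / (1 + (k - 1) * r_bar)

def standardized_alpha(items):
    """items: one list of (reverse-keyed) scores per item, same respondents in each."""
    r_bar = mean(pearson_r(x, y) for x, y in combinations(items, 2))
    return alpha_from_mean_r(len(items), r_bar)

# Two-item subscale: alpha = 2r / (1 + r), so alpha = 0.24 implies r of about 0.14.
r = 0.24 / (2 - 0.24)
print(round(r, 2), round(alpha_from_mean_r(2, r), 2))  # 0.14 0.24
```

Since alpha rises with k for a fixed r̄, short subscales can show low reliability even when their items are modestly related.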

Table 3.

Internal consistency reliability for composite survey subscales

| Composite survey subscale | Standardized alpha | Original PSQ-18 subscale | Original PSQ-18 alpha |
|---|---|---|---|
| Overall | 0.58 | General | 0.75 |
| Technical | 0.77 | Technical quality | 0.74 |
| Interpersonal | 0.57 | Interpersonal manner | 0.66 |
| Communication & Education | 0.64 | Communication | 0.64 |
| Time | 0.67 | Time Spent with Doctor | 0.77 |
| Confidentiality | 0.24 | | |
| Anxiety | 0.59 | | |
| Computer Use | 0.38 | | |

Electronic Health Record Associations

Figure 1 shows the change in average survey sub-scores before and after EHR implementation. For all subjects, and for subjects stratified by their primary diagnosis, none of the changes reached statistical significance. A post-hoc analysis of average responses for each question separately (rather than grouped into subscales) also showed no significant changes between pre- and post-implementation groups. Raw, mean survey scores are available from the primary author on request.

Figure 1.

Change in satisfaction sub-scores. *All t-tests were based on pooled variance except for the Overall subscale of the Mood stratum which used the Welch approximation to degrees of freedom due to unequal variance.

Discussion

Although the adoption of Electronic Health Records in the United States has proceeded cautiously, in today's technologically-dependent environment the trend is not likely to be reversed. Instead, emphasis may best be placed on the design of efficient EHR systems [48], determination of best practices for their use [49], attention to communication skills (regardless of the charting modality) [50], and more rigorous collection of data to assess the true impact of EHR use on quality of care, costs, efficiency, and patient views [24].

To our knowledge, this is the first study to assess the impact of EHR use on the quality of the patient-psychiatrist relationship in a behavioral health venue. Consistent with several decades of research in the non-psychiatric realm, we found no change in satisfaction scores among adult psychiatric patients when an EHR was used during outpatient encounters instead of paper charting. Our results should lessen the concerns of behavioral health providers and clinic managers who are hesitant to adopt EHRs because of potentially negative reactions from their patients. Contrary to our hypotheses and some prior studies, we found no change in patient satisfaction in the Communication & Education, Confidentiality, Anxiety, or any other satisfaction subscale.

Because our samples were powered to detect a 7% change in satisfaction, Type II error is unlikely to explain the absence of significant changes of that magnitude, although smaller changes could have gone undetected. The lack of findings may therefore represent a truly negligible impact of EHR use on the patient-psychiatrist relationship, or it may be due to study limitations.

Limitations

Interpretation of our results should be tempered in light of the study's limitations. First, all of our measures were surrogate estimates. We did not attempt to directly measure actual changes in communication patterns, anxiety, or behavior (on the part of either the patient or the psychiatrist). We also did not measure changes in actual patient outcomes.

Second, our survey was not validated. Although it was based on a validated instrument, the changes we made to it resulted in lower internal consistency reliability than the PSQ-18 for most comparable subscales. In addition, the PSQ-18 was originally validated in a population that was not exclusively psychiatric, and its validity may not carry over fully to a psychiatric population. The post-hoc analysis, in which pre- and post-implementation responses to individual questions (as opposed to subscales) were compared, was performed to address this deficiency. Although there is uncertainty about the exact construct measured by each question, no individual question showed a statistically significant change in subjects' ratings. We retained the concept of subscales in our reporting for their face validity and as a way of summarizing data. To avoid invalid comparison with the original PSQ-18 subscales, the labels given to our composite subscales were slightly altered from those of the PSQ-18.

Third, the characteristics of any particular EHR system, or the way individual providers use it, can clearly affect patient-physician interaction [51]. We intentionally did not control for the EHR usage patterns of individual providers, in order to enhance the sense of patient-provider privacy and to keep the research project strictly separate from any expectations regarding EHR use. Instead, we relied on a large sample size and very low provider turnover to increase the probability that each provider would be equally represented in the pre-implementation and post-implementation groups.

Fourth, consistent with much survey research of a voluntary nature, our sampling strategy may have biased our samples towards subjects who were more likely to participate in the project because of high satisfaction.

Finally, our use of primary diagnosis offers only a coarse description of the patient pathology and personality characteristics that could affect a patient's reaction to EHR use. Many psychiatric diagnoses are co-morbid, particularly mood, personality, and anxiety disorders, and the disorder considered primary at any particular visit may not remain constant. This may have increased the heterogeneity of patient characteristics within each diagnostic stratum while also increasing the homogeneity between strata. Similarly, we did not differentiate between patients with and without personality disorders; because Axis II disorders are rarely recorded as the primary diagnosis, we did not attempt to stratify by Axis II pathology. Also, to maintain sufficient numbers of subjects in each diagnostic stratum, we grouped diagnoses by major diagnostic category (e.g., "mood disorder") rather than by actual primary diagnosis (e.g., "Major Depressive Disorder, recurrent, severe, without psychotic features"). This resulted in only three broad diagnostic strata: "Mood," "Psychotic," and "Other" disorders.

Conclusion

Consistent with previously published studies on EHR use and patient satisfaction, this study suggests that the use of an Electronic Health Record does not change the overall quality of the patient-psychiatrist relationship.

Patient satisfaction has been shown to affect patient compliance [52,53], treatment outcomes [54,55], malpractice suits [56,57], and the ability to remember instructions [58,59]. Communication skills have consistently been shown to affect patient satisfaction [60-62]. Therefore, factors that change communication patterns might also be expected to affect patient outcomes. Psychiatrists and psychiatric patients, who are especially reliant on and sensitive to communication skills, are understandably concerned about the potential impact of EHR use on the quality of care provided. This study increases the confidence with which we can extend prior EHR satisfaction studies into the psychiatric realm. While other barriers to EHR adoption do exist, concerns about excessive disruption to the patient-psychiatrist relationship need not be one of them.

Competing interests

None of the authors report any conflicts of interest, competing interests, or financial disclosures. The National Library of Medicine sponsored this study as part of an Individual Biomedical Informatics Fellowship Grant. The sponsor approved the study design as appropriate for the educational goals of the primary author's (RFS) fellowship, but played no role in the conduct of the study, data collection, data analysis, data interpretation, or preparation of the manuscript.

Authors' contributions

The primary author (RFS) is responsible for the study concept, initial design, data collection, data analysis, and initial manuscript preparation. MS contributed to study design aspects involving human research, statistical analysis, and data collection methods. RB and PJK participated in psychiatric and biomedical informatics aspects of the study design respectively. All authors read and approved the final manuscript.

Authors' Information

RFS is an assistant professor in the Department of Biomedical Informatics Research, Training and Scholarship. MS is a professor of Internal Medicine and the Associate Program Director of the UNM General Clinical Research Center Scholars' Program. RB is a Professor of Psychiatry and the Associate Dean for Clinical Affairs of the UNM School of Medicine. PJK is an Assistant Professor and the Director of Biomedical Informatics Research, Training and Scholarship in the UNM Health Sciences Library & Informatics Center. PJK also has an appointment in the Department of Internal Medicine.


Contributor Information

Randall F Stewart, Email: randallfstewart@gmail.com.

Philip J Kroth, Email: pkroth@salud.unm.edu.

Mark Schuyler, Email: mschuyler@salud.unm.edu.

Robert Bailey, Email: bbailey@salud.unm.edu.

Acknowledgements

This study was supported by the National Library of Medicine Grant No. 1 F37 LM008747. Statistical consultation was funded through DHHS-NIH-NCRR GCRC Grant No. 5M01-RR00997 and provided by Ron Schrader, Ph.D., UNM Professor of Math and Statistics.

References

- Institute of Medicine; Committee on Quality of Health Care in America. To Err is Human: Building a Safer Health System. Washington, D.C.: National Academies Press; 2000. [PubMed] [Google Scholar]

- Steinbrook R. Health care and the American Recovery and Reinvestment Act. N Engl J Med. 2009;360(11):1057–60. doi: 10.1056/NEJMp0900665. [DOI] [PubMed] [Google Scholar]

- Heath highway. Nature. 2009. pp. 259–60. [DOI] [PubMed]

- Ledue C. Physicians to receive incentives for EHR use. Healthcare Finance News. 2009. http://www.healthcarefinancenews.com/news/physicians-receive-incentives-ehr-use

- Ford EW, Menachemi N, Peterson LT, Huerta TR. Resistance is futile: but it is slowing the pace of EHR adoption nonetheless. J Am Med Inform Assoc. 2009;16:274–81. doi: 10.1197/jamia.M3042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ash JS, Bates DW. Factors and forces affecting EHR system adoption: report of a 2004 ACMI discussion. J Am Med Inform Assoc. 2005;12:8–12. doi: 10.1197/jamia.M1684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Linder JA, Schnipper JL, Tsurikova R, Melnikas AJ, Volk LA, Middleton B. Barriers to electronic health record use during patient visits. AMIA Annu Symp Proc. 2006. pp. 499–503. [PMC free article] [PubMed]

- Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, Morton SC, Shekelle PG. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:E12–E22. doi: 10.7326/0003-4819-144-10-200605160-00125. [DOI] [PubMed] [Google Scholar]

- Baron RJ, Fabens EL, Schiffman M, Wolf E. Electronic health records: just around the corner? or over the cliff? Ann Intern Med. 2005;143(3):222–6. doi: 10.7326/0003-4819-143-3-200508020-00008. [DOI] [PubMed] [Google Scholar]

- Jaspers MW, Knaup P, Schmidt D. The computerized patient record: where do we stand. Yearb Med Inform. 2006. pp. 29–39. [PubMed]

- Poissant L, Pereira J, Tamblyn R, Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc. 2005;12:505–16. doi: 10.1197/jamia.M1700. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jeijden MJ Van Der, Tange HJ, Troost J, Hasman A. Determinants of success of inpatient clinical information systems: a literature review. J Am Med Inform Assoc. 2003;10(3):235–43. doi: 10.1197/jamia.M1094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: s systematic review. Arch Intern Med. 2003;163(12):1409–16. doi: 10.1001/archinte.163.12.1409. [DOI] [PubMed] [Google Scholar]

- Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support system on physician performance and patient outcomes: a systemic review. JAMA. 1998;280(15):1339–46. doi: 10.1001/jama.280.15.1339. [DOI] [PubMed] [Google Scholar]

- Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–12. doi: 10.1197/jamia.M1471. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13:547–56. doi: 10.1197/jamia.M2042.

- Aydin CE, Rosen PN, Jewell SM, Felitti VJ. Computers in the examining room: the patient's perspective. Proc Annu Symp Comput Appl Med Care. 1995. pp. 824–8.

- Koide D, Asonuma M, Naito K, Igawa S, Shimizu S. Evaluation of electronic health records from viewpoint of patients. Stud Health Technol Inform. 2006;122:304–8.

- Ridsdale L, Hudd S. Computers in the consultation: the patient's view. Br J Gen Pract. 1994;44:367–9.

- Garrison GM, Bernard ME, Rasmussen NH. 21st-century health care: the effect of computer use by physicians on patient satisfaction at a family medicine clinic. Fam Med. 2002;34(5):362–8.

- Penrod LE, Gadd CS. Attitudes of academic-based and community-based physicians regarding EMR use during outpatient encounters. Proc AMIA Symp. 2001. pp. 528–32.

- Ornstein S, Bearden A. Patient perspectives on computer-based medical records. J Fam Pract. 1994;38(6):606–10.

- Solomon GL, Dechter M. Are patients pleased with computer use in the examination room? J Fam Pract. 1995;41:241–4.

- Delpierre C, Cuzin L, Fillaux J, Alvarez M, Massip P, Lang T. A systematic review of computer-based patient record systems and quality of care: more randomized clinical trials or a broader approach? Int J Qual Health Care. 2004;16(5):407–16. doi: 10.1093/intqhc/mzh064.

- Johnson KB, Serwint JR, Fagan LA, Thompson RE. Computer-based documentation: effects on parent-provider communication during pediatric health maintenance encounters. Pediatrics. 2008;122(3):590–8. doi: 10.1542/peds.2007-3005.

- Greatbatch D, Heath C, Campion P, Luff P. How do desk-top computers affect the doctor-patient interaction? Fam Pract. 1995;12:32–6. doi: 10.1093/fampra/12.1.32.

- Warshawsky SS, Pliskin JS, Urkin J, Cohen N, Sharon A, Binstok M, Margolis CZ. Physician use of a computerized medical record system during the patient encounter: a descriptive study. Comput Methods Programs Biomed. 1994;43(3-4):269–73. doi: 10.1016/0169-2607(94)90079-5.

- Sullivan F, Mitchell E. Has general practitioner computing made a difference to patient care? A systematic review of published reports. BMJ. 1995;311:848–52. doi: 10.1136/bmj.311.7009.848.

- Brownbridge G, Lilford RJ, Tindale-Biscoe S. Use of a computer to take booking histories in a hospital antenatal clinic. Acceptability to midwives and patients and effects on the midwife-patient interaction. Med Care. 1988;26(5):474–87. doi: 10.1097/00005650-198805000-00004.

- Churgin PG. Computerized patient records: the patients' response. HMO Pract. 1995;9(4):182–5.

- Gadd CS, Penrod LE. Dichotomy between physicians' and patients' attitudes regarding EMR use during outpatient encounters. Proc AMIA Symp. 2000. pp. 275–9.

- Rethans J, Hoppener P, Wolfs G, Diederiks J. Do personal computers make doctors less personal? Br Med J. 1988;296:1446–8. doi: 10.1136/bmj.296.6634.1446.

- Mitchell E, Sullivan F. A descriptive feast but an evaluative famine: systematic review of published articles on primary care computing during 1980-97. BMJ. 2001;322:279–82. doi: 10.1136/bmj.322.7281.279.

- Carman D, Britten N. Confidentiality of medical records: the patient's perspective. Br J Gen Pract. 1995;45(398):485–8.

- Brownbridge G, Herzmark GA, Wall TD. Patient reactions to doctors' computer use in general practice consultations. Soc Sci Med. 1985;20(1):47–52. doi: 10.1016/0277-9536(85)90310-7.

- Cruickshank PJ. Patient stress and the computer in the consulting room. Soc Sci Med. 1982;16:1371–6. doi: 10.1016/0277-9536(82)90034-X.

- Pringle M, Robins S, Brown G. Computers in the surgery. The patient's view. Br Med J (Clin Res Ed). 1984;288:289–91. doi: 10.1136/bmj.288.6413.289.

- Cruickshank PJ. Patient rating of doctors using computers. Soc Sci Med. 1985;21(6):615–22. doi: 10.1016/0277-9536(85)90200-X.

- Makoul G, Curry RH, Tang PC. The use of electronic medical records: communication patterns in outpatient encounters. J Am Med Inform Assoc. 2001;8:610–5. doi: 10.1136/jamia.2001.0080610.

- McGrath JM, Arar NH, Pugh JA. The influence of electronic medical record usage on nonverbal communication in the medical interview. Health Informatics J. 2007;13:105–18. doi: 10.1177/1460458207076466.

- Duggan AP, Parrott RL. Physicians' nonverbal rapport building and patients' talk about the subjective component of illness. Hum Commun Res. 2001;27:299–311.

- Annas GJ. The Rights of Patients: The ACLU Guide to Patient Rights. 2nd ed. Carbondale, Ill.: Southern Illinois University Press; 1989.

- Margalit RS, Roter D, Dunevant MA, Larson S, Reis S. Electronic medical record use and physician-patient communication: an observational study of Israeli primary care encounters. Patient Educ Couns. 2006;61(1):134–41. doi: 10.1016/j.pec.2005.03.004.

- Als AB. The desk-top computer as a magic box: patterns of behavior connected with the desk-top computer: GPs' and patients' perceptions. Fam Pract. 1997;14:17–23. doi: 10.1093/fampra/14.1.17.

- Hsu J, Huang J, Fung V, Robertson N, Jimison H, Frankel R. Health information technology and physician-patient interactions: impact of computers on communication during outpatient primary care visits. J Am Med Inform Assoc. 2005;12:474–80. doi: 10.1197/jamia.M1741.

- Marshall GN, Hays RD. The Patient Satisfaction Questionnaire Short-Form (PSQ-18). Santa Monica, CA: RAND; 1994.

- R Development Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2009.

- Häyrinen K, Saranto K, Nykänen P. Definition, structure, content, use and impacts of electronic health records: a review of the research literature. Int J Med Inform. 2008;77(5):291–304. doi: 10.1016/j.ijmedinf.2007.09.001.

- Ventres W, Kooienga S, Marlin R. EHRs in the exam room: tips on patient-centered care. Fam Pract Manag. 2006;13(3):45–7.

- Frankel R, Altschuler A, George S, Kinsman J, Jimison H, Robertson NR, Hsu J. Effects of exam-room computing on clinician-patient communication: a longitudinal qualitative study. J Gen Intern Med. 2005;20:677–82. doi: 10.1111/j.1525-1497.2005.0163.x.

- Rouf E, Whittle J, Lu N, Schwartz M. Computers in the exam room: differences in physician-patient interaction may be due to physician experience. J Gen Intern Med. 2007;22(1):43–8. doi: 10.1007/s11606-007-0112-9.

- Starfield B. Stability and change: another view. Am J Public Health. 1981;71(3):301–2. doi: 10.2105/AJPH.71.3.301.

- Wolf MH, Putnam SM, James SA, Stiles WB. The Medical Interview Satisfaction Scale: development of a scale to measure patient perceptions of physician behavior. J Behav Med. 1978;1(4):391–401. doi: 10.1007/BF00846695.

- Ries R, Jaffe C, Comtois K, Kitchell M. Treatment satisfaction compared with outcome in severe dual disorders. Community Ment Health J. 1999;35(3):213–21. doi: 10.1023/A:1018737201773.

- Priebe S, Gruyters T. Patients' assessment of treatment predicting outcome. Schizophr Bull. 1995;21(1):87–94. doi: 10.1093/schbul/21.1.87.

- Levinson W, Roter DL, Mullooly JP, Dull VT, Frankel RM. Physician-patient communication. The relationship with malpractice claims among primary care physicians and surgeons. JAMA. 1997;277(7):553–9. doi: 10.1001/jama.277.7.553.

- Beckman HB, Markakis KM, Suchman AL, Frankel RM. The doctor-patient relationship and malpractice. Lessons from plaintiff depositions. Arch Intern Med. 1994;154(12):1365–70. doi: 10.1001/archinte.154.12.1365.

- Ley P, Spelman MS. Communications in an out-patient setting. Br J Soc Clin Psychol. 1965;4(2):114–6. doi: 10.1111/j.2044-8260.1965.tb00449.x.

- Ware JE, Davies AR. Behavioural consequences of consumer dissatisfaction with medical care. Eval Program Plann. 1983;6:291–7. doi: 10.1016/0149-7189(83)90009-5.

- White J, Levinson W, Roter D. "Oh, by the way ...": the closing moments of the medical visit. J Gen Intern Med. 1994;9(1):24–8. doi: 10.1007/BF02599139.

- Hall JA, Dornan MC. Meta-analysis of satisfaction with medical care: description of research domain and analysis of overall satisfaction levels. Soc Sci Med. 1988;27(6):637–44. doi: 10.1016/0277-9536(88)90012-3.

- Woolley FR, Kane RL, Hughes CC, Wright DD. The effects of doctor-patient communication on satisfaction and outcome of care. Soc Sci Med. 1978;12(2A):123–8.