Abstract

Emerging neurophysiologic evidence indicates that motor systems are activated during the perception of speech, but whether this activity reflects basic processes underlying speech perception remains a matter of considerable debate. Our contribution to this debate is to report direct behavioral evidence that specific articulatory commands are activated automatically and involuntarily during speech perception. We used electropalatography to measure whether motor information activated from spoken distractors would yield specific distortions on the articulation of printed target syllables. Participants produced target syllables beginning with /k/ or /s/ while listening to the same syllables or to incongruent rhyming syllables beginning with /t/. Tongue–palate contact for target productions was measured during the articulatory closure of /k/ and during the frication of /s/. Results revealed “traces” of the incongruent distractors on target productions, with the incongruent /t/-initial distractors inducing greater alveolar contact in the articulation of /k/ and /s/ than the congruent distractors. Two further experiments established that (i) the nature of this interference effect is dependent specifically on the articulatory properties of the spoken distractors; and (ii) this interference effect is unique to spoken distractors and does not arise when distractors are presented in printed form. Results are discussed in terms of a broader emerging framework concerning the relationship between perception and action, whereby the perception of action entails activation of the motor system.

Keywords: motor theory, perception–action relationship, speech production, interference, electropalatography

One of the most exciting questions in the neuroscience of language concerns the involvement of the motor system in the perception of speech: is the motor system activated during speech perception, and does it play a causal role? Key studies using functional MRI have demonstrated that the brain regions involved in the perception of speech overlap with those involved in the production of speech (1) in a manner that seems to be articulator specific (2). Similarly, studies using transcranial magnetic stimulation (TMS) have shown potentiation of motor cortex representations of the lip (3) and tongue (4) muscles when participants listen to speech. Finally, recent work using repetitive TMS has revealed that disruption to regions of the premotor cortex impacts on perceptual discrimination of speech sounds (5) in a somatotopic manner (6). These studies are all consistent with the proposal that the motor system is activated (and perhaps even essential) in the perception of speech.

However, although there is agreement that motor regions can be activated in speech perception studies, recent reviews of the literature have raised two important challenges over precisely what drives this activation (7, 8). The first challenge stems from the fact that neuroimaging data have been inconsistent, with relatively few studies showing motor activity at a whole-brain corrected level of significance compared with matched nonspeech conditions (7). Some investigators have attributed this inconsistency to the baselines used across studies, claiming that those studies using a complex acoustic baseline (e.g., musical rain, spectrally rotated speech) are less likely to yield motor activity unique to speech perception than those studies using a silent baseline (7). The implication of this first challenge is that the motor activity sometimes observed in speech perception studies has nothing to do with the phonetic content of speech but rather is driven by some acoustic event common to speech and nonspeech sounds (7, 8). The second challenge concerns the possibility that motor activation arises not as a result of basic processes underlying speech perception but as a result of the requirements of certain tasks or listening situations (7–9). The motor system could be recruited strategically to aid the interpretation of degraded speech, for example, or in conjunction with covert rehearsal processes used to improve performance on particular speech perception tasks. The implication of this second challenge is that there is a system for speech perception that is independent of the motor system and that evidence for motor involvement in speech perception arises as a consequence of neural processes that are outside of those normally and necessarily recruited in speech perception. 
This proposal is consistent with dual-pathway conceptions of speech perception embodied in dorsal–ventral stream accounts in which the primary mode of speech comprehension involves ventral auditory processes that are nonmotoric (10, 11).

Our approach to this issue was to investigate the behavioral consequences of heard speech on the production of speech. Specifically, we hypothesized that if articulatory information is activated in speech perception, then this information should interfere with articulation in a scenario in which participants are asked to produce a target syllable while listening to a different auditory distractor. Rather than assessing whether conflicting auditory information introduces a delay in responding (a finding that would be difficult to attribute unambiguously to a particular level of processing), our approach was to investigate how an auditory distractor impacts upon the actual articulation of a different target. Our reasoning was that if articulatory information is activated in speech perception, then that information may interfere with speech production by introducing particular distortions of the target syllable that reflect the articulatory properties of the distractor.

This interference paradigm is characterized by two critical features that permit us to address the challenges described above in relation to neurophysiologic evidence for motor involvement in speech perception. First, the interference effects on speech production that we hypothesize are highly specific, reflecting particular phonetic properties of the spoken distractors. The observation of such specific distortions in the articulation of targets would be extremely difficult to reconcile with the view that motor activation in speech perception is driven by some acoustic event common to speech and nonspeech sounds (7). Second, the interference effects that we hypothesize constitute distortions of speech production, making the articulatory encoding of auditory distractors disadvantageous to performance. Thus, it would be difficult to argue that the motor system was being recruited strategically to assist participants with this particular speech perception task (7–9). Indeed, if there is a system for speech perception that is independent of the motor system, then participants should be highly motivated to use it in this situation.

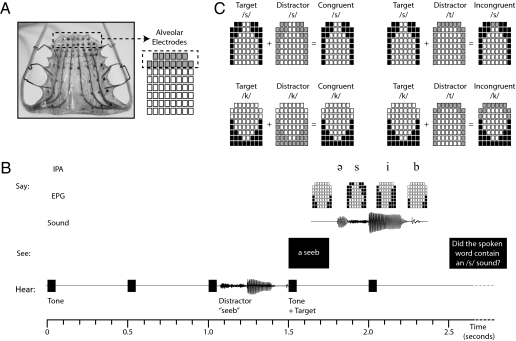

Recent studies in the area of experimental phonetics (12, 13) provide evidence that laboratory-induced speech errors often consist of articulatory elements of the intended targets as well as the responses actually produced. This evidence suggests that the interference effects under examination here could also be manifest as articulatory blends of the target and auditory distractor. Thus, to quantify whether and how auditory distractors impact on the articulation of target syllables, we used a speech physiologic technique known as electropalatography (EPG). EPG permits fine-grained analysis of spatiotemporal changes in the contact of the tongue against the roof of the mouth by sampling contact between the tongue and 62 sites on the palate every 10 ms (Fig. 1A). Thus, the use of EPG in this context will enable us to detect both transient and partial distortions of the articulatory gestures involved in speech production.

Fig. 1.

Experimental predictions and design. (A) Sample palate worn by participants, with alveolar electrodes highlighted; (B) structure of an experimental trial; (C) simplified schematic of the experimental predictions.

Targets and distractors were presented in the context of a specialized “tempo naming” paradigm that enabled us to control the time taken for each response (14). Participants were exposed to a series of five isochronous tones (500 ms apart), on the fourth of which a printed target preceded immediately by an auditory distractor was presented. Participants were required to produce the printed target in line with the fifth tone. After this sequence of events, participants made a phoneme monitoring judgment about the distractor (Fig. 1B). The tempo naming paradigm was used to force participants to pronounce the targets rapidly, thereby preventing them from resolving any interference caused by the distractors before the initiation of articulation (14).

Participants produced target syllables beginning with /k/ or /s/ (e.g., /kib/) while listening to the same syllables (congruent distractors) or to rhyming syllables beginning with /t/ (incongruent distractors). Because the production of /t/ requires a complete alveolar closure formed with the tongue tip, our prediction was that the production of targets in the incongruent distractor condition would be characterized by greater tongue–palate contact in the first two rows of the palate (representing the alveolar region) than would the production of targets in the congruent distractor condition (Fig. 1C). The target phonemes /k/ and /s/ were chosen to establish the generality of this interference effect. Because the production of /k/ requires a complete velar closure formed with the tongue body, any effects of /t/-initial distractors would be manifest on a different articulator (i.e., with a different place of articulation) than that used to produce the target phoneme. In contrast, because the production of /s/ requires a narrow alveolar channel formed with the tongue tip, any effects of /t/-initial distractors would be manifest on the same articulator used to produce the target phoneme, suggesting that the effect of motor activation can also be driven by dimensions such as the degree of constriction or manner of articulation. For both target phonemes, by analogy to models of manual action that propose a control system able to adjust motor programs in flight (15), we predicted that the magnitude of this interference effect would be greater in the initial stages of target production than in the final stages of target production.

Two further experiments were conducted to rule out potential alternative explanations for any interference effects observed, and to home in on a theoretical account of those effects. The first additional experiment replaced the /t/-initial distractors with /g/-initial distractors for the /k/ targets and with /z/-initial distractors for the /s/ targets (these distractors being the voiced equivalents of their respective targets). This experiment thus allowed us to determine whether our interference effects could be attributed to the specific alveolar properties of the /t/-initial distractors rather than being a manifestation of phonetic incongruency between heard distractors and intended targets in general. The second additional experiment used printed /t/-initial distractors instead of auditory ones, thus allowing us to establish whether our interference effects were specific to the spoken modality or also arise in another domain in which there are strong associative links to motor output. This experiment also allowed us to rule out covert rehearsal processes (possibly associated with short-term memory demands of the phoneme monitoring judgments on auditory distractors) as an explanation for any interference effects observed. Although it is unlikely that these rehearsal processes would play a significant role in our interference paradigm [it has been known for at least 25 years that the concurrent articulation of a different target abolishes subvocal rehearsal (16)], this experiment provided a further check, because it is also well known that the modality of presentation makes no difference to whether these rehearsal processes are engaged (16). If such processes were associated with judgments on auditory distractors, then they should also be associated with judgments on printed distractors.

Results

Experiment 1: Spoken Distraction.

The prediction was that we would observe an effect of congruency, with incongruent /t/-initial distractors leaving articulatory traces of themselves on the production of /k/ and /s/ target phonemes, in the form of increased alveolar contact. Results (Fig. 2 and Table 1) confirmed this prediction in revealing a main effect of congruency on tongue contact in the first two rows of the palate [F(1,4) = 32.76, P = 0.005]. This main effect of congruency was modulated by an interaction with time [F(1,4) = 8.89, P = 0.041], because the congruency effect was larger in the initial phase of target production than in the final phase. This congruency × time interaction was further modulated by target phoneme [F(1,4) = 9.20, P = 0.039] because the reduction in the size of the congruency effect toward the end of each phoneme was greater for /s/ targets than it was for /k/ targets, perhaps owing to the greater duration of /s/ targets (M = 145 ms) than /k/ targets (M = 80 ms). Further analyses revealed that the lexical status of targets and distractors did not influence the magnitude of the congruency effect in the initial portion of /k/ and /s/ target phonemes. Inspection of the individual subject data revealed that the congruency effect (and its predominance in the initial portion of targets) was apparent for each of the five participants (Fig. S1).

Fig. 2.

Congruency effect in each experiment as a function of time. Graph shows mean proportion tongue contact on alveolar electrodes during the initial and final portions of annotated segments collapsed across target phoneme. Error bars show SEM for each experiment after removing between-subject variance [suitable for repeated-measures comparisons (34)].

Table 1.

Tongue contact values for each target phoneme as a function of congruency and time for experiment 1

| Congruency | /k/ targets, initial | /k/ targets, final | /s/ targets, initial | /s/ targets, final |
|---|---|---|---|---|
| Congruent | 0.009 | 0.009 | 0.324 | 0.402 |
| Incongruent | 0.018 | 0.015 | 0.363 | 0.409 |

Values expressed as number of active contacts in the first two rows of the palate divided by the total number of contacts in these rows (n = 14).

To establish that the articulatory consequences of the auditory distractors were highly specific (i.e., arising only in the first two rows of the palate, consistent with the production of /t/), we conducted exactly the same analysis using average tongue contact values obtained in rows 3–8 of the palate. Because the tongue body (indicated by the region in rows 3–8 of the palate) is not a primary articulator in the production of /t/, we would not expect to observe any congruency effect in this analysis. Indeed, this analysis revealed no effect of congruency [F(1,4) = 1.17, P = 0.34], no interaction between congruency and time [F(1,4) = 1.41, P = 0.30], and no three-way interaction between congruency, time, and target phoneme [F(1,4) = 0.378, P = 0.57]. These analyses confirm that the articulatory traces left on target syllables by the incongruent /t/-initial distractors were highly specific: they were apparent in the alveolar region of the palate (rows 1 and 2) but not in the other regions of the palate (rows 3–8).

Experiment 2: Spoken Noncompeting Distraction.

Incongruent distractors in experiment 2 began with /g/ (for /k/ targets) and /z/ (for /s/ targets). If the increased alveolar contact observed in the first experiment was the result of incongruity itself (instead of being a specific articulatory manifestation of the /t/-initial distractors), then the same congruency effect should be observed in this case. Results (Fig. 2 and Table 2) showed no effects of congruency, or interactions between congruency, time, and target phoneme on tongue contact in the first two rows of the palate (all F < 1). Furthermore, cross-experiment statistical comparisons revealed a larger effect of congruency in the first experiment than in this experiment [F(1,4) = 12.74, P = 0.023]. These data confirm that the increased alveolar contact observed in experiment 1 was due specifically to the presence of an incongruent /t/-initial distractor characterized by a complete alveolar closure.

Table 2.

Tongue contact values for each target phoneme as a function of congruency and time for experiment 2

| Congruency | /k/ targets, initial | /k/ targets, final | /s/ targets, initial | /s/ targets, final |
|---|---|---|---|---|
| Congruent | 0.001 | 0.001 | 0.380 | 0.412 |
| Incongruent | 0.002 | 0.003 | 0.376 | 0.412 |

Values expressed as number of active contacts in the first two rows of the palate divided by the total number of contacts in these rows (n = 14).

Experiment 3: Printed Distraction.

This experiment was conducted (i) to test whether the interference effects observed in experiment 1 also arise in a domain in which there are strong associative links to the motor system; and (ii) to rule out subvocal rehearsal of distractors as an explanation for the interference effects observed. Results (Fig. 2 and Table 3) showed no effects of congruency (F < 1) and no interactions between congruency and time [F(1,4) = 1.96, P = 0.23] or between congruency, time, and target phoneme [F(1,4) = 2.57, P = 0.18] on tongue contact in the first two rows of the palate. Furthermore, cross-experiment statistical comparisons revealed a larger congruency effect in the first experiment than in this experiment [F(1,4) = 10.34, P = 0.032]. These results confirm that the interference effects observed in experiment 1 are unique to the perception of spoken distractors and undermine any account of those effects based on covert rehearsal.

Table 3.

Tongue contact values for each target phoneme as a function of congruency and time for experiment 3

| Congruency | /k/ targets, initial | /k/ targets, final | /s/ targets, initial | /s/ targets, final |
|---|---|---|---|---|
| Congruent | 0.004 | 0.004 | 0.331 | 0.393 |
| Incongruent | 0.001 | 0.003 | 0.352 | 0.395 |

Values expressed as number of active contacts in the first two rows of the palate divided by the total number of contacts in these rows (n = 14).

Discussion

The research reported in this article contributes to debate regarding the involvement of motor systems in the perception of speech. Although recent neurophysiologic findings have demonstrated that motor and premotor regions can be activated in speech perception (1–6), many have argued strongly that this activation arises as a result of neural processes outside the realm of normal speech perception (7–9). These include processes associated with the interpretation of complex acoustic signals not specific to speech (7) or processes associated with the strategic demands of particular speech perception tasks (7–9).

Our approach to this problem was to hypothesize that if articulatory information is activated in speech perception, then that information should interfere with the articulation of a different target in speech production. Participants were asked to read aloud printed targets beginning with /k/ and /s/ under deadline conditions, while also listening to an auditory distractor that matched the target (congruent) or that rhymed with the target but began with /t/ (incongruent). Specialized artificial palates were custom-fitted to each participant so that we could monitor tongue–palate contact during articulation of the targets. Results showed that articulation of the target phonemes /k/ and /s/ was modified by the phonetic characteristics of the auditory distractors. Specifically, the articulation of both /k/ and /s/ phonemes revealed significantly greater tongue–palate contact in the alveolar region of the palate when distractors began with /t/ (a phoneme characterized by a complete alveolar closure) than when distractors were identical to the targets. This interference effect was particularly pronounced in the initial portions of each target segment. Further experiments established that this interference effect was caused by the specific articulatory characteristics of the /t/-initial distractors and that it reflects processes unique to the perception of spoken (as opposed to printed) distractors.

The interference effects that we have observed permit us to address the two challenges described in the introduction regarding the nature of motor activation in speech perception. With respect to the first challenge, the fact that these interference effects were highly specific (reflecting the articulatory properties of the spoken distractors) lends substantial weight to the argument that motor activation in speech perception is driven by the phonetic content of speech, rather than by some acoustic event common to speech and nonspeech sounds (7). With respect to the second challenge, what is critical is that we observed these effects in a situation in which the articulatory encoding of auditory distractors was disadvantageous to performance (because it resulted in distorted speech tokens). Participants could not inhibit the articulatory encoding of the auditory distractors, implying that articulatory information is activated automatically and involuntarily in speech perception, rather than being recruited strategically to assist participants with challenging listening situations or particular tasks (7, 8). Although we cannot rule out dual-pathway conceptions of speech perception embodied in dorsal–ventral stream accounts (in which the primary route for speech comprehension involves ventral auditory processes that are nonmotoric) (10, 11), our evidence suggests that the operation of the dorsal pathway linking auditory regions to prefrontal and motor cortex cannot be suppressed even under task conditions that would directly favor its suppression.

Our interpretation of the interference effects reported in this article is that motor programs were activated by the auditory distractors, and when these conflicted with the motor programs activated by the printed targets, the motor programs combined, resulting in an intermediate articulatory outcome. The fact that these interference effects were unique to the spoken modality demonstrates that speech has a privileged status insofar as the mapping to articulation is concerned. However, although our work demonstrates the tight coupling between auditory and articulatory information, it does not allow us to conclude that motor processes are required in speech perception [as in single-pathway architectures in which motor representations mediate the acoustic and linguistic processing of speech (17, 18)]. Such inferences about causality can be drawn conclusively only by studying speech perception in situations in which motor processes are impaired through temporary lesions [as in TMS (5, 6)] or as a result of brain injury (19, 20). However, further work establishing the temporal character of motor activation in speech perception could also be important in this respect. If motor activation were a necessary precursor to speech comprehension, it would need to occur very rapidly indeed, given existing data concerning the time-course of spoken word recognition (21). This information could be gleaned in further work using the interference paradigm, for example, by varying the interval between distractor and target and measuring the resulting influence on the magnitude of interference.

Some may claim that evidence for motor involvement in speech perception is unsurprising given the strong co-occurrence between heard speech and produced speech (7). Specifically, it has been suggested that functional connections between neuron groups involved in articulatory and acoustic processing emerge simply as a result of associative learning (22) in the same way as distributed neuronal assemblies develop to bind the semantic representations of action words (e.g., kick, lick) and the motor representations used for implementing those actions (23). However, if the acoustic-to-articulatory links implicated by our findings emerged as a result of associative learning, then surely we should have observed articulatory interference effects when distractors were presented in printed form. Indeed, it is well accepted that there is a strong association between text and speech, a relationship that is central to adult skilled reading (24) and reading development (25). However, despite this strong association (and unlike the situation in which distractors were presented in the auditory modality), there is no evidence that articulatory information was activated when distractors were presented in this manner.

Although our data suggest that the link between speech perception and motor gestures is not one that can be explained by simple associative learning processes, we would not like to claim that this link arises through a specialized linguistic module encapsulated from other perceptual processes (26). Rather, we prefer to interpret our data in the context of a broader emerging framework, whereby the perception of action entails activation of the motor system. It has not escaped our attention that effects similar to the ones that we observed in speech have already been observed in research using kinematic analyses to investigate reaching and grasping behavior (27, 28). In a manner somewhat analogous to our findings, one study showed that grip apertures for grasping an apple were smaller when a cherry was presented as a distractor fruit than when an orange was presented as a distractor fruit (27). It seems unlikely to us that these effects should be interpreted as arising from wholly different mechanisms than those that we observed.

The finding that the articulatory characteristics of auditory distractors exert a highly specific interference effect on the articulation of target syllables argues strongly for the automatic recruitment of motor systems in speech perception, yet also raises numerous possibilities for future research. One interesting question concerns whether articulatory information is extracted from synthesized speech. On the basis of recent behavioral (29) and neurophysiologic (30) evidence suggesting that the mirror system underlying the perception of manual action is biologically tuned, it seems possible that the interference effects on articulation reported here would be observed only when auditory distractors comprise human speech. Having established a behavioral diagnostic for the activation of articulatory information in speech perception, we are now in a position to answer this and other important questions.

Materials and Methods

Participants.

Four women and one man between the ages of 21 and 50 years participated in the experiments [sample sizes of five or fewer participants are typical of EPG experiments (31, 32)]. Participants were all native speakers of English, had normal or corrected-to-normal vision, and had no known language, speech, or hearing deficits. Participants all signed a consent form approved by the Royal Holloway, University of London ethics committee.

Materials.

Twenty-four target syllables with a consonant–vowel or consonant–vowel–consonant structure were created. These syllables reflected every combination of two onsets (/k/ and /s/), three vowels (/i/, /a/, and /u/), and four codas (/p/, /b/, /m/, and null). During the experiment, participants were instructed to produce these syllables following a schwa (e.g., /ə kup/), and they appeared visually as the second constituent of a two-syllable stimulus (e.g., a koop).

Each of the target syllables was paired with a congruent distractor and an incongruent distractor. The congruent distractors were always phonologically identical to the targets. The nature of the incongruent distractors varied as a function of experiment: in experiments 1 and 3 they rhymed with the targets but began with /t/; in experiment 2 they rhymed with the targets but began with /g/ (in the case of /k/ targets) or /z/ (in the case of /s/ targets). Auditory distractors were produced by a female speaker of Standard Southern British English and were recorded directly to the hard drive of a personal computer (PC) at a sampling rate of 22 kHz. They were ≈400 ms in duration.

Each target syllable was presented twice with its congruent and incongruent distractors, yielding a total of 96 productions per participant per experiment. Stimuli were presented in a different random order for each participant.
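The stimulus design just described can be enumerated in a few lines. The following is a minimal sketch rather than the materials script used in the study; the function name `build_trials`, the ASCII phoneme labels, and the empty string standing for the null coda are assumptions made for illustration.

```python
from itertools import product

ONSETS = ["k", "s"]
VOWELS = ["i", "a", "u"]
CODAS = ["p", "b", "m", ""]  # "" stands for the null coda (assumed encoding)

# Incongruent distractor onset for each target onset, by experiment (from the text).
INCONGRUENT_ONSET = {
    1: {"k": "t", "s": "t"},
    2: {"k": "g", "s": "z"},
    3: {"k": "t", "s": "t"},
}


def build_trials(experiment, repetitions=2):
    """Return one dict per trial: target, distractor, and congruency."""
    trials = []
    for onset, vowel, coda in product(ONSETS, VOWELS, CODAS):
        rhyme = vowel + coda
        target = onset + rhyme
        distractors = {
            "congruent": target,
            "incongruent": INCONGRUENT_ONSET[experiment][onset] + rhyme,
        }
        for congruency, distractor in distractors.items():
            for _ in range(repetitions):
                trials.append(
                    {"target": target, "distractor": distractor, "congruency": congruency}
                )
    return trials


trials = build_trials(experiment=1)
print(len({t["target"] for t in trials}))  # distinct target syllables
print(len(trials))  # productions per participant per experiment
```

Under these assumptions the enumeration gives 24 distinct targets and 96 trials, matching the counts reported above. Shuffling the list separately for each participant (for example with `random.shuffle`) would correspond to the randomized presentation order.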

Apparatus.

Participants were tested in a sound-treated room in the Department of Psychology, Royal Holloway, University of London, using a PC-based WinEPG electropalatography system (Articulate Instruments).

Each participant was fitted with an artificial acrylic palate suitable for use with the WinEPG system. The artificial palates were made from plaster cast impressions of each participant’s mouth. Embedded in each palate were 62 electrodes in eight evenly spaced rows from the front to the back of the palate (with six electrodes in the front row and eight electrodes in every other row). These electrodes registered tongue–palate contact in a binary manner, from behind the upper front teeth to the junction of the hard and soft palate. The wires connected to each electrode were bundled into two thin tubes that were fed out from the corners of the mouth and connected to the control box of the WinEPG system. Participants had practiced using their palates while speaking and were subject to a 30-min acclimatization exercise before the testing session.
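The electrode layout lends itself to a simple data structure. As an illustrative sketch (not the WinEPG file format, which is not described here), a single EPG frame can be held as eight rows of binary values, and the contact measure used in the tables computed as the proportion of active electrodes in a chosen set of rows. The names `ROW_SIZES`, `empty_frame`, and `contact_proportion` are hypothetical.

```python
# Front row has 6 electrodes; each of the remaining seven rows has 8.
ROW_SIZES = [6] + [8] * 7

ALVEOLAR_ROWS = (1, 2)           # rows immediately behind the upper front teeth
OTHER_ROWS = tuple(range(3, 9))  # rows 3-8


def empty_frame():
    """A frame with no tongue-palate contact (all electrodes off)."""
    return [[0] * size for size in ROW_SIZES]


def contact_proportion(frame, rows):
    """Proportion of active electrodes in the given 1-based rows."""
    active = sum(sum(frame[r - 1]) for r in rows)
    total = sum(ROW_SIZES[r - 1] for r in rows)
    return active / total


# Hypothetical frame with three active electrodes in the alveolar region.
frame = empty_frame()
frame[0][2] = 1
frame[1][3] = 1
frame[1][4] = 1

print(sum(ROW_SIZES))                            # total electrodes on the palate
print(contact_proportion(frame, ALVEOLAR_ROWS))  # 3 of 14 electrodes active
print(contact_proportion(frame, OTHER_ROWS))     # no contact in rows 3-8
```

The first two rows contain 6 + 8 = 14 electrodes, which is the denominator (n = 14) given in the table notes.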

Stimulus presentation was controlled by DMDX software (33) running on a Pentium 4 PC. The recording of acoustic and EPG data was controlled by Articulate Assistant software (version 1.12) running on a separate Pentium 4 PC, at sampling rates of 22 kHz and 100 Hz, respectively. Acoustic data were collected using an AKG microphone placed ≈10–12 cm diagonally from the center of the lower lip. Stimulus presentation was integrated with the acoustic and EPG data collection, such that each auditory stimulus delivered to participants was also delivered to the acoustic record.

Procedure.

Participants were seated ≈16 inches (≈40 cm) from the computer monitor and were asked to produce the syllables /di/ and /da/ several times each while the EPG apparatus was calibrated. Participants were given two practice blocks before each experiment.

Each experimental trial comprised a series of five 50-ms tones separated by intervals of 500 ms. On the fourth tone, a target stimulus appeared on screen. The appearance of this target stimulus was immediately preceded by an auditory or printed distractor (printed in a different color from the target). Participants were instructed to produce the target stimulus (including the initial schwa) on the fifth tone. To ensure that participants attended to the distractors, they were required to make a judgment about the distractor after their spoken response, in which they were asked about the presence of a particular sound or letter. These judgments required a “YES” response on 50% of trials and a “NO” response on the other 50% of trials.
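The timing of a trial can be laid out explicitly. The sketch below assumes that the 500-ms interval is measured from tone onset to tone onset, which the text does not state; the names are illustrative, and the exact lead of the distractor over the target is left out because it is not specified.

```python
TONE_DURATION_MS = 50
TONE_INTERVAL_MS = 500  # assumed to be onset-to-onset
N_TONES = 5


def trial_schedule():
    """Tone onsets and the two events tied to them, in ms from trial start."""
    tone_onsets = [i * TONE_INTERVAL_MS for i in range(N_TONES)]
    return {
        "tone_onsets": tone_onsets,
        "target_onset": tone_onsets[3],  # printed target appears on the fourth tone
        "response_cue": tone_onsets[4],  # target is to be produced on the fifth tone
    }


schedule = trial_schedule()
print(schedule["tone_onsets"])
print(schedule["response_cue"] - schedule["target_onset"])  # time available to respond
```

Under this assumption, participants have one inter-tone interval between the appearance of the target and the moment at which they must produce it.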

Data Preparation.

Targets were scrutinized for accuracy, with tokens excluded from the analyses according to perceptual criteria (e.g., producing the wrong onset, vowel, or coda). Four tokens were removed from experiment 1 (0.83% of the data), eight tokens were removed from experiment 2 (1.67% of the data), and seven tokens were removed from experiment 3 (1.46% of the data).
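The exclusion percentages follow from the design: five participants each contributed 96 productions per experiment, giving 480 tokens per experiment. A short check of the arithmetic, with names chosen for illustration:

```python
PARTICIPANTS = 5
PRODUCTIONS_PER_PARTICIPANT = 96
REMOVED = {1: 4, 2: 8, 3: 7}  # tokens removed in each experiment (from the text)

TOTAL_TOKENS = PARTICIPANTS * PRODUCTIONS_PER_PARTICIPANT


def percent_removed(n_removed, n_total=TOTAL_TOKENS):
    """Percentage of tokens excluded, rounded to two decimal places."""
    return round(100 * n_removed / n_total, 2)


for experiment, n_removed in REMOVED.items():
    print(experiment, percent_removed(n_removed))
```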

Target utterances were annotated using waveform and spectrographic data. The initial segment of the /s/ targets, defined by a period of acoustic frication resulting from a turbulent airstream being forced through a narrow channel, was labeled using various acoustic markers. The onset of /s/ was defined by the beginning of high-frequency noise in the spectrogram, whereas the offset of /s/ was defined by (i) the end of high-frequency noise in the spectrogram; and (ii) the onset of the glottal pulse and first formant of the following vowel in the spectrogram. For the /k/ targets, we were interested in the closure phase of production, during which time the vocal tract is occluded completely and there is no acoustic radiation from the lips. Because the target syllables were preceded by a schwa, the onset of the /k/ closure was defined by the minimal amplitude in the waveform and the onset of silence in the spectrogram. The offset of the closure period was defined by the sudden burst associated with the release of the articulatory closure. This burst was visible on the spectrogram as the onset of mid-high frequency energy and on the waveform as an increase in amplitude.

The onset and offset boundaries of /s/ and /k/ were used to extract these target segments from each utterance. The duration of each segment was then used to calculate two equally spaced time points within it, at one third and two thirds of its duration. To test our hypothesis that auditory /t/-initial distractors would leave alveolar traces of themselves on the articulation of target syllables, tongue contact values in rows 1 and 2 of the palate (the rows immediately behind the upper front teeth) were extracted at each of four time points (onset, 1/3, 2/3, offset) for every /k/ and /s/ target segment in each experiment. Palates for the first two time points were averaged and treated as the “initial” portion of the segment, whereas palates for the last two time points were averaged and treated as the “final” portion of the segment. Corresponding values were computed for tongue contact in rows 3–8 of the palate, so that the specificity of any effect of congruency in the alveolar region of the palate could be established.
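The extraction steps described above can be illustrated with a minimal sketch. It is not the analysis code used in the study: the function names, the representation of a palate frame as a list of rows of 0/1 contact values (front row first), and the use of the proportion of contacted electrodes as the "tongue contact value" are all assumptions made for illustration.

```python
from statistics import mean

def time_points(onset, offset):
    """Return the four sampling times: onset, 1/3, 2/3, and offset."""
    duration = offset - onset
    return [onset, onset + duration / 3, onset + 2 * duration / 3, offset]

def contact_proportion(frame, rows):
    """Proportion of contacted electrodes in the given rows of one palate frame.

    frame: list of rows (front to back), each a list of 0/1 values (assumed layout).
    rows:  0-based row indices, e.g. [0, 1] for rows 1 and 2 of the palate.
    """
    cells = [cell for r in rows for cell in frame[r]]
    return sum(cells) / len(cells)

def portion_means(frames, rows):
    """Average contact over the first two and the last two time points.

    frames: four palate frames sampled at onset, 1/3, 2/3, and offset.
    Returns (initial, final) contact values for the chosen rows.
    """
    values = [contact_proportion(f, rows) for f in frames]
    return mean(values[:2]), mean(values[2:])

# Toy example with a 3-row, 4-column palate (real palates have more electrodes).
front_contact = [[1, 1, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
no_contact = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
frames = [front_contact, front_contact, no_contact, no_contact]

print(time_points(100, 400))            # sampling times in ms
print(portion_means(frames, [0, 1]))    # (initial, final) for the front two rows
```

Because averaging is linear, averaging the per-frame contact values gives the same result as averaging the palates first and then computing contact. Passing the indices of rows 3–8 instead of rows 1 and 2 yields the comparison measure for the rest of the palate.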

Statistical Analysis.

EPG data were analyzed in a repeated-measures ANOVA with target phoneme (/k/ or /s/), congruency (congruent or incongruent), and time (initial or final portion of target phonemes) as factors. Because we were interested specifically in the congruency effect, only main effects and interactions involving that factor are reported.
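As an illustration of the congruency main effect in such a design, the sketch below relies on a standard identity: for a within-participant factor with two levels, the main-effect F in a repeated-measures ANOVA equals the square of the paired t statistic computed on each participant's means collapsed over the other factors. The data layout and function name are hypothetical, the design is assumed to be balanced (every participant contributes all eight cells), and the sketch does not reproduce the interactions or the p values of the reported analysis.

```python
from math import sqrt
from statistics import mean, stdev

def congruency_main_effect(cells):
    """F statistic and degrees of freedom for the congruency main effect.

    cells: {participant: {(phoneme, congruency, time): contact value}}
           with congruency in {"congruent", "incongruent"} (assumed layout).
    Returns (F, (df_effect, df_error)).
    """
    diffs = []
    for by_condition in cells.values():
        # Collapse over target phoneme and time within each congruency level.
        congruent = mean(v for key, v in by_condition.items()
                         if key[1] == "congruent")
        incongruent = mean(v for key, v in by_condition.items()
                           if key[1] == "incongruent")
        diffs.append(incongruent - congruent)
    n = len(diffs)
    # Paired t statistic on the per-participant differences.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t * t, (1, n - 1)
```

The returned F would be evaluated against an F distribution with the returned degrees of freedom; that step is omitted here because it needs a statistics library beyond the Python standard library.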

Acknowledgments

This work was supported by the UK Biotechnology and Biological Sciences Research Council (BB/E003419/1) and the UK Medical Research Council (U.1055.04.013.1.1).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0904774107/DCSupplemental.

References

- 1. Wilson SM, Saygin AP, Sereno MI, Iacoboni M. Listening to speech activates motor areas involved in speech production. Nat Neurosci. 2004;7:701–702. doi: 10.1038/nn1263.

- 2. Pulvermüller F, et al. Motor cortex maps articulatory features of speech sounds. Proc Natl Acad Sci USA. 2006;103:7865–7870. doi: 10.1073/pnas.0509989103.

- 3. Watkins KE, Strafella AP, Paus T. Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia. 2003;41:989–994. doi: 10.1016/s0028-3932(02)00316-0.

- 4. Fadiga L, Craighero L, Buccino G, Rizzolatti G. Speech listening specifically modulates the excitability of tongue muscles: A TMS study. Eur J Neurosci. 2002;15:399–402. doi: 10.1046/j.0953-816x.2001.01874.x.

- 5. Meister IG, Wilson SM, Deblieck C, Wu AD, Iacoboni M. The essential role of premotor cortex in speech perception. Curr Biol. 2007;17:1692–1696. doi: 10.1016/j.cub.2007.08.064.

- 6. D’Ausilio A, et al. The motor somatotopy of speech perception. Curr Biol. 2009;19:381–385. doi: 10.1016/j.cub.2009.01.017.

- 7. Scott SK, McGettigan C, Eisner F. A little more conversation, a little less action—candidate roles for the motor cortex in speech perception. Nat Rev Neurosci. 2009;10:295–302. doi: 10.1038/nrn2603.

- 8. Lotto AJ, Hickok GS, Holt LL. Reflections on mirror neurons and speech perception. Trends Cogn Sci. 2009;13:110–114. doi: 10.1016/j.tics.2008.11.008.

- 9. Hickok G. Speech perception does not rely on motor cortex: Response to D’Ausilio et al. 2009. Available at: http://www.cell.com/current-biology/comments_Dausilio Accessed April 24, 2009.

- 10. Hickok G, Poeppel D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- 11. Scott SK, Johnsrude IS. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 2003;26:100–107. doi: 10.1016/S0166-2236(02)00037-1.

- 12. Goldrick M, Blumstein SE. Cascading activation from phonological planning to articulatory processes: Evidence from tongue twisters. Lang Cogn Process. 2006;21:649–683.

- 13. Pouplier M. Tongue kinematics during utterances elicited with the SLIP technique. Lang Speech. 2007;50:311–341. doi: 10.1177/00238309070500030201.

- 14. Kello CT, Plaut DC. Strategic control in word reading: Evidence from speeded responding in the tempo naming task. J Exp Psychol Learn Mem Cogn. 2000;26:719–750. doi: 10.1037//0278-7393.26.3.719.

- 15. Glover S. Separate visual representations in the planning and control of action. Behav Brain Sci. 2004;27:3–24. doi: 10.1017/s0140525x04000020. discussion 24–78.

- 16. Baddeley AD, Lewis VJ, Vallar G. Exploring the articulatory loop. Q J Exp Psychol. 1984;36:233–252.

- 17. Liberman AM, Whalen DH. On the relation of speech to language. Trends Cogn Sci. 2000;4:187–196. doi: 10.1016/s1364-6613(00)01471-6.

- 18. Hickok G, Holt LL, Lotto AJ. Response to Wilson: What does motor cortex contribute to speech perception? Trends Cogn Sci. 2009;13:330–331. doi: 10.1016/j.tics.2008.11.008.

- 19. Moineau S, Dronkers NF, Bates E. Exploring the processing continuum of single-word comprehension in aphasia. J Speech Lang Hear Res. 2005;48:884–896. doi: 10.1044/1092-4388(2005/061).

- 20. Utman JA, Blumstein SE, Sullivan K. Mapping from sound to meaning: Reduced lexical activation in Broca’s aphasics. Brain Lang. 2001;79:444–472. doi: 10.1006/brln.2001.2500.

- 21. Marslen-Wilson WD, Tyler LK. The temporal structure of spoken language understanding. Cognition. 1980;8:1–71. doi: 10.1016/0010-0277(80)90015-3.

- 22. Pulvermüller F. The Neuroscience of Language: On Brain Circuits of Words and Serial Order. Cambridge, UK: Cambridge Univ Press; 2008.

- 23. Pulvermüller F. Brain mechanisms linking language and action. Nat Rev Neurosci. 2005;6:576–582. doi: 10.1038/nrn1706.

- 24. Rastle K, Brysbaert M. Masked phonological priming effects in English: Are they real? Do they matter? Cognit Psychol. 2006;53:97–145. doi: 10.1016/j.cogpsych.2006.01.002.

- 25. Rack JP, Snowling MJ, Olson RK. The nonword reading deficit in developmental dyslexia: A review. Reading Res Q. 1992;27:29–53.

- 26. Liberman AM, Mattingly IG. The motor theory of speech perception revised. Cognition. 1985;21:1–36. doi: 10.1016/0010-0277(85)90021-6.

- 27. Castiello U. Grasping a fruit: Selection for action. J Exp Psychol Hum Percept Perform. 1996;22:582–603. doi: 10.1037//0096-1523.22.3.582.

- 28. Glover S, Dixon P. Semantics affect the planning but not control of grasping. Exp Brain Res. 2002;146:383–387. doi: 10.1007/s00221-002-1222-6.

- 29. Kilner JM, Paulignan Y, Blakemore SJ. An interference effect of observed biological movement on action. Curr Biol. 2003;13:522–525. doi: 10.1016/s0960-9822(03)00165-9.

- 30. Tai YF, Scherfler C, Brooks DJ, Sawamoto N, Castiello U. The human premotor cortex is ‘mirror’ only for biological actions. Curr Biol. 2004;14:117–120. doi: 10.1016/j.cub.2004.01.005.

- 31. Narayanan SS, Alwan AA, Haker K. Toward articulatory-acoustic models for liquid approximants based on MRI and EPG data. Part I. The laterals. J Acoust Soc Am. 1997;101:1064–1077. doi: 10.1121/1.418030.

- 32. Rastle K, Harrington J, Coltheart M, Palethorpe S. Reading aloud begins when the computation of phonology is complete. J Exp Psychol Hum Percept Perform. 2000;26:1178–1191. doi: 10.1037//0096-1523.26.3.1178.

- 33. Forster KI, Forster JC. DMDX: A windows display program with millisecond accuracy. Behav Res Methods Instrum Comput. 2003;35:116–124. doi: 10.3758/bf03195503.

- 34. Loftus GR, Masson MEJ. Using confidence intervals in within-subject designs. Psychon Bull Rev. 1994;1:476–490. doi: 10.3758/BF03210951.
