Abstract

This study examined the effects of increased processing load on the closed-set speech-identification performance of young and older adults in a one-talker background. Since the older adults had impaired hearing, speech-identification performance was measured for spectrally shaped stimuli comparable to those experienced when wearing well-fit hearing aids. There were three groups of listeners: (1) 19 older adults with high-frequency sensorineural hearing loss; (2) 10 young adults with normal hearing who were assessed with the same spectrally shaped stimuli as the older adults; (3) 9 young adults with normal hearing who were assessed without spectral shaping and at a poorer target-to-competition ratio in an effort to equate overall performance to that of the older adults. In addition to this group factor, there were three within-participant repeated-measures independent variables designed to increase the demands on processing for the target and competing speech stimuli. These were: (1) competition meaningfulness (played in forward or reverse direction); (2) gender match between target and competing talkers (same or different gender); and (3) talker uncertainty (either the same target/competition talker pair or one of many such pairs on each trial). These three repeated-measures independent variables were examined in a 2 × 2 × 2 factorial design. They showed roughly independent and additive effects on speech-identification such that combinations of these variables decreased performance cumulatively. Older adults performed worse than young adults across the board, but also showed diminished relative improvement as the processing load was decreased. Individual differences in performance among the older adults were also examined.

Keywords: Aging, speech perception, hearing loss

INTRODUCTION

To understand speech, the acoustic signal must be encoded in the periphery, followed by information processing and extraction of meaningful aspects of the stimulus at the level of the brainstem and cortex. Known factors contributing to the speech-understanding problems associated with aging involve peripheral and central auditory changes to the hearing mechanism, as well as changes in cognitive function [Committee on Hearing, Bioacoustics and Biomechanics (CHABA), 1988; Humes, 1996; Willott, 1996]. Typical peripheral impairment associated with aging, presbycusis, includes sensorineural hearing loss which limits audibility of the speech stimulus. Presbycusis is characterized by a sensorineural hearing loss at the higher frequencies by about 60 years of age, which progresses in severity and spreads to increasingly lower frequencies as a function of age [e.g., Corso, 1963; International Standards Organization (ISO), 2000]. This sensorineural hearing loss may also impair peripheral spectral- or temporal-resolution processes that may be critical to the understanding of speech (e.g., Fitzgibbons & Gordon-Salant, 1996; Moore, 1996).

The relatively simple and automatic task of understanding one talker in the presence of another involves a variety of peripheral and higher level processes that can impact performance. Aging can have a negative impact on both the peripheral encoding, as noted, and the higher level processing of the target and competing talkers. In fact, older adults with impaired hearing have shown performance decrements for speech-on-speech masking tasks that have been attributed to deficits in selective attention (Helfer & Freyman, 2008), selective and divided attention (Humes, Lee and Coughlin, 2006), memory (Foo, Rudner, Ronnberg & Lunner, 2007; Lunner & Sundewall-Thoren, 2007; Ronnberg, Rudner, Foo, and Lunner, 2008), auditory stream segregation (Mackersie, Prida & Stiles, 2001), or a combination of such factors (Li, Daneman, Qi & Schneider, 2004; Marrone, Mason & Kidd, 2008). Spectral shaping of the speech stimuli, in a manner similar to that of well-fit amplification, should help minimize some aspects of the peripheral deficit, but does not address the potential age-related deficits in higher level processing (George, Zekveld, Kramer, Goverts, Festen & Houtgast, 2007; Humes, 2007).

In a recent comprehensive and detailed review of 20 studies of speech recognition in noise in normal-hearing and hearing-impaired adults, Akeroyd (2008) noted that the peripheral hearing loss was most consistently identified as the primary factor underlying individual differences in performance among listeners. He also noted that some aspect of cognitive function (defined and measured differently across the various studies) frequently, but inconsistently, emerged as a significant secondary contributor to individual differences in speech-recognition performance. Seven of the 20 studies reviewed concerned aided listening conditions, but the relative roles of peripheral versus higher-level contributors did not differ from those observed for unaided listening. It should be noted, however, that most of these seven studies made use of actual hearing aids and the ability of these devices to restore audibility optimally is often limited (see Humes (2007) for a more detailed discussion of this issue). In the present study, care was taken to ensure optimal or near-optimal audibility of the speech signal based on acoustical predictors of speech intelligibility such as the Speech Intelligibility Index (SII; ANSI, 1997).

The effects of competing talkers on ongoing speech processing must also be considered. Although the effects of manipulations of the meaningfulness of the competing talker (e.g., Dirks & Bower, 1969; Duquesnoy, 1983; Festen & Plomp, 1990; Rhebergen, Versfeld & Dreschler, 2005; Tun, O’Kane & Wingfield, 2002; Van Engen & Bradlow, 2007), the gender agreement of the target and competing talkers (e.g., Brungart, 2001a; Darwin, Brungart & Simpson, 2003; Festen & Plomp, 1990; Humes, Lee, and Coughlin, 2006), and the uncertainty of the competing talker (e.g., Goldinger, Pisoni & Logan, 1991; Humes, Lee, and Coughlin, 2006; Mullenix, Pisoni & Martin, 1989; Sommers, 1996, 1997; Watson & Kelly, 1981) have been investigated individually in normal-hearing and hearing-impaired listeners, these factors have seldom been examined in combination. Yet, in many real-world listening situations, these factors occur in combination. For example, consider the following hypothetical situation in which two women are the first to arrive for a party and begin a conversation. As other guests arrive, both men and women, they join in the conversation and eventually the group grows such that multiple smaller conversations are taking place simultaneously. At various moments in the development of this scenario there could have been just one competing talker of the same or opposite gender affecting the perception of the target talker’s speech. As the party grows, the effects of competing talkers might not be linear with the number of talkers present. For instance, the background resulting from simultaneous conversations could assume a “babble-like” quality, so the competing speech may become less intelligible (or less meaningful) and, therefore, less distracting. Also, as the group grows in size, there might be greater uncertainty from moment to moment with regard to both the target talker’s voice and the voice(s) of the competing talker(s). 
The interfering effects of competing talkers in such a scenario have frequently been partitioned between two types of masking: energetic and informational (e.g., Durlach, Mason, Kidd, Arbogast, Colburn, and Shinn-Cunningham, 2003; Watson, 2005).

The present study focused on how the factors of talker uncertainty, competition meaningfulness, and the gender agreement of the target and competing talker combine or interact to impact the recognition of the talker’s message. To the extent that these factors involve higher level processes that may be vulnerable to the negative effects of aging, do older adults show greater decrements for these listening conditions than younger adults? How does the hierarchy of difficulty of each factor established for young adults compare to that observed in older adults? These questions are addressed in the present study. To minimize the impact of stimulus inaudibility on the performance of older adults, the target and competing speech stimuli were spectrally shaped to ensure audibility through at least 4000 Hz. Comparison groups of young normal-hearing adults were tested in two conditions: (1) a stimulus-matched acoustically identical set of listening conditions, including comparable spectral shaping and presentation levels; and (2) an unshaped stimulus condition making use of a more difficult target-to-competition ratio to attempt a perceptual- or performance-match to the older adults. Additional methodological details are provided in the section to follow.

METHODS

Participants

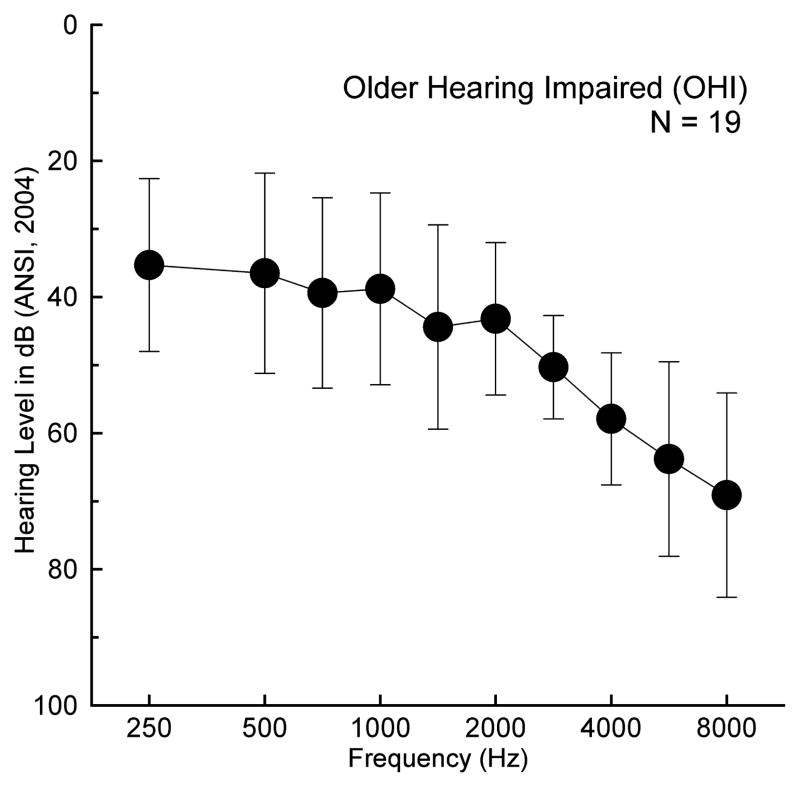

Three groups of listeners were tested in this study. One group comprised older adults ranging in age from 65 to 86 years (M = 75.1 years, SD = 5.9 years). They were recruited from a larger study conducted in the Audiology Research Laboratory at Indiana University. All were living independently in the local community at the time of testing. They were paid $10/hour for their participation. In addition to audiologic findings consistent with the presence of a mild-to-moderate bilateral sensorineural hearing loss, participants were required to be native speakers of American English and to have a passing score (>27/30) on the Mini-Mental State Examination (MMSE; Folstein, Folstein & McHugh, 1975). The hearing loss was restricted to mild-to-moderate severity in order to provide sufficient amplification to ensure audibility for the speech stimuli used in this study. Figure 1 provides mean air-conduction hearing thresholds for the right ear (test ear) for this group of 19 older adults. This group is designated in this study as the older hearing-impaired (OHI) group.

Fig. 1.

Mean audiogram for the test (right) ear of the 19 older hearing-impaired (OHI) listeners in this study. Error bars represent one standard deviation above and below the mean.

In addition, two groups of young normal-hearing adults were tested. One of the groups was presented with stimuli acoustically identical to those presented to the elderly listeners [young normal-hearing stimulus-match group (YSM), n = 10, aged 18–28 years, M = 22 years (SD = 2.4 years)]. The other group of young normal-hearing adults received stimuli at more moderate sound levels, and at a worse target-to-competition ratio to more closely match the performance levels of the older group, and is referred to here as the young normal-hearing performance-match group [YPM, n = 9, aged 18–28 years, M = 22 years (SD = 2.4 years)]. These two groups were students recruited from the general Indiana University (IU) campus community. They were paid $7/hour for their participation. In order to qualify for participation, the young normal-hearing participants were required to be between the ages of 18 and 28 years, possess air-conduction hearing thresholds less than 20 dB HL from 250–8000 Hz bilaterally, and be native speakers of American English.

Stimuli and apparatus

Coordinate Response Measure (CRM)

The Coordinate Response Measure corpus (Bolia, Nelson, Ericson & Simpson, 2000) was chosen as a sentence-level speech test for this project. The structure of each sentence is identical, with each sentence using the form, “Ready (call sign), go to (color) (number) now.” By instructing the listener to attend only to the designated call sign while ignoring competing sentences, it is possible to investigate the effectiveness of masking from competing speech signals. The corpus consists of every combination of the eight call signs (arrow, baron, charlie, eagle, hopper, laker, ringo, tiger), four colors (blue, green, red, white) and eight numbers (1–8) resulting in 256 phrases for each talker. For example, one possible target sentence might be “Ready Baron go to blue four now.” The listeners were informed that “Baron” was the target call sign throughout this study. Competing sentences made use of any of the seven remaining call signs. Four male and four female talkers yield a total of 2,048 phrases or sentences. Overall percent-correct measures obtained by Brungart (2001b) showed that Talker 3 of the original corpus was approximately 10% more intelligible than all other talkers. Also, measures of the overall percent-correct performance showed that Talker 3 and Talker 4 were the least effective maskers as competing talkers. As a result, Talker 3 (male) and Talker 4 (female) of the original corpus were removed from the current project in an effort to keep the inter-talker intelligibility and masking-effectiveness more homogenous across talkers. Six talkers (three male and three female) were used in the current study (T0, T1, T2, T5, T6, and T7).
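The corpus arithmetic described above can be checked with a short enumeration. This is only an illustrative sketch: the names `crm_sentence`, `phrases_per_talker`, and `target_phrases` are hypothetical and are not part of the CRM distribution or of the software used in this study.

```python
from itertools import product

# Vocabulary of the CRM corpus as listed in the text.
CALL_SIGNS = ["arrow", "baron", "charlie", "eagle", "hopper", "laker", "ringo", "tiger"]
COLORS = ["blue", "green", "red", "white"]
NUMBER_WORDS = ["one", "two", "three", "four", "five", "six", "seven", "eight"]
N_TALKERS_CORPUS = 8  # four male and four female talkers in the original corpus


def crm_sentence(call_sign, color, number_word):
    """Build one sentence of the fixed CRM form (hypothetical helper)."""
    return f"Ready {call_sign.capitalize()} go to {color} {number_word} now."


# Every call sign x color x number combination for a single talker.
phrases_per_talker = [
    crm_sentence(cs, col, num)
    for cs, col, num in product(CALL_SIGNS, COLORS, NUMBER_WORDS)
]

# Sentences that could serve as targets, since "Baron" was the target call sign.
target_phrases = [p for p in phrases_per_talker if p.startswith("Ready Baron ")]

print(len(phrases_per_talker))                     # 256 phrases per talker
print(len(phrases_per_talker) * N_TALKERS_CORPUS)  # 2048 phrases in the corpus
print(len(target_phrases))                         # 32 color-number targets
```

The 32 target phrases correspond to the 4 × 8 color-number combinations for the single target call sign.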

The test conditions were designed to manipulate the processing load on the listener by using combinations of talker uncertainty, gender match between target and competing talkers, and meaningfulness of the speech. The conditions represented factorial combinations of competition meaningfulness, or the direction of the competing message playback (two values: forward or reverse, F or R), talker uncertainty (two values: low or high, L or H), and target-competition talker gender match (two values: same or different gender, S or D). The resulting eight conditions were coded using one value of each of the three independent variables as follows: Forward High Same (FHS), Forward High Different (FHD), Forward Low Same (FLS), Forward Low Different (FLD), Reverse High Same (RHS), Reverse High Different (RHD), Reverse Low Same (RLS) and Reverse Low Different (RLD). High uncertainty, for the purpose of this project, was defined in the following ways. First, if it was a “different gender” condition, any one of three talkers could serve as the target and any one of three talkers of the opposite gender could serve as the competition. This resulted in 18 unique target talker-competing talker pairs on a given trial. Second, if it was a “same gender” condition, any one of three talkers could serve as the target and either of the other two talkers of the same gender could serve as the competing talker, which resulted in 6 possible target talker-competing talker pairs on any given trial. Low uncertainty was defined as the same target and competing talker on every trial within a block. T1 (male) and T4 (female) were chosen as the low-uncertainty target talkers and T0 (male) and T5 (female) were chosen as the low-uncertainty competing talkers. Thus, low-uncertainty, same-gender conditions made use of T1/T0 and T4/T5 as target/competition-talker pairs, whereas low-uncertainty, different-gender conditions made use of T1/T5 and T4/T0 as target/competition pairs. These four talkers, two of each gender, were chosen because they were most similar in terms of intelligibility for young normal-hearing listeners (Brungart, 2001b).
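The 2 × 2 × 2 condition codes and the high-uncertainty pair counts can be reproduced with a brief enumeration. This is a sketch under stated assumptions: the talker labels are the six listed for the current study (T0, T1, T2 taken as male and T5, T6, T7 as female), and the variable names are hypothetical.

```python
from itertools import product

# Condition codes: playback direction (F/R), talker uncertainty (H/L), gender match (S/D).
conditions = ["".join(code) for code in product("FR", "HL", "SD")]
print(conditions)
# ['FHS', 'FHD', 'FLS', 'FLD', 'RHS', 'RHD', 'RLS', 'RLD']

# Assumed gender assignment of the six talkers retained in this study.
MALE_TALKERS = ["T0", "T1", "T2"]
FEMALE_TALKERS = ["T5", "T6", "T7"]
ALL_TALKERS = MALE_TALKERS + FEMALE_TALKERS


def same_gender(a, b):
    """True when both talkers belong to the same gender group."""
    return (a in MALE_TALKERS) == (b in MALE_TALKERS)


# High uncertainty, different gender: ordered (target, competition) pairs.
different_gender_pairs = [
    (t, c) for t in ALL_TALKERS for c in ALL_TALKERS if not same_gender(t, c)
]

# High uncertainty, same gender: ordered pairs within one gender (males shown here).
same_gender_pairs_male = [
    (t, c) for t in MALE_TALKERS for c in MALE_TALKERS if t != c
]

print(len(different_gender_pairs))  # 18
print(len(same_gender_pairs_male))  # 6
```

The count of 6 is obtained within a single gender, which is how the same-gender figure in the text appears to have been derived.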

Using CoolEdit Pro 2000 (Syntrillium, version 1.2a), the CRM sentences were converted from binary files (*.bin) to wave files (*.wav) and from stereo to mono recordings for use in a custom MATLAB (Mathworks, version 6.5) program. The MATLAB program was designed to present two stimuli simultaneously through Tucker Davis Technologies (TDT) System-II equipment. The sampling rate was 22050 Hz. The stimuli were routed through channel 1 (target) and 2 (competition) of a 16-bit digital-to-analog converter (DA1), followed by an anti-aliasing low-pass filter (TDT FT5) set to a cut-off frequency of 10 kHz. The two channels were subsequently amplified, added together (TTES Adder), and passed through an FIR programmable filter (TDT PF1) created specifically for each participant based on their hearing thresholds (see below). The signal was then sent to an amplifier (Crown, D-75), headphone buffer (TDT HB3), and finally output to an insert earphone (E-A-R, ER-3A).

Calibration

The CRM corpus was designed so that each sentence was equated for total RMS power. A 30-second calibration noise and a calibration tone (1000 Hz) were created and equated using CoolEditPro 2000. The calibration tone was matched to the peak levels for the speech materials and was used to determine the maximum possible presentation level without peak clipping. Once this maximum output setting was established, the calibration noise was used to measure the maximum levels in one-third octave bands from 125 through 5000 Hz.

The calibration noise was matched in spectrum and RMS amplitude to the long-term spectrum and RMS amplitude of the CRM materials. To determine the long-term average speech spectrum of the CRM stimuli, the target CRM sentences used in this study were digitized using CoolEditPro 2000 and concatenated to create one large file of all six talkers. The average amplitude spectrum of the concatenated wave file was then measured. RMS values (50-ms window) for each one-third-octave band were noted and recorded. These values were then converted to 2-cm³ coupler SPL values using a directly measured transfer function, in one-third octave bands, between the output of the TDT equipment and the insert earphone coupled to an HA-2 2-cm³ coupler (ANSI, 2004). The one-third octave band levels in the 2-cm³ coupler for the CRM were then established for an overall speech level of 112 dBC (maximum linear output).

Next, assuming approximate equivalence of threshold for pure tones and one-third-octave bands of noise (Cox & McDaniel, 1986), the corresponding reference equivalent threshold sound pressure levels (RETSPLs) for insert earphones in a 2-cm³ coupler (ANSI, 2004) were subtracted from these derived values to yield the CRM one-third octave band spectrum in dB HL. From these one-third octave band dB HL values, 10 dB was subtracted to obtain a dB HL value corresponding to the maximum permissible hearing threshold at that frequency for use in this study. By doing so, a buffer of at least 10 dB of audibility between threshold and CRM RMS amplitude was ensured at one-third octave band levels from 200–4000 Hz.
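The two subtractions described above can be written out as a small calculation. The sketch below is illustrative only: the band levels and RETSPL values are hypothetical placeholders, not the values measured in this study or tabulated in ANSI (2004), and the function name is an assumption.

```python
AUDIBILITY_BUFFER_DB = 10.0  # minimum sensation level of the speech RMS, per the text


def max_permissible_threshold_db_hl(band_spl_db, retspl_db, buffer_db=AUDIBILITY_BUFFER_DB):
    """Maximum hearing threshold (dB HL) that still leaves `buffer_db` of audibility.

    band_spl_db: one-third-octave band level of the speech in the 2-cc coupler (dB SPL)
    retspl_db:   reference equivalent threshold SPL for that band (dB SPL)
    """
    band_db_hl = band_spl_db - retspl_db  # express the speech band level in dB HL
    return band_db_hl - buffer_db         # reserve the audibility buffer


# HYPOTHETICAL (band SPL, RETSPL) pairs keyed by band center frequency in Hz.
example_bands = {1000: (95.0, 0.0), 4000: (85.0, 5.0)}

for freq_hz, (band_spl, retspl) in example_bands.items():
    limit = max_permissible_threshold_db_hl(band_spl, retspl)
    print(f"{freq_hz} Hz: threshold must not exceed {limit:.1f} dB HL")
```

A listener whose threshold at a given band exceeded the computed limit would not have the 10-dB buffer at that band, even at the maximum output.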

When the calibration noise was presented using the same custom MATLAB software, stimulus levels of 112 dBC (slow meter setting) were recorded in an HA-2 2-cm³ coupler using the calibration set-up described in ANSI (2004) for insert earphones. This sound level measure was obtained using a Larson Davis model 800B sound level meter (Larson Davis model 2575 microphone) calibrated with a Larson Davis model CA250 calibrator (250 Hz, 114 dB SPL).

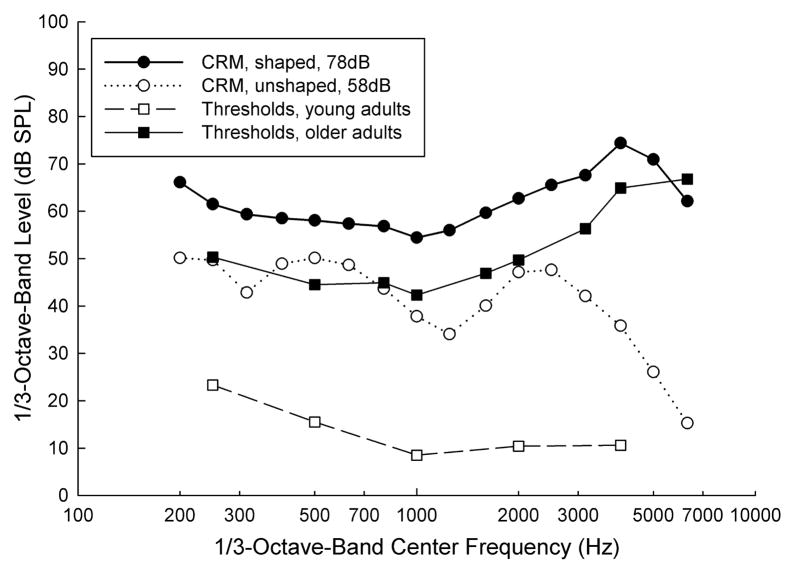

Filter for Spectral Shaping

A 256-tap digital FIR filter was designed for each individual hearing-impaired participant, to compensate for the decreased audibility associated with the hearing loss. The filter was created using the TDT software utility, FIR.exe. The filter was designed using a quasi-DSL approach (Cornelisse, Seewald & Jamieson, 1995). Specifically, the amplification target was such that the aided speech spectrum for the CRM material would be at least 10 dB above threshold for all participants from 250 Hz through 4000 Hz and is depicted in Fig. 2 using mean hearing threshold levels for the OHI group. Filter attenuation values, as calculated from the participant’s hearing thresholds, were entered into the software program at one-third-octave band frequencies. The average overall sound pressure level for the CRM was 78 dB SPL when presented to the OHI and YSM groups.

Fig. 2.

A comparison of mean hearing thresholds in dB SPL for 19 elderly hearing-impaired listeners (filled squares), the speech spectrum of the CRM measured at one-third octave band levels without frequency shaping (unfilled circles) and with frequency shaping (filled circles) based on the mean hearing thresholds. The mean hearing thresholds of the 19 young adults (YSM and YPM groups combined) are also provided for reference (unfilled squares).

Cognitive Measures

A series of standardized cognitive measures were obtained from all participants. These included measures of working memory and attention and are described in more detail below.

Working Memory Test

The Working Memory Test (WMT) from Lehman and Tompkins (1998) was chosen for use in this study. The test is a listening-span paradigm and was created by modifying a working-memory measure originally described by Daneman and Carpenter (1980). The WMT is an auditory task that consists of a series of simple declarative sentences containing three to five words (e.g., “Florida is next to Ohio.”). The participant is instructed to indicate whether the sentence is “True” or “False” by pointing to cards printed with the words “True” and “False” while concurrently memorizing the last word of each sentence. At the end of the set of sentences, the participant is asked to recall the last word of each sentence in that set. The initial test sets have two sentences each and the sets progressively increase the number of sentences to a maximum of five sentences per set. The words were deemed correct regardless of order of recall. Participants were given as much time as needed to recall the words.

In order to evaluate the effect of hearing loss on the test administration, a word list of the 46 final words (42 test words and 4 practice words) was compiled and labeled the WMT identification test. It was administered to the elderly as an identification task to verify that the participant could hear the target recall words. The test was administered to elderly hearing-impaired participants during the initial evaluation session and at the same presentation level as the WMT (most comfortable loudness, MCL). Substituted words were marked on the WMT answer sheet as acceptable responses for the subsequent administration of the WMT.

Test of Everyday Attention

The Test of Everyday Attention (TEA) was created by Robertson, Ward, Ridgeway and Nimmo-Smith (1994) and represents a battery of subtests designed to measure various aspects of attention such as selective attention, sustained attention, attentional switching, auditory-verbal working memory and divided attention. The TEA is designed for use with clinical populations, but is sensitive enough to reveal normal age effects. Subtests 2 (sustained attention), 3 (auditory selective attention and working memory), 4 (attentional switching) and 5 (auditory-verbal working memory) were chosen for the current project. Gatehouse and Akeroyd (2008) also have recently investigated the possible predictive utility of the TEA for speech recognition in noise and provide a more detailed description of the TEA tests in the Appendix of that article (pp. 159–60).

Procedures

Each participant underwent an initial audiometric examination to determine eligibility. The cognitive test battery was also completed at the initial visit. It consisted of the following tests administered in the following order: (1) Test of Everyday Attention (TEA) subtests 2, 3, 4 and 5; and (2) the Working Memory Test (WMT). All cognitive tests were presented at the participants’ most comfortable loudness level (MCL). For these cognitive tests, the participant was seated in a chair within a double-walled sound booth facing a small table. The experimenter, also in the sound booth, sat facing the participant across the table. A loudspeaker (Acoustic Research, Powered Partner 570) was located on the right side of the participant at about a 45-degree azimuth and connected to a laptop computer (Toshiba Satellite, 15 inch screen), which delivered the sound stimuli through the loudspeaker for all cognitive tests.

Following completion of this cognitive-testing session, participants were scheduled to return to the Audiology Research Laboratory for 2–3 additional testing sessions. All but two of the elderly hearing-impaired participants required three additional sessions to complete the protocol while the young normal-hearing participants were able to complete the protocol in two additional sessions. Two elderly participants required a total of four additional test sessions to complete the study. At the second and all subsequent test sessions, the participant was seated in a single-walled sound booth at a computer terminal and was given written instructions for the CRM test.

CRM test presentation was designed so that participants would hear 32 presentations of competing sentence pairs per test block. Thirty-two presentations per test block allowed for representation of each color and number combination per test block without repetition. Participants first received one 32-sentence block as practice. They then listened to a total of 1024 test sentence pairs (4 blocks of 32 sentence pairs for a total of 128 sentence pairs per condition for each of the 8 conditions). The listener indicated the color and number of the sentence containing the target call sign (“Baron”) by clicking one of four rectangular radio buttons on the computer screen presenting both the color name and the color itself (i.e. a red rectangle with a text label of “red”) and then clicking on a number from a column of numbers listing 1 through 8. The listener did not receive trial-to-trial feedback.
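The block structure described above can be sketched as follows. This is a minimal illustration, not the custom MATLAB program used in the study; `make_block` and the constant names are hypothetical, and the random ordering of trials within a block is an assumption.

```python
import random
from itertools import product

COLORS = ["blue", "green", "red", "white"]
NUMBERS = list(range(1, 9))

TRIALS_PER_BLOCK = len(COLORS) * len(NUMBERS)  # 32 color-number combinations
BLOCKS_PER_CONDITION = 4
N_CONDITIONS = 8


def make_block(rng):
    """Return the 32 target color-number combinations in a shuffled order.

    Each combination appears exactly once, so no target repeats within a block.
    """
    trials = list(product(COLORS, NUMBERS))
    rng.shuffle(trials)
    return trials


block = make_block(random.Random(0))
trials_per_condition = TRIALS_PER_BLOCK * BLOCKS_PER_CONDITION
total_trials = trials_per_condition * N_CONDITIONS

print(len(block), len(set(block)))  # 32 32
print(trials_per_condition)         # 128
print(total_trials)                 # 1024
```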

CRM presentation level was set based on an unshaped presentation level of 58 dB SPL (Fig. 2), with +3 dB target-to-competition ratio (TCR), for the OHI and YSM groups, which resulted in an average presentation level of 78 dB SPL after spectral shaping by the custom FIR filters. The YSM group experienced the same acoustic listening conditions as the OHI group. Listeners in the OHI group were presented with the CRM test under a +3 dB target-to-competition ratio (TCR) and listened through a custom FIR filter to provide adequate gain such that the signal was at least 10 dB above their hearing threshold from 250 through 4000 Hz. In the case of the YSM listeners, each was matched to a different and arbitrarily selected OHI listener and listened to the CRM through that OHI participant’s filter. In addition, since the TCR was +3 dB and the target’s RMS speech spectrum was at least 10 dB above threshold, the YSM and OHI groups had equivalent long-term speech audibility, on average.

For the YPM group, the materials were not spectrally shaped, which resulted in a presentation level of 58 dB SPL, and a −3 dB TCR was used based on pilot testing. The purpose of collecting the data from the YPM listeners was to determine baseline performance for a group of young listeners listening at an unshaped, moderate or conversational level (55 dB SPL) and in background conditions that would yield overall performance more closely matched to the performance of the older listeners. The pilot testing suggested that the TCR would have to be made about 6 dB worse (from +3 dB TCR to −3 dB TCR) to equate performance of young adults to that of the older listeners for test conditions of moderate difficulty.
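The target-to-competition ratio can be illustrated digitally as a gain applied to the competing signal. In this study the levels were set through the TDT hardware chain, so the following is only a sketch of the underlying arithmetic; the function names and the short sample sequences are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def tcr_db(target, competition):
    """Target-to-competition ratio in dB, based on RMS amplitudes."""
    return 20.0 * math.log10(rms(target) / rms(competition))


def competition_gain(target, competition, desired_tcr_db):
    """Linear gain for the competition that yields the desired TCR."""
    return rms(target) / (rms(competition) * 10.0 ** (desired_tcr_db / 20.0))


# Toy signals standing in for a target and a competing sentence.
target = [0.5, -0.5, 0.5, -0.5]
competition = [0.2, -0.2, 0.2, -0.2]

for desired in (3.0, -3.0):  # OHI/YSM groups and YPM group, respectively
    g = competition_gain(target, competition, desired)
    scaled = [g * s for s in competition]
    print(f"desired {desired:+.1f} dB, achieved {tcr_db(target, scaled):+.1f} dB")
```

Because the CRM sentences were equated for total RMS power, moving from a +3 dB to a −3 dB TCR amounts to raising the competition by 6 dB relative to the target.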

The CRM testing was self-paced in that the program waited for the participant to hit the space bar to receive the next sentence pair. As noted, the OHI participants showed some variability in the time required to complete all 32 blocks for the CRM and all older listeners required more time than the younger listeners to complete this testing.

RESULTS AND DISCUSSION

Cognitive measures

The mean scores on the TEA scales and the WMT were analyzed for the two young normal-hearing groups and were not found to differ significantly. Consequently, the results from these two groups of young adults were pooled (N = 19) and compared to the results from the group of older adults (N = 19) on the same cognitive measures. The means and standard deviations for these two groups of participants on the TEA and the WMT are provided in Table 1. Independent sample t-tests indicated significant (p < 0.01) differences in performance between age groups on the recall portion of the WMT with the older adults performing worse than the younger adults. The average word-recognition score for the 42 test words presented in isolation for the older adults was 97.7% correct (at MCL), indicating that inaudibility of the test items was not a factor underlying the observed deficit for the older adults on the WMT. Regarding the TEA, independent sample t-tests indicated that the older adults performed significantly (p < 0.01) worse than the younger adults on all but the TEA2 (sustained attention) scale. Thus, the older adults in this study showed declines in cognitive performance on both the WMT and the TEA compared to the group of young adults. The relative contribution of these two cognitive measures to individual differences in speech-identification performance among the older adults is examined in a subsequent section below.

Table 1.

Means (M) and standard deviations (SD) for the two groups of young adults (N = 19) and the group of older adults (N = 19) on the Working Memory Test (WMT) and four scales of the Test of Everyday Attention (TEA)

| Test | Young adults: M | Young adults: SD | Older adults: M | Older adults: SD | t (35) |
|---|---|---|---|---|---|
| WMT: true/false | 41.8 | 0.4 | 41.7 | 0.7 | 0.5 |
| WMT: recall | 40.1 | 2.3 | 31.1 | 5.2 | 6.7* |
| TEA 2 | 7.0 | 0.2 | 7.0 | 0.2 | −0.1 |
| TEA 3 | 9.5 | 0.8 | 7.4 | 2.8 | 3.0* |
| TEA 4 | 9.8 | 0.5 | 7.8 | 2.3 | 3.6* |
| TEA 5 | 9.0 | 1.2 | 3.7 | 2.8 | 7.5* |

Notes: Values are in number of test items correct. Significant differences (independent-sample t-test, df = 35; p < 0.01) are indicated by an asterisk after the corresponding t value.

Speech-identification measures

CRM responses were considered correct if the listener chose both the color and the number correctly on each trial. As noted, the percentage correct was determined from four test blocks consisting of 32 sentences (total of 128 utterances) for each of eight conditions. Individual CRM percent-correct scores for each block were transformed to rationalized arcsine units (RAUs; Studebaker, 1985) to stabilize the error variance. Scores across the four test blocks per condition were examined to determine if performance was consistent. A univariate repeated-measures ANOVA was conducted to examine the effect of block number on performance for each condition. Because some of the inter-block comparisons were significant, but not consistently across conditions, a four-block average making use of all the data was used. The RAUs were then averaged, yielding one four-block average score for each of the eight conditions for each of the participants for analysis. Table 2 displays mean percent-correct scores, and standard deviations (in parentheses), for the four-block averages for each of the three listener groups and across the eight conditions whereas the transformed scores in RAUs appear in all subsequent figures.
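The transformation to rationalized arcsine units can be sketched as below, using the formula given by Studebaker (1985) for a score of X items correct out of N. The function names and the example block counts are hypothetical; only the 32-item block size and the four-block averaging come from the text.

```python
import math


def rau(n_correct, n_items):
    """Rationalized arcsine units for n_correct out of n_items (Studebaker, 1985)."""
    theta = (
        math.asin(math.sqrt(n_correct / (n_items + 1)))
        + math.asin(math.sqrt((n_correct + 1) / (n_items + 1)))
    )
    return (146.0 / math.pi) * theta - 23.0


def four_block_average(block_counts, n_items=32):
    """Transform each block score to RAUs, then average across blocks."""
    return sum(rau(c, n_items) for c in block_counts) / len(block_counts)


# HYPOTHETICAL numbers correct in four 32-trial blocks of one condition.
example_counts = [18, 21, 20, 23]
print(round(four_block_average(example_counts), 1))
```

The transform is symmetric about 50 RAU, so a block with 16 of 32 trials correct maps to 50, while scores near 0% or 100% are stretched beyond the 0 to 100 range.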

Table 2.

Means (M) and standard deviations (SD) for CRM scores (percent-correct) based on the presentation of 128 sentences to each listener in each of eight conditions

| Condition | OHI: M | OHI: SD | YSM: M | YSM: SD | YPM: M | YPM: SD |
|---|---|---|---|---|---|---|
| FHS | 52.0 | (12.3) | 67.9 | (10.7) | 32.8 | (12.5) |
| FHD | 67.6 | (16.9) | 86.8 | (9.9) | 73.8 | (13.0) |
| FLS | 61.8 | (13.3) | 77.6 | (12.2) | 55.6 | (16.0) |
| FLD | 72.9 | (16.4) | 94.8 | (6.6) | 89.2 | (7.9) |
| RHS | 66.7 | (21.5) | 97.8 | (2.8) | 77.3 | (16.1) |
| RHD | 80.2 | (21.6) | 98.5 | (2.3) | 88.5 | (10.7) |
| RLS | 73.7 | (24.2) | 97.3 | (3.3) | 81.9 | (10.9) |
| RLD | 81.6 | (20.6) | 98.4 | (2.5) | 95.8 | (4.3) |

Notes: Groups: OHI = older hearing impaired; YSM = young stimulus match; YPM = young performance match. Conditions: FHS = forward high same; FHD = forward high different; FLS = forward low same; FLD = forward low different; RHS = reverse high same; RHD = reverse high different; RLS = reverse low same; RLD = reverse low different.

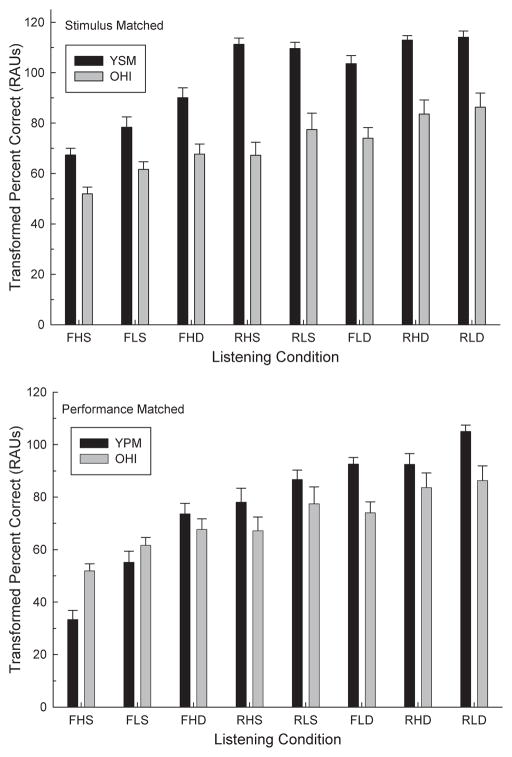

The mean speech-identification scores for the YSM and OHI groups are provided in the top panel of Fig. 3 and the mean data for the YPM and OHI groups are provided in the bottom panel of this figure. By design, the data for the young “performance matched” group (YPM, Fig. 3, bottom) are closer to the corresponding values for the OHI group than was the case for the “stimulus matched” group comparison (Fig. 3, top). It is also apparent that the performance of the YSM group approaches ceiling for several of the easier conditions.

Fig. 3.

Mean speech-identification scores in RAUs for the young stimulus-matched (YSM) and the older hearing-impaired (OHI) groups (top) and for the young performance-matched (YPM) and OHI groups (bottom) across the eight listening conditions representing factorial combinations of competition meaningfulness (Forward/Reverse), talker uncertainty (High/Low), and gender match (Same/Different). Conditions are ordered along the x-axis from most difficult (left) to least difficult (right), based on rank ordering of means and individual data. Error bars represent one standard error above the mean. FHS: forward, high, same; FLS: forward, low, same; FHD: forward, high, different; RHS: reverse, high, same; RLS: reverse, low, same; FLD: forward, low, different; RHD: reverse, high, different; and RLD: reverse, low, different.

The stimulus conditions were ordered along the x-axis based on the difficulty of the conditions for the two young normal-hearing groups combined. This was determined by examining the grand means for the 19 young listeners in each of the eight listening conditions and also by examining the rank orders of performance for each of the 19 young listeners. Across both groups of young listeners, the rank ordering of difficulty was very consistent for the three most difficult conditions, FHS, FLS and FHD, respectively, as well as the two easiest conditions, RLD and RHD, respectively. For conditions in between these extremes, however, the individual ordering on the basis of performance was less consistent across the 19 young adults and the grand means were used to order these conditions. In general, as conditions progress from the most difficult (left) to the easiest (right), performance steadily improves for all three groups, but is limited by apparent ceiling effects for the YSM listeners in the easiest conditions.

In addition to the variations in performance for all groups across the eight listening conditions, the data in Fig. 3 show that the YSM group generally outperforms the OHI group (Fig. 3, top), whereas the differences between the YPM and OHI groups are much smaller (Fig. 3, bottom). Thus, under conditions of equivalent (long-term or steady-state) audibility of the speech spectrum (Fig. 3, top), young adults outperform older adults. In addition, the use of unshaped speech materials and a 6 dB worse TCR accomplished the desired objective of matching the overall performance of the YPM and OHI groups.

To examine these apparent trends in the data in each panel of Fig. 3, two separate General Linear Model (GLM) analyses were performed, one for the data in each panel. Each was a 2 × 2 × 2 × 2 factorial mixed-model analysis, with group as a between-participants factor and competition meaningfulness (forward/reverse), uncertainty (high/low) and gender match (same/different) as within-participant repeated-measures factors. The results of these two analyses are summarized in Table 3. In both analyses, all three within-participant variables revealed significant main effects. Time-forward competition resulted in lower scores than time-reversed competition. Same-gender competition was more difficult than different-gender competition, and high talker uncertainty yielded lower scores than low-uncertainty conditions. The main effect of group was significant for the YSM versus OHI comparison, with the YSM group performing significantly better than the OHI group, but not for the YPM versus OHI comparison (Table 3), consistent with the intended matching of overall performance. However, as is apparent in Table 3, there were several significant two-way and three-way interactions, especially with listener group, that complicate a simple interpretation of these main effects.

Table 3.

Summary of F and p values for 2 × 2 × 2 × 2 GLM analyses for the between-participants factor of group (left: YSM, OHI; right: YPM, OHI) and within-participants factors of competition meaningfulness, talker uncertainty, and gender match for the CRM speech identification task

| Variable | F (YSM, OHI) | p (YSM, OHI) | F (YPM, OHI) | p (YPM, OHI) |
|---|---|---|---|---|
| Group (G) | 20.7 | <0.001* | 0.8 | 0.366 |
| Meaningfulness (F) | 102.7 | <0.001* | 103.2 | <0.001* |
| Uncertainty (U) | 27.1 | <0.001* | 80.3 | <0.001* |
| Gender Match (M) | 114.6 | <0.001* | 186.5 | <0.001* |
| G × F | 8.8 | 0.006* | 8.6 | 0.007* |
| G × U | 0.2 | 0.634 | 10.6 | 0.003* |
| G × M | 0.0 | 0.948 | 22.5 | <0.001* |
| F × U | 7.5 | 0.011 | 4.2 | 0.050 |
| F × M | 30.2 | <0.001* | 30.5 | <0.001* |
| M × U | 0.5 | 0.487 | 1.2 | 0.284 |
| G × F × U | 4.6 | 0.041 | 2.3 | 0.145 |
| G × F × M | 23.0 | <0.001* | 23.7 | <0.001* |
| G × U × M | 3.9 | 0.057 | 1.7 | 0.199 |
| F × U × M | 0.2 | 0.683 | 0.1 | 0.811 |
| G × F × U × M | 0.2 | 0.634 | 1.2 | 0.286 |

Notes: *p < 0.01. Degrees of freedom = 1, 27 for all F values.

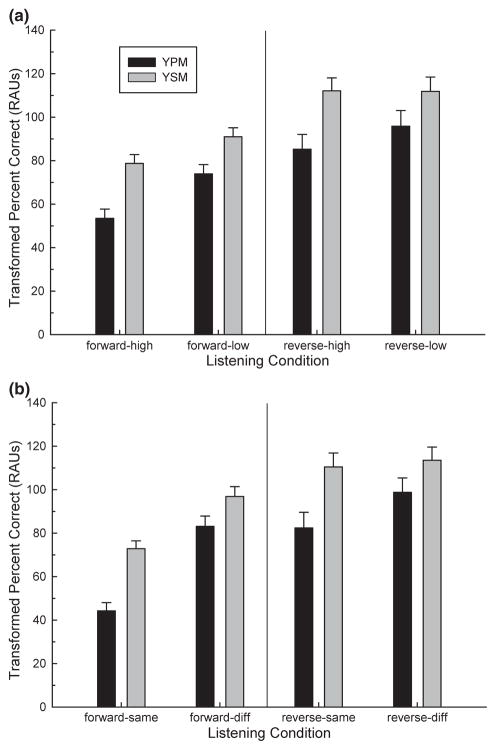

Next, three additional GLM analyses were performed, one for each group, each with a 2 × 2 × 2 repeated-measures design. These results are summarized in Table 4. As seen in this table, for each of the three groups, there is again a significant effect of each of the three independent variables: competition meaningfulness, gender match, and talker uncertainty. Moreover, there are no significant interactions in the data for the OHI listeners. For each of the young normal-hearing groups, however, two two-way interactions remain, both involving the effects of competition meaningfulness. The first, the interaction between competition meaningfulness (forward, reversed) and talker uncertainty (high, low), is illustrated for these two groups in the top panel of Fig. 4. For both groups, the effect of uncertainty is larger in the two conditions with time-forward competition (left half of figure) than in those with time-reversed competition (right half of figure). Likewise, the bottom panel of Fig. 4 shows a similar pattern of results for the interaction of competition meaningfulness (forward, reversed) with gender match (same, different) of the target and competing talkers. Specifically, the gender match between target and competing talkers has a bigger impact on performance when the competing speech is played time-forward rather than time-reversed. The pattern of results for these interactions in the two groups of young listeners appears to be reasonable. If the competing talker is essentially unintelligible when played time-reversed, then neither the gender match between target and competition nor the trial-to-trial uncertainty of the target and competing talkers is likely to have as much effect as when the competing talker is intelligible (time-forward).

Table 4.

Summary of F values for 2 × 2 × 2 factorial repeated-measures GLM analyses of transformed CRM scores for each group separately

| Variable | OHI | YSM | YPM |
|---|---|---|---|
| F | 29.9* | 127.7* | 318.6* |
| U | 19.1* | 15.8* | 201.6* |
| M | 58.3* | 237.3* | 143.9* |
| F × U | 0.2 | 42.7* | 13.1* |
| U × M | 3.9 | 2.2 | 0.0 |
| F × M | 0.3 | 109.0* | 91.9* |
| F × U × M | 0.5 | 0.0 | 1.0 |

Notes: Groups: OHI = older hearing impaired; YSM = young stimulus-match; YPM = young performance-match. Repeated-measures variables: F = meaningfulness of competition; U = talker uncertainty; and M = gender match.

Degrees of freedom = 1, 8 for all F values.

*p < 0.01.

Fig. 4.

Means illustrating the significant interaction of competition meaningfulness (Forward/Reverse) with talker uncertainty (High/Low) in the top panel (a) and the significant interaction of competition meaningfulness (Forward/Reverse) with gender match (Same/Different) in the bottom panel (b) for the two groups of young normal-hearing listeners (YSM and YPM). Error bars represent one standard error above the means.
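The meaningfulness-by-gender-match interaction described above can be illustrated as a difference of differences. The sketch below is only an illustration: it uses the YSM group means in percent correct from Table 2 rather than the RAU-transformed individual scores that entered the GLM analyses, and the helper name `gender_effect` is hypothetical.

```python
# YSM group means (percent correct) copied from Table 2.
# Condition codes: direction (F/R), uncertainty (H/L), gender match (S/D).
ysm_means = {
    "FHS": 67.9, "FHD": 86.8, "FLS": 77.6, "FLD": 94.8,
    "RHS": 97.8, "RHD": 98.5, "RLS": 97.3, "RLD": 98.4,
}


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def gender_effect(means, direction):
    """Different-gender minus same-gender mean for one competition direction."""
    different = [v for code, v in means.items() if code[0] == direction and code[2] == "D"]
    same = [v for code, v in means.items() if code[0] == direction and code[2] == "S"]
    return mean(different) - mean(same)


forward_effect = gender_effect(ysm_means, "F")
reverse_effect = gender_effect(ysm_means, "R")

# A non-zero difference between the two simple effects indicates an interaction.
interaction_contrast = forward_effect - reverse_effect
print(f"Gender-match effect, forward competition: {forward_effect:.2f} points")
print(f"Gender-match effect, reversed competition: {reverse_effect:.2f} points")
print(f"Interaction contrast: {interaction_contrast:.2f} points")
```

With these group means, the gender-match effect is much larger for time-forward than for time-reversed competition, which is the pattern shown in the bottom panel of Fig. 4. Because the YSM scores approach ceiling in the reversed conditions, part of this contrast may reflect the ceiling rather than the manipulation itself.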

Our data for young normal-hearing adults can be compared with those obtained by Brungart (2001a) for the CRM. Brungart (2001a) examined same versus different gender, as well as several TCRs ranging from −21 to +15 dB, in a group of young normal-hearing listeners. He used all eight talkers in the administration of the test (similar to the high-uncertainty condition with six talkers in the current study), and the competition phrases were always played in a time-forward manner. The same- and different-gender conditions of Brungart (2001a) therefore correspond most closely to the FHS and FHD conditions of the current study, and percent-correct scores for these conditions were compared. Percent-correct scores from Brungart (2001a) for the −3 dB TCR were approximately 62% for same gender and approximately 85% for different gender. When the TCR was +3 dB, the scores were approximately 72% and 90%, respectively. The scores for the YSM listeners (+3 dB TCR) in the FHS and FHD conditions were 68% and 87%, while the scores for the YPM group (−3 dB TCR) were 33% and 74%. Scores for young normal-hearing participants in the two studies are thus similar across gender match and TCR, with the exception of the −3 dB TCR, same-gender comparison, for which the scores in the current study (33%) are much lower than those reported by Brungart (2001a; 62%). It is unclear why the scores for this one condition differ so much across the two studies.

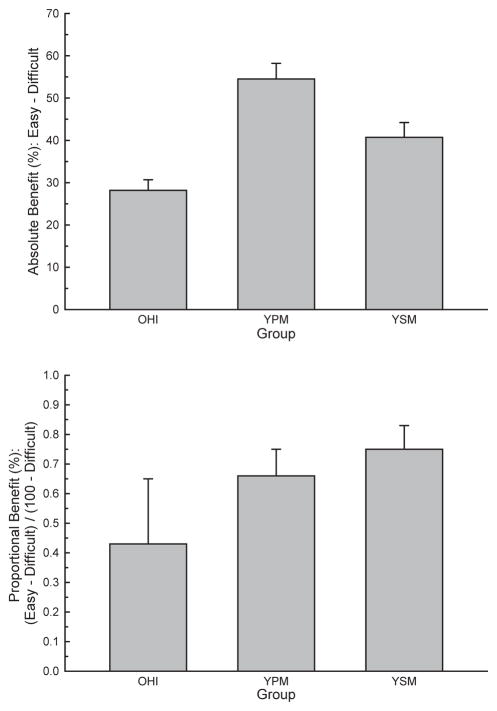

In addition to comparisons of absolute performance across groups and conditions, it was also of interest to examine the relative change in performance across conditions for each of the groups. Scores from the two most difficult listening conditions, FHS and FLS, were averaged, as were the scores from the two easiest listening conditions, RHD and RLD. Figure 5 shows the mean improvement in performance from the difficult to the easy listening conditions for each group, with the absolute benefit plotted in the top panel and a measure of relative benefit in the bottom panel. In the latter case, the difference observed in the top panel has been normalized by the room available for improvement. That is, the bottom panel illustrates the proportion of the total possible improvement realized, calculated as (% easy − % difficult)/(100% − % difficult). For the benefit measures in the top panel, univariate analysis of variance and post hoc follow-up t-tests indicated that there was a main effect of group and that the groups differed significantly (p < 0.05) from one another. For the proportional benefit measures in the bottom panel, parallel analyses revealed that the older group differed significantly from the two younger groups, but the two younger groups did not differ from one another. Thus, whether expressed as absolute or relative benefit, the older adults demonstrated less improvement in performance as the processing load was decreased than did younger adults under identical stimulus conditions (YSM) or under more challenging stimulus conditions designed to equate overall performance (YPM).

Fig. 5.

Means and standard errors for each of the three listener groups for the derived measures of absolute benefit (top panel; simple difference between scores for easiest and most difficult conditions) and proportional benefit (bottom panel; absolute benefit relative to maximum possible benefit that could be obtained). OHI: older hearing impaired; YPM: young performance matched; and YSM: young stimulus matched.
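The two derived benefit measures can be sketched in a few lines of Python. This example applies the formula given in the text to the group means in Table 2 purely for illustration; the study's analyses were based on individual listeners' scores, so the values plotted in Fig. 5 need not match these numbers. The function name `benefit` is hypothetical.

```python
# Group means (percent correct) from Table 2 for the four conditions involved.
table2_means = {
    "OHI": {"FHS": 52.0, "FLS": 61.8, "RHD": 80.2, "RLD": 81.6},
    "YSM": {"FHS": 67.9, "FLS": 77.6, "RHD": 98.5, "RLD": 98.4},
    "YPM": {"FHS": 32.8, "FLS": 55.6, "RHD": 88.5, "RLD": 95.8},
}


def benefit(means):
    """Return (absolute, proportional) benefit from percent-correct scores."""
    difficult = (means["FHS"] + means["FLS"]) / 2  # two most difficult conditions
    easy = (means["RHD"] + means["RLD"]) / 2       # two easiest conditions
    absolute = easy - difficult
    # Normalize by the room available for improvement above the difficult score.
    proportional = absolute / (100.0 - difficult)
    return absolute, proportional


results = {group: benefit(means) for group, means in table2_means.items()}
for group, (absolute, proportional) in results.items():
    print(f"{group}: absolute = {absolute:.1f} points, proportional = {proportional:.2f}")
```

Normalizing by the headroom is what allows the YSM group, whose absolute gain is capped by scores near ceiling, to be compared with the other groups on a common scale.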

To gauge the relative magnitude of some of the main effects observed, grand means were calculated for the combined data from young adults (YPM and YSM groups) in Fig. 3 and compared with the group data for the OHI listeners. This descriptive analysis reveals that the two age groups differed little with regard to the effects of talker uncertainty on sentence identification. The young adults, for example, showed a 10.5 RAU improvement and the older adults a 7.2 RAU improvement when going from high to low talker uncertainty (collapsing across the other two independent variables). Sommers (1996) also observed significant effects of stimulus uncertainty in both younger and older adults, although talker uncertainty was manipulated over a wider range in that study. A similar descriptive analysis for the meaningfulness of the competing speech reveals that the age-group differences were much larger for this factor, with young adults showing almost twice the benefit (27.0 RAU) of older adults (14.8 RAU) when the competing talker's speech was time reversed. Similarly, the differential effects of gender mismatch between target and competing talkers were about 1.5 times larger in young adults (20.2 RAU) than in older adults (13.9 RAU). Brungart (2001a) observed an overall differential effect of gender mismatch between target and competing talkers of about 20% for young normal-hearing adults, which is comparable to that observed here for young adults. As noted, the OHI group in this study showed a smaller differential effect of gender mismatch, about two-thirds the size of that observed in younger adults.
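The collapsing across factors used in this descriptive analysis can be sketched as follows. The example computes marginal differences for the OHI group from the percent-correct means in Table 2; because the values quoted in the text are in RAUs and were derived from the data in Fig. 3, the numbers produced here are expected to differ somewhat from them. The helper name `main_effect` is hypothetical.

```python
# OHI group means (percent correct) copied from Table 2.
# Condition codes: direction (F/R), uncertainty (H/L), gender match (S/D).
ohi_means = {
    "FHS": 52.0, "FHD": 67.6, "FLS": 61.8, "FLD": 72.9,
    "RHS": 66.7, "RHD": 80.2, "RLS": 73.7, "RLD": 81.6,
}


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def main_effect(means, position, easier, harder):
    """Mean of the easier level minus mean of the harder level of one factor,
    collapsing across the other two factors."""
    easy_scores = [v for code, v in means.items() if code[position] == easier]
    hard_scores = [v for code, v in means.items() if code[position] == harder]
    return mean(easy_scores) - mean(hard_scores)


meaningfulness = main_effect(ohi_means, 0, "R", "F")  # reversed vs. forward
uncertainty = main_effect(ohi_means, 1, "L", "H")     # low vs. high uncertainty
gender_match = main_effect(ohi_means, 2, "D", "S")    # different vs. same gender

print(f"Meaningfulness: {meaningfulness:.2f} points")
print(f"Uncertainty:    {uncertainty:.2f} points")
print(f"Gender match:   {gender_match:.2f} points")
```

Each marginal difference averages four of the eight cells against the other four, so every cell contributes equally regardless of the levels of the remaining two factors.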

Finally, individual differences in improvement or benefit from decreased processing load were examined among the older adults using linear regression analysis. Predictor variables included age, TEA scale scores, WMT recall score, and average high-frequency (1000, 2000 and 4000 Hz) hearing loss. The regression analyses for absolute benefit did not identify any significant predictors. The analysis for proportional benefit, on the other hand, found that individual differences in age among the OHI listeners accounted for 24.2% of the variance (r = −0.49). The cognitive measures and average high-frequency hearing loss did not account for significant portions of the variance in performance among the OHI participants. Among the older adults, age was also negatively and significantly (p < 0.05) correlated with CRM scores in five of the eight listening conditions (−0.61 < r < −0.49). It should also be noted that there were no significant correlations between age and hearing loss or between either of these variables and the cognitive measures. Thus, for the OHI listeners, the older the individual, the lower their CRM scores, in general, and the less they benefited from a reduction in processing load. Moreover, performance on the WMT or TEA and amount of high-frequency hearing loss did not explain individual differences in performance among the OHI listeners.

With regard to individual differences among the OHI listeners, it was noted that individual differences in speech identification were consistent across conditions. An elderly participant who performed poorly in one condition tended to perform poorly in all other conditions. Speech-identification performance in background competition was moderately correlated with age when audibility was controlled, but not with measures of hearing loss or cognitive function (WMT, TEA).

Summary

This study examined the closed-set speech-identification abilities of young and older adults when the speech from one target talker was presented concurrently with the speech of another competing talker saying a very similar sentence. In all experimental conditions in this study, the messages spoken by each talker were presented to the same ear of the listener and the cue used to identify the target talker was lexical in nature (the call sign “Baron”). Because the older adults had impaired hearing, the auditory stimuli were spectrally shaped to optimize the audibility of the target and competing speech signals from 250 Hz through at least 4000 Hz for this group and for one of the two groups of normal-hearing young adults. The other group of young normal-hearing adults was tested under acoustically dissimilar conditions designed to bring their overall speech-identification performance down to the same level as the older adults.

The separate and combined effects of talker uncertainty, gender mismatch between target and competing talkers, and meaningfulness of the competing message on speech-identification performance were examined in all three groups. For each of these three factors there were two levels of difficulty (low vs. high uncertainty, same vs. different talker genders, and intelligible or unintelligible competing message). Significant main effects of each factor were observed in the group data and older adults performed significantly worse than young adults under acoustically equivalent test conditions. For all participant groups, as the number of factors with low difficulty increased from one to two to three, speech-identification performance improved, but the amount of the benefit from the progressive reduction of difficulty was less for the older adults. Among the older adults, individual differences in speech-identification performance were significantly and negatively correlated with age, but not with the degree of high-frequency hearing loss or with clinical measures of cognitive function (WMT and TEA).

Acknowledgments

Funding for this study was provided, in part, by the National Institutes of Health, NIH R01 AG008293. The data presented in this paper are from the co-author’s previously unpublished dissertation (Coughlin, 2004).

References

- Akeroyd MA. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. International Journal of Audiology. 2008;47(Suppl 2):S53–S71. doi: 10.1080/14992020802301142.

- ANSI. ANSI S3.5 – 1996. New York: American National Standards Institute; 1997. Methods for Calculation of the Speech Intelligibility Index.

- ANSI. ANSI S3.6 – 2004. New York: American National Standards Institute; 2004. Specifications for Audiometers.

- Bolia RS, Nelson WT, Ericson MA, Simpson BD. A speech corpus for multitalker communications research. Journal of the Acoustical Society of America. 2000;107:1065–1066. doi: 10.1121/1.428288.

- Brungart DS. Informational and energetic masking effects in the perception of two simultaneous talkers. Journal of the Acoustical Society of America. 2001a;109:1101–1109. doi: 10.1121/1.1345696.

- Brungart DS. Evaluation of speech intelligibility with the coordinate response measure. Journal of the Acoustical Society of America. 2001b;109:2276–2279. doi: 10.1121/1.1357812.

- Committee on Hearing, Bioacoustics and Biomechanics (CHABA). Speech understanding and aging. Journal of the Acoustical Society of America. 1988;83:859–895.

- Cornelisse LE, Seewald RC, Jamieson DG. The input/output formula: a theoretical approach to the fitting of personal amplification devices. Journal of the Acoustical Society of America. 1995;97:1854–1864. doi: 10.1121/1.412980.

- Corso JF. Aging and auditory thresholds in men and women. Archives of Environmental Health. 1963;6:350–356. doi: 10.1080/00039896.1963.10663405.

- Coughlin MP. Aided speech recognition in single-talker competition by elderly hearing-impaired listeners. Indiana University; Bloomington, Indiana: 2004. Unpublished doctoral dissertation.

- Cox RM, McDaniel DM. Reference equivalent threshold levels for pure tones and 1/3-octave noise bands: Insert earphone and TDH-49 earphone. Journal of the Acoustical Society of America. 1986;79(2):443–446. doi: 10.1121/1.393531.

- Daneman M, Carpenter PA. Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior. 1980;19:450–466.

- Darwin CJ, Brungart DS, Simpson BD. Effects of fundamental frequency and vocal-tract length changes on attention to one of two simultaneous talkers. Journal of the Acoustical Society of America. 2003;114:2913–2922. doi: 10.1121/1.1616924.

- Dirks DD, Bower DR. Masking effects of speech competing messages. Journal of Speech and Hearing Research. 1969;12:229–245. doi: 10.1044/jshr.1202.229.

- Duquesnoy AJ. Effect of a single interfering noise or speech source upon the binaural sentence intelligibility of aged persons. Journal of the Acoustical Society of America. 1983;74(3):739–743. doi: 10.1121/1.389859.

- Durlach NI, Mason CR, Kidd G, Jr, Arbogast TL, Colburn HS, Shinn-Cunningham BG. Note on informational masking. Journal of the Acoustical Society of America. 2003;113:2984–2987. doi: 10.1121/1.1570435.

- Festen JM, Plomp R. Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing. Journal of the Acoustical Society of America. 1990;88:1725–1736. doi: 10.1121/1.400247.

- Fitzgibbons PJ, Gordon-Salant S. Auditory temporal processing in elderly listeners. Journal of the American Academy of Audiology. 1996;7(3):183–189.

- Folstein MF, Folstein SE, McHugh PR. Mini-Mental State: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Foo C, Rudner M, Ronnberg J, Lunner T. Recognition of speech in noise with new hearing instrument compression settings requires explicit cognitive storage and processing capacity. Journal of the American Academy of Audiology. 2007;18:539–552. doi: 10.3766/jaaa.18.7.8.

- Gatehouse S, Akeroyd MA. The effects of cueing temporal and spatial attention on word recognition in a complex listening task in hearing-impaired listeners. Trends in Amplification. 2008;12:145–161. doi: 10.1177/1084713808317395.

- George ELJ, Zekveld AA, Kramer SE, Goverts ST, Festen JM, Houtgast T. Auditory and nonauditory factors affecting speech reception in noise by older listeners. Journal of the Acoustical Society of America. 2007;121:2362–2375. doi: 10.1121/1.2642072.

- Goldinger SD, Pisoni DB, Logan JS. On the nature of talker variability effects on recall of spoken word lists. Journal of Experimental Psychology: Learning, Memory and Cognition. 1991;17(1):152–162. doi: 10.1037//0278-7393.17.1.152.

- Helfer KS, Freyman RL. Aging and speech-on-speech masking. Ear and Hearing. 2008;29:87–98. doi: 10.1097/AUD.0b013e31815d638b.

- Humes LE. Speech understanding in the elderly. Journal of the American Academy of Audiology. 1996;7:161–167.

- Humes LE. The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults. Journal of the American Academy of Audiology. 2007;18:590–603. doi: 10.3766/jaaa.18.7.6.

- Humes LE, Lee JH, Coughlin MP. Auditory measures of selective and divided attention in young and older adults using single-talker competition. Journal of the Acoustical Society of America. 2006;120:2926–2937. doi: 10.1121/1.2354070.

- International Standards Organization (ISO). Acoustics-Statistical distribution of hearing thresholds as a function of age, ISO-7029. Basel, Switzerland: ISO; 2000.

- Lehman MT, Tompkins CA. Reliability and validity of an auditory working memory measure: Data from elderly and right-hemisphere damaged adults. Aphasiology. 1998;12(78):771–785.

- Li L, Daneman M, Qi JG, Schneider BA. Does the information content of an irrelevant source differentially affect spoken word recognition in younger and older adults? Journal of Experimental Psychology: Human Perception and Performance. 2004;30:1077–1091. doi: 10.1037/0096-1523.30.6.1077.

- Lunner T, Sundewall-Thoren E. Interactions between cognition, compression, and listening conditions: Effects on speech-in-noise performance in a two-channel hearing aid. Journal of the American Academy of Audiology. 2007;18:539–552. doi: 10.3766/jaaa.18.7.7.

- Mackersie CL, Prida TL, Stiles D. The role of sequential stream segregation and frequency selectivity in the perception of simultaneous sentences by listeners with sensorineural hearing loss. Journal of Speech, Language, and Hearing Research. 2001;44:19–28. doi: 10.1044/1092-4388(2001/002).

- Marrone N, Mason CR, Kidd G, Jr. The effects of hearing loss and age on the benefit of spatial separation between multiple talkers in reverberant rooms. Journal of the Acoustical Society of America. 2008;124:3064–3075. doi: 10.1121/1.2980441.

- Moore BCJ. Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids. Ear and Hearing. 1996;17(2):133–160. doi: 10.1097/00003446-199604000-00007.

- Mullenix JW, Pisoni DB, Martin CS. Some effects of talker variability on spoken word recognition. Journal of the Acoustical Society of America. 1989;85:365–378. doi: 10.1121/1.397688.

- Rhebergen KS, Versfeld NJ, Dreschler WA. Release from informational masking by time reversal of native and non-native interfering speech. Journal of the Acoustical Society of America. 2005;118:1274–1277. doi: 10.1121/1.2000751.

- Robertson IH, Ward T, Ridgeway V, Nimmo-Smith I. The Test of Everyday Attention. Cambridge: Thames Valley Test Company; 1994.

- Ronnberg J, Rudner M, Foo C, Lunner T. Cognition counts: A working memory system for ease of language understanding (ELU). International Journal of Audiology. 2008;47(Suppl 2):S171–S177. doi: 10.1080/14992020802301167.

- Sommers MS. The structural organization of the mental lexicon and its contribution to age-related declines in spoken-word recognition. Psychology and Aging. 1996;11(2):333–341. doi: 10.1037//0882-7974.11.2.333.

- Sommers MS. Stimulus variability and spoken word recognition. II. The effects of age and hearing impairment. Journal of the Acoustical Society of America. 1997;101(4):2278–2288. doi: 10.1121/1.418208.

- Studebaker GA. A rationalized arcsine transform. Journal of Speech and Hearing Research. 1985;28:455–462. doi: 10.1044/jshr.2803.455.

- Tun PA, O’Kane G, Wingfield A. Distraction by competing speech in young and older adult listeners. Psychology and Aging. 2002;17(3):453–467. doi: 10.1037//0882-7974.17.3.453.

- Van Engen KJ, Bradlow A. Sentence recognition in native- and foreign-language multi-talker background noise. Journal of the Acoustical Society of America. 2007;121:519–526. doi: 10.1121/1.2400666.

- Watson CS. Some comments on informational masking. Acta Acustica. 2005;91:502–512.

- Watson CS, Kelly WJ. The role of stimulus uncertainty in the discrimination of auditory patterns. In: Getty DJ, Howard JN, editors. Auditory and Visual Pattern Recognition. Hillsdale, NJ: Lawrence Erlbaum; 1981.

- Willott JF. Anatomic and physiologic aging: A behavioral neuroscience perspective. Journal of the American Academy of Audiology. 1996;7(3):141–151.