Abstract

Several types of biological networks have recently been shown to be accurately described by a maximum entropy model with pairwise interactions, also known as the Ising model. Here we present an approach for finding the optimal mappings between input signals and network states that allow the network to convey the maximal information about input signals drawn from a given distribution. This mapping also produces a set of linear equations for calculating the optimal Ising-model coupling constants, as well as geometric properties that indicate the applicability of the pairwise Ising model. We show that the optimal pairwise interactions are on average zero for Gaussian and uniformly distributed inputs, whereas they are nonzero for inputs approximating those in natural environments. These nonzero network interactions are predicted to increase in strength as the noise in the response functions of each network node increases. This approach also suggests how interactions with unmeasured parts of the network can be inferred from the parameters of response functions for the measured network nodes.

I. INTRODUCTION

Many organisms rely on complex biological networks both within and between cells to process information about their environments [1,2]. As such, their performance can be quantified using the tools of information theory [3–6]. Because these networks often involve large numbers of nodes, one might fear that difficult-to-measure high-order interactions are important for their function. Surprisingly, recent studies have shown that neural networks [7–10], gene regulatory networks [11,12], and protein sequences [13,14] can be accurately described by a maximum entropy model including only up to second-order interactions. In these studies, the nodes of biological networks are approximated as existing in one of a finite number of discrete states at any given time. In a gene regulatory network, the individual genes are binary variables, being either in the inactivated or the metabolically expensive activated states. Similarly, in a protein, the nodes are the amino acid sites on a chain which can take on any one of 20 values.

We will work in the context of neural networks, where the neurons communicate by firing voltage pulses commonly referred to as “spikes” [15]. When considered in small-enough time windows, the state of a network of N neurons can be represented by a binary word σ=(σ1,σ2,…,σN), where the state of neuron i is given by σi= 1 if it is spiking and σi=−1 if it is silent, similar to the ↑/↓ states of Ising spins.

The Ising model, developed in statistical physics to describe pairwise interactions between spins, can also be used to describe the state probabilities Pσ of a neural network

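$$P_{\sigma} = \frac{1}{Z}\exp\Big[\sum_{i} h_i \sigma_i + \sum_{i \neq j} J_{ij}\sigma_i\sigma_j\Big] \tag{1}$$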

Here, Z is the partition function and the parameters {hi} and {Jij} are the coupling constants. This is the least structured (or equivalently, the maximum entropy [16]) model consistent with given first- and second-order correlations, obtained by measuring 〈σi〉 and 〈σiσj〉, where these averages are over the distribution of network states.
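As a concrete illustration of the model, the sketch below enumerates all states of a small network and evaluates the state probabilities together with the moments 〈σi〉 and 〈σiσj〉. It assumes that the state probabilities are proportional to exp(Σi hiσi + Σi≠j Jijσiσj); the function names and the numerical values of h and J are illustrative and are not taken from the text.

```python
import itertools
import math


def ising_probabilities(h, J):
    """Return {state: probability} for spins in {-1, +1}.

    Assumed form: P(s) = exp(sum_i h_i s_i + sum_{i != j} J_ij s_i s_j) / Z.
    """
    n = len(h)
    weights = {}
    for state in itertools.product((-1, 1), repeat=n):
        exponent = sum(h[i] * state[i] for i in range(n))
        exponent += sum(J[i][j] * state[i] * state[j]
                        for i in range(n) for j in range(n) if i != j)
        weights[state] = math.exp(exponent)
    z = sum(weights.values())  # partition function Z
    return {s: w / z for s, w in weights.items()}


def moments(probs):
    """First- and second-order moments <s_i> and <s_i s_j>."""
    n = len(next(iter(probs)))
    mean = [sum(p * s[i] for s, p in probs.items()) for i in range(n)]
    corr = [[sum(p * s[i] * s[j] for s, p in probs.items())
             for j in range(n)] for i in range(n)]
    return mean, corr


# Hypothetical parameters: negative fields favor silence (sigma = -1).
h = [-0.5, -0.5, -0.5]
J = [[0.0, 0.1, 0.0],
     [0.1, 0.0, 0.2],
     [0.0, 0.2, 0.0]]
probs = ising_probabilities(h, J)
mean, corr = moments(probs)
```

Exhaustive enumeration scales as 2^N and is therefore only practical for small networks; it is used here because it makes the role of Z explicit.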

In magnetic systems, one seeks the response probabilities from the coupling constants, but in the case of neural networks, one seeks to solve the inverse problem of determining the coupling constants from measurements of the state probabilities. Because this model provides a concise and accurate description of response patterns in networks of real neurons [7–10], we are interested in finding the values of the coupling constants which allow neural responses to convey the maximum amount of information about input signals.

The Shannon mutual information can be written as the difference between the so-called response and noise entropies [3], I = Hresp − Hnoise.

The response entropy quantifies the diversity of network responses across all possible input signals {ℐ}. For our discrete neural system, this is given by

$$H_{\mathrm{resp}} = -\sum_{\sigma} P_{\sigma}\log_2 P_{\sigma} \tag{2}$$

In the absence of any constraints on the neural responses, Hresp is maximized when all $2^N$ states are equally likely.

The noise entropy takes into account that the network states may vary with respect to repeated presentations of inputs, which reduces the amount of information transmitted. The noise entropy is obtained by computing the entropy of the conditional response distribution Pσ|ℐ and averaging over all inputs

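$$H_{\mathrm{noise}} = -\int d\mathcal{I}\, P_{\mathcal{I}} \sum_{\sigma} P_{\sigma|\mathcal{I}}\log_2 P_{\sigma|\mathcal{I}} \tag{3}$$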

where Pℐ is the input probability distribution. Thus in order to find the maximally informative coupling constants, we must first confront the difficult problem of finding the optimal mapping between inputs ℐ and network states σ.
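The decomposition of the mutual information into response and noise entropies can be checked numerically on a toy example. The sketch below is a minimal illustration that assumes a finite set of discrete inputs, whereas the text treats continuous inputs; the function names and the example distributions are hypothetical.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(p_input, p_state_given_input):
    """Return (H_resp, H_noise, I) in bits, with I = H_resp - H_noise.

    p_input[k] is the probability of input k and
    p_state_given_input[k][s] is the probability of state s given input k.
    """
    n_states = len(p_state_given_input[0])
    # Marginal state probabilities, averaged over inputs.
    p_state = [sum(pi * cond[s] for pi, cond in zip(p_input, p_state_given_input))
               for s in range(n_states)]
    h_resp = entropy_bits(p_state)
    # Noise entropy: conditional entropy averaged over inputs.
    h_noise = sum(pi * entropy_bits(cond)
                  for pi, cond in zip(p_input, p_state_given_input))
    return h_resp, h_noise, h_resp - h_noise


# Two neurons (four states), four equally likely inputs, deterministic mapping.
deterministic = mutual_information(
    [0.25] * 4,
    [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]],
)

# One neuron, two equally likely inputs, response flipped 10% of the time.
noisy = mutual_information([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]])
```

In the deterministic case the noise entropy vanishes and all four states are equally likely, so the information equals the maximal response entropy of 2 bits. In the noisy case the response entropy stays at 1 bit, but the information is reduced by the noise entropy.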

II. DECISION BOUNDARIES

The simplest mappings from inputs to neural response involve only a single input dimension [17–20]. In such cases, the response of a single neuron can often be described by a sigmoidal function with respect to the relevant input dimension [15,21]. However, studies in various sensory systems, including the retina [22], the primary visual [23–26], auditory [27], and somatosensory [28] cortices have shown that neural response can be affected by multiple input components, resulting in highly nonlinear, multidimensional mappings from input signals to neural responses.

In Fig. 1, we provide examples of response functions estimated for two neurons in the cat primary visual cortex [29]. For each neuron, the heat map shows the average firing rate in the space of the two most relevant input dimensions. As this figure illustrates, even in two dimensions, the mapping from inputs to the neural response (in this case the presence or absence of a spike in a small time bin) can be quite complex. Nevertheless, one can delineate regions in the input space where the firing rate is above or below its average (red solid lines). As an approximation, one can equate all firing rate values to the maximum value in regions where it is above average and to zero in regions where it is below average. This approximation of a sharp transition region of the response function is equivalent to assuming small noise in the response. Across the boundary separating these regions, we will assume that the firing rate varies from zero to the maximum in a smooth manner (inset in Fig. 2).

FIG. 1.

(Color online) Example analysis of firing rate for (a) a simple and (b) a complex cell in the cat primary visual cortex probed with natural stimuli from the data set [29]. Two relevant input dimensions were found for each neuron using the maximally informative dimensions method described in [30]. Color shows the firing rate as a function of input similarity to the first (x axis) and second (y axis) relevant input dimensions. The values on the x and y axes have been normalized to have zero mean and unit variance. Blue (dashed) lines show regions with signal-to-noise ratio > 2.0. Red (solid) lines are drawn at half the maximum rate and represent estimates of the decision boundaries.

FIG. 2.

(Color online) Schematic of response probability and noise entropy. The response function in two dimensions (inset) is assumed to be deterministic everywhere except at the transition region which may curve in the input space. In a direction x, perpendicular to some point on the decision boundary, the response function is sigmoidal (blue, no shading) going from silent to spiking. The conditional response entropy (red, shading underneath) is −Pspike|x log2(Pspike|x) − (1 − Pspike|x)log2(1 − Pspike|x) and decays to zero at x = ± ∞. The contribution to the total noise entropy due to this cross section, η, is the shaded area under the conditional response entropy curve.

As we discuss below, this approximation simplifies the response functions enough to make the optimization problem tractable, yet it still allows for a large diversity of nonlinear dependencies. Upon discretization into a binary variable, the firing rate of a single neuron can be described by specifying regions in the input space where spiking or silence is nearly always observed. We will assume that these deterministic regions are connected by sigmoidal transition regions called decision boundaries [31] near which Pσ|ℐ≈ 0.5. The crucial component in the model is that the sigmoidal transitions are sharp, affecting only a small portion of the input space. Quantitatively, decision boundaries are well defined if the width of the sigmoidal transition region is much smaller than the radius of curvature of the boundary.

The decision boundary approach is amenable to the calculation of mutual information. The contribution to the noise entropy Hnoise from inputs near the boundary is on the order of 1 bit and decays to zero in the spiking or silent regions (Fig. 2). We introduce a weighting factor η to denote the summed contribution of inputs near a decision boundary obtained by integrating −Σσ Pσ|x log2(Pσ|x) across the boundary. The factor η depends on the specific functional form of the transition from spiking to silence and represents a measure of neuronal noise. In a single-neuron system, the total noise entropy is then an integral along the boundary, Hnoise ≈ η∫γdsPℐ, where γ represents the boundary and the response entropy is Hresp= −P log2 P − (1 − P)log2(1 − P), where P is the spike probability of the neuron, equal to the integral of Pℐ over the spiking region.
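To make the weighting factor η concrete, the sketch below integrates the conditional response entropy across a boundary numerically. It assumes a logistic transition of width w, Pspike|x = 1/(1 + exp(−x/w)); this functional form is chosen only for illustration, since the text notes that η depends on the specific form of the transition. For the logistic case the integral can also be done in closed form, η = wπ²/(3 ln 2) bits, which the code uses as a check.

```python
import math


def binary_entropy_bits(p):
    """Entropy in bits of a binary variable with spike probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def logistic(x, width):
    """Assumed sigmoidal response function across the boundary."""
    return 1.0 / (1.0 + math.exp(-x / width))


def eta_numeric(width, half_range=40.0, steps=40000):
    """Trapezoidal integral of the conditional entropy across the boundary."""
    lo, hi = -half_range * width, half_range * width
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * dx
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * binary_entropy_bits(logistic(x, width))
    return total * dx


def eta_closed_form(width):
    """Closed-form value of the same integral for the logistic case."""
    return width * math.pi ** 2 / (3 * math.log(2))


eta_sharp = eta_numeric(0.5)
eta_broad = eta_numeric(1.0)
```

Under this assumption η is proportional to the width of the transition region, so a sharper sigmoid corresponds to a less noisy neuron.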

The decision boundary approach is also easily extended to the case of multiple neurons, as shown in Fig. 3(a). In the multineuronal circuit, the various response patterns are obtained from intersections between decision boundaries of individual neurons. In principle, all $2^N$ response patterns can be obtained in this way. We denote as Gσ the region of the space where inputs elicit a response σ from the network. To calculate the response entropy for a given set of decision boundaries in a D-dimensional space, the state probabilities are evaluated as D-dimensional integrals over {Gσ},

$$P_{\sigma} = \int_{G_{\sigma}} d^{D}\mathcal{I}\, P_{\mathcal{I}} \tag{4}$$

weighted by Pℐ. Just as in the case of a single neuron, the network response is assumed to be deterministic everywhere except near any of the transition regions. Near a decision boundary, the network can be with approximately equal probability in one of two states that differ in the response of the neuron associated with that boundary. Thus, such inputs contribute ~1 bit to the noise entropy [cf. Eq. (3) and Fig. 2]. The total noise entropy can therefore be approximated as a surface integral over all decision boundaries weighted by Pℐ,

$$H_{\mathrm{noise}} \approx \eta \sum_{n=1}^{N} \int_{\gamma_n} ds\, P_{\mathcal{I}} \tag{5}$$

where γn is the decision boundary of the nth neuron. In this paper, we will assume that η is the same for all neurons and is position-independent, but the extension to the more general case of spatially varying η is possible [31]. Finding the optimal mapping from inputs to network states can now be turned into a variational problem with respect to decision boundary shapes and locations.

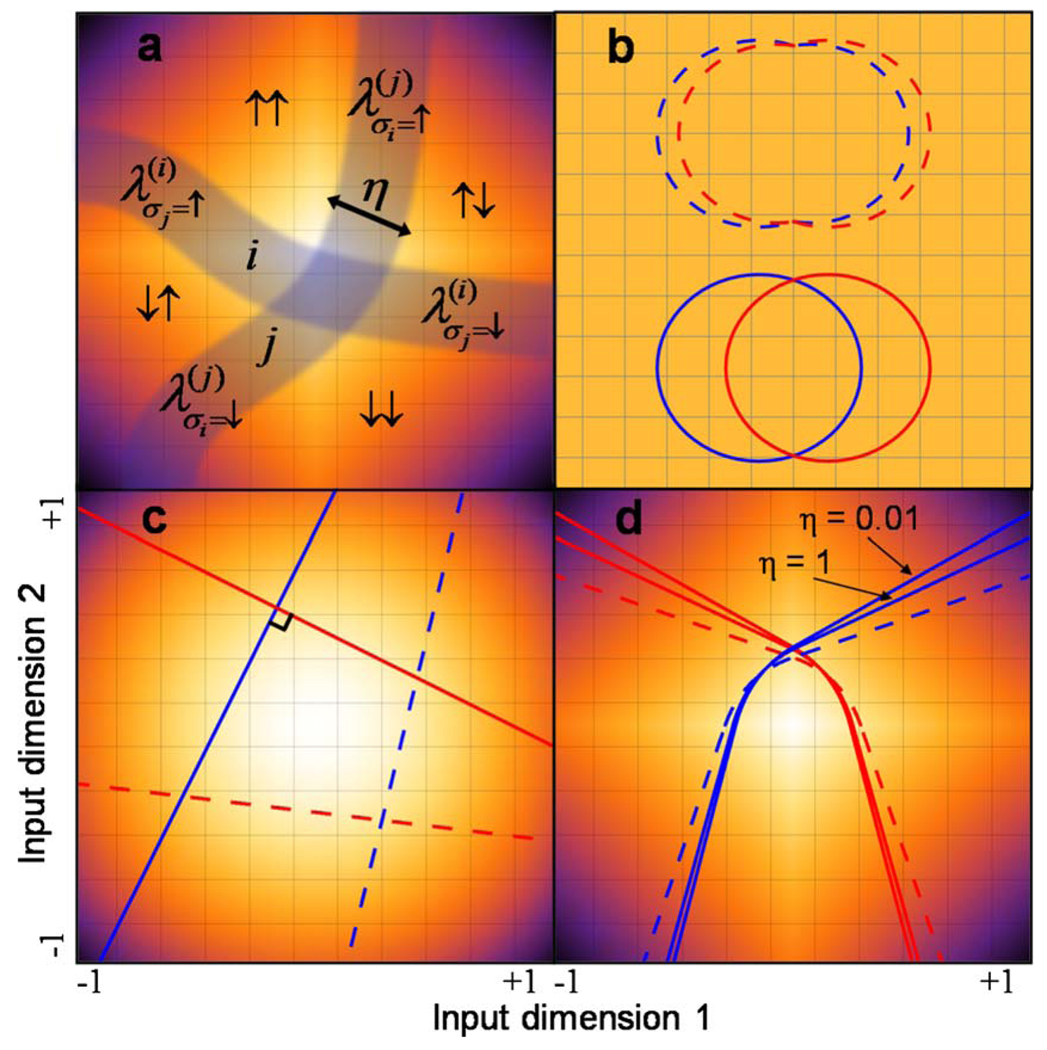

FIG. 3.

(Color online) Network decision boundaries. Color in input space corresponds to the input distribution Pℐ and each line is a boundary for an individual neuron. (a) For a general intersection between neurons i and j, the two boundaries divide the space into four regions corresponding to the possible network states (e.g., Gσi=↑,σj=↑). Each segment has a width η which determines the noise level and is described by a parameter λ [cf. Eq. (10)]. (b) In a uniform space, with two neurons (different colors/shading), the boundary segments satisfying the optimality condition are circular. Networks which have segments with different curvatures (dashed) are less informative than smooth circles (solid). (c) In a Gaussian space (in units of standard deviations), straight perpendicular lines (solid) provide more information about inputs than decision boundaries that intersect at any other angle (dashed). (d) For approximately natural inputs (plotted in units of standard deviations), the suboptimal balanced solutions (dashed) are two independent boundaries with the same P. The optimal solutions (solid) change their curvature at the intersection point and depend on the neuronal noise level η.

III. RESULTS

A. General solution for optimal coupling constants

Our approach for finding the optimal coupling constants consists of three steps. The first step is to find the optimal mapping from inputs to network states, as described by decision boundaries. The second step is to use this mapping to compute the optimal values of the response probabilities by averaging across all possible inputs. The final step is to determine the coupling constants of the Ising model from the set of optimal response probabilities.

Due to the high metabolic cost of spiking [32], we are interested in finding the optimal mapping from inputs to network states that results in a certain average spike probability across all neurons

$$P = \frac{1}{N}\sum_{\sigma} S_{\sigma} P_{\sigma} \tag{6}$$

where Sσ is the number of “up spins,” or firing neurons, in configuration σ. Taking metabolic constraints into account, we maximize the functional

| (7) |

where λ, {βσ} are Lagrange multipliers for the constraints and the last term demands self-consistency through Eq. (4).

To accomplish the first step, we optimize the shape of each segment between two intersection points. Requiring that the variation of the functional in Eq. (7) with respect to the segment shape vanish yields the following equation:

| (8) |

for the segment of the ith decision boundary that separates the regions Gσ1,…,σi−1,↑,σi+1,…,σN and Gσ1,…,σi−1,↓,σi+1,…,σN. Here, n̂ is the unit normal vector to the decision boundary and κ = ∇·n̂ is the total curvature of the boundary. We then optimize with respect to the state probabilities {Pσ}, which gives a set of equations

| (9) |

Combining Eqs. (8) and (9), we arrive at the following equation for the segment of the ith decision boundary across which σi changes while leaving the rest of the network in state σ′,

| (10) |

The parameter

| (11) |

is specific to that segment and is determined by the ratio of probabilities of observing the states which this segment separates. Generally, this ratio (and therefore the λ parameter of the segment) may change when the boundaries intersect. For example, in the schematic in Fig. 3(a), the λ parameter of the segment of the ith boundary along which neuron j is silent depends on the ratio Pσi=↑,σj=↓ /Pσi=↓,σj=↓, whereas that of the segment along which neuron j is spiking depends on Pσi=↑,σj=↑ /Pσi=↓,σj=↑. The values of the parameters for the two segments of the ith boundary are equal only when Pσi=↑,σj=↓ /Pσi=↓,σj=↓ =Pσi=↑,σj=↑ /Pσi=↓,σj=↑. This condition is satisfied when the neurons are independent. Therefore, we will refer to the special case of a solution where λ does not change its value across any intersection points as an independent boundary. In fact, Eq. (10) with a constant λ is the same as was obtained in [31] for a network with only one neuron. In that case, the boundary was described by a single parameter λ, which was determined from the neuron’s firing rate. Thus, in the case of multiple neurons, the individual decision boundaries are concatenations of segments of optimal boundaries computed for single neurons with constraints that, in general, differ from segment to segment. A change in λ across an intersection point results in a kink, that is, an abrupt change in the curvature of the boundary. Thus, by measuring the change in curvature of a decision boundary of an individual neuron, one can obtain an indirect measurement of the degree of interdependence with other, possibly unmeasured, neurons.

Our main observation is that the λ parameters determining decision boundary segments can be directly related to the coupling constants of the Ising model through a set of linear relationships. For example, consider two neurons i and j within a network of N neurons whose decision boundaries intersect. It follows from Eq. (11) that the change in λ parameters along a decision boundary is the same for the ith and jth neurons and is given by

| (12) |

where σ″ represents the network state of all the neurons other than i and j. Taking into account the Ising model via Eq. (1), this leads to a simple relationship for the interaction terms {Jij}

| (13) |

We note that only the average (“symmetric”) component of pairwise interactions can be determined in the Ising model. Indeed, simultaneously increasing Jij and decreasing Jji by the same amount will leave the Ising-model probabilities unchanged because of the permutation symmetry in Eq. (1). This same limitation is present in the determination of {Jij} via any method (e.g., an inverse Ising algorithm).
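The link between ratios of state probabilities and the symmetric part of the coupling can be illustrated for a pair of neurons. The sketch below does not reproduce Eq. (13) or Eq. (14); it inverts an assumed two-neuron model directly, ln P(σ1,σ2) = −ln Z + h1σ1 + h2σ2 + (J12 + J21)σ1σ2 with σ = ±1, for which ln[P↑↑P↓↓/(P↑↓P↓↑)] = 4(J12 + J21). The function name and example probabilities are hypothetical.

```python
import math


def pair_couplings(p):
    """Recover (h1, h2, J) from the four state probabilities of two neurons.

    p maps (s1, s2), with s in {-1, +1}, to a probability.
    Assumes ln P = -ln Z + h1*s1 + h2*s2 + (J12 + J21)*s1*s2, and returns
    J = (J12 + J21) / 2, the only combination the probabilities determine.
    """
    lpp, lpm = math.log(p[(1, 1)]), math.log(p[(1, -1)])
    lmp, lmm = math.log(p[(-1, 1)]), math.log(p[(-1, -1)])
    h1 = (lpp + lpm - lmp - lmm) / 4
    h2 = (lpp - lpm + lmp - lmm) / 4
    j_avg = (lpp - lpm - lmp + lmm) / 8
    return h1, h2, j_avg


# Independent neurons with spike probabilities 0.2 and 0.3.
pa, pb = 0.2, 0.3
independent = {
    (1, 1): pa * pb,
    (1, -1): pa * (1 - pb),
    (-1, 1): (1 - pa) * pb,
    (-1, -1): (1 - pa) * (1 - pb),
}
h1, h2, j_avg = pair_couplings(independent)
```

For independent neurons the probability ratio across the ith boundary is the same on both sides of the intersection, and the recovered average coupling is zero, in line with the statement that only the symmetric component of the interaction is identifiable.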

Once the interaction terms {Jij} are known, the local fields can be found as well from Eq. (11)

| (14) |

This equation can be evaluated for any response pattern σ′ because consistency between changes in λ is guaranteed by Eq. (13).

The linear relationships between the Ising-model coupling constants and the λ parameters are useful because they can indicate what configurations of network decision boundaries are consistent with an Ising model. First, Eq. (13) tells us that if the ith boundary is smooth at an intersection with another boundary j, then the average pairwise interaction in the Ising model between neurons i and j is zero (as mentioned above, the cases of truly zero interaction Jij=Jji= 0 and that of a balanced coupling Jij= −Jji cannot be distinguished in an Ising model). Second, we know that if one boundary is smooth at an intersection, then any other boundary it intersects with is also smooth at that point. More generally, the change in curvature has to be the same for the two boundaries and we can use it to determine the average pairwise interaction between the two neurons. Finally, the change in curvature has to be the same at all points where the same two boundaries intersect. For example, intersection between two planar boundaries is allowed because the change in curvature is zero at points across the intersection line. In cases where intersections form disjoint sets, the equal change in curvature would presumably have to be due to a symmetry of decision boundaries.

In summary then, we have the analytical equations for the maximally informative decision boundaries of a network through Eqs. (10) and (11). We now study their solutions for specific input distributions and then determine, through Eqs. (13) and (14), the corresponding maximally informative coupling constants.

B. Uniform and Gaussian distributions

We first consider the cases of uniformly and Gaussian-distributed input signals. As discussed above, finding optimal configurations of decision boundaries is a tradeoff between maximizing the response entropy and minimizing the noise entropy. Segments of decision boundaries described by Eq. (10) minimize the noise entropy locally, whereas changes in the λ parameters arise as a result of maximizing the response entropy. The independent decision boundaries, which have one constant λ for each boundary, minimize the noise entropy globally for a given firing rate. For one neuron, specifying the spike probability is sufficient to determine the response entropy, since P1 = P [cf. Eq. (6)] and Hresp = −P log2 P − (1 − P)log2(1 − P); maximizing information for a given spike probability is therefore equivalent to minimizing the noise entropy [31]. When finding the optimal configuration of boundaries in a network with an arbitrary number of nodes, the response entropy is not fixed because the response probability may vary for each node (it is specified only on average across the network). However, if there is some way of arranging a collection of independent boundaries to obtain response probabilities that also maximize the response entropy, then such a configuration must be optimal because it simultaneously minimizes the noise entropy and maximizes the response entropy. It turns out that such solutions are possible for both the uniformly and Gaussian distributed input signals.

For the simple case of two neurons receiving a two-dimensional uniformly distributed input, as in Fig. 3(b), the optimal independent boundaries are circles because they minimize the noise entropy (circumference) for a given probability P (area). In general, the response entropy, Eq. (2), is maximized for the case of two neurons when the probability of both neurons spiking is equal to $P^2$. It is always possible to arrange two circular boundaries to satisfy this requirement. The same reasoning extends to overlapping hyperspheres in higher dimensions, allowing one to calculate the optimal network decision boundary configuration for uniform inputs. Therefore, for uniformly distributed inputs, the optimal network decision boundaries are overlapping circles in two dimensions or hyperspheres in higher dimensions.
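The arrangement of two circular boundaries can be sketched numerically. The example below assumes a uniform input density on a region of unit area that is large enough to contain both circles, so that edge effects can be ignored and probabilities equal areas. It then finds, by bisection, the center-to-center distance at which the overlap of two equal circles of area P equals the square of P. The function names are illustrative.

```python
import math


def lens_area(r, d):
    """Overlap area of two circles of radius r whose centers are d apart."""
    if d >= 2 * r:
        return 0.0
    if d <= 0:
        return math.pi * r * r
    return (2 * r * r * math.acos(d / (2 * r))
            - 0.5 * d * math.sqrt(4 * r * r - d * d))


def center_distance_for_independence(p, tol=1e-12):
    """Return (radius, distance) so that P(both spike) = p**2.

    Assumes unit-area uniform inputs, so each circle has area p.
    """
    r = math.sqrt(p / math.pi)
    lo, hi = 0.0, 2 * r  # overlap decreases monotonically from p to 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lens_area(r, mid) > p * p:
            lo = mid  # too much overlap: move the centers apart
        else:
            hi = mid
    return r, 0.5 * (lo + hi)


radius, distance = center_distance_for_independence(0.2)
```

Because the overlap area varies continuously from P (coincident circles) to zero (disjoint circles), and the target value lies strictly between these limits for 0 < P < 1, a solution always exists, which is the geometric content of the statement above.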

For an uncorrelated Gaussian distribution [Fig. 3(c)], the independent boundaries are (D − 1)-dimensional hyperplanes [31]. If we again consider two neurons in a two-dimensional input space, then the individual response probabilities for each boundary determine the perpendicular distance from the origin to the lines. Any two straight lines will have the same noise entropy, independent of the angle between them. However, orthogonal lines (orthogonal hyperplanes in higher dimensions) maximize the response entropy. The optimality of orthogonal boundaries holds for any number of neurons and any input dimensionality. We also find that for a given average firing rate across the network, the maximal information per neuron does not depend on input dimensionality as long as the number of neurons N is less than the input dimensionality D. For N > D, the information per neuron begins to decrease with N, indicating redundancy between neurons.
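The advantage of orthogonal boundaries can be verified in the simplest setting. The sketch below assumes a two-dimensional standard Gaussian input and two straight boundaries through the origin, so that each neuron spikes with probability 1/2; the general case with boundaries displaced from the origin is not covered. It uses the standard orthant probability of a bivariate Gaussian with correlation ρ, P(both spike) = 1/4 + arcsin(ρ)/(2π), where ρ = cos θ and θ is the angle between the boundary normals. The function names are illustrative.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def response_entropy_two_lines(theta):
    """Response entropy for two lines through the origin of a 2D standard
    Gaussian, with angle theta between their normals (each P = 1/2)."""
    rho = math.cos(theta)  # correlation between the two projected inputs
    p_both = 0.25 + math.asin(rho) / (2 * math.pi)  # both spiking (= both silent)
    p_one = 0.5 - p_both                            # exactly one neuron spiking
    return entropy_bits([p_both, p_one, p_one, p_both])


h_orthogonal = response_entropy_two_lines(math.pi / 2)
h_oblique = response_entropy_two_lines(math.pi / 3)
```

Since the noise entropy of straight lines does not depend on the angle between them, as stated above, the difference in response entropy between the two configurations translates directly into a difference in information.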

C. Naturalistic distributions

Biological organisms rarely experience uniform or Gaussian input distributions in their natural environments and might be evolutionarily optimized to process inputs with very different statistics. To approximate natural inputs, we use a two-dimensional Laplace distribution, Pℐ(r) ∝ exp(−|x| − |y|), which captures the large-amplitude fluctuations observed in natural stimuli [33,34], as well as bursting in protein concentrations within a cell [35]. For this input distribution, there are four families of solutions to Eq. (10) (see [31] for details), giving rise to many potentially optimal network boundaries. For a given λ, the decision boundaries can be found analytically. To find the appropriate value of the λ’s, we numerically solved Eq. (11) using MATHEMATICA [36]. We found no solutions for independent boundaries. The optimal boundaries therefore will have different λ’s and kinks at intersection points. As a result, the neurons will have a nonzero average coupling between them, examples of which are shown in Fig. 3(d).

We found that the shapes of the boundaries change with the noise level η, which does not happen for independent boundaries [31]. To see if this noise dependence gives the network the ability to compensate for noise in some way, we look at the maximum possible information the optimal network boundaries are able to encode about this particular input distribution for different noise levels (Fig. 4). We compare this to the suboptimal combination of two independent boundaries with the same P. The figure illustrates that the optimal solutions decrease in information less quickly as the noise level increases. The improvement in performance results from their ability to change shape in order to compensate for the increasing noise level.

FIG. 4.

(Color online) Information from independent and optimally coupled network boundaries as a function of neuronal noise level. In both examples shown here, the independent boundaries (blue circles) lose information faster than the optimal boundaries (red triangles) as the noise level η increases. Each curve represents two response probabilities (e.g., P =0.45 and 0.55) because information is invariant under switching the spiking or silent regions.

We calculated both h ≡ h1 =h2 and J ≡ (J12+J21) /2 for various noise levels and response probabilities. Figures 5(a) and 5(c) show that the local field h is practically independent of the noise level but does depend on the response probability. The coupling strength, however, depends on both noise level and response probability, increasing in magnitude with neuronal noise, shown in Fig. 5(b). The combination of this result and the noise compensation observed in Fig. 4 suggests that the network is able to use pairwise coupling for error correction, with larger noise requiring larger coupling strengths. This strategy is similar to the adage “two heads are better than one,” to which we add “…especially if the two heads are noisy.”

FIG. 5.

(Color online) Optimal coupling constants for naturalistic inputs. (a) The local fields show very little dependence on the noise level of the neurons, but (b) the magnitude of the interaction strength J increases in the same fashion with the noise level regardless of the response probability P. (c) h depends strongly on the response probability. (d) J changes sign about P = 1/2. Below this point, the coupling is excitatory and above, the coupling is inhibitory.

In Fig. 5(d), we observe that the sign of the coupling changes as the value of P crosses 1/2. When P = 1/2, the optimal solution is an X crossing through the origin, which is the only response probability for which the network boundary is made of two independent boundaries, making J = 0 for any noise level when P = 1/2. It can also be seen that J → 0 as η→0 for P ≠ 1/2. Curiously, for a given η, the dependence of the optimal J on P is highly nonmonotonic: it changes sign across P = 1/2 and reaches a maximum (minimum) value for P≈0.25(0.75).

IV. DISCUSSION

The general mapping between inputs and maximally informative network responses in the decision boundary approximation has allowed us to calculate the Ising-model coupling constants. In this approach, network responses to a given input are not themselves described by an Ising model; the Ising model emerges only after averaging network responses across many different inputs. Although there are many configurations of network decision boundaries that can be consistent with a pairwise-interaction model, certain restrictions apply. For example, the change in curvature of a decision boundary that can occur when two boundaries intersect has to be the same for both boundaries and, if they intersect at more than one disjoint surface, the changes in curvature of the decision boundaries must be the same at all intersection points.

We find that for both the uniform and Gaussian input distributions, the optimal network boundaries are independent. This implies that the average interaction strength is zero for all pairs of nodes through Eq. (13). Such balance between excitatory and inhibitory connections has been observed in other contexts including rapid state switching [37], Hebbian learning [38], and selective amplification [39]. In this context, balanced coupling is just one possible configuration of a network of decision boundaries and happens to be optimal for uniformly and Gaussian distributed inputs.

For a more realistic input distribution, the Laplace distribution, we found the optimal boundaries were not smooth at intersection points. This indicates that the average coupling between the nodes in the network should be nonzero to achieve maximal information transmission. We also observed that the optimal configuration of the network depended on the noise level in the responses of the nodes, giving the network the ability to partially compensate for the encoding errors introduced by the noise, which did not happen for the less natural input distributions considered. Also, the fact that J can be positive or negative between two nodes leads to the potential for many stable states in the network, which could give the network the capacity to function as autoassociative memory, as in the Hopfield model [40,41]. Similar network behaviors were reported in [42] for networks of ten neurons, where the optimal coupling constants were numerically found for correlated binary and Gaussian inputs. Our approach is different in that we use an Ising model to describe average network responses, but not responses to particular inputs, Pσ|ℐ.

Previous experiments have shown that simultaneous recordings from neural populations could be well described by the Ising model. In one such experiment using natural inputs [7], the distributions of coupling constants showed an average h which was of order unity and negative and average J which was small and positive. Our results for the Laplace distribution are in qualitative agreement with these previous findings if one assumes a response probability P < 1/2. Due to the high metabolic cost of spiking, this is a plausible assumption to make.

The method we have put forth goes beyond predicting the maximally informative coupling constants to make statements about optimal coding strategies for networks. Although both uniform and Gaussian inputs can be optimally encoded by balanced networks, for example, their organizational strategies are remarkably different. In the uniform input case, the optimal boundaries curve in all dimensions, meaning each node is attending to and encoding information about every component of the possibly high-dimensional input and they organize themselves by determining the optimal amount of overlap between boundaries. However, for the Gaussian distribution, each boundary is planar, indicating that the nodes of the network are sensitive to only one component of the input. The optimal strategy for networks receiving this type of input is to attend to and encode orthogonal directions in the input space, minimizing the redundancy in the coding process. In terms of practical applications, perhaps the most useful aspect of this framework is the ability to infer the strength of pairwise interactions with other nodes in the network by examining decision boundaries of single nodes [cf. Eq. (13)].

The observation of different types of pairwise interactions for networks processing Gaussian and naturalistic Laplace inputs raises the possibility of discovering novel adaptive phenomena. Previous studies in several sensory modalities have demonstrated that the principle of information maximization can account well for changes in the relevant input dimensions [29,43–53] as well as the neural gain [21,52,54,55] following changes in the input distribution. For example, nonlinear gain functions have been shown to rescale with changes in input variance [28,48,51,54,55]. Our results suggest that if neurons were adapted first to Gaussian inputs and then to naturalistic non-Gaussian inputs, the multidimensional input-output functions of individual neurons might change qualitatively, with larger changes expected for noisier neurons.

By studying the geometry of interacting decision boundaries, we have gained insights into optimal coding strategies and coupling mechanisms in networks. Our work focused on the application to neural networks, but the method developed here applies to any network whose nodes have multidimensional, sigmoidal response functions. Although we have only considered three particular distributions of inputs, the framework described here is general and can be applied to other classes of inputs with the potential of uncovering novel, metabolically efficient combinatorial coding schemes. In addition to making predictions for how optimal pairwise interactions should change with adaptation to different statistics of inputs, this approach provides a way to infer interactions with unmeasured parts of the network simply by observing the geometric properties of decision boundaries of individual neurons.

ACKNOWLEDGMENTS

The authors thank William Bialek and members of the CNL-T group for helpful discussions. This work was supported by the Alfred P. Sloan Foundation, the Searle Funds, National Institute of Mental Health Grant No. K25MH068904, the Ray Thomas Edwards Foundation, the McKnight Foundation, the W. M. Keck Foundation, and the Center for Theoretical Biological Physics (NSF PHY-0822283).

Footnotes

PACS number(s): 87.18.Sn, 87.19.ll, 87.19.lo, 87.19.ls

References

- 1. Bray D. Nature (London) 1995;376:307. doi: 10.1038/376307a0.

- 2. Bock G, Goode J. Complexity in Biological Information Processing. West Sussex, England: John Wiley & Sons, Ltd; 2001.

- 3. Cover TM, Thomas JA. Information Theory. New York: John Wiley & Sons; 1991.

- 4. Tkačik G, Callan CG, Jr, Bialek W. Phys. Rev. E. 2008;78:011910. doi: 10.1103/PhysRevE.78.011910.

- 5. Tostevin F, ten Wolde PR. Phys. Rev. Lett. 2009;102:218101. doi: 10.1103/PhysRevLett.102.218101.

- 6. Ziv E, Nemenman I, Wiggins CH. PLoS ONE. 2007;2:e1077. doi: 10.1371/journal.pone.0001077.

- 7. Schneidman E, Berry MJ, II, Segev R, Bialek W. Nature (London) 2006;440:1007. doi: 10.1038/nature04701.

- 8. Shlens J, Field GD, Gauthier JL, Grivich MI, Petrusca D, Sher A, Litke AM, Chichilnisky EJ. J. Neurosci. 2006;26:8254. doi: 10.1523/JNEUROSCI.1282-06.2006.

- 9. Tang A, et al. J. Neurosci. 2008;28:505. doi: 10.1523/JNEUROSCI.3359-07.2008.

- 10. Pillow JW, Shlens J, Paninski L, Sher A, Litke AM, Chichilnisky EJ, Simoncelli EP. Nature (London) 2008;454:995. doi: 10.1038/nature07140.

- 11. Lezon TR, Banavar JR, Cieplak M, Maritan A, Fedoroff NV. Proc. Natl. Acad. Sci. U.S.A. 2006;103:19033. doi: 10.1073/pnas.0609152103.

- 12. Walczak AM, Wolynes PG. Biophys. J. 2009;96:4525. doi: 10.1016/j.bpj.2009.03.005.

- 13. Socolich M, Lockless SW, Russ WP, Lee H, Gardner KH, Ranganathan R. Nature (London) 2005;437:512. doi: 10.1038/nature03991.

- 14. Bialek W, Ranganathan R. e-print arXiv:q-bio.QM/4397.

- 15. Rieke F, Warland D, de Ruyter van Steveninck RR, Bialek W. Spikes: Exploring the Neural Code. Cambridge, MA: MIT Press; 1997.

- 16. Jaynes ET. Phys. Rev. 1957;106:620.

- 17. de Boer E, Kuyper P. IEEE Trans. Biomed. Eng. 1968;BME-15:169. doi: 10.1109/tbme.1968.4502561.

- 18. Meister M, Berry MJ. Neuron. 1999;22:435. doi: 10.1016/s0896-6273(00)80700-x.

- 19. Schwartz O, Pillow J, Rust N, Simoncelli EP. J. Vision. 2006;6:484. doi: 10.1167/6.4.13.

- 20. Victor J, Shapley R. Biophys. J. 1980;29:459. doi: 10.1016/S0006-3495(80)85146-0.

- 21. Laughlin SB. Z. Naturforsch. C. 1981;36:910.

- 22. Fairhall AL, Burlingame CA, Narasimhan R, Harris RA, Puchalla JL, Berry MJ, II. J. Neurophysiol. 2006;96:2724. doi: 10.1152/jn.00995.2005.

- 23. Rust NC, Schwartz O, Movshon JA, Simoncelli EP. Neuron. 2005;46:945. doi: 10.1016/j.neuron.2005.05.021.

- 24. Chen X, Han F, Poo MM, Dan Y. Proc. Natl. Acad. Sci. U.S.A. 2007;104:19120. doi: 10.1073/pnas.0706938104.

- 25. Touryan J, Lau B, Dan Y. J. Neurosci. 2002;22:10811. doi: 10.1523/JNEUROSCI.22-24-10811.2002.

- 26. Felsen G, Touryan J, Han F, Dan Y. PLoS Biol. 2005;3:e342. doi: 10.1371/journal.pbio.0030342.

- 27. Atencio CA, Sharpee TO, Schreiner CE. Neuron. 2008;58:956. doi: 10.1016/j.neuron.2008.04.026.

- 28. Maravall M, Petersen RS, Fairhall A, Arabzadeh E, Diamond M. PLoS Biol. 2007;5:e19. doi: 10.1371/journal.pbio.0050019.

- 29. Sharpee TO, Sugihara H, Kurgansky AV, Rebrik SP, Stryker MP, Miller KD. Nature (London) 2006;439:936. doi: 10.1038/nature04519.

- 30. Sharpee T, Rust N, Bialek W. Neural Comput. 2004;16:223. doi: 10.1162/089976604322742010. See also e-print arXiv:physics/0212110; and a preliminary account in Advances in Neural Information Processing 15, edited by S. Becker, S. Thrun, and K. Obermayer (MIT Press, Cambridge, MA, 2003), pp. 261–268.

- 31. Sharpee TO, Bialek W. PLoS ONE. 2007;2:e646. doi: 10.1371/journal.pone.0000646.

- 32. Attwell D, Laughlin SB. J. Cereb. Blood Flow Metab. 2001;21:1133. doi: 10.1097/00004647-200110000-00001.

- 33. Ruderman DL, Bialek W. Phys. Rev. Lett. 1994;73:814. doi: 10.1103/PhysRevLett.73.814.

- 34. Simoncelli EP, Olshausen BA. Annu. Rev. Neurosci. 2001;24:1193. doi: 10.1146/annurev.neuro.24.1.1193.

- 35. Paulsson J. Nature (London) 2004;427:415. doi: 10.1038/nature02257.

- 36. Wolfram Research, Inc., MATHEMATICA, Version 6. 2007.

- 37. Tsodyks MV, Sejnowski T. Network Comput. Neural Syst. 1995;6:111.

- 38. Song S, Miller KD, Abbott LF. Nat. Neurosci. 2000;3:919. doi: 10.1038/78829.

- 39. Murphy BK, Miller KD. Neuron. 2009;61:635. doi: 10.1016/j.neuron.2009.02.005.

- 40. Hopfield JJ. Proc. Natl. Acad. Sci. U.S.A. 1982;79:2554. doi: 10.1073/pnas.79.8.2554.

- 41. Amit DJ, Gutfreund H, Sompolinsky H. Phys. Rev. A. 1985;32:1007. doi: 10.1103/physreva.32.1007.

- 42. Prentice J, Tkačik G, Schneidman E, Balasubramanian V. Frontiers in Systems Neuroscience. Conference Abstract: Computational and Systems Neuroscience; 2009.

- 43. Smirnakis SM, Berry MJ, Warland DK, Bialek W, Meister M. Nature (London) 1997;386:69. doi: 10.1038/386069a0.

- 44. Shapley R, Victor J. J. Physiol. 1978;285:275. doi: 10.1113/jphysiol.1978.sp012571.

- 45. Kim KJ, Rieke F. J. Neurosci. 2001;21:287. doi: 10.1523/JNEUROSCI.21-01-00287.2001.

- 46. Srinivasan MV, Laughlin SB, Dubs A. Proc. R. Soc. London, Ser. B. 1982;216:427. doi: 10.1098/rspb.1982.0085.

- 47. Buchsbaum G, Gottschalk A. Proc. R. Soc. London, Ser. B. 1983;220:89. doi: 10.1098/rspb.1983.0090.

- 48. Shapley R, Victor J. Vision Res. 1979;19:431. doi: 10.1016/0042-6989(79)90109-3.

- 49. Chander D, Chichilnisky EJ. J. Neurosci. 2001;21:9904. doi: 10.1523/JNEUROSCI.21-24-09904.2001.

- 50. Hosoya T, Baccus SA, Meister M. Nature (London) 2005;436:71. doi: 10.1038/nature03689.

- 51. Theunissen FE, Sen K, Doupe AJ. J. Neurosci. 2000;20:2315. doi: 10.1523/JNEUROSCI.20-06-02315.2000.

- 52. Nagel KI, Doupe AJ. Neuron. 2006;51:845. doi: 10.1016/j.neuron.2006.08.030.

- 53. David SV, Vinje WE, Gallant JL. J. Neurosci. 2004;24:6991. doi: 10.1523/JNEUROSCI.1422-04.2004.

- 54. Fairhall AL, Lewen GD, Bialek W, de Ruyter van Steveninck RR. Nature (London) 2001;412:787. doi: 10.1038/35090500.

- 55. Brenner N, Bialek W, de Ruyter van Steveninck RR. Neuron. 2000;26:695. doi: 10.1016/s0896-6273(00)81205-2.