Abstract

When 2 different visual targets are presented among different distracters in a rapid serial visual presentation (RSVP) and are separated by 400 ms or less, detection and identification of the 2nd target are reduced relative to longer time intervals. This phenomenon, termed the attentional blink (AB), is attributed to the temporary engagement of a limited-capacity attentional system by the 1st target, which reduces resources available for processing the 2nd target. Although AB has been reliably obtained with many stimulus types, it has not been found for faces (E. Awh et al., 2004). In the present study, the authors investigate the underpinnings of this immunity. Unveiling circumstances in which AB occurs within and across faces and other categories, the authors demonstrate that a multichannel model cannot account for the absence of AB effects on faces. The authors suggest instead that the perceptual salience of the face within the distracter series and the available resources determine whether or not faces are blinked in RSVP.

Keywords: attentional blink, repetition blindness, face perception, attention resources

Since the seminal work of Kahneman (1973), the concept of limited processing capacity in the brain has been pivotal for explaining the selective nature of attention. Moreover, the concept of “limited attention capacity” and metaphors such as “bottleneck” have been used to explain the reduction in performance in dual-task compared to single-task paradigms (e.g., Pashler, 1998). Among the most striking demonstrations of resource limitation in perceptual processing is the attentional blink (AB) phenomenon (Raymond, Shapiro, & Arnell, 1992; for a review, see Shapiro, Arnell, & Raymond, 1997).

The AB phenomenon is usually observed in a rapid serial visual presentation (RSVP) paradigm when participants are requested to respond to each of two pre-designated targets. Ample evidence with stimuli of different kinds demonstrates that if the participant is requested to respond to two target stimuli (T1 and T2) that are separated by short time intervals (up to about 400 ms), the processing of T1 interferes with the processing of T2 (Shapiro, Caldwell, & Sorensen, 1997). The effect of AB is demonstrated by a reduction in T2-related performance when it follows T1 within the above critical time interval, relative to a condition in which T1 is ignored or the T1–T2 time interval is longer. Although different accounts of this phenomenon have emphasized different aspects of processing T2, they all share the idea that while the processing of T1 continues, there is insufficient attention for efficient processing of T2 either at the perceptual level (e.g., Chun & Potter, 1995; Duncan, Ward, & Shapiro, 1994; Ward, Duncan, & Shapiro, 1997) or at the level of working memory and response selection (Jolicoeur, 1999). Moreover, attentional demands in RSVP increase due to masking exerted on the targets by the immediately adjacent distracters (Giesbrecht & Di Lollo, 1998). Consequently, the processing of the perceptual representation of T2 might be prematurely interrupted, for example by being overwritten in visual short-term memory by the subsequent stimulus in the series (Brehaut, Enns, & Di Lollo, 1999).

Although AB is particularly robust when stimuli are letters, digits, or artificial symbols, the effect has also been observed with perceptually rich and naturalistic stimuli, such as scenes or pictures of objects (Evans & Treisman, 2005). There is, however, one noticeable exception to this rule. Attentional blink was absent when T2 was a face and T1 was a symbol, both requiring identification (Awh, Serences, Laurey, Dhaliwal, van der Jagt, & Dassonville, 2004). However, even faces were blinked in that study if both T1 and T2 were faces. On the basis of this contrast between the effective interference of T1 faces with the recognition of T2 faces and the absence of such interference when T1 was a symbol, Awh et al. suggested a multichannel resource-allocation system, thereby supporting a long line of arguments against a unitary pool of attention capacity (e.g., Navon & Gopher, 1979; Wickens, 1984). According to Awh et al.’s model, differences between processing faces and processing the nonface stimuli account for this AB pattern. Echoing a similar suggestion by Palermo and Rhodes (2002), they suggested that “holistic” or “configural” processes that are presumably characteristic of face recognition draw on different resource channels than the “feature-based” mode of processing that is presumably characteristic of object (or symbol) recognition.

The theoretical framework on which Awh et al.’s (2004) model is based is somewhat supported by neurophysiological and neuroimaging studies revealing complex but independent neural networks that seem to subserve different perceptual and cognitive functions (e.g., Allison, Puce, Spencer, & McCarthy, 1999; Bentin, Allison, Puce, Perez, & McCarthy, 1996; Downing, Jiang, Shuman, & Kanwisher, 2001; Haxby, Hoffman, & Gobbini, 2002; Schwarzlose, Baker, & Kanwisher, 2005). Moreover, although several electrophysiological studies showed interactions between processing of faces and processing objects of expertise (Rossion, Kung, & Tarr, 2004; for a review, see Gauthier & Curby, 2005), this interaction could be accounted for by the multichannel model if we assume, as Awh et al. actually did, that these channels are not category specific but process specific. Indeed, Gauthier, Curran, Curby, and Collins (2003) assumed that the interaction between the processing of faces and the processing of cars in car experts reflects the use of configural processes for cars as well as for faces by experts, whereas novices use configural processes only for faces. Furthermore, several studies showed that attention can selectively modulate neural activity associated with the visual perception of particular object categories (e.g., Furey et al., 2006; Haxby et al., 1994; Lueschow et al., 2004; O’Craven, Downing, & Kanwisher, 1999). However, no direct evidence has been provided so far for a within-modality dissociation of attention mechanisms linked to different perceptual processes (cf. Clark et al., 1997). The goal of the present study is to further explore the differences between AB effects on faces among objects1 and on objects among objects, in order to shed additional light on how attention resources are used during face detection and on whether the allocation of attention to faces and objects is, indeed, independent.

The role of attention in face processing has not been exhaustively investigated, and studies have been mainly concerned with the question of whether faces attract attention automatically or not. Most of these studies used schematic faces, and the general pattern of results suggests that although gaze direction and some face expressions might capture attention (Driver et al., 1999), neutral schematic faces do not usually pop out (e.g., Brown, Huey, & Findlay, 1997). This pattern might be taken as evidence that face processing requires attentional resources like the processing of nonface objects. However, a recent study using natural faces demonstrated a form of high-level pop-out-like phenomenon (Hershler & Hochstein, 2005), whereas other studies have shown that in a six-item display, replacing a face with another face is more likely to be detected than replacing an object with another object (Ro, Russell, & Lavie, 2001). Although these findings might suggest that, indeed, faces are more conspicuous than simultaneously presented objects and capture attention, they have been challenged either on their methodological basis (Palermo & Rhodes, 2003), by suggesting alternative (low-level-vision) explanations for the pop-out effect (VanRullen, 2006), or by demonstrating their susceptibility to strategic factors (Austen & Enns, 2003). Furthermore, these findings could also be accounted for by the multiple-channel model suggested by Awh et al. (2004).

Notwithstanding the importance of Awh et al.’s (2004) demonstration of face immunity to AB, their results require additional corroboration. First, the two-target procedure used in that experiment could have imposed different demands on the attention system than does RSVP (Visser, Bischof, & Di Lollo, 2004). Second, the perceptual difference between faces and digits might have been too great to allow proper comparison of the reciprocal effects. Hence, the absence of AB from digits to faces could reflect the immense difference in the perceptual richness of the two stimulus categories rather than independent channels of attention resources.2 Indeed, whereas Awh et al. showed that faces engage processing resources that are not obstructed by digits, they also found that when faces were used as T1 there was a robust AB effect on digits. Note that a strong multichannel hypothesis requires a double dissociation—that is, not only that objects should not blink faces but also that faces should not blink objects under similar stimulus conditions.

To address these concerns, in the present study we used a traditional RSVP procedure to assess AB effects for faces and other frequently seen objects, manipulating T1 stimuli, T2 stimuli, and the relationship between them. In Experiment 1 we verified that the relative immunity of faces to AB is reliable and that the dissociation between faces and objects is evident in an RSVP paradigm in which all stimuli are objects and faces of similar perceptual complexity. Experiment 2 suggested a double dissociation between faces and objects (watches), showing a modest AB effect on T2 faces but not on watches when T1 was a face, and on T2 watches but not on faces when T1 was a watch. In Experiment 3 we found AB effects across two different object categories, demonstrating that the presumed double dissociation between faces and objects could not be explained by perceptual factors such as repetition blindness (RB) alone (Kanwisher, Yin, & Wojciulik, 1999). In Experiment 4, however, we found that increasing the attentional demands required for processing the faces at T1 augments the AB on faces but also induces a significant effect on watches, raising doubts about the double dissociation suggested by Experiment 2. Finally, in Experiment 5 we showed that the dissociation between the AB effects on faces and watches persists even if both stimulus types are processed using a feature-based perceptual strategy.

Experiment 1

Using a variant of the AB paradigm, Awh et al. (2004) reported that identifying a digit (T1) significantly impaired identification of a subsequently presented letter (T2) if the stimulus onset asynchrony (SOA) between T1 and T2 was shorter than 500 ms. In contrast, the same digit identification task did not reduce the accuracy of identifying a previously learned face. The goal of the present experiment was to replicate and extend these findings. In particular, we sought to (a) use a conventional RSVP paradigm in which many different stimuli are presented in fast sequence, (b) compare faces with objects that were similarly complex by using photographs from the two categories, (c) use a variety of different exemplars from each category rather than the three exemplars used repeatedly by Awh et al. (2004), and (d) compare the AB effect across objects with the AB effect from objects to faces in a within-subjects design. To achieve these goals, flower discrimination served as the T1 task, whereas the T2 task was detection of either a watch or a face, in different blocks. On the basis of Awh et al.’s results, we predicted that the discrimination of flowers would blink the detection of watches but not of faces.

Method

Participants

Twelve undergraduates from the Hebrew University with normal or corrected-to-normal vision participated for class credit or payment.

Stimuli

Stimuli were grayscale photographs of 115 different Caucasian faces (57 men and 58 women, front close-up portraits without hairlines), 115 different analog watches, 120 flowers (60 different tulips and 60 different sunflowers), and 240 different items of furniture (chairs, tables, cupboards, and sofas). All photographs were the same size; they were presented at the center of a 19″ (48 cm) monitor and subtended a visual angle of about 3° × 3.2°.

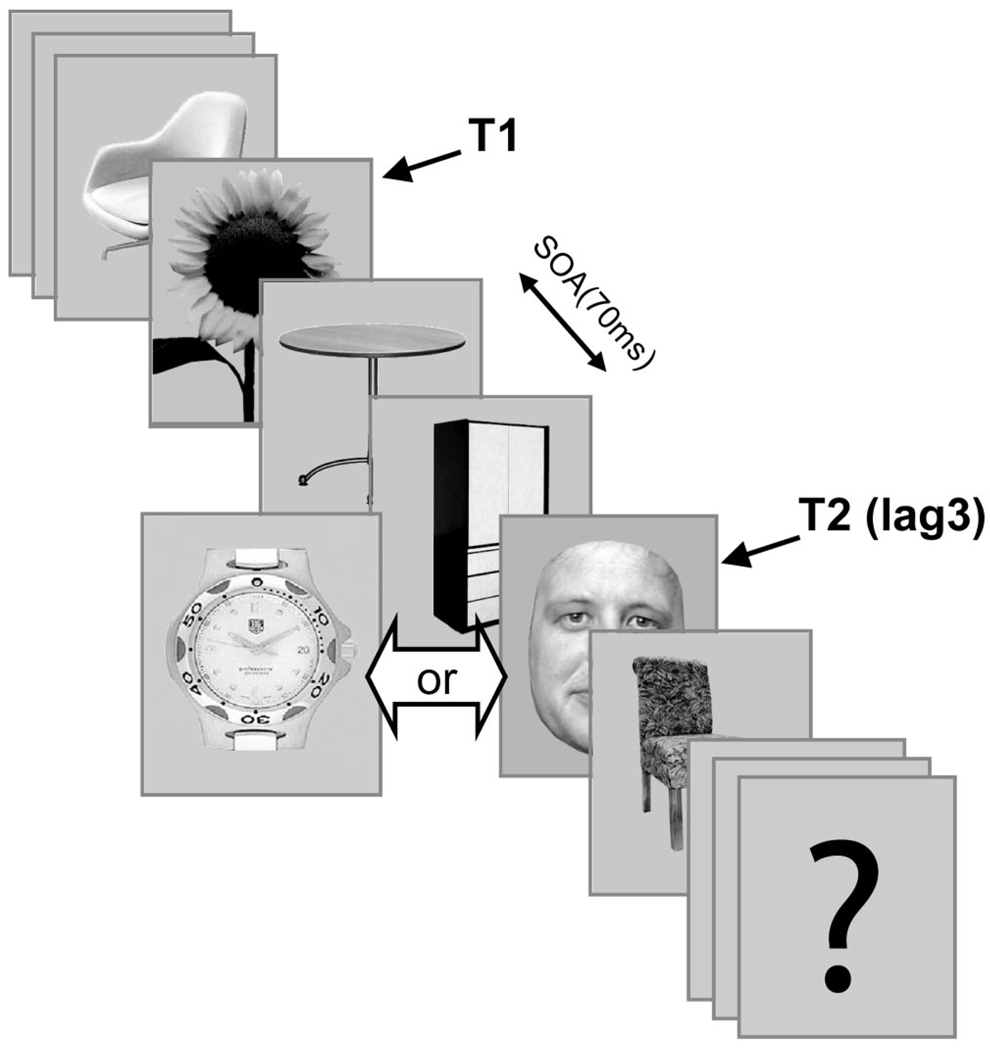

Procedure

A trial started with a fixation cross for 500 ms, immediately followed by a sequence of 20 pictures presented at a rate of 14/s with no time intervals between items (Figure 1). Two targets (T1 and T2) were predefined and interspersed among 18 distracters that were items of furniture. Following each trial a question mark cued the subject to respond to both T1- and T2-associated tasks, in that order.

Figure 1.

An example of a rapid serial visual presentation trial consisting of 20 images in the T2-present condition: Target 1 stimulus (T1), a flower that was either a sunflower or a tulip; Target 2 stimulus (T2), a face or a watch (in separate blocks) appeared on 50% of the trials. Conditions were manipulated within subject in separate blocks; the 18 distracters were different items of furniture. Each image was exposed for 70 ms with no time intervals between them. SOA = stimulus onset asynchrony.

The T1 was a flower and was presented in every trial. Either a tulip or a sunflower was chosen at random from the pool of flower photographs and presented in either the 7th or the 10th serial position in the stimulus series. In 50% of the trials the flower was followed by a T2, which was randomly placed as the 1st, 3rd, or 7th stimulus following T1 (Lag 1, Lag 3, and Lag 7; the respective SOAs were 70 ms, 210 ms, and 490 ms). In each trial the participants were requested to discriminate between sunflowers and tulips (T1) and to detect whether a T2 was or was not presented. Responses were given by pressing two of four alternative buttons, one with the right hand for the T1 discrimination and another one with the left hand for T2 detection. Both responses were withheld and given at the end of each trial, cued by a question mark.
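The stream timing can be checked with simple arithmetic. Items follow one another every 70 ms with no blank, so the T1–T2 SOA is the lag multiplied by 70 ms. The Python sketch below works through that arithmetic under this assumption. The constant and function names (`ITEM_DURATION_MS`, `soa_ms`, `t2_position`) are hypothetical illustrations, not the original experimental software.

```python
# Hypothetical sketch of the Experiment 1 RSVP timing (names are illustrative).
ITEM_DURATION_MS = 70      # each picture shown for 70 ms, no inter-item blank
N_ITEMS = 20               # pictures per RSVP stream
T1_POSITIONS = (7, 10)     # 1-based serial positions used for the T1 flower
LAGS = (1, 3, 7)           # T2 is the 1st, 3rd, or 7th item after T1


def soa_ms(lag: int) -> int:
    """T1-T2 stimulus onset asynchrony: one item duration per lag step."""
    return lag * ITEM_DURATION_MS


def t2_position(t1_position: int, lag: int) -> int:
    """1-based serial position of T2, checked against the stream length."""
    position = t1_position + lag
    if not 1 <= position <= N_ITEMS:
        raise ValueError(f"T2 position {position} falls outside the stream")
    return position


if __name__ == "__main__":
    for lag in LAGS:
        print(f"Lag {lag}: SOA = {soa_ms(lag)} ms")
    # 1000 / 70 is about 14.3 items per second, the "14/s" rate in the text.
    print(f"Rate: {1000 / ITEM_DURATION_MS:.1f} items/s")
    latest = max(t2_position(p, lag) for p in T1_POSITIONS for lag in LAGS)
    print(f"Latest T2 position: {latest} of {N_ITEMS}")
```

Running it reproduces the SOAs of 70, 210, and 490 ms stated above. It also confirms that the latest combination (T1 at position 10, Lag 7) keeps T2 within the 20-item stream.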

The experiment consisted of two blocks of 450 trials each. In one block the T2 stimuli were randomly chosen faces (without repetitions), and in the other block the T2 stimuli were randomly chosen watches (without repetitions). Within each block, 225 trials contained a T2 with 75 trials at each lag, and 225 trials contained no T2. The 450 trials in each block were presented in random order with short breaks after each 75 trials. The order of blocks was counterbalanced across participants.
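The block structure described above can be sketched as follows, assuming each trial is a plain dictionary. The field names, the `build_block`, `break_points`, and `block_order` helpers, and the even/odd counterbalancing rule are all hypothetical choices for illustration.

```python
import random


def build_block(t2_category, n_per_lag=75, lags=(1, 3, 7),
                t1_positions=(7, 10), seed=None):
    """Return one shuffled block of trials (hypothetical trial format).

    Half the trials contain a T2 (n_per_lag at each lag); the other half do not.
    """
    rng = random.Random(seed)
    trials = [{"t2_present": True, "lag": lag, "t2_category": t2_category}
              for lag in lags for _ in range(n_per_lag)]
    n_absent = len(trials)  # equal number of T2-absent trials
    trials += [{"t2_present": False, "lag": None, "t2_category": t2_category}
               for _ in range(n_absent)]
    for trial in trials:
        # T1 position and flower type are drawn at random on every trial.
        trial["t1_position"] = rng.choice(t1_positions)
        trial["t1_flower"] = rng.choice(("tulip", "sunflower"))
    rng.shuffle(trials)
    return trials


def break_points(n_trials, every=75):
    """Trial counts after which a short break is offered."""
    return list(range(every, n_trials, every))


def block_order(participant_index):
    """Counterbalance block order across participants (even/odd rule assumed)."""
    return ("face", "watch") if participant_index % 2 == 0 else ("watch", "face")


if __name__ == "__main__":
    block = build_block("face", seed=1)
    print(len(block), sum(t["t2_present"] for t in block))  # 450 225
    print(break_points(len(block)))  # [75, 150, 225, 300, 375]
```

With the default arguments, each block has 450 trials. Of these, 225 contain a T2 (75 per lag) and 225 do not. Breaks fall after every 75 trials.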

Results and Discussion

Discrimination accuracy of flowers (T1) was similar in the two blocks: 89% and 91% for the faces and watches blocks, respectively, t(11) = 0.669, ns. The following analysis of T2 performance was based only on trials in which T1 was correctly identified.
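Conditioning T2 scoring on a correct T1 response amounts to a simple filter applied before hit rates are computed. The sketch below assumes hypothetical per-trial fields (`t1_correct`, `t2_present`, `t2_yes`, `lag`), and its demo trials are made up. It scores only T2-present trials, so it yields detection (hit) rates per lag. False alarms on T2-absent trials would need separate treatment.

```python
from collections import defaultdict


def detection_rates(trials):
    """Per-lag T2 hit rate, using only trials on which T1 was correct.

    Each trial is a dict with hypothetical keys: 't1_correct' (bool),
    't2_present' (bool), 't2_yes' (bool, participant reported a T2), 'lag'.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for trial in trials:
        if not (trial["t1_correct"] and trial["t2_present"]):
            continue  # drop T1 errors and T2-absent trials
        totals[trial["lag"]] += 1
        hits[trial["lag"]] += int(trial["t2_yes"])
    return {lag: hits[lag] / totals[lag] for lag in sorted(totals)}


if __name__ == "__main__":
    demo = [
        {"t1_correct": True, "t2_present": True, "t2_yes": True, "lag": 3},
        {"t1_correct": True, "t2_present": True, "t2_yes": False, "lag": 3},
        {"t1_correct": False, "t2_present": True, "t2_yes": False, "lag": 3},
        {"t1_correct": True, "t2_present": True, "t2_yes": True, "lag": 7},
        {"t1_correct": True, "t2_present": False, "t2_yes": False, "lag": None},
    ]
    print(detection_rates(demo))  # {3: 0.5, 7: 1.0}
```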

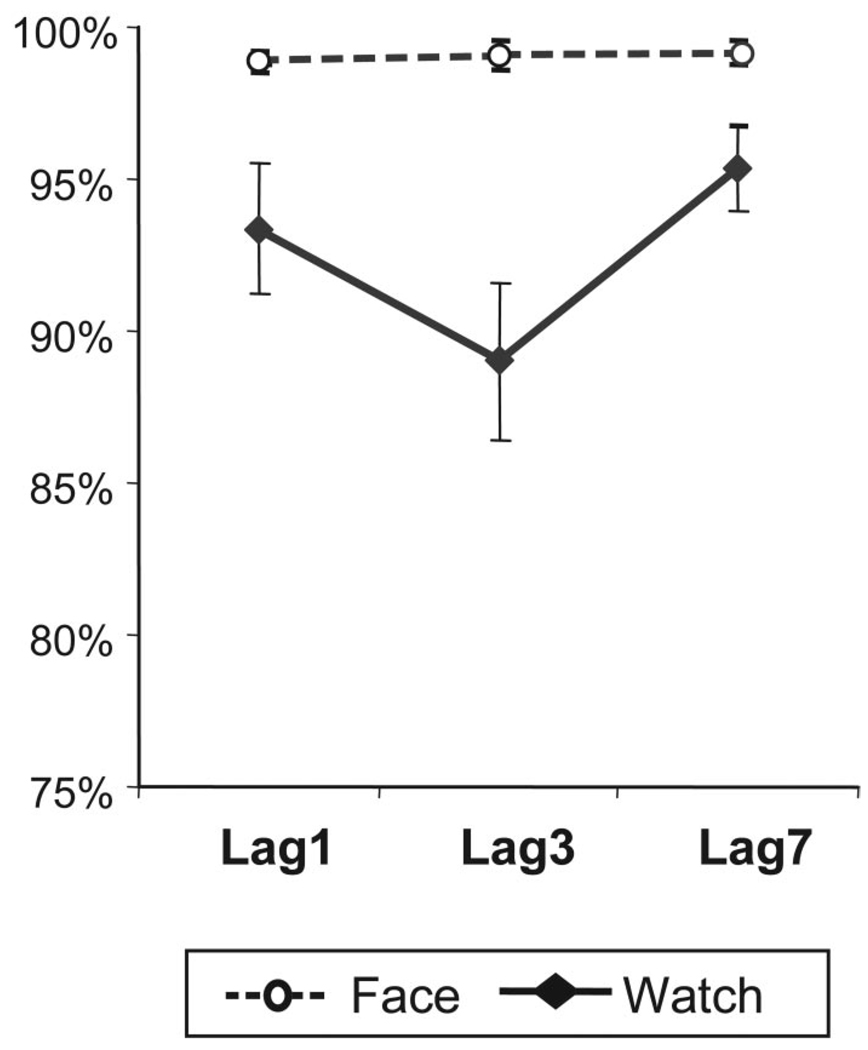

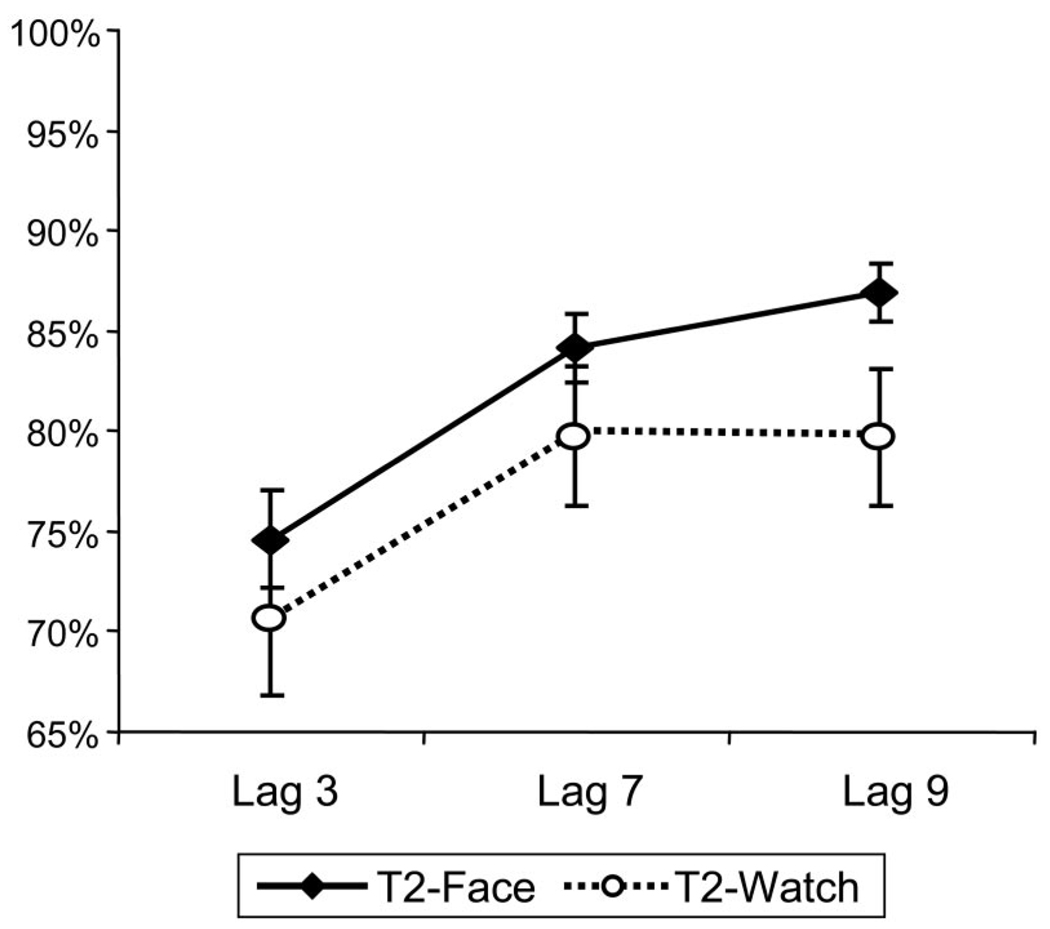

Detection of faces in this experiment was nearly perfect, considerably better than the detection of watches. More important, as evident in Figure 2, flowers induced a significant AB effect for watches but not for faces.

Figure 2.

Target 2 stimulus detection rates for faces and watches following Target 1 stimulus flower. Flowers induced a significant attentional blink effect on watches but not on faces.

Because performance with T2 at Lag 1 can be attributed to factors other than AB (e.g., Enns, Visser, Kawahara, & Di Lollo, 2001), the current analysis focused on Lag 3 (T1/T2 SOA = 210 ms) and Lag 7 (T1/T2 SOA = 490 ms).3 A T2 Type (face, watch) × Lag (3, 7) analysis of variance (ANOVA) with repeated measures showed a main effect of T2 type, F(1, 11) = 11.6, p < .01; a main effect of lag, F(1, 11) = 10.2, p < .01; and most important, a significant interaction between the two, F(1, 11) = 10.6, p < .01. Planned contrasts demonstrated that the AB effect (the difference between detection rate at Lag 7 and at Lag 3) was significant for watches, t(11) = 3.296, p < .01, but not for faces, t(11) = 0.216, ns.
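The AB score used in the planned contrasts is each participant's Lag 7 detection rate minus their Lag 3 detection rate. Its significance can be assessed with a paired (repeated-measures) t test with n − 1 degrees of freedom, which matches the t(11) values reported for 12 participants. The minimal sketch below assumes a hand-rolled t statistic built on Python's `statistics` module, since the paper does not state which software was used. The data are made up for illustration.

```python
import math
from statistics import mean, stdev


def ab_scores(lag3_rates, lag7_rates):
    """Per-participant AB effect: Lag 7 detection minus Lag 3 detection."""
    return [late - early for early, late in zip(lag3_rates, lag7_rates)]


def paired_t(lag3_rates, lag7_rates):
    """Paired t statistic and degrees of freedom for the AB contrast."""
    d = ab_scores(lag3_rates, lag7_rates)
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))  # stdev uses the n - 1 denominator
    return t, n - 1


if __name__ == "__main__":
    # Illustrative numbers only, not data from the experiment.
    lag3 = [0.80, 0.70, 0.90]
    lag7 = [0.90, 0.85, 0.95]
    t, df = paired_t(lag3, lag7)
    print(f"t({df}) = {t:.3f}")
```

A positive t means detection was better at Lag 7 than at Lag 3, that is, a blink at the shorter lag.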

The absence of the AB effect for faces in the present experiment supports the immunity of faces to AB first shown by Awh et al. (2004). In addition, it extends this finding to a traditional RSVP paradigm that uses photographs of lifelike stimuli for both targets (T1 and T2) and distracters, and to an easier T2 task (detection rather than identification). Although ceiling effects in face detection might partly account for the difference between faces and watches, it is unlikely that this was the only factor accounting for this dissociation. First, this pattern of results was predicted a priori and replicated the pattern previously found by Awh et al., in which the face–T2 task required face identification and performance was lower. Second, a similar pattern was repeatedly found using similar stimuli in RSVP in experiments in which more difficult T2 tasks were used and the performance with faces was lower (Landau, 2004).4 Third, a similar dissociation between face and watch T2 tasks was found in Experiment 2 with lower detection rates and without a main effect of stimulus type. Nevertheless, the high performance with faces could, indeed, reflect the easy discrimination of faces among objects. We return to this issue in the General Discussion.

According to the multichannel model, the different AB effects induced by the T1 flower on faces and objects might indicate that faces and various objects engage different perceptual channels and perhaps different attention resources. To test this interpretation, in the next experiment we explored whether a double dissociation occurs between the AB effects that processing faces induces on processing objects and vice versa.

Experiment 2

As noted in the introduction, Awh et al. (2004) reported a single dissociation between faces and digits, but the multichannel hypothesis should predict a double dissociation. To explore this prediction we used the RSVP method with a T2 face in one block and a T2 watch in the other block. The major manipulation, however, was the categorical relationship between T1 and T2. In one group of participants T1 was a face, and in another group T1 was a watch. The multichannel hypothesis predicts that AB on faces would be found in the T1-face group but not in the T1-watch group. Conversely, AB on watches would be found in the T1-watch group but not in the T1-face group.

Method

Participants

Sixty undergraduates from the Hebrew University with normal or corrected-to-normal vision participated for class credit or payment. Thirty-two were included in the T1-face group and 28 in the T1-watch group.5 None participated in Experiment 1.

Stimuli

Stimuli were the grayscale photographs used in Experiment 1 but without the flowers. In addition, 115 Asian faces and 115 digital watches were added to the Caucasian faces and the analog watches.

Tasks and design

The RSVP procedure was similar to that used in Experiment 1. T1 type distinguished between two groups: In the T1-face group the participants were instructed to report whether the first face appearing in each sequence (T1) was Asian or Caucasian. In the T1-watch group, the participants were instructed to report whether the first watch appearing in each sequence was digital or analog. The second task in each trial was identical in both groups. It required the detection of T2, which appeared in 50% of the trials. T2 was presented at the same lags as in Experiment 1. For each group, T2 was a face in one block and a watch in the other block, counterbalanced across participants.

Procedure

The time course and experimental procedures in this experiment were identical to those described in Experiment 1.

Results and Discussion

As in Experiment 1, T1-task accuracy was high, and the analysis of T2 was based only on trials in which T1 was correctly identified.

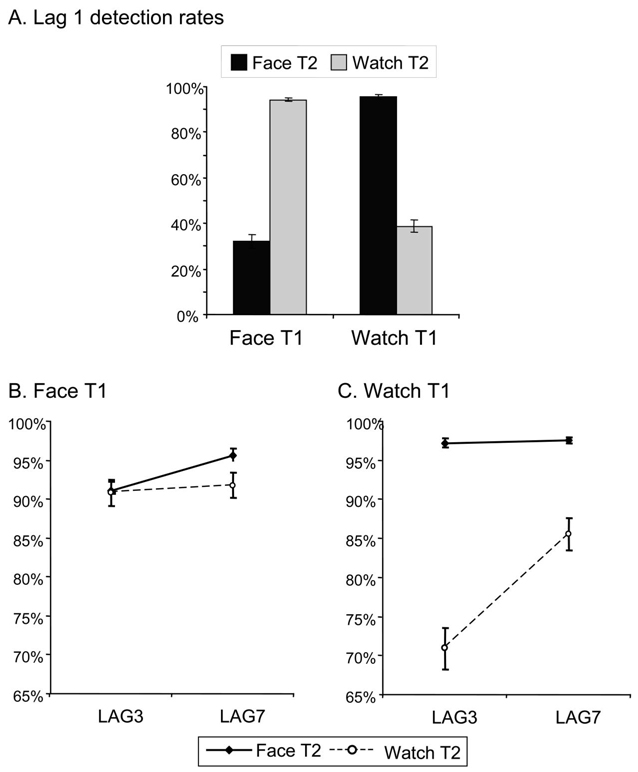

As evident in Figure 3, T2 performance dissociated faces and watches. The direction of this dissociation was determined by the T1 type. When T1 was a watch, the detection of a subsequent watch was significantly reduced at Lags 1 and 3 compared to Lag 7, whereas the detection of faces was unaffected. In contrast, when T1 was a face the detection of a subsequent face was reduced at the shorter lags compared to Lag 7, whereas the detection of watches was not significantly affected. As in Experiment 1, for statistical evaluation we separated the T1 effects on the immediately subsequent T2 (Lag 1) from its effects on T2 presented at Lag 3 and Lag 7. In addition to the typical inconsistency of the AB effects at Lag 1, this separation was necessary because, although T2 was never physically identical to T1, it is conceivable that the effects at Lag 1 were influenced primarily by RB (Kanwisher, 1987), whereas the difference between performance at Lag 3 and Lag 7 reflects the AB effect more purely.

Figure 3.

Target 2 stimulus (T2) detection rates, following correctly categorized Target 1 stimuli (T1). A: Despite the different identities of the stimuli, categorical similarity between T1 and T2 induced a significant repetition blindness effect on both faces and watches. B: A significant attentional blink was found for faces but not for watches. C: A significant attentional blink was found for watches but not for faces.

An initial mixed model ANOVA (T1 Type × T2 Type × Lag) showed a significant second-order interaction, F(1, 58) = 72.3, p < .001. This interaction suggests that the AB effect differed for faces and watches and that this difference depended on whether T1 was a face or a watch. Separate ANOVAs for each T1-type group showed that in both groups the AB effect significantly interacted with the T2 type, F(1, 31) = 6.55, p < .02, and F(1, 27) = 81.9, p < .001, for the T1 face and T1 watch, respectively. Finally, planned t-test comparisons revealed that in the T1-face group the AB effect was significant for T2 faces, t(31) = 5.17, p < .001, but not for T2 watches, t(31) = 0.89, p = .38. In contrast, in the T1-watch group the AB effect was significant for watches, t(27) = 8.96, p < .001, but not for faces, t(27) = 0.58, p = .57. The AB effect was larger for watches (14.6%) than for faces (4.5%), t(58) = 5.623, p < .001.

A similar pattern was found for the RB effects at Lag 1. The mixed-model ANOVA showed a significant interaction between the T1-type and T2-type effects, F(1, 58) = 690.9, p < .001. Separate planned t-tests in each group revealed the following. In the T1-face group, detection of faces at Lag 1 was significantly reduced relative to watches, t(31) = 18.52, p < .001. In the T1-watch group, detection of watches was significantly reduced relative to faces, t(27) = 19.25, p < .001. The RB effects on faces (61.9%) and on watches (56.9%) did not differ significantly, t(58) = 1.112, p = .27.

The T1-associated performance was analyzed by a mixed model ANOVA with T1 group (face, watch) as the between-subjects factor and T1–T2 categorical similarity (same category, different category) as the within-subjects factor. This ANOVA showed that Asian or Caucasian face discrimination was less accurate (81.8%) than analog or digital watch discrimination (88.3%), F(1, 58) = 11.2, p = .001. In addition, across groups the T1 discrimination was lower when T1 and T2 were from the same category (83.2%) than when they were from different categories (86.5%), F(1, 58) = 13.0, p < .001. The absence of an interaction, F(1, 58) = 1.5, ns, showed that the difference between T1 performance within category and across categories was similar across groups (3.3% and 4.3% for the T1-watch and T1-face groups, respectively). We assumed that the reduced T1 accuracy in the within-category condition was induced by confusion between immediately successive stimuli from the same category.6 On this assumption, we analyzed T1 performance again, only for trials in which T1 was separated from T2 by two or six items of furniture (that is, Lag 3 and Lag 7). As expected, the difference between the discrimination of T1 watches (89.7%) and that of T1 faces (83.7%) remained reliable in this analysis, F(1, 58) = 8.7, p < .01. However, there was no difference between the within-category and across-category conditions, F(1, 58) = 2.1, p = .15, and no interaction between the two factors, F(1, 58) < 1.00.
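The backward-interference check on T1 amounts to computing T1 accuracy separately for same-category and different-category trials. This is done first over all lags and then with Lag 1 trials excluded. The sketch below assumes hypothetical trial fields (`same_category`, `lag`, `t1_correct`, `t2_present`). As a simplifying assumption, it restricts the computation to T2-present trials, since only those have a lag. The demo trials are made up.

```python
def t1_accuracy(trials, same_category, exclude_lag1=False):
    """Proportion of correct T1 responses for one categorical-similarity level.

    Only T2-present trials are used (an assumption of this sketch); with
    exclude_lag1=True, only Lag 3 and Lag 7 trials remain, where two or six
    furniture items separate T1 from T2.
    """
    selected = [
        t for t in trials
        if t["t2_present"]
        and t["same_category"] == same_category
        and not (exclude_lag1 and t["lag"] == 1)
    ]
    if not selected:
        raise ValueError("no trials match the requested condition")
    return sum(t["t1_correct"] for t in selected) / len(selected)


if __name__ == "__main__":
    # Made-up trials: a T1 error occurs only when a same-category T2 is at Lag 1.
    demo = [
        {"t2_present": True, "same_category": True, "lag": 1, "t1_correct": False},
        {"t2_present": True, "same_category": True, "lag": 3, "t1_correct": True},
        {"t2_present": True, "same_category": True, "lag": 7, "t1_correct": True},
        {"t2_present": True, "same_category": False, "lag": 1, "t1_correct": True},
        {"t2_present": True, "same_category": False, "lag": 3, "t1_correct": True},
    ]
    print(t1_accuracy(demo, same_category=True))                     # 0.6666666666666666
    print(t1_accuracy(demo, same_category=True, exclude_lag1=True))  # 1.0
```

In this toy case, the within-category T1 deficit disappears once Lag 1 trials are dropped. This is the pattern the analysis above tests for.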

Using a traditional RSVP paradigm and comparing AB effects for faces and watches, we found initial evidence for a double dissociation. This pattern of results suggests not only that watches do not induce AB on faces but also that faces do not induce AB effects on watches. The double dissociation seemingly supports the multichannel model suggested by Awh et al. (2004). However, there are a few concerns that need to be addressed before accepting these findings as unequivocal evidence for the multichannel model.

The first is that the reduction in the detection performance at Lag 3 within category might reflect a long-lasting RB rather than an AB. Previous studies showed that RB can be observed among pictures of different items from the same semantic category, even if separated by one unrelated object (Kanwisher et al., 1999). Although in the present study two items of furniture separated T2 from T1, it is possible that the RB lasts longer than previously anticipated. Although both AB and RB are manifestations of processing deficits during RSVP, they are distinguished by several experimental manipulations, which suggests that they are probably associated with different aspects of visual processing (Chun, 1997). Therefore, before drawing conclusions about the structure of the attentional system it is important to verify that the pattern in Experiment 2 reflects the modulation of AB rather than RB.

The second concern is that, although significant, the magnitude of the face–face AB obtained in Experiment 2 was small, indeed much smaller than similar effects found by Awh et al. (2004) in their Experiment 6. This discrepancy might reflect methodological differences (for example, peripheral target presentation in their study as opposed to central presentation in ours); however, given such a small effect, the present AB effects on faces need corroboration.

Finally and most important, the absence of an AB effect when T1 was a face and T2 was a watch in the present study contrasts with the robust AB effect found by Awh et al. (2004) when T1 was a face and T2 was a digit. Beyond the perceptual differences between objects and digits, the face identification task used at T1 by Awh et al. may have been more attention demanding than the race distinction used in the present experiment. The following two experiments were designed to address these issues.

Experiment 3

This experiment was designed to address the putative confound of RB with AB in Experiment 2. The significant AB effect induced by flowers on watches in Experiment 1 indicates that the processing of these two different stimulus categories might engage the same processing resources. This interpretation is in line with theoretically based considerations that led Awh et al. (2004) to restrict the multichannel hypothesis to faces as opposed to objects, rather than assuming categorical encapsulation in general. Consistent with this theoretical constraint, AB effects should be found across different categories of nonface objects. RB, in contrast, is by definition a within-category effect. Therefore, if the double dissociation found in Experiment 2 manifested only RB, it should not replicate across categories of nonface objects.

To disentangle the contribution of AB and RB effects on the attenuation of detection rate at Lag 3, in the present experiment we replicated the design of Experiment 2, this time with two nonface object categories (cars and watches). Because we assumed that the doubly dissociated AB is peculiar to faces, our prediction was that AB would be present for both cars and watches regardless of the T1 category. In contrast, we predicted a double dissociation at Lag 1, where performance should be influenced primarily by RB.

Method

Participants

Thirty-two Hebrew University undergraduates with normal or corrected-to-normal vision participated for class credit or payment. Sixteen were assigned to the T1-car group and the other 16 to the T1-watch group. None of the participants were tested in the previous experiments.

Stimuli

Stimuli were the 230 watches used in Experiment 2 (115 digital and 115 analog) and 230 cars. Among the cars, 115 were oriented to the left side and 115 to the right side.

Tasks and design

In the T1-car group the participants were instructed to discriminate the orientation (left or right) of the first car appearing in each trial and then to detect if a T2 target followed or did not follow T1. As before, in one block T2 was a watch, whereas in the other block T2 was a car. In the T1-watch group the instructions and the tasks were identical to Experiment 2. The order of the blocks was counterbalanced across the participants in each group.

Procedure

The procedure was identical to that used in the previous experiments.

Results and Discussion

As before, the analysis of T2 performance was based only on trials in which T1 performance was accurate (81.4% of the trials in the T1-car group and 86.4% of the trials in the T1-watch group).

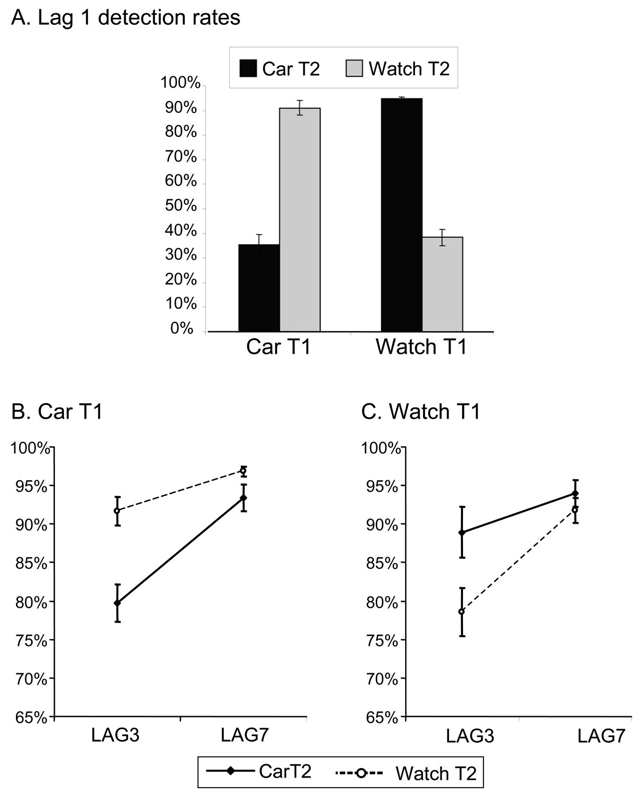

As in Experiment 2, RB doubly dissociated the two groups (Figure 4A). In the T1-car group, detection of cars at Lag 1 was very low compared to the detection of watches, whereas the opposite pattern was observed in the T1-watch group. Similarly, a double dissociation was also observed at Lag 3 and Lag 7. At both these longer lags, the detection of cars was better in the T1-watch group than in the T1-car group, and the inverse pattern was found for watches. It is important, however, that unlike Experiment 2, there was an AB effect across as well as within categories. This is evidenced by attenuated detection of watches as well as cars at Lag 3 compared to Lag 7 for both T1 groups. Yet this effect was not identical within and across categories: the lag effect was larger when the two exemplars were from the same category (~13%) than when T1 and T2 were from different categories (~5%; Figure 4B).

Figure 4.

Target 2 stimulus (T2) detection rates, following correctly categorized Target 1 stimuli (T1). A: Despite the different identities of the stimuli, categorical similarity between T1 and T2 induced a significant repetition blindness effect on both cars and watches. B: A significant attentional blink was found for both cars and watches. C: A significant attentional blink was found for both watches and cars.

The pattern described above was corroborated by mixed-design ANOVAs conducted separately for Lag 1 (the RB effect) and for Lags 3 and 7 (the AB effect). The T1 Group × T2 Type ANOVA with detection rate at Lag 1 as dependent variable showed a significant interaction, F(1, 30) = 428.2, p < .001. Planned t tests showed that both effects were highly significant, t(15) = 13.13, p < .001, and t(15) = 15.967, p < .001, for T1-car and T1-watch groups, respectively, and that the RB effect was similar across groups, t(30) = 1.250, p = .22.

The AB effect was examined by a T1 Group (T1 car, T1 watch) × T2 Type (car, watch) × Lag (Lag 3, Lag 7) mixed model ANOVA. This analysis revealed a significant second-order interaction between the three factors, F(1, 30) = 16.4, p < .001, which was further elaborated by separate T2 Type × Lag ANOVAs for each group. Significant interactions indicated that in both T1 groups the AB effect within category (13.7% and 13.2% for the car and watch categories, respectively) was larger than across categories (5.2% and 5.1% for the T1-car and T1-watch groups, respectively), F(1, 15) = 10.8, p < .005, and F(1, 15) = 6.5, p < .05, for the T1-car and T1-watch groups, respectively. It is important to note, however, that in the present experiment cars and watches blinked each other reciprocally. Planned t tests revealed a significant across-category car–watch AB effect in the T1-car group, t(15) = 3.401, p < .005, and a marginally significant one in the T1-watch group, t(15) = 1.932, p < .07.
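Within each T1 group, the T2 Type × Lag interaction compares the within-category lag effect with the across-category lag effect. In a 2 × 2 fully within-subjects design, the interaction F(1, n − 1) equals the square of a one-sample t computed on each participant's difference of differences, so the contrast can be sketched compactly. The function name and the (Lag 3, Lag 7) tuple format below are hypothetical, and the numbers are illustrative only.

```python
import math
from statistics import mean, stdev


def interaction_contrast(within, across):
    """Test whether the lag effect is larger within than across categories.

    within, across: one (lag3_rate, lag7_rate) tuple per participant.
    Returns (t, F, df), where F = t**2 is the 2 x 2 interaction F(1, df).
    """
    diffs = [(w7 - w3) - (a7 - a3)
             for (w3, w7), (a3, a7) in zip(within, across)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, t ** 2, n - 1


if __name__ == "__main__":
    within = [(0.80, 0.95), (0.78, 0.90), (0.82, 0.96)]
    across = [(0.90, 0.95), (0.88, 0.92), (0.90, 0.96)]
    t, f, df = interaction_contrast(within, across)
    print(f"t({df}) = {t:.2f}, F(1, {df}) = {f:.2f}")
```

With 16 participants per group, as here, the same contrast yields F(1, 15), the degrees of freedom reported for the separate T2 Type × Lag ANOVAs.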

T1 discrimination was similar for cars and watches, F(1, 30) = 2.8, p = .10. However, as in Experiment 2, discrimination was more accurate when T2 was from a different category than when it was from the same category as T1, F(1, 30) = 13.2, p < .01. Although the interaction was not significant, F(1, 30) = 3.4, p = .075, it is noteworthy that the category similarity effect was considerably larger for cars (8.1%) than for watches (2.6%). When the same ANOVA was repeated after excluding the trials in which T2 was at Lag 1, T1 performance in the within-category condition (85%) did not differ from T1 performance when T1 and T2 were from different categories (87.5%), F(1, 30) = 2.9, p = .10. In addition to showing an interesting reciprocal interference between the first and second stimuli in RB, the absence of backward interference with T1 performance in the within-category blocks at Lag 3 and Lag 7 supports our assumption that RB is not effective at these lags.

Because RB does not account for the reduction of T2 detection at Lag 3 relative to Lag 7 when T1 and T2 stimuli are from different categories, the present results confirm the existence of an AB effect across different object categories. By extension, these results suggest that the AB effects found within but not across categories in Experiment 2 reflect, at least partly, some type of categorical specificity in the processing resources used by faces and those used by objects. However, the lag effects were significantly larger within than across stimulus categories, which might suggest that RB contributed to the reduction of T2 detection at Lag 3 in the within-category condition. This conclusion is also supported by the similar detection performance at Lag 7 in the across- and within-category conditions (95.4% and 92.6%, respectively). Note, however, that although RB could in principle account for the significant lag effect within faces in Experiment 2, it cannot account for the absence of such an effect when T1 was a face and T2 was a watch. Nor can it account for the absence of a lag effect when T1 was a watch and T2 was a face. Because the absence of an AB effect on T2 watches when faces were processed at T1 was crucial for our interpretation, and because the overall AB effect between faces was small, we sought to replicate these findings in an additional experiment.

Experiment 4

In the present experiment we had two major goals. One was to replicate the absence of AB effects on T2 watches when faces were processed at T1. The second was to verify the role of attention in the AB effect on faces by increasing the difficulty of the T1 task, rendering it more taxing on processing resources (Chun & Potter, 1995; Shapiro, Schmitz, Martens, Hommel, & Schnitzler, 2006). In addition, because we were not interested in RB effects, we did not use the Lag 1 condition. Instead, we introduced a Lag 9 condition, which allowed us to explore putative AB effects at a longer T1–T2 SOA (630 ms).

Method

Participants

Twenty-four undergraduates from the Hebrew University with normal or corrected-to-normal vision participated for class credit or payment. None of the participants in this experiment had ever taken part in RSVP experiments before. The 24 participants were selected from a group of 29 on the basis of a criterion of more than 60% correct discrimination of T1 faces.

Stimuli

The T2 stimuli were the grayscale photographs of faces and watches used in Experiment 2. In addition, a new set of 225 faces of Israelis (half men and half women) and 224 faces of Indians (half men and half women) was used as T1. All T1 faces were equated for luminance and size, and the hairline and any paraphernalia were excluded.

Tasks and design

The RSVP procedure was similar to that used in Experiment 2. The T1 task was face–race discrimination. Participants were instructed to wait for the end of the RSVP series and, after the question mark appeared, to press one button if T1 was an Israeli face and another button if T1 was an Indian face. To ensure that this task was sufficiently demanding yet still feasible, we chose the task and the particular faces on the basis of a pilot study in which participants demonstrated above-chance discrimination performance.

Because in this experiment we were interested only in comparing the AB effect induced by T1 faces on the detection of T2 faces and T2 watches, the design of Experiment 2 was reduced to one session with faces as T1. Hence each participant was tested in two blocks of 450 trials each. In each block, 50% of the trials included a T2 stimulus, which was a face in one block and a watch in the other (counterbalanced across participants). Participants were required to detect whether a T2 stimulus appeared after the T1 face and to provide both T1 and T2 responses by button press at the end of each trial. There were 75 trials for each T2-present lag: Lag 3 (SOA = 210 ms), Lag 7 (SOA = 490 ms), and Lag 9 (SOA = 630 ms).
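The three SOAs listed above are consistent with a constant onset-to-onset interval of 70 ms per RSVP item (210/3 = 490/7 = 630/9 = 70 ms). That per-item value is inferred from the reported numbers rather than stated in this section, so the following Python sketch, with the hypothetical helpers `t1_t2_soa` and `trials_per_lag`, is only a consistency check of the design arithmetic, including the 75 T2-present trials per lag in each 450-trial block.

```python
# Per-item stimulus onset asynchrony (SOA), inferred from the reported
# Lag 3 / Lag 7 / Lag 9 values; an assumption, not a stated parameter.
ITEM_SOA_MS = 70

TRIALS_PER_BLOCK = 450      # reported block length
T2_PRESENT_FRACTION = 0.5   # 50% of trials contained a T2
LAGS = (3, 7, 9)            # T2-present lags used in Experiment 4


def t1_t2_soa(lag: int, item_soa_ms: int = ITEM_SOA_MS) -> int:
    """Return the T1-T2 SOA (ms) when T2 appears `lag` items after T1."""
    if lag < 1:
        raise ValueError("lag must be a positive integer")
    return lag * item_soa_ms


def trials_per_lag() -> int:
    """Split the T2-present trials of one block evenly across the lags."""
    t2_present = int(TRIALS_PER_BLOCK * T2_PRESENT_FRACTION)
    return t2_present // len(LAGS)


if __name__ == "__main__":
    for lag in LAGS:
        print(f"Lag {lag}: SOA = {t1_t2_soa(lag)} ms")
    print(f"T2-present trials per lag: {trials_per_lag()}")
```

Running it prints SOAs of 210, 490, and 630 ms and 75 T2-present trials per lag, matching the design described above.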

Procedure

The time course and experimental procedures in this experiment were identical to those described in Experiment 2.

Results and Discussion

The discrimination between Israeli and Indian faces was above chance (70.6%) but considerably lower than the discrimination between Asian and Caucasian faces in Experiment 2 (83.7%). The following analyses were based only on trials in which the response to T1 was correct.
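Because T2 performance is scored only on trials with a correct T1 response, the lag effect is computed from conditional detection rates (detection at Lag 7 minus detection at Lag 3). The following Python sketch illustrates that scoring logic under stated assumptions: the `Trial` record, its field names, and the toy trials are hypothetical and are not the study's data or analysis code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    """Hypothetical record of one T2-present RSVP trial."""
    lag: int           # serial position of T2 relative to T1
    t1_correct: bool   # was the T1 discrimination response correct?
    t2_detected: bool  # did the participant report seeing T2?


def t2_given_t1_rates(trials):
    """Proportion of T2 detections at each lag, using T1-correct trials only."""
    hits = defaultdict(int)
    counts = defaultdict(int)
    for trial in trials:
        if not trial.t1_correct:
            continue  # trials with an incorrect T1 response are excluded
        counts[trial.lag] += 1
        hits[trial.lag] += int(trial.t2_detected)
    return {lag: hits[lag] / counts[lag] for lag in counts}


def lag_effect(rates, short_lag=3, long_lag=7):
    """AB index: detection rate at the long lag minus that at the short lag."""
    return rates[long_lag] - rates[short_lag]


if __name__ == "__main__":
    # Toy trials for illustration only (not data from the experiments).
    toy = [
        Trial(3, True, True), Trial(3, True, True),
        Trial(3, True, False), Trial(3, True, True),
        Trial(3, False, False),  # excluded: T1 response was incorrect
        Trial(7, True, True), Trial(7, True, True),
        Trial(7, True, True), Trial(7, True, True),
    ]
    rates = t2_given_t1_rates(toy)
    print(rates)              # {3: 0.75, 7: 1.0}
    print(lag_effect(rates))  # 0.25
```

Filtering before counting matters: the trial with an incorrect T1 response contributes neither a hit nor a denominator, so it cannot lower the Lag 3 rate.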

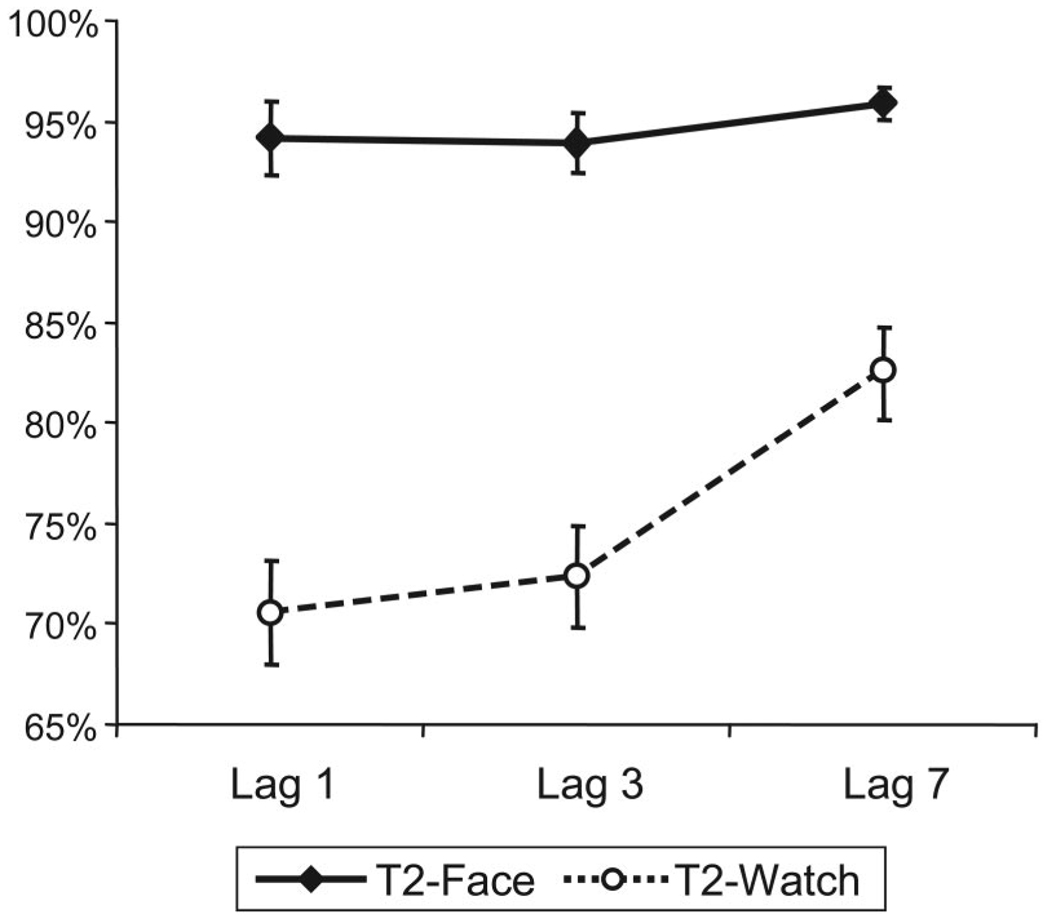

As evident in Figure 5, the discrimination between T1 Israeli and T1 Indian faces reduced detection of both watches and faces in the T2 position. This reduction is reflected in ~9% lower detection at Lag 3 relative to Lag 7 for both faces and watches. For both faces and watches there was no marked change between detection at Lag 7 and Lag 9. Interestingly, although T1 was a face, and notwithstanding the AB effect, faces were detected slightly better (81.33%) than watches (76.73%).

Figure 5.

Target 2 stimulus (T2) detection levels for faces and watches following a Target 1 stimulus (T1) face discrimination task. Difficulty affected the overall performance level. The attentional blink effects are larger than in previous experiments; however, contrary to Experiment 2, the demanding T1-face discrimination task induced an attentional blink for both T2-face and T2-watch detection. Note also that performance for faces and watches is not significantly different; both reach a plateau at Lag 7.

These effects were analyzed using a repeated-measures ANOVA with the factors T2 type (face, watch) and lag (Lag 3, Lag 7, Lag 9). The main effect of T2 type was not significant, suggesting that across lags, faces and watches were detected at a similar level, F(1, 23) = 2.8, p = .11. A significant main effect of lag, F(2, 46) = 23.290, p < .001, together with the absence of an interaction, F(2, 46) < 1, indicated that the AB effects were similar for T2 faces and T2 watches. Planned comparisons indicated that for both faces and watches detection was better at Lag 7 than at Lag 3, F(1, 23) = 25.208, p < .001, whereas the difference between Lag 7 and Lag 9 was not significant, F(1, 23) = 1.6, p = .22.

An ANOVA of T1 performance with the factors T2 type (face, watch) and lag (3, 7, 9) showed that T1 discrimination was lower in the T2-face block than in the T2-watch block, F(1, 23) = 5.32, p < .05. In addition, performance on T1 was significantly affected by the T2 lag, F(2, 46) = 5.47, p < .01. Planned comparisons revealed that this effect was due to a significant decrease in accuracy when T2 appeared at Lag 3 compared to when it appeared at Lag 7, F(1, 23) = 12.25, p < .01, whereas T1 discrimination was similar for trials in which T2 appeared at Lag 7 and at Lag 9, F(1, 23) = 1.59, p = .22. It is important to note that there was no significant interaction between the two factors, F(2, 46) < 1.00, indicating that for both the T2-face and T2-watch conditions the backward effects from T2 to T1 were limited to Lag 3.

The results of this experiment limit the interpretation of the double dissociation observed in Experiment 2. Using a more resource-demanding face discrimination task at T1 (as evident in the dramatically reduced T1 discrimination accuracy), we were able to augment the AB effects on faces, as expected. This augmentation supports the interpretation of the Lag 3 effect as AB, because RB should not be influenced by task modulations. However, in parallel to the augmentation of AB on faces, a similar effect was found on T2 watches. This cross-category effect from faces to watches is indeed similar to that reported by Marois, Yi, and Chun (2004), who found significant AB effects from faces (T1) to scenes (T2). Moreover, our present AB effect from faces to watches was observed with an easy T2 task (detection), whereas in the previous study the T2 task required a more difficult categorization between indoor and outdoor scenes. Apparently, across-category AB effects when T1 is a face can be obtained in some circumstances but not in others, probably depending on the difficulty of the tasks employed.

Regardless of its source, this cross-category AB effect casts doubt on the generality of the double dissociation found in Experiment 2, and thus on the multichannel model suggested by Awh et al. (2004) to explain the relative immunity of faces to AB. Whereas the immunity of faces to AB from nonface categories is apparently stable across task difficulty, the inverse effect (immunity of objects to AB from faces) implied by a strong version of the multichannel model depends on the amount of available resources. In other words, the resources used by faces and nonface objects, at least for detection, are not completely independent. We therefore turn to other accounts of the face immunity to AB, accounts that draw on the particular processes that are involved in face but not object perception. Although Awh et al. interpreted their findings in terms of a multichannel model, they also associated these channels with different modes of perception for faces and objects. Specifically, they suggested that faces use resources needed for global (or configural) perception whereas nonface objects (or symbols) use other types of resources dedicated to local (or featural) processing. In the next experiment in this series, we tested this hypothesis.

Experiment 5

The goal of this experiment was to explore the hypothesis that the immunity of faces to AB reflects the allocation of resources to global (or configural) aspects of the stimulus when faces are seen but to local (or featural) aspects for nonface objects. The design and procedures, as well as the stimulus categories, were similar to those used in Experiment 1 (flowers as T1, Caucasian faces and analog watches as T2). The T2 task, however, was discrimination rather than detection. We chose a discrimination task that required the processing of features rather than configuration for both faces and watches. For faces, the task required discrimination of gaze direction (eyes looking to the left or to the right in faces oriented toward the viewer); for watches, the task required discrimination of whether the minute hand pointed after the half hour (that is, left of the vertical axis) or before the half hour (that is, right of the vertical axis). Recall that Awh et al.’s (2004) multichannel model suggested that the channels are distinguished by the level of analysis, configural (for faces) versus featural (for objects). Accordingly, this model should predict that if the processing of faces and objects uses the same type of process (featural in this case), the same channel will be occupied, and consequently the face immunity to AB from objects will break down. In other words, this interpretation predicts that if faces require featural processing, they will be blinked by processing flowers, just as watches are. The present experiment tested this prediction directly.

Method

Participants

Twenty undergraduates from the Hebrew University with normal or corrected-to-normal vision participated in this experiment for class credit or payment. None of the current participants had taken part in previous AB experiments.

Stimuli

Stimuli were grayscale photographs of 120 different Caucasian faces (front close-up portraits), 120 different analog watches, 120 flowers (60 tulips and 60 sunflowers), and 240 items of furniture (chairs, tables, cupboards, and sofas). Among the faces, there were 60 in which the pupils were edited to look right and 60 in which they were edited to look left. Among the watches, in 60 the minute hand pointed to the right of the vertical axis, and in the other 60 the minute hand pointed to the left of the vertical axis. Within each group of watches, the hour hand pointed to different corresponding locations, either to the left or to the right of the vertical axis, selected at random. All photographs were the same size, were presented at the center of a 19″ (48 cm) monitor, and subtended a visual angle of about 3°.

Design, tasks, and procedures

As in Experiment 1 (in which T1 was a flower), the participants were tested in two blocks, one including faces as T2 and the other including watches as T2. The order of the blocks was counterbalanced across participants. The T1 task in both blocks was the tulip–sunflower discrimination task used in Experiment 1. Unlike in Experiment 1, however, a T2 was presented on every trial. In the T2-face block the participants were instructed to determine whether the eyes looked to the right or to the left. In the T2-watch block, the participants were instructed to determine whether the minute hand of the analog watch was pointing to a time before or after the half hour. All other procedural details were identical to those used in Experiment 1.

Results and Discussion

T1 performance was slightly higher in the T2-face block (89.8%) than in the T2-watch block (84.2%), t(19) = −2.312, p < .05. The analysis of T2 was based only on trials in which T1 discrimination was accurate.

As evident in Figure 6, across lags, decisions about gaze direction were considerably more accurate (91.1%) than decisions about minute-hand orientation (73.0%). Further, as in Experiment 1, AB reduced performance at Lag 3 in the T2-watch block but not in the T2-face block.

Figure 6.

Target 2 stimulus (T2) discrimination levels for faces and watches following a Target 1 stimulus (T1) flower task. Difficulty affected the overall performance level; the pattern seen here is very similar to that of Experiment 1. The flower task induced a significant attentional blink for watches but not for faces.

The pattern revealed in Figure 6 was corroborated by a T2 Type (face, watch) × Lag (Lag 3, Lag 7) repeated-measures ANOVA. This analysis showed significant main effects of T2 type, F(1, 19) = 31.5, p < .001, and of lag, F(1, 19) = 33.6, p < .001. Most important, however, a significant interaction between the two factors showed that the effect of lag was different for the two types of T2, F(1, 19) = 25.6, p < .001. Planned t tests demonstrated that whereas for T2 watches the 9.2% difference between accuracy at Lag 7 and accuracy at Lag 3 was significant, t(19) = 6.398, p < .001, the 1.3% difference between Lags 3 and 7 in T2-face accuracy was not, t(19) = 1.441, p = .17.

The absence of the AB for T2 face following T1 flower despite the need to process the face features casts doubt on the assumption that the relative immunity of faces to AB reflects independence between configural and featural processes. Note also that this dissociation occurred despite the higher T1 discrimination accuracy in the faces block. Although it is possible that configural processes are applied by default when faces are processed (see, e.g., the composite face effect; Young, Hellawell, & Hay, 1987), new evidence suggests that when global shapes of faces are encountered attention is immediately allocated to the eyes region where a local/featural process is attempted (Bentin, Golland, Flaveris, Robertson, & Moscovitch, 2006). Adding a task that requires local perception (of gaze direction) should, at the very least, occupy the featural channel in addition to the configural one. Hence, these data together with the previous experiments call for a reevaluation of previous accounts of the face immunity to the AB and the data supporting them.

General Discussion

The goal of the present study was to extend the evidence for face immunity to the AB and to explore its cognitive underpinnings. In Experiment 1 we replicated the finding that faces are immune to AB (Awh et al., 2004) and extended this dissociation to the conventional RSVP paradigm with T1 and T2 stimuli that were perceptually rich, lifelike photographs. Further, Experiment 1 extended Awh et al.’s (2004) results, showing that AB effects dissociate faces and objects even if the T2 task is simple detection rather than identification. In Experiment 2 we found some evidence for a double dissociation between faces and objects in the AB paradigm: not only did objects not elicit an AB on faces, but faces also did not elicit an AB on objects. In contrast to the absence of across-category AB effects between faces and watches, in Experiment 3 we found across- as well as within-category AB effects between cars and watches. Further, the decrement in performance at Lag 3 relative to Lag 7 was larger within than across categories, suggesting that RB may have reinforced the AB effect. Yet, the overall pattern of the results in Experiment 3 showed that the double dissociation between faces and watches cannot be explained by RB alone. In an attempt to replicate the absence of AB effects from faces to objects found in Experiment 2 and to augment the AB effect on faces, we used a more resource-demanding T1 task in Experiment 4. In this experiment the AB effect on faces increased, but we also found a cross-category AB effect from faces to watches. The latter result limits a strong interpretation of the double dissociation found in Experiment 2. Finally, in Experiment 5 we found that the immunity of faces to AB effects when T1 is an object holds even if featural processing strategies are encouraged by the T2 task.
This outcome questions the hypothesis that the immunity of faces to AB is solely explained by different perceptual processing modes applied to the identification of faces and objects (configural and featural, respectively; cf. Awh et al., 2004). Additional accounts should therefore be considered.

First, the significant AB effects across flowers and watches and across cars and watches in concert with the absence of AB effects across flowers and faces and across watches and faces suggest that this immunity is peculiar to faces (or other objects of expertise), rather than a general dissociation between pictures of different perceptual categories. Why would faces be immune to AB induced by processing other stimulus categories but not to the processing of other faces?

Previous studies suggested that two major factors interact to produce the AB effect. One is visual masking of T2 by the subsequent distracter presented in the same spatial location (Brehaut et al., 1999). The second is the control and deployment of a limited-capacity attentional system constrained either during the identification of visual patterns (Chun & Potter, 1995; Raymond, Shapiro, & Arnell, 1995), at a later stage in the information processing sequence (Jolicoeur, Dell’Acqua, & Crebolder, 2001), or at multiple locations (Ruthruff & Pashler, 2001). The multichannel account of face immunity to AB draws on both these sources. On the one hand, if different perceptual processes occupy different channels, masking would be more efficient from objects (items of furniture in this case) to objects than from objects to faces, because in the former case the same processing channel is occupied. On the other hand, if different attention resources are independently allocated to different processing channels, faces would be immune because allocating resources to the face-processing channel would not draw on the same pool of resources that is used to process the T1 object. Although the present data cast doubt on the multichannel hypothesis, they suggest that attentional and perceptual differences between faces and objects probably interact in sparing faces from the deleterious effects of the AB. The considerably larger AB effect on faces in Experiment 4 relative to Experiment 2, as well as the fact that this effect dissipates with increasing SOA between T1 and T2, indicates that the amount of resources needed to accomplish the T1 task influences the probability of detecting a face at T2. This pattern contradicts the view that face processing does not require attention (e.g., Lavie, Ro, & Russell, 2003; Young, Ellis, Flude, McWeeny, & Hay, 1986). Moreover, it suggests that a limited-capacity system is involved in the AB for faces.
However, the similar AB effect from faces to faces and from faces to watches in Experiment 4 suggests that the resource channels used for detecting faces and objects are not independent. When substantial resources are engaged in the T1 face discrimination task, the detection of watches is also affected. Moreover, despite the parallel AB effects, the overall probability of face detection was still numerically higher (albeit not significantly so) than the probability of watch detection in both Experiments 2 and 4. This trend, along with the conspicuously high performance with faces overall, suggests that faces are more easily detected among items of furniture than watches are. One possible interpretation is a sort of high-level “pop-out” effect for faces (Hershler & Hochstein, 2005); that is, the global and local masking effects of the distracter set (items of furniture) were reduced for faces relative to other nonface categories such as cars or watches (see Chun & Potter, 1995).

According to this interpretation of the masking account, the detection of stimuli from one category among stimuli from another category is governed by the degree of cohesion within categories and the perceptual variance across categories, as well as by the observers’ expertise with these categories. Following this line of thought, the global structure of faces is highly coherent and well distinguished from any other category. By contrast, the global shapes of different watches or cars vary more and might share more perceptual properties with the distracting items of furniture. Following the reasoning suggested by Visser et al. (2004), this difference could account, at least partly, for why AB effects were smaller for faces than for watches and cars when these targets had to be detected among items of furniture (see Footnote 7). The validity of this perceptual discrimination factor (coupled with the existence of reliable mental representations of these faces) has been convincingly demonstrated in a recent study by Jackson and Raymond (2006). These authors used faces as distracters as well as T2 stimuli in an RSVP paradigm, manipulating the familiarity of the faces. They found significant AB effects for unfamiliar faces (regardless of whether the distracters were other unfamiliar faces or familiar faces) but no AB effects for highly familiar or famous faces. Obviously, the detection of faces among objects requires different perceptual and attentional mechanisms than the identification of a particular prelearned face among other faces.
Nevertheless, these data converge with ours in demonstrating that (a) face processing requires attention resources when faces cannot be easily discriminated; (b) a multichannel hypothesis based on different processing modes for faces and objects cannot solely account for face immunity to AB, even if the distracters are nonfaces; and (c) the salience of faces among nonface distracters is an important factor in determining the susceptibility of faces to AB. Finally, we should also remember that humans are experts in processing faces (Bukach, Gauthier, & Tarr, 2006) and therefore might process faces more efficiently, rendering them easier to detect. Therefore, it is possible that, on the one hand, faces are easier to detect among objects as perceptual images and, on the other hand, this process is additionally facilitated by experience. The consequence of both these factors might be that fewer resources are necessary to detect faces, accounting for their immunity to AB without additional assumptions of resource-independent channels.

Perceptual discrimination, however, cannot account for the entire pattern of effects unveiled in the present study. In particular, this type of interference cannot account for the higher within- than across-category AB effects when cars and watches were compared. Recall that in both T1-type conditions the distracters were the same. This pattern corresponds with previous work showing that the magnitude of the AB is determined by the degree of similarity between the attentional demands of T1 and T2 (Raymond et al., 1995). Indeed, several authors have suggested that the categorical specificity reflected by the AB might index the cost of reconfiguring the attentional system for processing T2 after the processing of T1 (Enns et al., 2001), or even across distracters and targets (Visser et al., 2004).

In conclusion, the present study suggests that the occasional immunity of faces to AB reflects the perceptual salience of the face category, which might reduce the amount of resources needed to detect faces among distracters from a different category. Furthermore, these data demonstrate that even when the task is as simple as detecting faces among distracters from a different category, the availability of processing resources (as determined by the complexity of the T1 task) influences performance. This conceptualization is in line with Chun and Potter’s (1995) elegant demonstration that the detection of T2 in RSVP is a function of both global and local target–distracter discriminability and is modulated by the difficulty of T1 processing. In fact, the present data extend the two-stage model of the AB suggested by these authors, showing that similar factors account for AB to faces, objects, and symbols. The apparent immunity of faces to AB does not reflect a special attentional or perceptual channel but rather their particular perceptual salience. It remains to be seen whether similar effects are found for other objects of expertise.

Acknowledgments

This study was funded by National Institute of Mental Health Grant R01 MH 64458 to Shlomo Bentin. We thank Tal Golan for running Experiment 4.

Footnotes

1. In a recent study, Jackson and Raymond (2006) demonstrated significant AB effects when T2 unfamiliar faces had to be identified among distracters that were familiar or other unfamiliar faces. We refer to this relevant study in the General Discussion.

2. Evidence that faces can be blinked by other rich stimuli such as watches (albeit less strongly than by other faces) has recently been provided (Einhäuser, Koch, & Makeig, 2007). However, the procedure used in that study was not standard for AB. First, T1 was not prioritized over T2 (both were equally task relevant). Second, the task required study of both T1 and T2 for later forced-choice identification. Hence, the effects observed in that paradigm could reflect visual short-term memory capacity rather than attentional factors.

3. A similar analysis using all three lags as levels of the lag factor yielded similar results.

4. For example, when the T2-face task was discrimination between Caucasian and Asian faces and the T2-watch task was discrimination between analog and digital watches (following the same T1 sunflower–tulip discrimination), accuracy for faces was 85% at Lag 3 and 88% at Lag 7, whereas for watches it was 88% at Lag 3 and 94% at Lag 7, with no main effect of T2 type and a T2 Type × Lag interaction, F(1, 11) = 4.32, p < .06.

5. The data of 4 participants in the T1-watch group were lost by mistake.

6. Imagine an Asian face immediately followed by a Caucasian face, or a digital watch immediately followed by an analog watch.

7. Note, however, that unlike Visser et al. (2004), we found significant (albeit not very large) AB effects even when the targets and distracters were from different categories.

Contributor Information

Ayelet N. Landau, Department of Psychology, Hebrew University of Jerusalem, Jerusalem, Israel, and Department of Psychology, University of California, Berkeley

Shlomo Bentin, Department of Psychology and Center for Neural Computation, Hebrew University of Jerusalem.

References

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception: I. Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cerebral Cortex. 1999;9:416–430. doi: 10.1093/cercor/9.5.415. [DOI] [PubMed] [Google Scholar]

- Austen EL, Enns TJ. Change detection in an attended face depends on the expectation of the observer. Journal of Vision. 2003;3:64–74. doi: 10.1167/3.1.7. [DOI] [PubMed] [Google Scholar]

- Awh E, Serences J, Laurey P, Dhaliwal H, van der Jagt T, Dassonville P. Evidence against a central bottleneck during the attentional blink: Multiple channels for configural and featural processing. Cognitive Psychology. 2004;48:95–126. doi: 10.1016/s0010-0285(03)00116-6. [DOI] [PubMed] [Google Scholar]

- Bentin S, Allison T, Puce A, Perez A, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bentin S, Golland Y, Flaveris A, Robertson LC, Moscovitch M. Processing the trees and the forest during initial stages of face perception: Electrophysiological evidence. Journal of Cognitive Neuroscience. 2006;18:1406–1421. doi: 10.1162/jocn.2006.18.8.1406. [DOI] [PubMed] [Google Scholar]

- Brehaut JC, Enns JT, Di Lollo V. Visual masking plays two roles in the attentional blink. Perception and Psychophysics. 1999;61:1436–1488. doi: 10.3758/bf03206192. [DOI] [PubMed] [Google Scholar]

- Brown V, Huey D, Findlay JN. Face detection in peripheral vision: Do faces pop out? Perception. 1997;26:1555–1570. doi: 10.1068/p261555. [DOI] [PubMed] [Google Scholar]

- Bukach CM, Gauthier I, Tarr MJ. Beyond faces and modularity: The power of an expertise framework. Trends in Cognitive Science. 2006;10:159–166. doi: 10.1016/j.tics.2006.02.004. [DOI] [PubMed] [Google Scholar]

- Chun MM. Types and tokens in visual processing: A double dissociation between the attentional blink and repetition blindness. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:738–755. doi: 10.1037//0096-1523.23.3.738. [DOI] [PubMed] [Google Scholar]

- Chun MM, Potter MC. A two-stage model for multiple target detection in rapid serial visual presentation. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:109–127. doi: 10.1037//0096-1523.21.1.109. [DOI] [PubMed] [Google Scholar]

- Clark VP, Parasuraman R, Keil K, Kulansky R, Fannon S, Maisog J-M, et al. Selective attention to face identity and color studied with fMRI. Human Brain Mapping. 1997;5:293–297. doi: 10.1002/(SICI)1097-0193(1997)5:4<293::AID-HBM15>3.0.CO;2-F. [DOI] [PubMed] [Google Scholar]

- Downing P, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001 September 28;293:2470–2473. doi: 10.1126/science.1063414. [DOI] [PubMed] [Google Scholar]

- Driver J, Davis J, Ricciardelli P, Kid P, Maxwell R, Baron-Cohen S. Gaze perception triggers reflexive visual spatial orienting. Visual Cognition. 1999;6:509–540. [Google Scholar]

- Duncan J, Ward R, Shapiro KL. Direct measurement of attentional dwell time in human vision. Nature. 1994 May 26;369:313–315. doi: 10.1038/369313a0.

- Einhäuser W, Koch C, Makeig S. The duration of attentional blink in natural scenes depends on stimulus category. Vision Research. 2007;47:597–607. doi: 10.1016/j.visres.2006.12.007.

- Enns JT, Visser TAW, Kawahara J, Di Lollo V. Visual masking and task switching in the attentional blink. In: Shapiro K, editor. The limits of attention: Temporal constraints in human information processing. New York: Oxford University Press; 2001. pp. 68–81.

- Evans KK, Treisman A. Perception of objects in natural scenes: Is it really attention free? Journal of Experimental Psychology: Human Perception and Performance. 2005;31:1476–1492. doi: 10.1037/0096-1523.31.6.1476.

- Furey ML, Tanskanen T, Beauchamp MS, Avikainen S, Uutela K, Hari R, Haxby JV. Dissociation of face-selective cortical responses by attention. Proceedings of the National Academy of Sciences. 2006 January 24;103:1065–1070. doi: 10.1073/pnas.0510124103.

- Gauthier I, Curby KM. A perceptual traffic jam on Highway N170. Current Directions in Psychological Science. 2005;14:30–33.

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nature Neuroscience. 2003;6:428–432. doi: 10.1038/nn1029.

- Giesbrecht B, Di Lollo V. Beyond the attentional blink: Visual masking by object substitution. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:1454–1466. doi: 10.1037//0096-1523.24.5.1454.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biological Psychiatry. 2002;51:59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: A PET-rCBF study of selective attention to faces and locations. Journal of Neuroscience. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Hershler O, Hochstein S. At first sight: A high-level pop out effect for faces. Vision Research. 2005;45:1707–1724. doi: 10.1016/j.visres.2004.12.021.

- Jackson MC, Raymond JE. The role of attention and familiarity in face identification. Perception and Psychophysics. 2006;68:543–557. doi: 10.3758/bf03208757.

- Jolicoeur P. Concurrent response-selection demands modulate the attentional blink. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:1097–1113. doi: 10.1037//0096-1523.25.4.1097.

- Jolicoeur P, Dell’Acqua R, Crebolder JM. The attentional blink bottleneck. In: Shapiro K, editor. The limits of attention: Temporal constraints in human information processing. New York: Oxford University Press; 2001. pp. 81–99.

- Kahneman D. Attention and effort. Englewood Cliffs, NJ: Prentice-Hall; 1973.

- Kanwisher NG. Repetition blindness: Type recognition without token individuation. Cognition. 1987;27:117–143. doi: 10.1016/0010-0277(87)90016-3.

- Kanwisher N, Yin C, Wojciulik E. Repetition blindness for pictures: Evidence for the rapid computation of abstract visual descriptions. In: Coltheart V, editor. Fleeting memories: Cognition of brief visual stimuli. Cambridge, MA: MIT Press; 1999. pp. 119–150.

- Landau AN. The role of attention in the processing of faces: An attentional blink ERP study. Jerusalem, Israel: Hebrew University; 2004. Unpublished master’s thesis.

- Lavie N, Ro T, Russell C. The role of perceptual load in processing distractor faces. Psychological Science. 2003;14:510–515. doi: 10.1111/1467-9280.03453.

- Lueschow A, Sander T, Boehm SG, Nolte G, Trahms L, Curio G. Looking for faces: Attention modulates early occipitotemporal object processing. Psychophysiology. 2004;41:350–360. doi: 10.1111/j.1469-8986.2004.00159.x.

- Marois R, Yi DJ, Chun MM. The neural fate of consciously perceived and missed events in the attentional blink. Neuron. 2004;41:465–472. doi: 10.1016/s0896-6273(04)00012-1.

- Navon D, Gopher D. On the economy of the human-processing system. Psychological Review. 1979;86:214–255.

- O’Craven KM, Downing PE, Kanwisher N. fMRI evidence for objects as the units of attentional selection. Nature. 1999 October 7;401:584–587. doi: 10.1038/44134.

- Palermo R, Rhodes G. The influence of divided attention on holistic face perception. Cognition. 2002;82:225–257. doi: 10.1016/s0010-0277(01)00160-3.

- Palermo R, Rhodes G. Change detection in the flicker paradigm: Do faces have an advantage? Visual Cognition. 2003;10:683–713.

- Pashler H. The psychology of attention. Cambridge, MA: MIT Press; 1998.

- Raymond JE, Shapiro KL, Arnell KM. Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception and Performance. 1992;18:849–860. doi: 10.1037//0096-1523.18.3.849.

- Raymond JE, Shapiro KL, Arnell KM. Similarity determines the attentional blink. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:653–662. doi: 10.1037//0096-1523.21.3.653.

- Ro T, Russell C, Lavie N. Changing faces: A detection advantage in the flicker paradigm. Psychological Science. 2001;12:94–99. doi: 10.1111/1467-9280.00317.

- Rossion B, Kung CC, Tarr MJ. Competition within the early perceptual processing of faces in the human occipito-temporal cortex. Proceedings of the National Academy of Sciences. 2004 October 5;101:14521–14526. doi: 10.1073/pnas.0405613101.

- Ruthruff E, Pashler HR. Perceptual and central interference in dual-task performance. In: Shapiro K, editor. The limits of attention: Temporal constraints in human information processing. New York: Oxford University Press; 2001. pp. 100–123.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. Journal of Neuroscience. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Shapiro KL, Arnell KM, Raymond JE. The attentional blink. Trends in Cognitive Sciences. 1997;1:291–296. doi: 10.1016/S1364-6613(97)01094-2.

- Shapiro KL, Caldwell J, Sorensen RE. Personal names and the attentional blink: A visual “cocktail party” effect. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:504–514. doi: 10.1037//0096-1523.23.2.504.

- Shapiro K, Schmitz F, Martens S, Hommel B, Schnitzler A. Resource sharing in the attentional blink. NeuroReport. 2006;17(2):163–166. doi: 10.1097/01.wnr.0000195670.37892.1a.

- VanRullen R. On second glance: Still no high-level pop-out effect for faces. Vision Research. 2006;46:3017–3027. doi: 10.1016/j.visres.2005.07.009.

- Visser TAW, Bischof WF, Di Lollo V. Rapid serial visual distraction: Task-irrelevant items can produce an attentional blink. Perception and Psychophysics. 2004;66:1418–1432. doi: 10.3758/bf03195008.

- Ward R, Duncan J, Shapiro K. Effects of similarity, difficulty, and nontarget presentation on the time course of visual attention. Perception and Psychophysics. 1997;59:593–600. doi: 10.3758/bf03211867.

- Wickens CD. Processing resources in attention. In: Parasuraman R, Davies DR, editors. Varieties of attention. Orlando, FL: Academic Press; 1984. pp. 63–102.

- Young AW, Ellis AW, Flude DM, McWeeny KH, Hay DC. Face–name interference. Journal of Experimental Psychology: Human Perception and Performance. 1986;12:466–475. doi: 10.1037//0096-1523.12.4.466.

- Young AW, Hellawell D, Hay DC. Configural information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.