Abstract

INTRODUCTION

Although the substrates that mediate singing abilities in the human brain are not well understood, invasive brain mapping techniques used for clinical decision making such as intracranial electrocortical testing and Wada testing offer a rare opportunity to examine music-related function in a select group of subjects, affording exceptional spatial and temporal specificity.

METHODS

We studied eight patients with medically refractory epilepsy undergoing indwelling subdural electrode seizure focus localization. All patients underwent Wada testing for language lateralization. Functional assessment of language and music tasks was done by electrode grid cortical stimulation. One patient was also tested non-invasively with functional MRI. Functional organization of singing ability compared to language ability was determined based on four regions-of-interest: left and right inferior frontal gyrus (IFG), and left and right posterior superior temporal gyrus (pSTG).

RESULTS

In some subjects, electrical stimulation of dominant pSTG can interfere with speech and not singing, whereas stimulation of non-dominant pSTG can interfere with singing and not speech. Stimulation of the dominant IFG tends to interfere with both musical and language expression, while non-dominant IFG stimulation was often observed to cause no interference with either task. Finally, stimulation of areas adjacent to but not within non-dominant pSTG typically does not affect either ability. FMRI mappings of one subject revealed similar music/language dissociation with respect to activation asymmetry within the regions-of-interest.

CONCLUSION

Despite inherent limitations with respect to strictly research objectives, invasive clinical techniques offer a rare opportunity to probe musical and language cognitive processes of the brain in a select group of patients.

Keywords: singing laterality, cortical stimulation, fMRI, Wada test, music ability

1. INTRODUCTION

Recent advances in noninvasive neuroimaging techniques have enabled the functional study of diverse aspects of music production, perception, and discrimination. Noninvasive functional imaging such as functional magnetic resonance imaging (fMRI) provides functional localization (Kleber et al., 2007; Saito et al., 2007; Ozdemir et al., 2006; Riecker et al., 2000) and lateralization (Ozdemir et al., 2006; Riecker et al., 2000) that are often complementary to traditional lesion studies (Peretz et al., 1997; Terao et al., 2006; Racette et al., 2006; Kohlmetz et al., 2003; Warren et al., 2003; Buklina and Skvortsava, 2006; Lechevalier et al., 2006; Takeda et al., 1990). Although careful studies of lesion patients and increasing sophistication in noninvasive imaging techniques have allowed more detailed exploration of singing ability, at present a complete description of the neural substrate underlying this complex human skill is still lacking.

Cognitive research using a variety of neuroimaging modalities has demonstrated a significant overlap between many music-related functions and language processing (Ozdemir et al., 2006; Riecker et al., 2000; Patel et al., 2003; Platel et al., 1997; Maess et al., 2001; Koelsch et al., 2002, 2005a, and 2006). As such, in attempting to define music function, many have drawn analogies to human language. Both abilities share fundamental characteristics such as syntactic structure, tonal and temporal properties, a vocabulary (phrases, chords/words), contextual composition, and written representations (Koelsch et al., 2004, 2005b, 2006; Patel et al., 2003 and 1998). Similar to language, music production and appreciation can be separated into expressive and receptive facets. For example, singing can be viewed as a form of acoustic communication between the singer and the listener. A framework designed for the study of language function, by assessing functional localization and lateralization, has established a strong structural specialization within the underlying neural substrates that mediate speech processing (Ojemann 1989 and Ojemann 1989 1993; Petrovich et al., 2007). It is generally recognized that language function is clustered in perisylvian regions of the dominant hemisphere, with frontal and temporal components implicated in the expressive and receptive aspects of language. It is also well established that language function is carried out predominantly in one cerebral hemisphere, typically the left (Wada and Rasmussen, 1960; Binder et al., 1996). Given the analogies between music and language, it is plausible that experimental approaches that focus on functional lateralization and localization within the inferior frontal gyrus (IFG) and the posterior superior temporal gyrus (pSTG) may be useful in the evaluation of musical abilities.

Lesion studies have verified the importance of non-language-dominant pSTG for specialized music processing and have demonstrated dissociations between music ability and language in a number of patients. Numerous case reports have shown dissociations in brain-injured individuals between speech function and singing ability; left hemisphere damage may produce aphasia with or without inability to sing, while right-sided lesions can result in isolated musical deficits (Terao et al., 2006; Racette et al., 2006; Buklina and Skvortsava, 2006; Lechevalier et al., 2006; Kohlmetz et al., 2003; Warren et al., 2003; Takeda et al., 1990). This phenomenon, however, has not been well studied in patients without gross structural lesions.

As an alternative to lesion studies, invasive clinical testing, administered for functional localization by subdural electrocortical stimulation and for functional lateralization by intracarotid amytal testing (Wada test), offers functional mapping approaches with superior spatial and temporal resolution (Wada and Rasmussen 1960; Lesser et al., 1984; Luders et al., 1986). Thus, the understanding of cognitive functions such as musical skills can be informed by results gathered from gold-standard clinical testing methodologies.

In this study, we present data acquired in eight clinical cases using cortical stimulation from implanted subdural electrodes and Wada testing to assess various music and language tasks. One subject also underwent fMRI mapping. In these eight cases, we explored the hypothesis that singing ability is mediated by specialized functionality within the putative language regions of the cortex, and/or their homologues in the contralateral hemisphere, and therefore that singing laterality in these regions can be described relative to a subject’s language dominance. To our knowledge, no study of this type has previously been presented in the literature and therefore this series represents a unique opportunity to probe the underlying neural organization of human singing ability.

2. METHODS

Subjects

We studied eight epilepsy patients with indwelling subdural electrodes implanted for seizure focus localization (3 female, 5 male). In two subjects, grid implantation was bilateral (subjects 1 and 3); the rest of the subjects had unilateral grid implantations. The Wechsler Adult Intelligence Scale revealed normal Full Scale, Verbal, and Performance IQs, and Memory Quotients in all eight subjects. All patients had language dominance determined by Wada testing. Patients gave informed written consent for all of the clinical and research studies performed including subdural electrode placement and stimulation. The research procedures used in this study were approved by the Institutional Review Boards (IRB) of the National Institutes of Health Clinical Center and of Brigham and Women’s Hospital, in compliance with the United States National Research Act, and comply fully with the ethical human research principles as specified by The Declaration of Helsinki.

One subject (number 8), a 31-year-old right-handed man with a background as a professional pianist and singer, was also studied noninvasively using fMRI. Subject 4, a 19-year-old male, presented with mild right-sided hemiparesis and atypical right-dominant language, likely related to a long-standing epileptogenic lesion in the left hemisphere. A summary of clinical characteristics is shown in Table 1. Language dominance, additional subject demographics, and lists of stimulation tasks used for each subject are shown in Table 2.

Table 1.

Clinical Characteristics of Subjects.

| Subject | Etiology (if known) | Duration (years) | Neurological Exam | CT/MRI | PET | EEG Interictal D/C | EEG Ictal Onset |
|---|---|---|---|---|---|---|---|
| 1 | … | 28 | normal | normal | normal | B temp L > R | Scalp: indeterminate B subdural: 16/17 L 1/17 R mesial temp |
| 2 | ?infection | 15 | normal | normal | R inferior temp hypo | temp R | Scalp: diffuse R R subdural: mesial temp |
| 3 | … | 22 | normal | normal | L temp hypo | B temp L > R | Scalp: L predominance B subdural: 6/6 L mesial temp |
| 4 | ?perinatal hypoxia | 18 | mild cognitive deficit mild RHP | normal | L temp hypo | frontotemp L | Scalp: diffuse L L subdural: 10/10 lateral and inferior temp |
| 5 | … | 9 | normal | normal | normal | temp R > L | Scalp: R temp R subdural: 34/34 inferolateral temp |
| 6 | ?perinatal hypoxia | 23 | normal | L frontal WM hyper-intensity | L temp hypo | frontotemp L | Scalp: frontotemp L subdural: 4/5 mesial temp 1/5 inferior frontal |
| 7 | … | 28 | normal | normal | L temp hypo | frontotemp L | Scalp: L hemisphere L subdural: 13/13 mesial temp |
| 8 | … | 18 | normal | normal | … | temp R | R subdural: 35/35 R inferomesial temp |

R --- right

L --- left

B --- bilateral

temp --- temporal

D/C --- epileptiform discharges

WM --- white matter

hypo --- hypometabolism

HP --- hemiparesis

Table 2.

Subject Demographics, Wada Dominance, and Stimulation Tasks.

| Subject | Age/Sex | Handedness | Musical Experience | Wada Dominance | Subdural Grid(s) | Stimulation Tasks |
|---|---|---|---|---|---|---|
| 1 | 31M | Right | no musical training, amateur chorus singer | Left | Bilateral | SgS, RdP, RcL |
| 2 | 28F | Right | no formal musical training | Left | Right | SgS, RdP, RcL |
| 3 | 28F | Right | no formal musical training | Left | Bilateral | SgS, RdP, RcL, TM |
| 4 | 19M | Right | no formal musical training | Right | Left | SgS, RdP, RcL |
| 5 | 30F | Right | no formal musical training | Left | Right | SgS, RdP, RcL |
| 6 | 32M | Left | no formal musical training | Right | Left | SgS, RdP, RcL, HmM |
| 7 | 29M | Right | no formal musical training | Left | Left | SgS, RdP, RcL, HmM |
| 8 | 31M | Right | professional musician: piano player, band singer | Left | Right | SgS, RdP, RcL, HmM |

RdP --- reading of prose passages

RcL --- recitation of song lyrics

SgS --- singing of familiar songs

HmM --- humming of melodies

TM --- alternating tongue movements

Cortical stimulation methods

Electrodes were implanted under general anesthesia via unilateral or bilateral frontotemporal craniotomies. Electrodes consisted of 8 × 4, 8 × 2, 8 × 1, 3 × 3, 5 × 3, 5 × 2, 5 × 1, and 4 × 1 arrays of platinum contacts with 3 mm diameter exposed surface and centers separated by 10 mm. Electrical stimulation consisted of 4–5 sec trains of 2–10 mA, 1 msec biphasic square-wave pulses at 60 or 75 Hz generated by a constant current stimulator (Nuclear-Chicago, IL, USA; subject 8—Ojemann stimulator, Radionics, Inc., Burlington, MA, USA). Stimulus intensity began at 2 mA and was increased in 1 or 2 mA steps until the occurrence of afterdischarges, seizure, pain, or impaired task performance. Bipolar stimulation of adjacent contacts was generally employed, though occasionally a distant reference, inactive both in terms of spontaneous epileptiform activity and stimulation effects, was used.

Tasks included singing familiar songs, reading prose passages, reciting song lyrics, humming melodies, and alternating tongue movements. Not all subjects performed all of these tasks (see Table 2). Songs were chosen by the subjects, in some cases after suggestions by the investigators, for familiarity of both words and music. Stimulation began 3–10 sec after initiation of the task. Singing and recitation were performed one to five times at each stimulation site; repetitions were limited by subject fatigue, pain (usually ipsilateral), or afterdischarges. A performance was considered to have failed if it stopped abruptly for at least three seconds during a given stimulation.

Data recording and analysis

During cortical stimulation, both positive and negative responses were evaluated: those causing disruptions in task performance or those failing to cause disruptions of task performance, respectively. All stimulation responses were assessed based on their proximity to the putative language areas and their non-language-dominant homologues, defined as four regions-of-interest (ROIs): dominant IFG and pSTG and non-dominant IFG and pSTG. All positive and negative response sites were confirmed by 1–3 separate trials each, and their relative anatomical positions with respect to ROIs were recorded. Electrode position was measured at grid insertion and confirmed by skull x-ray and intraoperative photography at the time of electrode removal, or in subject 8, by CT and registration using a surgical navigation system (GE InstaTrak 3500 Plus, Milwaukee, WI, USA).

FMRI methods (subject 8)

Stimulus paradigms were presented on a laptop computer (Dell Inc., Round Rock, TX, USA) running the Presentation software package, version 9.70 (Neurobehavioral Systems Inc., Davis, CA, USA). Visual stimuli were presented through MRI-compatible video goggles (Resonance Technology, Los Angeles, CA, USA). Auditory stimuli were presented through headphones (Avotec Inc., Stuart, FL, USA). The subject produced overt responses during language and singing tasks. He was instructed to verbalize his responses, or to sing, while minimizing motion—speaking or singing while not moving his head, lips, or tongue (Suarez et al., 2008). Behavioral language tasks included overt antonym-generation and noun-categorization performed within event-related paradigms consisting of two separate runs, each with jittered inter-stimulus-intervals [M = 8.3 sec, SD = 5.1 sec]. The subject was asked to say a word having the opposite meaning for antonym-generation, or for the noun-categorization task to state whether the noun presented described something that is “living” or “non-living.” Each word was presented for 2.0 seconds in the center of the screen. A total of 50 stimuli words were delivered during each run; run durations were approximately 7.5 minutes each.

A standard boxcar acquisition paradigm was also used, which incorporated language-specific, music-specific, and passive rest tasks. The activation blocks, each 150 sec long, were divided into five distinct task epochs lasting 30 sec each: 1) passive listening to instrumental piano music (“Being Alive” by Stephen Sondheim, a piece the subject plays proficiently), 2) passive listening to an innocuous spoken narrative, 3) passive listening to a lyrical song (a contemporary soft-rock song of his choosing), 4) passive listening to 0.5 and 2 kHz pure tones, and 5) singing of his favorite song. The six activation blocks (made up of counter-balanced combinations of the five tasks described above) were separated by 20 sec rest blocks. The total run duration was approximately 18 minutes.
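As a rough consistency check on the blocked paradigm, the stated durations can simply be added up. The sketch below is illustrative only: the number of rest blocks in the run is not given in the text, so `n_rest_blocks` is an assumed parameter, and the constant and function names are hypothetical.

```python
# Durations taken from the description of the blocked paradigm.
EPOCH_SEC = 30            # each task epoch
EPOCHS_PER_BLOCK = 5      # five task epochs per activation block
N_ACTIVATION_BLOCKS = 6   # six activation blocks per run
REST_SEC = 20             # each rest block

BLOCK_SEC = EPOCH_SEC * EPOCHS_PER_BLOCK  # length of one activation block


def run_duration_sec(n_rest_blocks):
    """Total run length for an ASSUMED number of rest blocks."""
    return N_ACTIVATION_BLOCKS * BLOCK_SEC + n_rest_blocks * REST_SEC


# Assumption: between five rests (only between blocks) and seven rests
# (also before the first and after the last block).
for n_rest in (5, 6, 7):
    total = run_duration_sec(n_rest)
    print(f"{n_rest} rest blocks: {total} s ({total / 60:.1f} min)")
```

Under these assumptions the total comes to roughly 17 minutes, broadly in line with the approximate run duration reported; any remaining difference would depend on details not described here.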

MR images were acquired at 3T using a GE Signa system (General Electric, Milwaukee, WI, USA) equipped with a quadrature head coil. Blood-oxygen-level-dependent (BOLD) functional imaging was performed using echo-planar imaging (EPI) in contiguous axial slices (4 mm thick with no gaps between slices). In-plane spatial resolution was 2 × 2 mm²; TR = 2000 msec; TE = 40 msec; flip angle = 90°; 25.6 cm field of view (FOV); 128 × 128 matrix acquisition. Whole brain T1-weighted axial 3D-SPGR (SPoiled Gradient Recalled echo) was also acquired (TR = 7500 msec; TE = 30 msec; FOV = 25.6 cm; flip angle = 20°; 256 × 256 matrix acquisition; 124 slices; voxel size = 1 × 1 × 1 mm³) to provide a high-resolution anatomic reference frame for subsequent overlay of functional activation maps.
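The in-plane resolutions quoted above follow directly from the field of view and the acquisition matrix. The short sketch below reproduces that arithmetic; the helper name `in_plane_voxel_mm` is hypothetical, and a square field of view and matrix are assumed.

```python
import math


def in_plane_voxel_mm(fov_cm, matrix_size):
    """In-plane voxel edge length in mm, assuming a square FOV and matrix."""
    return fov_cm * 10.0 / matrix_size


epi_voxel = in_plane_voxel_mm(fov_cm=25.6, matrix_size=128)   # functional EPI
spgr_voxel = in_plane_voxel_mm(fov_cm=25.6, matrix_size=256)  # anatomical SPGR

print(f"EPI in-plane voxel:  {epi_voxel:.1f} mm")
print(f"SPGR in-plane voxel: {spgr_voxel:.1f} mm")
```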

FMRI data analysis

FMRI activation images were generated using the SPM2 (Statistical Parametric Mapping) software package (Wellcome Department of Imaging Neuroscience, London, U.K.). Functional maps were divided into two groups: 1) language-related tasks, and 2) music-related tasks. The language-related group consisted of the antonym-generation and noun-categorization (acquired in event-related paradigms), and passive listening of a narrative (acquired in a blocked paradigm). The music-related group included three different contrasts, acquired in the blocked paradigm: piano music versus tones, lyrical music versus narrative, and singing versus rest. Functional activation patterns were assessed at a threshold of p < 10⁻⁴, uncorrected. However, lateralization indices (LI) were calculated using a threshold-independent methodology that compares whole, weighted voxel distributions between the left and right hemispheres, as we previously described in Branco et al. (2006) and Suarez et al. (2008). This method defines asymmetric activation distributions as having an absolute LI value greater than 0.1, positive values denoting leftward asymmetry and negative values denoting rightward asymmetry. The LIs calculated in IFG and pSTG were compared across the six tasks tested.
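To make the sign convention concrete, the sketch below computes a laterality index in its conventional form, LI = (L − R) / (L + R), from summed activation weights in homologous left and right regions. This is a simplified stand-in and not the threshold-independent weighted-distribution method of Branco et al. (2006) used in this study; the function name and the example weights are hypothetical.

```python
def laterality_index(left_weight, right_weight):
    """Conventional LI = (L - R) / (L + R).

    Inputs are non-negative summed activation weights for the left and
    right region-of-interest. Positive results indicate leftward asymmetry,
    negative results rightward asymmetry.
    """
    if left_weight < 0 or right_weight < 0:
        raise ValueError("weights must be non-negative")
    total = left_weight + right_weight
    if total == 0:
        raise ValueError("no activation in either hemisphere")
    return (left_weight - right_weight) / total


# Hypothetical weights, for illustration only (not data from this study).
example_li = laterality_index(left_weight=120.0, right_weight=80.0)
print(f"LI = {example_li:+.2f}")
```

By construction the index is bounded between −1 (entirely right-sided) and +1 (entirely left-sided), which is why a fixed cutoff on its absolute value can be applied across tasks.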

3. RESULTS

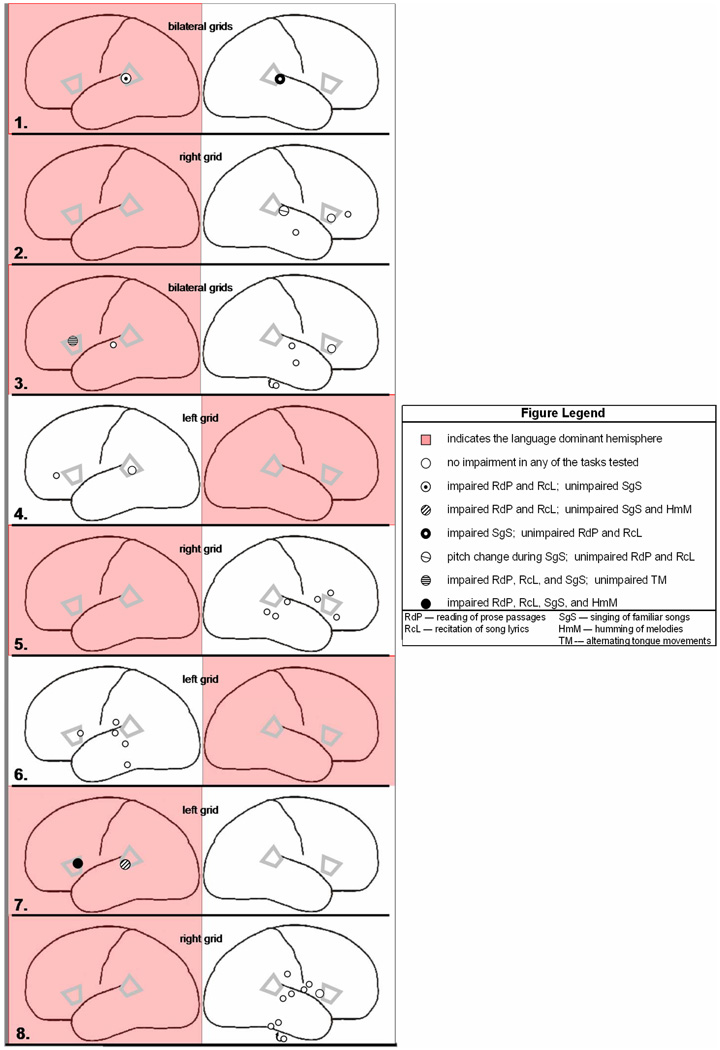

Figure 1 summarizes all the stimulation sites and the resulting behavioral effects observed during each of the tasks tested; the dominant hemisphere is highlighted in red.

Figure 1.

Composite results of stimulation testing administered during speech and singing, and the corresponding behavioral effect observed in each subject (subject’s number is indicated at the lower corner of each panel). Gray markers indicate the location of four ROIs: the inferior frontal gyrus of both hemispheres (IFG), and the posterior portion of the superior temporal gyrus (pSTG) of both hemispheres. All negative and positive response sites are shown, along with relative proximities to IFG and pSTG. Indicated for each subject are: language-dominant hemisphere (shown in red), and the hemisphere of subdural grid placement.

Subject No. 1

Stimulation of dominant pSTG caused impairments in reading of prose passages and in recitation of song lyrics. However, singing of familiar songs was not impaired by stimulation of the same site. By contrast, stimulation of non-dominant pSTG did cause impairments in singing of familiar songs, whereas neither reading of prose passages nor recitation of song lyrics was affected.

After stimulation of dominant pSTG, causing impairments in reading of prose passages and recitation of song lyrics, subject 1 stated that he “just went blank and could not think of what the words were.” After stimulation of non-dominant pSTG, causing impairments in singing of familiar songs, subject 1 stated “these feelings are so hard to describe; I definitely could not sing anymore, I could not get the tune out. I knew the words but I could not get them out… I felt confused like not knowing what to do next.”

Subject No. 2

Stimulation of a region anterior to non-dominant pSTG caused a pitch change during singing of familiar songs; however, no impairment in either reading of prose passages or recitation of song lyrics was observed during stimulation of the same site. Stimulation of non-dominant IFG demonstrated no impairment in reading of prose passages, recitation of song lyrics, or singing of familiar songs. Additional stimulation of sites in the non-dominant hemisphere anterior to IFG and in the anterior portion of the middle temporal gyrus caused no effect during any of the tasks tested.

Subject No. 3

Stimulation of dominant IFG caused impairments in reading of prose passages, recitation of song lyrics, and singing of familiar songs, although no impairment in alternating tongue movements was observed during stimulation of the same site. By contrast, stimulation of non-dominant IFG caused no effect during any of the tasks tested. Stimulation of a site anterior to dominant pSTG similarly caused no effect in any of the tasks tested. Additional stimulation of sites in the non-dominant hemisphere in the middle superior temporal gyrus, anterior middle temporal gyrus, and parahippocampal gyrus also caused no effect during any of the tasks.

After stimulation of dominant IFG, causing impairments in reading of prose passages, recitation of song lyrics, and singing of familiar songs, subject 3 stated that “everything just would not come.”

Subject No. 4

Stimulation in non-dominant pSTG caused no impairment in reading of prose passages, recitation of song lyrics, or in singing of familiar songs. Stimulation of a site in the non-dominant hemisphere anterior to IFG caused no effect during any of the tasks tested.

Subject No. 5

Various sites in close proximity to non-dominant IFG (superior, posterior, and anterior to the ROI) were stimulated during reading of prose passages, recitation of song lyrics, and singing of familiar songs; no impairment in any of the tasks was observed. Additional stimulation of sites anterior to non-dominant pSTG, and of two sites in the posterior portion of the middle temporal gyrus, similarly demonstrated no effect during any of the tasks tested.

Subject No. 6

Stimulation of non-dominant IFG caused no impairment in reading of prose passages, recitation of song lyrics, singing of familiar songs, or humming of melodies. Stimulation of sites in the non-dominant hemisphere in the inferior parietal lobule, posterior middle temporal gyrus, posterior inferior temporal gyrus, and superior temporal gyrus anterior to pSTG similarly demonstrated no effect during any of the tasks tested.

Subject No. 7

Stimulation of dominant IFG caused impairments in reading of prose passages, recitation of song lyrics, singing of familiar songs, and humming of melodies. Stimulation of dominant pSTG caused impairments in reading of prose passages and recitation of song lyrics, but not in singing of familiar songs or humming of melodies.

After stimulation of dominant pSTG, causing impairments in reading of prose passages and recitation of song lyrics, subject 7 stated “I just couldn’t get [the words] out… I just kept losing it.” He likened his inability to speak to having “lockjaw.”

Subject No. 8

Stimulation of non-dominant IFG caused no impairment in reading of prose passages, recitation of song lyrics, singing of familiar songs, or humming of melodies. Stimulation of sites in the non-dominant hemisphere in the posterior portion of inferior temporal gyrus, inferior parietal lobule, parahippocampal gyrus, and superior temporal gyrus anterior to pSTG similarly demonstrated no effect during any of the tasks tested.

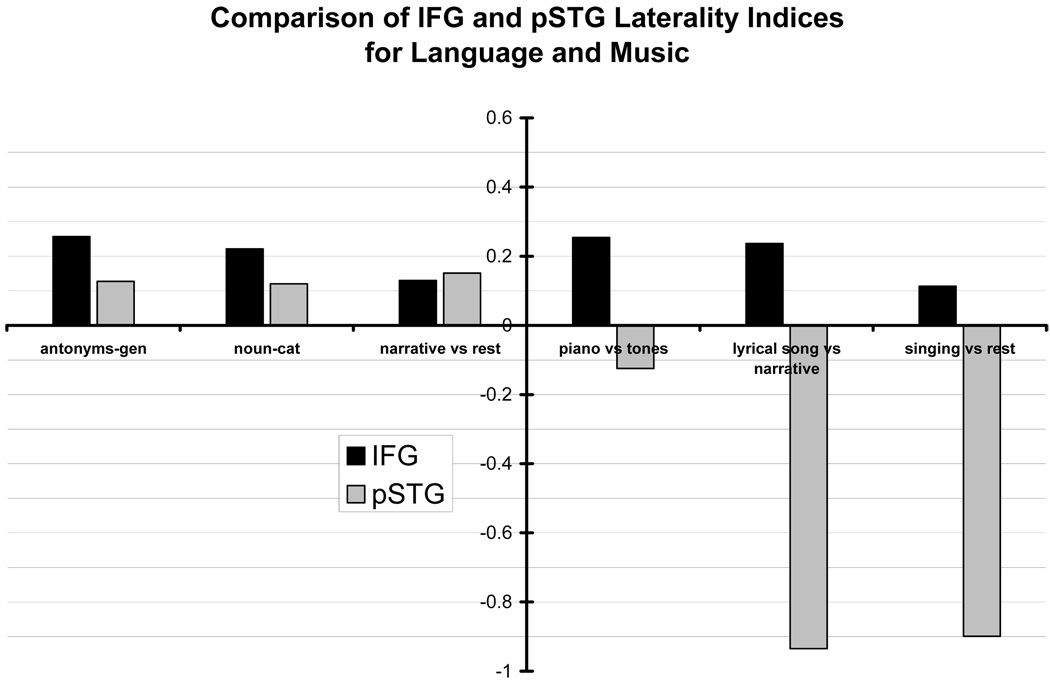

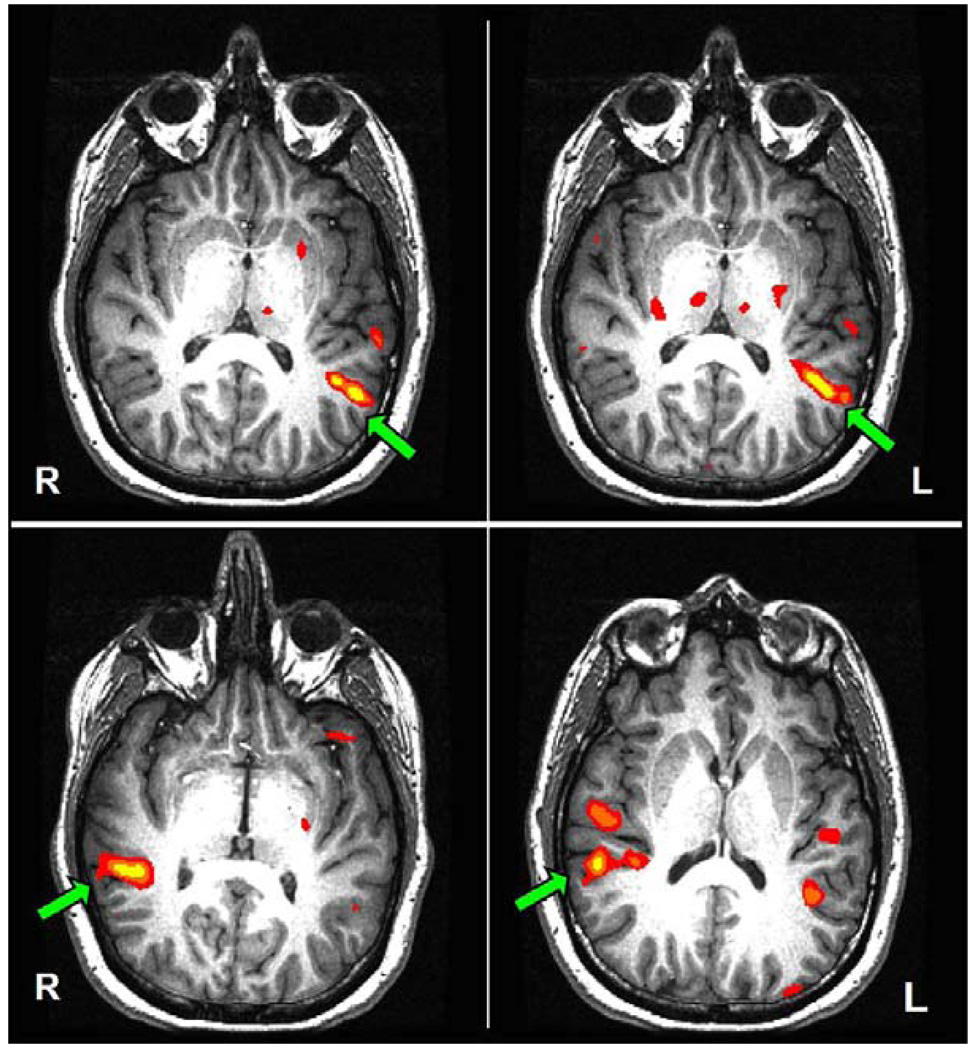

All of the fMRI maps for this subject, both language-related and music-related, demonstrated asymmetry of IFG activation favoring the left hemisphere. The IFG LI for antonym-generation = 0.26, for noun-categorization = 0.22, for narrative versus rest = 0.13, for piano versus tones = 0.25, for lyrical song versus narrative = 0.24, and for singing versus rest = 0.11. See Figure 2. However, the fMRI activation patterns observed in pSTG differed from those in IFG (see Figure 3). Within pSTG, we observed leftward-asymmetries for language-related tasks, and rightward-asymmetries for music-related contrasts. As illustrated in Figure 2, the pSTG LI for antonym-generation = 0.13, for noun-categorization = 0.12, for narrative versus rest = 0.15, for piano versus tones = −0.13, for lyrical song versus narrative = −0.94, and for singing versus rest = −0.9.
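The pattern in these values can be tabulated by applying the asymmetry criterion from the Methods (absolute LI greater than 0.1; positive leftward, negative rightward) to each reported index. The sketch below uses only the LI values quoted in this section; the variable and function names are hypothetical.

```python
CUTOFF = 0.1  # absolute LI above this value is treated as asymmetric

# task: (group, IFG LI, pSTG LI), as reported for subject 8
REPORTED_LI = {
    "antonym-generation": ("language", 0.26, 0.13),
    "noun-categorization": ("language", 0.22, 0.12),
    "narrative vs. rest": ("language", 0.13, 0.15),
    "piano vs. tones": ("music", 0.25, -0.13),
    "lyrical song vs. narrative": ("music", 0.24, -0.94),
    "singing vs. rest": ("music", 0.11, -0.90),
}


def direction(li):
    """Classify an LI as leftward, rightward, or not asymmetric."""
    if li > CUTOFF:
        return "left"
    if li < -CUTOFF:
        return "right"
    return "none"


for task, (group, ifg_li, pstg_li) in REPORTED_LI.items():
    print(f"{task:28s} {group:9s} IFG: {direction(ifg_li):6s} pSTG: {direction(pstg_li)}")
```

Read this way, IFG is leftward for every contrast, whereas pSTG is leftward for the three language tasks and rightward for the three music contrasts, which is the dissociation described in the text.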

Figure 2.

Laterality indices (LI) for the inferior frontal gyrus (IFG, black bars) and the posterior portion of the superior temporal gyrus (pSTG, gray bars) of subject 8. Left panel indicates LIs from language tasks, right panel indicates LIs from music contrasts. An LI with an absolute value greater than 0.1 is defined as asymmetric where positive values (upward) indicate leftward-asymmetry and negative values (downward) indicate rightward-asymmetry. Both language and music contrasts resulted in leftward-asymmetry in IFG; however, pSTG demonstrated leftward-asymmetry for language contrast, but rightward-asymmetry for music tasks.

Figure 3.

Functional MRI activation maps of subject 8 (threshold at p < 10⁻⁴, uncorrected). Top left panel: antonym-generation. Top right panel: noun-categorization. Lower left panel: singing vs. rest. Lower right panel: piano music vs. pure tones. Green arrows outline the dominant activation in the posterior portion of the superior temporal gyrus (pSTG) for the various contrasts.

4. DISCUSSION

Patients undergoing intracranial testing for planning of seizure surgery provide a rare opportunity to investigate the brain basis of cognitive functions, including musical performance. We presented a series of eight patients who underwent cortical stimulation testing of musical and language functions, and one who also underwent fMRI mappings. Our objective was to explore how singing abilities appear to be supported by the putative language regions and/or their non-dominant homologues; specifically, we focused on IFG and pSTG. Using cortical stimulation testing, we demonstrated dissociation between singing and speech in pSTG for two subjects and for subject 8 by multiple fMRI mappings. Correspondingly, we demonstrated an overlap of speech and singing abilities in dominant IFG for two subjects using cortical stimulation testing and for subject 8 by multiple fMRI mappings. We also showed, for six subjects, that cortical stimulation of frontal lobe sites within or in close proximity to non-dominant IFG does not impair singing or speech function; and for five subjects, that stimulation of temporal lobe regions outside non-dominant pSTG does not impair singing or speech performance.

Due to the inevitable limitations of cortical mapping by way of grid stimulation (e.g., technical matters, subject fatigue, and clinically driven electrode coverage), we were not able to conclusively establish analogous patterns in all of our case studies. It is important to note, however, that cortical stimulation testing of six subjects provided corroborating evidence that stimulation outside the targeted ROIs resulted in no impairment. This lends some support to the conclusion that stimulation of many regions outside IFG and pSTG does not cause the disruptions of speech and/or singing function that were demonstrated when stimulation was applied within IFG and pSTG in multiple subjects.

The dissociation between speech and singing on posterior temporal stimulation observed in some of our subjects suggests distinct neuroanatomical substrates for motorically similar tasks, with control of production determined by whether or not the words are expressed in a musical context. A similar phenomenon was first reported by Dalin (1745), who described a subject with profound expressive aphasia but preserved ability to sing the words to familiar songs. This particular dissociation, expressive aphasia without amusia, has since been observed in numerous lesion cases (Racette et al., 2006; Warren et al., 2003; Buklina and Skvortsava, 2006; Lechevalier et al., 2006; Takeda et al., 1999), and is consistent with noninvasive activation studies using fMRI (Ozdemir et al., 2006; Riecker et al., 2000). Some early investigators, however, linked both language and musical deficits to left hemisphere injury (Henschen, 1926). Our results would predict this linkage in cases of inferior frontal abnormalities with associated production/execution defects. One might speculate in light of our results during IFG stimulation that the early cases of concordant amusia and aphasia with left hemisphere lesions most likely involved this area. The possibility of a negative motor effect explaining this finding is raised by subject 7, whose humming was disrupted by stimulation of this site, though subject 3 had a similar result without apparent difficulty performing lateral tongue movements. Evidence for left-hemisphere dominance for vocal muscle control supports the intuitively plausible hypothesis that such anterior parietal to posterior IFG regions have a less task-specific and more global motoric basis (Ludlow et al., 1989).

The opposite dissociation, normal expressive language in the presence of a music deficit, has also been observed in lesion cases; this phenomenon generally occurs as a result of a right hemisphere lesion (Peretz et al., 1997; Terao et al., 2006). Localization within the right hemisphere has not been reliably related to the specific type of musical dysfunction, although some have proposed an anterior-posterior expressive-receptive dichotomy analogous to that described in aphasia (Benton, 1977). Our observations, however, suggest at least some posterior superior temporal gyrus involvement in singing; this may be related to the stimulation technique, which typically produces expressive changes posteriorly in temporal regions even during language testing (Luders et al., 1986).

The lack of definite non-dominant hemisphere effect in subject 4, with right hemisphere language dominance, left hemisphere seizure onset, and left temporal lobe coverage extending 8 cm posteriorly, suggests that in this patient language re-organized to the right following a left hemisphere lesion, although musical abilities presumably remained in the right hemisphere. Gordon and Bogen (1974) studied patients with epilepsy during transient hemispheric dysfunction produced by intracarotid injections of sodium amytal (i.e., the Wada test). In 4 of 5 subjects who received left hemisphere injections, after resolution of initial muteness, singing in syllables ("la-la-la") returned to normal several minutes before speech. Alternatively, in 7 of 8 subjects receiving right carotid injections, singing was initially reduced to a near monotone, while language was only minimally impaired. Of note is that unlike direct cortical stimulation which provides very localized testing, Gordon and Bogen observed patients with comparatively widespread dysfunction produced by hemispherical injections.

Assessment of laterality using fMRI can serve as a noninvasive adjunct to standard clinical testing. We assessed fMRI activation for language-specific asymmetries within the ROIs in subject 8 and compared them against the Wada-derived language dominance determination. We found language-specific LIs to be congruent with Wada lateralization results, in each case favoring the left hemisphere. Additionally, our functional maps confirmed that both language and music tasks robustly activated IFG and pSTG areas (Figure 3). In comparing the lateralization patterns between these two types of activation, we noted that for music-related tasks IFG asymmetries coincided with those observed for language-related tasks, while pSTG laterality instead favored the non-language-dominant hemisphere (Figure 2 and Figure 3). These findings are consistent with other fMRI reports that have found similar dissociations (Saito et al., 2007; Ozdemir et al., 2006) and with lesion case reports (Terao et al., 2006; Peretz et al., 1997; Racette et al., 2006; Kohlmetz et al., 2003; Warren et al., 2003; Buklina and Skvortsava, 2006; Lechevalier et al., 2006; Takeda et al., 1999). We further present supporting evidence in this subject by way of cortical stimulation testing, verifying that stimulation of widespread regions outside non-dominant pSTG does not cause disruption of singing ability (Figure 1).

The concept of cerebral dominance for music remains problematic, and appears to vary with the specific nature of the task and the background of the subject (Bogen and Gordon, 1971; Gordon and Bogen, 1974; Hough et al., 1994; Limb et al., 2006b). Several investigators have suggested that melodic aspects of perception and performance tend to be preferentially mediated by the right hemisphere and temporal rhythmic aspects by the left (Limb, 2006a). Some reports have suggested preferentially left-asymmetric activation of the temporal lobes in trained musicians, compared with untrained or nonmusical subjects, when the task is one of music perception (Limb et al., 2006b; Ohnishi et al., 2001; Perry et al., 1999; Zatorre et al., 1994). Overt singing has been explored noninvasively by fMRI in non-diseased populations and has often shown right-dominant processing (Saito et al., 2007; Ozdemir et al., 2006). Conversely, a recent lesion case study describes an individual who, after sustaining a right hemisphere lesion, was unable to retrieve a song when asked to sing, but was able to perform music discrimination tasks correctly (Schön et al., 2003). However, dedicated studies of cerebral laterality comparing musicians to non-musicians on tasks specifically involving the production of music are still lacking. In comparing our fMRI laterality results for pSTG between the more receptive musical task (passive listening to piano music versus tones) and the more productive task (singing versus rest), we observed a much stronger right-lateralization for singing versus rest, and for passive listening to lyrical song versus narrative, than for listening to piano music versus tones (Figure 2 and Figure 3). Given the prior reports of leftward temporal lobe laterality during perceptual music tasks in trained musicians, one might expect left-asymmetry of pSTG for the more receptive music tasks, particularly in subject 8, a professional musician. While we observed right-laterality in pSTG for listening to piano music versus pure tones, this was the least right-asymmetric activation in that region. It is furthermore interesting to note that the piano music used was not chosen at random, but was in fact a piece the subject had performed publicly in the past. In a positron emission tomography (PET) study comparing musicians to non-musicians, Sergent et al. (1992) found that for musical sight-reading translated to piano keyboard performance, musicians tended to demonstrate activity patterns that more closely localized to known verbal performance areas in the left hemisphere. It is plausible that our subject’s familiarity with performing the particular piano piece used might have introduced such linguistic aspects of piano music production into the task, thus accounting for the markedly weaker rightward asymmetry of pSTG activation we observed for that particular contrast. This interpretation, however, warrants further study.

Our subjects varied widely in their degree of musical interest and singing proficiency, and might therefore be expected to maintain right hemisphere dominance more than if they had received more formal training. It may be of interest that the three subjects who showed dissociation between musical and verbal performance, during stimulation testing or fMRI mapping (subjects 1, 7 and 8), were, by consensus, the best singers in terms of melodic accuracy. The task used in this study may relate to the concept of right hemisphere specialization for "holistic" processes (Kupfermann, 1985), in that singing a familiar song is an over-learned, unitary task, whereas reciting the words non-musically is both more analytical and less automatic. Our results could then reflect this division rather than a strictly musical-verbal one.

We observed in five subjects that stimulation within or in close proximity to non-dominant IFG did not produce impairment in any of the tasks we tested, whether musical or linguistic in nature, irrespective of the subject’s degree of musical training or proficiency. These results appear to contradict previous findings of morphometric asymmetry favoring non-dominant IFG in subjects with a strong ability to discriminate musical tones (Hyde et al., 2006), and previous fMRI studies that have found increased activation in non-dominant IFG during singing tasks (Ozdemir et al., 2006). This apparent discrepancy might be reconciled in the context of an interpretation put forth by Saito et al. (2006), who concluded that the fMRI activation asymmetry observed in dominant IFG results from the language-specific text processing that occurs in the singing of lyrics. However, this interpretation was contradicted in subject 7, who upon stimulation of dominant IFG was unable to continue humming melodies, a task which presumably involves neither text processing nor any other language component. Perhaps a more plausible account of the perceived discordance would focus on the inherent differences between activation-based techniques, such as fMRI, and de-activation techniques, such as electrocortical stimulation and Wada testing. These differences additionally serve to emphasize the advantages of stimulation as the clinical gold standard: by inducing temporary deficits (i.e., localized de-activation), it can identify the neural components vital to task performance, whereas non-invasive methodologies yield a more distributed activation map that highlights supportive networks not necessarily critical to the task at hand. It is therefore difficult to generalize electrocortical stimulation findings to non-invasive fMRI results. However, given the limited data presented here, this interpretation necessitates further study, perhaps by way of transcranial magnetic stimulation (TMS), which more closely replicates electrocortical stimulation by similarly disrupting task performance.

Cortical stimulation from implanted grids, while offering a powerful modality for ascertaining critical functional sites, involves logistical and technical issues that can often be prohibitive. First, subject fatigue or pain and technical difficulties, coupled with the restricted coverage afforded by clinical grid placement, often make it infeasible to stimulate all the regions that may be of importance to a given cognitive process. Second, because of the complexity of the tasks and the brevity of stimulation, the sensitivity and specificity of performance measures are difficult to estimate. Third, the myriad factors affecting subject performance can be controlled for only by careful questioning and repeated trials. Fourth, even repeat stimulations at a constant current may not be truly identical, as either adaptation or facilitation of the brain to repeated stimulation is possible. Finally, electrical spread of the stimulus cannot be ruled out even when no afterdischarge is seen.

In summary, despite the limitations that our subjects all had chronic brain abnormalities and therefore possibly abnormal brain organization, that ideal testing coverage was not always available, and that repeated trials were often not possible, our results present persuasive evidence that, in some subjects, electrical stimulation of dominant pSTG can interfere with speech and not singing; that stimulation of the homologous non-dominant pSTG area can interfere with overt singing and not speech; that dominant IFG stimulation tends to interfere with both verbal and musical expression, while non-dominant IFG stimulation causes no interference with either ability; and that stimulation of areas adjacent to but not within non-dominant pSTG typically causes no interference with either ability. These results are consistent with subject 8’s fMRI activation asymmetry, in which leftward IFG activation was observed for all tasks, while differential asymmetry patterns were observed in pSTG: language tasks yielded leftward asymmetry while musical tasks yielded rightward asymmetry.

ACKNOWLEDGMENTS

The subjects' motivation and cooperation made this study possible. Drs. Paul Fedio, Christine Cox, and Aaron Nelson performed neuropsychological testing and assisted with intracarotid amytal testing. Ms. Kathleen Kelley, Ms. Patricia Reeves, Mr. Robert Long, and Mr. Hugh Malek provided invaluable assistance with EEG and video recording. Mr. Joseph Bucolo ably assisted with technical aspects of stimulation. Partial support for this research was provided by National Institutes of Health (NIH) grants: NINDS, K08-NS048063-02 (AJG); NIBIB, T32-EB002177 (ROS); NCRR, U41-RR019703 (AJG) and 3U41RR019703-03S1 (ROS).

REFERENCES

- Benton AL. The amusias. In: Critchley M, Henson RA, editors. Music and the brain. London: Heinemann; 1977. 378-39.

- Binder JR, Swanson SJ, Hammeke TA, Morris GL, Mueller WM, Fischer M, Benbadis S, Frost JA, Rao SM, Haughton VM. Determination of language dominance using functional MRI: a comparison with the Wada test. Neurology. 1996 Apr;46(4):978–984. doi: 10.1212/wnl.46.4.978.

- Bogen JE, Gordon HW. Musical tests for functional lateralization with intracarotid amobarbital. Nature. 1971 Apr 23;230(5295):524–525. doi: 10.1038/230524a0.

- Branco DM, Suarez RO, Whalen S, O'Shea JP, Nelson AP, da Costa JC, Golby AJ. Functional MRI of memory in the hippocampus: Laterality indices may be more meaningful if calculated from whole voxel distributions. Neuroimage. 2006 Aug 15;32(2):592–602. doi: 10.1016/j.neuroimage.2006.04.201.

- Broca PP. Perte de la parole ramolissement chronique et destruction partielle du lobe antérieur gauche de cerveau. Bulletins de la Société d’anthropologie de Paris. 1861;2:235–238.

- Buklina SB, Skvortsova VB. Amusia and its topic specification. Zh Nevrol Psikhiatr Im S S Korsakova. 2007;107(9):4–10.

- Dalin O. K Svenska Vetensk Academi Handligar. Vol. 6. Stockholm; 1745. Berattelse om en dumbe, som Kan siunga; pp. 114–115.

- Gordon HW, Bogen JE. Hemispheric lateralization of singing after intracarotid sodium amylobarbitone. Journal of Neurology, Neurosurgery & Psychiatry. 1974 Jun;37(6):727–738. doi: 10.1136/jnnp.37.6.727.

- Henschen RE. On the function of the right hemisphere of the brain in relation to the left in speech, music, and calculation. Brain. 1926;49:110–126.

- Hough MS, Daniel HJ, Snow MA, O'Brien KF, Hume WG. Gender differences in laterality patterns for speaking and singing. Neuropsychologia. 1994 Sep;32(9):1067–1078. doi: 10.1016/0028-3932(94)90153-8.

- Hyde KL, Zatorre RJ, Griffiths TD, Lerch JP, Peretz I. Morphometry of the amusic brain: a two-site study. Brain. 2006 Oct;129(Pt 10):2562–2570. doi: 10.1093/brain/awl204.

- Kleber B, Birbaumer N, Veit R, Trevorrow T, Lotze M. Overt and imagined singing of an Italian aria. Neuroimage. 2007 Jul 1;36(3):889–900. doi: 10.1016/j.neuroimage.2007.02.053.

- Koelsch S, Fritz T, Schulze K, Alsop D, Schlaug G. Adults and children processing music: an fMRI study. Neuroimage. 2005a May 1;25(4):1068–1076. doi: 10.1016/j.neuroimage.2004.12.050.

- Koelsch S, Gunter TC, v Cramon DY, Zysset S, Lohmann G, Friederici AD. Bach speaks: a cortical "language-network" serves the processing of music. Neuroimage. 2002 Oct;17(2):956–966.

- Koelsch S, Kasper E, Sammler D, Schulze K, Gunter T, Friederici AD. Music, language and meaning: brain signatures of semantic processing. Nature Neuroscience. 2004 Mar;7(3):302–307. doi: 10.1038/nn1197.

- Koelsch S. Neural substrates of processing syntax and semantics in music. Current Opinion in Neurobiology. 2005b Apr;15(2):207–212. doi: 10.1016/j.conb.2005.03.005.

- Koelsch S. Significance of Broca's area and ventral premotor cortex for music-syntactic processing. Cortex. 2006 May;42(4):518–520. doi: 10.1016/s0010-9452(08)70390-3.

- Kohlmetz C, Müller SV, Nager W, Münte TF, Altenmüller E. Selective loss of timbre perception for keyboard and percussion instruments following a right temporal lesion. Neurocase. 2003;9(1):86–93. doi: 10.1076/neur.9.1.86.14372.

- Kupfermann I. Hemispheric asymmetries and the cortical localization of higher cognitive and affective functions. In: Kandel E, Schwartz JH, editors. Principles of neural science. 2nd ed. New York: Elsevier; 1985. pp. 673–687.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach atlas labels for functional brain mapping. Human Brain Mapping. 2000 Jul;10(3):120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Lechevalier B, Rumbach L, Platel H, Lambert J. Pure amusia revealing an ischaemic lesion of right temporal planum. Participation of the right temporal lobe in perception of music. Bulletin de l'Académie Nationale de Médecine. 2006 Nov;190(8):1697–1709.

- Lesser RP, Lüders H, Klem G, Dinner DS, Morris HH, Hahn J. Cortical afterdischarge and functional response thresholds: results of extraoperative testing. Epilepsia. 1984 Oct;25(5):615–621. doi: 10.1111/j.1528-1157.1984.tb03471.x.

- Limb CJ. Structural and functional neural correlates of music perception. The Anatomical Record Part A: Discoveries in Molecular, Cellular, and Evolutionary Biology. 2006a Apr;288(4):435–446. doi: 10.1002/ar.a.20316.

- Limb CJ, Kemeny S, Ortigoza EB, Rouhani S, Braun AR. Left hemispheric lateralization of brain activity during passive rhythm perception in musicians. The Anatomical Record Part A: Discoveries in Molecular, Cellular, and Evolutionary Biology. 2006b Apr;288(4):382–389. doi: 10.1002/ar.a.20298.

- Lüders H, Lesser RP, Hahn J, Dinner DS, Morris H, Resor S, Harrison M. Basal temporal language area demonstrated by electrical stimulation. Neurology. 1986;36:505–510. doi: 10.1212/wnl.36.4.505.

- Ludlow CL, Cohen LG, Hallett M, Sedory SE. Bilateral intrinsic laryngeal muscle response to transcranial magnetic stimulation with left hemisphere dominance. Neurology. 1989;39 Suppl 1:376.

- Maess B, Koelsch S, Gunter TC, Friederici AD. Musical syntax is processed in Broca's area: an MEG study. Nature Neuroscience. 2001 May;4(5):540–545. doi: 10.1038/87502.

- Ohnishi T, Matsuda H, Asada T, Aruga M, Hirakata M, Nishikawa M, Katoh A, Imabayashi E. Functional anatomy of musical perception in musicians. Cerebral Cortex. 2001 Aug;11(8):754–760. doi: 10.1093/cercor/11.8.754.

- Ojemann GA, Fried I, Lettich E. Electrocorticographic (ECoG) correlates of language. I. Desynchronization in temporal language cortex during object naming. Electroencephalography and Clinical Neurophysiology. 1989 Nov;73(5):453–463. doi: 10.1016/0013-4694(89)90095-3.

- Ojemann GA. Functional mapping of cortical language areas in adults. Intraoperative approaches. Advances in Neurology. 1993;63:155–163.

- Ozdemir E, Norton A, Schlaug G. Shared and distinct neural correlates of singing and speaking. Neuroimage. 2006 Nov 1;33(2):628–635. doi: 10.1016/j.neuroimage.2006.07.013.

- Patel AD, Gibson E, Ratner J, Besson M, Holcomb PJ. Processing syntactic relations in language and music: an event-related potential study. Journal of Cognitive Neuroscience. 1998 Nov;10(6):717–733. doi: 10.1162/089892998563121.

- Patel AD. Language, music, syntax and the brain. Nature Neuroscience. 2003 Jul;6(7):674–681. doi: 10.1038/nn1082.

- Peretz I, Belleville S, Fontaine S. Dissociations between music and language functions after cerebral resection: A new case of amusia without aphasia. Canadian Journal of Experimental Psychology. 1997 Dec;51(4):354–368.

- Perry DW, Zatorre RJ, Petrides M, Alivisatos B, Meyer E, Evans AC. Localization of cerebral activity during simple singing. Neuroreport. 1999 Dec 16;10(18):3979–3984. doi: 10.1097/00001756-199912160-00046.

- Petrovich Brennan NM, Whalen S, de Morales Branco D, O'Shea JP, Norton IH, Golby AJ. Object naming is a more sensitive measure of speech localization than number counting: Converging evidence from direct cortical stimulation and fMRI. Neuroimage. 2007;37 Suppl 1:S100–S108. doi: 10.1016/j.neuroimage.2007.04.052.

- Platel H, Price C, Baron JC, Wise R, Lambert J, Frackowiak RS, Lechevalier B, Eustache F. The structural components of music perception. A functional anatomical study. Brain. 1997 Feb;120(Pt 2):229–243. doi: 10.1093/brain/120.2.229.

- Racette A, Bard C, Peretz I. Making non-fluent aphasics speak: sing along! Brain. 2006 Oct;129(Pt 10):2571–2584. doi: 10.1093/brain/awl250.

- Riecker A, Ackermann H, Wildgruber D, Dogil G, Grodd W. Opposite hemispheric lateralization effects during speaking and singing at motor cortex, insula and cerebellum. Neuroreport. 2000 Jun 26;11(9):1997–2000. doi: 10.1097/00001756-200006260-00038.

- Saito Y, Ishii K, Yagi K, Tatsumi IF, Mizusawa H. Cerebral networks for spontaneous and synchronized singing and speaking. Neuroreport. 2006 Dec 18;17(18):1893–1897. doi: 10.1097/WNR.0b013e328011519c. Erratum in: Neuroreport. 2007 Aug 27;18(13):1409.

- Schön D, Lorber B, Spacal M, Semenza C. Singing: a selective deficit in the retrieval of musical intervals. Annals of the New York Academy of Sciences. 2003 Nov;999:189–192. doi: 10.1196/annals.1284.027.

- Sergent J, Zuck E, Terriah S, MacDonald B. Distributed neural network underlying musical sight-reading and keyboard performance. Science. 1992 Jul 3;257(5066):106–109. doi: 10.1126/science.1621084.

- Suarez RO, Whalen S, O’Shea JP, Golby AJ. A Surgical Planning Method for Functional MRI Assessment of Language Dominance: Influences from Threshold, Region-of-Interest, and Stimulus Mode. Brain Imaging and Behavior. 2008 Jun;2(2):59–73.

- Takeda K, Bandou M, Nishimura Y. Motor amusia following a right temporal lobe hemorrhage: a case report. Rinsho Shinkeigaku. 1990 Jan;30(1):78–83.

- Terao Y, Mizuno T, Shindoh M, Sakurai Y, Ugawa Y, Kobayashi S, Nagai C, Furubayashi T, Arai N, Okabe S, Mochizuki H, Hanajima R, Tsuji S. Vocal amusia in a professional tango singer due to a right superior temporal cortex infarction. Neuropsychologia. 2006;44(3):479–488. doi: 10.1016/j.neuropsychologia.2005.05.013.

- Wada J, Rasmussen T. Intracarotid injection of sodium amytal for the lateralization of cerebral speech dominance. Journal of Neurosurgery. 1960;17:266–282. doi: 10.3171/jns.2007.106.6.1117.

- Warren JD, Warren JE, Fox NC, Warrington EK. Nothing to say, something to sing: primary progressive dynamic aphasia. Neurocase. 2003 Apr;9(2):140–155. doi: 10.1076/neur.9.2.140.15068.

- Wernicke K. Der aphasische Symptomencomplex. Eine psychologische Studie auf anatomischer Basis. Breslau M. Crohn und Weigert; 1874.

- Zatorre RJ, Evans AC, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. Journal of Neuroscience. 1994 Apr;14(4):1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.