Abstract

We propose the use of multiscale amplitude-modulation frequency-modulation (AM-FM) methods for discriminating between normal and pathological retinal images. The method presented in this paper is tested using standard images from the Early Treatment Diabetic Retinopathy Study (ETDRS). We use 120 regions of 40×40 pixels, manually selected by a trained analyst, that contain four types of lesions commonly associated with diabetic retinopathy (DR) and two types of normal retinal regions. The region types are microaneurysms, exudates, neovascularization on the retina, hemorrhages, normal retinal background, and normal vessel patterns. The cumulative distribution functions of the instantaneous amplitude, the instantaneous frequency magnitude, and the relative instantaneous frequency angle from multiple scales are used as texture feature vectors. We use distance metrics between the extracted feature vectors to measure interstructure similarity. Our results demonstrate a statistical differentiation of normal retinal structures and pathological lesions based on AM-FM features. We further demonstrate our AM-FM methodology by applying it to the classification of retinal images from the MESSIDOR database. Overall, the proposed methodology shows significant potential for use in automatic DR screening.

Index Terms: Diabetic Retinopathy, Multiscale AM-FM methods, automatic screening

I. INTRODUCTION

Most methods developed for the detection of diabetic retinopathy (DR) require a specific segmentation technique for each of the abnormalities found on the retina, such as microaneurysms and exudates [1]–[4]. Here we present a new texture-based modeling technique that avoids the difficulties of the explicit feature segmentation used by some current methods for detecting DR in retinal images. This approach uses amplitude-modulation frequency-modulation (AM-FM) methods to characterize retinal structures [5].

To apply feature segmentation, several researchers find it necessary to train the algorithm on reference images, which requires manually annotated individual lesions. Providing these annotations is tedious and time-consuming, and this has hampered the application of the algorithms to digital retinal images with varying image formats, e.g., compressed images or images of different sizes. Generalized texture modeling techniques that avoid manual segmentation would greatly enhance progress toward automated screening of retinal images.

Another common problem when applying image processing methods to retinal images is the need to correct uneven illumination. The first step in the analysis of retinal images has commonly been to process the images to remove lighting artifacts, which enhances the detection of lesions. Osareh et al. [6], [7] used color normalization and local contrast enhancement as an initial step for detecting exudates. Spencer et al. [8], Frame et al. [9], and Niemeijer et al. [10] removed the slow gradients in the background of the green channel of each image, resulting in a shade-corrected image.

Other approaches focus on preprocessing steps that detect and subsequently remove normal anatomical "background" structures in the image. Flemming et al. [11] applied a 3×3 pixel median filter to reduce this variation; they then convolved the retinal image with a Gaussian filter and normalized the image for the detection of exudates. Other methods segment and remove retinal vessels, which can mimic red lesions [12]–[14]. Our method is similar to the approach described by Niemeijer et al. [15], which does not require any preprocessing: the green channel of the image is used directly as the input to the feature extraction technique.

Much of the published literature on retinal lesion detection has focused either on red lesions, such as microaneurysms (MAs) and hemorrhages, or on bright lesions, such as exudates and cotton wool spots. Niemeijer et al. [11] in 2005, Larsen et al. [1] in 2003, and Sander et al. [4] in 2001 described methods for detecting red lesions. Streeter et al. [16] and Jelinek et al. [17] developed systems for detecting microaneurysms only. Niemeijer et al. [15] also proposed an automated method for the detection and differentiation of bright lesions, distinguishing exudates and cotton wool spots, which are characteristic of diabetic retinopathy, from lesions characteristic of age-related macular degeneration. Similarly, Sopharak et al. [18] and Osareh et al. [5] developed methods for the detection of exudates.

Feature extraction from retinal images is commonly the basis of automatic classification systems. The most popular feature extraction methods are morphology, for the detection of exudates [18], [19]; Gabor filters, for the detection and differentiation of bright lesions [15] and the classification of retinal images [20]; wavelet transforms, for the detection of microaneurysms by Quellec et al. [21], [22]; and matched filters, for vessel extraction [23], [24].

The main contributions of our research are the rigorous characterization of normal and pathological retinal structures based on their instantaneous amplitude and instantaneous frequency characteristics, and a high area under the ROC curve for the detection of DR in retinal images. This paper analyzes six types of retinal structures and discusses how AM-FM texture features can be used to differentiate among them.

The paper is organized as follows. Section II describes the methodology used to characterize the structures in the retina; the AM-FM methodology is explained using ETDRS images. The results are presented and explained in Section III, including the classification of 376 images from the MESSIDOR database. Finally, the discussion is presented in Section IV.

II. METHODS

A. Database

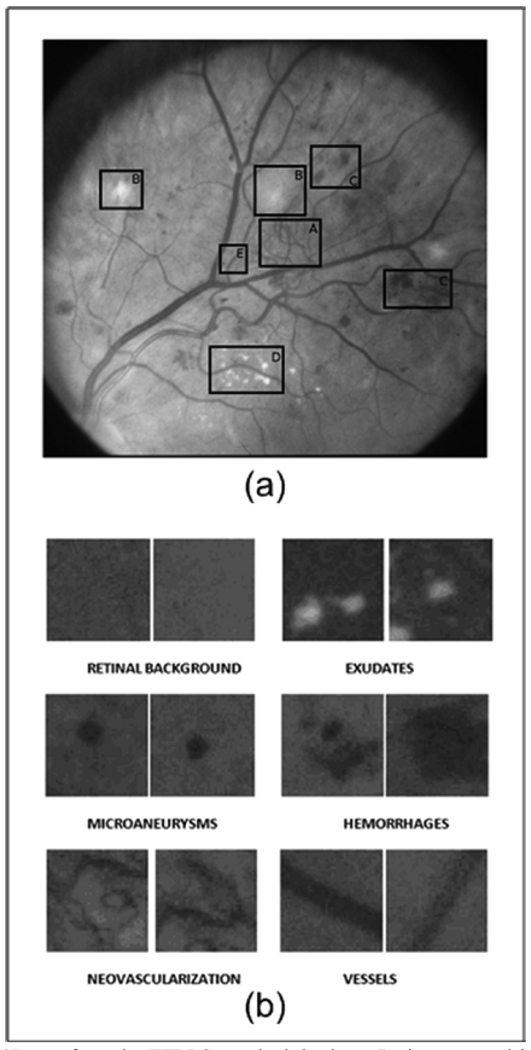

Images were selected from the online ETDRS database [25]. The working images are in uncompressed TIF format with a size of 1000 by 1060 pixels. The ETDRS standard photographs contain 15 stereo pair images that are used to train graders on diabetic retinopathy. From these images, 120 regions of 40×40 pixels containing retinal structures of interest were selected for this study. These regions were grouped into 6 categories of 20 regions each: microaneurysms, hemorrhages, exudates, neovascularization, retinal background, and vessels. Fig. 1a shows one of the standard ETDRS images used in this paper, with abnormal retinal structures delimited by boxes. Fig. 1b shows samples of these structures in regions of interest (ROIs) of 40×40 pixels.

Fig. 1.

(a) Image from the ETDRS standard database. Lesions encased in the boxes are examples of A) neovascularization, B) cotton wool spots, C) hemorrhages, D) exudates, and E) microaneurysms; (b) examples of retinal structures in ROIs of 40 × 40 pixels.

B. AM-FM Decompositions

An image can be approximated by a sum of AM-FM components given by

$$I(x, y) \approx \sum_{n=1}^{M} a_n(x, y) \cos \varphi_n(x, y) \qquad (1)$$

where $M$ is the number of AM-FM components, $a_n(x, y)$ denote the instantaneous amplitude (IA) functions, and $\varphi_n(x, y)$ denote the instantaneous phase functions [26]. We refer to [27] for further details on the use of AM-FM decomposition. Here, our focus will be on the extraction of AM-FM texture features.
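A minimal Python/NumPy sketch of Eq. (1) follows: it synthesizes an image as a sum of AM-FM components. The function name `am_fm_image` and the two example components (a Gaussian envelope with an 8-pixel period, and a constant-amplitude oblique pattern) are hypothetical illustrations, not components extracted from retinal images.

```python
import numpy as np


def am_fm_image(amplitudes, phases):
    """Evaluate Eq. (1): the sum over n of a_n(x, y) * cos(phi_n(x, y)).

    amplitudes, phases: arrays of shape (M, H, W), one slice per AM-FM component.
    """
    return np.sum(amplitudes * np.cos(phases), axis=0)


# Hypothetical two-component example on a 40x40 grid (the ROI size used in this paper).
y, x = np.mgrid[0:40, 0:40].astype(float)
a1 = np.exp(-((x - 20) ** 2 + (y - 20) ** 2) / (2 * 8.0 ** 2))  # smooth Gaussian envelope
phi1 = 2 * np.pi * x / 8.0                                       # 8-pixel period along x
a2 = np.full_like(x, 0.5)                                        # constant amplitude
phi2 = 2 * np.pi * (x + y) / 16.0                                # oblique (45-degree) pattern
image = am_fm_image(np.stack([a1, a2]), np.stack([phi1, phi2]))
print(image.shape)  # (40, 40)
```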

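First, we extract AM-FM components from each image scale, as outlined in Section II.C. For each AM-FM component, the instantaneous frequency (IF) is defined as the gradient of the phase $\varphi_n$:

$$\nabla \varphi_n(x, y) = \left( \frac{\partial \varphi_n}{\partial x}(x, y),\ \frac{\partial \varphi_n}{\partial y}(x, y) \right) \qquad (2)$$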

In terms of extracting textural features from each component, we are interested in using the instantaneous frequency (IF) and the instantaneous amplitude (IA). Conceptually, the IF measures local frequency content. When expressed in terms of cycles per mm, the IF magnitude is independent of any image rotations or retinal imaging hardware characteristics since it reflects an actual physical measurement of local image texture, extracted from each image scale. Furthermore, the IF magnitude is a measurement of the geometry of the texture, with a strong degree of independence from contrast and non-uniform illumination variations.
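Under Eq. (2), the IF is the phase gradient. The sketch below assumes an already unwrapped phase map and a pixel size of 0.01 mm (the scale implied by Table 1, where 22.6 pixels correspond to 0.226 mm); the paper itself estimates the IF with the robust demodulation of [5], [28], which this sketch does not reproduce. It converts radians per pixel to cycles per mm by dividing by 2π and by the pixel size. The function name is hypothetical.

```python
import numpy as np

PIXEL_SIZE_MM = 0.01  # assumption: 0.01 mm per pixel, as implied by Table 1


def instantaneous_frequency(phase, pixel_size_mm=PIXEL_SIZE_MM):
    """Return (|IF| in cycles/mm, IF angle in radians) from an *unwrapped* phase map."""
    # For a 2-D array, np.gradient returns d/d(axis 0) = d/dy first, then d/dx,
    # both in radians per pixel.
    dphi_dy, dphi_dx = np.gradient(phase)
    cycles_per_pixel = np.hypot(dphi_dx, dphi_dy) / (2 * np.pi)
    angle = np.arctan2(dphi_dy, dphi_dx)
    return cycles_per_pixel / pixel_size_mm, angle


y, x = np.mgrid[0:40, 0:40].astype(float)
phase = 2 * np.pi * x / 8.0  # a pattern with an 8-pixel period along x
mag, ang = instantaneous_frequency(phase)
print(round(float(mag.mean()), 3))  # 12.5 cycles/mm, i.e. 1 / (8 px * 0.01 mm/px)
```

Because the IF magnitude is expressed per millimeter, the same texture imaged at a different resolution would give the same value once the correct pixel size is supplied.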

We are also interested in working with an invariant IF angle feature. To this end, instead of using the actual IF angle, we use relative angles, estimated locally as deviations from the dominant neighborhood angle. Thus, directional structures, such as blood vessels, will produce a relative angle distribution concentrated around zero. We constrain the relative angle to the range −π/2 to π/2 because of a sign ambiguity: cos φ(x, y) represents the same image as cos[−φ(x, y)].
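One way to compute relative angles is sketched below. The dominant neighborhood angle is estimated here by averaging doubled-angle unit vectors over a 7×7 window; this estimator, the window size, and the helper names are assumptions, since the text does not specify how the dominant angle is obtained. Angles are then wrapped to [−π/2, π/2) to reflect the sign ambiguity described above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def wrap_half_pi(theta):
    """Map angles to [-pi/2, pi/2): theta and theta + pi describe the same orientation."""
    return (theta + np.pi / 2) % np.pi - np.pi / 2


def box_mean(img, window):
    """Mean over a window x window neighborhood (odd window), with reflected borders."""
    pad = window // 2
    padded = np.pad(img, pad, mode="reflect")
    return sliding_window_view(padded, (window, window)).mean(axis=(-2, -1))


def relative_angle(if_angle, window=7):
    """Deviation of the IF angle from a locally dominant angle (hypothetical estimator)."""
    # Average doubled-angle unit vectors so that theta and theta + pi reinforce
    # rather than cancel each other.
    c = box_mean(np.cos(2 * if_angle), window)
    s = box_mean(np.sin(2 * if_angle), window)
    dominant = 0.5 * np.arctan2(s, c)
    return wrap_half_pi(if_angle - dominant)
```

For a straight vessel whose IF angle is constant, or flips by π from row to row, the relative angle is zero everywhere, which is the concentration around zero described in the text.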

Local image intensity variations, including edges, are reflected in the IA. As we shall discuss next, large spatial scale variations will be reflected in the low-frequency scales.

C. Frequency Scales and Filterbanks

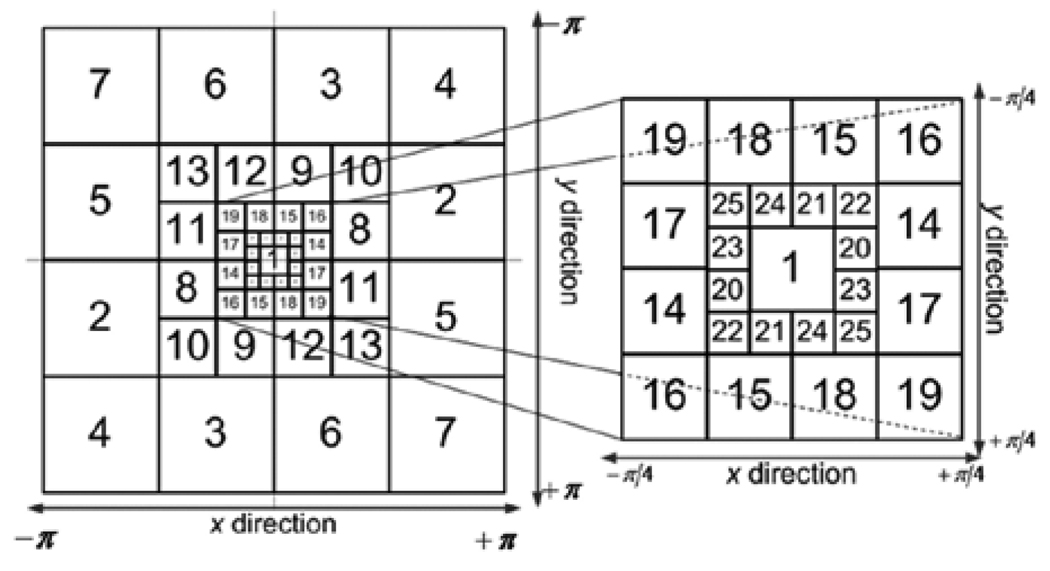

AM-FM components are extracted from different image scales. We use 25 bandpass channel filters associated with four frequency scales and nine combinations of scales (CoS) (see Fig. 2). We estimate a single AM-FM component over each combination of scales using Dominant Component Analysis (DCA) [5], [27].

Fig. 2.

Filterbank for multiscale AM-FM decomposition. The discrete spectrum is decomposed using 25 bandpass filters, grouped into frequency scales (see Table 1).

At lower frequency scales, the magnitude values of the |IF| are small and the extracted AM-FM features reflect slowly-varying image texture. For example, the most appropriate scale for blood vessels is the one that captures frequencies with a period that is proportional to their width. On the other hand, the fine details within individual lesions, such as the small vessels in neovascular structures, are captured by the higher-frequency scales. To analyze the image at different scales, we use a multi-scale channel decomposition outlined in Fig. 2.

The use of different scales also accounts for the size variability among structures such as MAs, exudates, and hemorrhages. A predominant characteristic of diabetic retinopathy is that lesion sizes vary. Dark lesions such as MAs, or bright lesions such as exudates, may be present in an image as structures with areas on the order of a few pixels. In the images analyzed for this study, the diameters of MAs and exudates are on the order of 8 pixels, corresponding to a size of about 0.04 mm, while the diameters of hemorrhages and cotton wool spots are on the order of 25 pixels, corresponding to about 0.12 mm. Multiple scales are used to capture these features of different sizes. The different filters within any given scale also account for the orientation of the feature being encoded.

Table 1 relates the number of pixels and the frequency ranges of each band-pass filter shown in Fig. 2. The combinations of scales were grouped in such a way that contiguous frequency bands were covered. In this way, structures that only appear in a specific frequency range or appear between two or three contiguous bands can also be described. For this reason, the nine combinations of scales (CoS), given in Table 2, were grouped to encode the features for different structures.

TABLE 1.

Band pass filters associated with multiple image scales

| Frequency Scale Band | Filters | Instantaneous Wavelength (period) Range in pixels | Range in mm |
|---|---|---|---|
| Low Pass Filter (LPF) | 1 | 22.6 to ∞ | 0.226 to ∞ |
| Very Low Frequencies (VL) | 20–25 | 11.3 to 32 | 0.113 to 0.32 |
| Low Frequencies (L) | 14–19 | 5.7 to 16 | 0.057 to 0.16 |
| Medium Frequencies (M) | 8–13 | 2.8 to 8 | 0.028 to 0.08 |
| High Frequencies (H) | 2–7 | 1.4 to 4 | 0.014 to 0.04 |

TABLE 2.

Combinations of scales

| Combination Number | Filters | Frequency Bands | Wavelength Range in mm |
|---|---|---|---|
| 1 | 8:25 | M + L + VL | 0.028 to 0.32 |
| 2 | 1 | LPF | 0.226 to ∞ |
| 3 | 20:25 | VL | 0.113 to 0.32 |
| 4 | 14:19 | L | 0.057 to 0.16 |
| 5 | 8:13 | M | 0.028 to 0.08 |
| 6 | 14:25 | L + VL | 0.057 to 0.32 |
| 7 | 8:19 | M + L | 0.028 to 0.16 |
| 8 | 2:7 | H | 0.014 to 0.04 |
| 9 | 2:13 | H + M | 0.014 to 0.08 |
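The relation between Tables 1 and 2 can be checked with a small lookup. The sketch below encodes the filter indices and wavelength ranges of Table 1 and derives the bands and the wavelength range (in mm, assuming the 0.01 mm/pixel scale implied by Table 1) for a combination written as `first:last`; the helper names are hypothetical.

```python
import math

PIXEL_SIZE_MM = 0.01  # implied by Table 1: 22.6 pixels <-> 0.226 mm

# Filter indices and wavelength (period) range in pixels of each band, from Table 1.
BANDS = {
    "LPF": (range(1, 2), 22.6, math.inf),
    "VL": (range(20, 26), 11.3, 32.0),
    "L": (range(14, 20), 5.7, 16.0),
    "M": (range(8, 14), 2.8, 8.0),
    "H": (range(2, 8), 1.4, 4.0),
}


def cos_bands(first, last):
    """Bands touched by the filters first..last (Table 2 notation 'first:last')."""
    filters = set(range(first, last + 1))
    return [name for name, (idx, _, _) in BANDS.items() if filters & set(idx)]


def cos_wavelength_range_mm(first, last):
    """Wavelength range in mm covered by a combination of scales."""
    names = cos_bands(first, last)
    lo = min(BANDS[n][1] for n in names)
    hi = max(BANDS[n][2] for n in names)
    return lo * PIXEL_SIZE_MM, hi * PIXEL_SIZE_MM


print(cos_bands(8, 25))                # ['VL', 'L', 'M'], i.e. CoS 1
print(cos_wavelength_range_mm(2, 13))  # roughly (0.014, 0.08), as listed for CoS 9
```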

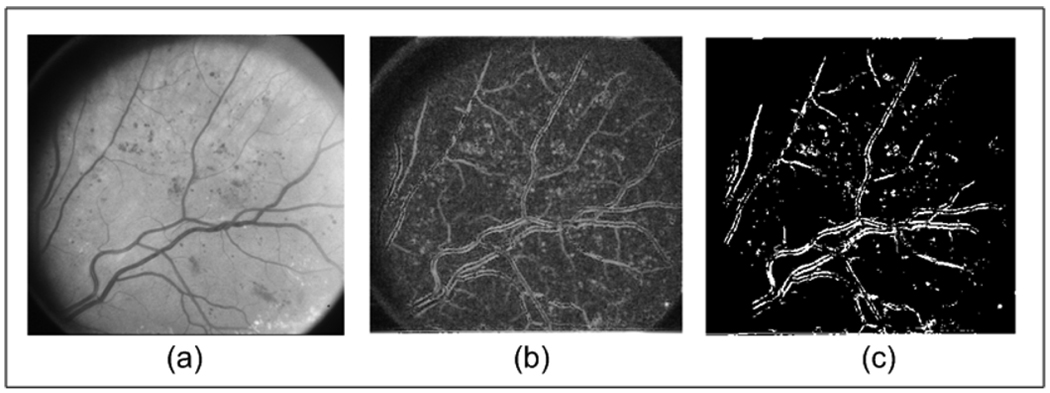

Twenty-seven AM-FM histogram estimates were computed, corresponding to the three AM-FM features (IA, |IF|, and relative angle) for each of the nine combinations of scales. The bandpass filters were implemented using an equiripple dyadic FIR filter design, with passband and stopband ripples of 0.001 dB and 0.0005 dB, respectively [5]. Robust AM-FM demodulation was applied over each bandpass filter [5], [28]; this demodulation algorithm has been shown to yield significantly improved AM-FM estimates through the use of the equiripple filterbank and a variable-spacing linear-phase approximation [5], [28]. Then, at each pixel and for each combination of scales, we use Dominant Component Analysis (DCA) to select the AM-FM features from the bandpass filter that gave the maximum IA estimate. Figs. 3–5 show examples of the AM-FM estimates obtained for three of the images in the ETDRS dataset.
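Per-pixel DCA, as described above, can be sketched as follows, assuming the IA, |IF|, and IF angle estimates of the K filters in one CoS are already available as stacked arrays (the demodulation step itself is not shown). The function name is hypothetical.

```python
import numpy as np


def dominant_component(ia, if_mag, if_angle):
    """Per-pixel Dominant Component Analysis over the K filters of one CoS.

    ia, if_mag, if_angle: arrays of shape (K, H, W) with the AM-FM estimates of
    each band-pass filter in the combination of scales.
    Returns the (H, W) maps taken, at every pixel, from the filter with the largest IA.
    """
    best = np.argmax(ia, axis=0)[None]  # shape (1, H, W): index of the dominant filter

    def pick(estimate):
        return np.take_along_axis(estimate, best, axis=0)[0]

    return pick(ia), pick(if_mag), pick(if_angle)
```

All three features at a pixel come from the same filter, so the selected |IF| and angle always belong to the component with the largest amplitude.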

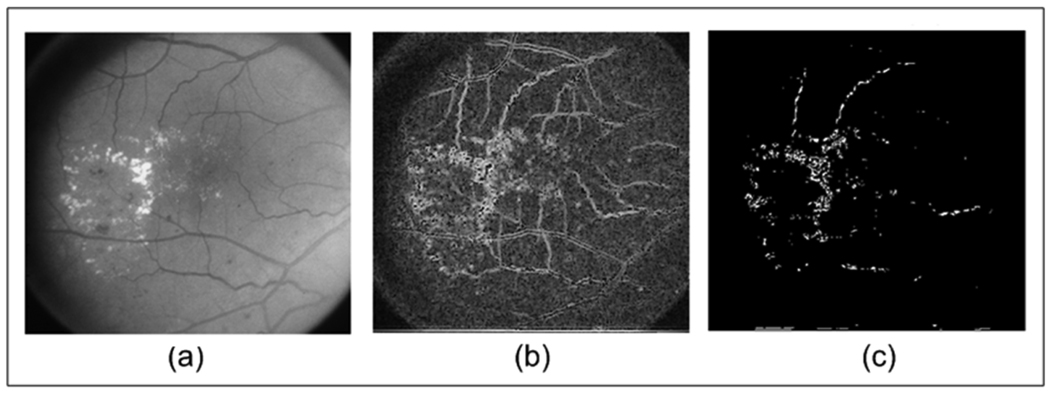

Fig. 3.

(a) Original Image from ETDRS; (b) Instantaneous Amplitude using medium, low and very low frequencies; and (c) Thresholded Image of (b).

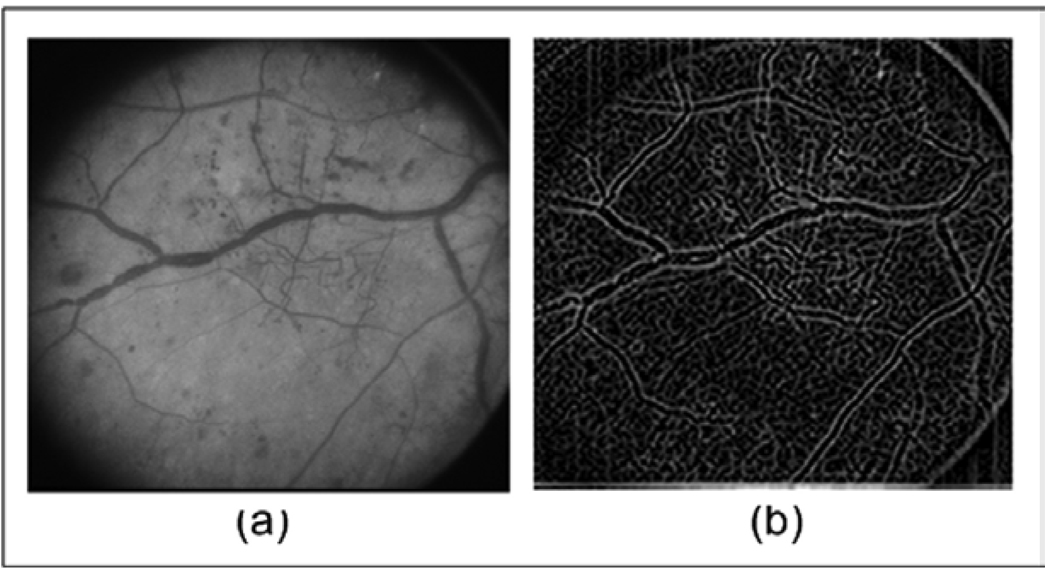

Fig. 5.

(a) Original Image from ETDRS; (b) Instantaneous Frequency Magnitude using low pass filter.

D. Encoding of structures using AM-FM

To characterize the retinal structures, the cumulative distribution functions (CDFs) of the IA, |IF|, and relative angle are used. Since the range of values of each estimate varies according to the CoS used, the histograms (or pdfs) are computed from the global minimum value to the global maximum value. For example, for the IA using CoS 4 (low frequencies), the histograms were computed over the range [0, 72] gray levels, because this is the range of IA values supported by the low frequencies.

A region with small pixel intensity variation will also be characterized by low IA values in the higher frequency scales. This is due to the fact that low intensity variation regions will also contain weak frequency components. Furthermore, darker regions will also be characterized by low IA values in the lower frequency scales. This is due to the linearity of the AM-FM decomposition. Low amplitude image regions will mostly need low-amplitude AM-FM components. For example, retinal background (see Fig. 1b) analyzed in the whole frequency spectrum will have roughly constant, low IA values. In general, for any given scale, low IA values will reflect the fact that frequency components from that particular scale are not contained in the image. Thus, since there are no high IA values to account for, the CDF of this kind of structure is expected to rise rapidly for low IA values. On the other hand, if a region contains structures with significant edges and intensity variations such as vessels, microaneurysms, neovascularization, or exudates, we expect that the rate of rise (pdf) of their CDFs will be slower due to the presence of both low and high IA components.
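The CDF encoding described above can be sketched as below: histograms for all regions share a range from the global minimum to the global maximum of the estimate, and each CDF is the normalized cumulative sum of the histogram. The bin count of 32, the function name, and the toy IA maps are assumptions for illustration. In the example, the flat background reaches a CDF of 1 in the first bin, while the region with a strong edge rises more slowly, matching the behavior discussed for retinal background versus structures with significant edges.

```python
import numpy as np


def cdf_features(regions, n_bins=32):
    """CDF feature vectors for a list of per-region estimate maps (one estimate, one CoS).

    All histograms share one range, from the global minimum to the global maximum
    of the estimate over all regions, so the resulting CDFs are directly comparable.
    n_bins=32 is an assumed value; it is not specified in the text.
    """
    lo = min(float(r.min()) for r in regions)
    hi = max(float(r.max()) for r in regions)
    edges = np.linspace(lo, hi, n_bins + 1)  # requires hi > lo
    features = []
    for r in regions:
        counts, _ = np.histogram(r, bins=edges)
        features.append(np.cumsum(counts) / counts.sum())  # CDF at each bin's right edge
    return np.array(features)


# Hypothetical IA maps: a flat, low-IA background and a region with a strong edge.
_, x = np.mgrid[0:40, 0:40]
background = np.full((40, 40), 1.0)
edge_region = np.where(x < 20, 1.0, 60.0)
cdfs = cdf_features([background, edge_region])
print(cdfs[:, 0])  # background CDF is already 1 in the first bin; edge region only 0.5
```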

E. Defining Retinal Characteristics of AM-FM Feature Vectors

In this section, we describe how the AM-FM estimates encode structures and how this encoding can be related to the creation of relevant feature vectors for the detection of the analyzed lesions.

The instantaneous frequency magnitude (|IF|) is insensitive to the direction of image intensity variations. Furthermore, the IF magnitude is a function of the local geometry, as opposed to the slowly varying brightness variations captured in the IA. Thus, a single dark round structure on a lighter background will have a |IF| distribution similar to that of a single bright round structure of the same size on a darker background. This is roughly the case for exudates (bright lesions) and microaneurysms (dark lesions) when they have similar areas.

|IF| estimates can be used for differentiating between two regions where one has a single vessel (as in a normal retinal vessel) and a second region that has multiple narrow vessels (as in neovascularization). Even though both regions may have information in the same frequency ranges, the counts on the histogram of the latter region will be greater. The larger histogram counts reflect the fact that a larger number of pixels exhibit these frequency components. The histogram for a region with neovascularization will have higher kurtosis (a more pronounced peak) than a region containing just one vessel.

We also analyze image regions in terms of the relative IF angle. First, we note that image structures without any dominant orientation will have a relatively flat histogram (regardless of what is chosen as the dominant orientation). This kind of feature should be observed on structures such as microaneurysms and exudates. Conversely, an area with a single vessel in a region has a unique angle of inclination. In this case, the (non-relative) IF angle estimate is expected to be highly peaked at the inclination angle, assigning much smaller count values to angles that are further away from the angle of inclination. Then, as discussed earlier, the relative angle histograms will have their peak at zero. One last case includes structures within a region which have several elements with different orientations, such as neovascularization. The histograms for these regions would include several well-defined peaks at different angles. Thus, this feature can be differentiated from the other two well-defined distributions described above.
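To make the relative-angle argument concrete, the sketch below computes a normalized angle histogram and a simple peakedness measure (the fraction of pixels within ±π/8 of zero). Both the measure and the synthetic angle samples are assumptions for illustration, not features used in the paper. A vessel-like sample yields a fraction close to 1, while an isotropic sample yields roughly 0.25.

```python
import numpy as np


def angle_histogram(rel_angle, n_bins=16):
    """Normalized histogram of relative IF angles over [-pi/2, pi/2]."""
    counts, _ = np.histogram(rel_angle, bins=n_bins, range=(-np.pi / 2, np.pi / 2))
    return counts / counts.sum()


def peak_fraction(rel_angle, half_width=np.pi / 8):
    """Fraction of pixels whose relative angle lies within +/- half_width of zero."""
    return float(np.mean(np.abs(rel_angle) <= half_width))


# Synthetic relative angles (not retinal data): one dominant orientation vs. none.
rng = np.random.default_rng(0)
vessel_like = np.clip(rng.normal(0.0, 0.1, 1600), -np.pi / 2, np.pi / 2)
isotropic = rng.uniform(-np.pi / 2, np.pi / 2, 1600)
print(peak_fraction(vessel_like), peak_fraction(isotropic))
print(angle_histogram(vessel_like).max(), angle_histogram(isotropic).max())
```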

F. Classification

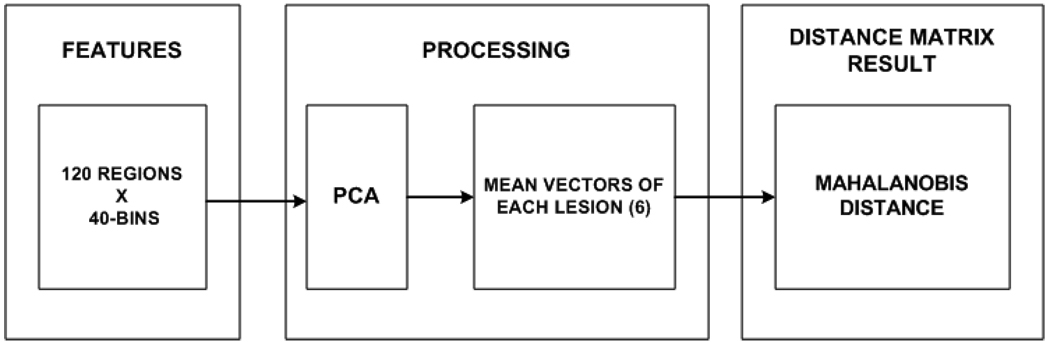

To demonstrate that the methodology presented in this work can distinguish between retinal structures, two types of classification were performed. The first focuses on the classification of small regions containing structures, and the second on the broader problem of classifying retinal images as DR or non-DR. For the first classification test, a well-known statistical metric, the Mahalanobis distance, is computed. Fig. 6 shows the procedure used to calculate the distance between lesions for each of the 9 CoS. First, the cumulative distribution function (CDF) is extracted for each of the 3 estimates in the 120 regions. Then, the dimensionality of the feature vectors is reduced using Principal Component Analysis (PCA), with the principal component projections chosen to account for 95% of the variance. This procedure is applied to each of the 9 combinations of scales, so that the combinations and estimates that produce the greatest distance between lesions can be found. To normalize the calculation of the distance between lesions, the reduced matrix is scaled to have standard deviation 1, and the distances of the lesions with respect to a specific lesion are calculated between mean vectors.
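The distance computation can be sketched as follows, assuming PCA via SVD, scaling of every retained component to unit standard deviation, and Euclidean distances between class means in the scaled space. Because PCA scores are uncorrelated, this Euclidean distance equals the Mahalanobis distance with respect to the covariance of all retained scores; whether the paper used this total covariance or a pooled within-class covariance is not stated. The synthetic data and the function name are hypothetical.

```python
import numpy as np


def class_mean_distances(features, labels, var_kept=0.95):
    """Distances between class mean vectors after PCA and unit-variance scaling.

    features: (n_regions, n_features) CDF feature vectors for one estimate and one CoS.
    labels:   (n_regions,) structure label of each region (e.g. "MA", "HE", ...).
    """
    labels = np.asarray(labels)
    X = features - features.mean(axis=0)
    # PCA via SVD: keep the fewest components explaining at least var_kept of the variance.
    _, s, vt = np.linalg.svd(X, full_matrices=False)
    explained = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(explained, var_kept)) + 1
    Z = X @ vt[:k].T
    Z = Z / Z.std(axis=0, ddof=1)  # every retained component now has standard deviation 1
    classes = np.unique(labels)
    means = np.array([Z[labels == c].mean(axis=0) for c in classes])
    # PCA scores are uncorrelated, so after scaling their covariance is the identity and the
    # Euclidean distance between means equals the Mahalanobis distance under that covariance.
    diff = means[:, None, :] - means[None, :, :]
    return classes, np.sqrt((diff ** 2).sum(axis=-1))


# Synthetic example: two hypothetical classes of 20 regions with 10 features each.
rng = np.random.default_rng(1)
feats = np.vstack([rng.normal(0, 1, (20, 10)), rng.normal(3, 1, (20, 10))])
names, D = class_mean_distances(feats, ["MA"] * 20 + ["HE"] * 20)
print(names, np.round(D, 2))
```

Running this once per estimate and per CoS would give the 27 distance sets mentioned in Fig. 6.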

Fig. 6.

Procedure to find the Mahalanobis distance between lesions for each estimate and each CoS. First, the features of the regions are extracted per estimate (IA, |IF|, and relative angle). Then, a dimensionality reduction method (PCA) is applied to each feature estimate of the regions. After that, the mean of the 20 regions corresponding to each lesion type is found. Using the 6 means, the Mahalanobis distance is found for each estimate. This process is repeated for each CoS, giving 27 different sets of distances between lesions.

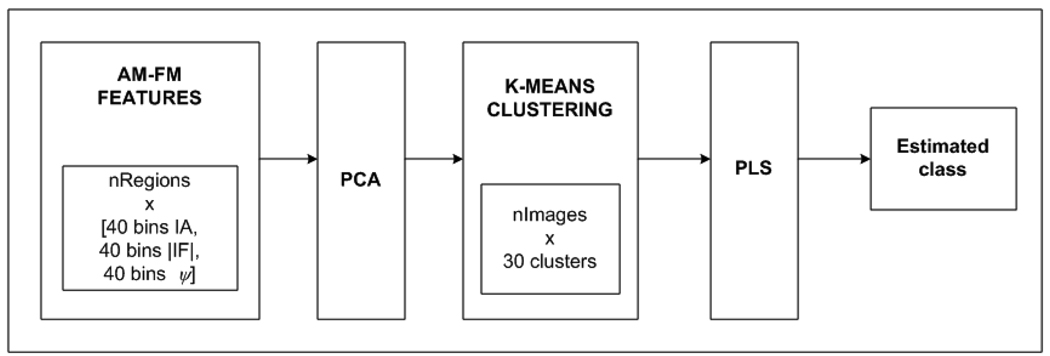

In our second experiment, using a cross-validation approach, we classified retinas from healthy subjects and from subjects with different levels of diabetic retinopathy (DR). To test the significance of AM-FM processing as a methodology for feature extraction, 376 images from the MESSIDOR database [29] were selected. These images were graded by ophthalmologists into four levels: Risk 0 corresponds to non-DR images, Risk 1 to mild DR, Risk 2 to moderate DR, and Risk 3 to an advanced stage of DR. Table 3 shows the distribution of the dataset used for the experiment. Each retinal image was divided into regions of 100×100 pixels, and the optic disc was excluded from the analysis. A total of 100 regions were obtained for each retinal image. The procedure to extract the features and reduce their dimensionality is the same as in our first experiment. After the features are extracted, we used k-means clustering (an unsupervised classification method) to group the regions into 30 clusters, so that a feature vector can be obtained for each image. This vector contains the number of regions of the image that fall in each of the 30 clusters. The centroid of each cluster is stored for testing. Finally, a linear regression method, Partial Least Squares (PLS), was applied to derive the classes, and the loading matrix and the coefficients were stored for the testing stage.
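The image-level feature vector described above (the count of an image's regions in each cluster) can be sketched as below. A plain Lloyd's k-means is written out to keep the example self-contained; it is a stand-in, not necessarily the implementation used. The toy data use k = 2 instead of 30, and the function names are hypothetical. The PLS step is not shown; the count vectors could be passed, for example, to scikit-learn's `PLSRegression`, but the number of PLS components used here is not stated.

```python
import numpy as np


def kmeans(X, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; a simple stand-in for whichever implementation was used."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]  # fancy indexing copies
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        assign = dist.argmin(axis=1)
        for j in range(k):
            if np.any(assign == j):  # keep the previous centroid if a cluster empties
                centroids[j] = X[assign == j].mean(axis=0)
    return centroids


def image_cluster_counts(regions_per_image, centroids):
    """For each image, count how many of its regions fall in each (stored) cluster."""
    counts = []
    for feats in regions_per_image:
        feats = np.asarray(feats, dtype=float)
        dist = ((feats[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        counts.append(np.bincount(dist.argmin(axis=1), minlength=len(centroids)))
    return np.array(counts)


# Toy usage with 2-D "region features" and k = 2 (the paper uses 30 clusters).
images = [np.array([[0, 0], [0, 1], [10, 10]]), np.array([[10, 11]])]
centroids = kmeans(np.vstack(images), k=2)
print(image_cluster_counts(images, centroids))
```

At test time, only the stored centroids are needed to turn a new image's regions into its count vector.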

TABLE 3.

Database information

| DR Risk | Number of Images | Number of MAs | Number of Hemorrhages | Neovascularization |
|---|---|---|---|---|
| Risk 0 | 140 | 0 | 0 | 0 |
| Risk 1 | 28 | [1, 5] | 0 | 0 |
| Risk 2 | 68 | (5, 15) | [0, 5] | 0 |
| Risk 3 | 140 | [15, ∞) | [5, ∞) | 1 |

An image is assigned to Risk 2 or Risk 3 if any of the conditions listed for that level on the number of microaneurysms, hemorrhages, or neovascularization holds. Some of the Risk 2 retinal images present exudates, and some of the Risk 3 retinal images present exudates and neovascularization. Macular edema was graded separately as none, >1 disc diameter (DD) from the fovea, or <1 DD from the fovea (clinically significant macular edema, CSME).

III. RESULTS

This section presents the results of an exhaustive analysis using multiscale AM-FM to characterize retinal structures and classify different types of lesions. As discussed in the previous section, the various retinal structures are encoded differently by the AM-FM features. Furthermore, we use frequency scales and filterbanks to focus on structures of various sizes and to suppress noise introduced by other, less meaningful structures present in the image.

Using the methodology described above, the Mahalanobis distances between features are found for each of the 3 estimates (IA, |IF|, and relative angle) and the 9 combinations of scales (Table 2). Table 4 and Table 5 show, for each lesion pair, the maximum distance and the corresponding estimate and combination. For example, the maximum distance between hemorrhages and microaneurysms is 4.71 standard deviations and is given by the instantaneous amplitude with combination of scales #2 (IA-2 in Table 5). The maximum distances range from 2.65 to 8.18 standard deviations, meaning that if this distance alone were used to classify the regions, the accuracies would be between 92% and >99.99%.
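The text does not state how distances were converted into accuracies. A common reading, assumed here, treats the two classes as equal-prior Gaussians with unit variance along the discriminating direction and places the threshold midway between the means, giving an accuracy of Φ(d/2), where Φ is the standard normal CDF. Under this assumption, d = 2.65 gives roughly 91%, slightly below the 92% quoted, and d = 8.18 gives more than 99.99%, so the paper's exact model may differ. The function name is hypothetical.

```python
from math import erf, sqrt


def accuracy_from_distance(d):
    """Accuracy of a midpoint threshold between two equal-prior, unit-variance Gaussian
    classes whose means are d standard deviations apart: Phi(d / 2)."""
    z = d / 2.0
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))  # standard normal CDF via erf


for d in (2.65, 4.71, 8.18):
    print(f"d = {d:.2f} -> accuracy ~ {accuracy_from_distance(d):.4%}")
```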

TABLE 4.

Maximum distance matrix between lesions

| Retinal Structures | RB | EX | MA | HE | NV |
|---|---|---|---|---|---|
| EX | 6.87 | 0 | - | - | - |
| MA | 3.48 | 3.97 | 0 | - | - |
| HE | 4.72 | 4.89 | 4.71 | 0 | - |
| NV | 8.18 | 4.59 | 2.92 | 2.65 | 0 |
| VE | 6.14 | 3.31 | 2.83 | 3.51 | 3.35 |

RB: Retinal Background, EX: Exudate, MA: Microaneurysm, HE: Hemorrhage, NV: Neovascularization, VE: Vessel

TABLE 5.

Combination of scales (see Table 2) for the maximum distances between lesions

| Retinal Structures | RB | EX | MA | HE | NV |
|---|---|---|---|---|---|
| EX | \|IF\| - 7 | - | - | - | - |
| MA | \|IF\| - 6 | IA - 9 | - | - | - |
| HE | \|IF\| - 2 | IA - 1 | IA - 2 | - | - |
| NV | IA - 1 | IA - 1 | IA - 5 | IA - 1 | - |
| VE | \|IF\| - 2 | θ - 1 | θ - 1 | θ - 1 | \|IF\| - 1 |

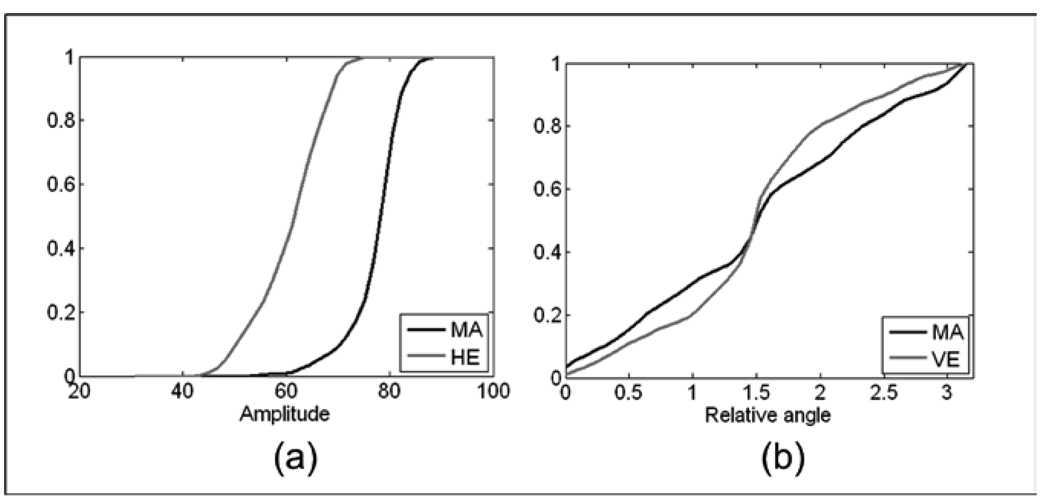

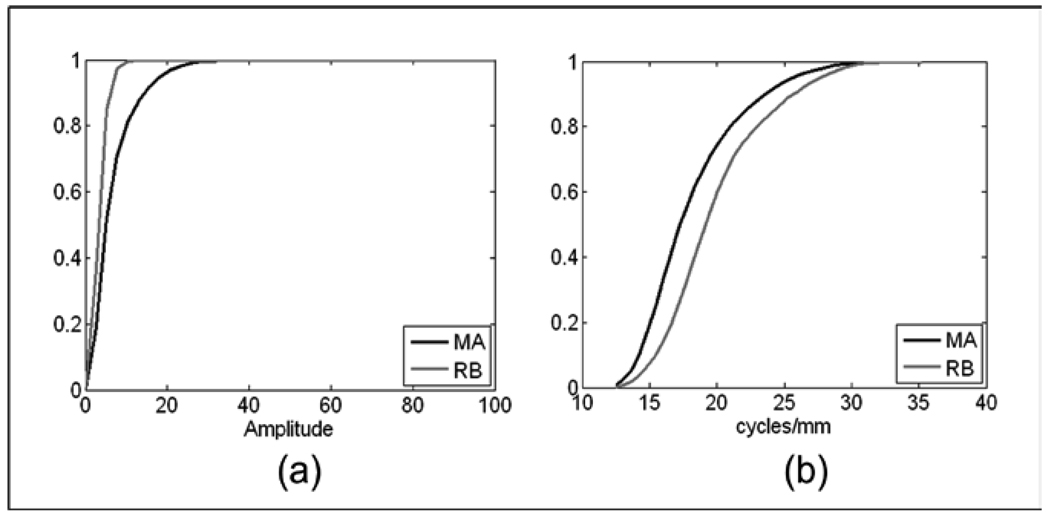

Most structures have their largest Mahalanobis distance from the retinal background when using the |IF| features. Regions composed only of retinal background will have, in most scales, a histogram that reflects their high-frequency, random pixel brightness structure. Because vessels and neovascularization (NV) appear to have a predominant orientation in the IF, the relative IF angle can be used to differentiate vessels from microaneurysms (Fig. 9b), exudates, and hemorrhages. In the case of NV vs. vessels, the |IF| features are the most appropriate. This happens because the IA content may reflect similar information, while the NV frequency components vary significantly from the components associated with a normal vessel. Regions with NV could also be differentiated using the angle estimate, as in the classification between NV and hemorrhages (Fig. 12b).

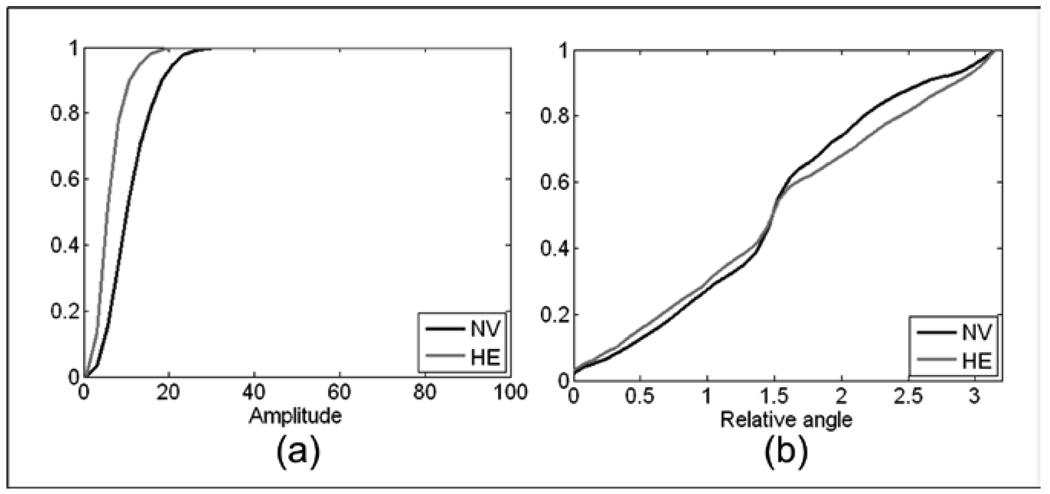

Fig. 9.

(a) Comparison of the mean of the IA CDFs between microaneurysm (MA) and hemorrhage (HE) for the low-pass filter; (b) comparison of the mean of the angle CDFs between microaneurysm (MA) and vessels (VE) for medium, low, and very low frequencies.

Fig. 12.

Comparison of the mean of the CDFs between Neovascularization (NV) and Hemorrhage (HE). (a) CDFs of the IA for medium, low and very low frequencies, (b) CDFs of the θ for low frequencies.

For differentiating NV from retinal background and from microaneurysms, the IA works best. From the results shown in Table 4, the lowest Mahalanobis distance is between NV and HE, at 2.65 standard deviations, whereas the distance between NV and retinal background is 8.18. From a clinical perspective, it is critically important to be able to isolate regions of the retina presenting high-risk lesions, such as NV. Our results suggest that NV can be differentiated from the retinal background with greater than 99.99% accuracy, and in the worst case (NV vs. HE) with 92% accuracy.

Clinically significant macular edema (CSME) appears in an advanced stage of DR and is characterized by exudates that appear near the fovea. Table 4 shows that exudates are easily differentiated from regions of retinal background (d = 6.87) using the |IF| with CoS 7. None of the other structures studied gave distances from exudates smaller than d = 3.31. The IA provides the greatest distances between exudates and the other structures, except for retinal background (|IF|) and vessels (relative angle). The maximum distance between exudates and microaneurysms is obtained using the IA extracted from medium and high frequencies (CoS 9). This occurs because microaneurysms are smaller than exudates and are thus characterized by higher frequencies.

For vessels, the relative angle and the |IF| provide relevant features for discriminating vessels from the rest of the structures. The low, medium, and high frequencies are all involved in this characterization, and the relevant range depends on the size of the structure to which the vessels are compared. In many MA segmentation studies, algorithms are often confounded by normal vessel segments and hemorrhages. It is therefore not surprising that, although MAs are easily differentiated from retinal background (d = 3.48), they are found to be similar, as measured by the Mahalanobis distance, to neovascularization and normal retinal vessels (d = 2.92 and d = 2.83, respectively).

Using the results shown in Table 4 and Table 5, we can determine which CoS and which estimate provided the most relevant features for a particular comparison. These results are important for our AM-FM algorithm since they pinpoint the features and CoS needed to separate the retinal structures; knowing the relevant CoS reduces the number of features to be extracted. In addition to the Mahalanobis results, which show meaningful distances between the CDFs of structures for all the CoS, the Kolmogorov-Smirnov (K-S) test showed that almost all CoS and estimates provide useful information for the characterization of structures. Table 6 shows that every CoS (1 to 9) is identified as relevant for at least one estimate and pair of structures. It can also be observed that, for the pairs of structures that include vessels, except vessels vs. neovascularization, the relative angle estimate helps discriminate between the structures.
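The K-S test referred to above is based on the two-sample statistic, the largest vertical gap between two empirical CDFs. The sketch below computes it with NumPy; which samples were compared and the significance level used to mark a CoS as relevant are not stated in the text, and the function name is hypothetical. A p-value can be obtained, for example, with `scipy.stats.ks_2samp`, which reports the same statistic.

```python
import numpy as np


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic D = max_t |F_a(t) - F_b(t)|."""
    a = np.sort(np.ravel(a))
    b = np.sort(np.ravel(b))
    grid = np.concatenate([a, b])  # the supremum is attained at one of the sample points
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))
```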

TABLE 6.

Relevant scales of the AM-FM estimates using K-S test

| Pair of Structures | Instantaneous Amplitude Scales | Instantaneous Frequency Magnitude Scales | Relative Angle Scales |
|---|---|---|---|
| RB, EX | 1,3,4,5,6,7 | 1,3,4,5,6,7 | 5 |
| RB, MA | 4,6,7 | 4,6,7 | |
| RB, HE | 2,3,4,6,7 | 3,4,6,7 | 2 |
| RB, NV | 1,3,4,5,6,7 | 1,4,5,6,7 | |
| RB, VE | 1,4,6,7 | 1,4,6,7 | 1,7 |
| EX, MA | 1,4,5,6,7 | 5,9 | 5 |
| EX, HE | 1,2,3,4,5,6,7 | 4,5,6 | 2 |
| EX, NV | 1,4,6,7 | 4,6 | |
| EX, VE | 1,2,4,5,6,7,8,9 | 2,7 | 2 |
| MA, HE | 1,2,4,6,7 | 2 | 2 |
| MA, NV | 1,4,5,6,7 | 1,7,9 | |
| MA, VE | 1,2,4,6,7 | 1,2,4,6 | 1,7 |
| HE, NV | 1,3,4,5,6,7,9 | 1,3,4,5,7,9 | 3 |
| HE, VE | 1,4,6,7 | 4,6 | |
| NV, VE | 1,3,4,5,6,7,8,9 | 1,2,4,6,7 | |

RB: Retinal Background, EX: Exudate, MA: Microaneurysm, HE: Hemorrhage, NV: Neovascularization, VE: Vessel

Finally, Table 8 presents the percentiles of the distributions of the most relevant estimates. For the IA with CoS 2, hemorrhages present values that differ from those of the other structures. This CoS is adequate for large structures (> 0.226 mm), since it supports the lowest frequencies. Using the medium frequencies (CoS 5), exudates and neovascularization are clearly distinguished from the retinal background, while microaneurysms present values similar to those of the retinal background. This occurs because the medium frequencies capture structures comparable in size to exudates and to the width of neovascular vessels, whereas the smallest structures, such as microaneurysms, are captured by the high frequencies.

TABLE 8.

Percentiles of the distributions for each structure

| Estimate | HE 25th | HE 50th | HE 75th | NV 25th | NV 50th | NV 75th | VE 25th | VE 50th | VE 75th |
|---|---|---|---|---|---|---|---|---|---|
| IA CoS 2 | 99.64 | 110.81 | 120.92 | 126.98 | 134.59 | 141.91 | 123.53 | 142.29 | 153.17 |
| IA CoS 4 | 3.33 | 4.65 | 6.27 | 5.89 | 8.13 | 10.80 | 3.66 | 6.48 | 12.83 |
| IA CoS 5 | 2.32 | 2.96 | 3.72 | 3.03 | 4.05 | 5.26 | 2.37 | 3.23 | 4.37 |
| \|IF\| CoS 2 (cycles/mm) | 0.07 | 1.04 | 1.71 | 0.04 | 1.07 | 1.81 | 0.15 | 1.42 | 2.23 |

HE: Hemorrhages, NV: Neovascularization, VE: Vessels; 25th, 50th, 75th: percentiles of each distribution
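Percentiles such as those in Table 8 can be computed as below. Pooling all pixel values of an estimate over the regions of one structure type is an assumption; the text does not state whether pixels or per-region summaries were used. The function name is hypothetical.

```python
import numpy as np


def quartiles(region_maps):
    """25th, 50th and 75th percentiles of an estimate pooled over a structure's regions."""
    pooled = np.concatenate([np.ravel(m) for m in region_maps])
    return np.percentile(pooled, [25, 50, 75])


print(quartiles([np.arange(1, 6), np.arange(6, 10)]))  # pooled values 1..9
```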

The following figures compare the mean CDFs of some of the pairs of structures listed in Table 4 and Table 5. The selected pairs include structures that most classification algorithms have difficulty distinguishing. In Fig. 11 and Fig. 12, neovascularization samples are compared with three other types of structures. This multiple comparison is presented because of the importance of neovascularization, which indicates an advanced stage of DR. A common problem in the detection of neovascularization is that this type of lesion may be visually similar to hemorrhages and vessels, and therefore have similar analytical features, which has led to inaccurate results with other image processing methods. In Fig. 12, neovascularization is compared with hemorrhages using two different AM-FM estimates.

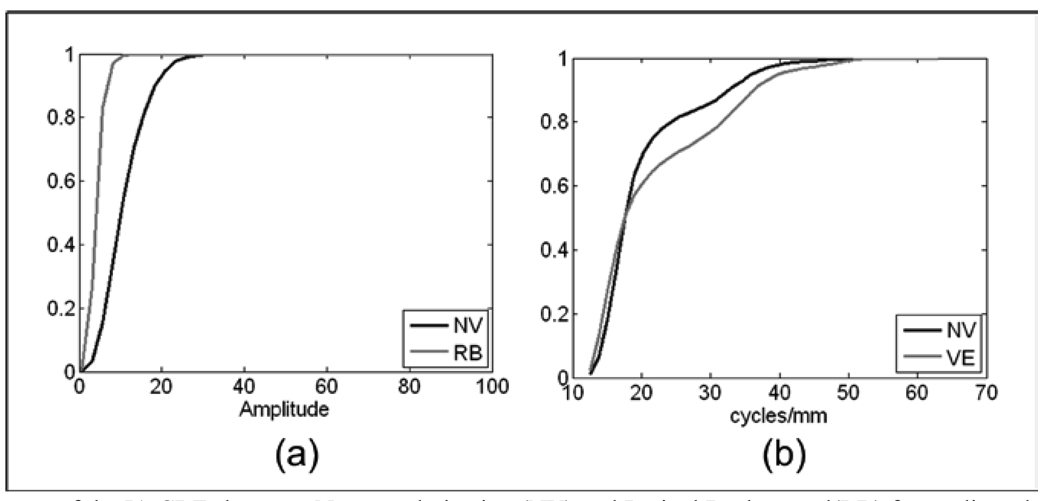

Fig. 11.

(a) Comparison of the mean of the IA CDFs between neovascularization (NV) and retinal background (RB) for medium, low, and very low frequencies; (b) comparison of the mean of the |IF| CDFs between neovascularization (NV) and vessels (VE) for medium, low, and very low frequencies.

The characteristics of the AM-FM feature vectors that produced the distance table are shown in Figs. 8 through 12.

Fig. 8.

Comparison of the mean of the CDFs between Microaneurysm (MA) and Retinal Background (RB). (a) CDFs of the IA for low and very low frequencies, (b) CDFs of the |IF| for low and very low frequencies.

1. Microaneurysms

Fig. 8 compares MAs and retinal background using |IF| and IA for the combined L+VL frequency bands. For the low (L) and very low (VL) frequency bands, the ROI containing the MA has an |IF| distribution shifted toward smaller magnitudes than that of the retinal background (see Fig. 8b). Because its gray level is nearly homogeneous, the retinal background has an IA CDF that rises sharply to 1, whereas the ROI with the MA shows a slower rise in the CDF, i.e., a broader IA histogram (see Fig. 8a). These differences are quantified in Table 4, where a Mahalanobis distance of 3.48 is observed.
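One way to compute a between-structure Mahalanobis distance like the 3.48 reported here is sketched below. The text does not state the exact covariance estimate, so this sketch assumes the distance between the two group mean feature vectors, measured with the pooled within-group covariance. It uses a pseudo-inverse because CDF features contain constant bins (the last bin is always 1), which makes the covariance singular. The toy arrays are hypothetical.

```python
import numpy as np

def group_mahalanobis(a, b):
    """Mahalanobis distance between the mean feature vectors of two groups.

    a, b: arrays of shape (n_samples, n_features), e.g. one CDF per ROI.
    Assumes a pooled within-group covariance; np.linalg.pinv handles the
    singular covariance produced by constant features.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = a.mean(axis=0) - b.mean(axis=0)
    na, nb = len(a), len(b)
    cov_a = np.atleast_2d(np.cov(a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(b, rowvar=False))
    pooled = ((na - 1) * cov_a + (nb - 1) * cov_b) / (na + nb - 2)
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))

# Two toy groups; the second feature is constant, like the last CDF bin.
ma = np.array([[0.0, 1.0], [2.0, 1.0]])
rb = np.array([[4.0, 1.0], [6.0, 1.0]])
# Means differ by 4 and the pooled variance is 2, so the distance is sqrt(8).
print(group_mahalanobis(ma, rb))
```

Expressed this way, the distance is in units of the pooled standard deviation, which matches the "standard deviations" phrasing used for the reported distances.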

Fig. 7.

Procedure to classify retinal images. First, features are extracted using AM-FM. Then a dimensionality reduction method, principal component analysis (PCA), is applied to each CoS. Next, an unsupervised method, hierarchical clustering, is applied to further reduce the dimensionality. Finally, partial least squares (PLS) is applied to obtain the estimated class for each image.
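The pipeline in Fig. 7 can be sketched roughly as below. This is an illustrative sketch under several assumptions, not the authors' implementation. The hierarchical-clustering step is omitted because the caption does not specify how it is applied. PLS is implemented as the standard NIPALS PLS1 regression of 0/1 labels. The component counts, the 0.5 threshold, and the synthetic data are all hypothetical.

```python
import numpy as np

def pca_fit(X, n_components):
    """Principal axes of X via SVD of the centered data."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]  # rows of vt ordered by decreasing variance

def pca_transform(X, model):
    mean, axes = model
    return (X - mean) @ axes.T

def pls1_fit(X, y, n_components):
    """PLS1 regression (NIPALS) of a single response y on X."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean
    W, P, Q = [], [], []
    for _ in range(n_components):
        w = Xr.T @ yr
        w /= np.linalg.norm(w)        # weight vector
        t = Xr @ w                    # scores
        tt = t @ t
        p = Xr.T @ t / tt             # X loadings
        q = yr @ t / tt               # y loading
        Xr = Xr - np.outer(t, p)      # deflate X and y
        yr = yr - q * t
        W.append(w)
        P.append(p)
        Q.append(q)
    W, P, Q = np.array(W).T, np.array(P).T, np.array(Q)
    coef = W @ np.linalg.solve(P.T @ W, Q)  # B = W (P^T W)^-1 q
    return x_mean, y_mean, coef

def pls1_predict(X, model):
    x_mean, y_mean, coef = model
    return (X - x_mean) @ coef + y_mean

def fit_pipeline(cos_features, labels, n_pca=3, n_pls=2):
    """cos_features: list of (n_images, n_features) arrays, one per CoS."""
    pcas = [pca_fit(F, n_pca) for F in cos_features]
    Z = np.hstack([pca_transform(F, m) for F, m in zip(cos_features, pcas)])
    return pcas, pls1_fit(Z, np.asarray(labels, dtype=float), n_pls)

def predict_pipeline(cos_features, model):
    pcas, pls = model
    Z = np.hstack([pca_transform(F, m) for F, m in zip(cos_features, pcas)])
    return pls1_predict(Z, pls)  # continuous score; threshold it to get a class

# Synthetic example: two CoS, 30 non-DR (0) and 30 DR (1) images.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 30)
cos1 = rng.normal(size=(60, 10)) + 2.0 * labels[:, None]  # class-dependent shift
cos2 = rng.normal(size=(60, 10))                           # uninformative CoS
model = fit_pipeline([cos1, cos2], labels)
predicted = (predict_pipeline([cos1, cos2], model) >= 0.5).astype(int)
print((predicted == labels).mean())
```

Reducing each CoS separately before PLS keeps any single high-dimensional CoS from dominating the combined feature vector; whether the authors weighted the CoS this way is not stated.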

Fig. 9 presents the CDFs for ROIs with MAs and hemorrhages (Fig. 9a) and for MAs and retinal vessels (Fig. 9b). To differentiate MAs from hemorrhages, the IA from the lowest-frequency band (LPF) gave the greatest Mahalanobis distance. This is because hemorrhages exhibit strong low-frequency components due to their larger size. In comparison, ROIs with an MA were characterized by weaker low-frequency components, as seen in the faster rise of the IA CDF for MAs. MAs and retinal vessels are easily differentiated by the CDF of the relative IF angle: whereas MA angles are more evenly distributed, retinal vessels clearly show a dominant orientation, as seen in the sharp rise at the central bin (~20) of the CDF for retinal vessels in Fig. 9b.

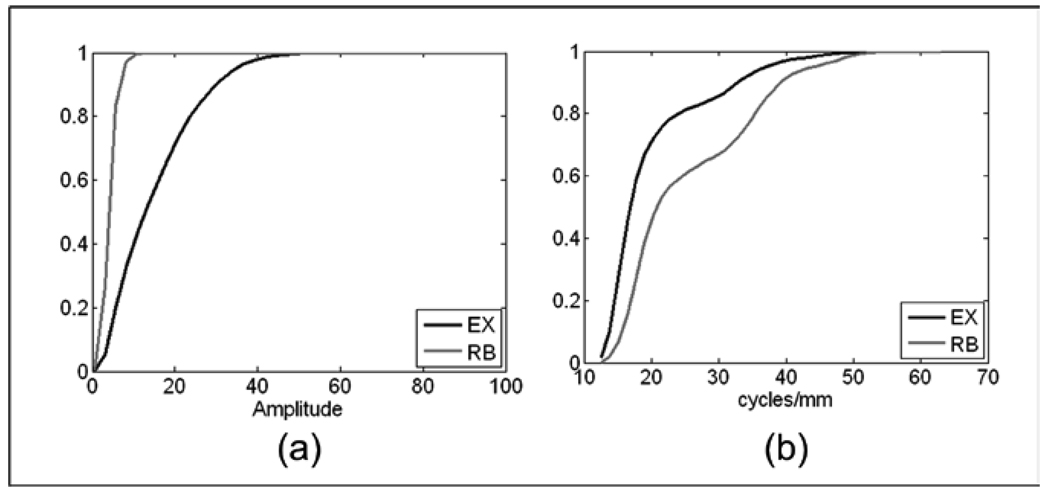

2. Exudates

The maximum Mahalanobis distance for differentiating exudates from the retinal background (6.87 standard deviations) was obtained with |IF| for the medium (M) and low (L) frequency bands. This large distance arises mainly because the retinal background has weak medium- and low-frequency content, while exudates have stronger components in these bands due to their well-defined size. Fig. 10 presents the CDFs for |IF| and IA. As with MAs, ROIs with exudates are distinguished by a broader IA histogram than that of the retinal background.

Fig. 10.

Comparison of the mean of the CDFs between Exudates (EX) and Retinal Background (RB). (a) CDFs of the IA for medium and low frequencies, (b) CDFs of the |IF| for medium and low frequencies.

3. Neovascularization

In Fig. 11a, Fig. 11b, and Figs. 12a and 12b, the CDFs for neovascularization are compared with those for retinal background, retinal vessels, and hemorrhages, respectively. Table 3 shows Mahalanobis distances between neovascular abnormalities and retinal background, retinal vessels, MAs, and hemorrhages of 8.18, 6.14, 2.92, and 2.65 standard deviations, respectively. These distances indicate a high probability of differentiating neovascularization from these other structures. ROIs containing only retinal background are easily differentiated from ROIs with neovascular abnormalities through the IA CDF for the M+L+VL frequency bands (Fig. 11a). |IF| was used to differentiate neovascularization from normal retinal vessels; Fig. 11b shows the CDFs for these two types of structures using the M+L+VL frequency bands.

In Figs. 12a and 12b, IA and |IF| are presented to show that IA contributes most to the Mahalanobis distance between neovascularization and hemorrhages.

4. Hemorrhages

Large structures such as hemorrhages have their strongest AM-FM components in the lower frequencies. For this reason, combinations of scales that include low frequencies are necessary to detect this kind of structure. Fig. 9a compares the CDFs of hemorrhages and microaneurysms; the difference between the two structures is clearly large. In contrast, when scales that incorporate higher frequencies are used, the content of hemorrhages cannot be fully captured. Fig. 12a compares hemorrhage and neovascularization for CoS 1. The IA for the hemorrhage is concentrated near zero, implying weaker components for hemorrhages, whereas neovascularization has stronger components in the medium and higher frequencies.

5. Vessels

Vessels can be differentiated using relative-angle estimates because they have a well-defined geometric orientation. Fig. 9b is a clear example of the expected shape for vessels, in which the CDF rises sharply at the center, as explained earlier.
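The relative-angle behavior can be illustrated with the sketch below. It rests on hypothetical assumptions, since the text does not specify these details. First, the relative IF angle is taken as the IF orientation measured from the ROI's dominant orientation, which is estimated with a doubled-angle circular mean because orientation is defined modulo π. Second, the CDF uses 40 bins over [-π/2, π/2). The synthetic angle samples are also hypothetical. Under these assumptions, a vessel-like ROI with one dominant orientation concentrates its mass in the central bins, while an ROI with evenly distributed angles yields a nearly linear CDF.

```python
import numpy as np

def relative_angle_cdf(theta, n_bins=40):
    """CDF of IF orientations measured relative to the dominant orientation.

    theta: per-pixel IF angles in radians (orientation, defined modulo pi).
    """
    theta = np.ravel(np.asarray(theta, dtype=float))
    # Doubled-angle circular mean gives the dominant orientation modulo pi.
    dominant = 0.5 * np.arctan2(np.sin(2 * theta).mean(), np.cos(2 * theta).mean())
    # Wrap the relative angle into [-pi/2, pi/2).
    rel = np.mod(theta - dominant + np.pi / 2, np.pi) - np.pi / 2
    counts, _ = np.histogram(rel, bins=n_bins, range=(-np.pi / 2, np.pi / 2))
    return np.cumsum(counts) / rel.size

rng = np.random.default_rng(0)
vessel = 0.3 + rng.normal(0.0, 0.05, size=1600)   # one dominant orientation
spread = rng.uniform(0.0, np.pi, size=1600)       # evenly distributed angles
for name, angles in [("vessel", vessel), ("spread", spread)]:
    cdf = relative_angle_cdf(angles)
    # Mass in the two bins adjacent to the center (bins 19 and 20 of 40).
    print(name, cdf[20] - cdf[18])
```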

As these CDF plots show, there are strong differences between structures. To assess the significance of these features for classification, the Kolmogorov-Smirnov (K-S) test was applied to each pair of structures. In this analysis, each bin of the CDFs is treated separately: since there are 20 regions per structure, each bin yields a distribution of 20 values per structure. This bin-by-bin analysis assesses the relevance of each bin in our feature vector. Table 6 shows the combinations of scales that produced a significant difference between structure pairs under the K-S test. Most of the CoS of the three estimates, especially IA, contributed relevant information to the characterization of DR lesions.
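A bin-by-bin K-S screening like the one described can be sketched as follows. The sketch computes the two-sample K-S statistic directly and, as an assumption, compares it with the large-sample critical value sqrt(-ln(α/2)/2)·sqrt((n+m)/(nm)). For only 20 ROIs per structure an exact test, such as `scipy.stats.ks_2samp`, would be more accurate. The array layout (one row per ROI, one column per CDF bin) and the toy data are hypothetical.

```python
import numpy as np

def ks_statistic(x, y):
    """Two-sample K-S statistic: max |F_x - F_y| over all sample points."""
    x, y = np.sort(np.asarray(x, float)), np.sort(np.asarray(y, float))
    grid = np.concatenate([x, y])
    fx = np.searchsorted(x, grid, side="right") / x.size
    fy = np.searchsorted(y, grid, side="right") / y.size
    return float(np.max(np.abs(fx - fy)))

def significant_bins(cdfs_a, cdfs_b, alpha=0.05):
    """Flag CDF bins whose values differ between two structures.

    cdfs_a, cdfs_b: arrays of shape (n_rois, n_bins), one CDF per ROI.
    Uses the asymptotic critical value, an approximation for small n.
    """
    a, b = np.asarray(cdfs_a, float), np.asarray(cdfs_b, float)
    n, m = len(a), len(b)
    d_crit = np.sqrt(-0.5 * np.log(alpha / 2)) * np.sqrt((n + m) / (n * m))
    d = np.array([ks_statistic(a[:, k], b[:, k]) for k in range(a.shape[1])])
    return d > d_crit

# Toy example with 20 ROIs per structure and two bins:
# bin 0 is shifted between structures, bin 1 is identical.
a = np.column_stack([np.linspace(0.0, 0.5, 20), np.linspace(0.2, 0.8, 20)])
b = np.column_stack([np.linspace(0.6, 1.0, 20), np.linspace(0.2, 0.8, 20)])
print(significant_bins(a, b))  # [ True False]
```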

In addition, Tables 7 and 8 present statistics of the distributions of the features most relevant for classification. These statistics were calculated from the pixel information of each of the 120 regions in our analysis. For each of the six structures described above, the tables give the median and the 25th and 75th percentiles; these three quartiles are used to compare the populations of all the CDFs for each structure.
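The quartile summaries in Tables 7 and 8 could be computed along these lines. Because the text says the statistics were computed from pixel information, the sketch assumes that per-pixel values from all ROIs of a structure are pooled before taking percentiles. The dictionary layout and the toy values are hypothetical.

```python
import numpy as np

def quartiles_by_structure(rois_by_structure):
    """25th, 50th, and 75th percentiles of pooled per-pixel values.

    rois_by_structure: dict mapping a structure name to a list of ROI arrays
    (e.g. IA values for one CoS).
    """
    return {
        name: np.percentile(np.concatenate([np.ravel(r) for r in rois]), [25, 50, 75])
        for name, rois in rois_by_structure.items()
    }

# Toy example: two "ROIs" whose pooled values are 0, 1, ..., 100.
q = quartiles_by_structure({"Retinal Background": [np.arange(0, 51), np.arange(51, 101)]})
print(q["Retinal Background"])  # [25. 50. 75.]
```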

TABLE 7.

Percentiles of the distributions for each structure

| Retinal structure | Retinal Background | | | Exudates | | | Microaneurysms | | |
|---|---|---|---|---|---|---|---|---|---|
| Estimate / percentile | 25 | 50 | 75 | 25 | 50 | 75 | 25 | 50 | 75 |
| IA CoS 2 | 169.30 | 172.38 | 175.30 | 145.95 | 163.28 | 182.77 | 162.69 | 169.07 | 174.11 |
| IA CoS 4 | 2.47 | 3.19 | 4.08 | 6.84 | 10.97 | 16.10 | 3.15 | 4.56 | 7.28 |
| IA CoS 5 | 2.03 | 2.58 | 3.21 | 3.19 | 4.55 | 6.23 | 2.16 | 2.79 | 3.59 |
| \|IF\| CoS 2 (cycles/mm) | 0.03 | 0.56 | 0.93 | 0.12 | 1.29 | 2.22 | 0.11 | 0.93 | 1.53 |

Finally, automatic classification of DR and non-DR subjects was performed using the AM-FM features. Two experiments were conducted to determine the ability to correctly detect images with signs of DR (see Table 3). The first experiment consists of classifying non-DR images vs. DR images. We selected half of our database for training and the other half for testing. The results show an area under the ROC curve (AUC) of 0.84 with a best sensitivity/specificity of 92%/54%. Table 9 shows the distribution of images in the training and testing sets, and Table 10 shows the percentage of images correctly classified at the best sensitivity/specificity operating point.
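The AUC and the sensitivity/specificity pair reported above can be computed from per-image classifier scores as sketched below. The AUC uses the Mann-Whitney formulation, i.e., the probability that a DR image scores higher than a non-DR image. How the "best" operating point was chosen is not stated; purely for illustration, the sketch assumes the threshold that maximizes sensitivity + specificity (Youden's index). The scores and labels are toy values.

```python
import numpy as np

def roc_auc(scores, labels):
    """AUC as P(score_pos > score_neg), counting ties as 1/2."""
    s, y = np.asarray(scores, float), np.asarray(labels, bool)
    pos, neg = s[y], s[~y]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when scores >= threshold are called DR."""
    s, y = np.asarray(scores, float), np.asarray(labels, bool)
    pred = s >= threshold
    return (pred & y).sum() / y.sum(), (~pred & ~y).sum() / (~y).sum()

def youden_threshold(scores, labels):
    """Threshold maximizing sensitivity + specificity (an assumed criterion)."""
    return max(np.unique(scores), key=lambda t: sum(sens_spec(scores, labels, t)))

scores = [0.1, 0.4, 0.35, 0.8]   # toy classifier outputs
labels = [0, 0, 1, 1]            # 1 = DR, 0 = non-DR
t = youden_threshold(scores, labels)
print(roc_auc(scores, labels), t, sens_spec(scores, labels, t))
```

For screening, a threshold favoring sensitivity over specificity is often preferred, so the criterion in `youden_threshold` is only one possible choice.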

TABLE 9.

Distribution of training and testing data

| DR Level | Training | Testing |
|---|---|---|
| Risk 0 | 70 | 70 |
| Risk 1 | 18 | 9 |
| Risk 2 | 30 | 39 |
| Risk 3 | 70 | 70 |

TABLE 10.

Abnormal images correctly classified per risk level

| Level | Number | Percentage |
|---|---|---|
| Risk 3 | 70 | 97% |
| Risk 2 | 18 | 82% |
| Risk 1 | 30 | 89% |
| Total | 108 | 92% |

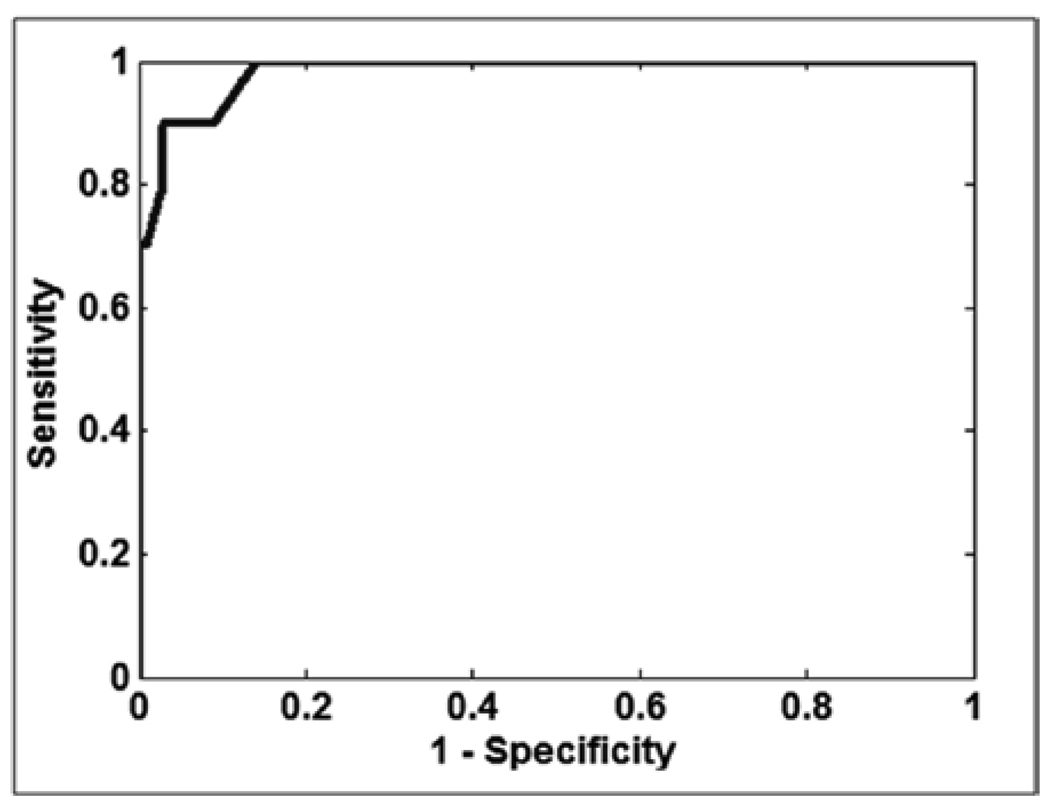

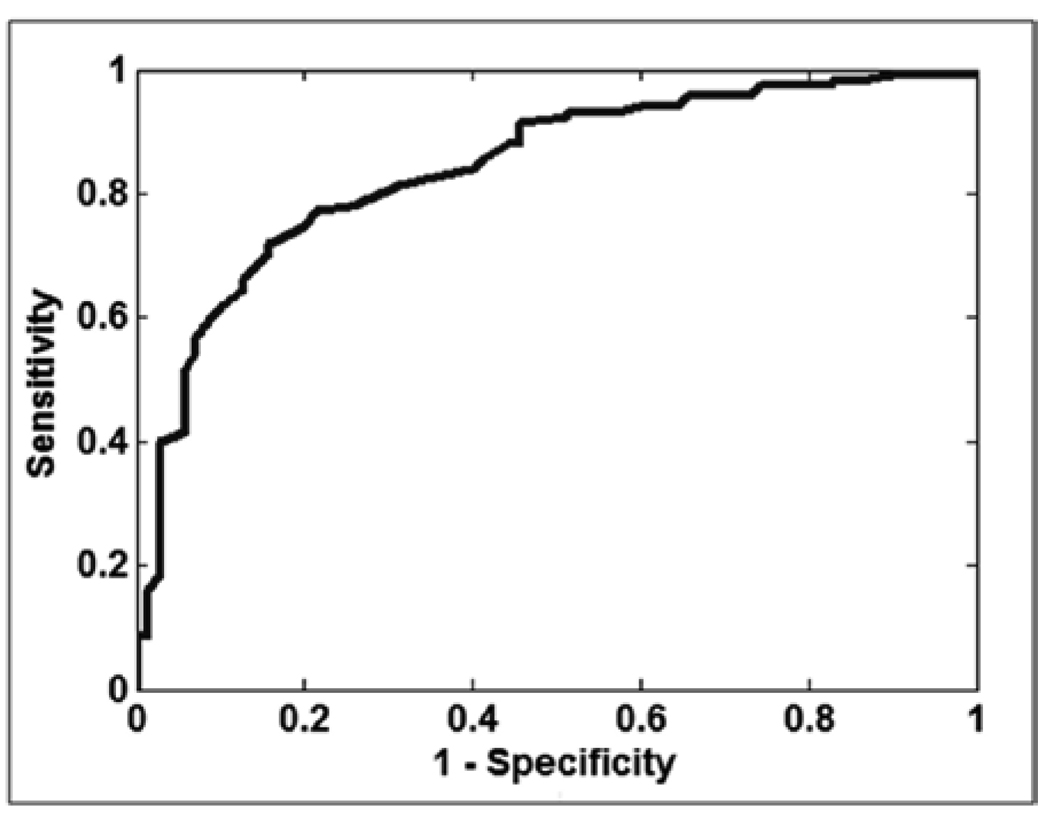

In addition to the previous experiment, a second experiment was performed. This experiment takes into account that diabetic patients with advanced forms of DR, such as clinically significant macular edema (CSME), need to be referred immediately to an ophthalmologist for treatment. Risk 3 and Risk 2 images were further stratified to identify those with signs of CSME; exudates within 1/3 disc diameter of the fovea were used as a surrogate for CSME. Table 11 shows the distribution of images used for training and testing in the IR vs. normal images experiment. Because fewer images were available, the experiment was repeated 20 times with random selection of images, and the resulting average AUC of 0.98 is shown in Fig. 14.
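Averaging the AUC over 20 random splits, as done for the IR experiment, can be sketched as follows. The stratified half/half split, the nearest-class-mean scorer (`fit_means`, `score_means`), and the synthetic data are hypothetical stand-ins. Any classifier that returns a per-image score could be plugged in through the `fit` and `score` callables.

```python
import numpy as np

def roc_auc(scores, labels):
    """AUC as P(score_pos > score_neg), counting ties as 1/2."""
    s, y = np.asarray(scores, float), np.asarray(labels, bool)
    pos, neg = s[y], s[~y]
    wins = (pos[:, None] > neg[None, :]).sum() + 0.5 * (pos[:, None] == neg[None, :]).sum()
    return wins / (pos.size * neg.size)

def repeated_holdout_auc(X, y, fit, score, n_runs=20, train_fraction=0.5, seed=0):
    """Mean test AUC over repeated random, class-stratified train/test splits."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, bool)
    aucs = []
    for _ in range(n_runs):
        train = np.zeros(y.size, dtype=bool)
        for cls in (False, True):  # split each class separately
            idx = np.flatnonzero(y == cls)
            n_train = int(round(train_fraction * idx.size))
            train[rng.choice(idx, size=n_train, replace=False)] = True
        model = fit(X[train], y[train])
        aucs.append(roc_auc(score(model, X[~train]), y[~train]))
    return float(np.mean(aucs))

# Hypothetical scorer: distance to the normal-class mean minus distance to the IR mean.
def fit_means(X, y):
    return X[~y].mean(axis=0), X[y].mean(axis=0)

def score_means(model, X):
    mean_normal, mean_ir = model
    return np.linalg.norm(X - mean_normal, axis=1) - np.linalg.norm(X - mean_ir, axis=1)

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (30, 5)), rng.normal(3.0, 1.0, (30, 5))])
y = np.repeat([0, 1], 30)
print(repeated_holdout_auc(X, y, fit_means, score_means))
```

Stratifying the split keeps the IR/normal ratio the same in every run, which matters when one class is small.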

TABLE 11.

Distribution of training and testing data for the IR experiment

| DR Level | Training | Testing |
|---|---|---|
| Risk 0 | 70 | 70 |
| IR | 18 | 9 |
| Total | 30 | 39 |

Fig. 14.

ROC curve for the classification of the second experiment: IR vs. Risk 0. A best sensitivity/specificity of 100%/88% was obtained.

IV. DISCUSSION AND CONCLUSIONS

The effectiveness of computer-based DR screening has been reported by several investigators, including Larsen et al. [1] with an early commercial system, Retinalyze. Retinalyze achieved a sensitivity of 0.97, a specificity of 0.71, and an area under the ROC curve of 0.90 for 100 cases in which two fields from each of two eyes (400 images in total) were combined to identify patients with DR. The specificity of the combined red and bright lesion detection could be improved to 78% by using only images of visually gradable quality. The test data were digitized 35 mm color slides acquired through dilated pupils.

More recently, Abràmoff et al. [30] achieved a sensitivity of 84% and a specificity of 64% (area under the ROC curve of 0.84). Their retinal images were collected non-mydriatically and with variable compression of up to 100:1. The quality of this database is more representative of a screening environment, i.e., substantially worse than that of the images used by Larsen et al., which suggests a much more robust algorithm.

The results of Abràmoff et al. are consistent with the work of Fleming et al. [11], Lee et al. [31], and Sánchez et al. [32], and with Philip et al. [33], who described a system in Aberdeen, Scotland, for which they reported a sensitivity of 90.5% and a specificity of 67.4%, superior to manual reading of the same images. Our results compare well with these and other published results.

Our algorithm strongly differentiated the most advanced stage of DR (Risk 3) from non-DR (Risk 0) images, suggesting that most retinal lesions can be captured by the features provided by AM-FM. Classification using the AM-FM features proved to be an efficient methodology for detecting DR: good sensitivity was obtained for abnormal vs. normal images, and high sensitivity/specificity for IR vs. normal images.

Because the features are extracted region by region, this algorithm allows the user to obtain a detailed analysis of each image. A combination of unsupervised and supervised methods is then used for global classification. In this way, all lesion types could be detected without the need for manual segmentation by a technician.

This paper reports the first use of AM-FM with a multiscale decomposition of retinal structures to classify them as pathological or normal. The results demonstrate a significant capability to differentiate normal anatomy (retinal background and retinal vessels) from pathological structures (neovascularization, microaneurysms, hemorrhages, and exudates). The histograms for regions of interest containing these structures yield a signature, through the CDF, that can be used to differentiate these structures successfully, as summarized in Table 6.

The application of the proposed methodology to DR screening is new. Its principal advantage is that it can be trained with only a global classification of each image, i.e., no DR or DR present, without having to develop a training database in which each lesion is annotated. CDFs for images classified as having no DR can be used to establish a normative database. Deviations from this normative database reflect potentially pathological ROIs, and the image and ROI can be flagged accordingly. This capability allows rapid retraining, if necessary, on any database of retinal images with a different spatial resolution, field of view, image compression, etc. This is important in settings where many imaging protocols are used with a variety of digital cameras.
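The normative-database idea can be sketched as follows. This is a hypothetical illustration under stated assumptions, since the text does not specify the deviation measure or threshold. Feature vectors (e.g. concatenated CDFs) from ROIs of no-DR images define a mean and covariance. Each new ROI's deviation is measured as a Mahalanobis distance, and ROIs above an arbitrary threshold (3.0 here) are flagged.

```python
import numpy as np

def fit_normative(normal_features):
    """Mean and (pseudo-)inverse covariance of ROI features from no-DR images."""
    X = np.asarray(normal_features, dtype=float)
    cov = np.atleast_2d(np.cov(X, rowvar=False))
    return X.mean(axis=0), np.linalg.pinv(cov)

def deviation(features, model):
    """Mahalanobis distance of each ROI feature vector from the normative mean."""
    mean, cov_inv = model
    d = np.atleast_2d(np.asarray(features, dtype=float)) - mean
    sq = np.einsum("ij,jk,ik->i", d, cov_inv, d)
    return np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative rounding errors

rng = np.random.default_rng(0)
normative = fit_normative(rng.normal(size=(500, 3)))  # synthetic "normal" ROIs
new_rois = np.array([[0.1, -0.2, 0.0], [10.0, 0.0, 0.0]])
flags = deviation(new_rois, normative) > 3.0  # hypothetical threshold
print(flags)  # [False  True]
```

Retraining for a new camera or protocol would, under this sketch, only require refitting the mean and covariance on that site's no-DR images.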

Fig. 4.

(a) Original Image from ETDRS; (b) Instantaneous Amplitude using low frequencies; and (c) Thresholded Image of (b).

Fig. 13.

ROC curve for the classification of Risk 3, 2, and 1 vs. Risk 0. Area under the ROC curve = 0.84. A best sensitivity/specificity of 92%/54% was obtained.

ACKNOWLEDGMENTS

This work was funded by the National Eye Institute (NEI) under grant EY018280.

We would like to thank Méthodes d'Evaluation de Systèmes de Segmentation et d'Indexation Dédiées à l'Ophtalmologie Rétinienne (MESSIDOR) for allowing us to use their database in this study.

Contributor Information

Carla Agurto, Email: capaagri@unm.edu, Department of Electrical and Computer Engineering, University of New Mexico, Albuquerque, NM 87109 USA.

Victor Murray, Email: vmurray@ieee.org, Department of Electrical and Computer Engineering, University of New Mexico, Albuquerque, NM 87109 USA.

Eduardo Barriga, Email: sbarriga@visionquest-bio.com, VisionQuest Biomedical, LLC, Albuquerque, NM 87106 USA.

Sergio Murillo, Email: smurillo@ece.unm.edu, Department of Electrical and Computer Engineering, University of New Mexico, Albuquerque, NM 87109 USA.

Marios Pattichis, Email: pattichis@ece.unm.edu, Department of Electrical and Computer Engineering, University of New Mexico, Albuquerque, NM 87109 USA.

Herbert Davis, Email: bert@visionquest-bio.com, VisionQuest Biomedical, LLC, Albuquerque, NM 87106 USA.

Stephen Russell, Email: steverussell@uiowa.edu, Department of Ophthalmology and Visual Sciences, University of Iowa Hospitals and Clinics, Iowa City, IA 52242 USA.

Michael Abràmoff, Email: michael-abramoff@uiowa.edu, Department of Ophthalmology and Visual Sciences, University of Iowa Hospitals and Clinics, Iowa City, IA 52242 USA.

Peter Soliz, Email: psoliz@visionquest-bio.com, VisionQuest Biomedical, LLC, Albuquerque, NM 87106 USA.

References

- [1] Larsen M, Godt J, Larsen N, Lund-Andersen H, Sjolie AK, Agardh E, et al. Automated detection of fundus photographic red lesions in diabetic retinopathy. Invest Ophthalmol Vis Sci. 2003;44:761–766. doi: 10.1167/iovs.02-0418.

- [2] Niemeijer M, Russell SR, Suttorp MA, van Ginneken B, Abràmoff MD. Automated detection and differentiation of drusen, exudates, and cotton-wool spots in digital color fundus photographs for early diagnosis of diabetic retinopathy. Invest Ophthalmol Vis Sci. 2007;48:2260–2267. doi: 10.1167/iovs.06-0996.

- [3] Niemeijer M, van Ginneken B, Staal J, Suttorp-Schulten MSA, Abràmoff MD. Automatic detection of red lesions in digital color fundus photographs. IEEE Transactions on Medical Imaging. 2005;24:584–592. doi: 10.1109/TMI.2005.843738.

- [4] Sander B, Godt J, Lund-Andersen H, Grunkin M, Owens D, Larsen N, et al. Automatic detection of fundus photographic red lesions in diabetic retinopathy. ARVO; 2001. Poster No. 4338. doi: 10.1167/iovs.02-0418.

- [5] Murray V. AM-FM methods for image and video processing. Ph.D. dissertation. University of New Mexico; Sep 2008.

- [6] Osareh A, Mirmehdi M, Thomas B, Markham R. Automated identification of diabetic retinal exudates in digital colour images. British Journal of Ophthalmology. 2003;87:1220–1223. doi: 10.1136/bjo.87.10.1220.

- [7] Osareh A, Mirmehdi M, Thomas B, Markham R. Classification and localisation of diabetic-related eye disease. 7th European Conference on Computer Vision; 2002.

- [8] Spencer T, Olson JA, McHardy KC, Sharp PF, Forrester JV. An image-processing strategy for the segmentation and quantification of microaneurysms in fluorescein angiograms of the ocular fundus. Comput. Biomed. Res. 1996;29:284–302. doi: 10.1006/cbmr.1996.0021.

- [9] Frame A, Undrill PE, Cree MJ, Olson JA, McHardy KC, Sharp PF, et al. A comparison of computer based classification methods applied to the detection of microaneurysms in ophthalmic fluorescein angiograms. Computers in Biology and Medicine. 1998;28:225–238. doi: 10.1016/s0010-4825(98)00011-0.

- [10] Niemeijer M, van Ginneken B, Staal J, Suttorp-Schulten MSA, Abràmoff MD. Automatic detection of red lesions in digital color fundus photographs. IEEE Transactions on Medical Imaging. 2005;24:584–592. doi: 10.1109/TMI.2005.843738.

- [11] Fleming AD, Philip S, Goatman KA, Williams GJ, Olson JA, Sharp PF. Automated detection of exudates for diabetic retinopathy screening. Phys. Med. Biol. 2007;52:7385–7396. doi: 10.1088/0031-9155/52/24/012.

- [12] Ricci E, Perfetti R. Retinal blood vessel segmentation using line operators and support vector classification. IEEE Transactions on Medical Imaging. 2007;26:1357–1365. doi: 10.1109/TMI.2007.898551.

- [13] Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge based vessel segmentation in color images of the retina. IEEE Transactions on Medical Imaging. 2004;23:501–509. doi: 10.1109/TMI.2004.825627.

- [14] Leandro JG, Cesar RM, Jelinek H. Blood vessels segmentation in retina: Preliminary assessment of the mathematical morphology and of the wavelet transform techniques. Proc. of the 14th Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI). IEEE Computer Society; 2001. pp. 84–90.

- [15] Niemeijer M, Russell SR, Suttorp MA, van Ginneken B, Abràmoff MD. Automated detection and differentiation of drusen, exudates, and cotton-wool spots in digital color fundus photographs for early diagnosis of diabetic retinopathy. Invest Ophthalmol Vis Sci. 2007;48:2260–2267. doi: 10.1167/iovs.06-0996.

- [16] Streeter L, Cree M. Microaneurysm detection in colour fundus images. Image and Vision Computing. 2003:280–285.

- [17] Jelinek HJ, Cree MJ, Worsley D, Luckie A, Nixon P. An automated microaneurysm detector as a tool for identification of diabetic retinopathy in rural optometric practice. Clinical and Experimental Optometry. 2006;89:299–305. doi: 10.1111/j.1444-0938.2006.00071.x.

- [18] Sopharak A, Uyyanonvara B. Automatic exudates detection from diabetic retinopathy retinal image using fuzzy c-means and morphological methods. Proceedings of the Third IASTED International Conference on Advances in Computer Science and Technology; Phuket, Thailand; 2007. pp. 359–364.

- [19] Walter T, Klein JC, Massin P, Erginay A. A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in colour fundus images of the human retina. IEEE Transactions on Medical Imaging. 2002;21:1236–1243. doi: 10.1109/TMI.2002.806290.

- [20] Vallabha D, Dorairaj R, Namuduri K, Thompson H. Automated detection and classification of vascular abnormalities in diabetic retinopathy. 38th Asilomar Conference on Signals, Systems & Computers; Pacific Grove, CA; 2004.

- [21] Quellec G, Lamard M, Josselin P, Cazuguel G. Optimal wavelet transform for the detection of microaneurysm in retina photographs. IEEE Transactions on Medical Imaging. 2008;27:1230–1241. doi: 10.1109/TMI.2008.920619.

- [22] Quellec G, Lamard M, Josselin P, Cazuguel G, Cochener B, Roux C. Detection of lesions in retina photographs based on the wavelet transform. Proceedings of the 28th IEEE EMBS Annual International Conference; 2006. pp. 2618–2621.

- [23] Sofka M, Stewart CV. Retinal vessel extraction using multiscale matched filters, confidence and edge measures. IEEE Transactions on Medical Imaging. 2005;25(12). doi: 10.1109/tmi.2006.884190.

- [24] Al-Rawi M, Qutaishat M, Arrar M. An improved matched filter for blood vessel detection of digital retinal images. Comput. Biol. Med. 2007;37(2):262–267. doi: 10.1016/j.compbiomed.2006.03.003.

- [25] Fundus Photograph Reading Center, Dept. of Ophthalmology and Visual Sciences, University of Wisconsin, Madison. [Online]. Available: http://eyephoto.ophth.wisc.edu/ResearchAreas/Diabetes/DiabStds.htm

- [26] Pattichis MS, Bovik AC. Analyzing image structure by multidimensional frequency modulation. IEEE Trans. Pattern Anal. Mach. Intell. 2007;(5):753–766. doi: 10.1109/TPAMI.2007.1051.

- [27] Havlicek JP. AM-FM image models. Ph.D. dissertation. The University of Texas at Austin; 1996.

- [28] Murray V, Rodriguez P, Pattichis MS. Multi-scale AM-FM demodulation and reconstruction methods with improved accuracy. IEEE Transactions on Image Processing. Accepted with minor mandatory changes. doi: 10.1109/TIP.2010.2040446.

- [29] TECHNO-VISION Project. MESSIDOR: methods to evaluate segmentation and indexing techniques in the field of retinal ophthalmology. [Online]. Available: http://messidor.crihan.fr/

- [30] Abràmoff MD, Niemeijer M, Suttorp-Schulten MA, Viergever MA, Russell SR, van Ginneken B. Evaluation of a system for automatic detection of diabetic retinopathy from color fundus photographs in a large population of patients with diabetes. Diabetes Care. 2008;31:193–198. doi: 10.2337/dc08-0952.

- [31] Lee SC, Lee ET, Wang Y, Klein R, Kingsley RM, Warn A. Computer classification of non-proliferative diabetic retinopathy. Archives of Ophthalmology. 2005;123:759–764. doi: 10.1001/archopht.123.6.759.

- [32] Sánchez CI, Hornero R, López MI, Poza J. Retinal image analysis to detect and quantify lesions associated with diabetic retinopathy. Conf Proc IEEE Eng Med Biol Soc; 2004. pp. 1624–1627.

- [33] Philip S, Fleming AD, Goatman KA, Fonseca S, McNamee P, Scotland GS, Prescott GJ, Sharp PF, Olson JA. The efficacy of automated "disease/no disease" grading for diabetic retinopathy in a systematic screening programme. Br J Ophthalmol. 2007;91:1512–1517. doi: 10.1136/bjo.2007.119453.